Abstract

As an important characteristic of natural images, the nonlocal self-similarity (NSS) prior has demonstrated great promise in a variety of inverse problems. Unfortunately, most current methods utilize either the internal NSS prior learned from the degraded image or the external NSS prior learned from training images. The former is inevitably disturbed by degradation, while the latter is not adapted to the image to be restored. To mitigate these problems, this work proposes to learn a hybrid NSS (HNSS) prior from both the degraded image and external training images and employs it in image restoration tasks. To this end, we first learn internal and external NSS priors from the measured image and a high-quality image set, respectively. Then, with the learned priors, an efficient method involving only singular value decomposition (SVD) and a simple weighting scheme is developed to learn the HNSS prior for patch groups. Subsequently, taking the learned HNSS prior as the dictionary, we formulate a structural sparse representation model with adaptive regularization parameters, called HNSS-SSR, for image restoration, and develop a general and efficient image restoration algorithm via an alternating minimization strategy. Experimental results indicate that the proposed HNSS-SSR-based restoration method outperforms many existing competing algorithms in terms of PSNR and SSIM.

Keywords:

image prior learning; nonlocal self-similarity; image restoration; structural sparse representation; adaptive regularization parameter

MSC:

94A08; 68U10

1. Introduction

Along with the advancement of various optical technologies and sensors, images have become one of the most important carriers of information. Unfortunately, image degradation is inevitable during acquisition, transmission, and storage because of defects in the imaging system and interference from various external factors. Therefore, image restoration, which aims to reconstruct the underlying uncorrupted image $\mathbf{x}$ from the corrupted measurement $\mathbf{y}$, is essential in many fields of science and engineering. In general, the image degradation process is modeled as:

$$\mathbf{y}=\mathbf{H}\mathbf{x}+\mathbf{n},\tag{1}$$

where $\mathbf{H}$ denotes the degradation operator and $\mathbf{n}$ represents additive white Gaussian noise. In Equation (1), different settings of $\mathbf{H}$ correspond to different image restoration problems. Specifically, when $\mathbf{H}$ is an identity matrix, Equation (1) becomes image denoising [1]; when $\mathbf{H}$ is a blurring matrix, it becomes image deblurring [2,3]; and when $\mathbf{H}$ is a random projection matrix, it corresponds to image compressive sensing [4,5].
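To make the settings of $\mathbf{H}$ concrete, here is a minimal NumPy sketch of the forward model in Equation (1) on a tiny vectorized image. The helper name `degrade`, the image size, the noise levels, and the 16×64 Gaussian projection used for compressive sensing are illustrative assumptions, not the experimental settings of this paper; a blurring $\mathbf{H}$ would be a convolution matrix and is omitted for brevity.

```python
import numpy as np

def degrade(x, H, sigma, rng):
    """Forward model y = H x + n, n white Gaussian with std `sigma` (hypothetical helper)."""
    return H @ x + sigma * rng.standard_normal(H.shape[0])

rng = np.random.default_rng(0)
x = rng.random(64)                          # an 8x8 image, vectorized

# Denoising: H is the identity matrix.
H_denoise = np.eye(64)
y_denoise = degrade(x, H_denoise, sigma=0.1, rng=rng)

# Compressive sensing: H is a random projection with fewer rows than pixels
# (16 measurements for 64 pixels is an arbitrary illustrative ratio).
H_cs = rng.standard_normal((16, 64)) / np.sqrt(16)
y_cs = degrade(x, H_cs, sigma=0.01, rng=rng)
```
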

As image restoration in Equation (1) is a typical ill-posed linear inverse problem, an image prior is often required to constrain the solution space. Specifically, from the standpoint of maximum a posteriori (MAP) estimation, the latent high-quality image can be inferred by solving the following regularization problem [2]:

$$\hat{\mathbf{x}}=\arg\min_{\mathbf{x}}\ \frac{1}{2}\|\mathbf{y}-\mathbf{H}\mathbf{x}\|_2^2+\lambda\,\mathcal{R}(\mathbf{x}),\tag{2}$$

where $\|\cdot\|_2$ denotes the $\ell_2$-norm, $\frac{1}{2}\|\mathbf{y}-\mathbf{H}\mathbf{x}\|_2^2$ is the fidelity term associated with Gaussian noise, $\mathcal{R}(\mathbf{x})$ is the regularization term that relies on the image prior, and $\lambda>0$ balances these two terms.

Due to the curse of dimensionality, it is almost impossible to model the whole image directly. A remedy is to use the image patch as the basic unit of modeling [6,7]. Thus, over the past few decades, patch-based priors have been extensively studied and have achieved favorable image restoration performance, e.g., patch-based sparse representation [8,9,10,11,12,13] and patch-based image modeling [6,14,15,16]. Recently, deep learning has also been adopted to learn image priors in a supervised manner and has produced promising results in various image restoration applications [17,18,19,20,21,22]. However, both the model-based and the deep learning-based approaches mentioned above focus on mining local image properties, and their performance is limited because they largely neglect the self-similarity and nonlocal properties of images [1,23,24]. In addition, deep learning methods require a training set of extensive degraded/high-quality image pairs for supervised learning, which makes them difficult to apply, or causes undesirable artifacts, in some tasks such as medical imaging and remote sensing [25,26].

Natural images contain rich self-repeating structures in nonlocal regions, i.e., the so-called nonlocal self-similarity (NSS) prior [27,28]. Compared with patch-based priors, the NSS prior enables us to cluster nonlocal patches with similar patterns across the whole image and to use such similar patch groups as the basic unit for restoration, which is especially helpful for recovering image structures such as textures and edges [27,28,29]. Following the great success of the nonlocal means (NLM) method [27], a series of NSS prior-based methods have been developed and have shown impressive restoration performance. These approaches can be broadly grouped into three categories: filter-based methods [27,29,30], patch group-based sparse representation methods [4,23,31,32,33,34,35,36,37,38], and low-rank approximation-based methods [2,39,40,41,42,43,44]. Apart from the internal NSS prior of the corrupted image, some recent approaches exploit the external NSS prior learned from high-quality natural images [28,45,46]. For example, Xu et al. [28] developed a patch group prior-based denoising (PGPD) method that learns dictionaries from a natural image corpus. Liu et al. [46] formulated an external NSS prior-based group sparsity mixture model for image denoising. Although these NSS prior-based methods have shown their potential in recovering image structures, exploiting the internal NSS of the observed image often suffers from overfitting to data corruption [5], while the external NSS prior learned from training images is not well adapted to the image to be recovered [47].

To rectify the weakness of using a single NSS prior, some more recent works proposed to jointly utilize internal and external NSS priors [1,3,5,7,24,26,48,49]. For instance, Zha et al. [1] developed a denoising method based on sparse residuals by using an external NSS prior. Liu et al. [49] proposed a group sparse representation-based super-resolution algorithm to leverage internal and external correlations. Zha et al. [24] proposed to simultaneously use internal and external NSS priors for image restoration. Yuan et al. [3] formulated a joint group dictionary-based structural sparse model for image restoration. Zha et al. [5] developed a hybrid structural sparsification error model to jointly exploit internal and external NSS priors. Yuan et al. [7] suggested the joint use of a low-rank prior and an external NSS prior. These methods have led to promising restoration results, since the complementary information of internal and external NSS priors is exploited.

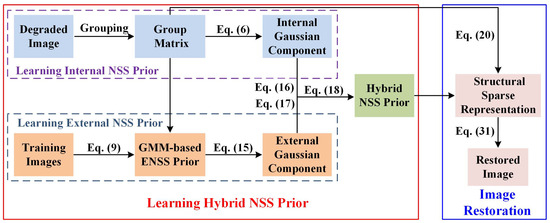

Unlike the above works, in this paper we propose to learn a hybrid NSS (HNSS) prior for image restoration. In particular, most existing works focus on how to jointly utilize two priors, i.e., the internal and external NSS priors, whereas this work develops a new paradigm that learns a single HNSS prior from both the internal degraded image and an external natural image set and applies the learned prior to image restoration. The technical route of the proposed method is therefore quite different from those of the existing works above. The flowchart of our method is presented in Figure 1. To the best of our knowledge, how to learn an HNSS prior remains an open problem, and this paper makes a first attempt to address it. The main contributions are summarized as follows:

Figure 1.

The flowchart of the proposed method.

- We develop a flexible yet simple approach to learn the HNSS prior from both internal degraded and external natural image sets.

- An HNSS prior-based structural sparse representation (HNSS-SSR) model with adaptive regularization parameters is formulated for image restoration.

- A general and efficient image restoration algorithm is developed by employing an alternating minimization strategy to solve the resulting image restoration problem.

- Extensive experimental results indicate that the proposed HNSS-SSR model outperforms many existing competing algorithms in terms of both quantitative and qualitative quality.

2. Learning the Hybrid Nonlocal Self-Similarity Prior

Here, internal and external NSS priors are first learned from the observed image and a training image set, respectively. Specifically, the Gaussian mixture model (GMM) is employed to learn both priors, since Zoran and Weiss [14,50] have shown that a GMM can learn priors more effectively than other common models, obtaining higher log-likelihood scores and better denoising performance. On this basis, the HNSS prior is then learned by singular value decomposition (SVD) and an efficient yet simple weighting method.

2.1. Learning the Internal NSS Prior from a Degraded Image

Given a degraded image $\mathbf{y}$, our goal is to learn the NSS prior of its corresponding latent high-quality image $\mathbf{x}$, i.e., the internal NSS prior. However, since the underlying original image is unknown, it is first initialized to the degraded image, i.e., $\mathbf{x}=\mathbf{y}$. Then, we divide $\mathbf{x}$ into $N$ overlapping local patches $\mathbf{x}_i$ of size $\sqrt{b}\times\sqrt{b}$, and the $n$ most similar patches for each $\mathbf{x}_i$ are found to construct a similar patch group $\mathbf{X}_i=[\mathbf{x}_{i,1},\dots,\mathbf{x}_{i,n}]\in\mathbb{R}^{b\times n}$, where $\mathbf{x}_{i,j}\in\mathbb{R}^{b}$ is a vectorized image patch. Specifically, for patch $\mathbf{x}_i$, we compute the Euclidean distance $\|\mathbf{x}_i-\mathbf{x}_m\|_2$ between it and each patch $\mathbf{x}_m$, $m=1,\dots,N$, and then select the $n$ patches with the smallest distances as similar patches. In practice, this can be done via the K-Nearest Neighbor (KNN) [51] method.
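The grouping step can be sketched as follows, assuming a grayscale image, square $p\times p$ patches with $p=\sqrt{b}$, and an exhaustive (brute-force) KNN search over all overlapping patches. The function names are hypothetical and this is not the authors' implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def extract_patches(img, p):
    """All overlapping p x p patches of a 2-D image as the columns of a (p*p, N) matrix."""
    windows = sliding_window_view(img, (p, p))      # shape (H-p+1, W-p+1, p, p)
    return windows.reshape(-1, p * p).T

def patch_group(patches, ref, n):
    """Exhaustive KNN: the n patches closest (Euclidean) to column `ref`, as a (p*p, n) group."""
    dist = np.sum((patches - patches[:, [ref]]) ** 2, axis=0)
    idx = np.argsort(dist, kind="stable")[:n]       # ascending distance; ref itself has distance 0
    return patches[:, idx], idx
```

Restricting the search to a local window around each reference patch is a common way to reduce the cost, which grows quadratically with the number of patches in this exhaustive version.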

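In view of its great success in modeling image patches [6,14,15] and patch groups [28,52], a GMM with a finite number of Gaussian components is adopted in this paper to learn both the internal and external NSS priors (the latter is introduced in the next subsection). Accordingly, the following likelihood:

$$P(\mathbf{X}_i)=\sum_{k=1}^{K_{in}}\pi_{in,k}\prod_{j=1}^{n}\mathcal{N}(\mathbf{x}_{i,j}\mid\boldsymbol{\mu}_{in,k},\boldsymbol{\Sigma}_{in,k})\tag{3}$$

is employed for each patch group to learn the internal NSS prior, where $K_{in}$ is a hyperparameter denoting the total number of Gaussian components; $\boldsymbol{\mu}_{in,k}$, $\boldsymbol{\Sigma}_{in,k}$, and $\pi_{in,k}$ denote the mean vector, covariance matrix, and weight of the k-th Gaussian component, respectively; and $\sum_{k=1}^{K_{in}}\pi_{in,k}=1$. Regarding all patch groups as independent samples [1,28,52], the overall log-likelihood function for learning the internal NSS prior is:

$$\ln\mathcal{L}_{in}=\sum_{i=1}^{N}\ln\Big(\sum_{k=1}^{K_{in}}\pi_{in,k}\prod_{j=1}^{n}\mathcal{N}(\mathbf{x}_{i,j}\mid\boldsymbol{\mu}_{in,k},\boldsymbol{\Sigma}_{in,k})\Big).\tag{4}$$

By maximizing Equation (4) over all patch groups $\{\mathbf{X}_i\}_{i=1}^{N}$, the GMM parameters $\{\pi_{in,k},\boldsymbol{\mu}_{in,k},\boldsymbol{\Sigma}_{in,k}\}$, which describe the internal NSS prior, can be learned. Note that the subscript "in" indicates the internal NSS prior.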

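However, different patch groups contain different fine-scale details of the image to be recovered. Accordingly, in this paper, when learning the internal NSS prior, instead of directly optimizing Equation (4), we assign an exclusive Gaussian component to each patch group, i.e.,

$$P(\mathbf{X}_i)=\prod_{j=1}^{n}\mathcal{N}(\mathbf{x}_{i,j}\mid\boldsymbol{\mu}_{in,i},\boldsymbol{\Sigma}_{in,i}).\tag{5}$$

Hence, the total number of Gaussian components for learning the internal NSS prior is naturally set as $K_{in}=N$, and for each patch group $\mathbf{X}_i$, its corresponding $\boldsymbol{\mu}_{in,i}$ and $\boldsymbol{\Sigma}_{in,i}$ are obtained by the following maximum likelihood (ML) estimate [15,52]:

$$(\hat{\boldsymbol{\mu}}_{in,i},\hat{\boldsymbol{\Sigma}}_{in,i})=\arg\max_{\boldsymbol{\mu},\boldsymbol{\Sigma}}\ \sum_{j=1}^{n}\ln\mathcal{N}(\mathbf{x}_{i,j}\mid\boldsymbol{\mu},\boldsymbol{\Sigma}).\tag{6}$$

Specifically, $\boldsymbol{\mu}_{in,i}$ and $\boldsymbol{\Sigma}_{in,i}$ can be estimated as:

$$\hat{\boldsymbol{\mu}}_{in,i}=\frac{1}{n}\sum_{j=1}^{n}\mathbf{x}_{i,j},\tag{7}$$

$$\hat{\boldsymbol{\Sigma}}_{in,i}=\frac{1}{n}\sum_{j=1}^{n}(\mathbf{x}_{i,j}-\hat{\boldsymbol{\mu}}_{in,i})(\mathbf{x}_{i,j}-\hat{\boldsymbol{\mu}}_{in,i})^{T}.\tag{8}$$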
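Because each patch group receives its own Gaussian component, the ML estimates reduce to the sample mean and the biased (divide-by-$n$) sample covariance of the group's columns. A minimal sketch with a hypothetical function name; when $n<b$ the covariance is rank-deficient, so an implementation may need a small diagonal regularizer, a detail not specified in the text.

```python
import numpy as np

def internal_gaussian(X):
    """ML Gaussian for one patch group X of shape (b, n); columns are vectorized patches."""
    mu = X.mean(axis=1, keepdims=True)              # ML mean of the n similar patches
    Xc = X - mu
    Sigma = Xc @ Xc.T / X.shape[1]                  # ML covariance (divides by n, not n - 1)
    return mu.ravel(), Sigma
```
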

2.2. Learning the External NSS Prior from a Natural Image Corpus

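Given a set of pre-collected natural images, a total of L similar patch groups are first extracted to form an external training patch group set $\{\mathbf{Z}_l\}_{l=1}^{L}$, where $\mathbf{Z}_l=[\mathbf{z}_{l,1},\dots,\mathbf{z}_{l,d}]$, $\mathbf{z}_{l,j}\in\mathbb{R}^{b}$ is the j-th vectorized patch of patch group $\mathbf{Z}_l$, and d is the number of similar patches. As in Equation (4), using the GMM, the log-likelihood function over the training set for learning the external NSS prior is formulated as:

$$\ln\mathcal{L}_{ex}=\sum_{l=1}^{L}\ln\Big(\sum_{k=1}^{K_{ex}}\pi_{ex,k}\prod_{j=1}^{d}\mathcal{N}(\mathbf{z}_{l,j}\mid\boldsymbol{\mu}_{ex,k},\boldsymbol{\Sigma}_{ex,k})\Big),\tag{9}$$

where the subscript "ex" indicates the external NSS prior, and the other variables have meanings similar to those in Equation (4).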

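Instead of capturing fine-scale details of the image to be recovered, the external NSS prior aims to learn the rich structural information of natural images, such as edges with different orientations and contours with various shapes. Accordingly, rather than assigning an exclusive component to each patch group, the Expectation Maximization (EM) algorithm [53] is adopted to maximize Equation (9). In the E-step, the posterior probability $\gamma_{l,k}$ and the mixing weight $\pi_{ex,k}$ of the k-th component are updated as follows:

$$\gamma_{l,k}=\frac{\pi_{ex,k}\prod_{j=1}^{d}\mathcal{N}(\mathbf{z}_{l,j}\mid\boldsymbol{\mu}_{ex,k},\boldsymbol{\Sigma}_{ex,k})}{\sum_{k'=1}^{K_{ex}}\pi_{ex,k'}\prod_{j=1}^{d}\mathcal{N}(\mathbf{z}_{l,j}\mid\boldsymbol{\mu}_{ex,k'},\boldsymbol{\Sigma}_{ex,k'})},\tag{10}$$

$$\pi_{ex,k}=\frac{1}{L}\sum_{l=1}^{L}\gamma_{l,k}.\tag{11}$$

In the M-step, the k-th Gaussian component is calculated as:

$$\boldsymbol{\mu}_{ex,k}=\frac{\sum_{l=1}^{L}\gamma_{l,k}\sum_{j=1}^{d}\mathbf{z}_{l,j}}{d\sum_{l=1}^{L}\gamma_{l,k}},\tag{12}$$

$$\boldsymbol{\Sigma}_{ex,k}=\frac{\sum_{l=1}^{L}\gamma_{l,k}\sum_{j=1}^{d}(\mathbf{z}_{l,j}-\boldsymbol{\mu}_{ex,k})(\mathbf{z}_{l,j}-\boldsymbol{\mu}_{ex,k})^{T}}{d\sum_{l=1}^{L}\gamma_{l,k}}.\tag{13}$$

The external NSS prior is progressively learned by alternating these two steps until convergence; please refer to [53] for more details about the EM algorithm. In practice, since the internal NSS prior has already captured the main background (mean) information of the image to be recovered, i.e., $\boldsymbol{\mu}_{in,i}$, there is no need to learn it from training images. Therefore, all patch groups in $\{\mathbf{Z}_l\}_{l=1}^{L}$ are preprocessed by mean subtraction, and $\boldsymbol{\mu}_{ex,k}$ in Equation (13) is naturally set to $\mathbf{0}$. This mean subtraction also greatly reduces the total number of mixture components that need to be learned [1,28].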
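For the zero-mean case, the E- and M-steps above can be sketched in NumPy as below. Assumptions of ours, not details from the text: the training groups are stored as an array of shape (L, b, d) after mean subtraction, the covariances are initialized from a random partition of the groups, a fixed number of iterations replaces a convergence test, and a small ridge `reg` keeps every covariance invertible.

```python
import numpy as np

def group_loglik(Z, Sigma):
    """sum_j log N(z_j | 0, Sigma) for every group; Z has shape (L, b, d)."""
    _, b, d = Z.shape
    _, logdet = np.linalg.slogdet(Sigma)
    quad = np.sum(Z * np.linalg.solve(Sigma, Z), axis=(1, 2))   # sum_j z_j^T Sigma^{-1} z_j
    return -0.5 * (quad + d * (b * np.log(2 * np.pi) + logdet))

def em_zero_mean_pggmm(Z, K, iters=30, reg=1e-6, seed=0):
    """EM for a zero-mean patch-group GMM on mean-subtracted groups Z of shape (L, b, d)."""
    L, b, d = Z.shape
    rng = np.random.default_rng(seed)
    labels = rng.integers(K, size=L)                # random initial partition of the groups
    Sigmas = np.empty((K, b, b))
    for k in range(K):
        Zk = Z[labels == k] if np.any(labels == k) else Z
        P = Zk.transpose(1, 0, 2).reshape(b, -1)    # all patches of those groups as columns
        Sigmas[k] = P @ P.T / P.shape[1] + reg * np.eye(b)
    pis = np.full(K, 1.0 / K)
    for _ in range(iters):
        # E-step: posterior gamma[l, k] of component k for group l, then mixing weights.
        logp = np.stack([np.log(max(pis[k], 1e-300)) + group_loglik(Z, Sigmas[k])
                         for k in range(K)], axis=1)
        logp -= logp.max(axis=1, keepdims=True)     # stabilise before exponentiating
        gamma = np.exp(logp)
        gamma /= gamma.sum(axis=1, keepdims=True)
        pis = gamma.mean(axis=0)
        # M-step: weighted covariances; the mean stays 0 because groups are mean-subtracted.
        Nk = gamma.sum(axis=0) + 1e-12
        S = np.einsum("lk,lij,lmj->kim", gamma, Z, Z)   # sum_l gamma_lk sum_j z_j z_j^T
        Sigmas = S / (d * Nk[:, None, None]) + reg * np.eye(b)
    return pis, Sigmas
```
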

2.3. Learning the Hybrid NSS Prior for Patch Groups

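Now, for each patch group $\mathbf{X}_i$ of the image to be recovered, we learn the HNSS prior from its corresponding internal and external NSS priors. As described in Section 2.1, the Gaussian component with parameters $\boldsymbol{\mu}_{in,i}$ and $\boldsymbol{\Sigma}_{in,i}$ depicts the internal NSS prior of $\mathbf{X}_i$, and the most suitable external NSS prior for $\mathbf{X}_i$ is determined by calculating the MAP probability:

$$\hat{k}_i=\arg\max_{k}\ \ln P(k\mid\bar{\mathbf{X}}_i),\tag{14}$$

$$P(k\mid\bar{\mathbf{X}}_i)=\frac{\pi_{ex,k}\prod_{j=1}^{n}\mathcal{N}(\bar{\mathbf{x}}_{i,j}\mid\mathbf{0},\boldsymbol{\Sigma}_{ex,k}+\sigma_n^2\mathbf{I})}{\sum_{k'=1}^{K_{ex}}\pi_{ex,k'}\prod_{j=1}^{n}\mathcal{N}(\bar{\mathbf{x}}_{i,j}\mid\mathbf{0},\boldsymbol{\Sigma}_{ex,k'}+\sigma_n^2\mathbf{I})},\tag{15}$$

$$\bar{\mathbf{x}}_{i,j}=\mathbf{x}_{i,j}-\boldsymbol{\mu}_{in,i},\tag{16}$$

where $\mathbf{I}$ denotes the identity matrix and $\sigma_n$ is the noise standard deviation. The corresponding Gaussian component is parameterized by $\boldsymbol{\mu}_{ex,\hat{k}_i}=\mathbf{0}$ and $\boldsymbol{\Sigma}_{ex,\hat{k}_i}$.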
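A sketch of this component selection, assuming that the internal mean is subtracted from each patch and that noise is accounted for by adding $\sigma_n^2\mathbf{I}$ to each external covariance; the function names are hypothetical. Since the denominator of the posterior is the same for every k, it can be dropped when taking the argmax.

```python
import numpy as np

def gauss_loglik(Xbar, Sigma):
    """sum_j log N(xbar_j | 0, Sigma) over the columns of Xbar (shape (b, n))."""
    b, n = Xbar.shape
    _, logdet = np.linalg.slogdet(Sigma)
    quad = np.sum(Xbar * np.linalg.solve(Sigma, Xbar))
    return -0.5 * (quad + n * (b * np.log(2 * np.pi) + logdet))

def select_component(X, mu_in, pis, Sigmas, sigma_n):
    """Index of the external component with the largest posterior for patch group X."""
    Xbar = X - mu_in[:, None]                       # remove the internal mean
    b = X.shape[0]
    scores = [np.log(pis[k]) + gauss_loglik(Xbar, Sigmas[k] + sigma_n ** 2 * np.eye(b))
              for k in range(len(pis))]             # unnormalized log-posteriors
    return int(np.argmax(scores))
```
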

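Next, to better characterize the structure and detail information contained in patch group $\mathbf{X}_i$, we first learn a set of internal and external bases by performing SVD on $\boldsymbol{\Sigma}_{in,i}$ and $\boldsymbol{\Sigma}_{ex,\hat{k}_i}$, respectively:

$$\boldsymbol{\Sigma}_{in,i}=\mathbf{U}_{in,i}\boldsymbol{\Lambda}_{in,i}\mathbf{U}_{in,i}^{T},\qquad\boldsymbol{\Sigma}_{ex,\hat{k}_i}=\mathbf{U}_{ex,\hat{k}_i}\boldsymbol{\Lambda}_{ex,\hat{k}_i}\mathbf{U}_{ex,\hat{k}_i}^{T}.\tag{17}$$

With the internal NSS prior $\mathbf{U}_{in,i}$ and the external NSS prior $\mathbf{U}_{ex,\hat{k}_i}$, an improved HNSS prior $\mathbf{D}_i$ for $\mathbf{X}_i$ can then be learned in the following form:

$$\mathbf{D}_i=\mathbf{U}_{in,i}\mathbf{W}_i+\mathbf{U}_{ex,\hat{k}_i}(\mathbf{I}-\mathbf{W}_i),\tag{18}$$

where $\mathbf{W}_i=\operatorname{diag}(w_{i,1},\dots,w_{i,b})$ with $0\le w_{i,r}\le 1$. Equation (18) provides a simple yet flexible way to learn the HNSS prior. Specifically, a weighting scheme that assigns different weights to different bases is employed, and Equation (18) reduces to the internal or the external prior by setting $\mathbf{W}_i=\mathbf{I}$ or $\mathbf{W}_i=\mathbf{0}$, respectively.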

As shown in Equation (18), the problem becomes how to learn $\mathbf{W}_i$. A straightforward approach is to use a single constant weight for all bases, but this treats every basis equally. However, since $\mathbf{U}_{ex,\hat{k}_i}$ is learned from external natural images and represents the $\hat{k}_i$-th subspace of the external NSS prior, it is beneficial for recovering common latent structures but cannot adapt to the given image. Conversely, although $\mathbf{U}_{in,i}$ can characterize the fine-scale details that are particular to the degraded image, its common structures are disturbed by degradation. Therefore, different weights should be assigned to different bases. In fact, the SVD in Equation (17) already learns such weights implicitly. It is well known that the singular values in $\boldsymbol{\Lambda}$ characterize the importance of the corresponding singular vectors in $\mathbf{U}$. Concretely, singular vectors with large singular values characterize the main structure of the image, while those with small singular values represent fine-scale details. Hence, in this work, each weight $w_{i,r}$ is computed as follows:

where $\sigma_r$ denotes the r-th singular value obtained from the SVD in Equation (17).

By learning the HNSS prior $\mathbf{D}_i$ for each patch group in this manner, the HNSS prior for the whole image is formed as $\{\mathbf{D}_i\}_{i=1}^{N}$. In the next section, the learned prior is used for image restoration.
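A minimal sketch of the basis mixing under two assumptions of ours: the HNSS dictionary combines the r-th internal and r-th external basis vectors column by column, and the weights $w_r$ are supplied by the caller (the paper's own weighting rule is not reproduced here). Because the SVD of a symmetric positive semidefinite covariance coincides with its eigendecomposition, `numpy.linalg.eigh` is used. Eigenvector signs are arbitrary, so the sketch also flips each external vector to agree in sign with its internal counterpart, which is likewise our own choice.

```python
import numpy as np

def sorted_eigbasis(Sigma):
    """Eigenvectors of a symmetric PSD matrix, columns ordered by decreasing eigenvalue."""
    vals, vecs = np.linalg.eigh(Sigma)              # eigh returns ascending eigenvalues
    order = np.argsort(vals)[::-1]
    return vecs[:, order], vals[order]

def hybrid_dictionary(Sigma_in, Sigma_ex, w):
    """Column-wise mix D = U_in W + U_ex (I - W), with W = diag(w) (hypothetical helper)."""
    U_in, _ = sorted_eigbasis(Sigma_in)
    U_ex, _ = sorted_eigbasis(Sigma_ex)
    # Align signs so each external column has a non-negative inner product with
    # the matching internal column (an assumption; signs are otherwise arbitrary).
    signs = np.where(np.sum(U_in * U_ex, axis=0) < 0, -1.0, 1.0)
    U_ex = U_ex * signs
    return U_in * w + U_ex * (1.0 - w)              # broadcasting scales column r by w[r]
```

With `w` all ones this returns the internal basis and with `w` all zeros the (sign-aligned) external basis, mirroring the two limiting cases stated for Equation (18).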

3. Image Restoration via the Hybrid NSS Prior

In this section, we first formulate an HNSS prior-based structural sparse representation (HNSS-SSR) model with adaptive regularization parameters and then develop a general restoration algorithm by applying it to image restoration.

3.1. HNSS Prior-Based Structural Sparse Representation

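As described in Section 2, the learned HNSS prior can characterize both the common structures and the fine-scale details of the given image. Meanwhile, structural sparse representation has achieved notable success in many image restoration tasks [1,4,23,24,28,35]. Therefore, we incorporate the learned HNSS prior into structural sparse representation. Specifically, by using the learned HNSS prior $\mathbf{D}_i$ as the dictionary, the proposed HNSS-SSR model is formulated as:

$$\hat{\mathbf{A}}_i=\arg\min_{\mathbf{A}_i}\ \frac{1}{2}\|\mathbf{Y}_i-\mathbf{D}_i\mathbf{A}_i\|_F^2+\sum_{j=1}^{n}\|\boldsymbol{\lambda}_i\odot\boldsymbol{\alpha}_{i,j}\|_1,\tag{20}$$

where $\|\cdot\|_F$ denotes the Frobenius norm, $\mathbf{Y}_i=[\mathbf{y}_{i,1},\dots,\mathbf{y}_{i,n}]\in\mathbb{R}^{b\times n}$ is the matrix form of the observed patch group, with $\mathbf{y}_{i,j}\in\mathbb{R}^{b}$, $\mathbf{A}_i=[\boldsymbol{\alpha}_{i,1},\dots,\boldsymbol{\alpha}_{i,n}]$ stands for the group sparse coefficient, the $\ell_1$-norm is imposed on each column $\boldsymbol{\alpha}_{i,j}$ of $\mathbf{A}_i$, $\odot$ denotes element-wise multiplication, and $\boldsymbol{\lambda}_i=[\lambda_{i,1},\dots,\lambda_{i,b}]^{T}$ is a regularization parameter vector with non-negative $\lambda_{i,r}$. Note that, since $\mathbf{Y}_i$ contains similar patches, the same regularization parameter $\lambda_{i,r}$ is assigned to the coefficients associated with the r-th atom in $\mathbf{D}_i$.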

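To make the proposed HNSS-SSR model more stable, we connect the sparse estimation problem in Equation (20) with the MAP estimation problem to adaptively update the regularization parameters. Concretely, for a given patch group $\mathbf{Y}_i=\mathbf{X}_i+\mathbf{N}_i$ with $\mathbf{X}_i=\mathbf{D}_i\mathbf{A}_i$, where $\mathbf{N}_i$ is Gaussian noise with standard deviation $\sigma_n$, the MAP estimate of $\mathbf{A}_i$ can be formed as:

$$\hat{\mathbf{A}}_i=\arg\max_{\mathbf{A}_i}\ \ln P(\mathbf{A}_i\mid\mathbf{Y}_i)=\arg\min_{\mathbf{A}_i}\ \{-\ln P(\mathbf{Y}_i\mid\mathbf{A}_i)-\ln P(\mathbf{A}_i)\}.\tag{21}$$

In the literature, the i.i.d. Laplacian distribution is commonly used to characterize the statistical properties of sparse coefficients [1,11,13,28,35,47]. Hence, by imposing a Laplacian distribution with the same parameter on the coefficients associated with the same atom of $\mathbf{D}_i$, $P(\mathbf{A}_i)$ can be written as:

$$P(\mathbf{A}_i)=\prod_{r=1}^{b}\prod_{j=1}^{n}\frac{1}{\sqrt{2}\,\theta_{i,r}}\exp\Big(-\frac{\sqrt{2}\,|\alpha_{i,r,j}|}{\theta_{i,r}}\Big),\tag{22}$$

where $\alpha_{i,r,j}$ is the (r,j)-th element of $\mathbf{A}_i$, and $\theta_{i,r}$ is the estimated standard deviation of the coefficients in the r-th row of $\mathbf{A}_i$ [13,39]. Substituting Equation (22) and the Gaussian likelihood $P(\mathbf{Y}_i\mid\mathbf{A}_i)\propto\exp\big(-\|\mathbf{Y}_i-\mathbf{D}_i\mathbf{A}_i\|_F^2/(2\sigma_n^2)\big)$ into Equation (21) and multiplying by $\sigma_n^2$, we have:

$$\hat{\mathbf{A}}_i=\arg\min_{\mathbf{A}_i}\ \frac{1}{2}\|\mathbf{Y}_i-\mathbf{D}_i\mathbf{A}_i\|_F^2+\sum_{r=1}^{b}\frac{\sqrt{2}\,\sigma_n^2}{\theta_{i,r}}\sum_{j=1}^{n}|\alpha_{i,r,j}|.\tag{23}$$

By comparing Equation (20) with Equation (23), each $\lambda_{i,r}$ can be adaptively calculated as follows:

$$\lambda_{i,r}=\frac{\sqrt{2}\,\sigma_n^2}{\theta_{i,r}+\epsilon},\tag{24}$$

where $\epsilon$ is a small constant for numerical stability.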
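The adaptive rule can be sketched per patch group as below. We assume the fidelity term carries a factor ½, under which the Laplacian MAP argument gives $\lambda_r=\sqrt{2}\,\sigma_n^2/(\theta_r+\epsilon)$ (without the ½ the constant becomes $2\sqrt{2}$), and we estimate $\theta_r$ as the root-mean-square of the current coefficients of atom r, which equals the standard deviation of a zero-mean Laplacian. Both the estimator and the function name are hypothetical.

```python
import numpy as np

def adaptive_lambda(A, sigma_n, eps=1e-8):
    """Per-atom weights lambda_r = sqrt(2) * sigma_n**2 / (theta_r + eps).

    A has shape (b, n): row r holds the coefficients of atom r across the n
    similar patches; theta_r is estimated as the RMS of row r (an assumption).
    """
    theta = np.sqrt(np.mean(A ** 2, axis=1))
    return np.sqrt(2.0) * sigma_n ** 2 / (theta + eps)
```

Atoms with large coefficients (strong structure) receive small thresholds, while atoms with weak coefficients, which are more likely to carry noise, are shrunk more aggressively.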

Once the group sparse coefficient $\hat{\mathbf{A}}_i$ is estimated by solving Equation (20), the corresponding patch group can be reconstructed as:

$$\hat{\mathbf{X}}_i=\mathbf{D}_i\hat{\mathbf{A}}_i.\tag{25}$$

3.2. Image Restoration

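The proposed HNSS-SSR model is now applied to image restoration, and we develop a general restoration algorithm. Specifically, by embedding the proposed HNSS-SSR model of Equation (20) into the regularization problem of Equation (2), the HNSS-SSR-based restoration framework is formulated as:

$$(\hat{\mathbf{x}},\{\hat{\mathbf{A}}_i\})=\arg\min_{\mathbf{x},\{\mathbf{A}_i\}}\ \frac{1}{2}\|\mathbf{y}-\mathbf{H}\mathbf{x}\|_2^2+\rho\sum_{i=1}^{N}\Big(\frac{1}{2}\|\mathbf{R}_i\mathbf{x}-\mathbf{D}_i\mathbf{A}_i\|_F^2+\sum_{j=1}^{n}\|\boldsymbol{\lambda}_i\odot\boldsymbol{\alpha}_{i,j}\|_1\Big),\tag{26}$$

where $\mathbf{R}_i\mathbf{x}=[\mathbf{R}_{i,1}\mathbf{x},\dots,\mathbf{R}_{i,n}\mathbf{x}]$ denotes the patch group extraction operation, $\mathbf{R}_{i,j}$ is a patch extraction matrix, and $\rho>0$ is a regularization parameter. With the learned HNSS prior, the restoration framework in Equation (26) can adapt to the image to be recovered while mitigating overfitting to degradation.

Then, we employ the alternating minimization strategy to efficiently solve Equation (26). In particular, Equation (26) is decomposed into an $\mathbf{A}_i$ sub-problem and an $\mathbf{x}$ sub-problem, both of which can be solved efficiently.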

3.2.1. Solving the $\mathbf{A}_i$ Sub-Problem

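Given $\mathbf{x}$, Equation (26) reduces to the following sub-problem:

$$\hat{\mathbf{A}}_i=\arg\min_{\mathbf{A}_i}\ \frac{1}{2}\|\mathbf{R}_i\mathbf{x}-\mathbf{D}_i\mathbf{A}_i\|_F^2+\sum_{j=1}^{n}\|\boldsymbol{\lambda}_i\odot\boldsymbol{\alpha}_{i,j}\|_1,\quad i=1,\dots,N,\tag{27}$$

which consists of a series of HNSS-SSR problems of the form of Equation (20) with $\mathbf{Y}_i=\mathbf{R}_i\mathbf{x}$. We therefore adopt the Iterative Soft Thresholding Algorithm (ISTA) [54] to update $\mathbf{A}_i$, i.e.,

$$\mathbf{A}_i^{(l+1)}=\mathcal{S}_{\boldsymbol{\lambda}_i/c}\Big(\mathbf{A}_i^{(l)}+\frac{1}{c}\mathbf{D}_i^{T}\big(\mathbf{R}_i\mathbf{x}-\mathbf{D}_i\mathbf{A}_i^{(l)}\big)\Big),\tag{28}$$

where l is the ISTA iteration index, c is the squared spectral norm of $\mathbf{D}_i$, and $\mathcal{S}_{\boldsymbol{\tau}}(\cdot)$ is the soft-thresholding operator:

$$\mathcal{S}_{\boldsymbol{\tau}}(\mathbf{Z})=\operatorname{sign}(\mathbf{Z})\odot\max\big(|\mathbf{Z}|-\boldsymbol{\tau}\mathbf{1}^{T},\,0\big),\tag{29}$$

where $\mathbf{1}\in\mathbb{R}^{n}$ is a vector with all elements equal to 1, and ⊙ denotes element-wise multiplication. Because each sub-problem in Equation (27) is convex, ISTA is guaranteed to converge to a global optimum [54].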
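The ISTA update for one patch group can be sketched as follows. The sketch assumes the ½-scaled fidelity term, so the step size is 1/c with c the squared spectral norm of the dictionary and the threshold for atom r is $\lambda_r/c$; the fixed iteration count and the function names are our own choices.

```python
import numpy as np

def soft_threshold(Z, tau):
    """Element-wise soft thresholding; tau (shape (b,)) is broadcast across columns (tau 1^T)."""
    return np.sign(Z) * np.maximum(np.abs(Z) - tau[:, None], 0.0)

def ista(D, Y, lam, iters=200):
    """ISTA for min_A 0.5*||Y - D A||_F^2 + sum_j ||lam * a_j||_1 (lam shared by all columns)."""
    c = np.linalg.norm(D, 2) ** 2                   # squared spectral norm of D
    A = np.zeros((D.shape[1], Y.shape[1]))
    for _ in range(iters):
        A = soft_threshold(A + D.T @ (Y - D @ A) / c, lam / c)
    return A
```

For an orthonormal dictionary, c equals 1 and a single step already returns the closed-form solution $\mathcal{S}_{\boldsymbol{\lambda}}(\mathbf{D}^{T}\mathbf{Y})$; the iterations matter only when the dictionary is not orthonormal.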

3.2.2. Solving the $\mathbf{x}$ Sub-Problem

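Given the updated $\hat{\mathbf{A}}_i$, let $\hat{\mathbf{X}}_i=\mathbf{D}_i\hat{\mathbf{A}}_i$; the $\mathbf{x}$ sub-problem then becomes:

$$\hat{\mathbf{x}}=\arg\min_{\mathbf{x}}\ \frac{1}{2}\|\mathbf{y}-\mathbf{H}\mathbf{x}\|_2^2+\frac{\rho}{2}\sum_{i=1}^{N}\|\mathbf{R}_i\mathbf{x}-\hat{\mathbf{X}}_i\|_F^2,\tag{30}$$

which admits the following closed-form solution:

$$\hat{\mathbf{x}}=\Big(\mathbf{H}^{T}\mathbf{H}+\rho\sum_{i=1}^{N}\sum_{j=1}^{n}\mathbf{R}_{i,j}^{T}\mathbf{R}_{i,j}\Big)^{-1}\Big(\mathbf{H}^{T}\mathbf{y}+\rho\sum_{i=1}^{N}\sum_{j=1}^{n}\mathbf{R}_{i,j}^{T}\hat{\mathbf{x}}_{i,j}\Big),\tag{31}$$

where $\rho>0$ is the regularization parameter and $\hat{\mathbf{x}}_{i,j}$ stands for the j-th column vector in $\hat{\mathbf{X}}_i$.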
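For denoising ($\mathbf{H}=\mathbf{I}$) the matrix being inverted is diagonal, because $\sum_{i,j}\mathbf{R}_{i,j}^{T}\mathbf{R}_{i,j}$ simply counts how many selected patches cover each pixel, so the update reduces to a pixel-wise weighted average of the observation and the overlapping patch estimates. The sketch below covers only that special case; for a general $\mathbf{H}$ an iterative solver such as conjugate gradients would typically be used instead. The function name and the (row, col) top-left patch-position format are assumptions.

```python
import numpy as np

def aggregate_denoise(y, patches, coords, p, rho):
    """x = (y + rho * sum R^T xhat) / (1 + rho * sum R^T R), valid only when H = I.

    patches: (p*p, M) estimated patches (columns of all X_i stacked together);
    coords:  list of M (row, col) top-left corners of those patches.
    """
    num = np.asarray(y, dtype=float).copy()
    den = np.ones_like(num)
    for m, (r, c) in enumerate(coords):
        num[r:r + p, c:c + p] += rho * patches[:, m].reshape(p, p)
        den[r:r + p, c:c + p] += rho                # one more patch covers these pixels
    return num / den
```
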

In practice, better performance can be achieved by alternately solving the above $\mathbf{A}_i$ and $\mathbf{x}$ sub-problems for T iterations. To mitigate the effect of degradation on prior learning, in the t-th iteration, the output of the previous iteration is used to update the HNSS prior. Furthermore, to obtain stable solutions, the iterative regularization strategy [55] is employed to estimate the noise standard deviation $\sigma_n$ in each iteration as follows:

where $\gamma$ denotes a constant. Algorithm 1 summarizes the complete HNSS-SSR-based restoration algorithm.

Algorithm 1 HNSS-SSR-based Image Restoration

Input: Degraded image $\mathbf{y}$, measurement matrix $\mathbf{H}$, and external NSS prior GMM model.
Output: The restored image $\hat{\mathbf{x}}$.

4. Experimental Results

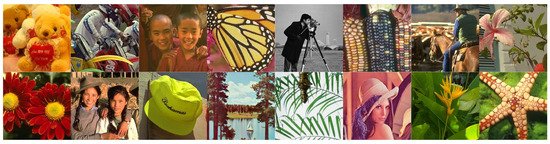

Here, we conduct image denoising and deblurring experiments to verify the effectiveness of the learned HNSS prior and the proposed restoration algorithm. Figure 2 shows the 16 test images used in this work. Since the human visual system is more sensitive to variations in luminance, restoration of color images is performed only on the luminance channel. To objectively assess the different restoration algorithms, the peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) [56] are jointly used as evaluation metrics. For fair comparison, we run the source codes released by the authors to obtain the restoration results of the competing approaches. In external NSS prior learning, the total number of Gaussian components $K_{ex}$ and the number of similar patches d are set to 32 and 10, respectively. The patch groups for learning were extracted from the Kodak PhotoCD dataset (http://r0k.us/graphics/kodak/, accessed on 13 September 2022).
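For reference, a minimal sketch of PSNR as it is commonly defined, assuming 8-bit intensities with a peak value of 255; the function name is hypothetical, and SSIM, which involves local windowed statistics, is not reproduced here.

```python
import numpy as np

def psnr(ref, est, peak=255.0):
    """Peak signal-to-noise ratio in dB for images with intensity range [0, peak]."""
    mse = np.mean((np.asarray(ref, dtype=float) - np.asarray(est, dtype=float)) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)
```

For example, a uniform error of one gray level (MSE = 1) gives $10\log_{10}(255^2)\approx 48.13$ dB.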

Figure 2.

Test images in experiments.

4.1. Image Denoising

This subsection presents image denoising experiments using the proposed HNSS-SSR restoration algorithm. Image denoising is an ideal benchmark for evaluating image priors and restoration algorithms. The noisy observations are generated by corrupting the test images with additive white Gaussian noise. The detailed parameter settings for the denoising experiments are given below. The size of the image patch is set to , , and for , , and , respectively. The number of similar patches n, scaling factor , and number of iterations T are set to , , , and for , , , and , respectively. We empirically fix the regularization parameter for all cases.

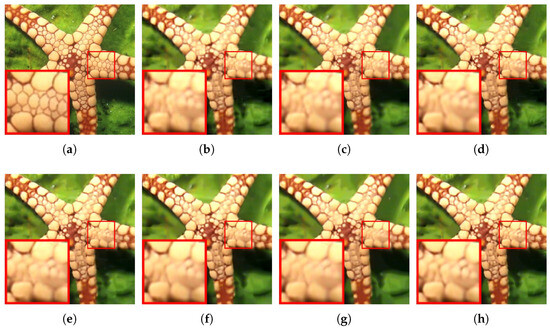

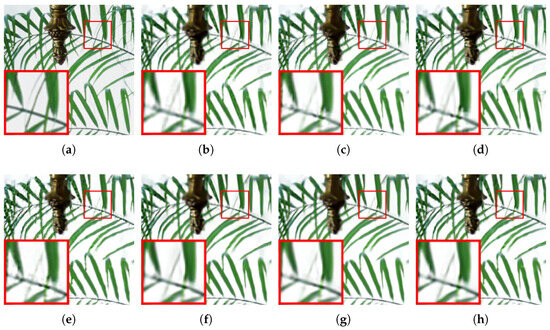

To objectively demonstrate its denoising capability, the proposed HNSS-SSR is first compared with several state-of-the-art denoising algorithms, including BM3D [29], NCSR [13], PGPD [28], GSRC-ENSS [1], RRC [41], and SNSS [24]. Among them, BM3D, NCSR, and RRC utilize the internal NSS prior, while PGPD uses the external NSS prior; GSRC-ENSS and SNSS jointly use internal and external NSS priors and achieve superior denoising performance. Table 1 and Table 2 list the denoising results of the competing approaches, with the highest objective metric values marked in bold. The proposed HNSS-SSR delivers strong denoising performance. Specifically, Table 1 shows that HNSS-SSR achieves the highest PSNR in the majority of cases. In terms of average PSNR, HNSS-SSR outperforms BM3D by 0.35 dB, NCSR by 0.50 dB, PGPD by 0.18 dB, GSRC-ENSS by 0.25 dB, RRC by 0.22 dB, and SNSS by 0.17 dB. Table 2 shows that the SSIM results of HNSS-SSR exceed those of the competing approaches in most cases; in terms of average SSIM, HNSS-SSR achieves gains of 0.0112–0.0278, 0.0116–0.0228, 0.0044–0.0254, 0.0149–0.0225, 0.0058–0.0170, and 0.0075–0.0133 over the six methods above, respectively. The visual denoising results are presented in Figure 3 and Figure 4. In Figure 3, the comparison methods tend to over-smooth edge details. In Figure 4, the comparison algorithms not only smooth the latent structure but also suffer from varying degrees of undesired artifacts. In contrast, HNSS-SSR recovers the latent structure and fine-scale details more faithfully while effectively suppressing artifacts.

Table 1.

PSNR comparison of BM3D [29], NCSR [13], PGPD [28], GSRC-ENSS [1], RRC [41], SNSS [24], and HNSS-SSR for image denoising.

Table 2.

SSIM comparison of BM3D [29], NCSR [13], PGPD [28], GSRC-ENSS [1], RRC [41], SNSS [24], and HNSS-SSR for image denoising.

Figure 3.

Denoising visual results for Starfish with . (a) Original image; (b) BM3D [29] (PSNR = 25.04 dB, SSIM = 0.7433); (c) NCSR [13] (PSNR = 25.09 dB, SSIM = 0.7453); (d) PGPD [28] (PSNR = 25.11 dB, SSIM = 0.7454); (e) GSRC-ENSS [1] (PSNR = 25.44 dB, SSIM = 0.7606); (f) RRC [41] (PSNR = 25.34 dB, SSIM = 0.7589); (g) SNSS [24] (PSNR = 25.25 dB, SSIM = 0.7491); (h) HNSS-SSR (PSNR = 25.53 dB, SSIM = 0.7671).

Figure 4.

Denoising visual results for Leaves with . (a) Original image; (b) BM3D [29] (PSNR = 22.49 dB, SSIM = 0.8072); (c) NCSR [13] (PSNR = 22.60 dB, SSIM = 0.8233); (d) PGPD [28] (PSNR = 22.61 dB, SSIM = 0.8121); (e) GSRC-ENSS [1] (PSNR = 22.90 dB, SSIM = 0.8339); (f) RRC [41] (PSNR = 22.91 dB, SSIM = 0.8377); (g) SNSS [24] (PSNR = 22.98 dB, SSIM = 0.8365); (h) HNSS-SSR (PSNR = 23.17 dB, SSIM = 0.8465).

We also evaluate the proposed HNSS-SSR on the BSD68 dataset [57]. In addition to the above methods, two recently proposed methods with excellent denoising performance, i.e., GSMM [46] and LRENSS [7], are also used to compare with our method. Table 3 lists the corresponding PSNR and SSIM results. Note that the denoising results of GSMM are quoted from Reference [46]. From Table 3, it can be seen that the proposed HNSS-SSR consistently outperforms all other methods except LRENSS. Furthermore, the denoising results of the proposed HNSS-SSR are comparable to LRENSS in terms of PSNR and SSIM.

Table 3.

Average denoising result comparison of BM3D [29], NCSR [13], PGPD [28], GSRC-ENSS [1], RRC [41], SNSS [24], GSMM [46], LRENSS [7], and HNSS-SSR on the BSD68 dataset [57].

The effectiveness of HNSS-SSR is further demonstrated by comparing it with deep learning-based denoising approaches. Specifically, we evaluate HNSS-SSR, TNRD [19], and S2S [58] on the Set12 dataset [20]. The average PSNR and SSIM results are listed in Table 4, with the best results highlighted in bold. HNSS-SSR outperforms both TNRD and S2S; in particular, it achieves average PSNR gains of 0.19 dB and 0.43 dB and average SSIM gains of 0.0072 and 0.0196 over TNRD and S2S, respectively.

Table 4.

Average denoising result comparison of TNRD [19], S2S [58], and HNSS-SSR on the Set12 dataset [20].

4.2. Image Deblurring

In this subsection, we apply the proposed HNSS-SSR to image deblurring. Following prior works [13,24], we adopt the uniform blur kernel with size and the Gaussian kernel with standard deviation 1.6 to assess all deblurring approaches. Each test image is first blurred by a blur kernel and then corrupted by additive white Gaussian noise with standard deviation to generate the degraded image. In deblurring experiments, we set to , respectively.
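The degradation protocol can be sketched as below. The uniform-kernel size and the noise level are left as parameters because their values are not given here; the support size of the Gaussian kernel (standard deviation 1.6 in the text) is likewise our assumption, and the FFT-based circular convolution is a boundary-handling choice that may differ from the one used in the experiments. All function names are hypothetical.

```python
import numpy as np

def uniform_kernel(size):
    """size x size box blur, normalized to sum to 1."""
    return np.full((size, size), 1.0 / size ** 2)

def gaussian_kernel(size, std):
    """Separable Gaussian blur kernel with odd support `size`, normalized to sum to 1."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-ax ** 2 / (2.0 * std ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def blur_and_noise(img, kernel, sigma, rng):
    """Circular convolution via FFT, then additive white Gaussian noise of std `sigma`."""
    kh, kw = kernel.shape
    pad = np.zeros_like(img, dtype=float)
    pad[:kh, :kw] = kernel
    pad = np.roll(pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))  # center the kernel at (0, 0)
    blurred = np.real(np.fft.ifft2(np.fft.fft2(img) * np.fft.fft2(pad)))
    return blurred + sigma * rng.standard_normal(img.shape)
```

Centering the kernel at the origin before the FFT keeps the blurred image aligned with the original, so a single bright pixel spreads symmetrically into a copy of the kernel.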

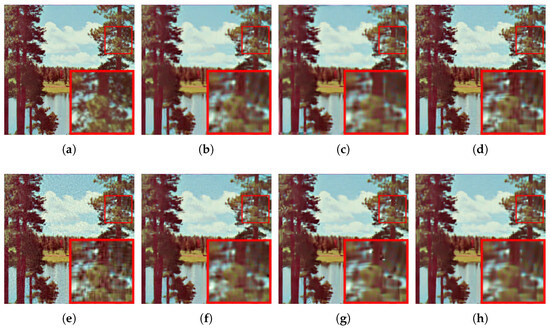

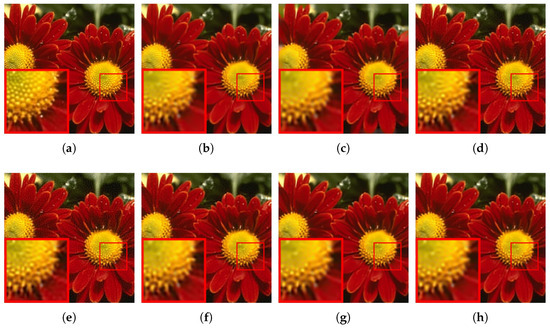

The deblurring performance of HNSS-SSR is verified by comparing it with several leading methods, including BM3D [59], EPLL [14], NCSR [13], JSM [60], MS-EPLL [6], and SNSS [24]. BM3D, EPLL, and NCSR are three classical deblurring approaches, while JSM, MS-EPLL, and SNSS are more recently developed algorithms with advanced performance. All comparison methods except SNSS rely on a single prior, whereas SNSS uses both internal and external NSS priors. The deblurring results are presented in Table 5 and Table 6. HNSS-SSR achieves the highest PSNR and SSIM in most cases, and only SNSS is slightly better in a few individual cases. For uniform blur, HNSS-SSR achieves {1.35 dB, 3.25 dB, 0.33 dB, 3.16 dB, 2.85 dB, 0.18 dB} average PSNR gains and {0.0391, 0.0391, 0.0135, 0.1672, 0.0340, 0.0030} average SSIM gains over BM3D, EPLL, NCSR, JSM, MS-EPLL, and SNSS, respectively. For Gaussian blur, HNSS-SSR achieves {1.22 dB, 5.51 dB, 0.67 dB, 1.64 dB, 4.78 dB, 0.35 dB} average PSNR gains and {0.0265, 0.0440, 0.0214, 0.0675, 0.0408, 0.0034} average SSIM gains over BM3D, EPLL, NCSR, JSM, MS-EPLL, and SNSS, respectively. The visual deblurring results are presented in Figure 5 and Figure 6. BM3D, NCSR, JSM, and MS-EPLL produce noticeable artifacts, while EPLL and SNSS cause over-smoothing. In comparison, HNSS-SSR effectively suppresses artifacts and produces visually pleasing results.

Table 5.

PSNR comparison of BM3D [59], EPLL [14], NCSR [13], JSM [60], MS-EPLL [6], SNSS [24], and HNSS-SSR for image deblurring.

Table 6.

SSIM comparison of BM3D [59], EPLL [14], NCSR [13], JSM [60], MS-EPLL [6], SNSS [24], and HNSS-SSR for image deblurring.

Figure 5.

Deblurring results for Lake with uniform kernel. (a) Original image; (b) BM3D [59] (PSNR = 27.32 dB, SSIM = 0.8230); (c) EPLL [14] (PSNR = 25.12 dB, SSIM = 0.8285); (d) NCSR [13] (PSNR = 28.12 dB, SSIM = 0.8471); (e) JSM [60] (PSNR = 25.90 dB, SSIM = 0.7021); (f) MS-EPLL [6] (PSNR = 25.74 dB, SSIM = 0.8288); (g) SNSS [24] (PSNR = 28.06 dB, SSIM = 0.8538); (h) HNSS-SSR (PSNR = 28.41 dB, SSIM = 0.8609).

Figure 6.

Deblurring results for Flowers with Gaussian kernel. (a) Original image; (b) BM3D [59] (PSNR = 29.84 dB, SSIM = 0.8592); (c) EPLL [14] (PSNR = 25.14 dB, SSIM = 0.8397); (d) NCSR [13] (PSNR = 30.20 dB, SSIM = 0.8617); (e) JSM [60] (PSNR = 29.51 dB, SSIM = 0.8081); (f) MS-EPLL [6] (PSNR = 27.20 dB, SSIM = 0.8569); (g) SNSS [24] (PSNR = 30.25 dB, SSIM = 0.8773); (h) HNSS-SSR (PSNR = 30.52 dB, SSIM = 0.8827).

The proposed HNSS-SSR is also tested on the Set14 dataset [61], and compared with the recently proposed JGD-SSR model [3] and LRENSS prior [7]. Note that JGD-SSR jointly utilizes the internal and external NSS priors, while LRENSS jointly utilizes the low-rank prior and external NSS prior. The average PSNR and SSIM results are listed in Table 7. It can be seen that the proposed HNSS-SSR has performance comparable to JGD-SSR and LRENSS and has considerable PSNR and SSIM gains compared to other methods.

Table 7.

Average deblurring result comparison of BM3D [59], EPLL [14], NCSR [13], JSM [60], MS-EPLL [6], SNSS [24], JGD-SSR [3], LRENSS [7], and HNSS-SSR on the Set14 dataset [61].

The benefit of HNSS-SSR is further evidenced by a comparison with deep learning-based approaches, specifically RED [62], IRCNN [63], and H-PnP [64], on the Set14 dataset [61]. Table 8 presents the deblurring results. HNSS-SSR clearly outperforms RED. Meanwhile, it yields PSNR results comparable to those of IRCNN and H-PnP and achieves the best SSIM results. SSIM is generally regarded as more consistent with human visual perception than PSNR [56]. In particular, the SSIM gains of HNSS-SSR over RED, IRCNN, and H-PnP are 0.0081, 0.0045, and 0.0038, respectively.

Table 8.

Average deblurring result comparison of RED [62], IRCNN [63], H-PnP [64], and HNSS-SSR on the Set14 dataset [61].

4.3. Computational Time

In this subsection, we report the running time of different denoising and deblurring methods on the image in Table 9. All methods are run on a PC with an Intel® Core™ i7-9700 3.00 GHz CPU under MATLAB 2019a. Note that the results of GSMM are quoted from Reference [46], so its running time is not reported here. For image denoising, the proposed HNSS-SSR is slower only than BM3D and PGPD, and for image deblurring, it is faster than SNSS and LRENSS.

Table 9.

Running time in seconds (s) of different denoising and deblurring methods.

5. Conclusions

This paper proposed to learn a new NSS prior, namely the HNSS prior, from both internal and external image data and applied it to image restoration. Two sets of GMMs depicting the internal and external NSS priors were first learned from the degraded observation and a natural image set, respectively. Subsequently, based on the learned internal and external priors, the HNSS prior, which better characterizes image structure and detail information, was efficiently learned by SVD and a simple weighting method. An HNSS prior-based structural sparse representation (HNSS-SSR) model with adaptive regularization parameters was then formulated for image restoration. Further, we adopted an alternating minimization strategy to solve the corresponding restoration problem, resulting in a general restoration algorithm. Experimental results have validated that, compared with many classical and state-of-the-art approaches, the proposed HNSS-SSR algorithm not only provides better visual results but also yields competitive PSNR and SSIM values.

Author Contributions

Conceptualization, W.Y. and H.L.; methodology, W.Y. and H.L.; software, W.Y.; resources, H.L., L.L. and W.W.; writing—original draft preparation, W.Y.; writing—review and editing, W.Y., H.L., L.L. and W.W.; supervision, H.L., L.L. and W.W.; funding acquisition, H.L., L.L. and W.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under Grants 92270117, U2034209, and 62376214, the Natural Science Basic Research Program of Shaanxi under Grant 2023-JC-YB-533, and the Construction Project of Qin Chuangyuan Scientists and Engineers in Shaanxi Province under Grant 2024QCY-KXJ-160.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Zha, Z.; Zhang, X.; Wang, Q.; Bai, Y.; Chen, Y.; Tang, L.; Liu, X. Group sparsity residual constraint for image denoising with external nonlocal self-similarity prior. Neurocomputing 2018, 275, 2294–2306.

2. Yuan, W.; Liu, H.; Liang, L.; Xie, G.; Zhang, Y.; Liu, D. Rank minimization via adaptive hybrid norm for image restoration. Signal Process. 2023, 206, 108926.

3. Yuan, W.; Liu, H.; Liang, L. Joint group dictionary-based structural sparse representation for image restoration. Digit. Signal Process. 2023, 137, 104029.

4. Zhang, J.; Zhao, D.; Gao, W. Group-based sparse representation for image restoration. IEEE Trans. Image Process. 2014, 23, 3336–3351.

5. Zha, Z.; Wen, B.; Yuan, X.; Zhou, J.; Zhu, C.; Kot, A.C. A hybrid structural sparsification error model for image restoration. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 4451–4465.

6. Papyan, V.; Elad, M. Multi-scale patch-based image restoration. IEEE Trans. Image Process. 2016, 25, 249–261.

7. Yuan, W.; Liu, H.; Liang, L.; Wang, W.; Liu, D. Image restoration via joint low-rank and external nonlocal self-similarity prior. Signal Process. 2024, 215, 109284.

8. Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

9. Elad, M.; Aharon, M. Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans. Image Process. 2006, 15, 3736–3745.

10. Mairal, J.; Elad, M.; Sapiro, G. Sparse representation for color image restoration. IEEE Trans. Image Process. 2007, 17, 53–69.

11. Dong, W.; Zhang, L.; Shi, G.; Wu, X. Image deblurring and super-resolution by adaptive sparse domain selection and adaptive regularization. IEEE Trans. Image Process. 2011, 20, 1838–1857.

12. Gai, S. Theory of reduced biquaternion sparse representation and its applications. Expert Syst. Appl. 2023, 213, 119245.

13. Dong, W.; Zhang, L.; Shi, G.; Li, X. Nonlocally centralized sparse representation for image restoration. IEEE Trans. Image Process. 2013, 22, 1620–1630.

14. Zoran, D.; Weiss, Y. From learning models of natural image patches to whole image restoration. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 479–486.

15. Yu, G.; Sapiro, G.; Mallat, S. Solving inverse problems with piecewise linear estimators: From Gaussian mixture models to structured sparsity. IEEE Trans. Image Process. 2012, 21, 2481–2499.

16. Colak, O.; Eksioglu, E.M. On the fly image denoising using patch ordering. Expert Syst. Appl. 2022, 190, 116192.

17. Burger, H.C.; Schuler, C.J.; Harmeling, S. Image denoising: Can plain neural networks compete with BM3D? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2392–2399.

18. Wang, J.; Wang, Z.; Yang, A. Iterative dual CNNs for image deblurring. Mathematics 2022, 10, 3891.

19. Chen, Y.; Pock, T. Trainable nonlinear reaction diffusion: A flexible framework for fast and effective image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1256–1272.

20. Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

21. Zhang, K.; Li, Y.; Zuo, W.; Zhang, L.; Van Gool, L.; Timofte, R. Plug-and-play image restoration with deep denoiser prior. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6360–6376.

22. Li, X.; Wang, J.; Liu, X. Deep successive convex approximation for image super-resolution. Mathematics 2023, 11, 651.

23. Yuan, W.; Liu, H.; Liang, L. Image restoration via exponential scale mixture-based simultaneous sparse prior. IET Image Process. 2022, 16, 3268–3283.

24. Zha, Z.; Yuan, X.; Zhou, J.; Zhu, C.; Wen, B. Image restoration via simultaneous nonlocal self-similarity priors. IEEE Trans. Image Process. 2020, 29, 8561–8576.

25. Zha, Z.; Yuan, X.; Wen, B.; Zhang, J.; Zhou, J.; Zhu, C. Simultaneous nonlocal self-similarity prior for image denoising. In Proceedings of the IEEE International Conference on Image Processing, Taipei, Taiwan, 22–25 September 2019; pp. 1119–1123.

26. Wen, B.; Li, Y.; Bresler, Y. Image recovery via transform learning and low-rank modeling: The power of complementary regularizers. IEEE Trans. Image Process. 2020, 29, 5310–5323.

27. Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–26 June 2005; Volume 2, pp. 60–65.

28. Xu, J.; Zhang, L.; Zuo, W.; Zhang, D.; Feng, X. Patch group based nonlocal self-similarity prior learning for image denoising. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 244–252.

29. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

30. Hou, Y.; Xu, J.; Liu, M.; Liu, G.; Liu, L.; Zhu, F.; Shao, L. NLH: A blind pixel-level non-local method for real-world image denoising. IEEE Trans. Image Process. 2020, 29, 5121–5135.

31. Mairal, J.; Bach, F.; Ponce, J.; Sapiro, G.; Zisserman, A. Non-local sparse models for image restoration. In Proceedings of the IEEE International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2272–2279.

32. Dong, W.; Shi, G.; Ma, Y.; Li, X. Image restoration via simultaneous sparse coding: Where structured sparsity meets Gaussian scale mixture. Int. J. Comput. Vis. 2015, 114, 217–232.

33. Yuan, W.; Liu, H.; Liang, L.; Wang, W.; Liu, D. A hybrid structural sparse model for image restoration. Opt. Laser Technol. 2022, 171, 110401.

34. Ou, Y.; Swamy, M.; Luo, J.; Li, B. Single image denoising via multi-scale weighted group sparse coding. Signal Process. 2022, 200, 108650.

35. Zha, Z.; Yuan, X.; Wen, B.; Zhou, J.; Zhu, C. Group sparsity residual constraint with non-local priors for image restoration. IEEE Trans. Image Process. 2020, 29, 8960–8975.

36. Zha, Z.; Yuan, X.; Wen, B.; Zhang, J.; Zhou, J.; Zhu, C. Image restoration using joint patch-group-based sparse representation. IEEE Trans. Image Process. 2020, 29, 7735–7750.

37. Zha, Z.; Wen, B.; Yuan, X.; Zhou, J.; Zhu, C. Image restoration via reconciliation of group sparsity and low-rank models. IEEE Trans. Image Process. 2021, 30, 5223–5238.

38. Zha, Z.; Wen, B.; Yuan, X.; Zhou, J.; Zhu, C.; Kot, A.C. Low-rankness guided group sparse representation for image restoration. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 7593–7607.

39. Dong, W.; Shi, G.; Li, X. Nonlocal image restoration with bilateral variance estimation: A low-rank approach. IEEE Trans. Image Process. 2012, 22, 700–711.

40. Gu, S.; Xie, Q.; Meng, D.; Zuo, W.; Feng, X.; Zhang, L. Weighted nuclear norm minimization and its applications to low level vision. Int. J. Comput. Vis. 2017, 121, 183–208.

41. Zha, Z.; Yuan, X.; Wen, B.; Zhou, J.; Zhang, J.; Zhu, C. From rank estimation to rank approximation: Rank residual constraint for image restoration. IEEE Trans. Image Process. 2019, 29, 3254–3269.

42. Chen, J.F.; Wang, Q.W.; Song, G.J.; Li, T. Quaternion matrix factorization for low-rank quaternion matrix completion. Mathematics 2023, 11, 2144.

43. Xu, C.; Liu, X.; Zheng, J.; Shen, L.; Jiang, Q.; Lu, J. Nonlocal low-rank regularized two-phase approach for mixed noise removal. Inverse Probl. 2021, 37, 085001.

44. Lu, J.; Xu, C.; Hu, Z.; Liu, X.; Jiang, Q.; Meng, D.; Lin, Z. A new nonlocal low-rank regularization method with applications to magnetic resonance image denoising. Inverse Probl. 2022, 38, 065012.

45. Li, H.; Jia, X.; Zhang, L. Clustering based content and color adaptive tone mapping. Comput. Vis. Image Underst. 2018, 168, 37–49.

46. Liu, H.; Li, L.; Lu, J.; Tan, S. Group sparsity mixture model and its application on image denoising. IEEE Trans. Image Process. 2022, 31, 5677–5690.

47. Xu, J.; Zhang, L.; Zhang, D. External prior guided internal prior learning for real-world noisy image denoising. IEEE Trans. Image Process. 2018, 27, 2996–3010.

48. Yue, H.; Sun, X.; Yang, J.; Wu, F. CID: Combined image denoising in spatial and frequency domains using Web images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Mandi, India, 16–19 December 2014; pp. 2933–2940.

49. Liu, J.; Yang, W.; Zhang, X.; Guo, Z. Retrieval compensated group structured sparsity for image super-resolution. IEEE Trans. Multimed. 2017, 19, 302–316.

50. Zoran, D.; Weiss, Y. Natural images, Gaussian mixtures and dead leaves. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2012; Volume 25.

51. Keller, J.M.; Gray, M.R.; Givens, J.A. A fuzzy k-nearest neighbor algorithm. IEEE Trans. Syst. Man Cybern. 1985, SMC-15, 580–585.

52. Niknejad, M.; Rabbani, H.; Babaie-Zadeh, M. Image restoration using Gaussian mixture models with spatially constrained patch clustering. IEEE Trans. Image Process. 2015, 24, 3624–3636.

53. Wu, C.J. On the convergence properties of the EM algorithm. Ann. Stat. 1983, 11, 95–103.

54. Daubechies, I.; Defrise, M.; De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 2004, 57, 1413–1457.

55. Osher, S.; Burger, M.; Goldfarb, D.; Xu, J.; Yin, W. An iterative regularization method for total variation-based image restoration. Multiscale Model. Simul. 2005, 4, 460–489.

56. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

57. Arbelaez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 898–916.

58. Quan, Y.; Chen, M.; Pang, T.; Ji, H. Self2self with dropout: Learning self-supervised denoising from single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1890–1898.

59. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image restoration by sparse 3D transform-domain collaborative filtering. In Proceedings of the Image Processing: Algorithms and Systems VI, San Jose, CA, USA, 28–29 January 2008; pp. 62–73.

60. Zhang, J.; Zhao, D.; Xiong, R.; Ma, S.; Gao, W. Image restoration using joint statistical modeling in a space-transform domain. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 915–928.

61. Zeyde, R.; Elad, M.; Protter, M. On single image scale-up using sparse-representations. In Proceedings of the Curves and Surfaces: 7th International Conference, Avignon, France, 24–30 June 2010; pp. 711–730.

62. Romano, Y.; Elad, M.; Milanfar, P. The little engine that could: Regularization by denoising (RED). SIAM J. Imaging Sci. 2017, 10, 1804–1844.

63. Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning deep CNN denoiser prior for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3929–3938.

64. Zha, Z.; Wen, B.; Yuan, X.; Zhou, J.T.; Zhou, J.; Zhu, C. Triply complementary priors for image restoration. IEEE Trans. Image Process. 2021, 30, 5819–5834.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).