Ocelli: Efficient Processing-in-Pixel Array Enabling Edge Inference of Ternary Neural Networks

Abstract

1. Introduction

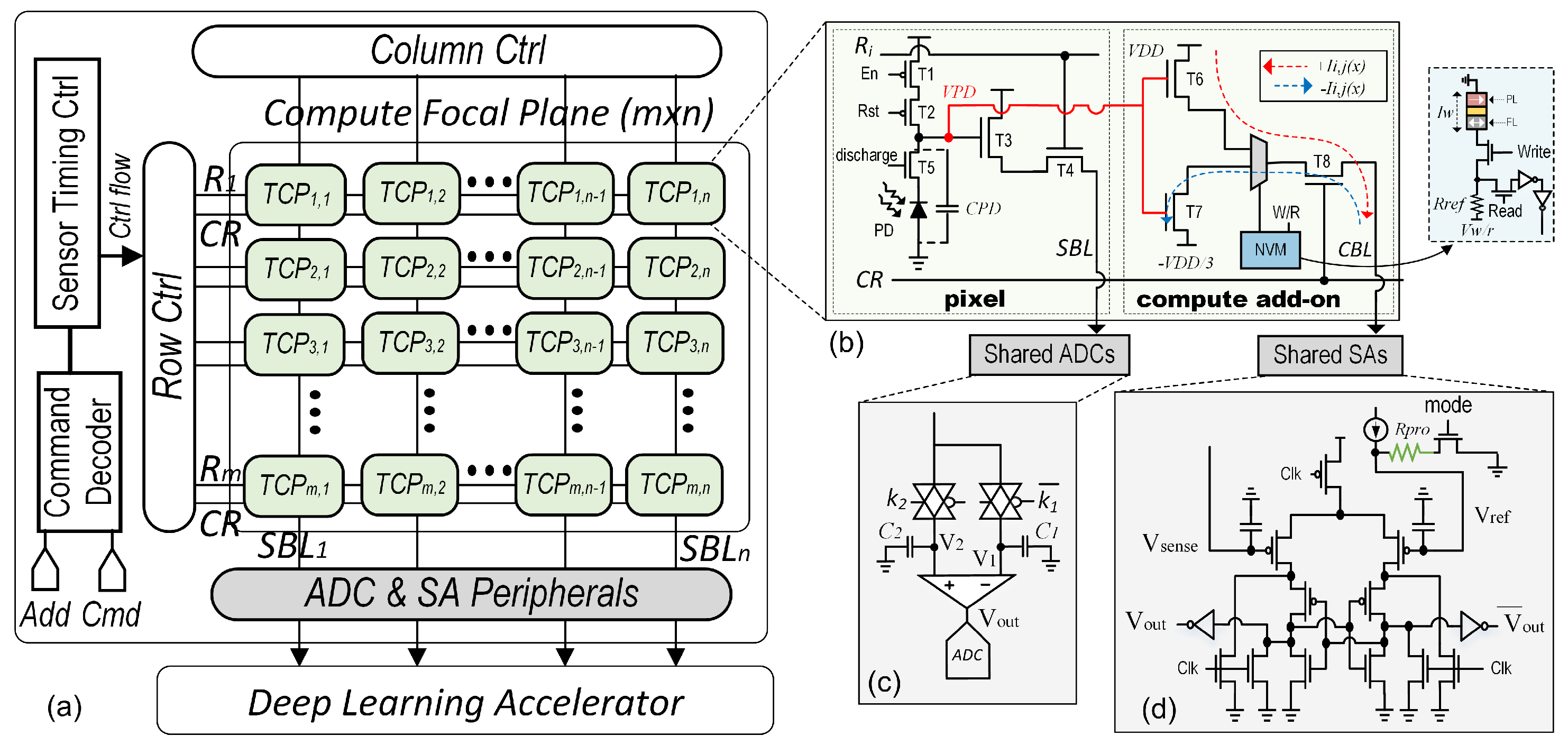

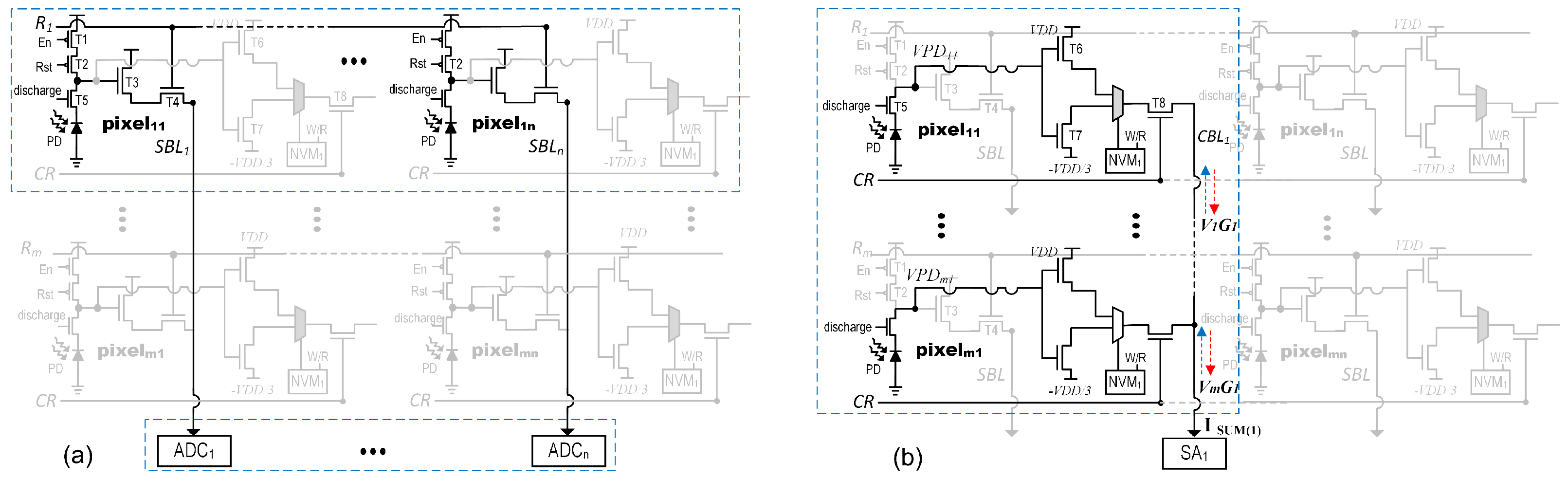

2. Near/In-Sensor Processing Background

3. Proposed Ternary Compute Pixel

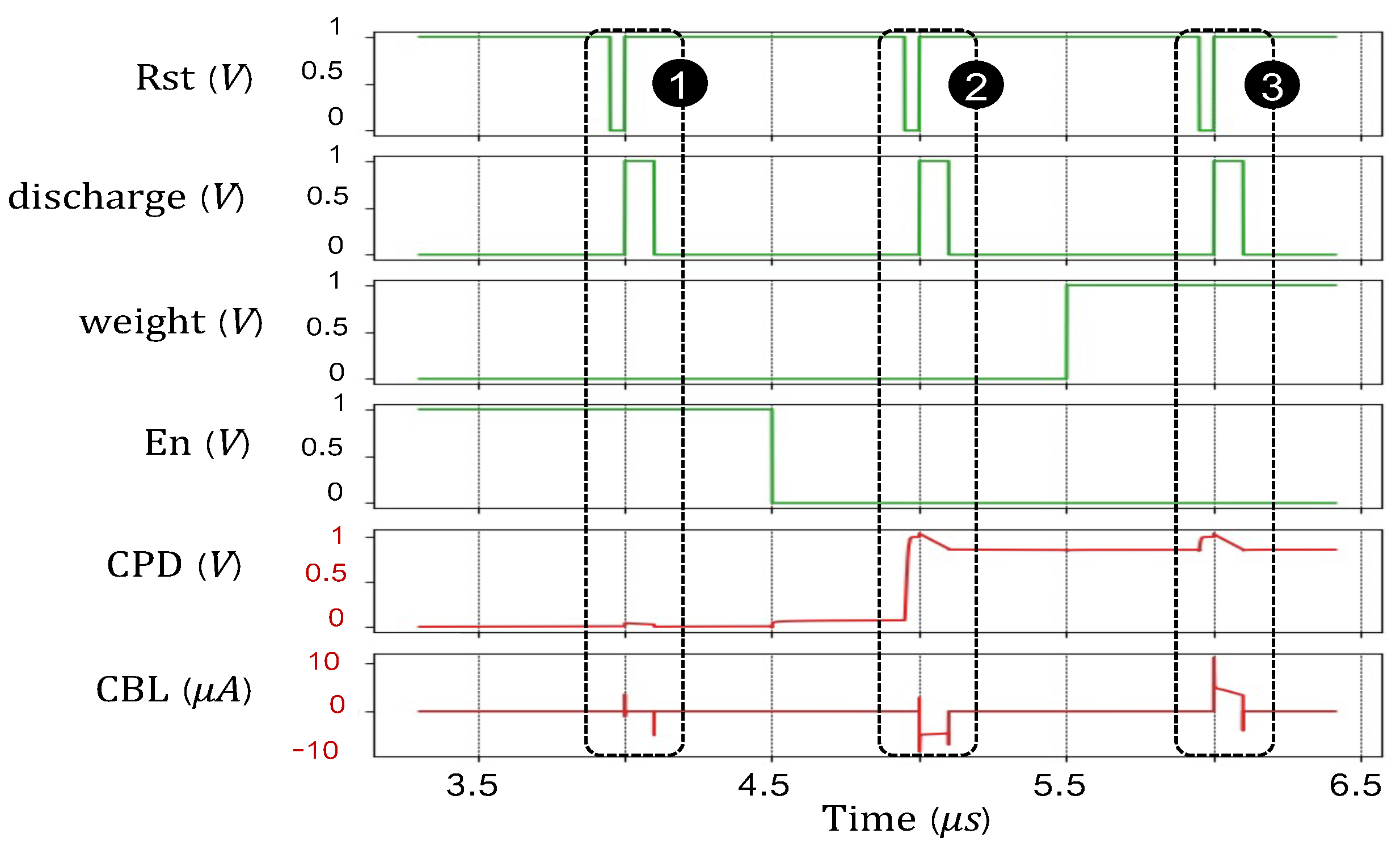

3.1. Sensing Mode

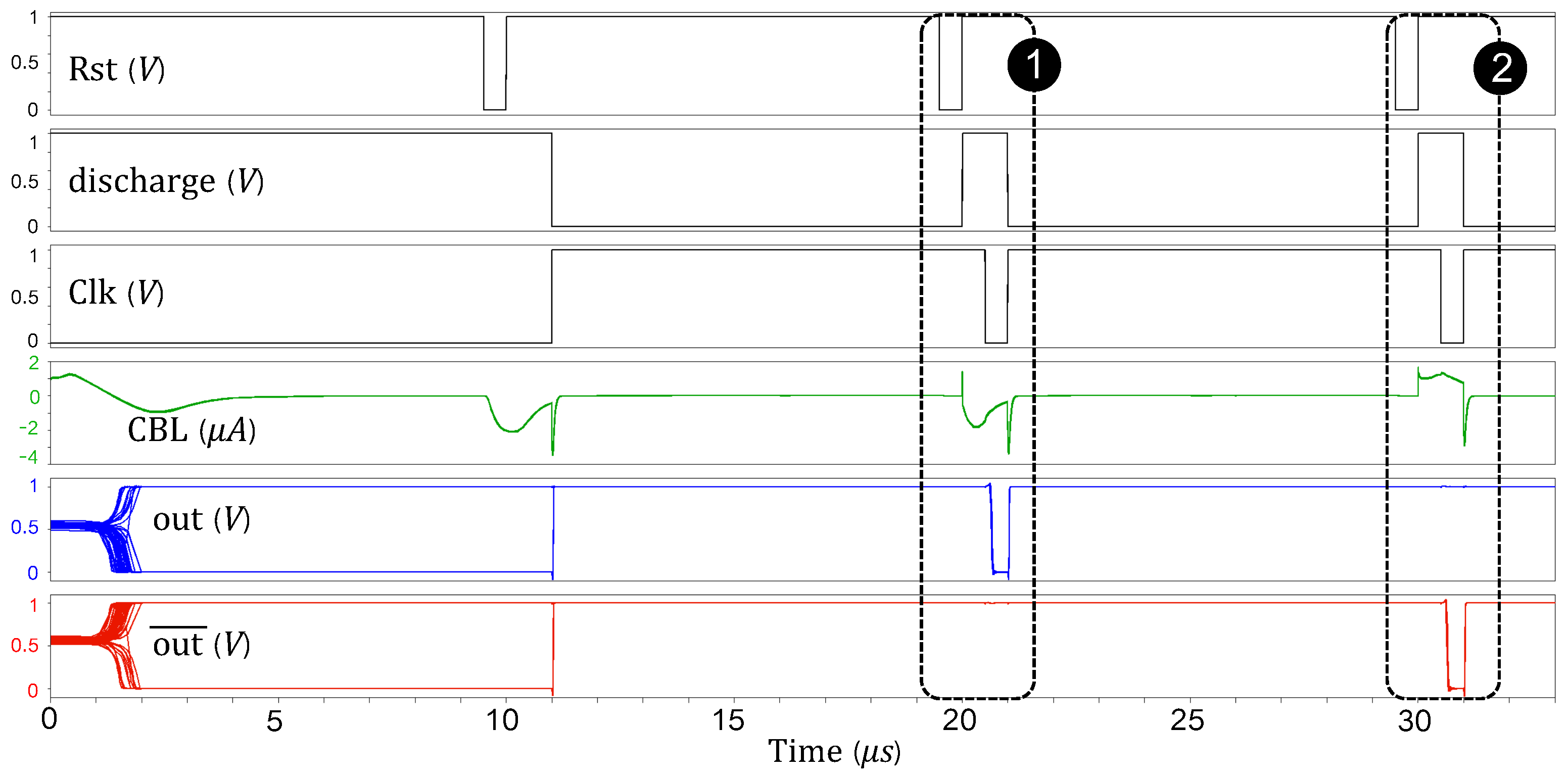

3.2. Processing Mode

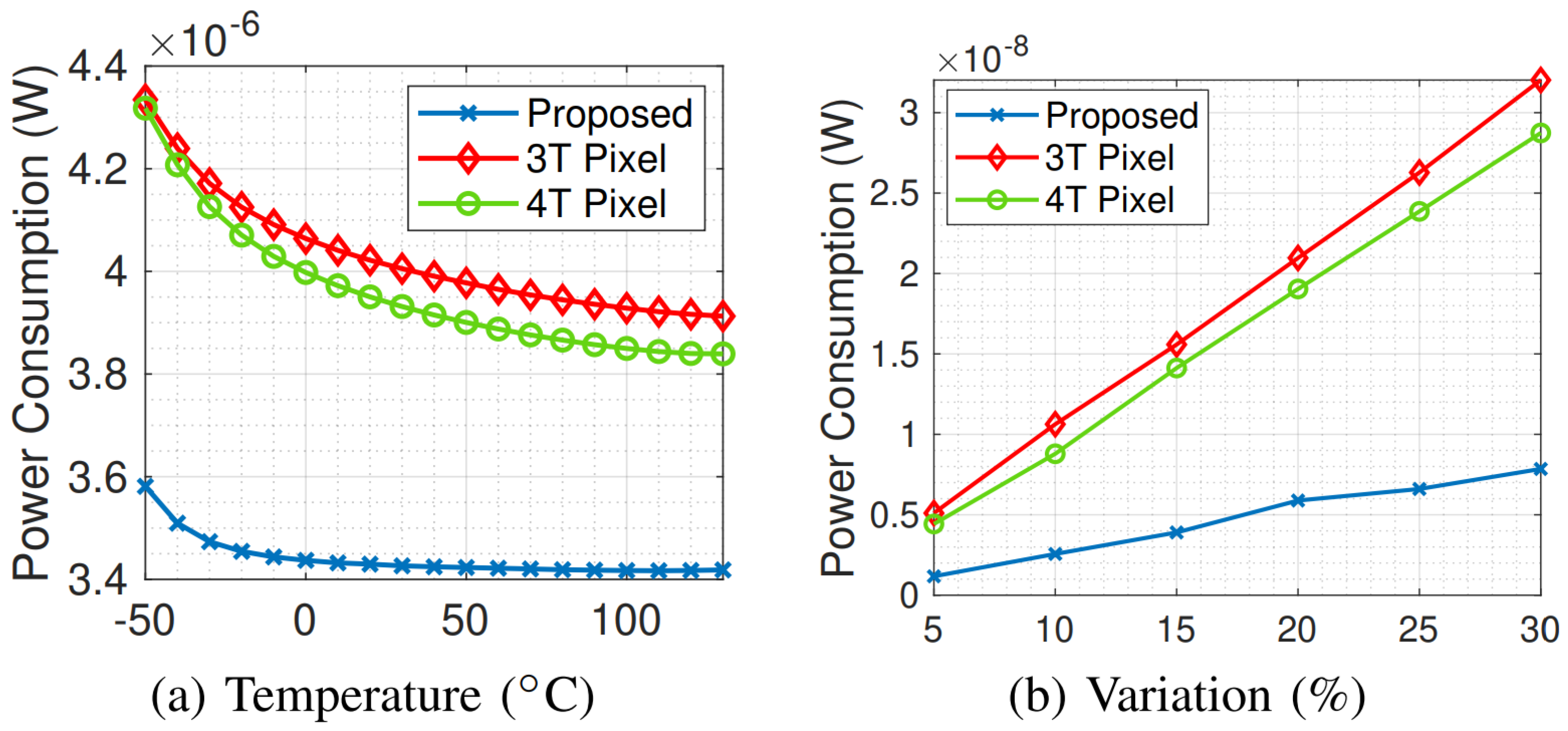

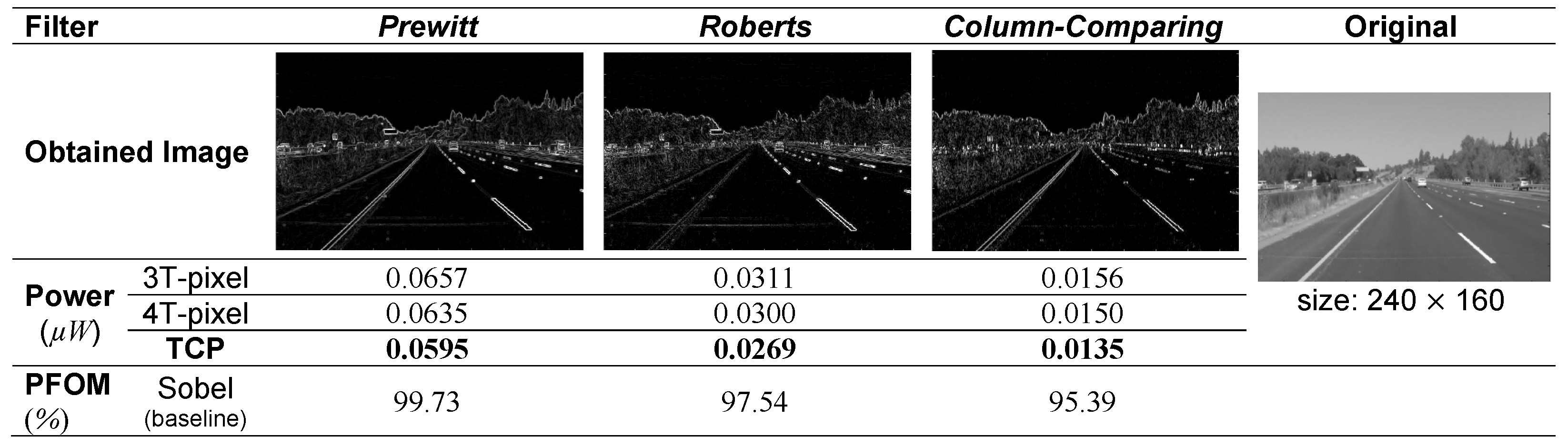

4. Simulation Results

Comparison Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Hsu, T.H.; Chiu, Y.C.; Wei, W.C.; Lo, Y.C.; Lo, C.C.; Liu, R.S.; Tang, K.T.; Chang, M.F.; Hsieh, C.C. AI edge devices using computing-in-memory and processing-in-sensor: From system to device. In Proceedings of the 2019 IEEE International Electron Devices Meeting (IEDM), San Francisco, CA, USA, 7–11 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 22.5.1–22.5.4.

2. Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. XNOR-Net: ImageNet classification using binary convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 525–542.

3. Zhu, C.; Han, S.; Mao, H.; Dally, W.J. Trained Ternary Quantization. In Proceedings of the International Conference on Learning Representations (ICLR) 2017, Toulon, France, 24–26 April 2017.

4. Zhou, S.; Wu, Y.; Ni, Z.; Zhou, X.; Wen, H.; Zou, Y. DoReFa-Net: Training low bitwidth convolutional neural networks with low bitwidth gradients. arXiv 2016, arXiv:1606.06160.

5. Xu, H.; Li, Z.; Lin, N.; Wei, Q.; Qiao, F.; Yin, X.; Yang, H. MACSEN: A processing-in-sensor architecture integrating MAC operations into image sensor for ultra-low-power BNN-based intelligent visual perception. IEEE Trans. Circuits Syst. II Express Briefs 2020, 68, 627–631.

6. LiKamWa, R.; Hou, Y.; Gao, J.; Polansky, M.; Zhong, L. RedEye: Analog ConvNet image sensor architecture for continuous mobile vision. ACM SIGARCH Comput. Archit. News 2016, 44, 255–266.

7. Xu, H.; Lin, N.; Luo, L.; Wei, Q.; Wang, R.; Zhuo, C.; Yin, X.; Qiao, F.; Yang, H. Senputing: An Ultra-Low-Power Always-On Vision Perception Chip Featuring the Deep Fusion of Sensing and Computing. IEEE Trans. Circuits Syst. I Regul. Pap. 2021, 69, 232–243.

8. Li, Q.; Liu, C.; Dong, P.; Zhang, Y.; Li, T.; Lin, S.; Yang, M.; Qiao, F.; Wang, Y.; Luo, L.; et al. NS-FDN: Near-Sensor Processing Architecture of Feature-Configurable Distributed Network for Beyond-Real-Time Always-on Keyword Spotting. IEEE Trans. Circuits Syst. I Regul. Pap. 2021, 68, 1892–1905.

9. Hsu, T.H.; Chen, Y.R.; Liu, R.S.; Lo, C.C.; Tang, K.T.; Chang, M.F.; Hsieh, C.C. A 0.5-V Real-Time Computational CMOS Image Sensor with Programmable Kernel for Feature Extraction. IEEE J. Solid-State Circuits 2020, 56, 1588–1596.

10. Bhowmik, P.; Pantho, M.J.H.; Bobda, C. Visual cortex inspired pixel-level re-configurable processors for smart image sensors. In Proceedings of the 2019 56th ACM/IEEE Design Automation Conference (DAC), Las Vegas, NV, USA, 2–6 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–2.

11. Angizi, S.; Morsali, M.; Tabrizchi, S.; Roohi, A. A Near-Sensor Processing Accelerator for Approximate Local Binary Pattern Networks. arXiv 2022, arXiv:2210.06698.

12. Angizi, S.; Roohi, A. Integrated Sensing and Computing using Energy-Efficient Magnetic Synapses. In Proceedings of the 2022 23rd International Symposium on Quality Electronic Design (ISQED), Santa Clara, CA, USA, 6–7 April 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–4.

13. Xu, H.; Nazhamaiti, M.; Liu, Y.; Qiao, F.; Wei, Q.; Liu, X.; Yang, H. Utilizing direct photocurrent computation and 2D kernel scheduling to improve in-sensor-processing efficiency. In Proceedings of the 2020 57th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 20–24 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

14. Xu, H.; Liu, Z.; Li, Z.; Ren, E.; Nazhamaiti, M.; Qiao, F.; Luo, L.; Wei, Q.; Liu, X.; Yang, H. A 4.57 μW@120fps Vision System of Sensing with Computing for BNN-Based Perception Applications. In Proceedings of the 2021 IEEE Asian Solid-State Circuits Conference (A-SSCC), Busan, Korea, 7–10 November 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–3.

15. Abedin, M.; Roohi, A.; Liehr, M.; Cady, N.; Angizi, S. MR-PIPA: An Integrated Multi-level RRAM (HfOx) based Processing-In-Pixel Accelerator. IEEE J. Explor. Solid-State Comput. Devices Circuits 2022, 1.

16. Angizi, S.; Tabrizchi, S.; Roohi, A. PISA: A binary-weight processing-in-sensor accelerator for edge image processing. arXiv 2022, arXiv:2202.09035.

17. Yamazaki, T.; Katayama, H.; Uehara, S.; Nose, A.; Kobayashi, M.; Shida, S.; Odahara, M.; Takamiya, K.; Hisamatsu, Y.; Matsumoto, S.; et al. 4.9 A 1ms high-speed vision chip with 3D-stacked 140GOPS column-parallel PEs for spatio-temporal image processing. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 82–83.

18. Kim, W.T.; Lee, H.; Kim, J.G.; Lee, B.G. An on-chip binary-weight convolution CMOS image sensor for neural networks. IEEE Trans. Ind. Electron. 2020, 68, 7567–7576.

19. Taherian, F.; Asemani, D. Design and implementation of digital image processing techniques in pulse-domain. In Proceedings of the 2010 IEEE Asia Pacific Conference on Circuits and Systems, Kuala Lumpur, Malaysia, 6–9 December 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 895–898.

20. Tabrizchi, S.; Angizi, S.; Roohi, A. TizBin: A Low-Power Image Sensor with Event and Object Detection Using Efficient Processing-in-Pixel Schemes. In Proceedings of the 2022 IEEE International Conference on Computer Design (ICCD), Lake Tahoe, NV, USA, 23–26 October 2022.

21. Song, R.; Huang, K.; Wang, Z.; Shen, H. A reconfigurable convolution-in-pixel CMOS image sensor architecture. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7212–7225.

22. Choi, J.; Park, S.; Cho, J.; Yoon, E. An energy/illumination-adaptive CMOS image sensor with reconfigurable modes of operations. IEEE J. Solid-State Circuits 2015, 50, 1438–1450.

23. Muñoz-Martínez, F.; Abellán, J.L.; Acacio, M.E.; Krishna, T. STONNE: Enabling Cycle-Level Microarchitectural Simulation for DNN Inference Accelerators. In Proceedings of the 2021 IEEE International Symposium on Workload Characterization (IISWC), Storrs, CT, USA, 7–9 November 2021; pp. 201–213.

24. Huai, Y. Spin-transfer torque MRAM (STT-MRAM): Challenges and prospects. AAPPS Bull. 2008, 18, 33–40.

25. Jin, M.; Noh, H.; Song, M.; Kim, S.Y. Design of an edge-detection CMOS image sensor with built-in mask circuits. Sensors 2020, 20, 3649.

26. Abdou, I.E.; Pratt, W.K. Quantitative design and evaluation of enhancement/thresholding edge detectors. Proc. IEEE 1979, 67, 753–763.

27. Biswas, R.; Sil, J. An improved canny edge detection algorithm based on type-2 fuzzy sets. Procedia Technol. 2012, 4, 820–824.

| Parameter | Description | Value |
|---|---|---|
| Area | MTJ Surface | 100 × 65 × nm |
| | Reference MTJ Surface | 100 × 45 × nm |
| | Thickness of oxide barrier | 0.85 nm |
| | Gilbert Damping factor | 0.007 |
| | Thickness of free layer | 1.3 nm |
| | Bohr Magneton | 9.27 × 10⁻²⁴ J·T⁻¹ |
| P | Polarization (DWNM, MTJ) | 0.75, 0.5 |
| | Saturation magnetization | 200 8e A·m |
| IC0 | Threshold Current Density | e–e A·m |
| | MTJ-1/MTJ-2 Resistance | 2.5 kΩ, 1.25 kΩ |
| | Reference MTJ Resistance | 1.8 kΩ |
| TMR | TMR ratio | 100% |
| | Out of Plane Anisotropy Field | 1600∼1800 Oe |
| | Uniaxial Anisotropy | 400e J/m |

| Enable Bit (En) | Stored NVM Value | Represented Weight | Output Current |
|---|---|---|---|
| 1 | x | 0 | 0 |
| 0 | 0 | −1 | |
| 0 | 1 | 1 | |
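The encoding in the table above can be read as a small decoding rule: when the enable bit is 1 the stored value is a don't-care and the weight is 0; otherwise the stored bit selects −1 or +1. The following is a minimal Python sketch of that rule only. The function names `ternary_weight` and `ternary_dot`, and the idea of multiplying the decoded weights by numeric pixel values, are illustrative assumptions; the sketch does not model the output currents or any circuit behaviour.

```python
from typing import Iterable, Tuple


def ternary_weight(enable: int, stored: int) -> int:
    """Decode (En, stored NVM value) into a ternary weight, per the table.

    En = 1        -> weight 0 (stored value is a don't-care)
    En = 0, NVM 0 -> weight -1
    En = 0, NVM 1 -> weight +1
    """
    if enable not in (0, 1) or stored not in (0, 1):
        raise ValueError("enable and stored must each be 0 or 1")
    if enable == 1:
        return 0
    return 1 if stored == 1 else -1


def ternary_dot(pixels: Iterable[float], cells: Iterable[Tuple[int, int]]) -> float:
    """Hypothetical numeric analogue of a ternary multiply-accumulate.

    `pixels` are illustrative pixel intensities; `cells` are (En, stored)
    pairs. This is plain arithmetic, not a model of the analog array.
    """
    return sum(p * ternary_weight(en, nv) for p, (en, nv) in zip(pixels, cells))


if __name__ == "__main__":
    # Three illustrative pixels with weights +1, -1 and 0 respectively.
    print(ternary_dot([0.5, 0.25, 1.0], [(0, 1), (0, 0), (1, 1)]))
```

Keeping the zero weight behind a separate enable bit means a single stored bit is enough to distinguish the two non-zero weights.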

| Domain | DNN Model [23] | Power Consumption (1st Layer): Ocelli (TCP) | Power Consumption (1st Layer): 3T-Pixel | Power Consumption (1st Layer): 4T-Pixel |
|---|---|---|---|---|
| Image Classification | MobileNets | 1 | 1.25 | 1.21 |
| | SqueezeNet | 1 | 1.23 | 1.19 |
| | AlexNet | 1 | 1.26 | 1.22 |
| | ResNet-50 | 1 | 1.30 | 1.26 |
| | VGG-16 | 1 | 1.31 | 1.27 |
| Object Detection | SSD-MobileNets | 1 | 1.25 | 1.21 |
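Assuming the power figures in the table above are normalized to Ocelli (its column is 1 in every row), each baseline value can be converted into the fraction of baseline power that Ocelli avoids, 1 − 1/x. The sketch below does only that arithmetic on the tabulated values; the normalization reading and the helper names `relative_saving` and `mean_saving` are assumptions, not something the table states.

```python
# Rows copied from the table: (model, Ocelli, 3T-pixel, 4T-pixel).
# Values are assumed to be normalized to the Ocelli column.
ROWS = [
    ("MobileNets", 1.0, 1.25, 1.21),
    ("SqueezeNet", 1.0, 1.23, 1.19),
    ("AlexNet", 1.0, 1.26, 1.22),
    ("ResNet-50", 1.0, 1.30, 1.26),
    ("VGG-16", 1.0, 1.31, 1.27),
    ("SSD-MobileNets", 1.0, 1.25, 1.21),
]


def relative_saving(baseline: float, proposed: float = 1.0) -> float:
    """Fraction of the baseline's power that the proposed design avoids."""
    return 1.0 - proposed / baseline


def mean_saving(column: int) -> float:
    """Average saving over all rows; column 2 is 3T-pixel, column 3 is 4T-pixel."""
    savings = [relative_saving(row[column], row[1]) for row in ROWS]
    return sum(savings) / len(savings)


if __name__ == "__main__":
    for name, ocelli, p3t, p4t in ROWS:
        print(
            f"{name:>15}: vs 3T {relative_saving(p3t, ocelli):.1%}, "
            f"vs 4T {relative_saving(p4t, ocelli):.1%}"
        )
    print(f"mean vs 3T: {mean_saving(2):.1%}, mean vs 4T: {mean_saving(3):.1%}")
```

Averaging the per-model savings, rather than inverting the average ratio, weights every network equally.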

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tabrizchi, S.; Angizi, S.; Roohi, A. Ocelli: Efficient Processing-in-Pixel Array Enabling Edge Inference of Ternary Neural Networks. J. Low Power Electron. Appl. 2022, 12, 57. https://doi.org/10.3390/jlpea12040057
