Developing a Diagnostic Decision Support System for Benign Paroxysmal Positional Vertigo Using a Deep-Learning Model

Abstract

1. Introduction

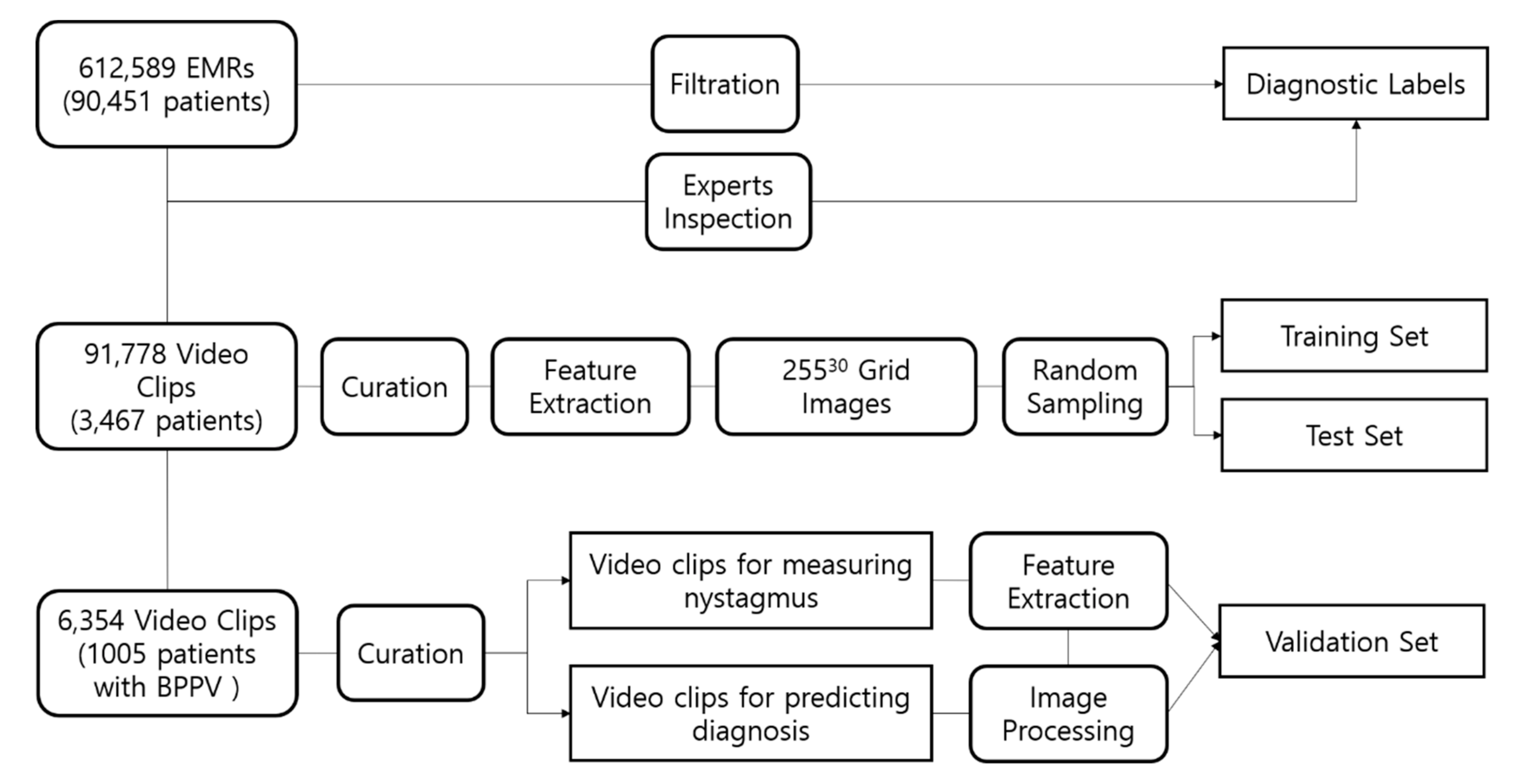

2. Methods

2.1. Data Collection

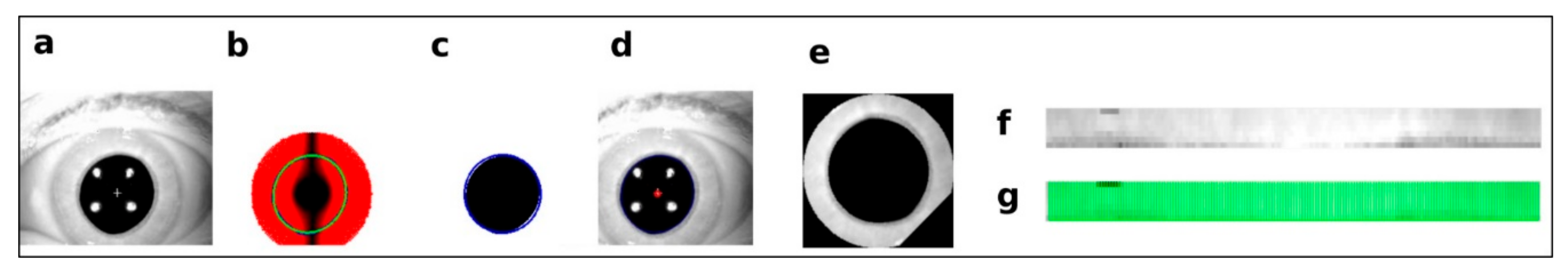

2.2. Preprocessing and Axis Measurement on Eye Movement

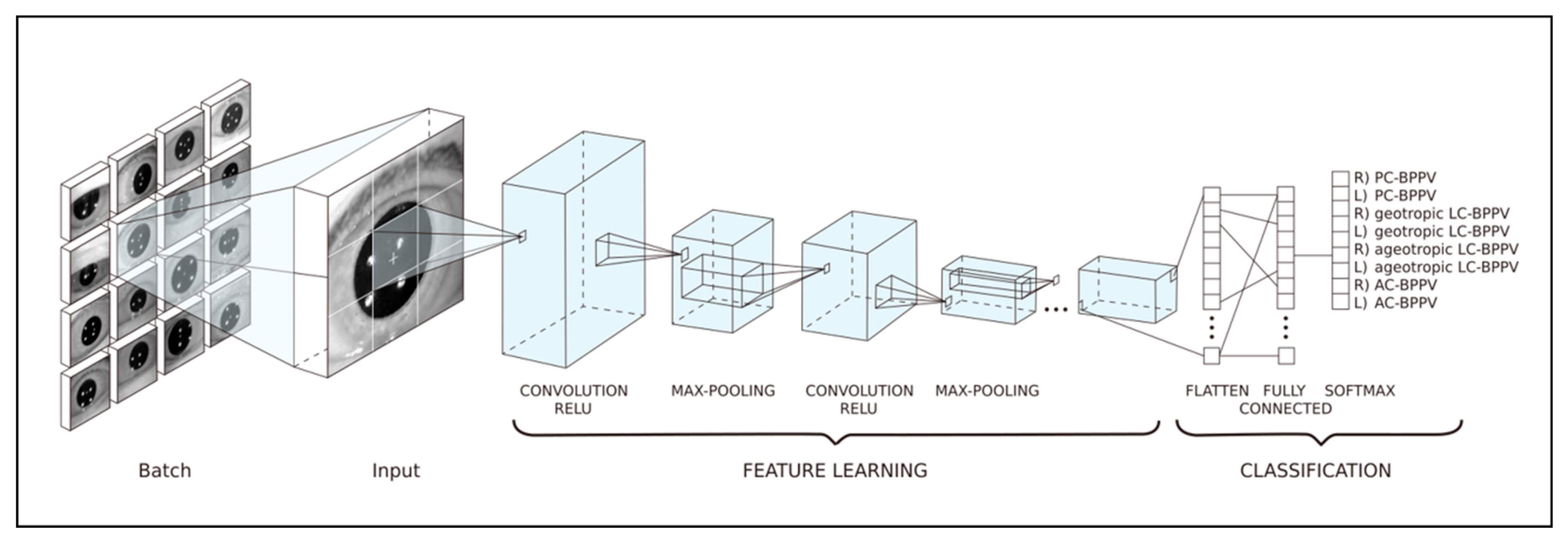

2.3. Training Datasets for Prediction of the Affected Canal in BPPV Cases

2.4. Diagnostic Algorithm for BPPV and Test Datasets

2.5. Statistical Analyses

- Accuracy = (TP + TN)/(TP + FP + TN + FN)

- Precision = TP/(TP + FP)

- Sensitivity = TP/(TP + FN)

- Specificity = TN/(TN + FP)

- False positive rate (FPR) = 1 − Specificity

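- F1 score = (1 + β²) × (Precision × Sensitivity)/(β² × Precision + Sensitivity)

where TP = true positive, TN = true negative, FP = false positive, FN = false negative, and β = 1, so that the F1 score reduces to 2 × (Precision × Sensitivity)/(Precision + Sensitivity).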
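The definitions above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the names `ConfusionCounts` and `classification_metrics` and the example counts are hypothetical, and the F score is assumed to be the standard F-beta measure, which for β = 1 is the harmonic mean of precision and sensitivity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary confusion-matrix counts for one class (hypothetical helper type)."""
    tp: int  # true positives
    tn: int  # true negatives
    fp: int  # false positives
    fn: int  # false negatives


def classification_metrics(c: ConfusionCounts, beta: float = 1.0) -> dict:
    """Compute the metrics listed in Section 2.5 from raw counts.

    Assumes every denominator is non-zero; a production version would
    need to decide how to report undefined metrics.
    """
    accuracy = (c.tp + c.tn) / (c.tp + c.fp + c.tn + c.fn)
    precision = c.tp / (c.tp + c.fp)
    sensitivity = c.tp / (c.tp + c.fn)
    specificity = c.tn / (c.tn + c.fp)
    fpr = 1.0 - specificity
    b2 = beta ** 2
    # Assumed standard F-beta form; beta = 1 gives the F1 score.
    f_score = (1 + b2) * precision * sensitivity / (b2 * precision + sensitivity)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "fpr": fpr,
        "f_score": f_score,
    }


if __name__ == "__main__":
    # Made-up counts, purely for illustration.
    example = ConfusionCounts(tp=80, tn=90, fp=10, fn=20)
    for name, value in classification_metrics(example).items():
        print(f"{name}: {value:.3f}")
```

For a multi-class problem such as the eight canal categories, the same function would be applied once per class in a one-vs-rest fashion, treating the class of interest as positive and all others as negative.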

3. Results

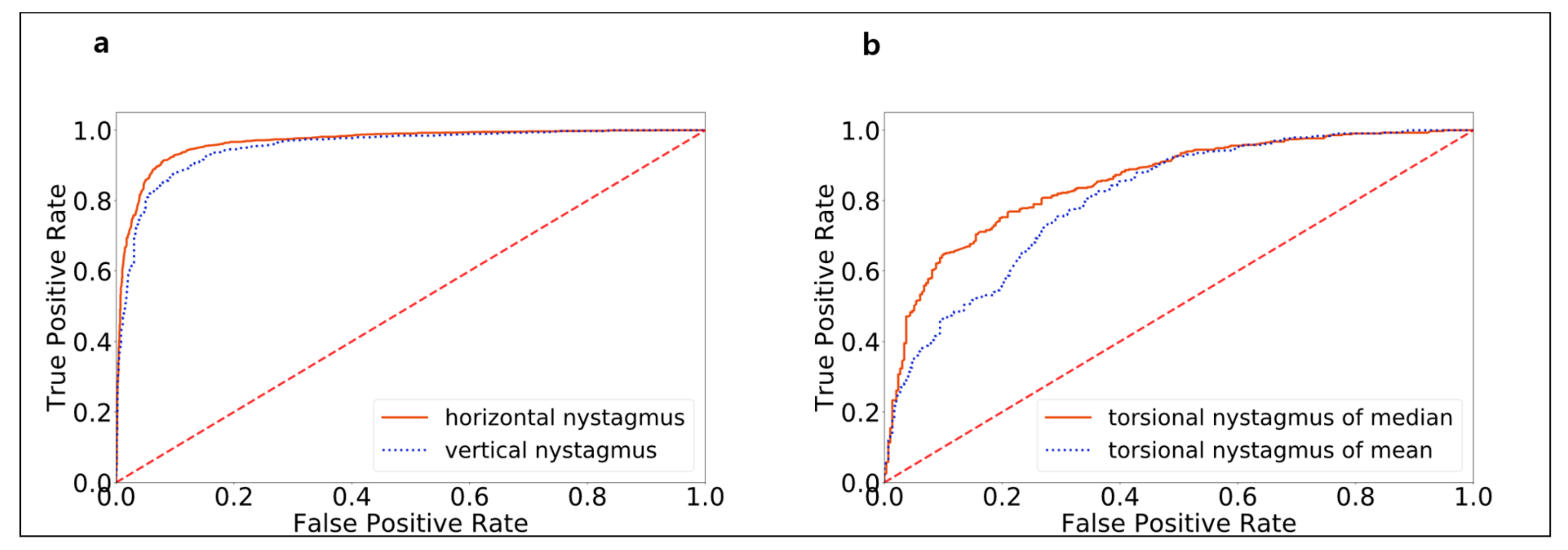

3.1. Model Performance in Detecting Nystagmus Types

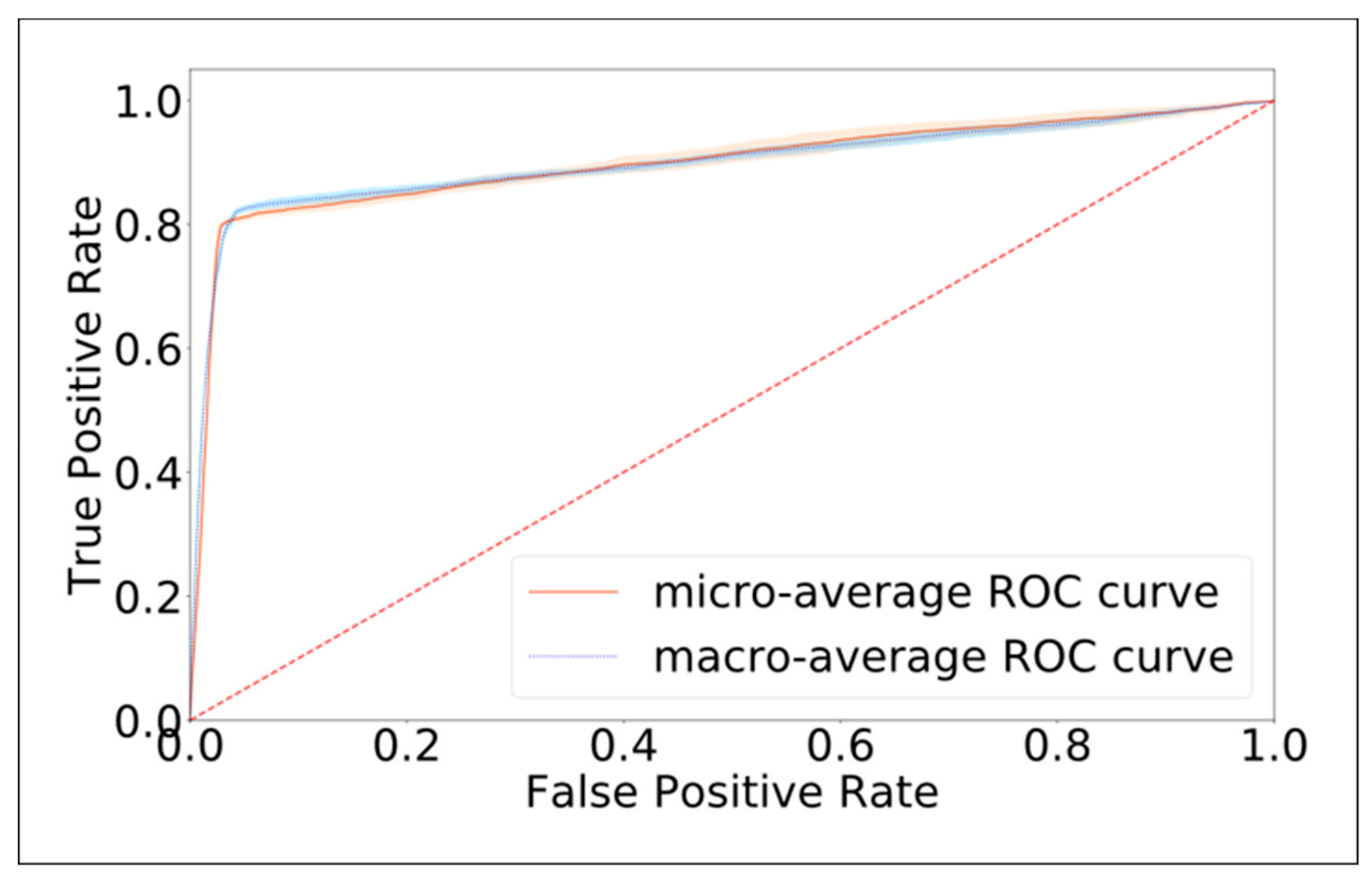

3.2. Model Performance in Identifying the Canal Affected by BPPV

4. Discussion

Limitation and Future Research

5. Conclusions

6. Patents

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Affected Canal | Precision | Sensitivity | Specificity | F1-score | N |
|---|---|---|---|---|---|
| R) PSC-BPPV | 0.888 ± 0.014 | 0.560 ± 0.013 | 0.985 ± 0.002 | 0.686 ± 0.009 | 179 |
| L) PSC-BPPV | 0.753 ± 0.007 | 0.882 ± 0.005 | 0.952 ± 0.002 | 0.813 ± 0.005 | 144 |
| R) geotropic LSC-BPPV | 0.837 ± 0.009 | 0.916 ± 0.007 | 0.970 ± 0.002 | 0.875 ± 0.007 | 146 |
| L) geotropic LSC-BPPV | 0.771 ± 0.017 | 0.941 ± 0.008 | 0.982 ± 0.002 | 0.847 ± 0.010 | 61 |
| R) ageotropic LSC-BPPV | 0.800 ± 0.012 | 0.957 ± 0.006 | 0.967 ± 0.003 | 0.871 ± 0.007 | 122 |
| L) ageotropic LSC-BPPV | 0.853 ± 0.010 | 0.934 ± 0.003 | 0.973 ± 0.002 | 0.891 ± 0.006 | 142 |
| R) ASC-BPPV | 0.775 ± 0.031 | 0.614 ± 0.015 | 0.986 ± 0.003 | 0.685 ± 0.015 | 73 |
| L) ASC-BPPV | 0.707 ± 0.022 | 0.663 ± 0.014 | 0.956 ± 0.005 | 0.684 ± 0.008 | 138 |
| Average/Total | 0.798 ± 0.015 | 0.808 ± 0.009 | 0.971 ± 0.002 | 0.794 ± 0.008 | 1005 |
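As a quick consistency check on the table above, the sketch below recomputes the Average/Total row from the per-canal point estimates. It assumes the averages are unweighted (macro) means over the eight categories and that N is a simple sum; the source does not state the averaging method, so this is an inference from the arithmetic. The `ROWS` mapping and the function `macro_average` are hypothetical names, and the ± spreads are ignored.

```python
# Point estimates copied from the table: (precision, sensitivity, specificity, F1, N).
ROWS = {
    "R) PSC-BPPV": (0.888, 0.560, 0.985, 0.686, 179),
    "L) PSC-BPPV": (0.753, 0.882, 0.952, 0.813, 144),
    "R) geotropic LSC-BPPV": (0.837, 0.916, 0.970, 0.875, 146),
    "L) geotropic LSC-BPPV": (0.771, 0.941, 0.982, 0.847, 61),
    "R) ageotropic LSC-BPPV": (0.800, 0.957, 0.967, 0.871, 122),
    "L) ageotropic LSC-BPPV": (0.853, 0.934, 0.973, 0.891, 142),
    "R) ASC-BPPV": (0.775, 0.614, 0.986, 0.685, 73),
    "L) ASC-BPPV": (0.707, 0.663, 0.956, 0.684, 138),
}


def macro_average(rows):
    """Return unweighted means of the four metrics (3 decimals) and the total N."""
    n_classes = len(rows)
    sums = [sum(values[i] for values in rows.values()) for i in range(4)]
    means = tuple(round(s / n_classes, 3) for s in sums)
    total_n = sum(values[4] for values in rows.values())
    return means, total_n


def f1_from(precision, sensitivity):
    """Harmonic mean of precision and sensitivity (F1 with beta = 1)."""
    return 2 * precision * sensitivity / (precision + sensitivity)


if __name__ == "__main__":
    means, total_n = macro_average(ROWS)
    print("macro means (precision, sensitivity, specificity, F1):", means)
    print("total N:", total_n)
    for name, (p, s, _, f1, _) in ROWS.items():
        # Reported values are averages over repeated runs, so the F1 recomputed
        # from mean precision and sensitivity is only approximately equal.
        print(f"{name}: reported F1 {f1:.3f}, recomputed {f1_from(p, s):.3f}")
```

Under these assumptions the recomputed means agree with the Average/Total row to three decimals, and the per-canal F1 values recomputed from precision and sensitivity fall within about 0.001 of the reported ones.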

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lim, E.-C.; Park, J.H.; Jeon, H.J.; Kim, H.-J.; Lee, H.-J.; Song, C.-G.; Hong, S.K. Developing a Diagnostic Decision Support System for Benign Paroxysmal Positional Vertigo Using a Deep-Learning Model. J. Clin. Med. 2019, 8, 633. https://doi.org/10.3390/jcm8050633
