Abstract

Accurate and fast 3D mapping of crime scenes is crucial in law enforcement, and first responders often need to document scenes in detail under challenging conditions and within a limited time. Traditional methods often fail to capture the details required to understand these scenes comprehensively. This study investigates the effectiveness of recent mobile phone-based mapping technologies equipped with a LiDAR (Light Detection and Ranging) sensor. The performance of LiDAR and pure photogrammetry is evaluated under different illumination (day and night) and scanning conditions (slow and fast scanning) in a mock-up crime scene. The results reveal that mapping with the iPhone LiDAR in daylight with a fast, 5 min scan gives the best results, yielding an error of 0.1084 m. In addition, the cloud-to-cloud distance showed that 90% of the points in the point cloud had an error under 0.1224 m, demonstrating the utility of these tools for rapid and portable scanning of crime scenes.

1. Introduction

Accurate and reliable three-dimensional (3D) mapping information is paramount in crime scene investigations. Once a crime is committed, the crime scene is a witness to the illicit acts that occurred in it. It contains data and information, in addition to other forms of evidence, all of which should be collected accurately and precisely to ensure that justice is served [1,2,3,4]. However, the initial encounter with the crime scene is usually challenging, especially for first responders, who are responsible for preserving the scene’s integrity: no contamination is allowed, and all objects that could be of use as evidence must be collected as soon as possible [2,4]. Traditional manual measurement tools, such as rulers, measuring tapes, and photographs, have been widely used to document all essential aspects of a crime scene. However, they struggle to capture all of the crime scene details, posing a challenge in terms of practicality, accuracy, and comprehensiveness [5,6,7,8]. Moreover, manual measurements are time-consuming, especially for more extensive scenes, prone to human error, and require the collaboration of a properly trained team to finish the work swiftly.

While traditional tools are used and accepted in legal proceedings, 3D-modeling technologies have developed rapidly. In particular, LiDAR (Light Detection and Ranging) scanners [1,3] can now be found not only in professional-grade instruments but also in more affordable consumer-grade devices, such as the iPhone’s built-in LiDAR sensor [5,9,10,11,12,13]. Image-based photogrammetry also offers higher accuracy, more detail, and faster capture than the traditional methods [4,14,15,16,17,18]. LiDAR technology involves emitting laser beams to scan the surveyed area and calculating the time it takes each beam to return to the sensor. This technology has been used widely in many fields with highly accurate results, and, thus, it has been studied for crime scene investigation [1]. On the other hand, photogrammetry captures images, combines them, and builds a three-dimensional representation of the photographed area [4,14]. Like LiDAR, photogrammetry has found significant applications in forensic science, where it aids in reconstructing crime scenes, allowing forensic experts to analyze them with high spatial accuracy and to better understand the events that occurred without needing to be physically present [4]. Moreover, photogrammetry can be more practical than LiDAR in some cases, since most mobile phones have cameras but not LiDAR sensors. Although LiDAR and photogrammetry have been utilized for crime scene applications, there is still no thorough evaluation of the recent iPhone LiDAR and photogrammetry technologies in a realistic crime scene with challenging illumination and limited scanning time [10,18].
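As a brief illustration of the time-of-flight principle mentioned above (the symbols are introduced here for illustration and do not appear in the original study), the range $d$ to a surface follows from the measured round-trip time $\Delta t$ of a laser pulse and the speed of light $c$:

$$d = \frac{c\,\Delta t}{2}$$

The factor of two accounts for the pulse travelling to the surface and back. For example, a round trip of $\Delta t = 20\ \text{ns}$ corresponds to a range of $d \approx 3.0\ \text{m}$.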

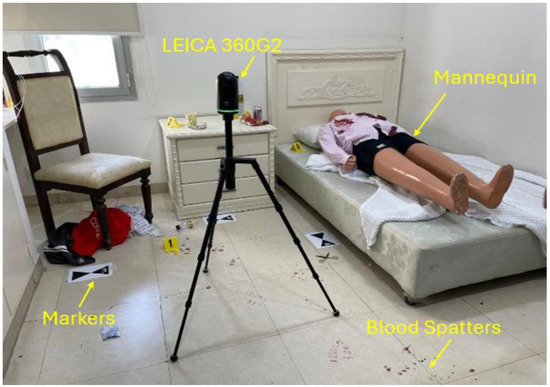

In this work, we evaluate recent mobile phone-based LiDAR and photogrammetry technologies in a mock-up crime scene, shown in Figure 1. An Apple iPhone 15 Pro Max and a Leica BLK360 (Leica Geosystems, Heerbrugg, Switzerland) are used for LiDAR mapping, while the same phone is used for photogrammetric mapping for comparison. The Leica LiDAR scan serves as the reference for assessing point cloud accuracy, which is crucial to understanding the accuracy of 3D maps. The mock-up crime scene was professionally prepared by crime scene investigators and has been utilized for training law enforcement officers in the institution. The significance of this study lies in its attempt to enhance crime scene evidence documentation by evaluating recent mobile 3D reconstruction technologies.

Figure 1.

A mock-up crime scene, showing a mannequin, markers, blood spatters, and a LiDAR scanner for ground truth comparison. The scene was prepared by crime scene professionals and has been utilized for training law enforcement officers.

The key contributions of this work are as follows:

- We extensively review recent mobile phone-based 3D-mapping technologies using LiDAR and photogrammetry for crime scene applications and discuss their challenges and limitations.

- We evaluate the performance of the LiDAR and photogrammetry technologies for a mock-up crime scene with realistic operational conditions, potentially making these tools more widely acceptable for use in law enforcement agencies.

- We compare the accuracy of point-cloud level maps with an industrial-grade LiDAR to assess the unmeshed and un-smoothed evidence of the scene, which is crucial for legal and judicial presentation.

The outline of this work is as follows. Section 2 reviews the recent related literature, focusing on the past five years, and identifies the gaps and limitations. Section 3 presents the LiDAR and photogrammetry mapping technologies with the crime scene setup, the scanning models with four different operational conditions, and the tool chains utilized. Section 4 presents the results and discussions. Lastly, the Conclusion summarizes the findings and discusses the limitations and recommendations.

2. Related Work

2.1. Applications of LiDAR in Crime Scene Reconstruction

The study by Desai et al. [3] evaluated how accurately handheld LiDAR devices can reconstruct crash scenes. Point cloud data were generated with devices such as the iPad Pro (Apple Inc., Cupertino, CA, USA), the FARO Focus 3D X330 (FARO Inc., Lake Mary, FL, USA), and the Trimble TX8 terrestrial laser scanner (TLS) (Trimble Inc., Westminster, CO, USA) to analyze vehicle damage. Their results were promising, showing that iPad Pro scanning took around 15 min, compared to 3 h for the other two devices, with a maximum root mean square error (RMSE) of 3 cm. This level of precision falls within an acceptable range for reconstructing crash scenes. Another study involving the iPad Pro was conducted by Drofova et al. [4]. It focused on three-dimensional forensic science, using 3D scanning and modeling to assist investigations. The device used was an iPad Pro 11, and the photogrammetry software Scaniverse Pro (Version 1.4.0) was used to construct 3D wireframe models. According to the researchers, 3D tools provide advantages in court by presenting facts appealingly and convincingly. However, they do not replace traditional methods but instead complement them.

Kottner et al. [19] focused on testing the Recon-3D application on an iPhone 13 Pro to capture a simulated accident and crime scene. The findings indicate that utilizing iPhone LiDAR with the Recon-3D app for reconstructing forensic crime scenes yielded 3D documentation effortlessly, eliminating the necessity for training. It could serve as a substitute for measuring tapes. Nonetheless, the study recognizes constraints, such as requiring a defined distance from objects to achieve resolution. Moreover, working with LiDAR may present challenges on extremely dark or highly reflective surfaces. Canvas 3D Pocket is another iOS app used by researchers in crime reconstruction, as in the experiment by John et al. [20]. Their results showed an error rate ranging up to 8% compared to the actual benchmark dimensions of their mock-up crime scene. They also stated that their proposed application is a cost-efficient tool for reconstructing crime scenes. However, other factors must be considered when choosing tools, such as case sensitivity and the potential impact of the error, even if the effect on the investigation is small.

The study conducted by Beck et al. [21] aimed to enhance crash investigation methods. They utilized data from a LiDAR sensor placed at the center of an automated vehicle. According to their findings, LiDAR detects vehicles better than cameras. The research underlines how analyzing sensor data from autonomous vehicles can significantly improve crash investigations by considering factors like the reaction times of systems and human drivers. Nonetheless, it also emphasizes the necessity for new protocols to analyze sensor data effectively, which highlights the importance of joint efforts among companies, law enforcement agencies, and legal systems to develop these protocols. Maneli and Isafiade [5] reviewed existing research on 3D crime scene reconstruction, focusing on the role of LiDAR scanners in generating 3D maps of crime scenes. Their study revealed that LiDAR scanners can scan crime scenes accurately as well as reduce the chance of scene data being contaminated or manipulated. Moreover, portable gadgets such as iPads equipped with LiDAR sensors provide first responders with mobility and flexibility when working on crime scenes.

Another work, reviewing the latest 3D tools and methodologies in crime scene reconstruction, was performed by Galanakis et al. [6]. They conducted a state-of-the-art overview of 3D technology tools and their applications in crime scene reconstruction, including LiDAR technology. According to the study, numerous factors influence the selection of appropriate technology, such as technical requirements, convenience of use, cost, plus scanning time. Furthermore, differences between indoor and outdoor scenes, as well as scene size, do impact this choice. LiDAR technology was highlighted in outdoor crime scene scenarios, where its usage can be compared to other sensory tools, such as aerial photogrammetry. Various crime scene documentation techniques were examined in the study by Berezowski et al. [7], including LiDAR scanners and drone-based photogrammetry. The study showed how these scanners captured a scene in five minutes with one user. This time-saving advantage is crucial compared to methods involving teamwork. However, the researchers also identify challenges, including the need for training, the legal implications, and barriers when implementing such advanced technologies.

In the study by Thiruchelvam et al. [8], Unmanned Aerial Vehicles (UAVs) were utilized to document crime scenes. Their research also evaluated 3D reconstruction software, such as Pix4D [22] and Agisoft Metashape. The study recommends using LiDAR and photogrammetry technology to enhance the accuracy and detail of crime scene documentation. Another study on drones with LiDAR for crime scene reconstruction was performed by Mishra et al. [23]. According to the survey, drones with high-resolution cameras can capture and record crime scenes from different angles, resulting in enhanced documentation. According to the researchers, innovative technologies must be constantly developed to improve criminal investigation. The research by Deshmukh et al. [24] investigated the challenges of using LiDAR scanning with virtual reality (VR) for crime scene documentation. The results showed that these technologies efficiently enhanced crime scene examination, since human eyes often fail to detect small details in a crime scene. Chase and Liscio [9] examined the use of Recon-3D, an app that uses Apple’s LiDAR sensor, for documenting bullet trajectories. The study compared Recon-3D (Recon-3D Inc., Woodbridge, ON, Canada) to the Faro Focus S350 laser scanner (FARO, Lake Mary, FL, USA), and its results showed that Recon-3D’s accuracy and repeatability were similar. While the Recon-3D app is user-friendly and efficient, it may occasionally crash during high-resolution scans. Moreover, due to the high sensitivity of the forensic field, where no mistakes are accepted, further validation and exploration of the tested Recon-3D application is recommended. A study focusing on documenting bullet trajectories at crime scenes was performed by Sung et al. [10], which explored the capabilities of the BLK2GO LiDAR scanner (Leica Geosystems, Heerbrugg, Switzerland). The key findings suggest that, although the handheld scanner offers mobility and ease of use, it falls short in data quality and precision, especially when dealing with evidence like bullets. Yet, it can be suitable for cases in which high accuracy is unnecessary, such as reconstructing general crime scenes [10].

Liscio et al.’s research [11] enhances the understanding of integrating innovative technologies like Apple LiDAR using the Recon-3D app in forensic documentation. The study reveals that these technologies can achieve centimeter-level accuracy in reconstructing 3D crash scenes. However, it also highlights potential inaccuracies due to environmental factors and manual measurement errors. These insights are vital for forensic professionals considering adopting advanced technological solutions in scene reconstruction. The study by Spreafico et al. [12] evaluated the built-in LiDAR sensor of the iPad Pro for its applicability in rapid 3D mapping. Their preliminary analysis reveals that the sensor shows promise for quick surveying tasks due to its affordability plus portability compared to traditional surveying tools. The study highlights the sensor’s reliable data collection and rapid processing capability. In the study by Stevenson et al. [13], the authors evaluate the application of iPhone LiDAR using the Recon-3D app for bloodstain pattern analysis (BPA). Traditional BPA methods are prone to error and are compared against newer, more accurate 3D technologies. The researchers highlighted that Recon-3D offers a cost-effective and accessible alternative for generating 3D crime scene reconstructions, showing promising accuracy in determining the area of origin of bloodstains. Table 1 summarizes these studies.

Table 1.

Comparative analysis of the literature on the applications of LiDAR in crime scene reconstruction.

2.2. Applications of Photogrammetry and Other 3D Tools in Crime Scene Reconstruction

Yu et al. [25] explored the use of virtual reality (VR) utilizing 3D imaging and photogrammetry methods to improve crime scene investigations, mainly focusing on the difficulties in capturing and reconstructing indoor scenes. They introduced a Dundee Ground Truth imaging protocol that involved digital cameras with Structure from Motion (SfM) photogrammetry to create VR environments. The study showed that although quicker approaches, such as the K-model, can speed up crime scene processing, better accuracy is achieved using controlled methods like the Dundee GT protocol. Other research on stereo photogrammetry with the Leica BLK3D device for reconstructing 3D crime scenes was performed by Bohórquez et al. [14]. They compared single stereo pair (SSP) and two stereo pair (TSP) modes, comparing them with a TLS survey for validation, highlighting their effectiveness in collecting on-site data and reducing processing requirements. They also found that the impact of human error on the photogrammetry on both single and two stereo pairs and the performance in crime scene maps were minimal, confirming its reliability for swift and precise crime scene documentation and reconstruction.

A framework for creating high-resolution 3D models of crime scenes using aerial photogrammetry was introduced by Becker et al. [15]. The authors highlighted the importance of photogrammetry for creating 3D models, emphasizing how enhancing the quality of evidence can aid in making better judicial decisions. Liao [16] explored the integration of close-range photogrammetry, virtual reality, and panoramic techniques. The objective of the study was to document crime scenes, emphasizing the need for a comprehensive strategy in virtual crime scene reconstruction and identifying gaps in equipment and training requirements. Furthermore, it highlighted the capabilities of photogrammetry and virtual reality in reconstructing crime scenes, offering a comprehensive 3D model that can aid thorough investigations and courtroom understanding of such scenes. Abate et al. [17] highlighted how practical it is to use a camera to document the contamination level at crime scenes. They used the Ricoh Theta SC 360s camera (Tokyo, Japan), which could create quick 3D models and assess contamination levels accurately. Although the camera could capture the scene characteristics, it showed limitations in reconstructing smaller objects.

Another study on the use of photogrammetry technology in criminal investigation was conducted by Chapman and Colwill [18], utilizing Agisoft software (version 2.1.4) to generate 3D models. They pointed out that, while this method has its limitations, especially when dealing with shiny surface analysis, it can be used in addition to traditional techniques such as terrestrial laser scanners (TLSs). Notably, photogrammetry can speed up the documentation process by allowing for 3D recordings that are less intrusive and can be done using equipment already available to most forensic teams. Sazaly et al. [26] explored the use of micro-unmanned Aerial Vehicles (UAVs) with terrestrial laser scanning (TLS) for indoor crime scene reconstruction through 3D photogrammetry. Utilizing a simulated crime scene, the study assessed this approach against traditional methods, concluding that integrating micro-UAVs and TLS provides highly accurate 3D models. This makes UAVs a practical option for detailed and efficient crime scene documentation that maintains the scene’s integrity. However, the study focused on a small sample size and limited the comparison to only TLS.

The study by Kanostrevac et al. [27] highlighted that aerial photogrammetry faces challenges in heavy forests, and, thus, LiDAR is more accurate and useful in archaeology and environmental studies. The research focused on LiDAR’s ability to generate detailed terrain models in areas obscured by dense vegetation, which are quite relevant to forensic science, especially in reconstructing outdoor crime scenes. By selecting the most suitable technology based on the specific environment requirements of the crime scene, the accuracy of forensic investigations and the effectiveness of evidence presentation in legal scenarios can be significantly enhanced. Flanagan et al. [28] introduced a practical application of perspective grid photogrammetry for crime scene reconstruction in their study. This method utilizes a simple grid system to quickly derive spatial measurements from crime scene photographs, significantly reducing the time required on site. The approach is particularly beneficial in environments where rapid scene processing is crucial due to adverse conditions. They provide a valuable tool for forensic professionals by simplifying measurement techniques without significantly compromising the accuracy.

Sirmacek et al. [29] investigated the use of iPhone cameras to create 3D urban models, comparing these with high-accuracy terrestrial laser scanning (TLS) data. Their study highlights a promising mean error of 0.11 m between the models, suggesting the potential for using consumer-grade technology in professional geoscience applications. Nonetheless, they emphasize further refinement to improve data accuracy and reliability. The study by Norahim et al. [30] presents an innovative approach for reconstructing 3D models of accident scenes using UAVs, which is pivotal in reducing the time taken for accident investigations, thus alleviating traffic congestion. Their research evaluates the efficiency of various UAV flight patterns, circular, double grid, and single grid, in creating 3D models. The study tests these methodologies across different settings and altitudes, finding that the circular method at 5 m altitude with ground control points (GCPs) yielded the highest accuracy, with the lowest RMSE value of 0.047 m. This work could have profound implications for rapid and precise accident scene analysis, offering a significant advancement over traditional methods, which often involve more time-consuming and less accurate processes.

The study by Maiese et al. [31] discussed the integration of camera and LiDAR sensors in forensic pathology for 3D autopsy documentation, highlighting the limitations of traditional two-dimensional imaging. This new method enhances detail capture, necessary for medico-legal accuracy, by preserving spatial integrity through three-dimensional models. Their study validates the superiority of 3D models over conventional methods, offering more straightforward and precise visual evidence for forensic investigations. This advancement can potentially improve documentation practices and educational applications within forensic pathology. As highlighted in the study of Carew et al. [32], the integration of 3D imaging and printing technologies is revolutionizing the field of forensic science, particularly in the reconstruction of crime scenes. This emerging domain, 3D forensic science (3DFS), combines various established techniques, such as forensic radiography, medicine, and anthropology, with the advanced capabilities of 3D modeling to enhance the precision of forensic evidence. The authors argue that 3DFS significantly contributes to the criminal justice system by improving the robustness and accuracy of crime reconstructions, which are increasingly utilized in courtrooms to support the presentation of evidence and expert testimony. They emphasize the necessity of defining this distinct field, developing best practices, and setting standards to maximize its efficacy and reliability in legal contexts.

As outlined in [33] by Bostanci, digital reconstruction of crime scenes using advanced computer vision techniques with 3D modeling tools allows for a more nuanced exploration of the crime environment. This approach not only enhances the visual representation of spatial relationships and evidence placement but also aids in preserving the integrity of the crime scene, thereby augmenting the traditional methodologies of crime scene investigation. These technologies facilitate a detailed examination that can be pivotal in solving crimes by providing insights that might not be evident through standard 2D images and manual sketches. Table 2 summarizes these studies.

Table 2.

Comparative analysis of the literature on the applications of photogrammetry in crime scene reconstruction.

2.3. Summary and Implications

The literature highlights the impact of various 3D modeling technologies, including LiDAR and photogrammetry, on criminal investigation procedures, crime scene documentation, and the preservation of evidence integrity. Studies have also shown that handheld devices with built-in LiDAR, like the iPhone Pro and iPad Pro, can swiftly reconstruct crime scenes using point clouds produced from the LiDAR sensor and the camera with the help of various applications, devices, and cloud processing. For instance, Desai et al. [3] demonstrated how an iPad could complete a whole scan in 15 min, while the same scene could take hours with a professional-grade scanner, with accuracy suitable for legal procedures.

Moreover, photogrammetry is increasingly employed for crime scene reconstruction, playing a crucial role in courtrooms by illustrating complex crime scenes clearly, as shown in the study by Drofova et al. [4]. Their analysis revealed that 3D models created from iPad images in conjunction with photogrammetry software can be used to support evidence. Likewise, recent research has examined how the built-in LiDAR technologies in mobile phones perform compared to traditional techniques, as shown by Kottner et al. [19], whose study also demonstrated that portable handheld devices are practical even without formal training. Moreover, the use of 3D technologies has been explored in various forensic science fields, such as vehicle collisions, as in Beck et al. [21], who analyzed the interaction of vehicles, which is usually challenging with traditional methods.

Nevertheless, despite the promising results shown in the literature, various gaps need to be addressed:

- There is a lack of studies directly comparing the efficiency and practicality of LiDAR versus photogrammetry to establish a ground for forensic science application.

- There is insufficient field testing to implement these technologies in various environmental settings.

- There is limited research on how well these technologies perform when operated by individuals without training in them, such as first responders.

3. Methodology

3.1. Research Design

The adopted study design is a comparative experimental design, in which a controlled experimental design is combined with a comparative analysis. The controlled experimental design involves the manipulation of two independent variables:

- Scanning duration of five (fast) and ten (slow) minutes

- Illumination condition of day and night

This is to observe their causal effects on the dependent variable, the accuracy of the reconstructed 3D models, measured using the mean absolute error (MAE). Three models are compared:

- Model 1: iPhone LiDAR with Recon-3D application

- Model 2: iPhone camera with Pix4D solutions

- Model 3: Ground truth, consisting of the measured distances between designated markers and a 3D map from a second-generation Leica BLK360 scanner.

The assumptions in the study include the consistent performance of the iPhone LiDAR sensor and the Recon-3D application across different scanning setups, for which a fixed resolution of 5 mm is selected. Apart from the two manipulated variables, the environmental conditions are assumed to be similar across all scanning setups. Lastly, the distances between the reference markers placed on the ground, measured using a laser ruler and a measuring tape, are considered accurate and reliable.
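The design described in this section can be written out explicitly. The following is a minimal sketch, assuming that each of the two phone-based models is scanned once under every combination of illumination and duration; the variable and function names are illustrative and do not come from the study.

```python
from itertools import product

# Independent variables described in Section 3.1.
DURATIONS_MIN = (10, 5)            # slow and fast scanning
ILLUMINATION = ("day", "night")

# The two phone-based models; Model 3 (Leica BLK360) is the reference
# and is therefore not part of the factorial design.
PHONE_MODELS = (
    "Model 1: iPhone LiDAR + Recon-3D",
    "Model 2: iPhone camera + Pix4D",
)


def scan_matrix():
    """Enumerate every model / illumination / duration combination."""
    return [
        {"model": model, "illumination": light, "duration_min": minutes}
        for model, light, minutes in product(
            PHONE_MODELS, ILLUMINATION, DURATIONS_MIN
        )
    ]


if __name__ == "__main__":
    for scan in scan_matrix():
        print(scan)
```

Under this assumption the matrix contains eight scans, four per phone-based model, each of which is later scored against the ground truth.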

3.2. Mock-Up Crime Scene Setup

An indoor mock-up crime scene was prepared at Naif Arab University for Security Sciences (NAUSS), featuring elements such as a dummy, imitation evidence markers, and blood spatters, as previously shown in Figure 1. Two AprilTag markers were positioned facing each other on the wall, 4.18 m apart, as reference markers for the 3D reconstruction scaling procedure in the Recon-3D application. In addition, five markers were placed near areas with evidence. The distances between these markers, measured with a laser ruler as shown in Figure 2, are listed in Table 3.
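The idea behind scaling a reconstruction from a known reference distance can be sketched as follows. This is only an illustration of the principle: the study does not describe how Recon-3D applies the AprilTag distance internally, and the function names and example coordinates are hypothetical.

```python
import math

KNOWN_TAG_DISTANCE_M = 4.18  # measured distance between the two AprilTags


def scale_factor(tag_a, tag_b, true_distance_m=KNOWN_TAG_DISTANCE_M):
    """Ratio between the true and the reconstructed tag-to-tag distance."""
    reconstructed = math.dist(tag_a, tag_b)
    if reconstructed == 0:
        raise ValueError("tag positions coincide; cannot derive a scale")
    return true_distance_m / reconstructed


def rescale(points, factor):
    """Apply a uniform scale about the origin to every 3D point."""
    return [tuple(coord * factor for coord in point) for point in points]


if __name__ == "__main__":
    # Hypothetical tag centres in an unscaled reconstruction.
    factor = scale_factor((0.0, 0.0, 0.0), (2.09, 0.0, 0.0))
    print(factor)
    print(rescale([(1.0, 2.0, 3.0)], factor))
```

A single reference distance fixes only a uniform scale; it cannot correct drift or local distortion, which is one reason the additional marker distances in Table 3 are useful as an independent check.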

Table 3.

Actual measurements between the five markers.

Figure 2.

A laser ruler and tape measure are used to measure between markers.

3.3. Hardware and Software Technology Utilized

The scanning method involves creating three models: a LiDAR model using an iPhone with the Recon-3D app (Model 1); a photogrammetry model using the iPhone with Pix4Dcatch [22] for data collection and Pix4Dmapper [22] for processing (Model 2); and a second-generation Leica BLK360 scanner model used as the ground truth (Model 3). Table 4 summarizes these in more detail. The first model uses the iPhone’s built-in LiDAR sensor to scan the scene under four different scanning settings at the 5.0 mm resolution specified in the Recon-3D application settings. The second model uses Pix4Dcatch with Pix4Dmapper for photogrammetry under the same four scanning settings. However, Pix4Dcatch does not have resolution settings. When comparing resolutions between LiDAR and photogrammetry models, it is essential to understand that these technologies capture and process data differently, leading to differences in the type and detail of their results. With the iPhone 15 Pro Max, photogrammetry could resolve minute details, from about 0.097 mm per pixel at 1 m from the target to about 0.486 mm per pixel at 5 m. Thus, various factors should be considered, such as the distance to the subject, the camera’s specifications, and environmental conditions like lighting and texture. The third model is the Leica BLK360 G2 reference model. The best scans from the first and second models are compared against it: the Iterative Closest Point (ICP) algorithm is used to align the 3D models, and CloudCompare is used for point-wise evaluation.
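The two per-pixel figures quoted above are consistent with the footprint of one pixel on the target (often called the ground sampling distance) growing linearly with distance, as in a simple pinhole camera model. The sketch below makes that relationship explicit. It assumes the pinhole model and derives the camera constant from the quoted 5 m figure rather than from any published camera specification; the function names are illustrative.

```python
def pitch_to_focal_ratio(gsd_mm, distance_m):
    """Pixel pitch divided by focal length, implied by one known GSD value."""
    return gsd_mm / (distance_m * 1000.0)


def gsd_mm_per_px(distance_m, ratio):
    """Pinhole-model footprint of one pixel on the target, in millimetres."""
    return distance_m * 1000.0 * ratio


if __name__ == "__main__":
    # Calibrate from the figure quoted in the text: 0.486 mm/px at 5 m.
    ratio = pitch_to_focal_ratio(0.486, 5.0)
    for distance in (1.0, 2.0, 5.0):
        print(distance, round(gsd_mm_per_px(distance, ratio), 3))
```

Calibrating at 5 m reproduces the quoted value at 1 m to three decimal places, which suggests the two figures come from the same linear relationship.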

Table 4.

Summary of the scanning models for LiDAR, photogrammetry, and ground reference.

3.4. Model 1: LiDAR with an iPhone and Recon-3D App

The LiDAR scanner on the iPhone is used together with the Recon-3D app to evaluate how accessible it is and what limitations it might have. Scan processing was performed either on the device or on the cloud. The first and second scans were taken methodically, moving from top to bottom while circling the room in overlapping paths to ensure comprehensive coverage. The first scan was completed within ten minutes, and the scanning time was then shortened to five minutes for the second scan. The five-minute scan was processed on the device, whereas trying to process the ten-minute scan on the device caused the application to crash; hence, it was processed on the cloud. These scans took place at noon in daylight to ensure sufficient lighting. The flash was intentionally not used, relying on sunlight alone to test the LiDAR capabilities. For the third and fourth scans, a significant change was made: the scanning was done at night using the iPhone’s built-in flash. The third scan was completed in ten minutes, and the time was then shortened to five minutes for the fourth scan. Likewise, the five-minute scan was processed on the device, while the ten-minute scan had to be processed on the cloud. The nighttime scans aimed to assess how effective the scanning process is under these conditions and to determine whether flash lighting improves the quality of data collection or is unnecessary, particularly for LiDAR.

3.5. Model 2: Photogrammetry with Pix4Dcatch and Pix4Dmapper

The photogrammetry approach was conducted using Pix4Dcatch, which is designed to automatically capture images through the phone. It is installed on the iPhone 15 Pro Max to ensure consistency between the models. The application collects the images that will be used to reconstruct the 3D models using Pix4DMapper. The data collection phase involved scanning the mock-up crime scene with four scenarios. The same person conducted all scans to ensure consistency in data collection. The first scan was conducted under daylight, with 10 min allocated for the scanning process. The aim was to establish a baseline for daylight scanning efficiency and reconstruction accuracy. The second scan was conducted under the same daylight conditions, while the scanning duration was reduced to 5 min. The third scan aimed to assess the 3D model under nighttime conditions using only the built-in flash. Also, the scanning duration was 10 min, as the first scan was used to compare the impact of the lighting condition upon the 3D models between the third and the first scan, considering that the duration was constant for both scans. The fourth scan was conducted under the same lighting conditions as the third at night. The scanning duration was reduced to 5 min to assess the impact of the scanning duration upon the model under the same lighting conditions. Each scan followed a structured approach, involving three rounds of circular motions in overlapping paths around the scene to capture the scene from multiple angles: (1) medium distance, 2–3 ft away from the target, providing a balanced scene perspective; (2) closer distance to the ground to capture details closer to the surface; and (3) higher distance using a selfie stick to ensure complete coverage. After completing the scanning processes, all data were collected through Pix4Dcatch, including the captured photos, metadata, and location data. They were exported as a ZIP file and processed through Pix4DMapper to reconstruct the 3D models. 
The resulting point clouds were then exported in LAS format so that CloudCompare could be used for point-wise evaluation.

3.6. Model 3: The Leica BLK360 Second Generation

As the ground truth, a Leica BLK360 G2 was used to scan the same area; the scan lasted 10 min. The scanning process involved moving the scanner to multiple positions to ensure proper scene coverage and prevent blind spots. The scanned model data were stored in LAS format, which is compatible with CloudCompare (Version 2.6.3), for analysis and comparison.

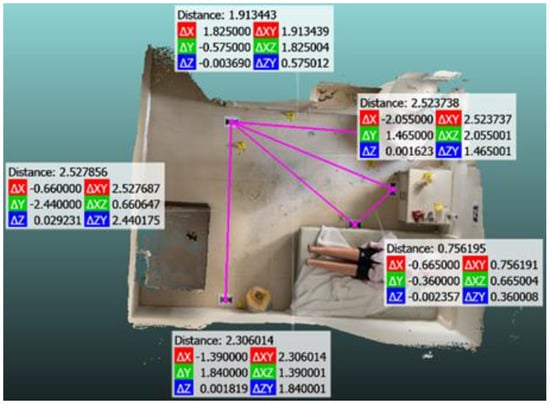

3.7. Scanned Models’ Accuracy Assessment

The accuracy of the constructed 3D models is assessed using two methods: (1) reference markers and (2) point-cloud comparison. For the reference markers, a simple mean absolute error (MAE) metric is computed, which takes the absolute difference between the predicted values from the 3D models and the actual values measured using a laser meter. Figure 3 shows the predicted values computed in CloudCompare. We repeated the predicted measurement three times for each marker pair and averaged the errors over the repetitions to enhance the accuracy. The overall MAE for each scan is calculated by averaging the MAE values across all marker pairs. The scans were evaluated in their raw formats, that is, the E57 file format from the Recon-3D application and the LAS format from Pix4Dmapper. These raw formats allow us to inspect the models visually in CloudCompare and measure their dimensions precisely while ensuring that no editing was applied to enhance them visually. Each scan was assessed to confirm that it contains the key elements, including the markers and AprilTags. For the point-cloud comparison, the models must be aligned to the reference model. The Iterative Closest Points (ICP) algorithm was used to align the models, thus enabling the calculation of the point-wise errors.

Figure 3.

Measurements of five pairs of markers using CloudCompare.
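The marker-based MAE described in Section 3.7 can be illustrated with a minimal Python sketch. The function names and the measurement values below are hypothetical and serve only to show the averaging steps (three repeated model measurements per marker pair, then an average across pairs); they are not the data from this study.

```python
from statistics import mean

def pair_mae(predicted_m, actual_m):
    """MAE for one marker pair: average absolute error over repeated measurements."""
    return mean(abs(p - actual_m) for p in predicted_m)

def overall_mae(measurements):
    """Overall MAE for a scan: average of the per-pair MAE values."""
    return mean(pair_mae(predicted, actual) for predicted, actual in measurements)

# Hypothetical example in meters:
# (three repeated distances read from the 3D model, laser-meter reference distance)
example_scan = [
    ([1.52, 1.49, 1.51], 1.50),
    ([2.03, 2.02, 2.04], 2.00),
]

print(f"Overall MAE: {overall_mae(example_scan):.4f} m")
```

Because every marker pair has the same number of repetitions, averaging per pair first and then across pairs gives the same value as averaging all absolute errors at once.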

4. Results and Discussion

4.1. Model 1: LiDAR with an iPhone and Recon-3D App Results

The first scan, conducted in the daytime over ten minutes, gathered 1,312,097 points and had an overall MAE of 0.029 m. This level of precision indicates that LiDAR technology maintains accuracy in capturing scene dimensions and spatial relationships during longer sessions. In the second scan, which lasted five minutes in the daytime, the number of points increased significantly to 2,111,092, with an overall MAE of 0.012 m. The improved accuracy is attributed to the increased density of the collected point cloud. This accuracy is sufficient to capture detailed 3D data for crime scene investigation, especially when timing is critical and swift yet accurate results are required. While it may seem logical that a longer LiDAR scan would gather more points, in practice the prolonged scan (ten minutes) produced fewer registered points, leading to lower mapping accuracy. The mobile Recon-3D app crashed and failed to process the dataset, so the cloud system was used for the processing. It appears that Recon-3D performs point cloud registration very conservatively, rejecting many points as outliers, particularly when the distance between the camera and the surface varies widely. The third scan, which took place at nighttime for over ten minutes using the built-in flash of the iPhone, gathered a total of 1,143,808 points. However, it required approximately 9 h and 40 min of processing time over the cloud. Despite the low-light conditions, this scan achieved an MAE of 0.021 m, comparable to the first scan, which also lasted ten minutes but was taken in the daytime. This level of accuracy was achieved in a pitch-dark environment, showing that the built-in iPhone flash is beneficial where lighting conditions are not ideal.

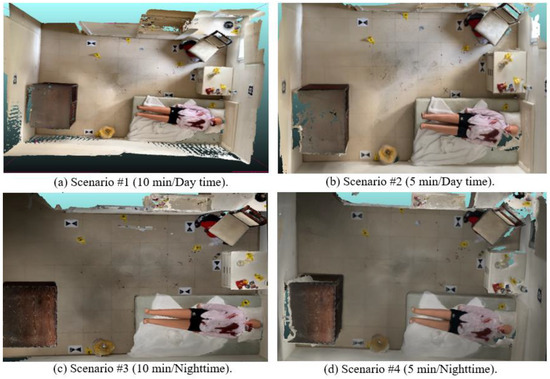

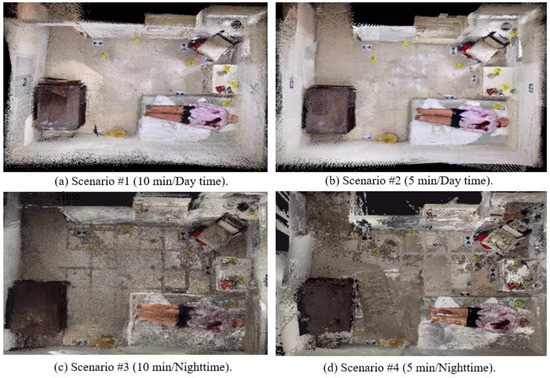

The last scan was also taken at nighttime, but the scanning duration was reduced to five minutes with the iPhone flash on. It generated the highest number of points, 2,374,383, and achieved an MAE of 0.0066 m, the lowest among the four LiDAR scans. This notable increase in point cloud size and the low MAE suggest that quick scanning with the iPhone flash can produce accurate and detailed 3D models, which is valuable for documentation and analysis in low-light scenarios. However, although this scan performed well on the accuracy measurements, the scene color and visual representation showed many discrepancies across different areas of the scene; shadows and some objects were also not aligned properly. Figure 4 shows the textured LiDAR mapping results for the four scenarios. Scenario 4 yields the most accurate 3D map, which we attribute to the reduced sunlight interference in the LiDAR sensor, but its visual representation was poor due to the use of the flashlight. Scenario 2 offers the best overall performance, balancing map accuracy and visual representation of the scene.

Figure 4.

Three-dimensional map results of Model 1 using LiDAR with four different scanning scenarios. Although the nighttime scans are visually poor, their maps are more accurate due to the minimal sunlight interference.

4.2. Model 2: Photogrammetry with an iPhone and Pix4Dcatch App Results

The first scan was conducted during the daytime for 10 min; 1483 images were captured, and 8,450,137 points were registered. It had an overall mean absolute error (MAE) of 0.017 m, the highest accuracy among the four photogrammetry scans. In the second scan, the duration was reduced to 5 min, capturing 745 images, which reduced the number of points to 4,546,991 and resulted in an overall MAE of 0.020 m. This result is expected, since the shorter scanning duration reduced the number of collected points and produced lower performance than the longer scan under the same lighting conditions (the first scan). The third scan was taken at nighttime to assess the effect of the lighting conditions on the 3D model while maintaining the same duration as the first scan (10 min), resulting in 994 images with 2,219,352 points. The resulting error was 0.030 m, showing that poor lighting conditions degrade performance. The fourth scan was performed at nighttime with the scanning duration reduced to 5 min, capturing 483 images with 1,529,689 points. It had an overall error of 0.032 m. In this scan, the combination of darkness and the shorter scanning duration led to fewer points and the lowest accuracy of the four scans. Figure 5 compares the mapping results from photogrammetry, showing that Scenario 1 produces the best map in terms of accuracy and visual representation, whilst Scenario 4 has the worst performance.

Figure 5.

Three-dimensional map results of Model 2 using photogrammetry with four scanning scenarios, showing the best results in daytime.

4.3. Comparison of Model 1 and Model 2

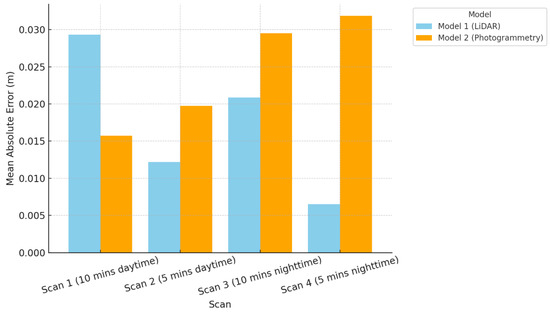

Figure 6 presents the comparative analysis of Model 1 (LiDAR) and Model 2 (photogrammetry) using the MAE values of marker pairs for the four scanning scenarios. The data indicate that Model 1 generally demonstrates lower MAE values than Model 2. The exception is Scenario 1 (10 min, daytime), in which the photogrammetry model showed better accuracy than the LiDAR model. One explanation is that the photogrammetry model registered more points, while the prolonged LiDAR scan resulted in rejected data and a reduced point count. Another reason may be the extensive sunlight interference in LiDAR sensing in the daytime. This is consistent with the LiDAR map from Scenario 4 (5 min, nighttime) showing the best accuracy, since sunlight interference is lowest at nighttime. However, the textured LiDAR map from that scenario was visually poor due to the poor image quality. Therefore, Model 1 from Scenario 2 was chosen for a point-wise comparison with the Leica BLK360 G2 reference model. In the third and fourth scans, Model 1 maintained a lower MAE across marker pairs than Model 2, indicating that the LiDAR model performed better in pitch-dark settings by relying on the iPhone’s built-in flash.

Figure 6.

Comparison of MAE for Model 1 (LiDAR) and Model 2 (photogrammetry) across the four scanning scenarios (lower is better). The best LiDAR result is at nighttime with a fast scan, while the best photogrammetry result is in the daytime with a slow scan.

The LiDAR model generally achieved lower overall MAE values, with the differences between the scans becoming more pronounced in reduced-light conditions. In such scenarios, photogrammetry scans struggled to maintain accuracy, leading to an increase in the overall MAE. Conversely, the LiDAR model’s overall MAE clearly showed that the prolonged 10 min scanning time, whether in the daytime (as demonstrated in Scenario 1) or at night (as shown in Scenario 3), results in decreased accuracy compared to the shorter-duration scans of 5 min (as shown in Scenarios 2 and 4) due to the previously mentioned explanation of the Recon-3D point cloud registration problem.
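The scenario-by-scenario comparison can be summarized with a short Python sketch. The overall MAE values are those reported in Sections 4.1 and 4.2; the dictionary and function names are illustrative only.

```python
# Overall MAE values in meters, as reported in Sections 4.1 and 4.2.
# Scenario keys: 1 = day/10 min, 2 = day/5 min, 3 = night/10 min, 4 = night/5 min.
LIDAR_MAE = {1: 0.029, 2: 0.012, 3: 0.021, 4: 0.0066}
PHOTO_MAE = {1: 0.017, 2: 0.020, 3: 0.030, 4: 0.032}

def lower_error_model(scenario):
    """Return the name of the model with the lower overall MAE in a scenario."""
    if LIDAR_MAE[scenario] < PHOTO_MAE[scenario]:
        return "LiDAR"
    return "photogrammetry"

for scenario in sorted(LIDAR_MAE):
    gap_mm = abs(LIDAR_MAE[scenario] - PHOTO_MAE[scenario]) * 1000
    print(f"Scenario {scenario}: {lower_error_model(scenario)} lower by {gap_mm:.1f} mm")
```

With these values, photogrammetry has the lower overall MAE only in Scenario 1, and the gap in favor of LiDAR is largest in the nighttime 5 min scan.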

From our analysis of LiDAR (Model 1) and photogrammetry (Model 2) in different scanning scenarios, the second LiDAR scan of Model 1 was chosen as the best-scanned model since it achieved a balance between accuracy and visual quality. This scan, which took five minutes during the daytime, captured about 1.6 times as many points as the longer ten-minute first LiDAR scan of Model 1 (2,111,092 versus 1,312,097). The higher point density increased accuracy, and the scan showed good visual quality since it was conducted in the daytime with natural lighting. In contrast, the third and fourth LiDAR scans of Model 1, which were performed at night, had issues such as ghosting, missing parts, and color inaccuracies. The chosen scan offered a precise and visually appealing representation of the mock-up crime scene. Compared with the chosen scan, the most accurate photogrammetry scan (the first scan of Model 2) lasted ten minutes, twice the scanning time, and had a higher MAE of 0.0166 m compared to 0.012 m. Moreover, its scene had various missing parts, although it produced a denser point cloud, as shown in Figure 5a.

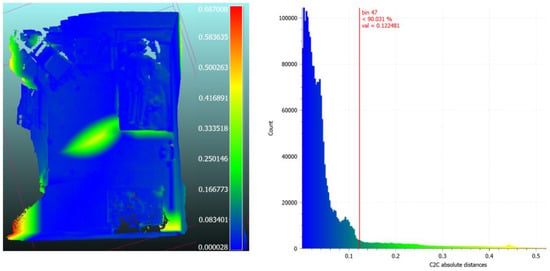

The chosen model (second LiDAR scan of Model 1) was compared to the reference model (Leica BLK360 G2, Model 3). First, the ICP algorithm was applied to align the two point clouds, which is required for model comparison [34]. Figure 7 shows a heatmap of the cloud-to-cloud (C2C) distance errors, providing a more detailed view of where the models differ. Based on the heatmap histogram, around 90% of the points have an absolute distance of under 0.1224 m from the reference. This indicates how close the aligned iPhone LiDAR model is to the model from the professional-grade Leica BLK360 scanner. A relatively higher distance was noted in the middle of the heatmap because some blind spots existed in the Leica BLK360 scanned model, as shown in green. The highest C2C discrepancies appear at the scanned edges, where the difference ranges between 0.58 and 0.66 m, as shown in red in the heatmap; these areas were not the focus of our scanning since they do not contain any relevant evidence. This visual representation highlights where the alignment is strong or weak, giving insight into how well the aligned iPhone model matches the reference Leica model.

Figure 7.

Cloud-to-cloud distance heatmap (in meters) (left) and related histogram (right) showing that most points (90%) fall under 0.12 m of error. The regions of high error are at the sensing boundary or in blind spots during the scanning.
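To make the cloud-to-cloud statistic concrete, the following Python sketch computes nearest-neighbor distances from a compared cloud to a reference cloud and reads off a percentile. It assumes the two clouds are already aligned (for example by ICP), uses a brute-force search that is only practical for small inputs, and adopts a nearest-rank percentile definition; the function names and toy coordinates are hypothetical and are not the procedure or data used in CloudCompare.

```python
import math

def nearest_distance(point, reference):
    """Distance from one point to its closest point in the reference cloud."""
    return min(math.dist(point, ref_point) for ref_point in reference)

def c2c_distances(compared, reference):
    """Cloud-to-cloud distances: one nearest-neighbor distance per compared point."""
    return [nearest_distance(point, reference) for point in compared]

def percentile(values, q):
    """Nearest-rank percentile: smallest value with at least q% of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]

# Toy clouds in meters (hypothetical values, already aligned).
reference_cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
compared_cloud = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.2), (0.0, 1.0, 0.05)]

distances = c2c_distances(compared_cloud, reference_cloud)
print(f"90th percentile C2C distance: {percentile(distances, 90):.3f} m")
```

For clouds with millions of points, a spatial index would replace the brute-force search; the percentile step is unchanged.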

4.4. Discussion

This study evaluates LiDAR and photogrammetry in indoor crime scene scenarios while highlighting their effectiveness in creating 3D models for forensic analysis. Like the findings of Desai et al. [31], our study confirms that handheld LiDAR devices like those found in mobile phones can swiftly and effectively capture crime scenes. Our findings support their results on how LiDAR scanners can achieve acceptable precision levels for crime scene investigations. Furthermore, the swift documentation ability highlighted by Berezowski et al. [7] was also evident in our results, showing how iPhones with LiDAR scanners can efficiently gather point clouds under optimal lighting conditions.

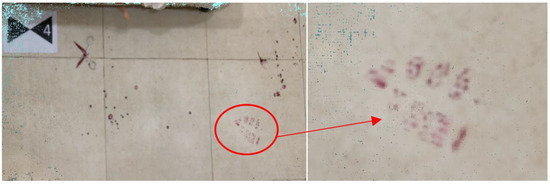

However, despite the fast data acquisition, LiDAR remains sensitive to lighting conditions, which affects the integrity of the collected data as well as efficiency and results in missing points, as seen in several of the scans. Our observations about the limited detail of the crime scene and the discrepancies in color and alignment in low-light conditions raise concerns similar to those in the study by Chase and Liscio [9], which should be taken into consideration when interpreting LiDAR data in forensic investigations. While our study did not explicitly focus on the accuracy of close-up scans of evidence found at crime scenes, we did scan a small area covering a few objects of interest (a footprint, spatters of blood, and scissors) in our mock-up crime scene, as shown in Figure 8. A single scan attempt using Recon-3D at 1 mm resolution took 1 min and produced 1.9 M points. This yielded the highest resolution achievable by the application, with only a few points missing at the scan corners, and the objects of interest in the scene were fully reconstructed in 3D. This close-up reconstruction is expected to be particularly effective in outdoor scenes such as muddy ground, where 3D reconstruction of evidence is critical.

Figure 8.

A 3D reconstructed footprint as a piece of evidence at the scene.

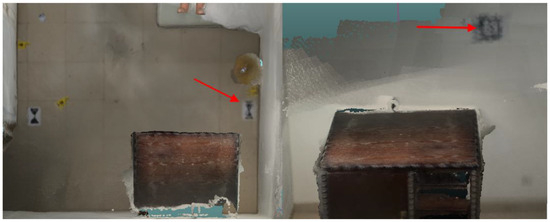

It should also be mentioned that the visual representations sometimes showed discrepancies across different areas of the maps, resulting in shadows and some objects not being aligned properly, as shown in Figure 9. Maintaining the integrity of 3D models is also critical when dealing with criminal cases. Our research incorporates LiDAR and photogrammetry technologies for their accuracy in capturing crime scene details, thus reducing the chances of tampering with data during the investigation process. According to Maneli and Isafiade [5], the accuracy of these reconstructions is essential, as any unauthorized changes or contamination in data quality could affect their admissibility as evidence in the courtroom. In addition, digital documentation of crime scenes not only assists first responders but also helps preserve the integrity of the collected evidence for further investigation, which is often useful in later proceedings. According to Galanakis et al. [6], incorporating 3D digital tools in law enforcement enhances the durability of crime scene documentation by enabling examinations beyond the initial phase of documentation. This feature proves vital because preserving the physical crime scene is not feasible over time. An equally important aspect is the incorporation of hashing mechanisms, such as MD5 or SHA1, to preserve the integrity of the 3D digital map obtained, which helps ensure the chain of custody of the 3D reconstructed maps.

Figure 9.

Failed image alignments at a marker on the floor and an AprilTag on the wall (indicated with arrows).

5. Conclusions

This study investigated how mobile phone-based LiDAR and photogrammetry technologies can be used in reconstructing 3D indoor crime scenes, with a focus on the accuracy and visual quality of the maps. The results show that the built-in LiDAR scanner in the iPhone 15 Pro Max with the Recon-3D app could capture the crime scene in detail with acceptable accuracy for forensic purposes. The comparative study also revealed that photogrammetry using Pix4D mobile apps could create denser point clouds with visual detail, with an MAE of 0.017 m compared to 0.012 m for the LiDAR-based solution. Although the LiDAR solution achieved the best map accuracy in the nighttime scenario (0.0066 m), attributed to the lowest sunlight interference, its visual quality (using flash lighting) was not acceptable for forensic purposes. The research also revealed the saturation problem in LiDAR with Recon-3D, potentially impacting the results of the crime scene documentation. The results are crucial for crime scene investigators to understand the accuracy of the latest 3D-mapping tools and their limitations for court presentations. Future work should investigate recent advancements in 3D rendering techniques, such as Gaussian Splatting, and the integration of AI frameworks to identify crime evidence. The integrity of the 3D models should also be preserved by incorporating checksum calculations in the model.

Recommendations for Practical Implementation

- Use a combination of documentation techniques and technologies: When possible, employing a range of documentation methods, such as LiDAR, photogrammetry, and conventional photography, can improve the documentation results. The ability of photogrammetry to create denser point clouds, combined with the capability of LiDAR to create visually appealing scans that are accurate in spatial measurement, can enhance the documented evidence and act as supporting evidence in addition to the widely used traditional photography. This approach ensures that each technology’s unique strength is maximized.

- Promote the development of LiDAR sensor applications on iOS devices: Since Recon-3D is one of the first applications that utilizes LiDAR technology on iPhones/iPads for forensic science purposes, and it demonstrated a relatively low RMSE compared to a professional-grade Leica scanner, there is potential for other developers to create similar applications on iOS devices. Moreover, to raise interest among researchers and developers in using smartphone-based LiDAR, it is advisable to include a viewing feature within Recon-3D, which the current study uses, or within other applications developed in the future. This feature should enable free viewing and sharing of 3D models without requiring users to register or subscribe.

- Preserve model integrity: While this topic is not the focus of this study, it is crucial to highlight the importance of preserving the accuracy of scanned models, mainly when such models can be used not only as supporting evidence of the crime scene environment and content but also as virtual tours for the court, to better comprehend the crime scene content, dimensions, and activities.

- Ensure the integrity of the reconstructed models is preserved: Checksum mechanisms should be incorporated to validate that the scanned data models are unaltered from their original state when the scan was taken. This ensures that any modifications can be identified, preserving the model’s integrity. A proposed approach is integrating checksum calculations in the scanning application, whether the Recon-3D application or PIX4D. Additionally, data transmission should be secured by employing encryption to protect the model’s data while uploading them to the cloud. Since Recon-3D already transfers data to the cloud, it is essential to specify the security measures implemented when the transfer is complete.
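As an illustration of the checksum recommendation, the sketch below computes a digest of an exported scan file and later verifies that the file is unchanged. It is a minimal example under stated assumptions, not a feature of Recon-3D or PIX4D: the function names are hypothetical, and SHA-256 is chosen here as the default algorithm, whereas the discussion above names MD5 and SHA1 as examples.

```python
import hashlib

def file_digest(path, algorithm="sha256", chunk_size=1 << 20):
    """Return the hex digest of a file, read in chunks to limit memory use."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        # Read fixed-size chunks until read() returns an empty bytes object.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def is_unaltered(path, recorded_digest, algorithm="sha256"):
    """Check a file against the digest recorded when the scan was exported."""
    return file_digest(path, algorithm) == recorded_digest
```

In use, the digest would be computed once at export time (for example, `file_digest("scene.e57")`, where the file name is hypothetical), stored with the case record, and rechecked with `is_unaltered` whenever the model is accessed.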

Author Contributions

Conceptualization, F.M.S., W.M.A. and J.H.K.; methodology, F.M.S., W.M.A. and J.H.K.; software: F.M.S. and W.M.A.; validation, F.M.S. and W.M.A.; formal analysis, F.M.S. and W.M.A.; investigation, F.M.S. and W.M.A.; resources, M.M. and J.H.K.; data curation, F.M.S. and W.M.A.; writing—original draft preparation, F.M.S. and W.M.A.; writing—review and editing, J.H.K.; visualization, F.M.S. and W.M.A.; supervision, J.H.K.; project administration, J.H.K.; funding acquisition, J.H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Security Research Center at Naif Arab University for Security Sciences (Project No. NAUSS-23-R26). The authors would like to express thanks to the Vice Presidency for Scientific Research at Naif Arab University for Security Sciences for their support of this work.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy concerns.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this research.

| Abbreviation | Definition |
|---|---|
| 3D | Three-Dimensional |
| AV | Automated Vehicles |
| BLK360 | Leica BLK360 Imaging Laser Scanner |
| C2C | Cloud to Cloud |
| ICP | Iterative Closest Points Algorithm |
| iOS | iPhone Operating System |
| LiDAR | Light Detection and Ranging |
| MAE | Mean Absolute Error |
| PIX4D | PIX4D Software for Photogrammetry |
| RMSE | Root Mean Square Error |
| SfM | Structure from Motion |
| SLR | Systematic Literature Review |
| TLS | Terrestrial Laser Scanning |
| UAV | Unmanned Aerial Vehicle |
| VR | Virtual Reality |
| GCP | Ground Control Points |

References

- Mehendale, N.; Neoge, S. Review on LiDAR Technology. Soc. Sci. Res. Network 2020.

- Villa, C.; Lynnerup, N.; Jacobsen, C. A virtual, 3D multimodal approach to victim and crime scene reconstruction. Diagnostics 2023, 13, 2764.

- Desai, J.; Liu, J.; Hainje, R.; Oleksy, R.; Habib, A.; Bullock, D.M. Assessing vehicle profiling accuracy of handheld LIDAR compared to terrestrial laser scanning for crash scene reconstruction. Sensors 2021, 21, 8076.

- Drofova, I.; Adámek, M.; Stoklásek, P.; Ficek, M.; Valouch, J. Application 3D forensic science in a criminal investigation. WSEAS Trans. Inf. Sci. Appl. 2023, 20, 59–65.

- Maneli, M.A.; Isafiade, O.E. 3D Forensic crime scene Reconstruction Involving Immersive Technology: A Systematic Literature review. IEEE Access 2022, 10, 88821–88857.

- Galanakis, G.; Zabulis, X.; Evdaimon, T.; Fikenscher, S.; Allertseder, S.; Tsikrika, T.; Vrochidis, S. A study of 3D Digitisation Modalities for Crime Scene Investigation. Forensic Sci. 2021, 1, 56–85.

- Berezowski, V.; Mallett, X.; Moffat, I. Geomatic techniques in forensic science: A review. Sci. Justice 2020, 60, 99–107.

- Thiruchelvam, I.T.D.V.; Jegatheswaran, R.; Juremi, J.B.; Puat, H.A.M. Crime scene reconstruction based on a suitable software: A comparison study. J. Eng. Sci. Technol. Spec. Issue SIET2022 2022, 266–283.

- Chase, C.; Liscio, E. Technical Note: Validation of Recon-3D, iPhone LiDAR for bullet trajectory documentation. Forensic Sci. Int. 2023, 350, 111787.

- Sung, L.J.; Majid, Z.; Mohd Ariff, M.F.; Razali, A.F.; Chen Keng, R.W.; Wook, M.A.; Idris, M.I. Assessing Handheld Laser Scanner for Crime Scene Analysis. Open Int. J. Inform. 2022, 10, 133–144.

- Liscio, E.; Lim, J. Recon-3D Measurement Accuracy Study for Small Scenes. J. Assoc. Crime Scene Reconstr. 2023, 27, 1–10.

- Spreafico, A.; Chiabrando, F.; Teppati Losè, L.; Giulio Tonolo, F. The iPad Pro built-in LiDAR sensor: 3D rapid mapping tests and quality assessment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 63–69.

- Stevenson, S.; Liscio, E. Assessing iPhone LiDAR & Recon-3D for determining the area of origin in bloodstain pattern analysis. J. Forensic Sci. 2024, 69, 1045–1060.

- Bohórquez, A.O.; Del Pozo, S.; Courtenay, L.A.; González-Aguilera, D. Handheld stereo photogrammetry applied to crime scene analysis. Measurement 2023, 216, 112861.

- Becker, S.; Spranger, M.; Heinke, F.; Grunert, S.; Labudde, D. A Comprehensive Framework for High Resolution Image-based 3D Modeling and Documentation of Crime Scenes and Disaster Sites. Int. J. Adv. Syst. Meas. 2018, 11, 1–12.

- Liao, G. A Novel Plan for Crime Scene Reconstruction. In Proceedings of the 5th International Conference on Computer Engineering and Networks, Shanghai, China, 1 October 2015.

- Abate, D.; Toschi, I.; Colls, C.S.; Remondino, F. A Low-Cost Panoramic Camera for the 3d Documentation of Contaminated Crime Scenes. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Hamburg, Germany, 28–29 November 2017.

- Chapman, B.; Colwill, S. Three-Dimensional Crime Scene and Impression Reconstruction with Photogrammetry. J. Forensic Res. 2019, 10, 1–6.

- Kottner, S.; Thali, M.J.; Gascho, D. Using the iPhone’s LiDAR technology to capture 3D forensic data at crime and crash scenes. Forensic Imaging 2023, 32, 200535.

- John, S.; Philip, S.; Singh, N.; Hari, P.B.; Khokhar, G. Economic Solution for Spatial Reconstruction Using LiDAR Technology in Forensic Sciences. In Proceedings of the 2023 International Conference on Computational Intelligence and Knowledge Economy (ICCIKE), Dubai, United Arab Emirates, 9–10 March 2023; pp. 252–256.

- Beck, J.; Arvin, R.; Lee, S.; Khattak, A.J.; Chakraborty, S. Automated vehicle data pipeline for accident reconstruction: New insights from LiDAR, camera, and radar data. Accid. Anal. Prev. 2023, 180, 106923.

- Pix4D. Available online: https://www.pix4d.com/ (accessed on 6 November 2024).

- Mishra, V.; Dedhia, H.; Wavhal, S. Application of drones in the investigation and management of a crime scene. Forensic Sci. 2015, 4, 1–2.

- Deshmukh, J.; Shetty, S.; Waingankar, M.; Mahajan, G.; Joseph, R. CrimeVerse: Exploring Crime Scene Through Virtual Reality. In Proceedings of the 2023 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 26–28 April 2023; pp. 670–675.

- Yu, S.; Thomson, G.; Rinaldi, V.; Rowland, C.; Nic Daeid, N. Development of a Dundee Ground Truth imaging protocol for recording indoor crime scenes to facilitate virtual reality reconstruction. Sci. Justice 2023, 63, 238–250.

- Sazaly, A.N.; Ariff, M.F.M.; Razali, A.F. 3D indoor crime scene reconstruction from micro UAV photogrammetry technique. Eng. Technol. Appl. Sci. Res. 2023, 13, 12020–12025.

- Kanostrevac, D.; Borisov, M.; Bugarinović, Ž.; Ristić, A.; Radulović, A. Data quality comparative analysis of photogrammetric and LiDAR DEM. Geo-SEE Institute 2019, 12, 17–34.

- Flanagan, J.; Robinson, E. Off the Grid: Perspective Grid Photogrammetry in Crime Scene Reconstruction. J. Assoc. Crime Scene Reconstr. 2011, 17, 57–61.

- Sirmacek, B.; Lindenbergh, R. Accuracy assessment of building point clouds automatically generated from iPhone images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 547–552.

- Norahim, M.N.I.; Tahar, K.N.; Maharjan, G.R.; Matos, J.C. Reconstructing 3D model of accident scene using drone image processing. Int. J. Electr. Comput. Eng. 2023, 13, 4087–4100.

- Maiese, A.; Manetti, A.C.; Ciallella, C.; Fineschi, V. The introduction of a new diagnostic tool in forensic pathology: LiDAR sensor for 3D autopsy documentation. Biosensors 2022, 12, 123.

- Carew, R.M.; French, J.; Morgan, R.M. 3D forensic science: A new field integrating 3D imaging and 3D printing in crime reconstruction. Forensic Sci. Int. Synerg. 2021, 3, 100205.

- Bostanci, G.E. 3D reconstruction of crime scenes and design considerations for an interactive investigation tool. arXiv 2015, arXiv:1512.03156.

- Rusinkiewicz, S.; Levoy, M. Efficient variants of the ICP algorithm. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May 2001–1 June 2001; IEEE: Piscataway, NJ, USA, 2002; pp. 145–152.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).