Abstract

In this paper, we propose a novel approach for egocentric 3D human pose estimation using fisheye images captured by a head-mounted display (HMD). Most studies on 3D pose estimation have focused on heatmap regression and on lifting 2D information to 3D space. This paper addresses the depth ambiguity of highly distorted 2D fisheye images by proposing the SegDepth module, which jointly regresses segmentation and depth maps from the image. Through segmentation, the SegDepth module distinguishes the human silhouette, which is directly related to pose estimation, and it simultaneously estimates depth to resolve the depth ambiguity. The extracted segmentation and depth information are transformed into embeddings and used for 3D joint estimation. In the evaluation, integrating the SegDepth module into well-established methods such as Mo2Cap2 and xR-EgoPose improves their 3D pose estimation performance, demonstrating its effectiveness and general applicability.

1. Introduction

Recently, more people have begun playing virtual reality (VR) games while wearing head-mounted displays (HMDs). During gameplay, it is important to track the user’s pose in order to receive user inputs or to synchronize the virtual representation of the user, an avatar, according to the user’s pose. Researchers [1] have estimated the full-body pose using the inverse kinematics (IK) method with only a few limb joint positions, but this approach often yields inaccurate results and can lead to a negative user experience [1,2].

Many researchers have attempted to track the full-body pose. The vision tracking approach is promising because it can be achieved with one or a few cameras, which makes it cheap and lightweight, with a high level of compatibility and accuracy [3,4,5]. In general, there are two types of vision tracking approaches: outside-in and inside-out [6,7,8,9,10]. The outside-in tracking approach usually takes full-body images from one or more external cameras [11,12,13]. However, it necessitates a camera setup process and requires space and money to accommodate the cameras [14,15]. To solve these problems, researchers [8,10] have recently attempted an inside-out tracking approach, where images are captured from cameras mounted on an HMD or other body-worn devices. This approach is highly portable. However, most inside-out approaches rely solely on 2D information, such as heatmaps, and perform 3D lifting to estimate the 3D human pose.

In this paper, we propose a new inside-out approach for 3D pose estimation using a fisheye camera mounted on an HMD. Our approach regresses depth maps and segmentation maps in addition to the heatmaps. However, regressing all three in a single model is challenging due to optimization difficulties and a high risk of overfitting. To solve these issues, we use two separate backbone networks: one dedicated to heatmap regression and the other dedicated to the regression of depth maps and segmentation maps. Since depth maps and segmentation maps have similar visual characteristics, we regress them with the same backbone. By integrating information from the segmented depth map (SegDepth) and the heatmap, our method incorporates depth information more effectively than previous approaches that lifted a 2D pose into a 3D pose. This results in improved accuracy for 3D pose estimation, especially when dealing with depth ambiguity. In evaluations, integrating the SegDepth module into existing architectures (xR-EgoPose [8] and Mo2Cap2 [10]) improves their performance. These results demonstrate the generality and adaptability of the SegDepth module.

In this paper, we propose the following:

- A SegDepth module to effectively extract depth features from highly distorted images.

- An architecture that uses the features of the depth map, segmentation map, and heatmap for 3D pose estimation.

- A new end-to-end framework for 3D pose estimation using images from a single fisheye camera mounted on an HMD.

2. Related Work

Three-dimensional (3D) pose estimation is usually performed with an RGB-D camera [16,17] or multiple RGB cameras in a studio [15,18,19,20,21,22,23]. However, 3D pose estimation using an RGB-D camera is significantly affected by sunlight and its use is limited due to its weight and high power consumption. Additionally, estimation with multiple RGB cameras in a studio requires a certain room size, high setup time, and substantial costs. Many researchers have attempted to address these issues through single-camera estimation using advanced convolutional neural networks (CNNs). In this section, we describe previous technologies for 3D human pose estimation utilizing CNNs.

2.1. Single Front View Estimation

With the advent of CNNs and large training datasets, significant advancements have been made in monocular human 3D pose estimation. Before HMDs became popular, researchers attempted to estimate human 3D poses using front (outside-in view) images of humans. With front images, there are two typical methods to regress 3D pose: (i) directly regressing 3D joint positions, and (ii) regressing 2D joint positions and then lifting them to 3D. Examples of the first method can be seen in the works of Zhou [24] and Tekin [25]. Zhou et al. [24] proposed a method that explicitly enforces bone-length constraints in 3D pose prediction by using a generative forward-kinematic layer. Tekin et al. [25] proposed an architecture that extracts the embedded features of the images by the CNN and then inputs them into the pre-trained decoder. In addition, Pavlakos et al. [26] introduced a 3D approach that regresses a volumetric representation of the 3D skeleton through a coarse-to-fine architecture. These methods [13,24,25,26,27,28], which directly regress 3D pose, often face generalization issues, as they tend to overfit to the specific environments used by the benchmark datasets.

The solution to this generalization issue involves performing the estimation within two subtasks: (i) 2D pose estimation and (ii) lifting the estimated 2D pose to a 3D pose [7,11,12,29]. In the first subtask (for 2D pose estimation), a regressor extracts direct pose vectors [30] or heatmap-based 2D pose features [5,31]. In the second subtask, a regressor lifts the 2D joint pose into the 3D joint pose. For example, Zhou et al. [11] estimated the 3D joint pose by combining information from the sparse dictionary of the 2D heatmap. Bogo et al. [7] predicted 3D pose by selecting the best 3D mesh model that matches its 2D projection. Tome et al. [32] proposed a method that generated a plausible 3D human model from 2D heatmaps with a pre-trained probabilistic layer, and refined these heatmaps by combining 3D pose projection and the image features. Additionally, Martinez et al. [33] introduced a simple architecture that lifts 2D joint positions to 3D joint positions with low error rates [33].

These two-step approaches, however, suffer from a lack of depth information, as they estimate 3D pose only using 2D joint information, leading to ambiguous results. In addition, their studies focused on networks using images from a front view, which are not compatible with the recent HMD-based VR systems that require a high level of portability without the need for additional installations, such as placing a camera in a room.

2.2. Egocentric 3D Human Pose Estimation

As HMD-based VR systems have become more popular, several researchers [8,9,10] have introduced methods that are compatible with an HMD. They mostly attached one or two cameras underneath a hat, glasses, or an HMD.

The earliest study using the egocentric view is Rhodin’s work [34], which estimated the stereo egocentric pose using a pair of fisheye cameras. Its main approach involved taking 2D features from each fisheye view and optimizing the 3D pose by extracting 3D features. Zhao [35] and Cao [36] introduced a similar method involving two image regressions from two cameras. However, because these methods processed two images, they required more computational resources and more electrical power than methods that regress a single image. This is particularly critical for HMDs, which rely on battery power.

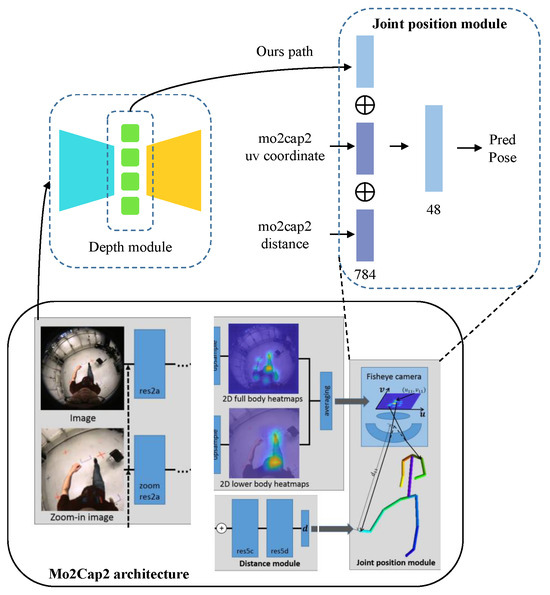

Xu et al. [10] proposed the Mo2Cap2 framework, which uses a single fisheye camera mounted underneath a hat. To overcome the rapid resolution degradation at body joints far from the fisheye camera, such as the ankles and knees, they proposed a two-stream CNN architecture. This architecture regresses a zoom-out image to track the overall body pose and a zoom-in egocentric image to specifically track the lower body joints.

Tome et al. [8] also used an egocentric view with a single fisheye camera mounted underneath an HMD. They proposed the xR-EgoPose framework, which supports dual-branch decoders for lifting 2D features to 3D poses. They also published a large synthetic dataset with better image quality than the previously published Xu dataset [10].

Wang et al. [9] proposed a 3D pose estimation framework with two branches. The two branches extract the 2D body pose features with a heatmap and scene depth features with a depth map. They extracted the scene depth features after excluding the body region from the images and used the scene depth features to estimate the 3D human pose together with heatmap pose features. Their main idea was that the human pose is generally influenced by the objects around the human (e.g., hands on a keyboard often have a similar depth to the keyboard itself); thus, incorporating scene depth features into the regression can increase accuracy. In embedding these scene depth features, they implemented a scene voxel network [37]. This approach tends to reduce speed performance and consume significant computational resources. The use of scene depth features for 3D pose estimation offers advantages in tracking occluded body parts.

Wang’s approach [9] is somewhat similar to our framework in regard to using two branches to obtain depth features. Their depth features, however, embedded the scene information while our depth features embedded the body depth information. Additionally, Wang’s approach would not be compatible with VR systems because the essential scene information (real-world objects around a user) in their approach would not be available. This is because people play VR games in empty spaces for safety reasons (to prevent bumping into real-world objects). Our approach, which uses only the body image, would be promising in VR systems regardless of the availability of scene information.

Liu et al. [38] proposed a self-supervised framework, named EgoFish3D, for 3D pose estimation, using a single fisheye camera and third-person view images. The framework solves key challenges in egocentric fisheye-based estimation, including severe image distortion, frequent occlusions, and a lack of real-world annotated datasets. EgoFish3D integrates three modules: (i) a third-person module that estimates 3D poses using synchronized third-person cameras; (ii) an egocentric module that predicts 3D poses with egocentric fisheye images, and (iii) an interactive module that aligns the third-person and egocentric coordinate systems. The framework shows strong robustness in real-world scenarios, demonstrating the potential of self-supervised methods for egocentric 3D pose estimation.

3. Our Methods

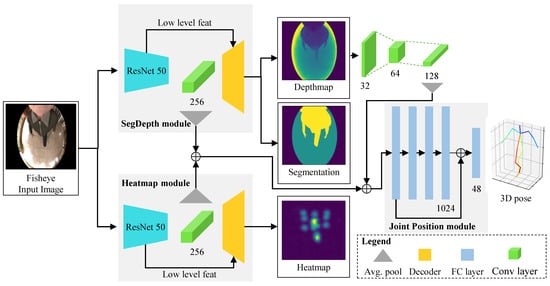

We propose a new method for egocentric body pose estimation using the segmentation depth decoder. In this section, we describe the proposed architecture of our framework and its three modules. An overview of our approach is shown in Figure 1.

Figure 1.

Overview of our framework. The RGB image ($I$) is input to generate 2D pose heatmaps, segmentation maps ($\hat{S}$), and depth maps ($\hat{D}$). The joint position module combines the heatmap features, SegDepth features, and an additional convolutional feature extracted from the depth map to estimate the final 3D pose ($\hat{P}$).

3.1. Architecture

The architecture is divided into three modules: (i) a heatmap module that extracts the embedded pose features during the process of regressing a heatmap image, (ii) a SegDepth module that segments the body region from the images, extracting both embedded depth features and convolutional features from the depth map, and (iii) a joint position module that estimates 3D joints based on concatenating the features from the heatmap module, the SegDepth module, and the convolutional features extracted from the depth map.

3.1.1. Two Branch Architecture

In designing the architecture, we initially faced the question of how many branches our architecture should have. Most previous studies on egocentric 3D pose estimation [8,9,10] had two branches. Mo2Cap2 [10] opted for a two-branch architecture because they regressed two heatmaps individually using two zoom-level input images. xR-EgoPose [8] used dual branches because they regressed two types of outputs: 3D joint positions and a heatmap with 2D information. Wang’s approach [9] implemented two branches to extract 2D body features with a heatmap and body-excluded scene depth features with a depth map. Given our need to extract both 2D body features and body depth features, we designed a dual-branch architecture following their approaches.

With the dual-branch architecture, we considered whether our network should start with two branches, like Mo2Cap2 [10], or begin with one branch and split into two decoding branches, as in xR-EgoPose [8]. This difference comes from the number of features that are used for 3D pose estimation. xR-EgoPose [8] extracts one feature, the heatmap feature, and uses it to regress 3D joint positions; it therefore needs only one branch for heatmap feature extraction at the input. Mo2Cap2 [10] extracts two heatmap features from two different input images (showing zoom-in and zoom-out views of the user’s body), thus requiring two branches from the input onward. Similarly, Wang’s approach [9] extracts two features (heatmap and depth map features) with two branches from the input onward. Since our main idea was to use two features, the heatmap and depth map features, to regress the 3D pose, we followed Wang’s structure and started with dual branches, but we used the body depth map feature instead of the scene depth feature. As a result, our architecture consists of the SegDepth and heatmap branches (see Figure 1).

3.1.2. Heatmap Module

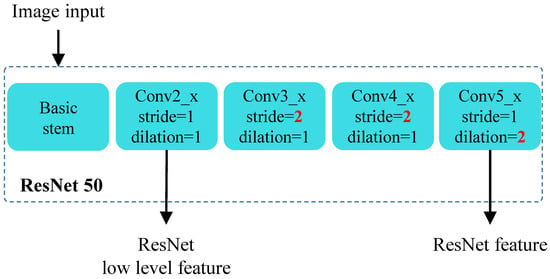

The heatmap module takes a distorted image from a fisheye camera. To mitigate the effects of the distortion, we customized ResNet-50 [39] as the encoder to expand the receptive field and the field of view (FOV). The strides of the third and fourth convx and the dilation of the fifth convx are set to two, which doubles the expansion of the receptive field and FOV (see Figure 2). The expanded receptive field and FOV stretch the compressed parts of the distorted images. The decoder takes the embedding features of the ResNet encoder as input, and the low-level features from conv2x in the encoder are passed to it through a skip connection. The heatmap decoder has two blocks of 3 × 3 convolutional layers (see Figure 3). Each convolutional layer is followed by ReLU [40] and BN [41] to improve the convergence speed and generalization performance of the network, and then by dropout to prevent overfitting.

Figure 2.

Our ResNet-50 structure. The strides of the 3rd and 4th convx and the dilation of the 5th convx are set to two. The output of conv2x is used as a low-level ResNet feature.
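The effect of these stride and dilation settings on feature-map resolution can be illustrated with a short calculation. The sketch below is a hedged illustration, not the authors' code: it assumes the standard ResNet-50 stem (a stride-2 7 × 7 convolution followed by a stride-2 max-pool), 3 × 3 convolutions with matching padding in each stage, and a hypothetical 256 × 256 input. Under these assumptions, replacing the usual stride of the fifth stage with a dilation of two keeps the output at 1/16 of the input resolution instead of 1/32.

```python
def conv_out(n, kernel, stride=1, padding=0, dilation=1):
    """Output length of a convolution or pooling layer along one spatial axis."""
    return (n + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# (name, kernel, stride, padding, dilation) of the layer that sets each
# stage's resolution. Assumptions: standard ResNet-50 stem; conv3_x and
# conv4_x use stride 2; conv5_x uses dilation 2 with stride 1.
STAGES = [
    ("conv1", 7, 2, 3, 1),
    ("maxpool", 3, 2, 1, 1),
    ("conv2_x", 3, 1, 1, 1),
    ("conv3_x", 3, 2, 1, 1),
    ("conv4_x", 3, 2, 1, 1),
    ("conv5_x", 3, 1, 2, 2),
]


def feature_sizes(input_size):
    """Return the spatial size after each stage for a square input."""
    sizes = {}
    n = input_size
    for name, kernel, stride, padding, dilation in STAGES:
        n = conv_out(n, kernel, stride, padding, dilation)
        sizes[name] = n
    return sizes


sizes = feature_sizes(256)  # hypothetical input resolution
for name, size in sizes.items():
    print(f"{name}: {size} x {size}")
```

In this sketch the conv2_x output (the low-level feature used by the skip connection) is four times larger per side than the conv5_x embedding, which is why the decoder needs upsampling steps before the two can be combined.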

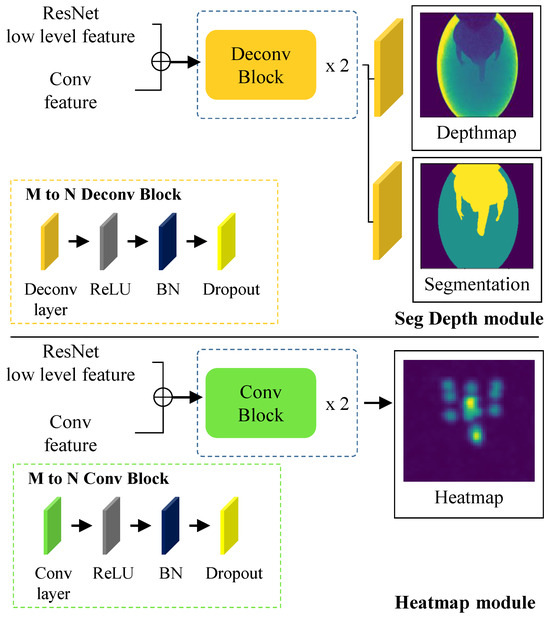

Figure 3.

The decoder takes ResNet and conv features as inputs. The deconv and conv blocks are repeated twice with ReLU, BN, and dropout steps. The SegDepth module decodes the depth and segmented images using the deconv layer. The heatmap module increases the heatmap size by bilinear interpolation.

3.1.3. Segmentation Depth Module

We implemented the SegDepth module in addition to the heatmap module. The encoder in the SegDepth module has the same structure as the encoder in the heatmap module (see Figure 2).

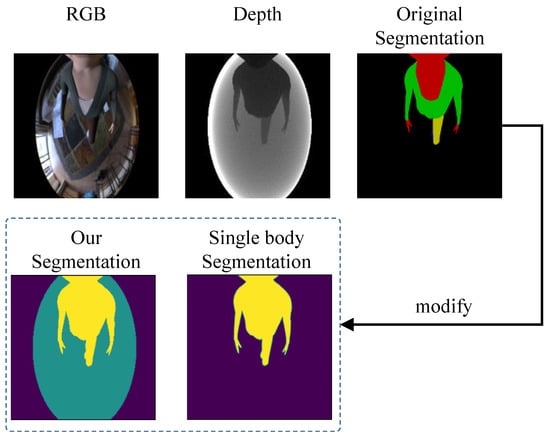

The architecture of xR-EgoPose [8] inspired the design of the decoder of the SegDepth module. xR-EgoPose [8] extracted the 3D pose features using the joint features of the 2D heatmap. Similarly, our SegDepth module extracts the depth features using the 2D features of a segmented image (see the top right images in Figure 3). Since the depth map visually consists of three regions (body, background, and vignetting), our proposed segmentation is designed to have the same three regions (see the top center and bottom left images of Figure 4). Our SegDepth module regresses the depth map along with the segmented 2D image, thus inducing the module to focus on the body region when extracting depth features. Additionally, the embedded features from the regression of the 2D segmentation help enhance the depth regression by leveraging 2D information.

Figure 4.

RGB image of the dataset (top left), 8-bit depth map (top center), original segmentation (top right), modified single-body segmentation (bottom right), and our segmentation (bottom left).

The decoder of the SegDepth module utilizes 4 × 4 deconvolution layers for the regression of the segmented image and depth map (see Figure 3). This is possible because the segmented image and depth map have the same optical shape and structure, allowing the network to learn from each feature, thereby enhancing each other with the information obtained during the estimation process from a distorted image. By implementing the decoder in this manner, we mitigate weight bias that sometimes occurs during training and can reduce the network size while maintaining the performance of the architecture. After processing the deconvolution block, two layers regress the segmented image and depth map individually.

3.1.4. Joint Position Module

The joint position module has fully connected (FC) layers with skip connections, following Martinez’s approach [33]. The joint position module takes the embedding features from both the heatmap and SegDepth modules as input, together with the additional input from the convolutional layers (top right three green layers in Figure 1) of the decoded depth map, and then estimates the 3D joint pose ($\hat{P}$). The embedding features from the heatmap and SegDepth modules provide global features, and the additional input from the depth map convolutional layers provides local features to the joint position module. With the global and local features from the SegDepth module, the joint position module can effectively learn the depth information in the image, which enables more accurate 3D pose estimation than using only the features from the heatmap module.
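The data flow of the joint position module can be sketched in a few lines. The following is a simplified illustration under stated assumptions, not the authors' implementation: the function names, the toy feature sizes, and the ordering of the concatenated features are hypothetical, and the batch normalization and dropout steps of a Martinez-style block are omitted for brevity.

```python
def linear(x, weight, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def residual_fc_block(x, w1, b1, w2, b2):
    """Two FC + ReLU layers with a skip connection that adds the input back."""
    h = relu(linear(x, w1, b1))
    h = relu(linear(h, w2, b2))
    return [xi + hi for xi, hi in zip(x, h)]


def joint_position_input(heatmap_emb, segdepth_emb, depth_conv_feat):
    """Concatenate the two embeddings first, then append the depth-map conv features."""
    embeddings = list(heatmap_emb) + list(segdepth_emb)
    return embeddings + list(depth_conv_feat)


# Toy example with hypothetical 1-D features of sizes 1, 1, and 2.
features = joint_position_input([1.0], [-2.0], [0.5, 0.0])
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
zeros = [0.0] * 4
out = residual_fc_block(features, identity, zeros, identity, zeros)
print(out)
```

With identity weights the block doubles the positive entries and passes the negative one through unchanged, which makes the contribution of the skip connection easy to see.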

4. Experiments

We evaluate our main concept, namely regressing the depth map and segmentation and using their embedded features alongside features from heatmap regression to estimate 3D joint positions, by applying it to two well-known 3D pose estimation approaches, xR-EgoPose [8] and Mo2Cap2 [10], and examining whether it improves their performance. In addition, we evaluate the effectiveness of our three-region segmentation by comparing it to the single-body segmentation, which extracts only the human body from the distorted images (see the bottom right image of Figure 4). We also compare the single-decoder architecture in the SegDepth module with a dual-decoder architecture that uses two separate decoders. For all evaluations, we use Tome’s xR-EgoPose dataset [8], which supports high scalability and generality by reflecting a variety of use cases. We do not compare our method with Wang’s method [9] because their approach is designed for environments heavily populated with objects around the user, whereas ours is intended for environments without such objects. The results of the two methods would therefore depend heavily on the object density of the dataset, which makes a direct comparison uninformative.

4.1. Dataset

Tome et al. [8], Xu et al. [10], and Wang et al. [9] recently published synthetic datasets for estimating 3D human poses using egocentric images captured by a fisheye camera. Among them, we chose Tome’s xR-EgoPose dataset [8] for training and testing our framework because the dataset contains depth information, which is crucial for regressing a depth map, along with the metadata (3D joint positions in our case). Additionally, the dataset supports high scalability by representing five body types (skinny short, skinny tall, normal, full short, and full tall), a variety of skin colors (white, light-skinned European, dark-skinned European, Mediterranean or olive, dark brown, and black), nine clothing types (sports pants, jeans, shorts, dress pants, skirts, jackets, T-shirts, long sleeves, and tank tops), five shoe types (sandals, boots, dress shoes, sneakers, and crocs), and nine daily life behaviors (see the action types in Table 1). The dataset images have a resolution of 1024 × 1024 pixels and a color depth of 16 bits. The RGB, depth, normals, body segmentation, and pixel world position are available for each frame. Metadata includes 3D joint positions, height (from 155 cm to 189 cm), environment, camera pose (when the images were captured), body segmentation, and animation rig. The segmented images in the xR-EgoPose dataset [8] did not reflect the characteristics of the depth map (see the top right image of Figure 4). Therefore, we adapted the image segmentation to include three regions, similar to the depth map (see the bottom left image of Figure 4), and also created a simpler version with two regions (body and vignetting regions) (see the bottom right image of Figure 4). By comparing these two segmentations, we identify the better one for our framework.

Table 1.

Total number of frames according to the action types and the distribution between training and test sets.

The total number of frames in the modified dataset is 341 K, with 16 female and 18 male characters. The dataset is divided into three sets, as follows:

- Train-set: 225 K, 11 male, 11 female.

- Val-set: 30 K, 1 male, 1 female.

- Test-set: 85 K, 6 male, 4 female.

4.2. Implementation Details

4.2.1. SegDepth Module and Heatmap Module

Given an RGB image $I$ as input, the architecture uses two modified ResNet-50 models [39] to encode the image into two embedding features. The skip-connected low-level features and the embedding features are input to the segmentation depth decoder, which infers the depth map ($\hat{D}$) and the segmentation ($\hat{S}$), and to the heatmap decoder, which infers the heatmap ($\hat{H}$). The heatmap decoder is trained with a mean square error (MSE) loss (Equation (1)), where $H$ is the ground truth (GT) and $\hat{H}$ is what the decoder predicted.

Since the depth estimation and segmentation are performed simultaneously in a single process, we used a unified loss function for depth and segmentation (Equation (2)). Within this unified loss, the depth loss function uses the mean square error with the GT, and the segmentation loss function uses cross-entropy. $D$ and $S$ are the GT of the depth map and the segmentation, and $\hat{D}$ and $\hat{S}$ are the predictions of the decoder.
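As an illustration of the loss terms described above, the sketch below computes an MSE over a small depth map and a per-pixel cross-entropy over a small three-class segmentation map, then combines them. It is a minimal sketch under assumptions: the function names are hypothetical, the maps are plain nested lists, the segmentation prediction is assumed to be given as per-class probabilities, and the unified loss is assumed to be an unweighted sum of the two terms.

```python
import math


def mse(target, pred):
    """Mean square error over two equally shaped 2D maps (lists of rows)."""
    diffs = [(t - p) ** 2
             for t_row, p_row in zip(target, pred)
             for t, p in zip(t_row, p_row)]
    return sum(diffs) / len(diffs)


def cross_entropy(labels, probs, eps=1e-12):
    """Mean per-pixel cross-entropy.

    labels: 2D map of integer class ids.
    probs:  2D map whose entries are per-class probability lists.
    """
    losses = [-math.log(max(pixel_probs[label], eps))
              for l_row, p_row in zip(labels, probs)
              for label, pixel_probs in zip(l_row, p_row)]
    return sum(losses) / len(losses)


def segdepth_loss(depth_gt, depth_pred, seg_gt, seg_probs):
    # Assumption: the unified loss is an unweighted sum of both terms.
    return mse(depth_gt, depth_pred) + cross_entropy(seg_gt, seg_probs)


# Toy 1 x 2 maps; classes 0, 1, 2 stand for three segmentation regions.
depth_gt, depth_pred = [[0.0, 1.0]], [[0.0, 0.5]]
seg_gt = [[0, 2]]
seg_probs = [[[1.0, 0.0, 0.0], [0.25, 0.25, 0.5]]]
print(segdepth_loss(depth_gt, depth_pred, seg_gt, seg_probs))
```

The same `mse` helper applies unchanged to the heatmap loss, since that term is also described as an MSE between the GT and the decoder output.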

4.2.2. Joint Position Module

The joint position module takes three features as input: (i) the two embedding features produced by the encoders of the SegDepth and heatmap modules, and (ii) the conv features of the depth map in the segmentation depth module. The joint position module first concatenates the two embedding features and then concatenates the result with the depth map conv features. The concatenated features are the input to the joint position module, which predicts the 3D joint positions ($\hat{P}$) as output. In the loss function of the joint position module (Equation (5)), $P$ denotes the GT of the joint positions, $N$ denotes the total number of joints, and $\hat{P}$ denotes the predicted joint positions.

The entire framework is trained on the sum of the module losses in Equations (1), (2), and (5), with each loss multiplied by its own weight.

These weights were chosen to balance the contributions of each module’s loss term by aligning their scales to the range of 10–100. This alignment allows all modules to contribute proportionally to the total loss, which supports balanced multitask learning.
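One way to write the joint position loss and the overall objective described above is sketched below. This is a hedged reconstruction rather than the exact published equations: the names $L_H$ (heatmap loss), $L_{SD}$ (unified SegDepth loss), $L_P$ (joint position loss), and the weights $\lambda_H$, $\lambda_{SD}$, $\lambda_P$ are notational assumptions, as is the choice of a squared Euclidean distance per joint. No weight values are assumed.

```latex
\begin{align}
L_{P} &= \frac{1}{N} \sum_{j=1}^{N} \left\lVert P_{j} - \hat{P}_{j} \right\rVert_{2}^{2}, \\
L_{\mathrm{total}} &= \lambda_{H} L_{H} + \lambda_{SD} L_{SD} + \lambda_{P} L_{P}.
\end{align}
```

Under this form, choosing each $\lambda$ so that its weighted term falls in the range of 10–100 is what keeps any single module from dominating the gradient.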

The framework was trained on the training set for 10 epochs with a batch size of 20. The optimizer was Adam [42]. Starting from its initial value, the learning rate was halved after every 2 epochs to avoid overfitting. To match the size between the outputs of the decoders (depth map, segmentation, and heatmap) and the GT, bilinear interpolation was used. The deconv layer in the segmentation depth decoder had a kernel size of 4, a stride of 2, and a padding of 1 to double the size of its input. The modified ResNet-50 was pre-trained on the ImageNet dataset [43]. All training was done on two RTX 3090 GPUs and took about 10 h to complete.
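Two of the settings above can be checked with simple arithmetic. The sketch below is illustrative only: `deconv_out` applies the standard transposed-convolution size formula (without output padding) to show that a kernel size of 4, stride of 2, and padding of 1 doubles the spatial size, and `learning_rate` shows a schedule that halves the rate every 2 epochs. The base learning rate of 1e-3 is a hypothetical placeholder, not a value taken from the paper.

```python
def deconv_out(n, kernel=4, stride=2, padding=1):
    """Output length of a transposed convolution along one spatial axis."""
    return (n - 1) * stride - 2 * padding + kernel


def learning_rate(epoch, base_lr, step=2, factor=0.5):
    """Learning rate at a 0-indexed epoch when it is scaled by `factor` every `step` epochs."""
    return base_lr * factor ** (epoch // step)


# Each deconv layer doubles the feature-map size: 16 -> 32 -> 64.
size = 16
for _ in range(2):
    size = deconv_out(size)
print(size)

# Hypothetical base rate; only the halving pattern follows the text.
for epoch in range(10):
    print(epoch, learning_rate(epoch, base_lr=1e-3))
```

Over 10 epochs this schedule applies four halvings, so the final two epochs run at 1/16 of the initial rate.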

4.2.3. Implementation of xR-EgoPose and Mo2Cap2

Since the implementations of xR-EgoPose [8] and Mo2Cap2 [10] are not publicly available, we implemented them based on their papers and datasets. To implement xR-EgoPose, we followed their dual-branch architecture to predict 3D joints from a single RGB image. The image was converted into a heatmap via ResNet-101, the encoder compressed the heatmap into a vector of length 20, and the vector was used as input to two decoder branches. One decoder branch regressed the heatmap and the other estimated the 3D human pose.

When implementing Mo2Cap2, we took two images as initial inputs by following their implementations: one image was a full-sized fisheye image that showed a full body, and the other image was a zoomed-in image with a clear view of the lower body (i.e., legs and feet). The implemented Mo2Cap2 had a dual-branch architecture to predict two heatmaps with the two zoom-level images. By averaging these two heatmaps, the Mo2Cap2 network generated an averaged heatmap on u,v coordinates. Additionally, the network regressed the distance between the camera and each joint using a distance module consisting of two residual blocks and a fully connected layer. We followed their implementation up to this step, but we were unable to feed the u,v coordinates directly into Mo2Cap2’s calibration function [44] because the coordinates of the dataset that we used were not compatible with Mo2Cap2. Therefore, instead of adopting Mo2Cap2’s final step, which inputs the 3D coordinate normal vectors and distance values into the fisheye camera calibration function, we implemented a simpler network. This network consists of a fully connected layer that regresses the 3D joint positions using the 2D joint u,v coordinates from the averaged heatmap and the distance values.
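The heatmap-averaging step in this re-implementation can be illustrated as follows. This is a simplified sketch, not the actual implementation: the function names are hypothetical, the heatmaps are plain nested lists for a single joint, and the zoomed-in heatmap is assumed to have already been mapped back onto the same grid as the full-view heatmap. The joint location is taken as the position of the maximum response.

```python
def average_heatmaps(h_full, h_zoom):
    """Element-wise mean of two equally sized 2D heatmaps (lists of rows)."""
    return [[(a + b) / 2.0 for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(h_full, h_zoom)]


def argmax_uv(heatmap):
    """Return (u, v) = (column, row) of the strongest response."""
    best_u, best_v, best_val = 0, 0, heatmap[0][0]
    for v, row in enumerate(heatmap):
        for u, val in enumerate(row):
            if val > best_val:
                best_u, best_v, best_val = u, v, val
    return best_u, best_v


# Toy 2 x 3 heatmaps for one joint from the two zoom levels.
full_view = [[0.0, 0.2, 0.1], [0.1, 0.9, 0.3]]
zoom_view = [[0.0, 0.4, 0.1], [0.3, 0.7, 0.2]]
u, v = argmax_uv(average_heatmaps(full_view, zoom_view))
print(u, v)
```

The resulting (u, v) pair, together with the regressed camera-to-joint distance, is the kind of input the fully connected layer described above would receive for each joint.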

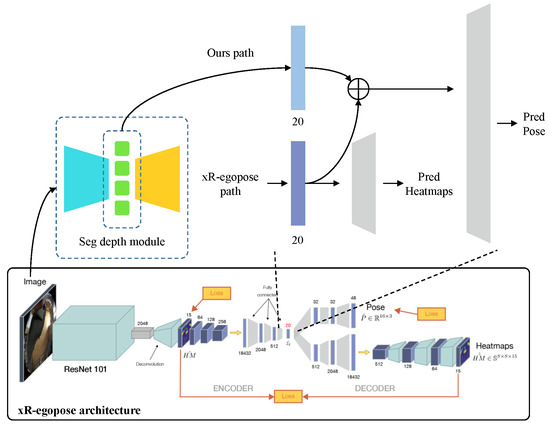

Additionally, we implemented the SegDepth module on top of the xR-EgoPose and Mo2Cap2 implementations; their SegDepth module has the same architecture as ours: the modified ResNet-50 encoder and the SegDepth decoder, so it uses the same process to extract the depth features. In the xR-EgoPose pipeline, we modified the dual-decoder structure to incorporate the depth features estimated by the SegDepth module. The xR-EgoPose consists of a single encoder, which encodes the heatmap into an embedding vector, and two decoders: one reconstructs the heatmap using the embedding vector, while the other estimates the 3D pose. We kept the embedding vector input to the heatmap reconstruction decoder unchanged but added the depth features estimated by the SegDepth module to the embedding vector input of the 3D pose estimation decoder via concatenation (see Appendix A.1). Similarly, we incorporated the depth features estimated by the SegDepth module into the Mo2Cap2 pipeline by modifying Mo2Cap2 as follows: Mo2Cap2 calculated the average joint coordinates u,v from two heatmaps at different scales and multiplied these coordinates by the camera distance vector to estimate the 3D pose. We added the depth features from the SegDepth module to the camera distance vector by concatenating them (see Appendix A.2).

5. Results

5.1. Evaluation Metrics

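Following Tome’s xR-EgoPose [8], our metric for quantitative evaluation is the MPJPE (mean per joint position error):

$$E(P, \hat{P}) = \frac{1}{N_f} \frac{1}{N_j} \sum_{f=1}^{N_f} \sum_{j=1}^{N_j} \left\lVert P_j^{(f)} - \hat{P}_j^{(f)} \right\rVert_2$$

where $P_j^{(f)}$ and $\hat{P}_j^{(f)}$ are the 3D points of the GT and predicted pose at the frame $f$ for the joint $j$, $N_f$ denotes the number of frames, and $N_j$ denotes the number of joints.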
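The MPJPE is the Euclidean distance between each ground-truth joint and its prediction, averaged over all joints and all frames. A minimal sketch is given below; the function name and the nested-list layout (frames, then joints, then 3D coordinates) are illustrative assumptions, and every frame is assumed to contain the same number of joints.

```python
import math


def mpjpe(gt, pred):
    """Mean per joint position error.

    gt, pred: lists of frames; each frame is a list of (x, y, z) joints.
    """
    total = 0.0
    count = 0
    for gt_frame, pred_frame in zip(gt, pred):
        for gt_joint, pred_joint in zip(gt_frame, pred_frame):
            total += math.dist(gt_joint, pred_joint)  # Euclidean distance
            count += 1
    return total / count


# Toy example: one frame, two joints; one joint is off by 5 units.
gt = [[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]
pred = [[(3.0, 4.0, 0.0), (1.0, 0.0, 0.0)]]
print(mpjpe(gt, pred))
```

The result is expressed in the same unit as the joint coordinates.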

5.2. Effect of Referencing Depth Map with Segmentation

The impact of the SegDepth module was evaluated on three architectures: our proposed method, xR-EgoPose, and Mo2Cap2. The results in Table 2 show that referencing depth and segmentation features consistently improves the mean per joint position error (MPJPE) across all architectures and action types. When referencing depth information alone, xR-EgoPose achieved a 7.1% improvement in MPJPE, Mo2Cap2 showed a 10.1% improvement, and our architecture showed a 4.7% improvement compared to the baseline. Adding segmentation features in addition to depth resulted in further performance gains, with MPJPE reductions of 10.9%, 10.2%, and 8.2% for xR-EgoPose, Mo2Cap2, and our method, respectively. These results suggest that depth information provides additional spatial context that can help resolve ambiguities inherent in 2D heatmap-based methods. The segmentation features improve performance by isolating relevant body regions and reducing noise, which is beneficial for accurate joint localization.

The SegDepth module is particularly beneficial for poses involving dynamic movements, occlusions, and extended limb positions. Among these, the upper stretching, lower stretching, and walking poses showed the most improvement. In the upper stretching pose, the integration of depth and segmentation features reduced MPJPE by 11.8% for xR-EgoPose and 12.2% for Mo2Cap2. This improvement reflects the module’s ability to enhance spatial localization in stretching poses with significant limb movement. For lower stretching, xR-EgoPose and Mo2Cap2 showed MPJPE reductions of 12.0% and 11.6%, respectively, demonstrating the module’s effectiveness in capturing leg positions and resolving spatial ambiguities in motion. In walking poses, xR-EgoPose and Mo2Cap2 showed improvements of 13.6% and 10.6%, respectively, highlighting the utility of depth and segmentation features in addressing occlusion issues and maintaining spatial consistency in motions.

Table 2.

The results of MPJPE for our method, Mo2Cap2, and xR-EgoPose, categorized by action types and the average, with or without depth and/or segmentation regression using the SegDepth module.

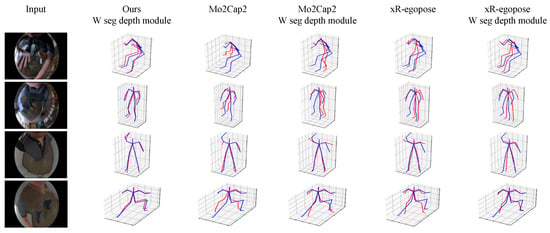

The qualitative comparisons presented in Figure 5 provide additional insight into the improvements achieved by the SegDepth module. The results show accurate joint localization and smoother pose reconstruction when depth and segmentation features are referenced. For Mo2Cap2, our proposed method resolves depth ambiguities. For example, when depth and segmentation features are used, the reconstructed poses show a reduction in errors related to limb length discrepancies, especially in walking or stretching actions. The estimated leg lengths are closer to the ground truth compared to the baseline, showing the effect of depth information in maintaining consistent proportions. Similarly, the reconstructed poses for xR-EgoPose show improved accuracy in reflecting realistic joint movements for actions involving significant upper-body bending.

Figure 5.

The qualitative evaluation of our model, Mo2Cap2, and xR-EgoPose with and without the SegDepth module. The blue skeleton denotes ground truth and red denotes the estimated result.

5.3. Ablation Study

We conducted an ablation study to evaluate the impact of key design choices in the proposed SegDepth module, focusing on the decoder architecture and segmentation strategy. This analysis shows how these components affect network performance, optimization stability, and computational efficiency.

5.3.1. Decoder Architecture

To assess the influence of the decoder architecture, we compared two configurations: a single-decoder architecture and a dual-decoder architecture. The single-decoder architecture regresses both the depth map and segmentation using a single decoder, whereas the dual-decoder architecture uses two separate decoders for depth map and segmentation. The experimental results in Table 3 show that the single-decoder architecture has advantages over the dual-decoder configuration. (i) The single-decoder architecture reduces the overall network size, making it more computationally efficient. (ii) It achieves better performance in terms of MPJPE, showing that the shared representation of depth map and segmentation features improves generalization.
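The size argument can be illustrated with a back-of-the-envelope parameter count. The sketch below assumes a hypothetical decoder made of three 3×3 convolutions followed by a 1×1 output layer; the channel widths are invented for illustration and are not the layer configuration used in this work. Only the output channel counts follow the text: one channel for depth (an assumption) and three for the upper body, lower body, and background classes.

```python
def conv_params(in_ch, out_ch, kernel=3):
    """Weights plus biases of a single 2D convolution layer."""
    return kernel * kernel * in_ch * out_ch + out_ch


def decoder_params(widths, out_ch):
    """Parameters of a decoder trunk plus a 1x1 output head."""
    trunk = sum(conv_params(a, b) for a, b in zip(widths, widths[1:]))
    head = conv_params(widths[-1], out_ch, kernel=1)
    return trunk + head


WIDTHS = [512, 256, 128, 64]  # hypothetical channel widths
DEPTH_CH = 1                  # assumed single-channel depth map
SEG_CH = 3                    # upper body, lower body, background

# One shared trunk with a joint head versus two independent decoders.
single = decoder_params(WIDTHS, DEPTH_CH + SEG_CH)
dual = decoder_params(WIDTHS, DEPTH_CH) + decoder_params(WIDTHS, SEG_CH)

print(f"single decoder: {single:,} parameters")
print(f"dual decoder:   {dual:,} parameters")
```

Under these assumptions the dual configuration duplicates the entire trunk, while the single decoder only widens the final output layer. The measured sizes for the actual networks are those reported in Table 3.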

Table 3.

The MPJPE and network size with a single decoder and dual decoder for regressing the segmentation and depth map in the SegDepth module.

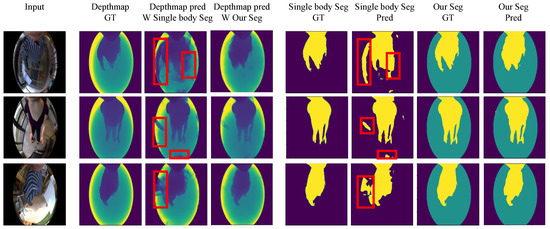

5.3.2. Segmentation Strategy

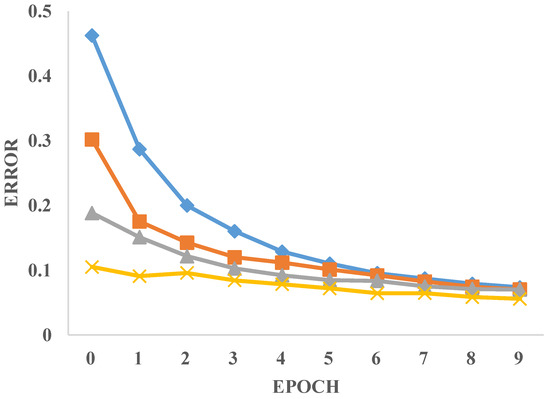

We also evaluated the effects of different segmentation strategies on the performance of the SegDepth module through both qualitative and quantitative analyses. We compared a single-body segmentation approach, which represents the entire body as a single region, with our proposed three-region segmentation, which divides the image into upper body, lower body, and background regions. To quantify the impact of the segmentation strategy on robustness, we evaluated four conditions: (i) heatmap only, which uses only heatmap features for 3D pose estimation, without any depth or segmentation information; (ii) heatmap + depth map, which integrates depth map features into the pipeline but uses no segmentation data; (iii) heatmap + depth map + single-body segmentation, which combines depth map features with a segmentation that treats the entire body as a single region; and (iv) heatmap + depth map + three-region segmentation, which combines depth map features with our proposed three-region segmentation strategy.

Quantitative results, presented in Table 4, show that the three-region segmentation strategy achieves the best performance, with an MPJPE of 65.7. Integrating depth map features alone reduces MPJPE compared to the heatmap-only condition (from 71.6 to 68.2). Adding single-body segmentation, however, increases MPJPE to 75.8, which is worse than the heatmap-only condition, likely due to the lack of spatial granularity. In contrast, the three-region segmentation strategy further reduces MPJPE to 65.7, demonstrating its effectiveness in improving the accuracy of 3D pose estimation.

Qualitative results, illustrated in Figure 6, show that the three-region segmentation strategy is effective in reducing noise in egocentric fisheye images. Additionally, Figure 7 presents the optimization curves for 3D joint training, highlighting the robustness and accuracy of the proposed method. The three-region approach provides detailed and localized information, improving the network’s ability to detect overlapping or occluded body parts.
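The relative changes implied by these four MPJPE values can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted in the text; the helper name `relative_change` and the dictionary layout are illustrative choices.

```python
# MPJPE values quoted in the text for the four ablation conditions (Table 4).
MPJPE_BY_CONDITION = {
    "heatmap only": 71.6,
    "heatmap + depth map": 68.2,
    "heatmap + depth map + single-body segmentation": 75.8,
    "heatmap + depth map + three-region segmentation": 65.7,
}


def relative_change(baseline, value):
    """Percentage reduction relative to the baseline; negative means the error grew."""
    return 100.0 * (baseline - value) / baseline


baseline = MPJPE_BY_CONDITION["heatmap only"]
for name, value in MPJPE_BY_CONDITION.items():
    print(f"{name}: {value:.1f} ({relative_change(baseline, value):+.1f}%)")
```

Relative to the heatmap-only condition, depth alone gives a reduction of about 4.7% and depth with three-region segmentation about 8.2%, which agrees with the improvements reported for our architecture in the comparison across architectures, whereas single-body segmentation raises the error by roughly 5.9%.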

Table 4.

Quantitative evaluation of different segmentation strategies for the SegDepth module.

Figure 6.

Comparison between the single-body segmentation and our three-region segmentation methods. Results show that the single-body segmentation method does not clearly separate the body region, which leads to noise in the depth map and segmentation estimation (red box), while our segmentation shows a fine-grained body silhouette.

Figure 7.

Optimization curves for 3D joint training as a function of epoch. Gray (triangle): heatmap; orange (square): heatmap with depth map; blue (rhombus): heatmap with depth map and single-body segmentation; yellow (cross, our method): heatmap with depth map and three-region segmentation. The optimization curve regressing the three-region segmentation and depth map shows the lowest error while maintaining robustness.

6. Conclusions and Discussion

In this paper, we proposed a novel approach for egocentric 3D human pose estimation using fisheye images captured by a head-mounted display (HMD). We introduced the SegDepth module, which uses depth maps and segmentation maps to address the limitations of previous methods that rely on 2D heatmaps. Our approach improves the accuracy of 3D pose estimation in ambiguous and occluded scenarios.

The SegDepth module regresses depth and segmentation through a single-decoder architecture. This reduces the network size by 9.5% compared to a dual-decoder configuration while achieving a better mean per joint position error (MPJPE). These results suggest that the shared representation of depth and segmentation features improves the network’s generalization ability and computational efficiency. The adoption of our three-region segmentation strategy, which separates the upper body, lower body, and background regions, improves the robustness of the model. Compared to single-body segmentation, three-region segmentation provides finer-grained spatial information, enabling the network to reduce noise and train more stably. Qualitative results and optimization curves show the advantage of the three-region approach in reducing noise and maintaining stable convergence.

We also demonstrated the generality of the SegDepth module by integrating it into existing pose estimation frameworks, including xR-EgoPose and Mo2Cap2. In both cases, the addition of depth and segmentation features resulted in improvements across different action types. These results demonstrate the adaptability of the SegDepth module and its potential to enhance other 3D pose estimation systems.

Despite its effectiveness, the proposed method has several limitations. First, the evaluations were conducted on the synthetic xR-EgoPose dataset, which, while diverse, may not fully capture the variability of real-world environments. Future work should focus on validating the method using real-world datasets to ensure broader applicability. Second, the method is designed for fisheye camera setups, which may limit its generalizability to other camera types or configurations. Exploring extensions to other lens types or multi-camera systems could broaden its applicability. Finally, the current framework processes each frame independently, without considering temporal information. Incorporating temporal dynamics could further improve performance for actions involving rapid motion or complex transitions.

The proposed method provides a solution for egocentric 3D pose estimation in VR. By addressing the limitations of 2D-based methods and introducing depth and segmentation features, the SegDepth module shows potential for improving pose estimation accuracy. Its adaptable architecture and compatibility with VR-specific constraints make it useful for future virtual and augmented reality applications.

Author Contributions

H.S.: Conceptualization, Methodology, Software, Writing – original draft, Visualization, Writing—review & editing, Investigation. S.K.: Supervision, Methodology, Validation, Funding acquisition, Resources, Writing—review & editing, Project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by a grant from the ICT R&D Project through the Institute for Information & Communication Technology Planning and Evaluation (IITP), funded by the Ministry of Science & ICT, the Republic of Korea (grant number: RS-2022-II220874, grant title: Metaverse content conversion technology development for real-time accessibility support), and the Innovative Human Resource Development for Local Intellectualization program through the Institute of Information & Communications Technology Planning & Evaluation (IITP), a grant funded by the Korean government (MSIT) (grant number: IITP-2024-00156287).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in xR-EgoPose at https://github.com/facebookresearch/xR-EgoPose (accessed on 16 December 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Implementation of the SegDepth Module

Appendix A.1. xR-EgoPose

Figure A1.

The SegDepth module attached to the xR-EgoPose architecture.

Appendix A.2. Mo2Cap2

Figure A2.

The SegDepth module attached to the Mo2Cap2 architecture.

References

- Czesak, K.; Mohedano, R.; Carballeira, P.; Cabrera, J.; Garcia, N. Fusion of pose and head tracking data for immersive mixed-reality application development. In Proceedings of the 2016 3DTV-Conference: The True Vision-Capture, Transmission and Display of 3D Video (3DTV-CON), Hamburg, Germany, 4–6 July 2016; IEEE: New York, NY, USA, 2016; pp. 1–4.

- Money, K.E. Motion sickness. Physiol. Rev. 1970, 50, 1–39.

- Moon, G.; Chang, J.Y.; Lee, K.M. Posefix: Model-agnostic general human pose refinement network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7773–7781.

- Huang, Y.; Kaufmann, M.; Aksan, E.; Black, M.J.; Hilliges, O.; Pons-Moll, G. Deep inertial poser: Learning to reconstruct human pose from sparse inertial measurements in real time. ACM Trans. Graph. (TOG) 2018, 37, 1–15.

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2d pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

- Chen, C.H.; Ramanan, D. 3d human pose estimation = 2d pose estimation + matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7035–7043.

- Bogo, F.; Kanazawa, A.; Lassner, C.; Gehler, P.; Romero, J.; Black, M.J. Keep it SMPL: Automatic estimation of 3D human pose and shape from a single image. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part V 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 561–578.

- Tome, D.; Peluse, P.; Agapito, L.; Badino, H. xr-egopose: Egocentric 3d human pose from an hmd camera. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, South Korea, 27 October–2 November 2019; pp. 7728–7738.

- Wang, J.; Luvizon, D.; Xu, W.; Liu, L.; Sarkar, K.; Theobalt, C. Scene-aware Egocentric 3D Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 13031–13040.

- Xu, W.; Chatterjee, A.; Zollhoefer, M.; Rhodin, H.; Fua, P.; Seidel, H.P.; Theobalt, C. Mo2Cap2: Real-time mobile 3d motion capture with a cap-mounted fisheye camera. IEEE Trans. Vis. Comput. Graph. 2019, 25, 2093–2101.

- Zhou, X.; Zhu, M.; Leonardos, S.; Derpanis, K.G.; Daniilidis, K. Sparseness meets deepness: 3d human pose estimation from monocular video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4966–4975.

- Yasin, H.; Iqbal, U.; Kruger, B.; Weber, A.; Gall, J. A dual-source approach for 3d pose estimation from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4948–4956.

- Park, S.; Hwang, J.; Kwak, N. 3d human pose estimation using convolutional neural networks with 2d pose information. In Proceedings of the Computer Vision–ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Proceedings, Part III 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 156–169.

- Shiratori, T.; Park, H.S.; Sigal, L.; Sheikh, Y.; Hodgins, J.K. Motion capture from body-mounted cameras. In ACM SIGGRAPH 2011 Papers; Association for Computing Machinery: New York, NY, USA, 2011; pp. 1–10.

- Holte, M.B.; Tran, C.; Trivedi, M.M.; Moeslund, T.B. Human pose estimation and activity recognition from multi-view videos: Comparative explorations of recent developments. IEEE J. Sel. Top. Signal Process. 2012, 6, 538–552.

- Baak, A.; Müller, M.; Bharaj, G.; Seidel, H.P.; Theobalt, C. A data-driven approach for real-time full body pose reconstruction from a depth camera. In Consumer Depth Cameras for Computer Vision. Advances in Computer Vision and Pattern Recognition; Springer: London, UK, 2013; pp. 71–98.

- Shotton, J.; Fitzgibbon, A.; Cook, M.; Sharp, T.; Finocchio, M.; Moore, R.; Kipman, A.; Blake, A. Real-time human pose recognition in parts from single depth images. In Proceedings of the CVPR 2011, Providence, RI, USA, 20–25 June 2011; IEEE: New York, NY, USA, 2011; pp. 1297–1304.

- Bregler, C.; Malik, J. Tracking people with twists and exponential maps. In Proceedings of the 1998 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Cat. No. 98CB36231), Santa Barbara, CA, USA, 23–25 June 1998; IEEE: New York, NY, USA, 1998; pp. 8–15.

- Gall, J.; Rosenhahn, B.; Brox, T.; Seidel, H.P. Optimization and filtering for human motion capture: A multi-layer framework. Int. J. Comput. Vis. 2010, 87, 75–92.

- Joo, H.; Liu, H.; Tan, L.; Gui, L.; Nabbe, B.; Matthews, I.; Kanade, T.; Nobuhara, S.; Sheikh, Y. Panoptic studio: A massively multiview system for social motion capture. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3334–3342.

- Moeslund, T.B.; Hilton, A.; Krüger, V.; Sigal, L. Visual Analysis of Humans; Springer: Berlin/Heidelberg, Germany, 2011.

- Sigal, L.; Balan, A.O.; Black, M.J. Humaneva: Synchronized video and motion capture dataset and baseline algorithm for evaluation of articulated human motion. Int. J. Comput. Vis. 2010, 87, 4–27.

- Sigal, L.; Isard, M.; Haussecker, H.; Black, M.J. Loose-limbed people: Estimating 3D human pose and motion using non-parametric belief propagation. Int. J. Comput. Vis. 2012, 98, 15–48.

- Zhou, X.; Sun, X.; Zhang, W.; Liang, S.; Wei, Y. Deep kinematic pose regression. In Proceedings of the Computer Vision–ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Proceedings, Part III 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 186–201.

- Tekin, B.; Katircioglu, I.; Salzmann, M.; Lepetit, V.; Fua, P. Structured prediction of 3d human pose with deep neural networks. arXiv 2016, arXiv:1605.05180.

- Pavlakos, G.; Zhou, X.; Derpanis, K.G.; Daniilidis, K. Coarse-to-fine volumetric prediction for single-image 3D human pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7025–7034.

- Li, S.; Chan, A.B. 3d human pose estimation from monocular images with deep convolutional neural network. In Proceedings of the Computer Vision–ACCV 2014: 12th Asian Conference on Computer Vision, Singapore, 1–5 November 2014; Revised Selected Papers, Part II 12. Springer: Berlin/Heidelberg, Germany, 2015; pp. 332–347.

- Mehta, D.; Rhodin, H.; Casas, D.; Fua, P.; Sotnychenko, O.; Xu, W.; Theobalt, C. Monocular 3d human pose estimation in the wild using improved cnn supervision. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; IEEE: New York, NY, USA, 2017; pp. 506–516.

- Jahangiri, E.; Yuille, A.L. Generating multiple diverse hypotheses for human 3d pose consistent with 2d joint detections. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 805–814.

- Toshev, A.; Szegedy, C. Deeppose: Human pose estimation via deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1653–1660.

- Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VIII 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 483–499.

- Tome, D.; Russell, C.; Agapito, L. Lifting from the deep: Convolutional 3d pose estimation from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2500–2509.

- Martinez, J.; Hossain, R.; Romero, J.; Little, J.J. A simple yet effective baseline for 3d human pose estimation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2640–2649.

- Rhodin, H.; Richardt, C.; Casas, D.; Insafutdinov, E.; Shafiei, M.; Seidel, H.P.; Schiele, B.; Theobalt, C. Egocap: Egocentric marker-less motion capture with two fisheye cameras. ACM Trans. Graph. (TOG) 2016, 35, 1–11.

- Zhao, D.; Wei, Z.; Mahmud, J.; Frahm, J.M. Egoglass: Egocentric-view human pose estimation from an eyeglass frame. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; IEEE: New York, NY, USA, 2021; pp. 32–41.

- Cha, Y.W.; Price, T.; Wei, Z.; Lu, X.; Rewkowski, N.; Chabra, R.; Qin, Z.; Kim, H.; Su, Z.; Liu, Y.; et al. Towards fully mobile 3D face, body, and environment capture using only head-worn cameras. IEEE Trans. Vis. Comput. Graph. 2018, 24, 2993–3004.

- Moon, G.; Chang, J.Y.; Lee, K.M. V2v-posenet: Voxel-to-voxel prediction network for accurate 3d hand and human pose estimation from a single depth map. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5079–5088.

- Liu, Y.; Yang, J.; Gu, X.; Chen, Y.; Guo, Y.; Yang, G.Z. Egofish3d: Egocentric 3d pose estimation from a fisheye camera via self-supervised learning. IEEE Trans. Multimed. 2023, 25, 8880–8891.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: New York, NY, USA, 2009; pp. 248–255.

- Scaramuzza, D.; Martinelli, A.; Siegwart, R. A toolbox for easily calibrating omnidirectional cameras. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–13 October 2006; IEEE: New York, NY, USA, 2006; pp. 5695–5701.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).