Abstract

Aspect-Based Sentiment Analysis (ABSA) is a crucial process for assessing customer feedback and gauging satisfaction with products or services. It typically consists of three stages: Aspect Term Extraction (ATE), Aspect Categorization Extraction (ACE), and Sentiment Analysis (SA). Various techniques have been proposed for ATE, including unsupervised, supervised, and hybrid methods. However, many studies struggle to detect aspect terms because they rely on training data, which may not cover all multiple-word aspect terms or relate semantically similar aspect terms effectively. This study presents a knowledge-driven approach to automatic semantic aspect term extraction from customer feedback, using Linked Open Data (LOD) to enrich the aspect terms extracted from the training dataset. Additionally, it utilizes the N-gram model to capture complex text patterns and relationships, facilitating accurate classification and analysis of multiple-word terms for each aspect. To assess the effectiveness of the proposed model, experiments were conducted on three benchmark datasets: SemEval 2014, 2015, and 2016. In comparative evaluations against contemporary unsupervised, supervised, and hybrid methods on these datasets, the proposed model yielded F-measures of 0.80, 0.76, and 0.77, respectively.

1. Introduction

Electronic commerce plays a vital role in facilitating transactions over the Internet, encompassing the exchange of goods and services [1,2]. Consumer reliance on product reviews, given by previous customers, is substantial, as they serve as crucial aids in purchasing decisions. Concurrently, entrepreneurs leverage customer feedback to enhance their offerings. However, condensing and synthesizing these reviews present significant challenges, necessitating the application of techniques such as Sentiment Analysis (SA) [3,4,5].

SA categorizes sentences as positive, negative, or neutral based on sentiment polarity extracted using Natural Language Processing (NLP) [5]. NLP is a subfield of Artificial Intelligence (AI) that employs machine learning techniques to enable computers to comprehend and communicate in human language [6]. However, SA primarily captures the overall sentiment in customer reviews without targeting specific aspects. Aspect-Based Sentiment Analysis (ABSA) [7,8] addresses this limitation by evaluating customer feedback and measuring satisfaction with individual aspects of products or services [8]. ABSA comprises three stages: (1) Aspect Term Extraction (ATE): extracts aspect terms from individual reviews. (2) Aspect Categorization Extraction (ACE): identifies and extracts aspect categories from customer reviews. (3) SA: determines the sentiment polarity of each aspect.

Examples of extracted aspect terms include “spicy roll”, “waiter”, and “decor”, while the corresponding aspect categories are “food”, “service”, and “ambiance”, respectively. Because the later stages depend on the aspect terms found in the first stage, the primary objective of this research is to extract aspect terms.

In recent years, ATE has been approached primarily through two methods. The lexicon-based approach involves creating an aspect lexicon that is integrated into a static lexicon, which is a predetermined list of words [9,10,11]. For instance, Nguyen M.H. et al. [9] and Haq B. et al. [10] utilized aspect lists derived from datasets to identify aspect terms, while Mishra P. et al. [11] developed an aspect term lexicon in combination with specific rules. Conversely, the machine learning approach has gained popularity in ATE. It relies on datasets for aspect training and extraction and employs supervised, unsupervised, and hybrid learning methods [12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44]. Anping Z. and Yu Y. [42] introduced a knowledge-enhanced language representation model based on Bidirectional Encoder Representations from Transformers (BERT) for aspect-based sentiment analysis. This model belongs to the unsupervised methods. Their work indicates that incorporating external domain-specific knowledge is beneficial for achieving comprehensible and precise identification of aspect terms and their accompanying aspect-sentiment detection. Yiheng F. [43] proposed a new framework called Aspect Sentiment Quadruplet Extraction (ASQE). The goal of that research is to identify aspect terms using the Label-Semantics Enhanced Multi-Layer Heterogeneous Graph Convolutional Network (LSEMH-GCN), which falls under the category of supervised methods. Omar A. and Nur S. [44] proposed a hybrid approach to aspect-based sentiment analysis, which integrates domain lexicons and rules, to analyze smart government app reviews.

However, despite advancements in ATE research, the literature on this topic faces several challenges:

- (1)

- Identifying aspect terms is difficult, especially when a term consists of multiple words. A comprehensive analysis is needed, particularly for multiple-word aspect terms that are not present in the training dataset. For instance, if the term “views of city” is absent from the training data, it may not be recognized as an aspect, even though the term “views” alone is present.

- (2)

- Relying solely on the training dataset for aspect extraction proves insufficient, particularly when certain aspects or semantic nuances are not represented. This dependency on the training data can lead to a biased understanding of aspects and may fail to provide a comprehensive understanding of the domain. For example, the dish “foie gras terrine with figs”, categorized under “food”, may be absent from the training dataset, highlighting the limitations of this approach.

- (3)

- Within the training dataset, a notable challenge arises from numerous synonymous aspect terms, including both single-word and multiple-word aspect terms. It is essential to resolve these synonymous terms to enable more precise aspect terms and improve the semantic understanding of these aspects.

1.1. Contribution of this Paper

This research endeavors to address three primary categories of challenges by focusing on the following concerns:

- (1)

- In addressing the first issue, this research introduces a novel algorithm leveraging syntactic dependency and sentiment lexicons to capture and analyze multiple aspect terms of a sentence proficiently. The proposed approach significantly improves the performance of ATE in both single-word and multiple-word aspect terms scenarios.

- (2)

- To tackle the second and third challenges, this research leverages the N-gram model [45] to capture complex text patterns and relationships, facilitating classification and accurate analysis of multiple-word aspect terms. Furthermore, knowledge-based methods, including the Linked Open Data (LOD) [46,47], with an emphasis on the DBpedia [48] and the Thesaurus lexicon [49], are employed to resolve the synonymous aspect terms and aspect categorization.
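As a minimal illustration of how an N-gram model exposes multiple-word candidates, the sketch below enumerates contiguous word windows over a tokenized phrase. The function name `ngrams` and the sample phrase are assumptions made for this example; the sketch does not reproduce the classification step used in this research.

```python
from typing import List, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """Return every contiguous window of n tokens, in sentence order."""
    if n <= 0:
        raise ValueError("n must be positive")
    # range() is empty when the sentence is shorter than n, giving [].
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


# Hypothetical sample phrase, already tokenized by whitespace.
tokens = "great views of city".split()
bigrams = ngrams(tokens, 2)
trigrams = ngrams(tokens, 3)
```

Here the trigram list contains the window ("views", "of", "city"), which is the kind of multiple-word candidate that a single-word lookup of "views" would miss.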

1.2. Structure of the Paper

The remainder of this paper is organized as follows: Section 2 provides a comprehensive review of relevant research in ATE. Section 3 details the proposed method and its underlying architecture. The datasets and evaluation metrics are presented in Section 4. Section 5 analyzes the experimental results, while Section 6 investigates the issue of aspect term misprediction. Finally, Section 7 concludes the paper and outlines promising directions for future work.

2. Related Work

The analysis of customer reviews has become a prominent area of research, as indicated by the increasing volume of academic work in this domain. In recent years, there has been a surge of interest in ATE, particularly within the frameworks of supervised, unsupervised, and hybrid learning [12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41].

- (1)

- Supervised aspect extraction utilizes annotated datasets to teach machine learning algorithms to automatically recognize and extract aspect terms from customer reviews related to products or services [12,13,14,15,16,17,18]. Xue et al. [12] proposed a Multi-Task Neural Network (MTNN) framework for ATE, integrating Bidirectional Long Short-Term Memory (Bi-LSTM) and Convolutional Neural Networks (CNN) to improve multitask learning outcomes using restaurant review data. Yu et al. [13] introduced the Global Inference (GI) approach for ATE applied to MTNN. Their method integrates various syntactic elements to explicitly capture intra-aspect and inter-aspect relationships. Agerri et al. [14] introduced a language-agnostic system for aspect extraction that combines local, surface-level attributes with Semantic Distributional Features based on Clustering (SDFC) for aspect identification. Luo et al. [15] presented a framework for ATE that utilizes a Bidirectional Dependency Tree Network (BiDTree) to capture tree-structured relationships within sentences. The embeddings learned from the BiDTree are subsequently fused with a Conditional Random Field (CRF) to leverage sequential information. This BiDTreeCRF approach enables the model to concurrently learn syntactic and sequential features, resulting in enhanced ATE performance. Akhtar et al. [16] presented a Bi-LSTM paired with a self-attention mechanism for ATE. This strategy employs Bi-LSTMs to capture sequential information within sentences, and the self-attention mechanism enables the model to concentrate on the most pertinent segments of the input for ATE. Ning Liu and Bo Shen [17] introduced a new Seq2Seq learning framework for ATE using the Information-Augmented Neural Network (IANN), which is built on a neural network. This model addresses the limited capacity of the static word embeddings used in ATE, which are insufficient for capturing the evolving meaning of words. Yinghao P. and Jin Z. [18] proposed a new extraction model called BiLSTM-BGAT-GCN, which is used to identify aspect terms, opinion terms, and their corresponding sentiment orientations in customer reviews.

- (2)

- Unsupervised aspect extraction involves automatically identifying and extracting aspects from customer reviews without relying on pre-labeled training data. Heng Yan et al. [19] proposed a model for Chinese-oriented aspect-based sentiment analysis called LCF-ATEPC. This model utilizes the BERT model to improve the performance of ATE. Additionally, the methodologies employed in this task encompass the following techniques [20,21,22,23,24,25,26,27,28,29,30,31,32,33,34]:

- (a)

- Frequency-based ATE approaches utilize the distribution of word or phrase frequencies within a text to discern significant aspects based on their frequent occurrence [20,21,22]. Minqing Hu and Bing Liu [20] introduced a technique for aspect extraction from customer reviews, employing the frequency distribution of aspects within the reviews. Anwer et al. [21] presented their method for ATE from customer reviews, which exploits frequency distribution to identify recurring aspects discussed by customers. Toqir A. and Yu C. [22] introduced a two-fold rule-based model for aspect extraction called TF-RBM, which relies on rules derived from sequential patterns extracted from customer reviews. Moreover, this study has implemented frequency and similarity-based techniques to enhance the aspect extraction accuracy of the proposed model.

- (b)

- Syntax-based ATE approaches utilize the grammatical structure of sentences to identify aspect terms, often employing part-of-speech (POS) tagging, dependency parsing, and predefined syntactic patterns [23,24,25,26,27]. Zhao et al. [23] introduced a novel approach for ATE from product reviews, which utilizes generalized syntactic patterns and similarity measures to improve ATE performance. Maharani et al. [24] introduce a new approach to ATE based on unstructured reviews. They suggest a rule-based system that utilizes syntactic patterns derived from feature observations within the review text. This method is supported by a comprehensive analysis of the diverse patterns identified in the data. Shafie et al. [25] assessed the effectiveness of different dependency relations in combination with various POS tagging patterns for candidate ATE from customer reviews. Dragoni et al. [26] introduce an opinion monitoring service that utilizes dependency parsing for ATE and integrates a visualization tool to assist in data monitoring. Jie et al. [27] presents an adaptive semantic relative distance method that relies on dependent syntactic analysis. It utilizes adaptive semantic relative distance to identify the suitable local context for each text, hence enhancing the precision of sentiment analysis.

- (c)

- Topic modeling is an unsupervised statistical technique used to reveal latent thematic structures within a set of documents. One of the most widely used and well-established algorithms for topic modeling is Latent Dirichlet Allocation (LDA) [50]. LDA is a statistical model that represents documents as combinations of a predetermined number of underlying topics. Each topic is defined by a probability distribution over the vocabulary, indicating the likelihood of each word appearing within that topic [51]. LDA has been applied to ATE in the realm of ABSA, as discussed in several studies [28,29,30,31,32,33,34]. Titov and McDonald [28] introduced Multi-grain Latent Dirichlet Allocation (MG-LDA), an extension of LDA, for joint topic modeling and ATE from online customer reviews. MG-LDA captures aspects at various levels of granularity, allowing the extraction of both fine-grained (local topics) and coarse-grained (global topics) aspects along with their associated textual evidence within reviews. Brody and Elhadad [29] utilized LDA for ATE from restaurant customer reviews, augmenting their work with a knowledge lexicon to improve the identification of named entities within the reviews. Yao et al. [30] proposed a novel framework for named entity recognition (NER) in news articles, employing LDA to identify latent topics within the text and integrating a knowledge lexicon derived from Wikipedia to enhance entity extraction. This approach was tested on news datasets from the New York Times and TechCrunch, demonstrating its efficacy in extracting news-related entities. Shams and Baraani-Dastjerd [31] introduced a novel method to improve the quality of automatic ATE by integrating domain knowledge through word co-occurrence relationships. This method, known as Enriched Latent Dirichlet Allocation (ELDA), seeks to capture more nuanced aspects by incorporating co-occurrence information into the standard LDA model. Annisa et al. [32] proposed an ATE approach for analyzing hotel reviews in Mandalika, Indonesia, utilizing LDA. Ozyurt and Akcayol [33] proposed an approach for ATE focusing on Turkish restaurant reviews. Their method utilizes Sentence Segment Latent Dirichlet Allocation (SS-LDA), an extension of the traditional LDA model, to tackle the challenge of limited data availability in the ATE task. Venugopalan et al. [34] tackled the limitations of LDA in handling overlapping topics by integrating BERT. This approach harnesses BERT’s ability to capture semantic relationships between words, enhancing ATE performance by discerning between thematically similar aspects.

- (3)

- Hybrid ATE Methods: The last category of ATE methodologies comprises hybrid approaches. These methods combine various techniques, algorithms, or resources to enhance ATE accuracy. This can involve integrating supervised, unsupervised, or other methods so that each compensates for the limitations of the others. The primary goal of these hybrid approaches is to achieve better performance in aspect term extraction than a single approach used in isolation [35,36,37,38,39,40,41]. Wu et al. [35] introduced a novel unsupervised approach for ATE that combines linguistic conjunction rules with pruning and a deep learning model employing a Gated Recurrent Unit (GRU) network. Chauhan et al. [36] proposed a two-stage unsupervised approach for ATE. This model harnesses both symbolic and neural techniques by integrating linguistic rules with an attention-based Bi-LSTM architecture, with the aim of enhancing overall ATE performance. Musheng C. et al. [37] introduced a novel end-to-end framework for ABSA. It leverages a task-sharing layer to facilitate interaction between aspect term extraction and sentiment classification. Furthermore, the framework employs a multi-head attention mechanism to capture the relationships between different aspect items, addressing the issue of sentiment inconsistency within aspects observed in previous end-to-end models. Zhigang Jin et al. [38] introduced a model called SpanGCN, which is a Span-based dependency-enhanced Graph Convolutional Network. This model combines the Latent dependency Graph Convolutional Network (LGCN) with span enumeration to tackle the ABSA task. In that research, BERT and the Graph Convolutional Network (GCN) are employed to capture contextual semantic and dependency relationships. Fan Zhang et al. [39] presented a new model called the Syntactic Dependency Graph Convolutional Network (SD-GCN). This model uses GCN to encode syntactic dependencies and yield enhanced aspect features for ATE. Yusong M. and Shumin S. [40] introduced a novel model named Dependency-Type Weighted Graph Convolution Network (DTW-GCN), which combines dependency-type messages with word embeddings in the context of ABSA. Busst M. M. A. et al. [41] presented Ensemble BiLSTM, a novel approach for aspect extraction. The Ensemble BiLSTM model utilizes the syntactic, semantic, and contextual properties of unstructured texts found in BERT word embeddings to extract aspect terms. Additionally, it incorporates the sequential properties of aspect term categories by employing an ensemble of BiLSTM models.

In summary, supervised learning methods use pre-annotated data to train models for ATE; they are effective but require substantial labeled data and struggle with unseen aspects. Unsupervised methods, conversely, operate without labeled data, providing domain independence, but they also struggle with unseen aspects. Hybrid approaches combine supervised and unsupervised techniques or integrate them with other methods, showing greater adaptability across diverse data types and domains; however, they remain constrained by fixed lexicons and rules. This research proposes a new unsupervised ATE approach that addresses these limitations. It leverages the training dataset to represent knowledge and utilizes the semantic power of LOD to suggest synonymous aspect terms. This approach expands the knowledge base beyond the aspect terms learned solely from the training dataset, allowing the model to identify both single-word and multiple-word aspect terms that are not explicitly present in the training dataset, a case that existing supervised, unsupervised, and hybrid methods handle poorly.

This research establishes two hypotheses, drawn from the machine learning methods in related work: (1) The model’s performance can be enhanced by utilizing both single-word and multiple-word aspect terms. (2) The model’s efficacy can be enhanced by aspect terms that have been inferred rather than observed in the training data. The following section presents the LOD-based methodology for aspect extraction that is used to examine these hypotheses.

3. Methodology

This section presents a diagrammatic framework illustrating the process of extracting aspect terms from customer reviews. The framework utilizes review datasets to generate extended synonyms and categories of single-word and multiple-word aspect terms. It consists of three primary modules, as shown in Figure 1. The first module, the preprocessing module, prepares the review dataset for analysis and introduces Algorithm 1 for resolving slang and abbreviations. The second module, the multiple aspect terms extraction module, identifies both single-word and multiple-word aspect terms within a sentence. It uses Algorithm 2 to handle the cases that the basic library (TextBlob) does not extract, which makes this algorithm integral to the extraction of aspect terms. The third module, the semantic aspect categorization extraction (ACE) module, utilizes the LOD approach (DBpedia) and Thesaurus to expand and enrich each aspect term extracted by the ATE module. Subsequently, all expanded aspect terms are classified into appropriate categories. Further details on each module are discussed in the following subsections:

Figure 1. The diagrammatic framework of ATE from the training dataset.

| Algorithm 1: Slang and Abbreviation Processing |
| --- |
| Input: SW, a finite set of segmented words. |
| Output: NSW, a finite set of new segmented words resulting from the correction of slang and abbreviations. |
| Notations used: |
| A finite set of updated slang and abbreviations verified by DBpedia. |
| A function to correct slang and abbreviations using DBpedia. |
| A function to correct slang and abbreviations using the S&A dictionary. |
| Procedure: |
| apply the DBpedia correction function to SW to obtain the DBpedia-verified set |
| foreach word in the DBpedia-verified set // some words cannot be found in DBpedia |
| if (word ∈ S&A dictionary) then add the S&A dictionary correction of the word to NSW |
| else add the word to NSW |
| end if |
| end foreach |
| return NSW |
| End Procedure |

3.1. Preprocessing Module

This module utilizes the Stanza library from Stanford NLP [52] and the Natural Language Toolkit [53] to prepare customer review data through six stages:

- (1)

- Word segmentation: The Stanza toolkit extracts lexical units, such as words, symbols, and semantically significant elements.

- (2)

- Word correction: Spelling errors are corrected using the pyspellchecker library [54] and a dictionary named WordCoreection. For instance, pyspellchecker corrects “survice” to “service”, while WordCoreection handles cases such as replacing “chuwan” with “chawan”.

- (3)

- Slang and abbreviation processing: This stage aims to correct slang and abbreviations using LOD. Additionally, a Slang and Abbreviations (S&A) dictionary is utilized to correct slang and abbreviations missing in the LOD. For example, “veggie” is replaced with “vegetable”, “NYC” with “New York City”, and “rito” with “burrito”. Algorithm 1 describes this process.

Algorithm 1 consists of two stages. In the first stage, the segmented word set (SW) is processed to detect slang and abbreviations using the DBpedia-based correction function. This function employs SPARQL to query DBpedia and searches for slang and abbreviations using the “dbo:wikiPageRedirects” property for abbreviations and the “dbo:wikiPageWikiLink” property for slang. The output is a revised segmented word set containing updated slang and abbreviations from DBpedia. In the second stage, any slang or abbreviations not found in DBpedia are analyzed using the S&A dictionary via the dictionary-based correction function. The resulting updated segmented words are then added to the NSW set.
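The two-stage lookup described above can be sketched as follows. This is only an illustration under stated assumptions: `DBPEDIA_STUB` is a hypothetical local stand-in for the SPARQL lookup against DBpedia, `SA_DICTIONARY` is a hypothetical S&A dictionary, the assignment of the sample words to each source is invented for the example, and `REDIRECT_QUERY` shows one assumed shape of a redirect query that is not executed here.

```python
from typing import Dict, List

# Assumed shape of a SPARQL query using dbo:wikiPageRedirects (not executed here).
REDIRECT_QUERY = """
PREFIX dbo: <http://dbpedia.org/ontology/>
PREFIX dbr: <http://dbpedia.org/resource/>
SELECT ?target WHERE { dbr:NYC dbo:wikiPageRedirects ?target . }
"""

# Hypothetical stand-in for answers that would come back from DBpedia.
DBPEDIA_STUB: Dict[str, str] = {"nyc": "New York City", "veggie": "vegetable"}

# Hypothetical S&A dictionary for terms assumed to be missing from DBpedia.
SA_DICTIONARY: Dict[str, str] = {"rito": "burrito"}


def correct_slang_and_abbreviations(sw: List[str]) -> List[str]:
    """Map each segmented word in SW to its corrected form, producing NSW."""
    nsw: List[str] = []
    for word in sw:
        key = word.lower()
        if key in DBPEDIA_STUB:        # stage 1: DBpedia-verified correction
            nsw.append(DBPEDIA_STUB[key])
        elif key in SA_DICTIONARY:     # stage 2: fall back to the S&A dictionary
            nsw.append(SA_DICTIONARY[key])
        else:                          # neither source knows the word: keep it
            nsw.append(word)
    return nsw


nsw = correct_slang_and_abbreviations(["veggie", "rito", "in", "NYC"])
```

Trying the knowledge base first and the dictionary second mirrors the order of the two stages; a word unknown to both sources passes through unchanged.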

- (4)

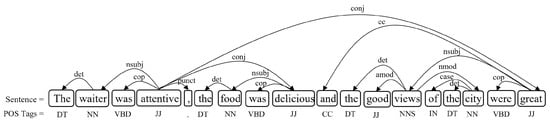

- Part of Speech (POS) tagging assigns a POS tag to each word in a sentence, forming a combination of the word and its corresponding POS tag. For example: “The (Determiner: DT)”, “waiter (Noun: NN)”, “was (Past tense verb: VBD)”, “attentive (Adjective: JJ)”, “, (End of sentence punctuation: .)”, “the (Determiner: DT)”, “food (Noun: NN)”, “was (Past tense verb: VBD)”, “delicious (Adjective: JJ)”, “and (Coordinating conjunction: CC)”, “the (Determiner: DT)”, “good (Adjective: JJ)”, “views (Plural noun: NNS)”, “of (Preposition or subordinating conjunction: IN)”, “the (Determiner: DT)”, “city (Noun: NN)”, “were (Past tense verb: VBD)”, and “great (Adjective: JJ)”.

- (5)

- Dependency parsing is a process that involves identifying the relationships between words in a sentence to analyze its grammatical structure. This process utilizes dependency grammar (DG) [55], a linguistic framework for analyzing grammatical structures. It consists of two main components. The “head” is a linguistic element that governs the syntax of other words, while the “dependent” is a word that has a semantic or syntactic connection with the head. The meaning or grammatical function of a word depends on its head, and this relationship is depicted by labeled arrows known as dependencies. These dependencies include subject, compound, object, modifier, and others, as illustrated in Figure 2.

Figure 2. An example of dependency grammar of the sentence in graph format.

Figure 2 illustrates the dependencies between the terms of the sentence, which can be defined using a general notation based on set theory as follows:

Definition 1.

The dependency grammar, denoted as DG, is defined as a tuple:

$$DG = (V, E)$$

where V and E are defined as follows.

Definition 2.

Let V be a finite set of nodes and their attributes in the graph, defined as a set of five-element tuples. Each tuple holds, in order, the node’s unique identifier, the dependent word of the word node, its POS tag, the head word’s unique identifier, and the head word of the word node.

The example of dependency grammar in the sentence illustrated in Figure 2 can be defined as the set of V as follows: V = {(1, “The”, “DT”, 2, “waiter”), (2, “waiter”, “NN”, 4, “attentive”), …}.

Definition 3.

E is a finite set of directed edges between nodes, defined as a set of three-element tuples. Each tuple holds, in order, the unique identifier of a node, the unique identifier of its head word, and the label of the directed edge, that is, the dependency relation that connects the node to its head word.

The example of dependency relations between words in the sentence illustrated in Figure 2 can be defined as the set of E as follows: E = {(1, 2, “det”), (2, 4, “nsubj”), …}.
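Definitions 1 to 3 can be mirrored by a small data structure. The sketch below is an assumption-laden illustration: the field names (`node_id`, `word`, `pos`, `head_id`, `head_word`, `relation`) and the helper `head_relation` are hypothetical names chosen for readability, and only the two entries quoted in the examples above are included.

```python
from typing import List, NamedTuple, Optional


class Node(NamedTuple):
    """One element of V: a word node with its attributes."""
    node_id: int
    word: str
    pos: str
    head_id: int
    head_word: str


class Edge(NamedTuple):
    """One element of E: a labeled edge from a node to its head word."""
    node_id: int
    head_id: int
    relation: str


V: List[Node] = [
    Node(1, "The", "DT", 2, "waiter"),
    Node(2, "waiter", "NN", 4, "attentive"),
]
E: List[Edge] = [Edge(1, 2, "det"), Edge(2, 4, "nsubj")]
DG = (V, E)  # the dependency grammar as a (V, E) tuple


def head_relation(node_id: int, edges: List[Edge]) -> Optional[str]:
    """Return the dependency relation linking a node to its head, if any."""
    for edge in edges:
        if edge.node_id == node_id:
            return edge.relation
    return None
```

For instance, `head_relation(2, E)` looks up the edge leaving node 2 and gives the relation that links “waiter” to its head “attentive”.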

- (6)

- Stop word removal aims to remove common words or symbols from the processed word set by using the Stop Word dictionary. Examples of stop words include “the”, “is”, and “a”, which are very frequent in written language and whose removal does not change the essential meaning of the sentence for ATE. The word set is then updated so that these common words or symbols are eliminated.

3.2. Multiple Aspect Terms Extraction Module

The objective of this module is to identify both single-word and multiple-word aspect terms in the sentence. The multiple-word aspect terms can be defined as compound words that combine modifiers, conjunctions, or prepositions within each aspect using Algorithm 2. Algorithm 2 outlines the process of extracting aspect terms in three stages, which are explained below:

- (1)

- Noun extraction involves extracting nouns or aspects via a noun-extraction function, as defined in the following definition:

Definition 4.

The noun set is a finite set of nouns extracted from V, where the POS tags “NN”, “NNS”, “NNP”, and “NNPS” are categorized under the “Noun Tag”. The noun set is defined as a set of two-element tuples, each holding the node’s unique identifier and the noun itself.

For example, the noun set is {(2, “waiter”), (7, “food”), (12, “views”), (15, “city”)}.
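Definition 4 amounts to filtering V by POS tag. The following sketch assumes a simplified node shape of (identifier, word, POS tag); the names `NOUN_TAGS` and `extract_nouns`, and the non-noun sample rows, are hypothetical and chosen only for this illustration.

```python
from typing import List, Tuple

# The four POS tags grouped under the "Noun Tag" in Definition 4.
NOUN_TAGS = {"NN", "NNS", "NNP", "NNPS"}


def extract_nouns(nodes: List[Tuple[int, str, str]]) -> List[Tuple[int, str]]:
    """Keep (identifier, word) for every node whose POS tag is a noun tag."""
    return [(node_id, word) for node_id, word, pos in nodes if pos in NOUN_TAGS]


# Simplified slice of V: (identifier, word, POS tag).
nodes = [
    (1, "The", "DT"),
    (2, "waiter", "NN"),
    (4, "attentive", "JJ"),
    (7, "food", "NN"),
    (12, "views", "NNS"),
    (15, "city", "NN"),
]
noun_set = extract_nouns(nodes)
```

With these rows, the result matches the noun set given in the example above.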

- (2)

- In the single-word and multiple-word aspect terms extraction stage, related words from V and the noun set are associated. In this process, a tool like TextBlob [56] is utilized to identify basic compound-named entities using a compound-extraction function. The definition is given as follows:

Definition 5.

The compound-named-entity set is a finite set of compound-named entities for each aspect derived from V and the noun set. It can be described as a set of two-element tuples, each holding the set of node identifiers and the set of words that together form a new aspect term resulting from the combination of terms derived from V and the noun set.

For instance, when the sentence “The spicy tuna roll in New York City is huge” is parsed into V and the noun set, the noun set includes the following elements: {(3, “tuna”), (4, “roll”), (6, “new”), (7, “york”), (8, “city”)}. The compound-extraction function then associates V with the noun set and maps them to the compound-named-entity set as follows: {({2, 3, 4}, {“spicy”, “tuna”, “roll”}), ({6, 7, 8}, {“new”, “york”, “city”})}.

Regarding the example sentence depicted in Figure 2, the compound-named-entity set is as follows: {({2}, {“waiter”}), ({7}, {“food”}), ({12}, {“views”}), ({15}, {“city”})}. In this example, no compound-named entities have been identified.

However, many cases of complex multiple-word aspect terms may not be extracted by such tools. Examples of these include “four seasons restaurant”, “fish and chips”, and “views of city”.

To illustrate how such complex multiple-word aspect terms can be extracted in some cases, this research presents a portion of the algorithm in Algorithm 2. This algorithm specifically targets multiple-word aspect terms that employ the prepositions “of” and “with”, as well as the conjunction “and”.

The definitions of the variables used in Algorithm 2 are as follows:

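| Algorithm 2: Multiple-word aspect terms extraction |
| --- |
| Input: DG, a finite set of tuples resulting from the dependency grammar. |
| Output: a finite set of final aspect terms extracted from complex multiple-word aspect terms. |
| Notations used: |
| Let the compound-word set be a finite set of compound words aggregated from V and the noun set using the TextBlob function. |
| Let the preposition set be a finite set of some prepositions, such as “of” and “with”. |
| Let the conjunction set be a finite set of some conjunctions, such as “and”. |
| Let the noun-tag set be a finite set of noun POS tags. |
| Procedure: |
| initialize the dependent-word relationship set and MWT as empty sets |
| foreach dependency entry associated with a word of the compound-word set |
| if (relation == “amod”) then |
| if the dependent word is not a sentiment word then keep the entry |
| end if |
| else keep the entry |
| end if |
| end foreach |
| foreach kept entry |
| if (the dependent word is a preposition or a conjunction) then merge it with its head word into a mapping word and substitute the mapping word into the remaining entries |
| end if |
| end foreach |
| foreach remaining entry |
| if the entry contains a conjunction but no preposition then combine the words of the entry |
| end if |
| if the entry contains a preposition then reorder the words so that the prepositional phrase follows its head |
| end if |
| end foreach |
| return the set of final aspect terms |
| End Procedure |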

Definition 6.

The dependent-word relationship set represents a finite set of dependent word relationships obtained by associating each word in the compound-named-entity set with V and E defined in DG. Each entry is a three-element tuple holding the dependent word, its head word, and their dependency relation, and only entries whose dependency relation is one of the selected relations are included. The selected relations encompass “amod”, “compound”, “case”, “nummod”, “cc”, and “nmod”.

An example of the dependent-word relationships in the sentence illustrated in Figure 2 can be defined as the following set: {(“good”, “views”, “amod”), (“city”, “views”, “nmod”), (“of”, “city”, “case”)}.

For instance, the entry (“good”, “views”, “amod”) signifies that the word “good” serves as an adjectival modifier of the head word “views” with the dependency relation “amod”. The entry (“city”, “views”, “nmod”) indicates that the word “city” acts as a nominal modifier of the head word “views” with the dependency relation “nmod”. Similarly, the entry (“of”, “city”, “case”) signifies that the word “of” functions as a preposition of the head word “city” with the dependency relation “case”, where “case” denotes case-marking elements such as prepositions and possessive markers.

However, if the adjectival modifier, such as “good”, acts as a sentiment word, the entry is disregarded and eliminated from the dependent-word relationship set. The process of identifying sentiment words is provided in Definition 9.
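The filtering described in Definition 6 can be sketched as follows. The set `SENTIMENT_WORDS` is a tiny hypothetical placeholder for the sentiment lexicon (the actual identification of sentiment words is given in Definition 9), and the function name and the extra sample entries (“The” and “spicy”) are assumptions added for illustration.

```python
from typing import List, Tuple

Relation = Tuple[str, str, str]  # (dependent word, head word, dependency relation)

# The dependency relations retained by Definition 6.
SELECTED_RELATIONS = {"amod", "compound", "case", "nummod", "cc", "nmod"}

# Hypothetical placeholder lexicon, not the one used in this research.
SENTIMENT_WORDS = {"good", "great", "delicious", "attentive"}


def dependent_word_relations(parsed: List[Relation]) -> List[Relation]:
    """Keep selected relations, dropping 'amod' entries led by a sentiment word."""
    kept: List[Relation] = []
    for dependent, head, relation in parsed:
        if relation not in SELECTED_RELATIONS:
            continue  # e.g. "det" is not one of the selected relations
        if relation == "amod" and dependent.lower() in SENTIMENT_WORDS:
            continue  # sentiment adjectives are not part of the aspect term
        kept.append((dependent, head, relation))
    return kept


parsed = [
    ("The", "waiter", "det"),
    ("good", "views", "amod"),
    ("city", "views", "nmod"),
    ("of", "city", "case"),
    ("spicy", "roll", "amod"),
]
relations = dependent_word_relations(parsed)
```

In this sample, “good” is dropped because it is an adjectival modifier that appears in the placeholder lexicon, whereas “spicy” is kept because it describes the aspect rather than expressing sentiment.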

Definition 7.

MWT, which stands for “Multiple-Word Terms”, is extracted from the dependent word and the head word of each entry in the dependent-word relationship set. The extraction analyzes the prepositions and conjunctions associated with the collected words, combines them with the consecutive words, and organizes all the words into the appropriate structure. MWT is described as a set of tuples, each holding the group of words collected for one entry.

Regarding the example sentence depicted in Figure 2, the MWT derived from the dependent-word relationship set is as follows: {({“city”, “views”}), ({“of”, “city”})}. In this scenario, the preposition “of” is included within the second entry; thus, “of” and “city” are merged to form the mapping word “of city”. Subsequently, this entry is removed from MWT. The next step examines the words in the other entries of MWT to detect any word that matches part of a mapping word. When a match is found, the matching word in MWT is substituted with the mapping word. As a result, the updated MWT becomes {({“of city”, “views”})}. Finally, the word order in the entry is rearranged to yield MWT = {({“views”, “of city”})}.

Another example is based on the original text: “Bread and butter pudding with blueberries is filling and yummy”. The dependent-word relationship set includes: {(“and”, “butter pudding”, “cc”), (“bread”, “butter pudding”, “compound”), (“blueberries”, “butter pudding”, “nmod”), (“with”, “blueberries”, “case”)}, where “butter pudding” is obtained from the compound-named-entity set.

The corresponding MWT comprises: {({“and”, “butter pudding”}), ({“bread”, “butter pudding”}), ({“blueberries”, “butter pudding”}), ({“with”, “blueberries”})}.

In this example, both the conjunction “and” and the preposition “with” are included within the entries. Consequently, “and” and “butter pudding”, as well as “with” and “blueberries”, are merged to form the mapping words “and butter pudding” and “with blueberries”, respectively. These entries are subsequently removed from MWT. Following this, any matching word in MWT is substituted with the corresponding mapping word. As a result, the updated MWT becomes {({“bread”, “and butter pudding”}), ({“with blueberries”, “and butter pudding”})}. In the subsequent step, each entry is examined again. If an entry contains the conjunction “and” but no preposition, such as “with”, the words in that entry are combined. Otherwise, the entry is retained unchanged. Consequently, MWT becomes {({“bread and butter pudding”}), ({“with blueberries”, “and butter pudding”})}. For the final step, each entry in MWT is reevaluated for duplicate group words. If an entry contains a phrase that is duplicated within a longer phrase held by another entry, the shorter phrase is replaced with the longer one, and the now-redundant entry that held only the longer phrase is removed from MWT. This process ensures that each entry in MWT contains unique group words. The result of this operation is {({“with blueberries”, “bread and butter pudding”})}. If no duplicate group words are found, no replacement or deletion occurs. During the final update, MWT undergoes another transformation, triggered by the detection of the preposition “with” within the entry. The word order within the entry is rearranged, resulting in the final form: MWT = {({“bread and butter pudding”, “with blueberries”})}.

Definition 8.

denotes a finite collection of multiple-word aspect terms derived from . The outputs produced by are combined to form the outputs within , represented as a set of tuples:

In Figure 2, an example of is {: {“views of city”}}. This set denotes a multiple-word aspect term, “views of city”. Another instance of , showcasing the inclusion of conjunctions and prepositions, is {: {“bread and butter pudding with blueberries”}}. In this case, captures the entire phrase “bread and butter pudding with blueberries” as a multiple-word aspect term.
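As a rough illustration of how the term “views of city” can be assembled, the following Python sketch covers only the preposition case from the Figure 2 example. It is a simplification under stated assumptions: the function name `build_term` and the `PREPOSITIONS` set are hypothetical, and the conjunction handling and duplicate-phrase resolution described for the “bread and butter pudding” example are not implemented.

```python
# Hypothetical, deliberately small list of prepositions for the sketch.
PREPOSITIONS = {"of", "with", "in", "on"}


def build_term(entries):
    """Assemble multiple-word terms from (dependent, head, relation) triples."""
    # Step 1: attach each preposition ("case" relation) to its head word,
    # e.g. ("of", "city", "case") gives the mapping "city" -> "of city".
    mapping = {head: f"{dep} {head}" for dep, head, rel in entries if rel == "case"}
    remaining = [(dep, head) for dep, head, rel in entries if rel != "case"]

    terms = []
    for dep, head in remaining:
        # Step 2: substitute the combined phrase for the bare word.
        dep = mapping.get(dep, dep)
        # Step 3: a prepositional phrase follows the head word;
        # any other modifier precedes it.
        if dep.split()[0] in PREPOSITIONS:
            terms.append(f"{head} {dep}")
        else:
            terms.append(f"{dep} {head}")
    return terms


entries = [("city", "views", "nmod"), ("of", "city", "case")]
terms = build_term(entries)
```

For the two entries above, the sketch yields the single term “views of city”.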

Definition 9.

This definition explains the semantics of sentiment words utilized as adjectives in . To verify the presence of sentiment words in , the function is utilized. SentiWordNet [57] serves as the basis for identifying sentiment words, employing Equations (1) and (2) [58]. Sentiment words are identified if their term score exceeds 0 or falls below 0.

In these equations, the variable w represents a word, represents a part-of-speech, represents the sum of the positive score, represents the sum of the negative score, and r represents the count of sentiment words appearing in the SentiWordNet.
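The sentiment-word test can be sketched as follows. Because Equations (1) and (2) are not reproduced here, the sketch assumes one common formulation, in which the term score is the difference between the summed positive and negative scores divided by the number of senses r. The function names and the example scores are hypothetical and are not taken from SentiWordNet.

```python
def term_score(senses):
    """Assumed score: (sum of positive - sum of negative) / r.

    `senses` is a list of (positive_score, negative_score) pairs for one
    word and part-of-speech; r is the number of such senses.
    """
    r = len(senses)
    if r == 0:
        return 0.0  # word not found: treated as carrying no sentiment
    pos_sum = sum(pos for pos, _ in senses)
    neg_sum = sum(neg for _, neg in senses)
    return (pos_sum - neg_sum) / r


def is_sentiment_word(senses):
    """A word counts as a sentiment word when its score is nonzero."""
    return term_score(senses) != 0


# Illustrative scores only (not real SentiWordNet values).
good_senses = [(0.75, 0.0), (0.5, 0.25)]
views_senses = [(0.0, 0.0)]
```

With these illustrative values, the first word has a positive score and is treated as a sentiment word, whereas the second scores zero and is kept as a candidate aspect word.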

The multiple-word aspect term extraction algorithm identifies a finite collection of derived from the . These are combined with the single-word aspect terms and forwarded to the next module for further processing. Figure 2 illustrates this concept, with = {waiter, food, views of city}. For the second example sentence, = {bread and butter pudding with blueberries}.

3.3. Semantic Aspect Categorization Extraction

Following the extraction of multiple aspect terms, the subsequent stage involves identifying semantic categorizations for each aspect term. In this phase, LOD is utilized to augment semantic aspects search capabilities and autonomously enrich the training dataset category. The aspect terms derived in the undergo processing to ascertain their semantic significance and to accurately classify groups of these aspect terms. This phase consists of three consecutive processes.

- (1) Aspect vectorization refers to the process of transforming a set of multiple aspect terms within the into a collection of the Aspect Vectors (). The set is defined as follows:

Definition 10.

represents a finite set comprising unique identifiers (UIDs) and corresponding aspects (). It is defined as a collection of tuples:

where are UIDs and .

Each aspect, represented as , is extracted from the set of . For instance, let us consider the derived from Figure 2. In this case, the set can be defined as follows: = {(: “0”, : {“waiter”}), (: “1”, : {“food”}), and (: “2”, : {“views of city”})}. Another example of can be demonstrated as = {(: “0”, : {“bread and butter pudding with blueberries”})}.

Definition 11.

denotes a finite collection of constituent elements from each aspect of . The is defined as the set of tuples:

where .

An example of an derived from can be given as: = {(: “0”, : {“waiter”}), (: “1”, : {“food”}), and (: “2”, : {“views”, “of”, “city”})}. Another example of can be demonstrated as = {(: “0”, : {“bread”, “and”, “butter”, “pudding”, “with”, “blueberries”})}.
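Definitions 10 and 11 can be illustrated with a short Python sketch. The function and key names (`to_aspect_set`, `to_aspect_vectors`, `uid`, `aspect`, `elements`) are hypothetical, and whitespace tokenization is an assumption made for the sketch.

```python
def to_aspect_set(aspect_terms):
    """Pair each aspect term with a unique identifier (Definition 10)."""
    return [{"uid": str(i), "aspect": term} for i, term in enumerate(aspect_terms)]


def to_aspect_vectors(aspect_set):
    """Split each aspect into its constituent words (Definition 11)."""
    return [
        {"uid": item["uid"], "elements": item["aspect"].split()}
        for item in aspect_set
    ]


aspect_set = to_aspect_set(["waiter", "food", "views of city"])
vectors = to_aspect_vectors(aspect_set)
```

The third aspect, “views of city”, keeps its identifier “2” and is split into the elements “views”, “of”, and “city”, matching the example in the text.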

- (2) Analysis of Aspect Term Types: This stage involves identifying the types associated with each aspect term extracted in . To accomplish this, each element of an aspect term is examined to ascertain whether it represents a single-word aspect term or a multiple-word aspect term. In the case of a single-word aspect term, such as {“waiter”} or {“food”}, SPARQL queries are used to interrogate the properties “dbo:type” or “rdf:type” within LOD resources, thereby determining the types associated with these entities for extended aspect terms. For a multiple-word term, as exemplified by {“bread”, “and”, “butter”, “pudding”, “with”, “blueberries”}, extracted from the multiple-word aspect term “bread and butter pudding with blueberries”, the type cannot be determined directly from LOD, because only fragments of the term can be retrieved. Hence, a combination of the N-gram model and LOD is employed to ascertain the type of these multiple-word aspect terms. This process unfolds in three steps. The first step determines the N-gram size of each , which represents a multiple-word term, using Equations (3) and (4) [45] from the N-gram model, as illustrated in the sample outcomes presented in Table 1.

Subsequently, the second step entails selecting the first index for each N-gram size, such as [bread], [bread and], [bread and butter], [bread and butter pudding], [bread and butter pudding with], and [bread and butter pudding with blueberries]. Lastly, the types of each sequence are examined using the “dbo:type” or “rdf:type” properties through SPARQL from the LOD, as shown in the example results in Table 2.

This study delves into the efficacy of N-gram indexing for entity type identification. The initial N-gram indexes across various N-gram sizes are detailed in Table 2. The detail shows that unigrams (1-gram, e.g., “bread”) and quadrigrams (4-gram, e.g., “bread and butter pudding”) can yield satisfactory accuracy. Notably, the entity type of the 4-gram “bread and butter pudding” accurately encompasses both “pudding” and “food”. Similarly, the unigram “bread” is precisely classified as both “food” and “mouna” (a specific bread type). This observation underscores the effectiveness of extrapolating the entity type captured by the 4-gram to represent the type of the entire multiple-word term.

Table 1.

Example results of the N-gram sequences.

| N-Gram Size | Sequence Examples |
|---|---|
| 1-gram | [bread], [and], [butter], [pudding], [with], [blueberries] |
| 2-gram | [bread and], [and butter], [butter pudding], [pudding with], [with blueberries] |
| 3-gram | [bread and butter], [and butter pudding], [butter pudding with], [pudding with blueberries] |
| 4-gram | [bread and butter pudding], [and butter pudding with], [butter pudding with blueberries] |
| 5-gram | [bread and butter pudding with], [and butter pudding with blueberries] |
| 6-gram | [bread and butter pudding with blueberries] |
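The sequences in Table 1 can be generated with a sliding window over the tokens of the aspect term. The sketch below is illustrative only: the function names are hypothetical, and it does not reproduce Equations (3) and (4). It relies on the fact that a sequence of m tokens contains m − n + 1 contiguous n-grams.

```python
def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as strings."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def first_index_ngrams(tokens):
    """Return the n-gram that starts at index 0 for every size n."""
    return [" ".join(tokens[:n]) for n in range(1, len(tokens) + 1)]


tokens = ["bread", "and", "butter", "pudding", "with", "blueberries"]
four_grams = ngrams(tokens, 4)
prefixes = first_index_ngrams(tokens)
```

Here `four_grams` corresponds to the 4-gram row of Table 1, and `prefixes` lists the first-index sequences whose types are subsequently looked up in LOD.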

Table 2.

Example results of the types of entities.

| N-Gram Size | Sequence Examples | Types of Entities |
|---|---|---|
| 1-gram | [bread] | [food], [mouna], … |
| 2-gram | [bread and] | [⌀] |
| 3-gram | [bread and butter] | [⌀] |
| 4-gram | [bread and butter pudding] | [pudding], [food] |
| 5-gram | [bread and butter pudding with] | [⌀] |
| 6-gram | [bread and butter pudding with blueberries] | [⌀] |
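A type lookup of the kind summarized in Table 2 could be expressed as a SPARQL query built in Python. The sketch only constructs the query text and does not contact any endpoint. The helper names are hypothetical, and the mapping from a phrase to a DBpedia resource name (first letter capitalized, spaces replaced by underscores) is an assumption that may not hold for every entity.

```python
def resource_name(phrase):
    """Assumed mapping from a phrase to a DBpedia-style resource name."""
    name = phrase.strip().replace(" ", "_")
    return name[:1].upper() + name[1:]


def type_query(phrase):
    """Build a SPARQL query asking for rdf:type or dbo:type values."""
    res = resource_name(phrase)
    return (
        "PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>\n"
        "PREFIX dbo: <http://dbpedia.org/ontology/>\n"
        "PREFIX dbr: <http://dbpedia.org/resource/>\n"
        "SELECT DISTINCT ?type WHERE {\n"
        f"  {{ dbr:{res} rdf:type ?type }} UNION {{ dbr:{res} dbo:type ?type }}\n"
        "}"
    )


query = type_query("bread and butter pudding")
```

An empty result for a sequence such as “bread and” would correspond to the [⌀] entries in Table 2.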

- (3) Semantic Aspect Expansion involves two steps. First, each aspect in the training dataset is identified, and its corresponding aspect types are determined. These aspect types are then aggregated and integrated into the aspect corpus. The second step expands the aspect terms used in the training dataset by discovering synonymous aspect terms. To accomplish this, the “dbo:wikiPageRedirects” property of LOD, queried via SPARQL, and a thesaurus dictionary are used to search for synonymous aspect terms. This process is exemplified in the instances illustrated in Table 3.

Table 3. Example results of semantic aspect expansion.
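The expansion step can be sketched as a query for redirect pages followed by a merge of the candidate synonyms. This is an illustration under assumptions: the function names are hypothetical, the query is only built and not executed, and the synonym lists passed to `expand_aspect` are made-up placeholders rather than actual query or thesaurus results.

```python
def redirect_query(resource):
    """Build a SPARQL query for pages that redirect to the given resource."""
    return (
        "PREFIX dbo: <http://dbpedia.org/ontology/>\n"
        "PREFIX dbr: <http://dbpedia.org/resource/>\n"
        f"SELECT ?alias WHERE {{ ?alias dbo:wikiPageRedirects dbr:{resource} }}"
    )


def expand_aspect(term, redirect_labels, thesaurus_synonyms):
    """Merge a term with its candidate synonyms, lowercased and de-duplicated."""
    seen, expanded = set(), []
    for candidate in [term, *redirect_labels, *thesaurus_synonyms]:
        key = candidate.lower()
        if key not in seen:
            seen.add(key)
            expanded.append(key)
    return expanded


query = redirect_query("Waiter")
# Placeholder inputs for illustration only.
expanded = expand_aspect("waiter", ["Waitress", "Server"], ["server", "attendant"])
```

Keeping the original term first and preserving insertion order makes it easy to trace which source contributed each synonym.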

4. Datasets and Evaluation Metrics

The cornerstone of evaluating this research lies in two key components: datasets and evaluation metrics. Datasets provide the ground truth labels against which a model’s predictions are compared. Evaluation metrics, conversely, quantify the discrepancies between these predictions and the ground truth, enabling a rigorous assessment of the model’s effectiveness.

4.1. Datasets

SemEval (Semantic Evaluation) is an ongoing series of international workshops, organized under SIGLEX, for evaluating semantic analysis systems. SIGLEX, a special interest group within the Association for Computational Linguistics (ACL), aims to advance research in semantic analysis, interpreting meaning in language, and developing high-quality datasets for NLP. Each annual SemEval workshop defines a set of NLP tasks and provides datasets that participants use to develop and test their systems [59]. This research focuses on the ABSA tasks of the 2014, 2015, and 2016 workshops [60,61,62].

The SemEval ABSA datasets are annotated with aspect categories, aspect terms (both single-word and multiple-word), and polarities. The research community widely relies on these datasets to assess and compare algorithms and methodologies and to gauge performance against the current state of the art. This research uses the SemEval 2014, 2015, and 2016 datasets to assess aspect term extraction within the restaurant domain. Statistics for the training and test datasets are provided in Table 4.

Table 4.

Number of sentences and aspect terms.

4.2. Evaluation Metrics

The proposed model’s performance in aspect term extraction was assessed on the test datasets using the standard metrics of precision, recall, and F-measure, calculated with Equations (5), (6), and (7), respectively [63].

$$\text{Precision} = \frac{TP}{TP + FP} \tag{5}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{6}$$

$$\text{F-measure} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{7}$$

In Equations (5) and (6), True Positives ($TP$) denote the count of instances correctly predicted as positive, that is, aspect terms that were identified correctly. False Positives ($FP$) denote the count of instances predicted as positive that were actually negative, that is, terms incorrectly identified as aspect terms. False Negatives ($FN$) denote the count of positive instances predicted as negative, that is, aspect terms that were missed.

In Equation (7), the F-measure is the harmonic mean of precision and recall, serving as a balanced measure of the model’s performance.
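The three metrics are straightforward to compute from the counts. The sketch below uses hypothetical function names and, as a check, applies the F-measure to the precision and recall values reported for SemEval 2015 (0.78, 0.75) and SemEval 2016 (0.79, 0.76) in Section 5.

```python
def precision(tp, fp):
    """Share of predicted aspect terms that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Share of labeled aspect terms that were found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f_measure(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


f_2015 = round(f_measure(0.78, 0.75), 2)
f_2016 = round(f_measure(0.79, 0.76), 2)
```

Rounded to two decimals, these values agree with the F-measures of 0.76 and 0.77 reported for the two datasets.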

5. Experimental Results

This section discusses the experimental results of aspect term extraction. The experiment was carried out on the SemEval datasets within the restaurant domain and assessed using standard metrics, as depicted in Table 5. Table 6 outlines the comparison of the experiment’s F-measure (F1) results with existing state-of-the-art baselines across various approaches, including unsupervised, supervised, and hybrid approaches.

Table 5.

Result of the proposed model for ATE on SemEval datasets.

Table 6.

Comparisons with the existing state-of-the-art baselines.

In Table 5, the proposed model achieves notably higher precision and recall on the SemEval 2014 dataset than on the other datasets. This suggests that the proposed ATE processes are accurate and identify a larger share of the correct aspect terms. The F-measure of 0.80 indicates a balanced trade-off between precision and recall. For the SemEval 2015 and 2016 datasets, precision decreases slightly to 0.78 and 0.79, respectively, indicating that the model’s positive predictions are slightly less accurate. Recall drops to 0.75 and 0.76, respectively, indicating that fewer aspect terms are captured. The resulting F-measures of 0.76 and 0.77 suggest a moderate balance between precision and recall. Because precision exceeds recall on these datasets, the model produces relatively few False Positives: the aspect terms it identifies are likely to be correct, although some labeled aspect terms are missed.

Table 6 compares the F-measure of the proposed model with that of other approaches on three datasets: SemEval 2014, 2015, and 2016. The baselines span supervised, unsupervised, and hybrid ATE methods. On SemEval 2014, the proposed approach achieved an F-measure of 0.80, which is slightly lower than that of approaches such as Yu J. et al. (2018) [13] (F-measure = 0.84) and Luo H. et al. (2019) [15] (F-measure = 0.85) but remains competitive with others, such as Wu C. et al. (2018) [35]. The differences in F-measure between the proposed approach and the top-performing approaches are relatively small, indicating that our approach is effective in capturing aspect terms in SemEval 2014. On SemEval 2015, the proposed approach outperforms all other approaches with an F-measure of 0.76, surpassing the values achieved by Xue W. et al. (2017) [12], Yu J. et al. (2018) [13], Agerri R. et al. (2019) [14], Luo H. et al. (2019) [15], Wu C. et al. (2018) [35], Dragoni M. et al. (2019) [26], Venugopalan et al. (2022) [34], and Busst M. M. A. et al. (2024) [41]. This suggests that the proposed model identifies aspect terms in SemEval 2015 accurately and robustly. On SemEval 2016, our approach achieves an F-measure of 0.77, which is slightly lower than the maximum F-measure attained by Chauhan et al. (2020) [36] (F-measure = 0.79). However, the proposed approach still performs competitively compared to other approaches, such as Xue W. et al. (2017) [12], Agerri R. et al. (2019) [14], Luo H. et al. (2019) [15], Wu C. et al. (2018) [35], Dragoni M. et al. (2019) [26], and Venugopalan et al. (2022) [34].

Overall, the experimental results highlight the effectiveness of the proposed model for aspect term extraction across different datasets. While the proposed approach does not achieve the highest F-measure on every dataset, it consistently demonstrates competitive performance and, in some cases, outperforms other approaches. This indicates the versatility and reliability of the proposed model in capturing aspect terms across diverse contexts within the restaurant domain. Further refinements and optimizations could enhance its performance in future experiments.

6. Time Complexity Analysis

The time complexity evaluation for the proposed algorithms is presented in Table 7. The actual running time has been measured using restaurant training datasets. It is assumed in this research that the maximum sentence length is derived from these training datasets, with the maximum sentence length being 61 words. Consequently, the total time complexity of the proposed algorithms is O(n) [35], indicating a linear time complexity, where n represents the maximum length of a sentence.

Table 7.

The time complexity of the proposed algorithms.

According to Table 7, the time complexity of the proposed algorithms reflects their efficiency in processing restaurant reviews. The Slang and Abbreviation Processing algorithm and the Multiple-Word Aspect Terms Extraction algorithm both have a theoretical time complexity of O(n), with measured processing times of 0.08 min and 0.05 min, respectively. The Slang and Abbreviation Processing algorithm’s efficiency ensures rapid processing, even for longer sentences. The Multiple-Word Aspect Terms Extraction algorithm’s efficiency is critical for identifying nuanced aspects of restaurant reviews without undue computational overhead.

7. Exploring Aspect Terms Misprediction

This section delves into the classification of mispredictions occurring during the aspect term extraction process, comparing the classifications with the labels provided in the test datasets. The misprediction of aspect terms is categorized into two main types in Table 8:

Table 8.

Examples of aspect terms misprediction during the ATE Process.

- (1) False Positives and False Negatives: Instances where the model incorrectly predicts the presence or absence of an aspect term.

- (2) POS Tag Errors: Instances where the POS tagging of aspect terms leads to mispredictions.

7.1. False Positives and False Negatives

False Positives and False Negatives consist of the following cases:

Case 1–2: The label fails to identify the aspect term while the model correctly predicts it as “brooklyn” or “people and sushi”, indicating a False Negative.

Case 3: The label designates “mioposto” as the aspect term, yet the proposed model fails to make the prediction. This failure stems from the absence of “mioposto” as a recognized entity in both the LOD and the Aspect Term corpus, as “mioposto” is the name of a specific pizza restaurant. Consequently, the proposed model lacks the necessary information to classify “mioposto” accurately, resulting in another instance of False Negative misprediction.

Case 4: The ground truth label accurately identifies the dish as “chuwam mushi”. However, the proposed model predicts “chawan mushi”. This discrepancy likely arises from a word correction applied during the pre-processing module, resulting in a False Positive classification.
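The effect described in Case 4 can be made concrete with a small counting sketch. It assumes that predictions are compared with labels by exact string match, which is an assumption about the evaluation and not a detail stated in the text; the function name `count_matches` is hypothetical.

```python
def count_matches(predicted, gold):
    """Count TP, FP, and FN under exact string matching."""
    predicted, gold = set(predicted), set(gold)
    tp = len(predicted & gold)   # predicted and labeled
    fp = len(predicted - gold)   # predicted but not labeled
    fn = len(gold - predicted)   # labeled but not predicted
    return tp, fp, fn


# Case 4: the pre-processing module changes the spelling of the term.
tp, fp, fn = count_matches({"chawan mushi"}, {"chuwam mushi"})
```

Under this assumption, the corrected spelling no longer matches the label, so the prediction is counted as a False Positive and the labeled term as a False Negative, with no True Positive recorded.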

7.2. POS Tag Errors

POS Tag Errors consist of the following case: Case 5: The model fails to recognize “priced” as an aspect term due to incorrect POS tagging, resulting in a misprediction categorized as a POS tag error.

This section provides an analysis of various misprediction scenarios encountered during the aspect terms extraction process. Cases 1–4 illustrate instances of False Positives and False Negatives, while case 5 exemplifies a POS tag error. These findings underscore the intricate nature of aspect term classification and shed light on the limitations of SemEval datasets. By identifying aspect term mispredictions, this study contributes to enhancing the accuracy and reliability of aspect term classification models in natural language processing tasks.

8. Conclusions and Future Work

This study introduces a knowledge-driven approach aimed at automating semantic aspect-term extraction by utilizing the semantic capabilities of DBpedia. By leveraging DBpedia, our model seeks to enhance and enrich aspect extraction outcomes within the training dataset. Moreover, due to the extensive knowledge base of DBpedia, the proposed approach can be utilized for the identification of named entity aspect terms in other domains, including individuals, places, music albums, films, video games, organizations, species, and diseases [64]. To evaluate the efficacy of the proposed model, the research conducted experiments across three distinct datasets: SemEval 2014, 2015, and 2016. These evaluations involved comparisons with contemporary unsupervised, supervised, and hybrid methods on identical datasets.

The experimental findings underscore the effectiveness of the proposed approach in aspect-term extraction across various datasets. While the proposed approach does not yield the highest F-measure on every dataset, it consistently demonstrates competitive performance and, in certain instances, outperforms alternative methodologies. This indicates the versatility and reliability of our model in capturing aspect terms across diverse contexts. The experimental evidence supports the hypothesis that LOD’s semantic capabilities help enhance the performance of ATE.

This research has potential applications and benefits for a variety of industries, including tourism companies, restaurants, and e-commerce. Examples include understanding a reviewer’s opinion of a meal and building recommender systems that propose restaurants according to user preferences, such as suggesting a restaurant with a vibrant ambiance.

In future work, this research may explore an automatic ontology creation process to replace the corpus used for aspect extraction. This would aim to enhance the semantic relationships between aspects and facilitate more accurate aspect term extraction and aspect type classification.

Author Contributions

Conceptualization, W.S.; methodology, W.S.; validation, W.S., N.A.-I. and W.W.; investigation, W.S. and N.A.-I.; resources, W.S.; writing—original draft preparation, W.S. and N.A.-I.; writing—review and editing, W.S., N.A.-I. and W.W.; visualization, W.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

SemEval datasets from 2014, 2015, and 2016.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Fleisher, C.S.; Bensoussan, B.E. Strategic and Competitive Analysis: Methods and Techniques for Analyzing Business Competition; Prentice Hall: Hoboken, NJ, USA, 2003; Volume 457. [Google Scholar]

- Choi, S.; Fredj, K. Price competition and store competition: Store brands vs. national brand. Eur. J. Oper. Res. 2013, 225, 166–178. [Google Scholar] [CrossRef]

- Salinca, A. Business reviews classification using sentiment analysis. In Proceedings of the 2015 17th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), Timisoara, Romania, 21–24 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 247–250. [Google Scholar]

- Hu, M.; Liu, B. Mining and summarizing customer reviews. In Proceedings of the tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 22–25 August 2004; pp. 168–177. [Google Scholar]

- Liu, B. Sentiment Analysis: Mining Opinions, Sentiments, and Emotions; Studies in Natural Language Processing; Cambridge University Press: Cambridge, UK, 2020. [Google Scholar]

- Vajjala, S.; Majumder, B.; Gupta, A.; Surana, H. Practical Natural Language Processing: A Comprehensive Guide to Building Real-World NLP Systems; O’Reilly Media: Sebastopol, CA, USA, 2020. [Google Scholar]

- Toh, Z.; Wang, W. Dlirec: Aspect term extraction and term polarity classification system. In Proceedings of the 8th International Workshop on Semantic Evaluation (SemEval 2014), Dublin, Ireland, 23–24 August 2014; pp. 235–240. [Google Scholar]

- Shelke, P.P.; Wagh, K.P. Review on aspect based sentiment analysis on social data. In Proceedings of the 2021 8th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 17–19 March 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 331–336. [Google Scholar]

- Nguyen, M.H.; Nguyen, T.M.; Van Thin, D.; Nguyen, N.L.T. A corpus for aspect-based sentiment analysis in Vietnamese. In Proceedings of the 2019 11th International Conference on Knowledge and Systems Engineering (KSE), Da Nang, Vietnam, 24–26 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5. [Google Scholar]

- Haq, B.; Daudpota, S.M.; Imran, A.S.; Kastrati, Z.; Noor, W. A Semi-Supervised Approach for Aspect Category Detection and Aspect Term Extraction from Opinionated Text. Comput. Mater. Contin. 2023, 77, 115–137. [Google Scholar] [CrossRef]

- Mishra, P.; Panda, S.K. Dependency Structure-Based Rules Using Root Node Technique for Explicit Aspect Extraction From Online Reviews. IEEE Access 2023, 11, 65117–65137. [Google Scholar] [CrossRef]

- Xue, W.; Zhou, W.; Li, T.; Wang, Q. MTNA: A neural multi-task model for aspect category classification and aspect term extraction on restaurant reviews. In Proceedings of the Eighth International Joint Conference on Natural Language Processing (Volume 2: Short Papers), Taipei, Taiwan, 1 December 2017; pp. 151–156. [Google Scholar]

- Yu, J.; Jiang, J.; Xia, R. Global inference for aspect and opinion terms co-extraction based on multi-task neural networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 27, 168–177. [Google Scholar] [CrossRef]

- Agerri, R.; Rigau, G. Language independent sequence labelling for opinion target extraction. Artif. Intell. 2019, 268, 85–95. [Google Scholar] [CrossRef]

- Luo, H.; Li, T.; Liu, B.; Wang, B.; Unger, H. Improving aspect term extraction with bidirectional dependency tree representation. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1201–1212. [Google Scholar] [CrossRef]

- Akhtar, M.S.; Garg, T.; Ekbal, A. Multi-task learning for aspect term extraction and aspect sentiment classification. Neurocomputing 2020, 398, 247–256. [Google Scholar] [CrossRef]

- Liu, N.; Shen, B. Aspect term extraction via information-augmented neural network. Complex Intell. Syst. 2023, 9, 537–563. [Google Scholar] [CrossRef]

- Piao, Y.; Zhang, J.X. Text Triplet Extraction Algorithm with Fused Graph Neural Networks and Improved Biaffine Attention Mechanism. Appl. Sci. 2024, 14, 3524. [Google Scholar] [CrossRef]

- Yang, H.; Zeng, B.; Yang, J.; Song, Y.; Xu, R. A multi-task learning model for chinese-oriented aspect polarity classification and aspect term extraction. Neurocomputing 2021, 419, 344–356. [Google Scholar] [CrossRef]

- Hu, M.; Liu, B. Mining Opinion Features in Customer Reviews; AAAI: Washington, DC, USA, 2004; Volume 4, pp. 755–760. [Google Scholar]

- Anwer, N.; Rashid, A.; Hassan, S. Feature based opinion mining of online free format customer reviews using frequency distribution and Bayesian statistics. In Proceedings of the 6th International Conference on Networked Computing and Advanced Information Management, Seoul, Republic of Korea, 16–18 August 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 57–62. [Google Scholar]

- Rana, T.A.; Cheah, Y.N. A two-fold rule-based model for aspect extraction. Expert Syst. Appl. 2017, 89, 273–285. [Google Scholar] [CrossRef]

- Zhao, Y.; Qin, B.; Hu, S.; Liu, T. Generalizing syntactic structures for product attribute candidate extraction. In Proceedings of the Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics, Angeles, CA, USA, 2–4 June 2010; pp. 377–380. [Google Scholar]

- Maharani, W.; Widyantoro, D.H.; Khodra, M.L. Aspect extraction in customer reviews using syntactic pattern. Procedia Comput. Sci. 2015, 59, 244–253. [Google Scholar] [CrossRef]

- Shafie, A.S.; Sharef, N.M.; Murad, M.A.A.; Azman, A. Aspect extraction performance with pos tag pattern of dependency relation in aspect-based sentiment analysis. In Proceedings of the 2018 Fourth International Conference on Information Retrieval and Knowledge Management (CAMP), Kota Kinabalu, Malaysia, 26–28 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6. [Google Scholar]

- Dragoni, M.; Federici, M.; Rexha, A. An unsupervised aspect extraction strategy for monitoring real-time reviews stream. Inf. Process. Manag. 2019, 56, 1103–1118. [Google Scholar] [CrossRef]

- Huang, J.; Cui, Y.; Wang, S. Adaptive Local Context and Syntactic Feature Modeling for Aspect-Based Sentiment Analysis. Appl. Sci. 2023, 13, 603. [Google Scholar] [CrossRef]

- Titov, I.; McDonald, R. Modeling online reviews with multi-grain topic models. In Proceedings of the 17th International Conference on World Wide Web, Beijing, China, 21–25 April 2008; pp. 111–120. [Google Scholar]

- Brody, S.; Elhadad, N. An unsupervised aspect-sentiment model for online reviews. In Proceedings of the Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics, Los Angeles, CA, USA, 2–4 June 2010; pp. 804–812. [Google Scholar]

- Yao, L.; Zhang, Y.; Wei, B.; Li, L.; Wu, F.; Zhang, P.; Bian, Y. Concept over time: The combination of probabilistic topic model with wikipedia knowledge. Expert Syst. Appl. 2016, 60, 27–38. [Google Scholar] [CrossRef]

- Shams, M.; Baraani-Dastjerdi, A. Enriched LDA (ELDA): Combination of latent Dirichlet allocation with word co-occurrence analysis for aspect extraction. Expert Syst. Appl. 2017, 80, 136–146. [Google Scholar] [CrossRef]

- Annisa, R.; Surjandari, I. Opinion mining on Mandalika hotel reviews using latent dirichlet allocation. Procedia Comput. Sci. 2019, 161, 739–746. [Google Scholar] [CrossRef]

- Ozyurt, B.; Akcayol, M.A. A new topic modeling based approach for aspect extraction in aspect based sentiment analysis: SS-LDA. Expert Syst. Appl. 2021, 168, 114231. [Google Scholar] [CrossRef]

- Venugopalan, M.; Gupta, D. An enhanced guided LDA model augmented with BERT based semantic strength for aspect term extraction in sentiment analysis. Knowl.-Based Syst. 2022, 246, 108668.

- Wu, C.; Wu, F.; Wu, S.; Yuan, Z.; Huang, Y. A hybrid unsupervised method for aspect term and opinion target extraction. Knowl.-Based Syst. 2018, 148, 66–73.

- Chauhan, G.S.; Meena, Y.K.; Gopalani, D.; Nahta, R. A two-step hybrid unsupervised model with attention mechanism for aspect extraction. Expert Syst. Appl. 2020, 161, 113673.

- Chen, M.; Hua, Q.; Mao, Y.; Wu, J. An Interactive Learning Network That Maintains Sentiment Consistency in End-to-End Aspect-Based Sentiment Analysis. Appl. Sci. 2023, 13, 9327.

- Jin, Z.; Tao, M.; Wu, X.; Zhang, H. Span-based dependency-enhanced graph convolutional network for aspect sentiment triplet extraction. Neurocomputing 2024, 564, 126966.

- Zhang, F.; Zheng, W.; Yang, Y. Graph Convolutional Network with Syntactic Dependency for Aspect-Based Sentiment Analysis. Int. J. Comput. Intell. Syst. 2024, 17, 37.

- Mu, Y.; Shi, S. Dependency-Type Weighted Graph Convolutional Network on End-to-End Aspect-Based Sentiment Analysis. In International Conference on Intelligent Information Processing; Springer: Berlin/Heidelberg, Germany, 2024; pp. 46–57.

- Busst, M.M.A.; Anbananthen, K.S.M.; Kannan, S.; Krishnan, J.; Subbiah, S. Ensemble BiLSTM: A Novel Approach for Aspect Extraction From Online Text. IEEE Access 2024, 12, 3528–3539.

- Zhao, A.; Yu, Y. Knowledge-enabled BERT for aspect-based sentiment analysis. Knowl.-Based Syst. 2021, 227, 107220.

- Fu, Y.; Chen, X.; Miao, D.; Qin, X.; Lu, P.; Li, X. Label-semantics enhanced multi-layer heterogeneous graph convolutional network for Aspect Sentiment Quadruplet Extraction. Expert Syst. Appl. 2024, 255, 124523.

- Alqaryouti, O.; Siyam, N.; Abdel Monem, A.; Shaalan, K. Aspect-based sentiment analysis using smart government review data. Appl. Comput. Inform. 2024, 20, 142–161.

- Cavnar, W.B.; Trenkle, J.M. N-gram-based text categorization. In Proceedings of the SDAIR-94, 3rd Annual Symposium on Document Analysis and Information Retrieval, Las Vegas, NV, USA, 11–13 April 1994; pp. 161–175.

- Auer, S.; Bryl, V.; Tramp, S. Linked Open Data – Creating Knowledge Out of Interlinked Data: Results of the LOD2 Project; Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2014.

- Bauer, F.; Kaltenböck, M. Linked Open Data: The Essentials; Edition Mono/Monochrom: Vienna, Austria, 2011; Volume 710.

- Lehmann, J.; Isele, R.; Jakob, M.; Jentzsch, A.; Kontokostas, D.; Mendes, P.N.; Hellmann, S.; Morsey, M.; Van Kleef, P.; Auer, S.; et al. DBpedia – A large-scale, multilingual knowledge base extracted from Wikipedia. Semant. Web 2015, 6, 167–195.

- Boyd, M. Thesaurus Synonyms API. 2016. Available online: https://api-ninjas.com/api/thesaurus/ (accessed on 8 February 2024).

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Jelodar, H.; Wang, Y.; Yuan, C.; Feng, X.; Jiang, X.; Li, Y.; Zhao, L. Latent Dirichlet allocation (LDA) and topic modeling: Models, applications, a survey. Multimed. Tools Appl. 2019, 78, 15169–15211.

- Zhang, Y.; Zhang, Y.; Qi, P.; Manning, C.D.; Langlotz, C.P. Biomedical and clinical English model packages for the Stanza Python NLP library. J. Am. Med. Inform. Assoc. 2021, 28, 1892–1899.

- Bird, S. NLTK: The natural language toolkit. In Proceedings of the COLING/ACL 2006 Interactive Presentation Sessions, Sydney, Australia, 18 July 2006; pp. 69–72.

- Barrus, T. Pyspellchecker. 2020. Available online: https://pypi.org/project/pyspellchecker/ (accessed on 12 August 2023).

- Nivre, J. Dependency grammar and dependency parsing. MSI Rep. 2005, 5133, 1–32.

- Loria, S. textblob Documentation. Release 0.15 2018, 2, 269.

- Baccianella, S.; Esuli, A.; Sebastiani, F. SentiWordNet 3.0: An enhanced lexical resource for sentiment analysis and opinion mining. In Proceedings of LREC, Valletta, Malta, 17–23 May 2010; Volume 10, pp. 2200–2204.

- Han, H.; Zhang, Y.; Zhang, J.; Yang, J.; Zou, X. Improving the performance of lexicon-based review sentiment analysis method by reducing additional introduced sentiment bias. PLoS ONE 2018, 13, e0202523.

- Agirre, E.; Bos, J.; Diab, M.; Manandhar, S.; Marton, Y.; Yuret, D. *SEM 2012: The First Joint Conference on Lexical and Computational Semantics – Volume 1: Proceedings of the Main Conference and the Shared Task, and Volume 2: Proceedings of the Sixth International Workshop on Semantic Evaluation (SemEval 2012), Montreal, Canada, 7–8 June 2012; Omnipress, Inc.: Madison, WI, USA, 2012.

- Kirange, D.; Deshmukh, R.R.; Kirange, M. Aspect based sentiment analysis semeval-2014 task 4. Asian J. Comput. Sci. Inf. Technol. (AJCSIT) 2014, 4, 72–75.

- Papageorgiou, H.; Androutsopoulos, I.; Galanis, D.; Pontiki, M.; Manandhar, S. SemEval-2015 Task 12: Aspect Based Sentiment Analysis. In Proceedings of the 9th International Workshop on Semantic Evaluation, Denver, CO, USA, 4–5 June 2015; pp. 486–495.

- Pontiki, M.; Galanis, D.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S.; AL-Smadi, M.; Al-Ayyoub, M.; Zhao, Y.; Qin, B.; De Clercq, O.; et al. SemEval-2016 Task 5: Aspect based sentiment analysis. In Proceedings of the 10th International Workshop on Semantic Evaluation (SemEval-2016), Association for Computational Linguistics, San Diego, CA, USA, 16–17 June 2016; pp. 19–30.

- Schwaiger, J.M.; Lang, M.; Ritter, C.; Johannsen, F. Assessing the accuracy of sentiment analysis of social media posts at small and medium-sized enterprises in Southern Germany. In Proceedings of the Twenty-Fourth European Conference on Information Systems (ECIS), Istanbul, Turkey, 12–15 June 2016.

- Bizer, C.; Lehmann, J.; Kobilarov, G.; Auer, S.; Becker, C.; Cyganiak, R.; Hellmann, S. DBpedia - A crystallization point for the Web of Data. J. Web Semant. 2009, 7, 154–165.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).