Abstract

Background/Objectives: Conventional workflows, peripheral blood smears, and bone marrow assessment supplemented by LDI-PCR, molecular cytogenetics, and array-CGH, are expert-driven in the face of biological and imaging variability. Methods: We propose an AI pipeline that integrates convolutional neural networks (CNNs) and transfer learning-based models with two explainable AI (XAI) approaches, LIME and Grad-Cam, to deliver both high diagnostic accuracy and transparent rationale. Seven public sources were curated into a unified benchmark (66,550 images) covering ALL, AML, CLL, CML, and healthy controls; images were standardized, ROI-cropped, and split with stratification (80/10/10). We fine-tuned multiple backbones (DenseNet-121, MobileNetV2, VGG16, InceptionV3, ResNet50, Xception, and a custom CNN) and evaluated the accuracy and F1-score, benchmarking against the recent literature. Results: On the five-class task (ALL/AML/CLL/CML/Healthy), MobileNetV2 achieved 97.9% accuracy/F1, with DenseNet-121 reaching 97.66% F1. On ALL subtypes (Benign, Early, Pre, Pro) and across tasks, DenseNet121 and MobileNetV2 were the most reliable, achieving state-of-the-art accuracy with the strongest, nucleus-centric explanations. Conclusions: XAI analyses (LIME, Grad-CAM) consistently localized leukemic nuclei and other cell-intrinsic morphology, aligning saliency with clinical cues and model performance. Compared with baselines, our approach matched or exceeded accuracy while providing stronger, corroborated interpretability on a substantially larger and more diverse dataset.

1. Introduction

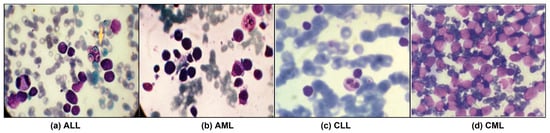

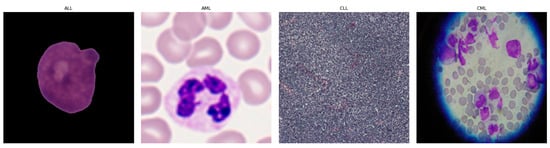

Leukemia accounts for ~8% of cancers and is classified as acute or chronic, with lymphoblastic and myeloid subtypes [1,2]. As shown in Figure 1, it is crucial to have a good understanding of the epidemiology and trends of leukemia to plan treatment accordingly [1]. Diagnosis is led by expert pathologists using peripheral blood smears and bone marrow analyses, complemented by advanced methods such as LDI-PCR, molecular cytogenetics, array-CGH, and sometimes interventional radiology [3]. These approaches are costly and time-consuming, imaging sensitivity can be influenced by genetic factors, and the disease’s complexity leaves room for diagnostic error.

Figure 1.

Leukemia images consisting of different sub-classes: (a) Acute Lymphoblastic Leukemia (ALL) [1], (b) Acute Myeloid Leukemia (AML) [1], (c) Chronic Lymphocytic Leukemia (CLL) [2], and (d) Chronic Myeloid Leukemia (CML) [2].

Intelligent diagnostic tools are essential for precise leukemia identification. Recent advances in AI have reshaped medical imaging and hematologic analysis. Deep Learning (DL) enables rich data representation by automatically learning multi-level hierarchical features from raw medical images, starting from low-level edges and textures to high-level morphological patterns, through multiple layers of interconnected neural units that adjust their weights via backpropagation to minimize prediction error [4]. DL has enabled the automated analysis of high-dimensional medical images of a wide range of complex diseases, including cancers such as leukemia [3,5]. CNNs have proven their ability to evaluate and interpret medical images, including blood and bone marrow, facilitating accurate differentiation between various leukemia types [2,4]. XAI targets transparency by providing human-understandable rationales for model outputs and, in cancer imaging, couples modern processing and DL to both detect disease and explain decisions [6]. Because leukemia arises from acquired genetic and chromosomal abnormalities that drive malignant clonal expansion, timely and accurate diagnosis is critical for survival and appropriate therapy selection [4]. Although there are different types of leukemia classified by cell lineage and disease progression, as shown in Table 1 [1,2], prior studies vary in design and often rely on limited datasets. To address these issues, this work proposes integrating XAI with DL to improve efficiency and accuracy, distinguishing leukemic from healthy cells using extensive blood and bone-marrow image datasets. The objectives are to develop CNN-based models for AML, ALL, CML, and CLL, incorporate LIME and Grad-CAM for explainability, evaluate with Accuracy and F1-Score, and benchmark against state-of-the-art approaches [2,7,8] using transfer-learning backbones, such as DenseNet, VGG, MobileNet, Inception, ResNet, and Xception. However, these approaches are costly and time-consuming, imaging sensitivity can be influenced by genetic factors, and the disease’s complexity leaves room for diagnostic error [9].

Table 1.

Major types of leukemia classified by cell lineage and disease progression, showing the affected cell type, rate of development, predominant age group, and key distinguishing features for each form.

2. Related Work

2.1. Deep Learning for Leukemia Diagnosis

2.1.1. Single-Type Pipeline Classifier

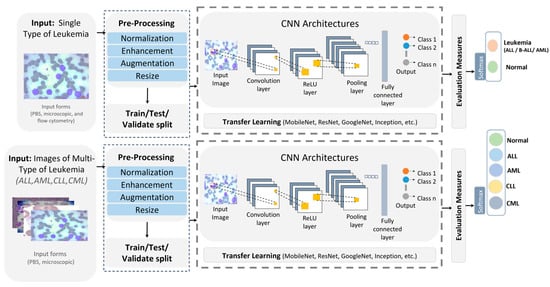

A growing body of work applies DL to diagnose and classify a single leukemia type, highlighting the promise of CNNs, hybrid pipelines, and ensembles in boosting accuracy [4,10,11,12]. Figure 2 illustrates a streamlined single-type versus multiple-type classifiers workflow. The Single-Type and Multiple-Type classifier pipelines are recognized and differentiated based on the number and diversity of leukemia classes targeted and the corresponding network design and labeling strategy.

Figure 2.

Single-type versus multi-type leukemia classification pipeline.

- Single-Type Pipeline: Focuses on binary classification by distinguishing one leukemia type (e.g., ALL) from healthy or benign samples. It typically involves fewer image categories, simpler network heads (two output neurons), and is trained on datasets that include only one disease type.

- Multiple-Type Pipeline: Extends this to multi-class classification across several leukemia types (e.g., ALL, AML, CLL, CML, and Healthy). This pipeline uses multi-label encoding (five output neurons in our case) and is trained on a consolidated dataset where each sample is annotated according to its specific subtype.

Inputs from peripheral blood smears and microscopic images undergo normalization, enhancement, augmentation, and resizing, then the dataset is split into training and testing before a CNN performs binary classification, with standard evaluation metrics reported [4,11]. Rather than training from scratch, many studies use transfer learning, commonly MobileNet for lightweight screening and ResNet50 for feature extraction, to improve predictive performance [11,12]. Representative examples include a survey showing that CNN-based analysis of bone-marrow images is effective for ALL detection and that techniques, such as CatBoost, XGBoost, and transfer learning, further raise performance while underscoring the need for larger, more diverse datasets [4]. A B-ALL classifier built on a lightweight CNN with a tailored segmentation step reported high accuracy, with a MobileNetV2 variant performing best for early and efficient screening [11]. Another study proposed ResRandSVM, combining ResNet50 features, Random Forest feature selection, and SVM classification, achieving 90% accuracy, 90.2% precision, 95.7% recall, and a 92.9% F1-score on PBS images [12]. An ensemble that fused DenseNet-201 features with BiLSTM and GRU encoders and an MSVM head reached 96.29% accuracy, 94.58% sensitivity, 98% specificity, 96.23% F1-score, and 97.93% precision on the C-NMC 2019 dataset, demonstrating the strength of DL-sequence hybrids for ALL recognition [10].

2.1.2. Multi-Type Pipeline Classifier

Recent multi-type leukemia studies report notable gains in diagnostic performance using DL methods [2,13]. Figure 2 shows a typical pipeline in which images (for ALL, AML, CLL, and CML sourced from PBS and microscopy) are normalized, enhanced, augmented, and resized, split into training and test sets, and passed to CNN-based models. Performance is then measured with standard metrics, and transfer learning is used to estimate accuracy. More blood types are investigated [2,13]. A hybrid scheme that pairs deep transfer learning feature extractors, including VGG, Xception, InceptionResV2, DenseNet, and ResNet, with ML classifiers (RF, XGBoost), was proposed for both binary and multi-class tasks on constrained datasets [2]. Using ALL-IDB for binary classification and a private set with AML, CLL, and CML for multi-class, the method reached 97.08% in multi-class classification. VGG16 and DenseNet-121 were trained to classify two leukemia types and three lymphoma subtypes, merging three public sources to cover eight cancers (including AML, ALL subtypes, CLL, FL, and CML) [13]. After resizing and augmentations, such as shear, zoom, and flips, VGG16 outperformed DenseNet-121 with 98.2% accuracy. Table 2 contrasts DL approaches targeting single versus multiple leukemia types across bone-marrow smears and PBS, spanning CNNs and hybrid designs. Pretrained backbones appearing in these works include AlexNet [4], DenseNet [2,4,10,13], MobileNet [4,11], Inception [2], Xception [2], ResNet [2,4,12] and VGG [2,13], with reported accuracies summarized therein. Despite strong results, key gaps remain: limited and noisy datasets, potential generalization issues, and opaque decision processes. XAI is therefore essential to clarify model reasoning and bolster clinical trust and reliability.

Table 2.

Comparison of single-type vs. multi-type classifiers in the literature.

2.2. XAI for Leukemia Diagnosis

XAI has recently driven major gains in leukemia diagnostics by transforming deep learning models from opaque classifiers into transparent decision-support tools. Through visual explanation methods, such as Grad-CAM and LIME, researchers have verified that model attention corresponds to clinically meaningful regions, such as nuclear morphology and chromatin texture [7,8,14,15,16,17,18]. These works use backpropagation explainers, such as CAM and Grad-CAM, to make CNN decisions transparent. In [14], a pipeline combined precise WBC-nuclei segmentation (U-Net + Grad-CAM) with modified ResNet-50, delivering 0.91 segmentation accuracy and 99.9% classification across six datasets. Ref. [15] compared heatmap methods on ALL_IDB2 and found that Grad-CAM with GoogleNet best-aligned relevant pixels with key morphology: 43.61% of relevant pixels lay in diagnostic structures, with nuclei containing 73.97% of important pixels; for ALL cells, 91.90% of relevant pixels were in the nucleus versus 8.10% in cytoplasm, while healthy cells showed 54.26% in nucleus and 45.74% in cytoplasm. Ref. [16] created ALL-IDB WSI patches and proposed OrthoALLNet with Grad-CAM, achieving 96.06% accuracy. A feature-fusion ensemble of EfficientNetB7 and MobileNetV3Large with Grad-CAM reached 99.3% accuracy/F1 and 0.997 AUC across ALLIDB1, ALLIDB2, and ASH [17]. Using CAM, Grad-CAM, and Grad-CAM++ on multiple backbones, [18] reported top metrics around 94% accuracy/precision and 93% recall/F1. For multi-type detection, [7] fine-tuned VGG16 (last three conv layers) with an SVM head and Grad-CAM on a subject-independent smear dataset (expanded from 750 to 1250 images), attaining 84% accuracy. By contrast, a perturbation-based approach with LIME combined transfer-learned VGG-16 and Inception for binary ALL-IDB and a private multi-class set, yielding 83.33% (binary) and 100% (multi-class), though the latter was likely inflated by the small sample size [8]. Table 3 summarizes XAI methods (model-specific and model-agnostic) applied to leukemia, including Grad-CAM [7,14,15,16,17,18] and LIME [8], the DL backbones used, and reported performance, which ranges roughly from 68% to 99% accuracy. Despite high scores, studies often face limited or noisy data and computational overhead; XAI helps address trust and interpretability for clinical adoption.

Table 3.

Summary of visual explainable AI (XAI) techniques applied to leukemia classification, showing the model types, pre-trained models, XAI methods, performance, and limitations.

3. Methodology

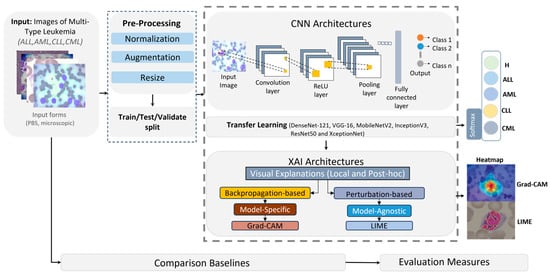

3.1. Proposed Deep Learning Models

In this section, we present an AI-driven pipeline for diagnosing leukemia from microscopic imagery spanning four disease categories: Acute Lymphoblastic Leukemia (ALL), Acute Myeloid Leukemia (AML), Chronic Lymphocytic Leukemia (CLL), and Chronic Myeloid Leukemia (CML). The approach couples convolutional neural networks (CNNs) with explainable AI (XAI) to both perform classification and expose the model’s decision rationale, thereby enhancing diagnostic accuracy and interpretability for clinical end-users. As depicted in Figure 3, the framework comprises three stages.

Figure 3.

General framework of the proposed CNN and XAI model for leukemia detection.

- Preprocessing: Input images—provided in BMP, JPG, or TIFF—were standardized via normalization, resized to 224 × 224 pixels to ensure CNN compatibility, and augmented to expand sample diversity and mitigate overfitting.

- Model training: The processed corpus was partitioned into training (80%) and testing/validation (20%) splits. We fine-tuned pretrained CNN backbones (DenseNet-121, VGG-16, ResNet50) by replacing their terminal classification layers to adapt them to leukemia subtype prediction. Deep learning was selected as the core analytical approach because microscopic leukemia diagnosis involves highly complex, high-dimensional visual patterns that cannot be effectively captured by conventional feature-engineering or shallow classifiers.

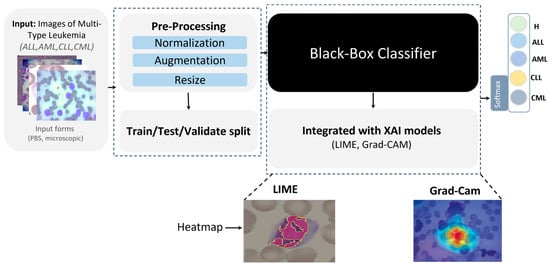

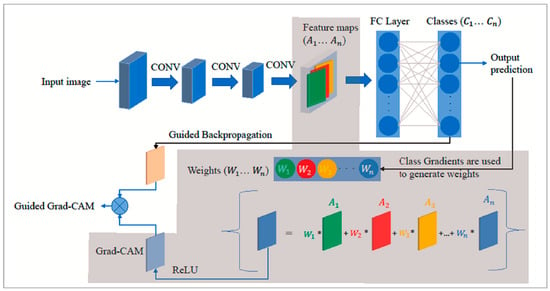

- Explainability: Post hoc XAI methods, specifically LIME and Grad-CAM, as shown in Figure 4, were applied to generate heatmaps that highlighted image regions most influential to the classifier, rendering model behavior transparent and clinically interpretable.

Figure 4. Proposed XAI approach for leukemia detection.

Figure 4. Proposed XAI approach for leukemia detection.

Our framework builds upon well-established deep learning backbones (e.g., DenseNet-121, MobileNetV2, VGG16, InceptionV3, ResNet50, Xception) and standard XAI techniques (LIME and Grad-CAM). While the proposed pipeline leverages established CNN backbones and standard XAI methods, its novelty lies in the integration and large-scale evaluation of these techniques on a unified, multi-type leukemia dataset, enabling simultaneous optimization of diagnostic performance and interpretability. Model performance is quantified using standard metrics, including accuracy and F1-score, to assess reliability across leukemia categories. Finally, we benchmark the proposed CNN + XAI pipeline against conventional deep learning baselines without XAI to demonstrate advantages in both predictive performance and interpretability.

3.2. Proposed XAI Techniques

Our explainability strategy combines two complementary perspectives:

- Model-agnostic (LIME)—provides local, quantitative explanations by perturbing input pixels and observing how model predictions change;

- Model-specific (Grad-CAM)—provides visual, qualitative explanations by tracing class-specific gradients back through the final convolutional layer.

3.2.1. LIME

LIME provides a thorough explanation for the predictions made by any classifier or regressor by locally approximating these predictions with an interpretable model [19]. This technique is particularly useful in the field of medical image analysis, where it serves as an XAI approach designed to make the predictions of ML or DL models understandable to laypersons. LIME achieves its explanatory power by altering the feature values of a single data sample and observing the impact on the result. This process involves perturbing the input images and observing the changes in the model’s prediction, effectively pinpointing the image features that substantially impact the model’s decision [20]. An “explainer” then offers estimates for each individual data sample, detailing the contribution of each feature to a prediction for a particular sample, thereby facilitating a local level of understanding [19], which can be obtained as in [20], as follows:

where f and g represent functions with different inputs. To be specific,

refers to the function

applied to the original input

, while

refers to the function g applied to the perturbed input

. The input

is a modified version of

used to assess the robustness and performance of the model g. The term

is a regularization term used to manage the complexity of g [20].

3.2.2. Grad-CAM

Grad-CAM provides visual explanations for CNN models by leveraging the high-level semantic and spatial information captured by the last CNN layer. Grad-CAM identifies the importance of each neuron for a target class using backpropagated gradients. Gradients for non-target classes are set to zero, and the non-zero signal is backpropagated to generate Rectified Convolutional Feature Maps (RCFM), which are combined to produce the Grad-CAM localization map [2]. Figure 5 shows the Grad-CAM architecture [21]. To generate this map, Grad-CAM calculated the gradient of the target class score

with respect to the feature map

of the convolutional layer. These gradients were aggregated across spatial dimensions using indices i and j, leading to the computation of significance weights

for each feature map, as follows [20]:

where Z denotes a normalization factor that is equal to the total number of elements in the feature map. The Grad-CAM heatmap

for a specific target class c is created by combining the forward activation maps with a weighted approach, followed by the application of a ReLU function. This technique is specifically crafted to visualize only the features that have a positive impact on the class of interest, which can be obtained as in [20], as follows:

Figure 5.

Grad-CAM (gray-shaded) architecture [21].

4. Experimental Setup

4.1. Datasets

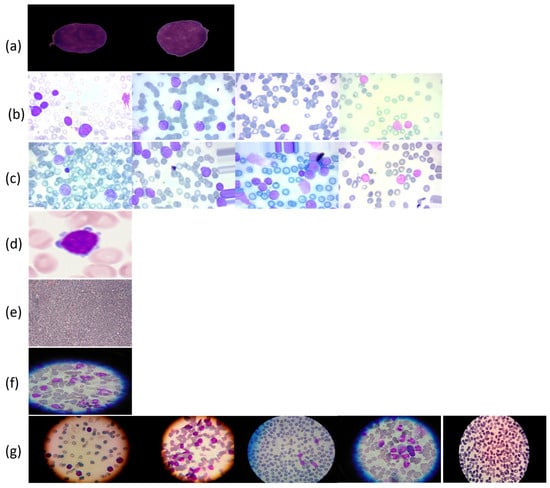

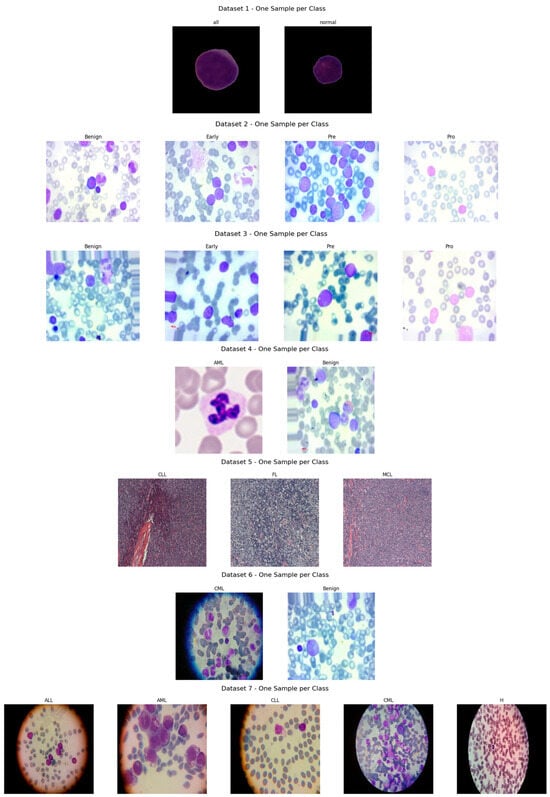

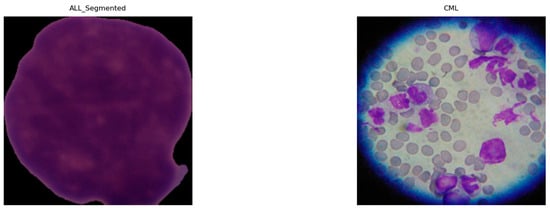

To support the experimental evaluation of leukemia diagnosis, we assembled a benchmark corpus by aggregating seven publicly available datasets sourced from Kaggle and Rabinndata. The consolidated dataset integrated seven open repositories (ALL_IDB1–2, C_NMC_Leukemia, MLL, Raabin-WBC, Peripheral_Blood_Cells, BloodMNIST, and BloodCell_Images) comprising 66,550 images. All sets were curated and harmonized to yield consistent annotations that reflect the morphological variability characteristic of distinct leukemia subtypes. The consolidated benchmark encompassed Acute Lymphoblastic Leukemia (ALL), Acute Myeloid Leukemia (AML), Chronic Lymphocytic Leukemia (CLL), and Chronic Myeloid Leukemia (CML), and included healthy controls for the ALL subset, as illustrated in Figure 6. This breadth of material provided a robust foundation for deep learning-based classification and diagnostic modeling. The integrated collection comprised 15,135 microscopic images covering ALL and healthy cells, as well as 3256 peripheral blood smear (PBS) images labeled as benign or malignant. Malignant ALL cases were further stratified into Early Pre-B, Pre-B, and Pro-B to capture lymphoblast progression. An additional 20,000 ALL images—drawn from a larger archive of approximately 130,000 cancer images—were categorized into benign versus malignant. For AML, we included 10,000 PBS images capturing hallmark myeloid morphology. The CLL component consisted of two subsets totaling 113 images extracted from malignant lymphoma material. The CML portion comprised 623 microscope images acquired via a smartphone camera, providing high-detail morphology for chronic myeloid analysis. Finally, a comprehensive dataset spanning all four leukemia types plus healthy cells contributed 20,000 microscopic images covering ALL, AML, CLL, CML, and H (healthy). The ALL subset was hierarchically annotated to mirror disease trajectory: Benign denoted non-cancerous healthy cells (specific to the ALL collection), Early marked incipient leukemic presentation, Pre indicated pre-stage abnormalities suggestive of progression, and Pro represented advanced-stage leukemia cells. Across the corpus, imaging conditions varied in resolution, magnification, and clinical context. A full specification of sources, class labels, and counts is provided in Table 4.

Figure 6.

Leukemia types and subcategories: (a) ALL: Segmented ALL and healthy samples; (b) B-ALL: Benign, Early, Pre, and Pro stages; (c) ALL: Benign, Early, Pre, and Pro stages; (d) AML; (e) CLL; (f) CML; (g) ALL, AML, CLL, CML, and H (healthy).

Table 4.

Summary of data sources of types of leukemia utilized in this work.

4.2. Data Splitting

To prepare data for the proposed leukemia-diagnosis model, the consolidated corpus was partitioned into training, validation, and test subsets. We first applied an 80/20 split, allocating 80% for model training and the remaining 20% held out for evaluation. The held-out portion was then divided into validation and test sets, yielding 53,239 images for training, 6654 for validation, and 6657 for testing. A stratified procedure was used to preserve class proportions so that the four leukemia categories (ALL, AML, CLL, and CML) were uniformly represented across splits, ensuring reliable and consistent evaluation (Table 5).

Table 5.

Distribution of data across training and testing datasets used in this study.

4.3. Benchmark Baselines

For comparative evaluation, we adopted three baseline configurations as summarized in Table 6. The first baseline follows Ref. [2], which addresses multi-class classification across AML, CLL, and CML using transfer learning with state-of-the-art backbones (e.g., VGG, DenseNet) coupled to conventional classifiers, such as Random Forest and XGBoost. Although that work reported 100% accuracy for a binary setting, those results are excluded from our direct comparisons due to potential overfitting concerns. The second baseline is derived from Ref. [8], which proposed an ensemble of VGG16 and Inception architectures for both binary classification of ALL versus healthy and multi-class classification over AML, CLL, and CML. LIME was employed to interpret predictions and enhance explainability. While 100% accuracy was reported for both tasks, the modest size and limited diversity of the underlying dataset likely influenced these figures, underscoring the need to assess models on larger and more heterogeneous cohorts. The third baseline follows Ref. [7], which performed classification over all four leukemia types—ALL, AML, CLL, and CML—while also incorporating healthy cells for each subtype and leveraging deep transfer learning with Grad-CAM to support interpretability. This breadth renders [7] a particularly relevant benchmark. However, our dataset did not include healthy cells across all subtypes, which constrained strict one-to-one comparability with that baseline.

Table 6.

Comparison of the measures of the proposed model with the related studies.

4.4. Computational Resources and Evaluation Metrics

All experiments were executed on Google Compute Engine with a single NVIDIA A100 GPU (40.0 GB VRAM), 83.5 GB system RAM, and 166.8 GB local disk. To validate the proposed leukemia-diagnosis models, we report three standard measures as in [14]. First, the training objective is categorical cross-entropy, which quantifies the divergence between predicted class probabilities and ground-truth labels. Second, accuracy measures the proportion of correctly classified instances:

Third, the F1-score is the harmonic mean of precision and recall:

where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively [14].

4.5. Dataset Exploration

4.5.1. Samples and Visualizations

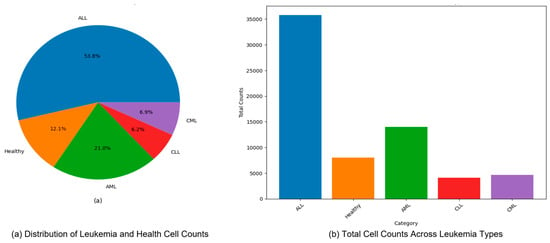

Figure 7 presents exemplar microscopic fields from the consolidated dataset assembled for leukemia diagnosis. The corpus covers four principal leukemia categories—ALL, AML, CLL, and CML—capturing substantial morphological variability to support fine-grained analysis and classification. We extracted representative tiles for each class, including ALL (Segmented ALL and Healthy Segmented), the B-ALL progression subclasses (Benign, Early, Pre, Pro), as well as AML, CLL, and CML. These samples illustrate intra- and inter-class heterogeneity and the presence of stage-dependent cues. Healthy controls for the ALL subset were ensured by explicitly including normal peripheral blood smear images provided within the same public ALL datasets and companion repositories, where samples are independently annotated as healthy by expert hematologists. These healthy images underwent the same preprocessing, quality control, and stratified splitting as ALL samples to ensure fair and consistent comparison. Figure 8 summarizes class representation and highlights pronounced imbalance: (a) shows proportional composition, ALL constitutes 53.8%, followed by AML at 21.0%, CML at 6.9%, CLL at 6.2%, and Healthy at 12.1%; (b) reports absolute counts, with approximately 36,000 samples for ALL, 14,000 for AML, 8000 for Healthy, 4000 for CLL, and 4500 for CML. Together, these views underscore a strong skew toward the ALL cohort and comparatively limited representation of CLL and CML.

Figure 7.

Sample microscopic images of leukemia types (ALL, AML, CLL, and CML) categorized by class and stage.

Figure 8.

Distribution and total cell counts of leukemia types (ALL, AML, CLL, and CML) in the dataset (a) Distribution of Leukemia and Health Cell Counts, (b) Total Cell Counts Across Leukemia Types.

4.5.2. Training Controls to Mitigate over-/Underfitting

We control overfitting and underfitting through a combination of data, model, and training-procedure safeguards:

- Using a stratified 80/10/10 split (train/val/test), selecting all hyperparameters on the validation split only; the test split is used once, at the end, to report final metrics.

- Monitoring validation loss/F1 and stop training when validation loss does not improve for 10 epochs (patience = 10).

- Weight decay (L2 = 1 × 10−4) and dropout (0.3–0.5) in the classification head; label smoothing = 0.1 to reduce over-confidence.

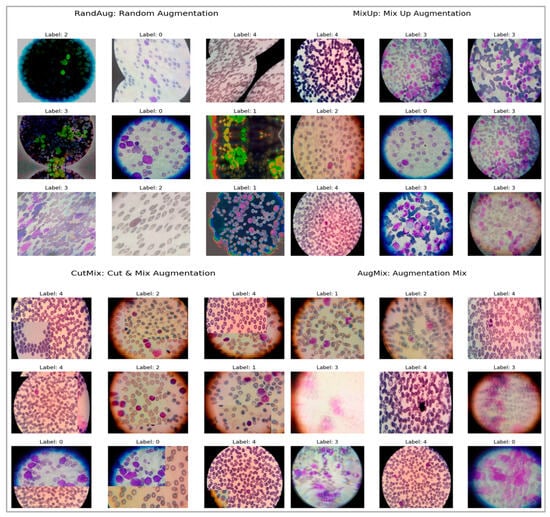

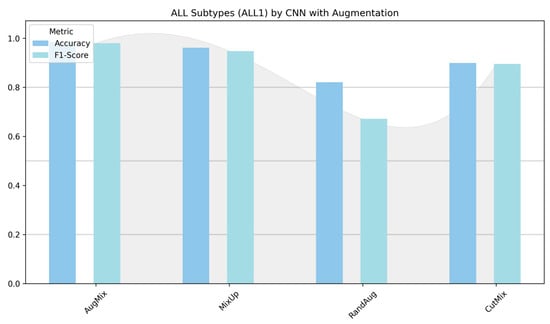

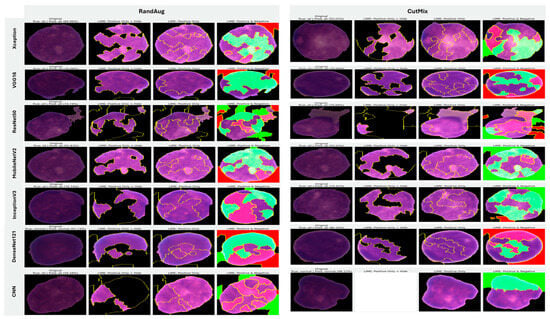

- We evaluated four families (MixUp, AugMix, CutMix, and RandAug). MixUp (α = 0.2) and AugMix (severity = 3, width = 3) consistently minimized the train–val generalization gap and yielded the most clinically coherent explanations; results reported in the paper use those settings. (We retain RandAug/CutMix results for completeness but do not base model selection on them.)

- We tracked training vs. validation curves for loss and F1. Models are accepted only if (i) the validation F1 improves while the training F1 improves (no divergence) and (ii) the generalization gap at the chosen epoch is small (typically ≤ 2–3% for the best models). We report test metrics once for the single model checkpoint with the best validation F1.

4.5.3. Preprocessing and Augmentation

Given the heterogeneous native resolutions in the source datasets (Table 5), we applied a standardized preprocessing pipeline to harmonize inputs for deep models. As shown in Figure 9, all images (ALL, AML, CLL, and CML) were resized to 224 × 224 pixels and intensity-normalized to [0, 1] to stabilize optimization and reduce variance across acquisition settings. To remove non-informative borders common in segmented or misaligned fields, we performed ROI cropping by detecting the largest contour on a binary mask and extracting its bounding box. The binary mask is used to detect the largest contour because it provides a clean, threshold representation of the cell region, enabling the precise localization of the nucleus while minimizing background interference. Figure 10 illustrates examples from ALL (Segmented) and CML, where black margins and microscope artifacts were trimmed, concentrating the field of view on diagnostically relevant cellular morphology and minimizing background noise prior to learning. Because our datasets came from multiple sources (Kaggle and Rabinndata) with varying magnifications and background noise, a standardized denoising and ROI-cropping procedure was implemented before normalization and resizing.

Figure 9.

Exemplary microscopic images of ALL, AML, CLL, and CML following resizing and intensity normalization.

Figure 10.

Examples of cropped ALL (segmented) and CML highlighting focused cellular regions.

To enhance generalization and robustness, we employed a suite of augmentation strategies, exemplified in Figure 11. RandAug reduces policy search to two hyperparameters: the number and magnitude of randomly sampled transforms, which enables effective, dataset-direct augmentation with low tuning overhead [23]. MixUp forms convex combinations of images, including label pairs to encourage locally smooth decision boundaries and mitigate overfitting, albeit at the risk of producing less natural composites for fine localization tasks [24]. CutMix pastes a rectangular patch from one image into another and mixes labels proportionally to the patch area, yielding more realistic spatial structure and improved weakly supervised localization with minimal computational cost [25]. AugMix constructs short stochastic augmentation chains and enforces prediction consistency across their mixtures, substantially improving robustness under corruption and distribution shift while maintaining manageable training overhead [26].

Figure 11.

Samples of augmented ALL, AML, CML, CLL, and Healthy images to address class imbalance.

4.6. Fine-Tuning Procedure

Each CNN backbone (DenseNet-121, VGG16, ResNet50, InceptionV3, Xception, and MobileNetV2) was initialized with ImageNet pretrained weights and adapted through a two-stage transfer-learning strategy. In the first stage, all convolutional layers were frozen, and only the custom classification head (Global Average Pooling → Dropout → Dense → Dropout → Softmax) was trained for 10–15 epochs using the Adam optimizer (learning rate = 1 × 10−3). After stabilization, the final 2–4 convolutional blocks of each backbone were unfrozen and fine-tuned end-to-end with a reduced learning rate (1 × 10−5) and weight decay = 1 × 10−4. Early stopping (patience = 10) and ReduceLROnPlateau (factor = 0.1) were applied, and MixUp/AugMix augmentations were used to enhance robustness. The best validation-F1 checkpoint was finally evaluated on the independent test set.

5. Experimental Results

5.1. Experimental Results from CNNs and Pretrained Models

We evaluated seven architectures (Xception, VGG16, ResNet50, MobileNetV2, InceptionV3, DenseNet121, and a custom CNN) under four augmentation regimes (MixUp, AugMix, CutMix, and RandAug) for binary and multiclass leukemia classification. Models were trained with a batch size of 32 for up to 100 epochs using early stopping and learning-rate scheduling. Performance is summarized in Table 6, Table 7, Table 8 and Table 9, reporting on training/validation/test splits using accuracy, loss, and F1-score. Across tasks and backbones, MixUp was the most consistently effective augmentation, typically delivering the highest F1-scores and lowest (or near-lowest) losses. AugMix was a strong runner-up, especially on MobileNetV2 and the custom CNN. CutMix often produced competitive accuracy, but frequently with higher losses, suggesting less confident predictions. RandAug was least reliable: despite occasionally yielding low losses, it often degraded the F1-score, indicating overconfident yet inaccurate predictions.

Table 7.

Performance of deep learning models on binary ALL vs. Healthy leukemia classification under four augmentation strategies (AugMix, MixUp, RandAug, and CutMix); trained for up to 100 epochs with early stopping.

Table 8.

Performance of deep learning models on three-class lymphoma classification (CLL vs. FL vs. CML) under four augmentation strategies (AugMix, MixUp, RandAug, and CutMix); trained for up to 100 epochs with early stopping.

Table 9.

Performance of deep learning models on ALL-subtype multiclass classification (Benign, Early, Pre, Pro) across two datasets under four augmentation strategies (AugMix, MixUp, RandAug, and CutMix); trained for up to 100 epochs with early stopping.

5.1.1. Experiment (A)—Binary ALL vs. Healthy (Table 7)

MixUp dominated across pretrained models, e.g., Xception (Accuracy 86.10%, F1 83.32%), VGG16 (79.19%, 73.61%), ResNet50 (78.54%, 73.42%), MobileNetV2 (83.24%, 79.37%), InceptionV3 (80.80%, 77.05%), and DenseNet121 (84.94%, 81.59%). The custom CNN was an exception: CutMix yielded the best test results (Accuracy 88.95%, F1 87.75%, Loss 0.3168). AugMix was stable but generally below MixUp; RandAug underperformed (e.g., ResNet50 F1 40.41%; InceptionV3 F1 70.23%). These results can be interpreted because MixUp’s label-space smoothing reliably improved generalization, CutMix’s spatial mixing helped a shallower CNN, while RandAug’s heavy randomness likely disrupted fine cellular cues.

5.1.2. Experiment (B)—Multiclass (CLL, FL, CML) (Table 7)

This lymphoma triplet was the hardest setting. Best results were achieved by DenseNet121 + MixUp (Accuracy 62.10%, F1 62.20%, Loss 0.7763). Other notable peaks include the following: MobileNetV2 + AugMix (Accuracy 59.47%, F1 58.38%, Loss 0.9422; CutMix had the lowest loss 0.9406 but lower F1 48.60%), Xception + MixUp (55.26%, 54.63%), VGG16 + CutMix (49.45%, 49.08%), and ResNet50 + MixUp (48.96%, 42.73%). The custom CNN struggled; AugMix was best for it (Accuracy 45.24%, F1 42.41%), while RandAug showed low loss but very low F1 (20.43%), indicating miscalibration. While no single augmentation dominated across all backbones, deeper networks benefited most from MixUp/CutMix.

5.1.3. Experiment (C)—Multiclass CALL Subtypes (Benign, Early, Pre, Pro) Across Two Datasets (Table 8)

Performance was uniformly high, with MixUp most often on top. The subtype morphology is highly separable. Thus, MixUp generally maximizes performance, while AugMix particularly benefits MobileNetV2 and the CNN. CutMix attains strong accuracy but with a higher loss, indicating less confident predictions.

5.1.4. Experiment (D)—Five-Class: (ALL, AML, CLL, CML, Healthy) (Table 10)

MixUp again led for most models: MobileNetV2 (F1 97.88%, Accuracy 97.90%, Loss 0.0775), DenseNet121 (F1 97.66%, lowest loss 0.0806), VGG16 (F1 97.29%, Loss 0.1099), and InceptionV3 (F1 95.24%). Xception peaked with AugMix (F1 96.30%, Accuracy 96.28%, Loss 0.1183), with MixUp a close second (F1 96.26%). ResNet50 lagged (MixUp F1 82.33%), and the custom CNN showed higher variance (MixUp F1 92.38%; RandAug weakest at 79.79%, Loss 0.4808). These results can be interpreted as MobileNetV2 and DenseNet121 being the most reliable across augmentations; Xception preferred AugMix in this five-class setting. RandAug frequently produced unbalanced or overconfident predictions.

Table 10.

Performance of deep learning models on five-class leukemia classification (ALL, AML, CLL, CML, Healthy) under four augmentation strategies (AugMix, MixUp, RandAug, and CutMix); trained for up to 100 epochs with early stopping.

Table 10.

Performance of deep learning models on five-class leukemia classification (ALL, AML, CLL, CML, Healthy) under four augmentation strategies (AugMix, MixUp, RandAug, and CutMix); trained for up to 100 epochs with early stopping.

| Model | Augmentation | Train | Val | Test | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Acc. | Loss | F1 | Acc. | Loss | F1 | Acc. | Loss | F1 | ||

| Xception | AugMix | 0.9045 | 0.2603 | 0.9044 | 0.9637 | 0.1056 | 0.9633 | 0.9628 | 0.1183 | 0.9630 |

| MixUp | 0.9199 | 0.4214 | 0.8737 | 0.9688 | 0.1003 | 0.9687 | 0.9622 | 0.1234 | 0.9626 | |

| RandAug | 0.8280 | 0.4444 | 0.8275 | 0.9304 | 0.1898 | 0.9296 | 0.9310 | 0.1929 | 0.9316 | |

| CutMix | 0.7079 | 0.8873 | 0.6560 | 0.9481 | 0.2301 | 0.9480 | 0.9523 | 0.2355 | 0.9525 | |

| VGG16 | AugMix | 0.8821 | 0.3157 | 0.8824 | 0.9516 | 0.1487 | 0.9510 | 0.9575 | 0.1288 | 0.9579 |

| MixUp | 0.9168 | 0.4464 | 0.8692 | 0.9743 | 0.1065 | 0.9741 | 0.9725 | 0.1099 | 0.9729 | |

| RandAug | 0.8277 | 0.4404 | 0.8268 | 0.9269 | 0.1995 | 0.9257 | 0.9432 | 0.1892 | 0.9430 | |

| CutMix | 0.6885 | 0.9281 | 0.6404 | 0.9466 | 0.2502 | 0.9460 | 0.9438 | 0.2429 | 0.9440 | |

| ResNet50 | AugMix | 0.6714 | 0.8285 | 0.6706 | 0.8029 | 0.5207 | 0.8002 | 0.7855 | 0.5172 | 0.7848 |

| MixUp | 0.7198 | 0.8360 | 0.6892 | 0.8271 | 0.4846 | 0.8264 | 0.8215 | 0.4820 | 0.8233 | |

| RandAug | 0.5595 | 1.0700 | 0.5579 | 0.7324 | 0.7375 | 0.7329 | 0.7105 | 0.7652 | 0.7138 | |

| CutMix | 0.5553 | 1.2279 | 0.5149 | 0.8054 | 0.6240 | 0.8061 | 0.8146 | 0.6363 | 0.8177 | |

| MobileNetV2 | AugMix | 0.9052 | 0.2588 | 0.9056 | 0.9582 | 0.1133 | 0.9576 | 0.9656 | 0.1080 | 0.9652 |

| MixUp | 0.9228 | 0.4107 | 0.8771 | 0.9783 | 0.0823 | 0.9782 | 0.9790 | 0.0775 | 0.9788 | |

| RandAug | 0.8555 | 0.3893 | 0.8553 | 0.9355 | 0.1722 | 0.9352 | 0.9562 | 0.1459 | 0.9564 | |

| CutMix | 0.7187 | 0.8620 | 0.6613 | 0.9607 | 0.1843 | 0.9604 | 0.9590 | 0.1868 | 0.9595 | |

| InceptionV3 | AugMix | 0.8477 | 0.4069 | 0.8481 | 0.9340 | 0.1965 | 0.9337 | 0.9328 | 0.2166 | 0.9335 |

| MixUp | 0.8992 | 0.4942 | 0.8531 | 0.9541 | 0.1495 | 0.9541 | 0.9525 | 0.1668 | 0.9524 | |

| RandAug | 0.7856 | 0.5631 | 0.7844 | 0.8977 | 0.2902 | 0.8976 | 0.8999 | 0.3001 | 0.8999 | |

| CutMix | 0.6845 | 0.9488 | 0.6349 | 0.9360 | 0.2649 | 0.9358 | 0.9302 | 0.2815 | 0.9301 | |

| DenseNet121 | AugMix | 0.9086 | 0.2477 | 0.9080 | 0.9703 | 0.0940 | 0.9702 | 0.9662 | 0.0934 | 0.9665 |

| MixUp | 0.9221 | 0.4169 | 0.8761 | 0.9793 | 0.0743 | 0.9792 | 0.9763 | 0.0806 | 0.9766 | |

| RandAug | 0.8766 | 0.3261 | 0.8755 | 0.9597 | 0.1178 | 0.9594 | 0.9624 | 0.1183 | 0.9628 | |

| CutMix | 0.7027 | 0.8896 | 0.6519 | 0.9551 | 0.2096 | 0.9549 | 0.9563 | 0.2092 | 0.9565 | |

| CNN | AugMix | 0.8451 | 0.4290 | 0.8431 | 0.8745 | 0.3266 | 0.8690 | 0.8761 | 0.3147 | 0.8772 |

| MixUp | 0.9072 | 0.4809 | 0.8544 | 0.8846 | 0.3279 | 0.8833 | 0.9251 | 0.2502 | 0.9238 | |

| RandAug | 0.7689 | 0.6108 | 0.7675 | 0.7883 | 0.5145 | 0.7816 | 0.7984 | 0.4808 | 0.7979 | |

| CutMix | 0.7038 | 0.9079 | 0.6564 | 0.8241 | 0.4683 | 0.8215 | 0.8699 | 0.3926 | 0.8720 | |

5.2. Comparative Analysis of Model Results for Diagnosis Problems

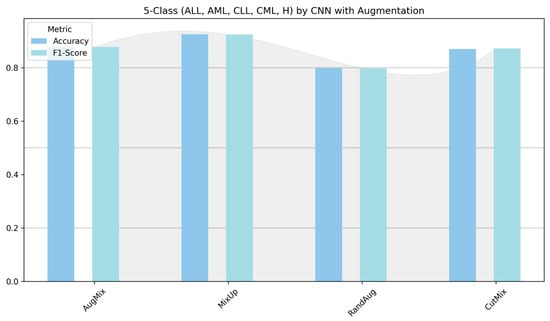

Figure 12 contrasts the custom CNN across two settings: (left) ALL-subtype recognition (Benign, Early, Pre, Pro) and (right) the harder five-class task (ALL, AML, CLL, CML, Healthy). On the subtype dataset, the model reaches near-ceiling performance with AugMix and MixUp, while CutMix is solid but lower, and RandAug depresses F1 markedly. In the five-class experiment, absolute scores drop, reflecting greater inter-class similarity and acquisition variability; nevertheless, MixUp remains the most reliable augmentation (highest accuracy/F1), AugMix is consistently second best, CutMix is competitive but not dominant, and RandAug again lags. The CNN benefits most from augmentations that enforce smooth decision boundaries or consistency (MixUp/AugMix), whereas the heavier stochasticity of RandAug appears to disrupt fine morphological cues, which results in the gap between the easier ALL-subtype task and the more challenging five-class setting.

Figure 12.

Classification performance (accuracy and F1-score) of the custom CNN for ALL subtypes (Benign, Early, Pre, and Pro) versus the five-class task (ALL, AML, CLL, CML, and Healthy).

Augmentations such as MixUp, CutMix, and AugMix enhance model generalization by enforcing decision-boundary smoothness and prediction consistency. In these methods, linear or patch-based interpolations between images and their labels constrain the CNN to produce proportionally mixed outputs, thereby discouraging abrupt changes in class probability across the feature manifold. This mechanism aligns with the Vicinal Risk Minimization (VRM) and Manifold Mixup principles [24], which promote smoother decision surfaces and reduce overfitting. Empirically, our results (Table 6, Table 7, Table 8 and Table 9) show that such augmentations yield the highest validation F1-scores and smallest generalization gaps, confirming their effectiveness in producing robust and consistent leukemia classifiers.

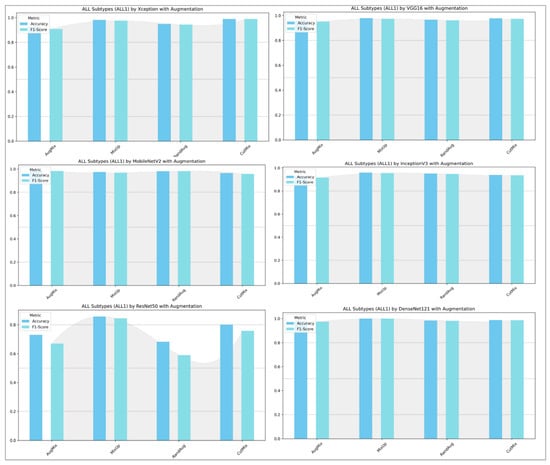

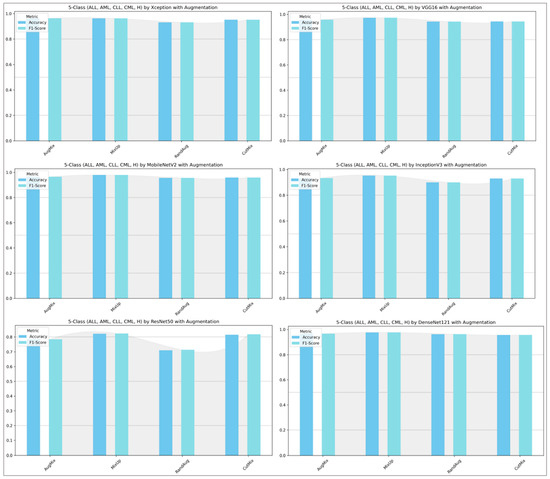

Figure 13 and Figure 14 show that augmentation choice and backbone architecture jointly govern performance. For ALL-subtype recognition (Figure 13), most pretrained models approach ceiling performance, with MixUp typically yielding the highest accuracy/F1 and AugMix close behind; RandAug is consistently weakest, and CutMix is competitive but rarely best. DenseNet121 and MobileNetV2 are the most robust, while ResNet50 exhibits the largest drop and variability. In the harder five-class setting (Figure 14), absolute scores decline slightly across models, yet the pattern persists: MixUp again dominates (especially for MobileNetV2 and DenseNet121), AugMix remains a reliable second, and RandAug trails. Notably, accuracy and F1 move in lockstep, indicating balanced precision–recall, with the biggest architecture gap seen for ResNet50 versus the stronger DenseNet121/MobileNetV2 pair.

Figure 13.

ALL-subtype classification (Benign, Early, Pre, and Pro): performance (accuracy and F1-score) of pretrained models.

Figure 14.

Five-class leukemia classification (ALL, AML, CLL, CML, and Healthy): performance (accuracy and F1-score) of pretrained models.

5.3. Experimental Results from Explainable AI (XAI)

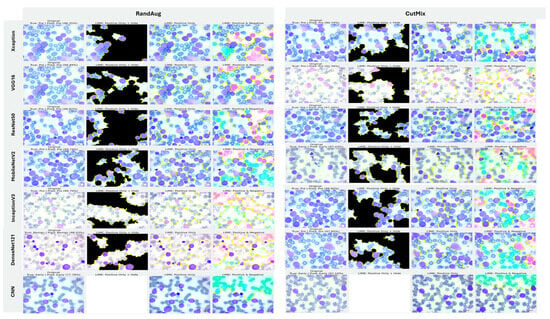

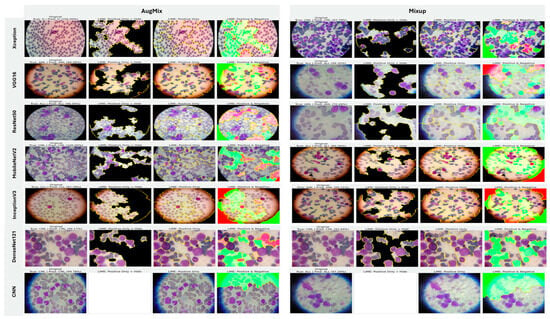

5.3.1. Interpretability of Binary Classifiers Using LIME

In image-based tasks, LIME defines features as interpretable super-pixels obtained by segmenting the image into contiguous regions with similar color and texture, rather than individual pixels. The explainer perturbs these super-pixels by selectively masking or altering them and observes the change in model prediction to estimate each region’s local contribution to the decision. We quantified the transparency of decisions for the three binary tasks (ALL vs. Healthy, AML vs. Benign, and Benign vs. CML) by applying LIME to every trained model under four augmentation regimes (AugMix, MixUp, RandAug, and CutMix). For each model–augmentation pair, we generated three complementary explanation views—Positive Only + Hide, Positive Only, and Positive and Negative—to localize evidence supporting or opposing the predicted class. Figure 15 illustrates how the LIME explainer identifies the image regions that most strongly influence the CNN’s decision in the binary task (Acute Lymphoblastic Leukemia vs. Healthy).

Figure 15.

LIME-based explainability for binary ALL vs. Healthy leukemia classification, showing how the LIME explainer identifies the image regions that most strongly influence the CNN’s decision in the binary task (Acute Lymphoblastic Leukemia vs. Healthy).

- Green-highlighted super-pixels represent areas that positively contribute to the predicted class (supporting evidence).

- Red-highlighted regions denote areas that contradict the prediction or carry lower diagnostic relevance.

In the ALL samples, LIME consistently highlights the nuclear region, chromatin density, and irregular cytoplasmic boundaries—key morphological cues of leukemic cells—while in healthy cells, attention focuses on uniform nuclei with smooth borders. Across tasks, MixUp produced the most clinically coherent attributions and the strongest alignment between predictive performance and explanation quality. In the representative ALL vs. Healthy setting, Xception (F1 = 83.32%) yielded green saliency tightly concentrated on leukemic nuclei with non-relevant background suppressed (red), indicating confident, well-localized reasoning; DenseNet121 (F1 = 81.59%) and InceptionV3 (F1 = 77.05%) showed similar nucleus-centric maps. MobileNetV2 also produced focused explanations under MixUp (F1 = 79.37%) and remained competitive with CutMix (F1 = 76.93%). By contrast, RandAug routinely degraded both accuracy and interpretability. ResNet50 under RandAug (F1 = 40.41%) exhibited diffuse, noisy saliency with poor correspondence to cellular structures; switching to CutMix improved F1 to 72.30% and modestly tightened attributions, though heatmaps remained scattered. VGG16 showed comparatively better localization under RandAug (F1 = 73.95%), but its explanatory fidelity varied more across augmentations than MixUp-driven models. The custom CNN illustrates a performance–explanation tension: while CutMix (F1 = 87.75%) and MixUp (F1 = 80.64%) delivered strong scores, Positive Only + Hide maps were occasionally unreliable (partially or fully blank), suggesting weaker feature localization despite favorable metrics. A similar, albeit milder, pattern appeared with InceptionV3, which achieved solid F1 with MixUp but sometimes produced less sharply focused heatmaps.

Taken together, these results show a clear contrast between augmentation strategies: MixUp (and, secondarily, CutMix) tends to yield anatomically plausible, nucleus-focused attributions that track improvements in F1, whereas RandAug often produces overconfident yet poorly localized explanations. Notably, high accuracy does not invariably guarantee faithful localization (custom CNN, InceptionV3), underscoring the necessity of multi-view LIME diagnostics to validate decision reliability beyond aggregate performance metrics (Figure 15).

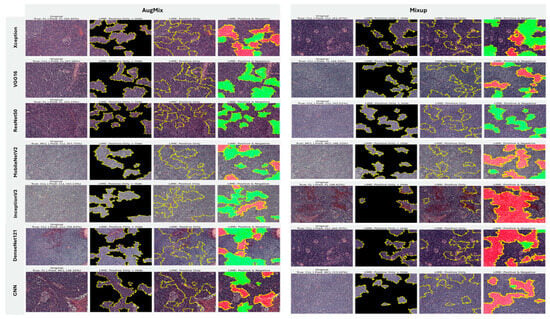

5.3.2. Interpretability of Multi-Class Classifiers Using LIME

Across the three multi-class settings, LIME visualizations (Figure 16, Figure 17 and Figure 18) broadly track model accuracy: clearer, nucleus-centric attributions accompany higher F1, whereas diffuse or unfocused maps coincide with weaker performance. The lymphoma triplet (CLL/FL/CML) is the most challenging—most backbones show low F1 with scattered saliency; the custom CNN is especially vague, while Xception improves under MixUp with class-relevant highlights. By contrast, ALL-subtype recognition yields strong and well-localized explanations for Xception, VGG16, MobileNetV2, DenseNet121, and InceptionV3, with DenseNet121 achieving the most consistent, sharply focused maps; the CNN, despite high scores under AugMix/MixUp, sometimes presents nearly blank “Positive Only + Hide” views, revealing an accuracy–interpretability gap. In the five-class task, DenseNet121 again leads with tight, diagnostically coherent heatmaps, closely followed by MobileNetV2; Xception and VGG16 remain competitive with spatially meaningful attributions, whereas InceptionV3’s maps are reliable under MixUp but less precise under RandAug/AugMix. ResNet50 and the CNN lag, often producing scattered or coarse saliency. The experimental results reveal that MixUp delivers the most faithful and stable explanations across models, AugMix is a solid second, while CutMix and RandAug most often degrade the interpretability.

Figure 16.

LIME visualizations for the three-class CLL–FL–CML task within our leukemia classification pipeline.

Figure 17.

LIME visualizations for ALL-subtype leukemia classification (Benign, Early, Pre, and Pro).

Figure 18.

LIME visualizations for five-class leukemia classification (ALL, AML, CLL, CML, and Healthy).

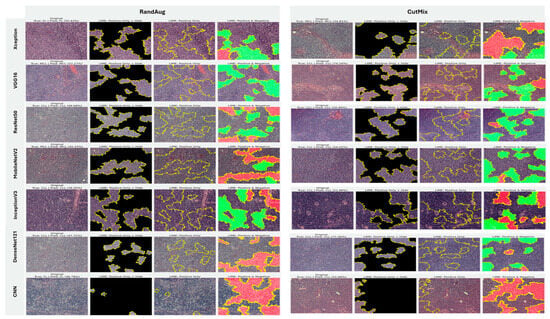

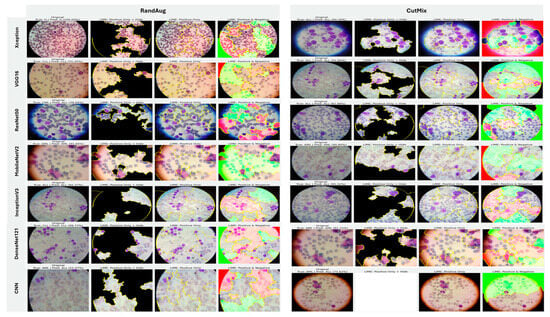

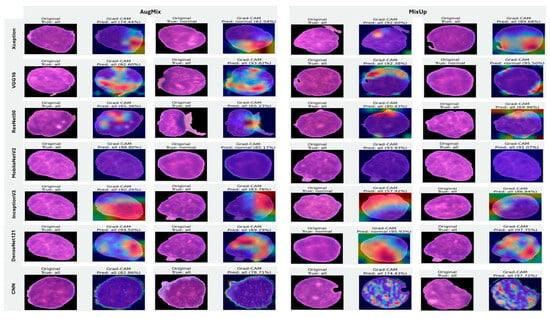

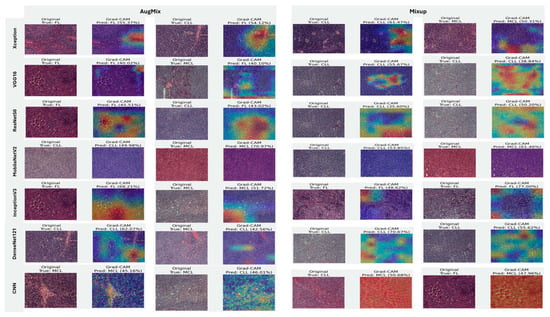

5.3.3. Interpretability of Binary Classifiers Using Grad-CAM

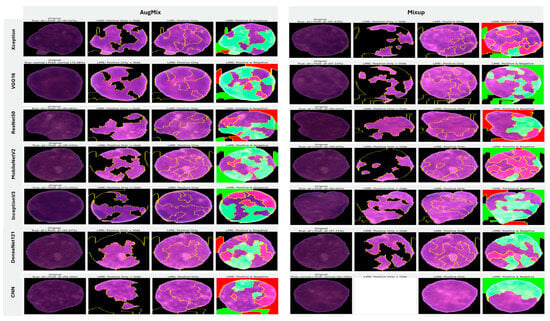

Guided by the preceding experiments, we report Grad-CAM results exclusively for MixUp and AugMix, as these augmentations consistently produced the most faithful and stable explanations across architectures, whereas CutMix and RandAug generally degraded interpretability. Figure 19 presents Grad-CAM visualizations for the ALL vs. Healthy classification, providing further insights into each model’s ability to localize class-relevant regions. With regard to Grad-CAM (ALL vs. Healthy), across backbones, MixUp (right panels) produces cleaner, nucleus-centric saliency than AugMix (left), which is often broader and more diffuse. DenseNet121 and MobileNetV2 show the most clinically plausible maps; the heat strongly concentrates on leukemic nuclei with background largely suppressed, followed by Xception and VGG16, whose MixUp explanations are also well localized. InceptionV3 improves under MixUp but still exhibits occasional spillover into cytoplasm. ResNet50 remains inconsistent, with edge- or background-biased activations, especially under AugMix. The custom CNN yields the least stable attributions (noisy/perimeter-focused) under both regimes, though MixUp narrows attention somewhat. MixUp enhances both confidence and interpretability, while AugMix more commonly dilutes focus on the diagnostically relevant regions.

Figure 19.

Grad-CAM visualizations for binary ALL vs. Healthy leukemia classification. Heatmaps highlight the discriminative regions driving each prediction, enabling verification that models attend to leukemic nuclei and other cell-intrinsic morphology rather than background artifacts.

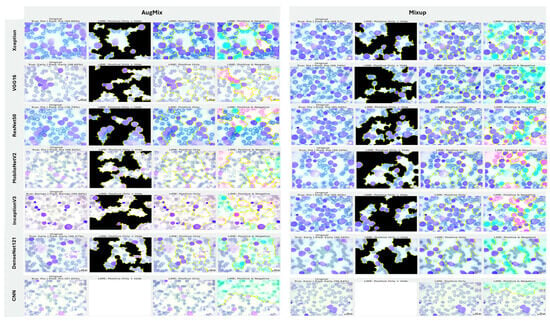

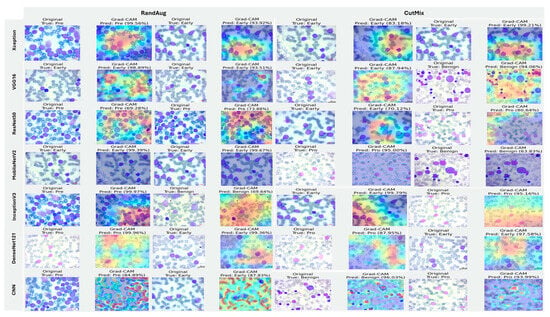

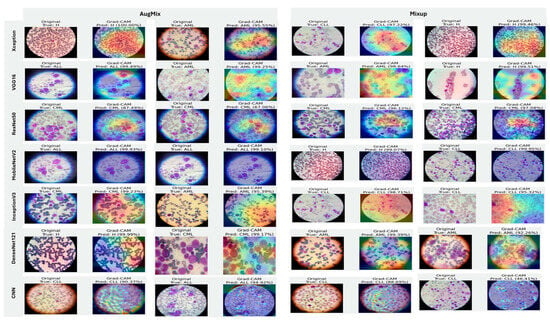

5.3.4. Interpretability of Multi-Class Classifiers Using Grad-CAM

Figure 20, Figure 21 and Figure 22 summarize Grad-CAM evidence across the three multi-class settings and reveal a consistent link between performance and interpretability. In the lymphoma triplet (CLL–FL–CML, shown in Figure 20), saliency is comparatively diffuse and sometimes edge-biased, especially for ResNet50 and the custom CNN—reflecting the task’s higher class similarity; MixUp generally sharpens attention over subtype-relevant tissue regions, with DenseNet121 and InceptionV3 producing the most coherent maps. In contrast, ALL-subtype recognition (Benign, Early, Pre, and Pro; Figure 21) yields compact, nucleus-centric activations across backbones, with MixUp (and closely, AugMix) focusing on diagnostically meaningful structures; DenseNet121 and MobileNetV2 are particularly well localized, whereas ResNet50 and the CNN remain less stable. The five-class setting (ALL, AML, CLL, CML, and Healthy; Figure 22) falls between these extremes: MixUp again delivers the cleanest, class-specific attributions for DenseNet121, MobileNetV2, Xception, and VGG16, while weaker models exhibit broader, less discriminative heatmaps.

Figure 20.

Grad-CAM visualizations for three-class leukemia classification (CLL vs. FL vs. CML).

Figure 21.

Grad-CAM visualizations for ALL-subtype leukemia classification (Benign, Early, Pre, and Pro).

Figure 22.

Grad-CAM visualizations for five-class leukemia classification (ALL, AML, CLL, CML, and Healthy). Saliency overlays identify class-specific cellular structures across heterogeneous samples.

Across tasks, DenseNet121 and MobileNetV2 are the most reliable, achieving state-of-the-art accuracy with the strongest, nucleus-centric explanations. DenseNet121 attains perfect F1 on the ALL-subtype dataset and ~97.7% F1 on the five-class problem, while MobileNetV2 reaches ~97.9% accuracy/F1 in the same five-class setting; in both cases, LIME and Grad-CAM concentrate on leukemic nuclei and other cell-intrinsic structures, indicating faithful attribution.

5.4. Comparison of Baseline Methods and the Proposed Model

Compared with prior XAI baselines, our system attains high accuracy on a larger and harder benchmark while providing richer, corroborated interpretability. Using 20,000 images spanning five classes (ALL, AML, CLL, CML, Healthy), our MobileNetV2-based model with LIME and Grad-CAM achieves 97.9% accuracy, as can be seen in Table 11. In contrast, [2] reported 100% on ALL and 97.08% on (AML, CLL, CML) using 889 ALL-IDB images plus a small private set and no XAI; results that are impressive but plausibly inflated by limited data and the absence of a Healthy class.

Table 11.

Comparison of leukemia diagnostic models with XAI integration.

Baselines with XAI on smaller corpora underperform: 84% with VGG16 + SVM and Grad-CAM on 1250 AIIMS Patna images in [7], and 83.33% on ALL (ALL-IDB) using LIME in [8], with a reported 100% on a private multi-class set that again likely reflects dataset constraints. Our model matches or exceeds the multi-class performance from [2] while substantially outperforming [7] and [8] on public data, and crucially delivers complementary, cell-centric explanations (LIME + Grad-CAM) that validate attention to leukemic nuclei and support clinical trust across a far more diverse dataset.

6. Conclusions and Future Work

This study demonstrates the transformative potential of deep learning for leukemia diagnostics by enabling early, accurate, and automated classification of hematologic subtypes from microscopy. We engineered a CNN and XAI framework that adapts powerful pretrained backbones, namely DenseNet-121, MobileNetV2, VGG16, InceptionV3, ResNet50, and Xception, to classify multiple leukemia types (ALL, AML, CLL, CML) and healthy cells using standardized, ROI-focused images. Comprehensive evaluations across binary and multiclass settings show state-of-the-art performance: on the five-class task, MobileNetV2 reached 97.9% F1, while DenseNet-121 achieved ~97.66% F1. The lymphoma triplet (CLL vs. FL vs. CML) remained the most challenging, where DenseNet-121 attained the best scores (62.1% accuracy/62.2% F1). In binary settings, outcomes depended on the model and setup: the custom CNN achieved 88.95% accuracy on ALL vs. Healthy. Crucially, integrating XAI mitigated model opacity. LIME and Grad-CAM supplied complementary evidence maps that aligned predictions with cell-intrinsic morphology. DenseNet-121, InceptionV3, and Xception produced the most consistently nucleus-centric Grad-CAM saliency, whereas MobileNetV2 showed mixed Grad-CAM heatmaps, underscoring that high accuracy does not always guarantee faithful localization. Our approach matched or exceeded prior non-XAI baselines and substantially outperformed XAI baselines trained on smaller corpora, while offering stronger, corroborated interpretability.

The work has some limitations. The corpus is class-imbalanced (over-representation of ALL and limited CLL/CML), heterogeneous in acquisition (stains, magnification, devices), and lacks healthy controls for all subtypes. Explanations were assessed with post hoc methods that provide no formal guarantees of faithfulness. Prospective clinical validation and workflow integration were beyond scope.

Future work will (i) expand to multi-center, class-balanced cohorts with standardized staining and metadata to reduce domain shift; (ii) explore self-supervised and foundation-model pretraining, stain/style normalization, domain generalization, and calibration/uncertainty quantification (e.g., conformal risk control) to improve robustness; (iii) adopt hierarchical and multi-instance learning to model patient-level decisions from fields of view and whole-slide images; (iv) enrich explainability with complementary techniques (Integrated Gradients, SHAP, counterfactuals) and quantitative faithfulness tests, coupled with user studies of pathologist trust and decision impact; (v) implement source-disjoint and patient-level splits to eliminate potential data leakage; (vi) conduct statistical significance testing for comparative results across architectures and augmentations and evaluate deployment aspects, such as runtime constraints, on-device inference, and continuous learning/active labeling. Collectively, these directions aim to translate accurate, interpretable leukemia AI from retrospective benchmarks to prospective, real-world clinical settings.

Author Contributions

Conceptualization, S.M.A. and S.H.A.; methodology, S.M.A. and S.H.A.; software, S.H.A.; validation, S.M.A.; formal analysis, S.M.A. and S.H.A.; investigation, S.H.A.; resources, S.M.A.; data curation, S.H.A.; writing—original draft preparation, S.H.A.; writing—review and editing, S.M.A.; visualization, S.M.A. and S.H.A.; supervision, S.M.A.; project administration, S.M.A.; funding acquisition, S.M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Taif University, Saudi Arabia, Project No. (TU-DSPP-2025-55).

Institutional Review Board Statement

Not applicable. Because the dataset originates from a public database.

Informed Consent Statement

Not applicable. Because the dataset originates from a public database.

Data Availability Statement

To allow reproducibility by other researchers and improvements on our work, the notebook codes for all models used in the study have been made available at: https://drive.google.com/drive/folders/1QnGBmsojl_zqUcE8rkYgH5vkEQjonnws?usp=sharing (accessed on 1 August 2024).

Acknowledgments

The authors extend their appreciation to Taif University, Saudi Arabia, for supporting this work through project number (TU-DSPP-2025-55).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ansari, S.; Navin, A.H.; Babazadeh Sangar, A.; Vaez Gharamaleki, J.; Danishvar, S. Acute Leukemia Diagnosis Based on Images of Lymphocytes and Monocytes Using Type-II Fuzzy Deep Network. Electronics 2023, 12, 1116. [Google Scholar] [CrossRef]

- Deshpande, N.-M.; Gite, S.; Pradhan, B.; Alamri, A.; Lee, C.-W. A New Method for Diagnosis of Leukemia Utilizing a Hybrid DL-ML Approach for Binary and Multi-Class Classification on a Limited-Sized Database. Comput. Model. Eng. Sci. 2024, 139, 593–631. [Google Scholar] [CrossRef]

- Alzahrani, A.K.; Alsheikhy, A.A.; Shawly, T.; Azzahrani, A.; Said, Y. A Novel Deep Learning Segmentation and Classification Framework for Leukemia Diagnosis. Algorithms 2023, 16, 556. [Google Scholar] [CrossRef]

- Elsayed, B.; Elhadary, M.; Elshoeibi, R.M.; Elshoeibi, A.M.; Badr, A.; Metwally, O.; ElSherif, R.A.; Salem, M.E.; Khadadah, F.; Alshurafa, A.; et al. Deep learning enhances acute lymphoblastic leukemia diagnosis and classification using bone marrow images. Front. Oncol. 2023, 13, 1330977. [Google Scholar] [CrossRef] [PubMed]

- Kaur, M.; AlZubi, A.A.; Jain, A.; Singh, D.; Yadav, V.; Alkhayyat, A. DSCNet: Deep Skip Connections-Based Dense Network for ALL Diagnosis Using Peripheral Blood Smear Images. Diagnostics 2023, 13, 2752. [Google Scholar] [CrossRef]

- Alkhalaf, S.; Alturise, F.; Bahaddad, A.A.; Elnaim, B.M.E.; Shabana, S.; Abdel-Khalek, S.; Mansour, R.F. Adaptive Aquila Optimizer with Explainable Artificial Intelligence-Enabled Cancer Diagnosis on Medical Imaging. Cancers 2023, 15, 1492. [Google Scholar] [CrossRef]

- Abhishek, A.; Jha, R.K.; Sinha, R.; Jha, K. Automated detection and classification of leukemia on a subject-independent test dataset using deep transfer learning supported by Grad-CAM visualization. Biomed. Signal Process. Control 2023, 83, 104722. [Google Scholar] [CrossRef]

- Deshpande, N.M.; Gite, S.; Pradhan, B. Explainable AI for binary and multi-class classification of leukemia using a modified transfer learning ensemble model. Int. J. Smart Sens. Intell. Syst. 2024, 17, 1–20. [Google Scholar] [CrossRef]

- Döhner, H.; Estey, E.; Grimwade, D.; Amadori, S.; Appelbaum, F.R.; Büchner, T.; Dombret, H.; Ebert, B.L.; Fenaux, P.; Larson, R.A.; et al. Diagnosis and management of AML in adults: 2017 ELN recommendations from an international expert panel. Blood 2017, 129, 424–447. [Google Scholar] [CrossRef]

- Mohammed, K.K.; Hassanien, A.E.; Afify, H.M. Refinement of ensemble strategy for acute lymphoblastic leukemia microscopic images using hybrid CNN-GRU-BiLSTM and MSVM classifier. Neural Comput. Appl. 2023, 35, 17415–17427. [Google Scholar] [CrossRef]

- Hosseini, A.; Eshraghi, M.A.; Taami, T.; Sadeghsalehi, H.; Hoseinzadeh, Z.; Ghaderzadeh, M.; Rafiee, M. A mobile application based on efficient lightweight CNN model for classification of B-ALL cancer from non-cancerous cells: A design and implementation study. Inform. Med. Unlocked 2023, 39, 101244. [Google Scholar] [CrossRef]

- Sulaiman, A.; Kaur, S.; Gupta, S.; Alshahrani, H.; Reshan, M.S.A.; Alyami, S.; Shaikh, A. ResRandSVM: Hybrid Approach for Acute Lymphocytic Leukemia Classification in Blood Smear Images. Diagnostics 2023, 13, 2121. [Google Scholar] [CrossRef] [PubMed]

- Mohamed, H.; Elsheref, F.K.; Kamal, S.R. A New Model for Blood Cancer Classification Based on Deep Learning Techniques. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 422–429. [Google Scholar] [CrossRef]

- Diaz Resendiz, J.L.; Ponomaryov, V.; Reyes Reyes, R.; Sadovnychiy, S. Explainable CAD System for Classification of Acute Lymphoblastic Leukemia Based on a Robust White Blood Cell Segmentation. Cancers 2023, 15, 3376. [Google Scholar] [CrossRef]

- Velázquez-Arreola, J.D.; Sánchez-Medel, N.I.; Zarraga-Vargas, O.A.; Díaz-Hernández, R.; Altamirano-Robles, L. Evaluation of Heat Map Methods Using Cell Morphology for Classifying Acute Lymphoblastic Leukemia Cells. Comput. Sist. 2024, 28, 221–231. [Google Scholar] [CrossRef]

- Genovese, A.; Piuri, V.; Scotti, F. ALL-IDB Patches: Whole Slide Imaging for Acute Lymphoblastic Leukemia Detection Using Deep Learning. In Proceedings of the 2023 IEEE International Conference on Acoustics, Speech, and Signal Processing Workshops, ICASSPW, Rhodes Island, Greece, 4–10 June 2023. [Google Scholar]

- Himel, M.; Hasan, M.A.; Suzuki, T.; Shin, J. Feature Fusion Based Ensemble of Deep Networks for Acute Leukemia Diagnosis Using Microscopic Smear Images. IEEE Access 2024, 12, 54758–54771. [Google Scholar] [CrossRef]

- Raza, M.U.; Nawaz, A.; Liu, X.T.; Chen, Z.Z.; Leung, V.C.M.; Chen, J.; Li, J.Q. Visual Explanations: Activation-based Acute Lymphoblastic Leukemia Cell Classification. In Proceedings of the 2023 IEEE International Conference on Development and Learning, ICDL, Macau, China, 9–11 November 2023; pp. 61–66. [Google Scholar]

- Ghnemat, R.; Alodibat, S.; Abu Al-Haija, Q. Explainable Artificial Intelligence (XAI) for Deep Learning Based Medical Imaging Classification. J. Imaging 2023, 9, 177. [Google Scholar] [CrossRef]

- Muhammad, D.; Bendechache, M. Unveiling the black box: A systematic review of Explainable Artificial Intelligence in medical image analysis. Comput. Struct. Biotechnol. J. 2024, 24, 542–560. [Google Scholar] [CrossRef]

- Mohamed, E.; Sirlantzis, K.; Howells, G. A review of visualisation-as-explanation techniques for convolutional neural networks and their evaluation. Displays 2022, 73, 102239. [Google Scholar] [CrossRef]

- Haque, R.; Al Sakib, A.; Hossain, M.F.; Islam, F.; Ibne Aziz, F.; Ahmed, M.R.; Kannan, S.; Rohan, A.; Hasan, M.J. Advancing Early Leukemia Diagnostics: A Comprehensive Study Incorporating Image Processing and Transfer Learning. BioMedInformatics 2024, 4, 966–991. [Google Scholar] [CrossRef]

- Cubuk, E.D.; Zoph, B.; Shlens, J.; Le, Q.V. RandAugment: Practical automated data augmentation with a reduced search space. arXiv 2019, arXiv:1909.13719. [Google Scholar] [CrossRef]

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond Empirical Risk Minimization. arXiv 2018, arXiv:1710.09412. [Google Scholar] [CrossRef]

- Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features. arXiv 2019, arXiv:1905.04899. [Google Scholar] [CrossRef]

- Hendrycks, D.; Mu, N.; Cubuk, E.D.; Zoph, B.; Gilmer, J.; Lakshminarayanan, B. AugMix: A Simple Data Processing Method to Improve Robustness and Uncertainty. arXiv 2020, arXiv:1912.02781. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.