Abstract

Background and Objectives: The availability of multiple dental implant systems makes it difficult for the treating dentist to identify and classify the implant in case of inaccessibility or loss of previous records. Artificial intelligence (AI) is reported to have a high success rate in medical image classification and is effectively used in this area. Studies have reported improved implant classification and identification accuracy when AI is used with trained dental professionals. This systematic review aims to analyze various studies discussing the accuracy of AI tools in implant identification and classification. Methods: The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were followed, and the study was registered with the International Prospective Register of Systematic Reviews (PROSPERO). The focused PICO question for the current study was “What is the accuracy (outcome) of artificial intelligence tools (Intervention) in detecting and/or classifying the type of dental implant (Participant/population) using X-ray images?” Web of Science, Scopus, MEDLINE-PubMed, and Cochrane were searched systematically to collect the relevant published literature. The search strings were based on the formulated PICO question. The article search was conducted in January 2024 using Boolean operators and truncation. The search was limited to articles published in English in the last 15 years (January 2008 to December 2023). The quality of all the selected articles was critically analyzed using the Quality Assessment of Diagnostic Accuracy Studies (QUADAS-2) tool. Results: Twenty-one articles were selected for qualitative analysis based on predetermined selection criteria. Study characteristics were tabulated in a self-designed table. Out of the 21 studies evaluated, 14 were found to be at risk of bias, with high or unclear risk in one or more domains. The remaining seven studies, however, had a low risk of bias. The overall accuracy of AI models in implant detection and identification ranged from a low of 67% to as high as 98.5%. Most included studies reported mean accuracy levels above 90%. Conclusions: The articles in the present review provide considerable evidence to validate that AI tools have high accuracy in identifying and classifying dental implant systems using 2-dimensional X-ray images. These outcomes are vital for clinical diagnosis and treatment planning by trained dental professionals to enhance patient treatment outcomes.

1. Introduction

Advancements in science and technology have influenced people’s lives in various fields, including dentistry. With the introduction of precise digital machines, dentists can provide high-quality treatment to their patients [1,2]. Various studies have shown that these computer-aided machines help dentists in many ways, from the fabrication of prostheses using CAD/CAM [2,3,4,5] to the use of robots in the treatment of patients [6,7,8]. The introduction of AI has taken dentistry to the next level. AI tools act as supplementary aids that guide dentists’ diagnosis and treatment planning. Artificial intelligence involves developing and training machines on a set of data so that they are capable of decision making and problem solving, mimicking the human brain [9,10,11]. Machine learning (ML), a subset of AI, involves using algorithms that learn from data to perform tasks without explicit human intervention. Deep learning (DL), a subset of ML that includes convolutional neural networks (CNNs), builds neural networks capable of identifying patterns by themselves, which enhances feature identification [11,12,13].

AI functions on two levels. The first level involves training, in which data are used to train the model and set its parameters. The second level is the testing level, in which AI performs its designated task of problem solving or decision making based on the training data. The training data are generally drawn from the pool of collected data of interest [14,15,16,17]. Currently, AI is widely used in dentistry, including caries detection [18,19], periapical lesion detection [20], oral cancer diagnosis [21,22], screening of osteoporosis [23], working length determination during endodontic treatment [24,25], determination of root morphology [26,27], forensic odontology [28], pediatric dentistry [29], and implant dentistry for identification [30,31,32], diagnosis, and treatment planning [33,34]. Studies have shown that, in general, AI helps dentists in diagnosis and treatment planning, as it provides logical reasons that aid in scientific assessment.

Dental implants are commonly used for replacing missing teeth. Studies have reported a high long-term success rate with a ten-year survival rate above 95% [35,36,37,38]. With constantly increasing demands, dental implant manufacturers are developing different implant systems to increase the success rate [39]. With the increase in the use of dental implants, an increase in complications has also been reported. These complications may be related to prosthetic or fixture components or may be biological in nature [40,41,42,43]. To manage these complications, the treating dentist should know the type of implant system used so that he or she can provide the best possible treatment outcome [44]. The data related to the implant system can be retrieved easily from the patient’s previous records. However, in case of inaccessibility or loss of previous records due to any reason, it becomes difficult for the dentist to identify and classify the implant system using the available X-rays and clinical observation [45]. Dentists with vast experience in implantology may also find this task challenging. AI is reported to have a high success rate in medical image classification and is effectively used in this area. AI has been used to manage the problem of implant system identification and classification [30,31,32,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63]. The AI tool is trained using a database of implant images and is later used to identify and classify the implants. Studies have reported improved implant classification and identification accuracy when AI is used with trained dental professionals [51,53,60,62]. This systematic review aims to analyze various studies discussing the accuracy of AI tools in implant identification and classification.

2. Materials and Methods

2.1. Registration

The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [64] were followed to systematize and compile this systematic review. The study was registered with the International Prospective Register of Systematic Reviews (PROSPERO registration No.: CRD42024500347).

2.2. Inclusion and Exclusion Criteria

The details of inclusion and exclusion criteria are given in Table 1.

Table 1.

Selection criteria.

2.3. Exposure and Outcome

In the current study, the exposure was the identification of the type and classification of an implant system using an artificial intelligence tool. The outcome was the accuracy of identification. The focused PICO (Population (P), Intervention (I), Comparison (C), and Outcome (O)) question for the current study was “What is the accuracy (outcome) of artificial intelligence tools (Intervention) in detecting and/or classifying the type of dental implant (Participant/population) using X-ray images?”

- P: Human X-rays with dental implants.

- I: Artificial intelligence tools.

- C: Expert opinions and reference standards.

- O: Accuracy of detection of the dental implant.

2.4. Information Sources and Search Strategy

Four electronic databases (Web of Science, Scopus, MEDLINE-PubMed, and Cochrane) were searched systematically to collect the relevant published literature. The search strings were based on the formulated PICO question. The article search was conducted in January 2024 using the Boolean operators and truncation. The search was limited to articles published in English in the last 15 years (January 2008 to December 2023). Studies performed on animals were not included. Details about the search strategy are mentioned in Table 2. Minor changes were made in the search strings based on the requirements of the database. Grey literature was searched, and bibliographies of selected studies and other review articles were checked manually to ensure that no relevant articles were left.

Table 2.

Strategy and search terms for the electronic databases.
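The way a PICO question is turned into a Boolean search string with truncation can be sketched as follows. This is only an illustration: the term lists are hypothetical placeholders rather than the terms given in Table 2, and the exact quoting and truncation syntax differs between databases.

```python
# Minimal sketch of building a Boolean search string from PICO term groups.
# The terms below are hypothetical examples, NOT the review's actual search terms.

def or_group(terms):
    """Join synonyms with OR, quoting multi-word phrases; '*' marks truncation."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups):
    """Combine the PICO term groups with AND."""
    return " AND ".join(or_group(g) for g in groups)


population = ["dental implant*", "implant system*"]
intervention = ["artificial intelligence", "deep learning", "neural network*"]
outcome = ["accuracy", "classif*", "identif*"]

query = build_query(population, intervention, outcome)
print(query)
```

Synonyms inside a group widen the search (OR), while the groups themselves narrow it (AND), which is why minor database-specific adjustments to the string are usually sufficient.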

2.5. Screening, Selection of Studies, and Data Extraction

Two reviewers, M.S.A. and M.N.A., independently reviewed the titles and abstracts obtained by the electronic search. Duplicate titles were eliminated. The remaining titles were assessed based on the preset selection criteria and the PICO question. Full texts of the selected studies were reviewed independently by two reviewers, R.S.P. and W.I.I., and relevant articles were shortlisted. Any disagreements were resolved by discussion between them and with the third reviewer, M.N.A. Articles that did not meet the selection criteria were discarded, and the reason for exclusion was noted. The inter-examiner agreement was calculated using kappa statistics. W.I.I. created a data extraction chart and collected information related to the author, year of publication, country where the research was conducted, type and name of the algorithm network architecture, architecture depth, number of training epochs, learning rate, type of radiographic image, patient data collection duration, number of implant images evaluated, number and names of implant brands and models evaluated, comparator, test group, and training/validation number and ratio. Accuracy reported by the studies, author’s suggestions, and conclusions were also extracted. These data were checked and verified by a second reviewer (M.S.A.).

2.6. Quality Assessment of Included Studies

The quality of all the selected articles was critically analyzed using the Quality Assessment of Diagnostic Accuracy Studies (QUADAS-2) tool [65]. This tool is used for studies evaluating diagnostic accuracy (Table S1) and assesses both the risk of bias and applicability concerns. The risk-of-bias arm has four domains: patient selection, index test, reference standard, and flow and timing. The applicability-concern arm has three domains: patient selection, index test, and reference standard.

3. Results

3.1. Identification and Screening

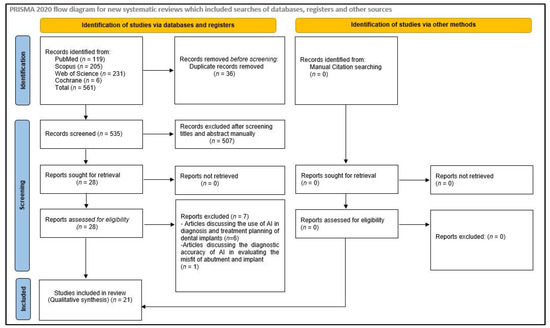

The electronic search of the databases retrieved 561 records. A total of 36 articles were found to be duplicates and were removed, and the titles and abstracts of the remaining 525 articles were reviewed and checked for eligibility based on the inclusion and exclusion criteria. Twenty-eight articles were selected for full-text review. Of these twenty-eight articles, six were rejected because they discussed the use of AI in diagnosis and treatment planning of dental implants, and one was rejected because it discussed the diagnostic accuracy of AI in evaluating the misfit of abutment and implant. Eventually, twenty-one articles were included in the study. No relevant articles meeting the selection criteria were found during the manual search of the bibliographies of the selected studies and other review articles (Figure 1). During the full-text review phase, Cohen’s kappa for the two reviewers (R.S.P. and W.I.I.) was 0.89, indicating excellent agreement.

Figure 1.

Flow chart illustrating the search strategy.
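For readers unfamiliar with Cohen’s kappa, the sketch below shows how the statistic is computed from two reviewers’ include/exclude decisions. The counts passed to the function are hypothetical and chosen only to illustrate the calculation over 28 full-text articles; they are not the reviewers’ actual decisions and are not meant to reproduce the reported value of 0.89.

```python
# Minimal sketch of Cohen's kappa for two reviewers making include/exclude decisions.
# The example counts are hypothetical, not the review's screening data.

def cohens_kappa(both_include, only_r1, only_r2, both_exclude):
    """Kappa = (observed agreement - chance agreement) / (1 - chance agreement)."""
    n = both_include + only_r1 + only_r2 + both_exclude
    observed = (both_include + both_exclude) / n
    r1_include = (both_include + only_r1) / n  # reviewer 1 inclusion rate
    r2_include = (both_include + only_r2) / n  # reviewer 2 inclusion rate
    expected = r1_include * r2_include + (1 - r1_include) * (1 - r2_include)
    return (observed - expected) / (1 - expected)


# Hypothetical table: 20 articles included by both, 1 by reviewer 1 only,
# 0 by reviewer 2 only, and 7 excluded by both.
kappa = cohens_kappa(both_include=20, only_r1=1, only_r2=0, both_exclude=7)
print(round(kappa, 3))
```

Kappa corrects raw agreement for the agreement expected by chance, so a single disagreement among 28 articles still yields a value below the raw agreement of about 96%.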

3.2. Study Characteristics

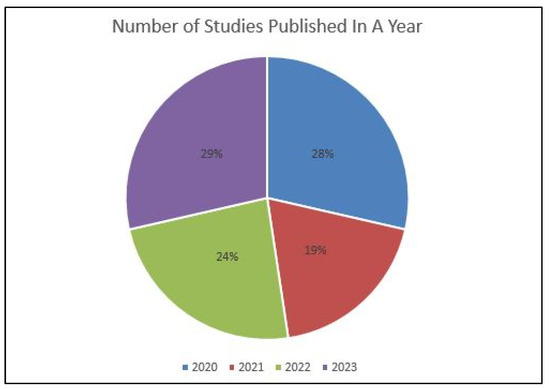

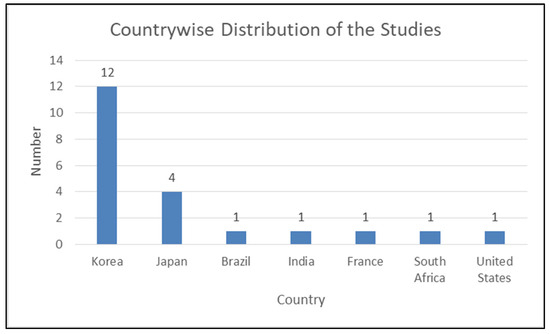

Table 3 displays the characteristics of the studies included in the review. All the included studies were published in the last four years (2020: six; 2021: four; 2022: five; 2023: six) (Figure 2). Of the 21 selected studies, 12 were conducted in the Republic of Korea [31,48,51,53,54,55,57,58,59,60,61,62], four in Japan [30,47,50,52], and one each in Brazil [49], India [56], France [46], South Africa [32], and the United States [63] (Figure 3). Some of the included studies were conducted by the same research groups (Kong et al. [31,61], Park et al. [48,62], Sukegawa et al. [30,50,52], and Lee et al. [51,53,54]). The studies within each group shared common funding sources and grant numbers, and the studies by Kong et al. [31,61] also shared a common research registration number. The number of algorithm networks evaluated for accuracy varied in the selected studies. Ten studies [46,47,48,49,51,53,57,58,60,62] evaluated the accuracy of one algorithm network; three evaluated two algorithm networks [32,59,61]; two tested three algorithm networks [31,54]; one tested four algorithm networks [56]; three tested five algorithm networks [50,52,55]; and one study each tested six [30] and ten [63] algorithm networks. All the included studies evaluated the accuracy of the tested AI tools in implant detection and classification, whereas four studies [51,53,60,62] also compared this to the accuracy of trained dental professionals.

Table 3.

Study characteristics and accuracy results of the included studies.

Figure 2.

Year-wise distribution of published studies.

Figure 3.

Country-wise distribution of published studies.

More than 431,000 implant images were used to train and test the selected AI tools’ implant detection and classification accuracy. Eight studies [30,31,47,50,52,56,60,61] used cropped panoramic X-ray images, and six studies [49,55,57,58,59,63] used cropped periapical X-ray images, whereas another six studies [46,48,51,53,54,62] used both periapical and panoramic implant images. In one study [32], artificially generated X-ray images were used to test AI accuracy. In most of the selected studies, the test group to training group ratio was 1:4. The learning rate of the AI algorithm ranged between 0.0001 and 0.02, the number of training epochs ranged from 50 to 2000, and the architecture depth varied from 3 to 150 layers. Also, the number of implant brands and models identified and classified varied from N = 3 to N = 130.
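The 1:4 test-to-training ratio mentioned above corresponds to holding out 20% of the images for testing. A minimal sketch of such a split is given below; the image identifiers, the fixed seed, and the simple random (non-stratified) shuffle are assumptions made for illustration, and the individual studies may have split their data differently.

```python
# Minimal sketch of a 1:4 test-to-training split (20% test, 80% training).
# Image identifiers and the random, non-stratified split are illustrative assumptions.
import random


def split_train_test(items, test_parts=1, train_parts=4, seed=0):
    """Shuffle the items reproducibly and split them by the given ratio."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = len(shuffled) * test_parts // (test_parts + train_parts)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


image_ids = [f"img_{i:04d}" for i in range(1000)]  # hypothetical image identifiers
train, test = split_train_test(image_ids)
print(len(train), len(test))
```

Keeping the test images strictly separate from the training images is what allows the reported accuracy to reflect performance on unseen radiographs.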

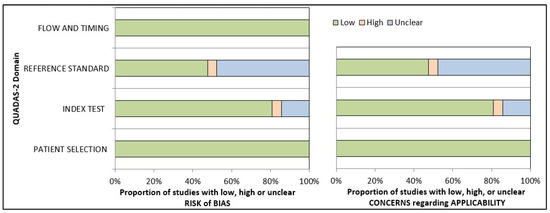

3.3. Quality Assessment of Included Studies

The QUADAS-2 tool was used to assess the risk of bias in diagnostic tests. Out of the 21 studies evaluated, 14 were found to be at risk of bias, with high or unclear risk in one or more domains. The remaining seven studies, however, had a low risk of bias. All the included studies used radiographic image data as input to AI, resulting in a low risk of bias in the patient selection domain across all studies. In the index test domain of the risk-of-bias arm, 80.95% of the studies had a low risk, 14.28% had an unclear risk, and 4.76% had a high risk. In the reference standard domain, 47.62% of the studies had a low risk and 47.62% an unclear risk of bias, while 4.76% had a high risk. Because the data fed to the AI tools are standardized, flow and timing were not considered a source of bias, and all studies (100%) were rated as low risk in this domain. The applicability-concern arm of the QUADAS-2 tool produced similar results (Table S1 and Figure 4).

Figure 4.

Quality assessment summary of risk of bias and applicability concerns for the included studies according to the QUADAS-2 tool.
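The percentages reported for the QUADAS-2 domains can be traced back to study counts out of 21. The sketch below shows this arithmetic; the per-category counts are inferred from the reported percentages (they are not listed in the text), and the reference standard counts assume that the 47.62% figure applies to the low and unclear categories separately.

```python
# Minimal sketch: converting per-domain study counts to percentages of 21 studies.
# Counts are INFERRED from the reported percentages, not taken from Table S1.
TOTAL_STUDIES = 21

index_test = {"low": 17, "unclear": 3, "high": 1}
reference_standard = {"low": 10, "unclear": 10, "high": 1}  # assumed split


def as_percentages(counts, total=TOTAL_STUDIES):
    """Return each category's share of the total, rounded to two decimals."""
    if sum(counts.values()) != total:
        raise ValueError("category counts must add up to the number of studies")
    return {level: round(100 * n / total, 2) for level, n in counts.items()}


index_pct = as_percentages(index_test)
reference_pct = as_percentages(reference_standard)
print(index_pct)
print(reference_pct)
```

Note that 3/21 rounds to 14.29%, whereas the text reports 14.28%, which suggests the published figures were truncated rather than rounded.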

3.4. Accuracy Assessment

The overall accuracy of deep learning algorithms (DLAs) in implant detection and identification ranged from a low of 67% [56] to as high as 98.5% [52]. Most included studies reported mean accuracy levels above 90% [30,46,50,51,52,53,54,55,58,59,63]. The accuracy of the latest finely tuned versions of DLAs was reported to be higher than that of basic DLAs. Six studies [46,48,51,53,54,62] used both periapical and panoramic implant images to test the DLA models. Four studies reported higher accuracy when periapical radiographs were used [51,53,54,62]. One study reported higher accuracy with panoramic radiographs [48], whereas one study did not provide these details [46]. Four studies compared the accuracy of DLAs with that of dental professionals [51,53,60,62]. All four reported higher accuracy for DLAs than for dental professionals. A study by Lee et al. [60] reported that board-certified periodontists assisted by a DLA achieved higher accuracy than the automated DL alone.
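Accuracy in this context is the proportion of implant images assigned to the correct implant system. A minimal sketch of the calculation is shown below; the label names and predictions are hypothetical, and individual studies may additionally report other metrics not covered here.

```python
# Minimal sketch of classification accuracy: correct predictions / all predictions.
# Labels and predictions are hypothetical examples.

def accuracy(true_labels, predicted_labels):
    """Fraction of images whose predicted implant system matches the reference."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("at least one labelled image is required")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


true_labels = ["system_A", "system_A", "system_B", "system_C", "system_B"]
predicted = ["system_A", "system_B", "system_B", "system_C", "system_B"]

acc = accuracy(true_labels, predicted)
print(f"{acc:.1%}")
```

Because accuracy depends on how many classes must be distinguished, values from studies with three implant systems are not directly comparable with those from studies classifying many more.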

4. Discussion

The current systematic review involved all the recently published studies evaluating the accuracy of AI in implant detection and classification [30,31,32,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63]. Overall, the outcome of this review revealed that the application of AI in implant detection and classification is a reliable and accurate method and can help dentists manage cases with no previous data related to the type of implant. With the advancements in AI, the accuracy levels may improve to a great extent.

However, the outcomes of this review should be interpreted with caution because the number of implant models evaluated varied considerably among the included studies, from as few as three [49,51,56,59] to as many as one hundred and thirty [61]. In general, the lower the number of implant models, the higher the accuracy of identification and classification. The sample size also varied widely among the selected studies, from 300 [57] to more than 150,000 [62].

The included studies varied in the annotation process. Periapical (PA) images were used for training and testing the AI tool in six studies [49,55,57,58,59,63] and panoramic images in eight studies [30,31,47,50,52,56,60,61], whereas both PA and panoramic images were used in six studies [46,48,51,53,54,62]. One study used simulated images generated artificially [32]. Among the studies that used both PA and panoramic images, four reported that the accuracy of identification and classification was higher with PA images than with panoramic images [51,53,54,62], whereas one study reported that the accuracy was higher with panoramic images [48].

The dental professionals involved in image selection, cropping, image standardization, training, and validation varied in their areas of practice, from periodontists and prosthodontists to oral and maxillofacial surgeons [30,48,51,53,54,63], whereas the other included studies did not report this information. Two studies validated the collected data with the help of board-certified specialists: oral and maxillofacial radiologists in one [48] and periodontists in the other [53]. To reduce heterogeneity and standardize the outcomes, the validation of the selected X-ray images should be performed by a trained radiologist. The number of training epochs varied from 50 to 2000, and the architecture depth varied from 3 to 150 layers. These parameters can affect the accuracy outcomes of the included studies. The accuracy of identification and classification also depends on the generation of DL architecture used, and the tested algorithms differed among the selected studies.

In their study, Sukegawa et al. [52] trained a CNN algorithm to analyze the implant brand and treatment stage simultaneously. The AI tool was annotated for both parameters. The classification accuracy of the implant treatment stage was reported as 0.996, with a large effect size of 0.818. The accuracy of single-task and multi-task AI tools was found to be comparable. Lee et al. [54] trained and tested the accuracy of AI tools to identify and classify fractured implants. They reported an implant classification accuracy varying from 0.804 to 0.829, with higher accuracy levels when the deep CNN (DCNN) architecture used only PA images for identification.

All the included studies evaluated the accuracy of the tested AI tools in implant detection and classification, whereas four studies, by Lee et al. [51,53,60] and Park et al. [62], also compared this to the accuracy of trained dental professionals. All four studies reported that the accuracy of the DL algorithm was significantly superior to that of humans. The accuracy reported by Park et al. [62] was 82.3% for DL, whereas for humans it varied from 16.8% (dentists not specialized in implantology) to 43.3% (dentists specialized in implantology). Lee et al. [60] reported a mean accuracy of 80.56% for the automated DL algorithm, 63.13% for all participants without DL assistance, and 78.88% for all participants with DL assistance. They reported that the DL algorithm significantly helped in improving the classification accuracy of all dental professionals. Lee et al. [53], in another study, reported an accuracy of 95.4% for DL and between 50.1% and 96.8% for dentists. Another study by Lee et al. [51] reported a similar accuracy rate, with DL at 97.1% and periodontists at 92.5%.
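The effect of DL assistance in the study by Lee et al. [60] can be expressed as a difference in percentage points. The short calculation below uses only the three mean accuracies quoted above; the variable names are illustrative.

```python
# Worked arithmetic on the mean accuracies quoted from Lee et al. [60] (in percent).
automated_dl = 80.56          # automated DL algorithm alone
without_assistance = 63.13    # all participants without DL assistance
with_assistance = 78.88       # all participants with DL assistance

# Gain in accuracy when participants were assisted by DL (percentage points).
assistance_gain = round(with_assistance - without_assistance, 2)

# Remaining gap between assisted participants and the automated DL alone.
gap_to_automated = round(automated_dl - with_assistance, 2)

print(assistance_gain, gap_to_automated)
```

On these mean figures, DL assistance raised participants’ accuracy by 15.75 percentage points, leaving them 1.68 percentage points below the automated algorithm alone.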

Most of the currently reported AI models use two-dimensional X-rays (periapical or panoramic). In contrast, three-dimensional X-rays like cone-beam computed tomography, widely used in implantology, were not evaluated. Also, the studies included have limitations in the type of implant systems evaluated. Thus, there is a need for more studies with a vast database that can include most of the commonly used implant systems and can utilize all forms of radiographic techniques.

The DL algorithm’s identification and classification abilities in all the selected studies were limited to the implant models the authors trained. There is a need to include more implant systems and models and create a vast database to help identify a wider variety of implant models and their characteristics. A comprehensive search strategy and rigorous selection strategy are the strong points of this systematic review. All articles mentioning AI and dental implants were assessed based on pre-set selection criteria, thus ensuring that every relevant article was reviewed.

4.1. Inferences and Future Directions

The field of AI is growing exponentially, and there is vast literature discussing the advancements of AI in the healthcare field. Most of these AI tools focus on identification, diagnosis, and treatment planning and on ways to improve them to help healthcare professionals provide the best possible treatment to their patients. All the included studies used two-dimensional images (periapical or panoramic) to identify and classify the implant systems. Three-dimensional imaging techniques like cone-beam computed tomography (CBCT) are considered a gold-standard imaging technique in dental implant planning and treatment. Thus, there is a need to develop AI tools that can use these 3D images to identify and classify the implant systems. Additionally, with the availability of newer generations of AI tools, there is a need for constant upgrading to increase the accuracy levels of these tools.

4.2. Limitations

The current systematic review has a few limitations. This review included only studies published in English. The search period was limited to the last 15 years (2008–2023). As AI is a recent and advancing field, the authors believed that an earlier search would mainly retrieve studies in which the technology was at an immature stage. Lastly, a meta-analysis was not feasible because of the heterogeneity among the selected studies.

5. Conclusions

To conclude, it can be stated that the articles in the present review provide considerable evidence to validate AI tools as having high accuracy in identifying and classifying dental implant systems using 2-dimensional X-ray images. These outcomes are vital for clinical diagnosis and treatment planning by trained dental professionals to enhance patient treatment outcomes.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/diagnostics14080806/s1, Table S1: Quality Assessment (QUADAS-2) Summary of Risk Bias and Applicability Concerns.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgments

The author would like to thank Mohammed Sultan Al-Ak’hali (M.S.A.), Mohammad N. Alam (M.N.A.), and Reghunathan S. Preethanath (R.S.P.) for their help in the screening and selection of the reviewed articles.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Abad-Coronel, C.; Bravo, M.; Tello, S.; Cornejo, E.; Paredes, Y.; Paltan, C.A.; Fajardo, J.I. Fracture Resistance Comparative Analysis of Milled-Derived vs. 3D-Printed CAD/CAM Materials for Single-Unit Restorations. Polymers 2023, 15, 3773.

- Martín-Ortega, N.; Sallorenzo, A.; Casajús, J.; Cervera, A.; Revilla-León, M.; Gómez-Polo, M. Fracture resistance of additive manufactured and milled implant-supported interim crowns. J. Prosthet. Dent. 2022, 127, 267–274.

- Jain, S.; Sayed, M.E.; Shetty, M.; Alqahtani, S.M.; Al Wadei, M.H.D.; Gupta, S.G.; Othman, A.A.A.; Alshehri, A.H.; Alqarni, H.; Mobarki, A.H.; et al. Physical and mechanical properties of 3D-printed provisional crowns and fixed dental prosthesis resins compared to CAD/CAM milled and conventional provisional resins: A systematic review and meta-analysis. Polymers 2022, 14, 2691.

- Gad, M.M.; Fouda, S.M.; Abualsaud, R.; Alshahrani, F.A.; Al-Thobity, A.M.; Khan, S.Q.; Akhtar, S.; Ateeq, I.S.; Helal, M.A.; Al-Harbi, F.A.; et al. Strength and surface properties of a 3D-printed denture base polymer. J. Prosthodont. 2021, 31, 412–418.

- Al Wadei, M.H.D.; Sayed, M.E.; Jain, S.; Aggarwal, A.; Alqarni, H.; Gupta, S.G.; Alqahtani, S.M.; Alahmari, N.M.; Alshehri, A.H.; Jain, M.; et al. Marginal Adaptation and Internal Fit of 3D-Printed Provisional Crowns and Fixed Dental Prosthesis Resins Compared to CAD/CAM-Milled and Conventional Provisional Resins: A Systematic Review and Meta-Analysis. Coatings 2022, 12, 1777.

- Wang, D.; Wang, L.; Zhang, Y.; Lv, P.; Sun, Y.; Xiao, J. Preliminary study on a miniature laser manipulation robotic device for tooth crown preparation. Int. J. Med. Robot. Comput. Assist. Surg. 2014, 10, 482–494.

- Toosi, A.; Arbabtafti, M.; Richardson, B. Virtual reality haptic simulation of root canal therapy. Appl. Mech. Mater. 2014, 666, 388–392.

- Jain, S.; Sayed, M.E.; Ibraheem, W.I.; Ageeli, A.A.; Gandhi, S.; Jokhadar, H.F.; AlResayes, S.S.; Alqarni, H.; Alshehri, A.H.; Huthan, H.M.; et al. Accuracy Comparison between Robot-Assisted Dental Implant Placement and Static/Dynamic Computer-Assisted Implant Surgery: A Systematic Review and Meta-Analysis of In Vitro Studies. Medicina 2024, 60, 11.

- Bellman, R. Artificial Intelligence: Can Computers Think? Thomson Course Technology; Boyd & Fraser: Boston, MA, USA, 1978; 146p.

- Akst, J. A primer: Artificial intelligence versus neural networks. Inspiring Innovation. The Scientist Exploring Life, 1 May 2019; 65802.

- Kozan, N.M.; Kotsyubynska, Y.Z.; Zelenchuk, G.M. Using the artificial neural networks for identification unknown person. IOSR J. Dent. Med. Sci. 2017, 1, 107–113.

- Khanagar, S.B.; Al-ehaideb, A.; Maganur, P.C.; Vishwanathaiah, S.; Patil, S.; Baeshen, H.A.; Sarode, S.C.; Bhandi, S. Developments, application, and performance of artificial intelligence in dentistry—A systematic review. J. Dent. Sci. 2021, 16, 508–522.

- Sikka, A.; Jain, A. Sex determination of mandible: A morphological and morphometric analysis. Int. J. Contemp. Med. Res. 2016, 3, 1869–1872.

- Kaladhar, D.; Chandana, B.; Kumar, P. Predicting Cancer Survivability Using Classification Algorithms. Int. J. Res. Rev. Comput. Sci. 2011, 2, 340–343.

- Kalappanavar, A.; Sneha, S.; Annigeri, R.G. Artificial intelligence: A dentist’s perspective. Pathol. Surg. 2018, 5, 2–4.

- Krishna, A.B.; Tanveer, A.; Bhagirath, P.V.; Gannepalli, A. Role of artificial intelligence in diagnostic oral pathology-A modern approach. J. Oral Maxillofac. Pathol. 2020, 24, 152–156.

- Katne, T.; Kanaparthi, A.; Gotoor, S.; Muppirala, S.; Devaraju, R.; Gantala, R. Artificial intelligence: Demystifying dentistry—The future and beyond. Int. J. Contemp. Med. Surg. Radiol. 2019, 4, 4.

- Tuzoff, D.V.; Tuzova, L.N.; Bornstein, M.M.; Krasnov, A.S.; Kharchenko, M.A.; Nikolenko, S.I.; Sveshnikov, M.M.; Bednenko, G.B. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac. Radiol. 2019, 48, 20180051.

- Murata, M.; Ariji, Y.; Ohashi, Y.; Kawai, T.; Fukuda, M.; Funakoshi, T.; Kise, Y.; Nozawa, M.; Katsumata, A.; Fujita, H.; et al. Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol. 2019, 35, 301–307.

- Ekert, T.; Krois, J.; Meinhold, L.; Elhennawy, K.; Emara, R.; Golla, T.; Schwendicke, F. Deep Learning for the Radiographic Detection of Apical Lesions. J. Endod. 2019, 45, 917–922.e5.

- Deif, M.A.; Attar, H.; Amer, A.; Elhaty, I.A.; Khosravi, M.R.; Solyman, A.A.A. Diagnosis of Oral Squamous Cell Carcinoma Using Deep Neural Networks and Binary Particle Swarm Optimization on Histopathological Images: An AIoMT Approach. Comput. Intell. Neurosci. 2022, 2022, 6364102. [Google Scholar] [CrossRef]

- Yang, S.Y.; Li, S.H.; Liu, J.L.; Sun, X.Q.; Cen, Y.Y.; Ren, R.Y.; Ying, S.C.; Chen, Y.; Zhao, Z.H.; Liao, W. Histopathology-Based Diagnosis of Oral Squamous Cell Carcinoma Using Deep Learning. J. Dent. Res. 2022, 101, 1321–1327. [Google Scholar] [CrossRef]

- Lee, K.S.; Jung, S.K.; Ryu, J.J.; Shin, S.W.; Choi, J. Evaluation of transfer learning with deep convolutional neural networks for screening osteoporosis in dental panoramic radiographs. J. Clin. Med. 2020, 9, 392. [Google Scholar] [CrossRef]

- Saghiri, M.A.; Garcia-Godoy, F.; Gutmann, J.L.; Lotfi, M.; Asgar, K. The reliability of artificial neural network in locating minor apical foramen: A cadaver study. J. Endod. 2012, 38, 1130–1134. [Google Scholar] [CrossRef] [PubMed]

- Saghiri, M.A.; Asgar, K.; Boukani, K.K.; Lotfi, M.; Aghili, H.; Delvarani, A.; Karamifar, K.; Saghiri, A.M.; Mehrvarzfar, P.; Garcia-Godoy, F. A new approach for locating the minor apical foramen using an artificial neural network. Int. Endod. J. 2012, 45, 257–265. [Google Scholar] [CrossRef] [PubMed]

- Hatvani, J.; Horváth, A.; Michetti, J.; Basarab, A.; Kouamé, D.; Gyöngy, M. Deep learning-based super-resolution applied to dental computed tomography. IEEE Trans. Rad. Plasma Med. Sci. 2019, 3, 120–128. [Google Scholar] [CrossRef]

- Hiraiwa, T.; Ariji, Y.; Fukuda, M.; Kise, Y.; Nakata, K.; Katsumata, A.; Fujita, H.; Ariji, E. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac. Radiol. 2019, 48, 20180218. [Google Scholar] [CrossRef]

- Vila-Blanco, N.; Carreira, M.J.; Varas-Quintana, P.; Balsa-Castro, C.; Tomas, I. Deep neural networks for chronological age estimation from OPG images, IEEE Trans. Med. Imaging 2020, 39, 2374–2384. [Google Scholar] [CrossRef]

- Vishwanathaiah, S.; Fageeh, H.N.; Khanagar, S.B.; Maganur, P.C. Artificial Intelligence Its Uses and Application in Pediatric Dentistry: A Review. Biomedicines 2023, 11, 788.

- Sukegawa, S.; Yoshii, K.; Hara, T.; Tanaka, F.; Yamashita, K.; Kagaya, T.; Nakano, K.; Takabatake, K.; Kawai, H.; Nagatsuka, H.; et al. Is attention branch network effective in classifying dental implants from panoramic radiograph images by deep learning? PLoS ONE 2022, 17, e0269016.

- Kong, H.J.; Eom, S.H.; Yoo, J.Y.; Lee, J.H. Identification of 130 Dental Implant Types Using Ensemble Deep Learning. Int. J. Oral Maxillofac. Implants 2023, 38, 150–156.

- Kohlakala, A.; Coetzer, J.; Bertels, J.; Vandermeulen, D. Deep learning-based dental implant recognition using synthetic X-ray images. Med. Biol. Eng. Comput. 2022, 60, 2951–2968.

- Kurt Bayrakdar, S.; Orhan, K.; Bayrakdar, I.S.; Bilgir, E.; Ezhov, M.; Gusarev, M.; Shumilov, E. A deep learning approach for dental implant planning in cone-beam computed tomography images. BMC Med. Imaging 2021, 21, 86.

- Moufti, M.A.; Trabulsi, N.; Ghousheh, M.; Fattal, T.; Ashira, A.; Danishvar, S. Developing an artificial intelligence solution to autosegment the edentulous mandibular bone for implant planning. Eur. J. Dent. 2023, 17, 1330–1337.

- Howe, M.S.; Keys, W.; Richards, D. Long-term (10-year) dental implant survival: A systematic review and sensitivity meta-analysis. J. Dent. 2019, 84, 9–21.

- Simonis, P.; Dufour, T.; Tenenbaum, H. Long-term implant survival and success: A 10-16-year follow-up of non-submerged dental implants. Clin. Oral Implants Res. 2010, 21, 772.

- Romeo, E.; Lops, D.; Margutti, E.; Ghisolfi, M.; Chiapasco, M.; Vogel, G. Long-term survival and success of oral implants in the treatment of full and partial arches: A 7-year prospective study with the ITI dental implant system. Int. J. Oral Maxillofac. Implants 2004, 19, 247–249.

- Papaspyridakos, P.; Mokti, M.; Chen, C.J.; Benic, G.I.; Gallucci, G.O.; Chronopoulos, V. Implant and prosthodontic survival rates with implant fixed complete dental prostheses in the edentulous mandible after at least 5 years: A systematic review. Clin. Implant. Dent. Relat. Res. 2014, 16, 705–717.

- Jokstad, A.; Braegger, U.; Brunski, J.B.; Carr, A.B.; Naert, I.; Wennerberg, A. Quality of dental implants. Int. Dent. J. 2003, 53, 409–443.

- Sailer, I.; Karasan, D.; Todorovic, A.; Ligoutsikou, M.; Pjetursson, B.E. Prosthetic failures in dental implant therapy. Periodontol. 2000 2022, 88, 130–144.

- Lee, D.W.; Kim, N.H.; Lee, Y.; Oh, Y.A.; Lee, J.H.; You, H.K. Implant fracture failure rate and potential associated risk indicators: An up to 12-year retrospective study of implants in 5124 patients. Clin. Oral Implants Res. 2019, 30, 206–217.

- Tabrizi, R.; Behnia, H.; Taherian, S.; Hesami, N. What are the incidence and factors associated with implant fracture? J. Oral Maxillofac. Surg. 2017, 75, 1866–1872.

- Srinivasan, M.; Meyer, S.; Mombelli, A.; Müller, F. Dental implants in the elderly population: A systematic review and meta-analysis. Clin. Oral Implants Res. 2017, 28, 920–930.

- Al-Wahadni, A.; Barakat, M.S.; Abu Afifeh, K.; Khader, Y. Dentists’ most common practices when selecting an implant system. J. Prosthodont. 2018, 27, 250–259.

- Tyndall, D.A.; Price, J.B.; Tetradis, S.; Ganz, S.D.; Hildebolt, C.; Scarfe, W.C.; American Academy of Oral and Maxillofacial Radiology. Position statement of the American Academy of Oral and Maxillofacial Radiology on selection criteria for the use of radiology in dental implantology with emphasis on cone beam computed tomography. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2012, 113, 817–826.

- Hadj Saïd, M.; Le Roux, M.K.; Catherine, J.H.; Lan, R. Development of an Artificial Intelligence Model to Identify a Dental Implant from a Radiograph. Int. J. Oral Maxillofac. Surg. 2020, 36, 1077–1082.

- Takahashi, T.; Nozaki, K.; Gonda, T.; Mameno, T.; Wada, M.; Ikebe, K. Identification of dental implants using deep learning-pilot study. Int. J. Implant. Dent. 2020, 6, 53.

- Park, W.; Huh, J.K.; Lee, J.H. Automated deep learning for classification of dental implant radiographs using a large multi-center dataset. Sci. Rep. 2023, 13, 4862.

- Da Mata Santos, R.P.; Vieira Oliveira Prado, H.E.; Soares Aranha Neto, I.; Alves de Oliveira, G.A.; Vespasiano Silva, A.I.; Zenóbio, E.G.; Manzi, F.R. Automated Identification of Dental Implants Using Artificial Intelligence. Int. J. Oral Maxillofac. Implants 2021, 36, 918–923.

- Sukegawa, S.; Yoshii, K.; Hara, T.; Yamashita, K.; Nakano, K.; Yamamoto, N.; Nagatsuka, H.; Furuki, Y. Deep Neural Networks for Dental Implant System Classification. Biomolecules 2020, 10, 984.

- Lee, J.H.; Jeong, S.N. Efficacy of deep convolutional neural network algorithm for the identification and classification of dental implant systems, using panoramic and periapical radiographs: A pilot study. Medicine 2020, 99, e20787.

- Sukegawa, S.; Yoshii, K.; Hara, T.; Matsuyama, T.; Yamashita, K.; Nakano, K.; Takabatake, K.; Kawai, H.; Nagatsuka, H.; Furuki, Y. Multi-Task Deep Learning Model for Classification of Dental Implant Brand and Treatment Stage Using Dental Panoramic Radiograph Images. Biomolecules 2021, 11, 815.

- Lee, J.-H.; Kim, Y.-T.; Lee, J.-B.; Jeong, S.-N. A Performance Comparison between Automated Deep Learning and Dental Professionals in Classification of Dental Implant Systems from Dental Imaging: A Multi-Center Study. Diagnostics 2020, 10, 910.

- Lee, D.-W.; Kim, S.-Y.; Jeong, S.-N.; Lee, J.-H. Artificial Intelligence in Fractured Dental Implant Detection and Classification: Evaluation Using Dataset from Two Dental Hospitals. Diagnostics 2021, 11, 233.

- Kim, J.-E.; Nam, N.-E.; Shim, J.-S.; Jung, Y.-H.; Cho, B.-H.; Hwang, J.J. Transfer Learning via Deep Neural Networks for Implant Fixture System Classification Using Periapical Radiographs. J. Clin. Med. 2020, 9, 1117.

- Benakatti, V.B.; Nayakar, R.P.; Anandhalli, M. Machine learning for identification of dental implant systems based on shape—A descriptive study. J. Indian Prosthodont. Soc. 2021, 21, 405–411.

- Jang, W.S.; Kim, S.; Yun, P.S.; Jang, H.S.; Seong, Y.W.; Yang, H.S.; Chang, J.S. Accurate detection for dental implant and peri-implant tissue by transfer learning of faster R-CNN: A diagnostic accuracy study. BMC Oral Health 2020, 22, 591.

- Kong, H.J. Classification of dental implant systems using cloud-based deep learning algorithm: An experimental study. J. Yeungnam Med. Sci. 2023, 40 (Suppl.), S29–S36.

- Kim, H.S.; Ha, E.G.; Kim, Y.H.; Jeon, K.J.; Lee, C.; Han, S.S. Transfer learning in a deep convolutional neural network for implant fixture classification: A pilot study. Imaging Sci. Dent. 2022, 52, 219–224.

- Lee, J.H.; Kim, Y.T.; Lee, J.B.; Jeong, S.N. Deep learning improves implant classification by dental professionals: A multi-center evaluation of accuracy and efficiency. J. Periodontal Implant Sci. 2022, 52, 220–229.

- Kong, H.J.; Yoo, J.Y.; Lee, J.H.; Eom, S.H.; Kim, J.H. Performance evaluation of deep learning models for the classification and identification of dental implants. J. Prosthet. Dent. 2023, in press.

- Park, W.; Schwendicke, F.; Krois, J.; Huh, J.K.; Lee, J.H. Identification of Dental Implant Systems Using a Large-Scale Multicenter Data Set. J. Dent. Res. 2023, 102, 727–733.

- Hsiao, C.Y.; Bai, H.; Ling, H.; Yang, J. Artificial Intelligence in Identifying Dental Implant Systems on Radiographs. Int. J. Periodontics Restor. Dent. 2023, 43, 363–368.

- Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A.; PRISMA-P Group. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 2015, 4, 1.

- Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).