Abstract

Background/Objectives: Age-related macular degeneration (AMD) is a significant cause of vision loss in older adults, often progressing without early noticeable symptoms. Deep learning (DL) models, particularly convolutional neural networks (CNNs), demonstrate potential in accurately diagnosing and classifying AMD using medical imaging technologies like optical coherence tomography (OCT) scans. This study introduces a novel CNN-based DL method for AMD diagnosis, aiming to enhance computational efficiency and classification accuracy. Methods: The proposed method (PM) combines modified Inception modules, Depthwise Squeeze-and-Excitation Blocks, and ConvMixer architecture. Its effectiveness was evaluated on two datasets: a private dataset with 2316 images and the public Noor dataset. Key performance metrics, including accuracy, precision, recall, and F1 score, were calculated to assess the method’s diagnostic performance. Results: On the private dataset, the PM achieved outstanding performance: 97.98% accuracy, 97.95% precision, 97.77% recall, and 97.86% F1 score. When tested on the public Noor dataset, the method reached 100% across all evaluation metrics, outperforming existing DL approaches. Conclusions: These results highlight the promising role of AI-based systems in AMD diagnosis, offering advanced feature extraction capabilities that can potentially enable early detection and intervention, ultimately improving patient care and outcomes. While the proposed model demonstrates promising performance on the datasets tested, the study is limited by the size and diversity of the datasets. Future work will focus on external clinical validation to address these limitations.

1. Introduction

The retina is a light-sensitive tissue lining the inner surface of the eye, responsible for capturing visual information. The macula, a specialized region of the retina, plays a key role in central vision, capturing high-resolution detail, color, and brightness information, which is then transmitted to the brain for interpretation [1]. Age-related macular degeneration (AMD) is a retinal condition characterized by degenerative changes in the choroid and retina within the macular region [2]. According to the World Health Organization, roughly 1.3 billion people worldwide live with some form of vision impairment, and AMD ranks as the third leading cause of vision loss [3,4]. AMD predominantly affects individuals aged 50 and above, and its prevalence rises with age [5]. With an aging population, the number of people with AMD is projected to reach 288 million by 2040, and AMD accounts for approximately 8.7% of all blindness worldwide [6,7].

AMD may be categorized into two forms: exudative, known as wet AMD, and non-exudative, known as dry AMD. Dry AMD is distinguished from wet AMD by the absence of blood or serum leakage. Around 85% to 90% of individuals with AMD have the dry form [8]. The presence of drusen in the retinal pigment epithelium layer is a characteristic feature of AMD, observed in both dry and wet forms. Drusen buildup can impair macular function by disrupting its normal structure, causing progressive vision loss. While individuals with dry AMD may retain sufficient central vision, they often face significant functional limitations, including diminished night vision, fluctuating vision, and difficulty reading due to a narrowed central visual field. Furthermore, a subset of dry AMD cases may progress to wet AMD over time [9]. In individuals with wet AMD, blood or fluid leaking beneath the macula can cause dark spots in central vision. Choroidal neovascularization (CNV), the growth of abnormal blood vessels beneath the macula and retina, is one of the primary causes of wet AMD. These abnormal vessels can cause macular edema and temporary vision impairment, or they can bleed, posing a significant threat to the photoreceptors and causing permanent vision loss. Wet AMD is known for causing rapid and escalating vision deterioration [10,11]. If CNV develops in one eye, the risk for the fellow eye increases, necessitating regular examinations. Consequently, regular retinal screening plays a crucial role in detecting and treating AMD and in preventing further deterioration [3].

Ophthalmologists now have access to a range of imaging technologies for detecting AMD-related features. Among clinical imaging modalities, optical coherence tomography (OCT) and fundus retinal imaging (FRI) are the most commonly used to identify AMD [12]. A limitation of fundus photography is that it provides only a two-dimensional frontal view of the retina, whereas OCT offers higher resolution and cross-sectional imaging, enabling detailed analysis of the retinal layers [13].

OCT is an imaging technology widely used in ophthalmology to diagnose and monitor retinal conditions noninvasively. This rapid imaging method uses low-coherence interferometry to produce detailed cross-sectional images of the retina and optic nerve head, covering the foremost segment of the visual pathway and allowing both qualitative and quantitative evaluation of visual disorders such as optic disc abnormalities [14]. Its ability to generate high-resolution 3D images offers precise insights into the structure and function of the human retina [15]. By imaging the tissues of the posterior segment of the eye in cross-section, OCT enables examination of its individual layers, facilitating the detection and ongoing monitoring of eye disorders and abnormalities [16]. In the case of AMD, a condition that can lead to vision problems and even blindness, OCT has become the primary method for identification and evaluation. Its precise imaging of retinal structures facilitates early detection of abnormalities and aids in planning effective treatment [17,18]. This technology has significantly improved diagnostic accuracy and supports ophthalmologists in providing targeted care for patients with retinal conditions [19].

As the prevalence of AMD increases, AI-based technologies have the potential to assist ophthalmologists by identifying subtle retinal features, thereby enhancing diagnostic efficiency. At the same time, advances in multimodal imaging and the emergence of new biomarkers are expected to increase the volume of data per patient [20]. As retinal scans become more detailed and widely used, the number of OCT images to be processed will grow, making it harder to diagnose and treat AMD patients efficiently. Hence, there is a growing need for computer-assisted diagnostic systems capable of automatically detecting AMD from large collections of retinal OCT images [21].

The advancement of AI-based detection systems opens up new possibilities for AMD diagnosis and treatment [22]. AI-based detection techniques can address this need by facilitating early detection and intervention through scalable, affordable solutions for AMD diagnosis and monitoring [23]. CNN-based deep learning (DL) algorithms have demonstrated strong performance in detecting AMD and classifying its types (wet and dry) [24].

This study introduces a novel CNN-based DL model for AMD detection from OCT images. The model combines the Depthwise Squeeze-and-Excitation Block (DSEB), a modified Inception module, and the ConvMixer architecture. The key contributions of this proposed model (PM) are as follows:

- A new module is presented that combines the parameter savings of depthwise separable convolutions with the multi-scale parallel design of the Inception module. This combined module, termed the modified Inception module, minimizes the number of trainable parameters and thus reduces computational cost.

- Incorporating depthwise separable convolution layers into the Squeeze-and-Excitation block yields the DSEB, a more robust configuration. The DSEB assigns importance weights to low-level features and integrates them with high-level ones, making better use of low-level features while only marginally increasing the computational load. Consequently, the design improves both training effectiveness and network efficiency. Experimental results show that integrating this structure into the proposed architecture improves classification accuracy.

- ConvMixer offers strong feature extraction capabilities, enabling the model to capture intricate patterns and structures within the input images, and is particularly effective at extracting features rich in spatial detail. Because it operates directly on input patches and uses standard convolutions only for the mixing steps, it substantially improves classification accuracy. Moreover, integrating depthwise separable convolution layers and the modified Inception module into the ConvMixer architecture reduces computational cost, making the proposed architecture simpler and less computationally demanding.

- Comprehensive experiments were conducted on a private AMD dataset of 2316 images to analyze the classification performance of the PM, which achieved an accuracy of 97.98%, precision of 97.95%, recall of 97.77%, and F1 score of 97.86%. In addition, experiments on the public (Noor) AMD dataset yielded 100% on all evaluation metrics. Comparisons with other DL models show that the PM outperforms them.

The remainder of the paper is organized as follows: Section 2 reviews related work from the existing literature. Section 3 describes the model introduced for AMD classification in this study, providing detailed insights into the DSEB, modified Inception module, and ConvMixer architecture incorporated into the PM. Section 4 describes the AMD datasets used in the experiments, the experimental setup, and the results obtained. Finally, Section 5 presents the conclusions derived from the findings and suggests potential directions for future research.

2. Related Works

DL has significantly advanced medical image classification by enabling more accurate and efficient analysis of medical data. The continued development of DL models, combined with advances in medical imaging technologies, holds significant promise for improving healthcare diagnostics and treatment. DL models rely on representation learning, in which a multi-layer neural network automatically discovers the representations needed for classification, eliminating manual feature engineering and replacing the multi-step pipelines of conventional methods [25]. DL models, particularly CNN-based ones, have shown promising results in classifying AMD from OCT images. Several CNN-based studies on the diagnosis and classification of AMD are summarized in Table 1, Table 2, Table 3, Table 4 and Table 5.

Table 1.

An overview of studies in the literature that use DL-based techniques to classify AMD.

Table 2.

An overview of studies in the literature that use DL-based techniques to classify AMD.

Table 3.

An overview of studies in the literature that use DL-based techniques to classify AMD.

Table 4.

An overview of studies in the literature that use DL-based techniques to classify AMD.

Table 5.

An overview of studies in the literature that use DL-based techniques to classify AMD.

3. Proposed Model (PM)

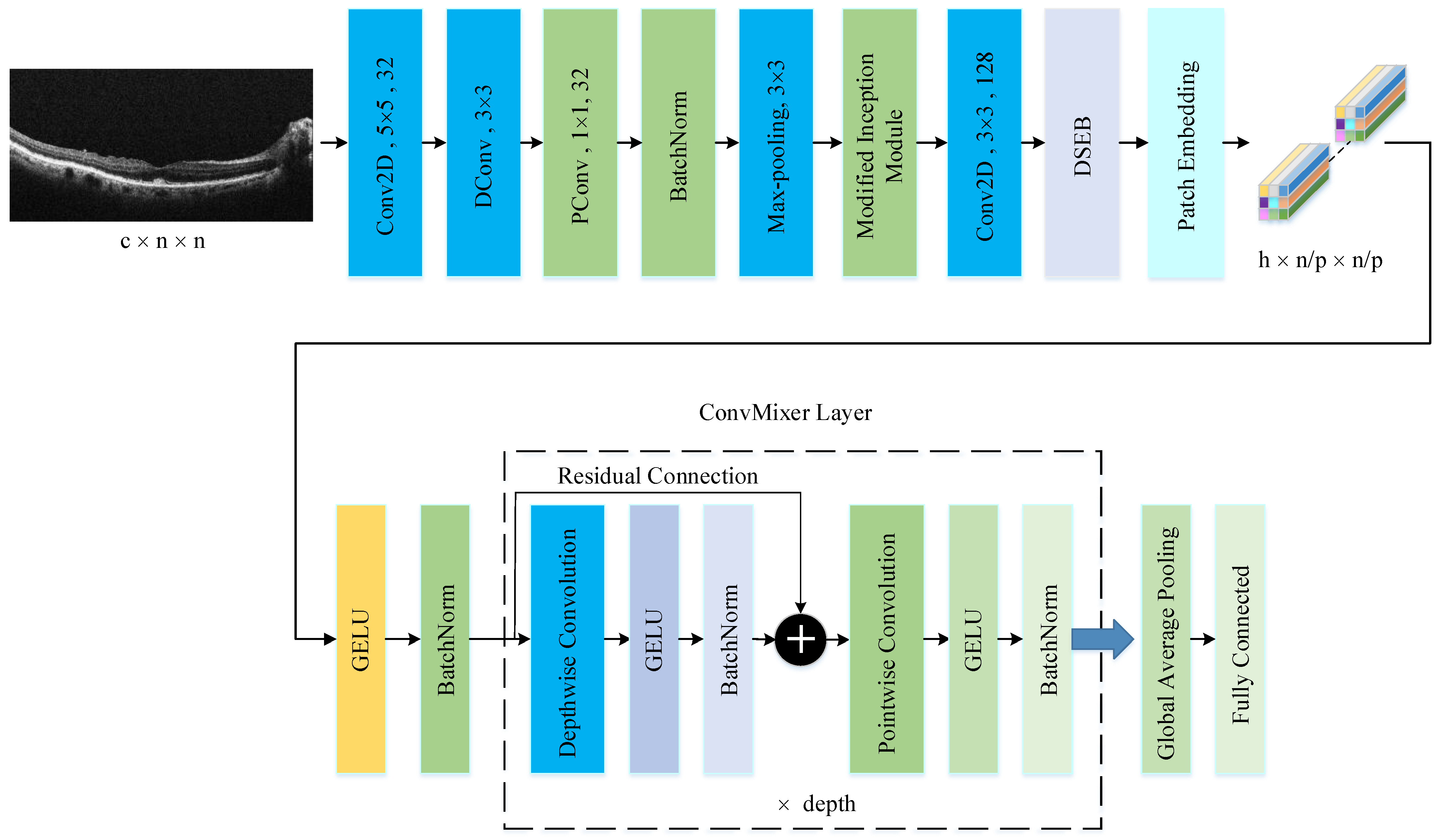

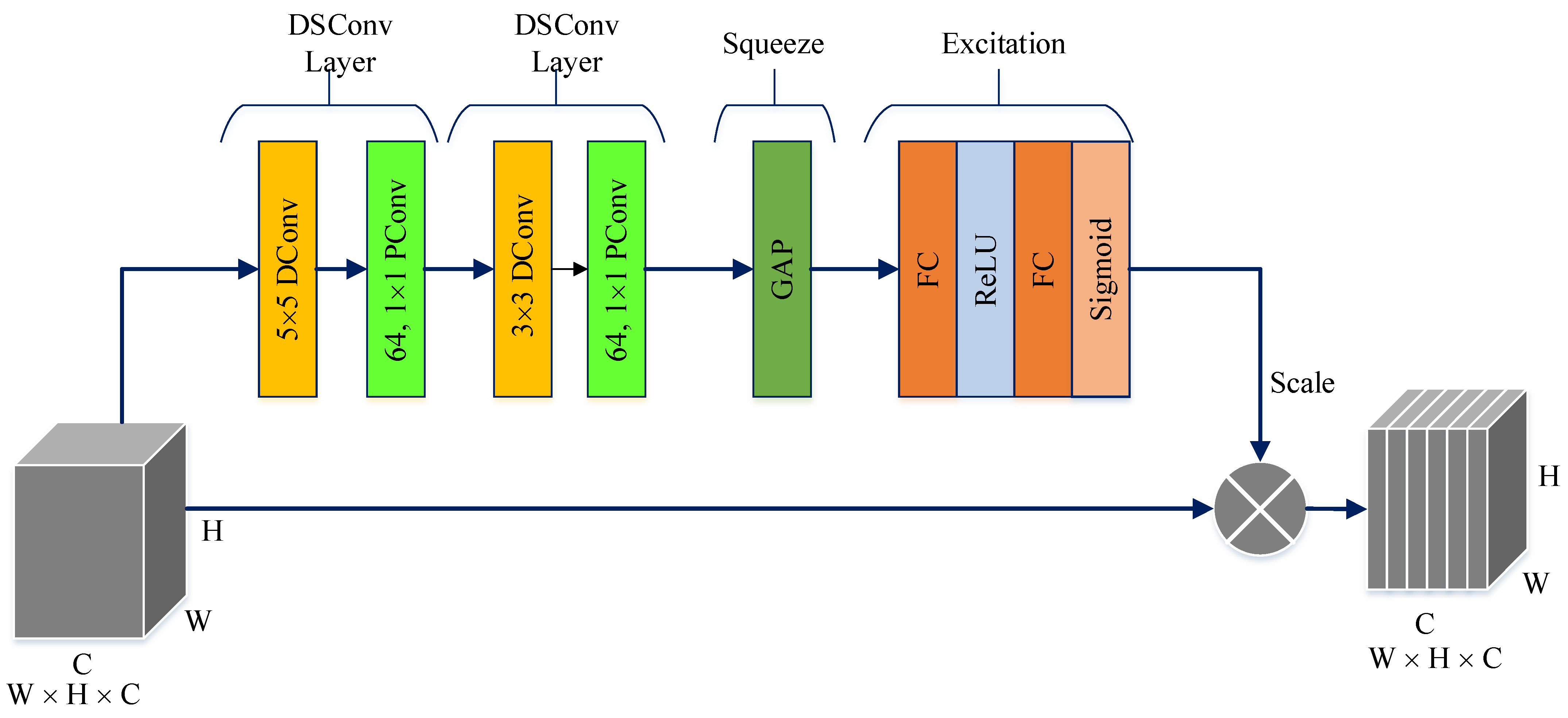

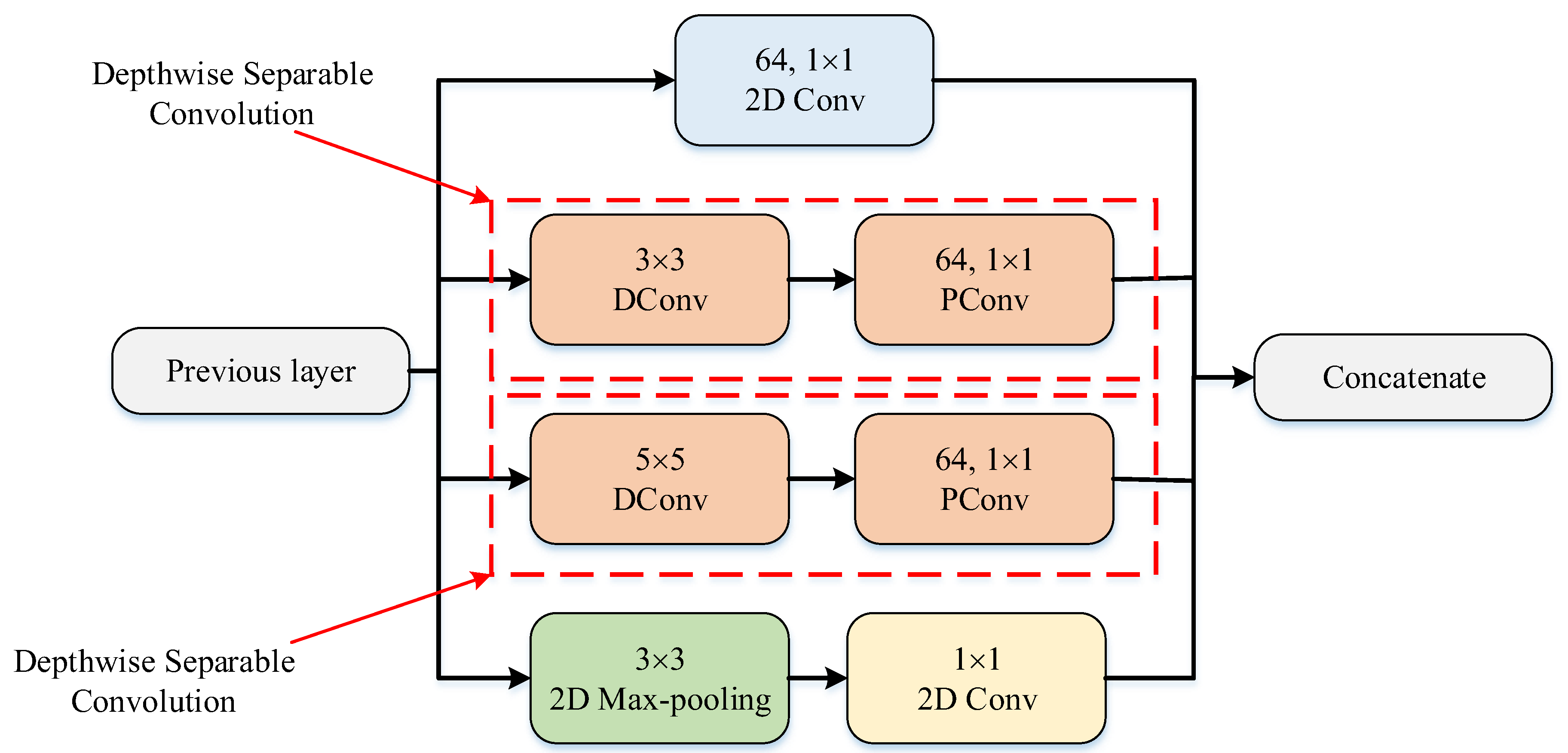

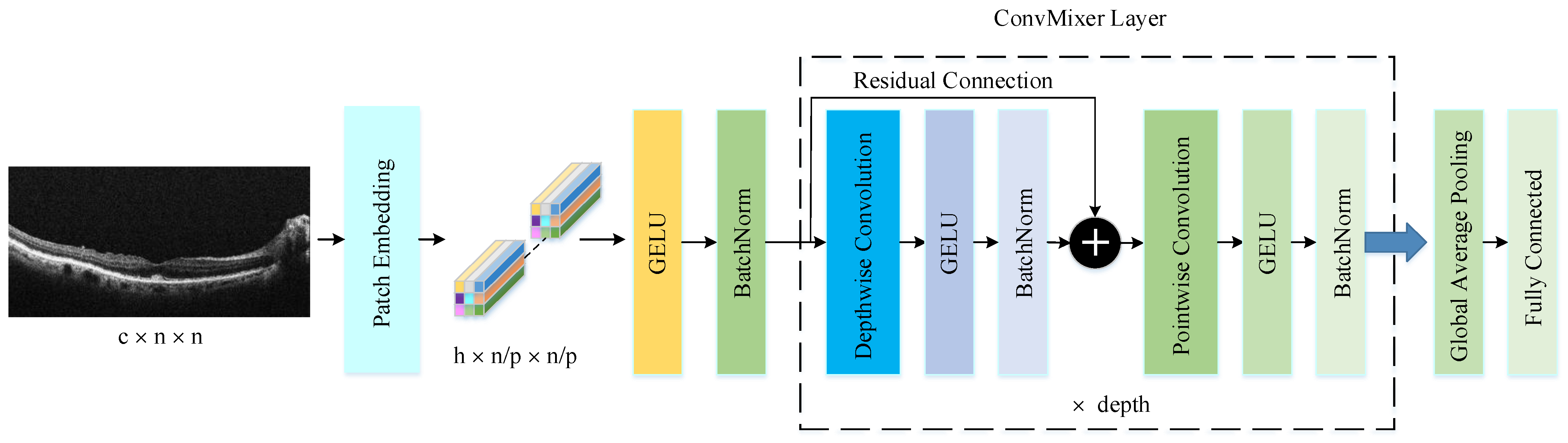

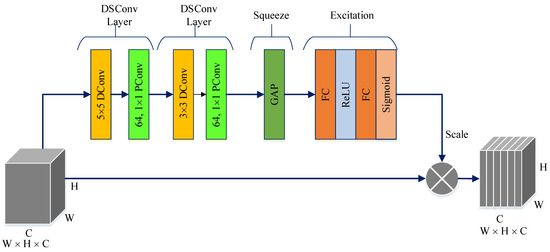

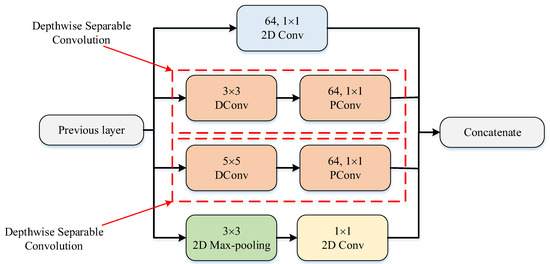

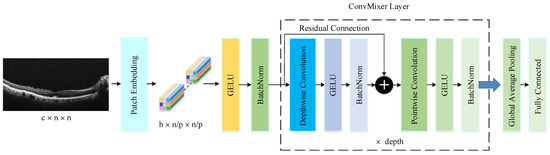

The PM combines the ConvMixer (CM) architecture, a modified Inception module (MIM), and a Depthwise Squeeze-and-Excitation Block (DSEB), as shown in Figure 1 and Figure 2. The DSEB combines depthwise separable convolution (DSConv) with a Squeeze-and-Excitation (SE) block. The PM takes a 224 × 224 × 3 input image. First, a 2D Conv with a 5 × 5 kernel and 32 filters is applied to this image. This is followed, in sequence, by a depthwise convolution (DConv) with a 3 × 3 kernel, a pointwise convolution (PConv) with a 1 × 1 kernel and 32 filters, Batch Normalization (BatchNorm), and 3 × 3 maximum pooling (max-pooling). BatchNorm is used to stabilize and accelerate training. The resulting feature map is then fed into an MIM built from DSConv layers, as illustrated in Figure 3; the MIM is applied once in the PM. Next, a 2D Conv with a 3 × 3 kernel and 128 filters is applied to the resulting feature map, and its output serves as the input to the DSEB. Within the DSEB, two consecutive DSConv operations are applied: the first uses a 5 × 5 DConv and a 1 × 1 PConv with 64 filters, and the second uses a 3 × 3 DConv and a 1 × 1 PConv with 64 filters. The output of the second DSConv is then fed into the SE block, which improves the classification performance of networks with almost no increase in computational cost. The structure of the DSEB is depicted in Figure 2. The feature map at the output of the DSEB is passed to the CM. The CM was designed to investigate whether using patches as the input representation contributes to classification accuracy in image tasks [41]; accordingly, its first layer is a patch embedding layer.
In the first stage of the CM, image patches are extracted through patch embedding. As in the original CM, the embedding is implemented as a standard Conv layer whose kernel size and stride both equal the patch size (p), which is 2 in the PM. This yields the patch representations, which then pass through a Gaussian Error Linear Unit (GELU) activation and a BatchNorm layer. The second stage consists of CM blocks. Each block contains a residual block comprising a DConv, a GELU, and a BatchNorm layer, in which the block input is added to the output of the BatchNorm layer. The result is then processed by a PConv, a GELU, and a BatchNorm layer. The CM block is repeated d (depth) times. In the PM, the CM layer uses a DConv kernel size of 5, 256 PConv filters, and a depth of d = 16. The final stage of the PM is classification: the feature map produced by the second stage is processed by Global Average Pooling (GAP) and fully connected (FC) layers, and the FC output is passed to a softmax layer that produces the class prediction. The components of the PM are described in detail in the following subsections.

Figure 1.

The structure of PM.

Figure 2.

The structure of DSEB.

Figure 3.

The structure of MIM.
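To make the CM hyperparameters concrete, the following Python sketch counts the trainable parameters of the CM stage alone (patch embedding plus d CM blocks). It rests on stated assumptions: every convolution has a bias, only BatchNorm's scale and shift are counted as trainable, the patch embedding outputs h = 256 channels (as in the original CM, where h is the hidden dimension throughout), and the CM input has 64 channels, taken from the 64 PConv filters at the end of the DSEB. The stem, MIM, DSEB, and classification head are excluded, so this is an illustrative estimate, not the parameter count reported in Table 8; the helper names are hypothetical.

```python
def conv2d_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    # Standard k x k convolution: one k x k x c_in filter per output channel
    return k * k * c_in * c_out + (c_out if bias else 0)


def depthwise_params(k: int, channels: int, bias: bool = True) -> int:
    # Depthwise k x k convolution: one k x k filter per channel
    return k * k * channels + (channels if bias else 0)


def batchnorm_trainable(channels: int) -> int:
    # BatchNorm learns a scale (gamma) and a shift (beta) per channel;
    # the moving mean/variance are not trainable and are not counted
    return 2 * channels


def convmixer_params(c_in: int, h: int, depth: int, kernel: int, patch: int) -> int:
    # Stage 1: patch embedding (kernel size = stride = patch) -> GELU -> BatchNorm
    total = conv2d_params(patch, c_in, h) + batchnorm_trainable(h)
    # Stage 2: depth x [residual(DConv -> GELU -> BN) -> PConv -> GELU -> BN]
    # (GELU and the residual addition have no parameters)
    per_block = (depthwise_params(kernel, h) + batchnorm_trainable(h)
                 + conv2d_params(1, h, h) + batchnorm_trainable(h))
    return total + depth * per_block


# Hyperparameters stated for the PM's CM layer; c_in = 64 is an assumption
n = convmixer_params(c_in=64, h=256, depth=16, kernel=5, patch=2)
print(n)  # 1241856
```

Under these assumptions, the 16 pointwise convolutions (65,792 parameters each) account for about 85% of the count, so the hidden dimension h affects model size far more than the depthwise kernel size does.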

3.1. Depthwise Squeeze-and-Excitation Block (DSEB)

The DSEB is an extension and refinement of the traditional SE block, which is often employed in CNN architectures to enhance feature representation. Whereas the SE block operates predominantly across the channels of a convolutional layer, the DSEB introduces DSConvs. These add spatial processing over the height and width dimensions (through the depthwise step) alongside channel mixing (through the pointwise step). By incorporating DSConv, the DSEB aims to capture more intricate patterns and finer spatial information within the features, enabling the network to learn contextually rich representations that better adapt to the spatial characteristics of the data. The DSEB thus combines the channel-wise attention of the SE block with the spatial adaptability of DSConv, improving feature extraction and allowing the network to identify and emphasize important features across both channel and spatial dimensions. As shown in Figure 2, the main components of the DSEB are as follows.

- Depthwise convolution (DConv): DConv performs a separate convolution for each input channel, preserving spatial information while processing each channel independently. This allows it to adapt to spatial characteristics, enabling better feature extraction [42].

- Pointwise convolution (PConv): A PConv follows the DConv. It uses 1 × 1 kernels to merge information across channels through a linear combination of the DConv outputs, changing the depth (number of channels) of the feature map while preserving its spatial dimensions. When it reduces the channel count, it is often called a ‘bottleneck’ layer, which lowers computational complexity [42].

- Squeeze phase: As in the SE block, the DSEB begins with a “squeeze” phase that globally pools features across the spatial dimensions, generating channel-wise statistics that capture the importance of each channel’s information [43].

- Excitation phase: After the squeeze phase, the channel-wise statistics are passed through learned layers to generate attention weights. These weights represent the relevance of each channel to the final representation, enabling the network to focus on informative channels while suppressing less relevant ones [43].

- Scale: The computed attention weights are applied to the original feature map to emphasize important channel-wise information. The scaled features are then fused with the output of the depthwise convolutional layer, enriching the representation with both spatial and channel-wise contextual information [43].
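The squeeze, excitation, and scale steps listed above can be sketched in plain Python. This sketch follows the original SE design, in which the excitation consists of two fully connected layers (a reduction to C/r units with ReLU, then an expansion back to C units with sigmoid). The reduction ratio r is not reported for the DSEB and is assumed here, the weights are random placeholders rather than trained values, and the final fusion with the depthwise output is omitted because its exact form is not specified in the text.

```python
import math
import random


def squeeze(fmap):
    # Global average pooling: one scalar per channel; fmap[c][i][j] = channel c, row i, col j
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def dense(x, weights, bias):
    # Fully connected layer; weights is a list of rows with shape (out, in)
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def excitation(s, w1, b1, w2, b2):
    # FC to C/r units + ReLU, then FC back to C units + sigmoid -> weights in (0, 1)
    z = [max(0.0, v) for v in dense(s, w1, b1)]
    return [1.0 / (1.0 + math.exp(-v)) for v in dense(z, w2, b2)]


def scale(fmap, weights):
    # Multiply every value of channel c by that channel's attention weight
    return [[[v * w for v in row] for row in ch] for ch, w in zip(fmap, weights)]


def se_block(fmap, w1, b1, w2, b2):
    return scale(fmap, excitation(squeeze(fmap), w1, b1, w2, b2))


# Toy input: C = 4 channels of size 3 x 3; the reduction ratio r = 2 is an assumption
random.seed(0)
C, r = 4, 2
fmap = [[[random.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(3)] for _ in range(C)]
w1 = [[random.gauss(0.0, 0.5) for _ in range(C)] for _ in range(C // r)]
b1 = [0.0] * (C // r)
w2 = [[random.gauss(0.0, 0.5) for _ in range(C // r)] for _ in range(C)]
b2 = [0.0] * C

weights = excitation(squeeze(fmap), w1, b1, w2, b2)
out = se_block(fmap, w1, b1, w2, b2)
print([round(w, 3) for w in weights])  # one attention weight per channel
```

Because the sigmoid keeps every weight strictly between 0 and 1, the block can only attenuate channels relative to one another; the spatial layout of each channel is left unchanged.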

The DSEB’s innovation lies in its ability to combine the advantages of SE blocks’ channel-wise attention with the adaptability of DSConvs to capture spatial contexts efficiently. This integration results in improved feature representations, aiding the network in learning more discriminative and relevant features, which can lead to better performance in various computer vision tasks, such as semantic segmentation, object detection, and image classification.

3.2. Modified Inception Module (MIM)

In the Inception module (IM), convolution and max-pooling operations are executed in parallel to extract richer features. DSConv factorizes a standard convolution into a DConv and a PConv: the DConv convolves each input channel independently, and the PConv combines the DConv outputs. Treating the standard convolution as two separate convolutions in this way reduces the computational cost and the number of parameters. Combining DSConv with the IM yields a module with higher accuracy and fewer parameters, termed the MIM. As illustrated in Figure 3, this module replaces the sequential 1 × 1 and 3 × 3 convolution layers of the standard IM with a 3 × 3 DConv and a 1 × 1 PConv, and replaces the sequential 1 × 1 and 5 × 5 convolution layers with a 5 × 5 DConv and a 1 × 1 PConv [44]. The parameter counts of the standard IM and the MIM are listed in Table 6 and Table 7, respectively. The standard IM has 15,488 parameters in total, whereas the MIM has 1,132, a reduction by a factor of approximately 13.7.

Table 6.

Number of parameters of the IM.

Table 7.

Number of parameters of the MIM.
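The parameter saving behind the MIM follows from a simple count. A k × k standard convolution from c_in to c_out channels has k²·c_in·c_out weights, whereas a DSConv has k²·c_in depthwise weights plus c_in·c_out pointwise weights. The sketch below compares the two for a hypothetical 32-to-32-channel layer with biases omitted; the channel widths are illustrative and do not reproduce the branch configuration behind Table 6 and Table 7.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    # One k x k x c_in filter per output channel (biases omitted)
    return k * k * c_in * c_out


def dsconv_params(k: int, c_in: int, c_out: int) -> int:
    # Depthwise: one k x k filter per input channel;
    # pointwise: one 1 x 1 x c_in filter per output channel (biases omitted)
    return k * k * c_in + c_in * c_out


# Hypothetical 32 -> 32 channel layer, for the two kernel sizes used in the MIM branches
for k in (3, 5):
    std, ds = standard_conv_params(k, 32, 32), dsconv_params(k, 32, 32)
    print(f"{k}x{k}: standard={std}, DSConv={ds}, ratio={std / ds:.1f}")
# 3x3: standard=9216, DSConv=1312, ratio=7.0
# 5x5: standard=25600, DSConv=1824, ratio=14.0

# Reduction reported for the full modules in Table 6 and Table 7
print(f"IM/MIM ratio: {15488 / 1132:.1f}")  # IM/MIM ratio: 13.7
```

Algebraically, the ratio is k²·c_out / (k² + c_out), which approaches k² as c_out grows, so the savings are largest in the 5 × 5 branch.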

3.3. ConvMixer Architecture

The ConvMixer (CM) is a simple convolutional architecture proposed as an alternative to the patch-based Vision Transformer (ViT). Although ViT achieves excellent results with self-attention layers, the quadratic runtime of self-attention makes patch embeddings necessary. In contrast, the CM operates directly on input patches and uses only standard convolutions for its mixing steps. It maintains the same resolution and size throughout the network and separates the mixing of the spatial and channel dimensions [41]. In its original evaluation, the CM outperformed classical vision models such as ResNets, as well as some corresponding MLP-Mixer and ViT variants, even with additions intended to make those architectures perform better on smaller datasets. The method is based on the idea of mixing: DConv mixes spatial locations, and PConv mixes channel locations. It is instantiated with four hyperparameters, namely the hidden dimension (h), depth (d), kernel size, and patch size (p), and a given configuration is named after its hidden dimension and depth, as in CM-h/d. The CM also supports variable-sized inputs. These results suggest that patch embeddings themselves may be a critical component of newer architectures such as ViT [41]. As shown in Figure 4, the CM architecture consists of three parts. The first part is a patch embedding layer, implemented as a convolution with h output channels and a kernel size and stride both equal to the patch size p.
This transforms an input of spatial size $n \times n$ into a feature map of dimensions $h \times \frac{n}{p} \times \frac{n}{p}$, where $p$ is the patch size and $h$ is the number of filters in the convolution layer [45]. The patch embedding layer is followed by a GELU activation and a BatchNorm layer. Unlike ReLU, which gates its inputs by their sign, GELU weights each input by its value, giving a smooth activation. The second part consists of the CM block, which is repeated d times, where d is the depth of the architecture. Each CM block comprises a DConv followed by a PConv, with each convolution followed by an activation and a BatchNorm step. The DConv is placed within a residual block, which adds the block's input to the output of the DConv, GELU, and BatchNorm sequence. DConv processes each input channel independently, mixing spatial information within the image, whereas PConv applies a 1 × 1 convolution at each spatial position (that is, each patch) individually, mixing information across channels.
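The difference between ReLU and GELU can be seen numerically. The sketch below uses the exact definition GELU(x) = x·Φ(x), where Φ is the standard normal cumulative distribution function written in terms of `math.erf`; deep learning frameworks also offer a tanh-based approximation, which this sketch does not use.

```python
import math


def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), with Phi the standard normal CDF expressed via erf
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def relu(x: float) -> float:
    # ReLU gates by sign: every negative input becomes 0
    return max(0.0, x)


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.4f}  gelu={gelu(x):+.4f}")
```

For example, GELU(−0.5) ≈ −0.154 whereas ReLU(−0.5) = 0: small negative inputs are attenuated rather than zeroed, and large positive inputs pass almost unchanged (GELU(2) ≈ 1.954).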

Figure 4.

The structure of CM architecture.

After the last of these blocks, GAP is applied to obtain a feature vector, which is passed to a softmax classifier [41]. This is the third part of the CM architecture.
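The three parts of the CM can be summarized compactly. The formulation below follows the original CM description [41], written with the symbols of this section: σ denotes GELU, DConv_k is a k × k depthwise convolution, PConv is a 1 × 1 pointwise convolution, and X is the input to the CM (an image in the original CM, the DSEB output in the PM). For brevity the classification head is written as a single fully connected layer with weights W and bias b, which is our notation; the PM uses FC layers followed by softmax.

```latex
\begin{aligned}
z_0  &= \mathrm{BN}\!\left(\sigma\!\left(\mathrm{Conv}_{p \times p,\ \text{stride } p}(X)\right)\right),
      && z_0 \in \mathbb{R}^{h \times \frac{n}{p} \times \frac{n}{p}} \\
z'_l &= \mathrm{BN}\!\left(\sigma\!\left(\mathrm{DConv}_{k}(z_{l-1})\right)\right) + z_{l-1},
      && l = 1, \dots, d \\
z_l  &= \mathrm{BN}\!\left(\sigma\!\left(\mathrm{PConv}(z'_l)\right)\right),
      && l = 1, \dots, d \\
\hat{y} &= \mathrm{softmax}\!\left(W \, \mathrm{GAP}(z_d) + b\right)
\end{aligned}
```

The residual addition in the second line is well defined only if the depthwise convolution preserves both the channel count h and the spatial size, which holds under "same" padding; this is the equal-resolution-and-size property of the CM noted above. With the PM's settings, p = 2, k = 5, h = 256, and d = 16.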

4. Experimental Studies

4.1. AMD Datasets

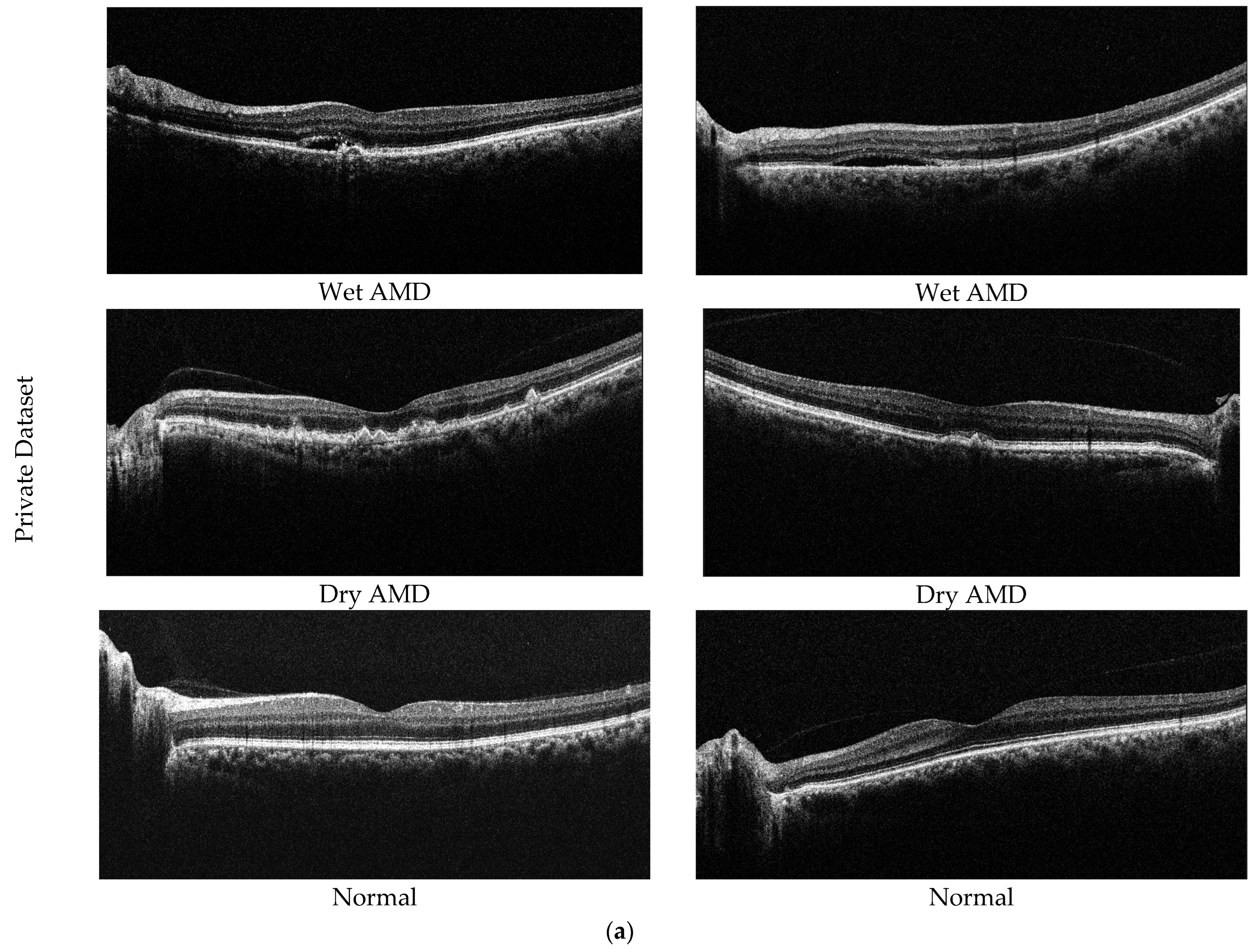

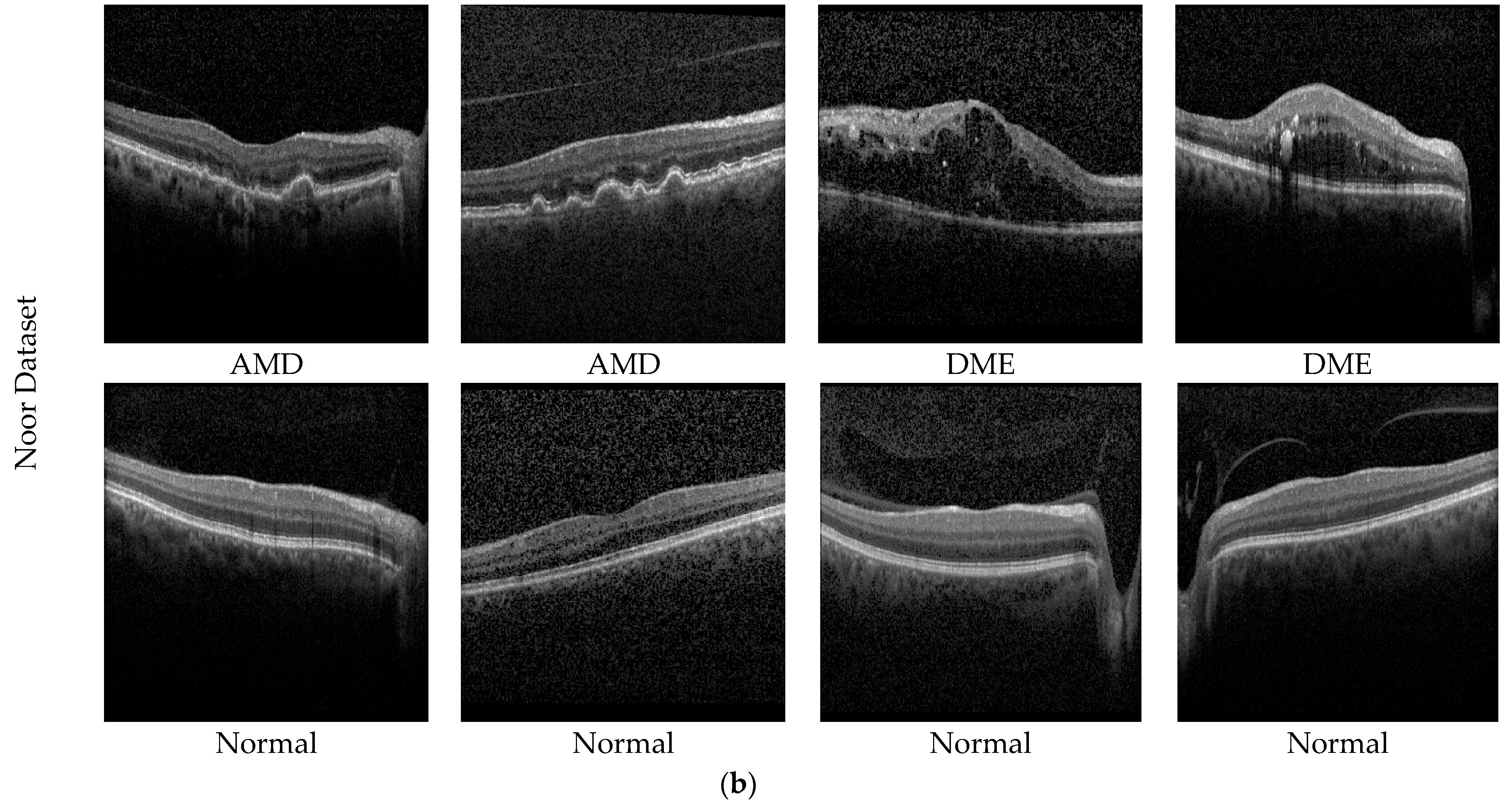

In this study, two datasets are used to evaluate the effectiveness of the proposed model. The first is our private dataset, obtained from the outpatient clinic of the Department of Ophthalmology at Elazığ Fethi Sekin City Hospital in Turkey. It comprises 2316 OCT images of 653 eyes from 256 participants, divided into 3 classes (dry AMD, normal, and wet AMD): 653 dry AMD images from 89 patients, 743 wet AMD images from 82 patients, and 920 images from 85 healthy individuals. Sample images from our dataset are shown in Figure 5a. In dry AMD, the macula thins and dries out, accompanied by the accumulation of small deposits, known as drusen, in the outer retina. In wet AMD, by contrast, abnormal blood vessels grow beneath the macula, a process termed choroidal neovascularization. Fluid and blood leaking from these vessels can lift the macula out of its normal flat position.

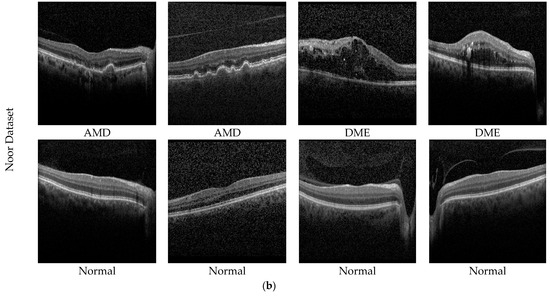

Figure 5.

Sample AMD images in the datasets. (a) Private dataset and (b) Noor dataset.

The second dataset is a public dataset acquired at Noor Eye Hospital in Tehran using Heidelberg spectral-domain optical coherence tomography (SD-OCT) imaging systems. It contains 50 normal OCT volumes, 48 dry AMD volumes, and 50 volumes with diabetic macular edema (DME). Each volume contains between 17 and 73 OCT images, for a total of 1585 normal, 1637 dry AMD, and 1104 DME images. The scans cover an area of 8.9 × 7.4 mm², with an axial resolution of 3.5 µm. This dataset is referred to as the “Noor dataset”. Sample images from the Noor dataset are shown in Figure 5b.

4.2. Experimental Setup

Experiments were run on the Kaggle platform using a TPU VM v3-8 hardware accelerator. The proposed architecture was trained with 224 × 224 × 3 input images, a batch size of 128, and a 70%–15%–15% split for training, validation, and testing, respectively. Of the 2316 images in the private dataset, 1621 are used for training, 348 for validation, and 347 for testing. The same hyperparameters and training setup were applied to the experiments on the public Noor dataset to ensure consistency across all evaluations. The model was trained for 100 epochs using the Adam optimizer to minimize the loss function. No data augmentation was applied. In addition, two callbacks were used to refine the training process. The first, ReduceLROnPlateau, reduced the learning rate when the validation loss stopped improving, with the aim of stabilizing training and limiting overfitting; its minimum learning rate (lr) was set to 0.000001, with a reduction factor of 0.3. The second, ModelCheckpoint, saved the model weights during training so that the best model, as determined by validation accuracy, could be retained for later use.
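The learning-rate behavior of the first callback can be illustrated with a simplified, pure-Python re-implementation of plateau-based reduction; it is not the Keras source and ignores options such as `min_delta` and `cooldown`. The reduction factor (0.3) and minimum learning rate (10⁻⁶) come from the text, while the initial learning rate of 10⁻³ (the Keras Adam default) and the patience of 5 epochs are assumptions, since neither is reported. In Keras, the reported settings would correspond to `ReduceLROnPlateau(monitor="val_loss", factor=0.3, min_lr=1e-6)`.

```python
def reduce_on_plateau(val_losses, initial_lr=1e-3, factor=0.3, patience=5, min_lr=1e-6):
    """Simplified plateau-based LR reduction (not the Keras implementation).

    After `patience` consecutive epochs without a new best validation loss,
    the learning rate is multiplied by `factor`, but never drops below `min_lr`.
    Returns the learning rate in effect after each epoch.
    """
    lr, best, wait, history = initial_lr, float("inf"), 0, []
    for loss in val_losses:
        if loss < best:
            best, wait = loss, 0      # improvement: reset the plateau counter
        else:
            wait += 1
            if wait >= patience:      # plateau detected: reduce, then restart counting
                lr = max(lr * factor, min_lr)
                wait = 0
        history.append(lr)
    return history


# Hypothetical validation-loss curve that stops improving after epoch 3
val_losses = [1.0, 0.8, 0.7] + [0.7] * 40
lrs = reduce_on_plateau(val_losses)
print([f"{v:.2e}" for v in sorted(set(lrs), reverse=True)])
```

With these assumptions, the plateau triggers reductions to 3 × 10⁻⁴, 9 × 10⁻⁵, 2.7 × 10⁻⁵, 8.1 × 10⁻⁶, and 2.43 × 10⁻⁶, after which the learning rate is clamped at the 10⁻⁶ floor for the rest of training.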

4.3. Evaluation Metrics

This study employed four evaluation metrics, namely accuracy (Acc), precision (Pr), recall (Re), and F1 score (F1s), to assess the classification performance of the PM. Acc, given in Equation (1), is the number of correct predictions divided by the total number of predictions. Pr, given in Equation (2), measures the fraction of the model's positive predictions that are correct. Re, given in Equation (3), measures the fraction of actual positive samples that the model correctly identifies. F1s, given in Equation (4), is the harmonic mean of Pr and Re and thus balances the two. In these equations, TP, TN, FP, and FN are the confusion-matrix counts described below.

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\mathrm{Pr} = \frac{TP}{TP + FP} \tag{2}$$

$$\mathrm{Re} = \frac{TP}{TP + FN} \tag{3}$$

$$\mathrm{F1s} = \frac{2 \times \mathrm{Pr} \times \mathrm{Re}}{\mathrm{Pr} + \mathrm{Re}} \tag{4}$$

The true positive (TP), true negative (TN), false positive (FP), and false negative (FN) values in Equations (1)–(4) are obtained from the confusion matrix by treating each class in turn as the positive class. True positives are the images of that class that are correctly classified. False negatives are the images of that class that are misclassified as another class. True negatives are the images of other classes that are not assigned to that class. False positives are the images of other classes that are incorrectly assigned to that class.

4.4. Experimental Results

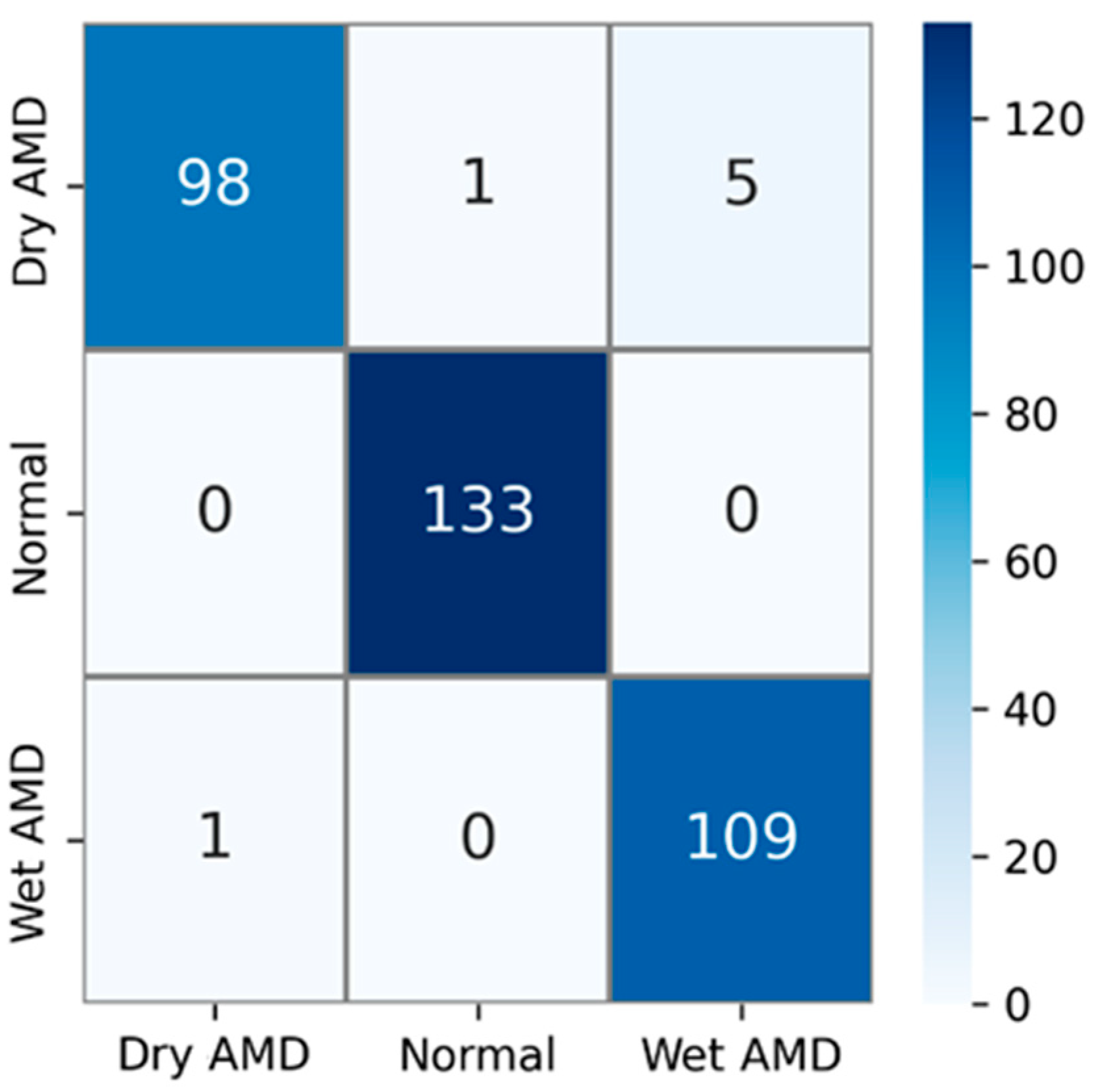

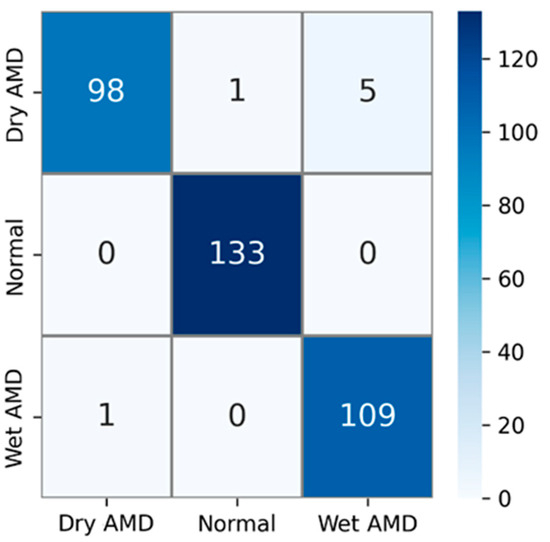

The private AMD dataset used in the experiments contains 3 classes: dry AMD, normal, and wet AMD. The confusion matrix obtained on the test images of this dataset is shown in Figure 6. The test set comprises 15% of all samples, that is, 347 of the 2316 images. As the confusion matrix in Figure 6 shows, 98 of the 104 images in the dry AMD class were correctly classified, 1 was misclassified as normal, and 5 were misclassified as wet AMD. All 133 images in the normal class were correctly classified, and 109 of the 110 images in the wet AMD class were correctly classified; the remaining wet AMD image was misclassified as dry AMD.

Figure 6.

Confusion matrix for the PM using private dataset.
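As a consistency check, the reported private-dataset metrics can be recomputed from the confusion matrix described above. The sketch below encodes Figure 6 with rows as true classes and columns as predicted classes, matching the description in the text. It assumes macro-averaging, which the text does not state explicitly: per-class precision and recall are computed one-vs-rest with Equations (2) and (3), averaged over the three classes, and F1s is then obtained from the averaged values with Equation (4).

```python
# Figure 6 (private test set): rows = true class, columns = predicted class
CM = [
    [98, 1, 5],    # true dry AMD
    [0, 133, 0],   # true normal
    [1, 0, 109],   # true wet AMD
]


def one_vs_rest(cm, c):
    # TP, FP, FN for class c treated as the positive class
    tp = cm[c][c]
    fn = sum(cm[c]) - tp                 # images of class c predicted as another class
    fp = sum(row[c] for row in cm) - tp  # images of other classes predicted as class c
    return tp, fp, fn


def macro_metrics(cm):
    n = len(cm)
    acc = sum(cm[i][i] for i in range(n)) / sum(map(sum, cm))  # correct / total
    precisions, recalls = [], []
    for c in range(n):
        tp, fp, fn = one_vs_rest(cm, c)
        precisions.append(tp / (tp + fp))  # Equation (2)
        recalls.append(tp / (tp + fn))     # Equation (3)
    prec = sum(precisions) / n             # macro-averaged precision
    rec = sum(recalls) / n                 # macro-averaged recall
    f1 = 2 * prec * rec / (prec + rec)     # Equation (4) applied to the macro averages
    return acc, prec, rec, f1


acc, prec, rec, f1 = macro_metrics(CM)
print(f"Acc={acc:.2%} Pr={prec:.2%} Re={rec:.2%} F1s={f1:.2%}")
# Acc=97.98% Pr=97.95% Re=97.77% F1s=97.86%
```

Under this assumption the output matches the reported 97.98%, 97.95%, 97.77%, and 97.86%. Averaging the three per-class F1 scores instead would give about 97.83%, so the reported F1s appears to be the harmonic mean of the macro-averaged precision and recall.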

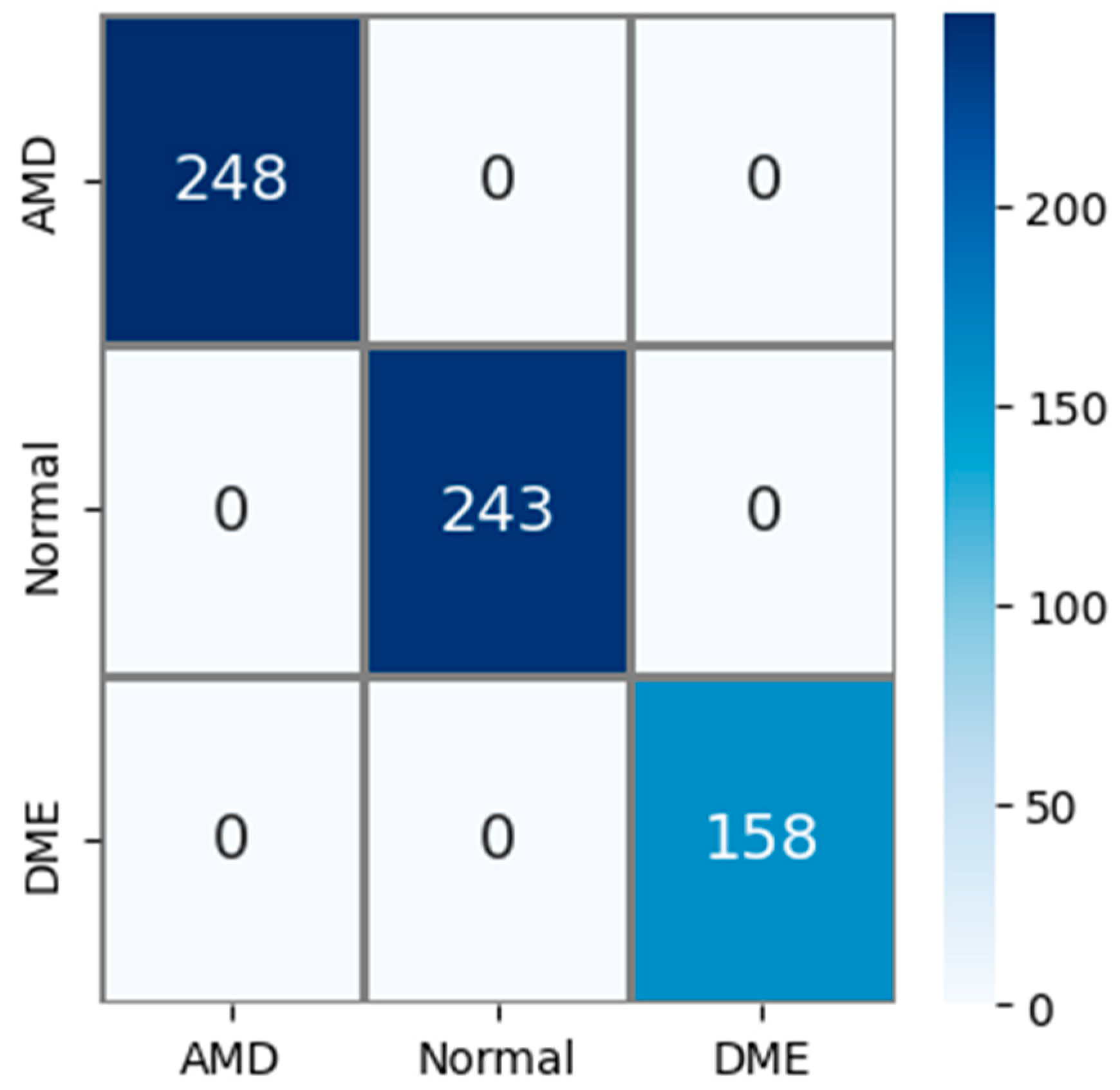

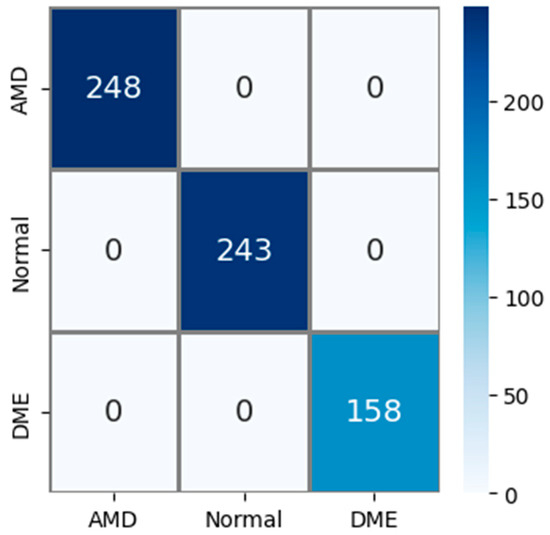

The public Noor dataset used in the experiments contains 3 classes: AMD, normal, and DME. The confusion matrix obtained on the test images of this dataset is shown in Figure 7. The test set comprises 15% of all samples, that is, 649 of the 4326 OCT images. As the confusion matrix in Figure 7 shows, all 248 AMD, 243 normal, and 158 DME test images were correctly classified. Consequently, the Acc, Pr, Re, and F1s values are 100% for all classes.

Figure 7.

Confusion matrix for the PM using public (Noor) dataset.

Using our private dataset, the PM was compared with several DL models, and the classification results are provided in Table 8. The models used for comparison are VGG16 [46], ResNet50 [47], ResNet101 [47], InceptionV3 [48], DenseNet121 [49], DenseNet201 [49], EfficientNetB0 [50], EfficientNetB7 [50], MobileNet [51], and ConvNeXtTiny [52]. As Table 8 shows, the PM achieves 97.98% Acc, 97.95% Pr, 97.77% Re, and 97.86% F1s. The next-best model is DenseNet201, with 95.97% Acc, 95.74% Pr, 95.51% Re, and 95.62% F1s; the PM outperforms it by 2.01 percentage points in Acc, 2.21 in Pr, 2.26 in Re, and 2.24 in F1s. Compared with InceptionV3, the PM is better by 2.3 percentage points in Acc, 2.46 in Pr, 3.24 in Re, and 2.85 in F1s, and compared with DenseNet121, by 2.3 in Acc, 2.77 in Pr, 2.34 in Re, and 2.56 in F1s. The PM also outperforms VGG16 by 4.32 percentage points in Acc, 4.79 in Pr, 4.74 in Re, and 4.77 in F1s; ResNet50 by 6.91 in Acc, 7.52 in Pr, 7.88 in Re, and 7.7 in F1s; and ResNet101 by 7.2 in Acc, 7.8 in Pr, 8.1 in Re, and 7.95 in F1s. The models with the lowest classification performance obtained the following results: ConvNeXtTiny, 59.37% Acc, 65.16% Pr, 56.35% Re, and 60.44% F1s; EfficientNetB0, 77.23% Acc, 76.16% Pr, 75.03% Re, and 75.59% F1s; MobileNet, 79.25% Acc, 78.10% Pr, 77.63% Re, and 77.86% F1s; and EfficientNetB7, 84.15% Acc, 82.27% Pr, 81.48% Re, and 81.87% F1s.
Overall, the PM achieves the best classification results of all the compared models. Table 8 also lists the parameter counts of all models, which show that the PM achieves these results with the fewest parameters.

Table 8.

Comparison with different DL models (for private dataset).

In the experiments on the public (Noor) dataset, the PM was compared with the same DL models: VGG16, ResNet50, ResNet101, InceptionV3, DenseNet121, DenseNet201, EfficientNetB0, EfficientNetB7, MobileNet, and ConvNeXtTiny. The results are presented in Table 9. The PM achieved 100% on all evaluation metrics. The closest competitor was DenseNet121, with 99.85% Acc and 99.86% Pr, Re, and F1s. It was followed by DenseNet201 (99.69% Acc, 99.72% Pr, 99.65% Re, 99.68% F1s) and InceptionV3 (99.23% Acc, 99.26% Pr, 99.31% Re, 99.28% F1s). ResNet50 yielded 98.92% Acc, 99.06% Pr, 98.82% Re, and 98.94% F1s, and ResNet101 achieved 98.92% Acc, 98.98% Pr, 98.97% Re, and 98.97% F1s. VGG16 recorded 97.69% Acc, 97.70% Pr, 97.81% Re, and 97.75% F1s. EfficientNetB0 reached 91.52% Acc, 91.17% Pr, 91.48% Re, and 91.32% F1s. MobileNet reached 91.22% Acc, 90.73% Pr, 91.13% Re, and 90.93% F1s, and EfficientNetB7 reached 90.29% Acc, 90.61% Pr, 89.56% Re, and 90.08% F1s. ConvNeXtTiny performed worst, with 62.86% Acc, 68.65% Pr, 59.84% Re, and 63.94% F1s. Overall, the PM outperformed all of the compared models while also having the fewest trainable parameters. It therefore achieved the strongest results at the lowest parameter cost among the models tested.

Table 9.

Comparison with different DL models (for public Noor dataset).

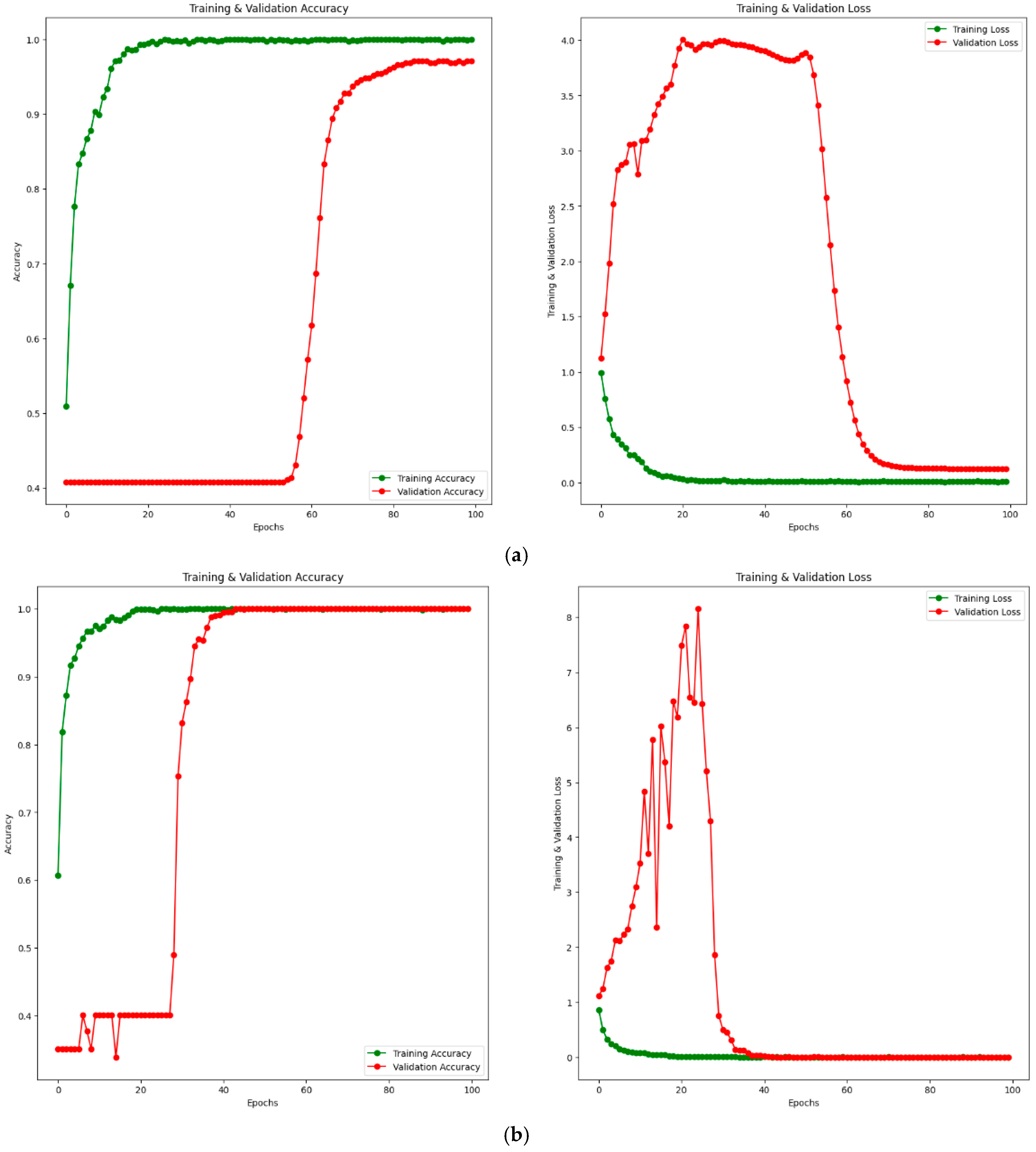

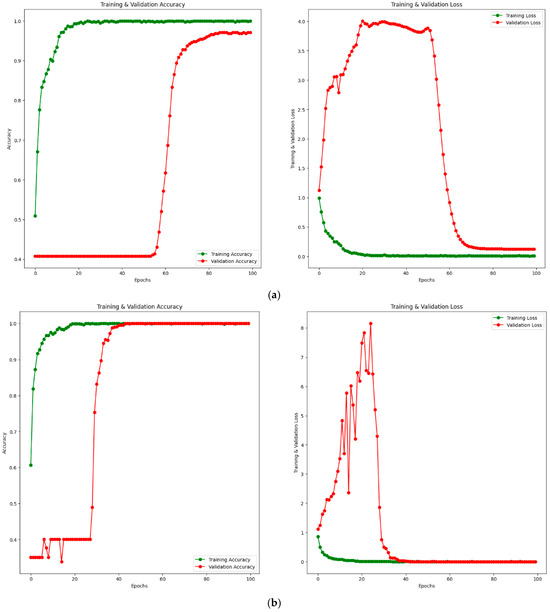

Figure 8 shows the training–validation accuracy and loss curves of the PM for the private dataset (Figure 8a) and the public (Noor) dataset (Figure 8b). In Figure 8a, the training accuracy rises until approximately epoch 20 and then converges towards 100%. Over the same period, the training loss falls and converges to about 0. The validation accuracy remains around 40% until approximately epoch 60 and only then begins to increase. The validation loss fluctuates until around epoch 60 and afterwards converges to a value close to 0. In Figure 8b, the training accuracy reaches 100% and the training loss reaches 0 after about 20 epochs. The validation accuracy and loss fluctuate until approximately epoch 40, after which the validation accuracy reaches 100% and the validation loss reaches 0. On both datasets, therefore, training converges within about 20 epochs, whereas validation performance stabilizes later: after about 60 epochs on the private dataset and about 40 on the Noor dataset. On the Noor dataset, the final training and validation curves agree closely. This suggests that the 100% accuracy achieved there is not accompanied by marked overfitting and points to good generalization on this dataset.
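Convergence statements such as "converges after about 60 epochs" can be made reproducible by computing them from the logged curves rather than reading them off a plot. The sketch below defines a hypothetical helper, `settling_epoch`, that returns the first epoch from which a curve stays within a tolerance of its final value. It also defines a crude train-minus-validation gap. The helper names, the tolerance, and the loss values are illustrative assumptions, not the curves in Figure 8.

```python
# Minimal sketch: read convergence points off logged curves instead of plots.
# Helper names and tolerance are illustrative choices; the loss values below
# are synthetic, not the curves shown in Figure 8.


def settling_epoch(values, tol):
    """First 1-based epoch from which every later value stays within `tol`
    of the final value (a simple 'settling time' for a training curve)."""
    final = values[-1]
    epoch = len(values)
    for i in range(len(values) - 1, -1, -1):  # walk backwards from the last epoch
        if abs(values[i] - final) > tol:
            break
        epoch = i + 1
    return epoch


def final_gap(train_acc, val_acc):
    """Train-minus-validation accuracy at the last epoch (crude overfitting signal)."""
    return train_acc[-1] - val_acc[-1]


val_loss = [1.2, 1.5, 0.9, 1.3, 0.4, 0.10, 0.05, 0.04, 0.03, 0.03]
print(settling_epoch(val_loss, tol=0.05))
print(final_gap([60.0, 90.0, 100.0], [40.0, 45.0, 99.0]))
```

A small final gap on its own does not rule out overfitting to a particular dataset. It only shows that training and validation performance agree on the data at hand.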

Figure 8.

Training–validation convergence curves for PM. Accuracy curve on the left and loss curve on the right. (a) Private dataset. (b) Public (Noor) dataset.

4.5. Discussion

In this section, the PM is compared with existing methods in the literature on the public (Noor) dataset, and the results are given in Table 10.

Sotoudeh-Paima et al. [25] introduced a multi-scale CNN that uses a feature pyramid network (FPN) for feature fusion. Combining receptive fields of different scales improves the detection of retinal abnormalities of different sizes in OCT images. The multi-scale model is trained as a single CNN with a simple structure and requires no preprocessing of the input data. Existing architectures (VGG16, ResNet50, DenseNet121, and EfficientNetB0) serve as backbones. On the Noor dataset, FPN with EfficientNetB0 obtained 87.80% Acc and 86.60% Re. With DenseNet121 it obtained 90.9% Acc and 90.5% Re, with VGG16 92% Acc and 91.8% Re, and with ResNet50 90.1% Acc and 89.8% Re. Among these backbones, VGG16 combined with FPN gave the best results.

Thomas et al. [27] proposed a multi-scale, multipath CNN with six convolutional layers. The multi-scale convolutional layer uses filters of different sizes to capture diverse local structures. Multipath feature extraction then combines features of sparse local and complex global structures. The architecture was evaluated with ten-fold cross-validation using several classifiers, including support vector machines (SVMs), multilayer perceptrons (MLPs), and random forests (RFs). On the Noor dataset, the CNN with an RF classifier achieved 98.97% Acc and 99% Pr, Re, and F1s. With an SVM it achieved 94.89% Acc and 94.9% Pr, Re, and F1s, and with an MLP it achieved 96.93% Acc, 97% Pr, and 96.9% Re and F1s. The CNN with the RF classifier thus gave the best results.

Das et al. [38] developed a B-scan attentive CNN that mimics the diagnostic approach of ophthalmologists by concentrating on clinically significant B-scans when classifying OCT volumes. A CNN-based feature extraction module extracts spatial features from the B-scans. A self-attention module then aggregates these features according to their clinical significance into a discriminative high-level feature vector. On the Noor dataset, they obtained 94.9% Acc and 93.26% Re.

Rasti et al. [39] designed an ensemble model that combines multi-scale convolutions to classify diabetic macular edema (DME), dry AMD, and normal OCT images. On the Noor dataset, their model achieved 99.39% Pr, 99.36% Re, and 99.34% F1s.

Sabi et al. [53] introduced a Double-Scale CNN for AMD diagnosis that uses six convolutional layers to distinguish normal and AMD images. The double-scale convolutional layer uses two distinct filter sizes to capture multiple local structures, and a sigmoid function serves as the classifier. On the Noor dataset, it reached 94.89% Acc.

Sahoo et al. [54] developed a prediction model for diagnosing dry AMD based on a weighted majority voting (WMV) ensemble. The ensemble combines the predictions of base classifiers and selects the class with the largest weighted vote. A dedicated feature extraction technique along the retinal pigment epithelium (RPE) layer is central to separating dry AMD from normal images with the WMV method, and the number of windows computed for each image is a determining factor in it. Preprocessing consists of a hybrid median filter, followed by segmentation of the RPE layer based on the scale-invariant feature transform and flattening of the retinal curvature, which allows the RPE layer thickness to be measured precisely. On the Noor dataset, they obtained 96.94% Acc, 95.83% Pr, 97.87% Re, and 96.84% F1s.

Based on the lesion characteristics observed in OCT images, Xu et al. [55] introduced a hybrid attention approach in which a spatial attention mechanism (SAM) and a channel attention mechanism (CAM) operate in parallel. The CAM assigns a separate coefficient to each channel feature map, emphasizing the channels that carry the most prominent lesion features. The SAM assigns a coefficient to each spatial position, highlighting the elements within the lesion area. Together, they let the network focus on the lesion area in both the channel and spatial dimensions. On the Noor dataset, they obtained 99.76% Acc, 99.70% Pr, 99.79% Re, and 99.74% F1s.

Mishra et al. [54] introduced MacularNet, which combines a deformation-responsive attention mechanism with the pretrained VGG16 model to extract more discriminative features from OCT images. On the Noor dataset, VGG16 with this attention mechanism achieved 99.79% Acc, 99.80% Pr, and 99.79% Re and F1s.

Fang et al. [56] introduced a lesion-aware CNN for OCT image classification. Inspired by the diagnostic process of ophthalmologists, a lesion detection network identifies different types of macular lesions and generates corresponding attention maps. These maps are then used to modulate the convolutional feature maps of the classification network. This lets the classification network focus on information from local lesion-related regions, improving the efficiency and accuracy of OCT classification. VGG16 serves as the backbone, and the lesion-attention module is inserted before each pooling layer so that more discriminative features are retained through max pooling. On the Noor dataset, the lesion-aware CNN achieved 99.39% Pr, 99.33% Re, and 99.36% F1s.

Finally, the PM is a hybrid of the CM architecture, the DSEB, and the MIM. The main objective of this hybrid design is to reduce the number of trainable parameters, and thus the computational cost, while achieving high classification accuracy. On the Noor dataset, the PM achieved 100% on all evaluation metrics.
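Several of the methods above, as well as the DSEB in the PM, re-weight channel feature maps with learned coefficients. The following is a minimal, framework-free sketch of squeeze-and-excitation channel attention in the style of Hu et al. [43]: global average pooling per channel, a bottleneck of two fully connected layers, and a sigmoid gate that rescales each channel. It is not the DSEB of the PM or the CAM of Xu et al. [55]. The weights are hypothetical values chosen for illustration, whereas a real model learns them.

```python
import math

# Minimal, framework-free sketch of squeeze-and-excitation (SE) channel
# attention in the spirit of Hu et al. [43]. NOT the DSEB of the PM or the
# CAM of Xu et al. [55]; the weights below are hypothetical.


def squeeze(feature_map):
    """Global average pooling: one scalar per channel of a C x H x W map."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]


def dense(x, weights, bias):
    """Fully connected layer: weights[j][i] connects input i to output j."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def se_attention(feature_map, w1, b1, w2, b2):
    """Return (rescaled feature map, per-channel gates)."""
    z = squeeze(feature_map)                                        # C values
    h = [max(0.0, v) for v in dense(z, w1, b1)]                     # C/r values, ReLU
    gates = [1.0 / (1.0 + math.exp(-v)) for v in dense(h, w2, b2)]  # C values in (0, 1)
    # Every element of channel c is multiplied by its gate gates[c].
    scaled = [[[g * v for v in row] for row in ch] for ch, g in zip(feature_map, gates)]
    return scaled, gates


# Two 2x2 channels, reduction ratio r = 2 (hidden size 1).
fmap = [[[1.0, 1.0], [1.0, 1.0]],
        [[3.0, 3.0], [3.0, 3.0]]]
w1, b1 = [[0.5, 0.5]], [0.0]          # squeeze: C=2 -> 1
w2, b2 = [[1.0], [-1.0]], [0.0, 0.0]  # excite: 1 -> C=2
out, gates = se_attention(fmap, w1, b1, w2, b2)
print([round(g, 4) for g in gates])
```

With these weights the first channel is kept almost unchanged and the second is strongly attenuated. This illustrates how a learned gate can emphasize some channel feature maps over others.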

Table 10.

Comparison of methods in the literature using the public Noor dataset.

Based on the above comparisons, the PM gives the best results. The closest results were obtained with MacularNet by Mishra et al. [54], which the PM outperforms by 0.21 percentage points in Acc, 0.2 in Pr, 0.21 in Re, and 0.21 in F1s. Beyond accuracy, the primary aim of the PM is to reduce the number of trainable parameters. The PM has 1,650,020 parameters, whereas MacularNet has approximately 19 million, roughly 12 times as many. Compared with the multi-branch hybrid attention network of Xu et al. [55], the PM is higher by 0.24 points in Acc, 0.3 in Pr, 0.21 in Re, and 0.26 in F1s. It also outperforms the lesion-aware CNN of Fang et al. [56] by 0.61 points in Pr, 0.67 in Re, and 0.64 in F1s. That method also uses VGG16 as its backbone, which substantially increases its computational cost. Against the six-layer multipath CNN with an RF classifier developed by Thomas et al. [27], the PM is higher by 1.03 points in Acc and by 1 point each in Pr, Re, and F1s, while having roughly a quarter as many parameters. Relative to the multi-scale convolutional mixture-of-experts ensemble of Rasti et al. [39], the PM is higher by 0.61 points in Pr, 0.64 in Re, and 0.66 in F1s. The PM's Acc is 5.11 points higher than that of the Double-Scale CNN of Sabi et al. [53] and 5.1 points higher than that of the B-scan attentive CNN of Das et al. [38]. Finally, compared with the FPN-based models of Sotoudeh-Paima et al. [25], the PM's Acc is higher by 8 points than FPN-VGG16, 9.9 points than FPN-ResNet50, 9.1 points than FPN-DenseNet121, and 12.2 points than FPN-EfficientNetB0. The VGG16, ResNet50, DenseNet121, and EfficientNetB0 backbones used in these models also add substantial computational cost.
The Noor dataset originates from Tehran, Iran, so the PM's strong performance on it (100% across all metrics) suggests that the architecture can also perform well on data from a different geographic region and imaging system. Future work will extend the data to images from additional geographic regions and diverse OCT devices to better establish the model's applicability.
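The accuracy gaps and the parameter ratio quoted above follow directly from the reported figures. The short sketch below recomputes them for Acc only, so that the comparison can be checked or extended. All accuracies are the values stated in this section. MacularNet's "approximately 19 million" parameters is entered as 19,000,000, which is an approximation. The exact ratio is about 11.5, which the text rounds to roughly 12.

```python
# Recompute the Acc gaps (in percentage points) and the parameter ratio
# quoted in the discussion, using only values stated in the text.
PM_ACC = 100.0
PM_PARAMS = 1_650_020
MACULARNET_PARAMS = 19_000_000  # "approximately 19 million": an approximation

reported_acc = {
    "MacularNet (Mishra et al.)": 99.79,
    "Hybrid attention (Xu et al.)": 99.76,
    "Multipath CNN + RF (Thomas et al.)": 98.97,
    "B-scan attentive CNN (Das et al.)": 94.9,
    "Double-Scale CNN (Sabi et al.)": 94.89,
    "FPN-VGG16 (Sotoudeh-Paima et al.)": 92.0,
    "FPN-DenseNet121 (Sotoudeh-Paima et al.)": 90.9,
    "FPN-ResNet50 (Sotoudeh-Paima et al.)": 90.1,
    "FPN-EfficientNetB0 (Sotoudeh-Paima et al.)": 87.8,
}

# Rounding to 2 decimals removes floating-point noise such as 9.900000000000006.
gaps = {name: round(PM_ACC - acc, 2) for name, acc in reported_acc.items()}
ratio = MACULARNET_PARAMS / PM_PARAMS

for name, gap in gaps.items():
    print(f"{name:45s} +{gap:.2f} points")
print(f"MacularNet / PM parameters: {ratio:.1f}x")
```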

4.6. Ablation Study

The PM combines three components: the CM, the DSEB, and the MIM. The contribution of each component was examined on both the private and the public (Noor) datasets, and the results are given in Table 11. On the private dataset, Models 1–3 use each component on its own. Model 1 (MIM only) obtained 61.67% Acc, 58.34% Pr, 57.80% Re, and 58.07% F1s. Model 2 (DSEB only) obtained 63.69% Acc, 61.24% Pr, 59.35% Re, and 60.28% F1s, and Model 3 (CM only) obtained 95.10% Acc, 95.16% Pr, 94.68% Re, and 94.92% F1s. Used individually, CM therefore performs best by a wide margin. Model 4 combines CM with DSEB. Adding DSEB improves all evaluation metrics over CM alone, raising Acc by 0.58 percentage points, Pr by 0.39, Re by 0.68, and F1s by 0.53. Model 5 combines MIM with CM and raises Acc by 1.44 points, Pr by 0.79, Re by 1.83, and F1s by 1.31 relative to CM alone. Model 6 combines MIM and DSEB and obtained 66.28% Acc, 61.37% Pr, 61.62% Re, and 61.49% F1s. Model 7, the PM, uses all three components and achieved 97.98% Acc, 97.95% Pr, 97.77% Re, and 97.86% F1s. Across all configurations, the combination of all three components yields the best results.
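The seven models in Table 11 correspond to the 2^3 − 1 = 7 non-empty combinations of the three components, so the ablation is exhaustive. The minimal sketch below enumerates these configurations with `itertools.combinations`. Note that its ordering of the two-component models differs from the numbering in Table 11, where Model 4 is CM + DSEB, Model 5 is MIM + CM, and Model 6 is MIM + DSEB. It also recomputes the gain of the full model over CM alone on the private dataset from the values stated above.

```python
from itertools import combinations

# Minimal sketch: enumerate every non-empty combination of the PM's three
# components, i.e. the 2**3 - 1 = 7 configurations of an exhaustive ablation.
COMPONENTS = ("MIM", "DSEB", "CM")


def ablation_configs(components):
    """All non-empty subsets, from single components up to the full model."""
    configs = []
    for k in range(1, len(components) + 1):
        configs.extend(combinations(components, k))
    return configs


configs = ablation_configs(COMPONENTS)
for i, cfg in enumerate(configs, start=1):
    print(i, " + ".join(cfg))

# Acc gain of the full model (Model 7) over CM alone (Model 3), private
# dataset, in percentage points, from the values stated in the text.
print(round(97.98 - 95.10, 2))
```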

Table 11.

Analysis of components in the PM.

On the public (Noor) dataset, using all three components together (Model 7) gave 100% on all evaluation metrics. Model 1 (MIM only) yielded 82.13% Acc, 82.03% Pr, 82.84% Re, and 82.46% F1s. Model 2 (DSEB only) achieved 82.28% Acc, 81.61% Pr, 82.19% Re, and 81.90% F1s, and Model 3 (CM only) reached 99.54% Acc, 99.55% Pr, 99.50% Re, and 99.52% F1s. As on the private dataset, CM is the strongest individual component. Model 4 (DSEB and CM) obtained 99.69% Acc, 99.71% Pr, 99.59% Re, and 99.65% F1s. Model 5 (MIM and CM) obtained 99.85% Acc, 99.86% Pr, 99.79% Re, and 99.82% F1s, and Model 6 (MIM and DSEB) obtained 92.91% Acc, 92.82% Pr, 92.90% Re, and 92.86% F1s. Overall, the PM, which uses all three components, performs best on this dataset as well.

5. Conclusions and Future Works

AMD is a progressive retinal condition that predominantly affects individuals aged 50 and above. It causes degenerative changes in the macula, the region of the retina responsible for central vision. The resulting loss of central vision complicates everyday activities such as reading and recognizing faces. Advances in ophthalmic imaging, particularly OCT, have transformed the diagnosis and management of AMD by providing high-resolution, detailed views of the retinal layers that enable precise diagnosis and timely intervention. As the prevalence of AMD increases, so will the number of retinal scans to be analyzed. This calls for automated diagnostic systems based on artificial intelligence (AI) that can process large numbers of OCT images efficiently. DL, and CNNs in particular, has shown promise for substantially improving AMD detection and classification.
The CNN-based DL model proposed in this study combines the CM architecture, the DSEB, and the MIM. These elements aim to improve computational efficiency, reduce the parameter count, and enhance feature extraction, leading to higher classification accuracy. On a private dataset of 2316 images, the PM achieved 97.98% Acc, 97.95% Pr, 97.77% Re, and 97.86% F1s. On the public (Noor) AMD dataset, it achieved 100% on all evaluation metrics. These results suggest that the PM outperforms a range of existing DL models in Acc, Pr, Re, and F1s. They also underscore the potential of AI-based systems, especially DL algorithms, to make AMD diagnosis more accurate and efficient in clinical ophthalmology. The study nevertheless has limitations. Although the PM reached 97.98% accuracy on the private dataset, that dataset is relatively small compared with the public dataset, which may limit generalization. Enlarging the training data, especially with images collected from diverse regions, could further improve the robustness and applicability of the model. In addition, clinical OCT scans often include noisy, artifact-laden, or incomplete images. These real-world challenges were not addressed here, because the study focused on high-quality datasets to establish baseline performance. Future research will incorporate such challenging data to better evaluate the model's robustness and reliability in real-world clinical settings, a step that is crucial for its broader applicability. Further plans include turning the PM into a real-time system usable by expert clinicians and extending it to other eye diseases diagnosed from OCT images. Finally, this study focused on three primary classes (normal, dry AMD, and wet AMD), but both dry and wet AMD have additional progression stages and subtypes, such as geographic atrophy or variations in CNV presentation. Future research will analyze these finer distinctions by incorporating detailed annotations for AMD subtypes. It will also use visualization techniques such as Grad-CAM to better understand how the model differentiates the overlapping features of these subtypes.

Author Contributions

E.Y.: Conceptualization, Resources, Data Curation, Formal Analysis, Supervision, Writing—Review and Editing, Validation. H.F.: Methodology, Software, Investigation, Writing—Original Draft, Validation. H.Ü.: Conceptualization, Methodology, Software, Investigation, Writing—Original Draft. S.T.A.Ö.: Visualization, Methodology, Software, Writing—Original Draft. İ.B.Ç.: Formal Analysis, Investigation, Methodology, Writing—Review and Editing, Resources. A.Ş.: Methodology, Supervision, Validation, Visualization, Writing—Review and Editing, Formal Analysis. O.A.: Validation, Visualization, Writing—Review and Editing, Formal Analysis, Project Administration. N.H.G.: Supervision, Validation, Visualization, Writing—Review and Editing, Formal Analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by Fırat University, Scientific Research Project Committee, under grant No. TEKF.24.47.

Institutional Review Board Statement

The study was approved by the Fırat University ethics committee (ethical approval number: E50716828-050.01.04-375003), with an approval date of 24 September 2023.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Umer, M.J.; Sharif, M.; Raza, M.; Kadry, S. A Deep Feature Fusion and Selection-Based Retinal Eye Disease Detection from OCT Images. Expert Syst. 2023, 40, e13232.

- Nowak, J.Z. Age-Related Macular Degeneration (AMD): Pathogenesis and Therapy. Pharmacol. Rep. 2006, 58, 353–363.

- Mitchell, P.; Liew, G.; Gopinath, B.; Wong, T.Y. Age-Related Macular Degeneration. Lancet 2018, 392, 1147–1159.

- Fleckenstein, M.; Keenan, T.D.L.; Guymer, R.H.; Chakravarthy, U.; Schmitz-Valckenberg, S.; Klaver, C.C.; Wong, W.T.; Chew, E.Y. Age-Related Macular Degeneration. Nat. Rev. Dis. Primers 2021, 7, 31.

- Yang, K.; Liang, Y.B.; Gao, L.Q.; Peng, Y.; Shen, R.; Duan, X.R.; Friedman, D.S.; Sun, L.P.; Mitchell, P.; Wang, N.L.; et al. Prevalence of Age-Related Macular Degeneration in a Rural Chinese Population: The Handan Eye Study. Ophthalmology 2011, 118, 1395–1401.

- Wong, W.L.; Su, X.; Li, X.; Cheung, C.M.G.; Klein, R.; Cheng, C.Y.; Wong, T.Y. Global Prevalence of Age-Related Macular Degeneration and Disease Burden Projection for 2020 and 2040: A Systematic Review and Meta-Analysis. Lancet Glob. Health 2014, 2, e106–e116.

- Ricci, F.; Bandello, F.; Navarra, P.; Staurenghi, G.; Stumpp, M.; Zarbin, M. Neovascular Age-Related Macular Degeneration: Therapeutic Management and New-Upcoming Approaches. Int. J. Mol. Sci. 2020, 21, 8242.

- Schultz, N.M.; Bhardwaj, S.; Barclay, C.; Gaspar, L.; Schwartz, J. Global Burden of Dry Age-Related Macular Degeneration: A Targeted Literature Review. Clin. Ther. 2021, 43, 1792–1818.

- Stahl, A. Diagnostik Und Therapie Der Altersabhängigen Makuladegeneration. Dtsch. Arztebl. Int. 2020, 117, 513–520.

- Gheorghe, A.; Mahdi, L.; Musat, O. Age-Related Macular Degeneration. Rom. J. Ophthalmol. 2015, 59, 74–77.

- Davis, M.D.; Gangnon, R.E.; Lee, L.-Y.; Hubbard, L.D.; Klein, B.E.K.; Klein, R.; Ferris, F.L.; Bressler, S.B.; Milton, R.C. The Age-Related Eye Disease Study Severity Scale for Age-Related Macular Degeneration: AREDS Report No. 17. Arch. Ophthalmol. 2005, 123, 1484–1498.

- He, T.; Zhou, Q.; Zou, Y. Automatic Detection of Age-Related Macular Degeneration Based on Deep Learning and Local Outlier Factor Algorithm. Diagnostics 2022, 12, 532.

- Heo, T.Y.; Kim, K.M.; Min, H.K.; Gu, S.M.; Kim, J.H.; Yun, J.; Min, J.K. Development of a Deep-Learning-Based Artificial Intelligence Tool for Differential Diagnosis between Dry and Neovascular Age-Related Macular Degeneration. Diagnostics 2020, 10, 261.

- Srinivasan, P.P.; Kim, L.A.; Mettu, P.S.; Cousins, S.W.; Comer, G.M.; Izatt, J.A.; Farsiu, S. Fully Automated Detection of Diabetic Macular Edema and Dry Age-Related Macular Degeneration from Optical Coherence Tomography Images. Biomed. Opt. Express 2014, 5, 3568–3577.

- Podoleanu, A.G. Optical Coherence Tomography. J. Microsc. 2012, 247, 209–219.

- Liew, A.; Agaian, S.; Benbelkacem, S. Distinctions between Choroidal Neovascularization and Age Macular Degeneration in Ocular Disease Predictions via Multi-Size Kernels ξCho-Weighted Median Patterns. Diagnostics 2023, 13, 729.

- Ferris, F.L.; Wilkinson, C.P.; Bird, A.; Chakravarthy, U.; Chew, E.; Csaky, K.; Sadda, S.R. Clinical Classification of Age-Related Macular Degeneration. Ophthalmology 2013, 120, 844–851.

- Shelton, R.L.; Shrestha, S.; Park, J.; Applegate, B.E. Optical Coherence Tomography. Biomed. Technol. Devices Second Ed. 2013, 254, 247–266.

- Darooei, R.; Nazari, M.; Kafieh, R.; Rabbani, H. Optimal Deep Learning Architecture for Automated Segmentation of Cysts in OCT Images Using X-Let Transforms. Diagnostics 2023, 13, 1994.

- Muntean, G.A.; Marginean, A.; Groza, A.; Damian, I.; Roman, S.A.; Hapca, M.C.; Muntean, M.V.; Nicoară, S.D. The Predictive Capabilities of Artificial Intelligence-Based OCT Analysis for Age-Related Macular Degeneration Progression—A Systematic Review. Diagnostics 2023, 13, 2464.

- Thomas, A.; Sunija, A.P.; Manoj, R.; Ramachandran, R.; Ramachandran, S.; Varun, P.G.; Palanisamy, P. RPE Layer Detection and Baseline Estimation Using Statistical Methods and Randomization for Classification of AMD from Retinal OCT. Comput. Methods Programs Biomed. 2021, 200, 105822.

- Adamis, A.P.; Brittain, C.J.; Dandekar, A.; Hopkins, J.J. Building on the Success of Anti-Vascular Endothelial Growth Factor Therapy: A Vision for the next Decade. Eye 2020, 34, 1966–1972.

- Wang, H.; Lung Chong, K.K.; Li, Z. Applications of AI to Age-Related Macular Degeneration: A Case Study and a Brief Review. In Proceedings of the 2022 International Conference on Computer Engineering and Artificial Intelligence (ICCEAI), Shijiazhuang, China, 22–24 July 2022; pp. 586–589.

- Serener, A.; Serte, S. Dry and Wet Age-Related Macular Degeneration Classification Using OCT Images and Deep Learning. In Proceedings of the 2019 Scientific Meeting on Electrical-Electronics and Biomedical Engineering and Computer Science, EBBT, Istanbul, Turkey, 24–26 April 2019; pp. 1–4.

- Sotoudeh-Paima, S.; Jodeiri, A.; Hajizadeh, F.; Soltanian-Zadeh, H. Multi-Scale Convolutional Neural Network for Automated AMD Classification Using Retinal OCT Images. Comput. Biol. Med. 2022, 144, 105368.

- Kermany, D.S.; Goldbaum, M.; Cai, W.; Valentim, C.C.S.; Liang, H.; Baxter, S.L.; McKeown, A.; Yang, G.; Wu, X.; Yan, F.; et al. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell 2018, 172, 1122–1131.e9.

- Thomas, A.; Harikrishnan, P.M.; Ramachandran; Ramachandran, S.; Manoj, R.; Palanisamy, P.; Gopi, V.P. A Novel Multiscale and Multipath Convolutional Neural Network Based Age-Related Macular Degeneration Detection Using OCT Images. Comput. Methods Programs Biomed. 2021, 209, 106294.

- Celebi, A.R.C.; Bulut, E.; Sezer, A. Artificial Intelligence Based Detection of Age-Related Macular Degeneration Using Optical Coherence Tomography with Unique Image Preprocessing. Eur. J. Ophthalmol. 2023, 33, 65–73.

- Hu, M.; Wu, B.; Lu, D.; Xie, J.; Chen, Y.; Yang, Z.; Dai, W. Two-Step Hierarchical Neural Network for Classification of Dry Age-Related Macular Degeneration Using Optical Coherence Tomography Images. Front. Med. 2023, 10, 1221453.

- Deng, J.; Xie, X.; Terry, L.; Wood, A.; White, N.; Margrain, T.H.; North, R.V. Age-Related Macular Degeneration Detection and Stage Classification Using Choroidal OCT Images. In Image Analysis and Recognition; Campilho, A., Karray, F., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 707–715.

- Lee, C.S.; Baughman, D.M.; Lee, A.Y. Deep Learning Is Effective for the Classification of OCT Images of Normal versus Age-Related Macular Degeneration. Ophthalmol. Retin. 2017, 1, 322–327.

- Yoo, T.K.; Choi, J.Y.; Seo, J.G.; Ramasubramanian, B.; Selvaperumal, S.; Kim, D.W. The Possibility of the Combination of OCT and Fundus Images for Improving the Diagnostic Accuracy of Deep Learning for Age-Related Macular Degeneration: A Preliminary Experiment. Med. Biol. Eng. Comput. 2019, 57, 677–687.

- Hwang, D.-K.; Hsu, C.-C.; Chang, K.-J.; Chao, D.; Sun, C.-H.; Jheng, Y.-C.; Yarmishyn, A.A.; Wu, J.-C.; Tsai, C.-Y.; Wang, M.-L.; et al. Artificial Intelligence-Based Decision-Making for Age-Related Macular Degeneration. Theranostics 2019, 9, 232–245.

- Xu, Z.; Wang, W.; Yang, J.; Zhao, J.; Ding, D.; He, F.; Chen, D.; Yang, Z.; Li, X.; Yu, W.; et al. Automated Diagnoses of Age-Related Macular Degeneration and Polypoidal Choroidal Vasculopathy Using Bi-Modal Deep Convolutional Neural Networks. Br. J. Ophthalmol. 2021, 105, 561–566.

- Chen, X.; Xue, Y.; Wu, X.; Zhong, Y.; Rao, H.; Luo, H.; Weng, Z. Deep Learning-Based System for Disease Screening and Pathologic Region Detection From Optical Coherence Tomography Images. Transl. Vis. Sci. Technol. 2023, 12, 29.

- Kadry, S.; Rajinikanth, V.; González Crespo, R.; Verdú, E. Automated Detection of Age-Related Macular Degeneration Using a Pre-Trained Deep-Learning Scheme. J. Supercomput. 2022, 78, 7321–7340.

- Das, V.; Dandapat, S.; Bora, P.K. Multi-Scale Deep Feature Fusion for Automated Classification of Macular Pathologies from OCT Images. Biomed. Signal Process. Control 2019, 54, 101605.

- Das, V.; Prabhakararao, E.; Dandapat, S.; Bora, P.K. B-Scan Attentive CNN for the Classification of Retinal Optical Coherence Tomography Volumes. IEEE Signal Process. Lett. 2020, 27, 1025–1029.

- Rasti, R.; Rabbani, H.; Mehridehnavi, A.; Hajizadeh, F. Macular OCT Classification Using a Multi-Scale Convolutional Neural Network Ensemble. IEEE Trans. Med. Imaging 2018, 37, 1024–1034.

- Farsiu, S.; Chiu, S.J.; O’Connell, R.V.; Folgar, F.A.; Yuan, E.; Izatt, J.A.; Toth, C.A. Quantitative Classification of Eyes with and without Intermediate Age-Related Macular Degeneration Using Optical Coherence Tomography. Ophthalmology 2014, 121, 162–172.

- Trockman, A.; Kolter, J.Z. Patches Are All You Need? arXiv 2022, arXiv:2201.09792.

- Asker, M.E. Hyperspectral Image Classification Method Based on Squeeze-and-Excitation Networks, Depthwise Separable Convolution and Multibranch Feature Fusion. Earth Sci. Inform. 2023, 16, 1427–1448.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Tuncer, A. Cost-Optimized Hybrid Convolutional Neural Networks for Detection of Plant Leaf Diseases. J. Ambient Intell. Humaniz. Comput. 2021, 12, 8625–8636.

- Ozcelik, S.T.A.; Uyanık, H.; Deniz, E.; Sengur, A. Automated Hypertension Detection Using ConvMixer and Spectrogram Techniques with Ballistocardiograph Signals. Diagnostics 2023, 13, 182.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015—Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, IEEE Computer Society, Las Vegas, NV, USA, 27–30 June 2016; Volume 2016, pp. 770–778.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, ICML, Long Beach, CA, USA, 9–15 June 2019; pp. 10691–10700.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 11966–11976.

- Sabi, S.; Gopi, V.P.; Raj, J.R.A. Detection of Age-Related Macular Degeneration from OCT Images Using Double Scale CNN Architecture. Biomed. Eng. Appl. Basis Commun. 2021, 33, 2150029.

- Mishra, S.S.; Mandal, B.; Puhan, N.B. MacularNet: Towards Fully Automated Attention-Based Deep CNN for Macular Disease Classification. SN Comput. Sci. 2022, 3, e0261285.

- Xu, L.; Wang, L.; Cheng, S.; Li, Y. MHANet: A Hybrid Attention Mechanism for Retinal Diseases Classification. PLoS ONE 2021, 16, 1–20.

- Fang, L.; Wang, C.; Li, S.; Rabbani, H.; Chen, X.; Liu, Z. Attention to Lesion: Lesion-Aware Convolutional Neural Network for Retinal Optical Coherence Tomography Image Classification. IEEE Trans. Med. Imaging 2019, 38, 1959–1970.

- Sahoo, M.; Mitra, M.; Pal, S. Improved Detection of Dry Age-Related Macular Degeneration from Optical Coherence Tomography Images Using Adaptive Window Based Feature Extraction and Weighted Ensemble Based Classification Approach. Photodiagnosis Photodyn. Ther. 2023, 42, 103629.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).