Abstract

Accurate segmentation of brain tumors in MR images matters to both patients and medical staff because the human brain is so sensitive. Surgical intervention requires doctors to be extremely cautious and precise when targeting the required part of the brain. Segmentation is also important for multiclass tumor classification. This work contributes to three main areas of brain MR image processing: brain MR image classification, tumor region segmentation, and tumor classification. A framework named DeepTumor is presented for multistage, multiclass Glioma tumor classification into four classes: Edema, Necrosis, Enhancing, and Non-enhancing. For binary classification of brain MR images (Tumorous and Non-tumorous), two deep Convolutional Neural Network (CNN) models are proposed: a 9-layer model with a total of 217,954 trainable parameters and an improved 10-layer model with a total of 80,243 trainable parameters. In the second stage, an enhanced Fuzzy C-means (FCM) based technique is proposed for tumor segmentation in brain MR images. In the final stage, an enhanced CNN model 3 with 11 hidden layers and a total of 241,624 trainable parameters is proposed to classify the segmented tumor region into the four Glioma classes. The experiments are performed on the BraTS MRI dataset. The results of the proposed CNN models for binary and multiclass tumor classification are compared with existing CNN models, such as LeNet, AlexNet, and GoogleNet, and with recent literature.

1. Introduction

The automated diagnosis of brain tumors is an important subject for patients, doctors, technicians, and hospitals. For patients, early diagnosis can improve survival rates through early treatment and intervention. For doctors and technicians, it offers a more accurate and faster route to diagnosis and treatment planning. For hospitals and the healthcare system in general, it reduces costs, because early diagnosis allows early intervention with less expensive treatment options. Automatic brain tumor detection plays an important role in assisting radiologists in diagnosing brain tumors, and image segmentation plays an equally important role in locating the tumor [1,2]. Recent studies have presented various classification and segmentation methods for brain tumor detection. An extensive analysis of previous research found that most studies either suffer from, or do not account for, two common problems: overfitting and the lack of sufficiently large datasets [3,4]. Overfitting has many causes, including a large number of hidden layers, which can lead the model to extract noise features that degrade classifier performance. Techniques proposed to tackle overfitting include early stopping, training with more data, regularization, cross-validation, and dropout. The main aim of this study is to identify an optimized deep learning model for brain MR image and Glioma tumor classification. It is worth mentioning that many types of primary brain tumors exist, and Glioma, which arises from glial cells, is the most common type. The contribution of this research is a set of enhanced classification and segmentation methods, organized as a multistage process, that classify Glioma tumors into four classes: Necrosis, Edema, Enhancing, and Non-Enhancing.

The work presented in this research is of interest to researchers in the field and to medical personnel specializing in cancer treatment. It contributes to three main areas of brain MR image processing for classification and segmentation. The first stage presents the classification of MR images into Tumorous and Non-tumorous. The second stage explains the proposed techniques for segmenting tumorous images. The third stage details the classification of tumorous images into the four Glioma classes: Necrosis, Edema, Enhancing, and Non-enhancing [5].

The goal of this research is to propose enhanced classification and segmentation techniques based on deep learning models, tuning the model parameters to avoid overfitting and to increase the classification and segmentation accuracy for binary and multiclass brain tumor problems. Recent advances in computing, such as high-speed multi-core processors and GPUs, have made it possible to explore image processing techniques that were previously set aside because of their heavy computational demands.

The rest of the article is organized as follows: Section 2 discusses the materials and methods, Section 3 presents the experimental results and analysis, and Section 4 provides the conclusion and future directions.

1.1. Medical Image Modalities

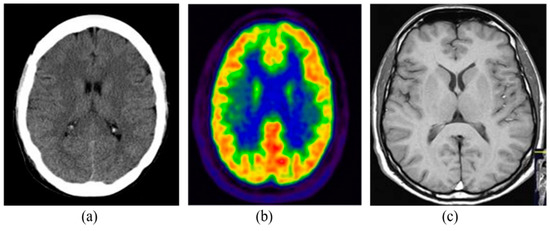

Medical imaging modalities are the different methods used to capture images of the structure of specific organs of the human body. Medical imaging has become an essential part of clinical procedures because it enables effective visualization and quantitative assessment. Many imaging modalities are available, such as Computed Tomography (CT), Positron Emission Tomography (PET), and Magnetic Resonance Imaging (MRI) [6]. Some of the commonly used modalities are detailed in the following subsections. Figure 1 shows samples of brain images captured with different imaging modalities.

Figure 1.

Brain imaging using different modalities: (a) CT, (b) PET, (c) MRI.

1.2. Brain MRI

In brain MRI, the magnetic field produced by large magnets, together with radio waves and a computer, is used to generate detailed images of the internal structure of the brain. The brain MRI procedure produces three types of images, which depend on how the magnetic field and radio-frequency pulses are applied. By changing the pulse sequence and imaging parameters, the following images are acquired: proton density (PD) weighted, longitudinal relaxation time (T1) weighted, and transverse relaxation time (T2) weighted [7]. In T1-weighted images, fluid-filled regions such as the cerebrospinal fluid appear dark, whereas in T2-weighted images they appear bright. PD-weighted images reflect the water and macromolecule content of the tissue [8].

Unlike CT and some other techniques, which use ionizing radiation, brain MRI relies mainly on a magnetic field, and it can detect tissue swelling, infection, and tumors inside the brain. The images obtained from MRI can be used to analyze different types of brain abnormalities.

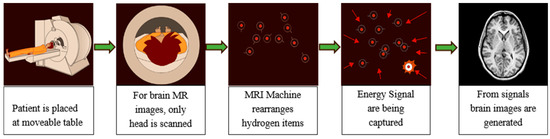

In the brain MRI procedure, large magnets produce a magnetic field ranging from 0.2 T to 7 T (1.5 T on average). The subject is placed inside this field, and the hydrogen atoms of the water molecules in the body are excited and emit radio-frequency signals that are captured within the large enclosed bore of the MRI scanner. These signals are used to reconstruct the images on a computer. Different brain MR image modalities are obtained by changing the acquisition parameters. The scanner coils are switched on and off to record the timing of the hydrogen atoms' realignment into an equilibrium state. The process typically takes 20 to 45 min to complete and is shown in Figure 2.
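
As a supplementary illustration that does not come from this work, the radio frequency emitted by the excited hydrogen nuclei is the Larmor frequency f = γ̄·B0, where γ̄ ≈ 42.58 MHz/T for hydrogen. The minimal sketch below evaluates this relation over the field strengths quoted above, which shows why the receiver must be tuned differently for scanners of different field strengths.

```python
# Gyromagnetic ratio of the hydrogen nucleus (1H) divided by 2*pi, in MHz per tesla.
GAMMA_BAR_MHZ_PER_T = 42.577


def larmor_frequency_mhz(field_tesla: float) -> float:
    """Return the 1H precession (Larmor) frequency in MHz for a main field B0 in tesla."""
    if field_tesla <= 0:
        raise ValueError("field strength must be positive")
    return GAMMA_BAR_MHZ_PER_T * field_tesla


if __name__ == "__main__":
    # Field strengths spanning the range mentioned in the text (0.2 T to 7 T).
    for b0 in (0.2, 1.5, 3.0, 7.0):
        print(f"B0 = {b0:>3} T -> f = {larmor_frequency_mhz(b0):6.2f} MHz")
```

At the average field of 1.5 T, the signal is therefore close to 64 MHz.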

Figure 2.

MR image acquisition process in an MRI scanner.

1.3. Brain MRI Modalities

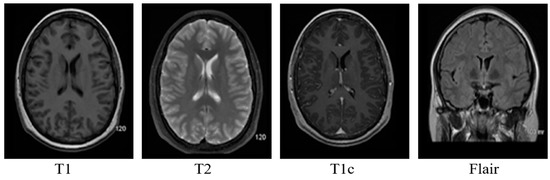

Brain MRI makes it possible to obtain different image modalities by changing the pulse sequence and its timing. The echo time (TE) is the time between the excitation pulse and the measurement of the echo signal emitted by the excited hydrogen atoms. The repetition time (TR) is the time between two consecutive excitation pulses. Varying TE and TR yields different image contrasts; the four modalities used in this work are:

- T1: This modality uses a short TE and TR. T1 provides good contrast between the healthy tissues of the brain, such as gray matter, white matter, and cerebrospinal fluid.

- T2: This modality uses a long TE and TR, which makes image acquisition slower. It provides good contrast for the tissue surrounding the tumor (edema).

- T1c: This is the same as T1, but a contrast agent is administered to enhance the contrast.

- FLAIR (Fluid-Attenuated Inversion Recovery): This modality nulls the signal from fluid, suppressing the effect of cerebrospinal fluid (CSF) and bringing out periventricular hyperintense lesions.
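
To illustrate why short TE/TR settings emphasize T1 contrast and long settings emphasize T2 contrast, the sketch below uses the simplified spin-echo signal model S = PD·(1 − e^(−TR/T1))·e^(−TE/T2). The tissue parameters and TE/TR values are rough, illustrative assumptions rather than values from this study, and the model ignores the inversion pulse used by FLAIR and the contrast agent used for T1c.

```python
import math

# Rough, illustrative tissue parameters: relative proton density, T1 and T2 in ms.
# These are assumptions for demonstration only, not values measured in this study.
TISSUES = {
    "white_matter": {"pd": 0.7, "t1": 700.0, "t2": 80.0},
    "csf": {"pd": 1.0, "t1": 4000.0, "t2": 2000.0},
}


def spin_echo_signal(pd: float, t1: float, t2: float, tr: float, te: float) -> float:
    """Simplified spin-echo signal: PD * (1 - exp(-TR/T1)) * exp(-TE/T2)."""
    return pd * (1.0 - math.exp(-tr / t1)) * math.exp(-te / t2)


def contrast(tr: float, te: float) -> dict:
    """Signal of every tissue for one (TR, TE) setting, both in ms."""
    return {name: spin_echo_signal(p["pd"], p["t1"], p["t2"], tr, te)
            for name, p in TISSUES.items()}


t1_weighted = contrast(tr=500.0, te=15.0)    # short TR and short TE
t2_weighted = contrast(tr=4000.0, te=100.0)  # long TR and long TE
print("T1-weighted:", t1_weighted)  # CSF darker than white matter
print("T2-weighted:", t2_weighted)  # CSF brighter than white matter
```

With these assumed values, CSF is darker than white matter in the T1-weighted setting and brighter in the T2-weighted setting, matching the qualitative description above.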

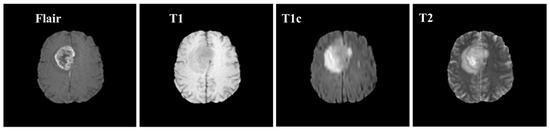

Brain tumors in MRI have complicated structures and shapes, which makes tumor classification and segmentation more difficult when only a single modality is used. MRI machines can capture multimodal images that give a more detailed representation of brain tissues [9]. During a patient's MRI scan, the machine produces different MRI sequences, including T1, T2, T1c, and Flair, which differ in Time to Echo (TE), Repetition Time (TR), brightness, and contrast values. Figure 3 shows the four brain MRI modalities, and Figure 4 shows the three types of healthy tissue in the brain.

Figure 3.

Pictorial view of brain MR Image modalities.

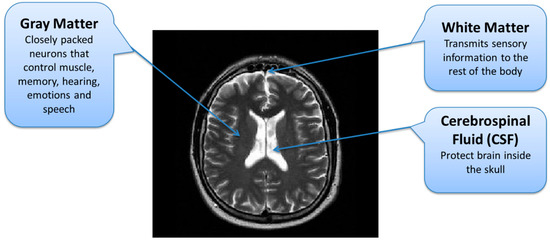

Figure 4.

Brain MRI Tissue Types.

1.4. Convolutional Neural Network (CNN) for MR Image Analysis

A deep CNN with small kernels for brain MR image classification was proposed in [10], achieving an average accuracy of 97.5% on the BraTS 2015 dataset of tumorous and non-tumorous images; using the deep CNN also lowered complexity and computation time. The limitation of that study is that it only classifies images as tumorous or non-tumorous and does not address the multimodal analysis of brain tumors. Mzoughi et al. [11] proposed a 3D deep CNN architecture that classifies brain MR images into LGG and HGG glioma using the complete volumetric T1-Gado MRI sequence. Their method merges local and global features by using deep networks with small kernels. Preprocessing combined adaptive contrast enhancement with intensity normalization to overcome data heterogeneity, and data augmentation was used to train the deep 3D network effectively. The architecture was evaluated on the BraTS 2018 dataset and compared with 2D CNNs, with a reported overall accuracy of 96.49%; the authors concluded that data augmentation and suitable preprocessing can improve classification results. However, 3D models are computationally expensive and memory intensive, so it would be preferable to obtain equivalent or better results with less demanding methods. Kumar (2020) proposed an optimized deep learning algorithm, Dolphin-SCA based Deep CNN, to classify Glioma brain tumors from MR images [12]. Fuzzy deformable fusion with the Dolphin Echolocation based Sine Cosine Algorithm (Dolphin-SCA) is used for segmentation, features are extracted using power local directional patterns (LDP) and statistical features, and a deep CNN then performs the classification. The BraTS Q7 and SimBraTS datasets are used, and the maximum accuracy achieved is 96.3%. However, this method does not consider additional features that might prove useful, and it does not classify tumors as malignant or benign. In addition, combining separate feature extraction with a deep CNN seems to add unneeded complexity, since a deep CNN can extract features on its own. In [13], a multi-model CNN based hybrid approach is proposed for brain MR image classification. Similarly, [14] discusses several recent studies that use different CNN models for brain tumor classification.

Overfitting often occurs in excessively complex models with large numbers of hidden layers. Such a model starts to learn the noise in the training set, which degrades its performance on new data. Overfitting can also occur when the model focuses too closely on the training set and builds complex relations between features that do not generalize to new test data. The well-known CNN models (LeNet, AlexNet, and GoogleNet) tend to overfit and do not perform well for brain tumor classification, because their complex architectures, with many layers, were designed for RGB input images and a large number of output classes (1000 classes). AlexNet consists of 25 layers and has more than 61 million parameters [15,16]. Similarly, GoogleNet consists of 144 layers and has more than seven million parameters [17,18]. In [19], an efficient brain tumor classification framework is proposed in which brain MR images are preprocessed to avoid overfitting.
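
Early stopping, one of the overfitting remedies listed in the Introduction, can be sketched in a few lines. The minimal Python illustration below assumes a list of per-epoch validation losses and a hypothetical `patience` setting; it mirrors the idea behind the Keras `EarlyStopping` callback but is not the training configuration used in this work.

```python
def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch), both 0-indexed.

    Training stops once the validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs. If that never happens,
    stop_epoch is simply the last epoch in the list.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best validation loss: remember it and reset the counter.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


print(early_stopping([0.90, 0.60, 0.45, 0.40, 0.42, 0.43, 0.44, 0.39], patience=3))  # -> (3, 6)
```

In this example, the loss stops improving after epoch 3, so training halts at epoch 6 and the weights from epoch 3 would be kept, even though a later epoch might have improved again.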

2. Materials and Methods

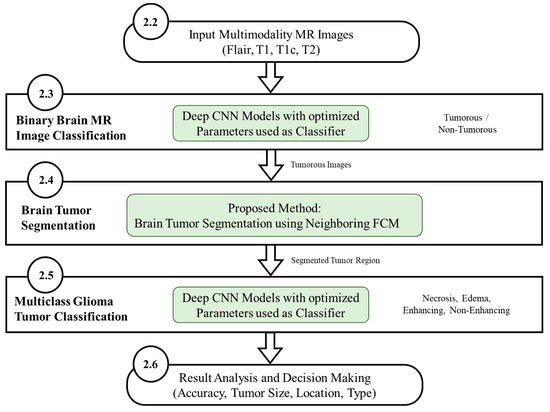

The workflow of the proposed methodology is shown in Figure 5. The multistage process consists of (i) binary MR image classification, which uses the proposed CNN models to classify MR images as tumorous or non-tumorous; (ii) tumor segmentation, which extracts the tumorous region from the tumorous images; and (iii) multiclass Glioma tumor classification, which classifies Glioma tumors into four types. The proposed deep CNN architectures classify MR images with higher accuracy than other techniques such as LeNet, AlexNet, and GoogleNet.
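
The control flow of the three stages can be sketched as follows. This is a minimal illustration only: `is_tumorous`, `segment`, and `classify_tumor` are hypothetical placeholders standing in for the binary CNN, the neighboring FCM segmentation, and CNN model 3, respectively; the point is that an image judged non-tumorous never reaches the later stages.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional

GLIOMA_CLASSES = ("Necrosis", "Edema", "Enhancing", "Non-enhancing")


@dataclass
class DiagnosisResult:
    tumorous: bool
    tumor_region: Optional[Any] = None
    tumor_class: Optional[str] = None


def run_pipeline(image: Any,
                 is_tumorous: Callable[[Any], bool],
                 segment: Callable[[Any], Any],
                 classify_tumor: Callable[[Any], str]) -> DiagnosisResult:
    """Chain the three stages; images judged non-tumorous stop after stage 1."""
    # Stage 1: binary classification (stand-in for CNN model 1 or 2).
    if not is_tumorous(image):
        return DiagnosisResult(tumorous=False)
    # Stage 2: extract the tumor region (stand-in for neighboring FCM).
    region = segment(image)
    # Stage 3: multiclass Glioma classification (stand-in for CNN model 3).
    label = classify_tumor(region)
    if label not in GLIOMA_CLASSES:
        raise ValueError(f"unexpected tumor class: {label!r}")
    return DiagnosisResult(tumorous=True, tumor_region=region, tumor_class=label)
```

Passing the stages in as callables keeps the orchestration independent of whether each stage is implemented in Keras, MATLAB, or elsewhere.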

Figure 5.

Workflow diagram of the proposed methodology (Green highlighted sections represent contributions in this work).

Deep learning methods are gaining popularity in many areas, including computer vision, image processing, and speech analysis [20]. In supervised learning, deep learning can be used for classification, segmentation, and recognition [21]. Deep networks apply a sequence of operations to the input data, such as convolution and the application of a sigmoid function, each performed in one layer of the network. Rectified linear activations (ReLU), residual connections, and a larger number of hidden layers give these networks better performance than classical neural networks. Deep learning also benefits from the availability of large training datasets and from the inherent data parallelism of the training process, which allows modern GPUs to be used to optimize systems with millions of parameters. Three major factors are involved in using CNNs for image processing problems: the network architecture, the regularization techniques, and the optimization algorithms used to train the network.

2.1. Experimental Environment

A dedicated machine running 64-bit Windows 10, equipped with a GTX 1080 GPU (2560 CUDA cores and 8 GB of GDDR5X memory), 32 GB of RAM, and a 3.70 GHz Core i7 CPU, was used for the experiments. The pre-processing and segmentation programs were developed in MATLAB R2022a. Training and testing of the CNNs, both for classifying MR images as tumorous or non-tumorous and for classifying tumors into the four tumor types, were performed with the Python libraries Keras and TensorFlow in an Anaconda environment.

2.2. Experimental Datasets

Datasets from different sources were used for MR image classification; they are listed in Table 1. The BraTS 2015 dataset [22] was used as the main dataset, along with the other datasets described in Table 1. For Glioma tumor classification, the dataset was split into 80% training and 20% testing, and 20% of the training portion was further used for cross-validation. BraTS 2015 was used instead of a newer BraTS release because the BraTS 2018 dataset contains the same images (training and validation) as BraTS 2015, with all ground-truth labels manually revised by expert radiologists. The BraTS 2018 dataset contains data from 384 training and testing patients with both Low-Grade Glioma (LGG) and High-Grade Glioma (HGG). According to WHO reports, LGG corresponds to grade I and grade II tumors, whereas HGG corresponds to grade III and grade IV tumors [23].
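
Holding out 20% for testing and then 20% of the remaining training portion for validation gives an effective 64/16/20 split. The minimal sketch below shows this nested split; the function name, the random shuffling, and the fixed seed are illustrative assumptions, not the authors' code.

```python
import random


def nested_split(items, test_frac=0.2, val_frac_of_train=0.2, seed=42):
    """Hold out a test set, then carve a validation set out of the remaining training portion."""
    items = list(items)
    random.Random(seed).shuffle(items)  # reproducible shuffle before splitting
    n_test = round(len(items) * test_frac)
    test_set, train_full = items[:n_test], items[n_test:]
    n_val = round(len(train_full) * val_frac_of_train)
    val_set, train_set = train_full[:n_val], train_full[n_val:]
    return train_set, val_set, test_set


train_set, val_set, test_set = nested_split(range(1000))
print(len(train_set), len(val_set), len(test_set))  # 640 160 200
```

If the items passed in are patient identifiers rather than individual slices, slices from the same patient cannot end up in more than one subset.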

Table 1.

Brain MRI Dataset Description.

- Grade I: The brain tissue is benign, and the cells look like normal brain cells and grow slowly.

- Grade II: The brain tissue is malignant, and the cells look less like normal brain cells.

- Grade III: The brain tissue is malignant, and the cells look very different from normal cells and grow actively.

- Grade IV: The brain tissue is malignant, and the cells have the most abnormal appearance compared with normal cells and grow rapidly.

Samples of the four modalities (T1, T2, T1c, Flair) are presented in Figure 6. Each case has 620 MR images across the four modalities, giving a total of 239,320 MR images for all 384 cases and 169,880 MR images for the 274 training cases, as shown in Table 2. In the BraTS dataset, labels are provided only for the training cases, so only those are used in the experiments. These data are divided into 60% for training, 20% for validation, and 20% for testing. BraTS provides data in MetaImage (.mha) format, which is used to store 3D medical images. For each modality of every case, there are 155 slices of 240 × 240 pixels stored in a single .mha file.
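
The image counts for the training cases follow from the per-case layout described above (155 slices for each of four modalities), and the 60/20/20 division can be computed in the same way. The helper names below are hypothetical and the rounding rule is an assumption.

```python
SLICES_PER_VOLUME = 155            # axial slices per modality volume
MODALITIES = ("T1", "T2", "T1c", "Flair")
SLICE_SHAPE = (240, 240)           # pixels per slice


def images_per_case() -> int:
    """2D images contributed by one case: every slice of every modality."""
    return SLICES_PER_VOLUME * len(MODALITIES)


def split_counts(n_images: int, fractions=(0.6, 0.2, 0.2)) -> tuple:
    """Integer train/validation/test counts; any rounding remainder goes to training."""
    val = round(n_images * fractions[1])
    test = round(n_images * fractions[2])
    return n_images - val - test, val, test


train_cases = 274
n_images = images_per_case() * train_cases
print(images_per_case(), n_images, split_counts(n_images))  # 620 169880 (101928, 33976, 33976)
```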

Figure 6.

Sample MR images of T1, T2, T1c and Flair modalities.

Table 2.

Summary of Acquired BraTS MRI Dataset.

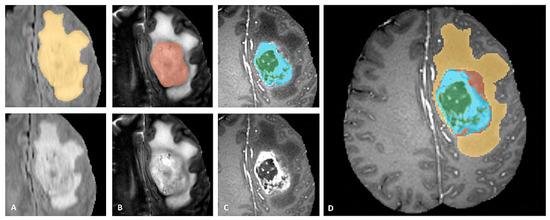

The BraTS dataset provides annotations for the training cases with four classes (Necrosis, Edema, Enhancing, and Non-Enhancing), and a fifth class covers everything else. The annotations are also grouped into three sub-compartment regions: Region 1, the Complete Tumor, comprises labels 1, 2, 3, and 4; Region 2, the Tumor Core, comprises labels 1, 3, and 4; and Region 3, the Enhancing Tumor, comprises label 4. The data labeling is described in Table 3, and Figure 7 shows a sample of a labeled multiclass tumor in an MR image. In Figure 7, yellow represents the whole tumor, red the tumor core, light blue the enhancing tumor, and green the necrotic core [22].
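
The three sub-compartment regions are unions of the annotation labels, so binary region masks can be derived directly from a label map. The minimal numpy sketch below uses only the label sets stated above; the function name and the toy label map are illustrative.

```python
import numpy as np

# Sub-compartment regions as unions of annotation labels (0 = everything else).
REGIONS = {
    "complete_tumor": (1, 2, 3, 4),
    "tumor_core": (1, 3, 4),
    "enhancing_tumor": (4,),
}


def region_masks(label_map):
    """Return one boolean mask per region for a 2D or 3D label map."""
    label_map = np.asarray(label_map)
    return {name: np.isin(label_map, labels) for name, labels in REGIONS.items()}


toy_labels = np.array([[0, 1, 2],
                       [3, 4, 0]])
for name, mask in region_masks(toy_labels).items():
    print(name, int(mask.sum()))  # complete_tumor 4, tumor_core 3, enhancing_tumor 1
```

Because the regions are nested (enhancing within core within complete), each mask is a subset of the previous one.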

Table 3.

BraTS Annotations and Sub Compartments.

Figure 7.

Sample of multiclass Glioma labels with different modalities. The image labels show: (A) whole tumor, (B) tumor core, (C) enhancing tumor, and (D) all tumor types combined [22].

In the preprocessing phase, the 3D DICOM images were converted to 2D PNG images, and the patient metadata was removed. Each patient case includes five DICOM images: four for the MRI modalities (T1, T1c, T2, and Flair) and a fifth containing the annotation of the Glioma tumor classes. Each DICOM contains 155 grayscale MR slices for a single patient. All images in the dataset are labeled according to the ground truth provided by the annotations in the original BraTS dataset.
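
The 3D-to-2D conversion can be sketched as splitting the volume along its slice axis and rescaling each slice to 8-bit intensities suitable for PNG. This is a minimal sketch under stated assumptions: the volume is already loaded as a numpy array shaped (slices, rows, cols), per-slice min-max scaling is an illustrative choice not specified in the text, and file reading and writing are omitted.

```python
import numpy as np


def volume_to_uint8_slices(volume):
    """Split a (slices, rows, cols) volume into 2D uint8 slices, min-max scaled per slice."""
    volume = np.asarray(volume, dtype=np.float64)
    if volume.ndim != 3:
        raise ValueError("expected a 3D volume shaped (slices, rows, cols)")
    slices = []
    for s in range(volume.shape[0]):
        sl = volume[s]
        lo, hi = sl.min(), sl.max()
        if hi > lo:
            scaled = (sl - lo) / (hi - lo)
        else:
            scaled = np.zeros_like(sl)  # constant slice, e.g. empty background
        slices.append(np.round(scaled * 255).astype(np.uint8))
    return slices


# Usage with a synthetic volume of the BraTS size (155 slices of 240 x 240).
demo = np.random.default_rng(0).random((155, 240, 240)) * 1000
out = volume_to_uint8_slices(demo)
print(len(out), out[0].shape, out[0].dtype)  # 155 (240, 240) uint8
```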

2.3. Binary Brain MR Image Classification

In the first stage, binary classification is performed by feeding the images directly into the proposed deep CNN models; this is referred to as binary classification throughout this work. The results are compared with recent feature-based techniques, such as texture and block features, and with deep CNN classifiers such as LeNet, AlexNet, and GoogleNet.

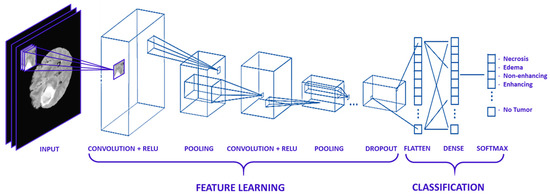

2.3.1. Typical Deep CNN Architecture

In this work, a collection of parallel feature maps was formed by sliding different kernels over the input images; these maps are stacked together in the convolutional layer. Small kernel dimensions were used to create the feature maps, which helps share features across layers. Zero-padding of the input images was used so that the kernels can also be applied at the image borders, which also helps control the spatial dimensions of the convolutional layer output. The weighted sum of the inputs is passed through an activation function, which determines whether, and how strongly, each neuron is activated. Various activation functions have been proposed for different deep learning applications, e.g., linear, sigmoid, ReLU, and softmax. A pooling layer was applied after the convolution and non-linear transformation of the input. Pooling layers down-sample the data to reduce noise, smooth the data, and help prevent overfitting. The outputs of the pooling layers were flattened into column vectors, which were then fed as input to a classical fully connected neural network. The architecture of the typical CNN model for MR image classification is given in Figure 8.
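
The convolution, activation, pooling, and flattening steps described above can be traced in plain numpy. The sketch below is a single-channel illustration with fixed, hand-set kernels rather than trained weights; in Keras the same steps correspond to `Conv2D(padding="same")`, an activation, `MaxPooling2D`, and `Flatten` layers, but none of this reproduces the authors' models.

```python
import numpy as np


def conv2d_same(image, kernel):
    """Single-channel 2D cross-correlation with zero-padding so the output keeps the input size (odd kernels)."""
    image = np.asarray(image, dtype=np.float64)
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)), mode="constant")  # zero-padding at the borders
    out = np.zeros_like(image)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            out[r, c] = np.sum(padded[r:r + kh, c:c + kw] * kernel)
    return out


def relu(x):
    """Rectified linear activation: negative responses are set to zero."""
    return np.maximum(x, 0.0)


def max_pool2d(x, size=2):
    """Non-overlapping max pooling; trailing rows/cols that do not fill a window are dropped."""
    h, w = x.shape[0] // size * size, x.shape[1] // size * size
    x = x[:h, :w]
    return x.reshape(h // size, size, w // size, size).max(axis=(1, 3))


def forward(image, kernels):
    """conv -> ReLU -> max-pool for each kernel (one feature map each), then flatten into one vector."""
    maps = [max_pool2d(relu(conv2d_same(image, k))) for k in kernels]
    return np.concatenate([m.ravel() for m in maps])


# Usage: an 8x8 toy image, an edge-like kernel and a smoothing kernel.
img = np.random.default_rng(0).random((8, 8))
kernels = [np.array([[-1.0, 0.0, 1.0]] * 3), np.ones((3, 3)) / 9.0]
features = forward(img, kernels)
print(features.shape)  # (32,) = 2 feature maps x 4 x 4 pooled values
```

The flattened vector is what the fully connected layers receive; its length depends on the number of feature maps and the pooled spatial size.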

Figure 8.

Typical CNN Architecture for MR Image Classification.

2.3.2. CNN Optimization Parameters for MR Images Classification

The proposed binary CNN model 1, described in Table 4, consists of 9 layers with a total of 217,954 trainable parameters. An improved binary CNN model 2, designed specifically for brain MR image classification, is described in Table 5; it consists of 10 layers but has considerably fewer trainable parameters (80,243). These enhanced models were developed after an intensive review of CNN optimization techniques in the literature and a thorough study of existing CNN models. Several experiments were also performed with existing models such as LeNet, AlexNet, and GoogleNet. In addition, various custom CNN models with different numbers of layers and parameters were tested to select the combination of layers and parameters that performs better than existing CNN models on grayscale MR images.

Table 4.

Proposed CNN Model 1 for MR Image Classification.

Table 5.

Proposed Improved CNN Model 2 for MR Image Classification.

In model 2, the number of layers was increased to 10 (compared with 9 in model 1), but the number of parameters was reduced from 217,954 to 80,243, as shown in Table 4 and Table 5. In MR images, tumor regions appear brighter than normal brain tissue. An MR image commonly contains a single tumor with a particular shape, so the most useful features are local and can be captured by keeping the convolutional filter size small. Another benefit of small convolutional filters is that the same weights are shared across all pixel positions to extract local features from the brain MR images. Although model 1 performs better than LeNet, AlexNet, and GoogleNet, model 2 further improves performance by reducing the convolutional filter size and the number of parameters.
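
How a deeper network can still have fewer parameters follows from the standard parameter-count formulas: a convolution has (kernel height × kernel width × input channels + 1 bias) weights per filter, and a dense layer has (inputs + 1 bias) weights per unit. The layer sizes below are hypothetical and are not taken from Tables 4 and 5; Keras reports the same totals through `model.summary()`.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters, bias=True):
    """Trainable weights of a 2D convolution: one kernel per (input, output) channel pair plus biases."""
    return (kernel_h * kernel_w * in_channels + (1 if bias else 0)) * filters


def dense_params(in_features, units, bias=True):
    """Trainable weights of a fully connected layer."""
    return (in_features + (1 if bias else 0)) * units


# Hypothetical first layer on a grayscale (1-channel) input with 32 filters.
print(conv2d_params(5, 5, 1, 32))  # 832
print(conv2d_params(3, 3, 1, 32))  # 320: smaller kernels, fewer weights

# Hypothetical dense head for 2 classes: the flattened width dominates the count,
# so an extra pooling layer (one more layer) can sharply reduce the total.
print(dense_params(32 * 32 * 64, 2))  # 131074
print(dense_params(16 * 16 * 64, 2))  # 32770
```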

2.4. Brain Tumor Segmentation

In the second stage, tumor regions are extracted from the tumorous images. Brain tumor segmentation is a very complex task because of the complex anatomy of the brain [24], and the low contrast and correlated nature of MR scans make it even more difficult. A comprehensive analysis of brain tumors in MR images requires identifying patterns in the affected parts of the brain that differentiate the tumorous region from the rest of the brain. Brain tissue can be divided into three main types: Cerebrospinal Fluid (CSF), Gray Matter (GM), and White Matter (WM) [25]. An important task in segmenting brain MR images is to partition these tissues correctly; hence, labeling voxels with specific tissue types is of great importance in MR image segmentation [26]. As described earlier, the low contrast of brain MR images makes it difficult to differentiate among these three tissue types. This tissue-overlap issue is mainly addressed with an FCM-based brain tumor segmentation technique.

In the proposed method, tumor regions are extracted from the tumorous images, skipping the non-tumorous images, using the proposed neighboring FCM technique. To enhance the segmentation, the image intensity values were manipulated, and the tumor region was extracted using the features of neighboring images along with those of the actual image. The tumor region was further refined with a region-growing algorithm. Brain tumor segmentation is useful for identifying and diagnosing various types of tumors.
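
The region-growing refinement is not specified in detail, so the sketch below shows one common variant as an assumption: starting from a seed pixel (for example, one inside the brightest FCM cluster), it adds 4-connected neighbors whose intensity lies within a fixed tolerance of the seed intensity. The seed choice and tolerance are hypothetical parameters.

```python
from collections import deque

import numpy as np


def region_grow(image, seed, tolerance):
    """4-connected region growing from `seed` (row, col): accept neighbors whose
    intensity is within `tolerance` of the seed intensity."""
    image = np.asarray(image, dtype=np.float64)
    rows, cols = image.shape
    seed_value = image[seed]
    mask = np.zeros(image.shape, dtype=bool)
    mask[seed] = True
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and not mask[nr, nc]
                    and abs(image[nr, nc] - seed_value) <= tolerance):
                mask[nr, nc] = True
                queue.append((nr, nc))
    return mask


# Usage: a bright 2x2 blob plus an isolated bright pixel that is not connected to it.
img = np.zeros((6, 6))
img[1:3, 1:3] = 1.0
img[5, 5] = 1.0
print(region_grow(img, (1, 1), tolerance=0.1).sum())  # 4: the isolated pixel is excluded
```

Unlike a global threshold, region growing keeps only bright pixels connected to the seed, which is why it can remove isolated false positives left by clustering.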

For proper diagnosis and treatment planning, the tumor must not only be detected; additional information such as tumor class, size, and location must also be identified. The tumorous portion of the image is therefore segmented to prepare the data for a second classification phase that identifies the tumor class, size, and location. Segmentation is itself a complicated step because of the complex nature of the image and the overlapping tissues and layers in brain MR images.

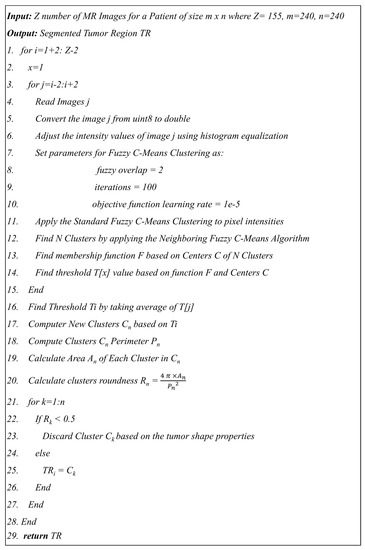

The proposed neighboring FCM segmentation method has an advantage over hard segmentation because it retains more information from the original image. In this method, FCM is used to extract tumor regions from the tumorous images, skipping the non-tumorous images. Figure 9 shows the proposed Algorithm 1, which finds the tumor regions in an MR image using neighboring FCM.

Figure 9.

Proposed Algorithm 1 for tumor segmentation using Neighboring FCM.

In the proposed neighboring FCM, the standard FCM objective is modified so that the optimized centroids are computed from an intensity that includes the two previous and two next images along with the actual image, as shown in Equation (1):

$$J = \sum_{j=1}^{N} \sum_{i=1}^{C} u_{ij}^{m} \left\lVert \bar{x}_j - v_i \right\rVert^{2} \qquad (1)$$

where $u_{ij}$ is the membership value of the pixel at position $j$ in class $i$, and $v_i$ is the centroid of class $i$. $\bar{x}_j$ is the image intensity at position $j$ averaged over the neighboring images (the two previous and two next images along with the actual image), instead of the single-image intensity $x_j$ at position $j$. The total number of classes $C$ is predefined, $N$ is the number of pixels, the norm $\lVert \cdot \rVert$ denotes the Euclidean distance, and $m$ is the weighting exponent applied to each fuzzy membership.
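
A minimal numpy sketch of the neighboring FCM idea follows: the intensity of each pixel is first averaged with the same position in up to two preceding and two following slices, and standard FCM is then run on the averaged intensities. Assumptions: the volume is a numpy array shaped (slices, rows, cols); averaging is a plain mean clipped at the volume boundaries; the fuzzifier m = 2, the number of classes, the random initialization, and the tolerance are illustrative choices, not the authors' settings, and the remaining steps of Algorithm 1 (Figure 9) are not reproduced.

```python
import numpy as np


def neighbor_average(volume, s, radius=2):
    """Average slice s with up to `radius` slices before and after it (clipped at the volume edges)."""
    lo, hi = max(0, s - radius), min(volume.shape[0], s + radius + 1)
    return volume[lo:hi].astype(np.float64).mean(axis=0)


def fcm(x, c=2, m=2.0, n_iter=100, tol=1e-5, seed=0):
    """Standard fuzzy C-means on intensities; returns (centroids, memberships shaped (c, N))."""
    x = np.asarray(x, dtype=np.float64).ravel()
    rng = np.random.default_rng(seed)
    u = rng.random((c, x.size))
    u /= u.sum(axis=0, keepdims=True)  # memberships of each pixel sum to 1
    for _ in range(n_iter):
        um = u ** m
        v = um @ x / um.sum(axis=1)  # centroid update: membership-weighted mean intensity
        d = np.abs(x[None, :] - v[:, None]) + 1e-12  # distance of every pixel to every centroid
        # Membership update: u_ij = 1 / sum_k (d_ij / d_kj)^(2 / (m - 1))
        u_new = 1.0 / ((d[:, None, :] / d[None, :, :]) ** (2.0 / (m - 1.0))).sum(axis=1)
        converged = np.max(np.abs(u_new - u)) < tol
        u = u_new
        if converged:
            break
    um = u ** m
    return um @ x / um.sum(axis=1), u


# Usage: a toy 5-slice volume whose right half is bright in every slice.
volume = np.zeros((5, 8, 8))
volume[:, :, 4:] = 1.0
avg = neighbor_average(volume, s=2)  # uses slices 0..4
centroids, memberships = fcm(avg, c=2)
labels = memberships.argmax(axis=0).reshape(avg.shape)  # defuzzified (hard) labels
print(np.sort(centroids))
```

Averaging across adjacent slices smooths isolated intensity spikes in a single slice before clustering, which is the intuition behind using neighboring images.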

2.5. Multiclass Glioma Tumor Classification

In the final stage, multiclass tumor classification is performed with an optimization-driven deep CNN model, proposed as an enhanced brain MR image classification technique that categorizes brain tumors into four types: Necrosis, Edema, Enhancing, and Non-Enhancing. The results are compared with recent CNN models such as LeNet, AlexNet, and GoogleNet, and the proposed deep CNN architecture achieves higher accuracy than these techniques on the same database.

An enhanced CNN model 3 with 11 hidden layers and a total of 241,624 trainable parameters, described in Table 6, is proposed for multiclass Glioma tumor classification. Its results were also compared with model 2, proposed for MR image classification in Section 2.3.2 and detailed in Table 5. In MR images, the tissues of the different Glioma classes (Necrosis, Edema, Enhancing, and Non-Enhancing) are much more strongly associated with one another than tumorous tissues are with non-tumorous (healthy) brain tissues. In a CNN, the lower convolutional layers mainly capture intensity and shape features from the tumorous MR images, while the deeper layers extract more abstract features from the feature maps, which are useful for correctly distinguishing the Glioma classes. For this reason, model 3 increases both the number of layers and the number of parameters to extract more useful features from the highly associated pixel values of the tumorous region in the MR images.

Table 6.

Proposed CNN model 3 specifically for Glioma tumor classification.

2.6. Results Evaluation Techniques

The proposed framework consists of several stages. To quantify the performance of each stage, accuracy, precision, recall, F-measure, and the Dice Similarity Coefficient (DSC) were computed from the experiments. These measures are based on the counts of True Positives (TP), False Positives (FP), True Negatives (TN), and False Negatives (FN) [24]. Precision is the proportion of predicted positive cases that are actually positive. Recall is the proportion of actual positive cases that are correctly predicted as positive. The F-measure combines precision and recall as their harmonic mean. DSC measures the spatial overlap between two segmentations, e.g., a predicted tumor mask and its ground truth.
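
The measures above can be computed directly from the confusion-matrix counts. The sketch below uses the standard definitions (specificity is included because it is also reported for segmentation); the function names and the example counts are illustrative, not results from this work.

```python
import numpy as np


def binary_metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics; a zero denominator yields 0.0."""
    def safe(num, den):
        return num / den if den else 0.0

    precision = safe(tp, tp + fp)
    recall = safe(tp, tp + fn)  # also called sensitivity
    return {
        "accuracy": safe(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": safe(tn, tn + fp),
        "f_measure": safe(2 * precision * recall, precision + recall),
        "dsc": safe(2 * tp, 2 * tp + fp + fn),
    }


def dice_coefficient(mask_a, mask_b):
    """DSC between two binary masks: 2|A and B| / (|A| + |B|); two empty masks count as a perfect match."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(a, b).sum() / denom


print(binary_metrics(tp=8, fp=2, tn=85, fn=5))
```

For a single binary problem, the DSC computed from counts equals the F-measure; the mask-based form is the one typically used to compare a segmentation with its ground truth.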

3. Experimental Results and Discussions

The final output of the proposed system specifies whether an image contains a tumor. For images that contain a tumor, the system further segments the tumorous region and classifies the tumor into one of four classes: Necrosis, Edema, Enhancing, and Non-enhancing. Based on this analysis, the system also reports the location and size of the tumor. The system outperforms previous methods reported in the literature in terms of accuracy, precision, recall, and F-measure, which helps support proper diagnosis and treatment.

3.1. Brain MR Image Classification Results

Several experiments were performed for the proposed CNN models with different combinations of batch size (100, 200, and 500) and number of epochs (4, 8, 16, 32, and 64). A batch size of 100 with 8 epochs achieved the best accuracy and was therefore chosen for the results presented here. Tables 7 and 8 present the accuracy, precision, recall, and F-measure of model 1 and model 2, compared with well-known CNN models such as LeNet [27], AlexNet [15,16], and GoogleNet [17,28]. AlexNet shows promising results, with accuracies of 96.95% and 96.53% for HGG and LGG, respectively, compared with LeNet and GoogleNet, but model 2 performs considerably better than AlexNet, LeNet, and GoogleNet. The well-known CNN models (LeNet, AlexNet, and GoogleNet) tend to overfit and do not perform well for brain tumor classification, because their complex architectures, with many layers, were designed for RGB input images and a very large number of output classes (1000 classes). For example, AlexNet has 64 filters in its first convolutional layer, which mostly encode color information. Because the batch size is small relative to the large dataset of 169,880 MR images, the enhanced model 2 achieves its best results with only 8 epochs. The results also show that effectiveness is directly related to the number of epochs, increasing as the number of epochs increases. The proposed models, especially model 2, achieved the best accuracy for both LGG and HGG and for all modalities. For HGG, model 2 achieved its best classification accuracy on the Flair modality, 98.74%, with a precision of 0.983, recall of 0.985, and F-measure of 0.984. For LGG, model 2 achieved its best accuracy on the Flair modality, 97.33%, with a precision of 0.960, recall of 0.988, and F-measure of 0.974. Model 2 outperformed model 1 as well as the well-known CNN models listed above.
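
The batch-size and epoch search described above is an exhaustive grid over 3 × 5 = 15 settings. The sketch below shows that search loop; `evaluate` is a hypothetical callable that would train a model with the given settings and return a validation score, and the toy scoring function in the usage line is made up purely to exercise the loop.

```python
from itertools import product

BATCH_SIZES = (100, 200, 500)
EPOCHS = (4, 8, 16, 32, 64)


def grid_search(evaluate, batch_sizes=BATCH_SIZES, epochs=EPOCHS):
    """Evaluate every (batch_size, epochs) pair; return the best pair, its score, and all scores."""
    scores = {(b, e): evaluate(b, e) for b, e in product(batch_sizes, epochs)}
    best = max(scores, key=scores.get)  # ties resolve to the first pair in grid order
    return best, scores[best], scores


# Usage with a toy scoring function standing in for "train, then measure validation accuracy".
best, score, scores = grid_search(lambda b, e: -abs(b - 100) - abs(e - 8))
print(best, len(scores))  # (100, 8) 15
```

Selecting the configuration on a validation split rather than on the test split keeps the reported test accuracy unbiased by the search.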

Table 7.

Classification results using proposed CNN Models as classifier and comparison with existing CNN models for brain HGG MR images.

Table 8.

Classification results using proposed CNN Models as classifier and comparison with existing CNN models for brain LGG MR images.

The proposed models were also tested on the AANLIB and PMIS datasets, and the results are compared with the LeNet, AlexNet and GoogleNet CNN models in Table 9. The results confirm that, for both the AANLIB and PMIS datasets, the proposed CNN models outperformed the well-known CNN models, with model 2 outperforming all other models by achieving 100% accuracy. This indicates that the proposed models mitigate the problems of overfitting and limited data availability.

Table 9.

Experimental Results for Validation Datasets (PMIS-MRI and AANLIB).

Table 10 summarizes the results obtained in this work and compares them with the latest literature. The proposed methods achieved average accuracies ranging from 96.88% to a maximum of 98.74%, whereas previously published results reported lower accuracies, as shown in Table 10. The proposed CNN model 2 classifier achieved 98.74% on a very large dataset (BraTS 2015), which indicates the robustness of the approach.

Table 10.

Comparison of the proposed method for brain MR Images Classification with latest literature techniques.

3.2. Glioma Tumor Segmentation Results

Tumor regions were extracted from the tumorous images, skipping the non-tumorous images, using the proposed neighboring FCM based tumor segmentation method. The brain tumor segmentation results are evaluated by visual comparison and by accuracy, specificity, sensitivity, Dice similarity coefficient (DSC) and mutual information (MI).
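The pixel-wise metrics listed above can be computed from a predicted binary mask and a ground-truth mask. The NumPy sketch below assumes the usual confusion-count definitions (DSC = 2TP / (2TP + FP + FN)) and computes MI between the two binary masks from their joint histogram; the paper does not state exactly which images its MI is computed between, so that part is an assumption. The toy masks are hypothetical.

```python
import numpy as np


def segmentation_metrics(pred, truth):
    """Pixel-wise accuracy, sensitivity, specificity and DSC for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.count_nonzero(pred & truth)
    tn = np.count_nonzero(~pred & ~truth)
    fp = np.count_nonzero(pred & ~truth)
    fn = np.count_nonzero(~pred & truth)
    return {
        "accuracy": (tp + tn) / pred.size,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "dsc": 2 * tp / (2 * tp + fp + fn),
    }


def mutual_information(pred, truth):
    """Mutual information (bits) between two binary masks, from their joint histogram."""
    a = np.asarray(pred, dtype=bool).ravel()
    b = np.asarray(truth, dtype=bool).ravel()
    joint = np.array([[np.mean(~a & ~b), np.mean(~a & b)],
                      [np.mean(a & ~b), np.mean(a & b)]])
    pa, pb = joint.sum(axis=1), joint.sum(axis=0)
    mi = 0.0
    for i in range(2):
        for j in range(2):
            if joint[i, j] > 0:
                mi += joint[i, j] * np.log2(joint[i, j] / (pa[i] * pb[j]))
    return float(mi)


truth = np.zeros((8, 8), dtype=bool)
truth[2:6, 2:6] = True                  # 16-pixel "ground-truth" tumor
pred = np.zeros_like(truth)
pred[2:6, 3:7] = True                   # same square shifted by one column
print(segmentation_metrics(pred, truth))   # dsc = 24 / 32 = 0.75
print(mutual_information(truth, truth))
```

For identical masks, MI reduces to the entropy of the mask, which is why a perfect segmentation maximizes it.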

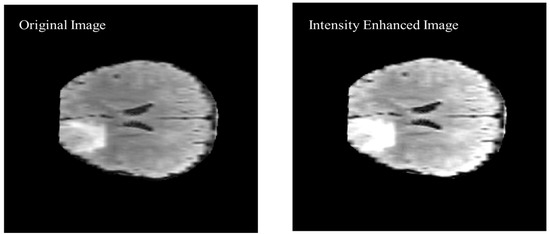

As shown in the proposed algorithm (Figure 9), image intensity values were adjusted to improve segmentation by saturating the highest 1% and lowest 1% of all pixel values in the MR image, which enhances the contrast of the grayscale image. This enhancement was applied to all images before converting them to black and white. Figure 10 visually compares an original brain MR sample image with the intensity-adjusted image.
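The 1% saturation step can be sketched as a percentile-based contrast stretch. This NumPy version is an illustration, not the author's code: it assumes linear rescaling between the 1st and 99th percentiles into [0, 1], and the toy 240 × 240 array (57,600 pixels, the MR image size reported in this section) is hypothetical.

```python
import numpy as np


def saturate_and_stretch(image, low_pct=1.0, high_pct=99.0):
    """Saturate the darkest and brightest 1% of pixels, then rescale to [0, 1]."""
    img = np.asarray(image, dtype=np.float64)
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:                       # flat image: nothing to stretch
        return np.zeros_like(img)
    return np.clip((img - lo) / (hi - lo), 0.0, 1.0)


slice_ = np.arange(240 * 240, dtype=np.float64).reshape(240, 240)  # toy 240x240 "slice"
out = saturate_and_stretch(slice_)
print(out.min(), out.max())               # 0.0 1.0
print(np.count_nonzero(out == 0.0))       # 576: the lowest 1% of 57,600 pixels
```

Saturating a small fraction at each end keeps a few extreme pixels from compressing the contrast of the remaining intensities.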

Figure 10.

Visual comparison of the original image and intensity enhanced image.

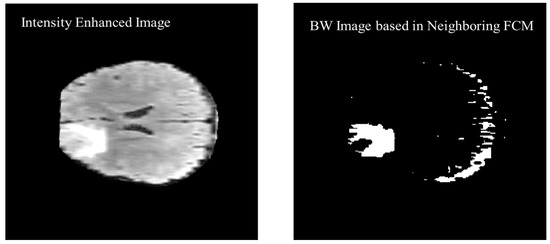

The two previous and two following images are used as references, along with the current image, to calculate the threshold value for segmentation. Figure 11 shows the black and white (BW) binary image generated by applying the neighboring FCM threshold to the intensity-enhanced MR image.
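The paper does not give the exact rule that turns the pooled neighboring images into a threshold, so the following is only a hypothetical sketch. It runs standard fuzzy C-means on the intensities of the current image plus two images on each side (`radius=2`). The number of clusters (3), the fuzzifier `m=2`, and the choice of cutting halfway between the two brightest cluster centers are all assumptions, as is the synthetic volume.

```python
import numpy as np


def fcm_1d(values, n_clusters=3, m=2.0, max_iter=100, tol=1e-6):
    """Standard fuzzy C-means on 1-D intensities; returns sorted cluster centers."""
    x = np.asarray(values, dtype=np.float64).ravel()
    centers = np.linspace(x.min(), x.max(), n_clusters)   # deterministic start
    for _ in range(max_iter):
        dist = np.abs(x[None, :] - centers[:, None]) + 1e-12  # (clusters, pixels)
        # Membership u_ij = 1 / sum_k (d_ij / d_kj) ** (2 / (m - 1)).
        ratio = (dist[:, None, :] / dist[None, :, :]) ** (2.0 / (m - 1.0))
        u = 1.0 / ratio.sum(axis=1)
        um = u ** m
        new_centers = (um @ x) / um.sum(axis=1)               # weighted means
        converged = np.max(np.abs(new_centers - centers)) < tol
        centers = new_centers
        if converged:
            break
    return np.sort(centers)


def neighboring_fcm_threshold(volume, index, radius=2, n_clusters=3):
    """Hypothetical threshold for image `index`, pooling `radius` images on each side."""
    lo, hi = max(0, index - radius), min(len(volume), index + radius + 1)
    pooled = np.concatenate([np.ravel(s) for s in volume[lo:hi]])
    centers = fcm_1d(pooled, n_clusters=n_clusters)
    # Assumption: cut halfway between the two brightest cluster centers.
    return 0.5 * (centers[-1] + centers[-2])


rng = np.random.default_rng(0)
volume = np.full((5, 20, 20), 0.1)          # toy background
volume[:, 5:15, 5:15] = 0.4                 # toy brain tissue
volume[:, 8:12, 8:12] = 0.9                 # toy bright lesion
volume += rng.normal(0.0, 0.01, volume.shape)
t = neighboring_fcm_threshold(volume, index=2)
binary = volume[2] > t                      # black-and-white image for image 2
print(round(t, 2), binary.sum())
```

Pooling neighboring images makes the cluster centers, and therefore the threshold, less sensitive to noise in any single image.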

Figure 11.

Black and White (BW) binary image generated based on the neighboring FCM threshold applied to intensity enhanced image.

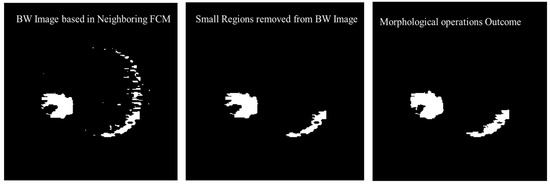

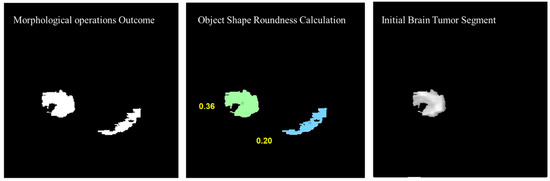

Morphological operations are applied to further enhance the tumor region in the binary image. Small regions with fewer than 256 connected pixels are removed from the binary image (each MR image contains 57,600 pixels in total). Erosion and dilation morphological operations with a structure size of pixels are applied to fill the small gaps in the binary image. Figure 12 shows the visual results after removing the small regions from the binary image and applying the morphological operations (erosion and dilation).
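The clean-up step can be sketched with plain NumPy: label connected components, drop those with fewer than 256 pixels, and then apply dilation and erosion. Several details are assumptions. The structuring-element size is not stated, so a hypothetical 3 × 3 square (`k=3`, odd sizes only) is used, and 8-connectivity is assumed. Because dilation followed by erosion (a closing) is the order that fills small gaps, the sketch uses that order; erosion first (an opening) would instead remove thin protrusions.

```python
from collections import deque

import numpy as np


def label_components(mask):
    """Label 8-connected foreground components; returns (labels, count)."""
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=np.int32)
    rows, cols = mask.shape
    count = 0
    for r, c in zip(*np.nonzero(mask)):
        if labels[r, c]:
            continue
        count += 1
        labels[r, c] = count
        queue = deque([(r, c)])
        while queue:
            y, x = queue.popleft()
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = count
                        queue.append((ny, nx))
    return labels, count


def remove_small_regions(mask, min_size=256):
    """Drop connected components with fewer than `min_size` pixels."""
    labels, count = label_components(mask)
    sizes = np.bincount(labels.ravel(), minlength=count + 1)
    keep = sizes >= min_size
    keep[0] = False                      # label 0 is the background
    return keep[labels]


def _neighborhoods(mask, k):
    """Stack of all k x k shifts of a zero-padded mask (square structuring element, odd k)."""
    p = k // 2
    padded = np.pad(mask, p, mode="constant", constant_values=False)
    h, w = mask.shape
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)])


def erode(mask, k=3):
    return _neighborhoods(np.asarray(mask, dtype=bool), k).all(axis=0)


def dilate(mask, k=3):
    return _neighborhoods(np.asarray(mask, dtype=bool), k).any(axis=0)


mask = np.zeros((40, 40), dtype=bool)
mask[5:25, 5:25] = True       # 400-pixel candidate region
mask[15, 15] = False          # one-pixel gap inside it
mask[30:33, 30:33] = True     # 9-pixel speck, below the 256-pixel limit
cleaned = remove_small_regions(mask, min_size=256)
filled = erode(dilate(cleaned, k=3), k=3)   # dilation then erosion (closing)
print(cleaned[31, 31], filled[15, 15], filled.sum())  # False True 400
```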

Figure 12.

Visual results after removing the small regions from the binary image and applying the morphological operations.

To remove the non-tumor parts from the binary image, the objects in the binary image were counted and the tumor region was selected based on the shape roundness of each object. In Figure 13, the roundness of each object in the binary image is measured and displayed. The initial brain tumor segment is extracted based on the best roundness value.
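The paper does not define its roundness measure. A common choice is 4πA/P², but it depends on a reliable perimeter estimate, so this hypothetical sketch swaps in a simpler measure: object area divided by the area of the smallest centroid-centered circle that encloses it (about 0.85 for a digital disk, near 0 for a thin bar). It assumes "best" means highest, that small specks have already been removed (a single pixel would otherwise score highly), and 8-connectivity.

```python
from collections import deque

import numpy as np


def components(mask):
    """Yield the pixel coordinates of each 8-connected foreground component."""
    mask = np.asarray(mask, dtype=bool)
    seen = np.zeros(mask.shape, dtype=bool)
    rows, cols = mask.shape
    for r, c in zip(*np.nonzero(mask)):
        if seen[r, c]:
            continue
        seen[r, c] = True
        queue, pixels = deque([(r, c)]), []
        while queue:
            y, x = queue.popleft()
            pixels.append((y, x))
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
        yield np.array(pixels)


def roundness(pixels):
    """Area divided by the area of the centroid-centered circle enclosing the object."""
    centroid = pixels.mean(axis=0)
    r_max = np.sqrt(((pixels - centroid) ** 2).sum(axis=1)).max() + 0.5  # half-pixel extent
    return len(pixels) / (np.pi * r_max ** 2)


def most_round_object(mask):
    """Return (mask of the roundest component, its roundness)."""
    best, best_score = None, -1.0
    for pixels in components(mask):
        score = roundness(pixels)
        if score > best_score:
            best, best_score = pixels, score
    out = np.zeros(np.shape(mask), dtype=bool)
    if best is not None:
        out[best[:, 0], best[:, 1]] = True
    return out, best_score


yy, xx = np.mgrid[0:30, 0:35]
mask = (yy - 10) ** 2 + (xx - 10) ** 2 <= 36   # disk of radius 6 (113 pixels)
mask[25:27, 2:32] = True                        # long thin bar
tumor, score = most_round_object(mask)
print(tumor[10, 10], tumor[25, 10], round(score, 2))
```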

Figure 13.

Object shape roundness calculation and generating the initial tumor segment.

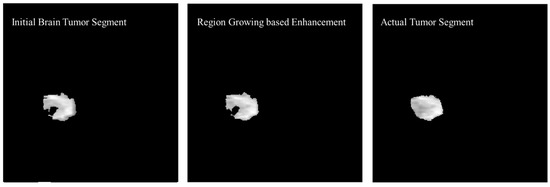

The initial tumor segment is then enhanced using the region growing method. The first step of edge segmentation based on region growing is to find the seed pixels, which are selected from the neighboring FCM based initial segmentation. A geometric structure is first obtained from the gray-level image, and the centers of adjacent labeled edges are then given as the initial input to the algorithm. Figure 14 shows the enhancement of the initial brain tumor segment after applying the region growing method, together with a visual comparison with the actual tumor segment.
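A generic seeded region-growing step can be sketched as follows. This is not the paper's exact procedure, which derives seeds from labeled edge centers. Here every pixel of the initial segment is used as a seed, and a 4-connected neighbor joins the region when its intensity is within a hypothetical tolerance `tol` of the current region mean. The toy image is also hypothetical.

```python
from collections import deque

import numpy as np


def region_grow(image, seeds, tol=0.1):
    """Grow a 4-connected region from seed pixels.

    A neighbor joins the region when its intensity differs from the current
    region mean by at most `tol` (hypothetical criterion and value).
    """
    img = np.asarray(image, dtype=np.float64)
    grown = np.zeros(img.shape, dtype=bool)
    queue, total, count = deque(), 0.0, 0
    for r, c in seeds:
        if not grown[r, c]:
            grown[r, c] = True
            total += img[r, c]
            count += 1
            queue.append((r, c))
    while queue:
        y, x = queue.popleft()
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < img.shape[0] and 0 <= nx < img.shape[1] and not grown[ny, nx]:
                if abs(img[ny, nx] - total / count) <= tol:
                    grown[ny, nx] = True
                    total += img[ny, nx]
                    count += 1
                    queue.append((ny, nx))
    return grown


image = np.full((20, 20), 0.1)
image[5:15, 5:15] = 0.8                 # toy tumor, 100 pixels
initial = np.zeros_like(image, dtype=bool)
initial[8:11, 8:11] = True              # under-segmented initial segment
refined = region_grow(image, seeds=np.argwhere(initial), tol=0.1)
print(initial.sum(), refined.sum())     # 9 100
```

Comparing against the running region mean, rather than the seed value alone, lets the region adapt gradually to slowly varying tumor intensities.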

Figure 14.

Enhancement in the initial brain tumor segment by applying region growing method and visual comparison with the actual tumor segment.

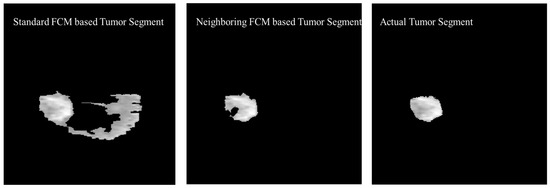

For comparison with the proposed neighboring FCM based tumor segmentation method, experimental results were also generated using standard FCM. Figure 15 visually compares the standard FCM based tumor segment with the neighboring FCM based tumor segment and the actual tumor segment.

Figure 15.

Visual Comparison of the Standard FCM based tumor segment with the Neighboring FCM based tumor segment and the actual tumor segment.

Statistical analysis confirms the better effectiveness of neighboring FCM. Notable improvements of 14.3% in accuracy and 16.37% in DSC were obtained, along with improvements in the other parameters. In the proposed neighboring FCM technique, the labeling of each image is influenced only by its immediate neighbors, so it gives better results than standard FCM. The proposed method outperformed standard FCM in terms of average DSC, specificity, and sensitivity, with values of 90.87%, 99.86% and 95.52%, compared with 66.86%, 87.22% and 81.29%, respectively, for standard FCM.

Table 11 compares the proposed segmentation technique with techniques proposed in the recent literature. The Dice similarity coefficient (DSC) is used for comparison because it is the metric commonly adopted in recent literature. The results indicate that the proposed segmentation technique achieved an average DSC of 90.87%, which outperforms all the methods listed in Table 11.

Table 11.

Comparison of the proposed brain Tumor Segmentation method with latest literature techniques.

This improvement is mainly due to the extra neighboring information incorporated along with the original image, and to the region growing algorithm used in the final stage to obtain a more accurate segmented image. Standard FCM is highly sensitive to noise, and its computational time increases rapidly when pixel intensities are non-homogeneous. The original FCM function was therefore modified to handle non-homogeneous pixel intensities: in the proposed method, each image is influenced by its immediate neighbors. This creates a regularizing effect on the labeling with respect to the neighbors, which biases the solution toward more homogeneous regions.
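For reference, the standard FCM objective and its alternating updates are shown below, with pixel intensities $x_j$, cluster centers $v_i$, memberships $u_{ij}$, and fuzzifier $m > 1$. The paper does not state its modified objective. The third expression is one well-known neighborhood-regularized variant, from Ahmed et al. (IEEE Trans. Med. Imaging, 2002) and often called FCM_S. It is included only to illustrate how a neighbor term regularizes labeling. Here $\mathcal{N}_j$ is a neighbor set of pixel $j$ with $N_R$ members, and $\alpha$ weights the neighbor term. Taking $\mathcal{N}_j$ to be the same pixel location in the adjacent images would match the idea described above, but that mapping is an assumption.

$$
J_m = \sum_{i=1}^{c}\sum_{j=1}^{N} u_{ij}^{m}\,\lVert x_j - v_i\rVert^{2},
\qquad \sum_{i=1}^{c} u_{ij} = 1,
$$

$$
u_{ij} = \left[\sum_{k=1}^{c}\left(\frac{\lVert x_j - v_i\rVert}{\lVert x_j - v_k\rVert}\right)^{\frac{2}{m-1}}\right]^{-1},
\qquad
v_i = \frac{\sum_{j=1}^{N} u_{ij}^{m}\,x_j}{\sum_{j=1}^{N} u_{ij}^{m}},
$$

$$
J_m^{S} = \sum_{i=1}^{c}\sum_{j=1}^{N} u_{ij}^{m}\,\lVert x_j - v_i\rVert^{2}
+ \frac{\alpha}{N_R}\sum_{i=1}^{c}\sum_{j=1}^{N} u_{ij}^{m}\sum_{r\in\mathcal{N}_j}\lVert x_r - v_i\rVert^{2}.
$$

The second term penalizes assigning pixel $j$ to a cluster whose center is far from its neighbors' intensities, which pushes the labeling toward the more homogeneous solutions described above.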

3.3. Glioma Tumor Classification Results

Although model 2 was proposed for the binary classification of MR images into tumorous and non-tumorous, it still performed better than LeNet, AlexNet and GoogleNet for multiclass Glioma tumor classification, as shown in Table 12 and Table 13. Model 3 further improved performance by reducing the convolutional filter size and the number of parameters. Two CNN architectures were evaluated: model 2, which is the same model proposed for binary classification (Table 6), and model 3, an optimized architecture for multiclass classification. As shown in Table 12 and Table 13, model 3 achieved the highest average accuracies of 95.94% and 96.30% for HGG and LGG, respectively, using Flair images. The combination of the proposed enhanced model 3 with a batch size of 200 and 8 epochs reduced overfitting and improved classification accuracy compared with the well-known CNN architectures LeNet, AlexNet, and GoogleNet. It also outperformed model 2.

Table 12.

Multiclass Glioma Tumor Classification results using the proposed CNN classifiers, compared with other well-known CNN models, for brain HGG MR images.

Table 13.

Multiclass Glioma Tumor Classification results using the proposed CNN classifiers, compared with other well-known CNN models, for brain LGG MR images.

The proposed enhanced CNN model 3 was used as the classifier, and parameters such as batch size and number of epochs were further tuned based on the accuracy results. The results of model 3 were compared with those of the existing CNN models (LeNet, AlexNet and GoogleNet) and model 2. For HGG Glioma, model 3 with a batch size of 200 and 8 epochs achieved the highest average accuracy of 95.94% with Flair MR images. The improvements in average accuracy are shown in Table 12 and Table 13. For LGG Glioma classification, the same setting (a batch size of 200 and 8 epochs) gave model 3 its highest average accuracy of 96.30% with Flair MR images.
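The batch-size and epoch tuning amounts to an exhaustive grid search. The sketch below reuses the grid reported in Section 3.1 (batch sizes 100, 200, 500; epochs 4 to 64) and assumes the same grid applies to model 3. `evaluate` is a hypothetical callable that would train a model with the given settings and return its validation accuracy. Here it is replaced by a toy function so the example runs.

```python
from itertools import product

BATCH_SIZES = (100, 200, 500)
EPOCHS = (4, 8, 16, 32, 64)


def grid_search(evaluate, batch_sizes=BATCH_SIZES, epochs=EPOCHS):
    """Return (best_accuracy, batch_size, epochs) over the full grid.

    `evaluate(batch_size, epochs)` is a hypothetical callable that trains a
    model with those settings and returns its validation accuracy.
    """
    results = {(b, e): evaluate(b, e) for b, e in product(batch_sizes, epochs)}
    (best_b, best_e), best_acc = max(results.items(), key=lambda kv: kv[1])
    return best_acc, best_b, best_e


# Toy stand-in for real training, peaking at batch size 200 and 8 epochs.
def toy_evaluate(batch_size, epochs):
    return 0.96 - abs(batch_size - 200) / 1e4 - abs(epochs - 8) / 1e3


print(grid_search(toy_evaluate))  # (0.96, 200, 8)
```

Each grid point requires a full training run (15 runs here), so selecting on a validation split rather than the test set keeps the reported test accuracy unbiased.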

AlexNet shows the most promising results among the existing models, with average accuracies of 92.31% and 93.14% for HGG and LGG, respectively, but model 3 still performs considerably better than AlexNet, LeNet and GoogleNet. The well-known CNN models (LeNet, AlexNet, and GoogleNet) do not perform well for brain tumor classification because their complex architectures, with a high number of layers and parameters, were designed for a very large number of output classes (1000 classes) and RGB input images. For example, AlexNet has 64 filters in the first convolutional layer, which mostly encode color information. When a deep learning model has more parameters than there are training samples, as observed for LeNet, AlexNet and GoogleNet, regularization becomes a critical step. How the CNN architecture approximates functions of the input data plays an important role, and this approximation depends on the choice of network parameters such as depth and width. Regularization helps avoid overfitting, especially as the complexity of the model increases. Hence, from the statistical analysis presented in Table 12 and Table 13, it can be concluded that CNN model 3 used as a classifier achieves the best classification accuracy for all classes and Glioma types with a batch size of 200 and 8 epochs.
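To make the parameter-count argument concrete, the sketch below counts trainable weights for a convolution layer and a fully connected layer. The layer sizes follow torchvision's AlexNet implementation: a first convolution with 64 filters of 11 × 11, and a final 4096-unit hidden layer feeding a 1000-class output. The single-channel and 4-class comparisons are hypothetical, chosen to mirror grayscale MR input and the four Glioma classes. They are not the paper's models.

```python
def conv2d_params(kernel, in_channels, filters, bias=True):
    """Trainable parameters of a 2-D convolution layer with square kernels."""
    return (kernel * kernel * in_channels + int(bias)) * filters


def dense_params(inputs, outputs, bias=True):
    """Trainable parameters of a fully connected layer."""
    return (inputs + int(bias)) * outputs


# First convolution of torchvision's AlexNet: 64 filters of size 11 x 11.
print(conv2d_params(11, 3, 64))   # RGB input: 23296
print(conv2d_params(11, 1, 64))   # single-channel MR slice: 7808

# AlexNet's final 4096-unit layer feeding a 1000-class head vs a 4-class head.
print(dense_params(4096, 1000))   # 4097000
print(dense_params(4096, 4))      # 16388
```

Most of the output-layer weights in a 1000-class head serve classes that do not exist in a 4-class tumor problem. This is one concrete source of the excess capacity that makes regularization critical.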

As shown in Table 14, the proposed technique using a CNN as the classifier achieved an accuracy of 96.30% for multiclass classification. Compared with other recent techniques from the literature, including recently published methods that use the same dataset, the proposed technique outperformed all those listed in Table 14.

Table 14.

Comparison of the proposed method for multiclass Glioma Tumor Classification with literature techniques.

4. Conclusions and Future Work

A brain tumor is a deadly and painful disease that can lead to death if not diagnosed in its early stages. Manual extraction of tumor segments by doctors is a time-consuming and irreversible process. In this study, a framework named DeepTumor is presented for the multistage-multiclass classification of Glioma tumors into four classes: edema, necrosis, enhancing and non-enhancing. The proposed multistage automated brain tumor classification method achieves high accuracy and can assist radiologists in the accurate and early diagnosis of brain tumors. The experiments were performed using the multimodality (Flair, T1, T1c, T2) BraTS 2015 MRI dataset. In the first stage, the proposed CNN classifier model 2 achieved 98.74% accuracy for High-Grade Glioma (HGG) and 97.33% accuracy for Low-Grade Glioma (LGG) MR image classification. In the second stage, the tumorous portion of the image was segmented using the proposed enhanced technique, which uses Fuzzy C-means (FCM) information from the neighboring images along with the actual image. With this technique, the tumor region was extracted with higher accuracy. In the third stage, the segmented tumors were classified into four Glioma tumor classes: necrosis, edema, non-enhancing tumor, and enhancing tumor. The experimental results showed that an average accuracy of 96.30% was achieved for multiclass tumor classification using the deep CNN classifier.

As future work, an automated decision support system can be integrated. The system would provide intelligent decision support for doctors by analyzing the size, shape, location, and type of the tumor, and by predicting the prevalence rate, the severity of brain cancer, and surgery decisions. The size and type of a Glioma brain tumor are direct indicators of the tumor grade and the severity of brain cancer. As structural and spatial parameters of brain tumors such as size, shape, and location play an important role in radiologists’ decisions, future work can include methods to approximate the volume of the brain tumor and create a 3D model. Machine learning algorithms that combine CNN features from MR images with radiomic features can also be used to predict patient survival. Additionally, the proposed method will continue to be enhanced to achieve higher accuracy in brain tumor segmentation and classification, and the same method and its enhancements will be applied to other medical conditions such as skin cancer.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable, as the dataset used in this research was obtained from a public resource.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this research were acquired from publicly available sources.

Acknowledgments

The author acknowledges the help and support of Jaafar Alghazo (Virginia Military Institute, VA, USA) for the review and grammar checking.

Conflicts of Interest

The author declares no conflict of interest.

References

- [1] Kalaiselvi, T.; Nagaraja, N. An Automatic Segmentation of Brain Tumor from MRI Scans through Wavelet Transformations. Int. J. Image Graph. Signal Process. 2016, 8, 59–65.

- [2] Latif, G.; Iskandar, D.A.; Alghazo, J. Multiclass brain tumor classification using region growing based tumor segmentation and ensemble wavelet features. In Proceedings of the 2018 International Conference on Computing and Big Data, Shenzhen, China, 28–30 April 2018; pp. 67–72.

- [3] Iqbal, S.; Ghani, M.U.; Saba, T.; Rehman, A. Brain Tumor Segmentation in Multi-Spectral MRI Using Convolutional Neural Networks (CNN). Microsc. Res. Tech. 2018, 81, 419–427.

- [4] Abdelaziz Ismael, S.A.; Mohammed, A.; Hefny, H. An Enhanced Deep Learning Approach for Brain Cancer MRI Images Classification Using Residual Networks. Artif. Intell. Med. 2020, 102, 101779.

- [5] Latif, G.; Ben Brahim, G.; Iskandar, D.N.F.A.; Bashar, A.; Alghazo, J. Glioma Tumors’ Classification Using Deep-Neural-Network-Based Features with SVM Classifier. Diagnostics 2022, 12, 1018.

- [6] Cherry, S.R. Multimodality Imaging: Beyond PET/CT and SPECT/CT. Semin. Nucl. Med. 2009, 39, 348–353.

- [7] Foster-Gareau, P.; Heyn, C.; Alejski, A.; Rutt, B.K. Imaging Single Mammalian Cells with a 1.5 T Clinical MRI Scanner. Magn. Reson. Med. 2003, 49, 968–971.

- [8] De Leeuw, F.-E. Prevalence of Cerebral White Matter Lesions in Elderly People: A Population Based Magnetic Resonance Imaging Study. The Rotterdam Scan Study. J. Neurol. Neurosurg. Psychiatry 2001, 70, 9–14.

- [9] Bauer, S.; Wiest, R.; Nolte, L.-P.; Reyes, M. A Survey of MRI-Based Medical Image Analysis for Brain Tumor Studies. Phys. Med. Biol. 2013, 58, R97–R129.

- [10] Seetha, J.; Raja, S.S. Brain Tumor Classification Using Convolutional Neural Networks. Biomed. Pharmacol. J. 2018, 11, 1457–1461.

- [11] Mzoughi, H.; Njeh, I.; Wali, A.; Slima, M.B.; BenHamida, A.; Mhiri, C.; Mahfoudhe, K.B. Deep Multi-Scale 3D Convolutional Neural Network (CNN) for MRI Gliomas Brain Tumor Classification. J. Digit. Imaging 2020, 33, 903–915.

- [12] Kumar, S.; Mankame, D.P. Optimization Driven Deep Convolution Neural Network for Brain Tumor Classification. Biocybern. Biomed. Eng. 2020, 40, 1190–1204.

- [13] Aamir, M.; Rahman, Z.; Dayo, Z.A.; Abro, W.A.; Uddin, M.I.; Khan, I.; Imran, A.S.; Ali, Z.; Ishfaq, M.; Guan, Y.; et al. A Deep Learning Approach for Brain Tumor Classification Using MRI Images. Comput. Electr. Eng. 2022, 101, 108105.

- [14] Xie, Y.; Zaccagna, F.; Rundo, L.; Testa, C.; Agati, R.; Lodi, R.; Manners, D.N.; Tonon, C. Convolutional Neural Network Techniques for Brain Tumor Classification (from 2015 to 2022): Review, Challenges, and Future Perspectives. Diagnostics 2022, 12, 1850.

- [15] Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- [16] Hosny, K.M.; Kassem, M.A.; Foaud, M.M. Classification of Skin Lesions Using Transfer Learning and Augmentation with Alex-Net. PLoS ONE 2019, 14, e0217293.

- [17] Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- [18] Butt, M.M.; Iskandar, D.N.F.A.; Abdelhamid, S.E.; Latif, G.; Alghazo, R. Diabetic Retinopathy Detection from Fundus Images of the Eye Using Hybrid Deep Learning Features. Diagnostics 2022, 12, 1607.

- [19] Guan, Y.; Muhammad, A.; Rahman, Z.; Ali, A.; Abro, W.A.; Dayo, Z.A.; Bhutta, M.S.; Hu, Z. A Framework for Efficient Brain Tumor Classification Using MRI Images. Math. Biosci. Eng. 2021, 18, 5790–5816.

- [20] Schmidhuber, J. Deep Learning in Neural Networks: An Overview. Neural Netw. 2015, 61, 85–117.

- [21] Cai, L.; Gao, J.; Zhao, D. A Review of the Application of Deep Learning in Medical Image Classification and Segmentation. Ann. Transl. Med. 2020, 8, 713.

- [22] Menze, B.H.; Jakab, A.; Bauer, S.; Kalpathy-Cramer, J.; Farahani, K.; Kirby, J.; Burren, Y.; Porz, N.; Slotboom, J.; Wiest, R.; et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Trans. Med. Imaging 2015, 34, 1993–2024.

- [23] Qaddoumi, I.; Sultan, I.; Gajjar, A. Outcome and Prognostic Features in Pediatric Gliomas. Cancer 2009, 115, 5761–5770.

- [24] Latif, G.; Awang Iskandar, D.; Jaffar, A.; Mohsin Butt, M. Multimodal Brain Tumor Segmentation Using Neighboring Image Features. J. Telecommun. Electron. Comput. Eng. (JTEC) 2017, 9, 37–42.

- [25] Latif, G.; Iskandar, D.N.F.A.; Alghazo, J.; Jaffar, A. Improving Brain MR Image Classification for Tumor Segmentation Using Phase Congruency. Curr. Med. Imaging Rev. 2018, 14, 914–922.

- [26] Pohl, K.M.; Bouix, S.; Kikinis, R.; Grimson, W.E.L. Anatomical Guided Segmentation with Non-Stationary Tissue Class Distributions in an Expectation-Maximization Framework. In Proceedings of the 2004 2nd IEEE International Symposium on Biomedical Imaging: Nano to Macro (IEEE Cat No. 04EX821), Arlington, VA, USA, 15–18 April 2004; IEEE: New York, NY, USA, 2022.

- [27] Bouti, A.; Mahraz, M.A.; Riffi, J.; Tairi, H. A Robust System for Road Sign Detection and Classification Using LeNet Architecture Based on Convolutional Neural Network. Soft Comput. 2019, 24, 6721–6733.

- [28] Bai, J.; Jiang, H.; Li, S.; Ma, X. NHL Pathological Image Classification Based on Hierarchical Local Information and GoogLeNet-Based Representations. BioMed Res. Int. 2019, 2019, 1065652.

- [29] Srinivas, B.; Rao, G.S. A hybrid CNN-KNN model for MRI brain tumor classification. Int. J. Recent Technol. Eng. (IJRTE) 2019, 2, 2277–3878.

- [30] Sriramakrishnan, P.; Kalaiselvi, T.; Nagaraja, P.; Mukila, K. Tumorous Slices Classification from MRI Brain Volumes using Block based Features Extraction and Random. Int. J. Comput. Sci. Eng. 2018, 6, 191–196.

- [31] Wasule, V.; Sonar, P. Classification of Brain MRI Using SVM and KNN Classifier. In Proceedings of the 2017 Third International Conference on Sensing, Signal Processing and Security (ICSSS), Chennai, India, 4–5 May 2017.

- [32] Sun, Y.; Zhang, W.; Gu, H.; Liu, C.; Hong, S.; Xu, W.; Yang, J.; Gui, G. Convolutional Neural Network Based Models for Improving Super-Resolution Imaging. IEEE Access 2019, 7, 43042–43051.

- [33] Tahir, B.; Iqbal, S.; Usman Ghani Khan, M.; Saba, T.; Mehmood, Z.; Anjum, A.; Mahmood, T. Feature Enhancement Framework for Brain Tumor Segmentation and Classification. Microsc. Res. Tech. 2019, 82, 803–811.

- [34] Soltaninejad, M.; Yang, G.; Lambrou, T.; Allinson, N.; Jones, T.L.; Barrick, T.R.; Howe, F.A.; Ye, X. Supervised Learning Based Multimodal MRI Brain Tumour Segmentation Using Texture Features from Supervoxels. Comput. Methods Programs Biomed. 2018, 157, 69–84.

- [35] El-Melegy, M.T.; El-Magd, K.M.A. A Multiple Classifiers System for Automatic Multimodal Brain Tumor Segmentation. In Proceedings of the 2019 15th International Computer Engineering Conference (ICENCO), Giza, Egypt, 29–30 December 2019; IEEE: New York, NY, USA, 2019.

- [36] Xue, Y.; Yang, Y.; Farhat, F.G.; Shih, F.Y.; Boukrina, O.; Barrett, A.M.; Binder, J.R.; Graves, W.W.; Roshan, U.W. Brain Tumor Classification with Tumor Segmentations and a Dual Path Residual Convolutional Neural Network from MRI and Pathology Images. Brainlesion Glioma Mult. Scler. Stroke Trauma. Brain Inj. 2020, 360–367.

- [37] Sajjad, M.; Khan, S.; Muhammad, K.; Wu, W.; Ullah, A.; Baik, S.W. Multi-Grade Brain Tumor Classification Using Deep CNN with Extensive Data Augmentation. J. Comput. Sci. 2019, 30, 174–182.

- [38] Rao, G.S.; Vydeki, D. Efficient Tumour Detection from Brain MR Image with Morphological Processing and Classification Using Unified Algorithm. Int. J. Med. Eng. Inform. 2021, 13, 461–473.

- [39] Shaik, N.S.; Cherukuri, T.K. Multi-level attention network: Application to brain tumor classification. Signal Image Video Process. 2022, 16, 817–824.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).