Abstract

Biomarker identification is very important to differentiate the grade groups in the histopathological sections of prostate cancer (PCa). Assessing the cluster of cell nuclei is essential for pathological investigation. In this study, we present a computer-based method for cluster analyses of cell nuclei and performed traditional (i.e., unsupervised method) and modern (i.e., supervised method) artificial intelligence (AI) techniques for distinguishing the grade groups of PCa. Two datasets on PCa were collected to carry out this research. Histopathology samples were obtained from whole slides stained with hematoxylin and eosin (H&E). In this research, state-of-the-art approaches were proposed for color normalization, cell nuclei segmentation, feature selection, and classification. A traditional minimum spanning tree (MST) algorithm was employed to identify the clusters and better capture the proliferation and community structure of cell nuclei. K-medoids clustering and stacked ensemble machine learning (ML) approaches were used to perform traditional and modern AI-based classification. The binary and multiclass classification was derived to compare the model quality and results between the grades of PCa. Furthermore, a comparative analysis was carried out between traditional and modern AI techniques using different performance metrics (i.e., statistical parameters). Cluster features of the cell nuclei can be useful information for cancer grading. However, further validation of cluster analysis is required to accomplish astounding classification results.

1. Introduction

Many techniques are used for analysis, color enhancement, segmentation, and classification of medical images, such as those yielded by magnetic resonance (MR), positron emission tomography (PET), and microscopic biopsy; many internal bodily structures can be imaged non-invasively. Computers can be used for image gain, storage, presentation, and communication. Clinical, biochemical, and pathological images are used to diagnose and stage PCa; computer scientists are very active in this field. However, the sensitivity and specificity of the techniques remain controversial []. PCa diagnosis requires prostate MR and microscopic biopsy images. A traditional cancer diagnosis is subjective; pathologists examine biopsy samples under a microscope. It is difficult to objectively describe tissue texture, tissue color, and cell morphology.

Despite recent advances, PCa remains a major medical issue among males, being associated with the overtreatment of inherently benign disease and inadequate treatment of metastases []. The prostate has a pseudostratified epithelium with three types of terminally differentiated epithelial cells: luminal, basal, and neuroendocrine []. Other cells of the epithelium include fibroblasts, smooth muscle cells, endothelial cells, immune cells, autonomic nerve fibers, and associated ganglia []. Malignant transformation is a multistage process; prostatic intraepithelial neoplasia (PIN) triggers localized PCa followed by adenocarcinoma characterized by local invasion and, finally, metastatic PCa. The most common PCa grading system is the Gleason system, which has been refined since it was first introduced in 1974 []; the system is widely used to score PCa aggressiveness. However, there are problems, including inter- and intra-observer variation. In addition, most biopsy samples are negative [,,]. Here, we evaluate histopathological images of cancerous tissues. PCa grading was performed by a pathologist based on structural changes in stained sections.

Computer-based algorithms can perform cluster analyses of cell nuclei; available methods include traditional MST [,,]. MST cluster analysis, derived from graph theory, explores nuclear distributions. A tree is used to represent binary relationships; the connected components constitute a subtree representing an independent cluster. The identification of cancer cell abnormalities is essential for early cancer detection. Today, ML and deep learning (DL) algorithms are used for medical image analysis, feature classification, and pattern recognition. ML algorithms are usually accurate, fast, and customizable. ML iteration is essential; new data must be received and assimilated. Supervised learning is commonly used during ML training and testing; a model is trained using labeled data in a training set, and the knowledge thus acquired is used to evaluate unforeseen labeled data in a test set []. On the other hand, unsupervised learning is not commonly used for the prediction of the diagnosis of different diseases. It is essential in the real-world environment and discovers hidden patterns using the unlabeled datasets. Therefore, unsupervised learning is also a trustworthy method but computationally complex.

In this study, four state-of-the-art approaches were proposed for color normalization, cell nuclei segmentation, feature selection, and ML classification. Histopathology samples were collected from two different centers and created two datasets for binary (grade 3 vs. grade 5) and multiclass (grade 3 vs. grade 4 vs. grade 5) classification. Before we perform the segmentation, stain normalization and deconvolution techniques were carried out as a preprocessing step. After stain deconvolution, the image hematoxylin channel was selected for extracting the cell nuclei tissue components. Furthermore, we used an advanced method (i.e., marker-controlled watershed algorithm) to separate the overlapping cell nuclei. Next, we use an MST algorithm to perform cluster analysis and extract significant information for AI classification. The cell nuclei clusters were separated, and their features are evaluated heuristically. Cluster analysis was performed to better capture the proliferation and community structure of cell nuclei. These methods are making their way into pathology via various computer-aided detection (CAD) systems to assist pathologic diagnosis. Then, we proposed a majority voting method by combining filter and wrapper-based techniques for selecting the most significant features. Finally, we use state-of-the-art algorithms (i.e., stacked ML ensemble and k-medoids clustering) to perform supervised and unsupervised PCa classification. The performance metrics used for evaluating the results are accuracy, precision, recall, and F1-score.

The remainder of this paper are as follows: Section 2 presents the related work of the past study where we discussed different state-of-the-art methods for PCa analysis. Section 3 illustrates the materials and methods of the study where we mentioned the process of data collection and state-of-the-art techniques used in this study. In Section 4, we presented the results of AI models and discussed the overall implication of the study. Lastly, the paper is concluded in Section 5.

2. Related Work

Histopathology image analysis of PCa is quite problematic compared to other cancer types. Many researchers are still working on it and trying to develop new techniques for detecting and treating PCa. It is very difficult to analyze PCa under a microscope based on the Gleason grading system because the tissue pattern, formation of the gland, and distribution of cell nuclei is quite similar in some regions (i.e., score 3 and 4) of the whole slide image (WSI). Most of the existing research performed texture and morphological analysis to differentiate cancer scoring using histopathology images. Table 1 shows the summary of the significant papers that used microscopy biopsy tissue images for the analysis of PCa.

Table 1.

Summary of some existing papers that performed PCa analysis using histopathology images.

The studies in Table 1 confirm the success of the analysis of histopathological images for the classification of PCa such as benign vs. malignant and low- vs. high-grade cancer. It has been analyzed from the above-mentioned studies that most of the authors performed morphological and texture feature analysis for PCa classification. However, it has also been shown that morphological analysis of cell nuclei is not significant for PCa diagnosis because the shape and size of the cell nucleus are almost similar in all the grades (i.e., grade 3, grade 4, and grade 5), and AI models can produce unsatisfactory results. Therefore, in the present study, we performed the PCa analysis only based on the cluster features of the cell nuclei. The features extracted from the clusters are provided in Section 3.2.4.

3. Materials and Methods

3.1. Data Acquisition

Dataset 1 (grade 3, grade 4, and grade 5 WSIs) was collected from the Yonsei University Severance Hospital, Korea. WSIs were scanned into a computer at 40× optical magnification using a 0.3 NA objective, fitted to a C-3000 digital camera (Olympus, Tokyo, Japan) attached to a BX-51 microscope (Olympus). The tissue samples had been sectioned to a thickness of ; then, the sections were deparaffinized, rehydrated, and stained with H&E (staining blue and red, respectively). The WSIs used for this research were acquired from 80 patients.

Dataset 2 (grade 3, grade 4, and grade 5 WSIs) was collected from the Kaggle repository, available at https://www.kaggle.com/c/prostate-cancer-grade-assessment (accessed on 25 March 2021). The WSIs were analyzed and prepared at Radboud University medical center. All the slides were scanned using 3DHistech Panoramic Flash II 250 scanner at 20× magnification (pixel resolution 0.48 ). All cases were retrieved from the pathology achieves of the Radboud University Medical Center. Patients with a pathologist’s report between 2012 and 2017 were eligible for inclusion. The WSIs used for this research were acquired from 60 patients.

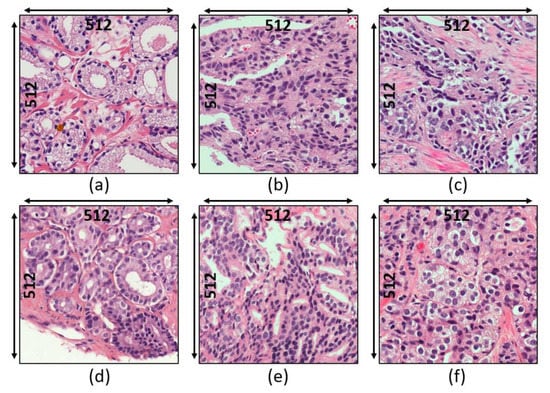

A total of 900 H&E-stained patch images of size 512 × 512 pixels were generated by tiling the pathology annotated slides. Furthermore, the acquired samples were divided equally into three cancer grades (300 grade 3, 300 grade 4, and 300 grade 5). For supervised classification, the dataset was divided into two subsets: train set (80%) and test set (20%). On the other hand, unsupervised classification was performed using the whole dataset. Examples of histopathological images of datasets 1 and 2 are shown in Figure 1. The binary classification was defined (grade 3 vs. grade 5) as was multiclass classification (grade 3 vs. grade 4 vs. grade 5). Appendix A, Figure A1, Figure A2 and Figure A3 show the illustration of the Gleason grading process. Each of the grades is assigned according to the Gleason grading system as follows:

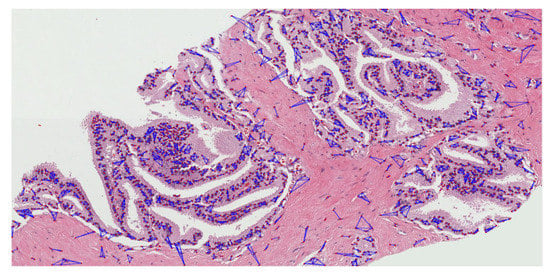

Figure 1.

Histologic findings for each grade of prostate cancer. (a–c) Dataset 1: grade 3, grade 4, and grade 5, respectively. (d–f) Dataset 2: grade 3, grade 4, and grade 5, respectively.

- Grade 3: Gleason score 4 + 3 = 7. Distinctly infiltrative margin.

- Grade 4: Gleason score 4 + 4 = 8. Irregular masses of neoplastic glands. Cancer cells have lost their ability to form glands.

- Grade 5: Gleason score 4 + 5, 5 + 4, or 5 + 5 = 9 or 10. Only occasional gland formation. Sheets of cancer cells throughout the tissue.

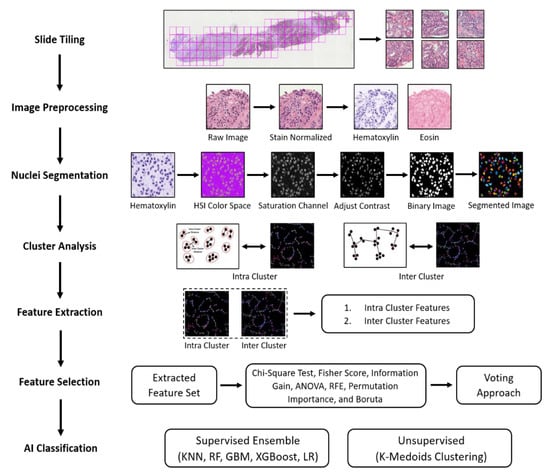

3.2. Research Pipeline

The patch images of size 512 × 512 pixels were extracted to perform AI classification. Figure 2 illustrates the entire methodology for AI classification to distinguish between the grades of PCa. The pipeline plotted below consisted of seven phases, which include slide tiling, image preprocessing, nuclei segmentation, cluster analysis, feature extraction, feature selection, and AI classification.

Figure 2.

Analytical pipeline for the cluster analysis and AI classification of cancer grades observed in histological sections.

3.2.1. Image Preprocessing

Our observations on H&E-stained images show that there is a problem of color constancy, and it is a critical issue for segmentation. Therefore, stain normalization represents a vital step for balancing the color intensity in the histological section. We applied stain normalization and stain deconvolution techniques as a preprocessing step. To perform stain normalization, we selected an image from the dataset as a reference image to match the color intensity with the source images in the dataset. Therefore, the stain normalization approach was applied by transforming both the source and reference image to the LAB color space, and the mean and standard deviation of the reference image are harmonized to that of the source image. Figure 3 shows the source, reference, and normalized images. Based on the statistics of the source and reference images, each image channel was normalized. However, to improve the quality of the images, the computation process of stain normalization has been slightly modified from the original equations and can be expressed as:

where , , and are the channel means and , , and are the channel standard deviation, is the source image, is the target image, and is the normalized LAB image, which was further converted to RGB color space. The end part of Equations (1)–(3) has been modified from the original equations [].

Figure 3.

Stain normalization. (a) Raw image. (b) Reference image. (c) Normalized image.

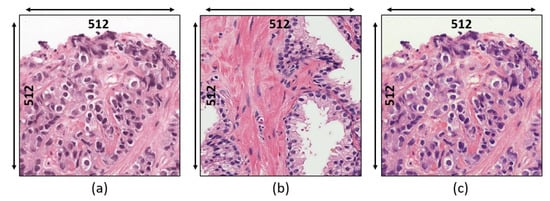

On the other hand, stain deconvolution [] was applied to transform the RGB color image into stain color spaces (i.e., H&E). Examples of separated stain images are shown in Figure 4. All color values on the normalized image are converted to their corresponding optical density (OD) values and the computation of OD for each (Red, Green, and Blue) channel can be expressed as follows:

where is the background brightfield (i.e., the intensity of light entering the image).

Figure 4.

Stain deconvolution. (a) Normalized image. (b) Hematoxylin channel. (c) Eosin channel.

The stain matrix was estimated using the Qupath open-source software based on the reference image used for stain normalization. Here, is the hematoxylin stain matrix [0.587 0.754 0.294] and is the Eosin stain matrix [0.136 0.833 0.536]. The normalized image is transformed into an optical density space to determine the concentration of the individual stain in RGB channels. Furthermore, estimated stain vector channels were recombined to obtain the stained images. The computation process for determining the stain concentration and recombining the stain vector channels can be expressed as:

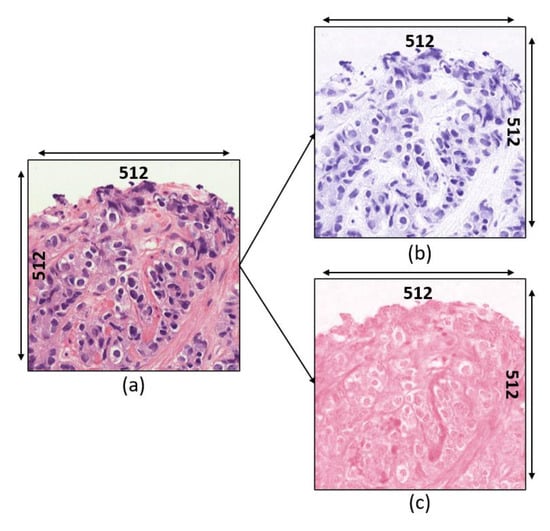

3.2.2. Nuclear Segmentation of Cancer Cells

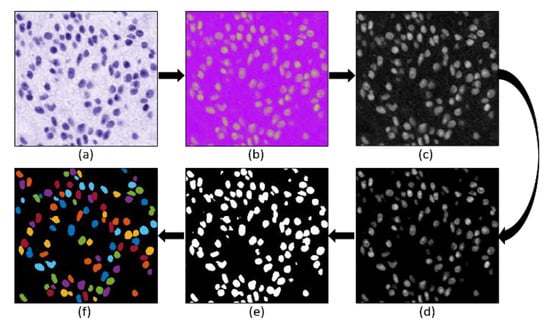

To perform cell nuclear segmentation, image preprocessing was carried out as discussed in the previous section. The hematoxylin-stained image separated from the normalized image was converted to HSI (i.e., Hue—H, Saturation—S, and Intensity—I) color space. Furthermore, the image of the S-channel (8-bit/pixel) was selected for the segmentation purpose because the cell nucleus is more apparent. Next, the contrast adjustment (i.e., specifying the contrast limit) was performed to remove the inconstancy intensity from the background. Then, the global threshold method was applied to the saturation-adjusted image to convert it into a pure binary image (1-bit/pixel). Finally, the marker-controlled watershed algorithm was applied to separate the overlapping nuclei [,,,,,]. After separating the touching nuclei, some artifacts and objects were rejected (considered as noise), and morphological operations (i.e., closing and opening) were applied to remove the peripheral brightness and smooth the membrane boundary of the cell nucleus. Figure 5 shows the complete process for nuclear segmentation of cancer cells.

Figure 5.

The complete process for nuclear segmentation of cancer cells. (a) Hematoxylin channel extracted after performing stain deconvolution. (b) HSI color space converted from (a). (c) Saturation channel extracted from (b). (d) Contrast adjusted image extracted from (c). (e) Binary image after applying global thresholding on (d). (f) Nuclei segmentation after applying the watershed algorithm on (e). Some small objects and artifacts were removed before and after applying the watershed algorithm.

3.2.3. Cluster Analysis

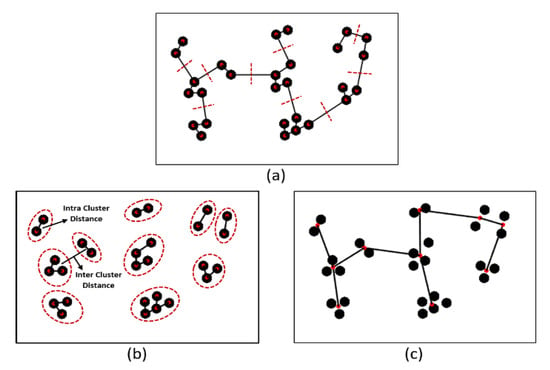

This study performed an intra- and inter-cluster analysis using an MST algorithm that identifies inconsistent edges between the clusters. This is a graph-based method that creates a network by connecting m points in n dimensions. Here, we used an MST for cluster analysis of cell nuclei in the histological section. In the MST, the sum of the edge weights is less than or equal to the sum of the edge weights of every other spanning tree [,,]. An MST sub-graph traverses all vertices of the full graph in a cycle-free manner, yielding the minimum sum of weights of all included edges, as shown in Figure 6.

Figure 6.

Examples of MST cluster analysis. (a) An MST is based on the minimum distances between vertex coordinates. The red dashed lines indicate the removal of inconsistent edges. (b) An intra-cluster MST was obtained after removal of the nine longest edges from (a); the red circles indicate inter- and intra-cluster similarity. (c) The inter-cluster MST was obtained from (b).

The MST usefully identifies nuclear clusters; the centroids connecting all nuclei create a graph that can be used to extract different kinds of features. Each center point of the cell nucleus, called a “vertex”, is connected to at least one other through a line segment, which is called an “edge”. We used the Euclidean minimum distance algorithm to measure the length between the two vertices its joins and construct the MST graph. The edges (distances) are sorted in ascending order and then listed. The edges pass through all vertices; if an edge connects a vertex coordinate that was not linked previously, that edge will be included in the tree [,]. To create separate vertices (nuclei), we used a maximum distance/weight threshold of 10 pixels. Any longer edge distance was considered inconsistent and thus removed, as shown in Figure 6a. If there are K vertices, the complete tree has (K − 1) edges. As shown in Figure 6b, the graph contains 10 groups of clusters formed by cutting links longer than a threshold value.

Next, we performed inter- and intra-cluster analyses; we computed the distances between objects in different clusters and objects in the same clusters. Cluster analysis does not require a specific algorithm; several methods are explored on a case-by-case basis to obtain the desired output. It is important to efficiently locate the clusters. Inter- and intra-cluster similarity are vital for clustering, as shown in Figure 6b,c, respectively. Cluster analysis identifies nuclear patterns and community structure in the histological sections and identifies similar groups in datasets. Data are clustered based on their similarity [,]. The Euclidean distance measure used to compute the distance between two data points can be expressed as:

where is the Euclidean distance, are the centroid points, and and are the inter- and intra-cluster distances, respectively.

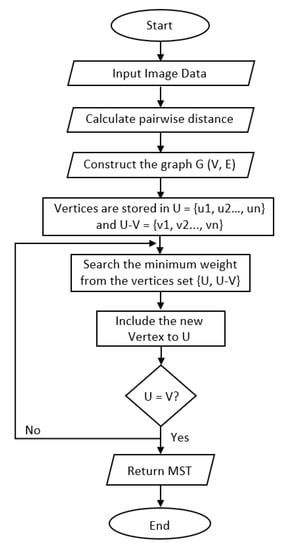

Figure 7 shows the flowchart of MST construction and the detailed algorithm is composed of the following steps:

Figure 7.

Flow chart of MST construction.

- Create an adjacent grid matrix using the input image.

- Calculate the total grid numbers in the rows and columns.

- Generate a graph from an adjacent matrix, which must contain the minimum and maximum weights of all vertices.

- Create an MST-set to track all vertices.

- Find a minimum weight for all vertices in the input graph.

- Assign that weight to the first vertex.

- As the MST-set does not include all vertices:

- Select a vertex u not present in the MST-set that has the minimum weight;

- Add u to the MST-set;

- Update the minimum weights of all vertices adjacent to u by iterating through all adjacent vertices. For every adjacent vertex v, if the weight of edge u-v is less than the previous key value of v, update that minimum weight;

- Iterate step 7 until the MST is complete.

3.2.4. Feature Extraction and Selection

We now discuss morphological and distance-based features extracted from histological sections. Both morphological and distance-based features were used for supervised and unsupervised classification using traditional and modern AI techniques. The features were extracted as numbers based on the area and distance. A total of 26 features were extracted, which include the total intra-cluster total MST distance, total intra-cluster nucleus to nucleus maximum distance, inter-cluster centroid to centroid total distance, inter-cluster total MST distance, number of clusters, total intra-cluster maximum MST distance, average intra-cluster nucleus to nucleus minimum distance, average intra-cluster nucleus to nucleus maximum distance, average intra-cluster maximum MST distance, average cluster area, total intra-cluster nucleus to nucleus total distance, total intra-cluster minimum MST distance, total intra-cluster nucleus to nucleus minimum distance, inter-cluster maximum MST distance, average intra-cluster total MST distance, average intra-cluster minimum MST distance, total cluster area, inter-cluster average MST distance, average intra-cluster nucleus to nucleus average distance, inter-cluster centroid to centroid average distance, minimum area of a cluster, average intra-cluster nucleus to nucleus total distance, inter-cluster centroid to centroid minimum distance, inter-cluster centroid to centroid maximum distance, maximum area of a cluster, and inter-cluster minimum MST distance.

We checked the significance of each feature; this is important, because irrelevant features reduce model performance and lead to overfitting. The elimination of irrelevant features reduces model complexity and makes it easier to interpret. In addition, it enables the model to train faster and improves its performance. In this study, the combination of filter (Chi-Square, ANOVA, Information Gain, and Fisher Score) [,,] and wrapper (recursive feature elimination, permutation importance, and Boruta) [,,] methods were used to select the significant features. Filter methods use statistical techniques to evaluate the relationship between each input variable and the target variable, whereas the wrapper method uses machine learning algorithms and tries to fit on a given dataset and selects the combination of features that gives the optimal results. However, the best 16 features out of 26 were selected based on the majority votes. Here, we have set “minimum votes = 4” as a threshold, which signifies that the features to be selected must have at least a total of 4 votes from the seven feature selection methods, and below a total of 4 votes will be rejected, as shown in Table 2.

Table 2.

Feature selection based on majority voting. The most significant features were selected based on majority “True”. True: Selected, False: Not selected, χ2: Chi-Square Test, FS: Fisher Score, IG: Information Gain, RFE: Recursive Feature Elimination, and PI: Permutation Importance.

3.2.5. AI Classification

After performing feature extraction and selection, modern and traditional AI techniques were used for supervised and unsupervised classification, respectively. For supervised classification, we used ML algorithms, namely k-NN [], RF [], GBM [], XGBoost [], and LR []. On the other hand, for unsupervised classification, we used a traditional k-medoids clustering algorithm []. We subjected each model of supervised learning to five-fold cross-validation (CV); the training data were divided into five groups, and the accuracy was recorded after five trials. Similarly, the testing was also performed based on a five-fold technique. This approach is useful for assessing model performance and identifying hyperparameters that enhance accuracy and reduce error [,]. The histological grades were classified as binary and multiclass to compare the performance of the AI techniques.

The data were standardized across the entire dataset before classification. Every feature has a magnitude and standardized unit. Occasionally, feature scaling is required; here, we used the standard normal distribution for standard scalar scaling:

where is the feature values, is the mean () values, and is the standard deviations () values.

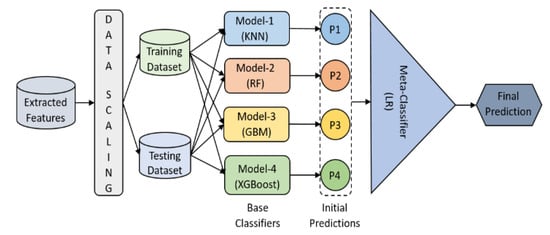

We proposed an ensemble model for supervised classification, and it was designed by stacking five different machine learning algorithms. Figure 8 shows how four different classifiers get trained and tested. The initial predictions of all four base classifiers get stacked and are used as features to train and test the meta-clasifier, which makes the final prediction. The meta-classifier provides a smooth interpretation of the initial predictions made by the base classifiers. This ensemble model is developed for the higher predictive performance.

Figure 8.

Machine learning stacking-based ensemble classification. The data were scaled before training and testing. The classification was carried out in two steps: initial and final predictions using base and meta classifiers, respectively.

4. Experimental Results and Discussion

We performed qualitative and quantitative analyses to extract meaningful features and classify those using AI algorithms. Both multiclass and binary classifications were carried out to differentiate PCa grading. We subjected 900 images to preprocessing, segmentation, cluster analysis, feature extraction, and classification. The data were equally distributed among the three grades; the analyses were separate and independent. To perform supervised classification using modern AI techniques, we divided the dataset into training and testing datasets according to an 8:2 ratio. On the other hand, we used the whole dataset for unsupervised classification using a traditional AI technique. Table 3 shows the comparative analysis between supervised and unsupervised classification, and the results are based on the test dataset. Furthermore, the test and whole datasets were separated into five-split while testing our ensemble supervised model and performing k-medoids unsupervised classification for determining model generalizability. We used MATLAB (ver. R2020b; MathWorks, Natick, MA, USA) and Python programming language for stain normalization, nuclei segmentation, MST-based cluster analysis, feature extraction, and AI-based classification. The equations used for computing the performance metrics/statistical parameters can be expressed as:

where is a true positive (correct classification of positive samples), is a true negative (correct classification of negative samples), is a false positive (incorrect classification of positive samples), and is a false negative (incorrect classification of negative samples).

Table 3.

Comparative analysis of the performance of supervised and unsupervised classification using test and whole datasets, respectively. A five-fold technique was used for both supervised and unsupervised classification. Split 1 and 2 from supervised and split 2 from unsupervised shows the best results marked in bold.

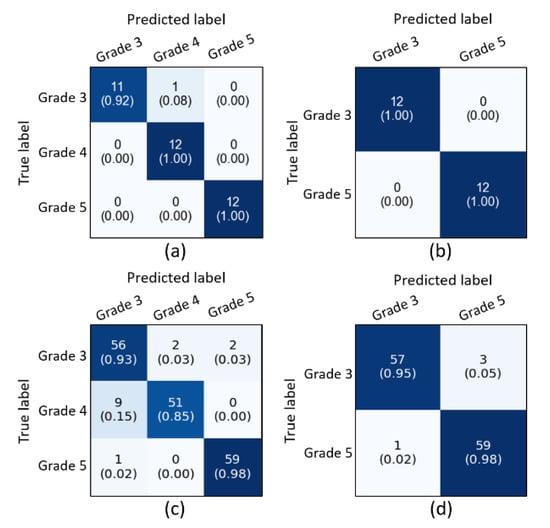

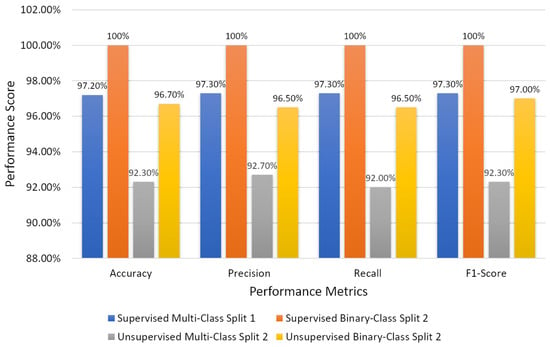

From the obtained results, we have analyzed that the supervised ensemble classification using modern AI techniques outperformed unsupervised classification using a traditional AI technique. However, both supervised and unsupervised performed well and achieved astounding results. Regarding multiclass classification using the supervised ensemble technique, the model performed the best at test split 1 and achieved an overall accuracy, precision, recall, and f1-score of 97.2%, 97.3%, 97.3%, and 97.3%, respectively. Moreover, in binary classification using the supervised technique, the model achieved amazing results of 100% for all the performance measures at test split 2. In contrast, for unsupervised multiclass classification, the k-medoids algorithm performed admirably at data split 2 and achieved an overall accuracy, precision, recall, and f1-score of 92.5%, 92.7%, 92.0%, and 92.3%, respectively. Likewise, in binary classification, the k-medoids algorithm performed exceptionally at data split 2 and achieved surprising results (i.e., accuracy: 96.7%, precision: 96.5%, recall: 96.5%, and f1-score: 97.0%). Figure 9 shows the confusion matrices generated to evaluate the performance of the supervised and unsupervised classification, and the results are based on the test dataset. We present the confusion matrices of both multiclass and binary classifications and show data that were correctly and erroneously classified during testing the ensemble model and unsupervised learning. In addition, we can observe from the confusion matrices that the high cancer grade (i.e., grade 5) was perfectly and accurately classified using supervised and unsupervised techniques. Figure 10 shows the bar graph of the accuracy score of each grade separately, and the scores were obtained from the confusion matrices, as shown in Figure 9.

Figure 9.

Confusion matrices of the supervised and unsupervised classification using test and whole datasets, respectively. (a,b) Confusion matrices of multiclass and binary classification using supervised ensemble technique based upon the test split 1 and 2 in Table 3A, respectively. (c,d) Confusion matrices of multiclass and binary classification using an unsupervised technique based upon the data split 2, respectively.

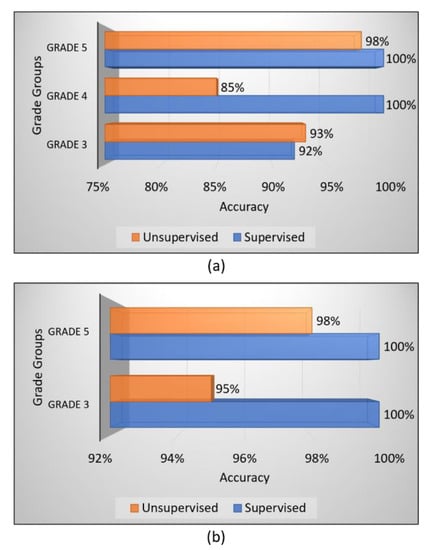

Figure 10.

Bar charts of the accuracy scores of unsupervised and supervised classifications. (a) Multiclass classification. (b) Binary classification. The performance of each PCa grade was obtained from the confusion matrices.

The current study was not planned using clinical data; instead, we used image data of PCa. A total of 900 microscopic biopsy samples (i.e., 300 of grade 3, 300 of grade 4, and 300 of grade 5) were selected in the present study. The data samples were distributed equally among three grade groups of PCa, and therefore, our dataset had no issue with class imbalance. For ML-based supervised ensemble classification, the dataset was separated into two parts for training (720 data samples) and testing (180 data samples) according to an 8:2 ratio. On the other hand, the whole dataset was utilized for unsupervised classification instead of divided into training and testing. In the view of feature reduction, after performing a majority voting approach using statistical and ML techniques, the 16 best features were selected based on optimum performance and 10 were rejected, as shown in Table 2. Therefore, the final selected features were used for AI classification and differentiating between the grades of PCa. Figure 11 shows the bar graph of the best performance scores of supervised and unsupervised classifications.

Figure 11.

Bar chart of the overall performance scores of supervised and unsupervised classifications.

There are many feature selection methods, and it is quite difficult to select the best one. In addition, we need to be very concerned about the features that are being fed to the model because ML follows the rules of “garbage in” and “garbage out”. We know that irrelevant features can increase computational cost and decrease the performance of the models. However, it is challenging to identify which method is the best for our dataset, and each method has a different way to select significant features. Therefore, the majority voting approach was proposed to solve this problem.

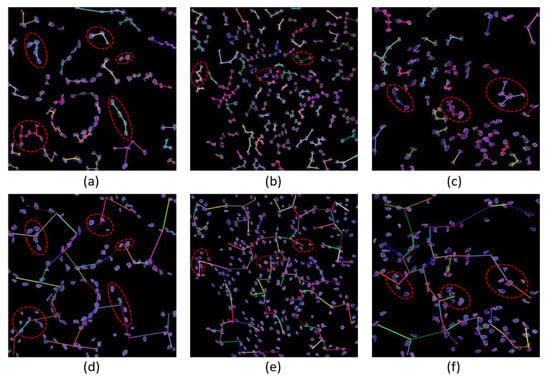

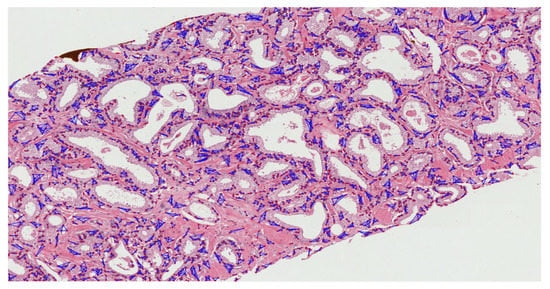

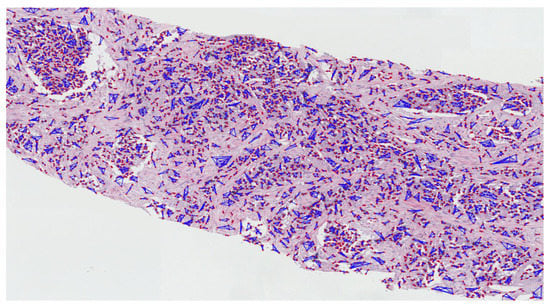

The MST cluster analysis method was applied on the PCa tissue samples of dataset 1 and dataset 2, and the visualization results of intra- and inter-cluster MST are shown in Figure 12. From the following figure, we can analyze that the structure and shape of the clusters in each grade are different from each other. It is quite challenging for researchers and doctors to analyze the microscopic biopsy images of PCa and identify suitable biomarkers compared to other common cancers.

Figure 12.

The visualization of intra- and inter-cluster MST graphs. (a–c) The intra-cluster MST of grade 3, grade 4, and grade 5, respectively. (d–f) The inter-cluster MST was generated from a, b, and c, respectively. The dotted red circle indicates the cluster of cell nuclei. Different color lines in a-c and d-f indicate intra- and inter-clusters, respectively.

The gold standard for the diagnosis of prostate cancer is a pathologist’s evaluation of prostate tissue. To potentially assist pathologists, DL-based cancer detection systems have been developed. Many of the state-of-the-art models are patch-based convolutional neural networks. Patch-based systems typically require detailed, pixel-level annotations for effective training. However, such annotations are seldom readily available in contrast to the clinical reports of pathologists, which contain slide-level labels. Our study sliced annotated and graded images from the pathologist, and we use an MST algorithm to perform cluster analysis and extract significant information for AI classification. The proliferation and cluster structure of cell nuclei, as shown in Appendix A, Figure A4 (Gleason pattern 3), Figure A5 (Gleason pattern 4), and Figure A6 (Gleason pattern 5), will help the pathologist to identify, classify, and grade more precisely the Gleason score assignment in the light of heterogeneity and variability.

In this era, deep learning-based algorithms are mostly used for cancer image analysis and classification. However, in this paper, we used traditional image processing algorithms to analyze PCa biopsy images and performed classification using modern and traditional AI techniques. In addition, we compared the performance of our proposed approach with the other state-of-the-art methods, as shown in Table 4.

Table 4.

Comparison with other state-of-the-art approaches. AUC: Area under the curve, DL: Deep learning, ML: Machine learning.

The limitations of our study are as follows:

- The size of the image datasets was too small to perform cluster analysis and apply deep learning-based algorithms, such as graph convolution neural network (GCNN) and LSTM network, and the study could be improved by increasing the data samples.

- Cell nuclei segmentation using traditional-based algorithms is a major issue, but we can improve this problem gradually by performing cell-level analysis applying different state-of-the-art methods.

- We know that unsupervised classification is very important in the real-world environment, the classifiers used in our study performed well but did not achieve astounding results compared to supervised classification. Therefore, we can improve this problem by analyzing the feature dissimilarities between the PCa grades.

5. Conclusions

In the paper, we focused principally on the cluster features of nuclei in tissue images, which facilitate cancer grading. Two-dimensional tissue images stained with H&E were subjected to cluster shape and size analyses. The distribution of cell nuclei and the shape and size of the clusters have changed as the cancer grade progressed. We developed multiple methods for histopathological image analysis (i.e., stain normalization, cell nuclei segmentation, cluster analysis, feature selection, and classification). The majority voting and stacking-based ensemble techniques are proposed for feature selection and classification, respectively. All the methods were executed successfully and achieved promising results. Cell-level analysis in the field of diagnostic cytopathology is important to analyze and differentiate the clusters of cell nuclei in each cancer grade. Although we performed several types of research, many challenges remain.

In conclusion, this research contributes useful information about the proliferation and community structure of cell nuclei that exist in the histological sections of PCa. Although we used several state-of-the-art methods and achieved astounding results, in-depth research is required for the segmentation and cluster analysis of cell nuclei using other state-of-the-art algorithms. Therefore, to overcome the challenges in the field of medical image analysis, we should think beyond the borderline. In the future, we will update this research work by performing cluster-based graph convolution neural network (GCNN) classification and apply our approach to other types of cancers.

Author Contributions

Formal analysis, S.B.; Funding acquisition, H.-K.C.; Investigation, S.B.; Methodology, S.B. and N.M.; Resources, N.-H.C.; Supervision, H.-C.K. and H.-K.C.; Validation, K.I. and Y.-B.H.; Visualization, Y.-B.H. and R.I.S.; Writing—original draft, S.B.; Writing—review and editing, K.S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MIST) (Grant No. 2021R1A2C2008576), and grant from the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare, Republic of Korea (Grant No: HI21C0977).

Informed Consent Statement

For dataset 1, the requirement for written informed patient consent was waived by the Institutional Ethics Committee of the College of Medicine, Yonsei University, Korea (IRB number 1-2018-0044). Dataset 2 was anonymized for the PANDA challenge, and the need for informed consent was waived by the local ethics review board of the Radboud University Medical Center, Netherland (IRB 2016-2275).

Data Availability Statement

Dataset 1 is not available online, cannot be transferred without an internal permission procedure. It is only available on request from the corresponding author. Dataset 2 is openly available online in the Kaggle repository at https://www.kaggle.com/c/prostate-cancer-grade-assessment (accessed on 25 March 2021). Code, test data, and pre-trained models for supervised ensemble classification are available in the Github repository at https://github.com/subrata001/Prostate-Cancer-Classification-Based-On-Ensemble-Machine-Learning-Techniques (accessed on 7 September 2021).

Acknowledgments

Firstly, we would like to thank Nam-Hoon Cho from the Severance Hospital of Yonsei University for providing the materials for the research. Secondly, we would like to thank JLK Inc., Korea, http://www.jlkgroup.com/ (accessed on 7 September 2021), for cooperating in the project and research work. Special thanks to Heung-Kook Choi for his support and suggestions during the preparation of this paper. Also, special thanks to Hee-Cheol Kim.

Conflicts of Interest

The authors have declared no conflict of interest.

Appendix A

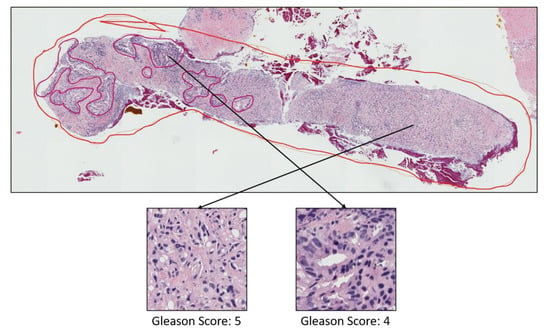

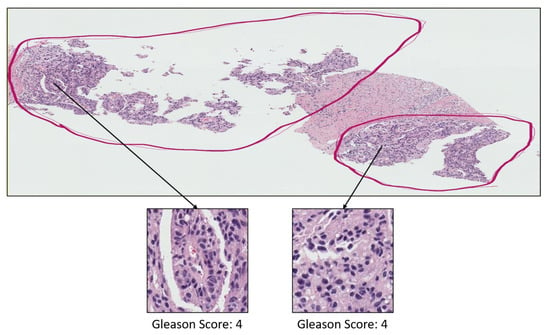

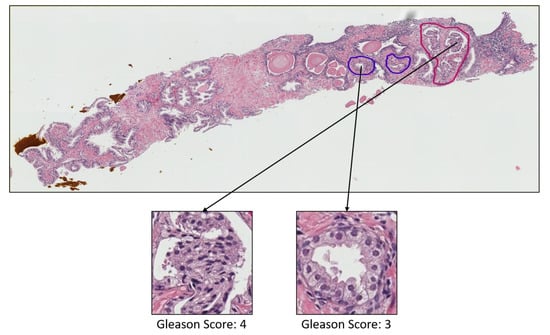

The pathology annotated WSIs used in this research to analyze the pattern and community structure of cell nuclei in grades 3, 4, and 5, shown in Figure A1, Figure A2 and Figure A3, respectively. The cluster analysis was performed successfully on histological images of PCa. For visualization of the community structure of cell nuclei, we plot the clusters in the annotated regions of grade 3, grade 4, and grade 5 in WSIs, shown in Figure A4, Figure A5 and Figure A6, respectively.

Figure A1.

Prostate adenocarcinoma with Gleason scores 4 and 3 annotated with red and blue color, respectively.

Figure A2.

Prostate adenocarcinoma with Gleason scores 4 annotated with red color.

Figure A3.

Prostate adenocarcinoma with Gleason scores 5 and 4 annotated with orange and red color, respectively.

Figure A4.

The proliferation and community structure of cell nuclei in the annotated region of grade 3.

Figure A5.

The proliferation and community structure of cell nuclei in the annotated region of grade 4.

Figure A6.

The proliferation and community structure of cell nuclei in the annotated region of grade 5.

References

- Zhu, Y.; Williams, S.; Zwiggelaar, R. Computer Technology in Detection and Staging of Prostate Carcinoma: A Review. Med. Image Anal. 2006, 10, 179–199. [Google Scholar] [CrossRef] [PubMed]

- Wang, G.; Zhao, D.; Spring, D.J.; DePinho, R.A. Genetics and Biology of Prostate Cancer. Genes Dev. 2018, 32, 1105–1140. [Google Scholar] [CrossRef]

- Shen, M.M.; Abate-Shen, C. Molecular Genetics of Prostate Cancer: New Prospects for Old Challenges. Genes Dev. 2010, 24, 1967–2000. [Google Scholar] [CrossRef]

- Barron, D.A.; Rowley, D.R. The Reactive Stroma Microenvironment and Prostate Cancer Progression. Endocr.-Relat. Cancer 2012, 19, R187–R204. [Google Scholar] [CrossRef] [PubMed]

- Gleason, D.F.; Mellinger, G.T.; Veterans Administration Cooperative Urological Research Group. Prediction of Prognosis for Prostatic Adenocarcinoma by Combined Histological Grading and Clinical Staging. J. Urol. 2017, 197, S134–S139. [Google Scholar] [CrossRef] [PubMed]

- Cintra, M.L.; Billis, A. Histologic Grading of Prostatic Adenocarcinoma: Intraobserver Reproducibility of the Mostofi, Gleason and Böcking Grading Systems. Int. Urol. Nephrol. 1991, 23, 449–454. [Google Scholar] [CrossRef]

- Özdamar, Ş.O.; Sarikaya, Ş.; Yildiz, L.; Atilla, M.K.; Kandemir, B.; Yildiz, S. Intraobserver and Interobserver Reproducibility of WHO and Gleason Histologic Grading Systems in Prostatic Adenocarcinomas. Int. Urol. Nephrol. 1996, 28, 73–77. [Google Scholar] [CrossRef] [PubMed]

- Egevad, L.; Ahmad, A.S.; Algaba, F.; Berney, D.M.; Boccon-Gibod, L.; Compérat, E.; Evans, A.J.; Griffiths, D.; Grobholz, R.; Kristiansen, G.; et al. Standardization of Gleason Grading among 337 European Pathologists. Histopathology 2013, 62, 247–256. [Google Scholar] [CrossRef]

- Xu, Y.; Olman, V.; Xu, D. Minimum Spanning Trees for Gene Expression Data Clustering. Genome Inform. 2001, 12, 24–33. [Google Scholar] [CrossRef]

- Kruskal, J.B. On the Shortest Spanning Subtree of a Graph and the Traveling Salesman Problem. Proc. Am. Math. Soc. USA 1956, 7, 48–50. [Google Scholar] [CrossRef]

- Gower, J.C.; Ross, G.J.S. Minimum Spanning Trees and Single Linkage Cluster Analysis. Appl. Stat. 1969, 18, 54–64. [Google Scholar] [CrossRef]

- Pliner, H.A.; Shendure, J.; Trapnell, C. Supervised classification enables rapid annotation of cell atlases. Nat. Methods 2019, 16, 983–986. [Google Scholar] [CrossRef]

- Poojitha, U.P.; Lal Sharma, S. Hybrid Unified Deep Learning Network for Highly Precise Gleason Grading of Prostate Cancer. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 899–903. [Google Scholar] [CrossRef]

- Jafari-Khouzani, K.; Soltanian-Zadeh, H. Multiwavelet Grading of Pathological Images of Prostate. IEEE Trans. Biomed. Eng. 2003, 50, 697–704. [Google Scholar] [CrossRef] [PubMed]

- Kwak, J.T.; Hewitt, S.M. Nuclear Architecture Analysis of Prostate Cancer via Convolutional Neural Networks. IEEE Access 2017, 5, 18526–18533. [Google Scholar] [CrossRef]

- Linkon, A.H.M.; Labib, M.M.; Hasan, T.; Hossain, M.; Jannat, M.-E. Deep Learning in Prostate Cancer Diagnosis and Gleason Grading in Histopathology Images: An Extensive Study. Inform. Med. Unlocked 2021, 24, 100582. [Google Scholar] [CrossRef]

- Wang, J.; Chen, R.J.; Lu, M.Y.; Baras, A.; Mahmood, F. Weakly Supervised Prostate Tma Classification Via Graph Convolutional Networks. In Proceedings of the IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 3–7 April 2020; pp. 239–243. [Google Scholar] [CrossRef]

- Bhattacharjee, S.; Park, H.G.; Kim, C.H.; Prakash, D.; Madusanka, N.; So, J.H.; Cho, N.H.; Choi, H.K. Quantitative Analysis of Benign and Malignant Tumors in Histopathology: Predicting Prostate Cancer Grading Using SVM. Appl. Sci. 2019, 9, 2969. [Google Scholar] [CrossRef]

- Bhattacharjee, S.; Kim, C.H.; Prakash, D.; Park, H.G.; Cho, N.H.; Choi, H.K. An Efficient Lightweight Cnn and Ensemble Machine Learning Classification of Prostate Tissue Using Multilevel Feature Analysis. Appl. Sci. 2020, 10, 8013. [Google Scholar] [CrossRef]

- Nir, G.; Hor, S.; Karimi, D.; Fazli, L.; Skinnider, B.F.; Tavassoli, P.; Turbin, D.; Villamil, C.F.; Wang, G.; Wilson, R.S.; et al. Automatic grading of prostate cancer in digitized histopathology images: Learning from multiple experts. Med. Image Anal. 2018, 50, 167–180. [Google Scholar] [CrossRef] [PubMed]

- Ali, S.; Veltri, R.; Epstein, J.A.; Christudass, C.; Madabhushi, A. Cell Cluster Graph for Prediction of Biochemical Recurrence in Prostate Cancer Patients from Tissue Microarrays. In Medical Imaging 2013: Digital Pathology; Gurcan, M.N., Madabhushi, A., Eds.; International Society for Optics and Photonics: Bellingham, WA, USA, 2013; p. 86760H. [Google Scholar] [CrossRef]

- Kim, C.-H.; Bhattacharjee, S.; Prakash, D.; Kang, S.; Cho, N.-H.; Kim, H.-C.; Choi, H.-K. Artificial Intelligence Techniques for Prostate Cancer Detection through Dual-Channel Tissue Feature Engineering. Cancers 2021, 13, 1524. [Google Scholar] [CrossRef]

- Reinhard, E.; Ashikhmin, M.; Gooch, B.; Shirley, P. Color Transfer between Images. IEEE Comput. Graph. Appl. 2001, 21, 34–41. [Google Scholar] [CrossRef]

- Ruifrok, A.C.; Johnston, D.A. Quantification of Histochemical Staining by Color Deconvolution. Anal. Quant. Cytol. Histol. 2001, 23, 291–299. [Google Scholar]

- Tan, K.S.; Mat Isa, N.A.; Lim, W.H. Color Image Segmentation Using Adaptive Unsupervised Clustering Approach. Appl. Soft Comput. 2013, 13, 2017–2036. [Google Scholar] [CrossRef]

- Azevedo Tosta, T.A.; Neves, L.A.; do Nascimento, M.Z. Segmentation Methods of H&E-Stained Histological Images of Lymphoma: A Review. Inform. Med. Unlocked 2017, 9, 34–43. [Google Scholar] [CrossRef]

- Song, J.; Xiao, L.; Lian, Z. Contour-Seed Pairs Learning-Based Framework for Simultaneously Detecting and Segmenting Various Overlapping Cells/Nuclei in Microscopy Images. IEEE Trans. Image Process. 2018, 27, 5759–5774. [Google Scholar] [CrossRef] [PubMed]

- Liu, C.; Shang, F.; Ozolek, J.; Rohde, G. Detecting and Segmenting Cell Nuclei in Two-Dimensional Microscopy Images. J. Pathol. Inform. 2016, 7, 42–50. [Google Scholar] [CrossRef] [PubMed]

- Xu, H.; Lu, C.; Mandal, M. An Efficient Technique for Nuclei Segmentation Based on Ellipse Descriptor Analysis and Improved Seed Detection Algorithm. IEEE J. Biomed. Health Inform. 2014, 18, 1729–1741. [Google Scholar] [CrossRef]

- Guven, M.; Cengizler, C. Data Cluster Analysis-Based Classification of Overlapping Nuclei in Pap Smear Samples. Biomed. Eng. Online 2014, 13, 159–177. [Google Scholar] [CrossRef]

- Lv, X.; Ma, Y.; He, X.; Huang, H.; Yang, J. CciMST: A Clustering Algorithm Based on Minimum Spanning Tree and Cluster Centers. Math. Probl. Eng. 2018, 2018, 8451796. [Google Scholar] [CrossRef]

- Nithyanandam, G. Graph based image segmentation method for identification of cancer in prostate MRI image. J. Comput. Appl. 2011, 4, 104–108. [Google Scholar]

- Pike, R.; Lu, G.; Wang, D.; Chen, Z.G.; Fei, B. A Minimum Spanning Forest-Based Method for Noninvasive Cancer Detection with Hyperspectral Imaging. IEEE Trans. Biomed. Eng. 2016, 63, 653–663. [Google Scholar] [CrossRef] [PubMed]

- Ying, S.; Xu, G.; Li, C.; Mao, Z. Point Cluster Analysis Using a 3D Voronoi Diagram with Applications in Point Cloud Segmentation. ISPRS Int. J. Geo-Inf. 2015, 4, 1480–1499. [Google Scholar] [CrossRef]

- Nithya, S.; Bhuvaneswari, S.; Senthil, S. Robust Minimal Spanning Tree Using Intuitionistic Fuzzy C-Means Clustering Algorithm for Breast Cancer Detection. Am. J. Neural Netw. Appl. 2019, 5, 12–22. [Google Scholar] [CrossRef]

- Bommert, A.; Sun, X.; Bischl, B.; Rahnenführer, J.; Lang, M. Benchmark for Filter Methods for Feature Selection in High-Dimensional Classification Data. Comput. Stat. Data Anal. 2020, 143, 106839. [Google Scholar] [CrossRef]

- Karabulut, E.M.; Özel, S.A.; İbrikçi, T. A Comparative Study on the Effect of Feature Selection on Classification Accuracy. Procedia Technol. 2012, 1, 323–327. [Google Scholar] [CrossRef]

- Pirgazi, J.; Alimoradi, M.; Esmaeili Abharian, T.; Olyaee, M.H. An Efficient Hybrid Filter-Wrapper Metaheuristic-Based Gene Selection Method for High Dimensional Datasets. Sci. Rep. 2019, 9, 18580. [Google Scholar] [CrossRef] [PubMed]

- Zhao, G.; Wu, Y. Feature Subset Selection for Cancer Classification Using Weight Local Modularity. Sci. Rep. 2016, 6, 34759. [Google Scholar] [CrossRef] [PubMed]

- Sun, X.; Liu, Y.; Wei, D.; Xu, M.; Chen, H.; Han, J. Selection of Interdependent Genes via Dynamic Relevance Analysis for Cancer Diagnosis. J. Biomed. Inform. 2013, 46, 252–258. [Google Scholar] [CrossRef]

- Isabelle, G.; Jason, W.; Stephen, B.; Vladimir, V. Gene Selection for Cancer Classification Using Support Vector Machines. Mach. Learn. 2002, 46, 389–422. [Google Scholar]

- Zhang, S.; Li, X.; Zong, M.; Zhu, X.; Wang, R. Efficient KNN Classification with Different Numbers of Nearest Neighbors. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 1774–1785. [Google Scholar] [CrossRef]

- Toth, R.; Schiffmann, H.; Hube-Magg, C.; Büscheck, F.; Höflmayer, D.; Weidemann, S.; Lebok, P.; Fraune, C.; Minner, S.; Schlomm, T.; et al. Random Forest-Based Modelling to Detect Biomarkers for Prostate Cancer Progression. Clin. Epigenet. 2019, 11, 148. [Google Scholar] [CrossRef]

- Natekin, A.; Knoll, A. Gradient Boosting Machines, a Tutorial. Front. Neurorobot. 2013, 7, 21. [Google Scholar] [CrossRef] [PubMed]

- Ma, B.; Meng, F.; Yan, G.; Yan, H.; Chai, B.; Song, F. Diagnostic Classification of Cancers Using Extreme Gradient Boosting Algorithm and Multi-Omics Data. Comput. Biol. Med. 2020, 121, 103761. [Google Scholar] [CrossRef] [PubMed]

- Zhou, X.; Liu, K.Y.; Wong, S.T.C. Cancer Classification and Prediction Using Logistic Regression with Bayesian Gene Selection. J. Biomed. Inform. 2004, 37, 249–259. [Google Scholar] [CrossRef]

- Sahran, S.; Albashish, D.; Abdullah, A.; Shukor, N.A.; Hayati Md Pauzi, S. Absolute Cosine-Based SVM-RFE Feature Selection Method for Prostate Histopathological Grading. Artif. Intell. Med. 2018, 87, 78–90. [Google Scholar] [CrossRef] [PubMed]

- García Molina, J.F.; Zheng, L.; Sertdemir, M.; Dinter, D.J.; Schönberg, S.; Rädle, M. Incremental Learning with SVM for Multimodal Classification of Prostatic Adenocarcinoma. PLoS ONE 2014, 9, e93600. [Google Scholar] [CrossRef] [PubMed]

- Albashish, D.; Sahran, S.; Abdullah, A.; Shukor, N.A.; Pauzi, S. Ensemble Learning of Tissue Components for Prostate Histopathology Image Grading. Int. J. Adv. Sci. Eng. Inf. Technol. 2016, 6, 1134–1140. [Google Scholar] [CrossRef][Green Version]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).