Abstract

Image clustering analysis faces the curse of dimensionality, distance concentration, multimodal landscapes, and rapid diversity loss, all of which challenge meta-heuristics. The standard Crayfish Optimization Algorithm (COA) has shown notable potential but often suffers from slow convergence and a tendency toward premature convergence. To address these issues, this paper introduces a Chaos-initiated and Adaptive Multi-guide Control-based COA (CMCOA). First, a chaotic initialization strategy explicitly exploits the reflection symmetry of logistic-map chaotic sequences together with opposition-based learning, which enhances population diversity and facilitates early exploration of promising regions. Second, a fitness-feedback adaptive parameter control mechanism, motivated by the general idea of the MIT rule, dynamically balances exploration and exploitation, accelerating convergence while mitigating premature stagnation. Third, a multi-guide stage-switching strategy promotes adaptive transitions between exploration and exploitation phases to avoid entrapment in local optima. CMCOA is benchmarked against competing algorithms on ten challenging test functions drawn from the CEC2017, CEC2019, CEC2020, and CEC2022 suites. We also apply CMCOA to multispectral image clustering, where class differences often lie in reflectance magnitude; Euclidean distance is adopted for its efficiency and its suitability for capturing such variations. Compared with the other algorithms, CMCOA shows faster convergence, higher accuracy, and improved robustness, indicating its broader potential for image analysis tasks.

1. Introduction

High-dimensional image analysis, especially multispectral and hyperspectral clustering, poses distinctive challenges for meta-heuristic optimizers. The curse of dimensionality and distance concentration weaken similarity measures and obscure cluster boundaries, reducing the effectiveness of traditional distance metrics as dimensionality increases [,]. In addition, the objective landscapes are highly non-convex and multimodal, often containing ridge-like structures, which can cause premature convergence in many population-based methods unless diversity is actively preserved [,,]. Population diversity itself tends to diminish under leader-biased update rules, further increasing the risk of mode trapping; recent diversity-guided and multi-level learning strategies only partially address this problem []. Fixed or manually tuned schedules also struggle to balance exploration and exploitation across search stages, which motivates adaptive parameter control and stage-aware strategies in evolutionary computation [,]. Furthermore, unequal cluster sizes are common in real hyperspectral scenes and can lead to over-segmentation or collapsed clusters, prompting methods that combine subspace or graph modeling with imbalance-aware learning [,]. Together, these interconnected challenges limit the effectiveness of mainstream optimizers applied directly to high-dimensional image data. To counter them, this paper proposes a unified framework that improves initialization coverage, incorporates adaptive stage control, and adds anti-bias guidance to enhance robustness and clustering accuracy on imagery.

Among many meta-heuristic techniques, the Crayfish Optimization Algorithm (COA) [] has gained attention for its simple design and biologically inspired phase structure; its summer-resort, competition, and foraging stages provide a natural way to switch between exploration and exploitation. Empirically, COA achieves competitive results on various benchmarks and engineering problems, and its temperature-driven schedule offers an interpretable way to adjust search behavior. However, these practical advantages do not guarantee consistent performance across problem types: in high-dimensional or highly deceptive landscapes, the standard COA initialization and leader-biased updates can lead to inadequate coverage of the search space and faster diversity loss, raising the chance of premature stagnation. These limitations point to specific areas for improvement while retaining the useful phase structure that makes COA a good foundation.

In response to the limitations of the standard COA mentioned earlier, several researchers have proposed various improvements to enhance its performance. Shikoun et al. [] introduced the Binary Crayfish Optimization Algorithm (BinCOA), which uses refracted opposition-based learning and crisscross strategies to improve the algorithm’s exploitation capability and convergence accuracy in feature selection tasks. To address performance decline and local stagnation observed in later iterations of COA, Jia et al. [] proposed the Modified Crayfish Optimization Algorithm (MCOA), which includes an environmental renewal mechanism and a ghost-antagonism-based learning strategy. Their results show significant improvements in avoiding local optima, especially in constrained engineering design and feature selection scenarios. To further enhance the standard COA, Elhosseny et al. [] introduced the Adaptive Dynamic COA with a Locally enhanced escape operator (AD-COA-L), which leverages the Bernoulli map for population initialization, an adaptive dynamic inertia weight, and a local escape operator to better balance exploration and exploitation and prevent premature convergence. Table 1 summarizes the mathematical mechanisms across COA variants.

Table 1.

Comparative table summarizing mathematical mechanisms across COA variants.

Many studies collectively highlight COA’s potential for feature selection and engineering optimization problems []. At the same time, there is a growing demand for more efficient and robust optimization algorithms, and recent research has extended COA and its variants to image processing and recognition. Padmashree et al. [] used COA to optimize similarity-navigated graph neural networks (SNGNNs) for brain tumor image analysis, achieving significant improvements in diagnostic accuracy. Dinh [] employed COA to control texture-layer fusion in a medical image fusion method based on a bilateral texture filter and transfer learning with the ResNet-101 network, addressing issues such as limited training data, inadequate feature representation, and contrast loss. This approach enhanced the visual quality of fused medical images, highlighting the algorithm’s usefulness in critical clinical imaging tasks.

Among the strategies used to strengthen meta-heuristics, chaotic systems have gained increasing attention as initialization methods because their sensitivity to initial conditions and their ergodic, pseudo-random dynamics increase dispersion in the initial population and therefore help reduce the risk of premature convergence. In practice, chaotic initializers fall into three classes: simple one-dimensional maps that provide low-cost, tunable diversification; higher-order and hyperchaotic generators that produce stronger mixing for rugged search landscapes; and fractional-order or hybrid schemes that introduce memory effects or combine chaotic sequences with Lévy or quantum operators to enhance exploration under constraints. Recent research has integrated them into various applications: Lévy-augmented chaotic PSO has been shown to improve energy-efficient cluster routing in industrial WSNs [], and a chaotic-quantum hybrid was employed successfully for berth-crane scheduling under tidal constraints []. In the image-security domain, fractional-order chaotic Hopfield networks and hybrid-domain chaotic encryption have been proposed for high-performance protection of medical and social images [,], and tailored quadratic polynomial hyperchaotic maps combined with pixel-fusion strategies have demonstrated superior randomness and efficiency in image encryption []. Together, these results motivate our choice of a chaotic initializer and suggest a guiding principle: match the complexity of the map to the difficulty of the problem.

However, directly using chaotic sequences for population initialization in high-dimensional clustering is insufficient: distance concentration persists, and cluster balance is not guaranteed. To address this gap, the proposed CMCOA acts simultaneously on initialization, parameter adaptation, and guidance diversity. First, initialization is reworked through logistic-map chaos coupled with opposition-based selection [], which increases initial sample dispersion and convergence speed. Second, an adaptive parameter controller, inspired by the general concept of the MIT rule [] and implemented here as a population-level, non-gradient fitness-feedback rule, replaces the fixed temperature schedule with a state-driven step-size controller that scales exploitation intensity according to relative improvement. Third, a multi-guide stage-switching mechanism distributes search pressure among several elites and uses a diversity threshold to trigger phase transitions, avoiding single-leader dominance while permitting focused refinement when warranted. Each component targets one of the identified failure modes, and the components are integrated so that broad early exploration is preserved while late exploitation is stabilized, yielding lower final objective values on image clustering benchmarks.

The effectiveness of CMCOA is demonstrated on benchmark functions and in clustering experiments on multispectral image datasets. Empirical results show that the proposed algorithm improves convergence speed, clustering precision, and intra-cluster balance. Notably, CMCOA extracts semantically meaningful structures from complex images, producing segmentation results that align well with human visual interpretation. These findings support CMCOA’s value as a robust tool for advanced image analysis.

The contributions of this paper are summarized as follows:

- We present CMCOA, an improved optimization algorithm that integrates chaotic initialization and adaptive multi-guide control, showing consistent gains in exploration, convergence speed, and robustness on benchmarks and multispectral datasets.

- CMCOA combines logistic-map chaos with opposition-based learning to enhance initial diversity and accelerate early search, while an MIT-inspired adaptive mechanism adjusts parameters to maintain a balance between exploration and exploitation.

- A multi-guide stage-switching strategy enables flexible transitions across search phases, reducing leader bias and supporting more reliable solution quality.

- Applied to multispectral image clustering, CMCOA improves cluster balance and texture preservation, yielding meaningful segmentation with practical efficiency in high-dimensional tasks.

2. The Chaotic Initialization and Adaptive Multi-Guide Control-Based Crayfish Optimization Algorithm

2.1. Overview of the Original Crayfish Optimization Algorithm

The Crayfish Optimization Algorithm is a nature-inspired meta-heuristic that mimics the social and survival behaviors of crayfish. These behaviors mainly include summer avoidance, competition, and foraging, which are reflected as different search phases in the algorithm. Each crayfish in the algorithm represents a possible solution within the multi-dimensional search space, with its position being updated iteratively based on these behaviors. The algorithm goes through multiple iterations, aiming to reach an optimal solution by imitating the collective intelligence and adaptive strategies seen in crayfish populations.

2.1.1. Initialization

In the original COA, the initialization of individuals in the population is typically performed using a uniform random distribution. Each individual’s position vector is generated independently within the problem’s lower and upper bounds:

$$X_{i,j} = lb_j + (ub_j - lb_j) \times \mathrm{rand}, \qquad j = 1, 2, \dots, D \tag{1}$$

where $X_{i,j}$ represents the position of the $i$-th individual in the $j$-th dimension, $D$ is the problem dimension, and rand denotes a uniform random number in [0, 1] drawn independently for each dimension (equivalently, a $D$-dimensional vector of such numbers). $lb_j$ and $ub_j$ denote the lower and upper bounds of the $j$-th dimension of the search space, respectively. While effective for basic coverage, purely random initialization lacks structural diversity and may limit exploration from the outset.

2.1.2. Temperature Schedule

The behavior of crayfish is significantly influenced by variations in temperature, which in turn dictate the specific behavioral stages undertaken by the organisms. The definition of temperature can be expressed as Equation (2). When temperatures exceed 30 °C, crayfish are observed to seek cooler refuges, exhibiting a summer escape behavior. Conversely, under optimal temperature conditions, foraging activities are initiated. The quantity of food consumed by crayfish is directly impacted by ambient temperature. It has been established that the optimal foraging temperature range for crayfish lies between 15 °C and 30 °C, with peak intake observed around 25 °C. Consequently, the foraging intake of crayfish can be approximated by a normal distribution, reflecting its dependence on temperature fluctuations.

$$\mathrm{temp} = \mathrm{rand} \times 15 + 20 \tag{2}$$

where rand is generated uniformly at random from the interval [0, 1], so that temp lies in [20, 35].

2.1.3. Summer Resort Stage

If temp > 30, each crayfish seeks a safe cave. The cave position is defined as the midpoint between the global best and the individual’s local best:

$$X_{shade} = \frac{X_G + X_L}{2} \tag{3}$$

The fitness of each agent is evaluated to determine the global best solution $X_G$ and each local best $X_L$. Subsequently, with a probability of 0.5, indicating the absence of competition for the cave, the crayfish moves toward the cave by taking a fractional step in its direction:

$$X_{i,j}^{t+1} = X_{i,j}^{t} + C_2 \times \mathrm{rand} \times \left(X_{shade,j} - X_{i,j}^{t}\right) \tag{4}$$

where $C_2 = 2 - t/T$ is the linearly decreasing coefficient, with total iterations $T$ and current iteration $t$. This update pulls the crayfish toward the cave, enhancing exploitation as the population converges on high-quality regions. Otherwise, another crayfish competes for the same cave, and the competition update of Section 2.1.4 applies.

2.1.4. Competition Stage

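When temp > 30 and another crayfish is contesting the cave (the complementary outcome of the random coin flip), a competition update is used. A random competitor $X_z$ is chosen from the population, and the current agent adjusts its position relative to the competitor and the cave position $X_{shade}$:

$$X_{i,j}^{t+1} = X_{i,j}^{t} - X_{z,j}^{t} + X_{shade,j}, \qquad z = \mathrm{round}\left(\mathrm{rand} \times (N - 1)\right) + 1$$

where $N$ is the population size.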

This exchange encourages diversity by having agents interact and spread out, expanding the search.

2.1.5. Foraging Stage

If temp ≤ 30, the environment is suitable for feeding. All crayfish move toward the food at the current global best position, $X_{food} = X_G$. Each crayfish computes a food-size measure $Q$:

$$Q = C_3 \times \mathrm{rand} \times \frac{fitness_i}{fitness_{food}}$$

where $fitness_i$ is the fitness of crayfish $i$, $fitness_{food}$ is the fitness at $X_{food}$, and $C_3$ is a constant “food factor”. This ratio measures how large or small the food is relative to the crayfish’s current solution. Two feeding behaviors ensue:

- Shredding (when $Q > (C_3 + 1)/2$): If the food is large relative to the agent’s state, the crayfish first “shreds” it. The global best position is scaled to simulate processing:

  $$X_{food} = \exp\left(-\frac{1}{Q}\right) \times X_{food}$$

  Then the crayfish advances toward the food with an alternating step:

  $$X_{i,j}^{t+1} = X_{i,j}^{t} + X_{food,j} \times p \times \left(\cos(2\pi \times \mathrm{rand}) - \sin(2\pi \times \mathrm{rand})\right)$$

  Here $p$ is the food intake proportion, determined by a Gaussian function of the temperature:

  $$p = C_1 \times \frac{1}{\sqrt{2\pi}\,\sigma} \times \exp\left(-\frac{(\mathrm{temp} - \mu)^2}{2\sigma^2}\right)$$

  Here, $\mu$ is the temperature most suitable for crayfish, and $\sigma$ and $C_1$ control the intake of crayfish at different temperatures; the original COA formulation uses $\mu = 25$, $\sigma = 3$, and $C_1 = 0.2$. The sine-cosine term emulates the alternating use of claws when manipulating larger food items, enabling fine-grained local search within the optimization process.

- Direct feeding (when $Q \le (C_3 + 1)/2$): If the food is small enough, the crayfish moves directly toward it:

  $$X_{i,j}^{t+1} = \left(X_{i,j}^{t} - X_{food,j}\right) \times p + p \times \mathrm{rand} \times X_{i,j}^{t}$$

  This accelerates convergence by exploiting the direction toward the best solution.

These stages are repeated until the iteration limit $T$ is reached, and the global and local bests are updated throughout. In summary, COA embeds exploration and exploitation within a unified framework.
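The update rules of Sections 2.1.2 to 2.1.5 can be condensed into a short sketch of one COA iteration. This is a minimal pure-Python illustration, not the reference implementation: it assumes minimization with positive fitness values (as the ratio in $Q$ requires), takes $C_3 = 3$ (the value used in the original COA, giving a shredding threshold of 2), draws the competitor uniformly per dimension, and omits boundary handling. The names `coa_step` and `food_intake` are hypothetical.

```python
import math
import random

# Original COA constants; C3 = 3 is an assumption here (shredding threshold (C3 + 1) / 2 = 2)
MU, SIGMA, C1, C3 = 25.0, 3.0, 0.2, 3.0


def food_intake(temp):
    """Gaussian food-intake proportion p as a function of temperature."""
    return C1 / (math.sqrt(2 * math.pi) * SIGMA) * math.exp(-((temp - MU) ** 2) / (2 * SIGMA ** 2))


def coa_step(pop, fit, x_g, x_l, f_food, t, T, rng):
    """One position update of the original COA (minimization, no bound handling).

    pop: list of N positions (lists of floats); fit: their fitness values;
    x_g / x_l: global and local best positions; f_food: fitness of x_g.
    """
    n = len(pop)
    c2 = 2 - t / T                                    # linearly decreasing coefficient
    temp = rng.random() * 15 + 20                     # temp in [20, 35]
    shade = [(g + l) / 2 for g, l in zip(x_g, x_l)]   # cave position
    p = food_intake(temp)
    new_pop = []
    for i, x in enumerate(pop):
        if temp > 30:
            if rng.random() < 0.5:                    # summer resort: fractional step to the cave
                new = [xj + c2 * rng.random() * (sj - xj) for xj, sj in zip(x, shade)]
            else:                                     # competition with a random crayfish z
                new = [x[j] - pop[rng.randrange(n)][j] + shade[j] for j in range(len(x))]
        else:
            # food size; max() guards against division by zero (an added assumption)
            q = C3 * rng.random() * fit[i] / max(f_food, 1e-12)
            if q > (C3 + 1) / 2:                      # shredding: shrink food, alternate claws
                food = [math.exp(-1 / q) * fj for fj in x_g]
                new = [xj + fj * p * (math.cos(2 * math.pi * rng.random())
                                      - math.sin(2 * math.pi * rng.random()))
                       for xj, fj in zip(x, food)]
            else:                                     # direct feeding
                new = [(xj - fj) * p + p * rng.random() * xj for xj, fj in zip(x, x_g)]
        new_pop.append(new)
    return new_pop


# Usage: one step on a 3-D sphere function; the current best serves as both X_G and X_L
rng = random.Random(0)
pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(6)]
fit = [sum(v * v for v in x) for x in pop]
best = min(range(len(pop)), key=lambda i: fit[i])
new_pop = coa_step(pop, fit, pop[best], pop[best], fit[best], t=1, T=100, rng=rng)
```

Because a single temp value is drawn per iteration, the whole population follows the same stage in a given iteration; only the inner coin flip and the food-size test differ between individuals.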

2.2. Improved Strategy Framework of CMCOA

To address the identified drawbacks of the standard COA, a novel enhanced version named CMCOA was developed. CMCOA integrates three specifically designed strategies to improve population diversity, balance exploration with exploitation, and enhance convergence robustness.

2.2.1. Chaotic Initialization Strategy

Instead of purely random initialization, CMCOA adopts a chaotic initialization strategy in which the logistic map is used to construct the initial population. Here, chaotic sequences are applied not primarily for their randomness but for their inherent symmetry within the unit interval [0, 1]. By coupling each candidate solution with its symmetric counterpart, the coverage of the search space is reinforced, and population diversity is preserved from the outset. The logistic map serves as the generator of these symmetric sequences and is defined by:

$$x_{k+1} = r\,x_k\,(1 - x_k)$$

with $r$ chosen as 4 and the chaotic value $x_k \in (0, 1)$. For $r = 4$, the logistic map admits the invariant distribution:

$$\rho(x) = \frac{1}{\pi\sqrt{x(1 - x)}}, \qquad 0 < x < 1$$

This distribution has mean $E[X] = 0.5$ and variance $\mathrm{Var}(X) = 1/8$, which is larger than that of the uniform distribution (1/12), implying stronger boundary coverage and a greater spread of samples. Its positive Lyapunov exponent $\lambda = \ln 2 > 0$ ensures chaotic sensitivity, and the recurrence generates a pseudo-random sequence that is ergodic on [0, 1]. To initialize a solution, a chaotic value $x_{i,j}$ is mapped into the search space for each dimension $j$:

$$X_{i,j} = lb_j + (ub_j - lb_j) \times x_{i,j}$$

By applying this initialization independently to each individual, a well-distributed and diverse initial population can be obtained. Compared with uniform random sampling, chaotic sequences provide more comprehensive coverage of the search space, as they traverse the domain in a deterministic yet non-repetitive manner. To further accelerate convergence, opposition-based learning is also incorporated: for each candidate solution $X_i$, an opposite solution $\tilde{X}_i$ is generated, and the fitter of the two is retained:

$$\tilde{X}_{i,j} = lb_j + ub_j - X_{i,j}$$
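The chaotic initialization with opposition-based selection can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not the authors' implementation: each individual runs its own logistic sequence ($r = 4$) across the dimensions from a random seed, the seed avoids 0.25, 0.5, and 0.75 (which reach the fixed points 0 or 0.75), and the objective is minimized. The names `logistic_sequence` and `chaotic_obl_init` are hypothetical.

```python
import random


def logistic_sequence(x0, length, r=4.0):
    """Iterate the logistic map x_{k+1} = r * x_k * (1 - x_k) and return the iterates."""
    seq, x = [], x0
    for _ in range(length):
        x = r * x * (1 - x)
        seq.append(x)
    return seq


def chaotic_obl_init(n, lb, ub, objective, seed=None):
    """Chaotic initialization with opposition-based selection (minimization)."""
    rng = random.Random(seed)
    dim = len(lb)
    population = []
    for _ in range(n):
        # Hypothetical seeding: stay inside (0, 1) and away from points that hit fixed points
        x0 = rng.uniform(0.01, 0.99)
        while x0 in (0.25, 0.5, 0.75):
            x0 = rng.uniform(0.01, 0.99)
        chaos = logistic_sequence(x0, dim)                           # one chaotic value per dimension
        cand = [l + (u - l) * c for l, u, c in zip(lb, ub, chaos)]   # map into [lb, ub]
        opp = [l + u - v for l, u, v in zip(lb, ub, cand)]           # opposite solution
        population.append(min(cand, opp, key=objective))            # keep the fitter of the pair
    return population


def sphere(x):
    return sum(v * v for v in x)


lb, ub = [-10.0, 0.0, 5.0], [10.0, 1.0, 6.0]
population = chaotic_obl_init(20, lb, ub, sphere, seed=1)
```

Running the chaotic sequence across dimensions is only one possible arrangement; iterating across individuals instead would also fit the description above. Note that the opposition step doubles the number of objective evaluations at initialization.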

Measured per dimension as a fraction of the range $ub_j - lb_j$, the expected distance between $X_i$ and $\tilde{X}_i$ is 0.5 under uniform sampling and $2/\pi \approx 0.6366$ under the logistic invariant measure, both larger than the expected distance between two independent uniform samples (1/3). This operator yields antipodal pairs whose midpoint is the domain center. From a geometric standpoint, evaluating such antipodal pairs reduces the directional bias introduced by single-point sampling and increases the effective angular coverage around potential optima. In stochastic terms, the expected distance between opposite pairs under the logistic invariant measure exceeds that under uniform sampling, which empirically accelerates early-stage basin discovery.
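The distance figures quoted for opposite pairs can be checked numerically. The sketch below is a Monte Carlo check, not part of CMCOA itself: it samples the logistic invariant (arcsine) law directly as $x = \sin^2(\pi U/2)$ with $U$ uniform on [0, 1], which avoids floating-point artifacts of long logistic orbits, and estimates in normalized coordinates the mean distance $|x - (1 - x)|$ to the opposite point, the mean distance between two independent uniform samples, and the variance of the invariant law.

```python
import math
import random


def arcsine_sample(rng):
    """Draw from the logistic-map invariant density 1 / (pi * sqrt(x * (1 - x)))."""
    return math.sin(math.pi * rng.random() / 2) ** 2


def uniform_sample(rng):
    return rng.random()


def mean_opposite_distance(sampler, rng, n):
    """Mean normalized distance |x - (1 - x)| between a point and its opposite."""
    return sum(abs(2 * sampler(rng) - 1) for _ in range(n)) / n


rng = random.Random(42)
n = 100_000
d_uniform = mean_opposite_distance(uniform_sample, rng, n)    # theory: 1/2
d_logistic = mean_opposite_distance(arcsine_sample, rng, n)   # theory: 2/pi
d_pair = sum(abs(rng.random() - rng.random()) for _ in range(n)) / n  # theory: 1/3
xs = [arcsine_sample(rng) for _ in range(n)]
mean_x = sum(xs) / n
var_x = sum((v - mean_x) ** 2 for v in xs) / n                # theory: 1/8
print(d_uniform, d_logistic, 2 / math.pi, d_pair, var_x)
```

The sampling formula follows from the arcsine CDF $F(x) = (2/\pi)\arcsin\sqrt{x}$; inverting it gives $x = \sin^2(\pi U/2)$.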

2.2.2. Adaptive Parameter Control Rule

In the original COA, the transition between different behavioral stages is mainly governed by a random temperature variable: temp. When temp > 30, the algorithm enters the summer resort or competition stage; otherwise, it performs foraging. While this mechanism introduces stochasticity, it lacks sensitivity to the actual optimization state, which may result in either premature convergence or excessive randomness.

To overcome this limitation, CMCOA replaces the random temperature-driven mechanism with a state-driven adaptive rule. The update mechanism used in this paper is not the classical MIT rule of Model Reference Adaptive Control, which typically adjusts parameters through gradient-based adaptation with respect to a reference model; rather, our design is inspired by its underlying idea of error-driven adjustment. Specifically, CMCOA adopts a fitness-feedback approach: the control parameter is updated according to the relative difference between the population mean fitness and the current best fitness, with projection onto a bounded interval to ensure stability. This mechanism is computationally efficient and model-free, and it balances exploration and exploitation automatically during the search.

Specifically, a control parameter $\alpha$, initially set to 2, is introduced to govern the step size and replaces $C_2$ in Equation (4). Instead of decreasing linearly, $\alpha$ is adjusted dynamically according to the optimization progress. The update law is given by:

$$\alpha^{t+1} = \max\left(0.01,\ \min\left(2,\ \alpha^{t} + \eta\,\delta^{t}\right)\right)$$

where $\delta^{t}$ measures the relative deviation between $\bar{f}^{t}$, the average fitness value of the $t$-th generation population, and the best fitness; $\eta$ is a learning rate controlling the update speed, set to 0.03; and the projection operator max(0.01, min(2, ·)) ensures that $\alpha$ always remains within the bounded interval [0.01, 2].

When the population quality is poor, $\delta^{t} < 0$, which decreases $\alpha$. This reduces the exploitation intensity and introduces exploratory behavior, thereby mitigating premature convergence. When the population tends to converge, $\delta^{t} > 0$, leading to an increase in $\alpha$. This encourages stronger exploitation by amplifying the attraction toward promising regions.

Furthermore, the projection operator guarantees boundedness, since by construction $\alpha^{t} \in [0.01, 2]$ at every iteration. As the algorithm converges, $\delta^{t} \to 0$, so the increments $\eta\,\delta^{t}$ vanish and $\alpha^{t}$ settles within the bounded interval. The adaptive update law is therefore stable by construction and stops adjusting the step size once the search has converged.
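The projected update can be sketched in a few lines. This is a minimal illustration assuming the additive form $\alpha^{t+1} = \max(0.01, \min(2, \alpha^{t} + \eta\,\delta^{t}))$; $\delta^{t}$ is passed in as a plain number because its exact computation is not reproduced here, and the function name `update_alpha` is hypothetical.

```python
def update_alpha(alpha, delta, eta=0.03, lo=0.01, hi=2.0):
    """Fitness-feedback step-size update, projected onto [lo, hi].

    delta: relative deviation between population-mean and best fitness,
    computed by the caller (hypothetical interface).
    """
    return max(lo, min(hi, alpha + eta * delta))


alpha = 2.0                 # initial value used by CMCOA
trace = [alpha]
for delta in (-10.0, -30.0, -40.0, 5.0, 0.0):
    alpha = update_alpha(alpha, delta)
    trace.append(alpha)
print(trace)
```

The third step would drive $\alpha$ negative, so the projection pins it at the lower bound 0.01; the final $\delta = 0$ leaves $\alpha$ unchanged, which is the "vanishing increment" behavior described above.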

2.2.3. Multi-Guide and Stage-Switching Update Mechanism

To further improve search robustness, CMCOA enhances the update mechanism by introducing multiple elite guides and a diversity-driven stage-switching rule, which operate in synergy with the adaptive parameter control.

- Multi-guide update

Instead of relying on a single global best, CMCOA aggregates several top-performing solutions. Let $E = \{E_1, \dots, E_k\}$ denote the elite set of size $k$, where $E_1$ is the best solution. The elite ratio $k/N$ was set to 20% as a balanced choice: smaller values reduce diversity, while larger values weaken selection pressure. Our preliminary tests indicated that performance is stable within 10–30%, with 20% giving slightly better results overall. This parameter is tunable and is not a strict requirement of the algorithm. The guiding position is formed by a convex combination:

$$X_{guide} = \sum_{m=1}^{k} w_m E_m, \qquad w_m \ge 0, \qquad \sum_{m=1}^{k} w_m = 1$$

where $E_1 = X_G$ is the global best solution, and $X_{guide}$ replaces $X_G$ wherever it enters Equation (4). The weights $w_m$ are chosen adaptively based on the population state, measured by $\sigma_f^{t}$, the standard deviation of the population fitness in the $t$-th generation. Thus, when diversity is high, the weights on the other elites increase, giving more emphasis to multiple elites; when diversity collapses, $X_G$ dominates, and the algorithm relies on the global best. The adaptive weighting balances robustness and intensification: at high population diversity, the global best may be an outlier, so moving toward the elite centroid gives a more robust search direction; at low diversity, the population has converged and the global best is more reliable, so emphasizing it enhances exploitation. This is a heuristic design intended to improve practical robustness; a protocol for empirical comparison with alternative schemes is provided in the supplement.

Suppose all elite solutions lie in the feasible domain $\Omega$, which is convex. Then the convex combination $X_{guide}$ also lies in $\Omega$; hence, the multi-guide rule preserves feasibility. Moreover, by mixing different elite directions, the variance of the update direction is enlarged, enhancing exploration before convergence.

By weighing multiple elite guides, the algorithm explores along different promising directions simultaneously, which can reduce over-convergence on a single leader and maintain diversity in exploitation.
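Elite selection and the convex combination can be illustrated with a short sketch. Because the exact weighting formula is not reproduced here, the code uses a hypothetical scheme controlled by a single parameter `beta` in [0, 1] (in CMCOA the weights would grow with the fitness standard deviation): the best elite receives $1 - \beta$ and the other elites share $\beta$ equally, so $\beta = 0$ returns the global best and $\beta = (k-1)/k$ returns the elite centroid. The elite size rounds 20% of $N$; `elite_set` and `guide_position` are hypothetical names.

```python
import random


def elite_set(pop, fit, ratio=0.2):
    """Top-k positions by fitness (minimization), best first; k = round(ratio * N), at least 1."""
    k = max(1, round(ratio * len(pop)))
    order = sorted(range(len(pop)), key=lambda i: fit[i])
    return [pop[i] for i in order[:k]]


def guide_position(elites, beta):
    """Convex combination of the elites under a hypothetical one-parameter weighting."""
    k = len(elites)
    if k == 1:
        return list(elites[0])
    weights = [1.0 - beta] + [beta / (k - 1)] * (k - 1)   # non-negative, sums to 1
    dim = len(elites[0])
    return [sum(w * e[j] for w, e in zip(weights, elites)) for j in range(dim)]


# Usage: the guide stays inside the box bounds, as the convexity argument predicts
rng = random.Random(3)
lb, ub = [-1.0, 2.0], [1.0, 4.0]
pop = [[rng.uniform(l, u) for l, u in zip(lb, ub)] for _ in range(10)]
fit = [sum(v * v for v in x) for x in pop]
elites = elite_set(pop, fit)
guide = guide_position(elites, beta=0.7)
```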

- Stage-switching rule

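Stage switching means that CMCOA alternates between different update formulas as the search progresses. Concretely, two update functions are defined, $F_1$ (multi-guide exploration) and $F_2$ (exploitation led by the global best), together with a threshold:

$$X_i^{t+1} = \begin{cases} F_1\left(X_i^{t}\right), & \sigma_f^{t} > \tau^{t} \\ F_2\left(X_i^{t}\right), & \sigma_f^{t} \le \tau^{t} \end{cases}$$

where $\sigma_f^{t}$ is the fitness standard deviation of the $t$-th generation and $\tau^{t}$ is the switching threshold.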

Specifically, the switching threshold is set as $\tau^{t} = 0.05\,|\bar{f}^{t}|$, where $\bar{f}^{t}$ is the mean fitness of the current population; that is, the allowable fitness fluctuation equals 5% of the current mean fitness. The threshold is thus a small relative tolerance. Using normalized or relative thresholds to decide stage switches or convergence is common in population-based methods, and such thresholds typically lie on the order of 1–10% depending on problem scale. This practice aligns with previous work that uses population variance or normalized tolerances to guide search dynamics. Thus, $\tau^{t}$ can be interpreted as a relative rule that behaves in a largely scale-insensitive way, balancing the risk of premature switching against the need for timely exploitation.

When diversity is high, multiple elite guides promote global exploration; once diversity drops below the adaptive switching point $\tau^{t}$, the algorithm shifts to local exploitation led by the global best. This stage-switching mechanism works in tandem with the adaptive parameter control: the former selects the update mode, while the latter adjusts the step intensity via $\alpha$. Together, they replace the fixed scheduling of the original COA with a responsive, state-driven balance between exploration and exploitation.
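The switching test reduces to comparing the fitness spread with a relative tolerance. The sketch below is a minimal illustration, assuming the population standard deviation of the fitness values as $\sigma_f^{t}$ and $\tau^{t} = 0.05\,|\bar{f}^{t}|$ (the absolute value is an assumption that keeps the threshold non-negative for negative fitness values); `select_stage` is a hypothetical name.

```python
import statistics


def select_stage(fitness, rel_tol=0.05):
    """Return 'explore' while the fitness spread exceeds rel_tol * |mean fitness|."""
    mean_f = statistics.fmean(fitness)
    spread = statistics.pstdev(fitness)   # population standard deviation sigma_f
    tau = rel_tol * abs(mean_f)           # adaptive switching threshold
    return "explore" if spread > tau else "exploit"


print(select_stage([10.0, 20.0, 30.0]))    # explore: spread 8.16 > tau 1.0
print(select_stage([100.0, 100.5, 99.5]))  # exploit: spread 0.41 <= tau 5.0
```

Because both the spread and the threshold scale with the fitness values, multiplying all fitness values by a positive constant leaves the decision unchanged, which is the scale-insensitivity mentioned above.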

2.3. Complexity Analysis

The time complexity of CMCOA depends on the population size (N), the problem dimension (D), the number of iterations (T), and the cost of a single objective function evaluation (EC). Accordingly, the time complexity of CMCOA can be expressed as follows:

$$O(\mathrm{CMCOA}) = O(\text{parameter initialization}) + O(\text{population initialization}) + O(\text{initial evaluation}) + O(\text{iterative search})$$

Specifically, parameter initialization requires only O(1), chaotic initialization of the population requires O(N × D), and the initial fitness evaluation of the population requires O(N × EC). During the iterative process, adaptive parameter control and diversity estimation add O(N) per iteration; updating the population positions requires O(T × N × D), and evaluating the candidate solutions requires O(T × N × EC). Therefore, the overall time complexity of CMCOA can be described as:

$$O(\mathrm{CMCOA}) = O(1) + O(N \times D) + O(N \times EC) + O(T \times N) + O(T \times N \times D) + O(T \times N \times EC) \tag{30}$$

Since the number of iterations T is usually large and fitness evaluations dominate the runtime of population-based metaheuristics in practice, while the vector operations in each position update are only O(D) per individual, the term T × N × (EC + D) dominates the complexity, and Equation (30) simplifies to:

$$O(\mathrm{CMCOA}) = O\left(T \times N \times (EC + D)\right)$$

This demonstrates that the time complexity of CMCOA remains polynomial. Compared with the standard COA, the proposed CMCOA introduces chaotic initialization, adaptive parameter control, and stage-switching mechanisms, which slightly increase the computational overhead in constant terms. Nevertheless, the overall computational efficiency of CMCOA is maintained, and the algorithm can be regarded as an effective optimization method with acceptable computational complexity.
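As a rough worked example under the experimental settings used later in this section (N = 30, T = 500), and counting one objective evaluation per individual at initialization and per iteration as in Equation (30), the evaluation count is dominated by the iterative term (evaluating opposite solutions during initialization would add at most N = 30 more):

$$\underbrace{N}_{\text{initialization}} + \underbrace{T \times N}_{\text{iterations}} = 30 + 500 \times 30 = 15{,}030 \ \text{evaluations}$$

so the iterations account for over 99% of all evaluations, which is why the simplified bound keeps only the T × N term.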

2.4. Pseudo Code and Flow Chart of CMCOA

The pseudo code of CMCOA is presented as Algorithm 1:

Algorithm 1: The CMCOA algorithm.

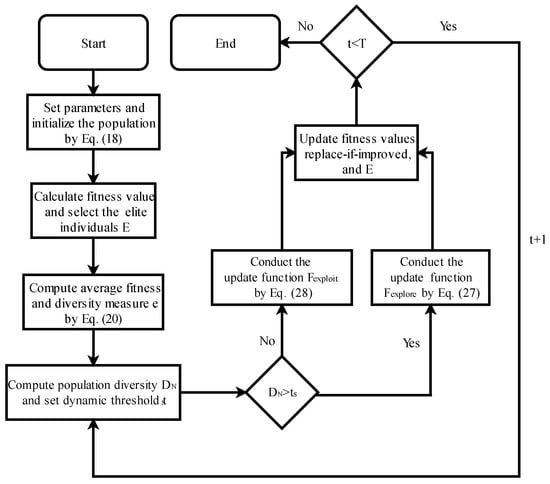

The flow chart of CMCOA is shown in Figure 1.

Figure 1.

Flow chart of CMCOA.

2.5. Numeric Experiments

Numerical experiments were conducted to evaluate the performance of the proposed CMCOA against several other algorithms: Differential Evolution (DE) [], Hippopotamus Optimization (HO) [], Whale Optimization Algorithm (WOA) [], Ivy Algorithm (IVY) [], the original COA [], MCOA [], and AD-COA-L []. These algorithms have attracted considerable attention in meta-heuristic optimization and are recognized for their strong optimization performance and representativeness, which makes them suitable baselines. As the following results show, CMCOA outperforms them on most test functions. The parameter configurations for all algorithms are provided in Table 2.

Table 2.

Parameter setting of each algorithm.

The experiments used test functions from the IEEE Congress on Evolutionary Computation (CEC) competitions, specifically CEC2017 [], CEC2019 [], CEC2020 [], and CEC2022 []. Ten representative test functions were selected, covering unimodal, hybrid, and composition types, as summarized in Table 3. Unimodal functions assess convergence and precision in high-dimensional spaces, hybrid functions capture problems whose variables exhibit different characteristics, and composition functions mimic multimodal and non-separable complexities. Together they form a widely accepted benchmark that provides a robust and realistic assessment of algorithm capability. Hybrid and composition functions are constructed from multiple basic functions, and M in the table denotes the number of basic functions. All algorithms were executed under identical conditions to ensure a fair comparison. The population size was uniformly set to 30, a standard value in the meta-heuristic community that balances sufficient population diversity for exploring the search space against computational efficiency. Likewise, a maximum of 500 iterations was chosen as the termination criterion, which gives the algorithms adequate time to converge on most test functions while avoiding unnecessarily long run times.

Table 3.

Characteristics of 10 test functions.

2.5.1. Algorithm Performance Analysis on Test Functions

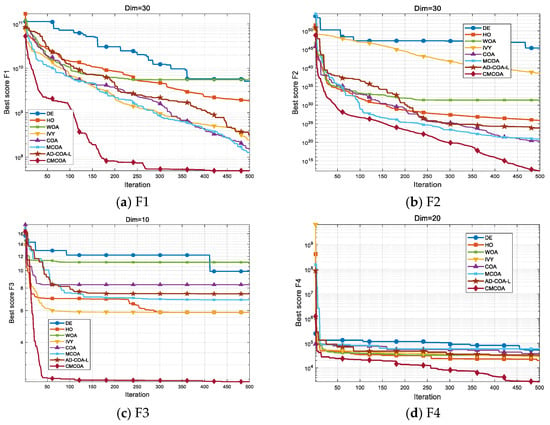

The performance of the algorithms is evaluated in two ways: Figure 2 displays the convergence curves of the different algorithms on the ten test functions, and Table 4 reports the statistical results (mean and standard deviation) together with the average total runtime in seconds of each algorithm on each function, obtained from 30 independent runs. These data show the strong performance of CMCOA across a wide range of test functions. On the unimodal F1 and F2, CMCOA’s mean values are substantially better than those of the baseline COA and the other competitors, indicating a stronger and more reliable drive toward the global optimum. On the more deceptive hybrid and composition landscapes, the advantage persists: CMCOA’s results on the hybrid F5 and the composition F8 are significantly better than those of COA, which suggests that the enhanced diversity maintenance and adaptive exploitation mechanisms cope more effectively with multimodality and landscape heterogeneity.

Figure 2.

Comparison of convergence curves of the eight optimization algorithms on test functions.

Table 4.

Detailed performance metrics of the eight optimization algorithms.

These numerical trends are supported by the convergence curves in Figure 2: CMCOA typically exhibits a steeper early decline followed by a smoother late-stage descent, reflecting two complementary effects of the proposed modifications. The chaotic initialization accelerates the early discovery of promising basins, producing the rapid early decrease visible on most curves, while the MIT rule-inspired adaptive control, together with multi-guide stage switching, stabilizes the later iterations and prevents premature stagnation, as evidenced by the reduced variance across independent runs and the tighter final spread in Table 4. Finally, the additional computational overhead introduced by these strategies is modest: average runtimes remain comparable to those of lightweight methods and are frequently in the sub-second or low-second range on the tested functions, indicating that the improved search behavior comes without prohibitive cost. This combination of fast initial exploration and controlled, diversity-aware exploitation explains why CMCOA achieves robust gains across the unimodal, hybrid, and composition classes.

2.5.2. Analysis of Non-Parametric Statistical Significance Test of CMCOA

To further validate the statistical reliability of the observed performance differences, a Wilcoxon rank-sum test was carried out between CMCOA and each comparative algorithm. The p-values reported in Table 5 show that CMCOA achieves statistically significant improvements (p < 0.05) on the vast majority of benchmark functions, with many cases reaching p < 0.01. This indicates that the observed advantages are unlikely to be due to random variation across runs. Only a few functions yield p > 0.05; on these, CMCOA remains competitive but is not significantly better. Overall, the results provide strong statistical evidence that CMCOA, with its chaotic initialization, adaptive parameter control, and multi-guide stage-switching mechanisms, is more reliable than the other algorithms on most test functions.
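The rank-sum statistic behind such p-values can be sketched in a few lines. The pure-Python function below is a minimal illustration, not the authors' code: it pools both samples, assigns average ranks to ties, and uses the normal approximation without a tie correction, which is the same large-sample statistic that SciPy's `scipy.stats.ranksums` reports. The sample values are synthetic placeholders, not results from this paper; `rank_sum_test` is a hypothetical name.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation). Returns (z, p)."""
    pooled = sorted((v, g) for g, vals in ((0, x), (1, y)) for v in vals)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1            # average 1-based rank of a tie block
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)   # rank sum of sample x
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))      # two-sided p-value
    return z, p


# Synthetic final errors from 30 runs of two algorithms (illustrative only)
cmcoa_errors = [0.01 * i for i in range(1, 31)]
baseline_errors = [0.5 + 0.01 * i for i in range(30)]
z, p = rank_sum_test(cmcoa_errors, baseline_errors)
print(z, p)
```

A negative z means the first sample tends to have smaller (better, for minimization) values, so the sign indicates which algorithm is favored while p indicates whether the difference is significant.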

Table 5.

Experimental results of the Wilcoxon rank-sum test on test functions.

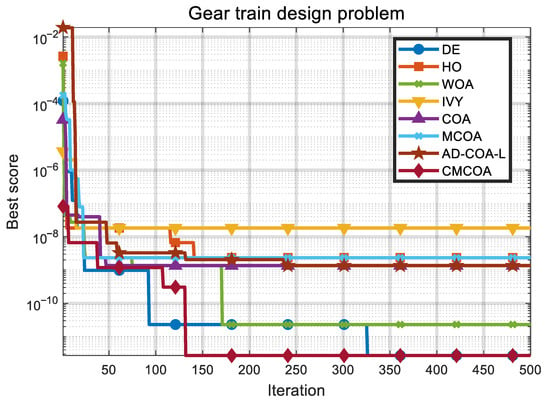

2.5.3. Engineering Design Problems

To further demonstrate the general-purpose capability of CMCOA beyond benchmark functions, two widely used engineering design problems are investigated: the gear train design problem and the cantilever beam design problem. These problems are classical constrained optimization benchmarks that have been extensively employed to assess the effectiveness of metaheuristic algorithms in both discrete and continuous domains.

- Gear Train Design Problem

The gear train design problem aims to minimize the squared error between the actual gear ratio and the desired target ratio R = 1/6.931. The four decision variables represent the numbers of teeth on the four gears and must be positive integers. The objective function is the squared difference between R and the gear ratio realized by the chosen numbers of teeth.
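The sketch below assumes the formulation most commonly used for this benchmark in the metaheuristics literature: f(x) = (1/6.931 − x2·x3/(x1·x4))², with each tooth count restricted to the integers 12 to 60. The variable ordering and the bounds are assumptions and may differ from the paper's notation. The sketch also shows one common way a continuous optimizer handles integer variables, which is to round each coordinate to the nearest integer before evaluation (compare the `round()` entry in the Abbreviations). The helper names are hypothetical.

```python
from typing import List, Sequence

TARGET_RATIO = 1 / 6.931   # desired gear ratio R
LOWER, UPPER = 12, 60      # assumed integer bounds on the number of teeth


def decode_teeth(position: Sequence[float]) -> List[int]:
    """Map a continuous candidate to integer tooth counts within the bounds."""
    return [min(UPPER, max(LOWER, round(v))) for v in position]


def gear_train_cost(teeth: Sequence[int]) -> float:
    """Squared error between the target ratio and the realized ratio x2*x3/(x1*x4)."""
    x1, x2, x3, x4 = teeth
    return (TARGET_RATIO - (x2 * x3) / (x1 * x4)) ** 2


# A continuous candidate, as a swarm member might propose it.
candidate = [42.6, 15.9, 19.2, 49.4]
teeth = decode_teeth(candidate)
print(teeth, gear_train_cost(teeth))
```

The decoded combination [43, 16, 19, 49] is the best-known solution widely reported for this formulation, with an error of roughly 2.7 × 10⁻¹².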

- Cantilever Beam Design Problem

The cantilever beam design problem is a classical continuous constrained optimization benchmark. The objective is to minimize the material volume of a beam consisting of five segments, subject to stress and deflection constraints. The five design variables correspond to the cross-sectional dimensions of the five beam segments.

The problem is subject to a maximum stress/deflection constraint that must not exceed the allowable limit, which is represented by the value 1 in the constraint function.
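The following sketch assumes the standard formulation of this benchmark found in the literature: minimize f(x) = 0.0624(x1 + x2 + x3 + x4 + x5) subject to g(x) = 61/x1³ + 37/x2³ + 19/x3³ + 7/x4³ + 1/x5³ − 1 ≤ 0, with 0.01 ≤ xi ≤ 100. The coefficients and bounds are assumptions for illustration. So is the quadratic static penalty with weight `rho`, because the paper does not state its constraint-handling scheme.

```python
from typing import Sequence

COEFFS = (61.0, 37.0, 19.0, 7.0, 1.0)   # assumed standard constraint coefficients
BOUNDS = (0.01, 100.0)                  # assumed box bounds for every variable


def beam_volume(x: Sequence[float]) -> float:
    """Objective: proportional to the sum of the five segment dimensions."""
    return 0.0624 * sum(x)


def beam_constraint(x: Sequence[float]) -> float:
    """g(x) <= 0 is feasible; the constant 1 is the normalized allowable limit."""
    return sum(c / xi ** 3 for c, xi in zip(COEFFS, x)) - 1.0


def penalized_cost(x: Sequence[float], rho: float = 1e6) -> float:
    """Static quadratic penalty: feasible points are scored by volume alone."""
    lo, hi = BOUNDS
    x = [min(hi, max(lo, v)) for v in x]    # clip to the search box
    violation = max(0.0, beam_constraint(x))
    return beam_volume(x) + rho * violation ** 2


# Approximate near-optimal design commonly reported for this formulation.
x_star = [6.0160, 5.3092, 4.4943, 3.5015, 2.1527]
print(beam_volume(x_star), beam_constraint(x_star), penalized_cost(x_star))
```

A penalty formulation lets an unconstrained optimizer such as CMCOA search the box directly. Infeasible designs are pushed away by the large `rho` term, and feasible ones compete on volume alone.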

- Experimental analysis

In addition to the baseline algorithms already considered, we further compare CMCOA with four recently proposed methods that have shown strong performance on engineering design benchmarks: Symmetric Projection Optimizer (SPO) [], Genghis Khan Shark Optimizer (GKSO) [], Surrogate Ensemble-Assisted Hyper-Heuristic Algorithm (SEA-HHA) [], and Surrogate-assisted Hybrid Evolutionary Algorithm with Local Estimation of Distribution (SHEALED) []. These methods were included to provide a more comprehensive comparison on both discrete and continuous engineering optimization problems.

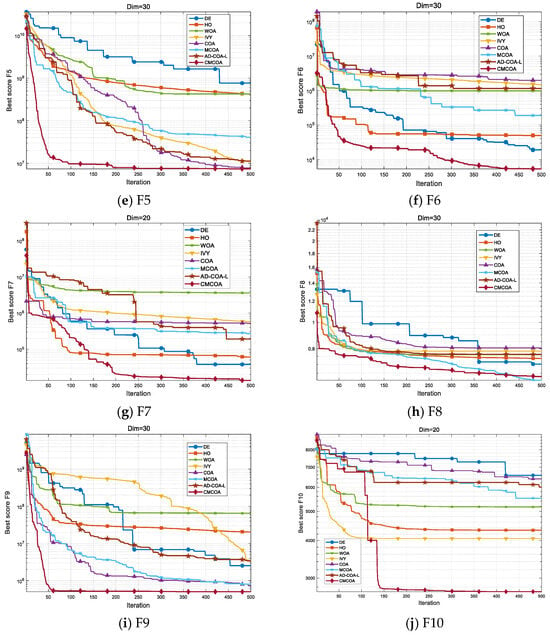

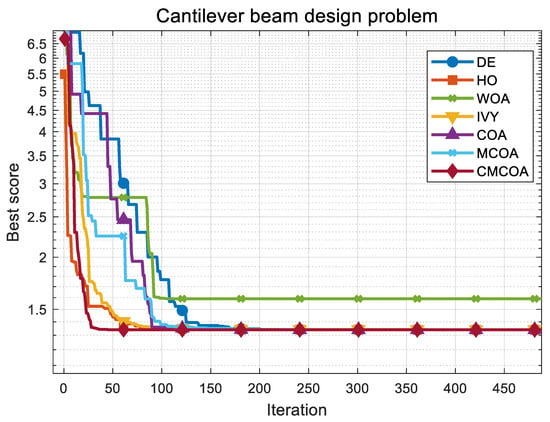

For the discrete gear train design problem, CMCOA identified the best-known integer solution and reached the minimum objective function value reported in Table 6. This outcome outperforms all COA variants and numerous mainstream metaheuristics, and it matches the best-known results reported in the literature for state-of-the-art methods such as SPO and GKSO. This performance suggests that CMCOA's chaotic initialization strategy and multi-guide mechanism provide strong exploration and precise convergence within the discrete search space, effectively handling the complexities introduced by integer variables. Furthermore, the convergence curves in Figure 3 show that CMCOA converges quickly, escaping local optima early and approaching the best-known solution.

Table 6.

Optimal results of algorithms on the gear train design problem.

Figure 3.

Convergence curves of CMCOA and competitor algorithms on the gear train design problem.

Turning to the continuous constrained cantilever beam design problem, the results are summarized in Table 7 and Figure 4. The minimum volume obtained by CMCOA is highly competitive with that of benchmark algorithms such as DE and HO. Its critical advantage is that CMCOA achieves this volume while strictly satisfying all constraints. Notably, CMCOA also proved more consistent and effective than the recent surrogate-assisted methods, which often struggle with solution consistency across multiple runs or sacrifice precision for faster convergence.

Table 7.

Optimal results of algorithms on the cantilever beam design problem.

Figure 4.

Convergence curves of CMCOA and competitor algorithms on the cantilever beam design problem.

In summary, the assessment across both discrete and constrained continuous engineering design problems provides compelling evidence for the versatility, efficacy, and robustness of CMCOA. The algorithm achieves competitive or superior results against both classic and state-of-the-art optimizers. These experiments indicate that the proposed enhancements (chaotic initialization, adaptive parameter control, and multi-guide stage-switching) serve as effective, general strategies for improving the optimization performance of the underlying algorithm.

3. Application and Evaluation of CMCOA for Image Analysis

3.1. Experimental Setup

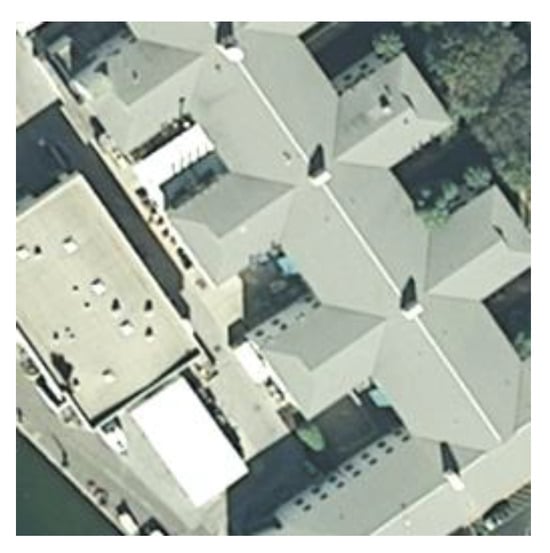

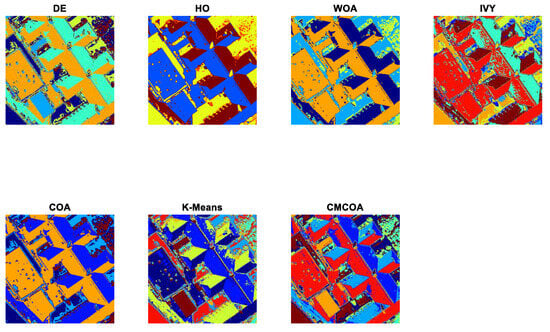

To assess the clustering capability of the proposed CMCOA on realistic high-dimensional imagery, two test images were used. Test image 1 (Figure 5) is a multispectral scene with pronounced local texture and color variation. Test image 2 (Figure 6) is drawn from the UC Merced Land Use dataset [] and consists of aerial imagery captured at high spatial resolution. The two images pose different clustering challenges: the first emphasizes fine-grained intra-object variation, whereas the second presents large-scale land use patterns and more clearly separated spectral modes.

Figure 5.

Test image 1 in the experiment.

Figure 6.

Test image 2 in the experiment.

All optimizers were executed under identical control settings: population size 30, maximum iterations 500, search bounds [0, 255], and a fixed random seed to ensure reproducibility. The original three-dimensional image array (height × width × bands) was first reshaped into a two-dimensional matrix in which each row is a pixel's feature vector across all bands. This transformation allows the clustering task to be formulated as a numerical optimization problem whose goal is to locate a set of optimal cluster centers. The cost function minimizes the sum of Euclidean distances between each pixel and its nearest cluster center, thereby promoting spectral compactness within clusters.

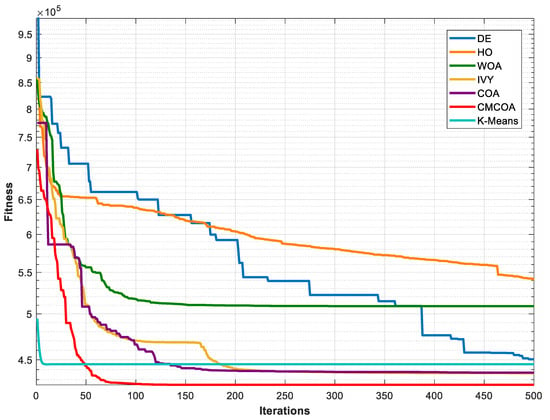

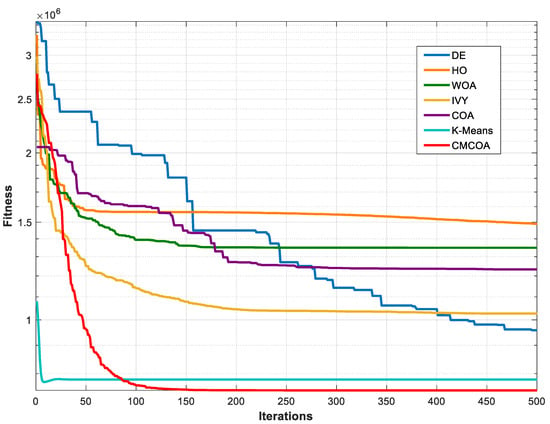

where the quantities involved are the feature vector of the i-th pixel, the k-th cluster center, and the total numbers of pixels and cluster centers. During the clustering experiments, each algorithm was executed independently to obtain a final set of cluster centers. The number of cluster centers was set to 6 for test image 1 and 8 for test image 2. Each pixel was then assigned to its nearest center, and the segmented images were reconstructed accordingly. The best fitness value at each iteration was recorded to generate convergence curves and to analyze the algorithms' optimization behavior over time.
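The reshaping, cost evaluation, and nearest-center reconstruction can be sketched in NumPy. This is a minimal sketch, assuming each optimizer candidate is a flat vector of K × B values (K centers, B bands) clipped to the [0, 255] search bounds. The helper names `image_to_pixels`, `decode_centers`, and `clustering_cost` are hypothetical rather than taken from the authors' code. The cost is the sum over pixels of the Euclidean distance to the nearest center, and the same assignment yields the labels used to rebuild the segmented image.

```python
import numpy as np
from typing import Tuple


def image_to_pixels(img: np.ndarray) -> np.ndarray:
    """Reshape an (H, W, B) image into an (H*W, B) matrix, one row per pixel."""
    h, w, b = img.shape
    return img.reshape(h * w, b).astype(np.float64)


def decode_centers(candidate: np.ndarray, k: int, bands: int,
                   lo: float = 0.0, hi: float = 255.0) -> np.ndarray:
    """Interpret a flat candidate of length k*bands as k cluster centers."""
    return np.clip(np.asarray(candidate, dtype=np.float64), lo, hi).reshape(k, bands)


def clustering_cost(pixels: np.ndarray, centers: np.ndarray) -> Tuple[float, np.ndarray]:
    """Sum of Euclidean distances from each pixel to its nearest center, plus labels."""
    # (n_pixels, k) distance table; for very large images, evaluate in pixel chunks.
    dist = np.linalg.norm(pixels[:, None, :] - centers[None, :, :], axis=2)
    labels = dist.argmin(axis=1)
    return float(dist.min(axis=1).sum()), labels


# Tiny 2 x 2 RGB example: two black and two white pixels.
img = np.array([[[0, 0, 0], [255, 255, 255]],
                [[0, 0, 0], [255, 255, 255]]], dtype=np.uint8)
pixels = image_to_pixels(img)
centers = decode_centers(np.array([0, 0, 0, 255, 255, 255]), k=2, bands=3)
cost, labels = clustering_cost(pixels, centers)
segmented = centers[labels].reshape(img.shape)  # each pixel replaced by its center
print(cost, labels)
```

Within an optimizer, `clustering_cost(pixels, decode_centers(candidate, K, B))[0]` would serve as the fitness of one candidate. Note that the pixels are cast to float before subtraction to avoid unsigned-integer wrap-around.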

To support reproducibility and statistical reliability, Table 8 reports the final objective function value (Fit) and the mean silhouette coefficient (Silhouette), an internal cluster validity index. K-Means [], the most basic and widely used clustering algorithm in this field, is also included as a baseline to demonstrate the practical value and effectiveness of the proposed optimization-based approach. The K-Means baseline was implemented with k-means++ initialization and 50 restarts to mitigate sensitivity to the initial centroids. The other meta-heuristic algorithms used the parameter settings reported in Table 2 and were run with the same random-seed strategy to ensure comparability. Silhouette values were computed in the original multiband pixel feature space using Euclidean distance. Pixel preprocessing was limited to standard normalization, and no additional dimensionality reduction or spatial priors were introduced, so that the performance of the optimizers themselves in the spectral space could be assessed objectively.
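The mean silhouette coefficient can be computed directly from its definition. For each pixel, a is the mean distance to the other members of its own cluster, and b is the smallest mean distance to the members of any other cluster. The pixel's score is then s = (b − a)/max(a, b). The NumPy sketch below follows this definition with Euclidean distance and assigns s = 0 to singletons, the convention used by scikit-learn's `silhouette_score`. The function name is hypothetical. Because the pairwise distance matrix grows quadratically with the number of pixels, full-image evaluation usually relies on a random pixel subsample, and the paper does not state whether subsampling was used.

```python
import numpy as np


def mean_silhouette(X: np.ndarray, labels: np.ndarray) -> float:
    """Mean silhouette coefficient with Euclidean distance (needs >= 2 clusters)."""
    X = np.asarray(X, dtype=np.float64)
    labels = np.asarray(labels)
    # Full pairwise Euclidean distance matrix: O(n^2) memory, so subsample large images.
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    clusters = np.unique(labels)
    s = np.zeros(len(X))
    for i in range(len(X)):
        own = labels == labels[i]
        n_own = int(own.sum())
        if n_own == 1:
            continue  # singleton cluster: silhouette defined as 0
        a = D[i, own].sum() / (n_own - 1)  # D[i, i] = 0, so exclude i from the count
        b = min(D[i, labels == c].mean() for c in clusters if c != labels[i])
        denom = max(a, b)
        s[i] = 0.0 if denom == 0 else (b - a) / denom
    return float(s.mean())


# Two well-separated 1-D groups of points.
X = np.array([[0.0], [1.0], [10.0], [11.0]])
print(mean_silhouette(X, np.array([0, 0, 1, 1])))
```

Values near 1 indicate compact, well-separated clusters. Values near 0 or below indicate overlapping or mis-assigned clusters. The index needs no ground truth, which is why it can be used for these unlabeled images.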

Table 8.

Comparison of quantitative metrics for different algorithms in image clustering.

3.2. Analysis of Experimental Results

Across both test images, the proposed CMCOA produced a consistently faster early decline in the objective value followed by a more restrained late-stage descent. This pattern is consistent with the intended effects of the chaotic initialization and adaptive control mechanisms. On test image 1, the final mean Fit obtained by CMCOA was 424,886.16, an improvement over both the classical COA and the K-Means baseline. This reduction in the optimization objective was accompanied by a clear gain in internal validity: CMCOA's mean silhouette rose to 0.6432, exceeding the nearest competitor by a clear margin (Table 8). The steep initial drop in the convergence curve is attributed to the chaotic, opposition-enhanced initialization, which populates promising regions early. As the population approaches better basins, the adaptive parameter control rule moderates step sizes, preventing premature stagnation and yielding a tighter final spread across runs.
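The chaotic, opposition-enhanced initialization credited above can be illustrated with a generic sketch. A logistic map x_{n+1} = μ·x_n(1 − x_n) with μ = 4 (the value listed in the Abbreviations) generates chaotic values in (0, 1), which are scaled to the search bounds. Opposition-based learning then adds the opposite point lb + ub − x for each candidate, and the best N of the 2N candidates are kept. This is a minimal sketch of these standard techniques under the stated assumptions, not the authors' exact CMCOA initializer. The seeding scheme, the function names, and the sphere test objective are hypothetical.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]


def logistic_sequence(x0: float, length: int, mu: float = 4.0) -> List[float]:
    """Iterate x <- mu * x * (1 - x); for mu = 4 the map is chaotic on (0, 1)."""
    values, x = [], x0
    for _ in range(length):
        x = mu * x * (1.0 - x)
        values.append(x)
    return values


def chaotic_obl_init(fitness: Callable[[Vector], float], n: int,
                     lb: Sequence[float], ub: Sequence[float],
                     seed: int = 0) -> List[Vector]:
    """Logistic-map population plus opposite points; keep the n best (minimization)."""
    rng = random.Random(seed)
    dim = len(lb)
    population: List[Vector] = []
    for _ in range(n):
        # Keep the seed away from 0 and 1, which collapse the map to the fixed point 0.
        chaos = logistic_sequence(rng.uniform(0.01, 0.99), dim)
        population.append([lb[j] + c * (ub[j] - lb[j]) for j, c in enumerate(chaos)])
    # Opposition-based learning: reflect each candidate through the centre of the box.
    opposites = [[lb[j] + ub[j] - x[j] for j in range(dim)] for x in population]
    return sorted(population + opposites, key=fitness)[:n]


def sphere(x: Vector) -> float:
    """Hypothetical test objective: sum of squares."""
    return sum(v * v for v in x)


pop = chaotic_obl_init(sphere, n=5, lb=[-10.0] * 3, ub=[10.0] * 3, seed=42)
print([round(sphere(p), 3) for p in pop])
```

Evaluating 2N candidates and keeping the best N costs N extra fitness evaluations once, at initialization. In exchange, each candidate and its mirror image are both considered, which widens early coverage of the search box.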

Results on test image 2, the UC Merced aerial image, present a complementary picture. CMCOA attained the lowest Fit among all methods, indicating superior minimization of the spectral compactness objective in a more complex setting. Its silhouette score, however, was marginally lower than that of K-Means, which achieved the highest silhouette. This divergence is informative. K-Means minimizes the sum of squared Euclidean distances, which biases it toward spherical, well-separated clusters, so it can occasionally produce slightly higher silhouette values when the underlying class geometry matches that bias. CMCOA instead directly minimizes the sum of unsquared Euclidean distances used as Fit, while maintaining richer intra-cluster texture and avoiding collapsed centroids. This explains its lower Fit together with a very similar silhouette. Visual inspection of the segmentation confirms that CMCOA preserves subtle boundaries and intra-class heterogeneity that are desirable in remote-sensing interpretation, while still delivering compact spectral clusters. The clustering fitness convergence curves of the seven optimization algorithms on images 1 and 2 are shown in Figure 7 and Figure 8.

Figure 7.

Clustering fitness convergence curves of seven optimization algorithms on image 1.

Figure 8.

Clustering fitness convergence curves of seven optimization algorithms on image 2.

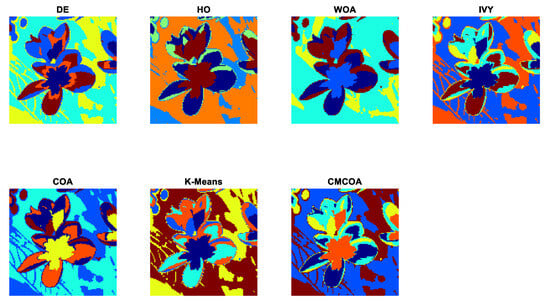

Figure 9 and Figure 10 present the clustering results produced by the seven algorithms on the test images.

Figure 9.

Comparison of the visualized clustering results of the seven algorithms on image 1.

Figure 10.

Comparison of the visualized clustering results of the seven algorithms on image 2.

Qualitative comparisons further highlight the differences in segmentation performance. DE and HO outlined the general shape but failed to capture internal details, leading to blurred boundaries and some background misclassification. WOA delivered improved contour detection and better separation but lacked accuracy on finer textures. IVY was less effective, with irregular segmentation and significant background misclassification. The original COA, which serves as CMCOA's baseline, showed better structural differentiation and cleaner backgrounds, indicating that it is a suitable starting point for these tasks.

In contrast, CMCOA produced the most detailed segmentation. On test image 1, it clearly distinguished the petal regions and preserved subtle structural and color variations, especially near the flower's center. This level of detail retention and color consistency could be useful in digital printing workflows, where precise boundary detection, minimal color bleeding, and accurate tone reproduction are crucial for print quality and visual appeal. CMCOA's more balanced cluster sizes may also support more even ink distribution, reducing the risk of over-saturation or under-printing in different image areas.

Overall, CMCOA generated segmentation results that, on visual inspection, closely matched human perception of semantic regions, effectively decomposing the images into distinct and meaningful components. This suggests its potential for unsupervised image recognition. With stable convergence, compact clusters, and strong visual interpretability, CMCOA is a compelling approach for image analysis. However, ground-truth segmentations for the experimental images are not available, so standard supervised segmentation metrics could not be computed. This is a limitation of the present study, and our evaluation therefore relies on qualitative visual inspection and clustering-related indices. Future work will incorporate datasets with ground-truth masks to enable a more comprehensive and objective assessment.

4. Conclusions

This paper presented CMCOA, an improved framework for image clustering that integrates chaotic initialization, a fitness-feedback adaptive parameter controller inspired by the MIT rule, and a multi-guide stage-switching mechanism. These strategies were designed to strengthen global exploration, maintain a dynamic exploration-exploitation balance, and mitigate premature convergence. Experimental results on CEC benchmark functions, engineering design problems, and multispectral images showed that CMCOA achieves notable gains in convergence speed, clustering quality, and intra-cluster compactness compared with several state-of-the-art meta-heuristics, while maintaining competitive computational efficiency.

Although the results are encouraging, some limitations remain. The adaptive parameter control is still designed empirically and may not be equally effective across all problem scales. While the multi-guide strategy reduces the risk of stagnation, convergence on problems with many local optima may still be challenging. More importantly, the current evaluation has been largely limited to clustering and multispectral imagery. The generalization of CMCOA to other image analysis tasks, such as segmentation, classification, or feature extraction, and its performance on different modalities, including medical, satellite, and natural images, remain to be systematically investigated.

Future work will focus on improving adaptability and extending applicability. Potential directions include refining adaptive parameter dynamics, incorporating parallel computing to reduce runtime, and combining CMCOA with deep learning or graph-based models for richer semantic representation. Furthermore, extending validation to diverse image domains and tasks will be essential for assessing robustness and transferability, paving the way for CMCOA to serve as a versatile tool in complex and dynamic optimization scenarios.

Author Contributions

Conceptualization, Z.S. (Zhe Sun) and Y.B.; methodology, Z.S. (Zhe Sun); software, Z.S. (Ziyang Shen); validation, Z.S. (Ziyang Shen), Z.S. (Zhe Sun) and Z.S. (Zhixin Sun); formal analysis, Z.S. (Ziyang Shen); investigation, Z.S. (Zhe Sun) and Z.S. (Zhixin Sun); resources, Z.S. (Zhe Sun) and Y.B.; data curation, Z.S. (Ziyang Shen); writing—original draft preparation, Z.S. (Ziyang Shen); writing—review and editing, Z.S. (Ziyang Shen) and Z.S. (Zhe Sun); visualization, Z.S. (Ziyang Shen); supervision, Z.S. (Zhixin Sun); project administration, Z.S. (Zhe Sun); funding acquisition, Y.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China under Grant Nos. 62303214 and 62272239.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this paper:

| Abbreviation | Definition |
| --- | --- |
| CMCOA | Chaos-initiated and Adaptive Multi-guide Control-based Crayfish Optimization Algorithm |
| COA | Crayfish Optimization Algorithm |
| MIT rule | Massachusetts Institute of Technology rule from Model Reference Adaptive Control |
| BinCOA | Binary Crayfish Optimization Algorithm |
| MCOA | Modified Crayfish Optimization Algorithm |
| AD-COA-L | Adaptive Dynamic COA with a Locally enhanced escape operator |
| PSO | Particle Swarm Optimization |
| OBL | Opposition-Based Learning |
| Eq | Equation |
| rand | random number in the interval [0, 1] |
| DE | Differential Evolution |
| HO | Hippopotamus Optimization |
| WOA | Whale Optimization Algorithm |
| IVY | Ivy Algorithm |
| CEC | IEEE Congress on Evolutionary Computation competition |

| Symbol | Description |
| --- | --- |
|  | dimension |
|  | the problem dimension |
|  | -dimensional vector generated uniformly at random from the interval [0, 1] |
|  | dimension search space |
|  | dimension search space |
|  | a random value from the interval [0, 1] |
|  | the global best solution |
|  | the local best solution |
|  | total iterations and current iteration |
|  | the linearly decreasing coefficient |
| N | the population size |
| round() | rounds a decimal to the nearest integer |
|  | the food location |
|  | a constant food factor, which equals 3 and represents the largest food |
|  | the food size |
|  | the food intake proportion |
| µ | the temperature most suitable for crayfish |
|  | factors controlling the intake of crayfish at different temperatures, equal to 3 and 0.2, respectively |
|  | the chaotic value |
|  | the logistic-map parameter, which equals 4 |
|  | the positive Lyapunov exponent |
|  | the opposite solution of each candidate |
|  | the control parameter in the adaptive parameter control rule |
|  | generation population |
|  | the relative deviation between the population average and the best solution |
|  | the learning rate controlling the update speed, set to 0.03 |
| E | the top-performing solutions |
|  | the elite set of size |
|  | the guiding position in the multi-guide strategy |
|  | best solution |
|  | the feasible domain of elite solutions |
|  | the switching threshold |
|  | generation population fitness standard deviation |
| M | the number of basic functions |
| R | the desired target ratio |
|  | feature vector of the i-th pixel |
|  | the k-th cluster center |
|  | the total numbers of pixels and cluster centers, respectively |

References

- Peng, D.; Gui, Z.; Wu, H. Interpreting the Curse of Dimensionality from Distance Concentration and Manifold Effect. arXiv 2023, arXiv:2401.00422.

- Simpson, C.; Tabatsky, E.; Rahil, Z.; Eddins, D.J.; Tkachev, S.; Georgescauld, F.; Papalegis, D.; Culka, M.; Levy, T.; Gregoretti, I.; et al. Lifting the Curse from High-Dimensional Data: Automated Projection Pursuit Clustering for a Variety of Biological Data Modalities. GigaScience 2025, 14, giaf052.

- Fakhouri, H.N.; Al-Shamayleh, A.S.; Ishtaiwi, A.; Makhadmeh, S.N.; Fakhouri, S.N.; Hamad, F. Hybrid Four Vector Intelligent Metaheuristic with Differential Evolution: Opportunities and Challenges for Structural Single-Objective Engineering Optimization. Algorithms 2024, 17, 417.

- Wang, C.-H.; Hu, K.; Wu, X.; Ou, Y. Rethinking Metaheuristics: Unveiling the Myth of “Novelty” in Metaheuristic Algorithms. Mathematics 2025, 13, 2158.

- Rajwar, K.; Deep, K.; Das, S. An Exhaustive Review of the Metaheuristic Algorithms for Search and Optimization: Taxonomy, Applications, and Open Challenges. Artif. Intell. Rev. 2023, 56, 13187–13257.

- Tian, D.; Xu, Q.; Yao, X.; Zhang, G.; Li, Y.; Xu, C. Diversity-Guided Particle Swarm Optimization with Multi-Level Learning Strategy. Swarm Evol. Comput. 2024, 86, 101533.

- Choi, K.P.; Lai, T.L.; Tong, X.T.; Tsang, K.W.; Wong, W.K.; Zhang, H. Adaptive Parameter Tuning of Evolutionary Computation Algorithms. Stat. Biosci. 2025, 13, 2158.

- Prado-Rodríguez, R.; González, P.; Banga, J.R. A Parameter Control Strategy for Parallel Island-Based Metaheuristics. Expert Syst. 2025, 42, e70061.

- Grewal, R.; Kasana, S.S.; Kasana, G. Hyperspectral Image Segmentation: A Comprehensive Survey. Multimed. Tools Appl. 2023, 82, 20819–20872.

- Wang, N.; Cui, Z.; Lan, Y.; Zhang, C.; Xue, Y.; Su, Y.; Li, A. Large-Scale Hyperspectral Image-Projected Clustering via Doubly Stochastic Graph Learning. Remote Sens. 2025, 17, 1526.

- Jia, H.; Rao, H.; Wen, C.; Mirjalili, S. Crayfish Optimization Algorithm. Artif. Intell. Rev. 2023, 56 (Suppl. S2), 1919–1979.

- Shikoun, N.H.; Al-Eraqi, A.S.; Fathi, I.S. BinCOA: An Efficient Binary Crayfish Optimization Algorithm for Feature Selection. IEEE Access 2024, 12, 28621–28635.

- Jia, H.; Zhou, X.; Zhang, J.; Abualigah, L.; Yildiz, A.R.; Hussien, A.G. Modified crayfish optimization algorithm for solving multiple engineering application problems. Artif. Intell. Rev. 2024, 57, 127.

- Elhosseny, M.; Abdel-Salam, M.; El-Hasnony, I.M. Adaptive dynamic crayfish algorithm with multi-enhanced strategy for global high-dimensional optimization and real-engineering problems. Sci. Rep. 2025, 15, 10656.

- Jayakrishna, N.; Prasanth, N.N. Detection and mitigation of distributed denial of service attacks in vehicular ad hoc network using a spatiotemporal deep learning and reinforcement learning approach. Results Eng. 2025, 26, 104839.

- Padmashree, A.; Sankar, P.; Alkhayyat, A.; Muniyand, E. A novel similarity navigated graph neural networks and crayfish optimization algorithm for accurate brain tumor detection. Res. Biomed. Eng. 2025, 41, 31.

- Dinh, P.H. MIF-BTF-MRN: Medical image fusion based on the bilateral texture filter and transfer learning with the ResNet-101 network. Biomed. Signal Process. Control 2025, 100, 106976.

- Luo, T.; Xie, J.; Zhang, B.; Zhang, Y.; Li, C.; Zhou, J. An improved Lévy chaotic particle swarm optimization algorithm for energy-efficient cluster routing scheme in industrial wireless sensor networks. Expert Syst. Appl. 2024, 241, 122780.

- Li, M.-W.; Xu, R.-Z.; Yang, Z.-Y.; Yeh, Y.-H.; Hong, W.-C. Optimizing berth–crane allocation considering tidal effects using chaotic quantum whale optimization algorithm. Appl. Soft Comput. 2024, 162, 111811.

- Feng, W.; Zhang, K.; Zhang, J.; Zhao, X.; Chen, Y.; Cai, B.; Zhu, Z.; Wen, H.; Ye, C. Integrating fractional-order Hopfield neural network with differentiated encryption: Achieving high-performance privacy protection for medical images. Fractal Fract. 2025, 9, 426.

- Ye, C.; Tan, S.; Wang, J.; Shi, L.; Zuo, Q.; Feng, W. Social image security with encryption and watermarking in hybrid domains. Entropy 2025, 27, 276.

- Feng, W.; Zhang, J.; Chen, Y.; Qin, Z.; Zhang, Y.; Ahmad, M.; Woźniak, M. Exploiting robust quadratic polynomial hyperchaotic map and pixel fusion strategy for efficient image encryption. Expert Syst. Appl. 2024, 246, 123190.

- Mahdavi, S.; Rahnamayan, S.; Deb, K. Opposition-based learning: A literature review. Swarm Evol. Comput. 2018, 39, 1–23.

- Rothe, J.; Zhang, Y.; Schmidt, M.; Müller-Stoll, S. A modified Model Reference Adaptive Controller (M-MRAC): Comparison of MIT-rule and SPR-rule. Electronics 2020, 9, 1104.

- Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Amiri, M.H.; Mehrabi Hashjin, N.; Montazeri, M.; Mirjalili, S.; Khodadadi, N. Hippopotamus optimization algorithm: A novel nature-inspired optimization algorithm. Sci. Rep. 2024, 14, 5032.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Ghasemi, M.; Zare, M.; Trojovský, P.; Rao, R.V.; Trojovská, E.; Kandasamy, V. Optimization based on the smart behavior of plants with its engineering applications: Ivy algorithm. Knowl.-Based Syst. 2024, 295, 111850.

- Wu, G.; Mallipeddi, R.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2017 Competition on Constrained Real-Parameter Optimization; National University of Defense Technology: Changsha, China, 2017.

- Xue, B.; Zhang, M.; Browne, W.; Ma, H.; Mei, Y.; Song, A.; Andreae, P.; Chen, A.; Chen, Q.; Al-Sahaf, H.; et al. 2019 IEEE Congress on Evolutionary Computation (CEC 2019). In Proceedings of the 2019 IEEE Congress on Evolutionary Computation (CEC), Wellington, New Zealand, 10–13 June 2019.

- Liang, J.J.; Qu, B.; Gong, D.; Yue, C. Problem Definitions and Evaluation Criteria for the CEC 2019 Special Session on Multimodal Multiobjective Optimization; Computational Intelligence Laboratory, Zhengzhou University: Zhengzhou, China, 2019.

- Kumar, A.; Price, K.V.; Mohamed, A.W.; Hadi, A.A.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the 2022 Special Session and Competition on Single Objective Bound Constrained Numerical Optimization; Nanyang Technological University: Singapore, 2021.

- Su, H.; Dong, Z.; Liu, Y.; Mu, Y.; Li, S.; Xia, L. Symmetric projection optimizer: Concise and efficient solving engineering problems using the fundamental wave of the Fourier series. Sci. Rep. 2024, 14, 6032.

- Hu, G.; Guo, Y.; Wei, G.; Abualigah, L. Genghis Khan shark optimizer: A novel nature-inspired algorithm for engineering optimization. Adv. Eng. Inform. 2023, 58, 102210.

- Zhong, R.; Yu, J.; Zhang, C.; Munetomo, M. Surrogate ensemble-assisted hyper-heuristic algorithm for expensive optimization problems. Int. J. Comput. Intell. Syst. 2023, 16, 169.

- Liu, Y.; Wang, H. Surrogate-assisted hybrid evolutionary algorithm with local estimation of distribution for expensive mixed variable optimization problems. Appl. Soft Comput. 2023, 133, 109957.

- Yang, Y.; Newsam, S. Bag-of-Visual-Words and Spatial Extensions for Land-Use Classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems (ACM SIGSPATIAL GIS 2010), San Jose, CA, USA, 2–5 November 2010; ACM: New York, NY, USA, 2010; pp. 270–279.

- Lloyd, S.P. Least Squares Quantization in PCM. IEEE Trans. Inf. Theory 1982, 28, 129–137.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).