Latest Trends on Tree Classification and Segmentation Using UAV Data—A Review of Agroforestry Applications

Abstract

1. Introduction

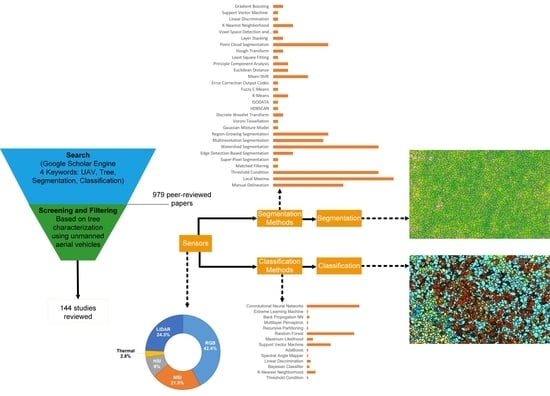

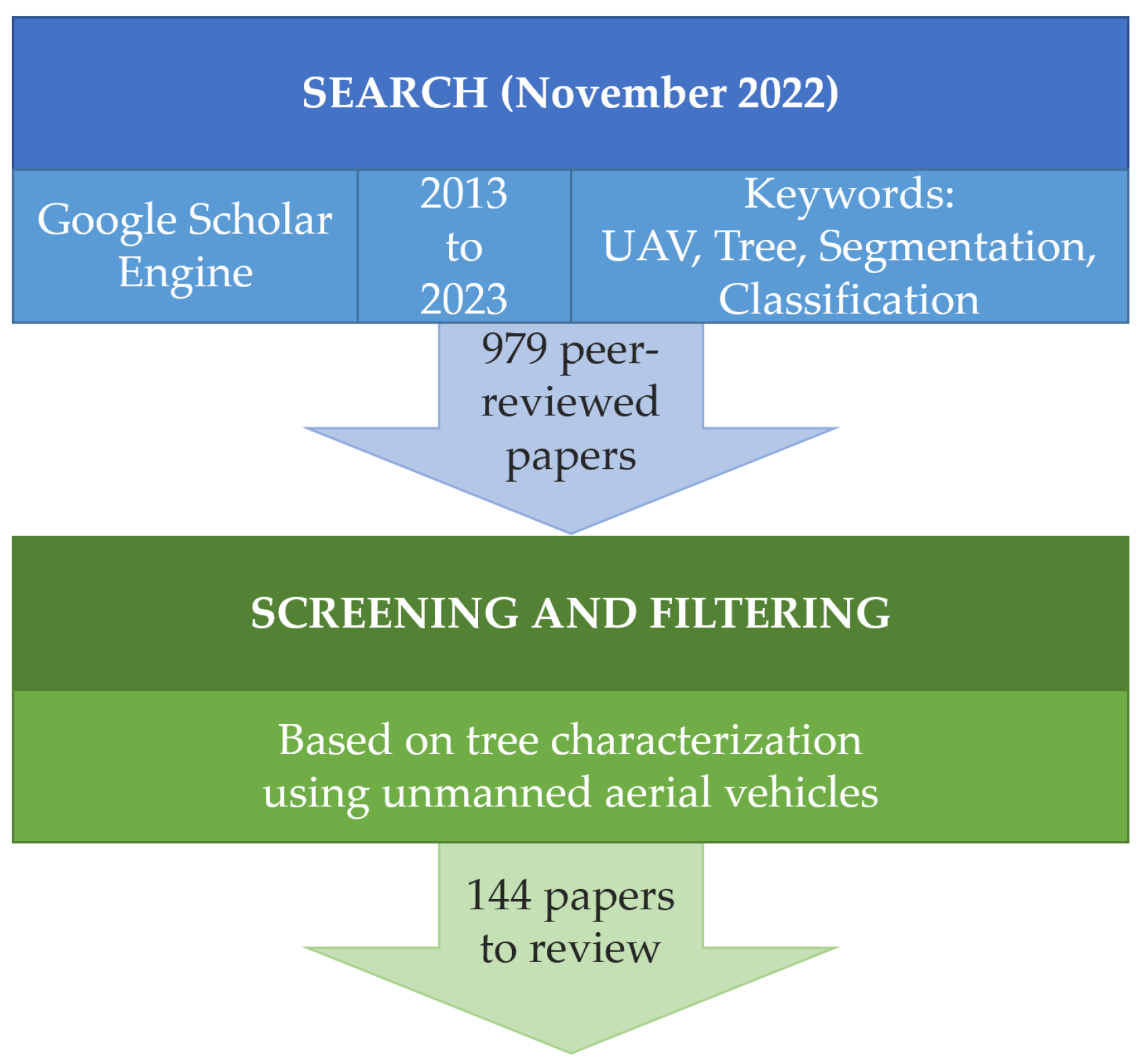

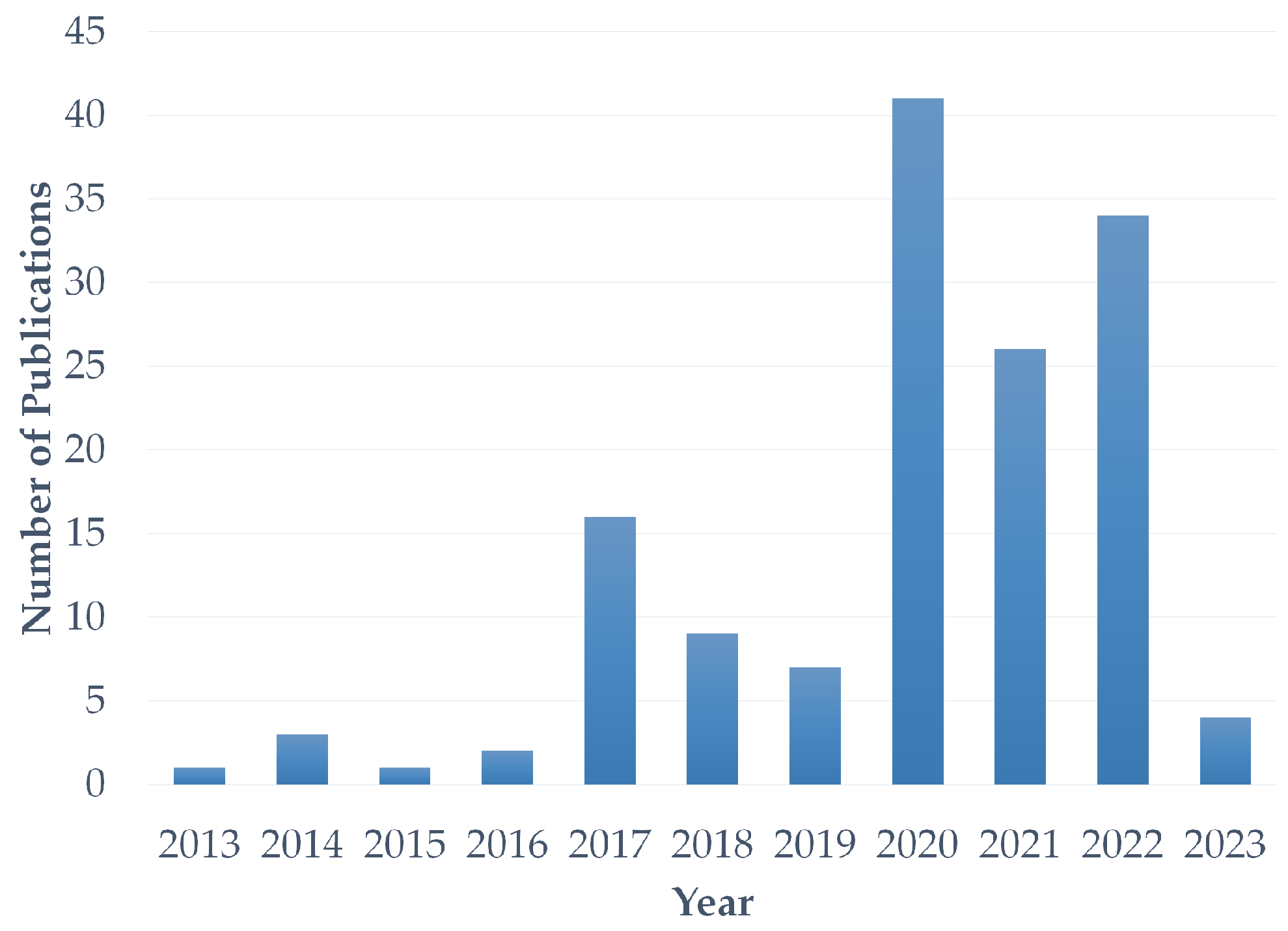

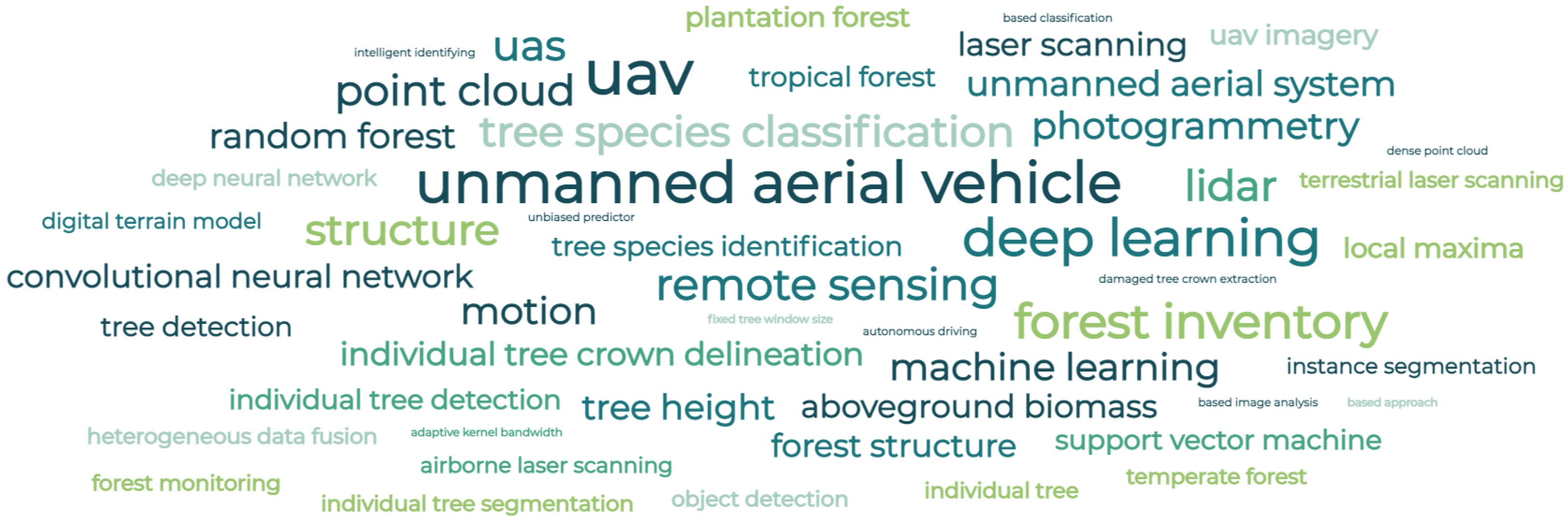

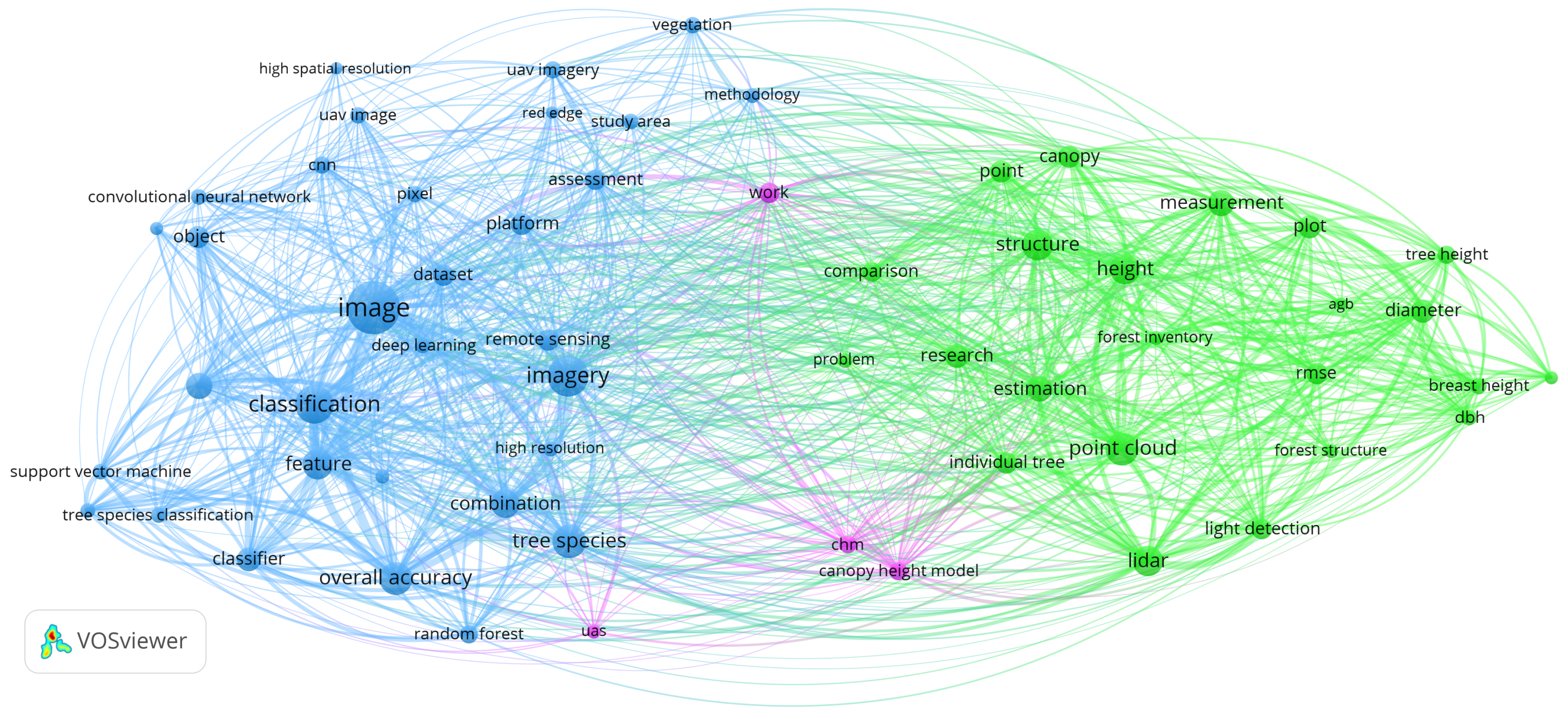

2. Review Methodology

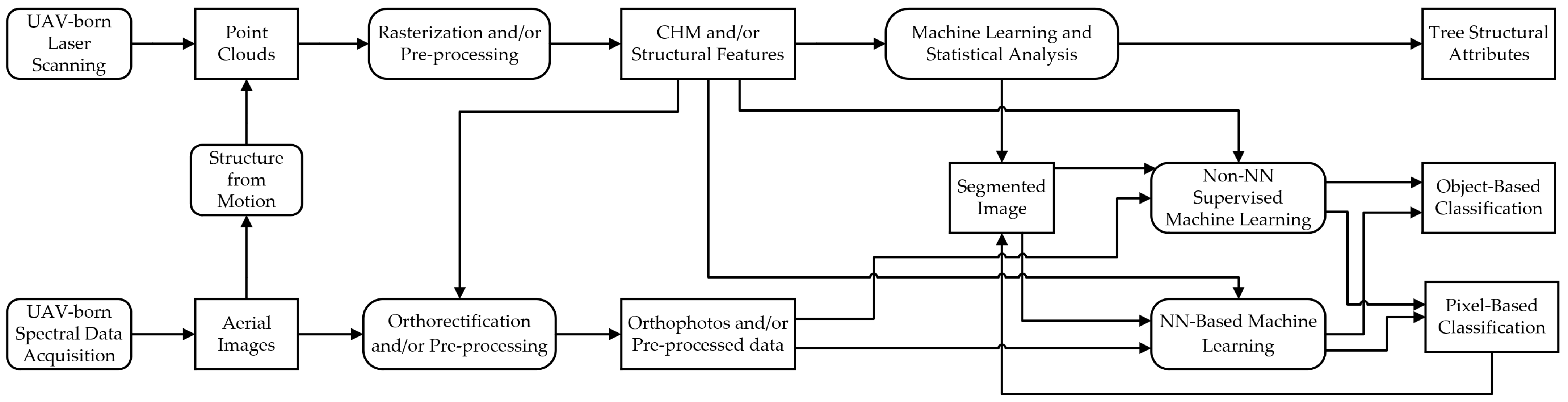

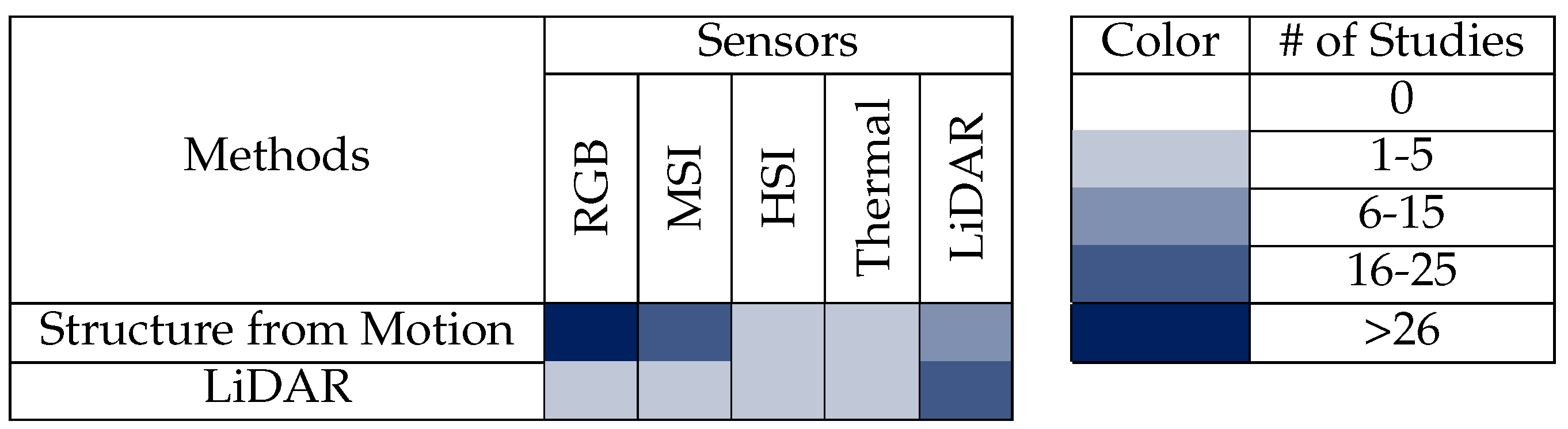

3. Sensors and Data Structure

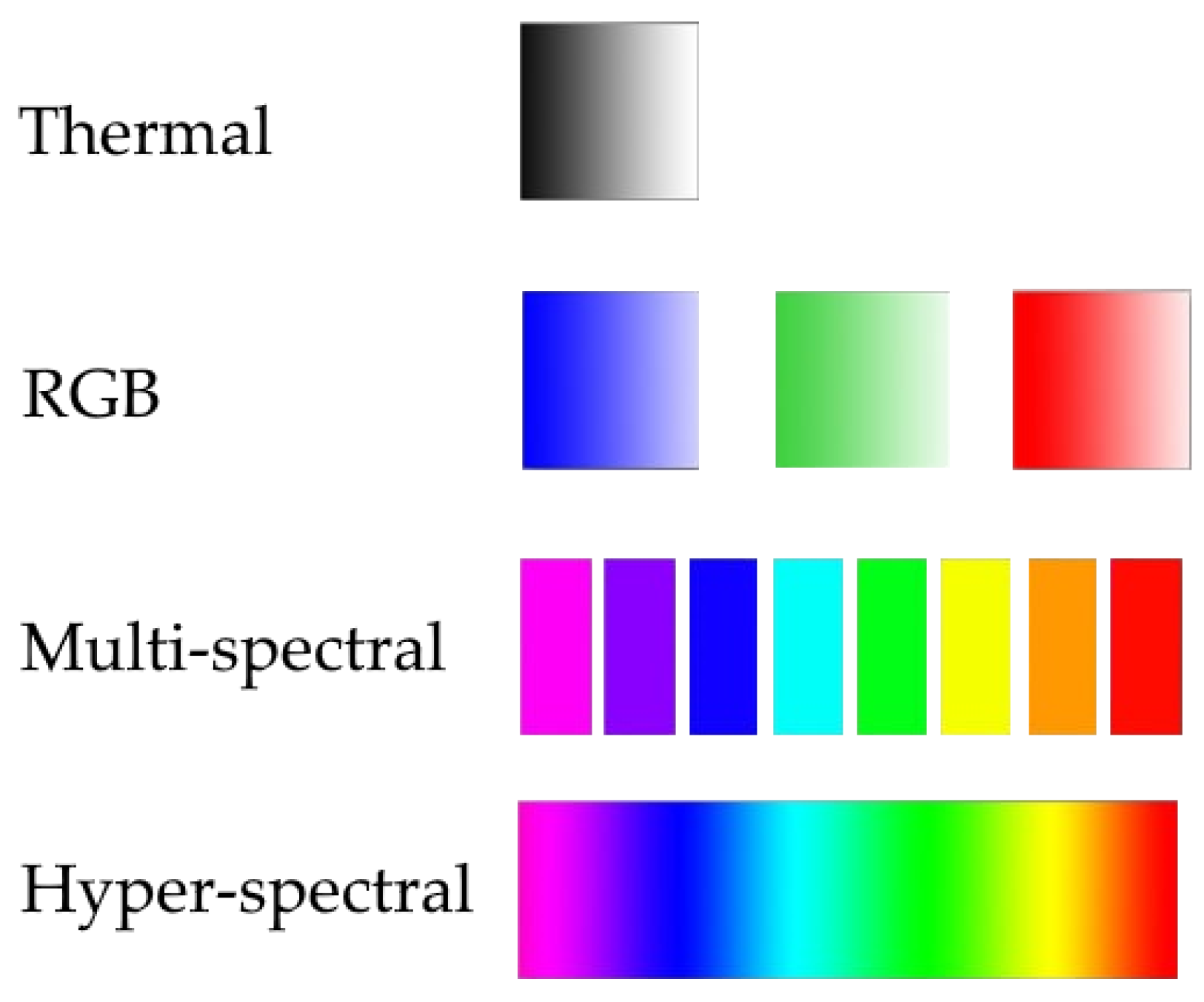

3.1. Spectral Data

3.2. Structural Data

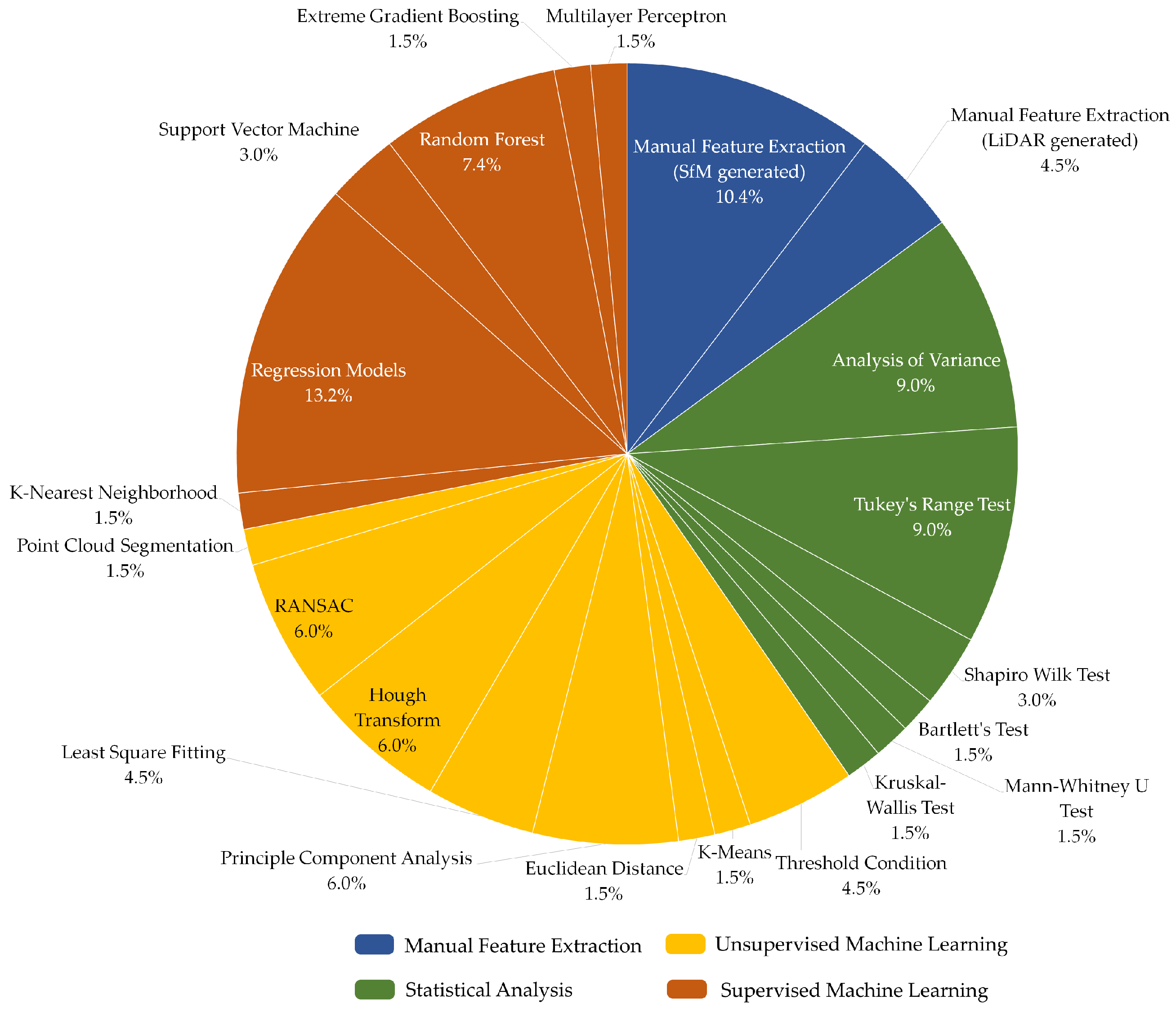

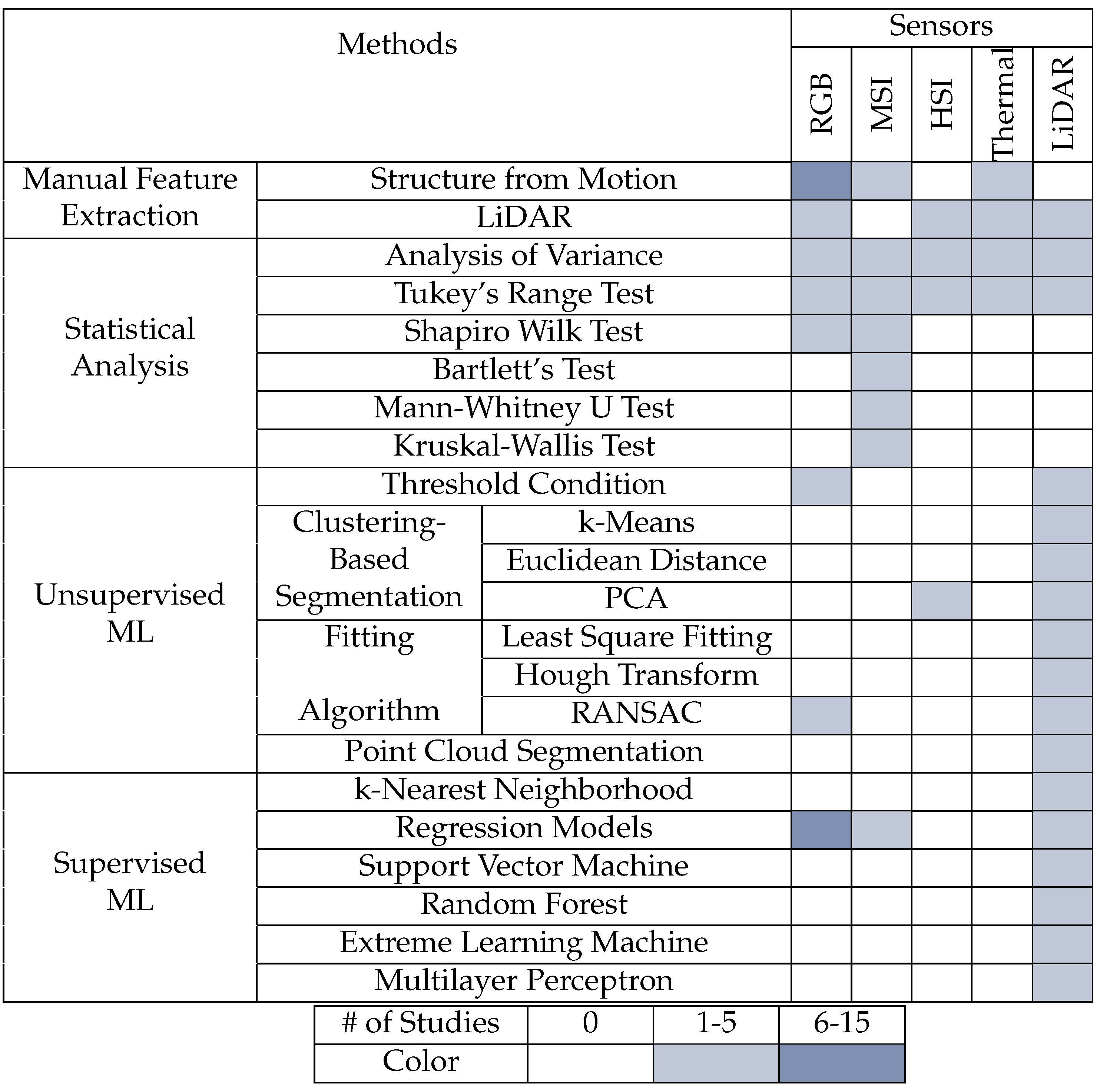

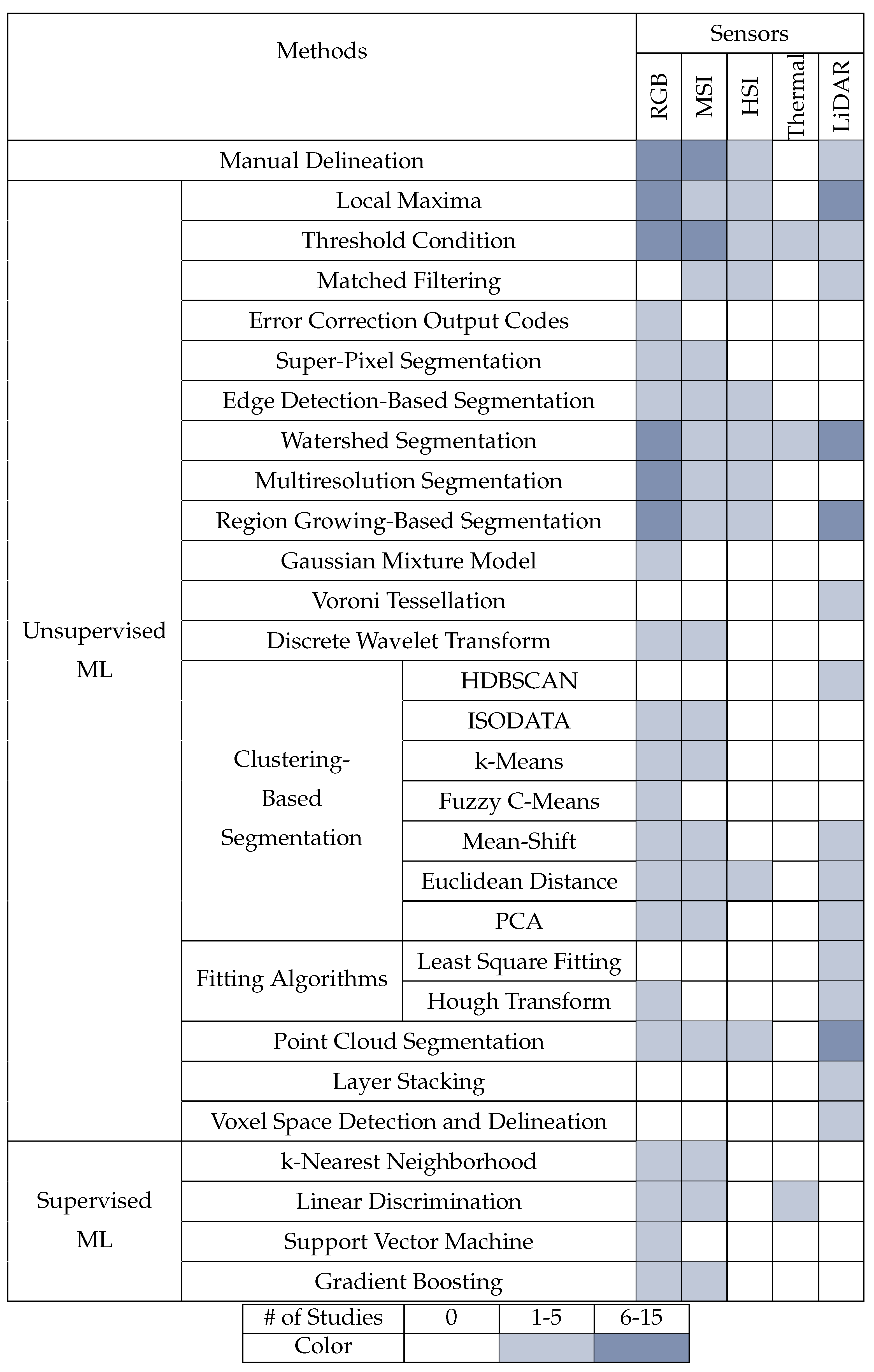

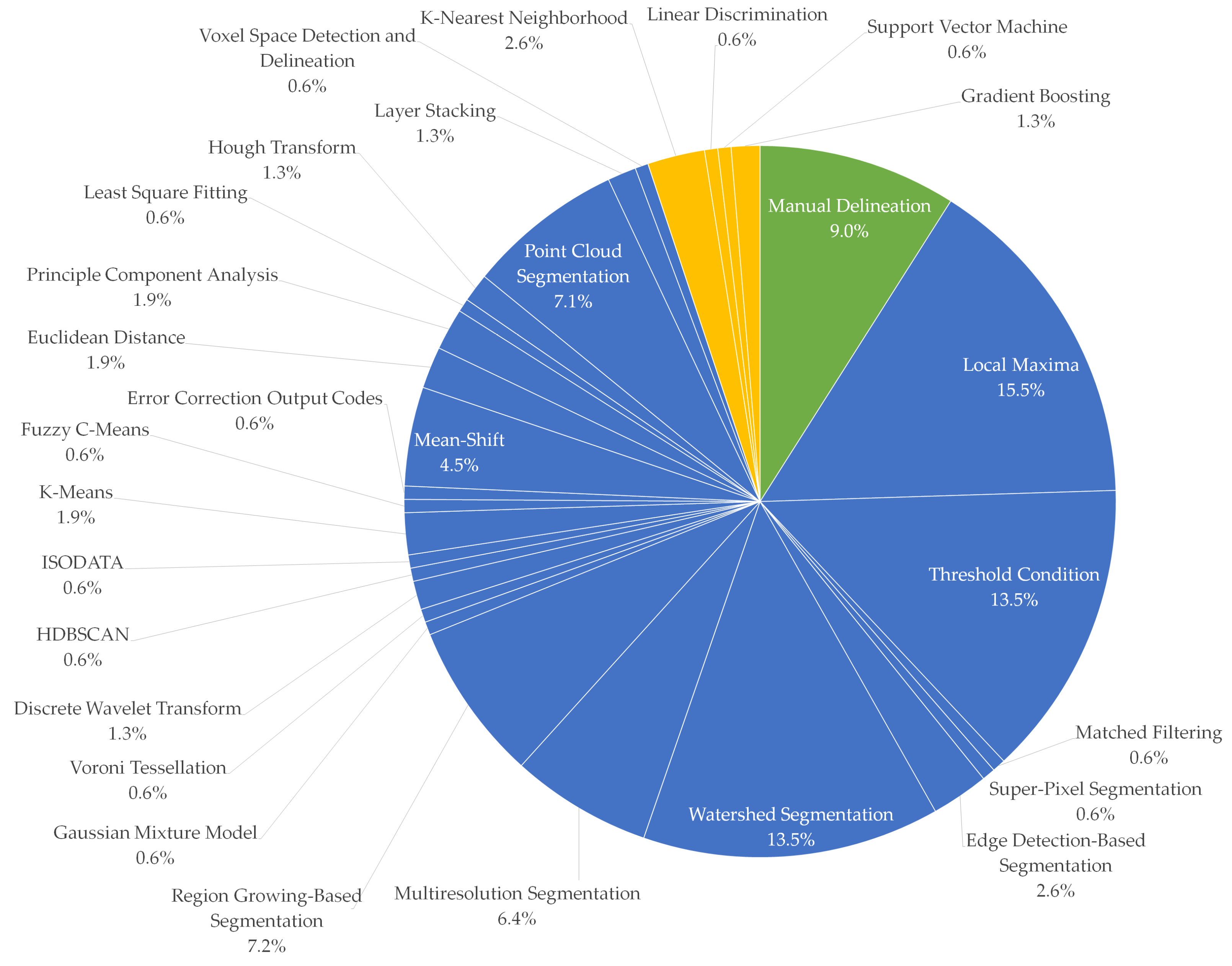

4. Forestry and Statistics

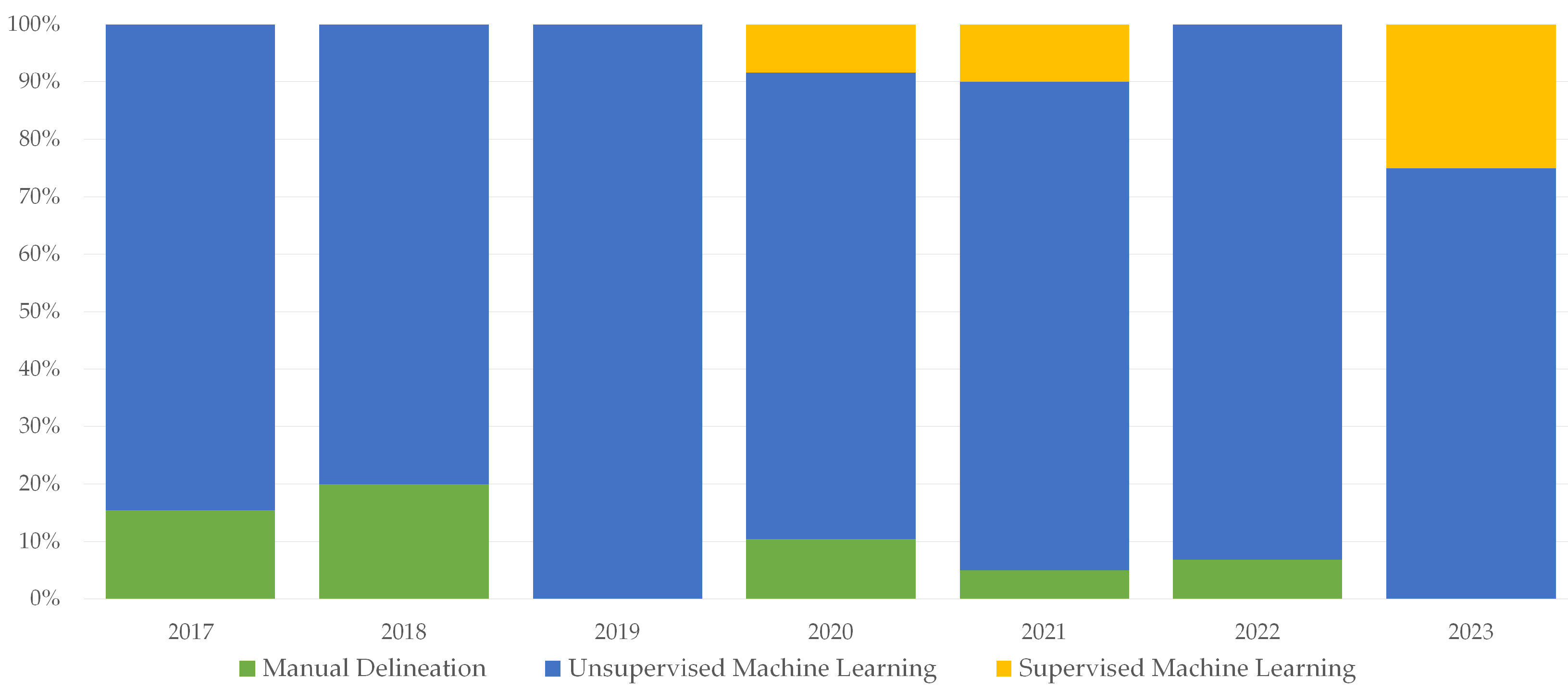

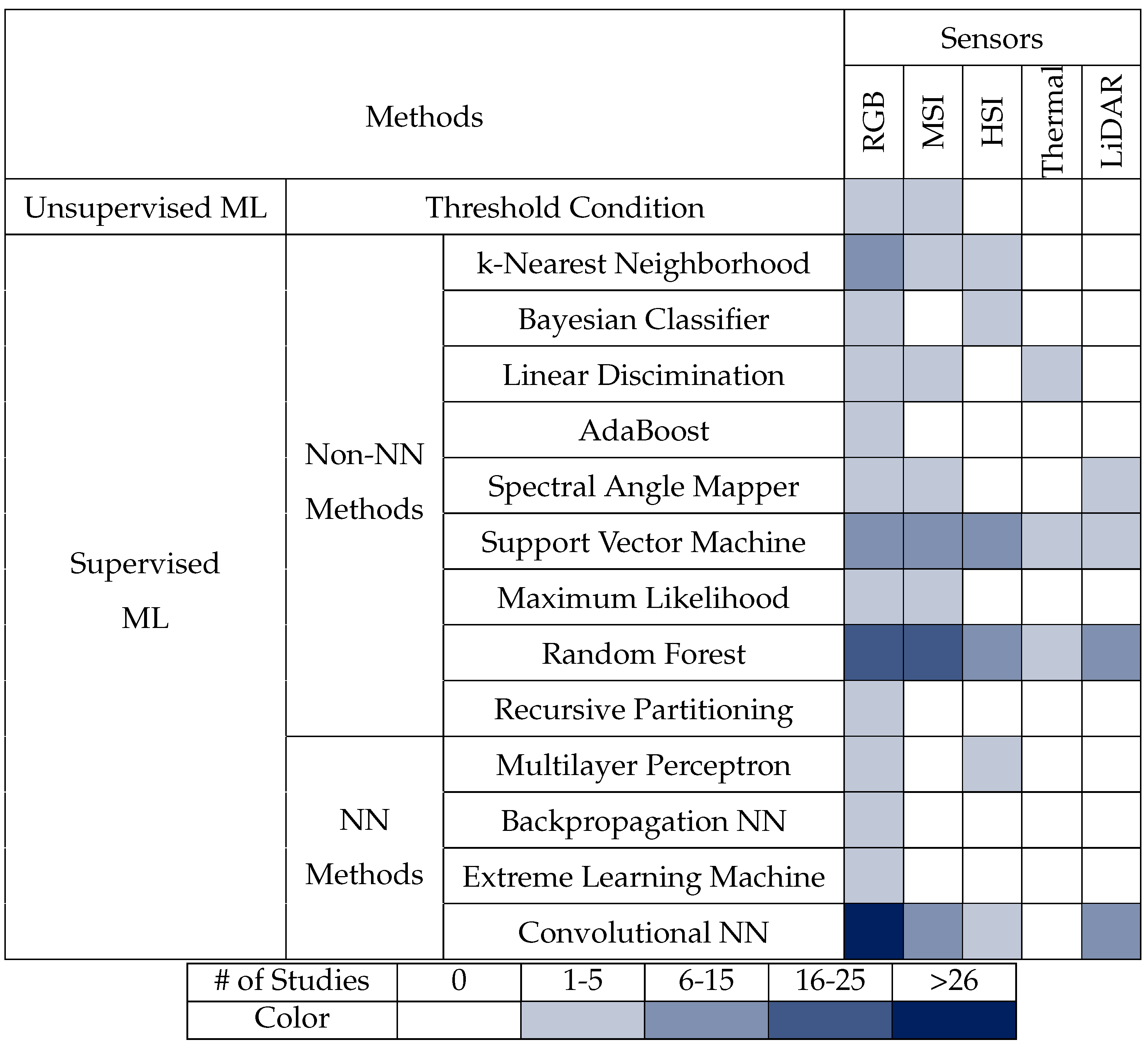

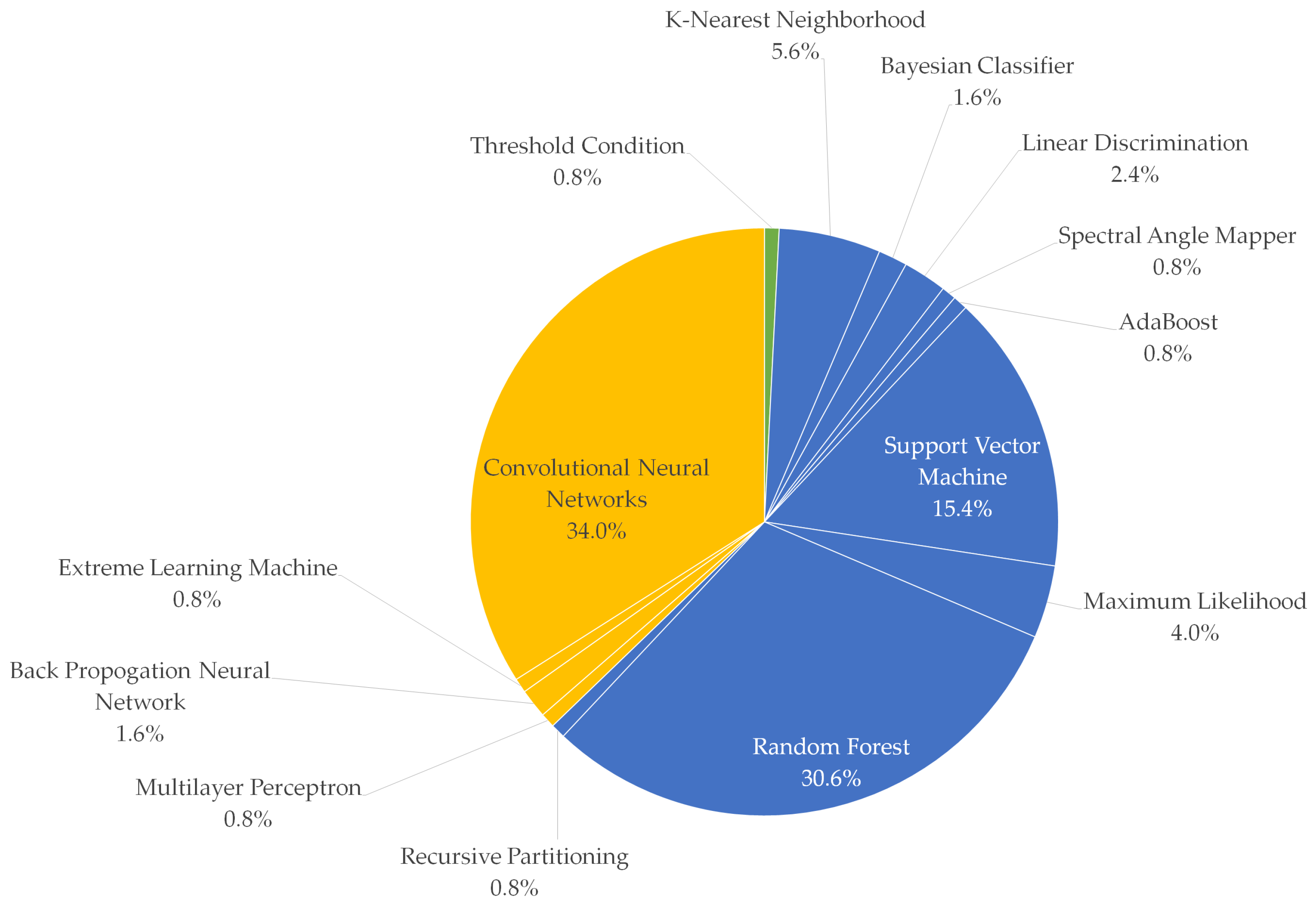

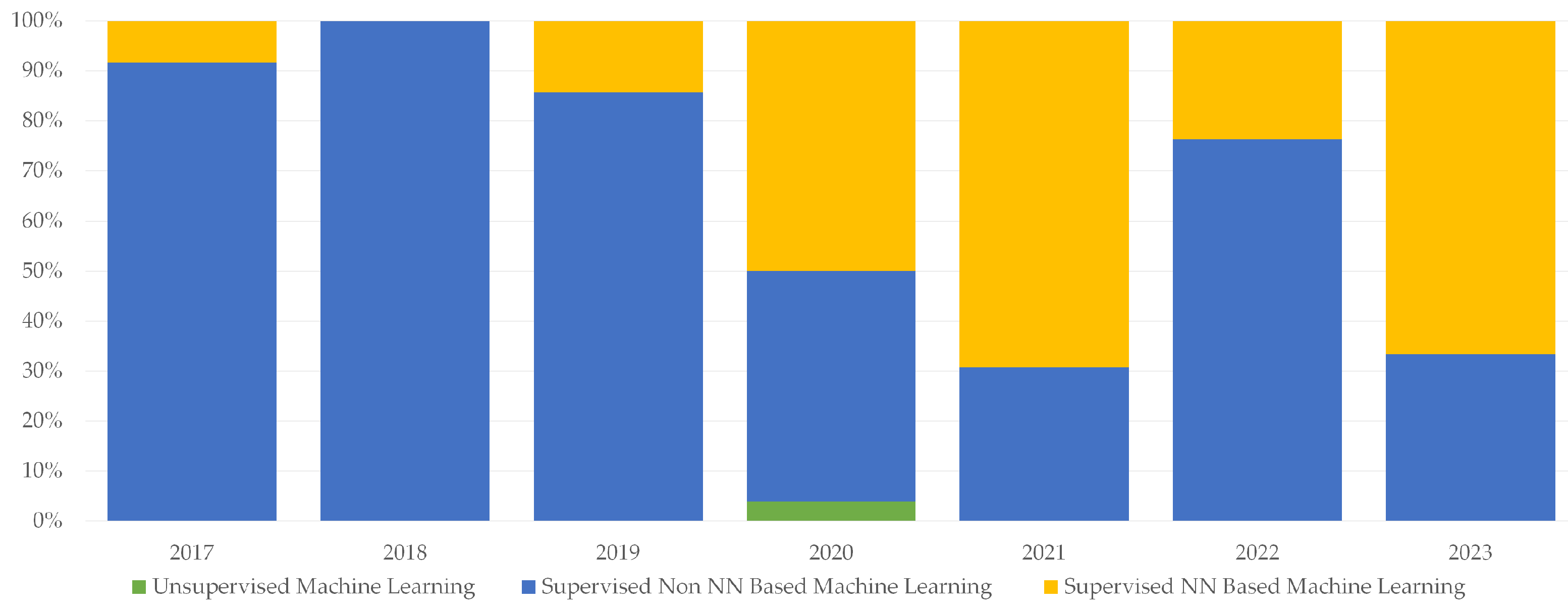

5. Image Processing and Machine Learning

1. Select N random samples from the training dataset;

2. Build a decision tree for each sample;

3. Choose the number of trees in the algorithm and repeat steps 1 and 2;

4. In the prediction phase, each tree in the forest predicts the response. The final prediction is obtained by averaging the predictions of all the trees (regression) or by taking a majority vote among them (classification).
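The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in any of the reviewed papers: each "tree" is reduced to a depth-1 regression tree (a stump), no random feature subset is drawn at each split, and the final prediction is the plain average over trees. All function names (`fit_stump`, `fit_forest`, `predict_forest`) and the toy data are hypothetical.

```python
import random
from statistics import mean


def fit_stump(X, y):
    """Fit a depth-1 regression tree: the single split with the lowest squared error."""
    best = None
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            left = [t for row, t in zip(X, y) if row[j] <= threshold]
            right = [t for row, t in zip(X, y) if row[j] > threshold]
            if not left or not right:
                continue  # a split must leave samples on both sides
            lm, rm = mean(left), mean(right)
            sse = sum((t - lm) ** 2 for t in left) + sum((t - rm) ** 2 for t in right)
            if best is None or sse < best[0]:
                best = (sse, j, threshold, lm, rm)
    if best is None:
        # No valid split (all rows identical): always predict the mean response.
        m = mean(y)
        return (0, float("inf"), m, m)
    return best[1:]


def predict_stump(stump, row):
    j, threshold, left_value, right_value = stump
    return left_value if row[j] <= threshold else right_value


def fit_forest(X, y, n_trees=25, seed=0):
    """Steps 1 to 3: draw a bootstrap sample and fit one tree on it, n_trees times."""
    rng = random.Random(seed)
    n = len(X)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sampling with replacement
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return forest


def predict_forest(forest, row):
    """Step 4: average the predictions of all trees (regression case)."""
    return mean(predict_stump(stump, row) for stump in forest)


# Toy data (assumed for illustration): one feature, two clearly separated groups.
X_toy = [[float(h)] for h in (1, 2, 3, 4, 5, 21, 22, 23, 24, 25)]
y_toy = [0.0] * 5 + [5.0] * 5
toy_forest = fit_forest(X_toy, y_toy, n_trees=25, seed=0)
low_prediction = predict_forest(toy_forest, [1.0])
high_prediction = predict_forest(toy_forest, [25.0])
```

For classification, the averaging in `predict_forest` would be replaced by a majority vote over the class labels predicted by the individual trees.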

- Forest type classification: used to classify different types of forests based on satellite or aerial imagery [19];

6. Conclusions

- 3D Information: the use of 3D information such as LiDAR data to extract tree crowns and stems has proven beneficial, aiding segmentation and classification tasks and enabling a more in-depth analysis of forest structure.

- Multi-modal data: the use of multi-modal data, such as LiDAR, hyperspectral, and thermal imagery in conjunction with visible light imagery, has been growing extensively to improve segmentation and classification results.

- AI methods: Convolutional Neural Networks (CNNs) and other deep learning architectures are increasingly used for image segmentation and classification tasks in forestry. These methods can achieve high accuracy in identifying and segmenting trees in UAV images. However, this trend is at an early stage, and networks tailored to specific forestry tasks are still missing.

- Active learning: With active learning methods, the model learns from human input to improve the segmentation and classification results. An end-to-end architecture is therefore not necessarily the best approach: human intervention at certain levels (here, at certain NN layers), such as hand-crafting features and feeding them to the NN architecture, can improve the performance of the model significantly.

- Transfer learning: Transfer learning uses pre-trained models to reduce the amount of labeled data needed for a specific task, which is especially useful in forestry, where data may be limited. Additionally, since the development of NN methods, and of machine learning models in general, is an experimental endeavour, reusing existing knowledge from other applications can reduce the development time substantially.

- Data Augmentation: Data augmentation is a technique in machine learning and computer vision in which new training data are generated by applying transformations to the existing training data. These transformations include cropping, rotating, scaling, flipping, adding noise, and changing the color. Increasing the size and diversity of the training dataset can improve the performance of the machine learning model. This pre-processing technique is beneficial in forestry applications because UAV-borne data are scarce.
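As an illustration of the data augmentation item above, the following minimal sketch generates new samples from a single image tile using flipping, rotation, cropping, and additive noise. It assumes a single-band image stored as a nested list of integer pixel values in the range 0 to 255; the function names and parameter values are hypothetical and are not taken from any of the reviewed papers.

```python
import random


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90(image):
    """Rotate the image clockwise by 90 degrees."""
    return [list(col) for col in zip(*image[::-1])]


def crop(image, top, left, height, width):
    """Cut out a height x width window whose upper-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def add_noise(image, rng, sigma=2.0, lo=0, hi=255):
    """Add Gaussian noise to every pixel and clamp the result to [lo, hi]."""
    return [[min(hi, max(lo, round(p + rng.gauss(0, sigma)))) for p in row]
            for row in image]


def augment(image, n_copies, seed=0):
    """Return n_copies randomly flipped, rotated, and noised variants of image."""
    rng = random.Random(seed)
    copies = []
    for _ in range(n_copies):
        new = image
        if rng.random() < 0.5:
            new = flip_horizontal(new)
        for _ in range(rng.randrange(4)):  # 0, 1, 2, or 3 quarter turns
            new = rotate_90(new)
        copies.append(add_noise(new, rng))
    return copies


# Toy 2 x 3 tile (assumed values) expanded into six augmented variants.
tile = [[10, 20, 30], [40, 50, 60]]
augmented_tiles = augment(tile, n_copies=6, seed=1)
```

For segmentation tasks, the geometric transformations (flip, rotation, crop) have to be applied identically to the label mask so that image and annotation stay aligned, whereas noise is applied to the image only.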

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| ABA | Area-based Approach |

| AdaBoost | Adaptive Boosting |

| AGB | Above Ground Biomass |

| ANN | Artificial Neural Network |

| ANOVA | Analysis of Variance |

| BP | Back Propagation |

| CA | Canopy Coverage Area |

| CHM | Canopy Height Model |

| CNN | Convolutional Neural Network |

| DBH | Diameter at Breast Height |

| DEM | Digital Elevation Model |

| DSM | Digital Surface Model |

| DTM | Digital Terrain Model |

| DWT | Discrete Wavelet Transform |

| ECOC | Error-Correcting Output Codes |

| EGB | Extreme Gradient Boosting |

| ELM | Extreme Learning Machine |

| FCM | Fuzzy C-Means |

| GMM | Gaussian Mixture Model |

| GPS | Global Positioning System |

| HDBSCAN | Hierarchical Density-Based Spatial Clustering of Applications with Noise |

| HSI | Hyperspectral Imaging |

| ISODATA | Iterative Self-Organizing Data Analysis Technique |

| k-NN | k-Nearest Neighbors |

| LAI | Leaf Area Index |

| LiDAR | Light Detection and Ranging |

| ML | Machine Learning |

| MLP | Multilayer Perceptron |

| MSI | Multispectral Imaging |

| NN | Neural Network |

| OB | Object-based |

| PB | Pixel-based |

| PCA | Principal Component Analysis |

| PCS | Point Cloud Segmentation |

| RADAR | Radio Detection And Ranging |

| RANSAC | Random Sample Consensus |

| RF | Random Forest |

| RGB | Red Green Blue |

| SAM | Spectral Angle Mapper |

| SfM | Structure from Motion |

| SNR | Signal-to-Noise Ratio |

| SVM | Support Vector Machine |

| UAV | Unmanned Aerial Vehicle |

| VDD | Voxel space Detection and Delineation |

| VT | Voronoi Tessellation |

Appendix A

| Methods | Papers |

|---|---|

| Structure from Motion (SfM) | [37] [RGB], [39] [RGB], [41] [RGB], [43] [RGB], [45] [RGB], [49] [RGB], [58] [RGB], [59] [RGB], [63] [RGB], [70] [RGB], [53] [RGB], [102] [RGB], [75] [RGB], [74] [RGB], [77] [RGB], [51] [MSI], [52] [MSI], [60] [MSI], [61] [MSI], [71] [MSI], [64] [MSI], [55] [MSI], [48] [BG+RE], [72] [RGB+NIR], [42] [RGB+NIR], [54] [RGB+NIR], [44] [RGB+NIR], [46] [RGB+MSI], [47] [RGB+MSI], [56] [RGB+MSI], [57] [RGB+MSI], [62] [RGB+MSI], [65] [RGB+MSI], [69] [RGB+MSI], [50] [RGB+LiDAR], [73] [RGB+LiDAR], [38] [RGB+LiDAR], [67] [RGB+LiDAR], [68] [RGB+MSI+Thermal], [66] [RGB+MSI+LiDAR], [76] [RGB+MSI+LiDAR], [40] [MSI+HSI+LiDAR] |

| LiDAR | [78] [LiDAR], [79] [LiDAR], [97] [LiDAR], [96] [LiDAR], [82] [LiDAR], [83] [LiDAR], [84] [LiDAR], [85] [LiDAR], [86] [LiDAR], [87] [LiDAR], [88] [LiDAR], [89] [LiDAR], [90] [LiDAR], [91] [LiDAR], [92] [LiDAR], [93] [LiDAR], [94] [LiDAR], [95] [LiDAR], [80] [RGB+HSI+Thermal+LiDAR], [81] [RGB+MSI+HSI+Thermal+LiDAR] |

| Methods | Papers | ||

|---|---|---|---|

| Manual Feature Extraction | Structure from Motion (SfM) | [39] [RGB], [41] [RGB], [43] [RGB], [42] [RGB+NIR], [65] [RGB+MSI], [69] [RGB+MSI], [98] [RGB+Thermal] | |

| LiDAR | [92] [LiDAR], [90] [LiDAR], [80] [RGB+HSI+Thermal+LiDAR] | ||

| Statistical Analysis | Analysis of Variance (ANOVA) | [47] [RGB+MSI], [62] [RGB+MSI], [64] [MSI], [80] [RGB+HSI+Thermal+LiDAR], [99] [LiDAR], [122] [LiDAR] | |

| Tukey’s Range Test | [47] [RGB+MSI], [62] [RGB+MSI], [64] [MSI], [80] [RGB+HSI+Thermal+LiDAR], [99] [LiDAR], [122] [LiDAR] | ||

| Shapiro Wilk Test | [43] [RGB], [64] [MSI] | ||

| Bartlett’s Test | [64] [MSI] | ||

| Mann-Whitney U Test | [71] [MSI] | ||

| Kruskal-Wallis Test | [71] [MSI] | ||

| Unsupervised Machine Learning | Threshold Condition | [100] [LiDAR], [87] [LiDAR], [38] [RGB+LiDAR] | |

| Clustering-Based Segmentation | k-Means | [96] [LiDAR] | |

| Euclidean Distance | [92] [LiDAR] | ||

| Principal Component Analysis (PCA) | [36] [HSI], [82] [LiDAR], [84] [LiDAR], [85] [LiDAR] | ||

| Fitting Algorithms | Least square fitting | [79] [LiDAR], [86] [LiDAR], [101] [LiDAR] | |

| Hough Transform | [79] [LiDAR], [83] [LiDAR], [85] [LiDAR], [101] [LiDAR] | ||

| RANSAC | [102] [RGB], [101] [LiDAR], [96] [LiDAR], [50] [RGB+LiDAR] | ||

| Point Cloud Segmentation | [97] [LiDAR] | ||

| Supervised Machine Learning | k-Nearest Neighbors (k-NN) | [95] [LiDAR] | |

| Regression Models | [37] [RGB], [41] [RGB], [43] [RGB], [102] [RGB], [42] [RGB+NIR], [89] [LiDAR], [96] [LiDAR], [104] [MSI], [103] [RGB+LiDAR] | ||

| Support Vector Regression (SVR) | [89] [LiDAR], [91] [LiDAR] | ||

| Random Forest (RF) | [82] [LiDAR], [89] [LiDAR], [95] [LiDAR], [91] [LiDAR], [96] [LiDAR] | ||

| Extreme Gradient Boosting | [91] [LiDAR] | ||

| Multilayer Perceptron (MLP) | [91] [LiDAR] | ||

| Methods | Papers | ||

|---|---|---|---|

| Manual Delineation | [37] [RGB], [109] [RGB], [110] [RGB], [111] [RGB], [115] [RGB], [60] [MSI], [55] [MSI], [108] [HSI], [106] [RGB+NIR], [56] [RGB+MSI], [107] [RGB+MSI], [114] [RGB+MSI], [113] [HSI+LiDAR], [112] [RGB+HSI+LiDAR] | ||

| Unsupervised Machine Learning | Local Maxima | [45] [RGB], [53] [RGB], [63] [RGB], [70] [RGB], [117] [RGB], [120] [RGB], [77] [RGB], [82] [LiDAR], [96] [LiDAR], [99] [LiDAR], [100] [LiDAR], [121] [LiDAR], [118] [LiDAR], [87] [LiDAR], [91] [LiDAR], [93] [LiDAR], [122] [LiDAR], [116] [RGB+MSI], [56] [RGB+MSI], [50] [RGB+LiDAR], [67] [RGB+LiDAR], [73] [RGB+LiDAR], [119] [HSI+LiDAR], [76] [RGB+MSI+LiDAR] | |

| Threshold Condition | [49] [RGB], [63] [RGB], [124] [RGB], [117] [RGB], [125] [RGB], [102] [RGB], [120] [RGB], [48] [BG+RE], [151] [BG+NIR], [72] [RGB+NIR], [61] [MSI], [64] [MSI], [123] [MSI], [127] [MSI], [62] [RGB+MSI], [68] [RGB+MSI+Thermal], [108] [HSI], [96] [LiDAR], [99] [LiDAR], [73] [RGB+LiDAR], [126] [RGB+LiDAR] | ||

| Matched Filtering | [40] [MSI+HSI+LiDAR] | ||

| Error-Correcting Output Codes (ECOC) | [124] [RGB] | ||

| Super-pixel Segmentation | [128] [RGB+MSI] | ||

| Edge Detection-Based Segmentation | [124] [RGB], [130] [RGB], [46] [RGB+MSI], [129] [RGB+HSI] | ||

| Watershed Segmentation | [39] [RGB], [43] [RGB], [59] [RGB], [63] [RGB], [77] [RGB], [71] [MSI], [134] [HSI], [78] [LiDAR], [82] [LiDAR], [93] [LiDAR], [132] [LiDAR], [118] [LiDAR], [122] [LiDAR], [56] [RGB+MSI], [50] [RGB+LiDAR], [67] [RGB+LiDAR], [73] [RGB+LiDAR], [131] [HSI+LiDAR], [112] [RGB+HSI+LiDAR], [133] [RGB+HSI+LiDAR], [81] [RGB+MSI+HSI+Thermal+LiDAR] | ||

| Multiresolution Segmentation | [58] [RGB], [136] [RGB], [138] [RGB], [139] [RGB], [48] [BG+RE], [106] [RGB+NIR], [55] [MSI], [137] [MSI], [135] [HSI], [128] [RGB+MSI] | ||

| Region Growing-Based Segmentation | [45] [RGB], [49] [RGB], [70] [RGB], [48] [BG+RE], [71] [MSI], [118] [LiDAR], [79] [LiDAR], [95] [LiDAR], [99] [LiDAR], [73] [RGB+LiDAR], [112] [RGB+HSI+LiDAR] | ||

| Gaussian Mixture Model | [117] [RGB] | ||

| Voronoi Tessellation (VT) | [118] [LiDAR] | ||

| Discrete Wavelet Transform | [123] [MSI], [124] [RGB] | ||

| Clustering-Based Segmentation | HDBSCAN | [84] [LiDAR] | |

| ISODATA | [106] [RGB+NIR] | ||

| k-Means | [65] [RGB+MSI], [140] [RGB], [125] [RGB] | ||

| Fuzzy C-Means (FCM) | [125] [RGB] | ||

| Mean-Shift | [39] [RGB], [141] [RGB], [51] [MSI], [100] [LiDAR], [88] [LiDAR], [57] [RGB+MSI], [114] [RGB+MSI] | ||

| Euclidean Distance | [79] [LiDAR], [69] [RGB+MSI], [131] [HSI+LiDAR] | ||

| Principal Component Analysis (PCA) | [51] [MSI], [79] [LiDAR], [54] [RGB+NIR] | ||

| Fitting Algorithm | Least Square Fitting | [78] [LiDAR] | |

| Hough Transform | [78] [LiDAR], [140] [RGB] | ||

| Point Cloud Segmentation | [132] [LiDAR], [90] [LiDAR], [93] [LiDAR], [99] [LiDAR], [97] [LiDAR], [121] [LiDAR], [46] [RGB+MSI], [47] [RGB+MSI], [131] [HSI+LiDAR], [144] [RGB+MSI+LiDAR], [112] [RGB+HSI+LiDAR] | ||

| Layer Stacking | [93] [LiDAR], [132] [LiDAR] | ||

| Voxel space detection and delineation (VDD) | [121] [LiDAR] | ||

| Supervised Machine Learning | k-Nearest Neighbors (k-NN) | [136] [RGB], [65] [RGB+MSI], [69] [RGB+MSI] | |

| Linear Discrimination | [68] [RGB+MSI+Thermal] | ||

| Support Vector Machines (SVM) | [136] [RGB] | ||

| Gradient Boosting | [117] [RGB], [104] [MSI] | ||

| Methods | Papers | ||

|---|---|---|---|

| Unsupervised Machine Learning | Threshold Condition | [62] [RGB+MSI] | |

| Supervised Machine Learning | Non-NN Methods | Bayesian Classifier | [146] [RGB], [33] [RGB+HSI] |

| k-Nearest Neighbors (k-NN) | [49] [RGB], [145] [RGB], [138] [RGB], [130] [RGB], [137] [MSI], [32] [RGB+HSI], [33] [RGB+HSI] | ||

| Linear Discrimination | [39] [RGB], [114] [RGB+MSI], [68] [RGB+MSI+Thermal] | ||

| AdaBoost | [147] [RGB] | ||

| Spectral Angle Mapper (SAM) | [144] [RGB+MSI+LiDAR] | ||

| Support Vector Machine (SVM) | [49] [RGB], [58] [RGB], [136] [RGB], [147] [RGB], [141] [RGB], [149] [RGB], [130] [RGB], [146] [RGB], [123] [MSI], [137] [MSI], [150] [MSI], [135] [HSI], [134] [HSI], [148] [HSI], [44] [RGB+NIR], [57] [RGB+MSI], [34] [RGB+HSI], [129] [RGB+HSI], [81] [RGB+MSI+HSI+Thermal+LiDAR] | ||

| Maximum Likelihood | [149] [RGB], [44] [RGB+NIR], [54] [RGB+NIR], [106] [RGB+NIR], [57] [RGB+MSI] | ||

| Decision Trees and Random Forest (RF) | [59] [RGB], [109] [RGB], [49] [RGB], [141] [RGB], [149] [RGB], [139] [RGB], [130] [RGB], [146] [RGB], [153] [GR+NIR], [51] [MSI], [55] [MSI], [152] [MSI], [123] [MSI], [137] [MSI], [150] [MSI], [154] [MSI], [127] [MSI], [108] [HSI], [135] [HSI], [151] [BG+NIR], [106] [RGB+NIR], [46] [RGB+MSI], [47] [RGB+MSI], [107] [RGB+MSI], [128] [RGB+MSI], [32] [RGB+HSI], [33] [RGB+HSI], [100] [LiDAR], [96] [LiDAR], [97] [LiDAR], [126] [RGB+LiDAR], [103] [RGB+LiDAR], [113] [HSI+LiDAR], [131] [HSI+LiDAR], [119] [HSI+LiDAR], [133] [RGB+HSI+LiDAR], [40] [MSI+HSI+LiDAR], [81] [RGB+MSI+HSI+Thermal+LiDAR] | ||

| Recursive Partitioning | [49] [RGB] | ||

| NN Methods | Multilayer Perceptron (MLP) | [33] [RGB+HSI] | |

| Back Propagation Neural Network (BP NN) | [145] [RGB], [147] [RGB] | ||

| Extreme Learning Machine (ELM) | [155] [RGB] | ||

| Convolutional Neural Networks (CNN) | [58] [RGB], [35] [RGB], [170] [RGB], [171] [RGB], [163] [RGB], [110] [RGB], [111] [RGB], [117] [RGB], [149] [RGB], [165] [RGB], [166] [RGB], [136] [RGB], [145] [RGB], [147] [RGB], [168] [RGB], [156] [RGB], [161] [RGB], [173] [RGB], [19] [RGB], [174] [RGB], [115] [RGB], [167] [RGB], [175] [RGB], [60] [MSI], [61] [MSI], [172] [MSI], [159] [MSI], [160] [MSI], [104] [MSI], [36] [HSI], [162] [HSI], [94] [LiDAR], [176] [LiDAR], [158] [LiDAR], [169] [RGB+MSI], [56] [RGB+MSI], [177] [RGB+MSI], [128] [RGB+MSI], [164] [RGB+LiDAR], [126] [RGB+LiDAR], [157] [MSI+LiDAR], [66] [RGB+MSI+LiDAR] | ||

References

1. Abad-Segura, E.; González-Zamar, M.D.; Vázquez-Cano, E.; López-Meneses, E. Remote Sensing Applied in Forest Management to Optimize Ecosystem Services: Advances in Research. Forests 2020, 11, 969.

2. Gao, Y.; Skutsch, M.; Paneque-Gálvez, J.; Ghilardi, A. Remote sensing of forest degradation: A review. Environ. Res. Lett. 2020, 15, 103001.

3. Camarretta, N.; Harrison, P.A.; Bailey, T.; Potts, B.; Lucieer, A.; Davidson, N.; Hunt, M. Monitoring forest structure to guide adaptive management of forest restoration: A review of remote sensing approaches. New For. 2020, 51, 573–596.

4. Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442.

5. Estoque, R. A Review of the Sustainability Concept and the State of SDG Monitoring Using Remote Sensing. Remote Sens. 2020, 12, 1770.

6. Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

7. Toro, F.G.; Tsourdos, A. (Eds.) UAV Sensors for Environmental Monitoring; MDPI: Basel, Switzerland, 2018.

8. Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.L.L.; Moritake, K.; Cabezas, M. Deep Learning in Forestry Using UAV-Acquired RGB Data: A Practical Review. Remote Sens. 2021, 13, 2837.

9. Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110.

10. Grybas, H.; Congalton, R.G. Evaluating the Impacts of Flying Height and Forward Overlap on Tree Height Estimates Using Unmanned Aerial Systems. Forests 2022, 13, 1462.

11. Jiménez-Jiménez, S.I.; Ojeda-Bustamante, W.; Marcial-Pablo, M.; Enciso, J. Digital Terrain Models Generated with Low-Cost UAV Photogrammetry: Methodology and Accuracy. ISPRS Int. J. Geo-Inf. 2021, 10, 285.

12. Lewicki, T.; Liu, K. Multimodal Wildfire Surveillance with UAV. In Proceedings of the 2021 IEEE Global Communications Conference (GLOBECOM), Madrid, Spain, 7–11 December 2021; pp. 1–6.

13. Casagli, N.; Frodella, W.; Morelli, S.; Tofani, V.; Ciampalini, A.; Intrieri, E.; Raspini, F.; Rossi, G.; Tanteri, L.; Lu, P. Spaceborne, UAV and ground-based remote sensing techniques for landslide mapping, monitoring and early warning. Geoenviron. Disasters 2017, 4, 9.

14. Ecke, S.; Dempewolf, J.; Frey, J.; Schwaller, A.; Endres, E.; Klemmt, H.J.; Tiede, D.; Seifert, T. UAV-Based Forest Health Monitoring: A Systematic Review. Remote Sens. 2022, 14, 3205.

15. Berra, E.F.; Peppa, M.V. Advances and Challenges of UAV SfM MVS Photogrammetry and Remote Sensing: Short Review. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLII-3/W12-2020, 267–272.

16. Holzinger, A.; Saranti, A.; Angerschmid, A.; Retzlaff, C.O.; Gronauer, A.; Pejakovic, V.; Medel-Jimenez, F.; Krexner, T.; Gollob, C.; Stampfer, K. Digital Transformation in Smart Farm and Forest Operations Needs Human-Centered AI: Challenges and Future Directions. Sensors 2022, 22, 3043.

17. Pashaei, M.; Kamangir, H.; Starek, M.J.; Tissot, P. Review and evaluation of deep learning architectures for efficient land cover mapping with UAS hyper-spatial imagery: A case study over a wetland. Remote Sens. 2020, 12, 959.

18. Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

19. Haq, M.A.; Rahaman, G.; Baral, P.; Ghosh, A. Deep Learning Based Supervised Image Classification Using UAV Images for Forest Areas Classification. J. Indian Soc. Remote Sens. 2021, 49, 601–606.

20. Eroshenkova, D.A.; Terekhov, V.I.; Khusnetdinov, D.R.; Chumachenko, S.I. Automated Determination of Forest-Vegetation Characteristics with the Use of a Neural Network of Deep Learning. In Automated Determination of Forest-Vegetation Characteristics with the Use of a Neural Network of Deep Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 295–302.

21. Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

22. Pádua, L.; Hruška, J.; Bessa, J.; Adão, T.; Martins, L.; Gonçalves, J.; Peres, E.; Sousa, A.; Castro, J.; Sousa, J. Multi-Temporal Analysis of Forestry and Coastal Environments Using UASs. Remote Sens. 2017, 10, 24.

23. Iglhaut, J.; Cabo, C.; Puliti, S.; Piermattei, L.; O’Connor, J.; Rosette, J. Structure from Motion Photogrammetry in Forestry: A Review. Curr. For. Rep. 2019, 5, 155–168.

24. Dainelli, R.; Toscano, P.; Di Gennaro, S.F.; Matese, A. Recent Advances in Unmanned Aerial Vehicle Forest Remote Sensing—A Systematic Review. Part I: A General Framework. Forests 2021, 12, 327.

25. Dainelli, R.; Toscano, P.; Di Gennaro, S.F.; Matese, A. Recent Advances in Unmanned Aerial Vehicles Forest Remote Sensing—A Systematic Review. Part II: Research Applications. Forests 2021, 12, 397.

26. Michałowska, M.; Rapiński, J. A review of tree species classification based on airborne lidar data and applied classifiers. Remote Sens. 2021, 13, 353.

27. van Eck, N.J.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538.

28. Noack, A. Energy Models for Graph Clustering. J. Graph Algorithms Appl. 2007, 11, 453–480.

29. Ran, L.; Zhang, Y.; Wei, W.; Zhang, Q. A Hyperspectral Image Classification Framework with Spatial Pixel Pair Features. Sensors 2017, 17, 2421.

30. Mateen, M.; Wen, J.; Nasrullah; Azeem, M.A. The Role of Hyperspectral Imaging: A Literature Review. Int. J. Adv. Comput. Sci. Appl. 2018, 9.

31. Xue, J.; Su, B. Significant Remote Sensing Vegetation Indices: A Review of Developments and Applications. J. Sens. 2017, 2017, 1353691.

32. Tuominen, S.; Näsi, R.; Honkavaara, E.; Balazs, A.; Hakala, T.; Viljanen, N.; Pölönen, I.; Saari, H.; Ojanen, H. Assessment of Classifiers and Remote Sensing Features of Hyperspectral Imagery and Stereo-Photogrammetric Point Clouds for Recognition of Tree Species in a Forest Area of High Species Diversity. Remote Sens. 2018, 10, 714.

33. Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.; et al. Individual Tree Detection and Classification with UAV-Based Photogrammetric Point Clouds and Hyperspectral Imaging. Remote Sens. 2017, 9, 185.

34. Sothe, C.; Dalponte, M.; de Almeida, C.M.; Schimalski, M.B.; Lima, C.L.; Liesenberg, V.; Miyoshi, G.T.; Tommaselli, A.M.G. Tree Species Classification in a Highly Diverse Subtropical Forest Integrating UAV-Based Photogrammetric Point Cloud and Hyperspectral Data. Remote Sens. 2019, 11, 1338.

35. Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping forest tree species in high resolution UAV-based RGB-imagery by means of convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215.

36. Miyoshi, G.T.; Arruda, M.d.S.; Osco, L.P.; Marcato Junior, J.; Gonçalves, D.N.; Imai, N.N.; Tommaselli, A.M.G.; Honkavaara, E.; Gonçalves, W.N. A Novel Deep Learning Method to Identify Single Tree Species in UAV-Based Hyperspectral Images. Remote Sens. 2020, 12, 1294.

37. Hird, J.; Montaghi, A.; McDermid, G.; Kariyeva, J.; Moorman, B.; Nielsen, S.; McIntosh, A. Use of Unmanned Aerial Vehicles for Monitoring Recovery of Forest Vegetation on Petroleum Well Sites. Remote Sens. 2017, 9, 413.

38. Wallace, L.; Lucieer, A.; Malenovský, Z.; Turner, D.; Vopěnka, P. Assessment of Forest Structure Using Two UAV Techniques: A Comparison of Airborne Laser Scanning and Structure from Motion (SfM) Point Clouds. Forests 2016, 7, 62.

39. Alonzo, M.; Andersen, H.E.; Morton, D.; Cook, B. Quantifying Boreal Forest Structure and Composition Using UAV Structure from Motion. Forests 2018, 9, 119.

40. Sankey, T.; Donager, J.; McVay, J.; Sankey, J.B. UAV lidar and hyperspectral fusion for forest monitoring in the southwestern USA. Remote Sens. Environ. 2017, 195, 30–43.

41. Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276.

42. Giannetti, F.; Chirici, G.; Gobakken, T.; Næsset, E.; Travaglini, D.; Puliti, S. A new approach with DTM-independent metrics for forest growing stock prediction using UAV photogrammetric data. Remote Sens. Environ. 2018, 213, 195–205.

43. Navarro, A.; Young, M.; Allan, B.; Carnell, P.; Macreadie, P.; Ierodiaconou, D. The application of Unmanned Aerial Vehicles (UAVs) to estimate above-ground biomass of mangrove ecosystems. Remote Sens. Environ. 2020, 242, 111747.

44. Müllerová, J.; Bartaloš, T.; Brůna, J.; Dvořák, P.; Vítková, M. Unmanned aircraft in nature conservation: An example from plant invasions. Int. J. Remote Sens. 2017, 38, 2177–2198.

45. Miraki, M.; Sohrabi, H.; Fatehi, P.; Kneubuehler, M. Individual tree crown delineation from high-resolution UAV images in broadleaf forest. Ecol. Inform. 2021, 61, 101207.

46. Xu, Z.; Shen, X.; Cao, L.; Coops, N.C.; Goodbody, T.R.; Zhong, T.; Zhao, W.; Sun, Q.; Ba, S.; Zhang, Z.; et al. Tree species classification using UAS-based digital aerial photogrammetry point clouds and multispectral imageries in subtropical natural forests. Int. J. Appl. Earth Obs. Geoinf. 2020, 92, 102173.

47. Liu, K.; Wang, A.; Zhang, S.; Zhu, Z.; Bi, Y.; Wang, Y.; Du, X. Tree species diversity mapping using UAS-based digital aerial photogrammetry point clouds and multispectral imageries in a subtropical forest invaded by moso bamboo (Phyllostachys edulis). Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102587.

48. Baena, S.; Moat, J.; Whaley, O.; Boyd, D.S. Identifying species from the air: UAVs and the very high resolution challenge for plant conservation. PLoS ONE 2017, 12, e0188714.

49. Tagle Casapia, X.; Falen, L.; Bartholomeus, H.; Cárdenas, R.; Flores, G.; Herold, M.; Honorio Coronado, E.N.; Baker, T.R. Identifying and Quantifying the Abundance of Economically Important Palms in Tropical Moist Forest Using UAV Imagery. Remote Sens. 2019, 12, 9.

50. Yrttimaa, T.; Saarinen, N.; Kankare, V.; Viljanen, N.; Hynynen, J.; Huuskonen, S.; Holopainen, M.; Hyyppä, J.; Honkavaara, E.; Vastaranta, M. Multisensorial Close-Range Sensing Generates Benefits for Characterization of Managed Scots Pine (Pinus sylvestris L.) Stands. ISPRS Int. J. Geo-Inf. 2020, 9, 309.

51. Kampen, M.; Vienna, L.S.; Immitzer, M.; Vienna, L.S.; Lederbauer, S.; Jan; Mund, P.; Immitzer, M. UAV-Based Multispectral Data for Tree Species Classification and Tree Vitality Analysis. Dreilandertagung der DGPF, der OVG und der SGPF in Wien, Osterreich Publikationen der DGPF, Band 28 2019, 10, 623–639.

52. Minařík, R.; Langhammer, J. Use of a multispectral UAV photogrammetry for detection and tracking of forest disturbance dynamics. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2016, 41, 711–718.

53. Mohan, M.; Silva, C.; Klauberg, C.; Jat, P.; Catts, G.; Cardil, A.; Hudak, A.; Dia, M. Individual Tree Detection from Unmanned Aerial Vehicle (UAV) Derived Canopy Height Model in an Open Canopy Mixed Conifer Forest. Forests 2017, 8, 340.

54. Gini, R.; Sona, G.; Ronchetti, G.; Passoni, D.; Pinto, L. Improving Tree Species Classification Using UAS Multispectral Images and Texture Measures. ISPRS Int. J. Geo-Inf. 2018, 7, 315.

55. Franklin, S.E.; Ahmed, O.S. Deciduous tree species classification using object-based analysis and machine learning with unmanned aerial vehicle multispectral data. Int. J. Remote Sens. 2018, 39, 5236–5245.

56. Yu, K.; Hao, Z.; Post, C.J.; Mikhailova, E.A.; Lin, L.; Zhao, G.; Tian, S.; Liu, J. Comparison of Classical Methods and Mask R-CNN for Automatic Tree Detection and Mapping Using UAV Imagery. Remote Sens. 2022, 14, 295.

57. Šiljeg, A.; Panđa, L.; Domazetović, F.; Marić, I.; Gašparović, M.; Borisov, M.; Milošević, R. Comparative Assessment of Pixel and Object-Based Approaches for Mapping of Olive Tree Crowns Based on UAV Multispectral Imagery. Remote Sens. 2022, 14, 757.

58. Onishi, M.; Ise, T. Explainable identification and mapping of trees using UAV RGB image and deep learning. Sci. Rep. 2021, 11, 903.

59. Wang, X.; Wang, Y.; Zhou, C.; Yin, L.; Feng, X. Urban forest monitoring based on multiple features at the single tree scale by UAV. Urban For. Urban Green. 2021, 58, 126958.

60. Hao, Z.; Lin, L.; Post, C.J.; Mikhailova, E.A.; Li, M.; Chen, Y.; Yu, K.; Liu, J. Automated tree-crown and height detection in a young forest plantation using mask region-based convolutional neural network (Mask R-CNN). ISPRS J. Photogramm. Remote Sens. 2021, 178, 112–123.

61. Kolanuvada, S.R.; Ilango, K.K. Automatic Extraction of Tree Crown for the Estimation of Biomass from UAV Imagery Using Neural Networks. J. Indian Soc. Remote Sens. 2021, 49, 651–658.

62. Belmonte, A.; Sankey, T.; Biederman, J.A.; Bradford, J.; Goetz, S.J.; Kolb, T.; Woolley, T. UAV-derived estimates of forest structure to inform ponderosa pine forest restoration. Remote Sens. Ecol. Conserv. 2020, 6, 181–197.

63. Dong, X.; Zhang, Z.; Yu, R.; Tian, Q.; Zhu, X. Extraction of Information about Individual Trees from High-Spatial-Resolution UAV-Acquired Images of an Orchard. Remote Sens. 2020, 12, 133.

64. Mesas-Carrascosa, F.J.; de Castro, A.I.; Torres-Sánchez, J.; Triviño-Tarradas, P.; Jiménez-Brenes, F.M.; García-Ferrer, A.; López-Granados, F. Classification of 3D Point Clouds Using Color Vegetation Indices for Precision Viticulture and Digitizing Applications. Remote Sens. 2020, 12, 317.

65. Jurado, J.M.; Ortega, L.; Cubillas, J.J.; Feito, F.R. Multispectral Mapping on 3D Models and Multi-Temporal Monitoring for Individual Characterization of Olive Trees. Remote Sens. 2020, 12, 1106.

66. Pleșoianu, A.I.; Stupariu, M.S.; Șandric, I.; Pătru-Stupariu, I.; Drăguț, L. Individual Tree-Crown Detection and Species Classification in Very High-Resolution Remote Sensing Imagery Using a Deep Learning Ensemble Model. Remote Sens. 2020, 12, 2426.

67. Gu, J.; Grybas, H.; Congalton, R.G. A Comparison of Forest Tree Crown Delineation from Unmanned Aerial Imagery Using Canopy Height Models vs. Spectral Lightness. Forests 2020, 11, 605.

68. Di Nisio, A.; Adamo, F.; Acciani, G.; Attivissimo, F. Fast Detection of Olive Trees Affected by Xylella Fastidiosa from UAVs Using Multispectral Imaging. Sensors 2020, 20, 4915.

69. Jurado, J.; Ramos, M.; Enríquez, C.; Feito, F. The Impact of Canopy Reflectance on the 3D Structure of Individual Trees in a Mediterranean Forest. Remote Sens. 2020, 12, 1430.

70. Gu, J.; Congalton, R.G. Individual Tree Crown Delineation From UAS Imagery Based on Region Growing by Over-Segments With a Competitive Mechanism. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

71. Minařík, R.; Langhammer, J.; Lendzioch, T. Automatic Tree Crown Extraction from UAS Multispectral Imagery for the Detection of Bark Beetle Disturbance in Mixed Forests. Remote Sens. 2020, 12, 4081.

72. Belcore, E.; Wawrzaszek, A.; Wozniak, E.; Grasso, N.; Piras, M. Individual Tree Detection from UAV Imagery Using Hölder Exponent. Remote Sens. 2020, 12, 2407.

73. Yin, D.; Wang, L. Individual mangrove tree measurement using UAV-based LiDAR data: Possibilities and challenges. Remote Sens. Environ. 2019, 223, 34–49.

74. Guerra-Hernández, J.; González-Ferreiro, E.; Monleón, V.; Faias, S.; Tomé, M.; Díaz-Varela, R. Use of Multi-Temporal UAV-Derived Imagery for Estimating Individual Tree Growth in Pinus pinea Stands. Forests 2017, 8, 300.

75. Dempewolf, J.; Nagol, J.; Hein, S.; Thiel, C.; Zimmermann, R. Measurement of Within-Season Tree Height Growth in a Mixed Forest Stand Using UAV Imagery. Forests 2017, 8, 231.

76. Thiel, C.; Schmullius, C. Comparison of UAV photograph-based and airborne lidar-based point clouds over forest from a forestry application perspective. Int. J. Remote Sens. 2017, 38, 2411–2426.

77. Ahmadi, S.A.; Ghorbanian, A.; Golparvar, F.; Mohammadzadeh, A.; Jamali, S. Individual tree detection from unmanned aerial vehicle (UAV) derived point cloud data in a mixed broadleaf forest using hierarchical graph approach. Eur. J. Remote Sens. 2022, 55, 520–539.

78. Brede, B.; Calders, K.; Lau, A.; Raumonen, P.; Bartholomeus, H.M.; Herold, M.; Kooistra, L. Non-destructive tree volume estimation through quantitative structure modelling: Comparing UAV laser scanning with terrestrial LIDAR. Remote Sens. Environ. 2019, 233, 111355.

79. Terryn, L.; Calders, K.; Bartholomeus, H.; Bartolo, R.E.; Brede, B.; D’hont, B.; Disney, M.; Herold, M.; Lau, A.; Shenkin, A.; et al. Quantifying tropical forest structure through terrestrial and UAV laser scanning fusion in Australian rainforests. Remote Sens. Environ. 2022, 271, 112912.

80. De Almeida, D.R.A.; Broadbent, E.N.; Ferreira, M.P.; Meli, P.; Zambrano, A.M.A.; Gorgens, E.B.; Resende, A.F.; de Almeida, C.T.; do Amaral, C.H.; Corte, A.P.D.; et al. Monitoring restored tropical forest diversity and structure through UAV-borne hyperspectral and lidar fusion. Remote Sens. Environ. 2021, 264, 112582.

81. Hartling, S.; Sagan, V.; Maimaitijiang, M. Urban tree species classification using UAV-based multi-sensor data fusion and machine learning. GIScience Remote Sens. 2021, 58, 1250–1275.

82. Jaakkola, A.; Hyyppä, J.; Yu, X.; Kukko, A.; Kaartinen, H.; Liang, X.; Hyyppä, H.; Wang, Y. Autonomous Collection of Forest Field Reference—The Outlook and a First Step with UAV Laser Scanning. Remote Sens. 2017, 9, 785.

83. Dalla Corte, A.P.; Rex, F.E.; de Almeida, D.R.A.; Sanquetta, C.R.; Silva, C.A.; Moura, M.M.; Wilkinson, B.; Zambrano, A.M.A.; da Cunha Neto, E.M.; Veras, H.F.P.; et al. Measuring Individual Tree Diameter and Height Using GatorEye High-Density UAV-Lidar in an Integrated Crop-Livestock-Forest System. Remote Sens. 2020, 12, 863.

- Neuville, R.; Bates, J.S.; Jonard, F. Estimating forest structure from UAV-mounted LiDAR point cloud using machine learning. Remote Sens. 2021, 13, 352. [Google Scholar] [CrossRef]

- Liang, X.; Wang, Y.; Pyörälä, J.; Lehtomäki, M.; Yu, X.; Kaartinen, H.; Kukko, A.; Honkavaara, E.; Issaoui, A.E.I.; Nevalainen, O.; et al. Forest in situ observations using unmanned aerial vehicle as an alternative of terrestrial measurements. For. Ecosyst. 2019, 6, 20. [Google Scholar] [CrossRef]

- Wieser, M.; Mandlburger, G.; Hollaus, M.; Otepka, J.; Glira, P.; Pfeifer, N. A Case Study of UAS Borne Laser Scanning for Measurement of Tree Stem Diameter. Remote Sens. 2017, 9, 1154. [Google Scholar] [CrossRef]

- Picos, J.; Bastos, G.; Míguez, D.; Alonso, L.; Armesto, J. Individual Tree Detection in a Eucalyptus Plantation Using Unmanned Aerial Vehicle (UAV)-LiDAR. Remote Sens. 2020, 12, 885. [Google Scholar] [CrossRef]

- Yan, W.; Guan, H.; Cao, L.; Yu, Y.; Li, C.; Lu, J. A Self-Adaptive Mean Shift Tree-Segmentation Method Using UAV LiDAR Data. Remote Sens. 2020, 12, 515. [Google Scholar] [CrossRef]

- Peng, X.; Zhao, A.; Chen, Y.; Chen, Q.; Liu, H.; Wang, J.; Li, H. Comparison of modeling algorithms for forest canopy structures based on UAV-LiDAR: A case study in tropical China. Forests 2020, 11, 1324. [Google Scholar] [CrossRef]

- Hu, T.; Sun, X.; Su, Y.; Guan, H.; Sun, Q.; Kelly, M.; Guo, Q. Development and Performance Evaluation of a Very Low-Cost UAV-Lidar System for Forestry Applications. Remote Sens. 2020, 13, 77. [Google Scholar] [CrossRef]

- Corte, A.P.D.; Souza, D.V.; Rex, F.E.; Sanquetta, C.R.; Mohan, M.; Silva, C.A.; Zambrano, A.M.A.; Prata, G.; Alves de Almeida, D.R.; Trautenmüller, J.W.; et al. Forest inventory with high-density UAV-Lidar: Machine learning approaches for predicting individual tree attributes. Comput. Electron. Agric. 2020, 179, 105815. [Google Scholar] [CrossRef]

- Brede, B.; Lau, A.; Bartholomeus, H.; Kooistra, L. Comparing RIEGL RiCOPTER UAV LiDAR Derived Canopy Height and DBH with Terrestrial LiDAR. Sensors 2017, 17, 2371. [Google Scholar] [CrossRef]

- Ma, K.; Chen, Z.; Fu, L.; Tian, W.; Jiang, F.; Yi, J.; Du, Z.; Sun, H. Performance and Sensitivity of Individual Tree Segmentation Methods for UAV-LiDAR in Multiple Forest Types. Remote Sens. 2022, 14, 298. [Google Scholar] [CrossRef]

- Chen, X.; Jiang, K.; Zhu, Y.; Wang, X.; Yun, T. Individual Tree Crown Segmentation Directly from UAV-Borne LiDAR Data Using the PointNet of Deep Learning. Forests 2021, 12, 131. [Google Scholar] [CrossRef]

- Hao, Y.; Widagdo, F.R.A.; Liu, X.; Quan, Y.; Dong, L.; Li, F. Individual Tree Diameter Estimation in Small-Scale Forest Inventory Using UAV Laser Scanning. Remote Sens. 2020, 13, 24. [Google Scholar] [CrossRef]

- Puliti, S.; Breidenbach, J.; Astrup, R. Estimation of Forest Growing Stock Volume with UAV Laser Scanning Data: Can It Be Done without Field Data? Remote Sens. 2020, 12, 1245. [Google Scholar] [CrossRef]

- Krůček, M.; Král, K.; Cushman, K.; Missarov, A.; Kellner, J.R. Supervised Segmentation of Ultra-High-Density Drone Lidar for Large-Area Mapping of Individual Trees. Remote Sens. 2020, 12, 3260. [Google Scholar] [CrossRef]

- Webster, C.; Westoby, M.; Rutter, N.; Jonas, T. Three-dimensional thermal characterization of forest canopies using UAV photogrammetry. Remote Sens. Environ. 2018, 209, 835–847. [Google Scholar] [CrossRef]

- Torresan, C.; Carotenuto, F.; Chiavetta, U.; Miglietta, F.; Zaldei, A.; Gioli, B. Individual Tree Crown Segmentation in Two-Layered Dense Mixed Forests from UAV LiDAR Data. Drones 2020, 4, 10. [Google Scholar] [CrossRef]

- Shi, Y.; Wang, T.; Skidmore, A.K.; Heurich, M. Important LiDAR metrics for discriminating forest tree species in Central Europe. ISPRS J. Photogramm. Remote Sens. 2018, 137, 163–174. [Google Scholar] [CrossRef]

- Kuželka, K.; Slavík, M.; Surový, P. Very high density point clouds from UAV laser scanning for automatic tree stem detection and direct diameter measurement. Remote Sens. 2020, 12, 1236. [Google Scholar] [CrossRef]

- Zhang, W.; Gao, F.; Jiang, N.; Zhang, C.; Zhang, Y. High-Temporal-Resolution Forest Growth Monitoring Based on Segmented 3D Canopy Surface from UAV Aerial Photogrammetry. Drones 2022, 6, 158. [Google Scholar] [CrossRef]

- Maesano, M.; Santopuoli, G.; Moresi, F.; Matteucci, G.; Lasserre, B.; Scarascia Mugnozza, G. Above ground biomass estimation from UAV high resolution RGB images and LiDAR data in a pine forest in Southern Italy. iForest Biogeosci. For. 2022, 15, 451–457. [Google Scholar] [CrossRef]

- Vijayakumar, V.; Ampatzidis, Y.; Costa, L. Tree-level citrus yield prediction utilizing ground and aerial machine vision and machine learning. Smart Agric. Technol. 2023, 3, 100077. [Google Scholar] [CrossRef]

- Clark, J.S. The Elements of Statistical Learning; Springer Series in Statistics; Springer: New York, NY, USA, 2009; pp. 143–160. [Google Scholar] [CrossRef]

- Franklin, S.E. Pixel-and object-based multispectral classification of forest tree species from small unmanned aerial vehicles. J. Unmanned Veh. Syst. 2018, 6, 195–211. [Google Scholar] [CrossRef]

- Ahmed, O.S.; Shemrock, A.; Chabot, D.; Dillon, C.; Williams, G.; Wasson, R.; Franklin, S.E. Hierarchical land cover and vegetation classification using multispectral data acquired from an unmanned aerial vehicle. Int. J. Remote Sens. 2017, 38, 2037–2052. [Google Scholar] [CrossRef]

- Kurihara, J.; Koo, V.C.; Guey, C.W.; Lee, Y.P.; Abidin, H. Early Detection of Basal Stem Rot Disease in Oil Palm Tree Using Unmanned Aerial Vehicle-Based Hyperspectral Imaging. Remote Sens. 2022, 14, 799. [Google Scholar] [CrossRef]

- Feng, Q.; Liu, J.; Gong, J. UAV Remote Sensing for Urban Vegetation Mapping Using Random Forest and Texture Analysis. Remote Sens. 2015, 7, 1074–1094. [Google Scholar] [CrossRef]

- Lobo Torres, D.; Queiroz Feitosa, R.; Nigri Happ, P.; Elena Cué La Rosa, L.; Marcato Junior, J.; Martins, J.; Olã Bressan, P.; Gonçalves, W.N.; Liesenberg, V. Applying Fully Convolutional Architectures for Semantic Segmentation of a Single Tree Species in Urban Environment on High Resolution UAV Optical Imagery. Sensors 2020, 20, 563. [Google Scholar] [CrossRef] [PubMed]

- Ferreira, M.P.; de Almeida, D.R.A.; Papa, D.d.A.; Minervino, J.B.S.; Veras, H.F.P.; Formighieri, A.; Santos, C.A.N.; Ferreira, M.A.D.; Figueiredo, E.O.; Ferreira, E.J.L. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manag. 2020, 475, 118397. [Google Scholar] [CrossRef]

- Hastings, J.H.; Ollinger, S.V.; Ouimette, A.P.; Sanders-DeMott, R.; Palace, M.W.; Ducey, M.J.; Sullivan, F.B.; Basler, D.; Orwig, D.A. Tree Species Traits Determine the Success of LiDAR-Based Crown Mapping in a Mixed Temperate Forest. Remote Sens. 2020, 12, 309. [Google Scholar] [CrossRef]

- Takahashi Miyoshi, G.; Imai, N.N.; Garcia Tommaselli, A.M.; Antunes de Moraes, M.V.; Honkavaara, E. Evaluation of Hyperspectral Multitemporal Information to Improve Tree Species Identification in the Highly Diverse Atlantic Forest. Remote Sens. 2020, 12, 244. [Google Scholar] [CrossRef]

- Fernandes, M.R.; Aguiar, F.C.; Martins, M.J.; Rico, N.; Ferreira, M.T.; Correia, A.C. Carbon Stock Estimations in a Mediterranean Riparian Forest: A Case Study Combining Field Data and UAV Imagery. Forests 2020, 11, 376. [Google Scholar] [CrossRef]

- Veras, H.F.P.; Ferreira, M.P.; da Cunha Neto, E.M.; Figueiredo, E.O.; Corte, A.P.D.; Sanquetta, C.R. Fusing multi-season UAS images with convolutional neural networks to map tree species in Amazonian forests. Ecol. Inform. 2022, 71, 101815. [Google Scholar] [CrossRef]

- Xu, X.; Zhou, Z.; Tang, Y.; Qu, Y. Individual tree crown detection from high spatial resolution imagery using a revised local maximum filtering. Remote Sens. Environ. 2021, 258, 112397. [Google Scholar] [CrossRef]

- Cheng, Z.; Qi, L.; Cheng, Y.; Wu, Y.; Zhang, H. Interlacing Orchard Canopy Separation and Assessment using UAV Images. Remote Sens. 2020, 12, 767. [Google Scholar] [CrossRef]

- Gao, T.; Gao, Z.; Sun, B.; Qin, P.; Li, Y.; Yan, Z. An Integrated Method for Estimating Forest-Canopy Closure Based on UAV LiDAR Data. Remote Sens. 2022, 14, 4317. [Google Scholar] [CrossRef]

- Zhou, Q.; Yu, L.; Zhang, X.; Liu, Y.; Zhan, Z.; Ren, L.; Luo, Y. Fusion of UAV Hyperspectral Imaging and LiDAR for the Early Detection of EAB Stress in Ash and a New EAB Detection Index—NDVI(776,678). Remote Sens. 2022, 14, 2428. [Google Scholar] [CrossRef]

- Bindhu, M.L.H.; Potluri, T.; Korra, C.B.; Prasad, J.V.D. Detection and Counting of Trees in Aerial Images Using Image Processing Techniques. In Lecture Notes in Networks and Systems; Springer: Berlin/Heidelberg, Germany, 2023; pp. 181–189. [Google Scholar] [CrossRef]

- Wallace, L.; Lucieer, A.; Watson, C.S. Evaluating Tree Detection and Segmentation Routines on Very High Resolution UAV LiDAR Data. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7619–7628. [Google Scholar] [CrossRef]

- Wallace, L.; Musk, R.; Lucieer, A. An Assessment of the Repeatability of Automatic Forest Inventory Metrics Derived From UAV-Borne Laser Scanning Data. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7160–7169. [Google Scholar] [CrossRef]

- Natividade, J.; Prado, J.; Marques, L. Low-cost multi-spectral vegetation classification using an Unmanned Aerial Vehicle. In Proceedings of the 2017 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Coimbra, Portugal, 26–28 April 2017; pp. 336–342. [Google Scholar] [CrossRef]

- Safonova, A.; Hamad, Y.; Dmitriev, E.; Georgiev, G.; Trenkin, V.; Georgieva, M.; Dimitrov, S.; Iliev, M. Individual Tree Crown Delineation for the Species Classification and Assessment of Vital Status of Forest Stands from UAV Images. Drones 2021, 5, 77. [Google Scholar] [CrossRef]

- Wan, J.; Wu, L.; Zhang, S.; Liu, S.; Xu, M.; Sheng, H.; Cui, J. Monitoring of Discolored Trees Caused by Pine Wilt Disease Based on Unsupervised Learning with Decision Fusion Using UAV Images. Forests 2022, 13, 1884. [Google Scholar] [CrossRef]

- Wang, R.; Bao, T.; Tian, S.; Song, L.; Zhong, S.; Liu, J.; Yu, K.; Wang, F. Quantifying Understory Vegetation Cover of Pinus massoniana Forest in Hilly Region of South China by Combined Near-Ground Active and Passive Remote Sensing. Drones 2022, 6, 240. [Google Scholar] [CrossRef]

- Vélez, S.; Ariza-Sentís, M.; Valente, J. Mapping the spatial variability of Botrytis bunch rot risk in vineyards using UAV multispectral imagery. Eur. J. Agron. 2023, 142, 126691. [Google Scholar] [CrossRef]

- Sivanandam, P.; Lucieer, A. Tree Detection and Species Classification in a Mixed Species Forest Using Unoccupied Aircraft System (UAS) RGB and Multispectral Imagery. Remote Sens. 2022, 14, 4963. [Google Scholar] [CrossRef]

- Zhang, N.; Wang, Y.; Zhang, X. Extraction of tree crowns damaged by Dendrolimus tabulaeformis Tsai et Liu via spectral-spatial classification using UAV-based hyperspectral images. Plant Methods 2020, 16, 135. [Google Scholar] [CrossRef]

- Xia, J.; Wang, Y.; Dong, P.; He, S.; Zhao, F.; Luan, G. Object-Oriented Canopy Gap Extraction from UAV Images Based on Edge Enhancement. Remote Sens. 2022, 14, 4762. [Google Scholar] [CrossRef]

- Yu, R.; Luo, Y.; Zhou, Q.; Zhang, X.; Wu, D.; Ren, L. A machine learning algorithm to detect pine wilt disease using UAV-based hyperspectral imagery and LiDAR data at the tree level. Int. J. Appl. Earth Obs. Geoinf. 2021, 101, 102363. [Google Scholar] [CrossRef]

- Chen, Q.; Gao, T.; Zhu, J.; Wu, F.; Li, X.; Lu, D.; Yu, F. Individual Tree Segmentation and Tree Height Estimation Using Leaf-Off and Leaf-On UAV-LiDAR Data in Dense Deciduous Forests. Remote Sens. 2022, 14, 2787. [Google Scholar] [CrossRef]

- Qin, H.; Zhou, W.; Yao, Y.; Wang, W. Individual tree segmentation and tree species classification in subtropical broadleaf forests using UAV-based LiDAR, hyperspectral, and ultrahigh-resolution RGB data. Remote Sens. Environ. 2022, 280, 113143. [Google Scholar] [CrossRef]

- Zhang, S.; Huang, H.; Huang, Y.; Cheng, D.; Huang, J. A GA and SVM Classification Model for Pine Wilt Disease Detection Using UAV-Based Hyperspectral Imagery. Appl. Sci. 2022, 12, 6676. [Google Scholar] [CrossRef]

- Liu, M.; Yu, T.; Gu, X.; Sun, Z.; Yang, J.; Zhang, Z.; Mi, X.; Cao, W.; Li, J. The Impact of Spatial Resolution on the Classification of Vegetation Types in Highly Fragmented Planting Areas Based on Unmanned Aerial Vehicle Hyperspectral Images. Remote Sens. 2020, 12, 146. [Google Scholar] [CrossRef]

- Kuikel, S.; Upadhyay, B.; Aryal, D.; Bista, S.; Awasthi, B.; Shrestha, S. Individual Banana Tree Crown Delineation Using Unmanned Aerial Vehicle (UAV) Images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLIII-B3-2, 581–585. [Google Scholar] [CrossRef]

- Guo, Q.; Zhang, J.; Guo, S.; Ye, Z.; Deng, H.; Hou, X.; Zhang, H. Urban Tree Classification Based on Object-Oriented Approach and Random Forest Algorithm Using Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2022, 14, 3885. [Google Scholar] [CrossRef]

- Shui, W.; Li, H.; Zhang, Y.; Jiang, C.; Zhu, S.; Wang, Q.; Liu, Y.; Zong, S.; Huang, Y.; Ma, M. Is an Unmanned Aerial Vehicle (UAV) Suitable for Extracting the Stand Parameters of Inaccessible Underground Forests of Karst Tiankeng? Remote Sens. 2022, 14, 4128. [Google Scholar] [CrossRef]

- Wang, B.; Sun, H.; Cracknell, A.P.; Deng, Y.; Li, Q.; Lin, L.; Xu, Q.; Ma, Y.; Wang, W.; Zhang, Z. Detection and Quantification of Forest-Agriculture Ecotones Caused by Returning Farmland to Forest Program Using Unmanned Aircraft Imagery. Diversity 2022, 14, 406. [Google Scholar] [CrossRef]

- Finn, A.; Kumar, P.; Peters, S.; O’Hehir, J. Unsupervised spectral-spatial processing of drone imagery for identification of pine seedlings. ISPRS J. Photogramm. Remote Sens. 2022, 183, 363–388. [Google Scholar] [CrossRef]

- Gonçalves, V.; Ribeiro, E.; Imai, N. Mapping Areas Invaded by Pinus sp. from Geographic Object-Based Image Analysis (GEOBIA) Applied on RPAS (Drone) Color Images. Remote Sens. 2022, 14, 2805. [Google Scholar] [CrossRef]

- Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A New Method for Segmenting Individual Trees from the Lidar Point Cloud. Photogramm. Eng. Remote Sens. 2012, 78, 75–84. [Google Scholar] [CrossRef]

- Wang, L.; Gong, P.; Biging, G.S. Individual Tree-Crown Delineation and Treetop Detection in High-Spatial-Resolution Aerial Imagery. Photogramm. Eng. Remote Sens. 2004, 70, 351–357. [Google Scholar] [CrossRef]

- Lian, X.; Zhang, H.; Xiao, W.; Lei, Y.; Ge, L.; Qin, K.; He, Y.; Dong, Q.; Li, L.; Han, Y.; et al. Biomass Calculations of Individual Trees Based on Unmanned Aerial Vehicle Multispectral Imagery and Laser Scanning Combined with Terrestrial Laser Scanning in Complex Stands. Remote Sens. 2022, 14, 4715. [Google Scholar] [CrossRef]

- Zhang, C.; Xia, K.; Feng, H.; Yang, Y.; Du, X. Tree species classification using deep learning and RGB optical images obtained by an unmanned aerial vehicle. J. For. Res. 2021, 32, 1879–1888. [Google Scholar] [CrossRef]

- Yang, K.; Zhang, H.; Wang, F.; Lai, R. Extraction of Broad-Leaved Tree Crown Based on UAV Visible Images and OBIA-RF Model: A Case Study for Chinese Olive Trees. Remote Sens. 2022, 14, 2469. [Google Scholar] [CrossRef]

- Hu, G.; Yin, C.; Wan, M.; Zhang, Y.; Fang, Y. Recognition of diseased Pinus trees in UAV images using deep learning and AdaBoost classifier. Biosyst. Eng. 2020, 194, 138–151. [Google Scholar] [CrossRef]

- Ishida, T.; Kurihara, J.; Viray, F.A.; Namuco, S.B.; Paringit, E.C.; Perez, G.J.; Takahashi, Y.; Marciano, J.J. A novel approach for vegetation classification using UAV-based hyperspectral imaging. Comput. Electron. Agric. 2018, 144, 80–85. [Google Scholar] [CrossRef]

- Olariu, H.G.; Malambo, L.; Popescu, S.C.; Virgil, C.; Wilcox, B.P. Woody Plant Encroachment: Evaluating Methodologies for Semiarid Woody Species Classification from Drone Images. Remote Sens. 2022, 14, 1665. [Google Scholar] [CrossRef]

- Xiao, D.; Pan, Y.; Feng, J.; Yin, J.; Liu, Y.; He, L. Remote sensing detection algorithm for apple fire blight based on UAV multispectral image. Comput. Electron. Agric. 2022, 199, 107137. [Google Scholar] [CrossRef]

- Lu, B.; He, Y. Species classification using Unmanned Aerial Vehicle (UAV)-acquired high spatial resolution imagery in a heterogeneous grassland. ISPRS J. Photogramm. Remote Sens. 2017, 128, 73–85. [Google Scholar] [CrossRef]

- Dash, J.P.; Watt, M.S.; Pearse, G.D.; Heaphy, M.; Dungey, H.S. Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak. ISPRS J. Photogramm. Remote Sens. 2017, 131, 1–14. [Google Scholar] [CrossRef]

- Kefauver, S.C.; Buchaillot, M.L.; Segarra, J.; Fernandez Gallego, J.A.; Araus, J.L.; Llosa, X.; Beltrán, M.; Piqué, M. Quantification of Pinus pinea L. Pinecone Productivity Using Machine Learning of UAV and Field Images. In Proceedings of the 2nd International Electronic Conference on Forests—Sustainable Forests: Ecology, Management, Products and Trade, Online, 1–15 September 2021; MDPI: Basel, Switzerland, 2022; p. 24. [Google Scholar] [CrossRef]

- Gomez Selvaraj, M.; Vergara, A.; Montenegro, F.; Alonso Ruiz, H.; Safari, N.; Raymaekers, D.; Ocimati, W.; Ntamwira, J.; Tits, L.; Omondi, A.B.; et al. Detection of banana plants and their major diseases through aerial images and machine learning methods: A case study in DR Congo and Republic of Benin. ISPRS J. Photogramm. Remote Sens. 2020, 169, 110–124. [Google Scholar] [CrossRef]

- Malek, S.; Bazi, Y.; Alajlan, N.; AlHichri, H.; Melgani, F. Efficient framework for palm tree detection in UAV images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4692–4703. [Google Scholar] [CrossRef]

- Natesan, S.; Armenakis, C.; Vepakomma, U. Individual tree species identification using Dense Convolutional Network (DenseNet) on multitemporal RGB images from UAV. J. Unmanned Veh. Syst. 2020, 8, 310–333. [Google Scholar] [CrossRef]

- Briechle, S.; Krzystek, P.; Vosselman, G. Classification of tree species and standing dead trees by fusing UAV-based lidar data and multispectral imagery in the 3D deep neural network PointNet++. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, V-2-2020, 203–210. [Google Scholar] [CrossRef]

- Chen, J.; Chen, Y.; Liu, Z. Classification of typical tree species in laser point cloud based on deep learning. Remote Sens. 2021, 13, 4750. [Google Scholar] [CrossRef]

- Qin, J.; Wang, B.; Wu, Y.; Lu, Q.; Zhu, H. Identifying pine wood nematode disease using UAV images and deep learning algorithms. Remote Sens. 2021, 13, 162. [Google Scholar] [CrossRef]

- Osco, L.P.; Nogueira, K.; Marques Ramos, A.P.; Faita Pinheiro, M.M.; Furuya, D.E.G.; Gonçalves, W.N.; de Castro Jorge, L.A.; Marcato Junior, J.; dos Santos, J.A. Semantic segmentation of citrus-orchard using deep neural networks and multispectral UAV-based imagery. Precis. Agric. 2021, 22, 1171–1188. [Google Scholar] [CrossRef]

- Liu, X.; Ghazali, K.H.; Han, F.; Mohamed, I.I. Automatic Detection of Oil Palm Tree from UAV Images Based on the Deep Learning Method. Appl. Artif. Intell. 2021, 35, 13–24. [Google Scholar] [CrossRef]

- La Rosa, L.E.C.; Sothe, C.; Feitosa, R.Q.; de Almeida, C.M.; Schimalski, M.B.; Oliveira, D.A.B. Multi-task fully convolutional network for tree species mapping in dense forests using small training hyperspectral data. ISPRS J. Photogramm. Remote Sens. 2021, 179, 35–49. [Google Scholar] [CrossRef]

- Moura, M.M.; de Oliveira, L.E.S.; Sanquetta, C.R.; Bastos, A.; Mohan, M.; Corte, A.P.D. Towards Amazon Forest Restoration: Automatic Detection of Species from UAV Imagery. Remote Sens. 2021, 13, 2627. [Google Scholar] [CrossRef]

- Li, Y.; Chai, G.; Wang, Y.; Lei, L.; Zhang, X. ACE R-CNN: An Attention Complementary and Edge Detection-Based Instance Segmentation Algorithm for Individual Tree Species Identification Using UAV RGB Images and LiDAR Data. Remote Sens. 2022, 14, 3035. [Google Scholar] [CrossRef]

- Onishi, M.; Watanabe, S.; Nakashima, T.; Ise, T. Practicality and Robustness of Tree Species Identification Using UAV RGB Image and Deep Learning in Temperate Forest in Japan. Remote Sens. 2022, 14, 1710. [Google Scholar] [CrossRef]

- Zhang, C.; Zhou, J.; Wang, H.; Tan, T.; Cui, M.; Huang, Z.; Wang, P.; Zhang, L. Multi-Species Individual Tree Segmentation and Identification Based on Improved Mask R-CNN and UAV Imagery in Mixed Forests. Remote Sens. 2022, 14, 874. [Google Scholar] [CrossRef]

- Gupta, M.; Jain, S. Banana Leaf Diseases and Machine Learning Algorithms Applied to Detect Diseases: A Study. In Lecture Notes in Networks and Systems; Springer: Berlin/Heidelberg, Germany, 2023; pp. 121–130. [Google Scholar] [CrossRef]

- Kattenborn, T.; Eichel, J.; Fassnacht, F.E. Convolutional Neural Networks enable efficient, accurate and fine-grained segmentation of plant species and communities from high-resolution UAV imagery. Sci. Rep. 2019, 9, 17656. [Google Scholar] [CrossRef]

- Barros, T.; Conde, P.; Gonçalves, G.; Premebida, C.; Monteiro, M.; Ferreira, C.S.S.; Nunes, U.J. Multispectral Vineyard Segmentation: A Deep Learning approach. Comput. Electron. Agric. 2021, 195, 106782. [Google Scholar] [CrossRef]

- Zheng, J.; Fu, H.; Li, W.; Wu, W.; Yu, L.; Yuan, S.; Tao, W.Y.W.; Pang, T.K.; Kanniah, K.D. Growing status observation for oil palm trees using Unmanned Aerial Vehicle (UAV) images. ISPRS J. Photogramm. Remote Sens. 2021, 173, 95–121. [Google Scholar] [CrossRef]

- Egli, S.; Höpke, M. CNN-Based Tree Species Classification Using High Resolution RGB Image Data from Automated UAV Observations. Remote Sens. 2020, 12, 3892. [Google Scholar] [CrossRef]

- Osco, L.P.; de Arruda, M.d.S.; Marcato Junior, J.; da Silva, N.B.; Ramos, A.P.M.; Moryia, É.A.S.; Imai, N.N.; Pereira, D.R.; Creste, J.E.; Matsubara, E.T.; et al. A convolutional neural network approach for counting and geolocating citrus-trees in UAV multispectral imagery. ISPRS J. Photogramm. Remote Sens. 2020, 160, 97–106. [Google Scholar] [CrossRef]

- Ocer, N.E.; Kaplan, G.; Erdem, F.; Kucuk Matci, D.; Avdan, U. Tree extraction from multi-scale UAV images using Mask R-CNN with FPN. Remote Sens. Lett. 2020, 11, 847–856. [Google Scholar] [CrossRef]

- Albuquerque, R.W.; Vieira, D.L.M.; Ferreira, M.E.; Soares, L.P.; Olsen, S.I.; Araujo, L.S.; Vicente, L.E.; Tymus, J.R.C.; Balieiro, C.P.; Matsumoto, M.H.; et al. Mapping Key Indicators of Forest Restoration in the Amazon Using a Low-Cost Drone and Artificial Intelligence. Remote Sens. 2022, 14, 830. [Google Scholar] [CrossRef]

- Ye, Z.; Wei, J.; Lin, Y.; Guo, Q.; Zhang, J.; Zhang, H.; Deng, H.; Yang, K. Extraction of Olive Crown Based on UAV Visible Images and the U2-Net Deep Learning Model. Remote Sens. 2022, 14, 1523. [Google Scholar] [CrossRef]

- Li, B.; Lu, H.; Wang, H.; Qi, J.; Yang, G.; Pang, Y.; Dong, H.; Lian, Y. Terrain-Net: A Highly-Efficient, Parameter-Free, and Easy-to-Use Deep Neural Network for Ground Filtering of UAV LiDAR Data in Forested Environments. Remote Sens. 2022, 14, 5798. [Google Scholar] [CrossRef]

- Safonova, A.; Guirado, E.; Maglinets, Y.; Alcaraz-Segura, D.; Tabik, S. Olive Tree Biovolume from UAV Multi-Resolution Image Segmentation with Mask R-CNN. Sensors 2021, 21, 1617. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chehreh, B.; Moutinho, A.; Viegas, C. Latest Trends on Tree Classification and Segmentation Using UAV Data—A Review of Agroforestry Applications. Remote Sens. 2023, 15, 2263. https://doi.org/10.3390/rs15092263
