Polarimetric Imaging via Deep Learning: A Review

Abstract

1. Introduction

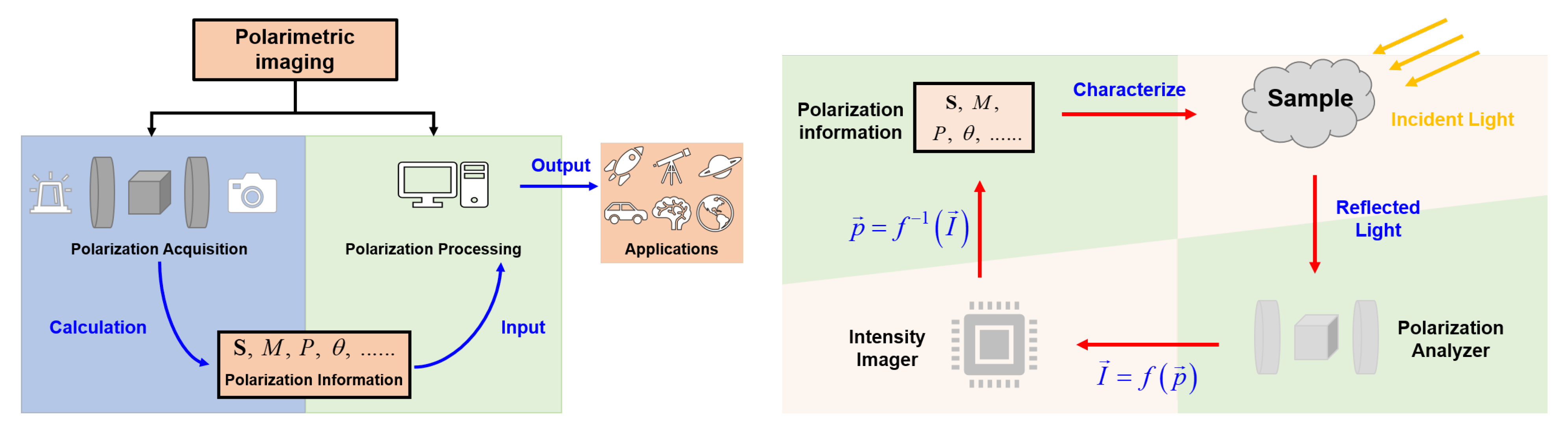

2. Principles of Polarization and Deep Learning

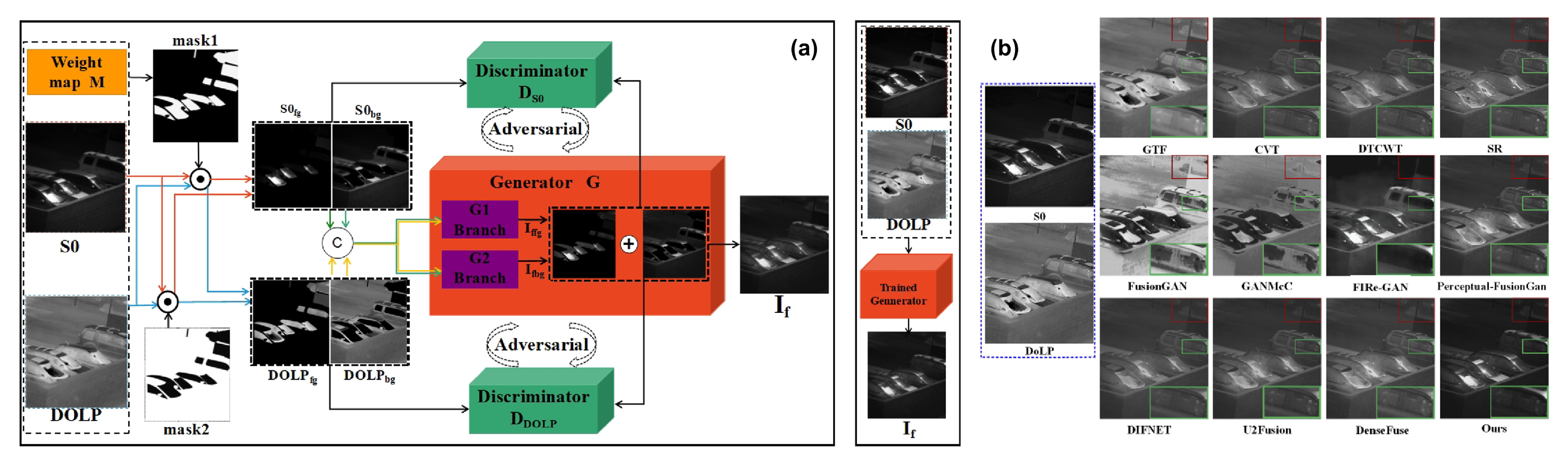

2.1. Overview of Polarization and PI

2.1.1. Jones Vector and Stokes Vector
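
The following is a minimal sketch of the standard relations behind this subsection, not the authors' code. It converts a Jones vector (Ex, Ey) of fully polarized light into its Stokes vector. It also recovers the linear Stokes parameters S0, S1 and S2, the degree of linear polarization (DoLP) and the angle of polarization (AoP) from intensities measured behind an ideal linear polarizer at 0°, 45°, 90° and 135°. The four-angle scheme, the function names and the sign convention for S3 are assumptions chosen for illustration.

```python
import math


def jones_to_stokes(ex: complex, ey: complex):
    """Stokes vector (S0, S1, S2, S3) of a fully polarized field with Jones vector (ex, ey).

    Sign convention (assumed): S3 = 2*Im(conj(ex)*ey); some texts use the opposite sign.
    """
    cross = ex.conjugate() * ey
    s0 = abs(ex) ** 2 + abs(ey) ** 2
    s1 = abs(ex) ** 2 - abs(ey) ** 2
    s2 = 2 * cross.real
    s3 = 2 * cross.imag
    return s0, s1, s2, s3


def polarizer_intensity(ex: complex, ey: complex, theta: float) -> float:
    """Intensity behind an ideal linear polarizer at angle theta (radians): |ex cos + ey sin|^2."""
    return abs(ex * math.cos(theta) + ey * math.sin(theta)) ** 2


def linear_stokes(i0: float, i45: float, i90: float, i135: float):
    """Linear Stokes parameters from four polarizer-angle intensities."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # each orthogonal pair sums to S0
    s1 = i0 - i90
    s2 = i45 - i135
    return s0, s1, s2


def dolp_aop(s0: float, s1: float, s2: float):
    """Degree of linear polarization and angle of polarization (radians, in (-pi/2, pi/2])."""
    dolp = math.hypot(s1, s2) / s0
    aop = 0.5 * math.atan2(s2, s1)
    return dolp, aop


if __name__ == "__main__":
    r = 1 / math.sqrt(2)  # light linearly polarized at 45 degrees
    intensities = [polarizer_intensity(r, r, math.radians(a)) for a in (0, 45, 90, 135)]
    s0, s1, s2 = linear_stokes(*intensities)
    print(jones_to_stokes(r, r), dolp_aop(s0, s1, s2))
```

For light linearly polarized at 45°, the four simulated intensities give S0 ≈ 1, S1 ≈ 0 and S2 ≈ 1, so DoLP ≈ 1 and AoP ≈ π/4. S3, which describes circular polarization, cannot be recovered from linear-polarizer measurements alone.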

2.1.2. Scattering Matrix
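
The scattering matrix S = [[S_HH, S_HV], [S_VH, S_VV]] is often re-expressed in the Pauli basis before further analysis. The sketch below assumes a monostatic, reciprocal target (S_HV = S_VH). It computes the Pauli vector k = (1/√2)[S_HH + S_VV, S_HH − S_VV, 2S_HV], the total power (span) and the single-look coherency matrix T = k k^H. The function names are hypothetical. The physical reading of the three components (odd-bounce, even-bounce and 45°-oriented or volume-like scattering) is the conventional one.

```python
import math


def pauli_vector(shh: complex, shv: complex, svh: complex, svv: complex) -> list:
    """Pauli scattering vector k = (1/sqrt(2)) [Shh + Svv, Shh - Svv, 2 Shv].

    Assumes a monostatic, reciprocal target, so Shv and Svh are averaged into one term.
    """
    s_cross = 0.5 * (shv + svh)
    c = 1.0 / math.sqrt(2.0)
    return [
        c * (shh + svv),      # odd-bounce (surface-like) component
        c * (shh - svv),      # even-bounce (dihedral-like) component
        c * 2.0 * s_cross,    # 45-degree-oriented / volume-like component
    ]


def span(k: list) -> float:
    """Total power |Shh|^2 + 2|Shv|^2 + |Svv|^2, which equals ||k||^2."""
    return sum(abs(z) ** 2 for z in k)


def coherency_matrix(k: list) -> list:
    """3x3 coherency matrix T = k k^H for a single look (no multi-look averaging)."""
    return [[ki * kj.conjugate() for kj in k] for ki in k]
```

A trihedral-like response (S_HH = S_VV, no cross-polarized return) puts all of its power in the first component, while a dihedral-like response (S_HH = −S_VV) puts it in the second. The magnitudes |k2|², |k3|² and |k1|² are commonly displayed as the red, green and blue channels of a Pauli RGB composite.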

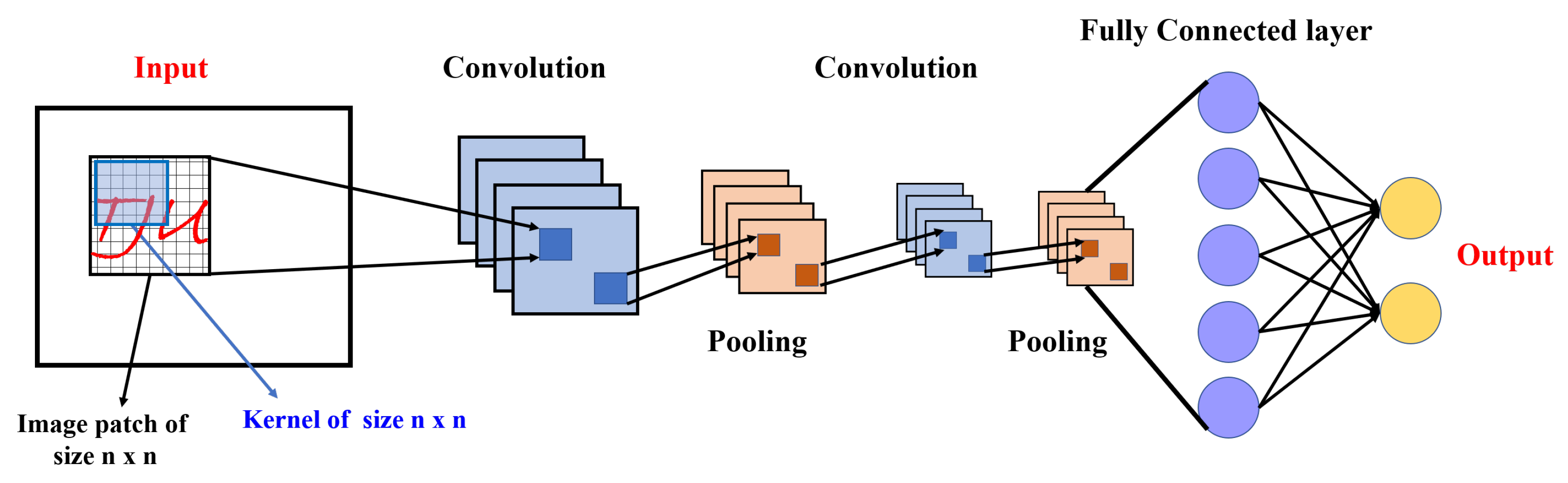

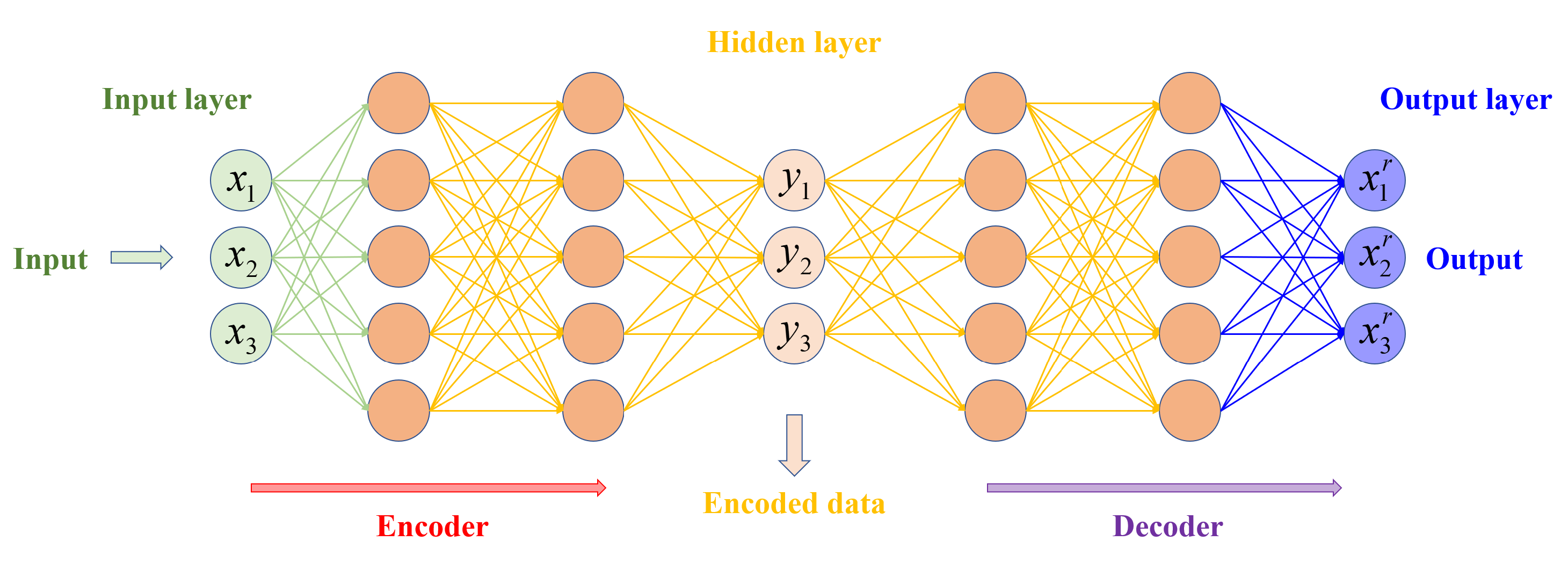

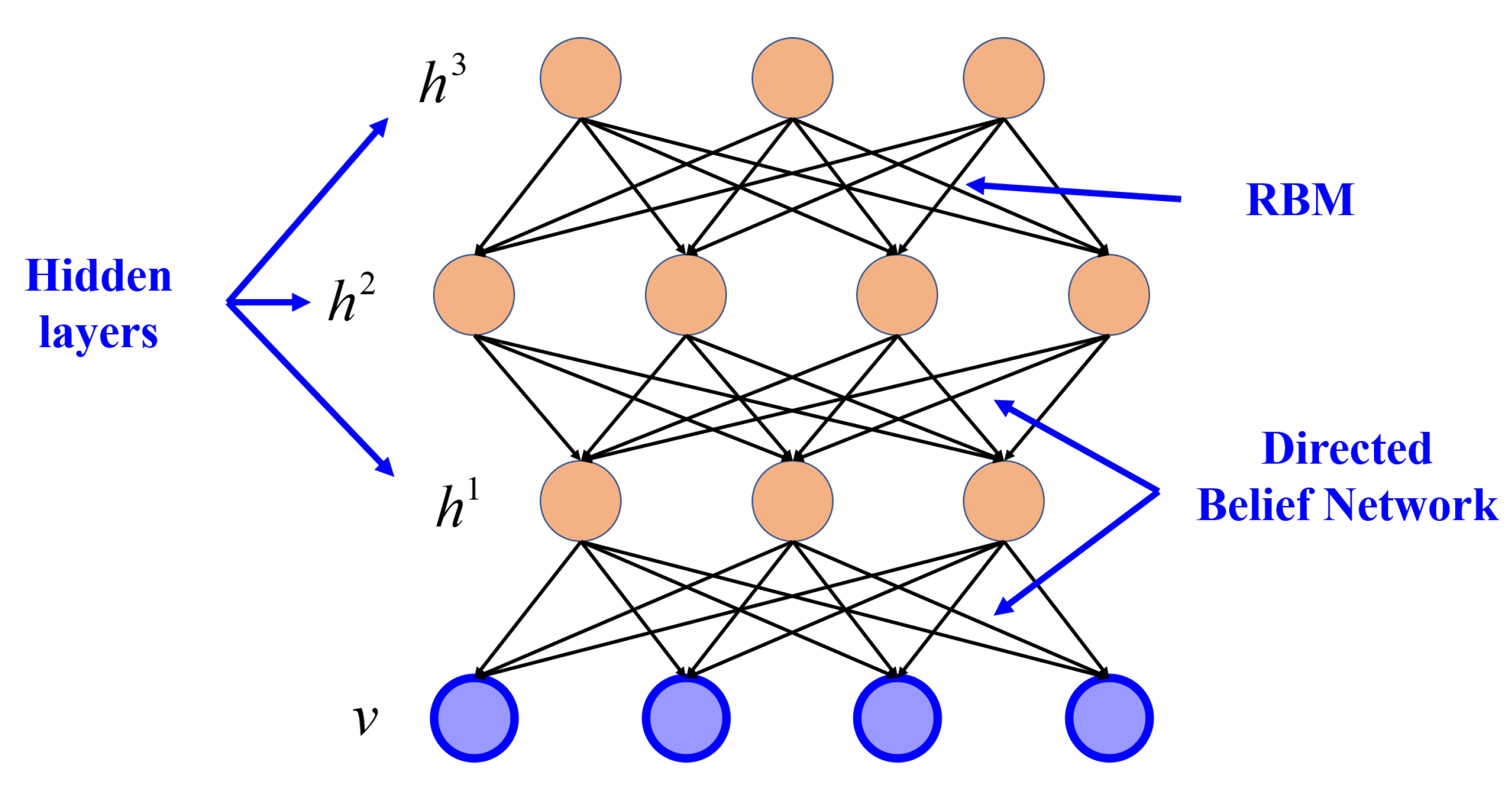

2.2. Overview of Deep Learning

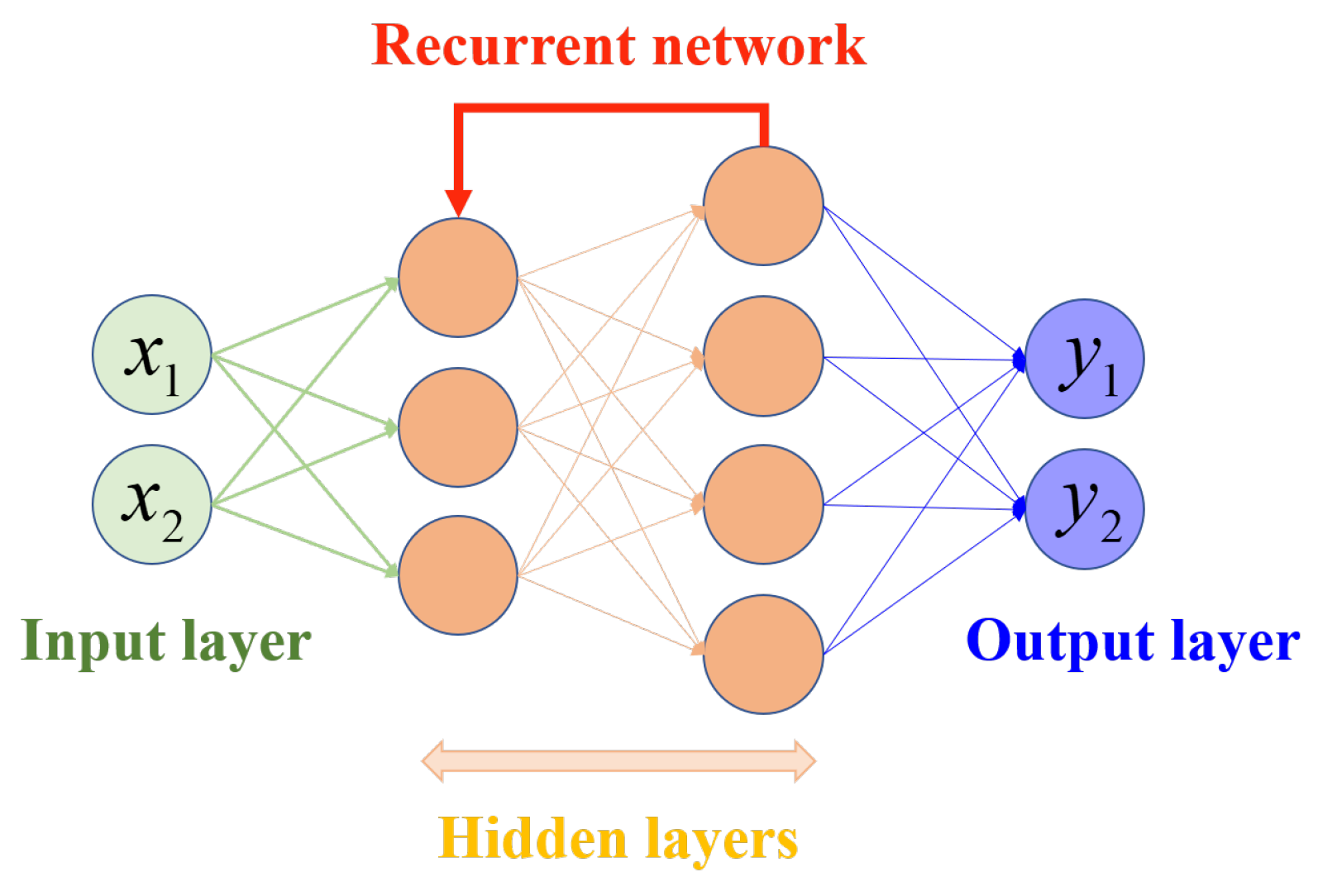

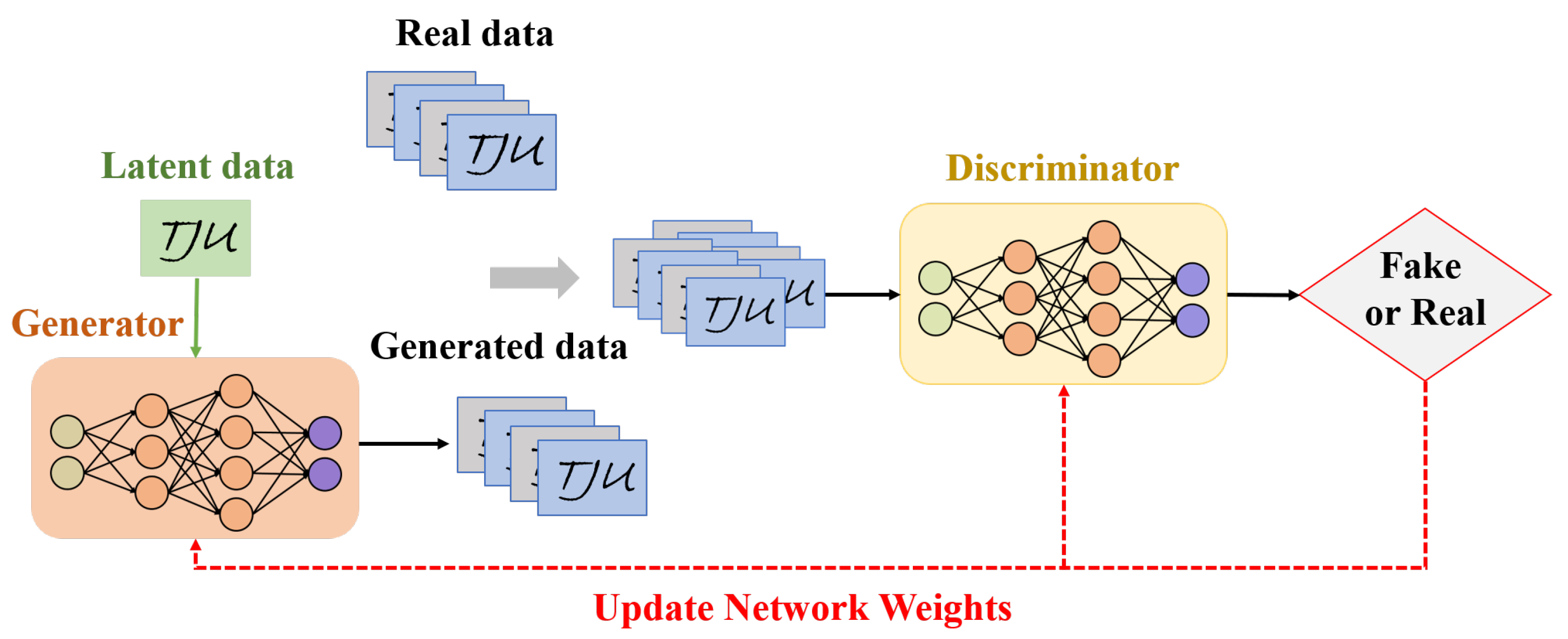

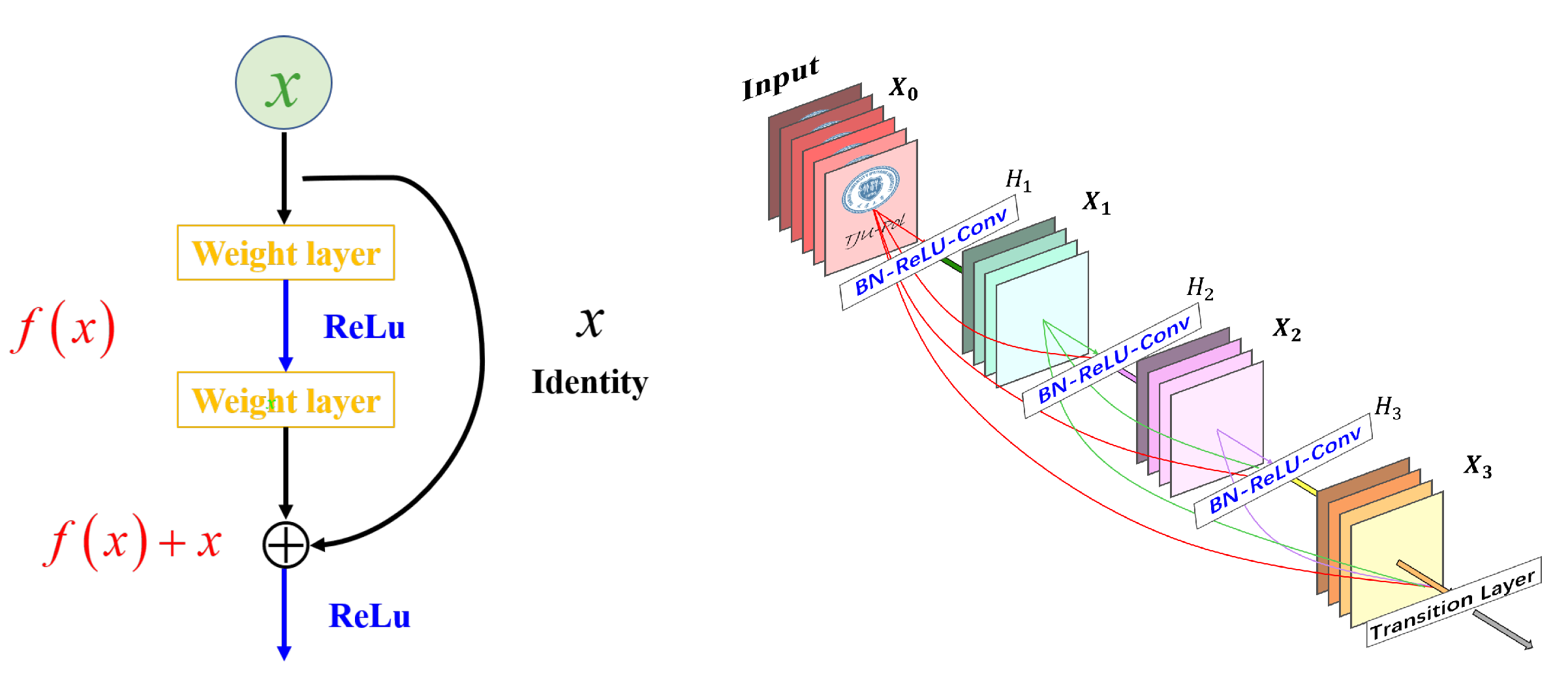

2.2.1. CNN
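
A convolutional layer slides a small kernel over its input, adds the weighted neighbourhood values, and applies a nonlinearity. A pooling step then reduces the spatial resolution. The pure-Python sketch below shows one such block on a single-channel image: valid cross-correlation, ReLU and 2×2 max pooling. It is illustrative only. Practical CNN layers add multiple channels, bias terms, padding and learned weights, and the function names here are hypothetical.

```python
def conv2d_valid(image: list, kernel: list) -> list:
    """2-D cross-correlation, stride 1, no padding (what CNN layers call 'convolution')."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + m][j + n] * kernel[m][n] for m in range(kh) for n in range(kw))
            for j in range(ow)
        ]
        for i in range(oh)
    ]


def relu(fmap: list) -> list:
    """Element-wise rectified linear unit."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap: list) -> list:
    """Non-overlapping 2x2 max pooling; a trailing odd row or column is dropped."""
    return [
        [
            max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
            for j in range(0, len(fmap[0]) - 1, 2)
        ]
        for i in range(0, len(fmap) - 1, 2)
    ]


def conv_block(image: list, kernel: list) -> list:
    """One convolution -> ReLU -> pooling stage."""
    return max_pool2x2(relu(conv2d_valid(image, kernel)))
```

Stacking several such stages, each with many learned kernels, yields the hierarchical feature maps that CNNs pass on to a classifier or decoder.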

2.2.2. AE

2.2.3. DBN

2.2.4. Other Deep Networks

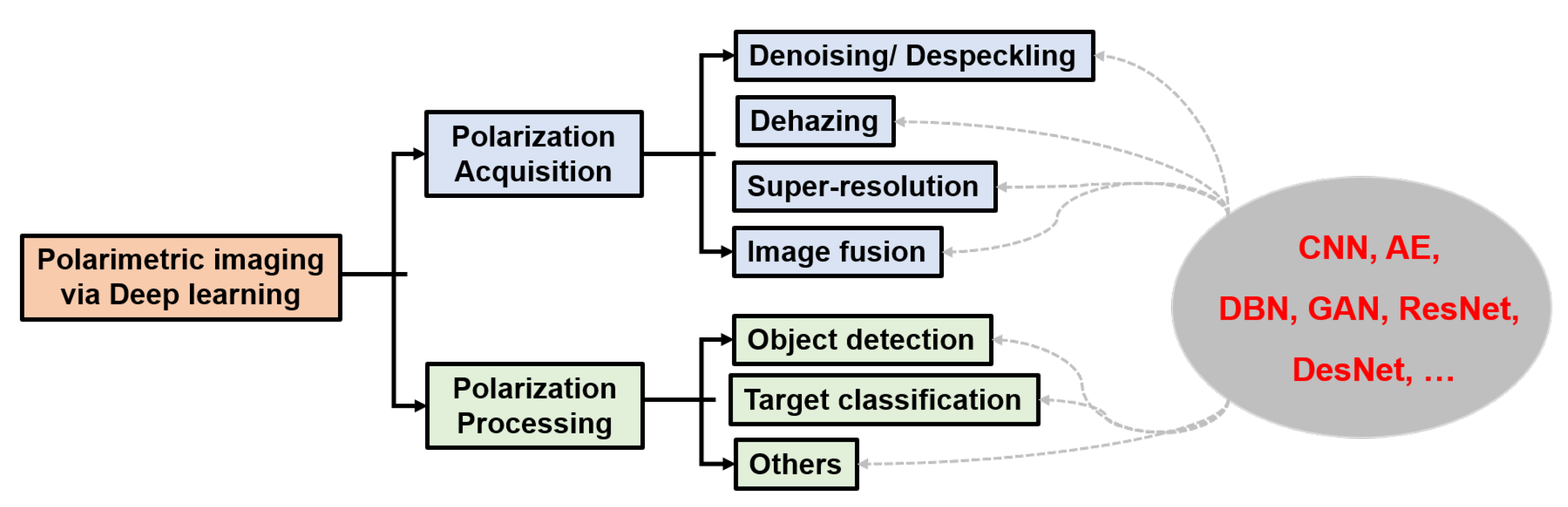

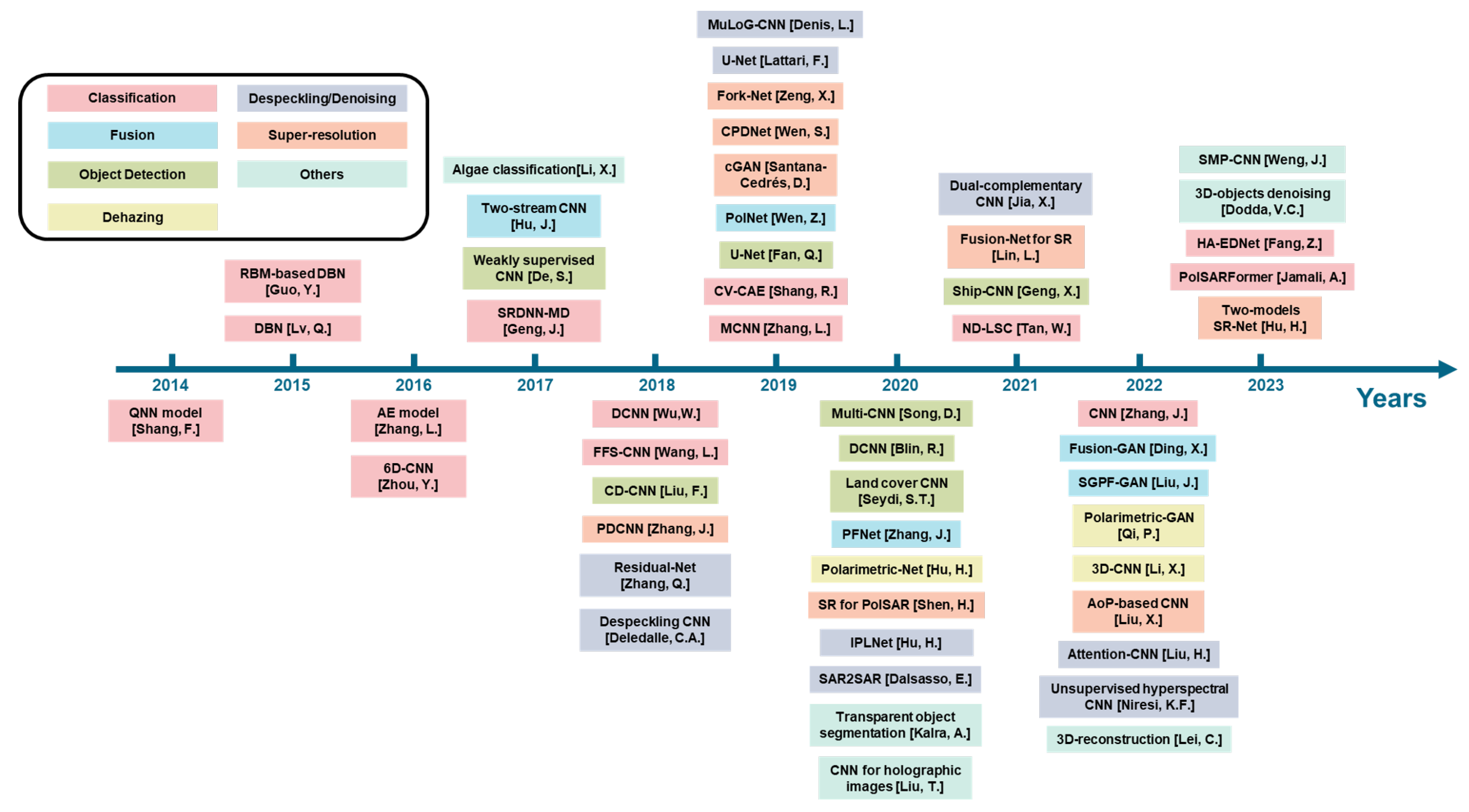

3. PI via Deep Learning

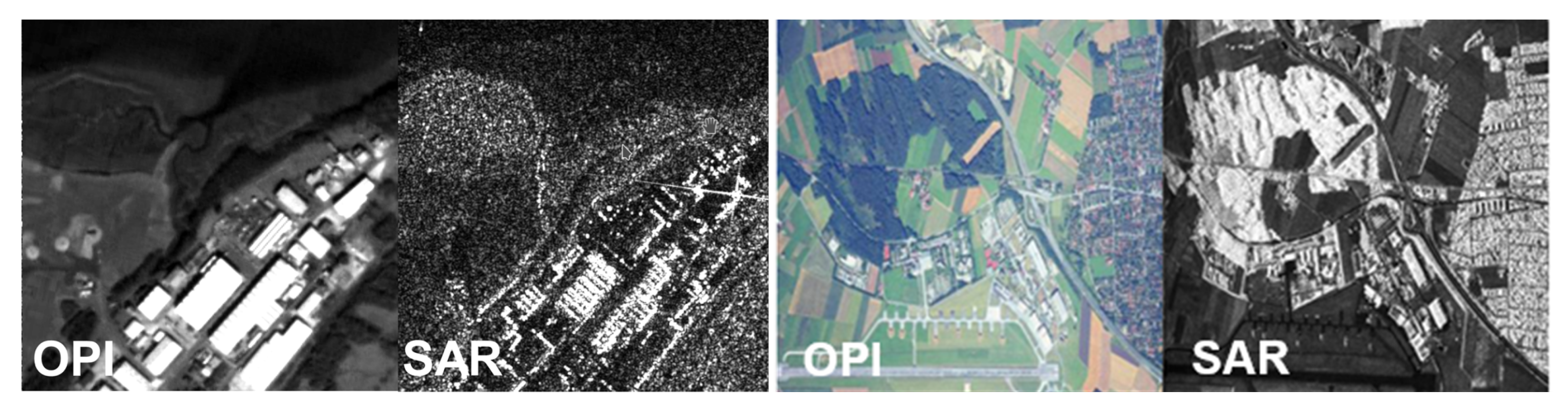

4. Polarization Information Acquisition

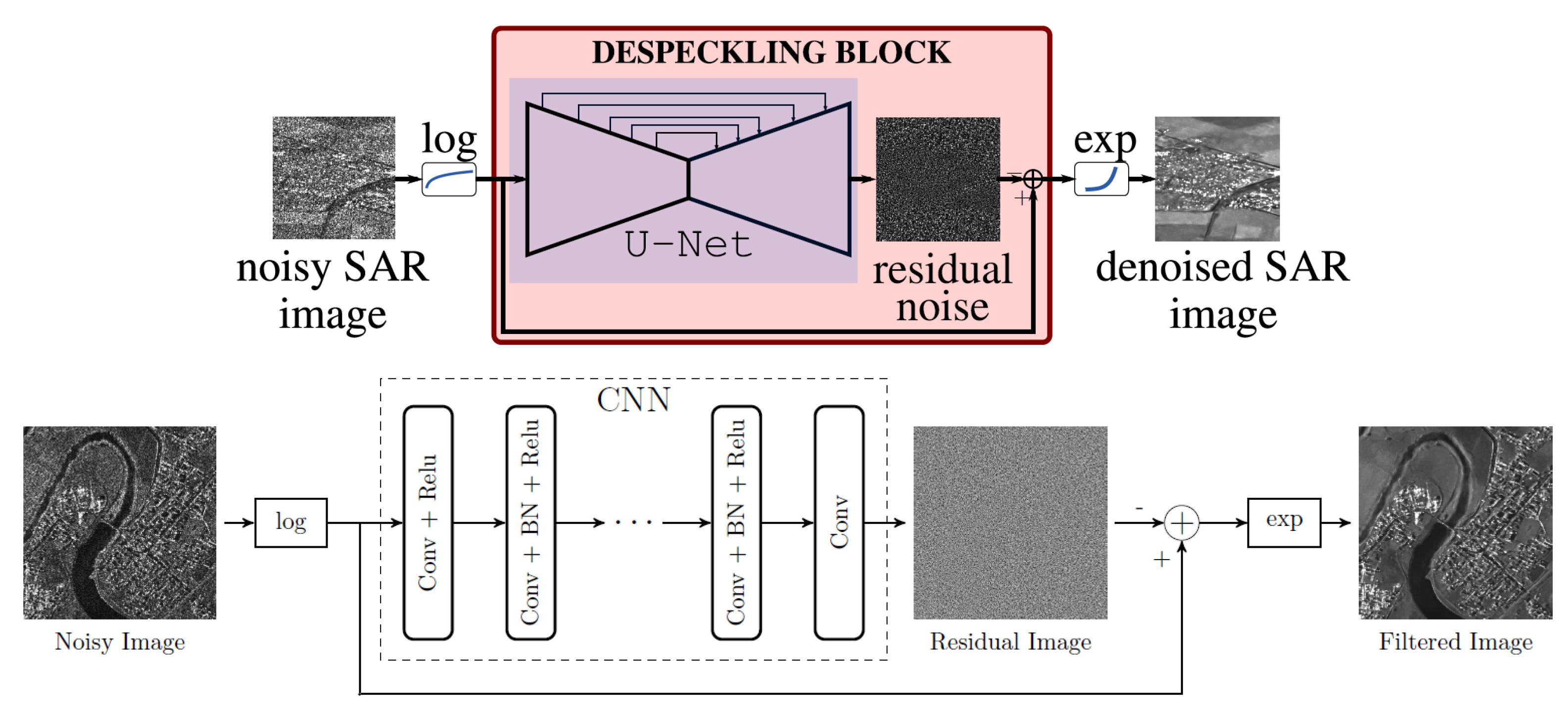

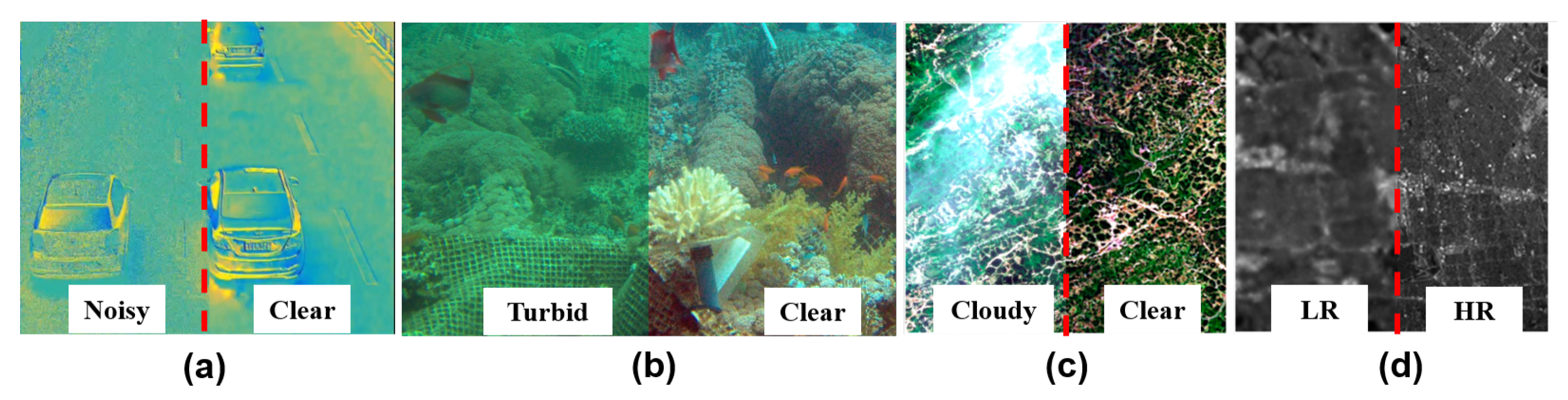

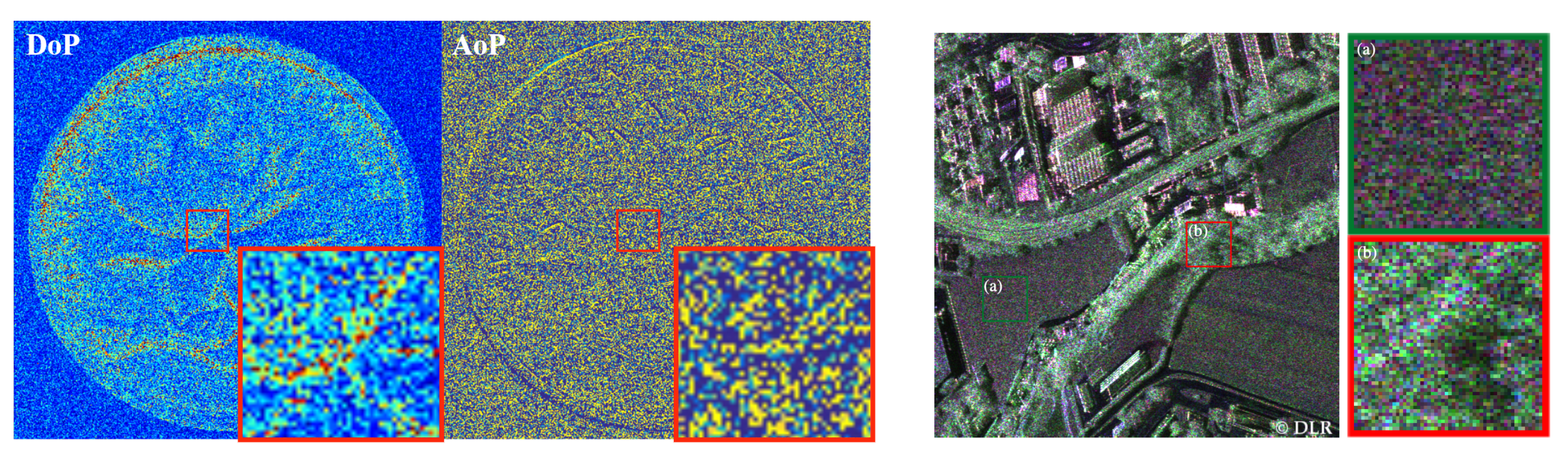

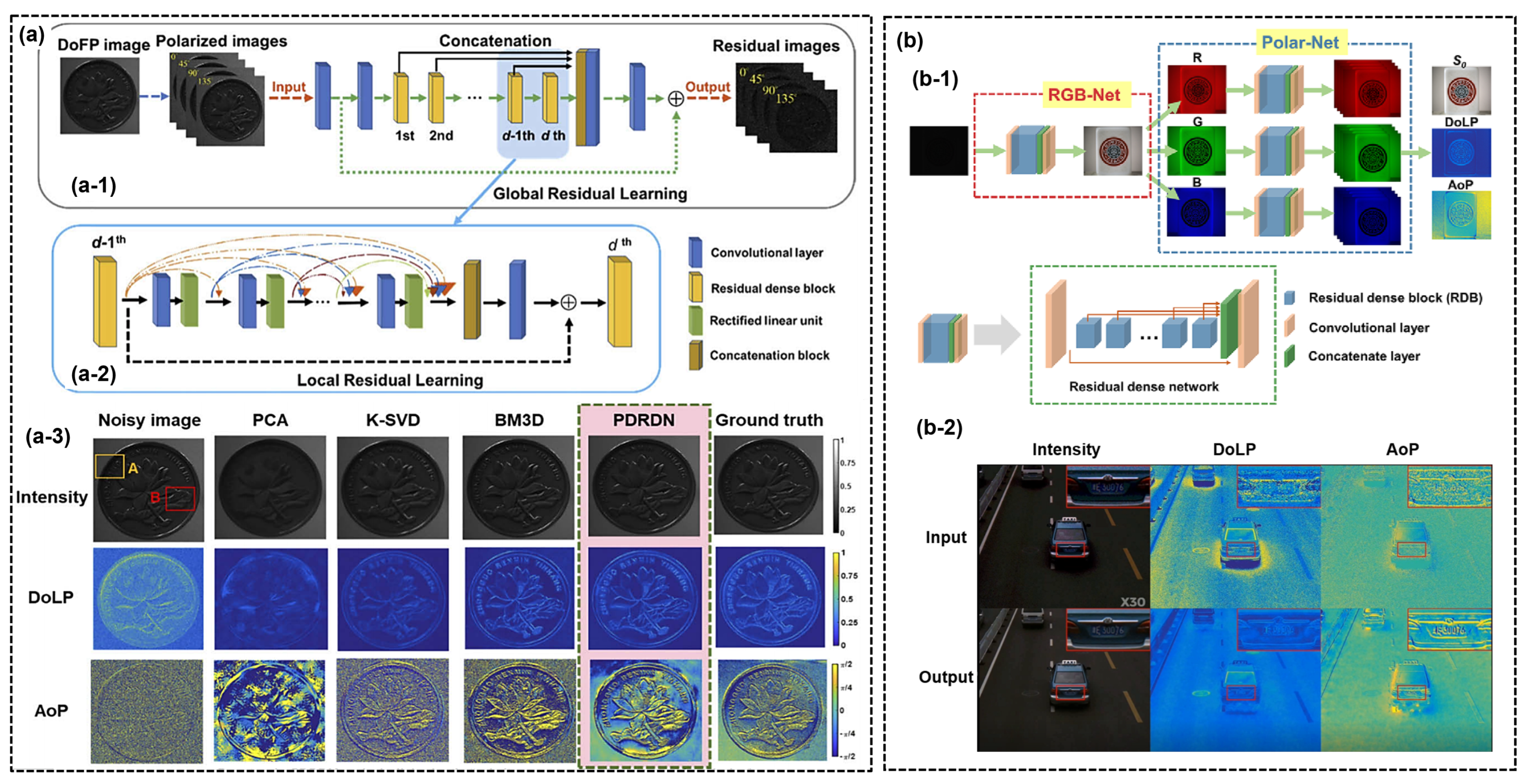

4.1. Denoising and Despeckling

4.1.1. PI Case

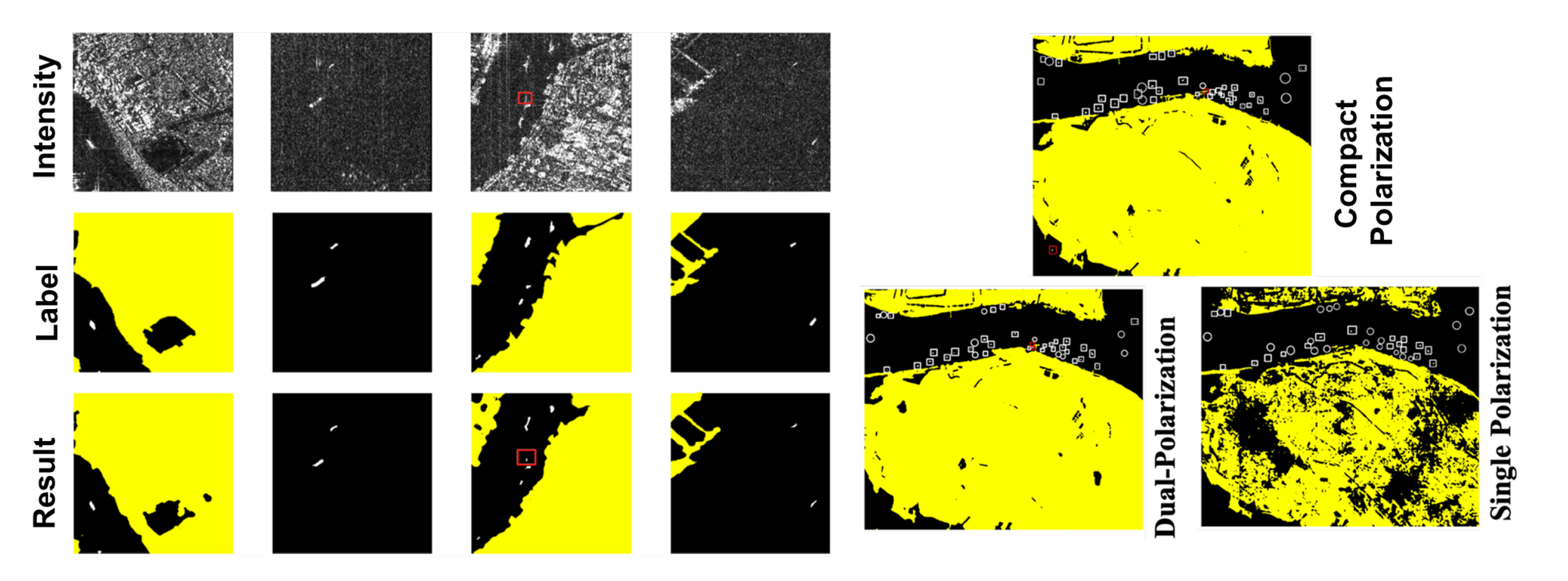

4.1.2. RS Case

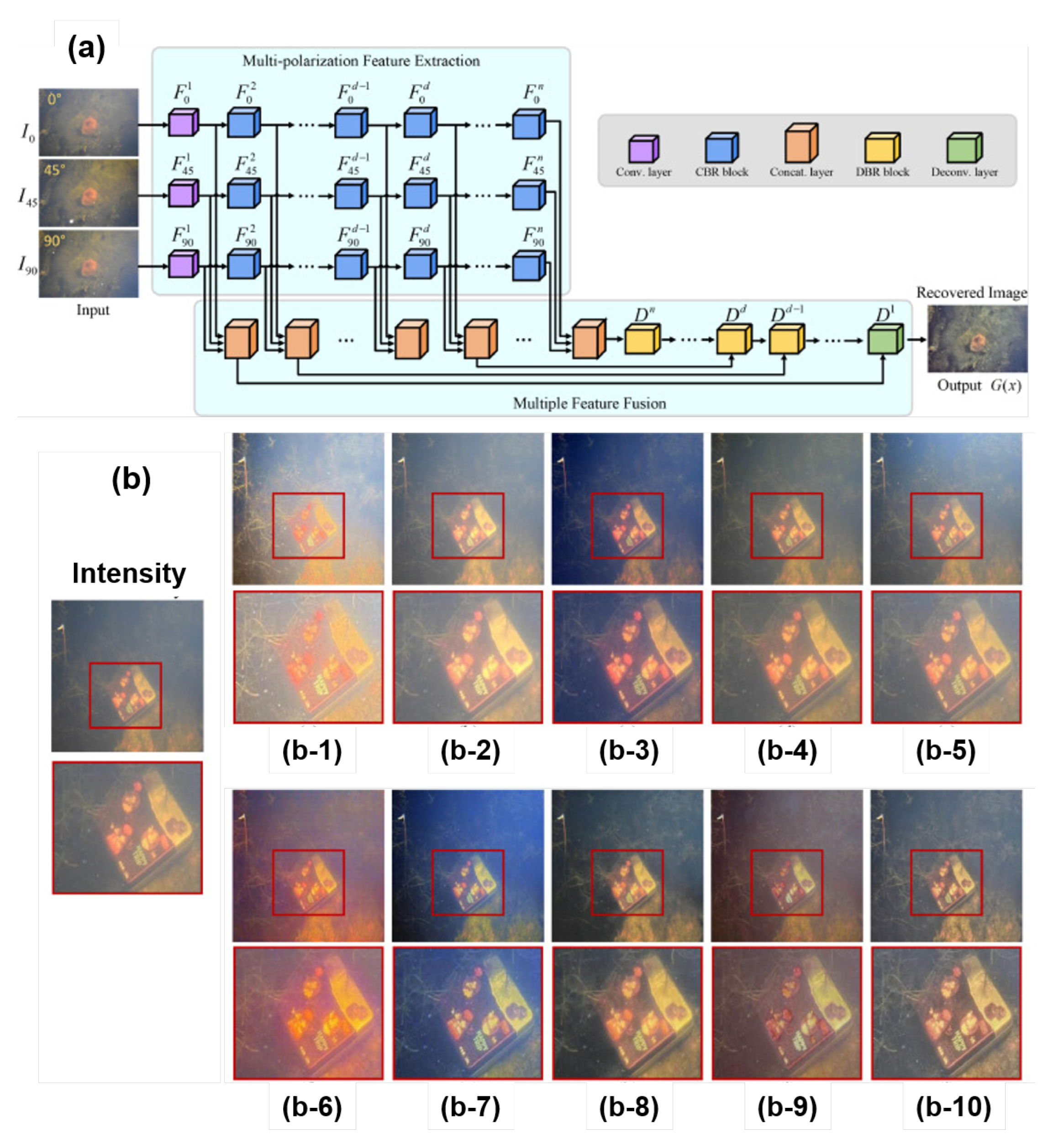

4.2. Dehazing
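
The classical polarization-based dehazing model of Schechner et al. assumes that the directly transmitted light is unpolarized and only the airlight is partially polarized. The per-pixel function below sketches that physical model only; it does not reproduce any specific network. It estimates the airlight A = (I_max − I_min)/p from two images taken through orthogonal polarizer orientations, then the transmission t = 1 − A/A∞, and finally the dehazed radiance L = (I_max + I_min − A)/t. The airlight degree of polarization p and the airlight at infinity A∞ are assumed known; in practice they are estimated, for example from a sky region. The function name and the clamping value t_min are arbitrary choices.

```python
def polarization_dehaze(i_max: float, i_min: float, p_airlight: float,
                        a_inf: float, t_min: float = 0.05):
    """Recover scene radiance for one pixel from two orthogonal polarizer images.

    Assumes unpolarized direct transmission and airlight with degree of
    polarization p_airlight; a_inf is the airlight from an infinitely distant object.
    Returns (radiance, transmission).
    """
    i_total = i_max + i_min                       # total (unfiltered) intensity
    airlight = (i_max - i_min) / p_airlight       # only airlight contributes the difference
    transmission = max(1.0 - airlight / a_inf, t_min)  # clamp to avoid dividing by ~0
    radiance = (i_total - airlight) / transmission
    return radiance, transmission
```

The clamp matters in dense haze or sky regions, where the estimated transmission approaches zero and the division would otherwise amplify noise strongly.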

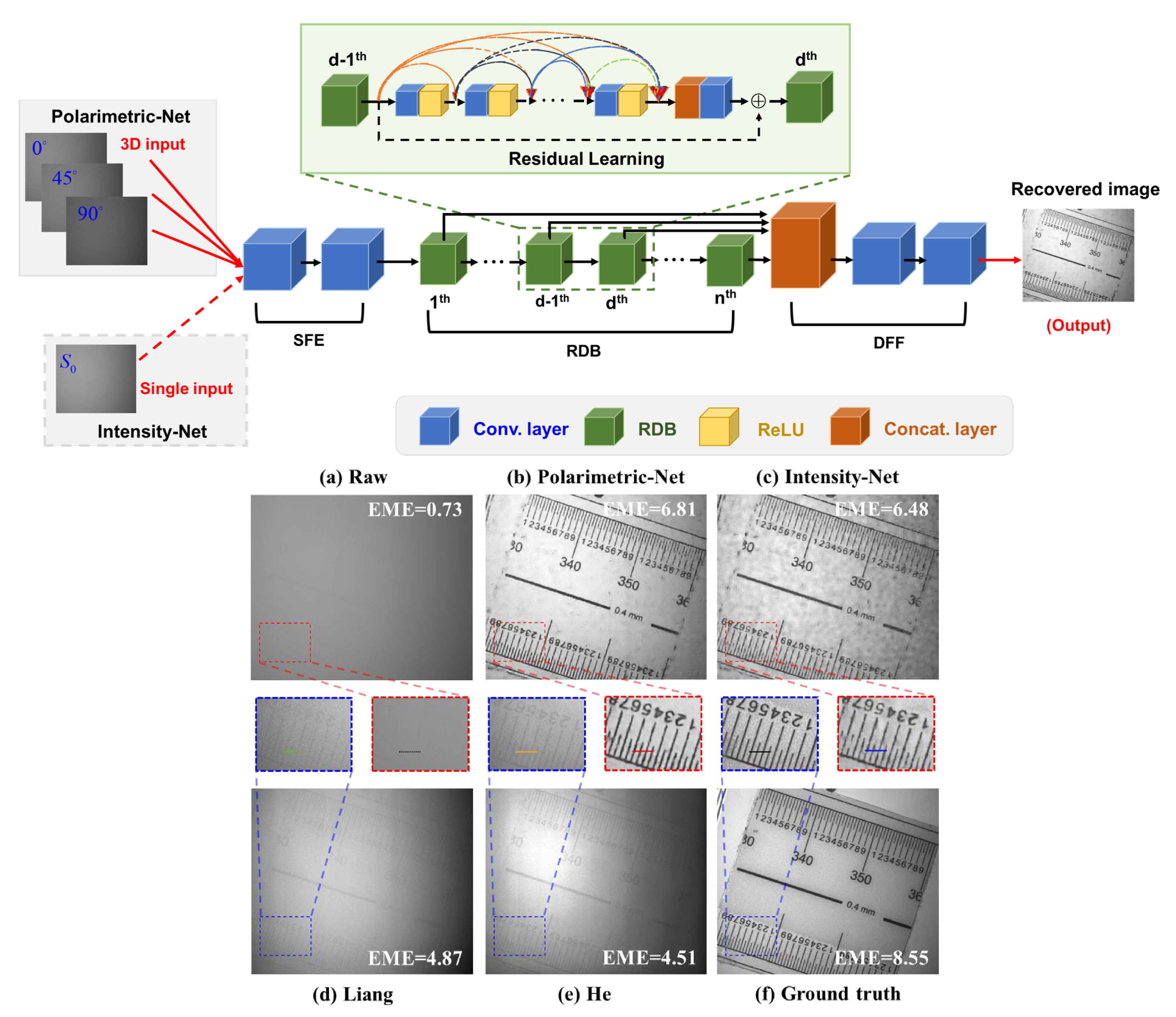

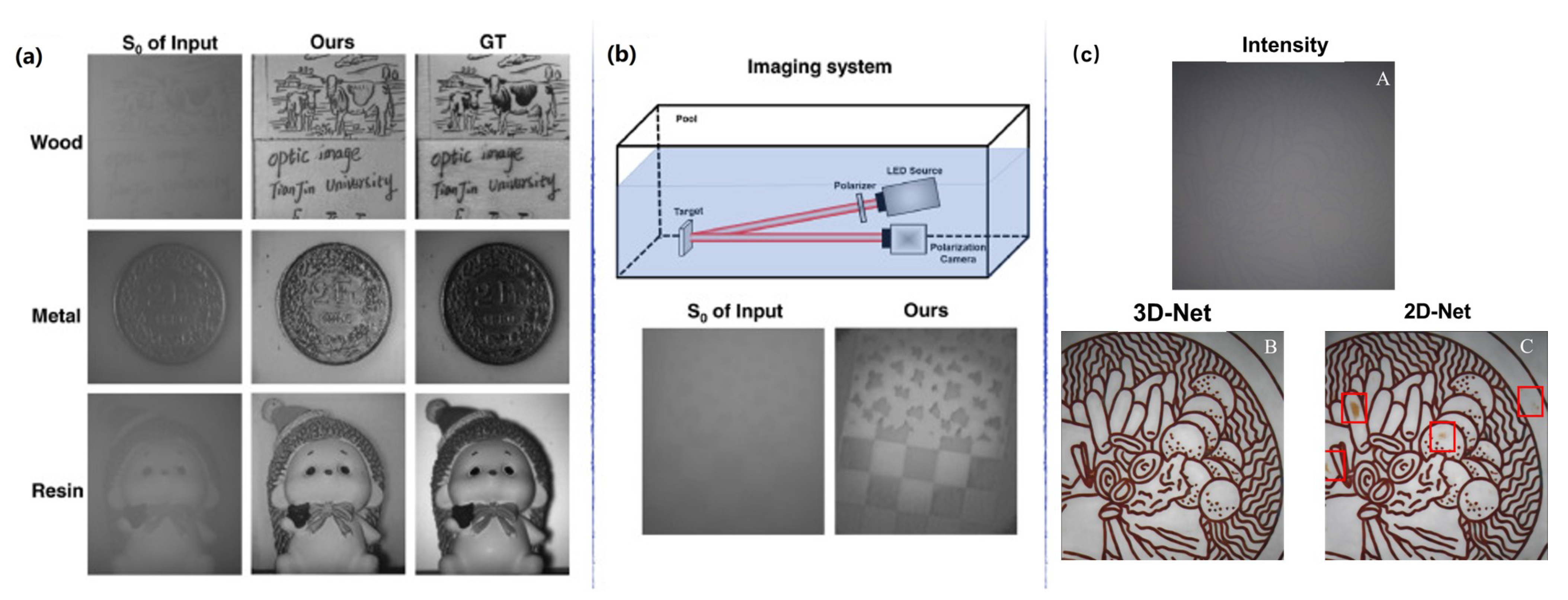

4.3. Super Resolution

4.4. Image Fusion

5. Polarization Information Applications

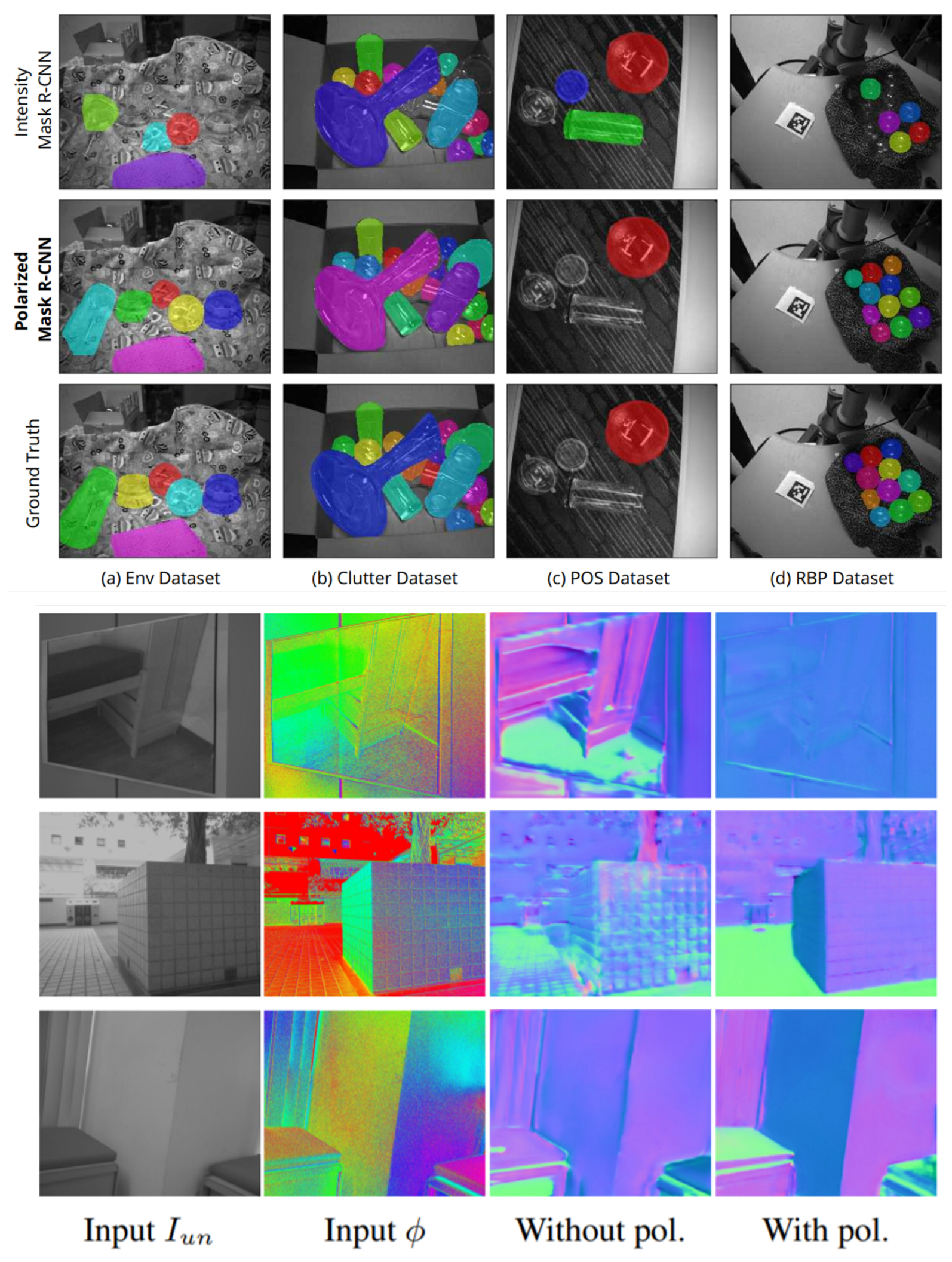

5.1. Object Detection

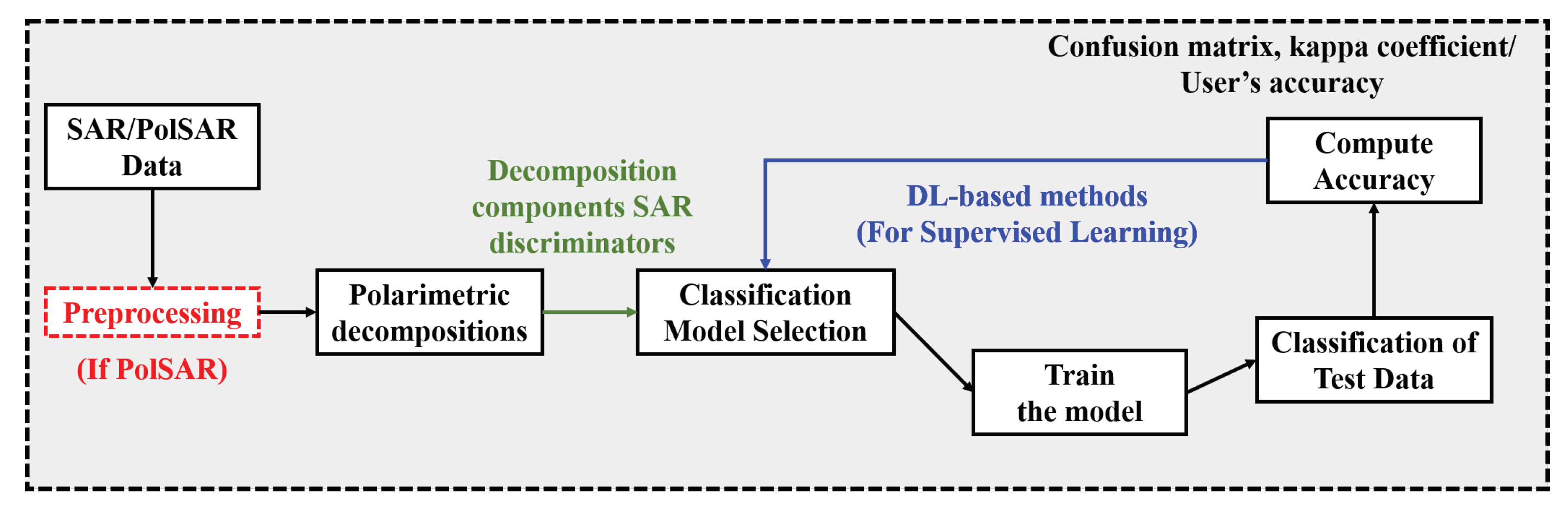

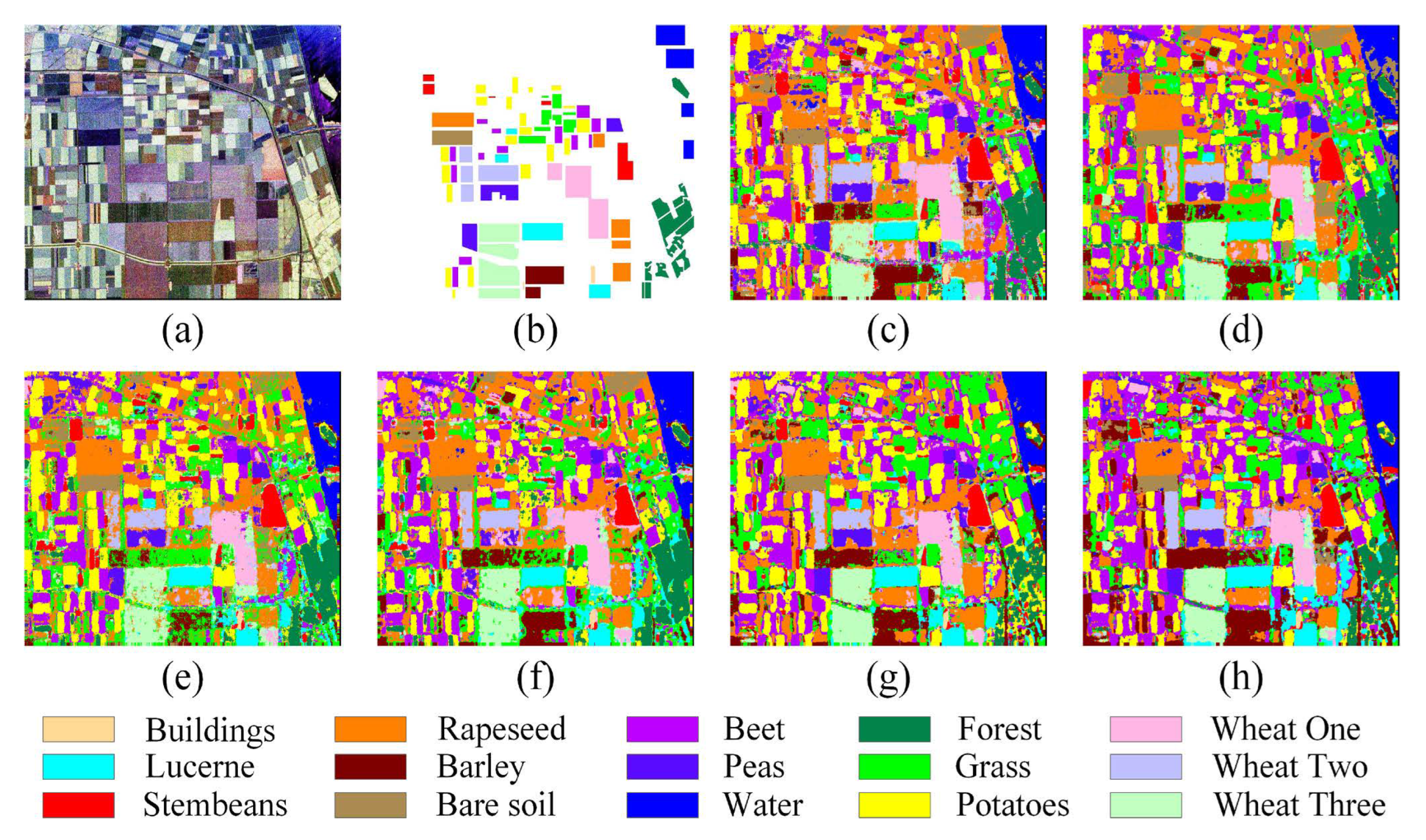

5.2. Target Classification

5.3. Others

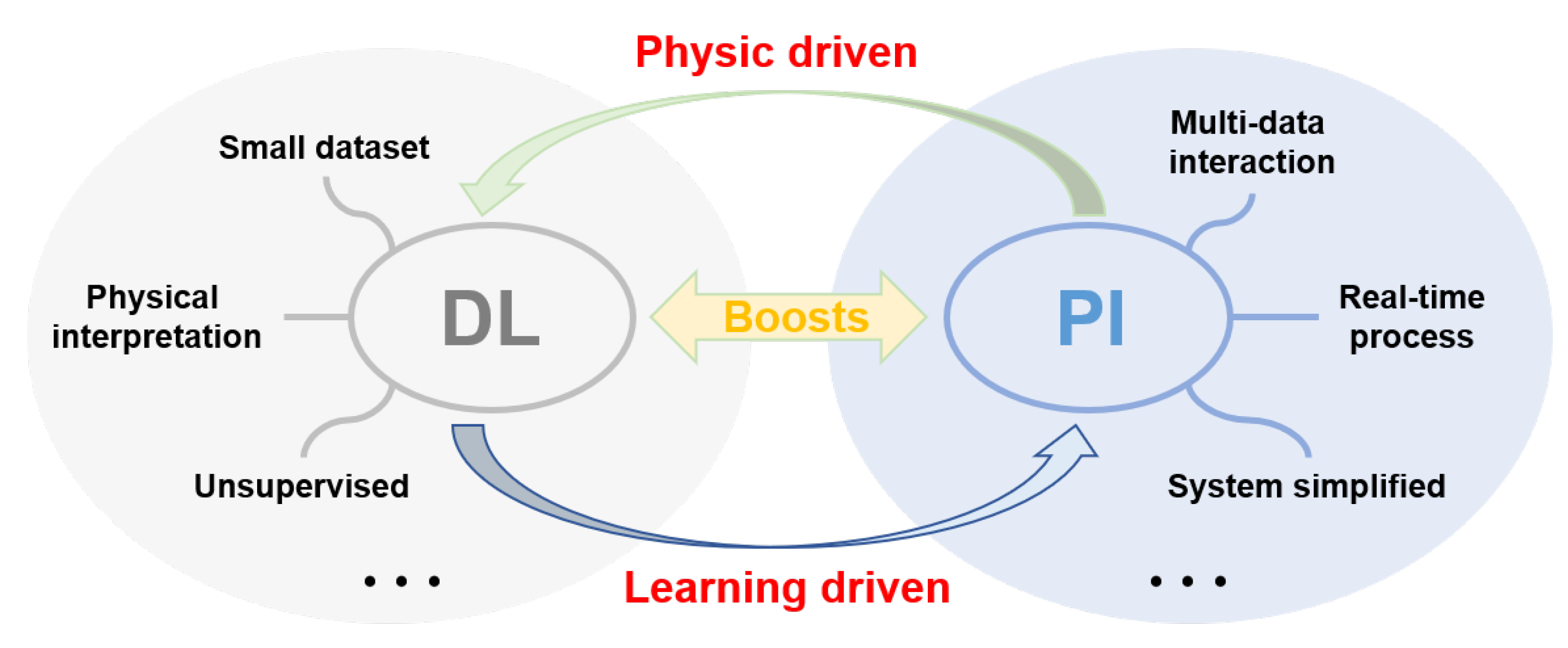

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bass, M.; Van Stryland, E.W.; Williams, D.R.; Wolfe, W.L. Handbook of Optics; McGraw-Hill: New York, NY, USA, 1995; Volume 2. [Google Scholar]

- Tyson, R.K. Principles of Adaptive Optics; CRC Press: Boca Raton, FL, USA, 2015. [Google Scholar]

- Fowles, G.R. Introduction to Modern Optics; Courier Corporation: North Chelmsford, MA, USA, 1989. [Google Scholar]

- Goldstein, D.H. Polarized Light; CRC Press: Boca Raton, FL, USA, 2017. [Google Scholar]

- Li, X.; Li, H.; Lin, Y.; Guo, J.; Yang, J.; Yue, H.; Li, K.; Li, C.; Cheng, Z.; Hu, H.; et al. Learning-based denoising for polarimetric images. Opt. Express 2020, 28, 16309–16321. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Hu, H.; Zhao, L.; Wang, H.; Yu, Y.; Wu, L.; Liu, T. Polarimetric image recovery method combining histogram stretching for underwater imaging. Sci. Rep. 2018, 8, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.; Hu, H.; Li, X.; Guan, Z.; Zhu, W.; Jiang, J.; Liu, K.; Liu, T. An angle of polarization (AoP) visualization method for DoFP polarization image sensors Based on three dimensional HSI color space. Sensors 2019, 19, 1713. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Zhang, L.; Qi, P.; Zhu, Z.; Xu, J.; Liu, T.; Zhai, J.; Hu, H. Are indices of polarimetric purity excellent metrics for object identification in scattering media? Remote Sens. 2022, 14, 4148. [Google Scholar] [CrossRef]

- Song, L.M.W.K.; Adler, D.G.; Conway, J.D.; Diehl, D.L.; Farraye, F.A.; Kantsevoy, S.V.; Kwon, R.; Mamula, P.; Rodriguez, B.; Shah, R.J.; et al. Narrow band imaging and multiband imaging. Gastrointest. Endosc. 2008, 67, 581–589. [Google Scholar] [CrossRef]

- Zhao, Y.; Yi, C.; Kong, S.G.; Pan, Q.; Cheng, Y. Multi-band polarization imaging. In Multi-Band Polarization Imaging and Applications; Springer Berlin Heidelberg: Berlin/Heidelberg, Germany, 2016; pp. 47–71. [Google Scholar]

- Hu, H.; Lin, Y.; Li, X.; Qi, P.; Liu, T. IPLNet: A neural network for intensity-polarization imaging in low light. Opt. Lett. 2020, 45, 6162–6165. [Google Scholar] [CrossRef]

- Guan, Z.; Goudail, F.; Yu, M.; Li, X.; Han, Q.; Cheng, Z.; Hu, H.; Liu, T. Contrast optimization in broadband passive polarimetric imaging based on color camera. Opt. Express 2019, 27, 2444–2454. [Google Scholar] [CrossRef]

- Hariharan, P. Optical Holography: Principles, Techniques, and Applications; Cambridge University Press: Cambridge, UK, 1998. [Google Scholar]

- Kim, M.K. Full color natural light holographic camera. Opt. Express 2013, 21, 9636–9642. [Google Scholar] [CrossRef]

- Levoy, M. Light fields and computational imaging. Computer 2006, 39, 46–55. [Google Scholar] [CrossRef]

- Tyo, J.S.; Goldstein, D.L.; Chenault, D.B.; Shaw, J.A. Review of passive imaging polarimetry for remote sensing applications. Appl. Opt. 2006, 45, 5453–5469. [Google Scholar] [CrossRef]

- Morio, J.; Refregier, P.; Goudail, F.; Dubois-Fernandez, P.C.; Dupuis, X. A characterization of Shannon entropy and Bhattacharyya measure of contrast in polarimetric and interferometric SAR image. Proc. IEEE 2009, 97, 1097–1108. [Google Scholar] [CrossRef]

- Li, X.; Xu, J.; Zhang, L.; Hu, H.; Chen, S.C. Underwater image restoration via Stokes decomposition. Opt. Lett. 2022, 47, 2854–2857. [Google Scholar] [CrossRef]

- Chen, W.; Yan, L.; Chandrasekar, V. Optical polarization remote sensing. Int. J. Remote Sens. 2020, 41, 4849–4852. [Google Scholar] [CrossRef]

- Liu, T.; Guan, Z.; Li, X.; Cheng, Z.; Han, Y.; Yang, J.; Li, K.; Zhao, J.; Hu, H. Polarimetric underwater image recovery for color image with crosstalk compensation. Opt. Lasers Eng. 2020, 124, 105833. [Google Scholar] [CrossRef]

- Meriaudeau, F.; Ferraton, M.; Stolz, C.; Morel, O.; Bigué, L. Polarization imaging for industrial inspection. Image Process. Mach. Vis. Appl. Int. Soc. Opt. Photonics 2008, 6813, 681308. [Google Scholar]

- Liu, X.; Li, X.; Chen, S.C. Enhanced polarization demosaicking network via a precise angle of polarization loss calculation method. Opt. Lett. 2022, 47, 1065–1069. [Google Scholar] [CrossRef]

- Li, X.; Han, Y.; Wang, H.; Liu, T.; Chen, S.C.; Hu, H. Polarimetric Imaging Through Scattering Media: A Review. Front. Phys. 2022, 10, 153. [Google Scholar] [CrossRef]

- Cheng, G.; Xie, X.; Han, J.; Guo, L.; Xia, G.S. Remote sensing image scene classification meets deep learning: Challenges, methods, benchmarks, and opportunities. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3735–3756. [Google Scholar] [CrossRef]

- Demos, S.; Alfano, R. Optical polarization imaging. Appl. Opt. 1997, 36, 150–155. [Google Scholar] [CrossRef]

- Liu, Y.; York, T.; Akers, W.J.; Sudlow, G.P.; Gruev, V.; Achilefu, S. Complementary fluorescence-polarization microscopy using division-of-focal-plane polarization imaging sensor. J. Biomed. Opt. 2012, 17, 116001. [Google Scholar] [CrossRef]

- Fade, J.; Panigrahi, S.; Carré, A.; Frein, L.; Hamel, C.; Bretenaker, F.; Ramachandran, H.; Alouini, M. Long-range polarimetric imaging through fog. Appl. Opt. 2014, 53, 3854–3865. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Hu, H.; Zhao, L.; Wang, H.; Han, Q.; Cheng, Z.; Liu, T. Pseudo-polarimetric method for dense haze removal. IEEE Photonics J. 2019, 11, 6900611. [Google Scholar] [CrossRef]

- Li, X.; Wang, H.; Hu, H.; Liu, T. Polarimetric underwater image recovery based on circularly polarized illumination and histogram stretching. In AOPC 2019: Optical Sensing and Imaging Technology; SPIE: Bellingham, WA, USA, 2019; Volume 11338, p. 113382O. [Google Scholar]

- Zhanghao, K.; Chen, L.; Yang, X.S.; Wang, M.Y.; Jing, Z.L.; Han, H.B.; Zhang, M.Q.; Jin, D.; Gao, J.T.; Xi, P. Super-resolution dipole orientation mapping via polarization demodulation. Light. Sci. Appl. 2016, 5, e16166. [Google Scholar] [CrossRef] [PubMed]

- Hao, X.; Kuang, C.; Wang, T.; Liu, X. Effects of polarization on the de-excitation dark focal spot in STED microscopy. J. Opt. 2010, 12, 115707. [Google Scholar] [CrossRef]

- Li, X.; Goudail, F.; Chen, S.C. Self-calibration for Mueller polarimeters based on DoFP polarization imagers. Opt. Lett. 2022, 47, 1415–1418. [Google Scholar] [CrossRef]

- Li, X.; Liu, W.; Goudail, F.; Chen, S.C. Optimal nonlinear Stokes–Mueller polarimetry for multi-photon processes. Opt. Lett. 2022, 47, 3287–3290. [Google Scholar] [CrossRef]

- Goudail, F.; Terrier, P.; Takakura, Y.; Bigué, L.; Galland, F.; DeVlaminck, V. Target detection with a liquid-crystal-based passive Stokes polarimeter. Appl. Opt. 2004, 43, 274–282. [Google Scholar] [CrossRef]

- Schechner, Y.Y.; Narasimhan, S.G.; Nayar, S.K. Instant dehazing of images using polarization. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I. [Google Scholar]

- Treibitz, T.; Schechner, Y.Y. Active polarization descattering. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 385–399. [Google Scholar] [CrossRef]

- Schechner, Y.Y.; Narasimhan, S.G.; Nayar, S.K. Polarization-based vision through haze. Appl. Opt. 2003, 42, 511–525. [Google Scholar] [CrossRef]

- Ghosh, N.; Vitkin, A.I. Tissue polarimetry: Concepts, challenges, applications, and outlook. J. Biomed. Opt. 2011, 16, 110801. [Google Scholar] [CrossRef]

- Rehbinder, J.; Haddad, H.; Deby, S.; Teig, B.; Nazac, A.; Novikova, T.; Pierangelo, A.; Moreau, F. Ex vivo Mueller polarimetric imaging of the uterine cervix: A first statistical evaluation. J. Biomed. Opt. 2016, 21, 071113. [Google Scholar] [CrossRef]

- Jacques, S.L.; Ramella-Roman, J.C.; Lee, K. Imaging skin pathology with polarized light. J. Biomed. Opt. 2002, 7, 329–340. [Google Scholar] [CrossRef]

- Wang, W.; Lim, L.G.; Srivastava, S.; Bok-Yan So, J.; Shabbir, A.; Liu, Q. Investigation on the potential of Mueller matrix imaging for digital staining. J. Biophotonics 2016, 9, 364–375. [Google Scholar] [CrossRef]

- Pierangelo, A.; Benali, A.; Antonelli, M.R.; Novikova, T.; Validire, P.; Gayet, B.; De Martino, A. Ex-vivo characterization of human colon cancer by Mueller polarimetric imaging. Opt. Express 2011, 19, 1582–1593. [Google Scholar] [CrossRef]

- Parikh, H.; Patel, S.; Patel, V. Classification of SAR and PolSAR images using deep learning: A review. Int. J. Image Data Fusion 2020, 11, 1–32. [Google Scholar] [CrossRef]

- Pierangelo, A.; Manhas, S.; Benali, A.; Fallet, C.; Totobenazara, J.L.; Antonelli, M.R.; Novikova, T.; Gayet, B.; De Martino, A.; Validire, P. Multispectral Mueller polarimetric imaging detecting residual cancer and cancer regression after neoadjuvant treatment for colorectal carcinomas. J. Biomed. Opt. 2013, 18, 046014. [Google Scholar] [CrossRef]

- Lee, J.S.; Grunes, M.R.; De Grandi, G. Polarimetric SAR speckle filtering and its implication for classification. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2363–2373. [Google Scholar]

- Lee, J.S.; Pottier, E. Polarimetric Radar Imaging: From Basics to Applications; CRC Press: Boca Raton, FL, USA, 2017. [Google Scholar]

- Yan, L.; Li, Y.; Chandrasekar, V.; Mortimer, H.; Peltoniemi, J.; Lin, Y. General review of optical polarization remote sensing. Int. J. Remote Sens. 2020, 41, 4853–4864. [Google Scholar] [CrossRef]

- Mullissa, A.G.; Tolpekin, V.; Stein, A.; Perissin, D. Polarimetric differential SAR interferometry in an arid natural environment. Int. J. Appl. Earth Obs. Geoinf. 2017, 59, 9–18. [Google Scholar] [CrossRef]

- Shang, R.; He, J.; Wang, J.; Xu, K.; Jiao, L.; Stolkin, R. Dense connection and depthwise separable convolution based CNN for polarimetric SAR image classification. Knowl.-Based Syst. 2020, 194, 105542. [Google Scholar] [CrossRef]

- Pourshamsi, M.; Xia, J.; Yokoya, N.; Garcia, M.; Lavalle, M.; Pottier, E.; Balzter, H. Tropical forest canopy height estimation from combined polarimetric SAR and LiDAR using machine-learning. ISPRS J. Photogramm. Remote Sens. 2021, 172, 79–94. [Google Scholar] [CrossRef]

- Yang, X.; Pan, T.; Yang, W.; Li, H.C. PolSAR image despeckling using trained models on single channel SAR images. In Proceedings of the 2019 6th Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Xiamen, China, 26–29 November 2019; pp. 1–4. [Google Scholar]

- Hu, J.; Mou, L.; Schmitt, A.; Zhu, X.X. FusioNet: A two-stream convolutional neural network for urban scene classification using PolSAR and hyperspectral data. In Proceedings of the 2017 Joint Urban Remote Sensing Event (JURSE), Dubai, United Arab Emirates, 6–8 March 2017; pp. 1–4. [Google Scholar]

- Ferro-Famil, L.; Pottier, E.; Lee, J.S. Unsupervised classification of multifrequency and fully polarimetric SAR images based on the H/A/Alpha-Wishart classifier. IEEE Trans. Geosci. Remote Sens. 2001, 39, 2332–2342. [Google Scholar] [CrossRef]

- Singha, S.; Johansson, A.M.; Doulgeris, A.P. Robustness of SAR sea ice type classification across incidence angles and seasons at L-band. IEEE Trans. Geosci. Remote Sens. 2020, 59, 9941–9952. [Google Scholar] [CrossRef]

- Pallotta, L.; Orlando, D. Polarimetric covariance eigenvalues classification in SAR images. IEEE Geosci. Remote Sens. Lett. 2018, 16, 746–750. [Google Scholar] [CrossRef]

- Tadono, T.; Ohki, M.; Abe, T. Summary of natural disaster responses by the Advanced Land Observing Satellite-2 (ALOS-2). Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 69–72. [Google Scholar] [CrossRef]

- Natsuaki, R.; Hirose, A. L-Band SAR Interferometric Analysis for Flood Detection in Urban Area-a Case Study in 2015 Joso Flood, Japan. In Proceedings of the IGARSS 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 6592–6595. [Google Scholar]

- Hu, H.; Zhang, Y.; Li, X.; Lin, Y.; Cheng, Z.; Liu, T. Polarimetric underwater image recovery via deep learning. Opt. Lasers Eng. 2020, 133, 106152. [Google Scholar] [CrossRef]

- Li, X.; Li, Z.; Feng, R.; Luo, S.; Zhang, C.; Jiang, M.; Shen, H. Generating high-quality and high-resolution seamless satellite imagery for large-scale urban regions. Remote Sens. 2020, 12, 81. [Google Scholar] [CrossRef]

- Pan, T.; Peng, D.; Yang, W.; Li, H.C. A filter for SAR image despeckling using pre-trained convolutional neural network model. Remote Sens. 2019, 11, 2379. [Google Scholar] [CrossRef]

- Zhang, Q.; Yuan, Q.; Li, J.; Yang, Z.; Ma, X. Learning a dilated residual network for SAR image despeckling. Remote Sens. 2018, 10, 196. [Google Scholar] [CrossRef]

- Goudail, F. Noise minimization and equalization for Stokes polarimeters in the presence of signal-dependent Poisson shot noise. Opt. Lett. 2009, 34, 647–649. [Google Scholar] [CrossRef]

- Denis, L.; Dalsasso, E.; Tupin, F. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 411–414.

- Qi, P.; Li, X.; Han, Y.; Zhang, L.; Xu, J.; Cheng, Z.; Liu, T.; Zhai, J.; Hu, H. U2R-pGAN: Unpaired underwater-image recovery with polarimetric generative adversarial network. Opt. Lasers Eng. 2022, 157, 107112. [Google Scholar] [CrossRef]

- Akiyama, K.; Ikeda, S.; Pleau, M.; Fish, V.L.; Tazaki, F.; Kuramochi, K.; Broderick, A.E.; Dexter, J.; Mościbrodzka, M.; Gowanlock, M.; et al. Superresolution full-polarimetric imaging for radio interferometry with sparse modeling. Astron. J. 2017, 153, 159. [Google Scholar] [CrossRef]

- Ahmed, A.; Zhao, X.; Gruev, V.; Zhang, J.; Bermak, A. Residual interpolation for division of focal plane polarization image sensors. Opt. Express 2017, 25, 10651–10662. [Google Scholar] [CrossRef]

- Tao, Y.; Muller, J.P. Super-resolution restoration of misr images using the ucl magigan system. Remote Sens. 2019, 11, 52. [Google Scholar] [CrossRef]

- Goudail, F.; Bénière, A. Optimization of the contrast in polarimetric scalar images. Opt. Lett. 2009, 34, 1471–1473. [Google Scholar] [CrossRef]

- Ma, X.; Wu, P.; Wu, Y.; Shen, H. A review on recent developments in fully polarimetric SAR image despeckling. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 11, 743–758. [Google Scholar] [CrossRef]

- Zhang, L.; Dong, H.; Zou, B. Efficiently utilizing complex-valued PolSAR image data via a multi-task deep learning framework. ISPRS J. Photogramm. Remote Sens. 2019, 157, 59–72. [Google Scholar] [CrossRef]

- Li, N.; Zhao, Y.; Pan, Q.; Kong, S.G.; Chan, J.C.W. Full-Time Monocular Road Detection Using Zero-Distribution Prior of Angle of Polarization. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 457–473. [Google Scholar]

- Dickson, C.N.; Wallace, A.M.; Kitchin, M.; Connor, B. Long-wave infrared polarimetric cluster-based vehicle detection. JOSA A 2015, 32, 2307–2315. [Google Scholar] [CrossRef]

- Carnicer, A.; Javidi, B. Polarimetric 3D integral imaging in photon-starved conditions. Opt. Express 2015, 23, 6408–6417. [Google Scholar] [CrossRef]

- Hagen, N.; Otani, Y. Stokes polarimeter performance: General noise model and analysis. Appl. Opt. 2018, 57, 4283–4296. [Google Scholar] [CrossRef]

- Li, X.; Hu, H.; Liu, T.; Huang, B.; Song, Z. Optimal distribution of integration time for intensity measurements in degree of linear polarization polarimetry. Opt. Express 2016, 24, 7191–7200. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Hu, H.; Wang, H.; Liu, T. Optimal Measurement Matrix of Partial Polarimeter for Measuring Ellipsometric Parameters with Eight Intensity Measurements. IEEE Access 2019, 7, 31494–31500. [Google Scholar] [CrossRef]

- Goudail, F.; Li, X.; Boffety, M.; Roussel, S.; Liu, T.; Hu, H. Precision of retardance autocalibration in full-Stokes division-of-focal-plane imaging polarimeters. Opt. Lett. 2019, 44, 5410–5413. [Google Scholar] [CrossRef] [PubMed]

- Hu, H.; Qi, P.; Li, X.; Cheng, Z.; Liu, T. Underwater imaging enhancement based on a polarization filter and histogram attenuation prior. J. Phys. D Appl. Phys. 2021, 54, 175102. [Google Scholar] [CrossRef]

- Lopez-Martinez, C.; Fabregas, X. Polarimetric SAR speckle noise model. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2232–2242. [Google Scholar] [CrossRef]

- Jaffe, J.S. Computer modeling and the design of optimal underwater imaging systems. IEEE J. Ocean. Eng. 1990, 15, 101–111. [Google Scholar] [CrossRef]

- Cariou, J.; Jeune, B.L.; Lotrian, J.; Guern, Y. Polarization effects of seawater and underwater targets. Appl. Opt. 1990, 29, 1689. [Google Scholar] [CrossRef]

- Shen, H.; Lin, L.; Li, J.; Yuan, Q.; Zhao, L. A residual convolutional neural network for polarimetric SAR image super-resolution. ISPRS J. Photogramm. Remote Sens. 2020, 161, 90–108. [Google Scholar] [CrossRef]

- Li, X.; Le Teurnier, B.; Boffety, M.; Liu, T.; Hu, H.; Goudail, F. Theory of autocalibration feasibility and precision in full Stokes polarization imagers. Opt. Express 2020, 28, 15268–15283. [Google Scholar] [CrossRef]

- Li, X.; Hu, H.; Goudail, F.; Liu, T. Fundamental precision limits of full Stokes polarimeters based on DoFP polarization cameras for an arbitrary number of acquisitions. Opt. Express 2019, 27, 31261–31272. [Google Scholar] [CrossRef]

- Li, X.; Goudail, F.; Hu, H.; Han, Q.; Cheng, Z.; Liu, T. Optimal ellipsometric parameter measurement strategies based on four intensity measurements in presence of additive Gaussian and Poisson noise. Opt. Express 2018, 26, 34529–34546. [Google Scholar] [CrossRef]

- Li, X.; Hu, H.; Wang, H.; Wu, L.; Liu, T.G. Influence of noise statistics on optimizing the distribution of integration time for degree of linear polarization polarimetry. Opt. Eng. 2018, 57, 064110. [Google Scholar] [CrossRef]

- Li, X.; Hu, H.; Wu, L.; Liu, T. Optimization of instrument matrix for Mueller matrix ellipsometry based on partial elements analysis of the Mueller matrix. Opt. Express 2017, 25, 18872–18884. [Google Scholar] [CrossRef]

- Li, X.; Liu, T.; Huang, B.; Song, Z.; Hu, H. Optimal distribution of integration time for intensity measurements in Stokes polarimetry. Opt. Express 2015, 23, 27690–27699. [Google Scholar] [CrossRef]

- Dubreuil, M.; Delrot, P.; Leonard, I.; Alfalou, A.; Brosseau, C.; Dogariu, A. Exploring underwater target detection by imaging polarimetry and correlation techniques. Appl. Opt. 2013, 52, 997–1005. [Google Scholar] [CrossRef]

- Sun, R.; Sun, X.; Chen, F.; Pan, H.; Song, Q. An artificial target detection method combining a polarimetric feature extractor with deep convolutional neural networks. Int. J. Remote Sens. 2020, 41, 4995–5009. [Google Scholar] [CrossRef]

- Fan, Q.; Chen, F.; Cheng, M.; Lou, S.; Xiao, R.; Zhang, B.; Wang, C.; Li, J. Ship detection using a fully convolutional network with compact polarimetric sar images. Remote Sens. 2019, 11, 2171. [Google Scholar] [CrossRef]

- Liu, F.; Jiao, L.; Tang, X.; Yang, S.; Ma, W.; Hou, B. Local restricted convolutional neural network for change detection in polarimetric SAR images. IEEE Trans. Neural Networks Learn. Syst. 2018, 30, 818–833. [Google Scholar] [CrossRef]

- Goudail, F.; Tyo, J.S. When is polarimetric imaging preferable to intensity imaging for target detection? JOSA A 2011, 28, 46–53. [Google Scholar] [CrossRef]

- Wolff, L.B. Polarization-based material classification from specular reflection. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 1059–1071. [Google Scholar] [CrossRef]

- Tominaga, S.; Kimachi, A. Polarization imaging for material classification. Opt. Eng. 2008, 47, 123201. [Google Scholar]

- Fernández-Michelli, J.I.; Hurtado, M.; Areta, J.A.; Muravchik, C.H. Unsupervised classification algorithm based on EM method for polarimetric SAR images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 56–65. [Google Scholar] [CrossRef]

- Wen, Z.; Wu, Q.; Liu, Z.; Pan, Q. Polar-spatial feature fusion learning with variational generative-discriminative network for PolSAR classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8914–8927. [Google Scholar] [CrossRef]

- Solomon, J.E. Polarization imaging. Appl. Opt. 1981, 20, 1537–1544. [Google Scholar] [CrossRef]

- Daily, M.; Elachi, C.; Farr, T.; Schaber, G. Discrimination of geologic units in Death Valley using dual frequency and polarization imaging radar data. Geophys. Res. Lett. 1978, 5, 889–892. [Google Scholar] [CrossRef]

- Leader, J. Polarization discrimination in remote sensing. In Proceedings of the AGARD Electromagnetic Wave Propagation Involving Irregular Surfaces and Inhomogeneous Media 12 p (SEE N75-22045 13-70), Hague, The Netherlands, 25–29 March 1975. [Google Scholar]

- Gruev, V.; Perkins, R.; York, T. CCD polarization imaging sensor with aluminum nanowire optical filters. Opt. Express 2010, 18, 19087–19094. [Google Scholar] [CrossRef]

- Zhong, H.; Liu, G. Nonlocal Means Filter for Polarimetric SAR Data Despeckling Based on Discriminative Similarity Measure. IEEE Geosci. Remote Sens. Lett. 2014, 11, 514–518. [Google Scholar]

- Zhao, Y.; Liu, J.G.; Zhang, B.; Hong, W.; Wu, Y.R. Adaptive Total Variation Regularization Based SAR Image Despeckling and Despeckling Evaluation Index. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2765–2774. [Google Scholar] [CrossRef]

- Nie, X.; Qiao, H.; Zhang, B.; Wang, Z. PolSAR image despeckling based on the Wishart distribution and total variation regularization. In Proceedings of the 11th World Congress on Intelligent Control and Automation, Shenyang, China, 29 June–4 July 2014; pp. 1479–1484. [Google Scholar]

- Zhong, H.; Zhang, J.; Liu, G. Robust polarimetric SAR despeckling based on nonlocal means and distributed Lee filter. IEEE Trans. Geosci. Remote Sens. 2013, 52, 4198–4210. [Google Scholar] [CrossRef]

- Zhang, J.; Luo, H.; Liang, R.; Zhou, W.; Hui, B.; Chang, Z. PCA-based denoising method for division of focal plane polarimeters. Optics Express 2017, 25, 2391–2400. [Google Scholar] [CrossRef]

- Wenbin, Y.; Shiting, L.; Xiaojin, Z.; Abubakar, A.; Amine, B. A K Times Singular Value Decomposition Based Image Denoising Algorithm for DoFP Polarization Image Sensors with Gaussian Noise. IEEE Sens. J. 2018, 18, 6138–6144. [Google Scholar]

- Song, S.; Xu, B.; Yang, J. Ship detection in polarimetric SAR images via variational Bayesian inference. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2819–2829. [Google Scholar] [CrossRef]

- Abubakar, A.; Zhao, X.; Li, S.; Takruri, M.; Bastaki, E.; Bermak, A. A Block-Matching and 3-D Filtering Algorithm for Gaussian Noise in DoFP Polarization Images. IEEE Sens. J. 2018, 18, 7429–7435. [Google Scholar] [CrossRef]

- Ball, J.E.; Anderson, D.T.; Chan, C.S. Comprehensive survey of deep learning in remote sensing: Theories, tools, and challenges for the community. J. Appl. Remote Sens. 2017, 11, 042609. [Google Scholar] [CrossRef]

- Yuan, Q.; Shen, H.; Li, T.; Li, Z.; Li, S.; Jiang, Y.; Xu, H.; Tan, W.; Yang, Q.; Wang, J.; et al. Deep learning in environmental remote sensing: Achievements and challenges. Remote Sens. Environ. 2020, 241, 111716. [Google Scholar] [CrossRef]

- Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260. [Google Scholar] [CrossRef]

- Khedri, E.; Hasanlou, M.; Tabatabaeenejad, A. Estimating Soil Moisture Using Polsar Data: A Machine Learning Approach. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 133–137. [Google Scholar] [CrossRef]

- Mahendru, A.; Sarkar, M. Bio-inspired object classification using polarization imaging. In Proceedings of the 2012 Sixth International Conference on Sensing Technology (ICST), Kolkata, India, 18–21 December 2012; pp. 207–212. [Google Scholar]

- Zhang, L.; Shi, L.; Cheng, J.C.Y.; Chu, W.C.; Yu, S.C.H. LPAQR-Net: Efficient Vertebra Segmentation from Biplanar Whole-spine Radiographs. IEEE J. Biomed. Health Inform. 2021, 25, 2710–2721. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, L.; Du, B. Deep learning for remote sensing data: A technical tutorial on the state of the art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40. [Google Scholar] [CrossRef]

- Takruri, M.; Abubakar, A.; Alnaqbi, N.; Al Shehhi, H.; Jallad, A.H.M.; Bermak, A. DoFP-ML: A Machine Learning Approach to Food Quality Monitoring Using a DoFP Polarization Image Sensor. IEEE Access 2020, 8, 150282–150290. [Google Scholar] [CrossRef]

- Hänsch, R.; Hellwich, O. Skipping the real world: Classification of PolSAR images without explicit feature extraction. ISPRS J. Photogramm. Remote Sens. 2018, 140, 122–132. [Google Scholar] [CrossRef]

- Wang, H.; Xu, F.; Jin, Y.Q. A review of PolSAR image classification: From polarimetry to deep learning. In Proceedings of the IGARSS 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 3189–3192. [Google Scholar]

- Pourshamsi, M.; Garcia, M.; Lavalle, M.; Pottier, E.; Balzter, H. Machine-Learning Fusion of PolSAR and LiDAR Data for Tropical Forest Canopy Height Estimation. In Proceedings of the IGARSS 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 8108–8111. [Google Scholar]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Deng, L.; Yu, D. Deep learning: Methods and applications. Found. Trends Signal Process. 2014, 7, 197–387. [Google Scholar] [CrossRef]

- Paoletti, M.; Haut, J.; Plaza, J.; Plaza, A. Deep learning classifiers for hyperspectral imaging: A review. ISPRS J. Photogramm. Remote Sens. 2019, 158, 279–317. [Google Scholar] [CrossRef]

- Reichstein, M.; Camps-Valls, G.; Stevens, B.; Jung, M.; Denzler, J.; Carvalhais, N. Deep learning and process understanding for data-driven Earth system science. Nature 2019, 566, 195–204. [Google Scholar] [CrossRef]

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177. [Google Scholar] [CrossRef]

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49. [Google Scholar] [CrossRef]

- Liu, L.; Lei, B. Can SAR images and optical images transfer with each other? In Proceedings of the IGARSS 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 7019–7022. [Google Scholar]

- Wang, H.; Zhang, Z.; Hu, Z.; Dong, Q. SAR-to-Optical Image Translation with Hierarchical Latent Features. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12. [Google Scholar] [CrossRef]

- Yang, X.; Zhao, J.; Wei, Z.; Wang, N.; Gao, X. SAR-to-optical image translation based on improved CGAN. Pattern Recognit. 2022, 121, 108208. [Google Scholar] [CrossRef]

- Fuentes Reyes, M.; Auer, S.; Merkle, N.; Henry, C.; Schmitt, M. Sar-to-optical image translation based on conditional generative adversarial networks—Optimization, opportunities and limits. Remote Sens. 2019, 11, 2067. [Google Scholar] [CrossRef]

- Guneet Mutreja, Rohit Singh. SAR to RGB Translation Using CycleGAN. 2020. Available online: https://www.esri.com/arcgis-blog/products/api-python/imagery/sar-to-rgb-translation-using-cyclegan/ (accessed on 10 March 2020).

- Zebker, H.A.; Van Zyl, J.J. Imaging radar polarimetry: A review. Proc. IEEE 1991, 79, 1583–1606. [Google Scholar] [CrossRef]

- Boerner, W.M.; Cram, L.A.; Holm, W.A.; Stein, D.E.; Wiesbeck, W.; Keydel, W.; Giuli, D.; Gjessing, D.T.; Molinet, F.A.; Brand, H. Direct and Inverse Methods in Radar Polarimetry; Springer Science & Business Media: Berlin, Germany, 2013; Volume 350. [Google Scholar]

- Jones, R.C. A new calculus for the treatment of optical systemsi. description and discussion of the calculus. JOSA A 1941, 31, 488–493. [Google Scholar] [CrossRef]

- Jones, R.C. A new calculus for the treatment of optical systems. IV. JOSA A 1942, 32, 486–493. [Google Scholar] [CrossRef]

- Jones, R.C. A new calculus for the treatment of optical systemsv. A more general formulation, and description of another calculus. JOSA A 1947, 37, 107–110. [Google Scholar] [CrossRef]

- Pérez, J.J.G.; Ossikovski, R. Polarized Light and the Mueller Matrix Approach; CRC Press: Boca Raton, FL, USA, 2017. [Google Scholar]

- Oyama, K.; Hirose, A. Phasor quaternion neural networks for singular point compensation in polarimetric-interferometric synthetic aperture radar. IEEE Trans. Geosci. Remote Sens. 2018, 57, 2510–2519. [Google Scholar] [CrossRef]

- Shang, R.; Wang, G.; A Okoth, M.; Jiao, L. Complex-valued convolutional autoencoder and spatial pixel-squares refinement for polarimetric SAR image classification. Remote Sens. 2019, 11, 522. [Google Scholar] [CrossRef]

- Henderson, F.; Lewis, A.; Reyerson, R. Polarimetry in Radar Remote Sensing: Basic and Applied Concepts; Wiley: Hoboken, NJ, USA, 1998. [Google Scholar]

- Yang, C.; Hou, B.; Ren, B.; Hu, Y.; Jiao, L. CNN-based polarimetric decomposition feature selection for PolSAR image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8796–8812. [Google Scholar] [CrossRef]

- Krogager, E. New decomposition of the radar target scattering matrix. Electron. Lett. 1990, 26, 1525–1527. [Google Scholar] [CrossRef]

- Touzi, R. Target scattering decomposition of one-look and multi-look SAR data using a new coherent scattering model: The TSVM. In Proceedings of the IGARSS 2004—2004 IEEE International Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, 20–24 September 2004; Volume 4, pp. 2491–2494. [Google Scholar]

- Holm, W.A.; Barnes, R.M. On radar polarization mixed target state decomposition techniques. In Proceedings of the 1988 IEEE National Radar Conference, Ann Arbor, MI, USA, 20–21 April 1988; pp. 249–254. [Google Scholar]

- Huynen, J.R. Phenomenological Theory of Radar Targets; Citeseer: Princeton, NJ, USA, 1970. [Google Scholar]

- Van Zyl, J.J. Application of Cloude’s target decomposition theorem to polarimetric imaging radar data. In Radar Polarimetry; SPIE: Bellingham, WA, USA, 1993; Volume 1748, pp. 184–191.

- Cloude, S.R.; Pottier, E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997, 35, 68–78.

- Zhang, L.; Zou, B.; Cai, H.; Zhang, Y. Multiple-component scattering model for polarimetric SAR image decomposition. IEEE Geosci. Remote Sens. Lett. 2008, 5, 603–607.

- Ballester-Berman, J.D.; Lopez-Sanchez, J.M. Applying the Freeman–Durden decomposition concept to polarimetric SAR interferometry. IEEE Trans. Geosci. Remote Sens. 2009, 48, 466–479.

- Arii, M.; Van Zyl, J.J.; Kim, Y. Adaptive model-based decomposition of polarimetric SAR covariance matrices. IEEE Trans. Geosci. Remote Sens. 2010, 49, 1104–1113.

- Serre, T.; Kreiman, G.; Kouh, M.; Cadieu, C.; Knoblich, U.; Poggio, T. A quantitative theory of immediate visual recognition. Prog. Brain Res. 2007, 165, 33–56.

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

- Zhang, L.; Yu, S.C.H. Context-aware PolyUNet for Liver and Lesion Segmentation from Abdominal CT Images. arXiv 2021, arXiv:2106.11330.

- Koyama, C.N.; Watanabe, M.; Sano, E.E.; Hayashi, M.; Nagatani, I.; Tadono, T.; Shimada, M. Improving L-Band SAR Forest Monitoring by Big Data Deep Learning Based on ALOS-2 5 Years Pan-Tropical Observations. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 6747–6750.

- Li, Z.; Yang, W.; Peng, S.; Liu, F. A survey of convolutional neural networks: Analysis, applications, and prospects. arXiv 2020, arXiv:2004.02806.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- Zhang, X.; Wang, Y.; Zhang, N.; Xu, D.; Chen, B. Research on Scene Classification Method of High-Resolution Remote Sensing Images Based on RFPNet. Appl. Sci. 2019, 9, 2028.

- Fukushima, K. A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol. Cybern. 1980, 36, 193–202.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Rahmani, B.; Loterie, D.; Konstantinou, G.; Psaltis, D.; Moser, C. Multimode optical fiber transmission with a deep learning network. Light. Sci. Appl. 2018, 7, 69.

- Rivenson, Y.; Zhang, Y.; Günaydın, H.; Teng, D.; Ozcan, A. Phase recovery and holographic image reconstruction using deep learning in neural networks. Light. Sci. Appl. 2018, 7, 17141.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. OverFeat: Integrated recognition, localization and detection using convolutional networks. arXiv 2013, arXiv:1312.6229.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics. JMLR Workshop and Conference Proceedings, Ft. Lauderdale, FL, USA, 11–13 April 2011; pp. 315–323.

- Barbastathis, G.; Ozcan, A.; Situ, G. On the use of deep learning for computational imaging. Optica 2019, 6, 921–943.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Seydi, S.T.; Hasanlou, M.; Amani, M. A new end-to-end multi-dimensional CNN framework for land cover/land use change detection in multi-source remote sensing datasets. Remote Sens. 2020, 12, 2010.

- Zhang, J.; Shao, J.; Chen, J.; Yang, D.; Liang, B.; Liang, R. PFNet: An unsupervised deep network for polarization image fusion. Opt. Lett. 2020, 45, 1507–1510.

- Blin, R.; Ainouz, S.; Canu, S.; Meriaudeau, F. A new multimodal RGB and polarimetric image dataset for road scenes analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 216–217.

- Zhang, Z.; Wang, H.; Xu, F.; Jin, Y.Q. Complex-valued convolutional neural network and its application in polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7177–7188.

- Makhzani, A.; Frey, B. K-sparse autoencoders. arXiv 2013, arXiv:1312.5663.

- Luo, W.; Li, J.; Yang, J.; Xu, W.; Zhang, J. Convolutional sparse autoencoders for image classification. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 3289–3294.

- Kusner, M.J.; Paige, B.; Hernández-Lobato, J.M. Grammar variational autoencoder. In Proceedings of the International Conference on Machine Learning PMLR, Sydney, Australia, 6–11 August 2017; pp. 1945–1954.

- Chen, W.; Gou, S.; Wang, X.; Li, X.; Jiao, L. Classification of PolSAR images using multilayer autoencoders and a self-paced learning approach. Remote Sens. 2018, 10, 110.

- Zhang, L.; Ma, W.; Zhang, D. Stacked sparse autoencoder in PolSAR data classification using local spatial information. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1359–1363.

- Hou, B.; Kou, H.; Jiao, L. Classification of polarimetric SAR images using multilayer autoencoders and superpixels. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3072–3081.

- Hu, Y.; Fan, J.; Wang, J. Classification of PolSAR images based on adaptive nonlocal stacked sparse autoencoder. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1050–1054.

- Geng, J.; Ma, X.; Fan, J.; Wang, H. Semisupervised classification of polarimetric SAR image via superpixel restrained deep neural network. IEEE Geosci. Remote Sens. Lett. 2017, 15, 122–126.

- Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- Liu, F.; Jiao, L.; Hou, B.; Yang, S. POL-SAR image classification based on Wishart DBN and local spatial information. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3292–3308.

- Tanase, R.; Datcu, M.; Raducanu, D. A convolutional deep belief network for polarimetric SAR data feature extraction. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 7545–7548.

- Lv, Q.; Dou, Y.; Niu, X.; Xu, J.; Xu, J.; Xia, F. Urban land use and land cover classification using remotely sensed SAR data through deep belief networks. J. Sens. 2015, 2015, 538063.

- Guo, Y.; Wang, S.; Gao, C.; Shi, D.; Zhang, D.; Hou, B. Wishart RBM based DBN for polarimetric synthetic radar data classification. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 1841–1844.

- Shah Hosseini, R.; Entezari, I.; Homayouni, S.; Motagh, M.; Mansouri, B. Classification of polarimetric SAR images using Support Vector Machines. Can. J. Remote Sens. 2011, 37, 220–233.

- Wang, L.; Xu, X.; Dong, H.; Gui, R.; Yang, R.; Pu, F. Exploring Convolutional LSTM for PolSAR Image Classification. In Proceedings of the IGARSS 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 8452–8455.

- Wang, L.; Xu, X.; Gui, R.; Yang, R.; Pu, F. Learning Rotation Domain Deep Mutual Information Using Convolutional LSTM for Unsupervised PolSAR Image Classification. Remote Sens. 2020, 12, 4075.

- Jiao, L.; Liu, F. Wishart deep stacking network for fast POLSAR image classification. IEEE Trans. Image Process. 2016, 25, 3273–3286.

- Donahue, J.; Anne Hendricks, L.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. arXiv 2014, arXiv:1406.2661.

- Gao, F.; Ma, F.; Wang, J.; Sun, J.; Yang, E.; Zhou, H. Semi-supervised generative adversarial nets with multiple generators for SAR image recognition. Sensors 2018, 18, 2706.

- Gao, F.; Yang, Y.; Wang, J.; Sun, J.; Yang, E.; Zhou, H. A deep convolutional generative adversarial networks (DCGANs)-based semi-supervised method for object recognition in synthetic aperture radar (SAR) images. Remote Sens. 2018, 10, 846.

- Pan, Z.; Yu, W.; Yi, X.; Khan, A.; Yuan, F.; Zheng, Y. Recent progress on generative adversarial networks (GANs): A survey. IEEE Access 2019, 7, 36322–36333.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2472–2481.

- Li, X.; Goudail, F.; Qi, P.; Liu, T.; Hu, H. Integration time optimization and starting angle autocalibration of full Stokes imagers based on a rotating retarder. Opt. Express 2021, 29, 9494–9512.

- Li, X.; Hu, H.; Wu, L.; Yu, Y.; Liu, T. Impact of intensity integration time distribution on the measurement precision of Mueller polarimetry. J. Quant. Spectrosc. Radiat. Transf. 2019, 231, 22–27.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Goudail, F.; Bénière, A. Estimation precision of the degree of linear polarization and of the angle of polarization in the presence of different sources of noise. Appl. Opt. 2010, 49, 683–693.

- Réfrégier, P.; Goudail, F. Statistical Image Processing Techniques for Noisy Images: An Application-Oriented Approach; Springer Science & Business Media: Berlin, Germany, 2013.

- Goudail, F.; Réfrégier, P. Statistical algorithms for target detection in coherent active polarimetric images. JOSA A 2001, 18, 3049–3060.

- Deledalle, C.A.; Denis, L.; Tupin, F. MuLoG: A generic variance-stabilization approach for speckle reduction in SAR interferometry and SAR polarimetry. In Proceedings of the IGARSS 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 5816–5819.

- Li, S.; Ye, W.; Liang, H.; Pan, X.; Lou, X.; Zhao, X. K-SVD based denoising algorithm for DoFP polarization image sensors. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–5.

- Tibbs, A.B.; Daly, I.M.; Roberts, N.W.; Bull, D.R. Denoising imaging polarimetry by adapted BM3D method. JOSA A 2018, 35, 690–701.

- Aviñoá, M.; Shen, X.; Bosch, S.; Javidi, B.; Carnicer, A. Estimation of Degree of Polarization in Low Light Using Truncated Poisson Distribution. IEEE Photonics J. 2022, 14, 6531908.

- Dodda, V.C.; Kuruguntla, L.; Elumalai, K.; Chinnadurai, S.; Sheridan, J.T.; Muniraj, I. A denoising framework for 3D and 2D imaging techniques based on photon detection statistics. Sci. Rep. 2023, 13, 1365.

- Liu, H.; Zhang, Y.; Cheng, Z.; Zhai, J.; Hu, H. Attention-based neural network for polarimetric image denoising. Opt. Lett. 2022, 47, 2726–2729.

- Santana-Cedrés, D.; Gomez, L.; Alvarez, L.; Frery, A.C. Despeckling PolSAR images with a structure tensor filter. IEEE Geosci. Remote Sens. Lett. 2019, 17, 357–361.

- Touzi, R.; Lopes, A. The principle of speckle filtering in polarimetric SAR imagery. IEEE Trans. Geosci. Remote Sens. 1994, 32, 1110–1114.

- Lopez-Martinez, C.; Fabregas, X. Model-based polarimetric SAR speckle filter. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3894–3907.

- Wen, D.; Jiang, Y.; Zhang, Y.; Gao, Q. Statistical properties of polarization image and despeckling method by multiresolution block-matching 3D filter. Opt. Spectrosc. 2014, 116, 462–469.

- Nie, X.; Qiao, H.; Zhang, B. A variational model for PolSAR data speckle reduction based on the Wishart distribution. IEEE Trans. Image Process. 2015, 24, 1209–1222.

- Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 60–65.

- Chen, J.; Chen, Y.; An, W.; Cui, Y.; Yang, J. Nonlocal filtering for polarimetric SAR data: A pretest approach. IEEE Trans. Geosci. Remote Sens. 2010, 49, 1744–1754.

- Nie, X.; Qiao, H.; Zhang, B.; Huang, X. A nonlocal TV-based variational method for PolSAR data speckle reduction. IEEE Trans. Image Process. 2016, 25, 2620–2634.

- Foucher, S.; López-Martínez, C. Analysis, evaluation, and comparison of polarimetric SAR speckle filtering techniques. IEEE Trans. Image Process. 2014, 23, 1751–1764.

- Lattari, F.; Gonzalez Leon, B.; Asaro, F.; Rucci, A.; Prati, C.; Matteucci, M. Deep learning for SAR image despeckling. Remote Sens. 2019, 11, 1532.

- Dalsasso, E.; Denis, L.; Tupin, F. SAR2SAR: A self-supervised despeckling algorithm for SAR images. arXiv 2020, arXiv:2006.15037.

- Liu, S.; Liu, T.; Gao, L.; Li, H.; Hu, Q.; Zhao, J.; Wang, C. Convolutional neural network and guided filtering for SAR image denoising. Remote Sens. 2019, 11, 702.

- Morio, J.; Réfrégier, P.; Goudail, F.; Dubois-Fernandez, P.C.; Dupuis, X. Information theory-based approach for contrast analysis in polarimetric and/or interferometric SAR images. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2185–2196.

- Denis, L.; Deledalle, C.A.; Tupin, F. From patches to deep learning: Combining self-similarity and neural networks for SAR image despeckling. In Proceedings of the IGARSS 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 5113–5116.

- Jia, X.; Peng, Y.; Li, J.; Ge, B.; Xin, Y.; Liu, S. Dual-complementary convolution network for remote-sensing image denoising. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Niresi, K.F.; Chi, C.Y. Unsupervised hyperspectral denoising based on deep image prior and least favorable distribution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5967–5983.

- Liang, J.; Ren, L.; Ju, H.; Zhang, W.; Qu, E. Polarimetric dehazing method for dense haze removal based on distribution analysis of angle of polarization. Opt. Express 2015, 23, 26146–26157.

- Liang, J.; Ren, L.; Qu, E.; Hu, B.; Wang, Y. Method for enhancing visibility of hazy images based on polarimetric imaging. Photonics Res. 2014, 2, 38–44.

- Schechner, Y.Y.; Karpel, N. Recovery of underwater visibility and structure by polarization analysis. IEEE J. Ocean. Eng. 2005, 30, 570–587.

- Li, X.; Hu, H.; Huang, Y.; Jiang, L.; Che, L.; Liu, T.; Zhai, J. UCRNet: Underwater color image restoration via a polarization-guided convolutional neural network. Front. Mar. Sci. 2022, 9, 2441.

- Gao, S.; Gruev, V. Bilinear and bicubic interpolation methods for division of focal plane polarimeters. Opt. Express 2011, 19, 26161–26173.

- Zhang, J.; Luo, H.; Hui, B.; Chang, Z. Image interpolation for division of focal plane polarimeters with intensity correlation. Opt. Express 2016, 24, 20799–20807.

- Zeng, X.; Luo, Y.; Zhao, X.; Ye, W. An end-to-end fully-convolutional neural network for division of focal plane sensors to reconstruct S0, DoLP, and AoP. Opt. Express 2019, 27, 8566–8577.

- Zhang, J.; Shao, J.; Luo, H.; Zhang, X.; Hui, B.; Chang, Z.; Liang, R. Learning a convolutional demosaicing network for microgrid polarimeter imagery. Opt. Lett. 2018, 43, 4534–4537.

- Wen, S.; Zheng, Y.; Lu, F.; Zhao, Q. Convolutional demosaicing network for joint chromatic and polarimetric imagery. Opt. Lett. 2019, 44, 5646–5649.

- Hu, H.; Yang, S.; Li, X.; Cheng, Z.; Liu, T.; Zhai, J. Polarized image super-resolution via a deep convolutional neural network. Opt. Express 2023, 31, 8535–8547.

- Yue, L.; Shen, H.; Li, J.; Yuan, Q.; Zhang, H.; Zhang, L. Image super-resolution: The techniques, applications, and future. Signal Process. 2016, 128, 389–408.

- Pastina, D.; Lombardo, P.; Farina, A.; Daddi, P. Super-resolution of polarimetric SAR images of ship targets. Signal Process. 2003, 83, 1737–1748.

- Jia, Y.; Ge, Y.; Chen, Y.; Li, S.; Heuvelink, G.; Ling, F. Super-resolution land cover mapping based on the convolutional neural network. Remote Sens. 2019, 11, 1815.

- Haut, J.M.; Fernandez-Beltran, R.; Paoletti, M.E.; Plaza, J.; Plaza, A.; Pla, F. A new deep generative network for unsupervised remote sensing single-image super-resolution. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6792–6810.

- Keys, R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

- Zhang, L.; Zou, B.; Hao, H.; Zhang, Y. A novel super-resolution method of PolSAR images based on target decomposition and polarimetric spatial correlation. Int. J. Remote Sens. 2011, 32, 4893–4913.

- Lin, L.; Li, J.; Shen, H.; Zhao, L.; Yuan, Q.; Li, X. Low-resolution fully polarimetric SAR and high-resolution single-polarization SAR image fusion network. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5216117.

- Huang, W.; Xiao, L.; Wei, Z.; Liu, H.; Tang, S. A new pan-sharpening method with deep neural networks. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1037–1041.

- Schmitt, A.; Wendleder, A.; Hinz, S. The Kennaugh element framework for multi-scale, multi-polarized, multi-temporal and multi-frequency SAR image preparation. ISPRS J. Photogramm. Remote Sens. 2015, 102, 122–139.

- Hu, J.; Ghamisi, P.; Zhu, X.X. Feature extraction and selection of Sentinel-1 dual-pol data for global-scale local climate zone classification. ISPRS Int. J. Geo-Inf. 2018, 7, 379.

- Hu, J.; Hong, D.; Wang, Y.; Zhu, X.X. A comparative review of manifold learning techniques for hyperspectral and polarimetric SAR image fusion. Remote Sens. 2019, 11, 681.

- Xing, Y.; Wang, M.; Yang, S.; Jiao, L. Pan-sharpening via deep metric learning. ISPRS J. Photogramm. Remote Sens. 2018, 145, 165–183.

- Shao, Z.; Cai, J. Remote sensing image fusion with deep convolutional neural network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1656–1669.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. Multispectral and hyperspectral image fusion using a 3-D-convolutional neural network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 639–643.

- Yang, J.; Zhao, Y.Q.; Chan, J.C.W. Hyperspectral and multispectral image fusion via deep two-branches convolutional neural network. Remote Sens. 2018, 10, 800.

- Dian, R.; Li, S.; Guo, A.; Fang, L. Deep hyperspectral image sharpening. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 5345–5355.

- Jouan, A.; Allard, Y. Land use mapping with evidential fusion of features extracted from polarimetric synthetic aperture radar and hyperspectral imagery. Inf. Fusion 2004, 5, 251–267.

- Li, T.; Zhang, J.; Zhao, H.; Shi, C. Classification-oriented hyperspectral and PolSAR images synergic processing. In Proceedings of the 2013 IEEE International Geoscience and Remote Sensing Symposium-IGARSS, Melbourne, VIC, Australia, 21–26 July 2013; pp. 1035–1038.

- Dabbiru, L.; Samiappan, S.; Nobrega, R.A.; Aanstoos, J.A.; Younan, N.H.; Moorhead, R.J. Fusion of synthetic aperture radar and hyperspectral imagery to detect impacts of oil spill in Gulf of Mexico. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 1901–1904.

- Hu, J.; Ghamisi, P.; Schmitt, A.; Zhu, X.X. Object based fusion of polarimetric SAR and hyperspectral imaging for land use classification. In Proceedings of the 2016 8th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Los Angeles, CA, USA, 21–24 August 2016; pp. 1–5.

- Liu, J.; Duan, J.; Hao, Y.; Chen, G.; Zhang, H. Semantic-guided polarization image fusion method based on a dual-discriminator GAN. Opt. Express 2022, 30, 43601–43621.

- Ding, X.; Wang, Y.; Fu, X. Multi-polarization fusion generative adversarial networks for clear underwater imaging. Opt. Lasers Eng. 2022, 152, 106971.

- Peng, Y.T.; Cao, K.; Cosman, P.C. Generalization of the dark channel prior for single image restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

- Fu, X.; Liang, Z.; Ding, X.; Yu, X.; Wang, Y. Image descattering and absorption compensation in underwater polarimetric imaging. Opt. Lasers Eng. 2020, 132, 106115.

- Liang, J.; Ren, L.Y.; Ju, H.J.; Qu, E.S.; Wang, Y.L. Visibility enhancement of hazy images based on a universal polarimetric imaging method. J. Appl. Phys. 2014, 116, 173107.

- Liang, J.; Ju, H.; Ren, L.; Yang, L.; Liang, R. Generalized polarimetric dehazing method based on low-pass filtering in frequency domain. Sensors 2020, 20, 1729.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Li, C.; Anwar, S.; Hou, J.; Cong, R.; Guo, C.; Ren, W. Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000.

- Han, J.; Shoeiby, M.; Malthus, T.; Botha, E.; Anstee, J.; Anwar, S.; Wei, R.; Petersson, L.; Armin, M.A. Single underwater image restoration by contrastive learning. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 2385–2388.

- Tyo, J.S.; Rowe, M.; Pugh, E.; Engheta, N. Target detection in optically scattering media by polarization-difference imaging. Appl. Opt. 1996, 35, 1855–1870.

- Hu, H.; Li, X.; Liu, T. Recent advances in underwater image restoration technique based on polarimetric imaging. Infrared Laser Eng. 2019, 48, 78–90.

- Anna, G.; Bertaux, N.; Galland, F.; Goudail, F.; Dolfi, D. Joint contrast optimization and object segmentation in active polarimetric images. Opt. Lett. 2012, 37, 3321–3323.

- Goudail, F.; Réfrégier, P. Target segmentation in active polarimetric images by use of statistical active contours. Appl. Opt. 2002, 41, 874–883.

- Wang, Y.; Liu, Q.; Zu, H.; Liu, X.; Xie, R.; Wang, F. An end-to-end CNN framework for polarimetric vision tasks based on polarization-parameter-constructing network. arXiv 2020, arXiv:2004.08740.

- Song, D.; Zhen, Z.; Wang, B.; Li, X.; Gao, L.; Wang, N.; Xie, T.; Zhang, T. A novel marine oil spillage identification scheme based on convolution neural network feature extraction from fully polarimetric SAR imagery. IEEE Access 2020, 8, 59801–59820.

- Marino, A. A notch filter for ship detection with polarimetric SAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1219–1232.

- Wang, Y.; Liu, H. PolSAR ship detection based on superpixel-level scattering mechanism distribution features. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1780–1784.

- Lin, H.; Chen, H.; Wang, H.; Yin, J.; Yang, J. Ship detection for PolSAR images via task-driven discriminative dictionary learning. Remote Sens. 2019, 11, 769.

- Chang, Y.L.; Anagaw, A.; Chang, L.; Wang, Y.C.; Hsiao, C.Y.; Lee, W.H. Ship detection based on YOLOv2 for SAR imagery. Remote Sens. 2019, 11, 786.

- De, S.; Bruzzone, L.; Bhattacharya, A.; Bovolo, F.; Chaudhuri, S. A novel technique based on deep learning and a synthetic target database for classification of urban areas in PolSAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 11, 154–170.

- Gong, M.; Yang, H.; Zhang, P. Feature learning and change feature classification based on deep learning for ternary change detection in SAR images. ISPRS J. Photogramm. Remote Sens. 2017, 129, 212–225.

- Nascimento, A.D.; Frery, A.C.; Cintra, R.J. Detecting changes in fully polarimetric SAR imagery with statistical information theory. IEEE Trans. Geosci. Remote Sens. 2018, 57, 1380–1392.

- Gao, Y.; Gao, F.; Dong, J.; Wang, S. Transferred deep learning for sea ice change detection from synthetic-aperture radar images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1655–1659.

- Geng, X.; Shi, L.; Yang, J.; Li, P.; Zhao, L.; Sun, W.; Zhao, J. Ship detection and feature visualization analysis based on lightweight CNN in VH and VV polarization images. Remote Sens. 2021, 13, 1184.

- Vaughn, I.J.; Hoover, B.G.; Tyo, J.S. Classification using active polarimetry. In Polarization: Measurement, Analysis, and Remote Sensing X; SPIE: Bellingham, WA, USA, 2012; Volume 8364, p. 83640S.

- Fang, Z.; Zhang, G.; Dai, Q.; Xue, B.; Wang, P. Hybrid Attention-Based Encoder–Decoder Fully Convolutional Network for PolSAR Image Classification. Remote Sens. 2023, 15, 526.

- Hariharan, S.; Tirodkar, S.; Bhattacharya, A. Polarimetric SAR decomposition parameter subset selection and their optimal dynamic range evaluation for urban area classification using Random Forest. Int. J. Appl. Earth Obs. Geoinf. 2016, 44, 144–158.

- Aimaiti, Y.; Kasimu, A.; Jing, G. Urban landscape extraction and analysis based on optical and microwave ALOS satellite data. Earth Sci. Inform. 2016, 9, 425–435.

- Shang, F.; Hirose, A. Quaternion neural-network-based PolSAR land classification in Poincare-sphere-parameter space. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5693–5703.

- Kinugawa, K.; Shang, F.; Usami, N.; Hirose, A. Isotropization of quaternion-neural-network-based PolSAR adaptive land classification in Poincare-sphere parameter space. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1234–1238.

- Kinugawa, K.; Shang, F.; Usami, N.; Hirose, A. Proposal of adaptive land classification using quaternion neural network with isotropic activation function. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 7557–7560.

- Usami, N.; Muhuri, A.; Bhattacharya, A.; Hirose, A. Proposal of wet snowmapping with focus on incident angle influential to depolarization of surface scattering. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1544–1547.

- Zhou, Y.; Wang, H.; Xu, F.; Jin, Y.Q. Polarimetric SAR image classification using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1935–1939.

- Wang, L.; Xu, X.; Dong, H.; Gui, R.; Pu, F. Multi-pixel simultaneous classification of PolSAR image using convolutional neural networks. Sensors 2018, 18, 769.

- Xie, W.; Jiao, L.; Hou, B.; Ma, W.; Zhao, J.; Zhang, S.; Liu, F. POLSAR image classification via Wishart-AE model or Wishart-CAE model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3604–3615.

- Zhang, J.; Zhang, W.; Hu, Y.; Chu, Q.; Liu, L. An improved sea ice classification algorithm with Gaofen-3 dual-polarization SAR data based on deep convolutional neural networks. Remote Sens. 2022, 14, 906.

- Wu, W.; Li, H.; Zhang, L.; Li, X.; Guo, H. High-resolution PolSAR scene classification with pretrained deep convnets and manifold polarimetric parameters. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6159–6168.

- Gao, F.; Huang, T.; Wang, J.; Sun, J.; Hussain, A.; Yang, E. Dual-branch deep convolution neural network for polarimetric SAR image classification. Appl. Sci. 2017, 7, 447.

- Tan, W.; Sun, B.; Xiao, C.; Huang, P.; Xu, W.; Yang, W. A Novel Unsupervised Classification Method for Sandy Land Using Fully Polarimetric SAR Data. Remote Sens. 2021, 13, 355.

- Qin, F.; Guo, J.; Lang, F. Superpixel segmentation for polarimetric SAR imagery using local iterative clustering. IEEE Geosci. Remote Sens. Lett. 2014, 12, 13–17.

- Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.

- Jamali, A.; Roy, S.K.; Bhattacharya, A.; Ghamisi, P. Local Window Attention Transformer for Polarimetric SAR Image Classification. IEEE Geosci. Remote Sens. Lett. 2023, 1, 1–5.

- Li, J.; Liu, H.; Liao, R.; Wang, H.; Chen, Y.; Xiang, J.; Xu, X.; Ma, H. Recognition of microplastics suspended in seawater via refractive index by Mueller matrix polarimetry. Mar. Pollut. Bull. 2023, 188, 114706.

- Weng, J.; Gao, C.; Lei, B. Real-time polarization measurement based on spatially modulated polarimeter and deep learning. Results Phys. 2023, 46, 106280.

- Liu, T.; de Haan, K.; Bai, B.; Rivenson, Y.; Luo, Y.; Wang, H.; Karalli, D.; Fu, H.; Zhang, Y.; FitzGerald, J.; et al. Deep learning-based holographic polarization microscopy. ACS Photonics 2020, 7, 3023–3034.

- Li, X.; Liao, R.; Zhou, J.; Leung, P.T.; Yan, M.; Ma, H. Classification of morphologically similar algae and cyanobacteria using Mueller matrix imaging and convolutional neural networks. Appl. Opt. 2017, 56, 6520–6530.

- Kalra, A.; Taamazyan, V.; Rao, S.K.; Venkataraman, K.; Raskar, R.; Kadambi, A. Deep polarization cues for transparent object segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8602–8611.

- Lei, C.; Qi, C.; Xie, J.; Fan, N.; Koltun, V.; Chen, Q. Shape from polarization for complex scenes in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12632–12641.

- Goda, K.; Jalali, B.; Lei, C.; Situ, G.; Westbrook, P. AI boosts photonics and vice versa. APL Photonics 2020, 5, 070401.

- Chen, X.W.; Lin, X. Big data deep learning: Challenges and perspectives. IEEE Access 2014, 2, 514–525.

- Ng, H.W.; Nguyen, V.D.; Vonikakis, V.; Winkler, S. Deep learning for emotion recognition on small datasets using transfer learning. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Washington, DC, USA, 9–13 November 2015; pp. 443–449. [Google Scholar]

- Ren, Z.; Oviedo, F.; Thway, M.; Tian, S.I.; Wang, Y.; Xue, H.; Perea, J.D.; Layurova, M.; Heumueller, T.; Birgersson, E.; et al. Embedding physics domain knowledge into a Bayesian network enables layer-by-layer process innovation for photovoltaics. NPJ Comput. Mater. 2020, 6, 9. [Google Scholar] [CrossRef]

- Hagos, M.T.; Kant, S. Transfer learning based detection of diabetic retinopathy from small dataset. arXiv 2019, arXiv:1905.07203. [Google Scholar]

- Zhang, Q.; Liu, X.; Liu, M.; Zou, X.; Zhu, L.; Ruan, X. Comparative Analysis of Edge Information and Polarization on SAR-to-Optical Translation Based on Conditional Generative Adversarial Networks. Remote Sens. 2021, 13, 128. [Google Scholar] [CrossRef]

- Wang, F.; Bian, Y.; Wang, H.; Lyu, M.; Pedrini, G.; Osten, W.; Barbastathis, G.; Situ, G. Phase imaging with an untrained neural network. Light. Sci. Appl. 2020, 9, 77. [Google Scholar] [CrossRef]

- Bostan, E.; Heckel, R.; Chen, M.; Kellman, M.; Waller, L. Deep phase decoder: Self-calibrating phase microscopy with an untrained deep neural network. Optica 2020, 7, 559–562. [Google Scholar] [CrossRef]

- Hu, H.; Han, Y.; Li, X.; Jiang, L.; Che, L.; Liu, T.; Zhai, J. Physics-informed neural network for polarimetric underwater imaging. Opt. Express 2022, 30, 22512–22522. [Google Scholar] [CrossRef]

- Le Teurnier, B.; Li, N.; Li, X.; Boffety, M.; Hu, H.; Goudail, F. How signal processing can improve the quality of division of focal plane polarimetric imagers? In Electro-Optical and Infrared Systems: Technology and Applications XVIII and Electro-Optical Remote Sensing XV; SPIE: Bellingham, WA, USA, 2021; Volume 11866, pp. 162–169. [Google Scholar]

- Le Teurnier, B.; Li, X.; Boffety, M.; Hu, H.; Goudail, F. When is retardance autocalibration of microgrid-based full Stokes imagers possible and useful? Opt. Lett. 2020, 45, 3474–3477. [Google Scholar] [CrossRef]

- Sun, Y.; Zhang, J.; Liang, R. Color polarization demosaicking by a convolutional neural network. Opt. Lett. 2021, 46, 4338–4341. [Google Scholar] [CrossRef]

- Sun, Y.; Zhang, J.; Liang, R. pHSCNN: CNN-based hyperspectral recovery from a pair of RGB images. Opt. Express 2022, 30, 24862–24873. [Google Scholar] [CrossRef] [PubMed]

- Mohan, A.T.; Lubbers, N.; Livescu, D.; Chertkov, M. Embedding hard physical constraints in neural network coarse-graining of 3d turbulence. arXiv 2020, arXiv:2002.00021. [Google Scholar]

- Ba, Y.; Zhao, G.; Kadambi, A. Blending diverse physical priors with neural networks. arXiv 2019, arXiv:1910.00201. [Google Scholar]

- Zhu, Y.; Zeng, T.; Liu, K.; Ren, Z.; Lam, E.Y. Full scene underwater imaging with polarization and an untrained network. Opt. Express 2021, 29, 41865–41881. [Google Scholar] [CrossRef]

- Polcari, M.; Tolomei, C.; Bignami, C.; Stramondo, S. SAR and optical data comparison for detecting co-seismic slip and induced phenomena during the 2018 Mw 7.5 Sulawesi earthquake. Sensors 2019, 19, 3976. [Google Scholar] [CrossRef]

- Forkuor, G.; Conrad, C.; Thiel, M.; Ullmann, T.; Zoungrana, E. Integration of optical and Synthetic Aperture Radar imagery for improving crop mapping in Northwestern Benin, West Africa. Remote Sens. 2014, 6, 6472–6499. [Google Scholar] [CrossRef]

- Zhang, H.; Wan, L.; Wang, T.; Lin, Y.; Lin, H.; Zheng, Z. Impervious surface estimation from optical and polarimetric SAR data using small-patched deep convolutional networks: A comparative study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2374–2387. [Google Scholar] [CrossRef]

- Molijn, R.A.; Iannini, L.; Vieira Rocha, J.; Hanssen, R.F. Sugarcane productivity mapping through C-band and L-band SAR and optical satellite imagery. Remote Sens. 2019, 11, 1109. [Google Scholar] [CrossRef]

- Zhang, Y.; Zhang, H.; Lin, H. Improving the impervious surface estimation with combined use of optical and SAR remote sensing images. Remote Sens. Environ. 2014, 141, 155–167. [Google Scholar] [CrossRef]

- Liu, S.; Qi, Z.; Li, X.; Yeh, A.G.O. Integration of convolutional neural networks and object-based post-classification refinement for land use and land cover mapping with optical and SAR data. Remote Sens. 2019, 11, 690. [Google Scholar] [CrossRef]

- Zhang, W.; Xu, M. Translate SAR data into optical image using IHS and wavelet transform integrated fusion. J. Indian Soc. Remote Sens. 2019, 47, 125–137. [Google Scholar] [CrossRef]

- Eckardt, R.; Berger, C.; Thiel, C.; Schmullius, C. Removal of optically thick clouds from multi-spectral satellite images using multi-frequency SAR data. Remote Sens. 2013, 5, 2973–3006. [Google Scholar] [CrossRef]

- Wang, Z.; Ma, Y.; Zhang, Y. Hybrid cGAN: Coupling Global and Local Features for SAR-to-Optical Image Translation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5236016. [Google Scholar] [CrossRef]

| Parameter | Bicubic | Correlation-Based | PDCNN | Fork-Net |
|---|---|---|---|---|
| S0 | 38.0604 | 42.1540 | 42.9584 | 43.7225 |
| DoLP | 31.7751 | 29.8021 | 34.5301 | 35.0061 |
| AoP | 9.3744 | 7.6640 | 9.8273 | 11.0450 |
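The DoLP and AoP rows above are quantities derived from the reconstructed Stokes parameters, and each entry scores a reconstruction against a reference. A minimal sketch of the standard definitions follows, assuming a division-of-focal-plane layout with 0°, 45°, 90°, and 135° analyzers and the common convention S0 = (I0 + I45 + I90 + I135)/2. Demosaicking comparisons of this kind are often scored with PSNR in dB, but the metric behind the reported numbers is not stated in the table, so treat that, and the `peak` value passed to `psnr`, as assumptions. All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def stokes_from_intensities(i0: float, i45: float, i90: float, i135: float) -> Tuple[float, float, float]:
    """Linear Stokes parameters from four analyzer orientations (0, 45, 90, 135 degrees)."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity, averaged over the two orthogonal pairs
    s1 = i0 - i90                        # horizontal vs. vertical preference
    s2 = i45 - i135                      # +45 vs. -45 degree preference
    return s0, s1, s2


def dolp(s0: float, s1: float, s2: float) -> float:
    """Degree of linear polarization, sqrt(S1^2 + S2^2) / S0 (0 for a dark pixel)."""
    return math.hypot(s1, s2) / s0 if s0 > 0 else 0.0


def aop(s1: float, s2: float) -> float:
    """Angle of polarization in radians, 0.5 * atan2(S2, S1), within (-pi/2, pi/2]."""
    return 0.5 * math.atan2(s2, s1)


def psnr(reference: Sequence[float], estimate: Sequence[float], peak: float) -> float:
    """Peak signal-to-noise ratio in dB; `peak` is the assumed maximum signal value."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


# Usage: light passing an ideal horizontal polarizer gives DoLP = 1 and AoP = 0.
s0, s1, s2 = stokes_from_intensities(1.0, 0.5, 0.0, 0.5)
print(dolp(s0, s1, s2), aop(s1, s2))  # prints 1.0 0.0
```

Because AoP is periodic with period π, a plain squared difference overstates the error of estimates that straddle ±π/2; an evaluation focused on AoP may prefer a wrapped angular difference.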

| Method | CVA | MAD | PCA | IR-MAD | SFA | 3D-CNN | Proposed |
|---|---|---|---|---|---|---|---|
| OA (%) | 91.74 | 92.17 | 91.36 | 91.37 | 95.64 | 95.62 | 98.31 |
| Sensitivity (%) | 44.11 | 52.62 | 27.57 | 22.56 | 71.75 | 64.00 | 93.06 |
| MD (%) | 55.88 | 47.37 | 72.42 | 77.43 | 28.24 | 35.99 | 6.93 |
| FA (%) | 3.78 | 4.11 | 2.64 | 2.16 | 2.11 | 1.41 | 1.19 |
| F1-Score (%) | 47.85 | 53.58 | 35.41 | 30.98 | 73.88 | 71.49 | 90.43 |
| BA (%) | 70.16 | 74.25 | 62.46 | 60.19 | 84.82 | 81.29 | 95.93 |
| Precision (%) | 52.27 | 54.57 | 49.47 | 49.42 | 76.14 | 80.95 | 87.94 |
| Specificity (%) | 96.21 | 95.88 | 97.35 | 97.83 | 97.88 | 98.58 | 98.80 |
| KC | 0.434 | 0.493 | 0.311 | 0.271 | 0.715 | 0.691 | 0.895 |
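All of the change-detection scores above derive from one binary confusion matrix of changed vs. unchanged pixels. A minimal sketch, assuming the usual definitions: MD = FN/(TP+FN), FA = FP/(FP+TN), BA is the mean of sensitivity and specificity, and KC is Cohen's kappa. The reported columns appear consistent with these definitions (MD and sensitivity sum to 100% up to rounding, as do FA and specificity), but the function name and dictionary keys are hypothetical, and the counts in the usage line are toy values.

```python
import math
from typing import Dict


def _ratio(num: float, den: float) -> float:
    """Division that returns NaN instead of raising when the denominator is zero."""
    return num / den if den else math.nan


def change_detection_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Binary change-detection scores as fractions (multiply by 100 for percentages)."""
    n = tp + fp + tn + fn
    sensitivity = _ratio(tp, tp + fn)   # recall on changed pixels
    specificity = _ratio(tn, tn + fp)   # recall on unchanged pixels
    precision = _ratio(tp, tp + fp)
    oa = _ratio(tp + tn, n)
    # Chance agreement expected from the row and column marginals (for kappa).
    pe = _ratio((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn), n * n)
    return {
        "OA": oa,
        "Sensitivity": sensitivity,
        "MD": _ratio(fn, tp + fn),      # missed detections = 1 - sensitivity
        "FA": _ratio(fp, fp + tn),      # false alarms = 1 - specificity
        "F1": _ratio(2 * tp, 2 * tp + fp + fn),  # equals 2PR / (P + R)
        "BA": (sensitivity + specificity) / 2,
        "Precision": precision,
        "Specificity": specificity,
        "KC": _ratio(oa - pe, 1 - pe),  # Cohen's kappa
    }


m = change_detection_metrics(tp=40, fp=5, tn=945, fn=10)
print(round(m["OA"], 3), round(m["F1"], 3), round(m["KC"], 3))
```

Reporting BA, F1, and KC next to OA matters here because changed pixels are a small minority: a detector that flags almost nothing can still reach a high OA, which is visible in the table where OA stays above 91% for every method while sensitivity ranges from 22.56% to 93.06%.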

| Classes | Accuracy [%] | Classes | Accuracy [%] |
|---|---|---|---|
| 0. Stembeans | 92.58 | 8. Grasses | 79.20 |
| 1. Peas | 88.89 | 9. Rapeseed | 93.10 |
| 2. Forest | 93.95 | 10. Barley | 96.90 |
| 3. Lucerne | 92.21 | 11. Wheat2 | 91.82 |
| 4. Wheat | 93.62 | 12. Wheat3 | 94.46 |
| 5. Beet | 89.74 | 13. Water | 98.88 |
| 6. Potatoes | 87.24 | 14. Building | 87.18 |
| 7. Bare soil | 99.94 | Overall | 92.46 |

| Class | HED-SC PA (%) | HED-SC UA (%) | ND-RF PA (%) | ND-RF UA (%) | ND-LSC PA (%) | ND-LSC UA (%) |
|---|---|---|---|---|---|---|
| RT | 81.17 | 95.19 | 85.42 | 73.64 | 90.22 | 96.94 |
| RD | 89.05 | 64.51 | 91.35 | 68.69 | 98.75 | 95.45 |
| SS | 91.31 | 84.28 | 93.45 | 85.36 | 94.62 | 87.28 |
| DL | 87.82 | 89.64 | 86.19 | 90.39 | 93.21 | 87.13 |
| SL | 81.69 | 94.37 | 84.67 | 94.75 | 91.02 | 87.07 |
| V | 75.20 | 90.54 | 79.89 | 94.47 | 97.87 | 69.65 |
| L | 96.35 | 99.67 | 96.86 | 99.68 | 98.07 | 74.59 |
| OA (%) | 89.68 | | 90.02 | | 95.22 | |
| Kappa | 0.8717 | | 0.9205 | | 0.9404 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, X.; Yan, L.; Qi, P.; Zhang, L.; Goudail, F.; Liu, T.; Zhai, J.; Hu, H. Polarimetric Imaging via Deep Learning: A Review. Remote Sens. 2023, 15, 1540. https://doi.org/10.3390/rs15061540

Li X, Yan L, Qi P, Zhang L, Goudail F, Liu T, Zhai J, Hu H. Polarimetric Imaging via Deep Learning: A Review. Remote Sensing. 2023; 15(6):1540. https://doi.org/10.3390/rs15061540

Chicago/Turabian Style: Li, Xiaobo, Lei Yan, Pengfei Qi, Liping Zhang, François Goudail, Tiegen Liu, Jingsheng Zhai, and Haofeng Hu. 2023. "Polarimetric Imaging via Deep Learning: A Review" Remote Sensing 15, no. 6: 1540. https://doi.org/10.3390/rs15061540

APA Style: Li, X., Yan, L., Qi, P., Zhang, L., Goudail, F., Liu, T., Zhai, J., & Hu, H. (2023). Polarimetric Imaging via Deep Learning: A Review. Remote Sensing, 15(6), 1540. https://doi.org/10.3390/rs15061540