A Hybrid Convolutional Neural Network and Random Forest for Burned Area Identification with Optical and Synthetic Aperture Radar (SAR) Data

Abstract

1. Introduction

2. Materials and Methods

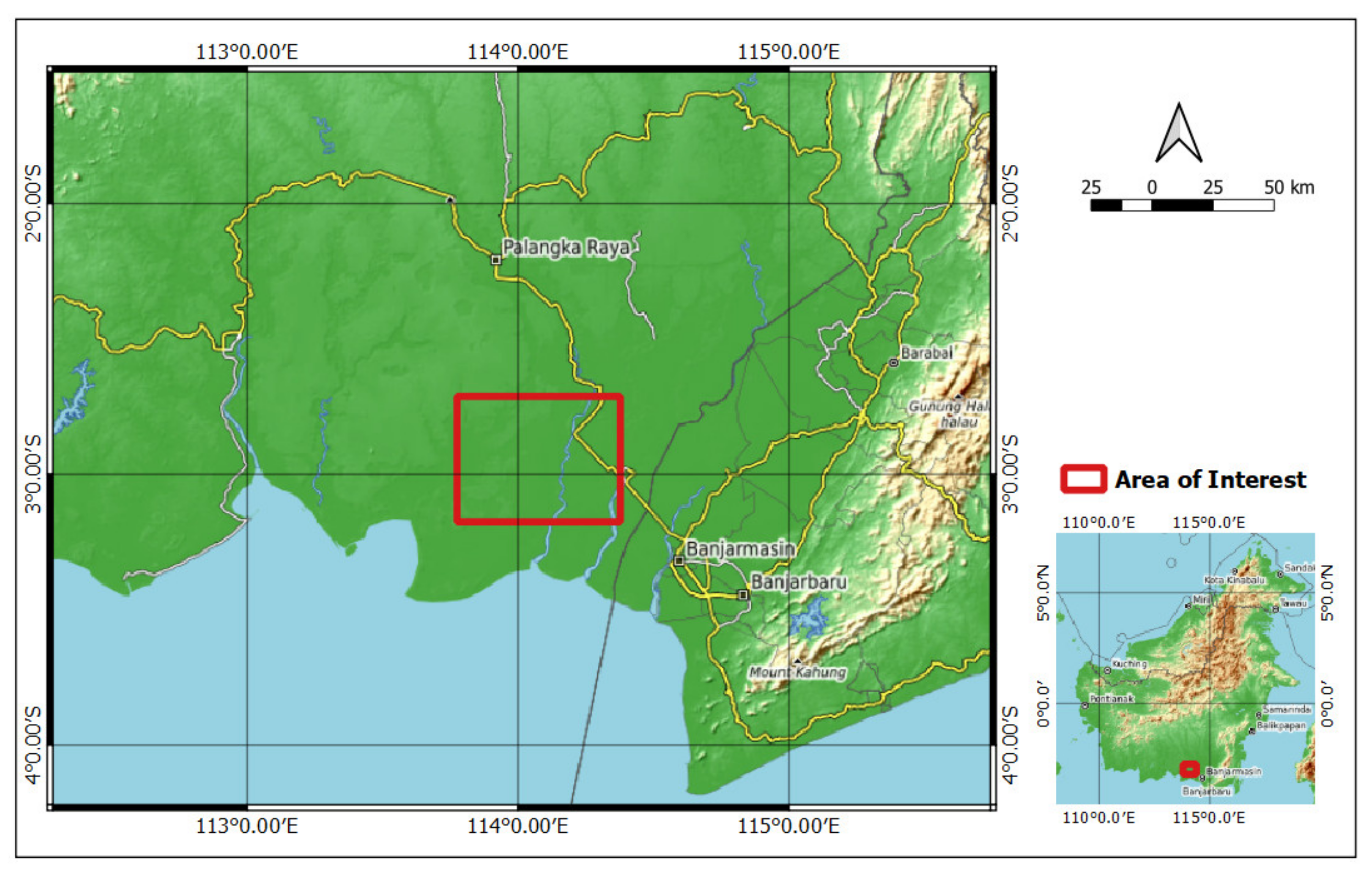

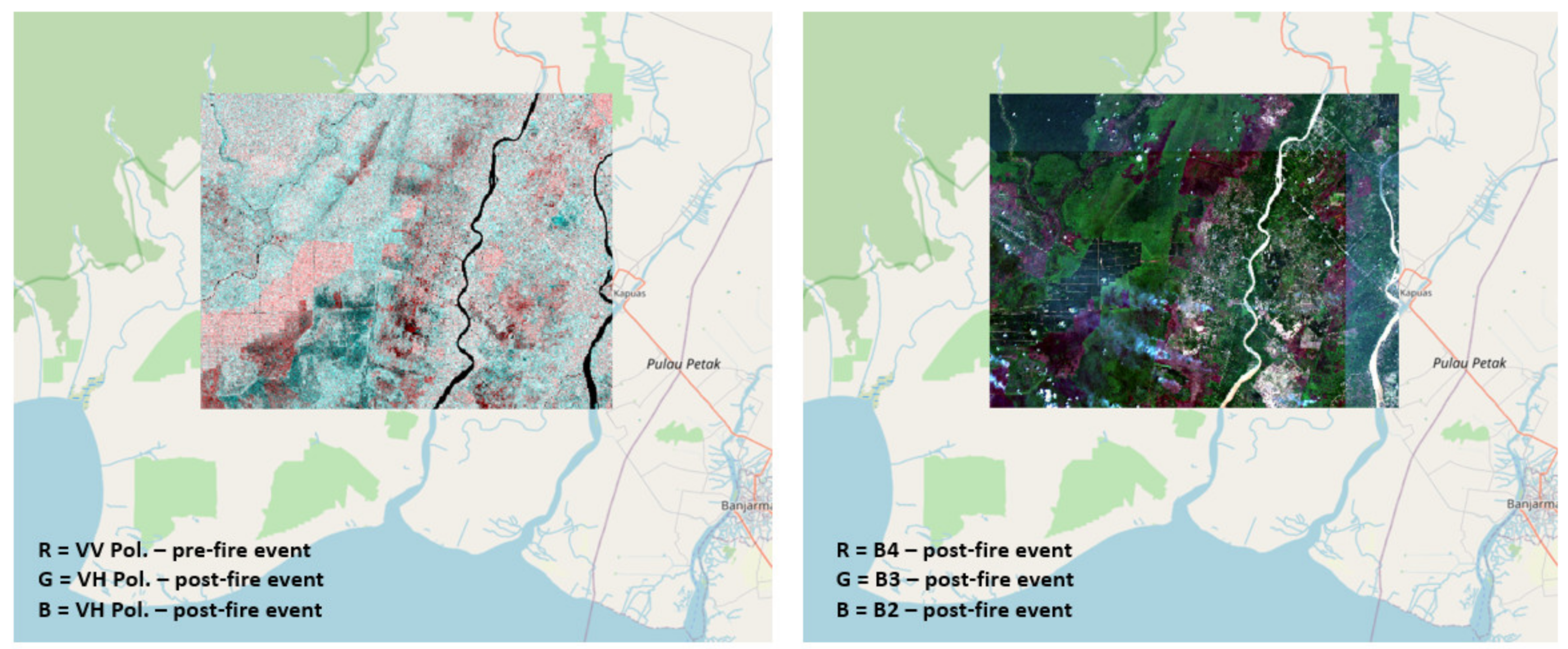

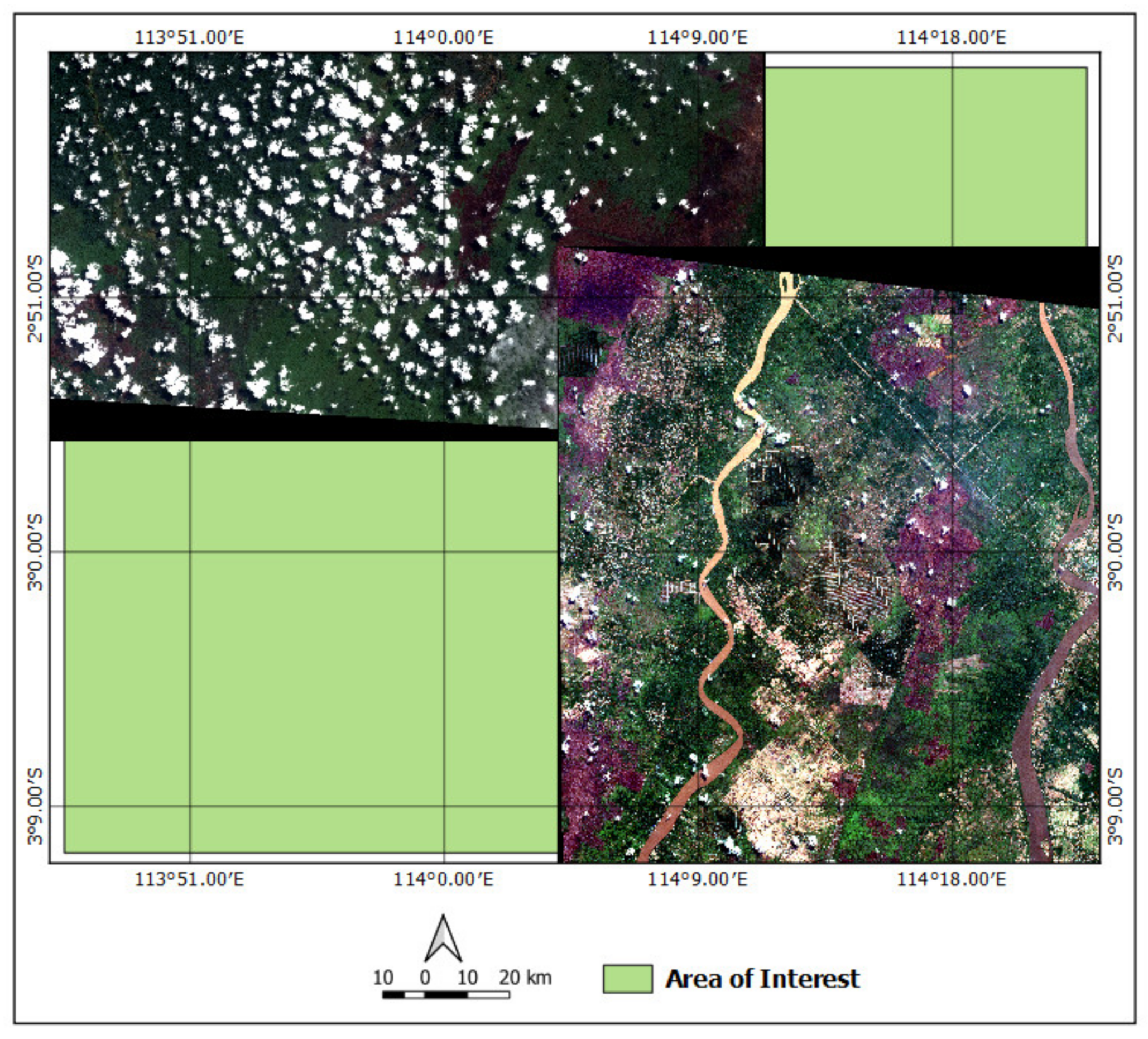

2.1. Research Area and Data

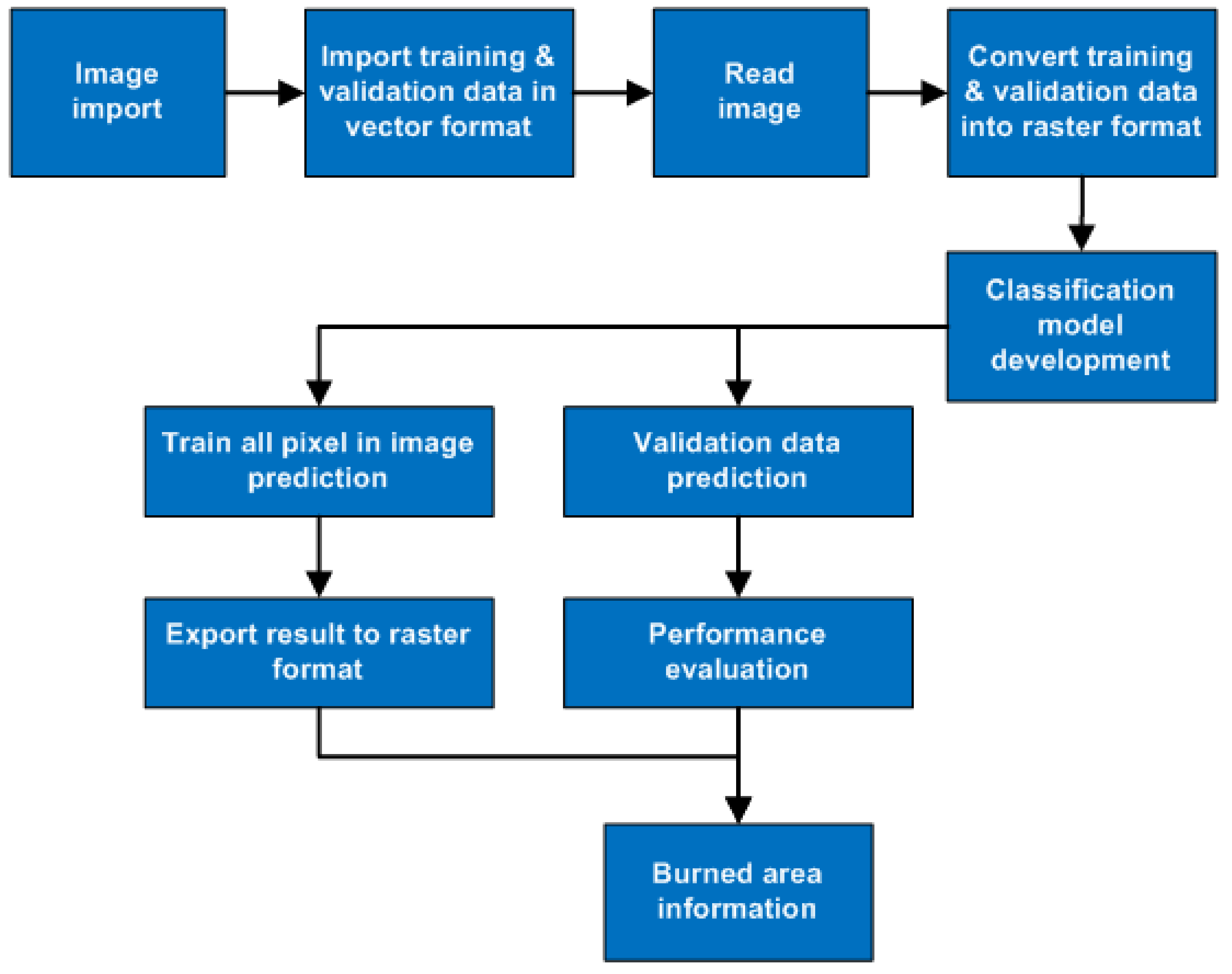

2.2. Methodology

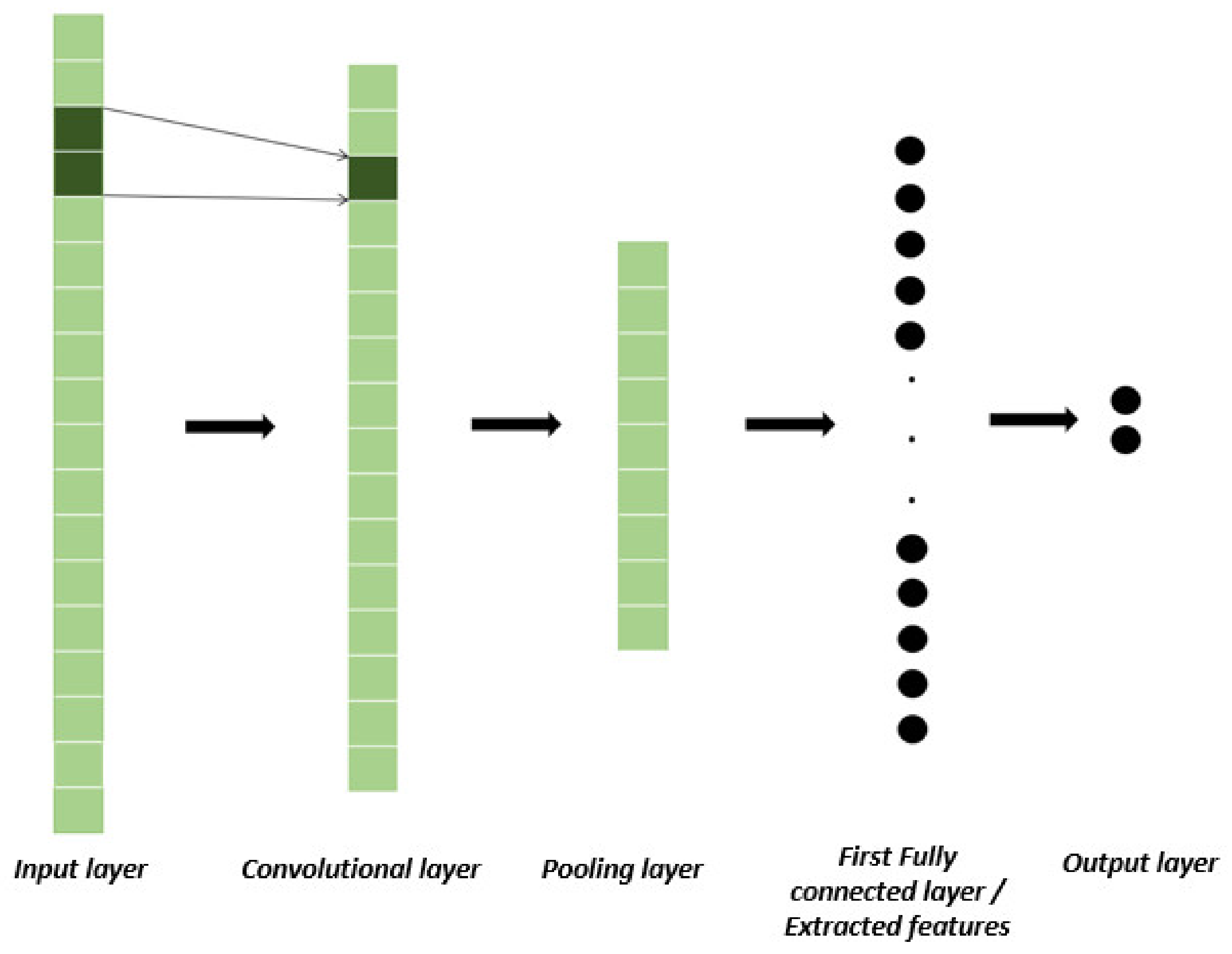

2.3. Convolutional Neural Network (CNN)

2.4. Random Forest (RF)

2.5. Proposed Method

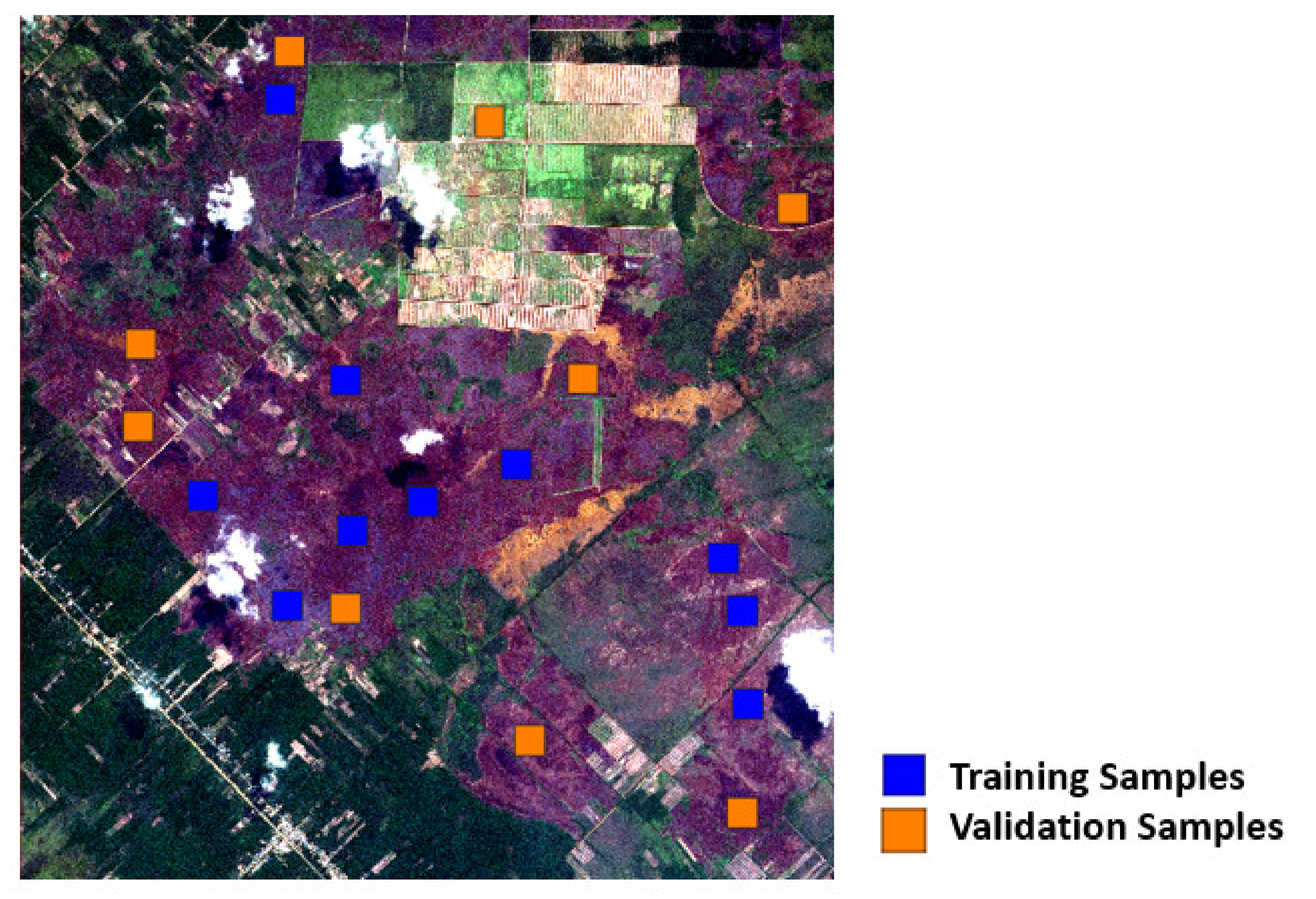

2.6. Training and Validation Dataset

2.7. Classifier Implementation

2.8. Classification Scheme

2.9. Evaluation Parameters

3. Results

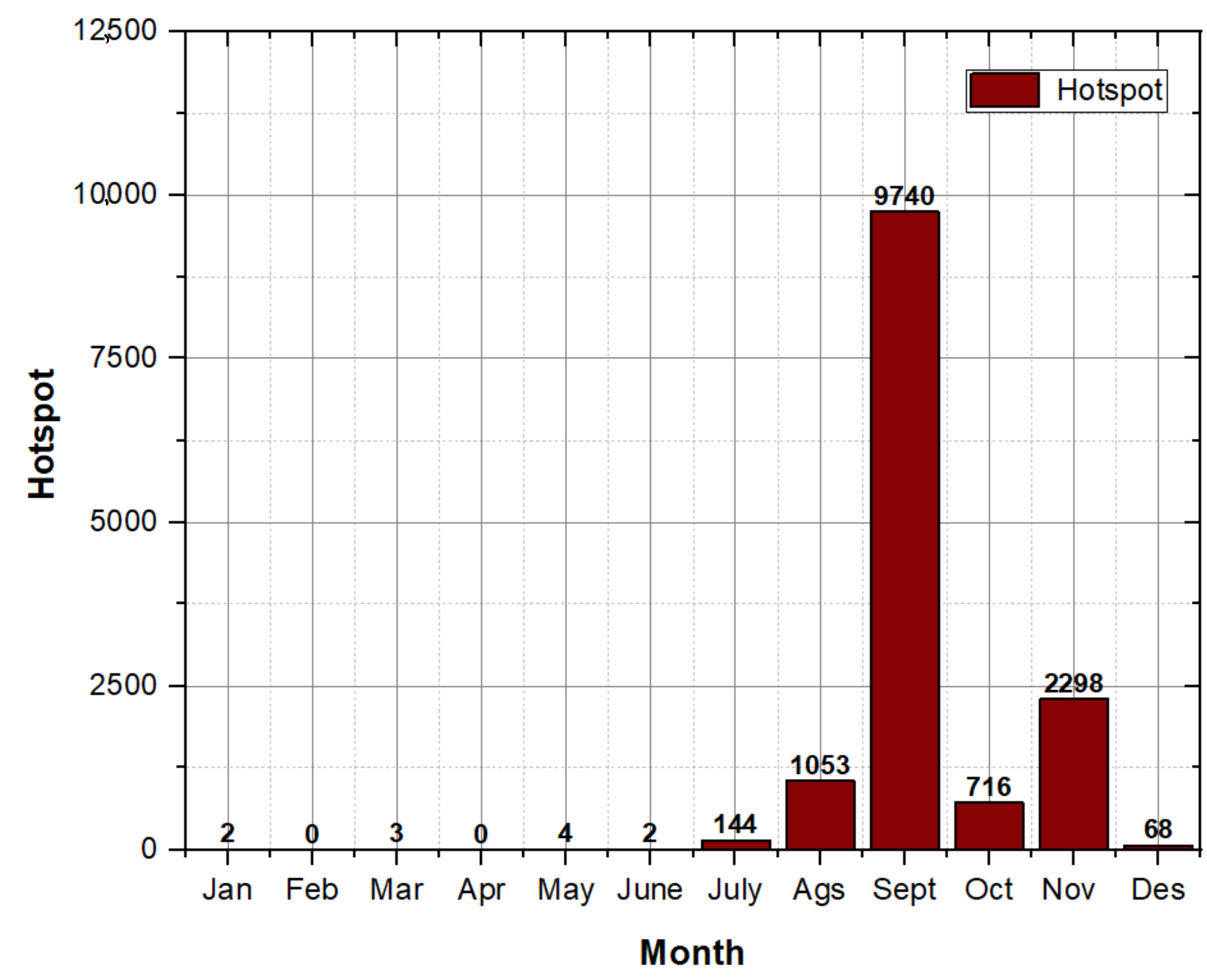

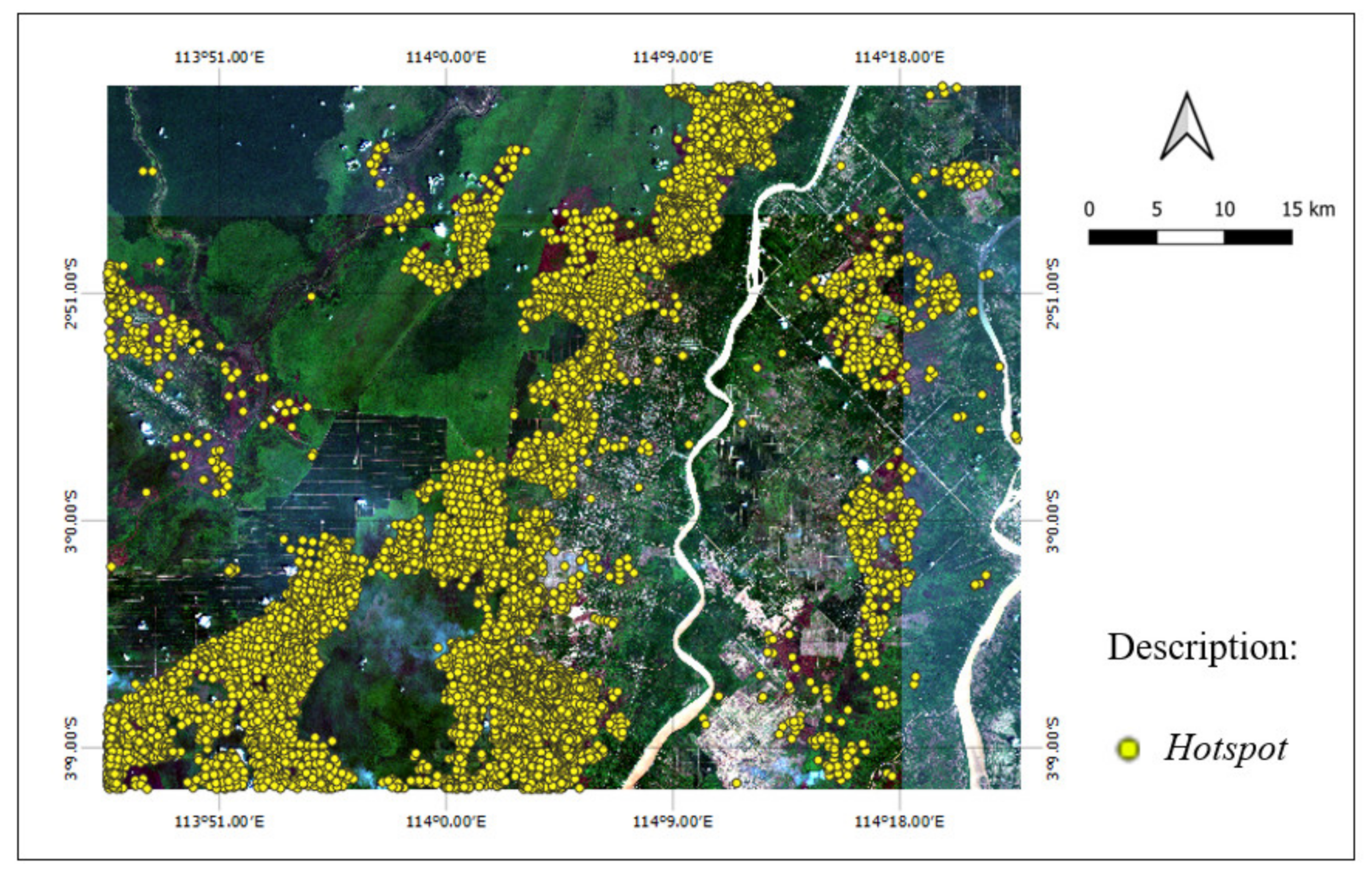

3.1. Hotspot Distribution Pattern

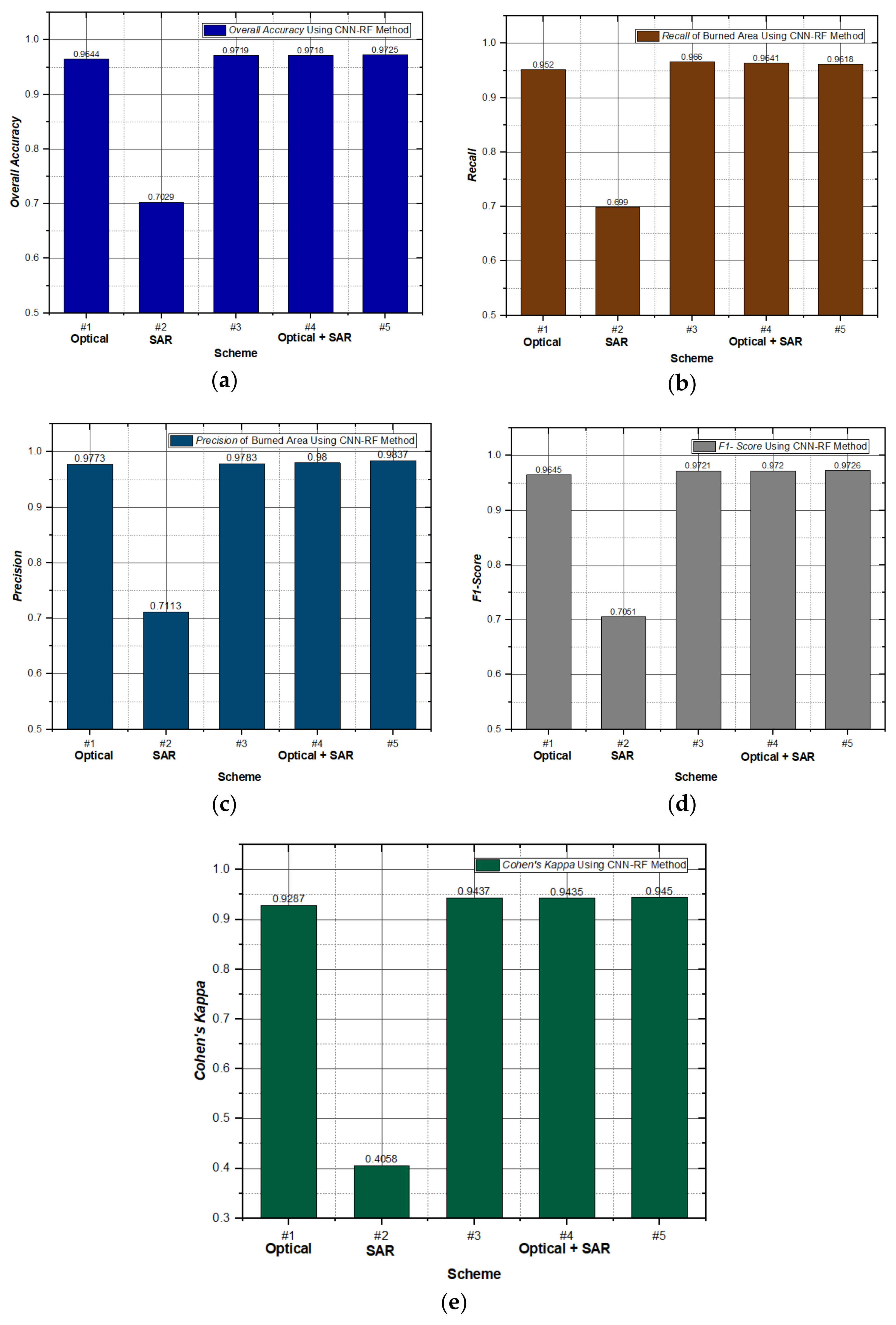

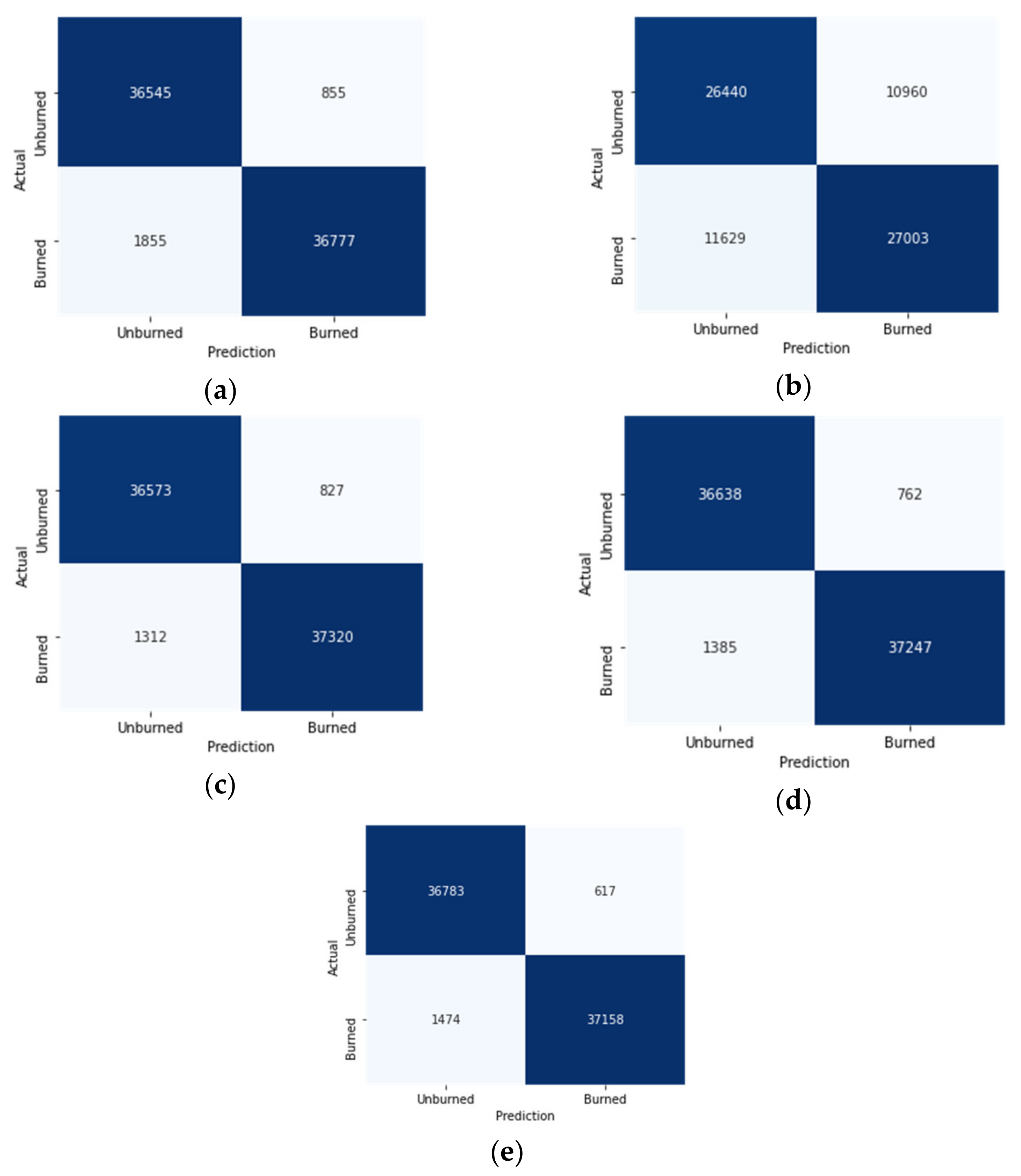

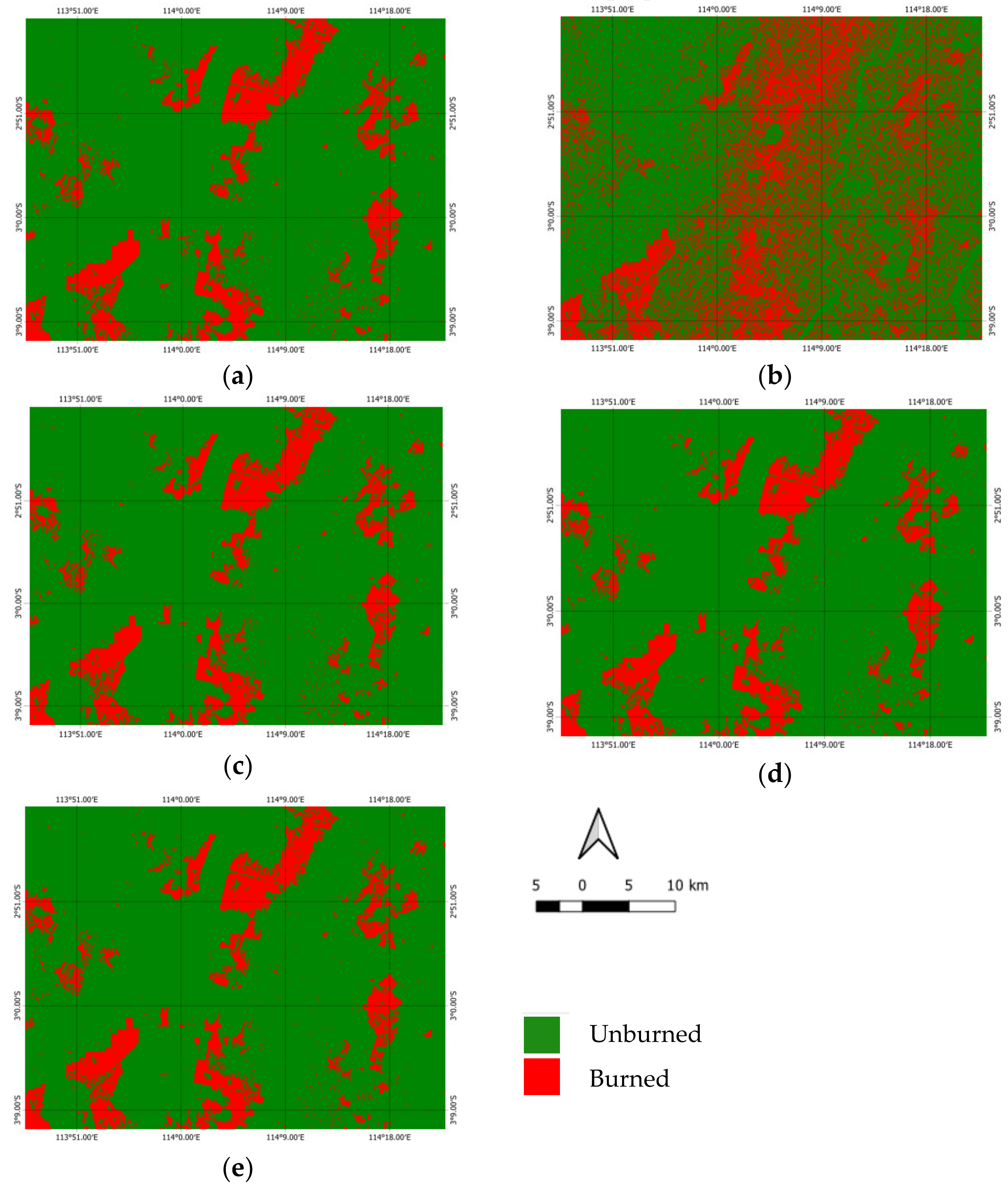

3.2. Burned Area Classification Results Using the CNN-RF Method

4. Discussion

4.1. Comparison among CNN-RF, CNN, RF, and NN Methods

4.2. Burned Area Estimation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sensor | Specification | Details | Resolution (m) |
|---|---|---|---|
| Sentinel-2 MSI | Date | Pre-fire: July 2019; post-fire: October 2019 | |
| | Band B2 | Blue | 10 |
| | Band B3 | Green | 10 |
| | Band B4 | Red | 10 |
| | Band B5 | Vegetation red edge 1 | 20 |
| | Band B6 | Vegetation red edge 2 | 20 |
| | Band B7 | Vegetation red edge 3 | 20 |
| | Band B8 | NIR | 10 |
| | Band B8A | Narrow NIR | 20 |
| | Band B11 | SWIR1 | 20 |
| | Band B12 | SWIR2 | 20 |
| | Product level | L-2A | |
| Sentinel-1 (C-band SAR) | Date | Pre-fire: July 2019; post-fire: October 2019 | |
| | Frequency | 5.405 GHz | |
| | Orbit | Descending | |
| | Product type | Ground range detected (GRD) | |
| | Acquisition mode | Interferometric wide swath (IW) | |
| | Polarization mode | VV and VH | |

| Scheme | Sensor | Band/Polarization |
|---|---|---|
| #1 | Optical | 20 bands of pre-fire and post-fire events |
| #2 | SAR | 8 bands VV and VH polarization of and of pre-fire and post-fire events |
| #3 | Optical and SAR VH Polarization | optical band and VH polarization of and of pre-fire and post-fire events, with a total of 24 bands |
| #4 | Optical and SAR VV Polarization | optical band and VV polarization of and of pre-fire and post-fire events, with a total of 24 bands |
| #5 | Optical and SAR VH and VV Polarization | optical band and VH and VV polarization of and of pre-fire and post-fire events, with a total of 28 bands |
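The band totals in the scheme table follow from stacking per-date layers: 10 Sentinel-2 bands × 2 dates = 20 optical bands, and 8 SAR bands = 2 polarizations × 2 dates × 2 backscatter layers. The sketch below reproduces those counts under that reading. The two SAR layers per polarization are an inference from the counts; the table does not name them, so `sar_a` and `sar_b` are hypothetical placeholders, as are the `pre`/`post` suffixes.

```python
from itertools import product

# Sentinel-2 bands listed in the data table (10 bands per acquisition date).
OPTICAL_BANDS = ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B11", "B12"]
DATES = ["pre", "post"]  # pre-fire (July 2019) and post-fire (October 2019)
# Hypothetical placeholders: two SAR backscatter layers per polarization per
# date, inferred from "8 bands" = 2 polarizations x 2 dates x 2 layers.
SAR_LAYERS = ["sar_a", "sar_b"]


def optical_stack() -> list[str]:
    """All optical bands for both dates (20 names)."""
    return [f"{band}_{date}" for date, band in product(DATES, OPTICAL_BANDS)]


def sar_stack(polarizations: list[str]) -> list[str]:
    """SAR layers for the given polarizations and both dates."""
    return [
        f"{pol}_{layer}_{date}"
        for date, pol, layer in product(DATES, polarizations, SAR_LAYERS)
    ]


# Band stacks for the five classification schemes.
SCHEMES = {
    1: optical_stack(),
    2: sar_stack(["VV", "VH"]),
    3: optical_stack() + sar_stack(["VH"]),
    4: optical_stack() + sar_stack(["VV"]),
    5: optical_stack() + sar_stack(["VH", "VV"]),
}

if __name__ == "__main__":
    for scheme, bands in SCHEMES.items():
        print(f"#{scheme}: {len(bands)} bands")
```

Running it lists 20, 8, 24, 24 and 28 bands for schemes #1 to #5, matching the totals stated in the table.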

| Classifier | Sensor | Scheme | OA | Recall | Precision | F1-Score | Kappa (K) |
|---|---|---|---|---|---|---|---|
| CNN | Optical | #1 | 0.9619 | 0.9475 | 0.9767 | 0.9619 | 0.9237 |
| | SAR | #2 | 0.7136 | 0.7553 | 0.7030 | 0.7282 | 0.4263 |
| | Optical and SAR | #3 | 0.9676 | 0.9665 | 0.9697 | 0.9681 | 0.9352 |
| | | #4 | 0.9716 | 0.9793 | 0.9653 | 0.9723 | 0.9432 |
| | | #5 | 0.9682 | 0.9519 | 0.9849 | 0.9681 | 0.9363 |
| RF | Optical | #1 | 0.9580 | 0.9427 | 0.9738 | 0.9580 | 0.9160 |
| | SAR | #2 | 0.6991 | 0.6908 | 0.7094 | 0.7000 | 0.3983 |
| | Optical and SAR | #3 | 0.9621 | 0.9498 | 0.9749 | 0.9622 | 0.9241 |
| | | #4 | 0.9635 | 0.9513 | 0.9763 | 0.9636 | 0.9271 |
| | | #5 | 0.9636 | 0.9525 | 0.9753 | 0.9638 | 0.9272 |
| NN | Optical | #1 | 0.9611 | 0.9602 | 0.9632 | 0.9617 | 0.9223 |
| | SAR | #2 | 0.6953 | 0.6614 | 0.7170 | 0.6881 | 0.3912 |
| | Optical and SAR | #3 | 0.9548 | 0.9742 | 0.9391 | 0.9563 | 0.9095 |
| | | #4 | 0.9506 | 0.9483 | 0.9543 | 0.9512 | 0.9012 |
| | | #5 | 0.9580 | 0.9593 | 0.9580 | 0.9587 | 0.9159 |
| CNN-RF | Optical | #1 | 0.9644 | 0.9520 | 0.9773 | 0.9645 | 0.9287 |
| | SAR | #2 | 0.7029 | 0.6990 | 0.7113 | 0.7051 | 0.4058 |
| | Optical and SAR | #3 | 0.9719 | 0.9660 | 0.9783 | 0.9721 | 0.9437 |
| | | #4 | 0.9718 | 0.9641 | 0.9800 | 0.9720 | 0.9435 |
| | | #5 | 0.9725 | 0.9618 | 0.9837 | 0.9726 | 0.9450 |
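The accuracy table reports overall accuracy (OA), recall, precision, F1-score and the kappa coefficient. The sketch below computes these from a 2×2 confusion matrix, assuming the standard definitions for a binary burned/unburned problem with "burned" as the positive class (an assumption; the exact formulas used for the table are not reproduced here). Kappa is taken to be Cohen's kappa. The helper `f1_from` recomputes F1 from reported precision and recall as a consistency check; the function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinaryMetrics:
    oa: float         # overall accuracy: (TP + TN) / N
    recall: float     # TP / (TP + FN)
    precision: float  # TP / (TP + FP)
    f1: float         # harmonic mean of precision and recall
    kappa: float      # Cohen's kappa: agreement corrected for chance


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> BinaryMetrics:
    """Metrics for a 2x2 confusion matrix; positive = burned (assumed)."""
    n = tp + fp + fn + tn
    if n == 0:
        raise ValueError("confusion matrix is empty")
    oa = (tp + tn) / n
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # Chance agreement p_e: for each class, (predicted share) x (reference share).
    p_e = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (oa - p_e) / (1 - p_e) if p_e < 1 else 0.0
    return BinaryMetrics(oa, recall, precision, f1, kappa)


def f1_from(precision: float, recall: float) -> float:
    """F1 recomputed from a reported precision/recall pair."""
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(binary_metrics(tp=90, fp=10, fn=5, tn=95))
    print(round(f1_from(0.9767, 0.9475), 4))  # CNN, scheme #1
```

For CNN scheme #1, `f1_from(0.9767, 0.9475)` rounds to 0.9619, and for CNN-RF scheme #5, `f1_from(0.9837, 0.9618)` rounds to 0.9726; both agree with the F1 column.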

| Scheme | CNN (ha) | RF (ha) | NN (ha) | CNN-RF (ha) |
|---|---|---|---|---|
| #1 | 47,834.74 | 47,762.48 | 51,749.67 | 48,367.02 |
| #2 | 112,691.83 | 108,029.40 | 96,339.55 | 106,945.36 |
| #3 | 51,750.16 | 47,536.03 | 55,671.40 | 48,735.11 |
| #4 | 50,428.16 | 47,659.81 | 51,366.37 | 48,514.23 |
| #5 | 47,903.56 | 47,433.93 | 50,783.50 | 48,824.59 |
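Burned area in hectares is obtained from a classified map by multiplying the number of burned pixels by the pixel area (1 ha = 10,000 m²). The sketch below assumes a square 10 m output grid, which is a hypothetical default chosen to match the finest Sentinel-2 bands; the actual output pixel size is not stated in the table. It also computes the relative difference between two estimates from the table.

```python
M2_PER_HECTARE = 10_000.0


def pixels_to_hectares(n_pixels: int, pixel_size_m: float = 10.0) -> float:
    """Area of n square pixels in hectares (10 m default is an assumption)."""
    return n_pixels * pixel_size_m ** 2 / M2_PER_HECTARE


def relative_difference(estimate: float, reference: float) -> float:
    """(estimate - reference) / reference, as a fraction."""
    return (estimate - reference) / reference


if __name__ == "__main__":
    # On a 10 m grid, one hectare is 100 pixels.
    print(pixels_to_hectares(100))
    # CNN-RF estimates from the table: scheme #2 (SAR only) vs. #1 (optical only).
    print(relative_difference(106_945.36, 48_367.02))
```

With the CNN-RF column, the SAR-only estimate (scheme #2) is about 121% larger than the optical-only estimate (scheme #1), i.e. roughly 2.2 times the area, whereas schemes #1, #3, #4 and #5 stay within about 1% of each other.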


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sudiana, D.; Lestari, A.I.; Riyanto, I.; Rizkinia, M.; Arief, R.; Prabuwono, A.S.; Sri Sumantyo, J.T. A Hybrid Convolutional Neural Network and Random Forest for Burned Area Identification with Optical and Synthetic Aperture Radar (SAR) Data. Remote Sens. 2023, 15, 728. https://doi.org/10.3390/rs15030728
