1. Introduction

Forests are the mainstay of terrestrial ecosystems and are essential for maintaining ecological security and balance [1]. Forest resource surveys and monitoring are important for formulating forestry guidelines and policies, protecting and utilizing planned forests, and building a sound ecological environment [2]. Tree species identification is a basic and key component of forest resource monitoring and plays a vital role in forest fire prevention [3], the monitoring of forest pests and diseases [4], the extraction of forest change information [5], and the protection of biodiversity [6]. Traditional tree species identification relies mainly on manual field surveys, in which species are identified from the external morphology of trees. Although this method is highly accurate, plots without road access are hard to reach, difficult to investigate, and dangerous to survey [7]. In addition, field surveys are costly and time-consuming, which makes it challenging to identify tree species over large areas in a short time.

The rapid development of remote sensing technology compensates for the shortcomings of manual surveys: large-area image data can be acquired without touching the trees, so tree species can be classified and identified at regional scale without damaging the forest ecological environment. In particular, a hyperspectral sensor images the target region simultaneously in tens to hundreds of contiguous, narrow spectral bands, capturing both the spatial information of the surface and its spectral information in a single data cube. Compared with RGB and multispectral images, hyperspectral images carry rich spectral information and can detect subtle differences between the spectra of different vegetation, which is a significant advantage in forest tree species classification.

In recent years, deep learning methods based on neural networks have become popular with the development of computer hardware and algorithms. As an emerging research direction in machine learning, deep learning uses deep neural network structures that automatically learn high-level abstract features and combine them layer by layer to achieve efficient and accurate classification and prediction [8,9]. Compared with traditional machine learning methods, deep learning has stronger self-adaptive and generalization capabilities, handles large-scale complex data better, and has achieved great success in computer vision, natural language processing, speech recognition, and other fields. In remote sensing, deep learning has attracted extensive attention, and many researchers have used it for tree species classification with good results [10,11,12]. Among these methods, the convolutional neural network (CNN) has achieved remarkable results in computer vision tasks such as image classification [13], object detection [14], and semantic segmentation [15]. Owing to its powerful feature extraction capability, the CNN has become the most commonly used neural network in hyperspectral tree species classification [16,17,18]. CNN-based hyperspectral image classification methods can be divided into three main classes:

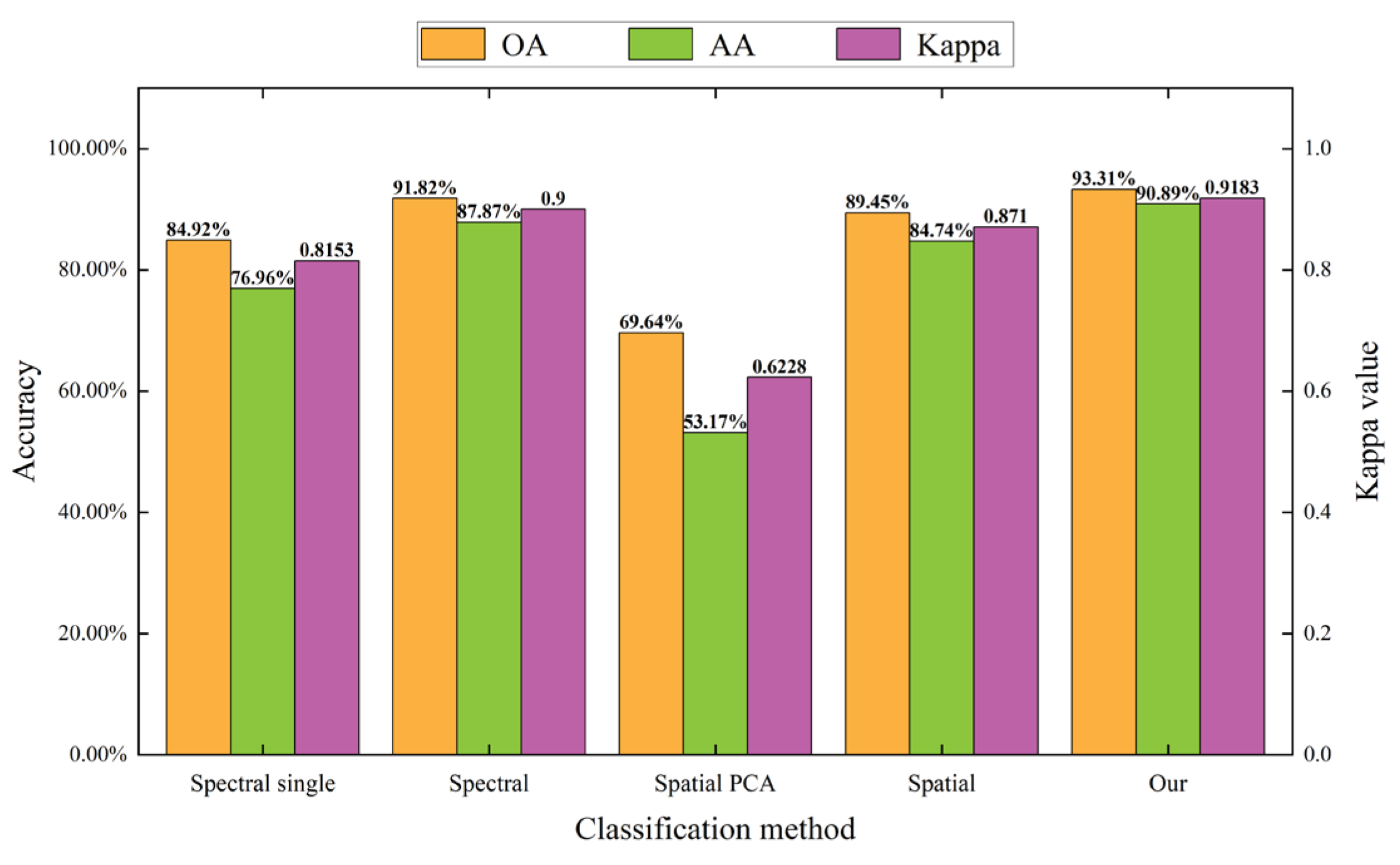

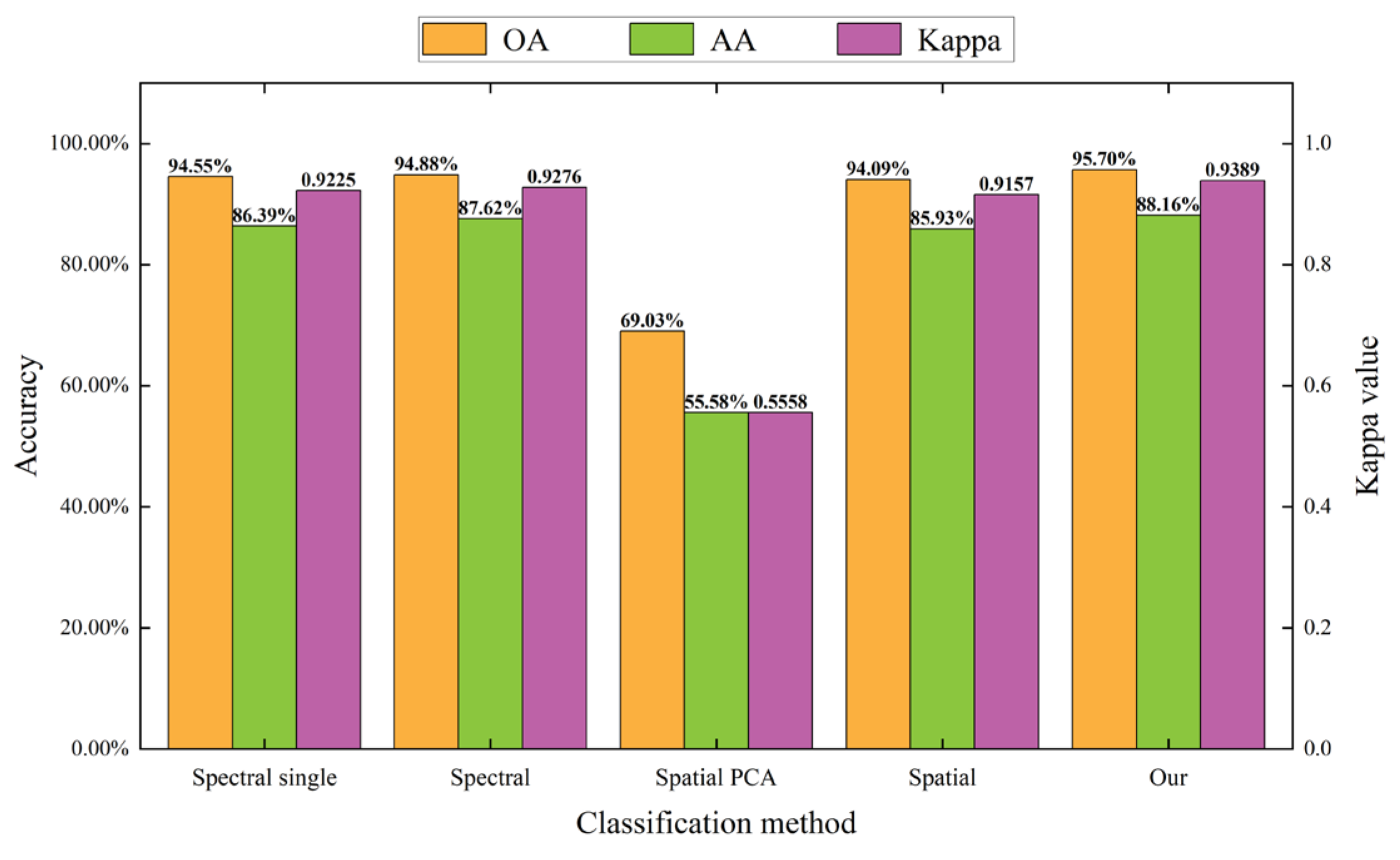

Classification methods based on spectral features [19,20]. This method uses a 1D-CNN to extract features from the raw spectral information of pixels to complete classification. Xi et al. [21] applied a 1D-CNN to tree species classification in OHS-1 hyperspectral images, and the results showed that the accuracy obtained using a 1D-CNN (85.04%) was better than that of the Random Forest classification model (80.61%). However, the 1D-CNN only considered the spectral information of the samples and not their spatial information.

Classification methods based on spatial features [22,23]. This method first performs feature dimensionality reduction on hyperspectral images and then extracts spatial information in a neighborhood centered on the pixel to be classified, using a 2D-CNN to complete classification. Fricker et al. [24] performed PCA dimensionality reduction on hyperspectral data, used a 2D-CNN to extract spatial features from the reduced data, and classified seven dominant species and dead trees in a mixed coniferous forest, achieving a classification accuracy of 87%. Although the 2D-CNN utilizes the spatial information of pixels, it loses part of the original spectral information in the dimensionality reduction process.

Classification methods based on spatial–spectral feature association. One approach is to use a 3D-CNN to extract the spectral and spatial features of pixels simultaneously [25,26]. Zhang et al. [27] proposed an improved 3D convolutional neural network for tree species classification that takes the raw airborne hyperspectral data as input, without dimensionality reduction or feature selection, and extracts spectral and spatial features simultaneously, achieving a tree species classification accuracy of 93.14%. However, this method relies only on the 3D convolutional structure, which can easily lead to overfitting when the number of network parameters is large. Another approach is to use different networks to extract spectral and spatial features separately and then combine the two sets of features for classification [28,29,30]. For example, Liang et al. [31] proposed a spectral–spatial parallel convolutional neural network (SSPCNN) to classify forest tree species in UAV hyperspectral images (HSI). The experimental results showed that the SSPCNN was competitive with other methods. However, its network structure is relatively simple, and it does not classify well in complex forests.

In short, methods based on spectral or spatial features may lose certain information and fail to take full advantage of hyperspectral images. Hyperspectral images contain both spatial and rich spectral information, so classification methods based on spatial–spectral feature association are more in line with hyperspectral characteristics. Compared with a 3D-CNN, the double-branch network can design different forms of spectral and spatial networks to extract features, which has more flexibility and a strong advantage in hyperspectral tree species classification. In tree species classification, different spectral features and spatial features have different abilities to distinguish tree species, and the neural network should focus on the features that contribute significantly to the classification results. Therefore, the accuracy of tree species classification can be improved by introducing an attention mechanism to make the network focus on important features and suppress unimportant features.

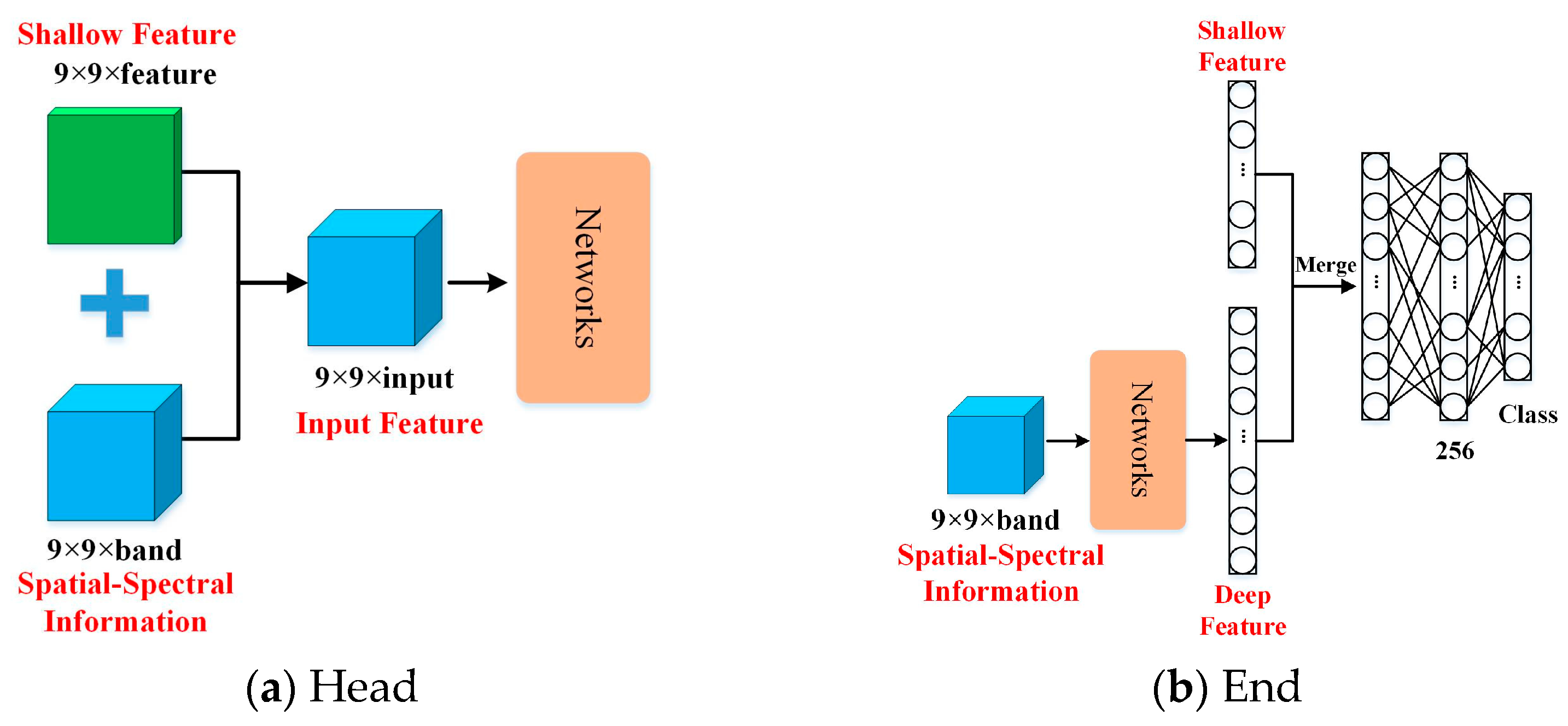

In summary, tree species identification is essential for forest inventory, and remote sensing has strong advantages for identifying tree species at large scale. Because airborne hyperspectral images carry rich spectral and spatial information and can detect subtle differences between tree species, we used them as the data source. However, classification methods based on spectral features ignore the spatial information of hyperspectral images, and methods based on spatial features lose spectral information during dimensionality reduction. To fully utilize the spatial–spectral information of hyperspectral images, we design a double-branch network consisting of a spectral branch and a spatial branch. In the spectral branch, we use a 3D-CNN instead of a 1D-CNN to extract the spectral features of pixels, which avoids the loss of spatial information. In the spatial branch, we use multi-scale convolution and a 2D-CNN to extract the spatial features of pixels. Notably, we do not reduce the dimensionality of the original data but input all spectral bands into the spatial branch, which avoids the loss of spectral information caused by dimensionality reduction. In both branches, we design corresponding residual blocks to extract features better. To further utilize the spatial–spectral information, we fuse the features obtained from the two branches through a concatenation operation to obtain spatial–spectral features. In addition, the neural network should focus on features that contribute significantly to classification, so we introduce SimAM (Simple Parameter-Free Attention Module) into the network, a parameter-free attention mechanism that makes the network focus on important features without increasing the number of network parameters. Finally, a fully connected layer completes the tree species classification.

Our main contributions can be summarized as follows:

- 1.

To fully utilize the advantages of the airborne hyperspectral images, we propose a double-branch spatial–spectral joint network based on the SimAM attention mechanism for tree species classification. The network consists of three parts: spectral branch, spatial branch, and feature fusion. The spatial–spectral information of pixels is utilized in both spectral and spatial branches to extract features, and spatial and spectral features are merged in the feature fusion stage. Moreover, the SimAM attention mechanism is used to refine the features further to improve the classification accuracy.

- 2.

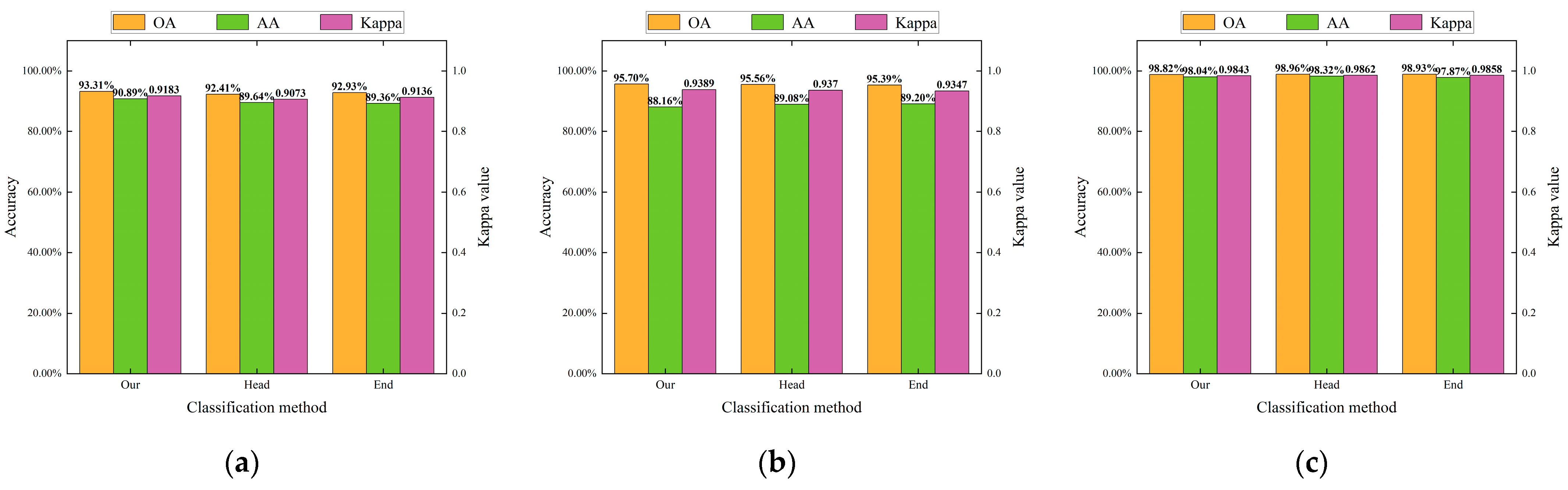

To verify the effectiveness of the proposed method, we conducted tree species classification experiments on three different tree species datasets, and the experimental results showed that the method proposed in this study performed the best and obtained the highest tree species classification accuracy compared to other classification methods. Furthermore, we further verified the importance of joint spatial–spectral information and the SimAM attention mechanism through ablation experiments. Finally, we analyzed the factors affecting the classification accuracy of tree species.

The rest of this article is organized as follows: Section 2 describes the related work. Section 3 describes the three tree species datasets used in this study and the proposed method in detail. Section 4 presents the classification results. Section 5 discusses and analyzes the classification of tree species from different perspectives. Finally, the manuscript presents the conclusions and briefly describes directions for future work.

3. Materials and Methodology

3.1. Dataset Introduction

To verify the robustness of the proposed method, we conducted tree species classification experiments on three different hyperspectral datasets. The three study areas are located in different spatial locations, and the datasets are acquired using various hyperspectral sensors, with different spatial resolutions and different tree species categories. Next, the three datasets are described in detail.

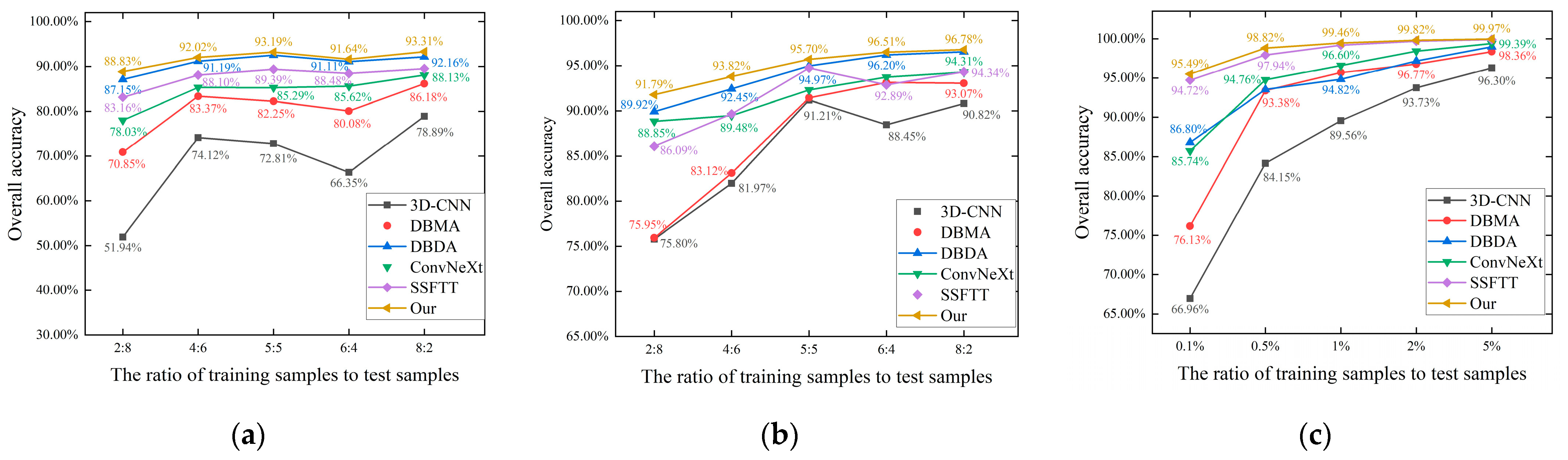

3.1.1. TEF Dataset

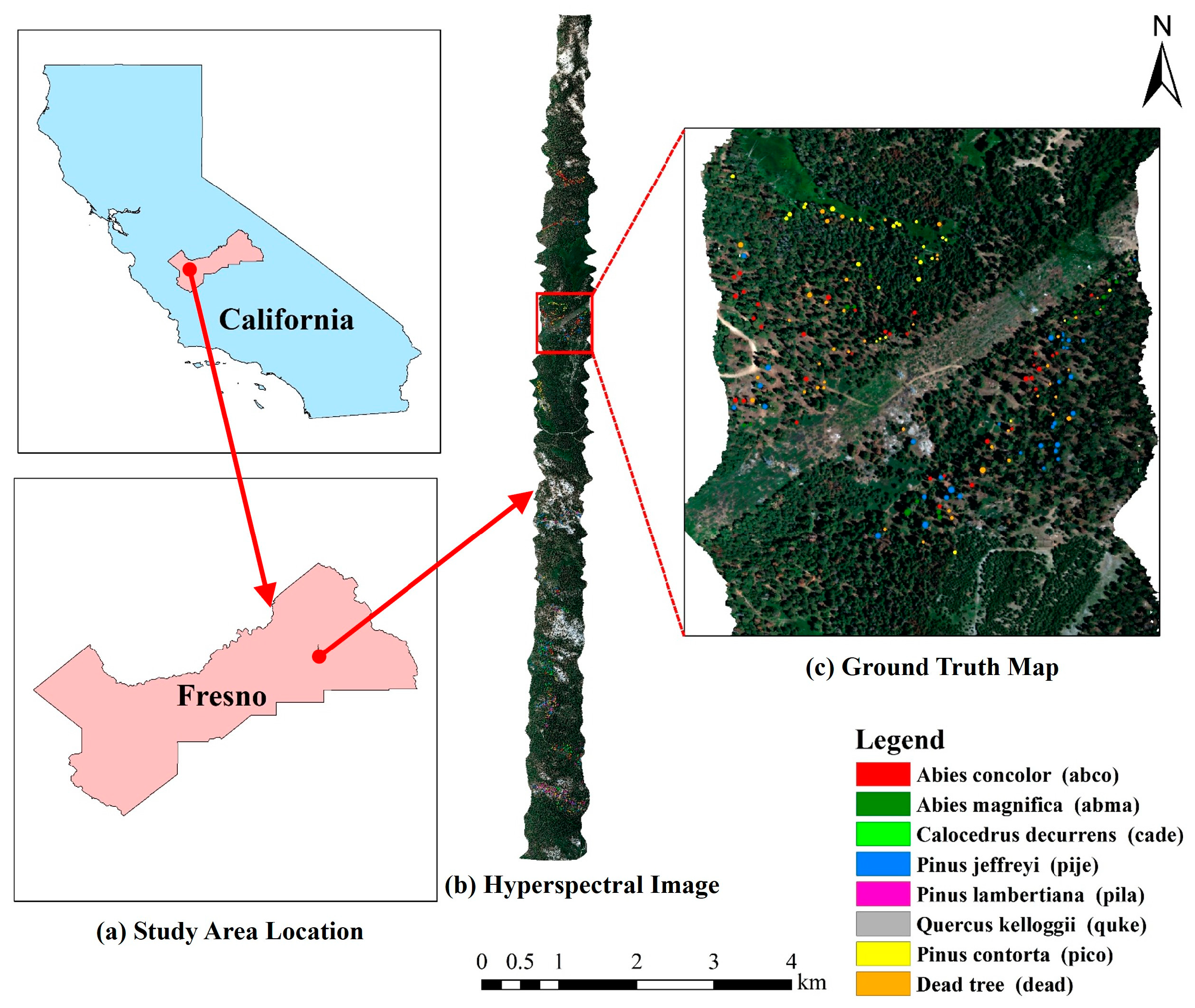

The Teakettle Experimental Forest (TEF) study area is located in northeastern Fresno, California, USA (36°59′51″N, 119°1′28″W), near the southern Sierra Nevada Mountains, as shown in Figure 1a. The TEF dataset was collected in 2017 by the National Ecological Observatory Network (NEON) using the airborne remote sensing platform AOP. A south-to-north flight strip, approximately 16 km long and 1 km wide, covering a portion of the Teakettle Experimental Forest, was used for this study. The hyperspectral sensor covers a wavelength range of 380–2510 nm with a spectral sampling interval of 5 nm, resulting in 426 bands. Data were acquired at an altitude of approximately 1000 m above the ground, resulting in an image spatial resolution of 1 m. After removing the empty band and the bands affected by water vapor absorption, the remaining 388 bands were used for experiments. The hyperspectral data were preprocessed by NEON at the time of dataset release, including radiometric calibration, geometric correction, atmospheric correction, and orthorectification. Field data were provided by Fricker et al. [24] and include seven dominant tree species and dead trees.

The dataset was divided using stratified random sampling. Specifically, a fixed proportion of the data in each category was assigned to the training and test sets. To reduce the influence of chance in any single random split, we adopted 5-fold cross-validation to obtain five training/test splits. In each experiment, the model was trained and tested on each of the five splits, and the average was taken as the final classification result. The number of training and test samples for each category in the TEF dataset is shown in Table 1.
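The splitting procedure described above can be illustrated with a minimal sketch. It assumes a conventional stratified 5-fold split (each fold serves once as the test set); the function name `stratified_kfold` and the toy labels are hypothetical and are not taken from the study's code.

```python
import random
from collections import defaultdict

def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs with every class spread evenly over k folds."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the shuffled samples of this class round-robin into the folds.
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    for i in range(k):
        test = sorted(folds[i])
        train = sorted(idx for j, fold in enumerate(folds) if j != i for idx in fold)
        yield train, test

labels = ["fir"] * 10 + ["pine"] * 5   # hypothetical toy labels
splits = list(stratified_kfold(labels, k=5))
```

Each of the five splits would then be used for one training and testing run, and the five accuracies averaged.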

3.1.2. Tiegang Reservoir Dataset

The Tiegang Reservoir study area is located in the southeastern part of Baoan District, Shenzhen City, Guangdong Province, China (22°36′30″N, 113°54′30″E), as shown in Figure 2a. The image was collected using an independently integrated UAV hyperspectral system of the Chinese Academy of Surveying and Mapping. The hyperspectral sensor is a push-broom scanner that records 112 bands in the 400–1000 nm spectral range with a spectral resolution of 5 nm. The flight altitude was set to 100 m above ground level, resulting in an image spatial resolution of 0.1 m. We performed pre-processing operations such as outlier removal, radiometric calibration, geometric correction, atmospheric correction, and image mosaicking on the raw image. The hyperspectral image is shown in Figure 2b. Field data were collected at the end of the flight process and contain a total of seven tree species.

Because the spatial resolution of the Tiegang Reservoir dataset is 0.1 m and the dataset contains more pixels than the TEF dataset, we did not use 5-fold cross-validation; instead, the data were divided into training and test sets at a ratio of 1:1. This random split was repeated five times, and the average was taken as the final classification result. The number of training and test samples for each category in the Tiegang Reservoir dataset is shown in Table 2.

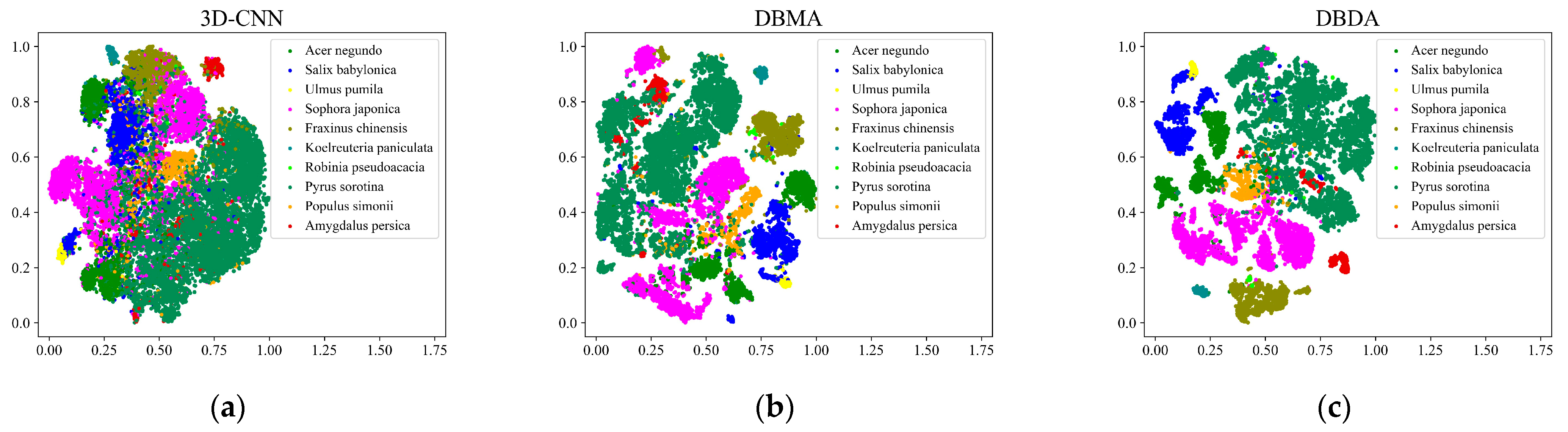

3.1.3. Xiongan New Area Dataset

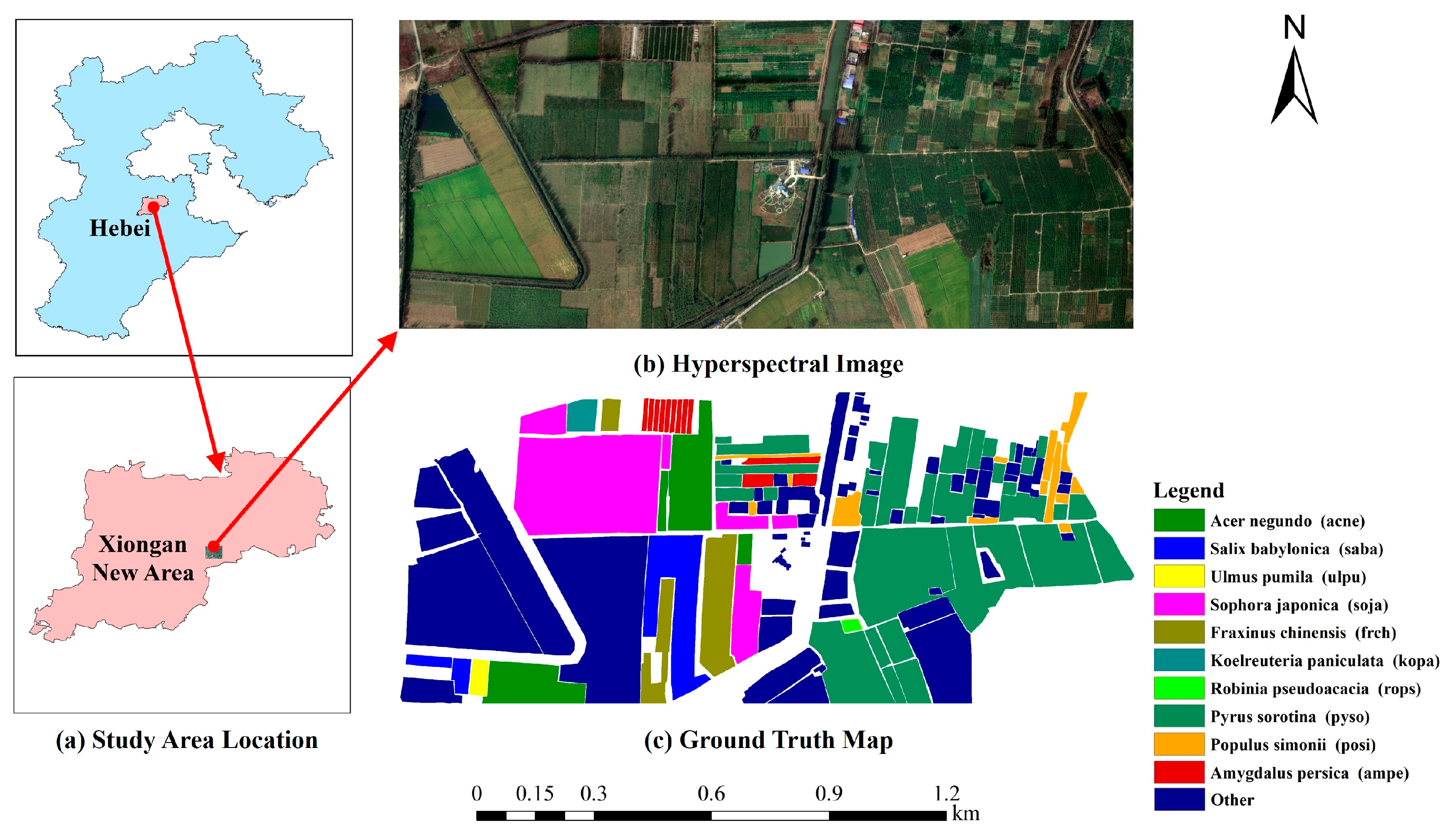

The Xiongan New Area study area is located in Matiwan Village, in the southeastern part of Xiongan New Area, Hebei Province, China (38°56′40″N, 116°3′57″E), as shown in Figure 3a. The hyperspectral image was collected in October 2017 by the Institute of Remote Sensing and Digital Earth and the Shanghai Institute of Technical Physics of the Chinese Academy of Sciences. The hyperspectral sensor covers a wavelength range of 390–1000 nm, with a spectral sampling interval of approximately 2.4 nm, resulting in 256 bands. The data were collected using the airborne remote sensing platform at about 2000 m above the ground, resulting in an image spatial resolution of 0.5 m. The image contains 3750 × 1580 pixels, as shown in Figure 3b. Through the field investigation of land cover types, 20 categories were annotated, as shown in Figure 3c, including many tree species, such as Pyrus sorotina, Acer negundo, Salix babylonica, etc.

The Xiongan New Area dataset differs from the other two datasets. All trees are artificial plantations, which are uniformly distributed, and the boundaries between different tree species are clear. Considering the difficulty and high cost of sample acquisition in practical remote sensing applications for forests, we explored the tree species classification performance of the network with limited training samples. Specifically, we randomly selected 0.5% of the data from each category as the training set and 5% of the data as the test set. Because the objects of the study were typical broadleaf tree species in northern China, all non-tree categories were merged into a single "other" class. As before, the random selection was repeated five times to reduce the influence of chance, resulting in five datasets. The network was trained and tested on each of the five datasets in each experiment, and the average was taken as the final classification result. The number of training and test samples for each category in the Xiongan New Area dataset is shown in Table 3.
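As an illustration of the limited-sample protocol, the following sketch draws 0.5% of each class for training and a disjoint 5% for testing. The function name `sample_per_class`, the rounding rule, and the minimum of one sample per class are assumptions made for the example; the study does not specify them.

```python
import random
from collections import defaultdict

def sample_per_class(labels, train_frac=0.005, test_frac=0.05, seed=0):
    """Return disjoint (train_idx, test_idx) lists drawn class by class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Assumed rule: round to the nearest count, but keep at least one sample.
        n_train = max(1, round(len(indices) * train_frac))
        n_test = max(1, round(len(indices) * test_frac))
        train += indices[:n_train]
        test += indices[n_train:n_train + n_test]
    return train, test

labels = [0] * 2000 + [1] * 1000        # hypothetical pixel labels
train_idx, test_idx = sample_per_class(labels)
```

Calling the function with five different seeds would give five datasets of the kind described above.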

3.2. Methodology

The hyperspectral image data

(where

and

denote the spatial size of HSI and the number of spectral bands, respectively) are a three-dimensional structure, which contains spatial information and rich spectral information. To better classify HSI pixels

with spectral and spatial information, the HSI patch

is cropped from

and input into the neural network to extract spatial–spectral features. Here, the center pixel of

is

, and

is patch size, chosen in this study to be 9 × 9. Moreover, to fully utilize the advantages of hyperspectral images, we proposed a double-branch spatial–spectral joint network based on the SimAM attention mechanism for tree species classification. The network structure is shown in

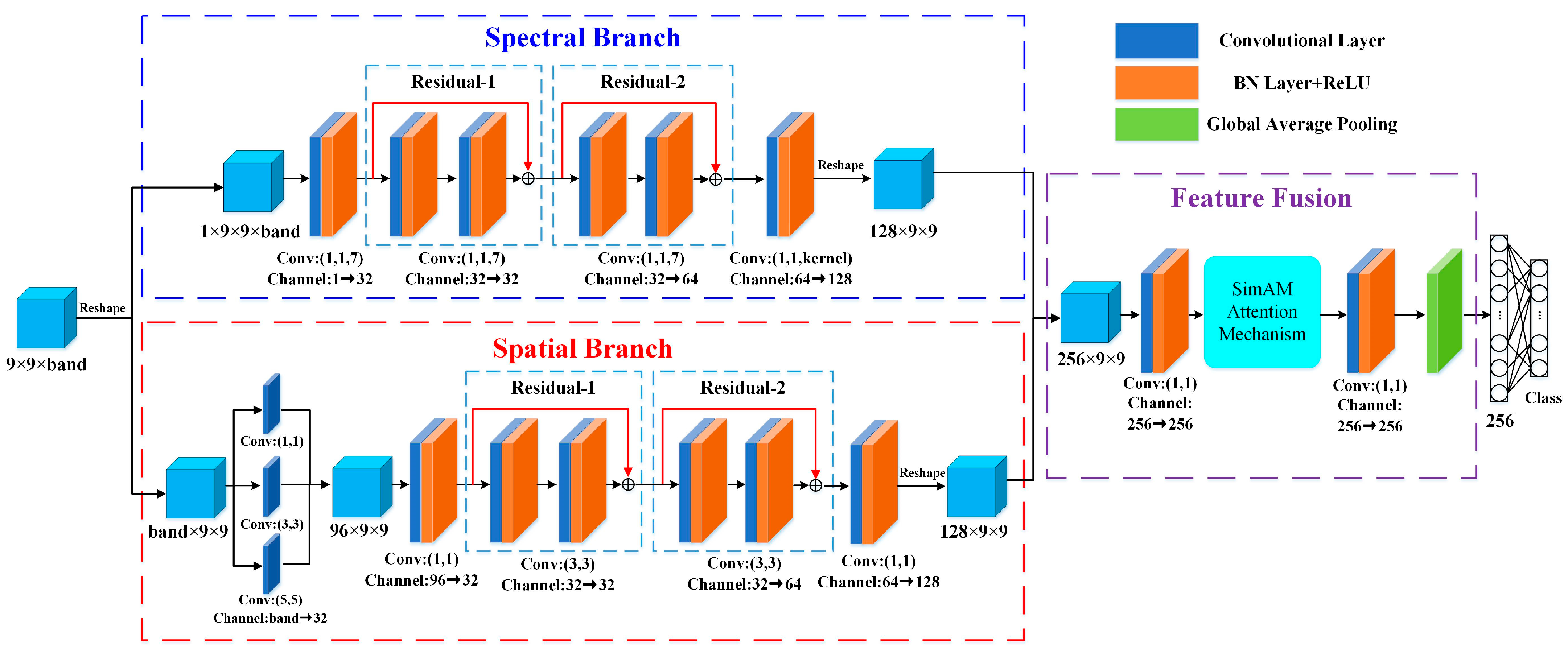

Figure 4, and consists of three parts: spectral branch, spatial branch, and feature fusion. Specifically, the spectral branch mainly uses a 3D-CNN to extract the spectral features of pixels. The spatial branch mainly uses a 2D-CNN to extract the spatial features of pixels. To make further use of the spatial–spectral information of hyperspectral data, we fuse the features extracted from the spectral branch with those extracted from the spatial branch, and introduce the SimAM attention mechanism in the fusion stage. By assigning different weights to each part of the feature map, important features are extracted, and unimportant features are suppressed, thus improving the classification accuracy of tree species.
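The patch cropping step can be sketched with NumPy as follows. The helper name `extract_patch` is hypothetical, and reflect padding at the image border is an assumption; the text does not state how border pixels are handled.

```python
import numpy as np

def extract_patch(image, row, col, size=9):
    """Crop a size x size x B patch centred on pixel (row, col) of an H x W x B cube."""
    half = size // 2
    # Assumed border handling: mirror the image so edge pixels still get a full patch.
    padded = np.pad(image, ((half, half), (half, half), (0, 0)), mode="reflect")
    # After padding, original pixel (row, col) sits at (row + half, col + half).
    return padded[row:row + size, col:col + size, :]

cube = np.random.rand(20, 30, 112)      # hypothetical H x W x B hyperspectral cube
patch = extract_patch(cube, 0, 0)       # 9 x 9 x 112 patch around a corner pixel
```

The patch keeps all spectral bands, so it can be reshaped to (1, 9, 9, band) for the spectral branch or (band, 9, 9) for the spatial branch.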

3.2.1. Spectral Branch

When a 1D-CNN is used to extract the spectral features of pixels, the spatial information of the data is lost, whereas a 3D-CNN extracts the spectral features together with their spatial features and thus avoids this loss. Therefore, in the spectral branch, we utilize a 3D-CNN to extract the spectral features of pixels. The 3D convolution operation is given in Equation (1):

$$v_{l,j}^{x,y,z} = f\left(\sum_{m}\sum_{h=0}^{H_l-1}\sum_{w=0}^{W_l-1}\sum_{r=0}^{R_l-1} k_{l,j,m}^{h,w,r}\, v_{l-1,m}^{x+h,\,y+w,\,z+r} + b_{l,j}\right) \tag{1}$$

where $v_{l,j}^{x,y,z}$ is the value at position $(x, y, z)$ on the $j$th feature cube in the $l$th layer. $H_l$ and $W_l$ denote the height and width of the 3D convolution kernel in the spatial dimension, respectively, and $R_l$ denotes the size of the 3D convolution kernel in the spectral dimension. $k_{l,j,m}^{h,w,r}$ is the weight parameter at position $(h, w, r)$ of the $j$th convolution kernel in the $l$th layer, and the convolution kernel is connected to the $m$th feature cube in the $(l-1)$th layer. $v_{l-1,m}^{x+h,\,y+w,\,z+r}$ is the value at position $(x+h, y+w, z+r)$ on the $m$th feature cube in the $(l-1)$th layer. $b_{l,j}$ is the bias value. $f(\cdot)$ is the activation function; we chose the ReLU activation function in this study.
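To make the 3D convolution concrete, here is a deliberately naive NumPy sketch for a single input feature cube and a single kernel, followed by ReLU. The sum over input cubes, stride, and padding are omitted for brevity, and the function name `conv3d_single` is hypothetical.

```python
import numpy as np

def conv3d_single(cube, kernel, bias=0.0):
    """Valid 3D convolution of one feature cube with one kernel, then ReLU."""
    H, W, R = kernel.shape               # kernel height, width, spectral depth
    X, Y, Z = cube.shape
    out = np.zeros((X - H + 1, Y - W + 1, Z - R + 1))
    for x in range(out.shape[0]):
        for y in range(out.shape[1]):
            for z in range(out.shape[2]):
                # Weighted sum over the kernel-sized window starting at (x, y, z).
                window = cube[x:x + H, y:y + W, z:z + R]
                out[x, y, z] = np.sum(window * kernel) + bias
    return np.maximum(out, 0.0)          # ReLU activation

cube = np.random.rand(9, 9, 20)          # hypothetical 9 x 9 patch with 20 bands
kernel = np.ones((1, 1, 7)) / 7.0        # (1, 1, 7) kernel acting along the spectrum
features = conv3d_single(cube, kernel)
```

With a (1, 1, 7) kernel the spatial size of the patch is unchanged and only the spectral axis is shortened, which is why such kernels are suited to extracting spectral features.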

The input data format for the spectral branch is (1, 9, 9, band), which contains all pixels within a 9 × 9 neighborhood centered on the pixel to be classified. First, we convolve the data using a 3D convolution kernel of size (1, 1, 7) to increase the number of channels to 32. After that, we design two spectral residual blocks to extract features further. The residual structure connects the convolutional layers through identity mapping, which promotes better backpropagation of the gradient and helps to alleviate the problems of vanishing and exploding gradients [46]. Each spectral residual block consists of two 3D convolutional layers. Meanwhile, to speed up the training and convergence of the network and to prevent overfitting, we add a Batch Normalization (BN) layer after each convolutional layer of the network. Finally, we utilize a 3D convolution kernel of size (1, 1, kernel), where kernel denotes the number of bands remaining after the preceding convolutions, to obtain a spectral feature map of size (128, 9, 9), denoted by $F_{spe}$.
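The value of kernel in the final (1, 1, kernel) convolution depends on how much the earlier layers shorten the spectral axis. The sketch below shows the arithmetic under two assumptions that are not stated in the text: the first (1, 1, 7) convolution uses a spectral stride of 2 with no padding, and the residual blocks preserve the spectral length. Both helper names are hypothetical.

```python
def conv_out_len(n, kernel, stride=1, padding=0):
    """Standard output-length formula for a 1D convolution axis."""
    return (n + 2 * padding - kernel) // stride + 1

def remaining_bands(bands, first_kernel=7, first_stride=2):
    """Bands left after the first spectral convolution (stride is an assumption)."""
    return conv_out_len(bands, first_kernel, first_stride)

# Band counts of the three datasets as given in the dataset descriptions.
for name, bands in {"TEF": 388, "Tiegang Reservoir": 112, "Xiongan New Area": 256}.items():
    print(name, remaining_bands(bands))
```

Under a different stride or padding the numbers change, but the final kernel always spans whatever spectral length remains, collapsing that axis to 1.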

3.2.2. Spatial Branch

In the spatial branch, we utilize a 2D-CNN to extract the spatial features of hyperspectral images. A 2D-CNN mainly uses a 2D convolution kernel to perform convolution operations on 2D data. The value $v_{l,j}^{x,y}$ at position $(x, y)$ on the $j$th feature map in the $l$th layer is:

$$v_{l,j}^{x,y} = f\left(\sum_{m}\sum_{h=0}^{H_l-1}\sum_{w=0}^{W_l-1} k_{l,j,m}^{h,w}\, v_{l-1,m}^{x+h,\,y+w} + b_{l,j}\right) \tag{2}$$

where $H_l$ and $W_l$ denote the height and width of the 2D convolution kernel, respectively. $k_{l,j,m}^{h,w}$ is the weight parameter at position $(h, w)$ of the $j$th convolution kernel in the $l$th layer, and the convolution kernel is connected to the $m$th feature map in the $(l-1)$th layer. $v_{l-1,m}^{x+h,\,y+w}$ is the value at position $(x+h, y+w)$ on the $m$th feature map in the $(l-1)$th layer. $b_{l,j}$ is the bias value. $f(\cdot)$ is the activation function; similarly, we chose the ReLU activation function in the spatial branch.

In previous studies, when researchers extracted spatial features of hyperspectral images using a 2D-CNN, they first processed the raw data using a dimensionality reduction algorithm (e.g., PCA) and then used the neural network for classification. However, feature dimensionality reduction loses part of the spectral information of the data. To avoid this loss, instead of performing dimensionality reduction, we input all of the original spectral bands of the pixels into the network. Hence, the input data format of the spatial branch is (band, 9, 9). In the spatial branch, we first extract the multi-scale spatial features of the data using multi-scale convolution (with convolution kernels of 1 × 1, 3 × 3, and 5 × 5). The number of output channels of each convolution is 32, so three sets of feature maps of size (32, 9, 9) are obtained. Then, the three sets of features are combined to form a multi-scale feature map used as input to the subsequent convolutional layers, as shown in Equation (3):

$$F_{ms} = \mathrm{Concat}\left(F_{1\times1}, F_{3\times3}, F_{5\times5}\right) \tag{3}$$

where $F_{ms}$ denotes the multi-scale feature map, and $F_{1\times1}$, $F_{3\times3}$, and $F_{5\times5}$ denote the feature maps obtained from the convolutional layers at the three scales, respectively.

After multi-scale convolution, we apply a 2D convolution with a kernel size of (1, 1) to the multi-scale feature map, with the number of output channels set to 32 to reduce the number of parameters. Similarly, in the spatial branch, we also design two spatial residual blocks with a convolution kernel size of (3, 3). Finally, after a convolutional layer with kernel size (1, 1), a spatial feature map of size (128, 9, 9) is obtained, denoted by $F_{spa}$.
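The shape bookkeeping of the multi-scale step can be sketched in NumPy. Two points are assumptions rather than statements from the text: each convolution uses "same" padding so that the 9 × 9 size is kept, and the three feature maps are combined by channel-wise concatenation. Zero arrays stand in for real convolution outputs, and the names are hypothetical.

```python
import numpy as np

def same_padding(kernel_size):
    """Padding that keeps the spatial size for an odd kernel with stride 1."""
    return (kernel_size - 1) // 2

# Stand-ins for the outputs of the 1x1, 3x3 and 5x5 convolutions (32 channels each).
branch_maps = {k: np.zeros((32, 9, 9)) for k in (1, 3, 5)}

# Assumed combination: stack the three maps along the channel axis -> (96, 9, 9).
multi_scale = np.concatenate([branch_maps[k] for k in (1, 3, 5)], axis=0)

# A 1x1 convolution is a per-pixel linear map over channels: 96 -> 32 channels.
weights = np.random.rand(32, 96)
reduced = np.einsum("oc,chw->ohw", weights, multi_scale)
```

Writing the 1 × 1 convolution as an `einsum` makes explicit that it mixes channels only and leaves the spatial layout untouched.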

3.2.3. Feature Fusion

After the raw data pass through the spectral branch and the spatial branch, the spectral features $F_{spe}$ and the spatial features $F_{spa}$, each of size (128, 9, 9), are obtained. We combine these two features to utilize the spatial–spectral information of the data further. Since the spectral and spatial features are in different domains, the concatenation operation is chosen instead of the addition operation so that the two features can be kept independent. The features are merged to form the spatial–spectral features $F_{ss}$ of size (256, 9, 9):

$$F_{ss} = \mathrm{Concat}\left(F_{spe}, F_{spa}\right) \tag{4}$$

All features in the fused feature map $F_{ss}$ have the same weight. However, different spatial locations and channels have different distinguishing abilities for tree species. In order to extract features with stronger discriminative ability, we introduce the SimAM attention mechanism into the network to weight the feature maps. The SimAM attention mechanism finds the weight of each neuron in the feature maps by minimizing an energy function [47]. The energy function of the $i$th neuron $x_i$ is shown in Equation (5):

$$e_i^{*} = \frac{4\left(\hat{\sigma}^2 + \lambda\right)}{\left(x_i - \hat{\mu}\right)^2 + 2\hat{\sigma}^2 + 2\lambda} \tag{5}$$

where $\hat{\mu} = \frac{1}{M}\sum_{i=1}^{M} x_i$ and $\hat{\sigma}^2 = \frac{1}{M}\sum_{i=1}^{M}\left(x_i - \hat{\mu}\right)^2$ denote the mean and variance of all neurons on the channel, respectively. $M$ represents the number of neurons per channel, $M = H \times W$. $\lambda$ denotes the regularization term.

The lower the energy $e_i^{*}$, the greater the difference between the target neuron $x_i$ and the surrounding neurons, i.e., the more important the neuron. Therefore, the weight of each neuron on the feature maps can be obtained by $1/e_i^{*}$. Then, the feature maps enhanced using the attention mechanism can be expressed by Equation (6):

$$\tilde{X} = \mathrm{sigmoid}\left(\frac{1}{E}\right) \odot X \tag{6}$$

where $E$ groups all $e_i^{*}$ across the channel and spatial dimensions, the $\mathrm{sigmoid}$ activation function is designed to restrict overly large values in $E$, and $\odot$ denotes the Hadamard product.
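The SimAM weighting can be written in a few lines of NumPy. This is a minimal sketch, not the study's implementation: it uses the closed-form minimal energy $e^{*} = 4(\hat{\sigma}^2 + \lambda) / ((x - \hat{\mu})^2 + 2\hat{\sigma}^2 + 2\lambda)$ from the SimAM formulation, computes the variance with divisor $H \times W$, and takes `lam=1e-4` as an assumed value for the regularization term, which the text does not specify.

```python
import numpy as np

def simam(features, lam=1e-4):
    """Reweight a (C, H, W) feature map with parameter-free SimAM attention."""
    mu = features.mean(axis=(1, 2), keepdims=True)        # per-channel mean
    d = (features - mu) ** 2                              # squared deviation of each neuron
    var = d.mean(axis=(1, 2), keepdims=True)              # per-channel variance
    inv_energy = (d + 2 * var + 2 * lam) / (4 * (var + lam))   # 1 / e* for every neuron
    weights = 1.0 / (1.0 + np.exp(-inv_energy))           # sigmoid bounds the weights
    return features * weights                             # Hadamard product

rng = np.random.default_rng(0)
fused = rng.random((256, 9, 9)) + 0.1    # hypothetical fused spatial-spectral features
refined = simam(fused)
```

No learnable parameters appear anywhere in the function, which is the property that keeps the parameter count of the network unchanged. Neurons that deviate most from their channel mean receive the largest weights.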

The SimAM attention mechanism does not introduce additional parameters into the weight generation process. It is a parameter-free attention mechanism and therefore requires fewer model parameters than other attention mechanisms. Furthermore, to aggregate the features further, we use a 2D convolution with kernel size (1, 1) both before and after the attention mechanism. Then, global average pooling is applied to the features, and the tree species classification is completed by the fully connected layer and the softmax function.
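The final classification step can be sketched as follows, assuming a (256, 9, 9) feature map and eight output classes (as in the TEF dataset, with seven species plus dead trees). The random weights are placeholders for trained parameters, and the function name `classify` is hypothetical.

```python
import numpy as np

def classify(features, fc_weight, fc_bias):
    """Global average pooling, a fully connected layer, then softmax."""
    pooled = features.mean(axis=(1, 2))           # (C, H, W) -> (C,)
    logits = fc_weight @ pooled + fc_bias         # (num_classes,)
    exp = np.exp(logits - logits.max())           # subtract max for numerical stability
    return exp / exp.sum()                        # class probabilities

rng = np.random.default_rng(0)
features = rng.random((256, 9, 9))                # hypothetical attention-refined features
probs = classify(features, rng.standard_normal((8, 256)), np.zeros(8))
predicted_class = int(np.argmax(probs))
```

Global average pooling removes the spatial dimensions, so the fully connected layer needs only one weight per channel and class.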

In summary, the network proposed in this study makes full use of the advantages of hyperspectral images. First, the input data of the spectral branch is not only the spectral information of the pixel to be classified, but also contains the spectral information of other pixels in its neighborhood range, which avoids the loss of spatial information. Second, in the spatial branch, we do not reduce the dimensionality of the original data, but input all the spectral bands into the spatial branch, which avoids the loss of spectral information in the process of dimensionality reduction. Finally, we further combine the spectral and spatial information using a double-branch network structure.

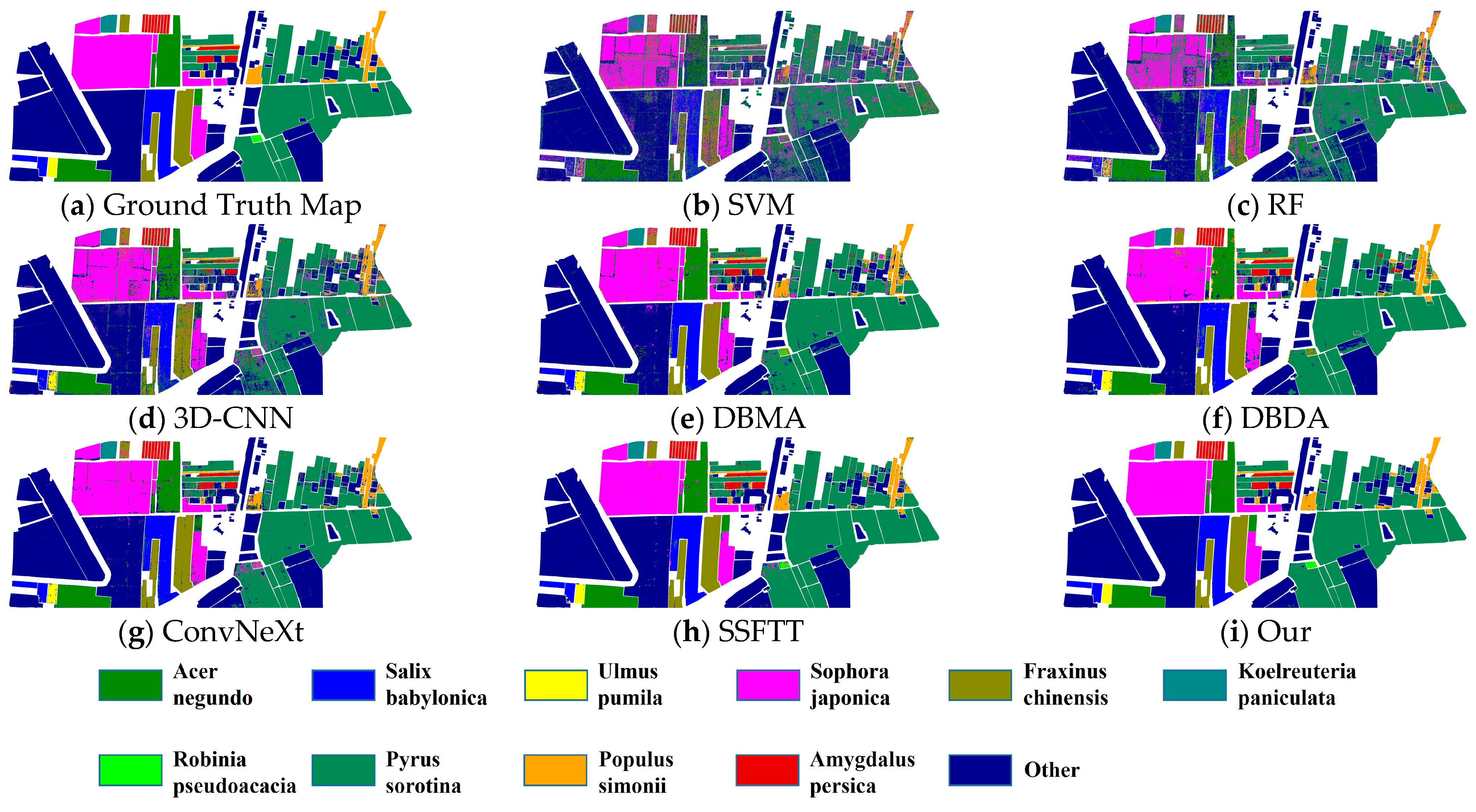

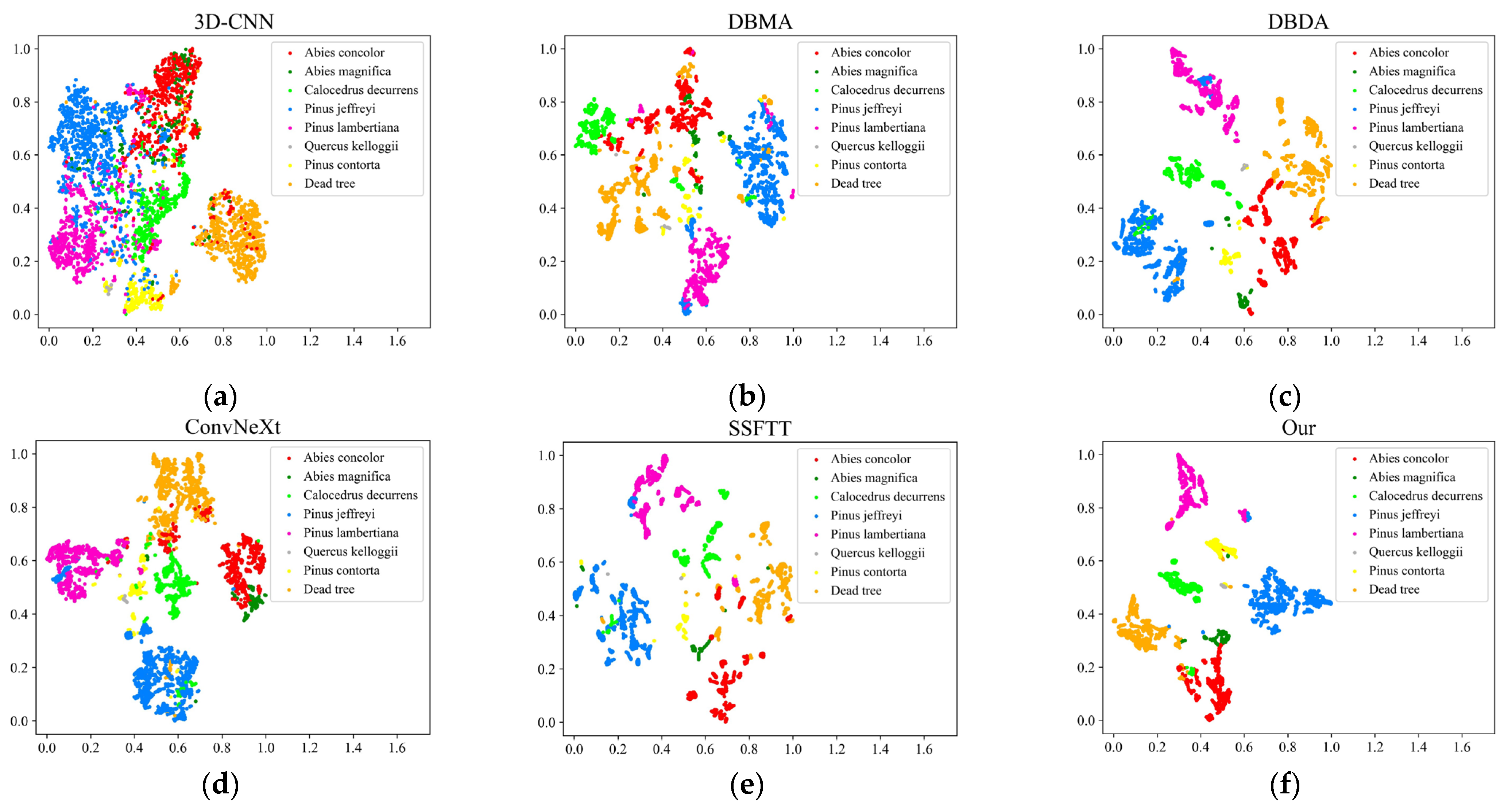

3.3. Comparison Methods

To demonstrate the superiority and effectiveness of the proposed method in this study, we compare it with traditional machine learning methods such as SVM and RF, and other state-of-the-art deep learning methods such as 3D-CNN, DBMA, DBDA, ConvNeXt and SSFTT. Next, the compared methods are briefly described separately.

1. SVM: Support Vector Machine. A support vector machine with a radial basis function kernel was used in this study, and the input features were important bands, vegetation indices, the first three principal components after PCA dimensionality reduction, and eight spatial texture features corresponding to each principal component.

2. RF: Random Forest. The parameter n_estimators was set to 500, and the input features were consistent with those of the SVM.

3. 3D-CNN: Three-Dimensional Convolutional Neural Network. The specific network architecture is described in Zhang et al. [27]. The method is based on the 3D-CNN, and the input data size is 1 × 9 × 9 × band, where "band" represents the number of spectral bands, and 9 denotes the patch size.

4. DBMA: Double-Branch Multi-Attention Mechanism Network. The specific network architecture is described in Ma et al. [42]. The method is based on a double-branch network structure, dense blocks, and the CBAM attention mechanism, and the input data size is consistent with that of the 3D-CNN.

5. DBDA: Double-Branch Dual-Attention Mechanism Network. The specific network architecture is described in Li et al. [48]. The method is based on a double-branch network structure, dense blocks, and the DANet attention mechanism, and the input data size is consistent with that of the 3D-CNN.

6. ConvNeXt: A pure ConvNet model. The specific network architecture is described in Liu et al. [49]. The method is based on the ideas of ResNet and Swin Transformer, and the input data size is band × 9 × 9.

7. SSFTT: Spectral–Spatial Feature Tokenization Transformer. The specific network architecture is described in Sun et al. [50]. The method is based on the 3D-CNN and the Transformer encoder. The input data size is 1 × 30 × 13 × 13, where 30 denotes the first thirty principal components after PCA dimensionality reduction, and 13 denotes the patch size.

To fairly compare the classification performance of each deep learning method, we set the same training parameters. In particular, the batch size is set to 128, and the Adam optimizer is adopted. The learning rate is set to 0.0001, and we train each model for 50 epochs.

All methods were implemented in Python 3.6. SVM and RF were implemented with the scikit-learn library, and the deep learning methods were implemented with the PyTorch 1.9.1 open-source deep learning framework. The operating platform consisted of two Intel(R) Xeon(R) Gold 5218R @2.10GHz CPUs (Intel Corporation, Santa Clara, CA, USA) and an NVIDIA GeForce RTX 3080 GPU (NVIDIA Corporation, Santa Clara, CA, USA).