Abstract

Floods are natural events that can have significant impacts on the economy and society of affected regions. To mitigate their effects, it is crucial to conduct a rapid and accurate assessment of the damage and take measures to restore critical infrastructure as quickly as possible. Remote sensing monitoring using artificial intelligence is a promising tool for estimating the extent of flooded areas. However, monitoring flood events still presents challenges because varying weather conditions and cloud cover can limit the use of visible satellite data. Additionally, satellite observations may not always coincide with the flood peak, and it is essential to estimate both the extent and the volume of the flood. To address these challenges, we propose a methodology that combines multispectral and radar data and uses a deep neural network pipeline to analyze the remote sensing observations available for different dates. This approach allows us to estimate the depth of the flood and calculate its volume. Our study uses Sentinel-1 and Sentinel-2 data together with Digital Elevation Model (DEM) measurements to provide accurate and reliable flood monitoring results. To validate the developed approach, we consider a flood event that occurred in 2021 in Ushmun. We estimate the volume of that flood event at 0.0087 km³. Overall, our proposed methodology offers a simple yet effective approach to monitoring flood events using satellite data and deep neural networks. It has the potential to improve the accuracy and speed of flood damage assessments, which can aid timely response and recovery efforts in affected regions.

1. Introduction

Floods are one of the most catastrophic natural disasters, affecting millions of people globally and resulting in significant economic and environmental damage. Traditional methods of flood monitoring, such as ground-based surveys and aerial photography, can be time-consuming, costly, and hazardous [1]. The use of remote sensing data and computer vision techniques can provide a faster and more efficient solution for flood monitoring and damage assessment [2].

Over the past few decades, remote sensing data have become increasingly accessible and have been widely used for monitoring and mapping vegetation cover [3], infrastructure objects [4], and water bodies [5]. Remote sensing imagery can provide a synoptic view of the flooded area, and by analyzing the image data, it is possible to estimate the extent of the flooded area. For instance, in [6], Hernandez et al. showed how accurately flood boundaries can be assessed in real time using unmanned aerial vehicles (UAVs). In [7], Feng et al. applied UAVs to monitor urban flooding using a random forest algorithm and achieved an overall accuracy of 87.3%. In [8], Hashemi-Beni et al. also proposed a deep learning approach for flood monitoring based on optical images.

Although UAV-based measurements provide detailed information about flooded areas, such observations are not always available during disasters due to factors such as limited flight time, poor weather conditions, or logistical constraints. Satellite remote sensing is more suitable in such cases. A growing body of research has explored the use of data from various platforms and sensors for water body monitoring. Several satellite missions are commonly used for this purpose, including Terra, Aqua, Landsat, Ikonos, WorldView-1, WorldView-2, GeoEye-1, Radarsat-2, Sentinel-1, and Sentinel-2 [9]. These missions vary in spatial and spectral resolution, as well as revisit time, and are used for different purposes. For instance, low-resolution data are typically used for flood alerts on a regional scale because of their large spatial coverage [10], while higher-resolution and more expensive data can be applied for precise analysis and damage assessment. In [11], Moortgat et al. utilized commercial satellite imagery with very high spatial resolution in combination with deep neural networks to develop a hydrology application. Another source of high-resolution, close-to-real-time satellite observations is the Chinese GaoFen mission, which provides data for governmental and commercial organizations. In [12], Zhang et al. demonstrated flood detection on the territory of China during the summer period using datasets collected from the Chinese satellites GaoFen-1, GaoFen-3, GaoFen-6, and Zhuhai-1. Water indices based on multispectral data from missions such as Sentinel-2 [13] and Landsat [14] are also significant for flood assessment. In addition to trainable models, rule-based methods are also used, such as the method proposed by Jones in [15]. The author presented several rules for determining the water surface based on the raw values of Landsat images, as well as on indices calculated from them. However, because the rules were derived only from the existing data, such an approach is difficult to generalize to new satellite sensors that are highly relevant today. The service inspired by this approach is available in [16].

Although solutions based on visible satellite data show high performance, they can be limited by varying weather conditions and cloud cover [17]. In [18], Dong et al. demonstrate the possibility of segmenting floods on the territory of China using Sentinel-1 satellite images and convolutional neural networks. In [19], Bonafilia et al. collected and annotated a dataset accompanied by Sentinel-1 data to map flooded areas in various geographical regions. In [20], Bai et al. show the potential for identifying temporarily flooded areas and permanent water bodies using the Boundary-Aware Salient Network (BASNet) architecture [21], which combines a predict-refine architecture with a hybrid loss and achieves an Intersection over Union (IoU) of 76% in this task. In [22], Rudner et al. propose segmenting flooded buildings by jointly using data from different dates and from sensors of various satellites. However, SAR data alone do not give results as good as those obtained with multispectral data, and such approaches have significant limitations. For example, in regions with urban development, the radar signal is re-reflected from the walls of buildings and other objects.

In addition, estimating the extent of a flood alone may not be sufficient for effective flood management. It is necessary to estimate both the extent and the volume or depth of the flood. The importance of depth estimation for flooded areas is highlighted by Quinn et al. in [23], where it is shown that depth can be used to compute economic losses. Estimating water volume during floods is also critical for several reasons. Firstly, it provides essential inputs for hydrological models that simulate flood behavior, aiding in understanding how floodwaters propagate through watersheds. This information, combined with accurate flow rate calculations, is pivotal for assessing flood severity, designing hydraulic structures, and predicting rapid water level rises in flash floods. Moreover, precise water volume estimates contribute to real-time stream gauging, enabling timely flood monitoring and early warnings to safeguard communities. Additionally, these estimates serve as foundational data for hydrological research, advancing flood forecasting techniques and enhancing our understanding of climate change impacts on flood occurrences. Approaches for estimating water volume usually involve auxiliary topographic data. Rakwatin et al. explored spatial data acquisition and processing for flood volume calculation in [24]. In [25], Cohen et al. used flood maps with a Digital Elevation Model (DEM) to estimate floodwater depth for coastal and riverine regions. The approach relies on selecting the nearest boundary cell to the flooded domain and subtracting the inundated land elevation from the water elevation value [26]. The calculated water depth is then compared with a hydraulic model simulation. The authors suggest using floodwater extent maps derived from remote sensing classification. A similar approach is explored in [27], where Budiman et al. used Sentinel-1 data and a DEM to estimate flash flood volume by multiplying the depth by the pixel size for each pixel. However, these approaches do not use advanced algorithms such as convolutional neural networks.

In our research, we assume that using several models with different sets of input data gives us several options for making predictions, depending on the availability and quality of the data. Because floods develop quickly, such variability in models helps ensure timely and more accurate results. Therefore, our objective is to design an ML-based approach for flood extent and volume monitoring that overcomes current limitations and involves several stages. To deliver both high-quality and prompt results, we combine multispectral and radar data derived from the Sentinel-2 and Sentinel-1 satellites, respectively. This enables high model performance in cloudy conditions and detailed mapping on cloudless dates. Additionally, feature space selection plays a vital role in developing a robust model, so we considered different spectral indices in addition to the initial satellite bands. To create flood volume maps, we combine the predicted flood extent with DEM measurements. To evaluate the proposed methodology, we conducted experiments using various popular neural network architectures, such as U-Net, MA-Net, and DeepLabV3. We also assessed their quality on a real-life case involving a flood that occurred in Ushmun, Russia, in 2021. The annotations for this event were created manually. The main contributions of the work are the following:

- We proposed a flood extent estimation pipeline based on Sentinel-1 and Sentinel-2 data that utilizes neural network technology;

- We took into account possible real-life limitations such as satellite data availability and cloud coverage during flood events;

- We explored different deep learning architectures and investigated feature spaces to optimize our approach;

- Additionally, we developed a method for flood volume estimation that utilizes both DEM and predicted flood extent.

2. Materials and Methods

2.1. Dataset

2.1.1. Data Description

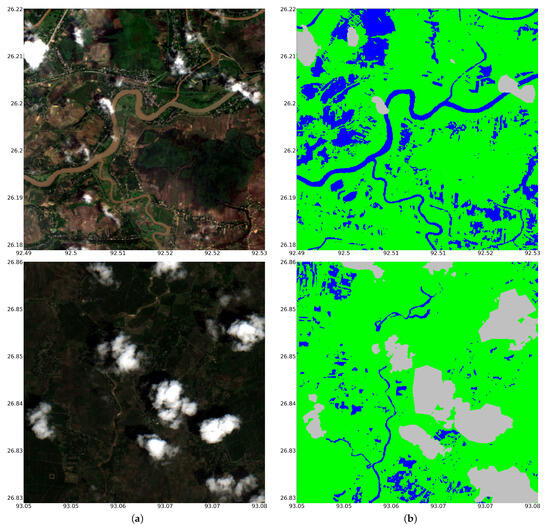

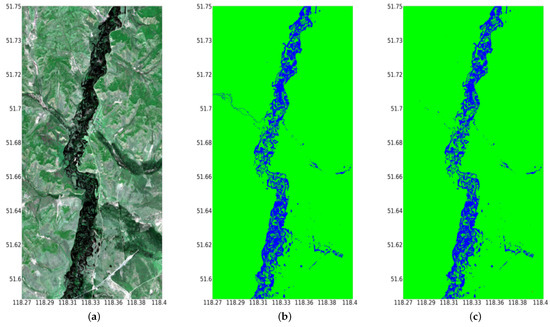

The training and testing datasets are formed based on the labels from the open dataset Sen1Floods11 [19]. The original dataset includes only raw Sentinel-1 images. We separately collected and additionally preprocessed data from the Sentinel-1 and Sentinel-2 satellites for the associated dates (preprocessing details are provided in Section 2.1.2). The original dataset was collected across six continents and covers territories with different climatic, topographic, and natural factors. The dataset includes 11 floods worldwide that occurred from 2016 to 2019. The labeling contains three classes: water bodies, non-water areas, and clouds. Examples of RGB composites and ground truth labeling are provided in Figure 1. The total area of the considered territory is 120,406 km², of which only about 10,000 km² are manually labeled. The rest of the area is labeled using indices based on Sentinel-1 and Sentinel-2 data. However, we noticed that the index-based labeling in the Sen1Floods11 dataset is less accurate. Therefore, we used only the more precise manual annotation in the present research. The dataset statistics are presented in Table 1.

Figure 1.

Examples of flood annotation from Sen1Floods11 dataset [19] and additionally collected Sentinel-2 data: RGB channels of Sentinel-2 satellite (a), manual original markup with additional cloud mask from Sentinel-2 product (b). Legend: water, background, clouds.

Table 1.

Sen1Floods11 Dataset’s statistics.

2.1.2. Image Processing

Images from the Sentinel-1 and Sentinel-2 satellites were downloaded through the SentinelHub service [28], which provides the latest images as well as archives dating back to November 2015 (for Sentinel-1 and Sentinel-2 L1C) or January 2017 (for Sentinel-2 L2A). Both Sentinel-1 and Sentinel-2 satellites provide up-to-date data with a latency of approximately two days, depending on the product level. Revisit time might differ between territories, but officially the revisit frequency of Sentinel-1 is up to 4 days and the revisit frequency of Sentinel-2 is 5 days. For Sentinel-1 images, the following preprocessing steps were applied: images were taken from the Interferometric Wide (IW) swath mode with VV and VH polarizations at the GRD processing level, and an ellipsoid correction was applied with the backscatter coefficient SIGMA0_ELLIPSOID. Orthorectification was also applied. The COPERNICUS DEM with a resolution of 10 or 30 m (depending on the region) was used as the digital elevation model. For Sentinel-2 images, we extract all spectral channels except the channel. Reflectance multiplied by is used as units for all channels.

Additionally, we calculate five water indices based on Sentinel-2 multispectral channels:

- NDWI: Normalized Difference Water Index. It emphasizes water bodies and vegetation water content [29];

- MNDWI: Modified Normalized Difference Water Index. It is similar to NDWI, but reduces sensitivity to dense vegetation, making it more effective for open water body detection [30];

- SWI: Standardized Water-Level Index. It estimates soil moisture content by analyzing the difference between near-infrared and shortwave infrared reflectance [31];

- AWEI_sh, AWEI_nsh: Automated Water Extraction Index. Both AWEI_sh and AWEI_nsh are indices tailored for water body detection, with AWEI_sh leveraging shortwave infrared data for enhanced accuracy and AWEI_nsh offering an alternative when shortwave data are lacking [32].

The formulas are the following:
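As a minimal sketch of how two of these indices can be computed, the snippet below assumes the commonly used normalized-difference definitions NDWI = (Green − NIR) / (Green + NIR) and MNDWI = (Green − SWIR) / (Green + SWIR). The function names and the example reflectance values are hypothetical, and the band choices used in this study may differ.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def ndwi(green, nir):
    # Assumed definition: green and near-infrared reflectance.
    return normalized_difference(green, nir)


def mndwi(green, swir):
    # Assumed definition: green and shortwave-infrared reflectance.
    return normalized_difference(green, swir)


# Hypothetical reflectances for a single water-like pixel.
water_pixel = {"green": 0.08, "nir": 0.02, "swir": 0.01}
print(ndwi(water_pixel["green"], water_pixel["nir"]))
print(mndwi(water_pixel["green"], water_pixel["swir"]))
```

Positive values of both indices typically indicate open water under these definitions, which is why they are useful as extra input channels.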

2.1.3. External Test Data

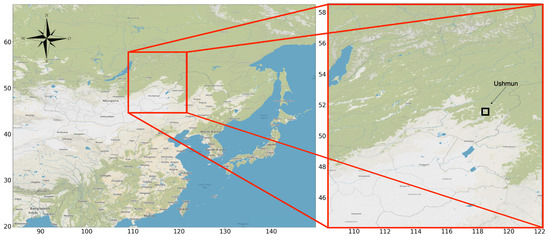

For the external test, we selected an area near Ushmun village (Zabaykalsky Krai, Russian Federation). The geography of the chosen territory is shown in Figure 2. The flood occurred there in 2021. We collected the available data for the periods before, during, and after the flood. In total, we collected data for 4 days, which are listed in Table 2. Hereinafter, we use the indices BF and DF to label images collected beyond and during the flood, respectively. For quality metric calculations, we manually labeled the area for some dates. The coordinates of the studied area are as follows: ((118.266, 51.565), (118.266, 51.754), (118.446, 51.754), (118.446, 51.565)).

Figure 2.

Geography of external test territories, Ushmun village, Zabaykalsky Krai, Russia.

Table 2.

Dates for which satellite images were collected for the flood event in Ushmun.

2.2. Methods

2.2.1. Flood Extent

Two fundamental limitations for detecting water boundaries during floods are the long time intervals between satellite imagery acquisitions (approximately 6 days for each type of the considered satellite) and the presence of cloud cover. To address this issue, we propose the simultaneous use of three models, each trained on its own configuration of input data: solely on multispectral data and the products derived from them, solely on radar data, and on their combination. Thus, we can extend the temporal coverage for territories and overcome cloud cover by utilizing radar data. Often, the observation dates of different satellites (for instance, Sentinel-1 and Sentinel-2) do not coincide but fall on neighboring days. Because natural events such as flooding are highly dynamic, we suggest combining only those images that were taken on the same day, and otherwise using the model appropriate for the data available at that time. Moreover, it is not possible to estimate in advance the exact number of cloudless images that will be available during the flood because of the weather conditions, slight variations in revisit time for the same territory, and the duration of the flood itself.

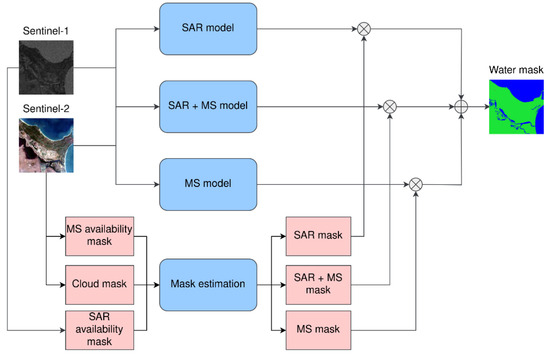

The proposed approach is illustrated in Figure 3. We employ the dataMask band from the raw image data of Sentinel-1 and Sentinel-2 to extract the availability masks for Synthetic Aperture Radar (SAR) and Multispectral (MS) data, respectively. Additionally, for cloud masking, we utilize the Scene Classification (SCL) band obtained from the Sentinel-2 image, which is generated using the Sen2Cor processor [33]. The SCL band provides a scene classification that enables us to identify and filter out various cloud-related elements, including cloud shadows, clouds with low, medium, and high probabilities, and cirrus clouds. These identified cloud regions are marked as clouds for subsequent analysis. These three masks are processed simultaneously to generate SAR mask, SAR + MS mask, and MS mask. These masks are utilized to delineate specific regions where the respective model should be applied.

Figure 3.

Proposed approach for recognizing water bodies based on remote sensing data and fusion of multispectral and radar data.
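The mask logic described above can be sketched as follows. This is an illustrative assumption about how the three availability masks might be combined, not the exact rule used in the pipeline: a pixel is routed to the combined model when both sources are usable, to the multispectral model when only cloud-free multispectral data exist, and to the radar model otherwise. The function name `route_pixels` and the label strings are hypothetical.

```python
def route_pixels(sar_valid, ms_valid, cloud):
    """Assign each pixel to 'SAR+MS', 'MS', 'SAR', or None (no usable data).

    All inputs are 2-D lists of 0/1 flags with the same shape:
    sar_valid and ms_valid come from the dataMask bands, and cloud
    comes from the cloud-related classes of the SCL band.
    """
    routed = []
    for sar_row, ms_row, cloud_row in zip(sar_valid, ms_valid, cloud):
        row = []
        for sar, ms, cl in zip(sar_row, ms_row, cloud_row):
            ms_usable = bool(ms) and not cl  # multispectral data must be cloud-free
            if sar and ms_usable:
                row.append("SAR+MS")
            elif ms_usable:
                row.append("MS")
            elif sar:
                row.append("SAR")
            else:
                row.append(None)
        routed.append(row)
    return routed


# One-row toy example with four pixels.
print(route_pixels([[1, 1, 0, 0]], [[1, 1, 1, 0]], [[0, 1, 0, 0]]))
```

In the toy example the second pixel is cloudy, so it falls back to the radar-only model even though multispectral data are nominally present.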

For each of the three data configurations, we train different combinations of input channels. Additionally, we apply per-channel min–max normalization to the data, with global minimum and maximum values calculated beforehand based on the available data for each channel.

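$$\hat{x}_i = \frac{x_i - \mathrm{globalmin}_i}{\mathrm{globalmax}_i - \mathrm{globalmin}_i},$$

where $x_i$ is the i-th channel of the input image, $\hat{x}_i$ is its normalized version, and globalmin and globalmax are arrays with global per-channel minimum and maximum values.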
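A minimal sketch of this per-channel normalization is given below. It assumes the image is stored as a list of channels, each a 2-D list of values; the function name is hypothetical, and whether out-of-range values are clipped is not specified in the text, so no clipping is applied here.

```python
def min_max_normalize(image, global_min, global_max):
    """Scale each channel with its precomputed global minimum and maximum."""
    normalized = []
    for channel, lo, hi in zip(image, global_min, global_max):
        span = hi - lo
        if span == 0:
            raise ValueError("global minimum and maximum must differ")
        normalized.append([[(value - lo) / span for value in row] for row in channel])
    return normalized


# Single-channel 2x2 toy image with an assumed global range of [0, 10].
image = [[[0.0, 5.0], [10.0, 2.5]]]
print(min_max_normalize(image, global_min=[0.0], global_max=[10.0]))
```

Using global rather than per-image statistics keeps the scaling identical between training tiles and new scenes.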

In constructing our solution, we conduct experiments using various neural network architectures: U-Net [34], MA-Net [35], and DeepLabV3 [36], which have proven themselves in remote sensing tasks [37] and, more generally, in segmentation tasks [38]. The U-Net stands out as a pioneer in its ability to capture intricate spatial features and retain fine-grained details. Its architecture, inspired by the encoder–decoder framework, facilitates the preservation of both local and global context within the segmented regions. This design choice is particularly suited for our remote sensing context, where retaining the intricate details of land cover and features is crucial for accurate interpretation. Complementing the U-Net, we incorporate the MA-Net architecture. MA-Net has exhibited remarkable performance in previous remote sensing tasks by explicitly modeling multi-scale features. This capability allows it to effectively account for the varying scales present in remote sensing images, capturing everything from large geographical features to smaller, finer structures. The MA-Net’s focus on multi-scale feature integration aligns well with the heterogeneous nature of remote sensing data. In addition to the U-Net and MA-Net, we embrace the DeepLabV3 architecture to enrich our segmentation toolkit. The distinguishing feature of DeepLabV3 is its employment of atrous (or dilated) convolutions, which effectively enlarge the receptive field without sacrificing resolution. This characteristic proves invaluable in remote sensing segmentation, where comprehensive context integration is essential to distinguish between diverse land cover classes accurately.

To enhance the adaptability and robustness of our models, we integrate encoders pretrained on ImageNet [39] into the selected architectures. MobileNetV2 [40], known for its efficiency and effectiveness in feature extraction, provides our models with a diverse range of low- to high-level features, strengthening their capacity to discern intricate remote sensing patterns. Similarly, ResNet18 [41], with its deep architecture and skip connections, integrates both local and global information, enhancing the models’ contextual awareness.

Thus, the input data of the CNN models are three-dimensional arrays consisting of the specified combination of preprocessed channels of multispectral or radar images, as well as calculated indices. A set of input features varies depending on the experimental setup. As a model output, we have a two-dimensional array consisting of 0 and 1 values. Pixels with value 0 represent the surface without water objects; pixels with label 1 show areas with water objects. Clouds are additionally masked for this post-processing as a separate class for multispectral images.

All models are trained for 150 epochs. The learning rate is adjusted according to the OneCycleLR [42] strategy throughout the training process, starting from a value of 5 × 10. FocalLoss [43,44] is utilized as the loss function. The batch size is consistent across all models and set to 16.
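For reference, the binary form of the focal loss can be sketched in a few lines. The values `gamma=2.0` and `alpha=0.25` are the defaults commonly quoted for this loss and are assumptions here, since the hyperparameters used in this study are not stated; the function name is also hypothetical.

```python
import math


def binary_focal_loss(probs, targets, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean focal loss over predicted water probabilities and 0/1 targets."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)            # avoid log(0)
        p_t = p if y == 1 else 1.0 - p             # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha
        # (1 - p_t) ** gamma down-weights pixels that are already well classified.
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)


# A confidently correct pixel contributes far less than a confidently wrong one.
print(binary_focal_loss([0.9], [1]), binary_focal_loss([0.1], [1]))
```

With `gamma=0` and `alpha=0.5` the expression reduces to half of the ordinary binary cross-entropy, which is a convenient sanity check.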

2.2.2. Flood Volume

To estimate the volume of flooding, in addition to the area, it is necessary to have data on the water level at each point, taking into account the available resolution. However, DEM, such as a Copernicus product with a 10–30 m resolution, which describes the altitude, cannot be used for estimating water level both before and during flood events due to low temporal resolutions. Most of these maps are updated only once in several years. Thus, DEM could be used only as general description of the surface. Cohen et al. proposed such an approach in [26]. It describes a method for estimating water depth during the flood by using DEM as general description of the surface. The method requires a local elevation of floodwater. The elevation for each cell of the study area is assigned using the “Focal Statistics” neighborhood approach. Then, the floodwater depth is estimated based on the difference in surface flood water elevation and inundated land elevation. Inspired by this research, we propose a new approach of volume estimation based on the prediction of the area of flood and on a single DEM that describes the terrain.

Having a general description of the terrain, which we can assume represents the terrain before the flood (we will call it “baseline”), the calculation of water levels in different points of the terrain boils down to estimating the Absolute Level of the Water Surface (ALWS) during the flood. Knowing which areas were flooded, as well as the baseline terrain, we can accurately estimate the ALWS at the flood boundary, but interpolating the ALWS in internal areas presents a more complex problem due to potentially complex terrain and the overall direction of the river flow.

Assuming that the water level in the local area, due to the physics of water and the river, changes so insignificantly that its change can be neglected, we propose to interpolate the internal ALWS values by searching for the nearest flood boundary pixel whose ALWS value is reliable.

Having obtained ALWS for the entire flooded area in this way, as well as knowing the baseline level, we can calculate the water level in each point of the flood by simple subtraction. Knowing the water level and the flooded area, the volume can be calculated by summing the products of the area and water level for each point of the flood.
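The procedure above can be sketched as follows, under simplifying assumptions that are not part of the original method: a flooded pixel counts as a boundary pixel if it has at least one non-flooded 4-neighbour, every boundary pixel is treated as reliable, and "nearest" is measured in 4-connected steps through flooded pixels (a breadth-first search) rather than by Euclidean distance. The function name and the toy terrain are hypothetical.

```python
from collections import deque


def estimate_flood_volume(dem, flood_mask, pixel_area_m2):
    """Return (depth map in metres, volume in cubic metres)."""
    rows, cols = len(dem), len(dem[0])
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    alws = [[None] * cols for _ in range(rows)]
    queue = deque()

    # 1. At boundary pixels the water surface level equals the baseline terrain.
    for r in range(rows):
        for c in range(cols):
            if not flood_mask[r][c]:
                continue
            for dr, dc in steps:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and not flood_mask[nr][nc]:
                    alws[r][c] = dem[r][c]
                    queue.append((r, c))
                    break

    # 2. Propagate the level of the nearest boundary pixel into the interior.
    while queue:
        r, c = queue.popleft()
        for dr, dc in steps:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and flood_mask[nr][nc] and alws[nr][nc] is None):
                alws[nr][nc] = alws[r][c]
                queue.append((nr, nc))

    # 3. Depth = ALWS - baseline; volume = sum of depth * pixel area.
    depth = [[0.0] * cols for _ in range(rows)]
    volume = 0.0
    for r in range(rows):
        for c in range(cols):
            if alws[r][c] is not None:
                depth[r][c] = max(alws[r][c] - dem[r][c], 0.0)
                volume += depth[r][c] * pixel_area_m2
    return depth, volume


# Toy bowl-shaped terrain: the inner 3x3 block is flooded up to the 3 m contour.
dem = [[5, 5, 5, 5, 5],
       [5, 3, 3, 3, 5],
       [5, 3, 1, 3, 5],
       [5, 3, 3, 3, 5],
       [5, 5, 5, 5, 5]]
mask = [[1 if h <= 3 else 0 for h in row] for row in dem]
depth, volume = estimate_flood_volume(dem, mask, pixel_area_m2=100.0)
print(depth[2][2], volume)
```

Negative depths, which can arise where the DEM is higher than the interpolated surface, are clipped to zero in this sketch.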

2.3. Evaluation Metrics

To evaluate the performance of water segmentation models, we utilize two metrics: Intersection over Union (IoU, also known as the Jaccard index) and F1-score (also known as the Dice score). They are computed as follows:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}, \qquad \mathrm{F1} = \frac{2\,TP}{2\,TP + FP + FN},$$

where TP (True Positive) is the number of pixels correctly classified as the target class, FP (False Positive) is the number of pixels incorrectly classified as the target class, and FN (False Negative) is the number of pixels of the target class that were mistakenly classified as another class.

To evaluate, we use both metrics in “micro” mode, i.e., we concatenate all ground-truth and predicted labels into two vectors along all the images, and then calculate metrics.
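The "micro" mode can be illustrated with a short sketch in which the true positives, false positives, and false negatives are accumulated over all images before the ratios are taken. The function name and the toy masks are hypothetical, and the handling of cloud pixels is omitted for brevity.

```python
def micro_iou_f1(ground_truths, predictions, target=1):
    """Pool TP/FP/FN over all images, then compute IoU and F1 once."""
    tp = fp = fn = 0
    for gt_image, pred_image in zip(ground_truths, predictions):
        for gt_row, pred_row in zip(gt_image, pred_image):
            for gt, pred in zip(gt_row, pred_row):
                if pred == target and gt == target:
                    tp += 1
                elif pred == target:
                    fp += 1
                elif gt == target:
                    fn += 1
    union = tp + fp + fn
    iou = tp / union if union else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if union else 0.0
    return iou, f1


# Two 2x2 toy images: pooled counts are TP = 2, FP = 1, FN = 1.
gts = [[[1, 1], [0, 0]], [[1, 0], [0, 0]]]
preds = [[[1, 0], [1, 0]], [[1, 0], [0, 0]]]
print(micro_iou_f1(gts, preds))
```

Pooling the counts means that large water bodies dominate the score, in contrast to averaging per-image metrics.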

3. Results

3.1. Results on Sen1Floods11 Dataset

Experiments on various combinations of input channels were conducted. The results for the test set of the Sen1Floods11 dataset are presented in Table 3, Table 4 and Table 5. The best result was obtained for the combination of all Sentinel-2 multispectral channels and all Sentinel-1 radar channels. The F1-score for this model on the test set is 0.92, and the IoU is 0.851. This result outperforms the use of multispectral and radar data separately. However, even when only multispectral data are available, it is possible to achieve high results by using all Sentinel-2 spectral channels. The F1-score of such a model is 0.917. The use of only radar measurements shows significantly lower results of 0.793 for the F1-score and 0.657 for the IoU. A model trained on a combination of all image channels and indices is not the best option because, when training neural network models, an excessively large feature space can lead to a more complex optimization problem and, consequently, to model divergence and poorer results. For instance, studies [45,46] demonstrated that a greater number of input channels does not always lead to better outcomes, and that the use of specialized indices can yield results worse than the application of all available channels.

Table 3.

Results of experiments with different input data configurations. Model: U-Net.

Table 4.

Results of experiments with different input data configurations. Model: MA-Net.

Table 5.

Results of experiments with different input data configurations. Model: DeepLabV3.

3.2. Results on External Data

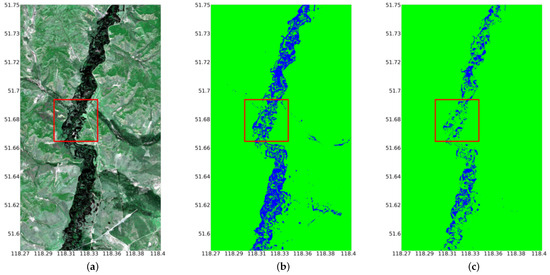

3.2.1. Flood Extent

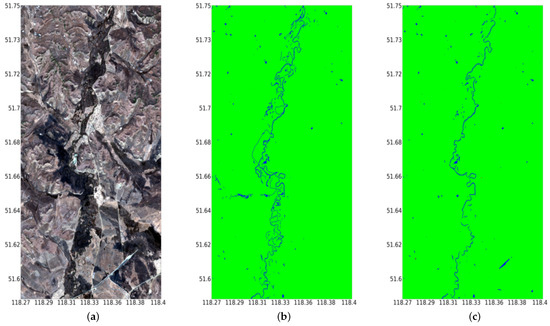

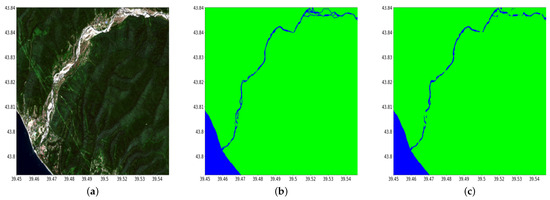

Predictions for the Ushmun beyond flood (BF) and Ushmun during flood (DF) areas are shown in Figure 4 and Figure 5, respectively. The F1-scores are 0.705 and 0.99 for Ushmun BF and Ushmun DF, respectively, giving an average of 0.848. The difference between the metrics before and during flooding can be explained as follows. Segmentation models typically find it difficult to delineate objects that are only a few pixels wide unless their boundaries are clearly discernible. This is the case when the river has a normal water regime: the river is quite narrow, and the model is inaccurate in its narrowest sections (F1-score of 0.705). During flooding, the water surface forms a larger, more continuous, and more distinct area, which allows the model to segment the water body very accurately (F1-score of 0.99). Another reason is the time of year when the images were taken: snow may still be present in the studied region at the time of observation, which causes false positives.

Figure 4.

Test area: Ushmun (BF). Observation date: 22 April 2021. RGB (a), manual markup (b), model prediction (c).

Figure 5.

Test area: Ushmun (DF). Observation date: 6 June 2021. RGB (a), manual markup (b), model prediction (c).

For permanent surface water measurements, Pekel et al. provide freely available data at the global scale [47]. For the analysis of natural and infrastructure objects, OpenStreetMap (OSM) [48] data are also often used [49]. However, during emergencies such as floods, these data can only be used to compare flooded areas with the initial area of water bodies. Also, for some areas, labeling may be absent. Another source of data is the mask supplied with Sentinel-2 data, which is calculated automatically by the data provider based on multispectral channels.

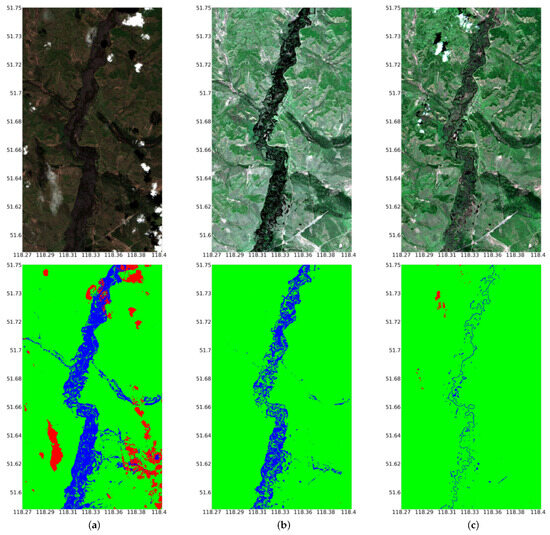

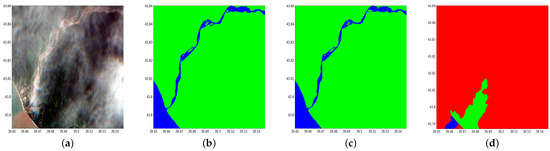

Figure 6 shows a comparison of the mask predicted by the model and the mask supplied with Sentinel-2 data. The advantage of the proposed approach to water body recognition is the combination of multispectral and radar data. As shown in the image, unlike the mask supplied with Sentinel-2 data, the predicted mask correctly processes areas covered by clouds. Examples of complex areas are also presented, where the developed algorithm provides greater recognition detail.

Figure 6.

Ushmun test area. Observation date: 6 June 2021. RGB (a), model prediction (b), Sentinel-2 mask (c). Examples of areas where the developed model recognizes water better than the basic Sentinel-2 mask are highlighted.

The developed algorithm allows for effective monitoring of rivers during floods. Figure 7 shows an example of a river flood in June 2021 in Ushmun with the calculated water surface area.

Figure 7.

Demonstration of flood monitoring in Ushmun with a series of images: RGB image and predicted mask on 4 June 2021, estimated water surface area 81.3 km² (a); RGB image and predicted mask on 6 June 2021, estimated water surface area 51.0 km² (b); RGB image and predicted mask on 11 June 2021, estimated water surface area 8.9 km² (c). Legend: water, background, clouds.

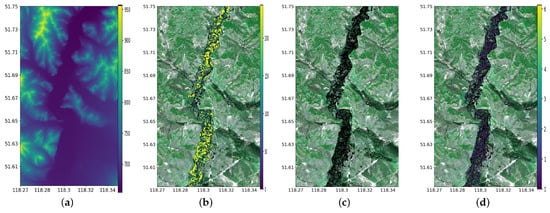

3.2.2. Flood Volume

To estimate the flood volume, we applied the proposed approach described earlier. In Figure 5 and Figure 7c, we present RGB images of the study area during and after the flood, respectively, as well as the flood extent masks obtained using our approach. Figure 8 displays the intermediate results of the approach. The estimated flood volume using this approach is 0.0087 km3.
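To put this figure in perspective, the volume can be converted to cubic metres and related to the flooded area. The second line is only a rough illustration: it assumes that the water surface area of 51.0 km² reported for 6 June 2021 in Figure 7 approximates the area over which the volume was accumulated, which is not stated explicitly.

$$V = 0.0087\ \mathrm{km^3} \times 10^{9}\ \mathrm{m^3/km^3} = 8.7 \times 10^{6}\ \mathrm{m^3},$$

$$\bar{d} \approx \frac{V}{A} = \frac{8.7 \times 10^{6}\ \mathrm{m^3}}{51.0 \times 10^{6}\ \mathrm{m^2}} \approx 0.17\ \mathrm{m}.$$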

Figure 8.

Intermediate calculation results in Ushmun: DEM (in meters) (a), ALWS (in meters) (b), flood edge (c), estimated water depth (in meters) (d).

4. Discussion

In this study, we have developed a novel methodology for estimating flood extent and volume by employing remote sensing data gathered from the Sentinel-1 and Sentinel-2 satellites. Our approach integrates multispectral and radar data and uses deep neural network algorithms to process and analyze these data. As a result, we have been able to create an effective tool for flood monitoring that delivers reliable and accurate outcomes. This has been demonstrated through our case study of the 2021 flood event in Ushmun. Moreover, our results indicate that the combination of Sentinel-1 and Sentinel-2 data outperforms the use of a single data source, such as in [50,51]. We extended the original dataset described in [19] with multispectral images and indices, which leads to higher results. Further training of the proposed models on additional relevant data might be beneficial for the northern regions. This would allow the models to take into account the specifics of these regions, where floods can be observed while the snow has not fully melted. Accordingly, a more stable forecast can be obtained where indices may not work properly or require additional decision rules, such as in [15]. Our approach can also benefit from the inclusion of more sophisticated machine learning techniques, such as unsupervised learning algorithms [52] or zero-shot learning techniques [53]. These algorithms can help uncover patterns in visual data.

In addition, one of the advantages of the proposed approach is that it provides a simple way to estimate the amount of water during floods. Such an approach, together with hydrometeorological models, will allow a full analysis of the dynamics of evolving emergencies. Previous works have already described approaches for floodwater depth estimation based on DEM products. Their main limitations are associated with data availability and spatial resolution for global mapping. In [25], the authors verified DEM measurements derived from HydroSHEDS, Multi-Error-Removed Improved-Terrain DEM (MERIT DEM) [54], and ALOS with spatial resolutions of 90, 90, and 30 m, respectively. Another limitation is connected with the spatial resolution of the water body map. For instance, Landsat-5 can be used for water body mapping with a spatial resolution of only 30 m per pixel [26]. Therefore, in our study, we focus on developing a methodology for flood analysis with a spatial resolution of 10–30 m per pixel using Sentinel-1 and Sentinel-2 observations. Moreover, previous studies often rely on classification models based on threshold rules [26], which might lead to inaccurate predictions. In [27], Budiman et al. defined the range of dB values that represent flooded areas in Sentinel-1 data. The authors noted the difficulty of estimating such values because other surfaces have similar reflectance. Our approach is developed to simplify the mapping of flooded areas using convolutional neural networks, which are capable of automatic spatial feature extraction.

Moreover, our methodology could be complemented by existing research on flood inundation mapping. For instance, in [55], Nguyen et al. demonstrated a novel method for estimating flood depth using SAR data. Another important reference is [56], which maps the spatial and temporal complexity of floodplain inundation in the Amazon basin using satellite data. The findings reveal a complex interplay of factors influencing flood dynamics, offering valuable insights for enhancing flood volume estimation methodologies.

In addition, the methodology could be expanded to incorporate other types of data and modeling approaches. For example, the integration of hydrological models could help in predicting the temporal evolution of floods [57]. Similarly, the inclusion of more detailed topographical data could improve the estimation of flood volumes.

There is also the possibility of extending our methodology to other regions and types of floods. For example, our approach could be adapted to study flash floods in urban areas, which pose a significant risk to human lives and infrastructure. It can also be integrated with other neural network solutions for building recognition to support further damage evaluation [58]. Knowing the water level makes it possible to estimate the number of flooded floors in buildings.
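As a rough illustration of the last point, the number of affected floors can be derived from the water depth at a building footprint. The sketch below is hypothetical: the floor height of 3 m, the function name `flooded_floors`, and the rule that any positive depth affects the ground floor are assumptions for illustration, not values taken from this study.

```python
import math


def flooded_floors(water_depth_m, floor_height_m=3.0, total_floors=None):
    """Return the number of floors reached by water of the given depth.

    water_depth_m  -- water depth at the building footprint in metres
    floor_height_m -- assumed height of one floor in metres
    total_floors   -- optional number of floors in the building (upper cap)
    """
    if water_depth_m <= 0:
        return 0
    # Any positive depth affects the ground floor; each further
    # floor_height_m of water reaches one more floor.
    floors = math.ceil(water_depth_m / floor_height_m)
    if total_floors is not None:
        floors = min(floors, total_floors)
    return floors
```

For example, `flooded_floors(3.5)` counts two floors, while `flooded_floors(10, total_floors=2)` is capped at the height of the building.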

Our proposed approach allows us to work with historical data and to combine the results of models working on various subsets of input data, depending on their actual availability, within a single snapshot or region of interest. We focus specifically on Sentinel-1 and Sentinel-2 satellite images. The main advantages of these data are their high temporal resolution and free availability. The multispectral bands of the Sentinel-2 satellite enable us to compute additional indices to improve model performance. With a spatial resolution of 10 m per pixel, the imagery addresses most practical needs. However, in some cases, a more detailed assessment may be required. When satellite images with higher spatial resolution are available, one can adjust an initial markup with lower spatial resolution to train a more precise CNN model without requiring additional annotated data [59]. A lack of well-annotated data can be mitigated by advanced approaches that generate both images and their corresponding labels or that leverage classification markup instead of semantic segmentation to automatically refine the labels for a segmentation task [60,61]. Our approach can also be supplemented by super-resolution techniques [62]. Additionally, auxiliary bands can be artificially generated for high-resolution satellite images when the spectral range is narrower [63].
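The additional indices mentioned above are simple band ratios. The sketch below computes NDWI [29] and MNDWI [30] from Sentinel-2 reflectances, assuming the green, NIR, and SWIR inputs correspond to bands B03, B08, and B11 and have already been resampled to a common grid. The function names and the small `eps` stabilizer are illustrative choices, not part of the original pipeline.

```python
import numpy as np


def normalized_difference(a, b, eps=1e-6):
    """Return (a - b) / (a + b), with eps guarding against division by zero."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return (a - b) / (a + b + eps)


def water_indices(green, nir, swir):
    """Compute NDWI (green vs. NIR) and MNDWI (green vs. SWIR) per pixel."""
    return {
        "NDWI": normalized_difference(green, nir),
        "MNDWI": normalized_difference(green, swir),
    }


# Made-up reflectances: first pixel water-like, second vegetation-like.
green = np.array([[0.10, 0.05]])
nir = np.array([[0.02, 0.30]])
swir = np.array([[0.01, 0.20]])
indices = water_indices(green, nir, swir)
```

Open water typically yields positive index values and vegetation negative ones, which is why such indices are informative extra input channels for a segmentation model.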

One of the advantages of the proposed approach is the ability to combine various sensors and apply the corresponding models within a single region of interest, depending on the availability of imagery for that area and on the current weather conditions. We additionally examined the described pipeline on a cloudy date during the flood event in the town of Golovinka (Krasnodar Krai, Russian Federation) on 6 July 2021. The water body before the event is segmented using a multispectral image (Figure 9). During the flood event, however, the region is covered with clouds. Both multispectral and radar imagery are available for that date. Figure 10 illustrates a case where radar observations play a crucial role in flood extent estimation: almost the entire study area is covered by the cloud mask and cannot be properly assessed using the visible bands.

Figure 9. Test area (BF): Krasnodar Krai, Russian Federation. Observation date: 1 July 2021. RGB composite (a), manual markup (b), model prediction (c).

Figure 10. Test area (DF): Krasnodar Krai, Russian Federation. Observation date: 6 July 2021. RGB composite (a), manual markup (b), models (MS + SAR and SAR) prediction (c), model (MS) prediction (d). Legend: water, background, clouds.
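One simple way to combine models within a single scene, as in the cloudy case above, is a per-pixel rule: use the MS + SAR prediction where the optical data are clear and fall back to the SAR-only prediction under clouds. The sketch below illustrates this assumed fusion rule. The function name `combine_predictions` and the rule itself are illustrative and may differ from the exact logic used to produce Figure 10.

```python
import numpy as np


def combine_predictions(pred_ms_sar, pred_sar, cloud_mask):
    """Merge two binary water masks using a cloud mask.

    pred_ms_sar -- water mask from the model using multispectral + radar input
    pred_sar    -- water mask from the radar-only model
    cloud_mask  -- True where the multispectral image is obscured by clouds
    """
    pred_ms_sar = np.asarray(pred_ms_sar, dtype=bool)
    pred_sar = np.asarray(pred_sar, dtype=bool)
    cloud_mask = np.asarray(cloud_mask, dtype=bool)
    # Under clouds the optical bands carry no surface information,
    # so the radar-only prediction is used there.
    return np.where(cloud_mask, pred_sar, pred_ms_sar)


# Toy 2 x 2 example with made-up masks.
ms_sar = np.array([[1, 0], [0, 0]])
sar = np.array([[0, 1], [1, 0]])
clouds = np.array([[0, 1], [1, 0]])
fused = combine_predictions(ms_sar, sar, clouds)
```

When nearly the whole scene is cloudy, as on 6 July 2021, the fused map under this rule is effectively the SAR-only prediction.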

Our proposed methodology offers a promising avenue for flood monitoring using remote sensing data and deep neural networks. Future research should build upon our work and the research of others, with a focus on enhancing the accuracy and speed of flood damage assessments. Such progress will contribute significantly to timely response and recovery efforts in affected regions, ultimately leading to better flood risk management and mitigation strategies.

5. Conclusions

In summary, this study proposed and implemented a novel methodology for assessing flood scenarios using remote sensing data and deep learning techniques. Our method achieved an F1-score of 0.848, not only during the flood event in Ushmun in 2021 but also beyond it. This high F1-score demonstrates a strong ability to accurately predict the extent of water presence during and after a flood.
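For reference, the F1-score of a binary water mask is the harmonic mean of precision and recall, equivalently 2TP / (2TP + FP + FN). The sketch below shows this computation for a predicted and a reference mask. The function name and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
import numpy as np


def f1_score_binary(pred, target):
    """Compute the F1-score between two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.sum(pred & target)    # water predicted and present
    fp = np.sum(pred & ~target)   # water predicted but absent
    fn = np.sum(~pred & target)   # water present but missed
    denom = 2 * tp + fp + fn
    # Assumed convention: two empty masks agree perfectly.
    return float(2 * tp / denom) if denom > 0 else 1.0


# Toy example: one hit, one false alarm, one miss.
score = f1_score_binary([1, 1, 0, 0], [1, 0, 1, 0])
```

Here TP = FP = FN = 1, so the score is 2 / 4 = 0.5.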

Moreover, our approach estimated the flood volume in Ushmun at about 0.0087 km³. This gives a deeper understanding of the flood’s magnitude and offers insight into its implications for the affected area.

The methodology we developed also leverages the combined power of the Sentinel-1 and Sentinel-2 satellites. The benefit of this combination is twofold. First, it extends the temporal coverage, making it possible to assess the onset, peak, and recession of flood events regardless of weather conditions and cloud coverage. Second, it enhances our capacity for estimating both the extent and volume of floods, as demonstrated by the Ushmun flood scenario.

The expedited and precise flood damage assessments that our method enables could significantly bolster response and recovery initiatives. By swiftly and precisely pinpointing the flood extent, decision-makers and first responders can distribute resources in a more focused and efficient manner.

Going beyond immediate disaster mitigation, the utility of this tool can also greatly aid in our understanding of flood phenomena, helping to mitigate their detrimental effects and fortifying the resilience of impacted regions. Given its ability to perform effectively under a variety of conditions, our methodology stands as a substantial contribution to the realm of flood monitoring and management.

Author Contributions

Conceptualization, S.I. and D.S.; methodology, S.I. and D.S.; software, G.P. and K.E.; validation, G.P., S.I. and D.S.; formal analysis, G.P., S.I. and D.S.; investigation, G.P., S.I., D.S., K.E., N.S. and E.B.; resources, E.B.; data curation, G.P., K.E.; writing—original draft preparation, S.I., D.S., G.P., K.E., N.S. and E.B.; writing—review and editing, all authors; visualization, G.P.; supervision, S.I., D.S.; project administration, D.S.; funding acquisition, N.S. and E.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Analytical center under the RF Government (subsidy agreement 000000D730321P5Q0002, Grant No. 70-2021-00145 02.11.2021). The authors acknowledge the use of the Skoltech CDISE supercomputer Zhores [64] in obtaining the results presented in this paper.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ALWS | Absolute level of the water surface |
| AWEI | Automated Water Extraction Index |
| BASNet | Boundary-Aware Salient Network |
| BF | Beyond flood |
| DEM | Digital Elevation Model |
| DF | During flood |
| IoU | Intersection over Union |
| MNDWI | Modified Normalized Difference Water Index |
| MS | Multispectral |
| NDWI | Normalized Difference Water Index |
| NIR | Near-Infrared |
| OSM | OpenStreetMap |
| SAR | Synthetic Aperture Radar |
| SWI | Standardized Water-Level Index |
| SWIR | Short-Wave Infrared |

References

1. Sharma, T.P.P.; Zhang, J.; Koju, U.A.; Zhang, S.; Bai, Y.; Suwal, M.K. Review of flood disaster studies in Nepal: A remote sensing perspective. Int. J. Disaster Risk Reduct. 2019, 34, 18–27.

2. Munawar, H.S.; Hammad, A.W.; Waller, S.T. Remote sensing methods for flood prediction: A review. Sensors 2022, 22, 960.

3. Illarionova, S.; Shadrin, D.; Tregubova, P.; Ignatiev, V.; Efimov, A.; Oseledets, I.; Burnaev, E. A Survey of Computer Vision Techniques for Forest Characterization and Carbon Monitoring Tasks. Remote Sens. 2022, 14, 5861.

4. Illarionova, S.; Shadrin, D.; Shukhratov, I.; Evteeva, K.; Popandopulo, G.; Sotiriadi, N.; Oseledets, I.; Burnaev, E. Benchmark for Building Segmentation on Up-Scaled Sentinel-2 Imagery. Remote Sens. 2023, 15, 2347.

5. Chawla, I.; Karthikeyan, L.; Mishra, A.K. A review of remote sensing applications for water security: Quantity, quality, and extremes. J. Hydrol. 2020, 585, 124826.

6. Hernández, D.; Cecilia, J.M.; Cano, J.C.; Calafate, C.T. Flood detection using real-time image segmentation from unmanned aerial vehicles on edge-computing platform. Remote Sens. 2022, 14, 223.

7. Feng, Q.; Liu, J.; Gong, J. Urban flood mapping based on unmanned aerial vehicle remote sensing and random forest classifier—A case of Yuyao, China. Water 2015, 7, 1437–1455.

8. Hashemi-Beni, L.; Gebrehiwot, A.A. Flood extent mapping: An integrated method using deep learning and region growing using UAV optical data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2127–2135.

9. Chien, S.; Mclaren, D.; Doubleday, J.; Tran, D.; Tanpipat, V.; Chitradon, R. Using taskable remote sensing in a sensor web for Thailand flood monitoring. J. Aerosp. Inf. Syst. 2019, 16, 107–119.

10. Lin, L.; Di, L.; Tang, J.; Yu, E.; Zhang, C.; Rahman, M.S.; Shrestha, R.; Kang, L. Improvement and validation of NASA/MODIS NRT global flood mapping. Remote Sens. 2019, 11, 205.

11. Moortgat, J.; Li, Z.; Durand, M.; Howat, I.; Yadav, B.; Dai, C. Deep learning models for river classification at sub-meter resolutions from multispectral and panchromatic commercial satellite imagery. Remote Sens. Environ. 2022, 282, 113279.

12. Zhang, L.; Xia, J. Flood detection using multiple Chinese satellite datasets during 2020 China summer floods. Remote Sens. 2022, 14, 51.

13. Islam, M.M.; Ahamed, T. Development of a near-infrared band derived water indices algorithm for rapid flash flood inundation mapping from Sentinel-2 remote sensing datasets. Asia-Pac. J. Reg. Sci. 2023, 7, 615–640.

14. Sivanpillai, R.; Jacobs, K.M.; Mattilio, C.M.; Piskorski, E.V. Rapid flood inundation mapping by differencing water indices from pre-and post-flood Landsat images. Front. Earth Sci. 2021, 15, 1–11.

15. Jones, J.W. Improved automated detection of subpixel-scale inundation—Revised dynamic surface water extent (DSWE) partial surface water tests. Remote Sens. 2019, 11, 374.

16. Jet Propulsion Laboratory. Observational Products for End-Users from Remote Sensing Analysis (OPERA). 2023. Available online: https://www.jpl.nasa.gov/go/opera (accessed on 20 June 2023).

17. Chen, Z.; Zhao, S. Automatic monitoring of surface water dynamics using Sentinel-1 and Sentinel-2 data with Google Earth Engine. Int. J. Appl. Earth Obs. Geoinf. 2022, 113, 103010.

18. Dong, Z.; Wang, G.; Amankwah, S.O.Y.; Wei, X.; Hu, Y.; Feng, A. Monitoring the summer flooding in the Poyang Lake area of China in 2020 based on Sentinel-1 data and multiple convolutional neural networks. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102400.

19. Bonafilia, D.; Tellman, B.; Anderson, T.; Issenberg, E. Sen1Floods11: A georeferenced dataset to train and test deep learning flood algorithms for Sentinel-1. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 210–211.

20. Bai, Y.; Wu, W.; Yang, Z.; Yu, J.; Zhao, B.; Liu, X.; Yang, H.; Mas, E.; Koshimura, S. Enhancement of detecting permanent water and temporary water in flood disasters by fusing Sentinel-1 and Sentinel-2 imagery using deep learning algorithms: Demonstration of Sen1Floods11 benchmark datasets. Remote Sens. 2021, 13, 2220.

21. Qin, X.; Zhang, Z.; Huang, C.; Gao, C.; Dehghan, M.; Jagersand, M. BASNet: Boundary-aware salient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7479–7489.

22. Rudner, T.G.; Rußwurm, M.; Fil, J.; Pelich, R.; Bischke, B.; Kopačková, V.; Biliński, P. Multi3Net: Segmenting flooded buildings via fusion of multiresolution, multisensor, and multitemporal satellite imagery. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 702–709.

23. Quinn, N.; Bates, P.D.; Neal, J.; Smith, A.; Wing, O.; Sampson, C.; Smith, J.; Heffernan, J. The spatial dependence of flood hazard and risk in the United States. Water Resour. Res. 2019, 55, 1890–1911.

24. Rakwatin, P.; Sansena, T.; Marjang, N.; Rungsipanich, A. Using multi-temporal remote-sensing data to estimate 2011 flood area and volume over Chao Phraya River basin, Thailand. Remote Sens. Lett. 2013, 4, 243–250.

25. Cohen, S.; Raney, A.; Munasinghe, D.; Loftis, J.D.; Molthan, A.; Bell, J.; Rogers, L.; Galantowicz, J.; Brakenridge, G.R.; Kettner, A.J.; et al. The Floodwater Depth Estimation Tool (FwDET v2.0) for improved remote sensing analysis of coastal flooding. Nat. Hazards Earth Syst. Sci. 2019, 19, 2053–2065.

26. Cohen, S.; Brakenridge, G.R.; Kettner, A.; Bates, B.; Nelson, J.; McDonald, R.; Huang, Y.F.; Munasinghe, D.; Zhang, J. Estimating floodwater depths from flood inundation maps and topography. JAWRA J. Am. Water Resour. Assoc. 2018, 54, 847–858.

27. Budiman, J.; Bahrawi, J.; Hidayatulloh, A.; Almazroui, M.; Elhag, M. Volumetric quantification of flash flood using microwave data on a watershed scale in arid environments, Saudi Arabia. Sustainability 2021, 13, 4115.

28. Sinergise Ltd. Sentinel Hub: Cloud-Based Processing and Analysis of Satellite Data. Available online: https://www.sentinel-hub.com/ (accessed on 20 June 2023).

29. McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

30. Xu, H. Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.

31. Bagheri, H.; Moradi, M.; Sarikhani, M.R.; Tazeh, M. Soil water index determination using Landsat 8 OLI and TIRS sensor data. J. Appl. Remote Sens. 2015, 9, 096075.

32. Fei, T.; Wang, C.; Li, X.; Zhou, Y.; Zhang, Z. Automatic Water Extraction Index (AWEI) for inland water body extraction with Landsat 8 OLI imagery. ISPRS J. Photogramm. Remote Sens. 2019, 147, 98–112.

33. Main-Knorn, M.; Pflug, B.; Louis, J.; Debaecker, V.; Müller-Wilm, U.; Gascon, F. Sen2Cor for Sentinel-2. In Proceedings of the Image and Signal Processing for Remote Sensing (XXIII SPIE), Warsaw, Poland, 11–13 September 2017; Volume 10427, pp. 37–48.

34. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

35. Fan, T.; Wang, G.; Li, Y.; Wang, H. MA-Net: A Multi-Scale Attention Network for Liver and Tumor Segmentation. IEEE Access 2020, 8, 179656–179665.

36. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

37. Illarionova, S.; Shadrin, D.; Ignatiev, V.; Shayakhmetov, S.; Trekin, A.; Oseledets, I. Estimation of the Canopy Height Model From Multispectral Satellite Imagery with Convolutional Neural Networks. IEEE Access 2022, 10, 34116–34132.

38. Sharma, S. Semantic Segmentation for Urban-Scene Images. arXiv 2021, arXiv:2110.13813.

39. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

40. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

42. Smith, L.N. Cyclical Learning Rates for Training Neural Networks. arXiv 2017, arXiv:1506.01186.

43. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

44. Zhu, W.; Huang, Y.; Zeng, L.; Chen, X.; Liu, Y.; Qian, Z.; Du, N.; Fan, W.; Xie, X. AnatomyNet: Deep learning for fast and fully automated whole-volume segmentation of head and neck anatomy. Med. Phys. 2019, 46, 576–589.

45. Gao, Y.; Gella, G.W.; Liu, N. Assessing the Influences of Band Selection and Pretrained Weights on Semantic-Segmentation-Based Refugee Dwelling Extraction from Satellite Imagery. AGILE GISci. Ser. 2022, 3, 36.

46. Zhang, T.X.; Su, J.Y.; Liu, C.J.; Chen, W.H. Potential bands of Sentinel-2A satellite for classification problems in precision agriculture. Int. J. Autom. Comput. 2019, 16, 16–26.

47. Pekel, J.F.; Cottam, A.; Gorelick, N.; Belward, A.S. High-resolution mapping of global surface water and its long-term changes. Nature 2016, 540, 418–422.

48. OpenStreetMap Contributors. 2017. Available online: https://www.openstreetmap.org (accessed on 20 May 2023).

49. Illarionova, S.; Shadrin, D.; Ignatiev, V.; Shayakhmetov, S.; Trekin, A.; Oseledets, I. Augmentation-Based Methodology for Enhancement of Trees Map Detalization on a Large Scale. Remote Sens. 2022, 14, 2281.

50. Helleis, M.; Wieland, M.; Krullikowski, C.; Martinis, S.; Plank, S. Sentinel-1-Based Water and Flood Mapping: Benchmarking Convolutional Neural Networks Against an Operational Rule-Based Processing Chain. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2023–2036.

51. Mateo-Garcia, G.; Veitch-Michaelis, J.; Smith, L.; Oprea, S.V.; Schumann, G.; Gal, Y.; Baydin, A.G.; Backes, D. Towards global flood mapping onboard low cost satellites with machine learning. Sci. Rep. 2021, 11, 7249.

52. Romero, A.; Gatta, C.; Camps-Valls, G. Unsupervised deep feature extraction for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2015, 54, 1349–1362.

53. Nesteruk, S.; Agafonova, J.; Pavlov, I.; Gerasimov, M.; Latyshev, N.; Dimitrov, D.; Kuznetsov, A.; Kadurin, A.; Plechov, P. MineralImage5k: A benchmark for zero-shot raw mineral visual recognition and description. Comput. Geosci. 2023, 178, 105414.

54. Yamazaki, D.; Ikeshima, D.; Tawatari, R.; Yamaguchi, T.; O’Loughlin, F.; Neal, J.C.; Sampson, C.C.; Kanae, S.; Bates, P.D. A high-accuracy map of global terrain elevations. Geophys. Res. Lett. 2017, 44, 5844–5853.

55. Nguyen, T.H.D.; Nguyen, T.C.; Nguyen, T.N.T.; Doan, T.N. Flood inundation mapping using Sentinel-1A in An Giang province in 2019. Vietnam. J. Sci. Technol. Eng. 2020, 62, 36–42.

56. Lincoln, T. Flood of data. Nature 2007, 447, 393.

57. Yang, T.; Sun, F.; Gentine, P.; Liu, W.; Wang, H.; Yin, J.; Du, M.; Liu, C. Evaluation and machine learning improvement of global hydrological model-based flood simulations. Environ. Res. Lett. 2019, 14, 114027.

58. Illarionova, S.; Nesteruk, S.; Shadrin, D.; Ignatiev, V.; Pukalchik, M.; Oseledets, I. Object-based augmentation for building semantic segmentation: Ventura and Santa Rosa case study. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 1659–1668.

59. Mirpulatov, I.; Illarionova, S.; Shadrin, D.; Burnaev, E. Pseudo-Labeling Approach for Land Cover Classification through Remote Sensing Observations with Noisy Labels. IEEE Access 2023, 11, 82570–82583.

60. Pai, M.M.; Mehrotra, V.; Verma, U.; Pai, R.M. Improved semantic segmentation of water bodies and land in SAR images using generative adversarial networks. Int. J. Semant. Comput. 2020, 14, 55–69.

61. Nesteruk, S.; Illarionova, S.; Zherebzov, I.; Traweek, C.; Mikhailova, N.; Somov, A.; Oseledets, I. PseudoAugment: Enabling Smart Checkout Adoption for New Classes Without Human Annotation. IEEE Access 2023, 11, 76869–76882.

62. Jozdani, S.; Chen, D.; Pouliot, D.; Johnson, B.A. A review and meta-analysis of generative adversarial networks and their applications in remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2022, 108, 102734.

63. Illarionova, S.; Shadrin, D.; Trekin, A.; Ignatiev, V.; Oseledets, I. Generation of the NIR spectral band for satellite images with convolutional neural networks. Sensors 2021, 21, 5646.

64. Zacharov, I.; Arslanov, R.; Gunin, M.; Stefonishin, D.; Bykov, A.; Pavlov, S.; Panarin, O.; Maliutin, A.; Rykovanov, S.; Fedorov, M. “Zhores”—Petaflops supercomputer for data-driven modeling, machine learning and artificial intelligence installed in Skolkovo Institute of Science and Technology. Open Eng. 2019, 9, 512–520.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).