1. Introduction

Buildings constitute an essential element of urban areas. Accurate information about buildings is crucial for various applications, including city planning [1,2], environmental monitoring [3], real estate management [4], population estimation [5], and disaster risk assessment [6].

Rapid advancements in aerospace technology, photography, and remote sensing techniques have made it possible to quickly obtain high-resolution spectral and spatial information of objects over large regions. The detailed texture and semantic information provided by this very-high-resolution (VHR) imagery is useful for building extraction and land-cover classification [7], making building extraction an exciting but challenging research topic [8]. Traditional building extraction methods primarily rely on manual and expert interpretations of the statistical features of building shape and texture derived from remote sensing images. However, these approaches suffer from limited accuracy, making it challenging to meet the demands of large-scale datasets and intelligent automatic updates. In contrast, object-based extraction methods that use spectral, textural, and geometric features of buildings, together with auxiliary information such as digital surface models (DSMs), LiDAR, and building shadows, can yield more accurate results [9,10,11]. For example, Jiang et al. [12] used an object-oriented method to extract trees and buildings with DSM data, then removed the trees to retrieve the buildings, while Vu et al. [13] leveraged LiDAR to obtain building structural information through height and spectral data to remove vegetation affecting building extraction. However, handcrafted feature-based object extraction methods have limitations. They cannot extract semantic information about complex land features from VHR images. Additionally, these methods suffer from low accuracy and limited completeness, require a considerable amount of manpower and resources, and cannot meet the demands of industrial big data and intelligent automatic updates [14,15]. Therefore, it is imperative to develop deep learning-based techniques that extract building features with higher precision and accuracy, thus fulfilling the demands of research and industrial applications.

In recent years, with the rapid advances in deep learning-based computer vision, deep convolutional neural networks (DCNNs) have been extensively adopted for automated building extraction. Deep learning architectures learn features directly from the data, without the need for domain-specific knowledge to design them, which avoids the dependence of features on specialized field knowledge [16]. The integration of remote sensing techniques with deep learning has become a prevalent methodology for building extraction [17,18,19,20,21]. Classical CNN models, such as VGGNet [22], ResNet [23], DenseNet [24], AlexNet [25], and InceptionNet [26], have become baseline networks for many segmentation models. Shrestha et al. [27] replaced the last three fully connected (FC) layers of the original VGGNet to construct a fully convolutional network (FCN) model and applied conditional random fields to extract buildings from remote sensing images. Deng et al. [28] applied an improved ResNet50 encoder to extract building features and used atrous spatial pyramid pooling (ASPP) blocks between the encoder and decoder to capture multi-scale features of objects. Chen et al. [29] employed a modified version of the Xception network as their backbone feature extraction network to identify buildings. This approach effectively reduced computational requirements, accelerated model convergence, and minimized training time.

Despite the substantial advancements achieved in building extraction accuracy through deep learning-based methods, certain challenges persist [30,31,32]. When extracting buildings from VHR images, contextual information plays a critical role [33,34]. Although the DCNN has strong semantic feature extraction ability, common down-sampling operations can lead to the loss of spatial details, resulting in blurred images [35]. Tian et al. [36] utilized dilated convolutions with different dilation rates to expand the receptive field and a densely connected refine feature pyramid structure for the decoder to fuse multi-scale features with more spatial and semantic information. However, dilated convolutions may miss small objects in VHR images. Wang et al. [37] used an improved residual U-Net for building extraction, with residual modules to reduce the network’s parameters and degradation. The skip connection of U-Net can transmit low-level features and increase the context information [38,39]; however, the simple concatenation of features from the low and high levels can lead to insufficient feature exploitation and inaccurate extraction of boundaries or small buildings. Boundary information is also important for the performance of semantic segmentation in remote sensing images. Due to the complex shapes, large scale variations, and different lighting conditions of the targets, the boundaries between semantic objects are often ambiguous, which poses a challenge to segmentation. A common method to improve edge accuracy is to combine edge detection algorithms and add constraint terms to the loss function [40]. However, this method is susceptible to false detection of edges due to noise and different viewing angles. When boundaries cross multiple regions, it becomes difficult to represent them accurately and to improve the accuracy of segmentation results.

To further deal with these problems, we propose an MBR-HRNet network with boundary optimization for building extraction, which can automatically extract buildings from VHR images. The main contributions of this study are as follows:

- (1) In the encoding stage of MBR-HRNet, we propose a boundary refinement module (BRM) that utilizes deep semantic information to generate a weight to filter out irrelevant boundary information from shallow layers, focusing on edge information and enhancing edge-recognition ability. The boundary extraction task is coordinated with the building body extraction task, increasing the accuracy of building body boundaries;

- (2) In the decoding stage of MBR-HRNet, a multi-scale context fusion module (MCFM) is applied to optimize the semantic information in the feature map. By incorporating extracted boundary data and effectively retaining intricate contextual nuances, this module successfully tackles the integration of both global and local contextual information across various levels, resulting in enhanced segmentation precision.

The rest of this paper is organized as follows. Section 2 describes the methodology of the proposed boundary-refinement building extraction network. Section 3 presents the dataset description, experimental configuration, and evaluation metrics. Section 4 describes the experimental results and discussions. Finally, Section 5 concludes this paper.

2. Materials and Methods

This section begins with an overview of the model’s architecture outlined in Section 2.1. Subsequently, detailed explanations of the BRM and MCFM are presented in Section 2.2 and Section 2.3, respectively. Concluding this section, we introduce our proposed loss function in Section 2.4.

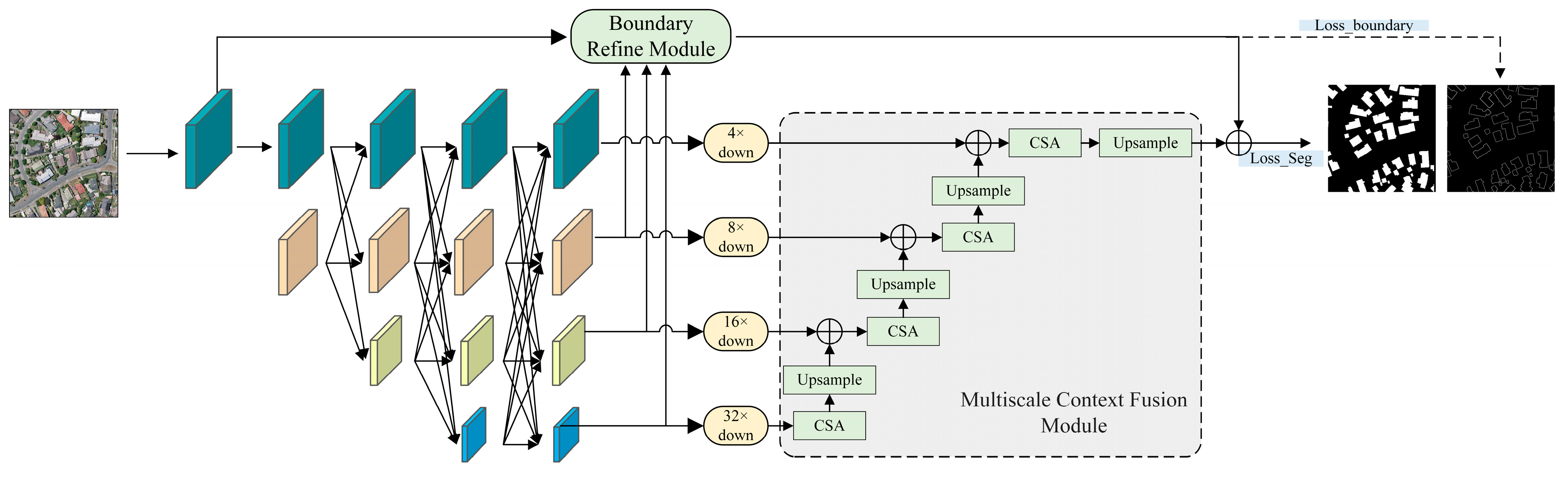

2.1. Architecture of the Proposed Framework

Previous semantic segmentation tasks mostly used an encoder–decoder architecture and convolutions to continuously down-sample the image, process contextual semantic information on the resulting low-resolution image, and then restore the original high-resolution output [41]. However, as the number of convolutional layers increases, this operation cannot maintain the high-resolution feature information. Moreover, feature extraction through serially connected encoders causes information loss and wastes resources. When information is transmitted across multiple layers, each subsequent layer can only receive limited information from the previous layer. This means that, as the number of layers increases, the amount of information received by each subsequent layer decreases, which may result in the loss of important boundary contour information. In contrast, HRNet parallelizes the serial structure and replaces the operation of reducing the resolution with an operation that maintains the resolution [42]. In addition, the high-resolution and low-resolution feature maps continuously exchange information and advance synchronously. The high-resolution maps make the spatial information more precise, while the low-resolution maps make the semantic information more comprehensive. On this basis, we propose the BRM to further refine boundaries, using a separate branch to capture building boundary information. To fully utilize features at different levels and improve the model’s ability to extract fine contextual details, we also propose an MCFM. The pipeline of MBR-HRNet is shown in Figure 1.
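The exchange of information between resolution branches described above can be illustrated with a minimal NumPy sketch. It assumes two branches with equal channel counts, uses 2 × 2 average pooling as a stand-in for a learned strided convolution and nearest-neighbour repetition for up-sampling, and fuses by addition. The function names `downsample2`, `upsample2`, and `exchange` are hypothetical and are not taken from HRNet or MBR-HRNet.

```python
import numpy as np

def downsample2(x):
    """Halve the resolution of a (C, H, W) array with 2x2 average pooling.

    Assumption: stands in for a learned strided convolution; H and W must be even.
    """
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def upsample2(x):
    """Double the resolution of a (C, H, W) array by nearest-neighbour repetition."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def exchange(high, low):
    """One fusion step: each branch adds the resampled features of the other."""
    new_high = high + upsample2(low)    # semantic context flows to high resolution
    new_low = low + downsample2(high)   # spatial detail flows to low resolution
    return new_high, new_low

# Toy feature maps (hypothetical sizes): 2 channels, 4x4 and 2x2.
high = np.ones((2, 4, 4))
low = 2.0 * np.ones((2, 2, 2))
new_high, new_low = exchange(high, low)
print(new_high.shape, new_low.shape)
```

Both branches keep their own resolution after the step, which is the property the parallel design relies on: the high-resolution branch is never reduced and later restored.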

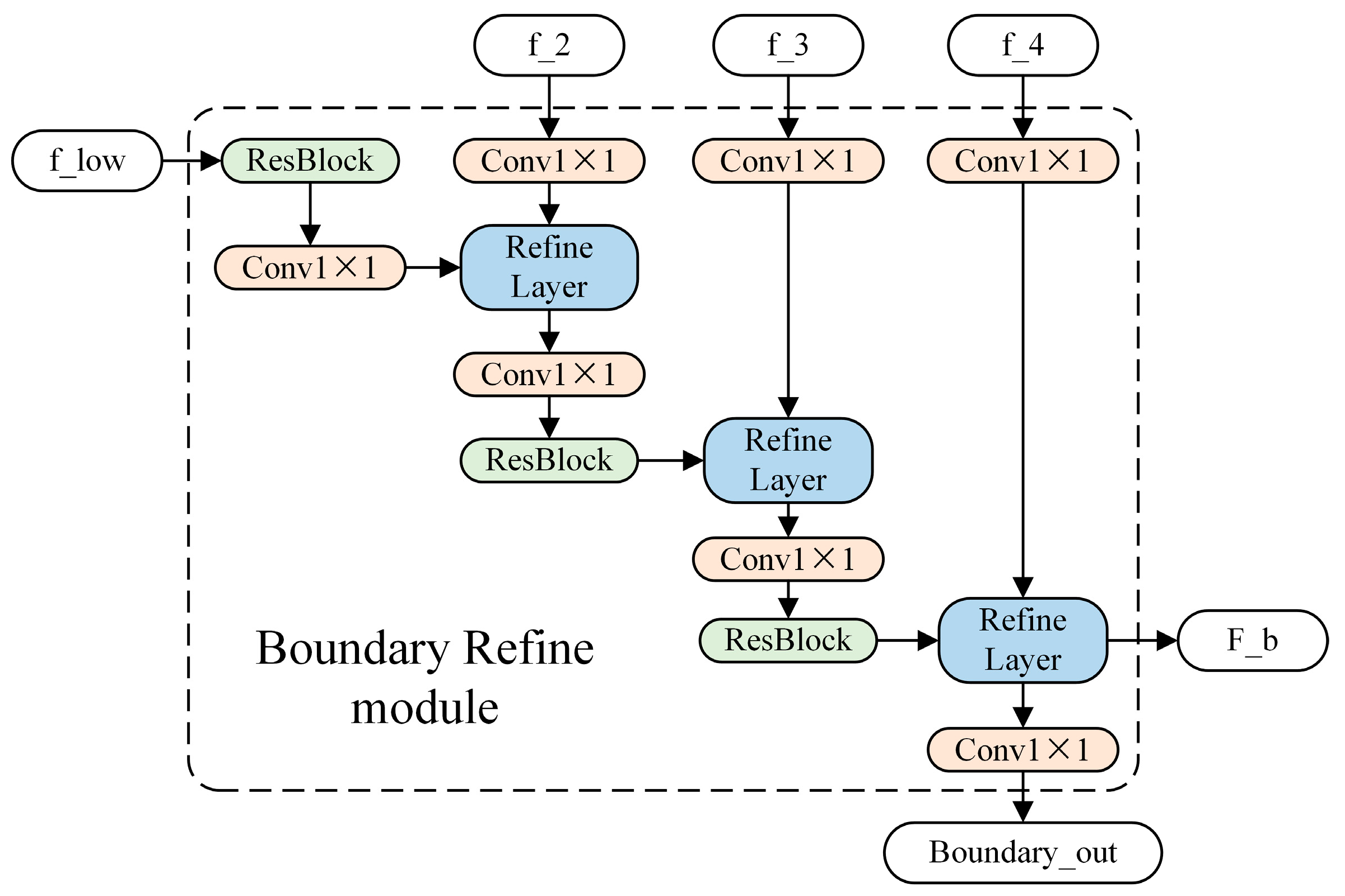

2.2. BRM

Although many semantic segmentation models can effectively extract buildings, they are not accurate enough in capturing the details and edge structures of buildings, especially when dealing with closely adjacent ones. Furthermore, for networks trained only with building label loss, the loss contributions of edge regions are often relatively small. Because smaller loss values yield smaller gradients in backpropagation, the network tends to focus on constructing the body of the building rather than the edges. To solve this problem, we propose a BRM, as shown in Figure 2.

The BRM uses a separate stream to process boundary information, which can be considered as an auxiliary to the main feature extraction flow. The main feature extraction flow forms a higher-level semantic understanding of the scene, while the boundary processing flow focuses solely on the parts related to boundaries. This allows the boundary flow to employ an efficient shallow structure for image processing at a very high resolution, avoiding down-sampling and the loss of fine detail information. The BRM takes the shallow encoder feature f_low, which contains rich detail information, as the input and calculates weights by jointly considering three different scales of deep features, f_2, f_3, and f_4, output by the encoder. These weights filter out the unrelated boundary information from the shallow layer to obtain F_b, which focuses only on the boundary information. The predicted boundary map Boundary_out is supervised with the binary cross-entropy loss function. Specifically, because f_2, f_3, and f_4 correspond to features of different sizes, they are first up-sampled to match the size of f_low. Subsequently, a 1 × 1 convolution is applied to transform their channel dimensions. Similarly, f_low is first passed through a 1 × 1 convolution to modify its channel dimension. It then passes through the refine layer, together with the aforementioned features, to produce the final outputs F_b and Boundary_out.

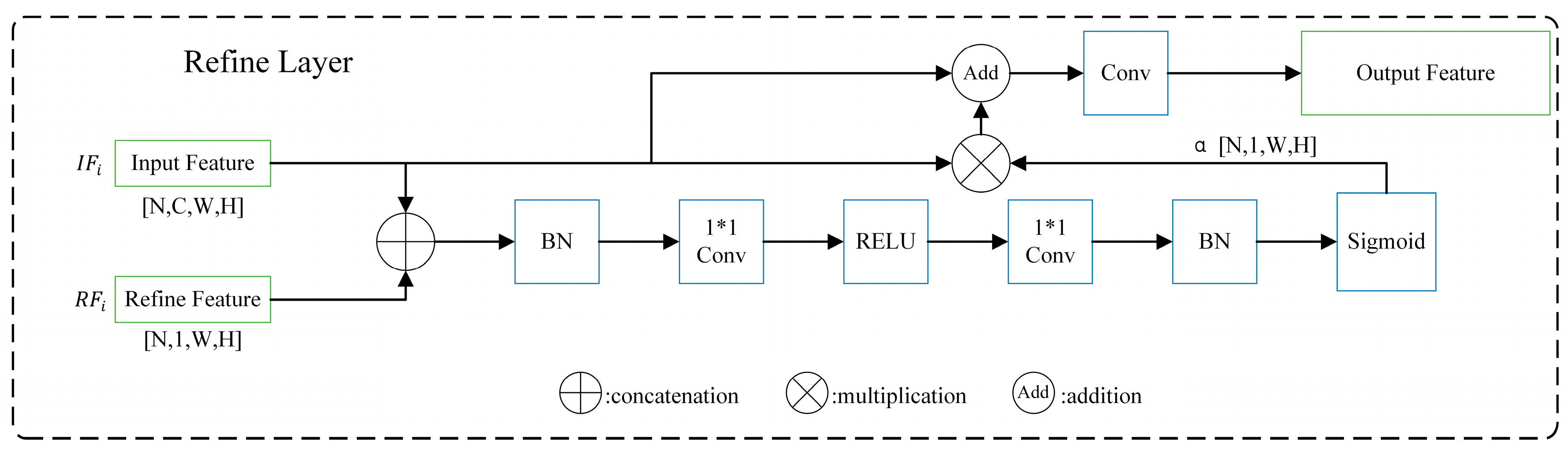

The refine layer plays a vital role in filtering out irrelevant information regarding boundaries. In the refine layer, the features containing boundary information from shallow layers are first combined with the features capturing the main body of buildings from deep layers. Weights are then derived through network adaptation and applied to the input shallow features. Finally, the refined boundary features, which have been selected based on weight adaptation through a residual connection, are outputted. For a detailed illustration, refer to Figure 3. First, the shallow feature and the deep feature are concatenated and passed through a normalized 1 × 1 convolution layer and a sigmoid function, resulting in an attention map. The attention map is then applied to the shallow feature through element-wise (Hadamard) multiplication. Finally, the output is obtained through the residual connection and transmitted to the subsequent layer for further processing. The computations of the attention map and refine layer are differentiable, allowing for end-to-end backpropagation. Intuitively, the attention map focuses on important boundary information and assigns greater weight to boundary areas. In the proposed BRM, three refine layers are used to connect the features of the encoder as refine features.
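The refine-layer steps (concatenation, 1 × 1 convolution, sigmoid gating, element-wise multiplication, residual connection) can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation: the normalization after the 1 × 1 convolution is omitted, nearest-neighbour up-sampling is assumed, the weights are random rather than learned, and the names `refine_layer`, `conv1x1`, and `upsample_nearest` as well as all tensor sizes are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def upsample_nearest(x, size):
    """Nearest-neighbour resize of a (C, h, w) array to (C, H, W)."""
    _, h, w = x.shape
    H, W = size
    rows = np.arange(H) * h // H
    cols = np.arange(W) * w // W
    return x[:, rows[:, None], cols[None, :]]

def conv1x1(x, weight):
    """1x1 convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)

def refine_layer(f_shallow, f_deep, weight):
    """Gate shallow features with an attention map built from both inputs."""
    f_deep_up = upsample_nearest(f_deep, f_shallow.shape[1:])
    stacked = np.concatenate([f_shallow, f_deep_up], axis=0)  # channel concat
    alpha = sigmoid(conv1x1(stacked, weight))                 # (1, H, W) in (0, 1)
    refined = f_shallow * alpha + f_shallow                   # residual connection
    return refined, alpha

# Toy inputs (hypothetical sizes): 4-channel shallow and deep features.
rng = np.random.default_rng(0)
f_low = rng.standard_normal((4, 8, 8))
f_deep = rng.standard_normal((4, 2, 2))
w = rng.standard_normal((1, 8))  # maps the 8 concatenated channels to 1
refined, alpha = refine_layer(f_low, f_deep, w)
print(refined.shape, alpha.shape)
```

Because of the residual term, the shallow feature is never suppressed below its original value; the attention map only amplifies the locations it considers boundary-relevant.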

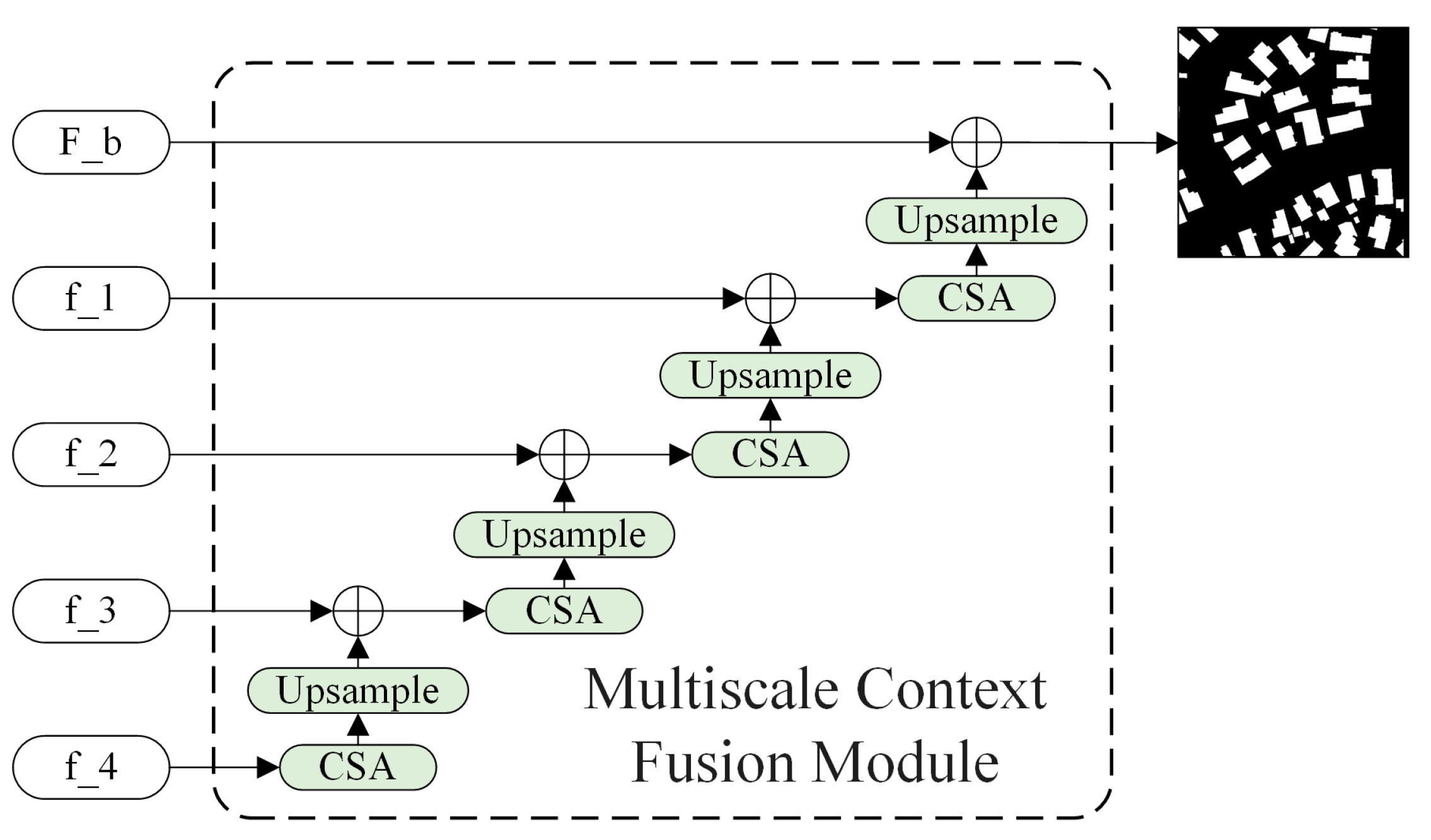

2.3. MCFM

In semantic segmentation, the encoder usually generates feature maps at multiple scales. These feature maps correspond to semantic information at different levels, and aggregating them can better capture global and local contextual information, improving segmentation accuracy [43,44]. However, simply concatenating low-level and high-level features can result in the inadequate utilization of features at different levels. The output features of HRNet’s lowest-resolution branch have the strongest semantic representation [45]; however, in the original segmentation network, they are directly concatenated with features of other scales and output, so they cannot be fully propagated to higher-resolution branches, and the semantic information is not fully utilized. Restoring higher-resolution prediction maps based on simple bilinear up-sampling may result in a loss of fine details. As shown in Figure 4 and Figure 5, we propose an up-sampling module that combines spatial and channel attention to fully utilize the spatial and channel dependencies of features, improve the semantic reconstruction ability, and gradually restore the features to the size of the prediction map. By effectively capturing the relevant features in the input data and removing useless information, this module keeps the model lightweight and efficient. Moreover, using this module to combine regional features with boundary features can output refined semantic segmentation results. In this module, the feature map from the i-th layer is processed by the channel–spatial attention (CSA) module. The CSA module is used to efficiently integrate multi-scale information; spatial and channel attention mechanisms are employed, thereby enhancing the feature representation capability. This module’s design minimizes the number of learnable parameters, resulting in a substantial reduction in model complexity. Additionally, it enables a better adaptation to scenarios that require an advanced semantic analysis.

In the CSA module, the input feature map undergoes parallel transformations through MaxPool and AvgPool layers, each resulting in a feature map of size C × 1 × 1. The two results are passed through the shared MLP module and then added element-wise, and a sigmoid activation function is applied to obtain the channel attention weights. The input feature map is then multiplied by these weights, giving a channel attention result of size C × H × W. Max pooling and average pooling are then applied to the channel attention result along the channel dimension to obtain two 1 × H × W feature maps, which are concatenated and combined into a single-channel feature map using a 7 × 7 convolutional layer. Finally, a sigmoid is applied to obtain the spatial attention feature map, M_s. M_s is multiplied by the input of the spatial attention step, giving an output of size C × H × W. Residual connections are employed to integrate the spatial and channel features effectively, thereby enhancing the semantic segmentation accuracy. Here, MLP refers to a fully connected layer, and AvgPool and MaxPool refer to global average pooling and global maximum pooling, respectively.
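The channel and spatial attention steps described above can be sketched in NumPy as follows. This is a minimal illustration under assumptions, not the authors' code: the shared MLP is taken to have one ReLU hidden layer, the 7 × 7 convolution is written as an explicit zero-padded loop, the residual connection is assumed to add the module input to the attended output, and all names (`channel_attention`, `spatial_attention`, `csa`) and sizes are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(f, w1, w2):
    """Channel weights of shape (C, 1, 1) from globally pooled features."""
    avg = f.mean(axis=(1, 2))  # global average pooling -> (C,)
    mx = f.max(axis=(1, 2))    # global max pooling -> (C,)

    def mlp(v):
        # Shared two-layer MLP; the ReLU hidden layer is an assumption.
        return w2 @ np.maximum(w1 @ v, 0.0)

    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]

def conv2d_same(x, kernel):
    """Zero-padded convolution: x (C_in, H, W), kernel (C_in, k, k) -> (H, W)."""
    _, h, w = x.shape
    k = kernel.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(xp[:, i:i + k, j:j + k] * kernel)
    return out

def spatial_attention(f, kernel):
    """Spatial weights of shape (1, H, W) from channel-wise pooled maps."""
    avg = f.mean(axis=0, keepdims=True)
    mx = f.max(axis=0, keepdims=True)
    pooled = np.concatenate([avg, mx], axis=0)  # (2, H, W)
    return sigmoid(conv2d_same(pooled, kernel))[None]

def csa(f, w1, w2, kernel):
    """Channel attention, then spatial attention, then a residual connection."""
    f_c = f * channel_attention(f, w1, w2)
    f_s = f_c * spatial_attention(f_c, kernel)
    return f + f_s  # assumed placement of the residual connection

# Toy input (hypothetical sizes): 8 channels, 6x6, MLP reduction ratio 4.
rng = np.random.default_rng(0)
f = rng.standard_normal((8, 6, 6))
out = csa(f, rng.standard_normal((2, 8)), rng.standard_normal((8, 2)),
          rng.standard_normal((2, 7, 7)))
print(out.shape)
```

The only learnable parameters in this sketch are the two MLP matrices and one 2 × 7 × 7 kernel, which is consistent with the module being described as lightweight.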

2.4. Loss Function

The semantic segmentation task for buildings encounters a notable disparity in sample quantities between the foreground (building) and background (non-building) classes. When training the convolutional neural network using traditional loss functions, the network tends to predict all pixels as the background class, since it is easier to predict the class that occupies most of the pixels. This phenomenon leads to insufficient feature representation and discriminative learning of the foreground class during training, thereby affecting the accuracy of the final segmentation results. Accordingly, we put forth a modified cross-entropy (CE) loss function with weights and assign a higher weight to the foreground class to balance the sample quantities between the two classes. In the proposed framework, MBR-HRNet outputs two main results, the building segmentation masks and the building boundaries. The weighted CE loss is computed over all samples from the true label of each sample (0 or 1), the prediction for each sample (ranging from 0 to 1), and separate weight parameters for the positive and negative classes. In this loss function, the cross-entropy error of the belonging class is calculated for each sample and multiplied by the corresponding weight. Different weight parameters can be set to adjust the impact of misclassification of different classes when applying the CE loss function, thus addressing class imbalance in binary classification.
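A class-weighted binary cross-entropy of the kind described above can be sketched in plain Python. The form used here, the mean over samples of -(w_pos · y · log p + w_neg · (1 - y) · log(1 - p)), is the standard weighted formulation and is assumed rather than taken from the paper; the function name `weighted_bce`, the default weights 2.0 and 1.0, and the clipping constant `eps` are hypothetical.

```python
import math

def weighted_bce(y_true, y_pred, w_pos=2.0, w_neg=1.0, eps=1e-7):
    """Mean class-weighted binary cross-entropy.

    y_true: iterable of labels (0 or 1); y_pred: predicted probabilities in [0, 1].
    w_pos and w_neg are illustrative weights, not values from the paper.
    """
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= w_pos * y * math.log(p) + w_neg * (1 - y) * math.log(1.0 - p)
    return total / len(y_true)

# A missed building pixel costs more than an equally confident false alarm.
missed_building = weighted_bce([1], [0.1])
false_alarm = weighted_bce([0], [0.9])
print(missed_building > false_alarm)
```

With a larger positive weight, errors on the rare building class contribute more to the gradient, which counteracts the tendency to predict background everywhere.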

The CE loss function is employed for boundary prediction.

The overall training loss therefore combines the weighted CE loss for building segmentation with the CE loss for boundary prediction.

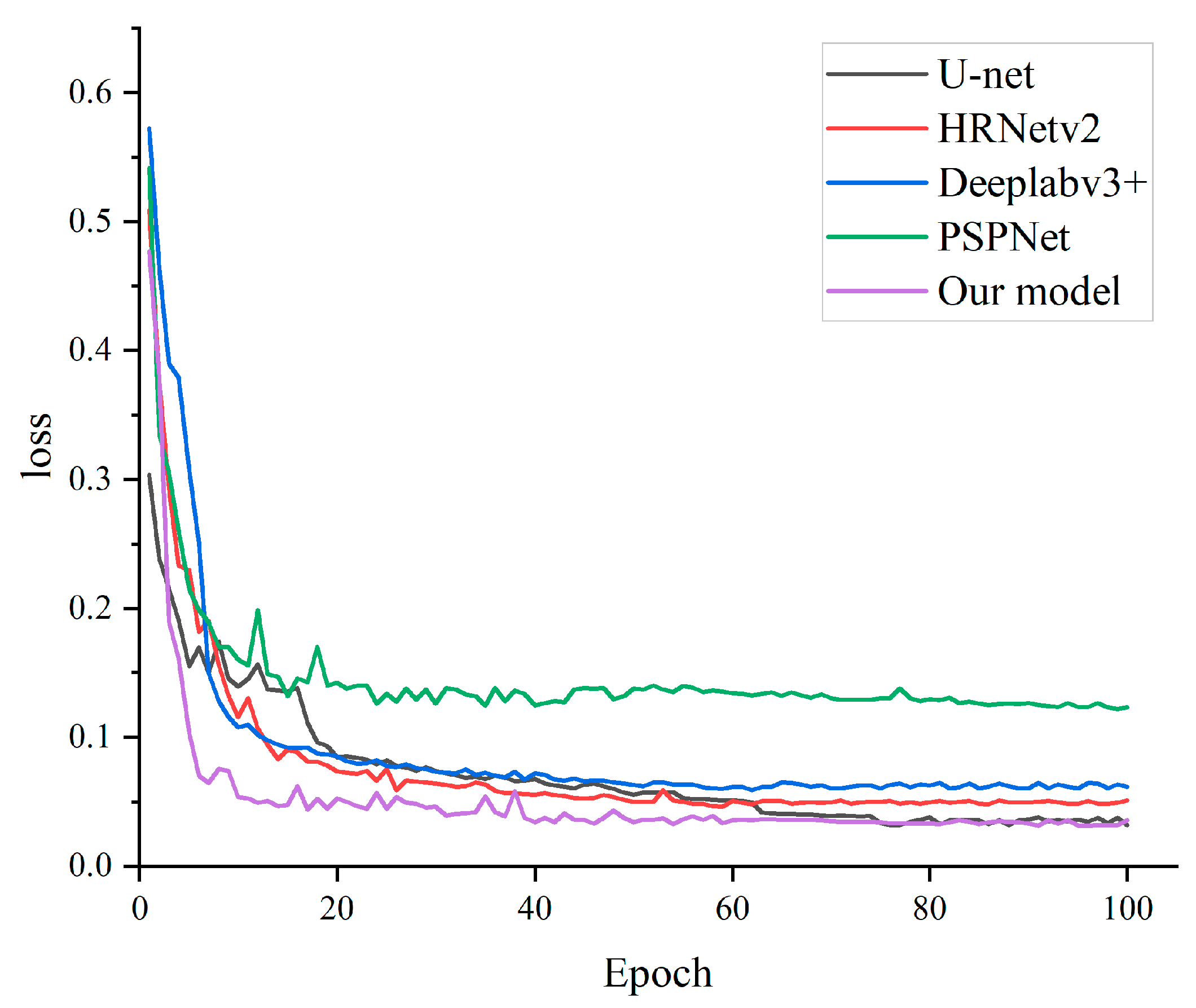

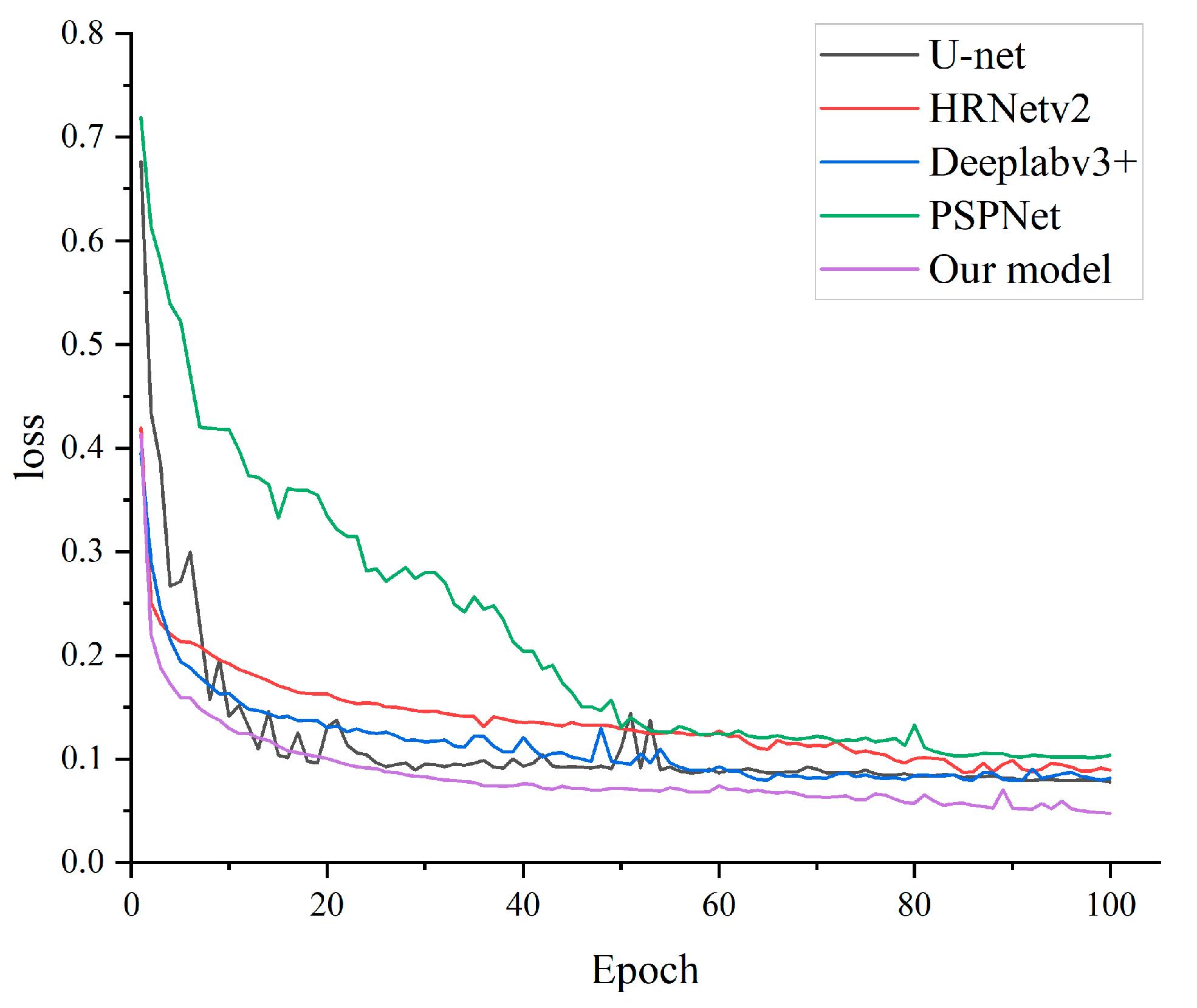

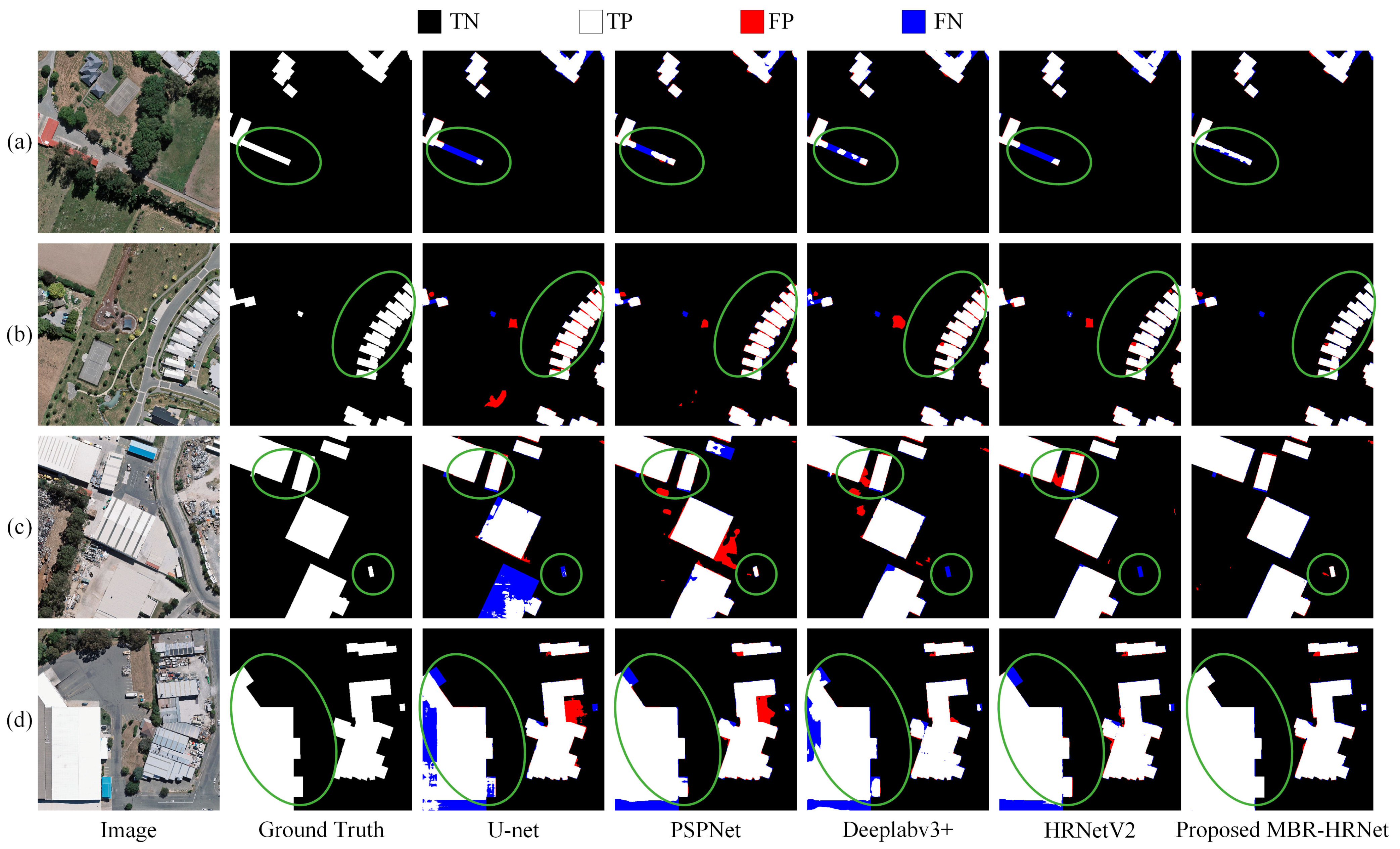

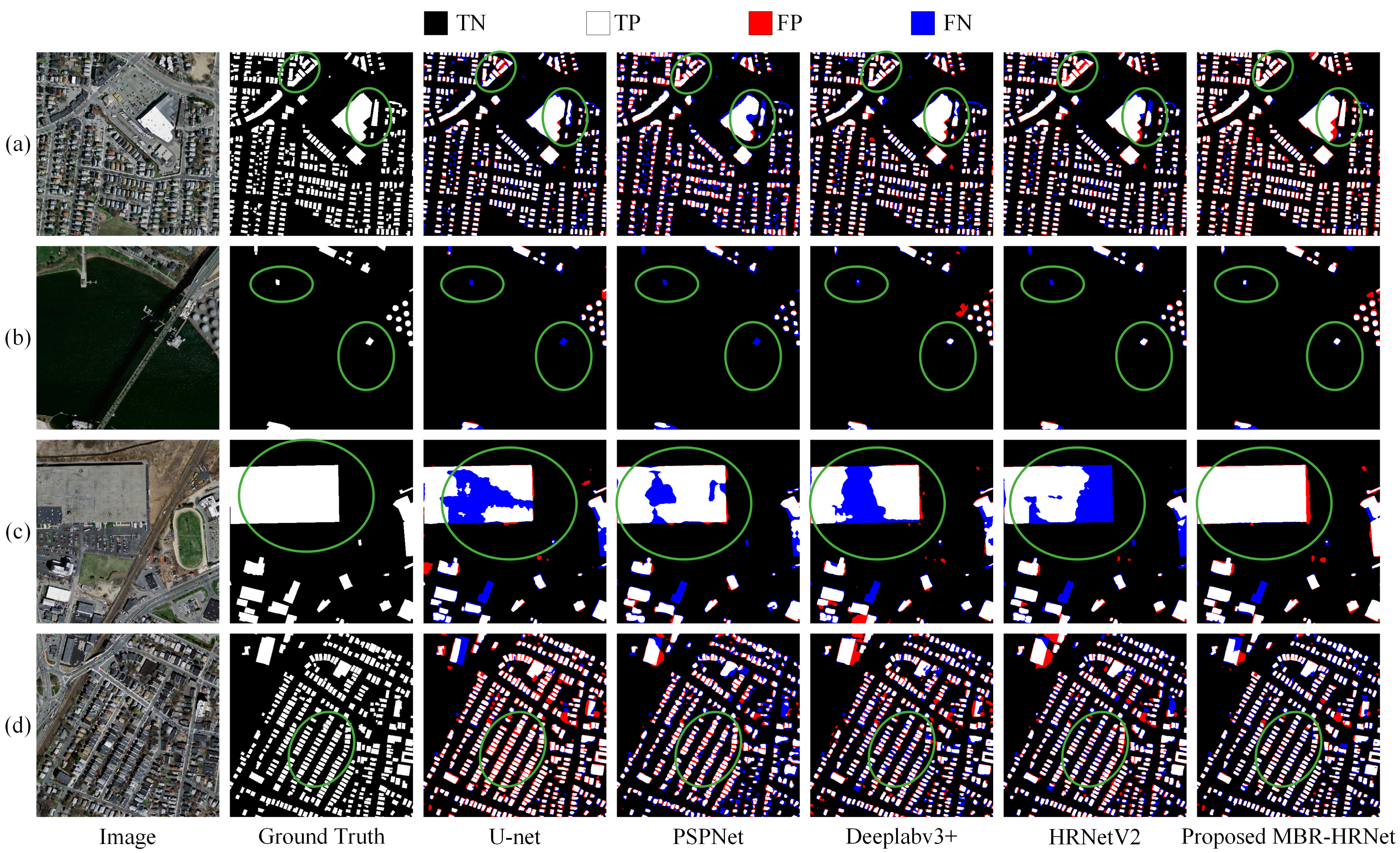

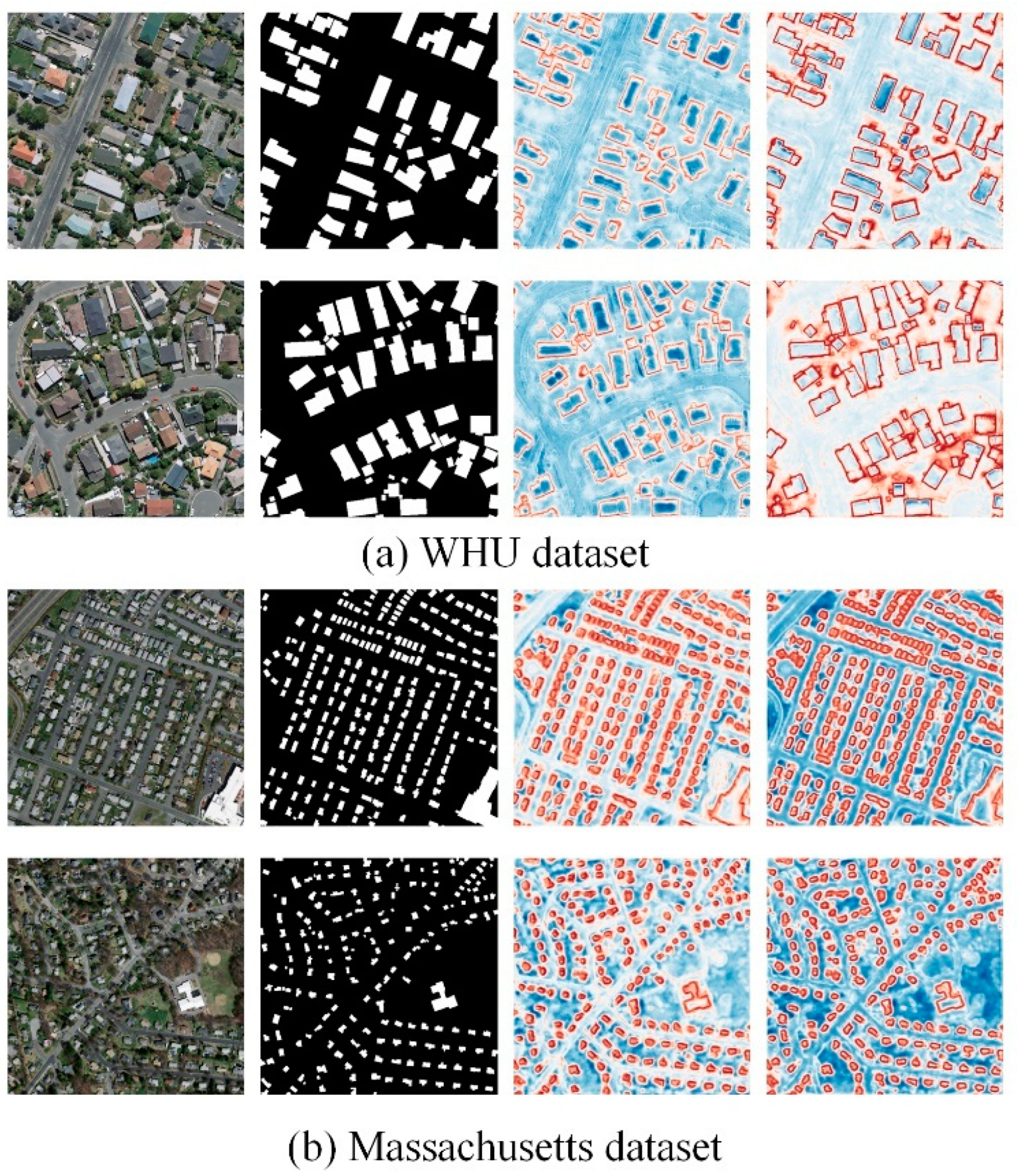

5. Conclusions

In this study, we introduced MBR-HRNet to tackle several difficulties encountered in extracting buildings from remote sensing images, including the potential oversight of small-scale buildings, indistinct boundaries, and non-uniform building shapes. By employing MBR-HRNet, we aimed to overcome these obstacles and improve the accuracy and robustness of building extraction in remote sensing applications. Within the MBR-HRNet architecture, the MCFM plays a crucial role in capturing and amplifying diverse global multi-scale features. It effectively harnesses intricate representations of spatial dimensions found within both high-level and low-level features. This capability proves highly valuable in extracting building boundaries that exhibit discontinuities, as well as in accurately representing the appearance of irregular buildings. By incorporating the MCFM, MBR-HRNet enhances its capacity to capture nuanced details and improves the overall performance of building extraction tasks. The BRM progressively refines the building boundary information within the features, resulting in more accurate boundary features, so that the extracted buildings have more accurate contours and the extraction results of adjacent buildings do not stick together. The quantitative experimental results highlight the superior performance of the MBR-HRNet model compared to other methods. This advantage was particularly evident on the WHU and Massachusetts building datasets, where it attained the highest IoU results of 91.31% and 70.97%, respectively. The qualitative experimental results show that MBR-HRNet has a clear advantage in extracting small, dense buildings and accurately constructing building boundaries. In future research, there are two main directions for further investigation. First, we will integrate prior knowledge into the network to enable a faster and more accurate extraction of buildings. Second, leveraging the precise boundary extraction advantages of MBR-HRNet for buildings, we aim to design a module that directly converts raster prediction maps into regular vector boundaries and integrate it into an end-to-end deep learning architecture. This approach would enable the direct acquisition of building boundaries and provide more comprehensive applications.