Abstract

Conventional measurement methods for above-ground biomass (AGB) are time-consuming, inaccurate, and labor-intensive. Unmanned aerial systems (UASs) have emerged as a promising solution, but a standardized procedure for UAS-based AGB estimation is lacking. This study reviews recent findings (2018–2022) on UAS applications for AGB estimation and develops a vegetation type-specific standard protocol. Analysis of 211 papers reveals the prevalence of rotary-wing UASs, especially quadcopters, in agricultural fields. Sensor selection varies by vegetation type, with LIDAR and RGB sensors used in forests, and RGB, multispectral, and hyperspectral sensors used in agricultural and grass fields. Flight altitudes and speeds depend on vegetation characteristics and sensor types and vary among crop groups. The ground control point (GCP) requirements for accurate AGB estimation differ with vegetation type and topographic complexity. Collecting data around solar noon enhances accuracy by improving image quality, maximizing available solar energy, and reducing atmospheric effects. Vegetation indices significantly affect AGB estimation in vertically growing crops, while their influence is comparatively smaller in forests, grasses, and horizontally growing crops. The most informative plant height metrics differ across vegetation groups: maximum height in forests and vertically growing crops, and central tendency metrics in grasses and horizontally growing crops. Linear regression and machine learning models perform similarly in forests, machine learning outperforms linear regression in grasses, and both yield comparable results for horizontally and vertically growing crops. Challenges include sensor limitations, environmental conditions, reflectance mixture, canopy complexity, water, cloud cover, dew, phenology, image artifacts, legal restrictions, computing power, battery capacity, optical saturation, and GPS errors. 
Addressing these challenges requires careful sensor selection, timing, and image processing, compliance with regulations, and overcoming technical limitations. The insights and guidelines provided here can enhance the precision and efficiency of UAS-based AGB estimation. Understanding the requirements of each vegetation type supports informed decisions on platform selection, sensor choice, flight parameters, and modeling approaches across different ecosystems. This study bridges the gap by providing a standardized protocol, facilitating widespread adoption of UAS technology for AGB estimation.

1. Introduction

Above-ground biomass (AGB) is defined as the dry mass of live or dead plant matter, expressed as mass per unit area (Mg ha−1) [1]. AGB represents the energy and matter accumulated through photosynthesis by green plants [2,3,4]. Accurate AGB measurements are important for managing agricultural and non-agricultural systems and for climate change mitigation [5]. AGB estimation also supports studies of desertification, biodiversity change, water availability, and ecosystem change [6,7]. Investigating AGB in forests is vital for assessing carbon storage and biodiversity and for guiding sustainable forest management [8]. In agriculture, AGB is a key biophysical metric used to monitor crop growth status and health [9], predict crop yield [10,11], manage fertilizer use [12,13], manage weeds and pests, monitor canopy closure, and estimate seed output [14]. AGB measurement is also important for managing grasslands and improving fodder production, as grasslands store 30% of the world’s terrestrial biomass [15,16,17]. Accurate AGB measurement is therefore necessary for managing forests, agricultural fields, and grasslands.

Conventionally, estimating above-ground biomass involves physically cutting down and weighing plants, or harvesting and weighing plant parts. These methods are destructive and can be labor-intensive and time-consuming [18]. Additionally, conventional methods provide only a snapshot of the biomass of a particular area at one point in time and do not allow ongoing monitoring or the study of long-term changes in biomass [19]. Thus, there is a need for non-destructive, time-efficient, and repeatable methods (e.g., remote sensing and proximal sensing techniques) that enable quick and accurate estimation of AGB.

Remote sensing methods from orbital and sub-orbital platforms have gained prominence as powerful tools in estimating AGB [20] by observing physical, chemical, or biological properties of the crops and environment, such as temperature, humidity, vegetation type, or land use [10]. Satellite imagery offers several advantages, including a wide coverage area, a broad range of wavelengths, and a long lifespan. However, public-domain satellites also have some limitations, such as a limited resolution, a fixed viewing angle, and a long revisit time [18].

Recently, UASs have emerged as a promising alternative to orbital systems for collecting aerial images, addressing the limitations of satellite imagery [21]. Compared with satellite imagery, UAS imagery offers high spatial resolution, flexible viewing angles, and short revisit times [22]. Because of these features, several researchers have used UASs to estimate AGB [23,24,25,26,27].

The accuracy of UAS-based biomass estimation depends strongly on which crop properties (traits) are observed [28,29]. For example, plant height is more relevant for estimating corn AGB [30], while for potato, leaf reflectance in the red-edge region shows the highest correlation with AGB [31]. In non-agricultural vegetation, plant height is essential for estimating AGB in forests, whereas plant density is much more important in grasslands [32]. A unique advantage of UASs in AGB estimation is that the aircraft can carry different sensors or combinations of sensors (e.g., red-green-blue (RGB), multispectral (MS), and hyperspectral (HS) cameras) to collect data across different wavelengths of the electromagnetic spectrum [33,34]. This capability makes it possible to monitor several traits of interest at once, which can improve both the efficiency and the accuracy of AGB estimation.

Although UASs are very promising tools for estimating AGB, several factors make the process challenging, including pre-flight considerations, flight parameters, and model selection. Pre-flight considerations are the conditions and variables that can affect the quality and reliability of UAS data before collection, such as the selection and configuration of sensors and the type of aerial platform. Flight parameters are the conditions and variables that can affect data quality and reliability during flight, such as the stability and accuracy of the aircraft and sensors, atmospheric conditions, and interference and noise [22]. Modeling factors are the methods and algorithms used to process and analyze UAS data, such as data pre-processing and calibration, selection and tuning of models and parameters, and validation and evaluation of the results. Being aware of these factors and their effects allows measures to be put in place to mitigate their impact, so that AGB estimates are based on the highest-quality UAS data possible.

The workflow of above-ground biomass estimation usually involves the following steps:

- Collection of UAS imagery concurrently with ground-based AGB data collection, using either allometric or direct (destructive) sampling,

- Data processing, including image pre-processing, creation of photogrammetric 3D point clouds and/or orthomosaics and georeferencing, creation of canopy height models using digital terrain and digital surface models, delineation of individual areas or plants of interest in models, and derivation of structural, textural, and/or MS, HS, or RGB spectral variables,

- Creation of predictive AGB models using UAS-derived variables (predictors) and ground-based AGB as the response variable, followed by variable selection, assessment of the accuracy of the preferred model, and, in some studies, its validation,

- In some studies, application of the chosen model to estimate site-wide biomass [5].
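The processing and modeling steps above (steps 2 to 4) can be illustrated with a minimal, standard-library-only Python sketch. It assumes co-registered digital surface and terrain models supplied as small nested lists, uses hypothetical plot values, and fits an ordinary least squares line from a single structural predictor (mean canopy height) to field-measured AGB. Real studies typically rely on raster libraries, many predictors, and other model types; all function names and numbers here are illustrative and are not taken from the reviewed studies.

```python
from statistics import mean


def canopy_height_model(dsm, dtm):
    """Canopy height per cell: DSM minus DTM, with negative values clipped to 0."""
    return [[max(s - t, 0.0) for s, t in zip(s_row, t_row)]
            for s_row, t_row in zip(dsm, dtm)]


def plot_height_metrics(chm):
    """Structural predictors for one plot: maximum and mean canopy height."""
    heights = [h for row in chm for h in row]
    return {"max": max(heights), "mean": mean(heights)}


def fit_simple_linear(x, y):
    """Ordinary least squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    x_bar, y_bar = mean(x), mean(y)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = s_xy / s_xx
    return y_bar - b1 * x_bar, b1


# Hypothetical plots: (DSM, DTM) elevations in m for a 2 x 2 cell grid,
# paired with field-measured (destructive) AGB in Mg/ha.
flat_dtm = [[100.0, 100.0], [100.0, 100.0]]
plots = [
    ([[100.8, 101.0], [100.9, 100.7]], flat_dtm, 4.1),
    ([[101.4, 101.6], [101.5, 101.3]], flat_dtm, 6.8),
    ([[102.1, 102.0], [102.3, 101.9]], flat_dtm, 9.9),
]

# Step 2: derive a structural predictor for each plot from its CHM.
mean_heights = [plot_height_metrics(canopy_height_model(dsm, dtm))["mean"]
                for dsm, dtm, _ in plots]
field_agb = [agb for _, _, agb in plots]

# Step 3: fit the predictive model (UAS predictor -> ground-based AGB).
b0, b1 = fit_simple_linear(mean_heights, field_agb)

# Step 4 (optional): apply the model to an unsampled plot.
new_chm = canopy_height_model([[101.8, 101.7], [101.9, 101.6]], flat_dtm)
predicted = b0 + b1 * plot_height_metrics(new_chm)["mean"]
print(f"AGB = {b0:.2f} + {b1:.2f} * mean height -> {predicted:.1f} Mg/ha")
```

Clipping negative canopy heights to zero is one common way to handle small DSM/DTM misregistrations; whether it is appropriate depends on the terrain and the processing chain.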

The lack of standardized methodology for collecting, pre-processing, and analyzing UAS data can increase the bias and uncertainty of the AGB estimates, and can lead to inconsistent and unreliable results [2]. While it is crucial to draw from the knowledge and experiences documented in the literature, our proposed standards will not solely rely on what has been most used in previous studies. We acknowledge that the field of UAS-based biomass estimation is rapidly evolving, and new methodologies, techniques, and technologies are constantly being developed. Therefore, we aim to take a comprehensive approach that not only considers the prevalent practices but also evaluates emerging methodologies that show potential for improving accuracy, precision, and reliability. To achieve this, we will conduct a systematic analysis of the existing literature, identifying the most employed methods and techniques for data collection, pre-processing, and analysis. We will focus on the latest developments within a specific timeframe (2018–2022) to ensure that we capture the most up-to-date advancements in the field.

In most studies in this area, researchers have used UAS data to identify traits that are strongly related to biomass, such as vegetation indices and structural metrics [3,14,15,31,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52]. However, to the best of our knowledge, the pre-flight, flight, and modeling factors that most affect the accuracy of AGB estimation in different vegetation types, and how they affect it, are rarely discussed.

In this review paper, we aim to address shortcomings in recently published studies on AGB estimation. This document is organized as follows: Section 2 explains the search method used to select the papers reviewed in this study. Section 3 addresses the question “How do pre-flight parameters affect the accuracy of AGB estimation using UASs?” Section 4 analyzes flight parameters and their effects on AGB estimation accuracy. Section 5 discusses the effect of modeling parameters on AGB estimation. Finally, Section 6 discusses the challenges of AGB estimation using UASs.

This paper addresses the limitations of conventional AGB estimation methods by exploring the potential of UASs. It offers a vegetation type-specific standard protocol based on a comprehensive review of recent research and statistical analysis of 211 papers. The paper provides evidence-based recommendations on sensor selection, flight parameters (altitude, speed, image overlap), ground control points (GCPs), number of vegetation indices, and modeling approaches. The proposed protocol’s complexity is influenced by factors such as sensor capabilities, data processing algorithms, computational resources, and implementation expertise. The evaluation metric used is the coefficient of determination (R2). This survey paper provides valuable insights and guidelines for enhancing precision and efficiency in UAS-based AGB estimation across different ecosystems. However, it is important to consider the generalizability of the protocol, potential challenges specific to certain crop types or geographical areas, and the need for further validation. Future research can focus on refining and expanding the protocol to address these limitations and improve its applicability in diverse contexts.

2. Search Method

The goal of this study was to assess the importance of pre-flight, flight, and modeling parameters, variables, and methods for the accuracy of UAS-based AGB estimation in four vegetation types (forests, grasses, vertically growing crops, and horizontally growing crops), based on papers published from 2018 to 2022. The 2018–2022 time window balances an up-to-date review of UAS advances in AGB estimation with a manageable scope, enabling a comprehensive analysis, a synthesis of key insights, and the development of a crop-specific protocol. The parameters and models were assessed using a combination of scatter plots and statistical tests. Scatter plots were used to visualize the relationship between each parameter and the final coefficient of determination (R2), a widely used metric for evaluating the accuracy of biomass estimation. Plotting these relationships made it possible to identify potential trends and correlations and to gain insight into the influence of each parameter on the accuracy of above-ground biomass (AGB) estimation using unmanned aerial systems (UAS). In addition, t-tests were used to examine whether model accuracy differed significantly between the classes of each parameter, which indicated whether a given parameter had a significant impact on the accuracy of biomass estimation. R2 was chosen as the response variable because it is widely used as a measure of model performance in the biomass estimation literature. 
The consistent reporting of R2 across studies enabled comparisons in the analysis, although other metrics such as root mean square error (RMSE) could offer further insights.
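For reference, the two accuracy metrics named above can be computed as in the minimal sketch below. It uses the common definition R2 = 1 - SS_res / SS_tot; some studies instead report the squared Pearson correlation between observed and predicted values, which coincides with this definition only for an ordinary least squares fit with an intercept evaluated on its own training data. The example values are hypothetical.

```python
from math import sqrt
from statistics import mean


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = mean(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - y_bar) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def rmse(observed, predicted):
    """Root mean square error, in the units of the response (e.g., Mg/ha)."""
    return sqrt(mean([(o - p) ** 2 for o, p in zip(observed, predicted)]))


# Hypothetical field-measured vs. model-predicted AGB (Mg/ha).
observed = [2.0, 4.0, 6.0]
predicted = [2.5, 3.5, 6.0]
print(f"R2 = {r_squared(observed, predicted):.4f}")  # R2 = 0.9375
print(f"RMSE = {rmse(observed, predicted):.3f} Mg/ha")
```

Because RMSE is expressed in biomass units while R2 is unitless and depends on the spread of the observed AGB, the two metrics can rank models differently across sites.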

In the initial search, various keyword combinations were used (Table 1), which returned 220 papers. Among them were 3 conference papers [44,53,54], 9 review papers [2,18,55,56,57,58,59,60,61,62,63,64], and 208 peer-reviewed papers from the Web of Science and Google Scholar databases. We then reviewed the abstract and content of all papers and eliminated those that did not relate to the application of UASs in AGB estimation or did not report the coefficient of determination (R2) as a comparison metric. The inclusion and exclusion criteria covered the focus of the study, the methods used, the relevance to our review, and the availability of the full text. After this first screening, 211 publications were reviewed in depth. To highlight how our review differs from previous review papers, the key findings and focus of those studies are presented in Table 2.

Table 1.

Keyword combinations used during the literature search for this review.

Table 2.

Summary of focus of previous literature review papers on AGB estimation using UASs.

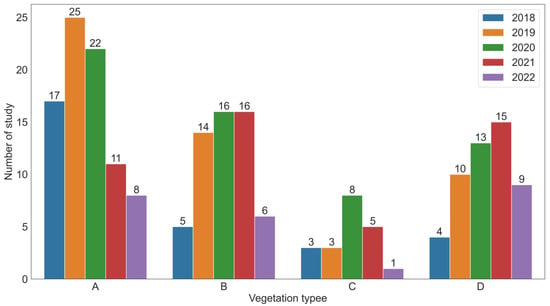

Figure 1 shows the focus areas of this review and the number of publications in each area from 2018 to 2022. Of these publications, 39% were related to AGB estimation in forests, 24% to grasses, 27% to vertically growing crops (maize, wheat, oilseed rape, barley, sorghum, sugarcane, and oats), and the remaining 10% to horizontally growing crops (sugarbeet, potato, tomato, legume, onion, alfalfa, and vegetables).

Figure 1.

The number of published papers included in this literature review by vegetation type and by year. (A) forest, (B) vertically growing crops, (C) horizontally growing crops, and (D) grasses.

In recent years, there has been growing interest in using unmanned aerial systems (UAS) and advanced sensors for accurate and efficient AGB estimation, which has produced a substantial body of literature covering different application areas and vegetation types. As Figure 1 shows, the publications from 2018 to 2022 reflect diverse research efforts in this field. Selecting an appropriate UAS platform and sensor are the two main pre-flight factors that can affect AGB estimation.

3. Importance of Pre-Flight Factors in AGB Estimation

The UAS platform should be able to carry the sensor and have the necessary flight characteristics, such as flight duration, range, and altitude [66,67]. The sensor attached to the UAS should provide detailed information on biomass and have appropriate spectral accuracy and resolution [68].

3.1. UAS Platform Type

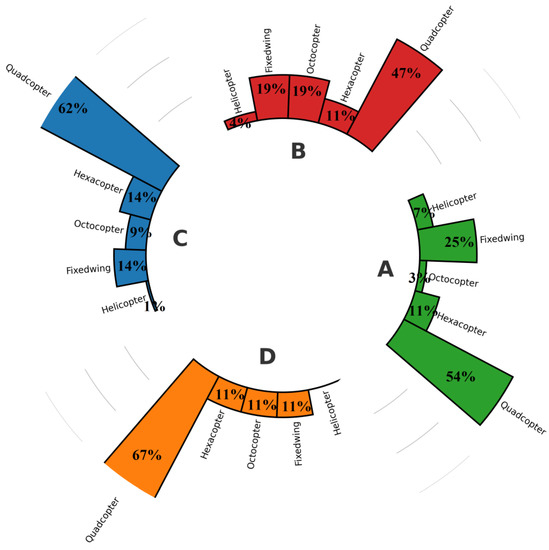

Rotary-wing and fixed-wing UASs are the most frequently used types of UASs in agricultural fields [22]. Rotary-wing UASs provide higher-resolution images because they can fly at lower altitudes and lower speeds [69]. Compared with fixed-wing UASs, however, they cover smaller areas and take longer to fly over the same area [22]. Fixed-wing UASs need runways or open areas for takeoff and landing, although some are hand-launched or launched from a ramp. Because they fly at higher cruise altitudes and speeds, they can cover larger areas while still achieving centimeter-level ground sample distance [22]. The majority of studies considered in this review (more than 65%) reported using rotary-wing platforms, and quadcopters were the most frequently employed platform (45–67%) (Figure 2). The eBee (senseFly, Switzerland) was the most commonly used fixed-wing UAS for AGB estimation [34,48,70,71,72]. Not surprisingly, most studies reported using DJI drones (DJI Inc., Shenzhen, China) (Phantom 3, Phantom 4, Matrice 100, Matrice 200, and Matrice 600) as the platform of choice to carry RGB [11,17,32,73], multispectral (MS) [45,72,74,75,76,77], hyperspectral (HS) [78,79,80], and LIDAR [81,82,83] sensors. While the existing evidence [2] suggests that platform choice may not strongly affect AGB estimation accuracy, further research is needed to validate and extend these findings.

Figure 2.

Vegetation-based distribution of UAS platforms used to estimate above-ground biomass in the studies included in this literature review: (A) forest, (B) vertically growing crops, (C) grasses, and (D) horizontally growing crops.

3.2. Sensors

UASs offer an advantage over satellites and manned aircraft in terms of the range of available sensors and the ability to perform spectral imaging, due to their close proximity to the ground [84]. The most commonly used sensors for AGB estimation and their applications are shown in Table 3. Factors that make a sensor suitable for AGB measurement include accuracy, spectral and spatial resolution, cost, and the complexity of data collection and processing. RGB sensors may be sensitive to environmental factors [85], while multispectral sensors may have lower spatial resolution [86]. Hyperspectral sensors can provide detailed analysis of plant species at the tissue level [87], while LIDAR sensors can be used to measure the height and density of vegetation [81]. RGB and multispectral sensors are easier to use, and their data analysis is less complex than that of LIDAR and hyperspectral sensors [59]. LIDAR and hyperspectral sensors offer greater accuracy in capturing structural and spectral information, which is crucial for estimating AGB [88]. However, cost, dataset size, and the complexity of image processing are among the drawbacks of LIDAR and hyperspectral sensors [22].

Table 3.

Most commonly used sensors for AGB estimation and their applications.

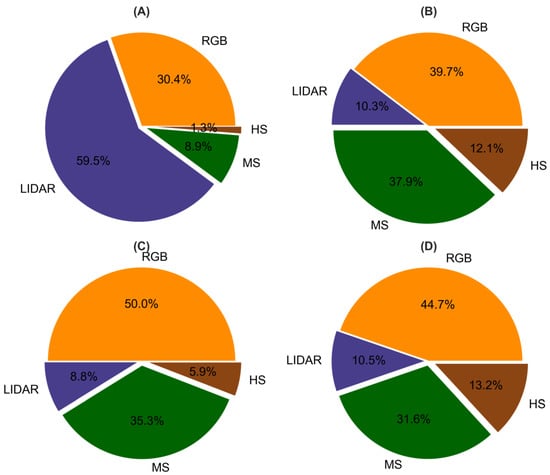

Analyzing the sensors used in previously published papers, we observed that different types of sensors were commonly employed for AGB estimation in different ecosystems (Figure 3). LIDAR and RGB sensors were frequently used for AGB estimation in forests, where LIDAR sensors offer distinct advantages: they accurately measure the vertical growth of trees [96], reduce the saturation effect in AGB estimation [97,98], and are less affected by solar zenith angle and cloudiness [82,99]. In agricultural crops and grass fields, by contrast, RGB, multispectral (MS), and hyperspectral (HS) sensors were more commonly used. This choice of sensors in non-forest environments could be attributed to factors such as cost [100], weight [42], and the provision of more spectral information [101]. Sensor selection is influenced by the specific requirements and characteristics of each ecosystem, as well as by the growth stage of the vegetation. For example, when canopy density peaks, LIDAR-derived values can cause an overestimation of biomass [83]. The following sections further explore the considerations for sensor selection and their impact on AGB estimation accuracy.

Figure 3.

Frequency of using different sensors (red-green-blue (RGB), LIDAR, multispectral (MS), and hyperspectral (HS)) to estimate AGB in different vegetation types ((A) forest, (B) vertically growing crops, (C) horizontally growing crops, and (D) grasses).

4. Importance of Flight Parameters in AGB Estimation

Careful selection of UAS flight parameters is crucial for ensuring the effectiveness and accuracy of the collected data. The importance of flight parameter settings for accurate biomass estimation was evaluated by examining how flight altitude, flight speed, and image overlap affect the accuracy of biomass estimation in four vegetation groups.

4.1. Flight Altitude

Flying at a lower altitude reduces the area covered per flight, which may require more flights to cover a study site and can lead to greater variability in the environmental conditions that affect radiometric adjustments [35]. Conversely, flying at a higher altitude reduces flight time and allows larger areas to be covered, which helps keep environmental conditions consistent [55]. The ground sampling distance (GSD) is defined as the physical distance on the ground represented by each pixel in an image [102]. According to Equation (1), GSD is directly proportional to flight altitude: a lower altitude results in a smaller GSD, meaning a higher ground resolution, and a higher altitude results in a larger GSD, meaning a lower ground resolution. The exact relationship between flight altitude and GSD depends on the camera’s field of view, lens properties, and sensor size [103].

$$\mathrm{GSD} = \frac{a \, h}{f} \qquad (1)$$

where h: flight altitude, f: principal distance of the lens, GSD: ground resolution, and a: pixel size. For a deeper understanding of flight altitude and its implications, the reader is referred to [55].
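Equation (1) can be applied directly when planning a flight. The sketch below computes GSD from altitude and inverts the equation to find the altitude needed for a target GSD. The camera values (8.8 mm principal distance, 2.4 µm pixel size) are hypothetical examples, and the calculation assumes a nadir view over flat terrain.

```python
def ground_sampling_distance_cm(altitude_m, focal_length_mm, pixel_size_um):
    """GSD = a * h / f, returned in centimeters per pixel (nadir, flat terrain)."""
    a = pixel_size_um * 1e-6      # pixel size in m
    f = focal_length_mm * 1e-3    # principal distance in m
    return a * altitude_m / f * 100.0


def altitude_for_gsd_m(target_gsd_cm, focal_length_mm, pixel_size_um):
    """Invert Equation (1): h = GSD * f / a, returned in m above ground level."""
    a = pixel_size_um * 1e-6
    f = focal_length_mm * 1e-3
    return (target_gsd_cm / 100.0) * f / a


# Hypothetical camera: 8.8 mm lens, 2.4 um pixels.
for h in (30, 50, 100):
    print(f"{h:>3} m AGL -> GSD {ground_sampling_distance_cm(h, 8.8, 2.4):.2f} cm/px")
print(f"1 cm/px requires flying at about {altitude_for_gsd_m(1.0, 8.8, 2.4):.0f} m AGL")
```

Because GSD is linear in h, doubling the altitude doubles the GSD (halves the ground resolution) for the same camera.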

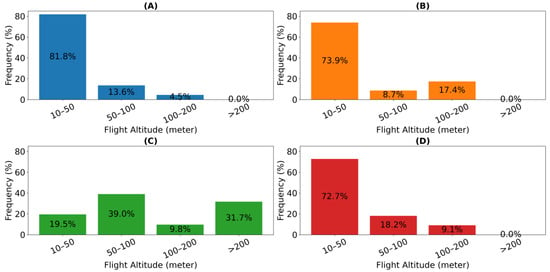

Regarding flight altitude classes for the different vegetation types, most flights over grasses and agricultural crops were conducted at 10 to 50 m above ground level (AGL), whereas for forests the highest frequency of flights was observed in the 50 m and 100 m AGL classes (Figure 4). This pattern can be attributed to the characteristics of the respective vegetation groups. Grasses and agricultural crops, with their relatively low height and even surface, benefit from detailed data captured at close range, which enables more accurate estimation of above-ground biomass. Forests, on the other hand, are characterized by an intricate vertical structure and canopy variation [104] and therefore call for flights at somewhat higher altitudes to capture the biomass distribution comprehensively. Flying higher provides a more encompassing view of the complex three-dimensional structure of forest ecosystems and their biomass distribution [105]. In general, the lack of standardization, limited justification for altitude selection, and the need to consider spatial resolution and minimum mapping units contribute to the variation in flight altitudes across vegetation groups [106]. Further research and empirical studies are needed to establish best practices and guidelines for determining optimal flight altitudes and resolutions in UAS-based above-ground biomass estimation.

Figure 4.

Frequency of different flight altitudes (above ground level or AGL) used for each vegetation type, presented as a percentage ((A) horizontally growing crops, (B) vertically growing crops, (C) forests, and (D) grasses).

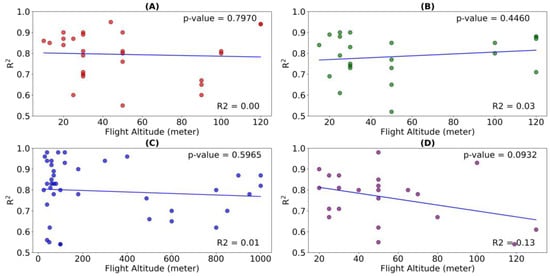

To evaluate the impact of flight altitude on the accuracy of AGB estimation, a scatter plot was generated of the coefficient of determination (R2) of the biomass models against the reported flight altitude in four vegetation groups (Figure 5). A statistical analysis was then performed to determine the significance of this relationship. The p-value measures statistical significance and helps determine whether an observed relationship is likely due to chance or reflects a meaningful correlation. The p-values for the relationship between flight altitude and the R2 of AGB estimation models were 0.7970 (vertically growing crops), 0.4460 (horizontally growing crops), 0.5965 (forests), and 0.0932 (grasses). Since all p-values are above the commonly used significance threshold of 0.05, there is no statistically significant relationship between flight altitude and AGB estimation accuracy in any of the vegetation types studied. Several factors might contribute to this finding. First, the nature of the crops themselves could influence the relationship: the structural characteristics and spatial distribution of the crops may minimize the impact of flight altitude on the estimation process [102]. Second, other variables, such as data processing techniques and environmental conditions, might play a more dominant role in determining AGB estimation accuracy, overshadowing the influence of flight altitude. These findings indicate that, within the evaluated range, variation in flight altitude did not significantly affect the accuracy of AGB estimation. Users can therefore fly at higher altitudes within this range without a detectable loss of AGB estimation accuracy, which can save substantial time during both field data collection and data processing. 
However, further research is warranted to explore potential interactions between flight altitude and other influential factors and to understand their combined effects on AGB estimation accuracy.
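The text does not specify which test produced these p-values. A common choice for a continuous predictor such as altitude is a test of the Pearson correlation (equivalently, of the slope in a simple linear regression of R2 on altitude), for example via `scipy.stats.pearsonr`. As a dependency-free alternative, the sketch below substitutes a permutation test on the same correlation. The altitude and R2 values are hypothetical and do not reproduce the p-values reported above.

```python
import random
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    x_bar, y_bar = mean(x), mean(y)
    s_xy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    s_xx = sum((a - x_bar) ** 2 for a in x)
    s_yy = sum((b - y_bar) ** 2 for b in y)
    return s_xy / (s_xx * s_yy) ** 0.5


def permutation_p_value(x, y, n_permutations=10_000, seed=0):
    """Two-sided permutation p-value for H0: no association between x and y.

    Shuffling y breaks any pairing with x; the p-value is the share of
    shuffles whose |r| is at least as large as the observed |r|.
    """
    rng = random.Random(seed)
    observed = abs(pearson_r(x, y))
    shuffled = list(y)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        if abs(pearson_r(x, shuffled)) >= observed - 1e-12:
            extreme += 1
    # The +1 terms count the observed arrangement itself.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical (flight altitude in m AGL, reported R2) pairs from several studies.
altitude = [20, 30, 40, 50, 60, 80, 100, 120]
r2 = [0.78, 0.85, 0.72, 0.81, 0.76, 0.83, 0.74, 0.79]
p = permutation_p_value(altitude, r2)
print(f"r = {pearson_r(altitude, r2):.3f}, permutation p = {p:.3f}")
```

A p-value above 0.05 from either approach means only that no association was detected with the available studies; it does not show that altitude has no effect.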

Figure 5.

The relationship between flight altitude (m above ground level) and the coefficient of determination (R2) of AGB estimation models in four vegetation groups: (A) horizontally growing crops, (B) vertically growing crops, (C) forests, and (D) grasses.

Grasses appear more sensitive to flight altitude in AGB estimation than the other vegetation types: the p-value of 0.0932, though not statistically significant, suggests a potential trend. Contributing factors may include the lower and more uniform canopy structure of grasses, which can make AGB estimates vary more noticeably with changes in altitude. In addition, the distinct growth patterns and rates of grasses may make them more responsive to altitude changes [107]. Further research is required to validate these observations.

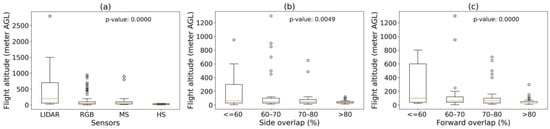

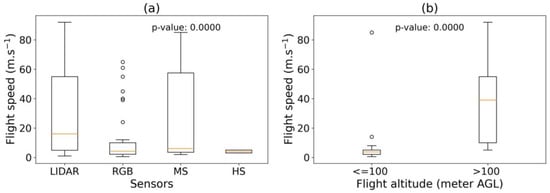

To assess how flight altitude varied with sensor type (Figure 6a) and with side (Figure 6b) and forward (Figure 6c) overlap, flight altitudes were compared across the classes of these parameters, and p-values were calculated to determine whether the differences were significant. The results indicated significant differences in flight altitude by sensor type and by overlap (p < 0.05). For above-ground biomass estimation, LIDAR had a higher average flight altitude than the other sensors. Among RGB, multispectral, and hyperspectral sensors, multispectral sensors were flown at higher altitudes than RGB and hyperspectral sensors. In addition, side and forward overlaps greater than 70% were more common at lower flight altitudes.

Figure 6.

A comparison of flight altitude (m above ground level) by sensor type (a) and by side (b) and forward (c) overlap.

LIDAR sensors can operate at higher altitudes than other sensors because of their underlying technology. LIDAR systems use laser pulses to measure distances and generate precise 3D point clouds [95]. The laser beams emitted by LIDAR sensors have a narrow footprint and high energy, allowing them to penetrate vegetation and capture detailed information about the terrain and objects at greater distances [108]. In addition, the robustness of LIDAR against certain atmospheric conditions and signal interference enables reliable performance at elevated altitudes, contributing to its suitability for high-altitude flights [109]. RGB sensors, in contrast, can be susceptible to interference from atmospheric conditions or surrounding objects [110], so lower altitudes may be needed to minimize such interference and ensure data accuracy. LIDAR and multispectral sensors might be less affected by this interference, enabling them to operate at higher altitudes. As for overlap, one reason that high overlap is more common at lower altitudes may be that perspective distortion is more pronounced when the sensor is closer to the ground [111]. Increasing the overlap compensates for this distortion by capturing multiple images of the same area from slightly different angles, resulting in a more accurate and less distorted representation.
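The link between overlap, altitude, and the number of images can be made concrete with a short planning calculation. The sketch assumes a nadir camera whose shorter image side is aligned with the flight direction, flat terrain, and a hypothetical 5472 × 3648 pixel sensor; it computes the distance between exposures and between flight lines for given forward and side overlaps. Because the image footprint shrinks with altitude (smaller GSD), keeping the same overlap at a lower altitude requires more closely spaced exposures and flight lines.

```python
def footprint_m(gsd_cm, n_pixels):
    """Ground length covered by n_pixels at a given GSD (cm per pixel)."""
    return gsd_cm / 100.0 * n_pixels


def capture_spacing_m(gsd_cm, width_px, height_px, forward_overlap, side_overlap):
    """Return (distance between exposures, distance between flight lines) in m.

    Assumes the image height axis points along the flight direction.
    Overlaps are fractions, e.g. 0.8 for 80%.
    """
    along_track = footprint_m(gsd_cm, height_px) * (1.0 - forward_overlap)
    across_track = footprint_m(gsd_cm, width_px) * (1.0 - side_overlap)
    return along_track, across_track


# Hypothetical sensor (5472 x 3648 px) flown for 80% forward / 70% side overlap.
for gsd in (0.8, 1.5, 3.0):
    along, across = capture_spacing_m(gsd, 5472, 3648, 0.80, 0.70)
    print(f"GSD {gsd} cm: exposure every {along:.1f} m, lines every {across:.1f} m")
```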

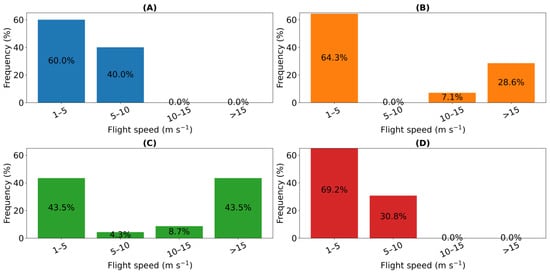

4.2. Flight Speed

UASs can fly at a range of speeds depending on the specific model and design. Flying at a slower speed can increase flight duration [112], whereas a high flight speed can cause a loss of detail in the images, making it more difficult to accurately identify and measure individual plants and other features on the ground [113]. Flight speed varied across vegetation types (Figure 7). Most flights over horizontally growing crops, vertically growing crops, and grasses were conducted at speeds between 1 and 5 m per second (m s−1), with frequencies of 60%, 64.3%, and 69.2%, respectively. In contrast, forests showed a more balanced distribution of flight speeds, with approximately equal frequencies in the 1–5 m s−1 and greater than 15 m s−1 categories. This suggests that flight speed preference is influenced by the characteristics of the vegetation type.
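One way to see why high speed degrades image detail is to estimate forward-motion blur: the ground distance travelled during the exposure, divided by the GSD, gives the blur in pixels. The sketch below is a simplified model that ignores platform vibration and gimbal motion; the 1/1000 s exposure, 1 cm GSD, and 0.5-pixel tolerance are illustrative assumptions, not values from the reviewed studies.

```python
def motion_blur_px(speed_m_s, exposure_s, gsd_cm):
    """Forward-motion blur in pixels: ground distance during exposure / GSD."""
    return speed_m_s * exposure_s / (gsd_cm / 100.0)


def max_speed_m_s(max_blur_px, exposure_s, gsd_cm):
    """Highest speed that keeps forward-motion blur at or below max_blur_px."""
    return max_blur_px * (gsd_cm / 100.0) / exposure_s


exposure = 1 / 1000   # assumed shutter time, s
gsd = 1.0             # assumed GSD, cm per pixel
for speed in (3, 5, 15):
    print(f"{speed:>2} m/s -> blur {motion_blur_px(speed, exposure, gsd):.2f} px")
print(f"Speed limit for <= 0.5 px blur: {max_speed_m_s(0.5, exposure, gsd):.1f} m/s")
```

Under this model, halving the GSD (flying lower) halves the speed that stays within the same blur tolerance, which is one reason low-altitude, high-resolution flights tend to be slow.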

Figure 7.

Frequency distribution of flight speeds (m s−1) used for each vegetation type, presented as a percentage ((A) horizontally growing crops, (B) vertically growing crops, (C) forests, and (D) grasses).

Several factors may explain the variation in flight speeds across vegetation groups for above-ground biomass (AGB) estimation. Vegetation structure and density influence flight speed preferences: horizontally growing crops, with their uniform and dense canopies, were mostly flown at lower speeds (1–5 m s−1), while higher speeds (>15 m s−1) can help capture the vertical structure of taller vegetation and cover larger areas efficiently. Sensing resolution and image quality also play a role, as slower speeds enable higher-resolution imagery for accurate identification and measurement of individual plants [114], particularly in grasses and vertically growing crops. Environmental factors, including wind speed and turbulence, influence the choice of flight speed needed to maintain stability and image clarity [115], and higher speeds (>15 m s−1) may be preferred in forests to mitigate the impact of wind. LIDAR sensors with high pulse rates can still collect enough points per unit area at higher flight speeds to produce a dense point cloud that represents the forest structure in detail [43]. This matters for forest analysis because forests cover large areas, and higher speeds allow faster data collection and shorter survey times.
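How flight speed trades off against LIDAR sampling can be approximated with a nominal point-density formula: pulses emitted per second divided by the ground area swept per second (speed × swath width). The sketch assumes a single pass over flat terrain, a scanner with a symmetric field of view, and one return per pulse; the 240,000 pulses per second, 70° field of view, and 60 m altitude are hypothetical. Under these assumptions, density falls in inverse proportion to speed, so fast forest surveys depend on scanners with high pulse rates, or on overlapping flight lines, to keep point clouds dense.

```python
from math import radians, tan


def swath_width_m(altitude_m, fov_deg):
    """Ground swath width for a symmetric scan angle over flat terrain."""
    return 2.0 * altitude_m * tan(radians(fov_deg / 2.0))


def nominal_point_density(pulse_rate_hz, speed_m_s, altitude_m, fov_deg,
                          returns_per_pulse=1.0):
    """Approximate points per square meter for a single flight line."""
    area_per_second = speed_m_s * swath_width_m(altitude_m, fov_deg)
    return pulse_rate_hz * returns_per_pulse / area_per_second


# Hypothetical scanner: 240,000 pulses/s, 70 degree FOV, flown at 60 m AGL.
for speed in (5, 10, 15):
    density = nominal_point_density(240_000, speed, 60, 70)
    print(f"{speed:>2} m/s -> about {density:.0f} points per m^2")
```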

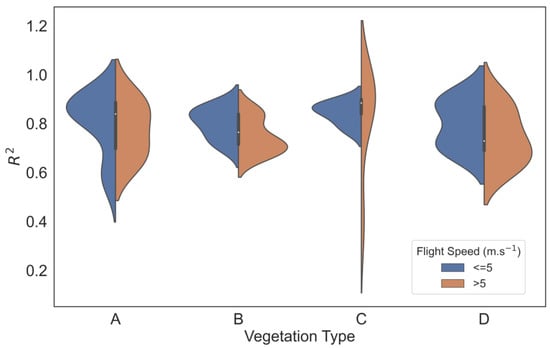

To assess the impact of flight speed on the accuracy of AGB estimation, the studies were divided into two groups by flight speed (less than or equal to 5 m s−1 and greater than 5 m s−1), and their final coefficients of determination were compared (Figure 8). A t-test was used to determine whether AGB estimation accuracy differed significantly between the two speed groups within each vegetation type. Flight speed had a statistically significant effect on accuracy in some vegetation types: the p-values for grasses (p = 0.049) and horizontally growing crops (p = 0.006) were below the 0.05 significance level, indicating a significant difference in AGB estimation accuracy between the two flight speed groups. For forests (p = 0.229) and vertically growing crops (p = 0.701), the p-values were above 0.05, suggesting that flight speed did not significantly affect AGB estimation accuracy in these vegetation types.

Figure 8.

Comparison of AGB estimation accuracy at two flight speeds (less than or equal to 5 m s−1 and greater than 5 m s−1) in four vegetation types ((A) forests, (B) horizontally growing crops, (C) vertically growing crops, and (D) grasses).
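The speed-group comparison amounts to a two-sample t-test on study-level R² values, which can be sketched with the Python standard library alone. The review does not state which t-test variant was used; this sketch assumes Welch's unequal-variance test (a common choice when group sizes and variances differ), computes the two-sided p-value from the regularized incomplete beta function using a Numerical Recipes-style continued fraction, and uses hypothetical R² values.

```python
import math
import statistics


def _beta_continued_fraction(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd coefficients of the continued fraction for this m.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def regularized_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_continued_fraction(a, b, x) / a
    return 1.0 - front * _beta_continued_fraction(b, a, 1.0 - x) / b


def welch_t_test(group_a, group_b):
    """Two-sided Welch's t-test; returns (t statistic, degrees of freedom, p-value)."""
    n_a, n_b = len(group_a), len(group_b)
    var_mean_a = statistics.variance(group_a) / n_a  # squared standard error of each mean
    var_mean_b = statistics.variance(group_b) / n_b
    t = (statistics.fmean(group_a) - statistics.fmean(group_b)) / math.sqrt(var_mean_a + var_mean_b)
    df = (var_mean_a + var_mean_b) ** 2 / (var_mean_a ** 2 / (n_a - 1) + var_mean_b ** 2 / (n_b - 1))
    # Two-sided p-value of Student's t distribution: I_{df/(df+t^2)}(df/2, 1/2).
    p = regularized_beta(df / 2.0, 0.5, df / (df + t * t))
    return t, df, p


# Hypothetical study-level R2 values, split at a 5 m/s flight-speed threshold.
slow = [0.82, 0.78, 0.88, 0.75, 0.91, 0.84]
fast = [0.70, 0.66, 0.79, 0.72, 0.68]
t_stat, dof, p_value = welch_t_test(slow, fast)
print(f"t = {t_stat:.2f}, df = {dof:.1f}, p = {p_value:.4f}")
```

If a statistics library is available, its independent two-sample t-test with unequal variances should give the same result; the standard-library version simply keeps the sketch self-contained.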

The importance of flight speed for AGB estimation in grasses and horizontally growing crops can be attributed to several factors. These vegetation types have a more uniform structure, which makes AGB estimates sensitive to speed-related changes in sensor resolution, signal penetration, and the capture of fine-scale details. The lower sampling density associated with higher flight speeds [116] can also hinder accurate AGB estimation in grasses and horizontally growing crops, as these vegetation types require finer-scale data. In contrast, the denser and more heterogeneous structures of forests and vertically growing crops may reduce the influence of flight speed on AGB estimation accuracy, either because structural variation dominates the estimation error or because these vegetation types require high sampling densities regardless of flight speed. Generally, faster flights underestimated biomass compared with standard settings because they reduced data coverage and resolution [116].

To assess the effect of sensor type (Figure 9a) and flight altitude (Figure 9b) on flight speed, flight speeds were compared across these parameters and p-values were calculated. Flight speed differed significantly among sensors (LIDAR, RGB, multispectral, hyperspectral) and between flight altitudes (below and above 100 m AGL). On average, LIDAR and multispectral sensors were flown faster than RGB and hyperspectral sensors, and flights above 100 m AGL were faster than flights below 100 m AGL. The higher resolution and data complexity of RGB and hyperspectral sensors may necessitate slower flights for accurate data collection, lowering overall flight speeds. Flights above 100 m AGL may prioritize broader coverage or time-sensitive data acquisition, so higher speeds are used to cover larger areas efficiently.

Figure 9.

Comparison of flight speed (m s−1) across sensor types (a) and flight altitudes (m above ground level) (b).
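The link between flight altitude and flight speed can be made concrete with a simple photogrammetric relation: the along-track ground footprint of a frame grows with altitude, so for a fixed forward overlap and a fixed minimum interval between exposures, the fastest admissible speed grows in proportion. This sketch assumes a nadir camera over flat terrain; the sensor dimension, focal length, 80% overlap, and 2 s capture interval are hypothetical values, not parameters reported in the reviewed studies.

```python
def along_track_footprint_m(altitude_m, sensor_along_mm, focal_length_mm):
    """Ground length covered by one frame in the flight direction (nadir, flat terrain)."""
    return altitude_m * sensor_along_mm / focal_length_mm


def max_speed_m_s(altitude_m, sensor_along_mm, focal_length_mm, forward_overlap, min_interval_s):
    """Fastest ground speed that still achieves the requested forward overlap."""
    footprint = along_track_footprint_m(altitude_m, sensor_along_mm, focal_length_mm)
    photo_spacing = footprint * (1.0 - forward_overlap)  # distance between exposure centres
    return photo_spacing / min_interval_s


# Hypothetical camera: 8.8 mm sensor side along track, 8.8 mm focal length,
# 80% forward overlap, and at most one exposure every 2 s.
for altitude in (50.0, 100.0, 120.0):
    v = max_speed_m_s(altitude, 8.8, 8.8, 0.80, 2.0)
    print(f"{altitude:5.0f} m AGL -> max {v:.1f} m/s")
```

Under these assumptions, doubling the altitude doubles the admissible speed at the same overlap, which is consistent with faster flights above 100 m AGL, at the cost of a coarser ground resolution.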

4.3. Image Overlaps

Overlap refers to the percentage of each image that is shared with its neighboring images. Smaller overlaps can reduce the number of flights and, thus, the flight costs [113]. A higher overlap allows for more accurate stereo processing [117], but it produces larger datasets and increases processing time.
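The trade-off between overlap and dataset size can be estimated before a mission. The sketch below counts frames for a lawnmower survey of a rectangular field, assuming flat terrain, a fixed frame footprint, one extra frame and flight line at the field edges, and a hypothetical 8 MB per image; flight-planning software usually adds turn and edge buffers, so the numbers are rough.

```python
import math


def image_count(length_m, width_m, footprint_along_m, footprint_across_m,
                forward_overlap, side_overlap):
    """Approximate number of frames needed to cover a rectangular field."""
    photo_spacing = footprint_along_m * (1.0 - forward_overlap)
    line_spacing = footprint_across_m * (1.0 - side_overlap)
    # The small epsilon stops floating-point round-off from adding a spurious frame or line.
    frames_per_line = math.ceil(length_m / photo_spacing - 1e-9) + 1
    flight_lines = math.ceil(width_m / line_spacing - 1e-9) + 1
    return frames_per_line * flight_lines


# Hypothetical 200 m x 100 m field and a 40 m (along) x 60 m (across) frame footprint.
for overlap in (0.60, 0.75, 0.85):
    n = image_count(200, 100, 40, 60, overlap, overlap)
    print(f"{overlap:.0%} overlap: {n} images, about {n * 8 / 1024:.1f} GB at 8 MB per image")
```

Raising both overlaps from 60% to 85% more than quintuples the image count in this example, which illustrates why high overlap produces heavy datasets and long processing times.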

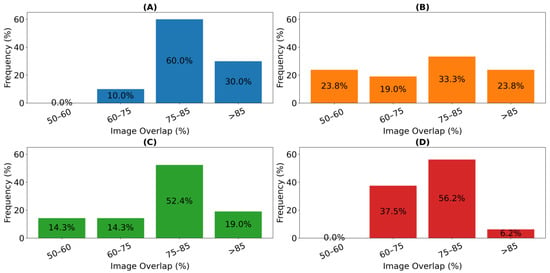

Figure 10 presents the frequency distribution of forward overlap classes used for different vegetation types. The distribution of side overlap showed similar patterns; hence, a single figure represents both overlap types. Horizontally growing crops most frequently used 75–85% overlap (60%), indicating consistent image coverage. Vertically growing crops had a more varied distribution, with roughly equal frequencies (23.8%) across the 50–60%, 60–75%, 75–85%, and >85% overlap ranges. Forests (52.4%) and grasses (56.2%) predominantly fell in the 75–85% overlap range. These findings highlight the variability in image overlap among vegetation types, which can be attributed to several factors. First, the limitations of the data collection platform can constrain the selected overlap. For instance, fixed-wing platforms are generally unable to fly at the low speeds and altitudes required to achieve sufficient overlap with a given sensor, which can hinder their ability to capture the desired image overlap [106]. Second, the selected overlap depends on the type of sensor used in the study. For instance, studies using LIDAR sensors to measure plant height [25,43,118,119,120] used lower overlaps of 50–60%, whereas studies using multispectral sensors to calculate vegetation indices [75,121,122,123] typically opted for higher overlaps of around 75–85%.

Figure 10.

Frequency (%) of the forward overlap classes used for each vegetation type ((A) horizontally growing crops, (B) vertically growing crops, (C) forests, and (D) grasses).

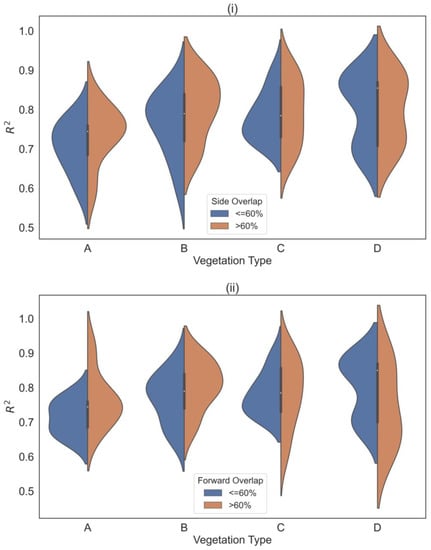

To evaluate the effect of overlap on the accuracy of biomass estimation, studies in each vegetation type were divided into two groups (less than or equal to 60% and greater than 60%) for side (Figure 11i) and forward (Figure 11ii) overlap, and the final R2 values of the studies were compared.

Figure 11.

The comparison of accuracy of AGB estimation between two overlap categories (less than or equal to 60% and greater than 60%) for side (i) and forward (ii) overlap in four vegetation types (A—forest, B—horizontally growing crops, C—vertically growing crops, and D—grasses).

For the forest vegetation type, the p-values for side overlap and forward overlap were 0.1547 and 0.0222, respectively. The forward-overlap p-value is below 0.05, indicating a statistically significant effect on biomass estimation accuracy: forward overlap greater than 60% led to improved results. This effect in forests can be attributed to the requirements of the Structure from Motion (SfM) workflow commonly used in the analyzed studies, as SfM algorithms rely on a large number of overlapping images to identify key points and create the 3D point clouds used for surface models [106].

In the case of grasses, the p-values for side overlap and forward overlap were 0.9073 and 0.2228, respectively. Both p-values were above the significance level of 0.05, indicating that the choice of overlap percentage did not have a significant impact on biomass estimation accuracy for this vegetation type. For horizontally growing crops, the p-values for side overlap and forward overlap were 0.6532 and 0.1450, respectively. Similar to grasses, neither p-value was below the significance level, indicating that the selection of overlap percentage did not significantly influence biomass estimation accuracy in horizontally growing crops. In the case of vertically growing crops, the p-values for side overlap and forward overlap were 0.7815 and 0.8275, respectively. Both p-values were above 0.05, indicating that the choice of overlap percentage did not have a significant impact on biomass estimation accuracy in vertically growing crops.

Overall, a higher forward overlap is more important than side overlap for AGB estimation in forests. Consequently, decreasing the side overlap while keeping the forward overlap constant may be a cost-effective way to minimize flight time and acquisition costs. For grasses, horizontally growing crops, and vertically growing crops, overlap appears to have a smaller effect on biomass estimation accuracy, so operators may reduce overlap to shorten flights and decrease the volume of data to be stored. Further studies are needed to determine the optimal overlap with respect to flight duration and dataset size. Nevertheless, based on the literature on UAS imagery collection, an overlap of 75–85% is suggested for accurate estimation of above-ground biomass in forests, grasses, and horizontally and vertically growing crops.
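The suggestion to reduce side overlap while keeping forward overlap constant can be checked with a rough flight-time estimate, which also shows how overlap choices interact with battery endurance. This sketch assumes a lawnmower pattern, constant ground speed, a hypothetical 10 s per turn, and a hypothetical 18 min of usable flight per battery. Forward overlap does not appear because, at a fixed speed, it changes the number of images rather than the path length.

```python
import math


def survey_time_s(length_m, width_m, footprint_across_m, side_overlap,
                  speed_m_s, turn_time_s=10.0):
    """Rough lawnmower survey time: flight lines, transits between lines, and turns."""
    line_spacing = footprint_across_m * (1.0 - side_overlap)
    lines = math.ceil(width_m / line_spacing - 1e-9) + 1  # epsilon guards against round-off
    path_m = lines * length_m + (lines - 1) * line_spacing
    return path_m / speed_m_s + (lines - 1) * turn_time_s


def batteries_needed(total_time_s, usable_min_per_battery=18.0):
    """Number of battery packs needed, given usable (not rated) endurance per pack."""
    return math.ceil(total_time_s / (usable_min_per_battery * 60.0))


# Hypothetical 500 m x 300 m site, 60 m across-track footprint, 5 m/s ground speed.
for side in (0.8, 0.6):
    t = survey_time_s(500, 300, 60, side, 5.0)
    print(f"side overlap {side:.0%}: {t / 60:.1f} min, {batteries_needed(t)} batteries")
```

In this example, lowering side overlap from 80% to 60% nearly halves the flight time and saves one battery swap.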

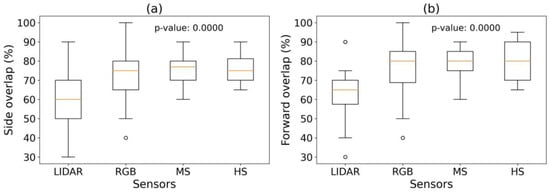

To assess the effect of sensor type on side (Figure 12a) and forward (Figure 12b) overlap, overlaps were compared across sensors and p-values were calculated. Both forward and side overlap differed significantly among sensors (LIDAR, RGB, multispectral, hyperspectral). Hyperspectral, multispectral, and RGB sensors typically require greater side and forward overlap than LIDAR sensors for accurate above-ground biomass estimation. This may be because LIDAR sensors provide accurate 3D point clouds that are less sensitive to overlap variations than the 2D imagery from hyperspectral, multispectral, and RGB sensors [124]. In addition, across all sensor types, the average forward overlap is higher than the side overlap. One reason may be that forward overlap helps compensate for perspective distortion associated with the sensor's nadir view angle [124], ensuring better coverage of features in the scene, especially where distortion is more pronounced.

Figure 12.

Comparison of side (a) and forward (b) overlap (%) across sensor types.

5. Ground Control Points (GCPs)

GCPs are physical markers placed on the ground that are used to georeference, geometrically correct, and co-register the images captured by UASs [17,125,126]. GCPs allow the images to be mapped accurately onto specific locations on the Earth's surface, reducing planimetric error [14,127], which is essential for generating accurate biomass estimates. The geometric correction error of the image should be less than 0.5 pixels [103]. High-accuracy Global Navigation Satellite System (GNSS) techniques such as Real-Time Kinematic (RTK) or Post-Processed Kinematic (PPK) positioning are often used to survey an appropriate number of GCPs so that the data collected during the mission can be accurately georeferenced [65,103]. The number of GCPs per hectare depends on factors such as flight altitude, study area, and topographic complexity [65].
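The 0.5-pixel criterion can be checked by expressing the horizontal root mean square error (RMSE) of the GCP or checkpoint residuals in units of GSD. This is a minimal sketch, assuming coordinates in a local metric system, RTK-surveyed reference coordinates, and hypothetical camera parameters and residuals; independent checkpoints that were not used in the georeferencing give a fairer estimate than the GCPs themselves.

```python
import math


def gsd_m(altitude_m, pixel_pitch_um, focal_length_mm):
    """Ground sampling distance (m per pixel) for a nadir frame camera."""
    return altitude_m * pixel_pitch_um * 1e-6 / (focal_length_mm * 1e-3)


def planimetric_rmse_m(surveyed, image_derived):
    """Horizontal RMSE between surveyed and image-derived (x, y) coordinates in metres."""
    squared = [(xs - xi) ** 2 + (ys - yi) ** 2
               for (xs, ys), (xi, yi) in zip(surveyed, image_derived)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical RTK-surveyed markers and their positions measured in the orthomosaic.
surveyed = [(0.0, 0.0), (100.0, 0.0), (100.0, 80.0), (0.0, 80.0)]
measured = [(0.004, -0.003), (99.998, 0.005), (100.003, 80.002), (-0.005, 79.999)]

gsd = gsd_m(40.0, 2.4, 8.8)
rmse = planimetric_rmse_m(surveyed, measured)
print(f"RMSE = {rmse * 1000:.1f} mm = {rmse / gsd:.2f} px; meets 0.5 px target: {rmse / gsd < 0.5}")
```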

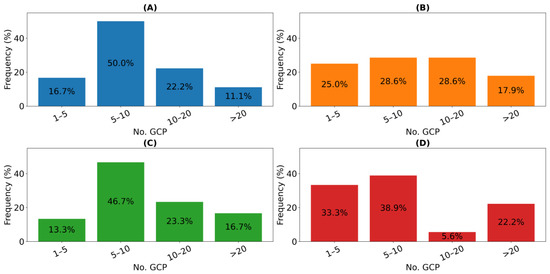

The use of 5–10 GCPs was the most common in all vegetation groups. In addition, studies on grasses tended to use more GCPs than studies on other vegetation groups (Figure 13).

Figure 13.

Frequency (%) of the number of ground control points (GCPs) used for each vegetation type ((A) horizontally growing crops, (B) vertically growing crops, (C) forests, and (D) grasses).

The greater use of GCPs in grass studies than in other vegetation groups can be attributed to the topographic characteristics of grassland sites. Grasslands often exhibit significant topographic variation, and a larger number of GCPs is required to capture this variation adequately [106]. Grasses also have a complex and heterogeneous structure, with variations in height, density, and spatial arrangement, and their low-growing nature and species diversity produce intricate fine-scale surface variation. To estimate biomass in grasses accurately, more GCPs are therefore used to capture elevation and topographic features effectively.

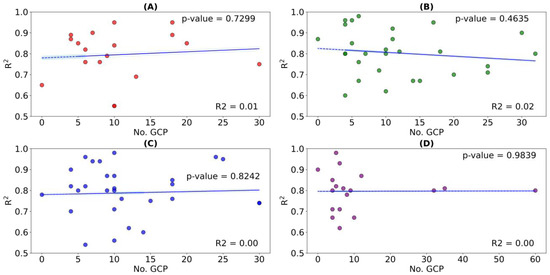

The relationship between the number of GCPs and the accuracy (R2) of AGB estimation models was evaluated statistically (Figure 14). Increasing the number of GCPs did not significantly affect the final accuracy of the AGB estimation models. Technological advances, including portable and reusable GCPs with GNSS receivers and automated GCP identification and image registration techniques (such as sensor fusion-based registration, elastic registration, and direct image-to-image registration), have streamlined the georeferencing process. These innovations reduce the effort of placing and measuring GCPs and potentially enable accurate georeferencing with fewer GCPs.

Figure 14.

Relationship between the number of ground control points (GCPs) and the coefficient of determination (R2) of AGB estimation models in four vegetation groups: (A) horizontally growing crops, (B) vertically growing crops, (C) forests, and (D) grasses. The blue lines are lines of best fit.

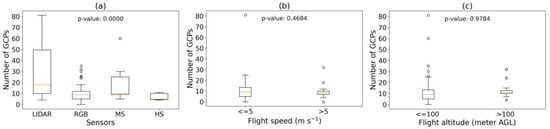

To assess the effect of sensor type (Figure 15a), flight speed (Figure 15b), and flight altitude (Figure 15c) on the number of GCPs, the number of GCPs was compared across these parameters and p-values were calculated for each comparison. The number of GCPs differed significantly among sensor types (p < 0.05); specifically, the average number of GCPs was higher when LIDAR was used. There was no significant difference in the number of GCPs between flight speeds (p = 0.4684) or flight altitudes (p = 0.9784). Higher flight speeds were associated with less variation in the number of GCPs. Although the difference was not significant, the average number of GCPs was higher for flights above 100 m above ground level (AGL) than for flights below 100 m AGL.

Figure 15.

Comparison of the number of ground control points (GCPs) across sensors (a), flight speeds (b), and flight altitudes (c).

The significant differences in the number of GCPs at different sensors can be attributed to the fact that the use of LIDAR technology requires a higher average number of GCPs [128], indicating its specific requirements for accurate georeferencing.

Table 4 summarizes the key flight parameters, the expected resolution for those parameters, and the number of GCPs used to estimate AGB in each vegetation group. LIDAR sensors were used across a wider range of flight altitudes and speeds, especially for forests and vertically growing crops. Multispectral (MS) and hyperspectral (HS) sensors were used across a wider range of flight altitudes and speeds than RGB sensors in vertically growing agricultural crops. LIDAR sensors need less side and forward overlap than RGB, MS, and HS sensors, and RGB sensors need more overlap than MS sensors.

Table 4.

Flight parameter thresholds in each sensor and vegetation type to estimate the above-ground biomass.

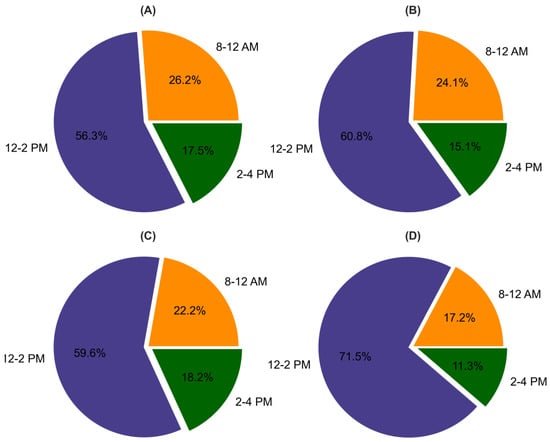

6. Data Acquisition Time

The time at which the UAS collects data can influence the accuracy of AGB estimation by changing the illumination conditions [65,171,177] and by reducing the effects of leaf water content [178], shadow [79,179], and wind [40]. Flying around solar noon helps maintain a solar elevation of at least 50° above the horizon (where latitude and season allow), which improves image quality, increases solar energy availability, and reduces atmospheric effects [15,35,171]. Early morning and late afternoon are poor times to fly because leaf water content, which reduces reflectance, is high at these times and could reduce AGB estimation accuracy [178]. Wind speed, which directly affects imaging accuracy through UAS stability and plant movement, can also change over the course of the day. Wind-induced movement of the UAS during imaging can prevent a clear orthomosaic and cause blurring in the images [40]. Wind-induced displacement of plants reduces the quality of the image dataset, especially its spectral and structural information, because plant movement changes the canopy structure [180]. Figure 16 shows the frequency of flight times in AGB estimation studies: for all vegetation groups, more than 50% of AGB estimation flights were performed between 12:00 and 2:00 pm.

Figure 16.

Frequency of image acquisition times for biomass estimation in different vegetation groups ((A) forests, (B) vertically growing crops, (C) horizontally growing crops, and (D) grasses).
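Whether a solar elevation of 50° is reachable at all depends on latitude and season, which can be checked with a standard textbook approximation of solar geometry. This sketch assumes Cooper's declination formula, works in local solar time (not clock time, which differs by the longitude offset, daylight saving, and the equation of time), and ignores atmospheric refraction; the sites and dates are hypothetical.

```python
import math


def solar_elevation_deg(latitude_deg, day_of_year, solar_time_h):
    """Approximate solar elevation (degrees above the horizon).

    Uses Cooper's declination formula and the hour angle from local solar time;
    ignores atmospheric refraction and the equation of time.
    """
    declination = math.radians(23.45) * math.sin(math.radians(360.0 * (284 + day_of_year) / 365.0))
    hour_angle = math.radians(15.0 * (solar_time_h - 12.0))
    lat = math.radians(latitude_deg)
    sin_elev = (math.sin(lat) * math.sin(declination)
                + math.cos(lat) * math.cos(declination) * math.cos(hour_angle))
    return math.degrees(math.asin(sin_elev))


def flight_window(latitude_deg, day_of_year, min_elevation_deg=50.0, step_h=0.25):
    """Solar times between 06:00 and 18:00 at which the sun is at least min_elevation_deg high."""
    times = [6.0 + i * step_h for i in range(int(12.0 / step_h) + 1)]
    return [t for t in times if solar_elevation_deg(latitude_deg, day_of_year, t) >= min_elevation_deg]


# Hypothetical sites: 40 deg N near the June solstice (day 172), 60 deg N in late December (day 355).
for lat, day in ((40.0, 172), (60.0, 355)):
    window = flight_window(lat, day)
    if window:
        print(f"{lat} N, day {day}: {window[0]:.2f} h to {window[-1]:.2f} h solar time")
    else:
        print(f"{lat} N, day {day}: sun never reaches 50 deg")
```

At high latitudes in winter the list is empty, so the 50° guideline cannot be met and flights are best kept as close to solar noon as possible.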

7. Importance of Modeling Factors in AGB Estimation

The accuracy and predictive ability of AGB estimation using UAS imagery depend on the parameters derived from the imagery [2]. In this study, we focused on the importance of the number of vegetation indices, the types of textural and structural vegetation metrics, feature selection, and the choice of prediction algorithm for estimating AGB.

7.1. Vegetation Traits

7.1.1. Vegetation Indices (VIs)

RGB vegetation indices are useful for estimating AGB from UAS imagery [65]. They can estimate AGB because they are sensitive to leaf chlorophyll [181], which is related to the plant's ability to produce biomass [182]. Near-infrared (NIR) and red edge vegetation indices are also commonly used to estimate vegetation biomass. NIR vegetation indices are based on the reflectance of near-infrared wavelengths, which is higher in healthy vegetation than in unhealthy or senescent vegetation [34,42]. Visible and NIR bands are highly sensitive to low biomass; thus, a simple combination of these bands can provide a good estimate of vegetation biomass [73]. Red edge vegetation indices use reflectance in the red edge spectral range (700–740 nm) to estimate the amount of vegetation per unit area [183] and show a stronger association with leaf biomass than with stem biomass [2,45,157]. For a comprehensive overview of the RGB, NIR, and red edge vegetation indices commonly used for AGB estimation, please refer to Poley and McDermid [2].
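As a concrete illustration, one widely used index from each family mentioned above can be computed per pixel from band values: NDVI (NIR and red), NDRE (NIR and red edge), and the RGB Excess Green index (ExG) on chromatic coordinates. This is a minimal sketch, assuming calibrated reflectances supplied as plain floats; the choice of indices and the pixel values are illustrative rather than those of any specific reviewed study.

```python
def normalized_difference(a, b):
    """Generic (a - b) / (a + b) index; returns 0.0 where both bands are zero."""
    total = a + b
    return (a - b) / total if total else 0.0


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return normalized_difference(nir, red)


def ndre(nir, red_edge):
    """Normalized Difference Red Edge index."""
    return normalized_difference(nir, red_edge)


def excess_green(red, green, blue):
    """ExG = 2g - r - b on chromatic coordinates (each band divided by R + G + B)."""
    total = red + green + blue
    if total == 0:
        return 0.0
    r, g, b = red / total, green / total, blue / total
    return 2 * g - r - b


# Hypothetical reflectances for one canopy pixel.
pixel = {"blue": 0.04, "green": 0.08, "red": 0.05, "red_edge": 0.20, "nir": 0.45}
print(f"NDVI = {ndvi(pixel['nir'], pixel['red']):.3f}")
print(f"NDRE = {ndre(pixel['nir'], pixel['red_edge']):.3f}")
print(f"ExG  = {excess_green(pixel['red'], pixel['green'], pixel['blue']):.3f}")
```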

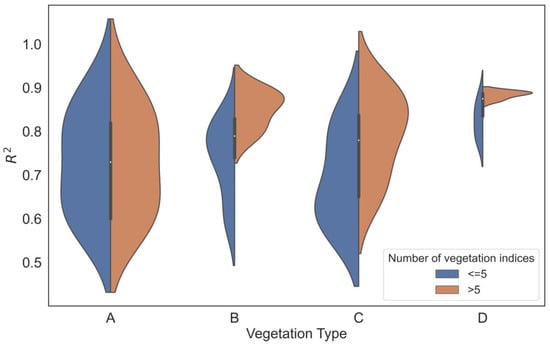

We statistically examined the effect of the number of vegetation indices on AGB estimation accuracy. Figure 17 compares the accuracy of models using different numbers of vegetation indices (less than or equal to 5 and greater than 5) for each vegetation group. The effect of the number of vegetation indices varied among vegetation types. For forests, the p-value of 1 indicated no statistically significant effect; the structural variation within forests may overshadow the effect of the number of vegetation indices on AGB estimation. Similarly, for grasses, the p-value of 6.4758 indicated a lack of statistical significance, and horizontally growing crops had a p-value of 3.403, also suggesting no significant effect. Grasses and horizontally growing crops often have a more homogeneous structure than other vegetation types [184]. This structural uniformity, reflected in a consistent signal response, limited structural complexity, and homogeneous growth conditions, may reduce variability in AGB estimation accuracy, so the number of vegetation indices has no significant effect. In contrast, vertically growing crops had a p-value of 0.0007, indicating that the number of vegetation indices significantly affected AGB estimation accuracy in this vegetation type. Vertically growing crops, such as corn or wheat, often have complex and variable structures, with variations in plant height and canopy density, and an appropriate number and selection of vegetation indices can capture these structural differences, improving AGB estimation accuracy.

Figure 17.

The impact of the quantity of vegetation indices on the accuracy of AGB estimation in four vegetation types ((A) forest, (B) horizontally growing crops, (C) vertically growing crops, and (D) grasses).

7.1.2. Vegetation Texture

Several studies have concluded that including image textural metrics can improve the accuracy of AGB estimation in different vegetation types [2,159,185,186]. Image texture describes the patterns and structures in images of vegetation and can be used to extract information about the density, arrangement, and composition of the plant material [2,63]. This is done by analyzing the spectral and spatial variability of the grey level values in the image and comparing them with known values for different types of vegetation [187]. Image textural metrics can help mitigate the underestimation of AGB at high biomass values that occurs when vegetation indices (VIs) are used alone [2]. Texture parameters derived from red edge and NIR bands vary more widely throughout the growing season than those from visible bands and can therefore explain more of the variation in AGB [72]. Table 5 summarizes the texture analysis methods found in the reviewed literature and their descriptions, including the co-occurrence matrix, Gabor filters, Local Binary Patterns (LBP), and fractal dimension. The co-occurrence matrix measures uniformity, entropy, contrast, homogeneity, and correlation in the grey level distribution of an image. Gabor filters analyze the frequency, orientation, and scale of patterns in an image. LBP examines the types of transitions and intensity values between neighboring pixels. Lastly, fractal dimension methods use box-counting, information, and Hausdorff dimensions to characterize the image. These methods have been shown to be particularly useful for biomass estimation.

Table 5.

Summary of image textural metrics, their types, and corresponding descriptions used in the above-ground biomass estimation literature.
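Of the methods in Table 5, the co-occurrence matrix is the most straightforward to illustrate. The sketch below builds a symmetric, normalized grey-level co-occurrence matrix (GLCM) for one pixel offset and derives contrast, homogeneity, uniformity (angular second moment), entropy, and correlation. It assumes the band has already been quantized to a small number of integer grey levels; the window size, offsets, and quantization used in practice vary between studies and are not taken from the source.

```python
import math


def glcm(image, dx=1, dy=0, levels=8):
    """Symmetric, normalized grey-level co-occurrence matrix for one pixel offset (dx, dy)."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                i, j = image[y][x], image[y2][x2]
                counts[i][j] += 1
                counts[j][i] += 1  # count the pair in both directions -> symmetric matrix
    total = sum(map(sum, counts))
    return [[c / total for c in row] for row in counts]


def glcm_features(p):
    """Haralick-style texture measures from a normalized, symmetric GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu = sum(i * v for i, _, v in cells)  # row mean; equals the column mean for a symmetric GLCM
    var = sum((i - mu) ** 2 * v for i, _, v in cells)
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "uniformity": sum(v * v for _, _, v in cells),  # angular second moment
        "entropy": -sum(v * math.log2(v) for _, _, v in cells if v > 0),
        "correlation": sum((i - mu) * (j - mu) * v for i, j, v in cells) / var if var else 1.0,
    }


# Small image quantized to 4 grey levels (hypothetical), horizontal neighbour offset.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
features = glcm_features(glcm(image, dx=1, dy=0, levels=4))
print({name: round(value, 3) for name, value in features.items()})
```

In practice these measures are computed in a moving window over each band (often red edge or NIR) and averaged over several offsets before being used as predictors.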

7.1.3. Structural Variables

Studies have shown that models including structural features have better accuracy in estimating AGB in various vegetation types [13,45,73,103,186]. Metrics such as tree diameter at breast height (DBH) are closely correlated with the amount of woody tissue in a tree [133,198,199,200,201], and plant height is closely correlated with vegetation growth [13,45,47] and biomass [32,202].

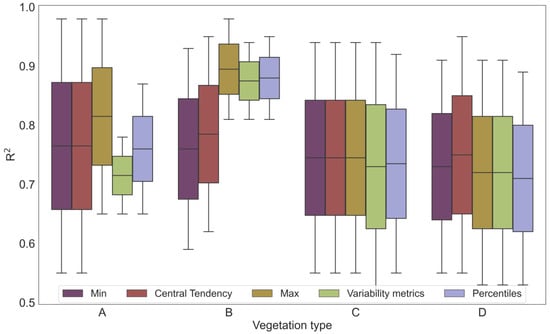

Central tendency metrics (mean, median, and mode), minimum (Min), maximum (Max), variability metrics (standard deviation, skewness, kurtosis, and variance), and percentiles of plant height are the metrics commonly used to estimate biomass. Central tendency metrics are useful for estimating overall biomass [35,45] and are less affected by environmental noise such as the soil background [121], while the minimum and maximum plant height help identify small [104,164] or dominant [83,177,203] plants. The maximum plant height can reduce the impact of lodging on plant height measurements [15]. Variability metrics can be used to characterize variability in plant size [45,104] and to find outliers [45,104]. Percentiles help describe the overall shape of the plant size distribution and identify any trends or patterns relevant to biomass estimation [30,162,204].
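These metrics are usually computed within each plot from a canopy height model (CHM), obtained by subtracting a digital terrain model (DTM) from a digital surface model (DSM). The sketch below is a minimal version, assuming co-registered DSM and DTM values flattened into lists for one plot and a hypothetical 5 cm threshold for excluding bare-soil pixels; skewness and kurtosis use population (moment-based) definitions, and the mode is omitted because it is ill-defined for continuous heights.

```python
import statistics


def canopy_height_model(dsm, dtm):
    """Per-pixel canopy height (DSM minus DTM); small negative values from noise are set to zero."""
    return [max(s - t, 0.0) for s, t in zip(dsm, dtm)]


def height_metrics(heights, min_height_m=0.05):
    """Plot-level plant-height metrics, ignoring pixels lower than min_height_m (assumed soil)."""
    h = sorted(v for v in heights if v >= min_height_m)
    n = len(h)
    mean = statistics.fmean(h)
    sd = statistics.pstdev(h)
    pct = statistics.quantiles(h, n=100, method="inclusive")  # pct[k - 1] is the k-th percentile
    return {
        "mean": mean,
        "median": statistics.median(h),
        "min": h[0],
        "max": h[-1],
        "sd": sd,
        "variance": sd ** 2,
        "skewness": sum((v - mean) ** 3 for v in h) / (n * sd ** 3) if sd else 0.0,
        "excess_kurtosis": sum((v - mean) ** 4 for v in h) / (n * sd ** 4) - 3.0 if sd else 0.0,
        "p90": pct[89],
        "p95": pct[94],
    }


# Hypothetical DSM and DTM elevations (m) for a handful of pixels in one plot.
dsm = [101.20, 101.55, 101.80, 101.02, 101.95, 101.60]
dtm = [101.00, 101.00, 101.05, 101.01, 101.05, 101.02]
print(height_metrics(canopy_height_model(dsm, dtm)))
```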

Figure 18 shows how often different plant height metrics were used to estimate biomass in each vegetation group. The maximum plant height is the most used metric for forests and vertically growing crops, whereas central tendency metrics of plant height are the most used for grasses and horizontally growing crops. The maximum height is used in forests and vertically growing crops because the tallest plants in these vegetation types contribute substantially to overall biomass [205]; using the maximum value captures the highest plant height and thus accounts for the influence of these tall individuals on biomass estimates. In contrast, central tendency metrics of plant height (such as mean or median height) are more valuable for estimating biomass in grasses and horizontally growing crops. These metrics describe the average or typical height within these vegetation types, providing insight into the overall distribution and structure of the plants. In grasses, which often have dense and uniform canopies [38], the average height serves as a representative measure of biomass across the field. Likewise, for horizontally growing crops with their spreading growth pattern [206], central tendency metrics capture the average height across the vegetated area.

Figure 18.

Use of different plant height metrics to estimate the biomass in different vegetation types ((A) forests, (B) vertically growing crops, (C) horizontally growing crops, and (D) grasses).

7.2. Feature Selection

In AGB estimation, feature selection can improve the accuracy of the prediction model [207] by avoiding overfitting when many RGB and MS vegetation indices, textural metrics, and structural metrics are used as predictor variables [208,209], and by reducing model complexity through the removal of unnecessary or redundant predictors [210]. Several feature selection methods are used, including:

Filter methods: These methods use statistical tests or simple heuristics to identify features that are correlated with the target variable. They are generally fast and simple to implement, but they do not consider the interactions between features [211]. Common filter methods include variance inflation factor (VIF), Pearson's correlation coefficient, mutual information, ANOVA F-value, chi-squared test, and variance threshold [26,186,211,212,213,214,215,216,217].

Wrapper methods: These methods use a search algorithm, such as Forward Selection (FS), Backward Elimination (BE), Recursive Feature Elimination (RFE), and Genetic Algorithms (GA) to find the optimal subset of features. They are more computationally expensive than filter methods, but they consider the interactions between features [207,210,211,218].

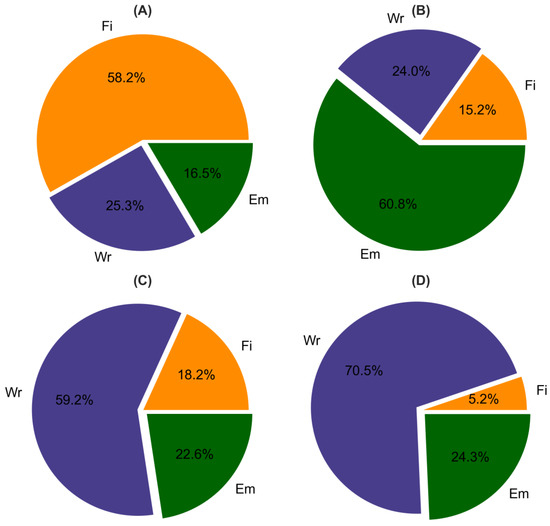

Embedded methods: These methods are built into the machine learning algorithm itself and select features as part of the model training process. They can be computationally expensive, but they can be effective for high-dimensional datasets. The most common embedded methods include principal component analysis (PCA), Lasso Regression, Ridge Regression, Elastic Net, Tree-Based Models, and Boosting models [207,207,219,220,221]. Figure 19 shows the frequency distribution of different feature selection methods used for above-ground biomass (AGB) estimation. Our study findings reveal that the most employed filter, wrapper, and embedded feature selection methods for AGB estimation across all vegetation groups are VIF, PCA, and BE, respectively.

Figure 19.

Frequency distribution of feature selection methods (Fi—Filter methods, Wr—wrapper methods, and Em—embedded methods) for above-ground biomass estimation in various vegetation groups ((A) forests, (B) vertically growing crops, (C) horizontally growing crops, and (D) grasses).

7.3. Model Selection

Model selection plays a crucial role in the accuracy of AGB estimation because models differ in their ability to capture complex relationships between variables [198,222]. Complex models may capture more detail but may also require more data and be prone to errors [7], whereas simpler models may be easier to use but less accurate [187]. The many models used for AGB estimation each have unique strengths and weaknesses; Table 6 compares the main parameters, advantages, and disadvantages of the most commonly used models. Traditional linear regression models may have limitations in dealing with outliers, non-linearity, and multivariate data [103], whereas machine learning techniques such as random forest (RF) and support vector machine (SVM) can handle multidimensional data [223,224].

Table 6.

The main parameters, advantages, and disadvantages of most used models (MLR—multiple linear regression, ANN—artificial neural network, RF—random forest, and SVM—support vector machine) to estimate the above-ground biomass.

Evaluating a model with appropriate metrics helps ensure its accuracy and reliability and identifies areas for improvement [90,178]. Mean absolute error (MAE) [34,154,232], mean squared error (MSE) [142], root mean squared error (RMSE) [159,233], coefficient of determination (R2) [32,234], and Nash–Sutcliffe efficiency (NSE) [65,235] are commonly used to evaluate the performance of AGB estimation models.

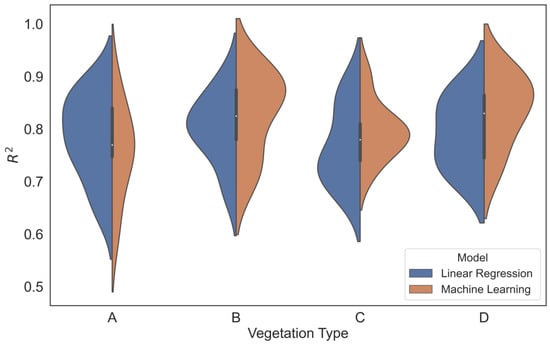

Multiple linear regression (MLR) and random forest (RF) were found to be the most used and most accurate models for biomass estimation in all vegetation types. The accuracy of linear regression and machine learning models was compared using the range of R2 values across the 211 studies (Figure 20), and a t-test was used to test the difference between these two model groups for each vegetation type. In forests, there was no significant difference between the two model types (p = 0.1615), indicating similar performance. For grasses, however, the difference was significant (p = 0.0330), favoring machine learning models for estimating above-ground biomass in grasses. For horizontally and vertically growing crops, there was no significant difference (p = 0.2517 and p = 0.1216, respectively), suggesting that both model types perform similarly well.

Figure 20.

Performance of regression and machine learning models in estimating AGB in four vegetation types ((A) forests, (B) horizontally growing crops, (C) vertically growing crops, and (D) grasses).

Machine learning (ML) and traditional linear regression models performed similarly in forests because forest biomass is determined from structural metrics such as tree height and diameter, which are easy to obtain and are strong predictors [236]. The homogeneous structure of forests also leads to less complex relationships [237]. ML models outperformed linear regression models for grass biomass estimation because of the complexity and non-linear nature of the data: grass biomass involves intricate interactions among variables [238], and ML models capture these patterns better. However, ML models performed similarly to linear regression for biomass estimation in horizontally and vertically growing crops. This may be due to simpler data relationships and more uniform growth patterns in these crops, which linear regression captures adequately, so the additional complexity handled by ML models has less impact.

Table 7 presents a comprehensive guide for the accurate estimation of AGB using UAS-mounted sensors for different vegetation types, which is based on a thorough review of the literature.

Table 7.

Recommendations for Enhancing Accuracy in AGB Estimation: Effective Measures for Different Vegetation Types.

8. Challenges

The main challenges in using UASs for biomass estimation relate to sensors, aircraft platforms, and the technical demands of handling hardware and software.

The spatial resolution and signal-to-noise ratio of sensors are the main sensor-related challenges affecting the accuracy of UAS-based biomass estimates. High-resolution sensors capture more detailed information but may be more expensive and require more processing power to analyze the data [178]. A high signal-to-noise ratio can improve the accuracy of estimates but may also increase the cost and complexity of the sensors [183,239]. Cost, accuracy, and complexity should therefore be weighed carefully when selecting sensors for UAS-based biomass estimation. Platform stability also matters: if the UAS is unstable, the data may be distorted or incomplete, leading to inaccurate estimates. Environmental conditions such as wind and weather can further reduce data accuracy by making it difficult for the UAS to remain stable [22].
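To make the resolution and stability trade-offs concrete, the sketch below applies the standard pinhole-camera relation for ground sampling distance (GSD) and a simple forward-motion blur estimate (ground distance flown during one exposure divided by the GSD). The camera parameters, speed, and shutter time are hypothetical illustrative values, not taken from the reviewed studies, and the sketch ignores lens distortion, terrain relief, and gimbal motion.

```python
def ground_sampling_distance(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Ground distance covered by one pixel (m/pixel) for a nadir-pointing frame camera."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def motion_blur_px(ground_speed_m_s, exposure_s, gsd_m):
    """Forward-motion blur in pixels: distance flown during the exposure divided by the GSD."""
    return ground_speed_m_s * exposure_s / gsd_m


# Hypothetical RGB camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width
for altitude in (30, 60, 100):
    gsd = ground_sampling_distance(13.2, 8.8, 5472, altitude)
    blur = motion_blur_px(ground_speed_m_s=8.0, exposure_s=1 / 500, gsd_m=gsd)
    print(f"{altitude:>3} m: GSD = {gsd * 100:.2f} cm/px, blur at 8 m/s and 1/500 s = {blur:.2f} px")
```

Halving the altitude halves the GSD (finer detail, but more images to process), while the same speed and shutter time then smear the image over twice as many pixels, which is one reason altitude, speed, and exposure need to be chosen together.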

Estimating biomass in forests is challenging because of reflectance mixture and multilayered canopies. Reflectance mixture occurs when the light reflected from a given area is a combination of the reflectance of the different species or vegetation present [240]. A multilayered canopy consists of multiple vertical canopy layers within a forest. Using multiple sensors or different wavelengths of light is recommended to overcome these challenges. Water, cloud cover, and tidal stage can also affect the accuracy of biomass estimation [65]. Cloud cover reduces the amount of light available to the sensors and causes shadows [90] and other distortions in the data [178]. Dew on canopies can cause vegetation indices to be overestimated [103], so data should not be collected when dew is present. Vegetation phenology can also affect the correlation between VIs and biomass, so data should be collected at peak biomass and in comparable seasons [103].

Artifacts in imagery can complicate biomass estimation from UAS images, but advanced image processing techniques can remove or correct them [65]. Legal restrictions imposed by the Federal Aviation Administration (FAA) can cause gaps and limitations in UAS imagery, making it challenging to detect changes over time; researchers can minimize this impact by carefully planning UAS flights [89]. Processing UAS images for biomass estimation also requires a significant amount of computing power [241,242]. Current battery capacities limit flight times, but researchers may use UASs with larger battery capacities or carry multiple batteries to extend flight time [64]. Optical saturation and GPS positional errors can also affect the accuracy of biomass estimation [243]. To avoid optical saturation, researchers may use sensors with a higher dynamic range [45,47].
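Reflectance mixture is often described with a linear mixing model, in which a pixel's spectrum is the area-weighted sum of the spectra of the pure components (endmembers) it contains. The sketch below solves the two-endmember case with a sum-to-one constraint by least squares. The endmember spectra and band choice are hypothetical, and real multilayered canopies add non-linear (multiple-scattering) effects that this simple model ignores.

```python
def unmix_two_endmembers(pixel, endmember_a, endmember_b):
    """Least-squares fraction f of endmember A, assuming pixel ≈ f * A + (1 - f) * B.

    The sum-to-one constraint is built into the model; f is clipped to [0, 1]
    so that it remains a valid area fraction.
    """
    diff = [a - b for a, b in zip(endmember_a, endmember_b)]
    resid = [p - b for p, b in zip(pixel, endmember_b)]
    denom = sum(d * d for d in diff)
    if denom == 0:
        raise ValueError("endmember spectra must differ")
    f = sum(r * d for r, d in zip(resid, diff)) / denom
    return min(1.0, max(0.0, f))


# Hypothetical reflectance in (green, red, NIR) bands for two canopy components
broadleaf = [0.08, 0.05, 0.45]
conifer = [0.06, 0.04, 0.25]
mixed_pixel = [0.7 * a + 0.3 * b for a, b in zip(broadleaf, conifer)]
print(f"estimated broadleaf fraction: {unmix_two_endmembers(mixed_pixel, broadleaf, conifer):.2f}")
```

With more than two components, the same idea becomes a constrained least-squares problem over all endmember fractions.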
GPS positional errors can be a significant challenge when remotely sensed data are used for biomass estimation: they misalign the remotely sensed data with the true location of the vegetation, which can lead to overestimation or underestimation of biomass [242,244].
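A simple geometric calculation shows why positional error matters for plot-level estimates: if a rectangular sampling plot is located with a horizontal offset, only part of the extracted image area actually covers the field-measured plot. The sketch assumes an axis-aligned rectangular plot and a pure translation error, both simplifications; the plot size and error values are hypothetical.

```python
import math


def plot_overlap_fraction(width_m, height_m, dx_m, dy_m):
    """Fraction of a rectangular plot still covered after shifting it by (dx, dy)."""
    overlap_w = max(0.0, width_m - abs(dx_m))
    overlap_h = max(0.0, height_m - abs(dy_m))
    return (overlap_w * overlap_h) / (width_m * height_m)


# Hypothetical 5 m x 5 m plot with a horizontal GPS error of e meters at 45 degrees
for error_m in (0.02, 0.5, 1.0, 2.0):
    dx = dy = error_m / math.sqrt(2)
    frac = plot_overlap_fraction(5.0, 5.0, dx, dy)
    print(f"error {error_m:.2f} m -> {frac:.1%} of the extracted area lies inside the plot")
```

The remaining fraction of the extracted area comes from vegetation outside the plot, so whether biomass is over- or underestimated depends on whether the surroundings are denser or sparser than the plot itself. Smaller plots are affected more by the same absolute error, which is one reason ground control points are used to improve georeferencing.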

9. Conclusions

The findings of this study revealed important insights into the use of unmanned aerial systems (UASs) for above-ground biomass (AGB) estimation across different vegetation types. Rotary-wing UASs, particularly quadcopters, were the most commonly used platforms in agricultural fields, offering specific advantages in terms of their capabilities. Sensor selection varied with vegetation type: LIDAR and RGB sensors were commonly employed in forests, whereas RGB, multispectral, and hyperspectral sensors were prevalent in agricultural and grass fields. Flight altitudes and speeds were chosen according to vegetation characteristics and sensor types, and they varied among vegetation groups. Additionally, the number of ground control points (GCPs) required for accurate AGB estimation differed with vegetation type and topographic complexity. Data collection around solar noon was preferred for optimal accuracy, owing to better image quality, greater solar energy availability, and reduced atmospheric effects. The number of vegetation indices had a significant impact on AGB estimation accuracy in vertically growing crops, whereas its influence was limited in forests, grasses, and horizontally growing crops. Moreover, the choice of plant height metric varied across vegetation groups: forests and vertically growing crops relied on maximum height, whereas grasses and horizontally growing crops used central tendency metrics. In terms of modeling approaches, linear regression and machine learning models performed similarly in forests, but machine learning models outperformed linear regression models in grasses. Both modeling approaches yielded comparable results for horizontally and vertically growing crops, indicating that either is suitable for these vegetation types.
The use of UASs for biomass estimation also presented various challenges related to sensors, environmental conditions, reflectance mixture, canopy complexity, water, cloud cover, dew, phenology, image artifacts, legal restrictions, computing power, battery capacity, optical saturation, and GPS errors. Addressing these challenges necessitates careful consideration of sensor selection, timing, advanced image processing techniques, compliance with regulations, and overcoming technical limitations. Overall, our findings provide valuable insights and guidelines for the effective and accurate use of UASs in AGB estimation across different vegetation types. By understanding the specific requirements and characteristics of each vegetation type, researchers and practitioners can make informed decisions regarding platform selection, sensor choice, flight parameters, and modeling approaches, ultimately enhancing the precision and efficiency of biomass estimation using UAS technology.

Author Contributions

Conceptualization, A.B. and P.F.; methodology, A.B. and P.F.; software, A.B.; validation, A.B. and P.F.; formal analysis, A.B.; investigation, A.B. and N.D.; resources, P.F.; data curation, A.B.; writing—original draft preparation, A.B.; writing—review and editing, A.B., N.D., P.G.O., N.B. and P.F.; visualization, A.B.; supervision, P.F.; project administration, P.F.; funding acquisition, P.F. All authors have read and agreed to the published version of the manuscript.

Funding

This review paper was supported by funding from the North Dakota Agricultural Experiment Station, project number FARG080021.

Data Availability Statement

The data used in this research are available upon request to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wilkes, P.; Disney, M.; Vicari, M.B.; Calders, K.; Burt, A. Estimating Urban above Ground Biomass with Multi-Scale LiDAR. Carbon Balance Manag. 2018, 13, 10.

- Poley, L.G.; McDermid, G.J. A Systematic Review of the Factors Influencing the Estimation of Vegetation Aboveground Biomass Using Unmanned Aerial Systems. Remote Sens. 2020, 12, 1052.

- Liu, K.; Shen, X.; Cao, L.; Wang, G.; Cao, F. The Evaluation of Parametric and Non-Parametric Models for Total Forest Biomass Estimation Using UAS-LiDAR. In Proceedings of the 5th International Workshop on Earth Observation and Remote Sensing Applications, EORSA 2018, Proceedings, Xi’an, China, 18–20 June 2018.

- Yue, J.; Zhou, C.; Guo, W.; Feng, H.; Xu, K. Estimation of Winter-Wheat above-Ground Biomass Using the Wavelet Analysis of Unmanned Aerial Vehicle-Based Digital Images and Hyperspectral Crop Canopy Images. Int. J. Remote Sens. 2021, 42, 1602–1622.

- Duncanson, L.; Armston, J.; Disney, M.; Avitabile, V.; Barbier, N.; Calders, K.; Carter, S.; Chave, J.; Herold, M.; Crowther, T.W.; et al. The Importance of Consistent Global Forest Aboveground Biomass Product Validation. Surv. Geophys. 2019, 40, 979–999.

- Wan, X.; Li, Z.; Chen, E.; Zhao, L.; Zhang, W.; Xu, K. Forest Aboveground Biomass Estimation Using Multi-Features Extracted by Fitting Vertical Backscattered Power Profile of Tomographic SAR. Remote Sens. 2021, 13, 186.

- Moradi, F.; Mohammad, S.; Sadeghi, M.; Heidarlou, H.B. Above-Ground Biomass Estimation in a Mediterranean Sparse Coppice Oak Forest Using Sentinel-2 Data. Ann. For. Res. 2022, 65, 165–182.

- Khan, I.A.; Khan, W.R.; Ali, A.; Nazre, M. Assessment of Above-Ground Biomass in Pakistan Forest Ecosystem’s Carbon Pool: A Review. Forests 2021, 12, 586.

- Chang, G.J.; Oh, Y.; Goldshleger, N.; Shoshany, M. Biomass Estimation of Crops and Natural Shrubs by Combining Red-Edge Ratio with Normalized Difference Vegetation Index. J. Appl. Remote Sens. 2022, 16, 14501.

- Xu, C.; Ding, Y.; Zheng, X.; Wang, Y.; Zhang, R.; Zhang, H.; Dai, Z. A Comprehensive Comparison of Machine Learning and Feature Selection Methods for Maize Biomass Estimation Using Sentinel-1 SAR, Sentinel-2 Vegetation Indices, and Biophysical Variables. Remote Sens. 2022, 14, 4083.

- Ballesteros, R.; Ortega, J.F.; Hernandez, D.; Moreno, M.A. Onion Biomass Monitoring Using UAV-Based RGB Imaging. Precis. Agric. 2018, 19, 840–857.

- Alebele, Y.; Zhang, X.; Wang, W.; Yang, G.; Yao, X.; Zheng, H.; Zhu, Y.; Cao, W.; Cheng, T. Estimation of Canopy Biomass Components in Paddy Rice from Combined Optical and SAR Data Using Multi-Target Gaussian Regressor Stacking. Remote Sens. 2020, 12, 2564.

- Liu, Y.; Feng, H.; Yue, J.; Jin, X.; Li, Z.; Yang, G. Estimation of Potato Above-Ground Biomass Based on Unmanned Aerial Vehicle Red-Green-Blue Images with Different Texture Features and Crop Height. Front. Plant Sci. 2022, 13, 938216.

- Vargas, J.J.Q.; Zhang, C.; Smitchger, J.A.; McGee, R.J.; Sankaran, S. Phenotyping of Plant Biomass and Performance Traits Using Remote Sensing Techniques in Pea (Pisum sativum, L.). Sensors 2019, 19, 2031.

- Acorsi, M.G.; das Dores Abati Miranda, F.; Martello, M.; Smaniotto, D.A.; Sartor, L.R. Estimating Biomass of Black Oat Using UAV-Based RGB Imaging. Agronomy 2019, 9, 344.

- Bar-On, Y.M.; Phillips, R.; Milo, R. The Biomass Distribution on Earth. Proc. Natl. Acad. Sci. USA 2018, 115, 6506–6511.

- Zhang, H.; Sun, Y.; Chang, L.; Qin, Y.; Chen, J.; Qin, Y.; Du, J.; Yi, S.; Wang, Y. Estimation of Grassland Canopy Height and Aboveground Biomass at the Quadrat Scale Using Unmanned Aerial Vehicle. Remote Sens. 2018, 10, 851.

- Wang, Z.; Ma, Y.; Zhang, Y.; Shang, J. Review of Remote Sensing Applications in Grassland Monitoring. Remote Sens. 2022, 14, 2903.

- Clementini, C.; Pomente, A.; Latini, D.; Kanamaru, H.; Vuolo, M.R.; Heureux, A.; Fujisawa, M.; Schiavon, G.; Del Frate, F. Long-Term Grass Biomass Estimation of Pastures from Satellite Data. Remote Sens. 2020, 12, 2160.

- Muumbe, T.P.; Baade, J.; Singh, J.; Schmullius, C.; Thau, C. Terrestrial Laser Scanning for Vegetation Analyses with a Special Focus on Savannas. Remote Sens. 2021, 13, 507.

- Grybas, H.; Congalton, R.G. Evaluating the Impacts of Flying Height and Forward Overlap on Tree Height Estimates Using Unmanned Aerial Systems. Forests 2022, 13, 1462.

- Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A Technical Study on UAV Characteristics for Precision Agriculture Applications and Associated Practical Challenges. Remote Sens. 2021, 13, 1204.

- Gil-Docampo, M.L.; Arza-García, M.; Ortiz-Sanz, J.; Martínez-Rodríguez, S.; Marcos-Robles, J.L.; Sánchez-Sastre, L.F. Above-Ground Biomass Estimation of Arable Crops Using UAV-Based SfM Photogrammetry. Geocarto Int. 2020, 35, 687–699.

- Wan, L.; Zhang, J.; Dong, X.; Du, X.; Zhu, J.; Sun, D.; Liu, Y.; He, Y.; Cen, H. Unmanned Aerial Vehicle-Based Field Phenotyping of Crop Biomass Using Growth Traits Retrieved from PROSAIL Model. Comput. Electron. Agric. 2021, 187, 106304.

- Li, D.; Gu, X.; Pang, Y.; Chen, B.; Liu, L. Estimation of Forest Aboveground Biomass and Leaf Area Index Based on Digital Aerial Photograph Data in Northeast China. Forests 2018, 9, 275.

- Zhao, Y.; Liu, X.; Wang, Y.; Zheng, Z.; Zheng, S.; Zhao, D.; Bai, Y. UAV-Based Individual Shrub Aboveground Biomass Estimation Calibrated against Terrestrial LiDAR in a Shrub-Encroached Grassland. Int. J. Appl. Earth Obs. Geoinf. 2021, 101, 102358.

- Gano, B.; Dembele, J.S.B.; Ndour, A.; Luquet, D.; Beurier, G.; Diouf, D.; Audebert, A. Using UAV Borne, Multi-Spectral Imaging for the Field Phenotyping of Shoot Biomass, Leaf Area Index and Height of West African Sorghum Varieties under Two Contrasted Water Conditions. Agronomy 2021, 11, 850.

- Jiang, F.; Kutia, M.; Ma, K.; Chen, S.; Long, J.; Sun, H. Estimating the Aboveground Biomass of Coniferous Forest in Northeast China Using Spectral Variables, Land Surface Temperature and Soil Moisture. Sci. Total Environ. 2021, 785, 147335.

- Zhao, Q.; Yu, S.; Zhao, F.; Tian, L.; Zhao, Z. Comparison of Machine Learning Algorithms for Forest Parameter Estimations and Application for Forest Quality Assessments. For. Ecol. Manag. 2019, 434, 224–234.

- Jin, S.; Su, Y.; Song, S.; Xu, K.; Hu, T.; Yang, Q.; Wu, F.; Xu, G.; Ma, Q.; Guan, H.; et al. Non-Destructive Estimation of Field Maize Biomass Using Terrestrial Lidar: An Evaluation from Plot Level to Individual Leaf Level. Plant Methods 2020, 16, 69.

- Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-Ground Biomass Estimation and Yield Prediction in Potato by Using UAV-Based RGB and Hyperspectral Imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.

- Grüner, E.; Astor, T.; Wachendorf, M. Biomass Prediction of Heterogeneous Temperate Grasslands Using an SFM Approach Based on UAV Imaging. Agronomy 2019, 9, 54.