How to Improve the Reproducibility, Replicability, and Extensibility of Remote Sensing Research

Abstract

1. Introduction

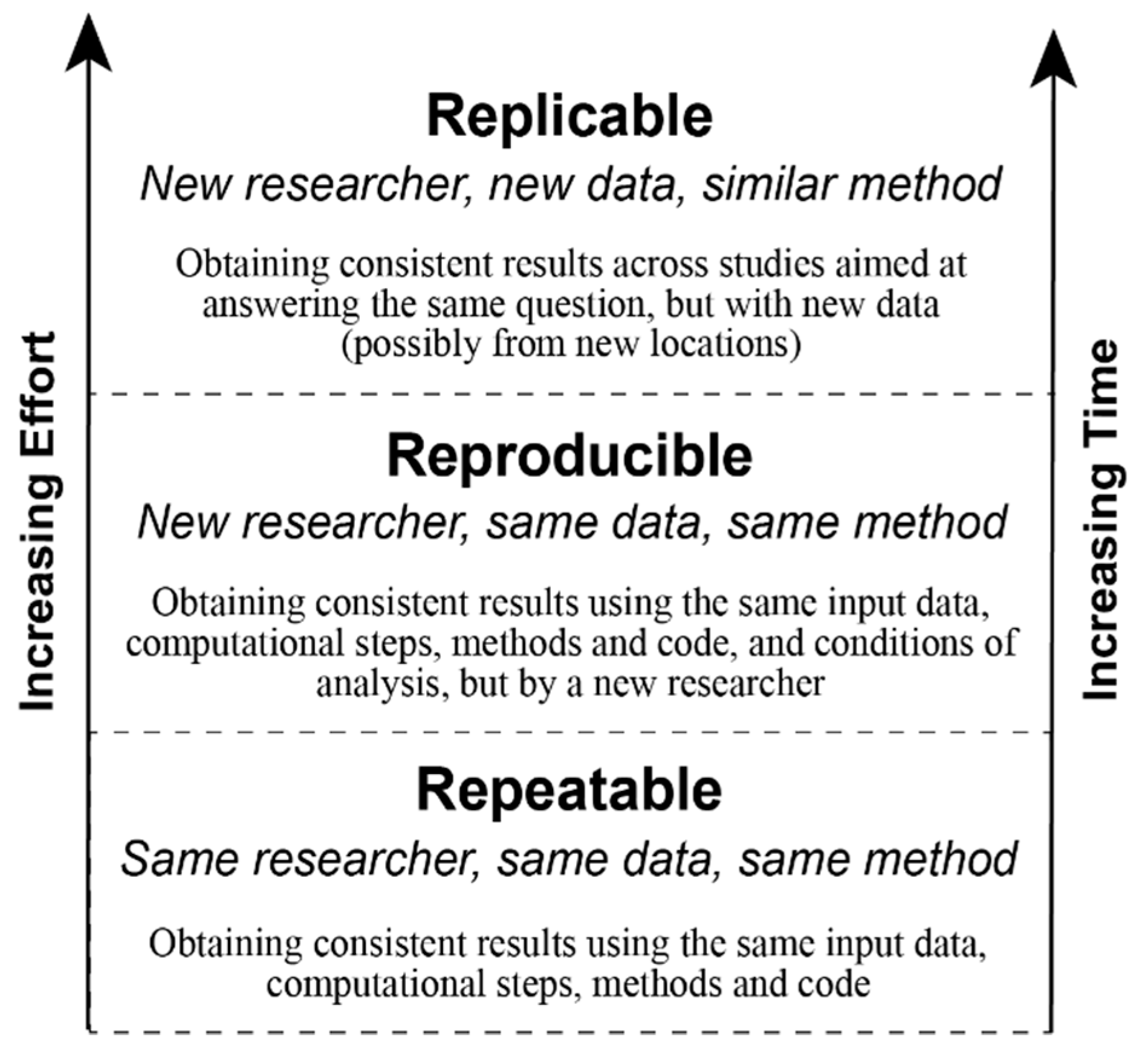

2. Reproducible, Replicable, and Extensible Research

3. Reproducibility and Replicability in Remote Sensing

4. Laying the Groundwork to Capitalize on Reproducibility and Replicability

5. Leveraging Recent Developments in Remote Sensing by Focusing on the Reproducibility and Replicability of Research

5.1. Forward-Looking Replication Sequences

5.2. Benchmarking Datasets for Method Comparisons and Multi-Platform Integration

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kedron, P.; Frazier, A.E. How to Improve the Reproducibility, Replicability, and Extensibility of Remote Sensing Research. Remote Sens. 2022, 14, 5471. https://doi.org/10.3390/rs14215471
