Context for Reproducibility and Replicability in Geospatial Unmanned Aircraft Systems

Abstract

1. Introduction

- Why do reproducibility and replicability (R&R) matter in geography, GIScience, remote sensing, UAS research, and related fields?

- How does the literature incorporate R&R into GIScience and UAS research?

- What are key barriers to R&R affecting geospatial UAS workflows?

- What best practices can scientists adopt in future research to achieve a standard of R&R that extends the value and impact of their work to more stakeholders?

2. Context and Rise of Reproducibility and Replicability

2.1. Convergence in Definitions

2.2. Scope of the Problem

3. Literature on Reproducibility and Replicability in GIScience

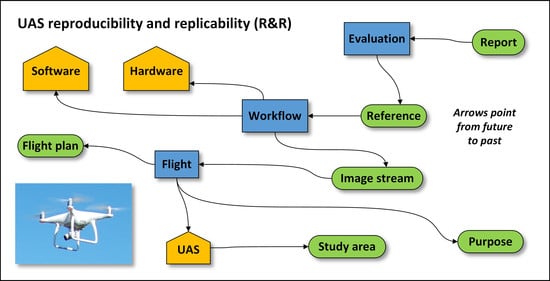

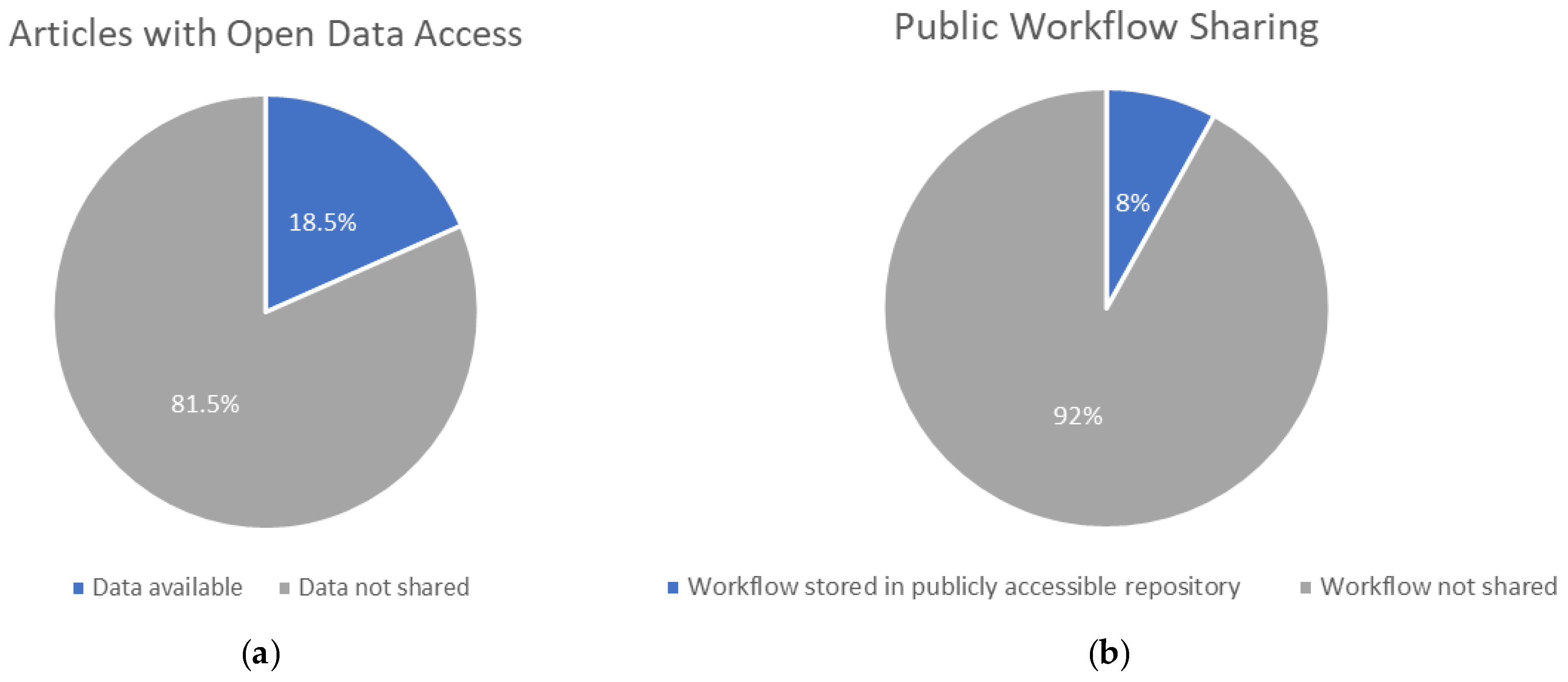

3.1. UAS Remote Sensing and Photogrammetry Workflows

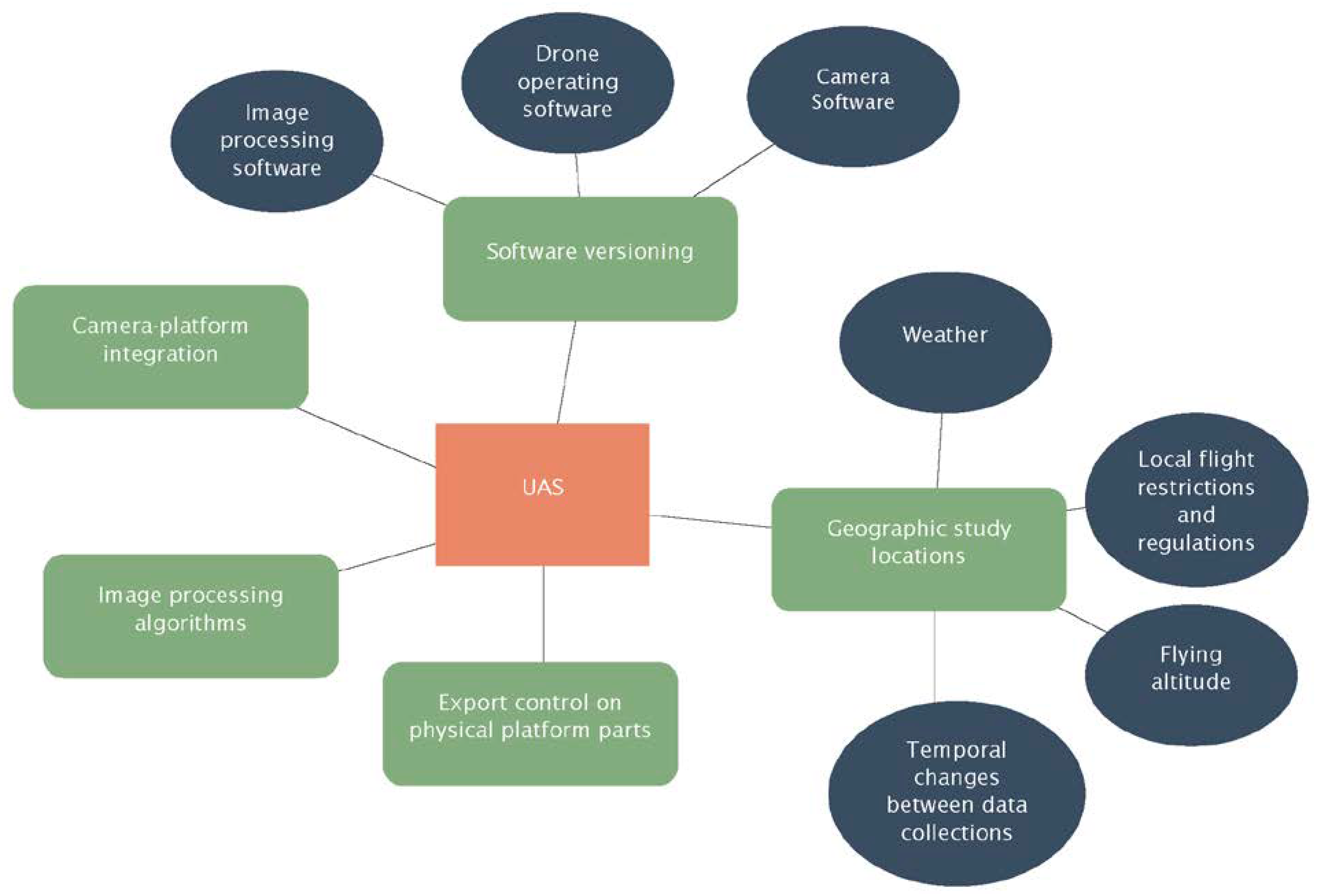

3.2. Key Barriers to Reproducibility and Replicability Affecting Geospatial UAS

4. Case Studies

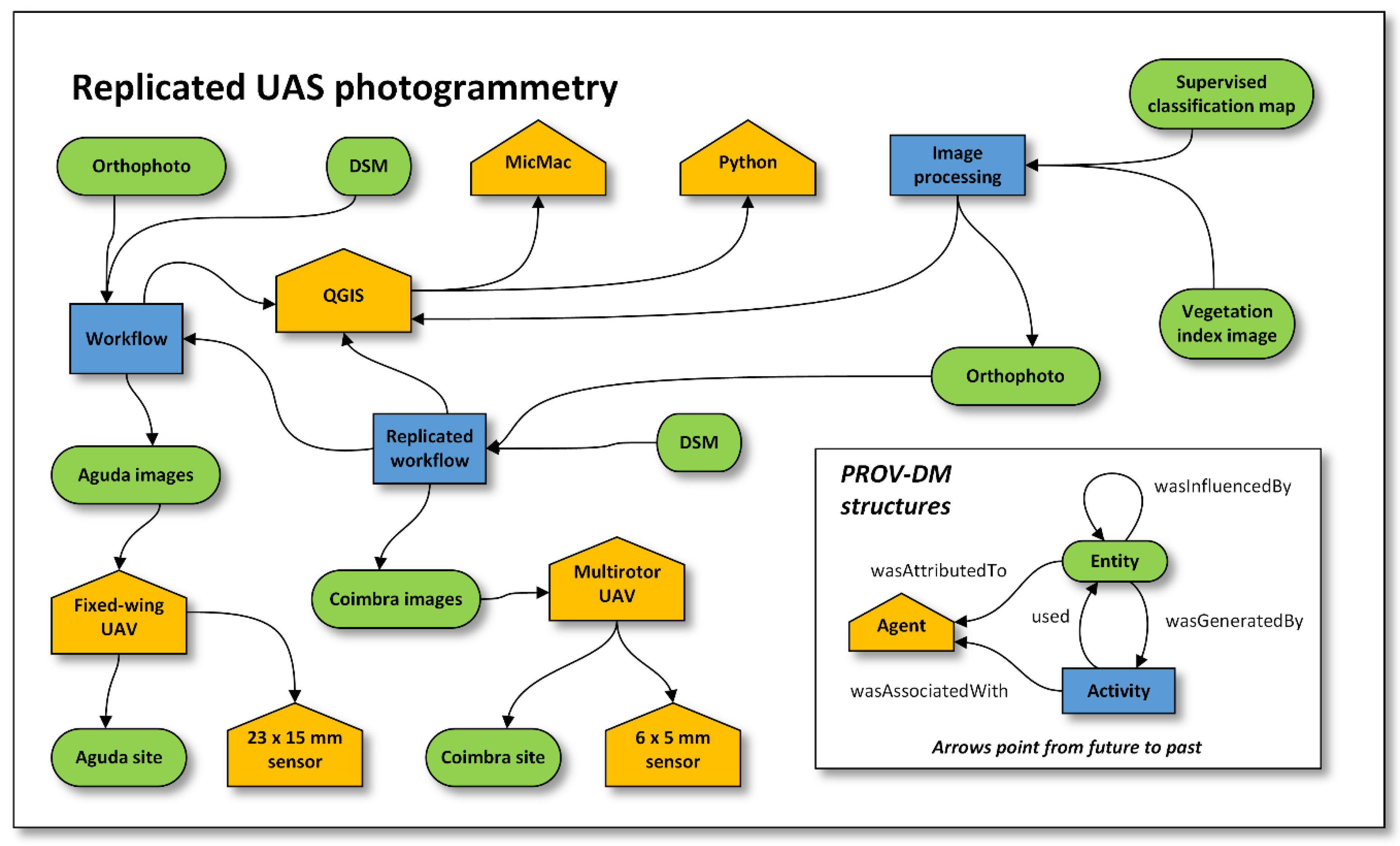

4.1. Case Study: Open Source Application for UAS Photogrammetry

4.2. Case Study: Open Source Landcover Mapping Applications for UAS

5. Key Recommendations

5.1. Communicating the Importance of Replicability and Reproducibility

5.2. Increasing Access to Provenance and Metadata

5.3. Adapting Publishing Practices

5.4. Addressing the Issue of Geographic Variability

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Baker, M. 1500 Scientists Lift the Lid on Reproducibility. Nat. News 2016, 533, 452–454.

2. Romero, F. Philosophy of Science and the Replicability Crisis. Philos. Compass 2019, 14, 14.

3. Munafo, M.R.; Nosek, B.A.; Bishop, D.V.M.; Button, K.S.; Chambers, C.D.; du Sert, N.P.; Simonsohn, U.; Wagenmakers, E.; Ware, J.J.; Ioannidis, J.P.A. A Manifesto for Reproducible Science. Nat. Hum. Behav. 2017, 1, 21.

4. Peng, R. The Reproducibility Crisis in Science: A Statistical Counterattack. Significance 2015, 12, 30–32.

5. Fanelli, D. Opinion: Is Science Really Facing a Reproducibility Crisis, and Do We Need It To? Proc. Natl. Acad. Sci. USA 2018, 115, 2628–2631.

6. Sexton, K.; Ramage, J.; Lennertz, L.; Warn, S.; McGee, J. Research Reproducibility & Replicability Webinar. 2020. Available online: https://scholarworks.uark.edu/oreievt/1 (accessed on 6 March 2021).

7. Arribas-Bel, D.; Reades, J. Geography and Computers: Past, Present, and Future. Geogr. Compass 2018, 12, e12403.

8. Konkol, M.; Kray, C.; Pfeiffer, M. Computational Reproducibility in Geoscientific Papers: Insights from a Series of Studies with Geoscientists and a Reproduction Study. Int. J. Geogr. Inf. Sci. 2019, 33, 408–429.

9. Tullis, J.; Corcoran, K.; Ham, R.; Kar, B.; Williamson, M. Multiuser Concepts and Workflow Replicability in SUAS Applications. In Applications of Small Unmanned Aircraft Systems; CRC Press: New York, NY, USA, 2019; pp. 35–56. ISBN 978-0-429-52085-3.

10. Buck, S. Solving Reproducibility. Science 2015, 348, 1403.

11. Tullis, J.; Kar, B. Where Is the Provenance? Ethical Replicability and Reproducibility in GIScience and Its Critical Applications. Ann. Am. Assoc. Geogr. 2021, 111, 1318–1328.

12. Singleton, A.D.; Spielman, S.; Brunsdon, C. Establishing a Framework for Open Geographic Information Science. Int. J. Geogr. Inf. Sci. 2016, 30, 1507–1521.

13. Balz, T.; Rocca, F. Reproducibility and Replicability in SAR Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3834–3843.

14. Roco, M.; Bainbridge, W.; Tonn, B.; Whitesides, G. (Eds.) Converging Knowledge, Technology, and Society: Beyond Convergence of Nano-Bio-Info-Cognitive Technologies; Springer: Dordrecht, The Netherlands; Heidelberg, Germany; New York, NY, USA; London, UK, 2013; ISBN 978-3-319-02203-1.

15. Yao, H.; Qin, R.; Chen, X. Unmanned Aerial Vehicle for Remote Sensing Applications—A Review. Remote Sens. 2019, 11, 1443.

16. Noor, N.; Abdullah, A.; Hasim, M. Remote Sensing UAV/Drones and Its Applications for Urban Areas: A Review. IOP Conf. Ser. Earth Environ. Sci. 2018, 169, 012003.

17. Adao, T.; Hruska, J.; Padua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110.

18. Aasen, H.; Honkavaara, E.; Lucieer, A.; Zarco-Tejada, P. Quantitative Remote Sensing at Ultra-High Resolution with UAV Spectroscopy: A Review of Sensor Technology, Measurement Procedures, and Data Correction Workflows. Remote Sens. 2018, 10, 1091.

19. Boesch, R. Thermal Remote Sensing with UAV-Based Workflows. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W6, 41–46.

20. Larsen, P.; von Ins, M. The Rate of Growth in Scientific Publication and the Decline in Coverage Provided by Science Citation Index. Scientometrics 2010, 84, 575–603.

21. Reproducibility, n. OED. Available online: https://www.oed.com/view/Entry/163100 (accessed on 17 March 2021).

22. Plesser, H. Reproducibility vs. Replicability: A Brief History of a Confused Terminology. Front. Neuroinform. 2017, 11, 76.

23. Waters, N. Motivations and Methods for Replication. Ann. Am. Assoc. Geogr. 2020, 109.

24. National Academies of Sciences, Engineering, and Medicine. Reproducibility and Replicability in Science; The National Academies Press: Washington, DC, USA, 2019; ISBN 978-0-309-48616-3.

25. Kedron, P.; Frazier, A.; Trgovac, A.; Nelson, T.; Fotheringham, S. Reproducibility and Replicability in Geographical Analysis. Geogr. Anal. 2019, 53, 135–147.

26. Davies, W.K.D. The Need for Replication in Human Geography: Some Central Place Examples. Tijdschr. Econ. Soc. Geogr. 1968, 59, 145–155.

27. Sui, D.; Kedron, P. Reproducibility and Replicability in the Context of the Contested Identities of Geography. Ann. Am. Assoc. Geogr. 2021, 111, 1275–1283.

28. Gil, Y.; David, C.; Demir, I.; Essawy, B.; Fulweiler, R.; Goodall, J.; Karlstrom, L.; Lee, H.; Mills, H.; Oh, J.; et al. Toward the Geoscience Paper of the Future: Best Practices for Documenting and Sharing Research from Data to Software to Provenance. Earth Space Sci. 2016, 3, 388–415.

29. Walsh, J.; Dicks, L.; Sutherland, W. The Effect of Scientific Evidence on Conservation Practitioners’ Management Decisions. Soc. Conserv. Biol. 2015, 29, 88–89.

30. Goodchild, M. Convergent GIScience. In Proceedings of the Convergent GIScience Priorities Workshop, Fayetteville, AR, USA, 28–29 October 2019.

31. Guttinger, S. The Limits of Replicability. Eur. J. Philos. Sci. 2020, 10, 10.

32. Wainwright, J. Is Critical Human Geography Research Replicable? Ann. Am. Assoc. Geogr. 2020, 111, 1284–1290.

33. Sturdivant, E.; Lentz, E.; Thieler, E.; Farris, A.; Weber, K.; Remsen, D.; Miner, S.; Henderson, R. UAS-SfM for Coastal Research: Geomorphic Feature Extraction and Land Cover Classification from High-Resolution Elevation and Optical Imagery. Remote Sens. 2017, 9, 1020.

34. Hunt, E.R.; Daughtry, C.; Mirsky, S.; Hively, W. Remote Sensing with Unmanned Aircraft Systems for Precision Agriculture Applications. In Proceedings of the 2013 Second International Conference on Agro-Geoinformatics, Fairfax, VA, USA, 12–16 August 2013; pp. 131–134.

35. Dainelli, R.; Toscano, P.; Di Gennaro, S.F.; Matese, A. Recent Advances in Unmanned Aerial Vehicles Forest Remote Sensing—A Systematic Review. Part II: Research Applications. Forests 2021, 12, 397.

36. Stodola, P.; Drozd, J.; Mazal, J.; Hodicky, J.; Prochazka, D. Cooperative Unmanned Aerial System Reconnaissance in a Complex Urban Environment and Uneven Terrain. Sensors 2019, 19, 3754.

37. Zhang, Y.; Yuan, X.; Li, W.; Chen, S. Automatic Power Line Inspection Using UAV Images. Remote Sens. 2017, 9, 824.

38. Satterlee, L. Climate Drones: A New Tool for Oil and Gas Air Emission Monitoring. Environ. Law Report. News Anal. 2016, 46, 11069–11083.

39. Vincenzi, D.; Ison, D.; Terwilliger, B. The Role of Unmanned Aircraft Systems (UAS) in Disaster Response and Recovery Efforts: Historical, Current and Future. In Proceedings of the AUVSI Unmanned Systems 2014, Orlando, FL, USA, 12–15 May 2014; pp. 763–771. Available online: https://commons.erau.edu/publication/641 (accessed on 12 July 2022).

40. Nahon, A.; Molina, P.; Blazquez, M.; Simeon, J.; Capo, S.; Ferrero, C. Corridor Mapping of Sandy Coastal Foredunes with UAS Photogrammetry and Mobile Laser Scanning. Remote Sens. 2019, 11, 1352.

41. Seymour, A.C.; Dale, J.; Hammill, P.N.; Johnston, D.W. Automated Detection and Enumeration of Marine Wildlife Using Unmanned Aircraft Systems (UAS) and Thermal Imagery. Sci. Rep. 2017, 7, 45127.

42. Guo, Y.; Fu, Y.H.; Chen, S.; Robin Bryant, C.; Li, X.; Senthilnath, J.; Sun, H.; Wang, S.; Wu, Z.; de Beurs, K. Integrating Spectral and Textural Information for Identifying the Tasseling Date of Summer Maize Using UAV Based RGB Images. Int. J. Appl. Earth Obs. Geoinform. 2021, 102, 102435.

43. Erenoglu, R.; Akcay, O.; Erenoglu, O. An UAS-Assisted Multi-Sensor Approach for 3D Modeling and Reconstruction of Cultural Heritage Site. J. Cult. Herit. 2017, 26, 79–90.

44. Beaver, J.; Baldwin, R.; Messinger, M.; Newbolt, C.; Ditchkoff, S.; Silman, M. Evaluating the Use of Drones Equipped with Thermal Sensors as an Effective Method for Estimating Wildlife. Wildl. Soc. Bull. 2020, 44, 434–443.

45. Giordan, D.; Manconi, A.; Remondino, F.; Nex, F. Use of Unmanned Aerial Vehicles in Monitoring Application and Management of Natural Hazards. Geomat. Nat. Hazards Risk 2017, 8, 1–4.

46. Changchun, L.; Li, S.; Hai-Bo, W.; Tianjie, L. The Research on Unmanned Aerial Vehicle Remote Sensing and Its Applications; IEEE: Shenyang, China, 2010; pp. 644–647.

47. Colomina, I.; Molina, P. Unmanned Aerial Systems for Photogrammetry and Remote Sensing: A Review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

48. Singhal, G.; Bansod, B.; Mathew, L. Unmanned Aerial Vehicle Classification, Applications and Challenges: A Review. Unmanned Aer. Veh. 2018. preprints.

49. Zahari, N.; Karim, M.; Nurhikmah, F.; Aziz, N.; Zawawi, M.; Mohamad, D. Review of Unmanned Aerial Vehicle Photogrammetry for Aerial Mapping Applications. In Lecture Notes in Civil Engineering; Springer: Singapore, 2021; Volume 132, pp. 669–676. ISBN 978-981-336-310-6.

50. Sigala, A.; Langhals, B. Applications of Unmanned Aerial Systems (UAS): A Delphi Study Projecting Future UAS Missions and Relevant Challenges. Drones 2020, 4, 8.

51. Idries, A.; Mohamed, N.; Jawhar, I.; Mohamed, F.; Al-Jaroodi, J. Challenges of Developing UAV Applications: A Project Management View. In Proceedings of the 2015 International Conference on Industrial Engineering and Operations Management (IEOM), Dubai, United Arab Emirates, 3–5 March 2015; IEEE: New York, NY, USA, 2015; pp. 1–10.

52. Clapuyt, F.; Vanacker, V.; Van Oost, K. Reproducibility of UAV-Based Earth Topography Reconstructions Based on Structure-from-Motion Algorithms. Geomorphology 2016, 260, 4–15.

53. Mlambo, R.; Woodhouse, I.H.; Gerard, F.; Anderson, K. Structure from Motion (SfM) Photogrammetry with Drone Data: A Low Cost Method for Monitoring Greenhouse Gas Emissions from Forests in Developing Countries. Forests 2017, 8, 68.

54. Lisein, J.; Pierrot-Deseilligny, M.; Bonnet, S.; Lejeune, P. A Photogrammetric Workflow for the Creation of a Forest Canopy Height Model from Small Unmanned Aerial System Imagery. Forests 2013, 4, 922–944.

55. Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR System with Application to Forest Inventory. Remote Sens. 2012, 4, 1519–1543.

56. Lehmann, J.R.K.; Prinz, T.; Ziller, S.R.; Thiele, J.; Heringer, G.; Meira-Neto, J.A.A.; Buttschardt, T.K. Open-Source Processing and Analysis of Aerial Imagery Acquired with a Low-Cost Unmanned Aerial System to Support Invasive Plant Management. Front. Environ. Sci. 2017, 5, 44.

57. Goncalves, G.R.; Perez, J.A.; Duarte, J. Accuracy and Effectiveness of Low Cost UASs and Open Source Photogrammetric Software for Foredunes Mapping. Int. J. Remote Sens. 2018, 39, 5059–5077.

58. Jaud, M.; Passot, S.; Le Bivic, R.; Delacourt, C.; Grandjean, P.; Le Dantec, N. Assessing the Accuracy of High Resolution Digital Surface Models Computed by PhotoScan® and MicMac® in Sub-Optimal Survey Conditions. Remote Sens. 2016, 8, 465.

59. Harwin, S.; Lucieer, A. Assessing the Accuracy of Georeferenced Point Clouds Produced via Multi-View Stereopsis from Unmanned Aerial Vehicle (UAV) Imagery. Remote Sens. 2012, 4, 1573–1599.

60. Ludwig, M.; Runge, C.; Friess, N.; Koch, T.; Richter, S.; Seyfried, S.; Wraase, L.; Lobo, A.; Sebastia, M.-T.; Reudenbach, C.; et al. Quality Assessment of Photogrammetric Methods—A Workflow for Reproducible UAS Orthomosaics. Remote Sens. 2020, 12, 3831.

61. Park, J.W.; Jeong, H.H.; Kim, J.S.; Choi, C.U. Development of Open Source-Based Automatic Shooting and Processing UAV Imagery for Orthoimage Using Smart Camera UAV. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; Volume XLI-B7, pp. 941–944.

62. Ahmadabadian, A.H.; Robson, S.; Boehm, J.; Shortis, M.; Wenzel, K.; Fritsch, D. A Comparison of Dense Matching Algorithms for Scaled Surface Reconstruction Using Stereo Camera Rigs. ISPRS J. Photogramm. Remote Sens. 2013, 78, 157–167.

63. Galland, O.; Bertelsen, H.; Guldstrand, F.; Girod, L.; Johannessen, R.; Bjugger, F.; Burchardt, S.; Mair, K. Application of Open-Source Photogrammetric Software MicMac for Monitoring Surface Deformation in Laboratory Models. J. Geophys. Res. Solid Earth 2016, 121, 2852–2872.

64. Forsmoo, J.; Anderson, K.; Macleod, C.; Wilkinson, M.; DeBell, L.; Brazier, R. Structure from Motion Photogrammetry in Ecology: Does the Choice of Software Matter? Ecol. Evol. 2019, 9, 12964–12979.

65. Rocchini, D.; Petras, V.; Petrasova, A.; Horning, N.; Furtkevicova, L.; Neteler, M.; Leutner, B.; Wegmann, M. Open Data and Open Source for Remote Sensing Training in Ecology. Ecol. Inform. 2017, 40, 57–61.

66. Benassi, F.; Dall’Asta, E.; Diotri, F.; Forlani, G.; di Cella, U.; Roncella, R.; Santise, M. Testing Accuracy and Repeatability of UAV Blocks Oriented with GNSS-Supported Aerial Triangulation. Remote Sens. 2017, 9, 172.

67. Teodoro, A.C.; Araujo, R. Comparison of Performance of Object-Based Image Analysis Techniques Available in Open Source Software (Spring and Orfeo Toolbox/Monteverdi) Considering Very High Spatial Resolution Data. J. Appl. Remote Sens. 2016, 10, 016011.

68. Anders, N.; Smith, M.; Cammeraat, E.; Keesstra, S. Reproducibility of UAV-Based Photogrammetric Surface Models. In Proceedings of the EGU General Assembly 2016, Vienna, Austria, 17–22 April 2016.

69. Meng, L.; Peng, Z.; Zhou, J.; Zhang, J.; Zhenyu, L.; Baumann, A.; Du, Y. Real-Time Detection of Ground Objects Based on Unmanned Aerial Vehicle Remote Sensing with Deep Learning: Application in Excavator Detection for Pipeline Safety. Remote Sens. 2020, 12, 182.

70. Baca, T.; Petrlik, M.; Vrba, M.; Spurny, V.; Penicka, R.; Hert, D.; Saska, M. The MRS UAV System: Pushing the Frontiers of Reproducible Research, Real-World Deployment, and Education with Autonomous Unmanned Aerial Vehicles. J. Intell. Robot. Syst. 2021, 102, 26.

71. Knoth, C.; Nust, D. Reproducibility and Practical Adoption of GEOBIA with Open-Source Software in Docker Containers. Remote Sens. 2017, 9, 290.

72. Spate, O.H.K. Quantity and Quality in Geography. Ann. Assoc. Am. Geogr. 1960, 50, 377–394.

73. Casadevall, A.; Fang, F. Reproducible Science. Infect. Immun. 2010, 78, 4972–4975.

74. Wilson, J.; Butler, K.; Gao, S.; Hu, Y.; Li, W.; Wright, D. A Five-Star Guide for Achieving Replicability and Reproducibility When Working with GIS Software and Algorithms. Ann. Am. Assoc. Geogr. 2021, 111, 1311–1317.

75. Dangermond, J.; Goodchild, M. Building Geospatial Infrastructure. Geo-Spat. Inf. Sci. 2020, 23, 1–9.

76. Bunge, W. Fred K. Schaefer and the Science of Geography. Ann. Assoc. Am. Geogr. 1979, 69, 128–132.

77. Nust, D.; Pebesma, E. Practical Reproducibility in Geography and Geosciences. Ann. Am. Assoc. Geogr. 2021, 111, 1300–1310.

78. Duarte, L.; Teodoro, A.C.; Moutinho, O.; Goncalves, J.A. Open-Source GIS Application for UAV Photogrammetry Based on MicMac. Int. J. Remote Sens. 2017, 38, 3181–3202.

79. Moreau, L.; Missier, P. PROV-DM: The PROV Data Model. Available online: https://www.w3.org/TR/prov-dm/ (accessed on 17 August 2022).

80. Horning, N.; Fleishman, E.; Ersts, P.; Fogarty, F.; Zilig, M. Mapping of Land Cover with Open-Source Software and Ultra-High-Resolution Imagery Acquired with Unmanned Aerial Vehicles. Remote Sens. Ecol. Conserv. 2020, 6, 487–497.

81. Granell, C.; Sileryte, R.; Nust, D. Reproducible Graduate Theses in GIScience. In Proceedings of the Paper Presentations, Research Reproducibility, Gainesville, FL, USA, 2–3 December 2020.

82. Helregel, N. Engaging Undergraduates on Issues of Reproducibility. In Proceedings of the Librarians Building Momentum for Reproducibility, Online, 28 January 2020.

83. Frery, A.; Gomez, L.; Medeiros, A. A Badging System for Reproducibility and Replicability in Remote Sensing Research. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4988–4995.

84. Santana-Perez, I.; Perez-Hernandez, M.S. Towards Reproducibility in Scientific Workflows: An Infrastructure-Based Approach. Sci. Program. 2015, 2015, 243180.

85. Bollen, K.; Cacioppo, J.; Kaplan, R.; Krosnick, J.; Olds, J. Social, Behavioral, and Economic Sciences Perspectives on Robust and Reliable Science; National Science Foundation: Alexandria, VA, USA, 2015; p. 29.

86. Miyakawa, T. No Raw Data, No Science: Another Possible Source of the Reproducibility Crisis. Mol. Brain 2020, 13, 24.

87. Ohuru, R. A Method for Enhancing Shareability and Reproducibility of Geoprocessing Workflows. Case Study: Integration of Crowdsourced Geoinformation, Satellite, and in-Situ Data for Water Resource Monitoring. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2019.

88. Anselin, L.; Rey, S.J.; Li, W. Metadata and Provenance for Spatial Analysis: The Case of Spatial Weights. Int. J. Geogr. Inf. Sci. 2014, 28, 2261–2280.

89. Instructions for Authors | Remote Sensing. Available online: https://www.mdpi.com/journal/remotesensing/instructions (accessed on 16 March 2021).

90. Editorial Policies | Nature Portfolio. Available online: https://www.nature.com/nature-research/editorial-policies (accessed on 16 March 2021).

91. Colom, M.; Dagobert, T.; de Franchis, C.; von Gioi, R.; Hessel, C. Using the IPOL Journal for Online Reproducible Research in Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6384–6390.

92. Nust, D.; Konkol, M.; Pebesma, E.; Kray, C.; Schutzeichel, M.; Przibytzin, H.; Lorenz, J. Opening the Publication Process with Executable Research Compendia. D-Lib. Mag. 2017, 23, 12.

93. Nust, D. Container Images for Research Librarians 101. In Proceedings of the Librarians Building Momentum for Reproducibility, Online, 28 January 2020.

94. Stodden, V. Enabling Reproducible Research: Open Licensing for Scientific Innovations. Int. J. Commun. Law Policy 2009, 55. Available online: https://ssrn.com/abstract=1362040 (accessed on 12 July 2022).

95. Etienne, A.; Ahmad, A.; Aggarwal, V.; Saraswat, D. Deep Learning-Based Object Detection System for Identifying Weeds Using UAS Imagery. Remote Sens. 2021, 13, 5182.

96. Kenett, R.; Shmueli, G. Clarifying the Terminology That Describes Scientific Reproducibility. Nat. Methods 2015, 12, 699.

| Category | Barrier | Applied Example |
|---|---|---|
| Applicable to many scientific disciplines | Terminology [11] | A researcher publishes work and claims it is “reproducible” but does not provide access to source code or original data. |
| Applicable to many scientific disciplines | Time [1,25,28] | A research plan specifies five different workflow trials, but as the submission deadline draws near, the researcher only finds time to run the workflow once before analyzing the results. |
| Applicable to many scientific disciplines | Finance [10,28] | A researcher wants a graduate student to write a script automating their workflow that can be published alongside an upcoming journal article. However, the project is quickly running out of grant funding. Paying the graduate student’s hourly wages to complete the script would require petitioning for additional funds, so the researcher instead publishes the results without it. |
| Applicable to many scientific disciplines | Publication pressure [8,72] | A researcher believes that, to be considered for tenure next year, he should publish five manuscripts over the coming year. This leaves only enough time to run each project workflow once and to minimize writing time by outlining only the basic steps of each experiment. |
| Applicable to many scientific disciplines | Article format [28] | A researcher constructs a complex image processing workflow that requires four different open-source software (OSS) packages. He does his best to describe each step in the methods section of his paper, but another researcher finds the workflow impossible to follow from the description alone. She needs the actual script to correctly replicate the work on her own image data. |
| Geography and GIScience barriers | Issue of place [26,27,76] | A researcher publishes a useful image processing workflow and includes a link to the script used in the original experiment. Another researcher downloads the script and runs it on his own imagery from a different part of the world. He finds that the hard-coded script variables do not account for the landscape features in his imagery, and his results differ significantly from the original research. |
| Geography and GIScience barriers | Conceptualization of phenomena [25,75] | A researcher publishes a workflow that analyzes imagery for potential environmental hazards. A researcher from another country replicates the workflow with her own data to assess the risk in her country. The agency funding the work is unhappy because the analysis failed to identify a type of environmental hazard common in that country: the hazard was uncommon in the country where the original workflow was created, so it had been excluded from the workflow. |
| Geography and GIScience barriers | Different educational backgrounds [77] | A researcher with a background in computer science publishes a remote sensing workflow with what he considers a very detailed write-up of the steps needed to complete it. A geoscientist wants to replicate the workflow for her own remote sensing project. She tries to follow the write-up in the article but finds the instructions too high-level for anyone without a computer science background to follow. |
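The “Issue of place” barrier above turns on hard-coded script variables. The minimal Python sketch below is a hypothetical illustration, not code from any of the cited studies: it assumes a simple Excess Green (ExG) vegetation index with a classification threshold, and shows that threshold exposed as an explicit parameter and recorded with the output instead of being buried as a constant. The function names, the pixel structure, and the sample values are all invented for illustration.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical pixel structure: (red, green, blue) values.
Pixel = Tuple[float, float, float]


def excess_green(pixel: Pixel) -> float:
    """Excess Green index on chromatic coordinates: ExG = 2g - r - b."""
    red, green, blue = pixel
    total = red + green + blue
    if total == 0:
        return 0.0  # avoid division by zero for all-black pixels
    return (2 * green - red - blue) / total


def classify_vegetation(
    pixels: List[Pixel], exg_threshold: float
) -> Tuple[List[int], Dict[str, object]]:
    """Label pixels as vegetation (1) or not (0).

    The threshold is an explicit argument rather than a hard-coded constant,
    and it is returned in a small provenance record alongside the labels.
    """
    labels = [1 if excess_green(p) > exg_threshold else 0 for p in pixels]
    provenance = {
        "index": "ExG",
        "exg_threshold": exg_threshold,
        "n_pixels": len(pixels),
    }
    return labels, provenance


if __name__ == "__main__":
    # Illustrative values only, not data from any study.
    sample = [(0.2, 0.5, 0.1), (0.4, 0.35, 0.3)]
    labels, record = classify_vegetation(sample, exg_threshold=0.1)
    print(labels)  # [1, 0]
    print(json.dumps(record))
```

Under these assumptions, a researcher applying the script to imagery from a different landscape changes only the `exg_threshold` argument, and the JSON record documents which value produced a given map, which also speaks to the workflow metadata recommendation discussed in the case studies.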

| R&R Recommendations | Case Study: Duarte et al. [78] | Case Study: Horning et al. [80] |
|---|---|---|
| Use of OSS throughout workflow | Yes | Yes |
| Automated workflow or script | Yes | No |
| Graphical user interface (GUI) for automated workflow | Yes | No |
| Publication of source data for open access | No | Yes |
| Publication of workflow for open access | Yes | Yes |
| Working link to data or workflow repository | No | Yes |
| Sufficient accompanying workflow metadata | Yes | Yes |
| Reproduced or replicated workflow before publication | Yes | Yes |
| Publication of article in open access journal | No | Yes |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Howe, C.; Tullis, J.A. Context for Reproducibility and Replicability in Geospatial Unmanned Aircraft Systems. Remote Sens. 2022, 14, 4304. https://doi.org/10.3390/rs14174304
