4.4.1. Comparison with Other Methods on LEVIR-CD

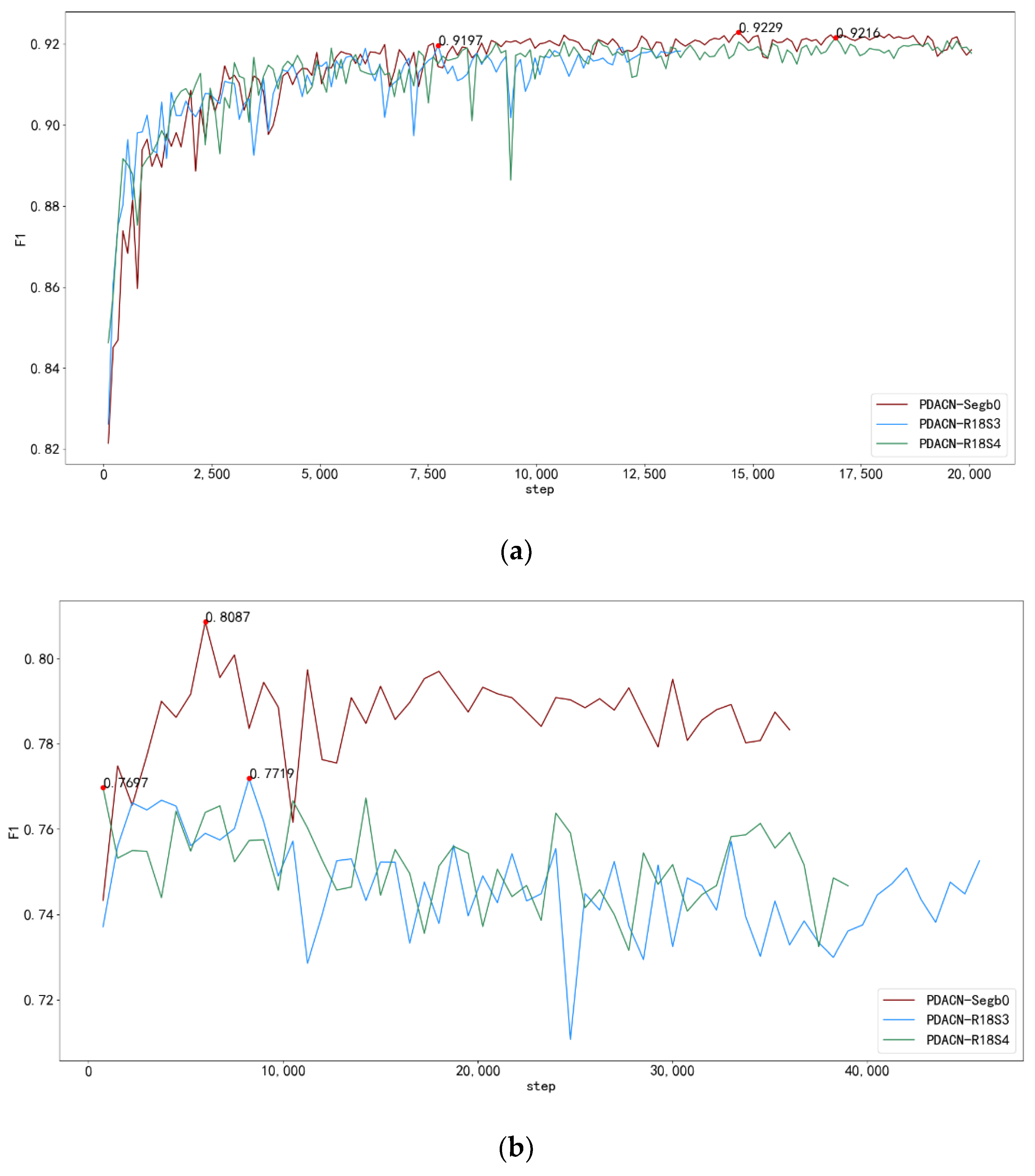

In this section, we compare PDACN with other CD methods on LEVIR-CD. The original paper on FC-EF and its two variants (FC-Siam-Conc and FC-Siam-Diff) did not report metrics on LEVIR-CD. To ensure a consistent comparison, we fully retrained all compared methods using the training approach and loss functions of this paper, and all of them were evaluated on the same test set.

Here, the quantitative results are given in terms of F1, IoU, precision, and recall, which are commonly used metrics for CD tasks; the results are shown in Table 3. Note that we used two inference strategies that differ in test image size (LEVIR-CD256 and LEVIR-CD1024). The first reproduced the test setting of the original paper [38]; i.e., the test set images were cropped into 2048 non-overlapping 256-sized image blocks for testing. The second directly used the 128 1024-sized test images for testing.
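The relation between the two test strategies can be checked with a short sketch. It assumes square images stored as lists of rows; the helper names `tile_offsets` and `crop` are hypothetical and are not taken from the paper's code.

```python
def tile_offsets(image_size, tile_size):
    """Return top-left (row, col) offsets of non-overlapping square tiles."""
    if image_size % tile_size != 0:
        raise ValueError("image_size must be a multiple of tile_size")
    steps = range(0, image_size, tile_size)
    return [(r, c) for r in steps for c in steps]


def crop(image, row, col, size):
    """Crop a size x size block from a 2-D image stored as a list of rows."""
    return [line[col:col + size] for line in image[row:row + size]]


# A 1024-sized image splits into a 4 x 4 grid of 256-sized blocks.
offsets = tile_offsets(1024, 256)
patches_per_image = len(offsets)

# 128 test images (as stated in the text) give the LEVIR-CD256 patch count.
total_patches = 128 * patches_per_image
print(patches_per_image, total_patches)  # 16 2048
```

Each 1024-sized test image yields 16 blocks, so the 128 test images produce the 2048 blocks used in the first strategy.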

When tested with the LEVIR-CD256 strategy, BIT achieved slight improvements of 0.81% and 1.33% in F1 and IoU, respectively, over the values reported in the original paper, which we believe was probably due to training hyperparameters such as the learning rate and training time used in this paper. Compared with LEVIR-CD256, LEVIR-CD1024 improved all metrics to different degrees, which we believe occurred because the second strategy used the full 1024-sized images from the original test set, which made the input of the network richer in global and texture information, thus improving the network’s expressive power.
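For reference, the four metrics can be computed from pixel-level counts of true positives, false positives, and false negatives for the change class. The following is a minimal sketch using the standard definitions; this section does not state how the counts are aggregated over the test set, so the function names and the example counts are illustrative assumptions.

```python
def cd_metrics(tp, fp, fn):
    """Precision, recall, F1, and IoU of the change class from pixel counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


def f1_from_iou(iou):
    """F1 and IoU computed from the same counts satisfy F1 = 2*IoU / (1 + IoU)."""
    return 2 * iou / (1 + iou)


# Hypothetical counts, chosen only to illustrate the calculation.
m = cd_metrics(tp=80, fp=10, fn=20)
print(round(m["precision"], 4), round(m["recall"], 4),
      round(m["f1"], 4), round(m["iou"], 4))
```

Because F1 is a monotonic function of IoU when both come from the same counts, the two metrics rank methods identically, although a given gain in F1 corresponds to a larger gain in IoU.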

Here, we focus on the results of the 1024-size inference test, which are shown in the part of Table 3 corresponding to LEVIR-CD1024. FC-EF obtained the lowest F1 (87.61%) and IoU (77.94%), while its Siamese variant (FC-Siam-Conc) obtained a slight improvement in IoU, which indicated that the Siamese network structure could improve model performance on this dataset. When the Siamese features were fused by taking the absolute value of their difference, the FC-Siam-Diff network yielded 1.52% and 2.45% higher F1 and IoU, respectively, than FC-EF. We believe this was because, in this building dataset, the semantic information of the buildings in the images from different periods was similar, so a simple absolute-value difference was sufficient for change-information extraction. In contrast, concatenation introduced unnecessary noise that limited the model's expressiveness while increasing the number of model parameters. BIT constructed spatiotemporal attention to enhance the features using a transformer before absolute-value differencing and obtained 2.62% and 2.45% higher IoU and precision, respectively, than FC-Siam-Diff. This showed that feature enhancement before absolute-value differencing could further reduce the noise difference between features from different time phases, thus improving the detection accuracy and reducing “pseudo-variation” caused by misclassification. Our method (PDACN) achieved the best results for all metrics among the methods tested. Compared with BIT, it achieved 1.55%, 2.64%, and 2.61% higher F1, IoU, and recall, respectively. We believe that BIT filtered out some useful information during feature reinforcement on this dataset, which resulted in some missed buildings, whereas our PDACN reduced “pseudo-variation” by pre-locating the change locations while reinforcing the integrity of individual buildings in the change areas. Finally, it should be noted that our network performed best with the SegFormer-b0 encoder, followed by ResNet18-S4 and ResNet18-S3.
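The difference between the two Siamese fusion modes discussed above can be illustrated with a toy sketch. It assumes a feature map is a list of channels, each a flat list of values; the function names, the feature values, and the channel widths are hypothetical and do not reproduce the actual FC-Siam layers.

```python
def fuse_concat(feat_a, feat_b):
    """Concatenate along the channel axis: C + C = 2C output channels."""
    return feat_a + feat_b


def fuse_abs_diff(feat_a, feat_b):
    """Element-wise absolute difference: C output channels."""
    return [[abs(x - y) for x, y in zip(ch_a, ch_b)]
            for ch_a, ch_b in zip(feat_a, feat_b)]


def conv3x3_params(in_channels, out_channels):
    """Weights plus biases of a 3x3 convolution applied to the fused features."""
    return in_channels * out_channels * 9 + out_channels


# Toy bi-temporal features: 2 channels, 2 spatial positions each.
feat_t1 = [[0.2, 0.9], [0.5, 0.1]]
feat_t2 = [[0.2, 0.1], [0.5, 0.7]]

diff = fuse_abs_diff(feat_t1, feat_t2)   # unchanged positions map to 0.0
concat = fuse_concat(feat_t1, feat_t2)   # twice as many channels

# With an assumed width of 64 channels per branch, the layer that follows
# concatenation sees 128 input channels instead of 64.
print(len(diff), len(concat))
print(conv3x3_params(64, 64), conv3x3_params(128, 64))
```

In this sketch the unchanged positions are exactly zero after differencing, while the concatenated features leave the comparison to later layers and double the input width of the next convolution, which is consistent with the parameter increase noted above.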

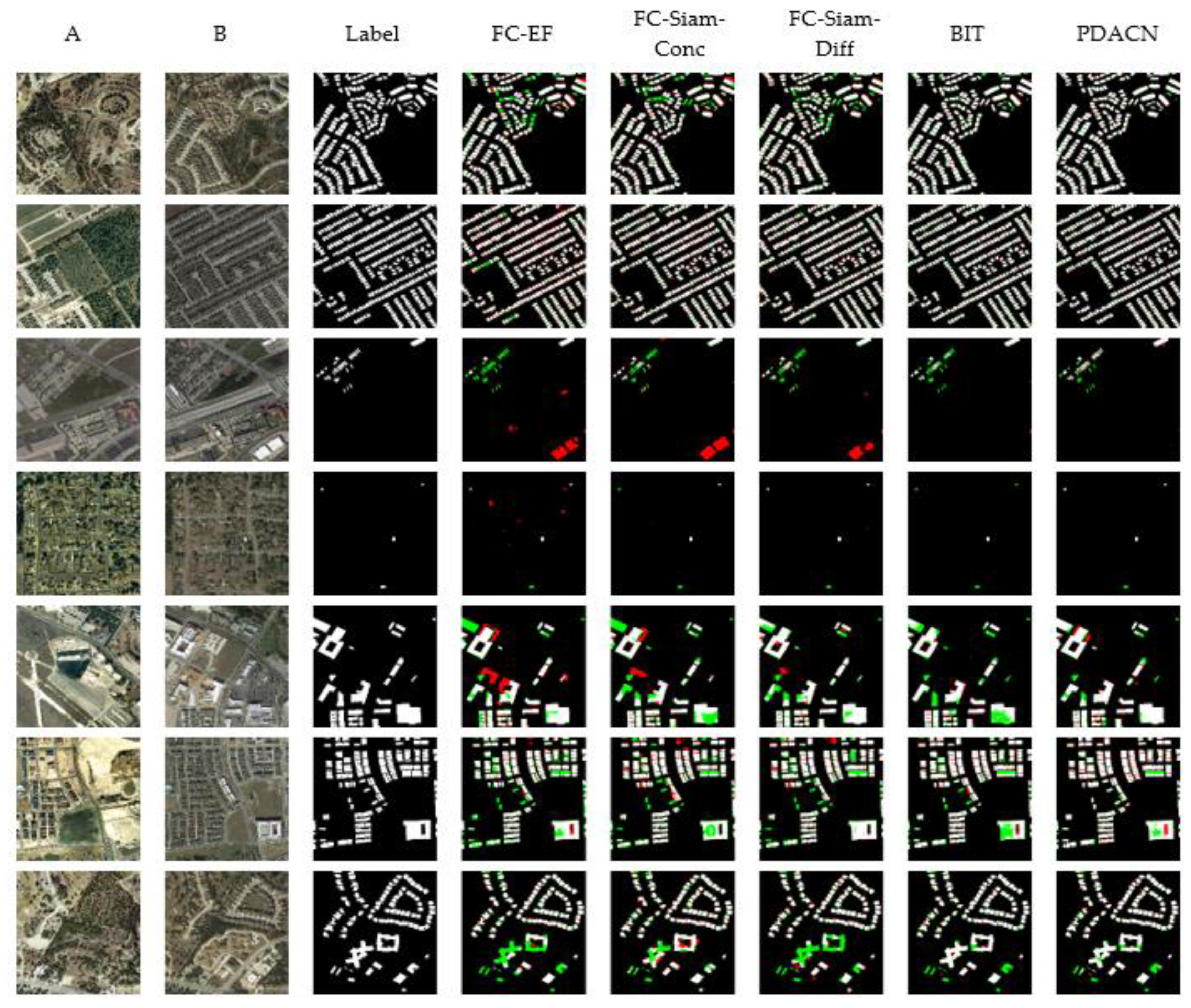

Figure 5 provides a visual comparison of the various methods on the LEVIR-CD dataset, where red and green represent false detections and missed detections, respectively. Since the SegFormer-b0 encoder performed best, we visualized only PDACN-Segb0. From top to bottom, the samples shown are test_20, test_45, test_21, test_77, test_80, test_103, and test_107 in the test set. Siam-Conc was able to determine the obvious range of variation in general. FC-Siam-Conc improved the visual effect on building edges but was not robust to pseudo-variations caused by lighting and shadows. According to the first and second rows, our PDACN and BIT yielded better building boundaries and more complete building profiles than the FC series of approaches. We believe that this occurred because both construct attention mechanisms, which makes them robust against the effects of lighting and alignment on the bi-temporal images. According to rows 3 and 4, PDACN yielded the fewest red and green parts of all methods, which indicated that our method maintained a good performance when detecting small buildings. According to rows 5, 6, and 7, all methods exhibited some lack of connectivity in the detection of relatively large buildings, which we believe was a misclassification phenomenon due to the lack of differentiation between bare soil and buildings.
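A color-coded error map of this kind can be produced from a binary prediction and its ground truth. The sketch below follows the red and green coding described above; rendering true positives in white and true negatives in black is an assumption, as are the function name and the toy masks.

```python
RED = (255, 0, 0)        # false detection: predicted change, no change in truth
GREEN = (0, 255, 0)      # missed detection: change in truth, not predicted
WHITE = (255, 255, 255)  # correctly detected change (assumed color)
BLACK = (0, 0, 0)        # correctly detected no-change (assumed color)


def error_map(pred, truth):
    """Map 2-D binary masks (lists of 0/1 rows) to a 2-D grid of RGB tuples."""
    colors = {(1, 1): WHITE, (0, 0): BLACK, (1, 0): RED, (0, 1): GREEN}
    return [[colors[(p, t)] for p, t in zip(pred_row, truth_row)]
            for pred_row, truth_row in zip(pred, truth)]


# Toy 2 x 2 example covering all four cases.
pred = [[1, 1],
        [0, 0]]
truth = [[1, 0],
         [1, 0]]
for row in error_map(pred, truth):
    print(row)
```

Counting the red and green pixels in such a map gives the false-positive and false-negative totals that determine precision and recall, respectively.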

4.4.2. Comparison with Other Methods on SYSU-CD

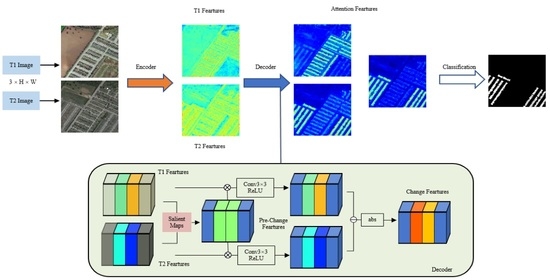

In this section, we focus on the comparison with DSAMNet [20], a method proposed together with SYSU-CD. Similar to the PDA attention mechanism proposed in this paper, DSAMNet reinforces features with channel and spatial attention mechanisms before extracting changes. Here, we again used F1, IoU, precision, and recall for quantitative comparison. We used the same training strategy as for LEVIR-CD except that the batch size was adjusted to 16. Since the data had a fixed size of 256, the DSAMNet experimental results did not differ much from those of the original paper. Therefore, we directly cited the metrics of the compared models from the original paper. Among them, BiDateNet used LSTM to enhance temporal information between images, and STANet included a spatiotemporal attention mechanism to enhance the features, using ResNet18 as the encoder. DSAMNet, which also used ResNet18 as an encoder, additionally included shallow-feature supervised reinforcement through the introduction of spatial and channel attention mechanisms. These networks were essentially similar to the approach used in this paper in that they modified the decoding part of the network on the basis of generating a change feature map.

As we can see in Table 4, compared with the results on the building-only LEVIR-CD dataset, the F1, precision, recall, and IoU of our model (PDACN-Segb0) on the SYSU-CD dataset decreased by 10.27%, 11.62%, 8.96%, and 16.16%, respectively. This was because SYSU-CD contained not only changes in prominent artificial structures such as buildings but also denser and more complex changes, such as the expansion of built-up areas and the conversion of grass to bare soil. The resulting wide variety of change types in complex scenes made CD more difficult.

In addition, our method outperformed DSAMNet in all metrics, especially with the SegFormer-b0 encoder, for which the F1 and IoU were 6.10% and 4.37% higher, respectively. This showed that our method could still maintain excellent results in complex change scenes.

When we used the same encoder as DSAMNet (ResNet18), we obtained improvements of 0.91%, 8.27%, and 1.22% in F1, precision, and IoU, respectively, with the PDACN-R18S4 structure, and of 2.02%, 6.95%, and 2.76% with the PDACN-R18S3 structure. Furthermore, the transformer-based encoder was more accurate than the convolutional architecture, and R18S3, which has fewer network parameters, returned better results than R18S4. We believe this was because the transformer-based network generalized better while the convolutional architecture was more likely to overfit this dataset.

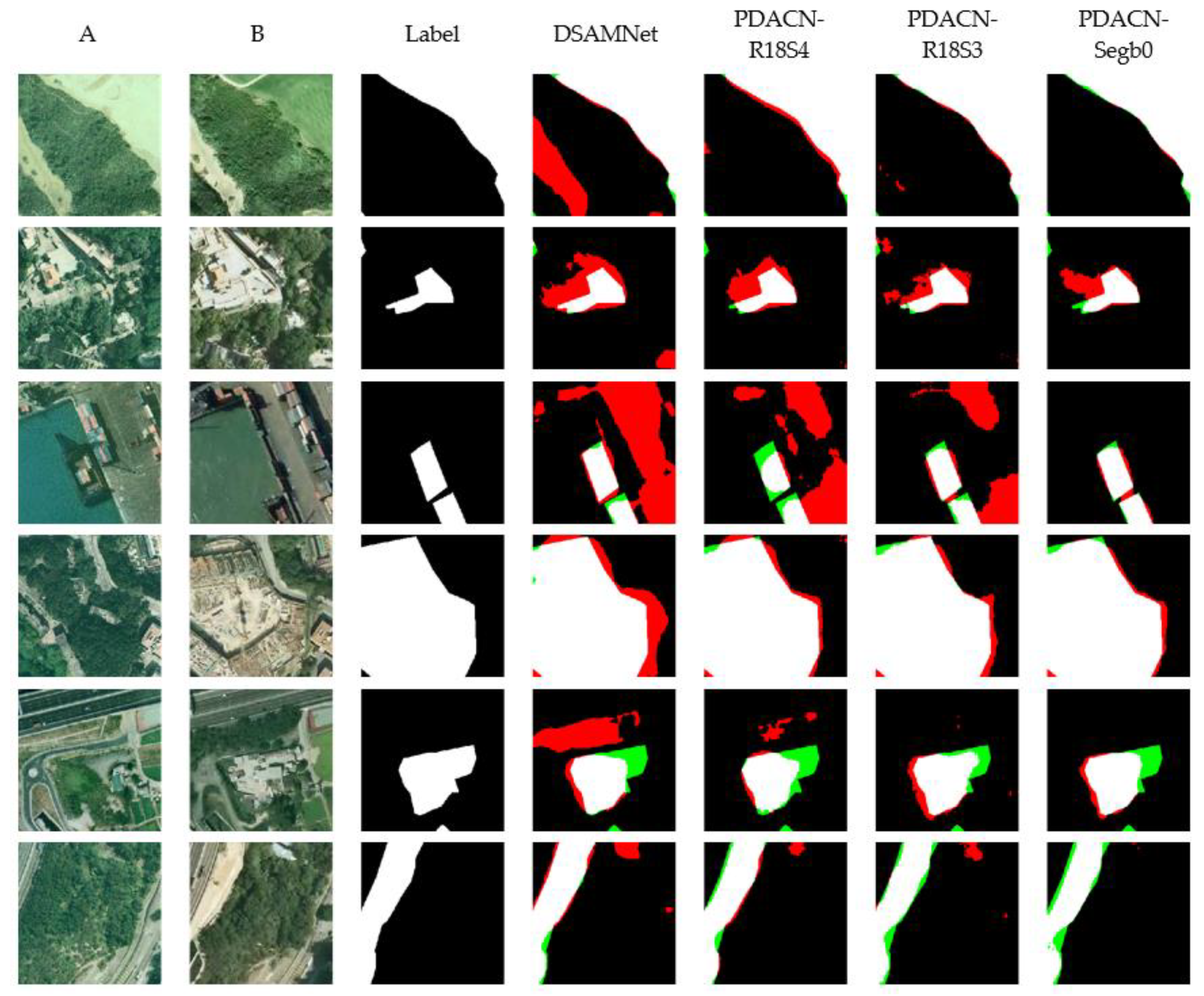

Figure 6 shows a visual comparison between PDACN and DSAMNet on the SYSU-CD test dataset, where red and green represent false detections and missed detections, respectively. Here, we selected some representative types of changes in the test set for visualization (from top to bottom, with the sample names in parentheses): vegetation changes (01536), pre-construction foundation work (01524), marine construction (00028), suburban expansion (00101), new urban buildings (00503), and road expansion (00147). Overall, compared with DSAMNet, the red parts in the last three columns of the figure show that our method yielded significantly fewer false detections under the transformer architecture. In particular, for the vegetation change in row 1, DSAMNet incorrectly detected roads with a color mismatch caused by lighting factors, while our PDACN was able to overcome this difficulty. For the other types of change, R18S3 and R18S4 with convolutional structures still showed false detections similar to those of DSAMNet, while Segb0 with a transformer architecture performed best, which indicated that the latter had a better generalization ability for complex change scenes.