Aerial Image Dehazing Using Reinforcement Learning

Abstract

1. Introduction

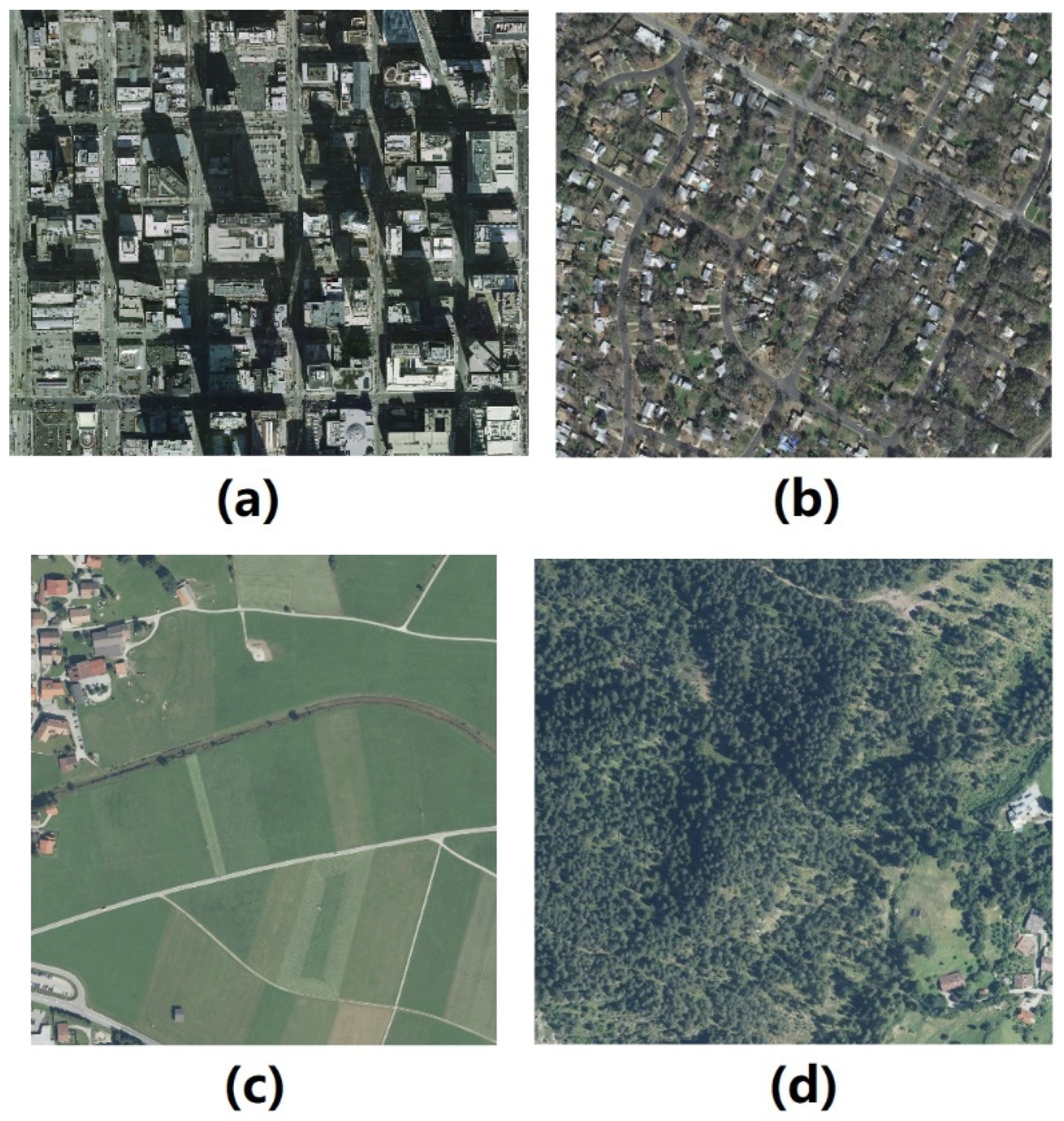

The contributions of the current study are as follows:

- We develop a specialized clear–hazy image dataset for aerial images.

- We compare the effects of different dehazing methods on aerial images.

- We are the first to apply deep reinforcement learning (DRL) to image dehazing, and the resulting method achieves good results.

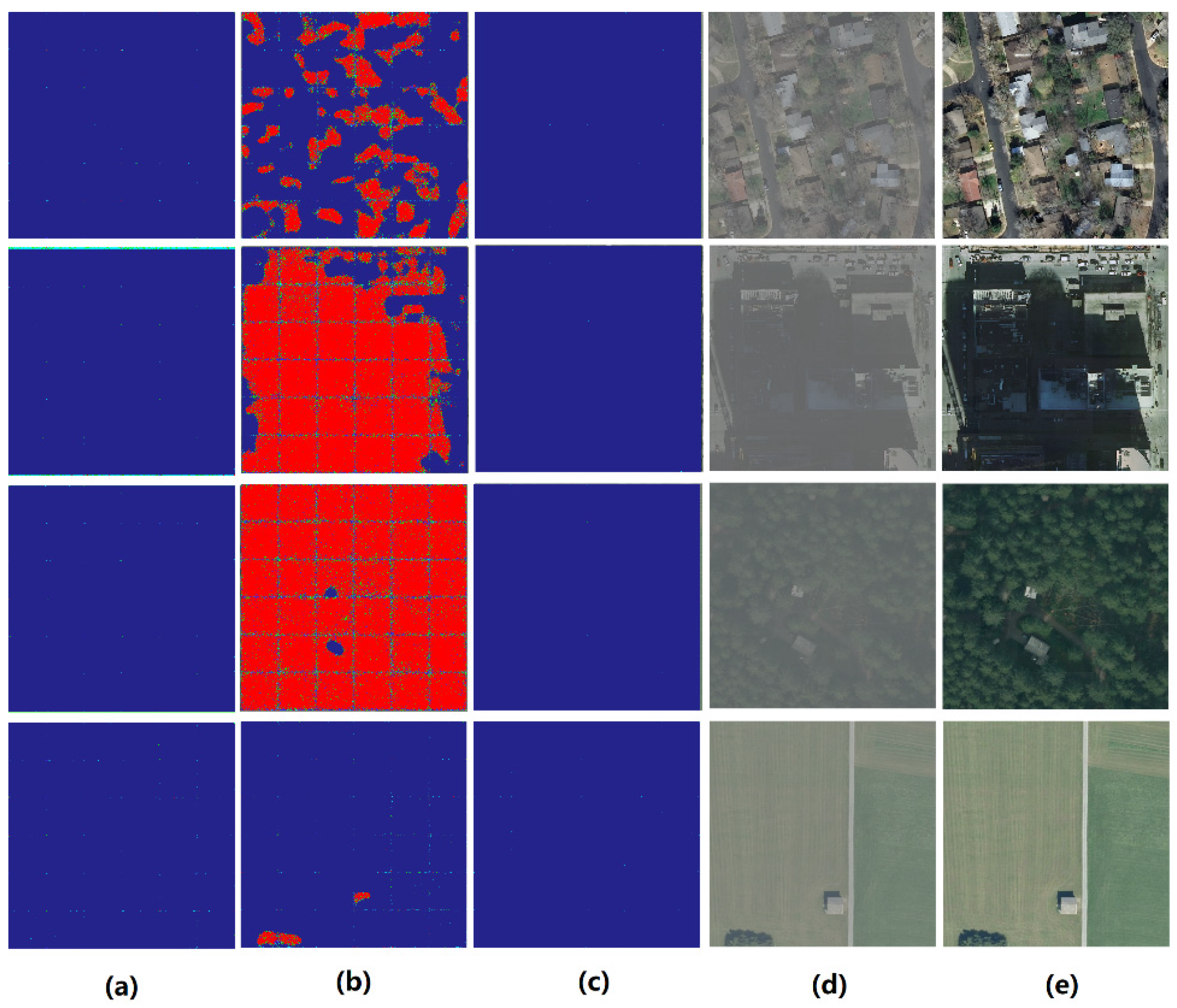

- Based on the differences between natural ground-level images and aerial images, we select the most suitable dehazing method, modify it into a multi-scale form, and use it within the DRL method. Every pixel of the hazy image then independently selects its best solution using the decision-making ability of DRL. The choices made during the DRL process can be visualized, so the action taken by each pixel at every step toward the final result can be observed, which makes the results easier to analyze.

2. Related Work

2.1. Dehazing Algorithms

2.2. Application of DRL in the Field of Image Processing

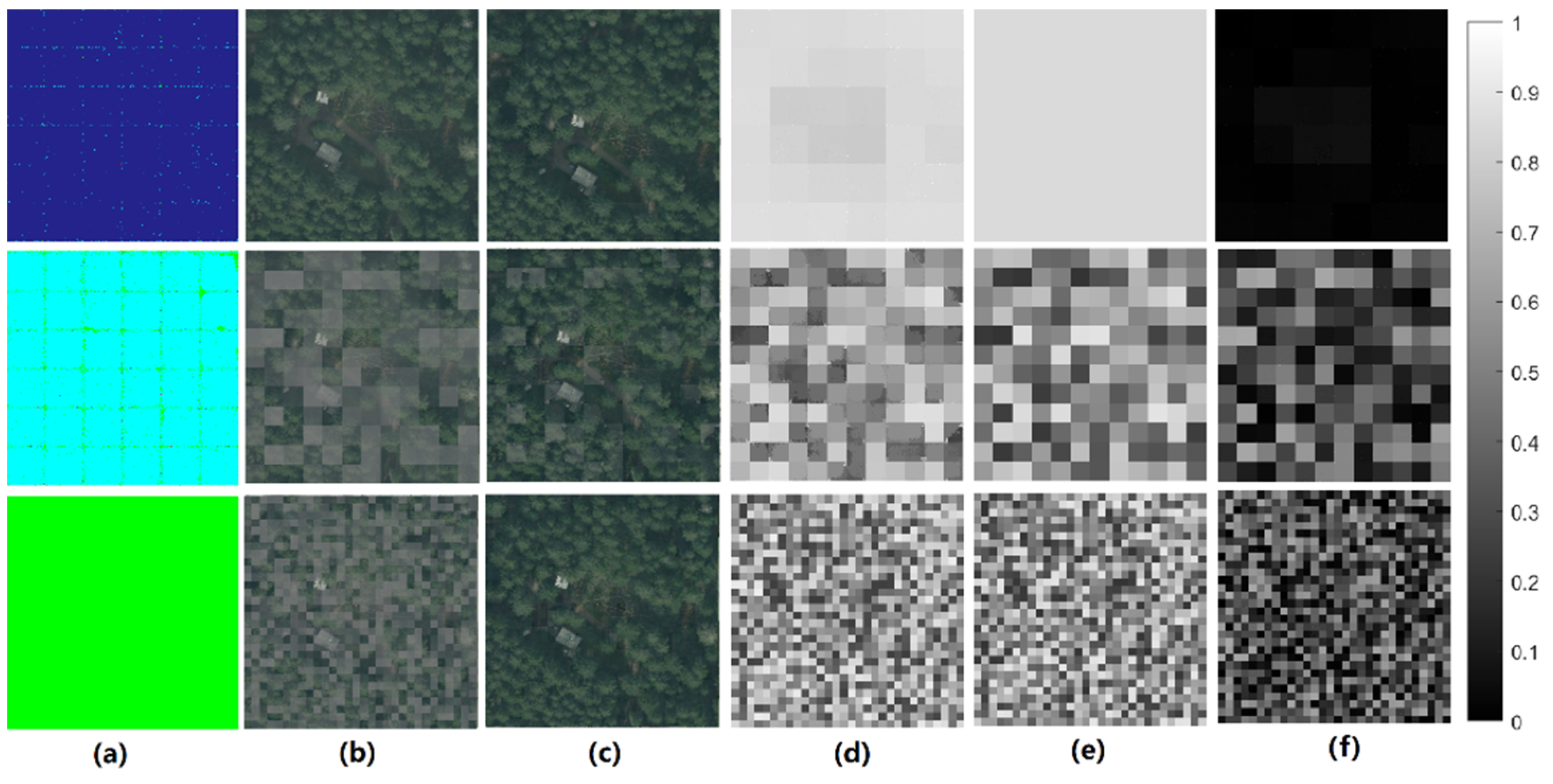

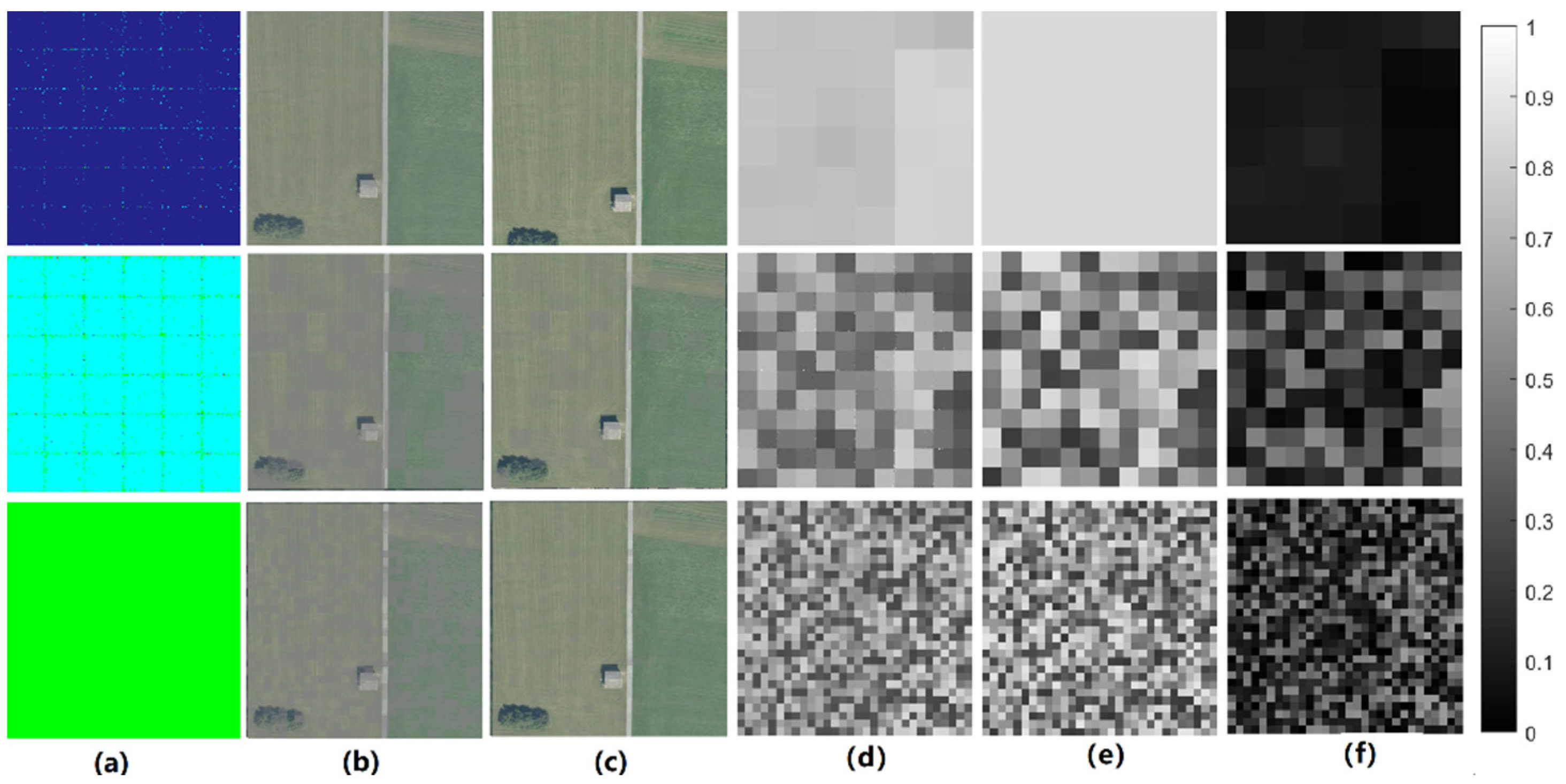

3. Datasets

4. Methods

4.1. Problem Formulation

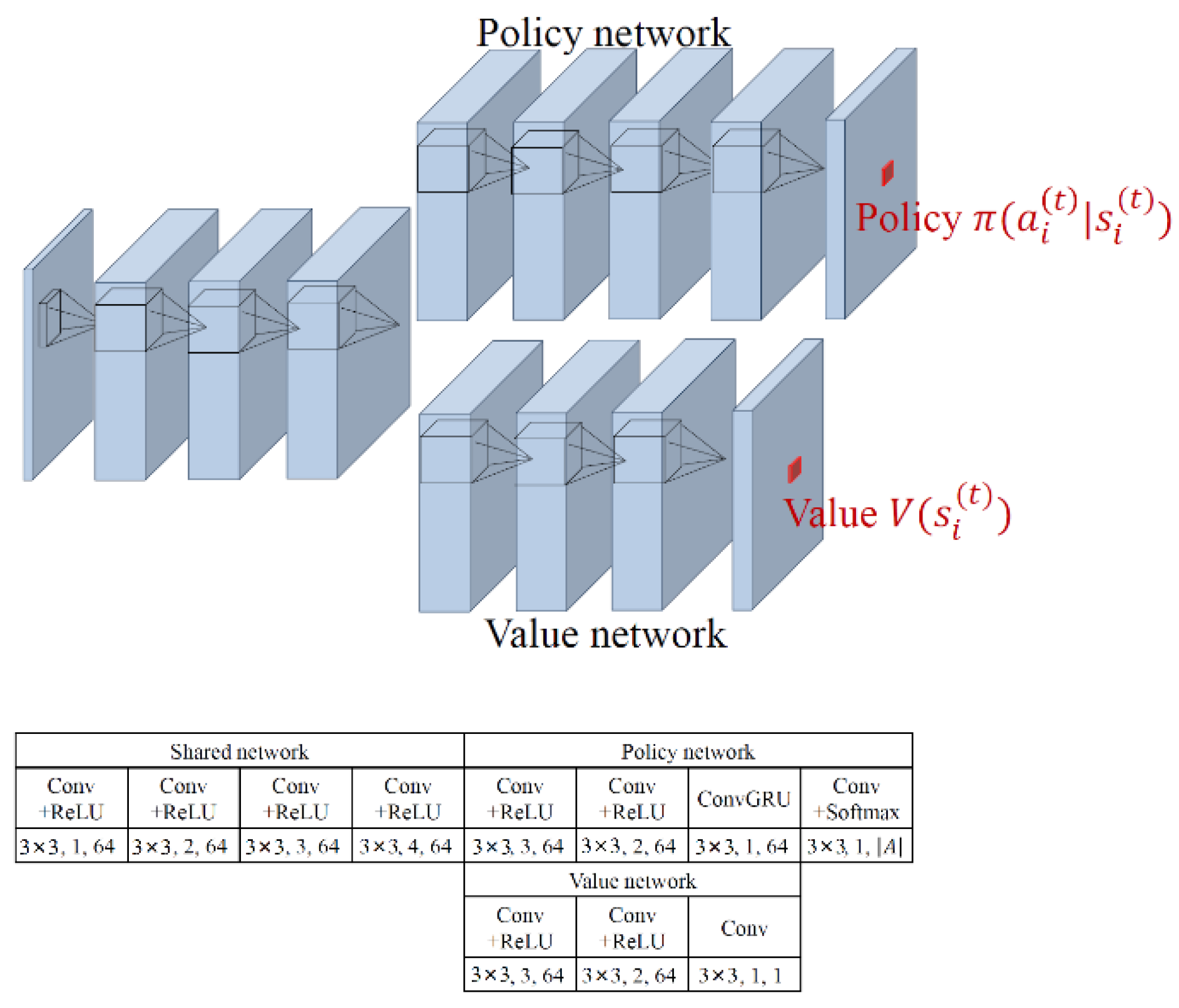

4.2. PixelRL Method

1. Fully convolutional networks (FCNs) were used instead of a separate network for each pixel agent, so that all agents share the same parameters. The A3C algorithm was accordingly modified into a fully convolutional form, as illustrated in Figure 3.

2. The network was designed with a larger receptive field to boost performance: the policy and value networks observe not only the i-th pixel but also its neighboring pixels. As a result, the action selected at the i-th pixel affects not only that pixel's next state but also the pixels, policies, and values within a local window centered at the i-th pixel.
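The two modifications above can be illustrated with a minimal NumPy sketch, under our own assumptions: a single one-layer convolution with hypothetical, randomly initialized `weights` stands in for the trained policy network of Figure 3. Because the same weights are slid over the whole image, every pixel agent shares one set of parameters, and each pixel's action logits depend only on a k×k window around it.

```python
import numpy as np

N_ACTIONS = 7  # actions 0-6 of Section 4.3


def conv_logits(state, weights, bias):
    """Per-pixel action logits from ONE shared k x k convolution.

    state:   (H, W, C) float array, the current image
    weights: (N_ACTIONS, k, k, C), shared by every pixel agent (hypothetical, untrained)
    bias:    (N_ACTIONS,)
    Returns (H, W, N_ACTIONS); pixel (y, x) only sees its k x k neighbourhood.
    """
    n_actions, k = weights.shape[0], weights.shape[1]
    pad = k // 2
    padded = np.pad(state, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w, _ = state.shape
    logits = np.empty((h, w, n_actions))
    for y in range(h):
        for x in range(w):
            window = padded[y:y + k, x:x + k, :]  # local window centred on (y, x)
            logits[y, x] = np.tensordot(weights, window, axes=([1, 2, 3], [0, 1, 2])) + bias
    return logits


def sample_actions(logits, rng):
    """Every pixel agent samples its own action from its own softmax policy."""
    z = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    probs = np.exp(z)
    probs /= probs.sum(axis=-1, keepdims=True)
    cumulative = probs.cumsum(axis=-1)
    u = rng.random(probs.shape[:-1] + (1,))
    idx = (u > cumulative).sum(axis=-1)
    return np.minimum(idx, probs.shape[-1] - 1)  # guard against rounding at the top end


rng = np.random.default_rng(0)
state = rng.random((8, 8, 3))
weights = rng.normal(scale=0.1, size=(N_ACTIONS, 3, 3, 3))
bias = np.zeros(N_ACTIONS)
logits = conv_logits(state, weights, bias)
actions = sample_actions(logits, rng)  # (8, 8) map of action ids
```

The explicit loops keep the receptive-field logic visible; a real implementation would use a deep-learning framework's convolution layers and a much larger, multi-layer receptive field.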

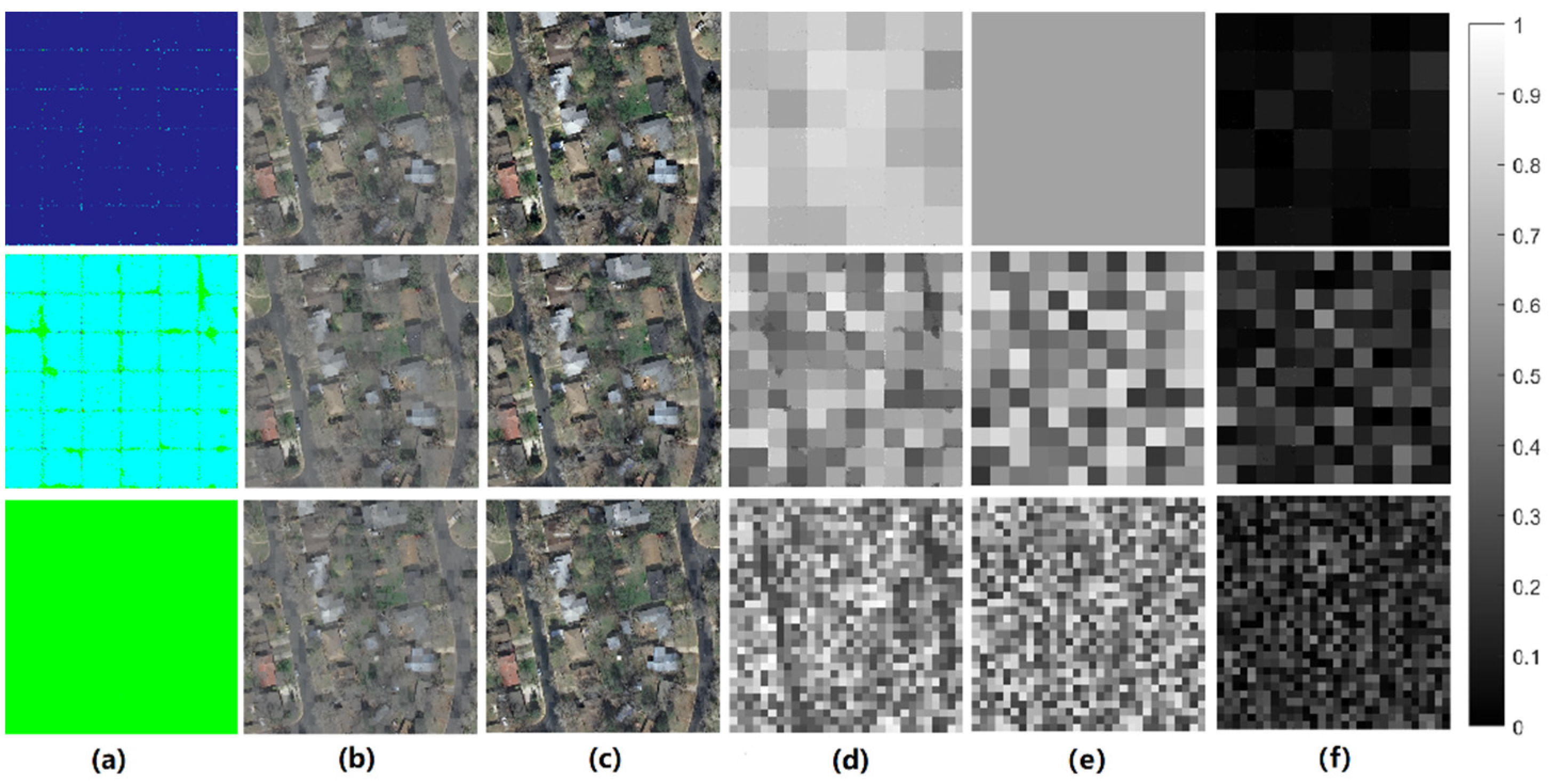

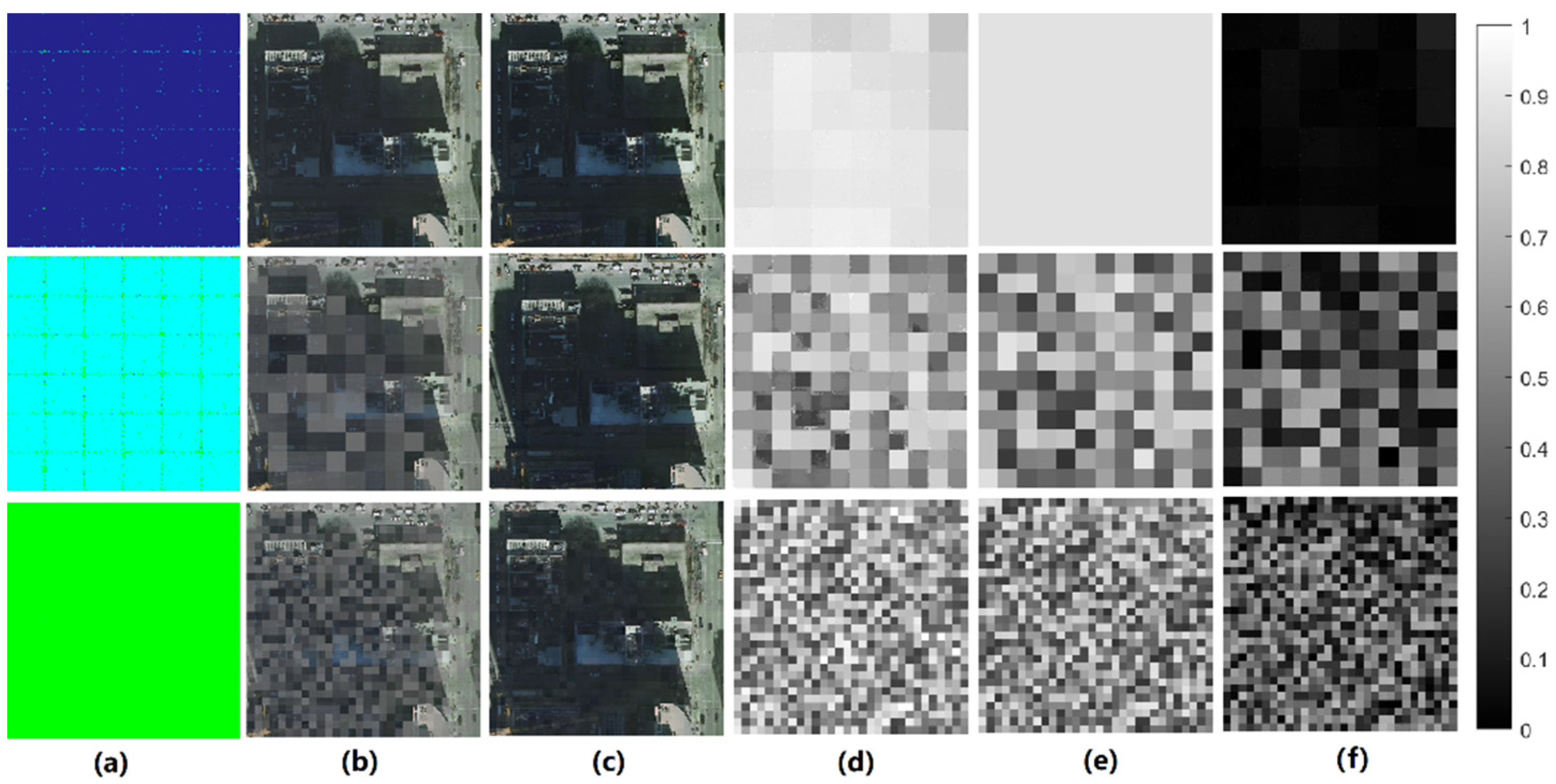

4.3. Actions

- Action 0, pixel-value decrement: subtract 1 from the values of all channels of the pixel;

- Action 1, do nothing: do not change the pixel values;

- Action 2, pixel-value increment: add 1 to the values of all channels of the pixel;

- Action 3, DehazeNet14: substitute the pixel values with the result of the DehazeNet14 method;

- Action 4, DehazeNet35: substitute the pixel values with the result of the DehazeNet35 method;

- Action 5, DehazeNet70: substitute the pixel values with the result of the DehazeNet70 method;

- Action 6, DCP: substitute the pixel values with the result of the DCP method.
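The action set can be read as a per-pixel update rule. In the minimal NumPy sketch below, the outputs of DehazeNet14, DehazeNet35, DehazeNet70, and DCP are assumed to be precomputed full-size images passed in a hypothetical `candidates` dictionary (how the paper produces them is not reproduced here), and pixel values are assumed to lie on an 8-bit scale and are clipped to [0, 255].

```python
import numpy as np


def apply_actions(state, actions, candidates):
    """Return the next state after every pixel executes its own action.

    state:      (H, W, C) float array, values assumed in [0, 255]
    actions:    (H, W) int array with values 0-6
    candidates: dict mapping action ids 3-6 to (H, W, C) dehazed images
                (DehazeNet14, DehazeNet35, DehazeNet70, DCP), assumed precomputed
    """
    a = actions[..., None]                                  # (H, W, 1), broadcasts over channels
    next_state = state.astype(np.float64)                   # action 1 (do nothing) keeps this value
    next_state = np.where(a == 0, state - 1.0, next_state)  # action 0: decrement all channels
    next_state = np.where(a == 2, state + 1.0, next_state)  # action 2: increment all channels
    for action_id, result in candidates.items():            # actions 3-6: substitute
        next_state = np.where(a == action_id, result, next_state)
    return np.clip(next_state, 0.0, 255.0)                  # assumed 8-bit value range


h, w = 2, 2
state = np.full((h, w, 3), 100.0)
candidates = {a: np.full((h, w, 3), 10.0 * a) for a in (3, 4, 5, 6)}
actions = np.array([[0, 1], [2, 6]])
nxt = apply_actions(state, actions, candidates)
```

Because each pixel only reads its own action, a single step can mix the decrement, no-op, increment, and substitution actions across the image.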

4.4. Reward

5. Results and Discussion

5.1. One-Step DRL_Dehaze Results

5.2. Two-Step DRL_Dehaze Results

5.3. Three-Step DRL_Dehaze Results

5.4. Quantitative Evaluations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Guo, H.; Gu, X.F.; Xie, D.H.; Yu, T.; Meng, Q.Y. A review of atmospheric aerosol research by using polarization remote sensing. Spectrosc. Spectr. Anal. 2014, 34, 1873–1880.

- Li, Z.; Chen, X.; Ma, Y.; Qie, L.; Hou, W.; Qiao, Y. An overview of atmospheric correction for optical remote sensing satellites. J. Nanjing Univ. Inf. Sci. Technol. Nat. Sci. Ed. 2018, 10, 6–15.

- Griffin, M.K.; Burke, H.H. Compensation of hyperspectral data for atmospheric effects. Linc. Lab. J. 2003, 14, 29–54.

- Gao, B.C.; Montes, M.J.; Davis, C.O.; Goetz, A.F. Atmospheric correction algorithms for hyperspectral remote sensing data of land and ocean. Remote Sens. Environ. 2009, 113, S17–S24.

- Minu, S.; Shetty, A. Atmospheric correction algorithms for hyperspectral imageries: A review. Int. Res. J. Earth Sci. 2015, 3, 14–18.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

- Berman, D.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1674–1682.

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 154–169.

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 26–29 October 2017; pp. 4770–4778.

- Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

- Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 1–8.

- Fattal, R. Single image dehazing. ACM Trans. Graph. TOG 2008, 27, 1–9.

- Kang, J.; Woo, S.S. DLPNet: Dynamic Loss Parameter Network using Reinforcement Learning for Aerial Imagery Detection. In Proceedings of the 2021 4th International Conference on Artificial Intelligence and Pattern Recognition, Xiamen, China, 24–26 September 2021; pp. 191–198.

- Liu, S.; Tang, J. Modified deep reinforcement learning with efficient convolution feature for small target detection in VHR remote sensing imagery. ISPRS Int. J. Geo-Inf. 2021, 10, 170.

- Uzkent, B.; Yeh, C.; Ermon, S. Efficient object detection in large images using deep reinforcement learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 2–5 March 2020; pp. 1824–1833.

- Fu, K.; Li, Y.; Sun, H.; Yang, X.; Xu, G.; Li, Y.; Sun, X. A ship rotation detection model in remote sensing images based on feature fusion pyramid network and deep reinforcement learning. Remote Sens. 2018, 10, 1922.

- Casanova, A.; Pinheiro, P.O.; Rostamzadeh, N.; Pal, C.J. Reinforced active learning for image segmentation. arXiv 2020, arXiv:2002.06583.

- Li, X.; Zheng, H.; Han, C.; Wang, H.; Dong, K.; Jing, Y.; Zheng, W. Cloud detection of superview-1 remote sensing images based on genetic reinforcement learning. Remote Sens. 2020, 12, 3190.

- Feng, J.; Li, D.; Gu, J.; Cao, X.; Shang, R.; Zhang, X.; Jiao, L. Deep reinforcement learning for semisupervised hyperspectral band selection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5501719.

- Mou, L.; Saha, S.; Hua, Y.; Bovolo, F.; Bruzzone, L.; Zhu, X.X. Deep reinforcement learning for band selection in hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5504414.

- Bhatt, J.S.; Joshi, M.V. Deep learning in hyperspectral unmixing: A review. In Proceedings of the IGARSS 2020 - 2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2189–2192.

- Chu, X.; Zhang, B.; Ma, H.; Xu, R.; Li, Q. Fast, accurate and lightweight super-resolution with neural architecture search. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 59–64.

- Rout, L.; Shah, S.; Moorthi, S.M.; Dhar, D. Monte-Carlo Siamese policy on actor for satellite image super resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 194–195.

- Vassilo, K.; Heatwole, C.; Taha, T.; Mehmood, A. Multi-step reinforcement learning for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 512–513.

- Furuta, R.; Inoue, N.; Yamasaki, T. Fully convolutional network with multi-step reinforcement learning for image processing. Proc. AAAI Conf. Artif. Intell. 2019, 33, 3598–3605.

- Furuta, R.; Inoue, N.; Yamasaki, T. PixelRL: Fully convolutional network with reinforcement learning for image processing. IEEE Trans. Multimed. 2019, 22, 1704–1719.

- Li, W.; Feng, X.; An, H.; Ng, X.Y.; Zhang, Y.-J. MRI reconstruction with interpretable pixel-wise operations using reinforcement learning. Proc. AAAI Conf. Artif. Intell. 2020, 34, 792–799.

- Liao, X.; Li, W.; Xu, Q.; Wang, X.; Jin, B.; Zhang, X.; Wang, Y.; Zhang, Y. Iteratively-refined interactive 3D medical image segmentation with multi-agent reinforcement learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9394–9402.

- Anh, T.T.; Nguyen-Tuan, K.; Quan, T.M.; Jeong, W.K. Reinforced Coloring for End-to-End Instance Segmentation. arXiv 2020, arXiv:2005.07058.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, PMLR, New York, NY, USA, 19–24 June 2016; pp. 1928–1937.

| Ground Feature | Evaluation Indicator | Image | Atmosphere Transmission | Hazy Image | DCP | CAP | NLD | DehazeNet | AODNet | MSCNN |
|---|---|---|---|---|---|---|---|---|---|---|
| Residential area | PSNR | R1 * | 0.64 | 23.42 | 20.73 | 24.72 | 12.59 | 27.36 | 23.77 | 30.75 ** |
| | | R2 | 0.87 | 27.80 | 13.84 | 18.20 | 13.49 | 27.95 | 19.77 | 25.00 |
| | SSIM | R1 | 0.64 | 0.92 | 0.94 | 0.98 | 0.59 | 0.97 | 0.94 | 0.98 |
| | | R2 | 0.87 | 0.96 | 0.84 | 0.90 | 0.66 | 0.97 | 0.90 | 0.95 |
| Cities | PSNR | C1 | 0.25 | 14.21 | 19.64 | 19.69 | 16.43 | 27.21 | 20.10 | 23.43 |
| | | C2 | 0.89 | 28.51 | 21.62 | 23.26 | 18.04 | 28.62 | 27.32 | 24.50 |
| | SSIM | C1 | 0.25 | 0.62 | 0.92 | 0.90 | 0.78 | 0.97 | 0.90 | 0.92 |
| | | C2 | 0.89 | 0.97 | 0.86 | 0.92 | 0.74 | 0.97 | 0.96 | 0.87 |
| Forests | PSNR | FO1 | 0.85 | 27.67 | 23.30 | 31.65 | 19.82 | 38.12 | 25.44 | 34.24 |
| | | FO2 | 0.29 | 13.36 | 28.32 | 20.55 | 14.19 | 27.10 | 30.01 | 26.29 |
| | SSIM | FO1 | 0.85 | 0.98 | 0.89 | 0.98 | 0.71 | 0.99 | 0.89 | 0.99 |
| | | FO2 | 0.29 | 0.67 | 0.94 | 0.89 | 0.64 | 0.96 | 0.97 | 0.96 |
| Farmlands | PSNR | FA1 | 0.70 | 26.79 | 11.65 | 18.57 | 16.03 | 28.01 | 19.35 | 26.48 |
| | | FA2 | 0.85 | 40.93 | 11.89 | 23.73 | 17.93 | 41.06 | 35.71 | 33.17 |
| | SSIM | FA1 | 0.70 | 0.97 | 0.77 | 0.87 | 0.81 | 0.98 | 0.86 | 0.96 |
| | | FA2 | 0.85 | 1.00 | 0.70 | 0.98 | 0.88 | 1.00 | 0.99 | 0.99 |
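The comparison table lists an atmosphere transmission value for each test image. Assuming, as our own illustration since the dataset construction is not described in this section, that the hazy images follow the standard atmospheric scattering model I(x) = J(x)t(x) + A(1 − t(x)) with a uniform transmission t and a global atmospheric light A, a hazy image can be synthesized from a clear one and inverted again when t and A are known. The value A = 0.9 and the lower bound `t_min` are hypothetical choices.

```python
import numpy as np


def synthesize_haze(clear, transmission, airlight=0.9):
    """Standard atmospheric scattering model: I = J * t + A * (1 - t).

    clear:        (H, W, C) clear image J with values in [0, 1]
    transmission: scalar or (H, W) array t in (0, 1]; smaller t means denser haze
    airlight:     global atmospheric light A (hypothetical default)
    """
    t = np.asarray(transmission, dtype=float)
    if t.ndim == 2:
        t = t[..., None]  # broadcast a transmission map over the colour channels
    return clear * t + airlight * (1.0 - t)


def recover(hazy, transmission, airlight=0.9, t_min=0.1):
    """Invert the model: J = (I - A) / max(t, t_min) + A."""
    t = np.maximum(np.asarray(transmission, dtype=float), t_min)
    if t.ndim == 2:
        t = t[..., None]
    return (hazy - airlight) / t + airlight


rng = np.random.default_rng(1)
clear = rng.random((4, 4, 3))
hazy = synthesize_haze(clear, 0.64)  # 0.64 is the transmission listed for R1
restored = recover(hazy, 0.64)
```

Each hazy pixel is pulled toward A by the factor t, which is why images with low transmission (for example C1 at 0.25) start from much lower PSNR and SSIM.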

| Serial Number | Action | Color |
|---|---|---|
| 0 | Pixel value −= 1 | |
| 1 | Do nothing | |
| 2 | Pixel value += 1 | |
| 3 | DehazeNet14 | |
| 4 | DehazeNet35 | |
| 5 | DehazeNet70 | |
| 6 | DCP | |
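Because every pixel chooses an action at every step, the per-pixel action map can be rendered as a color image to inspect those decisions. The color swatches in the table above did not survive extraction, so the palette in this NumPy sketch is hypothetical; only the action numbering follows the table.

```python
import numpy as np

# Hypothetical RGB palette indexed by action number (0-6);
# the paper's actual colors are not recoverable from this text.
PALETTE = np.array([
    [0, 0, 255],      # 0: pixel value -= 1
    [128, 128, 128],  # 1: do nothing
    [255, 0, 0],      # 2: pixel value += 1
    [0, 200, 0],      # 3: DehazeNet14
    [255, 200, 0],    # 4: DehazeNet35
    [200, 0, 200],    # 5: DehazeNet70
    [0, 200, 200],    # 6: DCP
], dtype=np.uint8)


def colorize_actions(actions):
    """Map an (H, W) integer action map to an (H, W, 3) uint8 RGB image."""
    return PALETTE[actions]


def action_histogram(actions, n_actions=len(PALETTE)):
    """Fraction of pixels that chose each action at this step."""
    counts = np.bincount(actions.ravel(), minlength=n_actions)
    return counts / actions.size


actions = np.array([[3, 3], [6, 1]])
rgb = colorize_actions(actions)  # save or display with any image library
hist = action_histogram(actions)
```

Computing the histogram alongside the color map gives a quick numerical summary of which dehazing action dominates at a given step.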

| Image Group Name | Uniform Haze: PSNR | Uniform Haze: SSIM | Uniform Haze: MSE ** | Medium Haze: PSNR | Medium Haze: SSIM | Medium Haze: MSE ** | Small-Scale Haze: PSNR | Small-Scale Haze: SSIM | Small-Scale Haze: MSE ** |
|---|---|---|---|---|---|---|---|---|---|
| R1 * | 34.54 | 0.99 | 1.02 | 27.39 | 0.96 | 12.62 | 27.26 | 0.96 | 13.26 |
| R2 | 27.30 | 0.97 | 7.43 | 27.16 | 0.95 | 19.51 | 26.51 | 0.96 | 21.65 |
| C1 | 26.57 | 0.95 | 2.31 | 23.77 | 0.95 | 22.72 | 25.43 | 0.94 | 22.82 |
| C2 | 38.42 | 1.00 | 0.41 | 27.89 | 0.96 | 37.04 | 25.23 | 0.92 | 41.40 |
| FO1 | 42.09 | 1.00 | 0.15 | 33.09 | 0.98 | 31.53 | 26.47 | 0.94 | 32.54 |
| FO2 | 26.19 | 0.95 | 1.17 | 24.80 | 0.95 | 19.12 | 22.71 | 0.90 | 21.16 |
| FA1 | 38.83 | 1.00 | 1.26 | 31.21 | 0.98 | 23.16 | 29.28 | 0.97 | 26.50 |
| FA2 | 42.53 | 1.00 | 2.05 | 42.24 | 1.00 | 22.72 | 40.15 | 1.00 | 24.24 |
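PSNR and MSE are the main scores in these tables. The NumPy sketch below implements the standard definitions, assuming 8-bit images with a peak value of 255; the exact evaluation settings (peak value, color space, and the scale on which the MSE column is computed) are not stated in this section, so these functions are not guaranteed to reproduce the reported numbers. SSIM is omitted for brevity; scikit-image's `skimage.metrics.structural_similarity` is a common off-the-shelf choice.

```python
import numpy as np


def mse(reference, estimate):
    """Mean squared error over all pixels and channels."""
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    err = mse(reference, estimate)
    if err == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / err))


ref = np.full((4, 4, 3), 100, dtype=np.uint8)
est = ref.copy()
est[0, 0, 0] = 104  # a single channel value off by 4
score = psnr(ref, est)
```

Casting to float64 before subtracting avoids the wrap-around that unsigned 8-bit arithmetic would otherwise introduce.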

| Ground Feature | Evaluation Indicator | Image | Hazy Image | DehazeNet | One-Step DRL_Dehaze | Two-Step DRL_Dehaze | Three-Step DRL_Dehaze |
|---|---|---|---|---|---|---|---|
| Residential area | PSNR | R1 ** | 23.42 | 27.36 | 34.54 * | 25.88 | 23.42 |
| | | R2 | 27.80 | 27.30 | 27.95 | 25.58 | 22.09 |
| | SSIM | R1 | 0.92 | 0.97 | 0.99 | 0.96 | 0.93 |
| | | R2 | 0.96 | 0.97 | 0.97 | 0.96 | 0.92 |
| Cities | PSNR | C1 | 14.21 | 27.21 | 23.77 | 30.28 | 26.62 |
| | | C2 | 28.51 | 28.62 | 38.42 | 36.87 | 33.88 |
| | SSIM | C1 | 0.62 | 0.97 | 0.95 | 0.99 | 0.97 |
| | | C2 | 0.97 | 0.97 | 1.00 | 0.99 | 0.99 |
| Forests | PSNR | FO1 | 27.67 | 38.12 | 42.09 | 34.72 | 31.84 |
| | | FO2 | 13.36 | 27.10 | 24.80 | 32.90 | 37.19 |
| | SSIM | FO1 | 0.98 | 0.99 | 1.00 | 0.99 | 0.99 |
| | | FO2 | 0.67 | 0.96 | 0.95 | 0.99 | 1.00 |
| Farmlands | PSNR | FA1 | 26.79 | 28.01 | 38.83 | 39.62 | 36.34 |
| | | FA2 | 40.93 | 41.06 | 42.53 | 35.85 | 32.15 |
| | SSIM | FA1 | 0.97 | 0.98 | 1.00 | 1.00 | 1.00 |
| | | FA2 | 1.00 | 1.00 | 1.00 | 0.99 | 0.99 |
| Time consumption (s) | | | - | 1.8 | 16.30 | 20.1 | 21.4 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, J.; Liang, D.; Hang, B.; Gao, H. Aerial Image Dehazing Using Reinforcement Learning. Remote Sens. 2022, 14, 5998. https://doi.org/10.3390/rs14235998
