A Review of AI-Driven Conversational Chatbots Implementation Methodologies and Challenges (1999–2022)

Abstract

1. Introduction

- Overview

- Define the year range of the surveyed papers.

- Define the keywords and screening criteria of the surveyed papers.

- Review, study, and categorize the data of all surveyed papers.

- What is the purpose of building chatbots?

- Why are chatbots built?

- What issues are people trying to resolve?

- How are chatbots used in specific areas?

- Who are the target users of chatbots?

- How are chatbots built?

- What are the technical considerations when building chatbots?

- What key machine learning models are used in chatbots?

- What training techniques are used in chatbots?

- What are the overall outcomes and challenges of chatbots?

- Are the objectives and intentions met?

- What are the limitations and challenges?

- What are the future development and research trends of chatbots?

- What are the conclusions of chatbot research so far?

- To what other areas could chatbots be applied?

- What will be the future development trend?

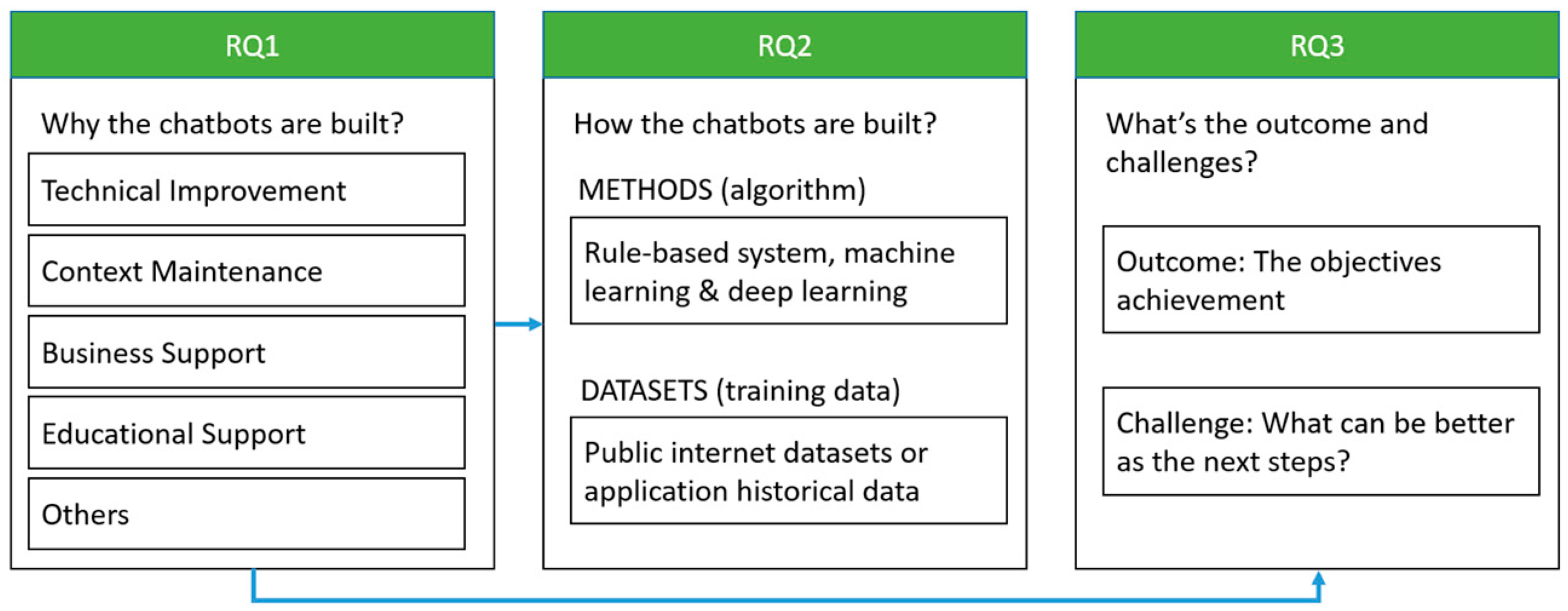

- RQ1: What are the objectives of building conversational chatbots?

- RQ2: What are the methods and datasets used to build conversational chatbots?

- RQ3: What are the outcomes and challenges of conversational chatbots?
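The screening steps listed in the overview (define a year range, define keywords, then categorize the surveyed papers) can be sketched roughly as follows. This is only an illustration: the year range is taken from the review's title, while the keyword list, the `Paper` type, and the two non-matching sample titles are hypothetical placeholders rather than the authors' actual search terms or data.

```python
from dataclasses import dataclass

# Year range from the review's title; the keywords are hypothetical
# placeholders, not the authors' actual screening terms.
YEAR_RANGE = (1999, 2022)
KEYWORDS = ("chatbot", "conversation", "dialogue")


@dataclass
class Paper:
    title: str
    year: int


def passes_screening(paper: Paper) -> bool:
    """Keep a paper if it is inside the year range and its title hits a keyword."""
    in_range = YEAR_RANGE[0] <= paper.year <= YEAR_RANGE[1]
    title = paper.title.lower()
    return in_range and any(keyword in title for keyword in KEYWORDS)


def categorize(papers):
    """Group the titles of screened papers by publication year."""
    groups = {}
    for paper in papers:
        if passes_screening(paper):
            groups.setdefault(paper.year, []).append(paper.title)
    return groups


# The first two titles appear in the reference list; the last two are
# made-up entries that should be screened out.
candidates = [
    Paper("Reinforcement learning for spoken dialogue systems", 1999),
    Paper("A persona-based neural conversation model", 2016),
    Paper("An unrelated paper on image segmentation", 2020),
    Paper("A chatbot study published after the window", 2023),
]

screened = categorize(candidates)
```

Here `screened` keeps only the 1999 and 2016 entries: the third candidate matches no keyword, and the fourth falls outside the year range.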

2. Literature Review

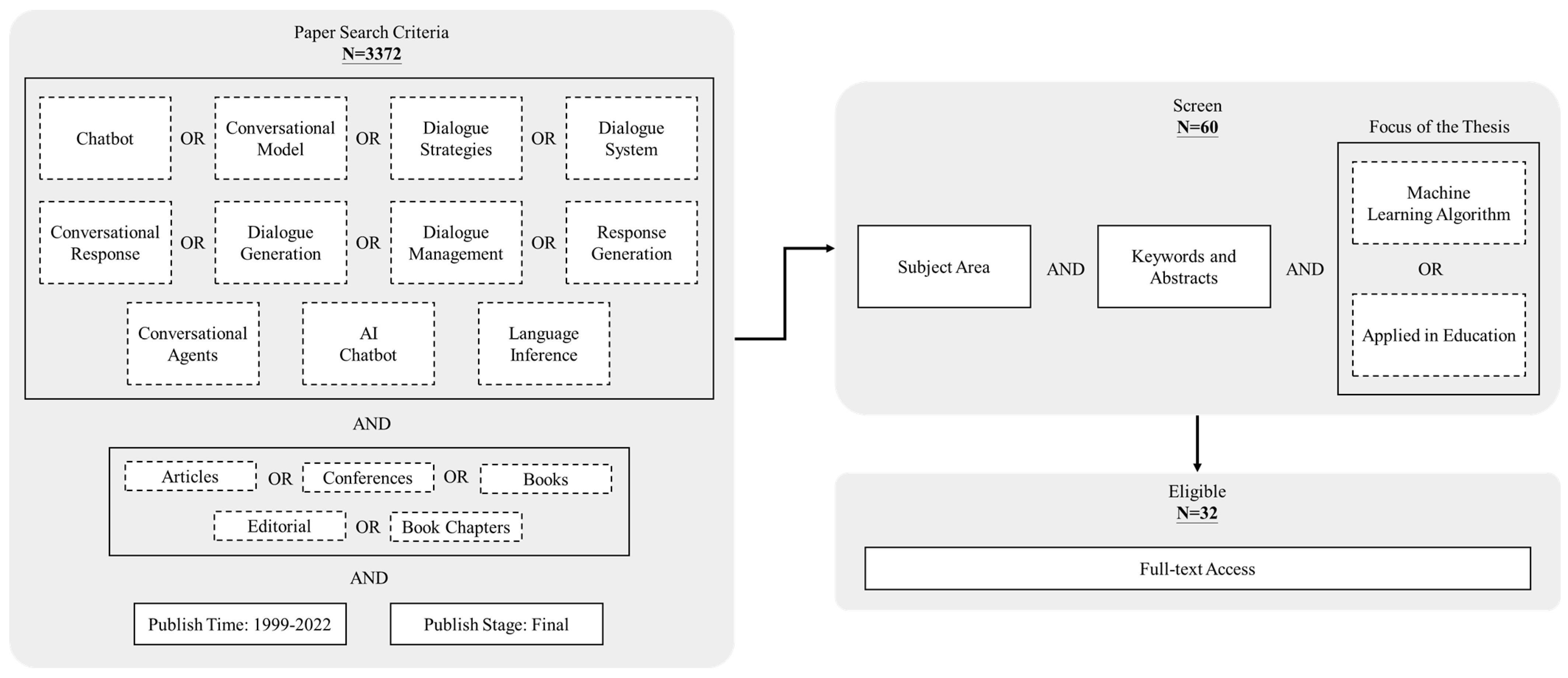

3. Review Methodology

3.1. Process of the Survey Literature

3.2. Overview of Surveyed Method

4. Findings and Discussion

4.1. Overview of Conversational Chatbots

4.2. Objectives of Conversational Chatbots (RQ1)

4.3. Methods and Datasets of Conversational Chatbots

4.4. Outcomes and Challenges of a Conversational Chatbot

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. ChatGPT: Optimizing Language Models for Dialogue. OpenAI. Available online: https://openai.com/blog/chatgpt/ (accessed on 28 December 2022).

2. Ghazvininejad, M.; Brockett, C.; Chang, M.W.; Dolan, B.; Gao, J.; Yih, W.T.; Galley, M. A knowledge-grounded neural conversation model. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

3. Mrkšić, N.; Séaghdha, D.Ó.; Wen, T.H.; Thomson, B.; Young, S. Neural Belief Tracker: Data-Driven Dialogue State Tracking. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; Volume 1, pp. 1777–1788.

4. Cui, L.; Huang, S.; Wei, F.; Tan, C.; Duan, C.; Zhou, M. Superagent: A customer service chatbot for e-commerce websites. In Proceedings of the ACL 2017, System Demonstrations, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 97–102.

5. Pawlik, Ł.; Płaza, M.; Deniziak, S.; Boksa, E. A method for improving bot effectiveness by recognising implicit customer intent in contact centre conversations. Speech Commun. 2022, 143, 33–45.

6. Song, D.; Oh, E.Y.; Hong, H. The Impact of Teaching Simulation Using Student Chatbots with Different Attitudes on Preservice Teachers’ Efficacy. Educ. Technol. Soc. 2022, 25, 46–59.

7. Lee, D.; Yeo, S. Developing an AI-based chatbot for practicing responsive teaching in mathematics. Comput. Educ. 2022, 191, 104646.

8. Liu, C.C.; Liao, M.G.; Chang, C.H.; Lin, H.M. An analysis of children’s interaction with an AI chatbot and its impact on their interest in reading. Comput. Educ. 2022, 189, 104576.

9. Hollander, J.; Sabatini, J.; Graesser, A. How Item and Learner Characteristics Matter in Intelligent Tutoring Systems Data. In Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners’ and Doctoral Consortium: 23rd International Conference, AIED 2022, Durham, UK, 27–31 July 2022, Proceedings, Part II; Springer International Publishing: Cham, Switzerland, 2022; pp. 520–523.

10. Lin, C.J.; Mubarok, H. Learning analytics for investigating the mind map-guided AI chatbot approach in an EFL flipped speaking classroom. Educ. Technol. Soc. 2021, 24, 16–35.

11. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

12. Sun, X.; Chen, X.; Pei, Z.; Ren, F. Emotional human machine conversation generation based on SeqGAN. In Proceedings of the 2018 First Asian Conference on Affective Computing and Intelligent Interaction (ACII Asia), Beijing, China, 20–22 May 2018; pp. 1–6.

13. Li, J.; Monroe, W.; Ritter, A.; Galley, M.; Gao, J.; Jurafsky, D. Deep reinforcement learning for dialogue generation. arXiv preprint 2016, arXiv:1606.01541.

14. Singh, S.; Kearns, M.; Litman, D.; Walker, M. Reinforcement learning for spoken dialogue systems. Adv. Neural Inf. Process. Syst. 1999, 12, 956–962.

15. Kanodia, N.; Ahmed, K.; Miao, Y. Question Answering Model Based Conversational Chatbot using BERT Model and Google Dialogflow. In Proceedings of the 2021 31st International Telecommunication Networks and Applications Conference (ITNAC), Sydney, Australia, 24–26 November 2021; pp. 19–22.

16. Serban, I.; Sordoni, A.; Bengio, Y.; Courville, A.; Pineau, J. Building end-to-end dialogue systems using generative hierarchical neural network models. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

17. Zhou, L.; Gao, J.; Li, D.; Shum, H.Y. The design and implementation of XiaoIce, an empathetic social chatbot. Comput. Linguist. 2020, 46, 53–93.

18. Singh, S.; Litman, D.; Kearns, M.; Walker, M. Optimizing dialogue management with reinforcement learning: Experiments with the NJFun system. J. Artif. Intell. Res. 2002, 16, 105–133.

19. Li, J.; Galley, M.; Brockett, C.; Spithourakis, G.P.; Gao, J.; Dolan, B. A persona-based neural conversation model. arXiv preprint 2016, arXiv:1603.06155.

20. Levin, E.; Pieraccini, R.; Eckert, W. A stochastic model of human–machine interaction for learning dialog strategies. IEEE Trans. Speech Audio Process. 2000, 8, 11–23.

21. Li, J.; Galley, M.; Brockett, C.; Gao, J.; Dolan, B. A diversity-promoting objective function for neural conversation models. arXiv preprint 2015, arXiv:1510.03055.

22. Sordoni, A.; Galley, M.; Auli, M.; Brockett, C.; Ji, Y.; Mitchell, M.; Dolan, B. A neural network approach to context-sensitive generation of conversational responses. arXiv preprint 2015, arXiv:1506.06714.

23. Gao, J.; Galley, M.; Li, L. Neural approaches to conversational AI. Found. Trends Inf. Retr. 2019, 13, 127–298.

24. Dong, L.; Yang, N.; Wang, W.; Wei, F.; Liu, X.; Wang, Y.; Hon, H.W. Unified language model pre-training for natural language understanding and generation. Adv. Neural Inf. Process. Syst. 2019, 32, 13063–13075.

25. Sato, S.; Yoshinaga, N.; Toyoda, M.; Kitsuregawa, M. Modeling situations in neural chat bots. In Proceedings of ACL 2017, Student Research Workshop, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 120–127.

26. Lei, W.; Jin, X.; Kan, M.Y.; Ren, Z.; He, X.; Yin, D. Sequicity: Simplifying task-oriented dialogue systems with single sequence-to-sequence architectures. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 1, pp. 1437–1447.

27. Anki, P.; Bustamam, A.; Al-Ash, H.S.; Sarwinda, D. High Accuracy Conversational AI Chatbot Using Deep Recurrent Neural Networks Based on BiLSTM Model. In Proceedings of the 2020 3rd International Conference on Information and Communications Technology (ICOIACT), Yogyakarta, Indonesia, 24–25 November 2020; pp. 382–387.

28. Keerthana, R.R.; Fathima, G.; Florence, L. Evaluating the Performance of Various Deep Reinforcement Learning Algorithms for a Conversational Chatbot. In Proceedings of the 2021 2nd International Conference for Emerging Technology (INCET), Belgaum, India, 21–23 May 2021; pp. 1–8.

29. Gasic, M.; Breslin, C.; Henderson, M.; Kim, D.; Szummer, M.; Thomson, B.; Young, S. POMDP-based dialogue manager adaptation to extended domains. In Proceedings of the SIGDIAL 2013 Conference, Metz, France, 22–24 August 2013; pp. 214–222.

30. Henderson, M.; Vulić, I.; Gerz, D.; Casanueva, I.; Budzianowski, P.; Coope, S.; Su, P.H. Training neural response selection for task-oriented dialogue systems. arXiv preprint 2019, arXiv:1906.01543.

31. Syed, Z.H.; Trabelsi, A.; Helbert, E.; Bailleau, V.; Muths, C. Question answering chatbot for troubleshooting queries based on transfer learning. Procedia Comput. Sci. 2021, 192, 941–950.

32. Tegos, S.; Demetriadis, S.; Karakostas, A. MentorChat: Introducing a configurable conversational agent as a tool for adaptive online collaboration support. In Proceedings of the 2011 15th Panhellenic Conference on Informatics, Kastoria, Greece, 30 September–2 October 2011; pp. 13–17.

| Region | Country | Count | References |
|---|---|---|---|
| North America | USA | 15 | [1,2,4,6,7,9,11,13,14,17,18,20,21,22,23] |
| | Canada | 1 | [16] |
| APAC | China | 2 | [12,24] |
| | Taiwan | 2 | [8,10] |
| | Japan | 1 | [25] |
| | Singapore | 1 | [26] |
| | Indonesia | 1 | [27] |
| | India | 1 | [28] |
| Europe | UK | 3 | [3,29,30] |
| | Germany | 1 | [19] |
| | Australia | 1 | [15] |
| | France | 1 | [31] |
| | Greece | 1 | [32] |
| | Poland | 1 | [5] |

| Category | Item Description | Count | References |
|---|---|---|---|
| Technical Improvement | Response accuracy | 7 | [1,11,13,14,24,27,30] |
| | Integrate domain knowledge into responses | 4 | [1,2,11,15,31] |
| | Produce content-based responses | 3 | [1,2,3,11] |
| | Model objective functional enhancement | 2 | [5,21] |
| Context Maintenance | Identify dialogue context (or opinion) | 6 | [1,13,14,22,26,28] |
| | Optimize dialogue strategies (policy) | 5 | [1,12,18,20,29] |
| | Enhance word embedding (syntactic to semantic) | 1 | [22] |
| | Maintain users’ engagement or connection | 1 | [9] |
| Business Support | Support entertainment | 2 | [2,3] |
| | Increase potential business revenue | 2 | [4,5] |
| Educational Support | Improve comprehension skills | 3 | [8,9,10] |
| | Enhance teaching efficacy | 2 | [6,7] |
| | Support collaborative learning | 1 | [32] |
| Other Specific Objectives | Integrate emotion (human feeling) into responses | 2 | [12,19] |
| | Be cognitive, user-friendly, interactive, and empathetic | 2 | [15,16] |

| Category | Type of Method | Count | References |
|---|---|---|---|
| Machine Learning Training Techniques | Reinforcement Learning | 7 | [1,12,13,14,18,20,28] |
| | Supervised Learning | 1 | [20] |
| | Transfer Learning | 1 | [29] |
| Machine Learning Models | LSTM | 4 | [12,19,21,27] |
| | BERT | 5 | [11,15,24,30,31] |
| | RNN | 3 | [2,16,22] |
| | ELMo | 2 | [24,30] |
| | MDP | 2 | [20,29] |
| | GPT-3 | 1 | [1] |
| | Seq2Seq RNN | 1 | [25] |
| Others | Specific Systems | 6 | [3,4,15,16,17,23] |
| | Experiment-based | 6 | [6,7,8,9,10,32] |

| Dataset | Count | References |
|---|---|---|
| Twitter-Related Dataset | 4 | [19,21,22,25] |
| OpenSubtitles Dataset | 3 | [13,21,30] |
| MovieDic or Cornell Movie Dialog Corpus | 3 | [16,27,28] |
| Wikipedia and Book Corpus | 5 | [1,2,4,11,15] |
| Television Series Transcripts | 2 | [17,19] |
| SEMEVAL15 | 2 | [4,30] |
| Amazon Reviews and Amazon QA | 2 | [2,30] |
| Amazon Mechanical Turk Platform | 2 | [3,26] |
| Foursquare | 1 | [2] |
| CoQA | 1 | [28] |
| Specific Application Historic Dataset | 6 | [5,12,14,18,20,31] |
| Others (e.g., course materials) | 11 | [6,7,8,9,10,17,23,24,29,30,32] |

| Category | Outcome | Count | References |
|---|---|---|---|
| Technical Improvement (Output Optimization) | Include Context | 6 | [1,2,3,11,13,30] |
| | Skills | 4 | [2,3,15,21] |
| | External Knowledge | 3 | [2,15,31] |
| | Personality and Emotion | 2 | [12,19] |
| Context Maintenance (Algorithm or Model Optimization) | Reinforcement Learning | 4 | [1,14,18,28] |
| | BERT | 4 | [11,15,24,30] |
| | GPT-3 | 1 | [1] |
| | LSTM | 1 | [19] |
| | Self-Attention | 2 | [11,24] |
| | Transfer Learning | 1 | [31] |
| | NBT (Neural Belief Tracker) | 1 | [3] |
| Educational Support | English Skills Improvement | 3 | [8,9,10] |
| | Teaching Efficacy Improvement | 2 | [6,7] |
| | Learning Result Improvement | 1 | [32] |
| Business Support | Optimized Input (Noise Removal) | 1 | [5] |
| | Dedicated Model for E-commerce | 1 | [4] |
| | Entertainment and Fun Support | 2 | [2,3] |

| Challenge | Count | References |
|---|---|---|
| Best (or Better) Models Selection and Modification | 8 | [1,16,17,19,26,27,29,30] |
| More Efficient Pre-work for System Training | 4 | [4,5,18,20] |
| More Efficient Information Extraction and Classification | 3 | [20,21,26] |
| Good Diversity and Quantity of Training Data | 6 | [3,4,7,8,12,32] |
| More Dynamic Profile/Strategy Adjustment | 3 | [6,9,10] |
| Defining Best Objective Function Formulation | 1 | [20] |
| Better Feature Selection | 1 | [18] |
| Humanization and Moral Enhancement | 1 | [12] |
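As a small illustration of how the Count column in the challenges table relates to its References column, the counts can be recomputed from the reference lists rather than typed in by hand. The sketch below copies the reference numbers from the table; the names `challenges`, `tally`, and `distinct_papers` are hypothetical helpers introduced only for this example.

```python
# Reference numbers copied from the challenges table above.
challenges = {
    "Best (or Better) Models Selection and Modification": [1, 16, 17, 19, 26, 27, 29, 30],
    "More Efficient Pre-work for System Training": [4, 5, 18, 20],
    "More Efficient Information Extraction and Classification": [20, 21, 26],
    "Good Diversity and Quantity of Training Data": [3, 4, 7, 8, 12, 32],
    "More Dynamic Profile/Strategy Adjustment": [6, 9, 10],
    "Defining Best Objective Function Formulation": [20],
    "Better Feature Selection": [18],
    "Humanization and Moral Enhancement": [12],
}


def tally(table):
    """Return (challenge, count) pairs, most frequently reported first."""
    counts = {name: len(set(refs)) for name, refs in table.items()}
    return sorted(counts.items(), key=lambda item: -item[1])


def distinct_papers(table):
    """Return the set of reference numbers that appear under any challenge."""
    return set().union(*table.values())


ranked = tally(challenges)
for name, count in ranked:
    print(f"{count:2d}  {name}")
```

Because one paper can be listed under several challenges (reference 20 appears three times), the sum of the counts is larger than the number of distinct papers returned by `distinct_papers`.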

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, C.-C.; Huang, A.Y.Q.; Yang, S.J.H. A Review of AI-Driven Conversational Chatbots Implementation Methodologies and Challenges (1999–2022). Sustainability 2023, 15, 4012. https://doi.org/10.3390/su15054012
