Abstract

This study investigates the application of advanced deep learning models to forecast fossil energy prices, a critical factor influencing global economic stability. Unlike previous research, this study conducts a comparative analysis of Gated Recurrent Unit (GRU), Recurrent Neural Network (RNN), Bidirectional Long Short-Term Memory (Bi-LSTM), Long Short-Term Memory (LSTM), Convolutional Neural Network (CNN), and Deep Neural Network (DNN) models. The evaluation metrics are Root Mean Squared Error (RMSE) and Mean Absolute Percentage Error (MAPE). The results reveal that the gated recurrent architectures (GRU, LSTM, and Bi-LSTM) consistently outperform the feedforward and convolutional models, demonstrating a superior ability to capture temporal dependencies and nonlinear dynamics in energy markets. In contrast, the RNN and DNN show relatively weaker generalization capabilities. Visualizations of actual versus predicted prices for each model further emphasize the superior forecasting accuracy of the gated recurrent models. These findings highlight the potential of deep learning to enhance investment and policy decisions, and they carry significant implications for policymakers and investors by emphasizing the value of accurate energy price forecasting in mitigating market volatility, improving portfolio management, and supporting evidence-based energy policies.

1. Introduction

The prediction of stock price movement is a critical component in shaping investment strategies within the stock market and has attracted significant interest from researchers. According to the widely recognized Efficient Market Hypothesis (EMH), stock prices incorporate all available information that influences price fluctuations [1]. As a result, the dynamic and unpredictable nature of market evolution leads to complex and constantly shifting patterns in stock prices, rendering accurate forecasting particularly challenging. However, the potential for high returns has led to the development of many methods for predicting stock markets, and a great deal of research has been performed in this field over the last three decades. Nevertheless, many scholars continue to regard the prediction of stocks within nonlinear, non-stationary financial time series as one of the most formidable challenges [2]. Although several mathematical models have been created, the outcomes remain unsatisfactory. Moreover, much research in stock market forecasting has predominantly concentrated on predicting market volatility [3,4]. Although traditional econometric models have historically dominated financial time series analysis, their linear assumptions often fail to capture the nonlinear, noisy, and dynamic nature of equity markets [5].

The stock market has long posed a significant challenge to analysts due to its inherent unpredictability. However, Artificial Intelligence (AI) has proven effective in identifying hidden patterns in large datasets [6]. AI is widely used in finance to improve predictions and economic growth, with many governments investing in AI to enhance financial forecasting [7]. By automating processes, AI reduces labor costs and boosts efficiency [8]. In addition, AI assists in predicting stock prices, identifying strong and weak stocks, and supporting portfolio management to lower risk [9,10,11,12]. Further, machine learning algorithms such as K-means clustering are often utilized to manage assets and extract significant insights from the data [13,14]. Moreover, algorithms such as Support Vector Machines (SVMs) and Artificial Neural Networks (ANNs) are particularly effective. The SVM organizes stock data by evaluating the latest patterns against historical trends, whereas the ANN helps foresee volatility by revealing nonlinear relationships in the data without prior knowledge of dependencies [15,16,17,18,19].

Deep learning models have been applied effectively in finance, specifically in forecasting future trends of financial time series. Therefore, to make the best use of financial time series, the paper explores six key and widely used deep learning models, namely Long Short-Term Memory (LSTM), Deep Neural Networks (DNNs), Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), Bidirectional Long Short-Term Memory (Bi-LSTM), and Gated Recurrent Units (GRUs), for their efficacy in forecasting five key fossil energy prices: crude oil, natural gas, Brent oil, RBOB gasoline, and heating oil. In a typical feedforward neural network architecture, information flows only in the forward direction. In addition, because each input is processed independently, preserving previous information is a challenge. Such models are therefore limited in handling sequential data, where previous events are necessary to predict upcoming events. RNNs were proposed to address this limitation. RNNs are a specialized form of the ANN constructed to process sequential inputs by integrating internal feedback connections among neurons [20]. The DNN, by contrast, contains dense hidden layers with a hierarchical topology [21]. Furthermore, LSTM is a special variant of the RNN that is mainly suited for handling nonlinear time series with larger time intervals and delays, which aids in retaining memory for predictive tasks [22]. However, LSTM models have limitations because they can learn only from past information [23]. Bi-LSTM is a variant of LSTM that can learn from both previous and upcoming information by incorporating two hidden layers with opposite directions linked to the same output [24]. As mentioned by [25], CNNs are mainly inspired by computer vision applications, but they have also been successfully applied to financial time series analysis [26,27].
In addition, most prior research focuses on evaluating isolated models (for example, LSTM or CNN) or short-term prospects, leaving a critical gap in understanding how advanced DL models perform comparatively in capturing multi-step dependencies for strategic, long-term investment planning. This study addresses this gap by conducting the first comprehensive comparative analysis of six state-of-the-art DL architectures (GRU, RNN, Bi-LSTM, LSTM, CNN, and DNN) applied to forecast fossil energy prices for energy security and financial stability. Moreover, the paper utilizes five major fossil fuel benchmarks, namely crude oil, natural gas, Brent oil, RBOB gasoline, and heating oil, which play a central role in shaping global energy security, macroeconomic stability, and financial market dynamics. Additionally, fossil fuel prices are highly sensitive to geopolitical risks, supply chain disruptions, policy uncertainty, and international market fluctuations, which makes fossil energy markets an ideal setting to evaluate the predictive ability of advanced deep learning models for long-horizon forecasting. Therefore, this study goes beyond short-term volatility analysis and contributes long-term predictive insights that are essential for policymakers, institutional investors, and energy security authorities.

Despite the growing literature on deep learning for price forecasting, a clear gap exists in the application of these methods to long-horizon forecasting of fossil energy commodities (crude oil, natural gas, and coal). Prior studies overwhelmingly concentrate on short-term predictions (for example, next-day and intra-day horizons) or forecast stock markets and electricity prices, where high-frequency data and immediate volatility dominate [28,29,30]. In contrast, multi-month to multi-year forecasting of primary energy carriers, which is critical for strategic energy policy, infrastructure investment, hedging, and national energy security planning, remains severely underexplored. To the best of my knowledge, no prior study has systematically compared major deep learning architectures (DNN, RNN, LSTM, Bi-LSTM, GRU, and CNN) on long-term fossil energy price prediction tasks. This study fills that gap by providing the first comprehensive benchmarking of these six widely used architectures specifically under extended forecasting horizons. I explicitly demonstrate which architectures most effectively capture the complex nonlinear dynamics and long-range temporal dependencies that characterize global fossil energy markets. The comparative framework and empirical findings presented here therefore extend well beyond routine application of off-the-shelf models and establish a new reference point for future research in energy commodity forecasting. The key contributions of this paper are as follows.

Informed long-term energy forecasting: The paper addresses the critical gap in forecasting fossil energy prices over extended periods, which supports strategic investment planning, hedging decisions, and national energy security measures.

Modeling nonlinear dynamics: Numerous existing studies in the financial market prioritize next-day price predictions. In contrast, this research exploits temporal dependencies and captures complex temporal patterns in the energy markets using deep learning techniques designed for sequential financial data.

Comprehensive assessment of DL models: The study conducts extensive experiments to compare the predictive robustness of six key deep learning models (LSTM, DNN, RNN, Bi-LSTM, GRU, and CNN), presenting a thorough understanding of their performance in financial forecasting.

Implications for energy security and financial stability: The study helps deliver more accurate and reliable forecasts for energy commodities, which empowers investors to make informed decisions, reduce risks, and develop trading strategies including trend-following or mean-reverting techniques. Additionally, accurate predictions can help governments optimize strategic energy reserves, supporting market stability.

Economic and financial insights: The study offers actionable insights for a wider set of market participants, showing how accurate forecasting can mitigate herding behaviors, stabilize energy prices, and promote data-driven investment strategies.

Expanding financial machine learning: This study contributes to the growing body of literature on financial machine learning by applying state-of-the-art algorithms to critical energy price benchmarks, further highlighting their transformative potential for market analysis and investment management.

The structure of the paper is as follows. Section 2 reviews related work. Section 3 briefly discusses the six deep learning models and their architectures. Section 4 describes the data, methodology, and experimental setup. Section 5 presents and discusses the results, and Section 6 concludes the paper.

2. Related Work

Over the past few decades, financial market analysis has mostly been influenced by the Efficient Market Hypothesis (EMH), which stressed economic values and led to a stationary approach to forecasting stock price indices. According to the EMH, financial markets, particularly stock prices, follow a random process, making accurate price predictions almost impossible. Over time, as research in market theory expanded, new insights into the predictable components of stock prices gradually appeared, making stock price predictions more viable. Traditional stock price prediction techniques mostly relied on mathematical and statistical models [31,32], fractal theory [33,34,35], and fuzzy theory [36]. Despite their extensive use, these classical statistical models often had limited predictive power because of the statistical laws that governed the variables they aimed to foresee [37]. These limitations persuaded researchers to merge fuzzy theory with statistical methods to extract more refined insights into stock price movements. Alongside statistical models, machine learning techniques including Random Forest (RF), Decision Trees (DTs), and ANNs have gained popularity in stock price prediction. While these models have achieved success, they remain somewhat limited in their ability to fully capture the complex, nonlinear characteristics of fossil fuel price behavior.

Although machine learning models have generally outperformed the traditional models, their predictive performance remains relatively superficial, indicating a need for further improvements to better understand the deeper complexities of financial market dynamics [38,39]. Ref. [40] applied three machine learning models to predict two indices, namely CNX Nifty and S&P Bombay Stock Exchange (BSE) Sensex, using data between January 2003 and December 2012. Their results proved the effectiveness of a two-stage fusion model in reducing the overall prediction error. In addition, ref. [41] utilized numerous machine learning algorithms to understand the dynamics of market risk premia of Chinese stock market returns and to evaluate the predictive power of machine learning algorithms. In addition to establishing the outstanding predictive performance of neural networks during the Chinese stock market crash of 2015, their results showed strong predictability linked to retail investors' short-term focus, especially for small stocks. Ref. [42] used DNNs and traditional ANNs to predict the daily return direction of the SPDR S&P 500 ETF. Their results showed the high accuracy of the DNN-based classification model. Further, ref. [43] tested the performance of a linear model (LM), ANN, RF, and Support Vector Regression (SVR) on three major indices: Ibex35, DAX, and Dow Jones Industrial (DJI). Their results demonstrated that combining ML with technical analysis strategies produces enhanced trading signals and makes the proposed trading rules more effective. Moreover, ref. [44] used various machine learning algorithms and demonstrated that investors can find profitable technical trading rules using historical prices, while establishing that genetic algorithms provide an advantage in creating profitable rules in comparison with machine learning algorithms aimed particularly at minimizing losses.

Recent advancements in DL have revolutionized financial time series forecasting, yet the field remains divided on the optimal architecture for stock index prediction. Early work by [45] demonstrated LSTM’s efficacy in capturing short-term stock price dependencies, and LSTMs have since attracted substantial attention due to their versatility and effectiveness across a wide range of applications. To simplify the LSTM architecture, ref. [46] proposed leveraging the content of the long-term memory cell within a recurrent block containing only two gates. Nevertheless, this approach overlooks the significance of the LSTM output, which may limit its applicability to more complex tasks, despite its potential utility in simpler scenarios. In addition, these studies focused narrowly on single-model evaluations, neglecting comparative performance analyses. Moreover, ref. [47] highlighted Bi-LSTM’s edge in incorporating forward-backward temporal contexts. With their complex hidden layers, deep learning models are more effective than traditional machine learning algorithms because of their ability to learn and model complex patterns in large datasets, thereby achieving higher accuracy [48,49]. Given the nonlinear, complex, and often chaotic nature of stock market price movements, deep learning methods are poised to show a significant predictive advantage. The previous literature on the use of deep learning for financial market prediction is ample. For example, ref. [50] utilized a hybrid dynamic correlation-based DCDNN model to predict the correlation between the stock markets of China, Hong Kong, the United States, and Europe. In addition to proving the effectiveness of deep learning models in predicting the correlation among global stock markets, their results also demonstrated that the DCDNN performed better, especially during the 2008 financial crisis. In addition, ref. [51] utilized LSTM to predict the US stock index S&P 500 and showed that the model achieved excellent prediction performance compared to a standard DNN and logistic regression. Moreover, ref. [52] applied a deep learning-based CNN and ANN and achieved high accuracies of 98.92% and 97.66% for stock price predictions, respectively.

Additionally, ref. [53] performed a comparative analysis of machine learning and deep learning models and highlighted the CNN-LSTM model for accurately predicting stock prices. Moreover, ref. [54] used a combination of three Deep Neural Networks (RNN, LSTM, and CNN) to predict the prices of NSE-listed companies and quantified the performance using percentage error. Recently, ref. [55] showed that the CNN outperformed all other deep learning models in forecasting stock prices of the Halal tourism sentiment index. Furthermore, ref. [56] showed that LSTM models provided a mean absolute error (MAE) reduction of 23.4% compared to traditional forecasting methods, with a forecast accuracy of 89.7% in financial market predictions. In addition, ref. [57] used deep learning models to predict the stock prices of three well-known companies using data from January 2011 to September 2022. Their results showed that the GRU and LSTM achieved the best prediction accuracy, regularly attaining lower MAPE values across multiple datasets. The application of GRUs in finance, though less explored, has gained traction due to their computational efficiency. For instance, ref. [58] reported the GRU’s parity with LSTM in predicting stock and cryptocurrency returns but did not extend the analysis to multi-step forecasting. In addition, ref. [59] integrated environmental data and financial indicators using the PCA-GRU-LSTM model to improve stock price forecasting, emphasizing the role of environmental factors and advanced machine learning techniques in financial predictions. More recently, ref. [60] explored forecasting realized volatility in stock indices using data from the Shanghai Stock Exchange Composite (SSE), Infosys (INFY), and the National Stock Exchange Index (NIFTY). They proposed a hybrid model combining optimized Variational Mode Decomposition (VMD) with the deep learning methods ANN, LSTM, and GRU, and demonstrated that the DL model provided highly accurate volatility predictions, offering practical benefits for financial institutions in managing risk effectively. Recently, ref. [61] utilized DL models to forecast electricity prices and identified the VMD-BiLSTM architecture as better at predicting day-ahead electricity prices in the US.

Despite increasing interest in AI-driven forecasting, deep learning applications to fossil energy markets remain limited compared to extensive work on equity and cryptocurrency markets. Much of the existing research focuses on stock indices, offering little insight into how these methods apply to the unique behavior of fossil fuel prices driven by geopolitical shocks, supply disruptions, and structural cycles. In energy markets, most prior studies are review papers rather than empirical forecasting analyses, such as [62,63,64,65], resulting in limited evidence on the effectiveness of different model types.

For fossil fuels, only a few empirical studies have applied ML models to energy commodities, and these typically examine single assets, narrow model selections, and short horizons. For example, ref. [66] forecasted Morocco’s oil and natural gas consumption and production using regression and machine learning models to assess future energy security risks and guide policy decisions aligned with the country’s NDC commitments. In addition, ref. [67] showed that ML models including Neural Networks and RFs outperform traditional econometric methods in forecasting major global energy commodity prices and uniquely capture market turning points. Ref. [68] proposed a QHSA-LSSVM hybrid model that optimized LSSVM parameters using a quantum harmony search algorithm and demonstrated higher accuracy in forecasting China’s fossil fuel energy consumption for power generation compared to traditional regression, GM (1,1), BP, and standard LSSVM models. Recently, ref. [69] used optimized machine learning models to accurately forecast China’s overall energy use, fossil fuel consumption, and electricity demand, reporting that CatBoost-ARO, XGBR-ARO, and LightGBM-ARO achieved the highest predictive accuracy and effectively captured underlying consumption patterns driven by rapid industrialization and urbanization. Additionally, previous work rarely connects forecasting outcomes to energy security or financial stability, leaving a clear gap that this study addresses.

To address these gaps, this study provides new insights and tackles critical limitations of the previous research. First, most studies prioritize short-term horizons of daily predictions, overlooking the need for models that maintain stable performance over extended periods, which is a prerequisite for long-term portfolio management. Second, fossil energy prices remain understudied compared to other financial markets, despite their distinct exposure and importance for global economies and financial market participants. Third, the study provides one of the first systematic comparisons of GRU, LSTM, Bi-LSTM, RNN, CNN, and DNN models applied to long-horizon fossil energy price forecasting. By employing consistent datasets, identical preprocessing procedures, and multiple performance metrics (RMSE and MAPE), this study provides a more rigorous and comparable evaluation of model performance. The findings highlight the dominance of recurrent architectures in capturing nonlinear temporal dynamics, thereby offering new insights into the suitability of deep learning models for energy security analysis and financial decision-making. Therefore, this study is the first to explore the applicability of six key deep learning methods to predicting fossil energy prices through a thorough comparative analysis. In addition, accurate prediction can play a vital role in long-term investment strategies by offering insights into upcoming market trends, thereby helping investors make more informed decisions. Further, the study provides valuable insights by highlighting effective deep learning models that can help in adapting investment strategies to evolving market states, so that long-term investments are aligned with expected future trends, eventually improving the chances of attaining sustainable returns.

3. Deep Learning Models

3.1. Deep Neural Network (DNN)

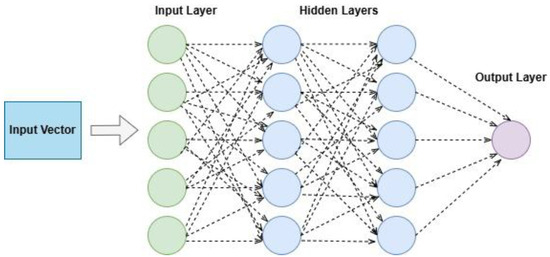

Introduced in the 1940s, the ANN consists of input and output layers with hidden layers between them, while the DNN, proposed by [70], extends it with dense hidden layers arranged in a hierarchical topology. The major difference lies in the number of hidden layers: the DNN uses multiple layers to enable the model to acquire more sophisticated and abstract representations of the data. This study uses a DNN designed for time series forecasting with multiple layers, which are necessary for capturing the complex patterns in the data. The architecture contains an input layer, multiple hidden layers, and an output layer. Figure 1 shows the architecture of a standard DNN.

Figure 1. Architecture of DNN.

3.2. Recurrent Neural Network (RNN)

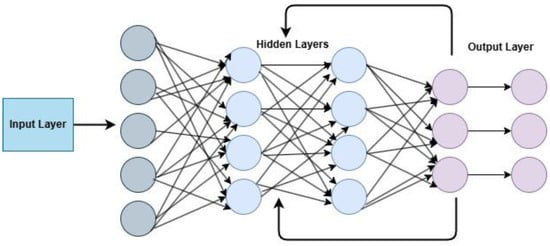

A Recurrent Neural Network is an advanced type of neural network that features internal memory, enabling it to process lengthy sequences of data [71]. This characteristic makes RNNs particularly effective for tasks like stock price prediction, which requires the analysis of extensive historical data. In addition, RNNs leverage the sequence of data to develop a memory that helps them interpret both current and past information within that sequence. The concept of simple RNNs was first introduced by [72]. This paper utilizes the simple RNN model, which is fully connected. A fully connected RNN means that every neuron in one layer is linked to every other neuron in the subsequent layer, as shown in Figure 2.

Figure 2. Architecture of RNN.
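
The recurrence described above can be sketched in a few lines of NumPy. This is a minimal illustration that assumes the common tanh update for a simple RNN; the weights are random and the dimensions (`n_hidden`, `n_steps`) are hypothetical, so it is not the trained model used in this study.

```python
import numpy as np

def simple_rnn_forward(x_seq, W_x, W_h, b):
    """Run a simple RNN over one sequence and return the final hidden state."""
    h = np.zeros(W_h.shape[0])  # the hidden state acts as the internal memory
    for x_t in x_seq:
        # The new state mixes the current input with the previous state.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
    return h

# Hypothetical sizes: one feature (price), 4 hidden units, 60 time steps.
rng = np.random.default_rng(0)
n_features, n_hidden, n_steps = 1, 4, 60
W_x = rng.normal(scale=0.1, size=(n_hidden, n_features))
W_h = rng.normal(scale=0.1, size=(n_hidden, n_hidden))
b = np.zeros(n_hidden)

x_seq = rng.random((n_steps, n_features))  # synthetic scaled inputs, not real prices
h_last = simple_rnn_forward(x_seq, W_x, W_h, b)
```

Because the same `W_h` is applied at every step, gradients are multiplied through it repeatedly during training, which is one way to see why long sequences are hard for a simple RNN.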

3.3. LSTM and Bi-LSTM

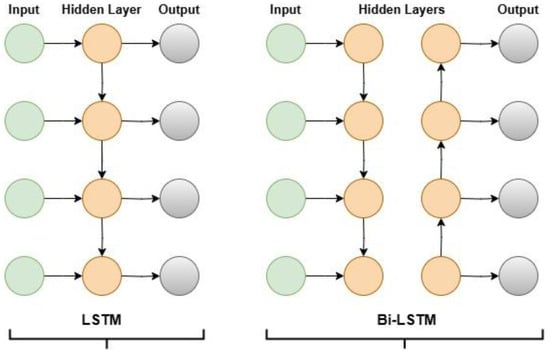

LSTM is a specialized variant of the RNN that is designed to handle sequential data such as time series by maintaining an internal memory of prior inputs. While RNNs are effective for these types of tasks, they often encounter the issue of vanishing gradients [73], which can slow down the learning process or cause it to halt entirely. To address this challenge, LSTMs were developed by [45]. They are capable of retaining information over longer periods and can effectively learn from inputs with significant time gaps between them. In addition, the LSTM architecture has proven to be highly effective in modeling time series data across a range of frameworks, with its direct application having outperformed conventional machine learning and Artificial Neural Network techniques [74]. An LSTM network comprises three distinct gates: the input gate, which governs the inclusion of new information; the forget gate, which is responsible for discarding irrelevant data; and the output gate, which determines the information to be released. Further, the Bidirectional LSTM (Bi-LSTM), introduced by [75], enhances LSTM by processing data in both forward and backward directions, allowing it to capture dependencies from both the previous and upcoming context. Figure 3 illustrates the architecture of LSTM and Bi-LSTM. This paper utilizes LSTM and Bi-LSTM for time series forecasting.

Figure 3. Architecture of LSTM and Bi-LSTM.
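
To make the three gates concrete, the following sketch implements one step of a standard LSTM cell in NumPy and then combines a forward pass and a backward pass in the manner of a Bi-LSTM. The stacked parameter layout, the random weights, and the helper names (`lstm_step`, `run_lstm`, `init_params`) are assumptions made for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM step. W, U, b stack the input, forget, output, and candidate blocks."""
    k = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b      # pre-activations, shape (4k,)
    i = sigmoid(z[0:k])               # input gate: how much new information to add
    f = sigmoid(z[k:2 * k])           # forget gate: how much old memory to keep
    o = sigmoid(z[2 * k:3 * k])       # output gate: how much memory to release
    g = np.tanh(z[3 * k:4 * k])       # candidate memory content
    c = f * c_prev + i * g            # updated cell state
    h = o * np.tanh(c)                # updated hidden state
    return h, c

def run_lstm(x_seq, W, U, b):
    """Run the cell over a sequence and return the final hidden state."""
    k = U.shape[1]
    h, c = np.zeros(k), np.zeros(k)
    for x_t in x_seq:
        h, c = lstm_step(x_t, h, c, W, U, b)
    return h

def init_params(rng, n_features, k):
    """Random illustrative parameters (hypothetical, untrained)."""
    W = rng.normal(scale=0.1, size=(4 * k, n_features))
    U = rng.normal(scale=0.1, size=(4 * k, k))
    return W, U, np.zeros(4 * k)

rng = np.random.default_rng(0)
n_features, k, n_steps = 1, 8, 60
x_seq = rng.random((n_steps, n_features))  # synthetic scaled inputs

# Bi-LSTM idea: one cell reads the window forward, a second cell with its own
# parameters reads it backward, and the two final states are concatenated.
h_forward = run_lstm(x_seq, *init_params(rng, n_features, k))
h_backward = run_lstm(x_seq[::-1], *init_params(rng, n_features, k))
h_bi = np.concatenate([h_forward, h_backward])
```

Note that in a forecasting setting the backward pass only sees the later part of the input window, never values beyond the window itself.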

3.4. Convolutional Neural Network (CNN)

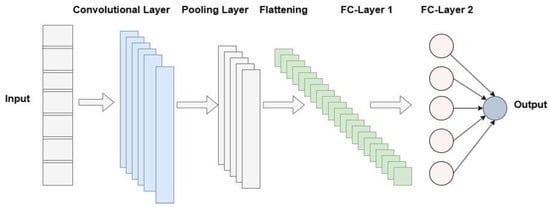

The Convolutional Neural Network is a specific type of DNN that incorporates convolution layers, as introduced by [76]. CNNs are widely used for tasks related to vision and image processing, such as image classification, object detection, and image segmentation [77,78,79]. In addition, the CNN has been utilized in several studies for predicting stock market trends [80,81]. One of the key advantages of CNNs over traditional deep learning models, such as the Deep Multilayer Perceptron (DMLP), is their reduced number of parameters. Further, the use of kernel window functions for filtering allows CNN architectures to perform image processing with fewer parameters, which is advantageous in terms of computational efficiency and storage requirements. Moreover, the CNN architecture typically includes several types of layers: convolutional, max-pooling, dropout, and fully connected Multilayer Perceptron (MLP) layers. Figure 4 presents the architecture of the CNN model. The CNN is one of the deep neural models utilized in this paper for time series forecasting.

Figure 4. Architecture of CNN.
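
The parameter saving from weight sharing can be checked with simple arithmetic. The sketch below compares a one-dimensional convolutional layer with a fully connected layer that produces an output of the same size; the kernel size and number of filters are hypothetical values chosen for illustration, not the settings of this study.

```python
def conv1d_param_count(kernel_size, in_channels, filters):
    """Weights of every filter plus one bias per filter."""
    return kernel_size * in_channels * filters + filters

def dense_param_count(n_inputs, n_outputs):
    """One weight per input-output pair plus one bias per output."""
    return n_inputs * n_outputs + n_outputs

window, in_channels = 60, 1      # 60-step window of a single price series
kernel_size, filters = 3, 64     # hypothetical convolution settings

# The convolution reuses the same small kernel at every time step.
conv_params = conv1d_param_count(kernel_size, in_channels, filters)

# A dense layer mapping the flattened window to an output of the same size
# (60 positions x 64 channels) needs a separate weight for every pair.
dense_params = dense_param_count(window * in_channels, window * filters)

print(conv_params, dense_params)
```

Under these assumed settings the convolutional layer needs 256 parameters, while the equivalent dense layer needs 234,240.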

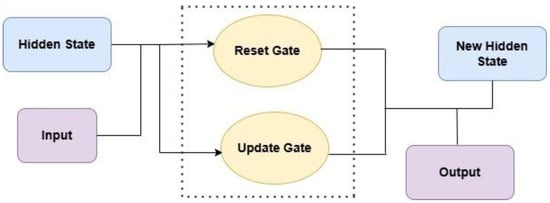

3.5. Gated Recurrent Unit (GRU)

Introduced by [82], the GRU is a subtype of the RNN that resolves the issue of long-distance information capture by integrating a gating structure. The GRU is a simplified type of LSTM with only two gates, the update gate and the reset gate. The update gate regulates how much of the previous state and of the current candidate output is passed on to the next step. The reset gate determines how much past information is forgotten: it controls the extent to which the prior hidden state is discarded when the new input is incorporated. Previous research shows that the GRU is computationally more efficient than LSTM [83], which allows faster training [84]. The architecture of the GRU model is shown in Figure 5.

Figure 5. Architecture of GRU.
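
A short sketch of one GRU step, together with a rough parameter count, illustrates why the GRU is lighter than LSTM. The update convention used here (new state = `(1 - z) * h_prev + z * candidate`) is one of two common conventions, and the single-feature input is an assumption; the 256 units match the setting reported in Section 4.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, W, U, b):
    """One GRU step. W, U, b stack the update, reset, and candidate blocks."""
    k = h_prev.shape[0]
    a = W @ x_t + b                                   # input contribution, shape (3k,)
    z = sigmoid(a[0:k] + U[0:k] @ h_prev)             # update gate
    r = sigmoid(a[k:2 * k] + U[k:2 * k] @ h_prev)     # reset gate
    # The reset gate scales the previous state before it enters the candidate.
    cand = np.tanh(a[2 * k:] + U[2 * k:] @ (r * h_prev))
    return (1.0 - z) * h_prev + z * cand

def recurrent_param_count(units, n_features, n_blocks):
    """Each block has an input matrix, a recurrent matrix, and a bias vector."""
    return n_blocks * (units * (n_features + units) + units)

# One illustrative step with random, untrained weights.
rng = np.random.default_rng(0)
k, n_features = 8, 1
W = rng.normal(scale=0.1, size=(3 * k, n_features))
U = rng.normal(scale=0.1, size=(3 * k, k))
h = gru_step(rng.random(n_features), np.zeros(k), W, U, np.zeros(3 * k))

# Parameter counts for a single recurrent layer with 256 units and one input feature.
units = 256
lstm_params = recurrent_param_count(units, 1, n_blocks=4)  # 3 gates + candidate
gru_params = recurrent_param_count(units, 1, n_blocks=3)   # 2 gates + candidate
rnn_params = recurrent_param_count(units, 1, n_blocks=1)   # no gates
```

Under these assumptions the layer sizes are 264,192 (LSTM), 198,144 (GRU), and 66,048 (simple RNN) parameters, so the GRU carries about three quarters of the LSTM's weights. Some library implementations add a second bias vector per block, so reported counts can differ slightly.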

4. Methodology, Experiments, and Data

The study uses the same configuration for all six deep learning models to ensure an unbiased comparison. The dates are converted into integer values ranging from 1 to $N$, where $N$ is the number of observations. Previous research shows the importance of normalizing the data [85,86,87] because financial time series are noisy; therefore, the study applies the min-max scaler to normalize the data before further processing, ensuring that the model is trained on a complete and clean dataset. The formula is presented in Equation (1).

$$X_{\text{scaled}} = \frac{X - X_{\min}}{X_{\max} - X_{\min}} \tag{1}$$

In the above equation, $X$ is the feature matrix, and $X_{\min}$ and $X_{\max}$ denote its minimum and maximum values, respectively. Moreover, I used daily price data for five major fossil energy commodities (crude oil, Brent oil, RBOB gasoline, natural gas, and heating oil) sourced from Yahoo Finance. The use of Yahoo Finance data is well established in the empirical literature, specifically in studies involving high-frequency financial time series. More specifically, refs. [88,89] utilized Yahoo Finance data to analyze major energy sector commodities and their associations with broader financial markets. More recently, refs. [90,91,92] have used data from Yahoo Finance to model market behavior, develop prediction engines, and examine the role of big data analytics in trading decision-making. Therefore, the adoption of this data source in the present study is aligned with established methodological practices in the literature, including prior research on financial networks and market dynamics such as [93]. The detailed description of each energy commodity, along with its ticker and data source, is presented in Table 1. These energy prices serve as critical benchmarks for global energy markets, influencing energy security, investment strategies, and macroeconomic stability. In addition, I use an extended daily dataset spanning from 4 January 2016 to 28 August 2025, which covers bearish, bullish, and stagnant market periods, enhancing the model’s ability to generalize.

Table 1. Summary of fossil energy price data.
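
The min-max normalization in Equation (1), and the inverse transformation later used to map predictions back to price units, can be sketched as follows. The function names and the four example prices are hypothetical and serve only to show the arithmetic.

```python
import numpy as np

def minmax_fit(x):
    """Return the minimum and maximum used for scaling."""
    return float(np.min(x)), float(np.max(x))

def minmax_transform(x, x_min, x_max):
    """Scale values linearly so that x_min maps to 0 and x_max maps to 1."""
    return (np.asarray(x, dtype=float) - x_min) / (x_max - x_min)

def minmax_inverse(x_scaled, x_min, x_max):
    """Map scaled values back to the original units."""
    return np.asarray(x_scaled, dtype=float) * (x_max - x_min) + x_min

prices = np.array([50.0, 55.0, 60.0, 70.0])   # hypothetical prices in USD
x_min, x_max = minmax_fit(prices)

scaled = minmax_transform(prices, x_min, x_max)
restored = minmax_inverse(scaled, x_min, x_max)
```

Here `scaled` is `[0.0, 0.25, 0.5, 1.0]`, and `restored` recovers the original prices, which is the round trip needed before computing errors in price units.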

The descriptive statistics of the five fossil energy commodities, in Table 2, provide an initial understanding of their price behavior and underlying market characteristics over the sample period between January 2016 and August 2025. Crude oil exhibits an average price of USD 63.72, with a relatively high standard deviation of 17.82, indicating substantial volatility. The interquartile range, defined by the 25th percentile (USD 50.84) and 75th percentile (USD 74.83), shows that most of the trading activity occurred within a moderately dispersed price band. Similarly, Brent oil displays a mean value of USD 68.02, accompanied by a standard deviation of 18.12, highlighting volatility patterns comparable to crude oil. Its price fluctuated between USD 19.33 and USD 127.98, consistent with global supply or demand disruptions, geopolitical tensions, and commodity-specific shocks. However, natural gas shows a lower mean of USD 3.20 with a standard deviation of 1.42, suggesting moderate volatility relative to oil benchmarks. Heating oil and RBOB gasoline also demonstrate distinct volatility structures. Heating oil, with a mean of USD 2.14 and a standard deviation of 0.71, reveals moderate fluctuation, ranging from USD 0.61 to USD 5.14. In comparison, RBOB gasoline averages USD 1.98 with a standard deviation of 0.58, and its prices span between USD 0.41 and USD 4.28, capturing the dynamics associated with refining margins, crude oil pass-through effects, and seasonal driving trends. These patterns justify the use of deep learning models capable of capturing nonlinear dependencies and volatility clustering inherent in energy commodity price movements.

Table 2. Descriptive statistics.

In addition, I employed a chronological split, where the first 80% of the data was allocated for training and the remaining 20% was reserved for testing and evaluating model performance. By implementing this approach, I ensure that future data are never used to inform past predictions, thereby preventing data leakage. Time series sequences were created using a sliding window of 60 days, enabling the models to learn temporal dependencies. The models are trained using a batch size of 32 and optimized using the Adam optimizer. Furthermore, each of the six models is trained for a total of 100 epochs to ensure effective learning and convergence. The experimental setup involves strict temporal ordering, separate scaling with MinMaxScaler, and no use of future information, which prevents data leakage. Table 3 lists the parameters and their values.

Table 3. Parameters.
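The preprocessing steps described above (chronological 80/20 split, min-max scaling, 60-day sliding windows, and inverse transformation) can be sketched in plain Python as follows. This is a minimal illustration and not the study's code: the helper names are hypothetical, the price series is synthetic, and fitting the scaling bounds on the training segment only is an assumption about what "separate scaling" means here.

```python
from typing import List, Sequence, Tuple


def chronological_split(series: Sequence[float], train_fraction: float = 0.8):
    """Split without shuffling: the earliest observations form the training set."""
    cut = int(len(series) * train_fraction)
    return list(series[:cut]), list(series[cut:])


def fit_min_max(train: Sequence[float]) -> Tuple[float, float]:
    """Learn the scaling bounds from the training segment only (assumption)."""
    return min(train), max(train)


def scale(values: Sequence[float], lo: float, hi: float) -> List[float]:
    """Map values to [0, 1] relative to the training bounds."""
    span = hi - lo
    if span == 0:
        raise ValueError("training data must not be constant")
    return [(v - lo) / span for v in values]


def inverse_scale(values: Sequence[float], lo: float, hi: float) -> List[float]:
    """Map scaled values back to the original price scale."""
    return [v * (hi - lo) + lo for v in values]


def make_windows(values: Sequence[float], window: int = 60):
    """Pair each run of `window` consecutive values with the value that follows."""
    count = len(values) - window
    inputs = [list(values[i:i + window]) for i in range(count)]
    targets = [values[i + window] for i in range(count)]
    return inputs, targets


# Synthetic, steadily rising prices used purely for demonstration.
prices = [float(p) for p in range(100, 500)]
train, test = chronological_split(prices, train_fraction=0.8)
lo, hi = fit_min_max(train)
train_scaled = scale(train, lo, hi)
test_scaled = scale(test, lo, hi)  # may exceed 1.0: the bounds come from training
X_train, y_train = make_windows(train_scaled, window=60)
X_test, y_test = make_windows(test_scaled, window=60)
restored = inverse_scale(test_scaled, lo, hi)
```

Because the bounds are learned from the training segment, scaled test values can fall outside [0, 1] when test prices move beyond the training range. That is the expected cost of keeping future information out of the scaler.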

The primary objective of the study is to provide a comparative baseline performance of six different deep learning architectures (LSTM, DNN, RNN, Bi-LSTM, GRU, and CNN) on commodity time series prediction using the five fossil fuel energy prices. As such, I adopted commonly used and empirically validated parameters, including 256 units, a dropout of 0.2, a batch size of 32, and 100 epochs, which are widely recognized in the literature as robust starting points for time series forecasting tasks [94,95]. Using consistent architectural and training parameters across the models ensures a fair and controlled comparison. This selection also avoids introducing variability from extensive tuning, which could bias results in favor of a particular model. All six DL models used standard configurations without custom modifications to architecture depth or connectivity. This uniformity ensures that the models are directly comparable in structure and capacity.
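One way to enforce such a controlled comparison in code is to hold the shared settings in a single immutable object that every model reads from. The sketch below is illustrative only: the class name `ExperimentConfig` and its field names are hypothetical, while the values are those stated in the text.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ExperimentConfig:
    """Settings shared by all six models (values taken from the text)."""
    units: int = 256
    dropout: float = 0.2
    batch_size: int = 32
    epochs: int = 100
    optimizer: str = "adam"
    window: int = 60
    train_fraction: float = 0.8


MODEL_NAMES = ("LSTM", "DNN", "RNN", "Bi-LSTM", "GRU", "CNN")

# Every model points at the same frozen object, so no model can be tuned
# separately by accident.
SHARED = ExperimentConfig()
configs = {name: SHARED for name in MODEL_NAMES}

if __name__ == "__main__":
    for name in MODEL_NAMES:
        print(name, asdict(configs[name]))
```

Freezing the dataclass means that an attempt to change a setting for one model raises an error instead of silently breaking the comparison.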

Once the model training phase is complete, predictions are generated for the test dataset, and the results are compared with the actual values after inverse transformation to the original price scale. The prediction accuracy and reliability of each deep learning model are evaluated using Root Mean Squared Error (RMSE) and Mean Absolute Percentage Error (MAPE). RMSE is a widely used metric that effectively captures the magnitude of prediction errors, while MAPE evaluates relative errors, making it particularly suitable for comparing performance across different commodities. These metrics have been extensively utilized in prior studies and are recognized as robust indicators of forecasting accuracy in financial time series analysis [95,96]. The analytical formulations of RMSE and MAPE are defined as follows.

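$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{t=1}^{n}\left(y_t - \hat{y}_t\right)^2}$$

$$\mathrm{MAPE} = \frac{1}{n}\sum_{t=1}^{n}\left|\frac{y_t - \hat{y}_t}{y_t}\right|$$

where $y_t$ is the original time series, $\hat{y}_t$ indicates the predicted time series obtained from the model, and $n$ signifies the number of observations. Moreover, the lower the values of RMSE and MAPE, the better the performance of the model.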
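For readers who wish to reproduce the two metrics, a minimal plain-Python sketch follows. The function names are hypothetical, the sample values are invented, and MAPE is returned as a fraction rather than a percentage, which is an assumption based on the magnitude of the values reported in the results tables.

```python
import math
from typing import Sequence


def _check(actual: Sequence[float], predicted: Sequence[float]) -> None:
    if len(actual) != len(predicted):
        raise ValueError("series must have the same length")
    if not actual:
        raise ValueError("series must not be empty")


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root Mean Squared Error, in the units of the original series."""
    _check(actual, predicted)
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean Absolute Percentage Error as a fraction (assumption: not x100)."""
    _check(actual, predicted)
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Invented values on the original price scale, for demonstration only.
actual_prices = [100.0, 200.0, 400.0]
predicted_prices = [110.0, 180.0, 400.0]
example_rmse = rmse(actual_prices, predicted_prices)
example_mape = mape(actual_prices, predicted_prices)
```

Both functions should be applied after predictions have been transformed back to the original price scale; otherwise RMSE is expressed in scaled units and cannot be compared with prices.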

5. Results and Discussion

This paper evaluated the predictive performance of six deep learning models, namely LSTM, DNN, RNN, CNN, Bi-LSTM, and GRU, in forecasting fossil energy prices. The models are assessed using the key metrics of RMSE and MAPE, and this section summarizes and discusses the results obtained for the five fossil fuel prices.

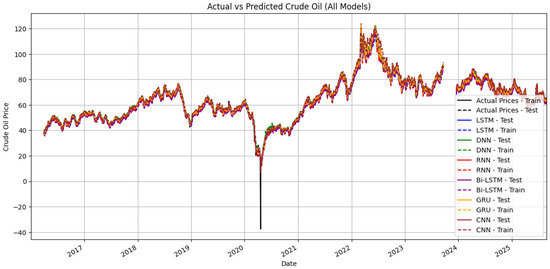

5.1. Crude Oil

Table 4 reports the forecasting results for crude oil. The LSTM model outperformed all other deep learning architectures, achieving the lowest test RMSE (23.3159) and test MAPE (0.4603), along with a strong training RMSE (1.8443) and training MAPE (0.0318). This implies that the LSTM model not only fits the training data of crude oil prices well but also generalizes effectively to unseen data. The Bi-LSTM model performed almost equally well and ranks second after the LSTM, with a test RMSE of 23.3505 and a test MAPE of 0.4603, confirming its robust temporal learning capability and high consistency with the LSTM model. These results therefore demonstrate that the Bi-LSTM is a robust alternative to the LSTM for crude oil data, with comparably strong generalization capability.

Table 4. Results for crude oil.

Furthermore, the RNN model produced a test RMSE of 24.1147 and a test MAPE of 0.4766, representing reasonable predictive capability but slightly weaker generalization than the LSTM-based models. The GRU model ranks fourth with a test RMSE of 25.5417 and a test MAPE of 0.5072, suggesting effective handling of sequential dependencies but lower sensitivity to complex temporal variations in crude oil prices. In contrast to conventional RNNs, the GRU employs a gating mechanism that regulates the information flow, enabling it to keep crucial dependencies while discarding unnecessary input. In financial time series forecasting, where historical trends significantly influence future movements, this architecture is especially helpful. Moreover, the DNN model exhibited higher test errors, signifying limited capability to capture sequential dependencies due to its static feedforward structure. The CNN model ranks last, with the highest test RMSE (27.0272) and a test MAPE of 0.5391. The underperformance of the CNN and DNN underscores the challenges of applying non-recurrent architectures to financial time series data, as these models lack inherent mechanisms to model temporal dependencies effectively [97]. While CNNs excel at feature extraction, their dependence on spatial hierarchies may not align well with the sequential patterns inherent in crude oil prices. Figure 6 presents the actual prices and each model’s predictions in the training and testing phases; the break point separates the two phases.

Figure 6. Actual vs. predicted prices of crude oil using DL models.
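To put the crude oil figures side by side, the sketch below ranks the models by the test RMSE values quoted in this subsection and expresses each as a percentage excess over the best model. It is illustrative arithmetic only. The DNN is omitted because its test RMSE is not quoted in the text, and the Bi-LSTM value of 23.3505 is treated as a test RMSE, which is an assumption based on its magnitude. The variable names are hypothetical.

```python
# Test RMSE values for crude oil as quoted in the text (DNN not quoted).
# Assumption: 23.3505 is the Bi-LSTM test RMSE.
CRUDE_TEST_RMSE = {
    "LSTM": 23.3159,
    "Bi-LSTM": 23.3505,
    "RNN": 24.1147,
    "GRU": 25.5417,
    "CNN": 27.0272,
}

# Rank models from lowest (best) to highest test RMSE.
ranking = sorted(CRUDE_TEST_RMSE, key=CRUDE_TEST_RMSE.get)
best = CRUDE_TEST_RMSE[ranking[0]]

# Percentage by which each model's test RMSE exceeds the best one.
excess_pct = {
    name: 100.0 * (value - best) / best
    for name, value in CRUDE_TEST_RMSE.items()
}

if __name__ == "__main__":
    for name in ranking:
        print(f"{name:<8} RMSE = {CRUDE_TEST_RMSE[name]:.4f}  (+{excess_pct[name]:.2f}%)")
```

Read this way, the LSTM and Bi-LSTM are separated by a fraction of a percent, whereas the gap between the best and the last-ranked model is considerably wider.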

To sum up, the LSTM and Bi-LSTM models demonstrate clear superiority in modeling the dynamic and nonlinear characteristics of crude oil price fluctuations. Their ability to retain long-term dependencies enables them to adapt to complex market influences, including geopolitical tensions, production adjustments, and overall demand shifts. The superior performance of LSTM-based models also reveals their potential as a reliable tool for anticipating the price of crude oil, a key commodity, which policymakers can leverage to stabilize energy markets and minimize economic shocks. These results are intuitive given the structural characteristics of global crude oil markets: oil prices tend to follow smoother cyclical movements influenced by OPEC production policies, global demand cycles, and macroeconomic conditions. These slower-moving dynamics are well captured by LSTM-based models, whose gating mechanisms allow them to retain longer-range dependencies in the data. In contrast, the GRU performs relatively well but slightly underperforms the LSTM, likely because its simplified architecture captures short-term patterns effectively but is less suited to commodities with multi-month structural price cycles. For crude oil market analysts, policy units, and long-term strategic planners, the results imply that LSTM-family models appear most reliable for medium- to long-horizon monitoring, investment planning, and reserve allocation. Integrating LSTM predictions into risk dashboards could help anticipate multi-week and multi-month market shifts more effectively than traditional models.

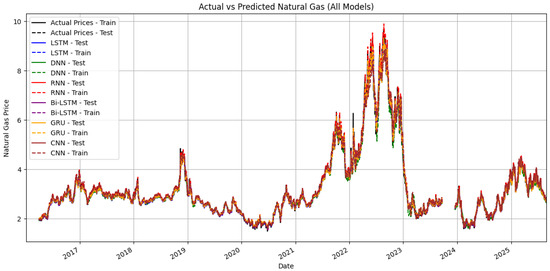

5.2. Natural Gas

Table 5 exhibits the forecasting results for natural gas prices across all deep learning models, while Figure 7 visually explains the actual versus predicted price patterns. The results reveal that the GRU model demonstrated the most effective predictive performance, achieving the lowest test RMSE (0.6811) and test MAPE (0.1967), while maintaining competitive training accuracy with a train RMSE of 0.0920 and train MAPE of 0.0242. This indicates that the GRU model generalizes well to unseen data and effectively captures the nonlinear dynamics inherent in natural gas price movements. The ability of the GRU model to retain long-term dependencies while preventing vanishing gradient issues makes it particularly well-suited for forecasting complex and volatile time series including natural gas prices. The finding aligns with the known volatility profile of natural gas markets, where prices exhibit sharp seasonal spikes (for example, winter heating demand), sudden supply disruptions, and storage-related shocks. The GRU’s simpler gating structure makes it more responsive to these abrupt, high-frequency changes, avoiding over-smoothing, which is a common issue for LSTM in highly volatile contexts.

Table 5. Results for natural gas.

Figure 7. Actual vs. predicted prices of natural gas using DL models.
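The GRU gating structure referred to above can be made concrete with a single-unit sketch. The code below implements one common formulation of the GRU update (an update gate `z`, a reset gate `r`, and a candidate state) for a scalar hidden state. It is a textbook-style illustration with hand-picked weights, not the trained 256-unit layer used in the study, and the class name `ScalarGRUCell` is hypothetical. Note that some libraries swap the roles of `z` and `1 - z`.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


@dataclass
class ScalarGRUCell:
    """One-unit GRU with scalar weights (illustrative, untrained)."""
    w_z: float = 0.0  # update gate: input weight, recurrent weight, bias
    u_z: float = 0.0
    b_z: float = 0.0
    w_r: float = 0.0  # reset gate
    u_r: float = 0.0
    b_r: float = 0.0
    w_h: float = 0.0  # candidate state
    u_h: float = 0.0
    b_h: float = 0.0

    def step(self, x: float, h_prev: float) -> float:
        # Update gate: how much of the new candidate to let in.
        z = sigmoid(self.w_z * x + self.u_z * h_prev + self.b_z)
        # Reset gate: how much of the past to use when forming the candidate.
        r = sigmoid(self.w_r * x + self.u_r * h_prev + self.b_r)
        candidate = math.tanh(self.w_h * x + self.u_h * (r * h_prev) + self.b_h)
        # Blend the old state and the candidate.
        return (1.0 - z) * h_prev + z * candidate

    def run(self, inputs: Iterable[float], h0: float = 0.0) -> List[float]:
        states, h = [], h0
        for x in inputs:
            h = self.step(x, h)
            states.append(h)
        return states


# A nearly closed update gate keeps the old state; an open one overwrites it.
keep_cell = ScalarGRUCell(b_z=-50.0, w_h=1.0)
overwrite_cell = ScalarGRUCell(b_z=50.0, w_h=1.0)
kept = keep_cell.step(0.5, 0.7)
overwritten = overwrite_cell.step(0.5, 0.7)
```

With the update gate nearly closed the state passes through almost unchanged, which is how information can persist across many steps. With it open the state tracks the newest input, which is the responsiveness to abrupt changes discussed above.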

Another key finding is the robust generalization of the LSTM-based models. The Bi-LSTM model ranked second, with a test RMSE of 0.7076 and a test MAPE of 0.2056. The LSTM model followed closely in third place (test RMSE 0.7133, test MAPE 0.2063). Both architectures exhibited comparable performance, implying that bidirectional processing of sequential dependencies provides only marginal gains in predictive accuracy. Moreover, the DNN model also delivered reasonable test results (RMSE 0.6821, MAPE 0.1952), indicating that while feedforward architectures can capture overall trends, they lack the temporal awareness of recurrent models such as the GRU and Bi-LSTM.

In contrast to the other DL models, the RNN and CNN models show relatively weaker test performance, with test RMSE values of 0.7329 and 0.7298 and test MAPE values of 0.2143 and 0.2121, respectively. The basic RNN model’s limitations likely stem from its inability to effectively manage long-term dependencies, while the CNN model’s comparatively higher test error may be attributed to its spatial convolutional structure being less suitable for sequential temporal forecasting [98].

Overall, the results emphasize that the GRU model delivers the most reliable and stable prediction for natural gas prices, outperforming both simple recurrent and convolutional models. The small gap between its training and testing errors further indicates strong generalization capability and minimal overfitting. Given the critical role of natural gas in energy production and industrial use worldwide, accurate price forecasts can support greater energy security and better-informed hedging strategies. For traders, utility companies, and regulators monitoring short-term gas price fluctuations, the GRU provides a more responsive and accurate tool for capturing sudden market shifts. This can support decisions such as procurement timing, hedging, and seasonal reserve planning.

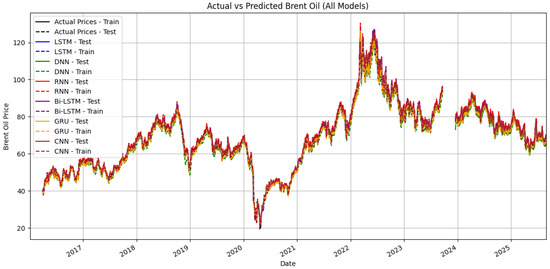

5.3. Brent Oil

The results for Brent oil reveal that the Bi-LSTM model performed best, achieving the lowest test RMSE (27.5013) and test MAPE (0.5128), along with the smallest training RMSE (1.0590) and training MAPE (0.0162), as shown in Table 6. This outcome indicates that the Bi-LSTM effectively captures both short- and long-term dependencies, adapting well to Brent oil’s complex temporal fluctuations. The LSTM model followed closely, with a test RMSE of 27.6452 and a test MAPE of 0.5159, reaffirming its robustness. Compared with crude oil price prediction, where LSTM-based models also showed top performance, this result indicates that the architecture is particularly consistent across related fossil energy markets that share similar volatility characteristics. Brent oil prices, influenced by international benchmarks, geopolitical tensions, and supply coordination among exporting countries, follow patterns that show both persistence and momentum. According to the results, LSTM-based models excel at capturing such gradual but meaningful directional shifts. The GRU also performs reasonably well, but its predictive power is not as strong as that of the LSTM for Brent oil, a commodity with well-established long-range dynamics. The visual results for Brent oil across all the DL-based models are presented in Figure 8.

Table 6. Results of Brent oil.

Figure 8. Actual vs. predicted prices of Brent oil using DL models.

Additionally, the results reveal that the GRU model demonstrates competitive generalization ability, with a test RMSE value of 25.7639, and test MAPE of 0.4779, though its learning curve suggests it reacts faster to recent changes than to extended historical dependencies. Meanwhile, DNN and CNN models display moderate forecasting power for Brent oil, mainly capturing broad price patterns rather than subtle time-based variations. The RNN, however, lagged in effective prediction compared to other models, reflecting its limitations in retaining long-term dependencies and managing nonlinear market dynamics.

Finally, the results achieved for Brent oil show that the Bi-LSTM and LSTM models stand out as the most effective for forecasting prices, given their ability to learn from long-range patterns while preserving short-term volatility signals. These results are consistent with the findings for the crude oil price forecast, where LSTM models demonstrated reliable and accurate prediction. The findings suggest that advanced recurrent models, especially Bi-LSTM and LSTM, can serve as dependable analytical tools for traders, analysts, and policymakers seeking to enhance predictive precision and optimize energy investment decisions. Moreover, the Bi-LSTM and LSTM models, which demonstrated excellent predictive abilities for Brent oil and crude oil prices, can be integrated into algorithmic trading systems or decision-support tools to enhance portfolio performance.

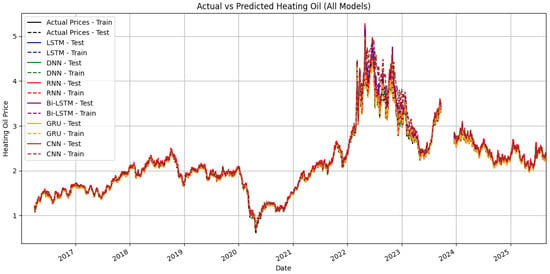

5.4. Heating Oil

Table 7 presents the summary results of the DL models for heating oil prices, while Figure 9 illustrates the comparison between actual and predicted prices in both the training and testing phases. Among all models, the GRU model demonstrated the highest predictive accuracy, achieving the lowest test RMSE (0.8803) and test MAPE (0.5489), confirming its effectiveness in capturing complex temporal and nonlinear dynamics in heating oil prices. The LSTM model followed closely, with a test RMSE of 0.9067 and a test MAPE of 0.5665, confirming its robustness and adaptability. The results further validate the consistency of LSTM architectures, which have already shown reliable forecasting strength for other commodities including crude oil and Brent oil, suggesting that LSTM-based frameworks can generalize well across different fossil fuel types. The Bi-LSTM model produced slightly higher error metrics, though it maintained stable learning behavior and captured the cyclical nature of heating oil prices effectively. These results further demonstrate the superior capabilities of the GRU model on the test set. Heating oil prices are strongly seasonal and highly sensitive to short-term weather variations, refinery disruptions, and fuel-switching behavior in winter months. These factors create irregular, short-lived spikes that favor models capable of quick adaptation. The GRU’s efficiency and lower computational burden help it adjust faster to sudden deviations, which explains its edge over LSTM-based models in this category.

Table 7. Results of heating oil.

Figure 9. Actual vs. predicted prices of heating oil using DL models.

Additionally, the DNN and CNN models reported moderate performance, with higher test MAPE values (0.5659 and 0.5983, respectively), indicating that their static architectures may not fully capture the sequential dependencies inherent in time series data. As with the other fossil fuel commodities, it is not surprising that the RNN model produced the weakest results, with a test RMSE of 0.9505 and a test MAPE of 0.5973, highlighting its limitations in handling longer time dependencies compared to gated or bidirectional structures.

Given the direct link between heating oil costs, energy consumption, and household expenditures, better price predictions can help governments design more effective energy pricing and subsidy policies to stabilize inflationary pressures. Furthermore, for industries dependent on heating oil, such as manufacturing and logistics, reliable forecasts can enhance budget planning, operational efficiency, and cost management. Moreover, utility companies, fuel distributors, and winter risk managers may benefit from using GRU-based forecasting systems, which better anticipate rapid seasonal changes. Finally, incorporating GRU- and LSTM-based forecasting models can offer energy market stability, assisting policymakers to anticipate price shocks, and improve economic resilience against global oil market volatility.

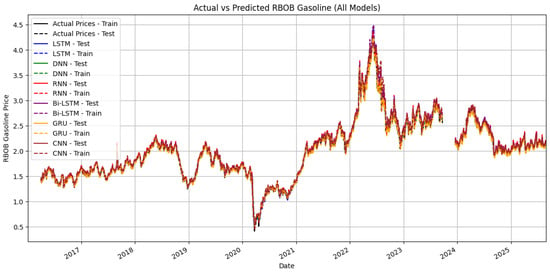

5.5. RBOB Gasoline

Table 8 presents the forecasting performance for RBOB gasoline prices across all six deep learning models, and Figure 10 visualizes the actual and predicted price patterns in both the training and testing phases. Among the evaluated models, the GRU model demonstrates the most accurate predictive capability, achieving the lowest test RMSE (0.7260) and test MAPE (0.4345). This indicates that the GRU effectively captures temporal dependencies and nonlinear patterns in RBOB gasoline price movements, consistent with its strong performance in forecasting heating oil prices. Gasoline markets are affected by refinery outages, transportation logistics, and demand fluctuations tied to driving patterns, all of which create sudden and short-lived price shocks. The GRU’s architecture is therefore well suited to learning such high-frequency fluctuations without overshooting or lagging.

Table 8. Results of RBOB gasoline.

Figure 10. Actual vs. predicted prices of RBOB gasoline using DL models.

Additionally, the LSTM and Bi-LSTM models also perform well, with test RMSE values of 0.7701 and 0.7827 and test MAPE values below 0.47. These results confirm the robustness of memory-based recurrent models in managing sequential data with complex temporal interactions, further cementing their importance for forecasting fossil fuel energy markets. Conversely, the DNN, RNN, and CNN models show weaker generalization on the testing data, suggesting that models without gating mechanisms are less effective for a volatile market time series. Finally, the implications of these results extend to a wide range of stakeholders, from gasoline market analysts and downstream suppliers to trading desks managing short-term exposure. The GRU model provides the most reliable predictive performance for RBOB gasoline, enabling more informed inventory planning, price-setting strategies, and volatility-management decisions, particularly during periods of demand uncertainty.

When RBOB gasoline is compared with the other four energy commodities (crude oil, Brent oil, natural gas, and heating oil), a consistent performance pattern emerges: models that include gating mechanisms, such as LSTM and GRU, consistently deliver superior forecasting accuracy, reflecting their strength in managing broader dependencies and irregular market fluctuations. The LSTM-based models achieved the best results for crude and Brent oil, while the GRU model performed better for natural gas, heating oil, and RBOB gasoline. This suggests that the GRU’s simpler architecture and faster convergence make it particularly effective for high-frequency price variations and unstable market conditions.

The observed performance differences can be attributed to the intrinsic design of the model architectures and the volatility characteristics of each commodity. LSTM and Bi-LSTM models, with their triple-gate structures, excel at capturing long-term dependencies and smoothing over transient fluctuations, which aligns well with crude and Brent oil prices that are influenced by geopolitical events and longer-term market cycles. In contrast, GRU models, with their simpler dual-gate design, converge faster and are less prone to overfitting, making them more efficient in capturing the high-frequency, abrupt fluctuations characteristic of natural gas, heating oil, and RBOB gasoline. This mechanistic insight explains why the GRU outperforms LSTM-based models for commodities with spikier, short-term volatility, while LSTM-based architectures better track commodities with persistent, long-horizon price trends.
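The difference in capacity between the dual-gate and triple-gate designs can be illustrated by counting trainable parameters for a single recurrent layer. The sketch below uses the textbook formulas: each gate or candidate block has an input weight matrix, a recurrent weight matrix, and a bias vector. It assumes 256 units and a univariate input (one price per time step); the input dimension is an assumption, and the function name is hypothetical. Some library implementations add a second bias vector to the GRU, so their reported counts can differ slightly.

```python
def recurrent_layer_params(n_blocks: int, units: int, input_dim: int) -> int:
    """Parameters for `n_blocks` weight blocks, each with input weights,
    recurrent weights, and one bias vector."""
    return n_blocks * (units * (units + input_dim) + units)


UNITS = 256      # as stated in the parameter settings
INPUT_DIM = 1    # assumption: one price per time step

# Number of weight blocks per architecture (gates plus the candidate state).
BLOCKS = {
    "RNN": 1,    # single state update, no gates
    "GRU": 3,    # update gate, reset gate, candidate
    "LSTM": 4,   # input, forget, and output gates, plus candidate
}

param_counts = {
    name: recurrent_layer_params(blocks, UNITS, INPUT_DIM)
    for name, blocks in BLOCKS.items()
}
# A bidirectional LSTM runs two LSTM layers, one per direction.
param_counts["Bi-LSTM"] = 2 * param_counts["LSTM"]

if __name__ == "__main__":
    for name, count in param_counts.items():
        print(f"{name:<8} {count:>8,d} parameters")
```

Under these assumptions the GRU layer carries three quarters of the parameters of the LSTM layer, which is consistent with the faster convergence and lower overfitting risk attributed to it above.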

To sum up, the findings of this study provide direct implications for broader investment strategies. Accurate prediction of fossil energy prices is critical because volatility in crude oil, Brent oil, natural gas, heating oil, and RBOB gasoline can directly affect investment decisions, risk management, and energy security. By demonstrating the superior predictive performance of memory- and gating-based deep learning models, including LSTM and GRU models, this study provides market participants with practical tools to anticipate price movements more reliably. Additionally, integrating advanced predictive analytics fosters sustainable investment practices and resilient energy markets. Investors, regulators, and market participants can align operational and financial decisions more closely with underlying market dynamics. This not only supports economic stability but also facilitates more strategic, data-driven management of energy resources in an era of persistent volatility and global energy transitions.

6. Conclusions

Fossil energy price volatility has profound financial and macroeconomic implications. In response, this study explored the effectiveness of six widely used deep learning models including the GRU, LSTM, Bi-LSTM, RNN, CNN, and DNN, across five major energy commodities including crude oil, Brent oil, natural gas, heating oil, and RBOB gasoline. Using daily data from 2016 to 2025, the models were trained and tested under a rigorous experimental setup with proper chronological splits, feature scaling, and temporal ordering to ensure unbiased results.

The results demonstrated that models with memory and gating mechanisms, particularly the LSTM-based models and the GRU, consistently outperform traditional architectures in forecasting energy prices. The LSTM-based models achieved the most accurate results for crude and Brent oil, while the GRU model demonstrated superior performance for natural gas, heating oil, and RBOB gasoline. This performance consistency highlights the capability of recurrent models to capture complex temporal dependencies and nonlinear price dynamics in highly volatile markets. Additionally, the results showed that the Bi-LSTM and LSTM models deliver competitive and often leading performance, further emphasizing the importance of sequential data modeling in capturing market movements.

6.1. Implications of the Study

For investors and portfolio managers, the results of the study highlight the potential to enhance strategic asset allocation and risk management. By using effective deep learning models, investors can better identify market trends and adapt to short-term fluctuations, thereby fostering more informed and strategic investment practices. Specifically, the LSTM-based models’ accuracy in predicting crude and Brent oil prices supports more informed decisions in energy-focused equities and commodities trading, while the GRU model’s strength in forecasting natural gas, heating oil, and RBOB gasoline can guide investment in regional energy markets and derivative instruments. Investors and portfolio managers can thus make better-informed decisions regarding energy-related assets, mitigating the financial risk associated with volatile markets. Moreover, Bi-LSTM predictions for Brent oil can inform hedging contract duration and position adjustment based on forecasted price swings, while the GRU forecasts for natural gas and RBOB gasoline can guide short-term trading strategies and risk-adjusted hedge sizing to manage exposure effectively. By translating model performance into actionable strategies, the study highlights how advanced forecasting tools can support evidence-based policy and investment planning, ultimately contributing to more stable and resilient global energy markets.

For policymakers and energy regulators, the study provides actionable guidance on integrating model forecasts into energy security and regulatory planning. Reliable price predictions can inform not only the timing and scale of strategic reserves but also threshold-based responses to extreme market scenarios. Specifically:

- Crude and Brent oil: Forecasts can guide reserve management, import or export decisions, and long-term energy contracts.

- Natural gas: Predictions support domestic supply planning, industrial pricing policies, and adjustment of storage levels during high-demand periods.

- Heating oil and RBOB gasoline: Forecasts enable regional price stabilization, consumer protection, and monitoring of transportation fuel markets.

By incorporating multi-step predictions and monitoring predicted volatility, governments and energy firms can optimize reserves, mitigate sudden price shocks, reduce speculative behaviors, and enhance overall market stability and investor confidence.

6.2. Limitations and Future Research

The study has certain limitations that require further exploration. First, validating the results requires additional research incorporating hybrid models that leverage the strengths of diverse architectures. For instance, integrating GRUs with attention mechanisms and employing ensemble learning could enhance predictive performance. Further work may also incorporate cross-period validation and stress-testing for extreme market conditions. Second, future studies should compare DL approaches with traditional statistical and machine learning models to substantiate the superiority of deep learning in financial time series forecasting and benchmark their performance against the findings of this paper. Third, this work can be extended by incorporating hyperparameter optimization techniques, which could improve model generalization and robustness across diverse financial datasets. Fourth, further studies may extend the current framework to include macroeconomic indicators, geopolitical events, and energy supply–demand data to enhance model realism and policy relevance, bridging the gap between methodological rigor and applied market utility. Finally, to enhance the practical relevance of this research, future studies should include financial metrics such as Return on Investment (ROI), win ratio (percentage of profitable trades), and loss ratio (percentage of unprofitable trades). These enhancements would complement the statistical evaluation of deep learning models and offer detailed insights for investors and policymakers.
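The financial metrics proposed for future work can be computed directly from a record of trades. The sketch below is a minimal illustration under stated assumptions: the per-trade returns are invented, the function names are hypothetical, ROI is taken as the change in capital divided by the initial capital, and a trade with exactly zero return counts as neither a win nor a loss.

```python
from typing import Sequence


def roi(initial_capital: float, final_capital: float) -> float:
    """Return on Investment as a fraction of the initial capital."""
    if initial_capital <= 0:
        raise ValueError("initial capital must be positive")
    return (final_capital - initial_capital) / initial_capital


def win_ratio(trade_returns: Sequence[float]) -> float:
    """Percentage of trades with a strictly positive return."""
    if not trade_returns:
        raise ValueError("at least one trade is required")
    wins = sum(1 for r in trade_returns if r > 0)
    return 100.0 * wins / len(trade_returns)


def loss_ratio(trade_returns: Sequence[float]) -> float:
    """Percentage of trades with a strictly negative return."""
    if not trade_returns:
        raise ValueError("at least one trade is required")
    losses = sum(1 for r in trade_returns if r < 0)
    return 100.0 * losses / len(trade_returns)


# Invented per-trade returns, for demonstration only.
sample_returns = [0.02, -0.01, 0.03, 0.0, -0.02]
sample_win = win_ratio(sample_returns)
sample_loss = loss_ratio(sample_returns)
sample_roi = roi(1000.0, 1100.0)
```

Metrics of this kind depend on the trading rule used to turn forecasts into positions, so they would need to be reported together with that rule.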

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are openly available and accessible from Yahoo Finance.

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| DL | Deep Learning |
| GRU | Gated Recurrent Unit |
| RNN | Recurrent Neural Network |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| Bi-LSTM | Bidirectional Long Short-Term Memory |
| DNN | Deep Neural Network |
| RMSE | Root Mean Squared Error |
| MAPE | Mean Absolute Percentage Error |
| AI | Artificial Intelligence |
| RF | Random Forest |
| DT | Decision Tree |
| ANN | Artificial Neural Network |
| EMH | Efficient Market Hypothesis |

References

- Fama, E.F. Efficient capital markets. J. Financ. 1970, 25, 383–417.

- Patel, J.; Shah, S.; Thakkar, P.; Kotecha, K. Predicting stock and stock price index movement using trend deterministic data preparation and machine learning techniques. Expert Syst. Appl. 2015, 42, 259–268.

- Guresen, E.; Kayakutlu, G.; Daim, T.U. Using artificial neural network models in stock market index prediction. Expert Syst. Appl. 2011, 38, 10389–10397.

- Kurani, A.; Doshi, P.; Vakharia, A.; Shah, M. A Comprehensive Comparative Study of Artificial Neural Network (ANN) and Support Vector Machines (SVM) on Stock Forecasting. Ann. Data Sci. 2023, 10, 183–208.

- Pan, S.; Long, S.; Wang, Y.; Xie, Y. Nonlinear asset pricing in Chinese stock market: A deep learning approach. Int. Rev. Financ. Anal. 2023, 87, 102627.

- Tien, J.M. Internet of things, real-time decision making, and artificial intelligence. Ann. Data Sci. 2017, 4, 149–178.

- Ahn, M.J.; Chen, Y.-C. Artificial intelligence in government: Potentials, challenges, and the future. In Proceedings of the 21st Annual International Conference on Digital Government Research, Seoul, Republic of Korea, 15–19 June 2020; pp. 243–252.

- Acemoglu, D.; Restrepo, P. Artificial intelligence, automation, and work. In The Economics of Artificial Intelligence: An Agenda; University of Chicago Press: Chicago, IL, USA, 2018; pp. 197–236.

- Milana, C.; Ashta, A. Artificial intelligence techniques in finance and financial markets: A survey of the literature. Strateg. Chang. 2021, 30, 189–209.

- Thakkar, A.; Chaudhari, K. A comprehensive survey on portfolio optimization, stock price and trend prediction using particle swarm optimization. Arch. Comput. Methods Eng. 2021, 28, 2133–2164.

- Chhajer, P.; Shah, M.; Kshirsagar, A. The applications of artificial neural networks, support vector machines, and long–short term memory for stock market prediction. Decis. Anal. J. 2022, 2, 100015.

- Sutiene, K.; Schwendner, P.; Sipos, C.; Lorenzo, L.; Mirchev, M.; Lameski, P.; Kabasinskas, A.; Tidjani, C.; Ozturkkal, B.; Cerneviciene, J. Enhancing portfolio management using artificial intelligence: Literature review. Front. Artif. Intell. 2024, 7, 1371502.

- Ikotun, A.M.; Ezugwu, A.E.; Abualigah, L.; Abuhaija, B.; Heming, J. K-means clustering algorithms: A comprehensive review, variants analysis, and advances in the era of big data. Inf. Sci. 2023, 622, 178–210.

- Huang, Z.; Zheng, H.; Li, C.; Che, C. Application of machine learning-based k-means clustering for financial fraud detection. Acad. J. Sci. Technol. 2024, 10, 33–39.

- Yu, L.; Chen, H.; Wang, S.; Lai, K.K. Evolving least squares support vector machines for stock market trend mining. IEEE Trans. Evol. Comput. 2008, 13, 87–102.

- Godarzi, A.A.; Amiri, R.M.; Talaei, A.; Jamasb, T. Predicting oil price movements: A dynamic Artificial Neural Network approach. Energy Policy 2014, 68, 371–382.

- Nayak, R.K.; Mishra, D.; Rath, A.K. A Naïve SVM-KNN based stock market trend reversal analysis for Indian benchmark indices. Appl. Soft Comput. 2015, 35, 670–680.

- Zhang, X.-d.; Li, A.; Pan, R. Stock trend prediction based on a new status box method and AdaBoost probabilistic support vector machine. Appl. Soft Comput. 2016, 49, 385–398.

- Naveed, H.M.; HongXing, Y.; Memon, B.A.; Ali, S.; Alhussam, M.I.; Sohu, J.M. Artificial neural network (ANN)-based estimation of the influence of COVID-19 pandemic on dynamic and emerging financial markets. Technol. Forecast. Soc. Change 2023, 190, 122470.

- Mienye, I.D.; Swart, T.G.; Obaido, G. Recurrent Neural Networks: A Comprehensive Review of Architectures, Variants, and Applications. Information 2024, 15, 517.

- Naveed, H.M.; Yanchun, P.; Memon, B.A.; Ali, S.; Sohu, J.M. Financial Modelling System Using Deep Neural Networks (DNNs) for Financial Risk Assessments. Int. Soc. Sci. J. 2024, 75, 137–154.

- Sagheer, A.; Kotb, M. Time series forecasting of petroleum production using deep LSTM recurrent networks. Neurocomputing 2019, 323, 203–213.

- Tahir, M.; Ali, S.; Sohail, A.; Zhang, Y.; Jin, X. Unlocking Online Insights: LSTM Exploration and Transfer Learning Prospects. Ann. Data Sci. 2024, 11, 1421–1434.

- Mughees, N.; Mohsin, S.A.; Mughees, A.; Mughees, A. Deep sequence to sequence Bi-LSTM neural networks for day-ahead peak load forecasting. Expert Syst. Appl. 2021, 175, 114844.

- Bhatt, D.; Patel, C.; Talsania, H.; Patel, J.; Vaghela, R.; Pandya, S.; Modi, K.; Ghayvat, H. CNN Variants for Computer Vision: History, Architecture, Application, Challenges and Future Scope. Electronics 2021, 10, 2470.

- Zhao, X.; Wang, L.; Zhang, Y.; Han, X.; Deveci, M.; Parmar, M. A review of convolutional neural networks in computer vision. Artif. Intell. Rev. 2024, 57, 99.

- Mienye, E.; Jere, N.; Obaido, G.; Mienye, I.D.; Aruleba, K. Deep Learning in Finance: A Survey of Applications and Techniques. AI 2024, 5, 2066–2091.

- Abraham, R.; Samad, M.E.; Bakhach, A.M.; El-Chaarani, H.; Sardouk, A.; Nemar, S.E.; Jaber, D. Forecasting a Stock Trend Using Genetic Algorithm and Random Forest. J. Risk Financ. Manag. 2022, 15, 188.

- Ghani, M.; Guo, Q.; Ma, F.; Li, T. Forecasting Pakistan stock market volatility: Evidence from economic variables and the uncertainty index. Int. Rev. Econ. Financ. 2022, 80, 1180–1189.

- O’Connor, C.; Bahloul, M.; Prestwich, S.; Visentin, A. A Review of Electricity Price Forecasting Models in the Day-Ahead, Intra-Day, and Balancing Markets. Energies 2025, 18, 3097.

- Fang, B.; Zhang, P. Big data in finance. In Big Data Concepts, Theories, and Applications; Springer: Cham, Switzerland, 2016; pp. 391–412.

- Deng, C.; Liu, Y. A Deep Learning-Based Inventory Management and Demand Prediction Optimization Method for Anomaly Detection. Wirel. Commun. Mob. Comput. 2021, 2021, 9969357.

- Weron, A.; Weron, R. Fractal market hypothesis and two power-laws. Chaos Solitons Fractals 2000, 11, 289–296.

- Memon, B.A.; Yao, H.; Naveed, H.M. Examining the efficiency and herding behavior of commodity markets using multifractal detrended fluctuation analysis. Empirical evidence from energy, agriculture, and metal markets. Resour. Policy 2022, 77, 102715.

- Memon, B.A.; Aslam, F.; Asadova, S.; Ferreira, P. Are clean energy markets efficient? A multifractal scaling and herding behavior analysis of clean and renewable energy markets before and during the COVID19 pandemic. Heliyon 2023, 9, e22694. [Google Scholar] [CrossRef]

- Li, Z.; Mu, J.; Basheri, M.; Hasan, H. Application of mathematical probabilistic statistical model of base–FFCA financial data processing. Appl. Math. Nonlinear Sci. 2022, 7, 491–500. [Google Scholar]

- Ratner, B. Statistical and Machine-Learning Data Mining:: Techniques for Better Predictive Modeling and Analysis of Big Data; Chapman and Hall/CRC: London, UK, 2017. [Google Scholar]

- Kumbure, M.M.; Lohrmann, C.; Luukka, P.; Porras, J. Machine learning techniques and data for stock market forecasting: A literature review. Expert Syst. Appl. 2022, 197, 116659. [Google Scholar] [CrossRef]

- Ahmed, S.F.; Alam, M.S.B.; Hassan, M.; Rozbu, M.R.; Ishtiak, T.; Rafa, N.; Mofijur, M.; Shawkat Ali, A.B.M.; Gandomi, A.H. Deep learning modelling techniques: Current progress, applications, advantages, and challenges. Artif. Intell. Rev. 2023, 56, 13521–13617. [Google Scholar] [CrossRef]

- Patel, J.; Shah, S.; Thakkar, P.; Kotecha, K. Predicting stock market index using fusion of machine learning techniques. Expert Syst. Appl. 2015, 42, 2162–2172. [Google Scholar] [CrossRef]

- Leippold, M.; Wang, Q.; Zhou, W. Machine learning in the Chinese stock market. J. Financ. Econ. 2022, 145, 64–82. [Google Scholar] [CrossRef]

- Zhong, X.; Enke, D. Predicting the daily return direction of the stock market using hybrid machine learning algorithms. Financ. Innov. 2019, 5, 24. [Google Scholar] [CrossRef]

- Ayala, J.; García-Torres, M.; Noguera, J.L.V.; Gómez-Vela, F.; Divina, F. Technical analysis strategy optimization using a machine learning approach in stock market indices. Knowl.-Based Syst. 2021, 225, 107119. [Google Scholar] [CrossRef]

- Brogaard, J.; Zareei, A. Machine Learning and the Stock Market. J. Financ. Quant. Anal. 2023, 58, 1431–1472. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Liu, Y.; Hao, X.; Zhang, B.; Zhang, Y. Simplified long short-term memory model for robust and fast prediction. Pattern Recognit. Lett. 2020, 136, 81–86. [Google Scholar] [CrossRef]

- Aljebreen, M.; Alamro, H.; Al-Mutiri, F.; Othman, K.M.; Alsumayt, A.; Alazwari, S.; Hamza, M.A.; Mohammed, G.P. Enhancing Traffic Flow Prediction in Intelligent Cyber-Physical Systems: A Novel Bi-LSTM-Based Approach With Kalman Filter Integration. IEEE Trans. Consum. Electron. 2024, 70, 1889–1902. [Google Scholar] [CrossRef]

- Nikou, M.; Mansourfar, G.; Bagherzadeh, J. Stock price prediction using DEEP learning algorithm and its comparison with machine learning algorithms. Intell. Syst. Account. Financ. Manag. 2019, 26, 164–174. [Google Scholar] [CrossRef]

- Bui, D.T.; Tsangaratos, P.; Nguyen, V.-T.; Liem, N.V.; Trinh, P.T. Comparing the prediction performance of a Deep Learning Neural Network model with conventional machine learning models in landslide susceptibility assessment. CATENA 2020, 188, 104426. [Google Scholar] [CrossRef]

- Ni, J.; Xu, Y. Forecasting the Dynamic Correlation of Stock Indices Based on Deep Learning Method. Comput. Econ. 2023, 61, 35–55. [Google Scholar] [CrossRef]

- Fischer, T.; Krauss, C. Deep learning with long short-term memory networks for financial market predictions. Eur. J. Oper. Res. 2018, 270, 654–669. [Google Scholar] [CrossRef]

- Mukherjee, S.; Sadhukhan, B.; Sarkar, N.; Roy, D.; De, S. Stock market prediction using deep learning algorithms. CAAI Trans. Intell. Technol. 2023, 8, 82–94. [Google Scholar] [CrossRef]

- Shah, J.; Vaidya, D.; Shah, M. A comprehensive review on multiple hybrid deep learning approaches for stock prediction. Intell. Syst. Appl. 2022, 16, 200111. [Google Scholar] [CrossRef]

- Selvin, S.; Vinayakumar, R.; Gopalakrishnan, E.A.; Menon, V.K.; Soman, K.P. Stock price prediction using LSTM, RNN and CNN-sliding window model. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 1643–1647. [Google Scholar]

- Abdullah, M.; Sulong, Z.; Chowdhury, M.A.F. Explainable deep learning model for stock price forecasting using textual analysis. Expert Syst. Appl. 2024, 249, 123740. [Google Scholar] [CrossRef]

- Billah, M.M.; Sultana, A.; Bhuiyan, F.; Kaosar, M.G. Stock price prediction: Comparison of different moving average techniques using deep learning model. Neural Comput. Appl. 2024, 36, 5861–5871. [Google Scholar] [CrossRef]

- Sivadasan, E.T.; Mohana Sundaram, N.; Santhosh, R. Stock market forecasting using deep learning with long short-term memory and gated recurrent unit. Soft Comput. 2024, 28, 3267–3282. [Google Scholar] [CrossRef]

- Dip Das, J.; Thulasiram, R.K.; Henry, C.; Thavaneswaran, A. Encoder–Decoder Based LSTM and GRU Architectures for Stocks and Cryptocurrency Prediction. J. Risk Financ. Manag. 2024, 17, 200. [Google Scholar] [CrossRef]

- Liu, B.; Lai, M. Advanced Machine Learning for Financial Markets: A PCA-GRU-LSTM Approach. J. Knowl. Econ. 2024, 16, 3140–3174. [Google Scholar] [CrossRef]

- Kumar, S.; Rao, A.; Dhochak, M. Hybrid ML models for volatility prediction in financial risk management. Int. Rev. Econ. Financ. 2025, 98, 103915. [Google Scholar] [CrossRef]

- Rahman, M.S.; Reza, H. Hybrid Deep Learning Approaches for Accurate Electricity Price Forecasting: A Day-Ahead US Energy Market Analysis with Renewable Energy. Mach. Learn. Knowl. Extr. 2025, 7, 120. [Google Scholar] [CrossRef]

- Klyuev, R.V.; Morgoev, I.D.; Morgoeva, A.D.; Gavrina, O.A.; Martyushev, N.V.; Efremenkov, E.A.; Mengxu, Q. Methods of Forecasting Electric Energy Consumption: A Literature Review. Energies 2022, 15, 8919. [Google Scholar] [CrossRef]

- Hong, T.; Pinson, P.; Wang, Y.; Weron, R.; Yang, D.; Zareipour, H. Energy Forecasting: A Review and Outlook. IEEE Open Access J. Power Energy 2020, 7, 376–388. [Google Scholar] [CrossRef]

- Gaamouche, R.; Chinnici, M.; Lahby, M.; Abakarim, Y.; Hasnaoui, A.E. Machine Learning Techniques for Renewable Energy Forecasting: A Comprehensive Review. In Computational Intelligence Techniques for Green Smart Cities; Lahby, M., Al-Fuqaha, A., Maleh, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 3–39. [Google Scholar]

- Benti, N.E.; Chaka, M.D.; Semie, A.G. Forecasting Renewable Energy Generation with Machine Learning and Deep Learning: Current Advances and Future Prospects. Sustainability 2023, 15, 7087. [Google Scholar] [CrossRef]

- Nasreddin, D.; Abdellaoui, Y.; Cheracher, A.; Aboutaleb, S.; Benmoussa, Y.; Sabbahi, I.; El Makroum, R.; Marrakchi, S.A.; Khaldoun, A.; El Alami, A.; et al. Regression and Machine Learning Modeling Comparative Analysis of Morocco’s Fossil Fuel Energy Forecast; Springer Nature: Cham, Switzerland, 2023; pp. 244–256. [Google Scholar]

- Herrera, G.P.; Constantino, M.; Tabak, B.M.; Pistori, H.; Su, J.-J.; Naranpanawa, A. Long-term forecast of energy commodities price using machine learning. Energy 2019, 179, 214–221. [Google Scholar] [CrossRef]

- Sun, W.; He, Y.; Chang, H. Forecasting Fossil Fuel Energy Consumption for Power Generation Using QHSA-Based LSSVM Model. Energies 2015, 8, 939–959. [Google Scholar] [CrossRef]

- Li, S.; Luo, L.; Li, J. Advanced Machine Learning Approaches for Predicting Energy and Fossil Fuel Consumption for Green Growth. Unconv. Resour. 2025, In Press, Journal Pre-proof, 100262. 100262. [CrossRef]

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554. [Google Scholar] [CrossRef] [PubMed]

- Sherstinsky, A. Fundamentals of Recurrent Neural Network (RNN) and Long Short-Term Memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306. [Google Scholar] [CrossRef]

- Elman, J.L. Finding Structure in Time. Cogn. Sci. 1990, 14, 179–211. [Google Scholar] [CrossRef]

- He, T.; Mao, H.; Yi, Z. Subtraction Gates: Another Way to Learn Long-Term Dependencies in Recurrent Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 1740–1751. [Google Scholar] [CrossRef]

- Sezer, O.B.; Gudelek, M.U.; Ozbayoglu, A.M. Financial time series forecasting with deep learning: A systematic literature review: 2005–2019. Appl. Soft Comput. 2020, 90, 106181. [Google Scholar] [CrossRef]

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610. [Google Scholar] [CrossRef]

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Song, J.; Gao, S.; Zhu, Y.; Ma, C. A survey of remote sensing image classification based on CNNs. Big Earth Data 2019, 3, 232–254. [Google Scholar] [CrossRef]

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112. [Google Scholar] [CrossRef]

- Naranjo-Torres, J.; Mora, M.; Hernández-García, R.; Barrientos, R.J.; Fredes, C.; Valenzuela, A. A Review of Convolutional Neural Network Applied to Fruit Image Processing. Appl. Sci. 2020, 10, 3443. [Google Scholar] [CrossRef]

- Hoseinzade, E.; Haratizadeh, S. CNNpred: CNN-based stock market prediction using a diverse set of variables. Expert Syst. Appl. 2019, 129, 273–285. [Google Scholar] [CrossRef]

- Zhang, J.; Ye, L.; Lai, Y. Stock Price Prediction Using CNN-BiLSTM-Attention Model. Mathematics 2023, 11, 1985. [Google Scholar] [CrossRef]

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078. [Google Scholar] [CrossRef]

- Cahuantzi, R.; Chen, X.; Güttel, S. A Comparison of LSTM and GRU Networks for Learning Symbolic Sequences; Springer Nature: Cham, Switzerland, 2023; pp. 771–785. [Google Scholar]

- Shewalkar, A.; Nyavanandi, D.; Ludwig, S.A. Performance Evaluation of Deep Neural Networks Applied to Speech Recognition: RNN, LSTM and GRU. J. Artif. Intell. Soft Comput. Res. 2019, 9, 235–245. [Google Scholar] [CrossRef]

- Singh, D.; Singh, B. Investigating the impact of data normalization on classification performance. Appl. Soft Comput. 2020, 97, 105524. [Google Scholar] [CrossRef]

- Raju, V.N.G.; Lakshmi, K.P.; Jain, V.M.; Kalidindi, A.; Padma, V. Study the Influence of Normalization/Transformation process on the Accuracy of Supervised Classification. In Proceedings of the 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 August 2020; pp. 729–735. [Google Scholar]

- Tang, Z.; Zhang, T.; Wu, J.; Du, X.; Chen, K. Multistep-Ahead Stock Price Forecasting Based on Secondary Decomposition Technique and Extreme Learning Machine Optimized by the Differential Evolution Algorithm. Math. Probl. Eng. 2020, 2020, 2604915. [Google Scholar] [CrossRef]

- Meiryani, M.; Delvin Tandyopranoto, C.; Emanuel, J.; Lindawati, A.S.L.; Fahlevi, M.; Aljuaid, M.; Hasan, F. The effect of global price movements on the energy sector commodity on bitcoin price movement during the COVID-19 pandemic. Heliyon 2022, 8, e10820. [Google Scholar] [CrossRef]

- Sadorsky, P. A Random Forests Approach to Predicting Clean Energy Stock Prices. J. Risk Financ. Manag. 2021, 14, 48. [Google Scholar] [CrossRef]

- Alshawarbeh, E.; Abdulrahman, A.T.; Hussam, E. Statistical Modeling of High Frequency Datasets Using the ARIMA-ANN Hybrid. Mathematics 2023, 11, 4594. [Google Scholar] [CrossRef]