Abstract

This paper focuses on iterative parameter estimation algorithms for dual-frequency signal models disturbed by stochastic noise. The key difficulty is that the signal model is a highly nonlinear function of the frequencies. A gradient-based iterative (GI) algorithm is presented based on the gradient search. In order to improve the estimation accuracy of the GI algorithm, a Newton iterative algorithm is derived based on the Newton search, and a moving data window gradient-based iterative algorithm is proposed based on the moving data window technique. Comparative simulation results are provided to illustrate the effectiveness of the proposed approaches for estimating the parameters of signal models.

1. Introduction

Parameter estimation is widely used in system identification [1,2,3] and signal processing [4,5]. The existing parameter estimation methods for signal models fall into two basic categories: frequency-domain methods and time-domain methods. The frequency-domain methods based on the fast Fourier transform (FFT) mainly include the Rife method, the phase difference method, etc. The accuracy of the Rife method is high in the noiseless case or at higher signal-to-noise ratios with adaptive sampling points; however, the error given by the Rife method is large if the signal frequency is near a DFT quantization frequency point. Therefore, some improved methods were proposed [6,7]. For example, Jacobsen and Kootsookos used the three largest spectral lines of the FFT spectrum to calibrate the frequency estimate [8]. Deng et al. proposed a modified Rife method for the frequency shift of signals [9]. However, there is a risk that the shift direction of the frequency may be wrong. The time-domain methods mainly include the maximum likelihood algorithm, the subspace method and the autocorrelation phase method. The maximum likelihood method is an effective estimator that minimizes the average risk, though it imposes significant computational cost. The methods in [10,11,12] used multiple autocorrelation coefficients or multi-step autocorrelation functions to estimate the frequency, so the amount of computation increases.

In practice, actual signals are usually disturbed by various kinds of stochastic noise, and time series signals such as vibration signals or biomedical signals are subjected to dynamic excitations and exhibit nonlinear and non-stationary properties. To deal with these difficulties, time-frequency representations (TFRs), such as the short-time Fourier transform, the wavelet transform, and the Hilbert–Huang transform, provide a powerful tool, since a TFR gives information about the frequencies contained in a signal over time [13]. Recently, Amezquita-Sanchez and Adeli presented an adaptive multiple signal classification-empirical wavelet transform methodology for accurate time-frequency representation of noisy non-stationary and nonlinear signals [14]. Daubechies et al. used an empirical mode decomposition-like tool to decompose a signal into building-block functions with slowly varying amplitudes and frequencies [15]. These existing approaches obtain signal models indirectly, because they are built on transform techniques such as the Fourier transform and the wavelet transform. In this paper, we propose direct parameter estimation algorithms for signal modeling.

The iterative methods and/or the recursive methods play an important role not only in finding the solutions of nonlinear matrix equations, but also in deriving parameter estimation algorithms for signal models [16,17,18,19,20,21,22]. Furthermore, the iterative algorithms can give more accurate parameter estimates because they make full use of the observed data. Yun utilized iterative methods to find the roots of nonlinear equations [23]. Dehghan and Hajarian presented an iterative algorithm for solving the generalized coupled Sylvester matrix equations over the generalized centro-symmetric matrices [24]. Wang et al. proposed an iterative method for a class of complex symmetric linear systems [25]. Xu applied the Newton iteration algorithm to the parameter estimation of dynamical systems [26]. Pei et al. used a monotone iterative technique to establish the existence of positive solutions and to seek the positive minimal and maximal solutions for a Hadamard type fractional integro-differential equation [27].

As an optimization tool, the Newton method is useful for finding roots of nonlinear problems or deriving parameter estimation algorithms from observed data [28,29,30]. The Newton method has long been used in the literature for problems such as transcendental equations, minimization and maximization problems, and numerical verification of solutions of nonlinear equations. Simpson noted that the Newton method can be generalized to systems of two equations and used to solve optimization problems [31]. Dennis and Schnabel provided Newton’s method and quasi-Newton methods for multidimensional unconstrained optimization and nonlinear equation problems [32]. Jürgen studied the accelerated convergence of the Newton method by molding a given function into a new one whose roots remain unchanged but which looks nearly linear in a neighborhood of the root [33]. Djoudi et al. presented a guided recursive Newton method involving inverse iteration to solve transcendental eigenproblems by reducing them to a generalised linear eigenproblem [34]. Benner et al. described the numerical solution of large-scale Lyapunov equations, Riccati equations, and linear-quadratic optimal control problems [35]. Seinfeld et al. used a quasi-Newton search technique and a barrier modification to enforce closed-loop stability for the H-infinity control problem [36]. Liu et al. presented an iterative identification algorithm for Wiener nonlinear systems using the Newton method [37]. The gradient method, whose search directions are defined by the gradient of the function at the current point, has been developed for optimization problems. For instance, Curry used the gradient descent method for minimizing a nonlinear function of n real variables [38]. Vrahatis et al. studied the development, convergence theory and numerical testing of a class of gradient unconstrained minimization algorithms with adaptive step-size [39]. Hajarian proposed a gradient-based iterative algorithm to find the solutions of the general Sylvester discrete-time periodic matrix equations [40].

The moving data window has a fixed length and moves forward with time as a first-in-first-out sequence. When a new observation arrives, the data in the window are updated by including the new observation and eliminating the oldest one, so the length of the window remains fixed. The algorithm computes parameter estimates using the observed data in the current window. Recently, Wang et al. presented a moving-window second order blind identification method for time-varying transient operational modal parameter identification of linear time-varying structures [41]. Al-Matouq and Vincent developed a multiple-window moving horizon estimation strategy that exploits constraint inactivity to reduce the problem size in long horizon estimation problems [42]. This paper focuses on the parameter estimation problems of dual-frequency signal models. The main contributions of this paper are twofold. First, a gradient-based iterative (GI) algorithm is presented to estimate the parameters of signal models. Second, the estimation errors obtained by the Newton iterative algorithm and the moving data window based GI algorithm are compared with the errors given by the GI algorithm.

To close this section, we give the outline of this paper. Section 2 derives a GI parameter estimation algorithm. Section 3 and Section 4 propose the Newton iterative and the moving data window gradient-based iterative parameter estimation algorithms, respectively. Section 5 provides an example to verify the effectiveness of the proposed algorithms. Finally, some concluding remarks are given in Section 6.

2. The Gradient-Based Iterative Parameter Estimation Algorithm

Consider the following dual-frequency cosine signal model:

$$y(t) = a\cos(\omega_1 t) + b\cos(\omega_2 t) + v(t), \tag{1}$$

where $\omega_1$ and $\omega_2$ are the angular frequencies, $a$ and $b$ are the amplitudes, $t$ is a continuous-time variable, $y(t)$ is the observation, and $v(t)$ is a stochastic disturbance with zero mean and variance $\sigma^2$. In actual engineering, we can only get discrete observed data. Suppose that the sampling data are $y(t_i)$, $i = 1, 2, \ldots, L$, where $L$ is the data length and $t_i$ is the sampling time.
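To make the sampling setup concrete, the following is a minimal sketch of how discrete observations of a dual-frequency cosine signal could be simulated. It assumes the model has the form y(t) = a cos(ω1 t) + b cos(ω2 t) + v(t) with uniform sampling t_i = i h; the function name `sample_dual_cosine`, the sampling period `h`, and all numerical values are illustrative assumptions, not values taken from this paper.

```python
import numpy as np

def sample_dual_cosine(a, b, w1, w2, h, L, sigma=0.0, seed=0):
    """Sample y(t_i) = a*cos(w1*t_i) + b*cos(w2*t_i) + v(t_i) at t_i = i*h, i = 1..L."""
    rng = np.random.default_rng(seed)
    t = h * np.arange(1, L + 1)            # sampling instants t_1, ..., t_L
    v = sigma * rng.standard_normal(L)     # zero-mean noise with variance sigma**2
    y = a * np.cos(w1 * t) + b * np.cos(w2 * t) + v
    return t, y

# Hypothetical parameter values, chosen only for illustration.
t, y = sample_dual_cosine(a=1.5, b=2.0, w1=0.3, w2=0.8, h=0.2, L=500, sigma=0.1)
print(t.shape, y.shape)
```

Setting `sigma=0.0` gives noise-free data, which is convenient for checking an estimation routine before noise is added.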

Signals include the sine signal, cosine signal, Gaussian signal, exponential signal, complex exponential signal, etc. Among them, the sine signal and the cosine signal are typical periodic signals whose waveforms are sine and cosine curves. Many periodic signals can be decomposed into the sum of multiple sinusoidal signals with different frequencies and different amplitudes by the Fourier series [43]. The cosine signal differs from the sine signal by $\pi/2$ in the initial phase. This paper takes the dual-frequency cosine signal model as an example and derives the parameter estimation algorithms, which are also applicable to sinusoidal signal models.

Use observed data and the model output to construct the criterion function

The criterion function contains the parameters to be estimated, and it represents the error between the observed data and the model output. We hope that this error is as small as possible, which is equivalent to minimizing and obtaining the estimates of the parameter vector .
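As a hedged illustration of such a criterion, the sketch below evaluates half the sum of squared errors between the observations and the output of a dual-frequency cosine model. The parameter ordering `[a, b, w1, w2]`, the factor 1/2, and the numerical values are assumptions made for this example.

```python
import numpy as np

def criterion(theta, t, y):
    """Half the sum of squared errors between observations and model output."""
    a, b, w1, w2 = theta
    model_output = a * np.cos(w1 * t) + b * np.cos(w2 * t)
    return 0.5 * np.sum((y - model_output) ** 2)

# Noise-free hypothetical data generated from known parameters.
t = 0.2 * np.arange(1, 201)
theta_true = np.array([1.5, 2.0, 0.3, 0.8])
y = theta_true[0] * np.cos(theta_true[2] * t) + theta_true[1] * np.cos(theta_true[3] * t)

j_true = criterion(theta_true, t, y)                # vanishes at the true parameters
j_off = criterion([1.5, 2.0, 0.31, 0.8], t, y)      # grows when a frequency is perturbed
print(j_true, j_off)
```

Even a small frequency perturbation changes the criterion noticeably, because the phase error grows with t; this reflects the strong nonlinearity in the frequencies.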

Letting the partial derivative of with respect to be zero gives

Define the stacked output vector and the stacked information matrix as

Define the information vector

Let , and the model output stacked vector

Then, the gradient vector can be expressed as

Let be an iterative variable and be the estimate of at iteration . The gradient at is given by

Using the negative gradient search and minimizing , introducing an iterative step-size , we can get the gradient-based iterative (GI) algorithm for dual-frequency signal models:

The steps of the GI algorithm to compute are listed as follows:

1. Let , give a small number and set the initial value , is generally taken to be a large positive number, e.g., .

2. Collect the observed data , , where L is the data length, form by Equation (10).

3. Form by Equation (13) and by Equation (11).

4. Form by Equation (14) and by Equation (12).

5. Choose a larger satisfying Equation (16), and update the estimate by Equation (15).

6. Compare with , if , then terminate this procedure and obtain the iteration time k and the estimate ; otherwise, increase k by 1 and go to Step 3.
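The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the model is taken as a cos(ω1 t) + b cos(ω2 t), the criterion as half the sum of squared errors, and the step-size is initialized as the reciprocal of the largest eigenvalue of ΦᵀΦ, which is one common choice and may differ from the condition in Equation (16). The step halving is an added safeguard that is not part of the listed steps, and the names `model_and_jacobian` and `gi_estimate` are hypothetical.

```python
import numpy as np

def model_and_jacobian(theta, t):
    """Model output and its derivatives with respect to [a, b, w1, w2]."""
    a, b, w1, w2 = theta
    out = a * np.cos(w1 * t) + b * np.cos(w2 * t)
    jac = np.column_stack([
        np.cos(w1 * t),
        np.cos(w2 * t),
        -a * t * np.sin(w1 * t),
        -b * t * np.sin(w2 * t),
    ])
    return out, jac

def gi_estimate(t, y, theta0, eps=1e-8, max_iter=500):
    theta = np.asarray(theta0, dtype=float)
    out, jac = model_and_jacobian(theta, t)
    cost = 0.5 * np.sum((y - out) ** 2)
    history = [cost]
    for _ in range(max_iter):
        grad = -jac.T @ (y - out)                        # gradient of the criterion
        mu = 1.0 / np.linalg.eigvalsh(jac.T @ jac)[-1]   # assumed step-size rule
        accepted = False
        for _ in range(30):                              # safeguard: halve until the cost drops
            cand = theta - mu * grad
            cand_out, cand_jac = model_and_jacobian(cand, t)
            cand_cost = 0.5 * np.sum((y - cand_out) ** 2)
            if cand_cost < cost:
                accepted = True
                break
            mu *= 0.5
        if not accepted:
            break
        step = np.linalg.norm(cand - theta)
        theta, out, jac, cost = cand, cand_out, cand_jac, cand_cost
        history.append(cost)
        if step < eps:                                   # stop when the estimate stops changing
            break
    return theta, history

# Hypothetical data and initial guess, for illustration only.
rng = np.random.default_rng(0)
t = 0.2 * np.arange(1, 501)
y = 1.5 * np.cos(0.3 * t) + 2.0 * np.cos(0.8 * t) + 0.1 * rng.standard_normal(t.size)
theta_hat, history = gi_estimate(t, y, theta0=[1.4, 1.9, 0.305, 0.795])
print(theta_hat, history[0], history[-1])
```

Because the criterion has many local minima in the frequencies, a sketch like this is only expected to behave well when the initial frequencies are already close to the true ones.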

3. The Newton Iterative Parameter Estimation Algorithm

The gradient method uses only the first derivative, so its convergence rate is slow. The Newton method discussed here also uses the second derivative and converges faster. The following derives the Newton iterative algorithm to estimate the parameters of dual-frequency signal models.

Calculating the second partial derivative of the criterion function in Equation (2) with respect to the parameter vector gives the Hessian matrix

Let be an iterative variable and be the estimate of at iteration . Based on the Newton search, we can derive the Newton iterative (NI) parameter estimation algorithm of dual-frequency signal models:

The procedure for computing the parameter estimation vector using the NI algorithm in Equations (18)–(35) is listed as follows:

1. Let , give the parameter estimation accuracy , set the initial value .

2. Collect the observed data , , form by Equation (19).

3. Form by Equation (22), form by Equation (20).

4. Form by Equation (23), form by Equation (21).

5. Compute by Equations (25)–(34), , , and form by Equation (24).

6. Update the estimate by Equation (35).

7. Compare with , if , then terminate this procedure and obtain the estimate ; otherwise, increase k by 1 and go to Step 3.
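The Newton procedure above can be sketched as follows, again assuming the model a cos(ω1 t) + b cos(ω2 t) and a criterion equal to half the sum of squared errors. The Hessian entries are derived for that assumed criterion and are not copied from Equations (25)–(34); the function names and test values are hypothetical.

```python
import numpy as np

def gradient_and_hessian(theta, t, y):
    """Gradient and Hessian of 0.5*sum(e**2) for theta = [a, b, w1, w2]."""
    a, b, w1, w2 = theta
    c1, s1 = np.cos(w1 * t), np.sin(w1 * t)
    c2, s2 = np.cos(w2 * t), np.sin(w2 * t)
    e = y - (a * c1 + b * c2)                          # output error
    jac = np.column_stack([c1, c2, -a * t * s1, -b * t * s2])
    grad = -jac.T @ e
    hess = jac.T @ jac                                 # first-derivative part
    # Subtract sum_i e_i * (second derivatives of the model output).
    hess[0, 2] += np.sum(e * t * s1)
    hess[2, 0] = hess[0, 2]
    hess[1, 3] += np.sum(e * t * s2)
    hess[3, 1] = hess[1, 3]
    hess[2, 2] += a * np.sum(e * t ** 2 * c1)
    hess[3, 3] += b * np.sum(e * t ** 2 * c2)
    return grad, hess

def newton_estimate(t, y, theta0, eps=1e-10, max_iter=50):
    theta = np.asarray(theta0, dtype=float)
    for _ in range(max_iter):
        grad, hess = gradient_and_hessian(theta, t, y)
        new_theta = theta - np.linalg.solve(hess, grad)   # Newton update
        converged = np.linalg.norm(new_theta - theta) < eps
        theta = new_theta
        if converged:
            break
    return theta

# Noise-free hypothetical data and a nearby initial guess.
t = 0.1 * np.arange(1, 201)
theta_true = np.array([1.5, 2.0, 1.0, 2.5])
y = theta_true[0] * np.cos(theta_true[2] * t) + theta_true[1] * np.cos(theta_true[3] * t)
theta0 = np.array([1.45, 2.05, 1.002, 2.498])
theta_hat = newton_estimate(t, y, theta0)
print(theta_hat)
```

The update solves a linear system with `np.linalg.solve` rather than forming the inverse of the Hessian explicitly. Far from the true parameters the Hessian can be indefinite, so the plain Newton step in this sketch is only appropriate near a minimum.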

4. The Moving Data Window Gradient-Based Iterative Algorithm

The gradient method and the Newton method use a batch of data, namely the data from to . Here, the moving data window uses the data from to , where p is the length of the moving data window. Let be the current sampling time. The moving window data can be represented as , ,⋯, . These sampling data change with the sampling time . As i increases, the data window moves forward constantly: new data are collected and old data are removed from the window. The following derives the moving data window gradient-based iterative algorithm of dual-frequency signal models.
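The first-in-first-out behaviour of a fixed-length window can be illustrated with a short sketch. The window length p = 3 and the sample values are arbitrary choices for the example.

```python
from collections import deque

p = 3                                  # window length (illustrative)
window = deque(maxlen=p)               # the oldest entry is dropped automatically when full

for sample in [0.9, 1.1, 1.4, 0.8, 1.0]:
    window.append(sample)              # a new observation enters the window

print(list(window))                    # the p most recent observations
```

After the five samples have arrived, only the last three remain in the window, so the window length stays fixed while its contents move forward in time.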

Define the moving data window criterion function

Define the information vector

and the stacked information matrix

Define , the vector and as

Then, the gradient vector can be expressed as

Let be an iterative variable, and be the estimate of at iteration k and the sampling time . Minimizing and using the negative gradient search, we can obtain the moving data window gradient-based iterative (MDW-GI) algorithm:

The steps of the MDW-GI algorithm in Equations (41)–(48) to compute are listed as follows.

1. Pre-set the length of p, let , give the parameter estimation accuracy and the iterative length .

2. To initialize, let , , .

3. Collect the observed data , form by Equation (43).

4. Form by Equation (45), form by Equation (42).

5. Form by Equation (46), form by Equation (44).

6. Select a larger satisfying Equation (48), update the estimate by Equation (47).

7. If , increase k by 1 and go to Step 4; otherwise, go to the next step.

8. Compare with , if , then let , , go to Step 2; otherwise, obtain the parameter estimate , terminate this procedure.
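A minimal sketch of the moving data window procedure is given below. It assumes the same model form a cos(ω1 t) + b cos(ω2 t), a fixed number `k_max` of gradient iterations per window, a warm start from the previous window's estimate, and a step-size equal to the reciprocal of the largest eigenvalue of ΦᵀΦ over the window. That step-size rule is an assumption and may differ from Equation (48); the function names and numerical values are hypothetical.

```python
import numpy as np

def model_and_jacobian(theta, t):
    """Model output and its derivatives with respect to [a, b, w1, w2]."""
    a, b, w1, w2 = theta
    out = a * np.cos(w1 * t) + b * np.cos(w2 * t)
    jac = np.column_stack([
        np.cos(w1 * t),
        np.cos(w2 * t),
        -a * t * np.sin(w1 * t),
        -b * t * np.sin(w2 * t),
    ])
    return out, jac

def mdw_gi(t, y, theta0, p, k_max=5, eps=1e-8):
    theta = np.asarray(theta0, dtype=float)
    n_windows = 0
    for i in range(p, len(t) + 1):
        tw, yw = t[i - p:i], y[i - p:i]          # the p most recent samples
        previous = theta.copy()                  # warm start from the last window
        for _ in range(k_max):
            out, jac = model_and_jacobian(theta, tw)
            grad = -jac.T @ (yw - out)
            mu = 1.0 / np.linalg.eigvalsh(jac.T @ jac)[-1]   # assumed step-size rule
            theta = theta - mu * grad
        n_windows += 1
        if np.linalg.norm(theta - previous) < eps:   # estimates have settled
            break
    return theta, n_windows

# Noise-free hypothetical data and a nearby initial guess.
t = 0.1 * np.arange(1, 401)
theta_true = np.array([1.5, 2.0, 1.0, 2.5])
y = theta_true[0] * np.cos(theta_true[2] * t) + theta_true[1] * np.cos(theta_true[3] * t)
theta0 = np.array([1.45, 2.05, 1.002, 2.498])
theta_hat, n_windows = mdw_gi(t, y, theta0, p=100)
print(theta_hat, n_windows)
```

Each window costs only `k_max` gradient steps on p samples, which is what makes this style of update suitable for online use as new samples arrive.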

5. Examples

Case 1: The numerical simulations of the three iterative algorithms.

Consider the following dual-frequency cosine signal model:

The parameter vector to be estimated is

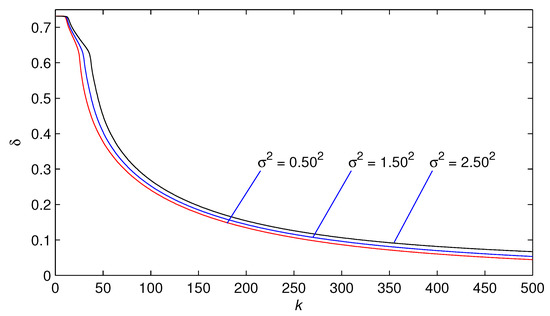

In simulation, is taken as a white noise sequence (stationary stochastic noise) with zero mean and variances , and , respectively. Let , the sampling period , , the data length . Apply the proposed GI algorithm using the observed data to estimate the parameters of this signal model. The parameter estimates and their estimation errors versus k are as shown in Table 1 and Figure 1.

Table 1. The GI parameter estimates and errors.

Figure 1. The GI estimation errors versus k.

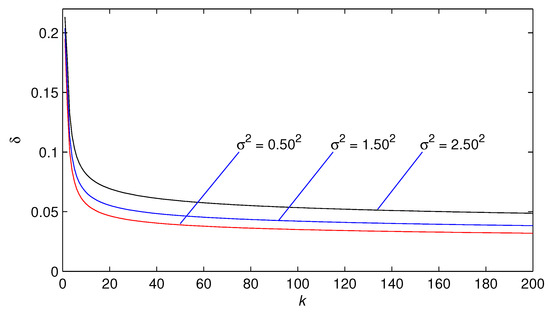

Apply the NI parameter estimation algorithm in Equations (18)–(35) with the finite observed data to estimate the parameters. The data length and the variances are the same as the condition in the GI algorithm. The parameter estimates and their estimation errors are given in Table 2 and the estimation errors versus k are shown in Figure 2.

Table 2. The NI parameter estimates and errors.

Figure 2. The NI estimation errors versus k.

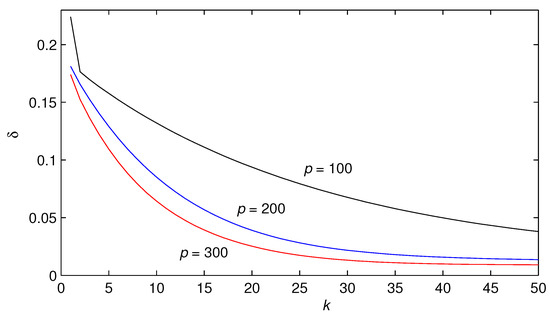

Apply the MDW-GI parameter estimation algorithm in Equations (41)–(48) to estimate the parameters of signal models. The length p of the moving data window is 100, 200 and 300, and is taken as a white noise sequence with zero mean and variance , respectively. The simulation results are shown in Table 3 and Figure 3.

Table 3. The MDW-GI parameter estimates and errors ().

Figure 3. The MDW-GI estimation errors versus k ().

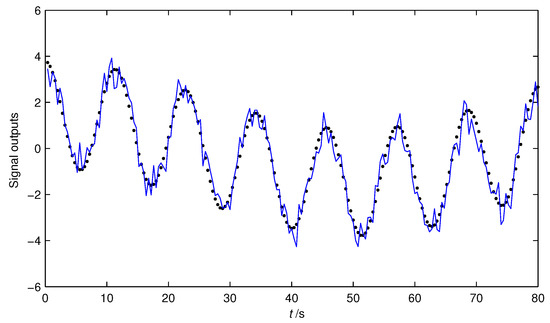

In order to validate the obtained models, we use the MDW-GI parameter estimates from the next-to-last row in Table 3 with to construct the MDW-GI estimated model

The estimated outputs and the observed signal are plotted in Figure 4, where the solid line denotes the actual signal and the dotted line denotes the estimated signal .

Figure 4. The estimated outputs and the actual signal versus t. Solid line: the actual output ; dotted line: the estimated signal .

From the simulation results, we can draw the following conclusions:

- The parameter estimation errors obtained by the three presented algorithms show a gradually decreasing trend as the iterative variable k increases.

- The parameter estimation errors given by the three algorithms become smaller as the noise variance decreases.

- In the simulation, the three algorithms are run under the same conditions (), and the estimated models obtained by the MDW-GI and NI algorithms have higher accuracy than that obtained by the GI algorithm.

- The outputs of the estimated model are very close to the actual signal . In other words, the estimated model can capture the dynamics of the signal.

6. Conclusions

This paper studies direct parameter estimation algorithms for signal models using only the discrete observed data. With the gradient search, a small step-size must be selected in order to ensure convergence, which increases the search time and decreases the convergence rate. The proposed NI and MDW-GI algorithms have higher accuracy than the GI estimation algorithm for estimating the unknown parameters. The MDW-GI algorithm obtains the parameter estimates at the current moment based on the estimates obtained from the data at the previous moment. Furthermore, the MDW-GI algorithm, based on the moving data window technique, can estimate the parameters of signal models in real time. The proposed algorithms can be extended to multi-frequency signal models. In future work, we will consider the estimation of the initial phases of the signal models, in which case the signal model is a highly nonlinear function of both the frequencies and the phases, and estimate all the parameters of dual-frequency signals, including the unknown amplitudes, frequencies and initial phases, simultaneously.

Acknowledgments

This work was supported by the Science Research of Colleges and Universities in Jiangsu Province (No. 16KJB120007, China), and sponsored by the Qing Lan Project and the National Natural Science Foundation of China (No. 61492195).

Author Contributions

Feng Ding conceived the idea and supervised his student Siyu Liu in writing the paper. Siyu Liu designed and performed the simulation experiments. Ling Xu was involved in the writing of this research article. All authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ding, F. Complexity, convergence and computational efficiency for system identification algorithms. Control Decis. 2016, 31, 1729–1741.

2. Ding, F.; Wang, F.F. Recursive least squares identification algorithms for linear-in-parameter systems with missing data. Control Decis. 2016, 31, 2261–2266.

3. Xu, L. Moving data window based multi-innovation stochastic gradient identification method for transfer functions. Control Decis. 2017, 32, 1091–1096.

4. Xu, L.; Ding, F. Recursive least squares and multi-innovation stochastic gradient parameter estimation methods for signal modeling. Circuits Syst. Signal Process. 2017, 36, 1735–1753.

5. Xu, L. The parameter estimation algorithms based on the dynamical response measurement data. Adv. Mech. Eng. 2017, 9.

6. Zhang, Q.G. The Rife frequency estimation algorithm based on real-time FFT. Signal Process. 2009, 25, 1002–1004.

7. Yang, C.; Wei, G. A noniterative frequency estimator with rational combination of three spectrum lines. IEEE Trans. Signal Process. 2011, 59, 5065–5070.

8. Jacobsen, E.; Kootsookos, P. Fast accurate frequency estimators. IEEE Signal Process. Mag. 2007, 24, 123–125.

9. Deng, Z.M.; Liu, H.; Wang, Z.Z. Modified Rife algorithm for frequency estimation of sinusoid wave. J. Data Acquis. Process. 2006, 21, 473–477.

10. Elasmi-Ksibi, R.; Besbes, H.; López-Valcarce, R.; Cherif, S. Frequency estimation of real-valued single-tone in colored noise using multiple autocorrelation lags. Signal Process. 2010, 90, 2303–2307.

11. So, H.C.; Chan, K.W. Reformulation of Pisarenko harmonic decomposition method for single-tone frequency estimation. IEEE Trans. Signal Process. 2004, 52, 1128–1135.

12. Cao, Y.; Wei, G.; Chen, F.J. An exact analysis of modified covariance frequency estimation algorithm based on correlation of single-tone. Signal Process. 2012, 92, 2785–2790.

13. Boashash, B.; Khan, N.A.; Ben-Jabeur, T. Time-frequency features for pattern recognition using high-resolution TFDs: A tutorial review. Digit. Signal Process. 2015, 40, 1–30.

14. Amezquita-Sanchez, J.P.; Adeli, H. A new music-empirical wavelet transform methodology for time-frequency analysis of noisy nonlinear and non-stationary signals. Digit. Signal Process. 2015, 45, 56–68.

15. Daubechies, I.; Lu, J.F.; Wu, H.T. Synchrosqueezed wavelet transforms: An empirical mode decomposition-like tool. Appl. Comput. Harmon. Anal. 2011, 30, 243–261.

16. Ding, F.; Xu, L.; Liu, X.M. Signal modeling—Part A: Single-frequency signals. J. Qingdao Univ. Sci. Technol. (Nat. Sci. Ed.) 2017, 38, 1–13. (In Chinese)

17. Ding, F.; Xu, L.; Liu, X.M. Signal modeling—Part B: Dual-frequency signals. J. Qingdao Univ. Sci. Technol. (Nat. Sci. Ed.) 2017, 38, 1–17. (In Chinese)

18. Ding, F.; Xu, L.; Liu, X.M. Signal modeling—Part C: Recursive parameter estimation for multi-frequency signal models. J. Qingdao Univ. Sci. Technol. (Nat. Sci. Ed.) 2017, 38, 1–12. (In Chinese)

19. Ding, F.; Xu, L.; Liu, X.M. Signal modeling—Part D: Iterative parameter estimation for multi-frequency signal models. J. Qingdao Univ. Sci. Technol. (Nat. Sci. Ed.) 2017, 38, 1–11. (In Chinese)

20. Ding, F.; Xu, L.; Liu, X.M. Signal modeling—Part E: Hierarchical parameter estimation for multi-frequency signal models. J. Qingdao Univ. Sci. Technol. (Nat. Sci. Ed.) 2017, 38, 1–15. (In Chinese)

21. Ding, F.; Xu, L.; Liu, X.M. Signal modeling—Part F: Hierarchical iterative parameter estimation for multi-frequency signal models. J. Qingdao Univ. Sci. Technol. (Nat. Sci. Ed.) 2017, 38, 1–12. (In Chinese)

22. Ding, J.L. Data filtering based recursive and iterative least squares algorithms for parameter estimation of multi-input output systems. Algorithms 2016, 9.

23. Yun, B.I. Iterative methods for solving nonlinear equations with finitely many roots in an interval. J. Comput. Appl. Math. 2013, 236, 3308–3318.

24. Dehghan, M.; Hajarian, M. Analysis of an iterative algorithm to solve the generalized coupled Sylvester matrix equations. Appl. Math. Model. 2011, 35, 3285–3300.

25. Wang, T.; Zheng, Q.Q.; Lu, L.Z. A new iteration method for a class of complex symmetric linear systems. J. Comput. Appl. Math. 2017, 325, 188–197.

26. Xu, L. Application of the Newton iteration algorithm to the parameter estimation for dynamical systems. J. Comput. Appl. Math. 2015, 288, 33–43.

27. Pei, K.; Wang, G.T.; Sun, Y.Y. Successive iterations and positive extremal solutions for a Hadamard type fractional integro-differential equations on infinite domain. Appl. Math. Comput. 2017, 312, 158–168.

28. Dehghan, M.; Hajarian, M. Fourth-order variants of Newtons method without second derivatives for solving nonlinear equations. Eng. Comput. 2012, 29, 356–365.

29. Gutiérrez, J.M. Numerical properties of different root-finding algorithms obtained for approximating continuous Newton’s method. Algorithms 2015, 8, 1210–1216.

30. Wang, X.F.; Qin, Y.P.; Qian, W.Y.; Zhang, S.; Fan, X.D. A family of Newton type iterative methods for solving nonlinear equations. Algorithms 2015, 8, 786–798.

31. Simpson, T. The Nature and Laws of Chance; University of Michigan Library: Ann Arbor, MI, USA, 1740.

32. Dennis, J.E.; Schnabel, R.B. Numerical Methods for Unconstrained Optimization and Nonlinear Equations; Prentice-Hall: Englewood Cliffs, NJ, USA, 1983.

33. Jürgen, G. Accelerated convergence in Newton’s method. Soc. Ind. Appl. Math. 1994, 36, 272–276.

34. Djoudi, M.S.; Kennedy, D.; Williams, F.W. Exact substructuring in recursive Newton’s method for solving transcendental eigenproblems. J. Sound Vib. 2005, 280, 883–902.

35. Benner, P.; Li, J.R.; Penzl, T. Numerical solution of large-scale Lyapunov equations, Riccati equations, and linear-quadratic optimal control problems. Numer. Linear Algebra Appl. 2008, 15, 755–777.

36. Seinfeld, D.R.; Haddad, W.M.; Bernstein, D.S.; Nett, C.N. H2/H∞ controller synthesis: Illustrative numerical results via quasi-newton methods. Numer. Linear Algebra Appl. 2008, 15, 755–777.

37. Liu, M.M.; Xiao, Y.S.; Ding, R.F. Iterative identification algorithm for Wiener nonlinear systems using the Newton method. Appl. Math. Model. 2013, 37, 6584–6591.

38. Curry, H.B. The method of steepest descent for non-linear minimization problems. Q. Appl. Math. 1944, 2, 258–261.

39. Vrahatis, M.N.; Androulakis, G.S.; Lambrinos, J.N.; Magoulas, G.D. A class of gradient unconstrained minimization algorithms with adaptive stepsize. J. Comput. Appl. Math. 2000, 114, 367–386.

40. Hajarian, M. Solving the general Sylvester discrete-time periodic matrix equations via the gradient based iterative method. Appl. Math. Lett. 2016, 52, 87–95.

41. Wang, C.; Wang, J.Y.; Zhang, T.S. Operational modal analysis for slow linear time-varying structures based on moving window second order blind identification. Signal Process. 2017, 133, 169–186.

42. Al-Matouq, A.A.; Vincent, T.L. Multiple window moving horizon estimation. Automatica 2015, 53, 264–274.

43. Boashash, B. Estimating and interpreting the instantaneous frequency of a signal—Part 1: Fundamentals. Proc. IEEE 1992, 80, 520–538.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).