Introduction

Our everyday visual environment consists of many objects, with a wide variety of characteristics. The visual environment can undergo various physical transformations. These dynamic transformations usually result in perceptible changes in the scene. The ability to detect change is important in much of our lives (e.g., entering the classroom, or driving through traffic signs). Generally, people easily detect changes occurring around them. However, a number of researchers have found that people are surprisingly poor at detecting changes in visual scenes. These failures of change detection were observed both in complex natural scenes and in artificial displays, and regardless of whether or not observers were expecting the change (Rensink, 2002; Simons & Levin, 1997).

Eye movements usually accompany these change search and detection processes. Eye movements and fixations are not random; they are directed by many factors, including salience, or internal expectancy related to interesting objects present in the environment. Therefore, different eye movement patterns and indices could be analyzed to identify intent in a specific situation.

During the last decade, increasing interest in human-computer interaction (HCI) and human-robot interaction (HRI) has been linked with research on the human internal state and what it entails, as well as its interaction with the external environment. More specifically, human intent has been used to explain users’ mental states, behaviors, and goals in designing user-friendly interaction systems (Salvucci, 1999; Wong, Park, Kim, Jung, & Bien, 2006; Youn & Oh, 2007).

Human intent can be explicit or implicit in nature. Generally, humans express their intent explicitly through facial expressions, speech, and hand gestures. However, these explicit expressions alone may not be enough to enable an accurate understanding of human intent. Therefore, it is critical to be able to understand the implicit component of human intent. Recently, there have been attempts to understand a user’s implicit intent based on electroencephalograms (EEG) (Ferreira et al., 2008; Park, Park, Ko, & Sim, 2011), electromyograms (Ahsan, Ibrahimy, & Khalifa, 2009), and eye tracking (Ibanez et al., 2014; Irwin & Gordon, 1998; Jang, 2014; Jang, Lee, Mallipeddi, Kwak, & Lee, 2014; Munoz & Everling, 2004).

Eye movements provide rich and complex information regarding human interest and intent (Poole & Ball, 2006). The fixations and saccades observed in response to a given scene might indicate what people see, where they attend, and how they acquire information. Specifically, more overall fixations indicate a less efficient search (Goldberg & Kotval, 1999), and are often an index of greater uncertainty in recognizing a target object (Jacob & Karn, 2003). More fixations on a particular area indicate that it is more noticeable, or more important, to the viewer than other areas (Poole & Ball, 2006). A longer fixation duration indicates difficulty in extracting information, or that the object is more engaging in some way (Just & Carpenter, 1976).

Eye movements are fundamental motor movements controlled by the human cognitive system, and they have been studied in relation to cognitive processing of visual information (Schwarz & Schmuckle, 2002). Eye movements provide a dynamic trace of where a person’s attention is being directed in a visual scene. In other words, human eye movements constantly provide information on how and where a person gazes according to their intent. Therefore, measuring aspects of eye movements can reveal the level of processing applied to objects.

Recently, Jang et al. (2014) proposed a new model for the recognition of human implicit intent based on eye movement patterns and pupil size variation. In their experiments, they confirmed that eye movement indices are the main variables that enable discrimination between navigational intent (a person’s intention to find interesting objects in a visual display without a particular goal) and informational intent (a person’s intention to find a particular object).

Taken together, it can be assumed that humans generate specific eye movement patterns during a visual search according to their implicit intent. In other words, different implicit intentions may produce different eye movement patterns when looking at the same visual scene.

The current study focuses on eye movement patterns in a change-detection situation. Specifically, this study examines how human implicit intent affects the patterns of change-detection behavior. If our results show different patterns of eye movement in response to different intentions, we might be able to develop a model to identify human implicit intent using eye movement indices.

This study has some characteristics that distinguish it from other human implicit intent studies. Firstly, several studies of human intent categorized human implicit intent during visual stimulation as navigational intent and informational intent. We classified human implicit intent according to varying levels of information given to participants; to examine the effects of the type of intent, we defined three types of change-detection intent: “navigational intent,” “low-specific intent,” and “high-specific intent.” We postulated that each distinctive type of intent would require different patterns of search behavior, and hence would yield different eye-movement patterns. Secondly, by employing a primary task in which participants were required to identify aloud the colors of objects in the pre-change scene, we tried to control eye movements and visual search patterns in the pre-change scene.

Methods

Participants

Students at the Kyungpook National University in South Korea (N = 148) participated in this experiment. All students had normal or corrected-to-normal vision. None wore contact lenses. Forty-five participants were assigned to the navigational intent condition, thirty participants to the low-specific intent condition, and sixty-two to the high-specific intent condition. All participants provided written consent, and received course credits for their participation in this study. Eleven students were excluded from the analysis as they had calibration errors, or errors in the eye-movement data procured during the experiment. Finally, one hundred and thirty-seven students’ data were included in the analysis.

Apparatus

An eye tracking system (Tobii 1750 eye tracker, Tobii Technology Inc.) was utilized to measure each participant’s visual scan path in response to visual stimuli. The Tobii 1750 eye tracker locates the participant’s eyes, and calculates gaze positions automatically as they concentrate on the display stimulus. A high-resolution camera integrated into a 17-inch TFT display unit, with a maximum resolution of 1280 × 1024 pixels, was used to acquire eye images. Near-infrared light-emitting diodes were used to capture the reflection patterns on the corneas of the participants’ eyes (Tobii User Manual, 2003). Visual stimuli were displayed on Windows OS computers, with 17-inch monitors (resolution 1280 × 1024 pixels) located approximately 60 cm from the participant’s eyes. The Tobii 1750 eye tracker collected gaze data concurrently for each eye, which provides greater accuracy. The software system measured the participant’s eye movements, fixation duration and count, observation duration and count, and visual scan path.

Stimuli and procedures

Three sets of pre- and post-change images were employed in the study. The post-change image was the same as the pre-change image, with one object missing from the scene. Two pairs of images were taken with a digital camera, and a pair of images was obtained from a book (Goldstein, 2007). An object was erased from the scene using Adobe Photoshop 7.0 software.

All participants were tested individually in a quiet room at the laboratory. Participants were seated approximately 60 cm from the screen, and began by performing a 5-point grid calibration procedure. Task instructions were then displayed on the monitor, prior to display of the pre-change scene. The main experiment consisted of three trials. It took each participant approximately 5 minutes to complete the experiment.

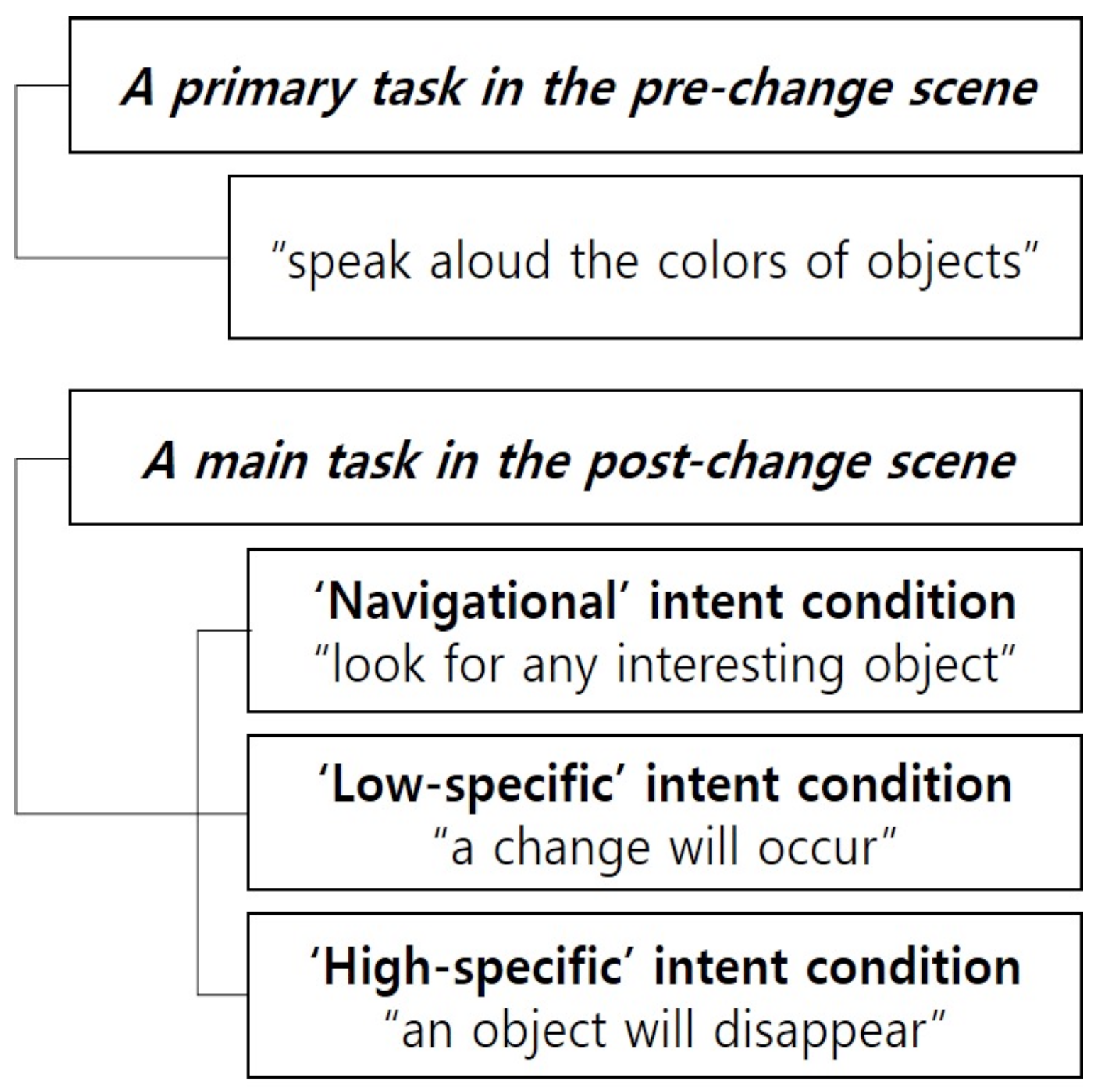

Figure 1.

Experimental procedure

Figure 2.

Task instructions

Task: a change detection task

After the pre-change scene was presented, participants were asked to identify colors of objects in the display, and speak them aloud for 5 seconds (primary task). This primary task was employed to ensure all participants made similar eye-movements when viewing the pre-change scene.

Participants were directed to look at the scene in accordance with specific instructions, thereby generating search intents that would depend on the information provided to them. There were three conditions: participants in the navigational intent condition were asked to look for any interesting objects, and were not given any information about the change to be made. In the low-specific intent condition, participants were provided with the information that a change may occur. In the high-specific intent condition, participants were informed that a change may occur, and more specifically, that an object may disappear.

The pre-change scene was presented for 5 seconds. This was followed by two 0.5-second blank displays: one with a white background, and the other with a gray background. These blank displays were masks, designed to erase after-images of the pre-change scene. This prevented participants from detecting changes based on the abrupt local changes between the pre- and post-change scenes. Finally, upon a 10-second presentation of the post-change scene, participants were asked to report any changes as compared to the pre-change scene. If they detected any change, participants were asked to point to the location of, and report the name of, the object that had changed. In addition, we recorded eye-movement data for each participant in each trial, starting at the presentation of the pre-change scene.
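The trial timeline described above can be laid out as a short sketch. The phase durations come from the text; the phase names, the helper function, and the order of the two masks (white first, then gray) are assumptions made only for illustration.

```python
# Phase durations in seconds, taken from the procedure described in the text.
# The phase names and the white-then-gray mask order are assumptions.
PHASES = [
    ("pre_change_scene", 5.0),
    ("white_mask", 0.5),
    ("gray_mask", 0.5),
    ("post_change_scene", 10.0),
]


def phase_onsets(phases):
    """Return (name, onset_s, offset_s) tuples for consecutive phases."""
    timeline = []
    t = 0.0
    for name, duration in phases:
        timeline.append((name, t, t + duration))
        t += duration
    return timeline


timeline = phase_onsets(PHASES)
trial_length = timeline[-1][2]  # offset of the last phase

for name, onset, offset in timeline:
    print(f"{name:18s} {onset:5.1f} s to {offset:5.1f} s")
print(f"total trial length: {trial_length:.1f} s")
```

Under these assumptions, the post-change scene begins 6 seconds after the onset of the pre-change scene, and one trial lasts 16 seconds from scene onset.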

Experimental design and analysis

All statistical analyses were performed using SPSS for Windows, version 21. We conducted a multivariate analysis of variance (MANOVA), and a mixed-design analysis of variance (ANOVA) for each dependent variable (DV). The independent variables were the change detection condition (between-subject variable), and the pre- and post-change scenes (within-subject variable). Specifically, we used the first 5 seconds of the post-change scene in the statistical analysis to balance the duration of time between the pre-change scene and the post-change scene. The dependent variables were four eye-movement indices: fixation count (total number of fixations counted in the scene), first fixation duration (time spent on the first fixation), mean fixation duration (mean fixation duration excluding revisited fixations on the same position), and total fixation duration (total time spent on fixations).
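As a minimal sketch of how the four indices could be derived from a list of fixations, consider the following. The `Fixation` record, the function name, and the sample values are hypothetical, and this is not the Tobii software's own computation. Two simplifications are assumed: fixations are selected by their onset falling inside the 5-second window, and the mean is a plain average, so the exclusion of revisited positions described above is not implemented.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    onset_ms: float     # time since scene onset (hypothetical field)
    duration_ms: float  # length of the fixation (hypothetical field)


def fixation_indices(fixations, window_ms=5000.0):
    """Compute the four indices for fixations that begin inside the window.

    Assumption: a fixation counts if its onset lies in [0, window_ms).
    The mean is a plain average; revisited positions are not excluded.
    """
    in_window = sorted(
        (f for f in fixations if 0 <= f.onset_ms < window_ms),
        key=lambda f: f.onset_ms,
    )
    count = len(in_window)
    total = sum(f.duration_ms for f in in_window)
    return {
        "fixation_count": count,
        "first_fixation_duration": in_window[0].duration_ms if count else 0.0,
        "mean_fixation_duration": total / count if count else 0.0,
        "total_fixation_duration": total,
    }


# Made-up example data, for illustration only.
sample = [
    Fixation(0.0, 200.0),
    Fixation(250.0, 300.0),
    Fixation(600.0, 400.0),
    Fixation(5200.0, 250.0),  # starts after the 5-second window, so ignored
]
indices = fixation_indices(sample)
print(indices)
```

With the made-up sample, three fixations fall inside the window, giving a fixation count of 3, a first fixation duration of 200 ms, a mean of 300 ms, and a total of 900 ms.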

Results and discussion

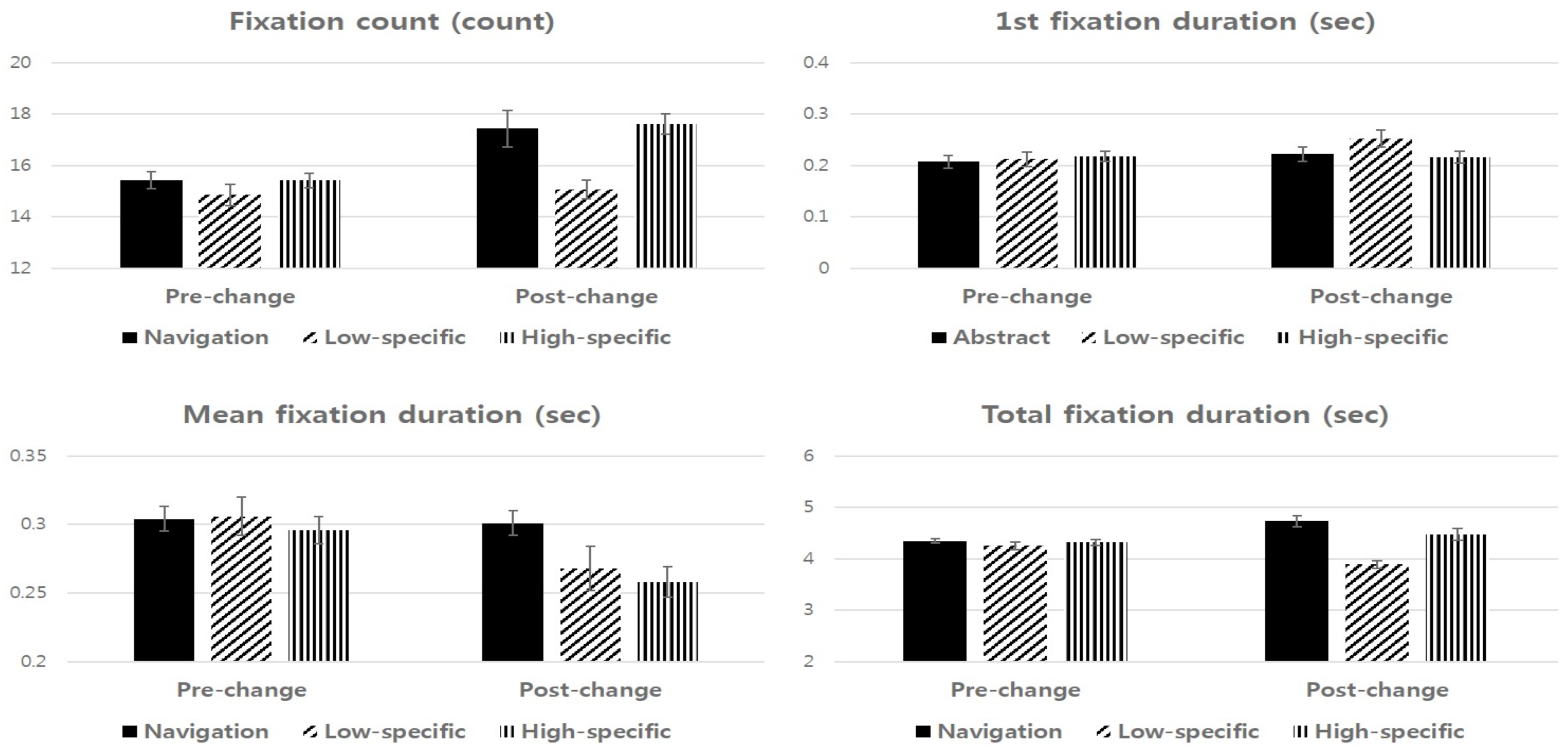

None of the participants in the navigational intent condition reported noticing any change. Furthermore, change detection performance in the low- and high-specific intent conditions was not improved as compared to that in the navigational intent condition. Our primary concern was whether the conditions’ different intents would affect their eye movements, regardless of whether we would observe varying amounts of change blindness across the conditions. Participants in all three conditions were required to perform the primary task (similar to the task utilized by Beck, Levin, & Angelone, 2006) before carrying out the change detection task, in order to control the participants’ search processes. As can be seen in Figure 3, this control manipulation appears to have been successful, since eye-movement patterns in the pre-change scene were uniform across the three intent conditions. It might also have disrupted the encoding of objects in the pre-change scene, yielding uniform levels of change blindness.

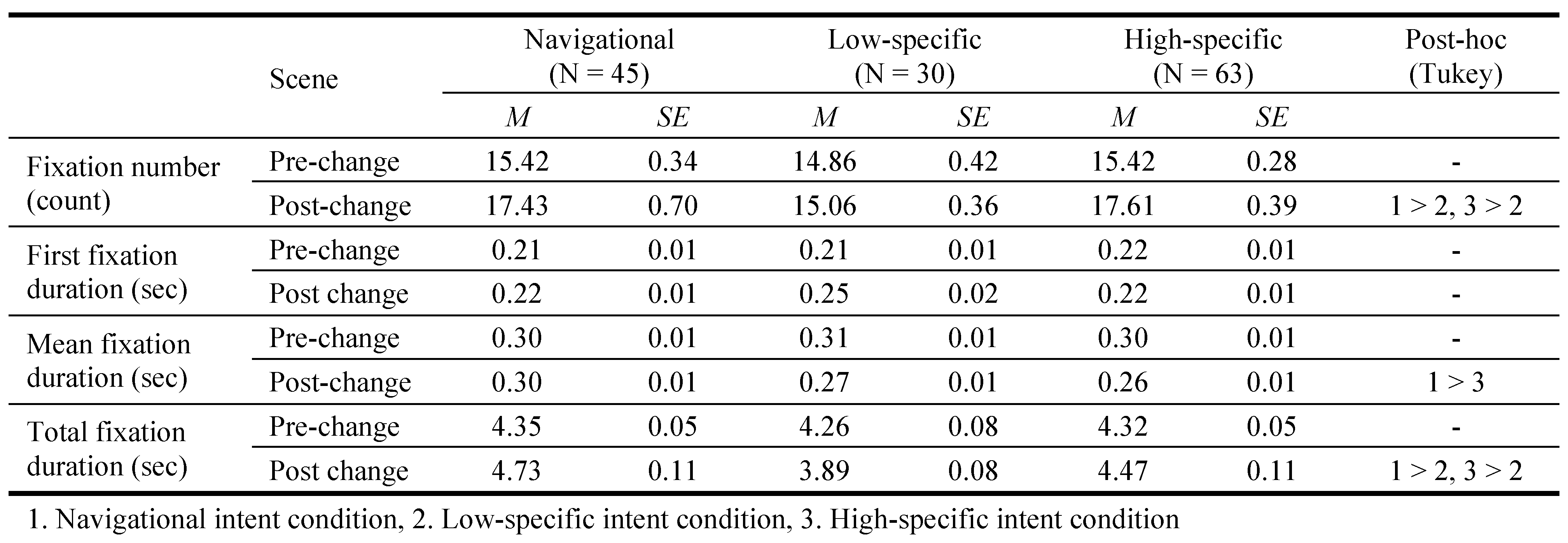

The mean and standard error for each intent condition and each DV are shown in Table 1. There were no significant effects of the type of intent on any DV in the pre-change scene. Specifically, the effects on fixation count [F (2, 411) = .725, p = .485, ns.], the first fixation duration [F (2, 411) = .181, p = .834, ns.], the mean fixation duration [F (2, 411) = .415, p = .661, ns.], and the total fixation duration [F (2, 411) = .510, p = .601, ns.] were not significant. These results suggest that the primary task effectively controlled the visual search process during the pre-change scene.

Mixed-design ANOVAs were conducted to test differences between the intent conditions for each DV. First, for the fixation count, we found significant main effects of intent condition [F (2, 411) = 4.540, p < .05] and scene condition [F (1, 411) = 24.108, p < .001], as well as a significant interaction between intent condition and scene condition [F (2, 411) = 3.839, p < .05]. To decompose the interaction, an analysis of simple effects showed that the effect of scene condition was more pronounced in the high-specific intent condition [F (1, 188) = 28.539, p < .001] than in the navigational intent condition [F (1, 134) = 10.981, p < .01] or the low-specific intent condition [F (1, 89) = .266, p = .607, ns.]. More specifically, a simple comparison analysis showed that the low-specific intent condition had a lower fixation count than the other intent conditions [t (408) = -3.345, p < .01]. Additionally, a post-hoc analysis revealed that the navigational intent and high-specific intent conditions showed significantly greater fixation counts than the low-specific intent condition.

Second, for the first fixation duration, there was no significant effect of intent condition in the post-change scene. However, the low-specific intent condition showed a longer first fixation duration than the other intent conditions, though this difference was only marginally significant [t (408) = 1.775, p = .077, ns.].

Third, for the mean fixation duration, we found significant main effects of intent condition [F (2, 411) = 4.722, p < .01] and scene condition [F (1, 411) = 31.328, p < .001], as well as a significant interaction between intent condition and scene condition [F (2, 411) = 7.199, p < .01]. An analysis of simple effects showed that the effect of scene condition was more pronounced in the high-specific intent condition [F (1, 188) = 42.874, p < .001] than in the low-specific intent condition [F (1, 89) = 16.318, p < .001] or the navigational intent condition [F (1, 134) = .054, p = .817, ns.]. More specifically, a simple comparison analysis showed that the navigational intent condition had a significantly longer mean fixation duration than the other intent conditions [t (408) = 4.624, p < .001]. Similar results were obtained from the post-hoc analysis (Table 1).

Fourth, for the total fixation duration, we found a significant main effect of intent condition [F (2, 411) = 8.683, p < .001] and a significant interaction between intent condition and scene condition [F (2, 411) = 9.656, p < .001]. An analysis of simple effects showed that the effect of scene condition was more pronounced in the low-specific intent condition [F (1, 89) = 26.287, p < .001] than in either the navigational intent condition [F (1, 134) = 10.506, p < .01] or the high-specific intent condition [F (1, 188) = 2.296, p = .131, ns.]. An additional simple comparison analysis indicated that the low-specific intent condition showed a significantly longer total fixation duration than the two other intent conditions [t (408) = 4.495, p < .001], similar to the result of a post-hoc analysis.

Taken together, our results showed that the type of intent affects eye movements, and that different intents give rise to different search patterns during the change detection process. Specifically, navigational intent is related to an individual’s subjective interests in the visual scene. Navigational intent is thought to operate on raw sensory input, rapidly and involuntarily shifting attention to salient visual features of potential importance (Connor, Egeth, & Yantis, 2004). Therefore, this intent condition does not necessarily entail deep visual processing levels; the navigational intent may have involved more frequent fixations than the other intent conditions, as shown in Figure 3.

The low- and high-specific intent conditions may also show different patterns of search process during the change detection task. Specifically, in the low-specific intent condition, a participant was provided with the information that a change would occur. As this information was not specific, the participants had to look for all possible changes, such as changes in orientation, size, or color of all objects in the scene. It would have been easy to detect a single, predefined property change, but it is far more difficult to check all properties of every object (Luck & Vogel, 1997; Rensink, 2002). Therefore, participants in the low-specific intent condition might have had to spend longer looking at each object (fixation duration) to assess whether a change had been made than participants in the high-specific intent condition, who had received more information about the impending change. This is consistent with the marginally prolonged first fixation duration recorded in the low-specific intent condition when assessing the post-change scene. Moreover, fewer fixations would be expected, since the presentation time was limited in each trial, as shown in Figure 3. As previously mentioned, the high-specific intent condition was provided with more specific information about the impending change than was the low-specific intent condition. They were advised that an object might disappear from the scene. Participants had to compare visual information in the pre-change scene with the post-change scene. Unlike those in the low-specific intent condition, participants in the high-specific intent condition did not have to assess all the possible property changes of objects; instead, they needed to fixate on as many objects as possible in the post-change scene, to check if there were any missing objects. These demands made of the high-specific intent condition would likely have resulted in greater fixation counts, and shorter fixation durations, as compared to the low-specific intent condition.

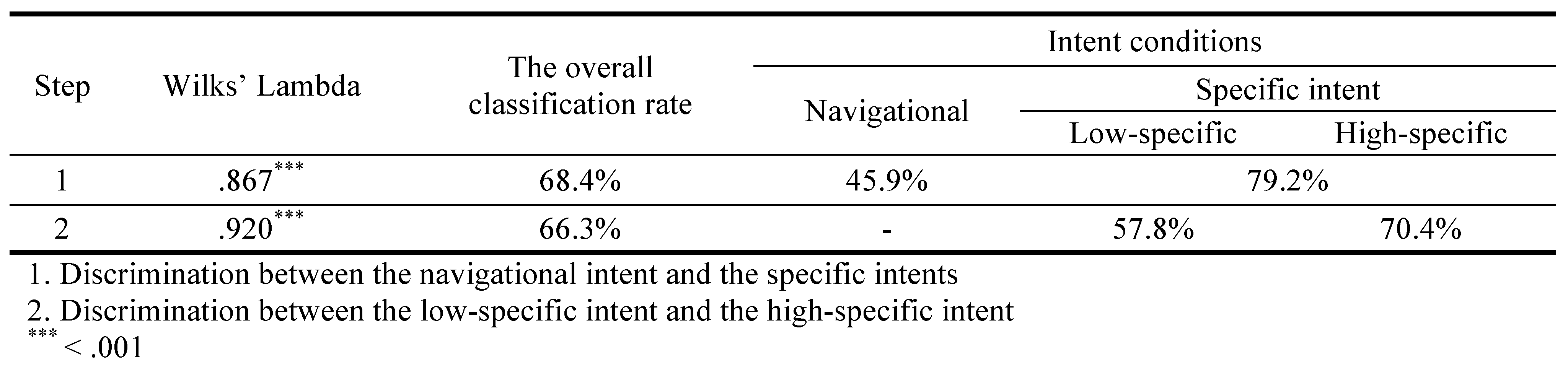

To examine the discriminating power of the DV indices, we carried out a stepwise discriminant function analysis. The four indices (fixation count, first fixation duration, mean fixation duration, and total fixation duration in the post-change scene) were included in the analysis. The first step of the analysis was to discriminate between the navigational intent condition and the two other conditions. The next step was to discriminate between the low-specific intent and the high-specific intent conditions. Table 2 and Table 3 show the results of the discriminant analysis.
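The two-step structure of this analysis (navigational versus specific, then low-specific versus high-specific) can be illustrated with a toy sketch. This is not the stepwise discriminant function analysis run in SPSS: a nearest-centroid rule is swapped in as a stand-in so that the example stays self-contained. All function names are hypothetical, and the feature values are made-up standardized numbers, not data from this study.

```python
from statistics import mean


def centroid(rows):
    """Column-wise mean of a list of equal-length feature vectors."""
    return [mean(col) for col in zip(*rows)]


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(x, centroids):
    """Label of the centroid closest to x."""
    return min(centroids, key=lambda label: sq_dist(x, centroids[label]))


def fit_two_step(samples):
    """Build the centroids for both steps from labeled feature vectors."""
    specific = samples["low_specific"] + samples["high_specific"]
    step1 = {
        "navigational": centroid(samples["navigational"]),
        "specific": centroid(specific),
    }
    step2 = {
        "low_specific": centroid(samples["low_specific"]),
        "high_specific": centroid(samples["high_specific"]),
    }
    return step1, step2


def classify(x, step1, step2):
    """Step 1: navigational vs. specific. Step 2: low vs. high specific."""
    if nearest(x, step1) == "navigational":
        return "navigational"
    return nearest(x, step2)


# Made-up standardized features: [fixation count, mean fixation duration].
toy = {
    "navigational": [[0.2, 1.0], [0.4, 1.2], [0.0, 0.8]],
    "low_specific": [[-1.0, -0.2], [-1.2, -0.4], [-0.8, -0.6]],
    "high_specific": [[0.8, -0.8], [1.0, -0.6], [0.6, -0.4]],
}
step1, step2 = fit_two_step(toy)
print(classify([0.3, 0.9], step1, step2))
print(classify([-0.9, -0.5], step1, step2))
print(classify([0.9, -0.7], step1, step2))
```

The point of the sketch is only the hierarchy: a case is first assigned to the navigational or the specific group, and only cases assigned to the specific group are then split into low-specific and high-specific.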

First, in the discriminant analysis between the navigational intent condition and the other two specific intent conditions, the overall correct classification rate was 68.4%, and the Wilks’ Lambda of the discriminant function was highly significant (.867, p < .001). The classification matrix showed that it correctly identified 62 of 135 (45.9%) cases of the navigational intent, and 221 of 279 (79.2%) cases of the specific intents. Additionally, the mean fixation duration (.628) and total fixation duration (.403) showed high discriminant coefficients among the DV indices (Table 3).

Second, in the discriminant analysis between the low-specific intent and the high-specific intent, the overall correct classification rate was 66.3%, and the Wilks’ Lambda of the discriminant function was significant (.920, p < .001). The classification matrix showed that it correctly identified 52 of 90 (57.8%) cases of the low-specific intent, and 133 of 189 (70.4%) cases of the high-specific intent. Additionally, the fixation count (-.841) and total fixation duration (r = -.697) showed high discriminant coefficients (Table 3).
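The reported classification rates follow directly from the case counts in the two classification matrices. The short check below recomputes them; the counts are those given in the text, while the variable and function names are chosen here for illustration.

```python
def rate(correct, total):
    """Percentage of correctly classified cases, rounded to one decimal."""
    return round(100.0 * correct / total, 1)


def overall(matrix):
    """Overall correct classification rate across all groups in a matrix."""
    correct = sum(c for c, _ in matrix.values())
    total = sum(t for _, t in matrix.values())
    return rate(correct, total)


# (correctly classified cases, total cases) per group, as reported in the text.
first_analysis = {"navigational": (62, 135), "specific": (221, 279)}
second_analysis = {"low_specific": (52, 90), "high_specific": (133, 189)}

for name, matrix in [("first", first_analysis), ("second", second_analysis)]:
    for group, (correct, total) in matrix.items():
        print(f"{name} analysis, {group}: {rate(correct, total)}%")
    print(f"{name} analysis, overall: {overall(matrix)}%")
```

The overall rates are 283 of 414 cases (68.4%) for the first analysis and 185 of 279 cases (66.3%) for the second, matching the reported values.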

Taken together, the discriminant function analysis showed reasonable classification rates for identifying the types of intent conditions. Additionally, we were able to confirm that eye-movement patterns are closely associated with the types of change detection intent. Specifically, the mean and total fixation duration were found to be useful in classifying the navigational intent and the two specific intents, and the fixation count and the total fixation duration were important to differentiate between the two levels of specific intent conditions. These results suggest that the eye-movement indices in a change detection task may be useful in detecting scene intents.

Conclusions

The goal of the present study was to examine how implicit intent affects eye-movement patterns during change detection behavior. To examine the effects of intent on eye movements, we induced three types of change detection intent. Participants in the three intent conditions were exposed to the same visual stimuli. The conditions were labeled: “navigational intent,” “low-specific intent,” and “high-specific intent.” Each type of intent would require different patterns of change search, and hence would yield different patterns of eye movements. Therefore, we expected to find different patterns of eye movement according to the prescribed intents when undertaking the task. The main results are as follows.

First, by employing a primary task, in which participants were required to speak aloud the colors of objects in the pre-change scene, we successfully controlled the visual search process during exposure to the pre-change scene, so that there were no differences between the three conditions in their patterns of eye-movement. This control might have disrupted encoding of the objects in the pre-change scene, yielding uniform levels of change-blindness across the three conditions. Additionally, the different eye-movement patterns observed in the post-change scene can be assumed to be an effect of the types of change detection intent.

Second, we observed significantly different patterns of eye-movement across the types of intent conditions in the post-change scene; this result suggested that generating a specific intent for change detections yields a distinctive pattern of eye-movement indices. Specifically, the navigational intent condition is closely related with a person’s subjective interests in the visual scene; therefore, this intent condition does not necessarily entail deep visual processing levels. Navigational intent is thought to operate on raw sensory input, rapidly and involuntarily shifting attention to salient visual features of potential importance (Connor, Egeth, & Yantis, 2004). Therefore, the navigational intent may result in greater fixation counts than the other intent conditions.

The two specific intent conditions also showed different patterns in search processes during the change detection behavior. Specifically, participants in the low-specific intent condition were provided with the information that a change would occur in the visual scene. As this information was not specific, these participants had to check for all possible changes in the features of every object in the scene. Therefore, participants might have had to spend more time on each object to assess whether a change had been made. As a result, the low-specific intent condition may exhibit a reduced fixation count and longer fixation duration when compared to the high-specific intent condition. In contrast, the high-specific intent condition was provided with more specific information about the impending change: they were advised that an object might disappear from the scene. Participants in this condition had to compare the visual information in the pre-change scene with the post-change scene. Unlike the low-specific intent condition, they did not have to consider all the possible property changes of every object; instead, they had to fixate on as many objects as possible in the post-change scene to check if there were any missing objects. These demands of the high-specific intent condition would result in greater fixation counts and shorter fixation durations.

Finally, we carried out the discriminant function analysis based on the four indices (fixation count, first fixation duration, mean fixation duration, and total fixation duration). The results showed reasonable classification rates for identifying the types of intention condition. Additionally, we were able to confirm that the eye-movement patterns are closely associated with the types of change detection intent. Specifically, the mean duration and total fixation duration were useful in classifying navigational versus specific intent conditions. Furthermore, the fixation count and the total fixation duration were important to classify the two levels of specific intents. Taken together, these results suggest that eye-movement might be a useful index in classifying human change detection intent. Types of intent affected eye-movement patterns in a change-detection task. These intention conditions cause differences in visual processing during the visual search.

The three types of intent condition used in our study may have some relevance to the bottom-up (stimulus-driven) and top-down (task-driven or user-driven) guidance in visual search. According to guided search theory (Wolfe, 1994), the bottom-up visual search process describes attentional processing, which is driven by the properties of the objects themselves, or a person’s subjective interests in the visual scene. It does not depend on the person’s knowledge of the specific target. Top-down visual search process will guide attention to a desired object or purpose in the visual scene: information from top-down processing of the stimulus is used to create a ranking of objects in order of their attentional priority. Thus, this aspect of our visual search is under the control of the person who is attending to the visual stimulus. Therefore, it is mediated primarily by the frontal cortex and basal ganglia as one of the executive functions (Posner & Petersen, 1990). Research has shown that it is related to other aspects of the executive function such as working memory (Astle & Scerif, 2009). The navigational intent condition in this study demonstrates similar characteristics to the bottom-up visual search process, and the two specific intent conditions have characteristics similar to those of the top-down visual search process. We were able to confirm the effect of the two different aspects of visual search process based on the task-relevant information through the results of our study.

Our results are consistent with earlier studies of human implicit intent and its effect on eye movement patterns (Cutrell & Guan, 2007; Gonzalez-Caro & Marcos, 2011; Jang, 2014; Jang et al., 2011; Jang et al., 2014). In these studies, human implicit visual search intents were classified into navigational and informational intents depending on the person’s purposes in a visual search task. Results showed that each intention condition was associated with different eye movement patterns, and that fixation count and total fixation duration were useful indices for the classification of human implicit intent. The results of our study also indicated that eye movement patterns might be useful indices to classify change detection intent. Furthermore, earlier studies of human intent have categorized the human implicit intent as navigational and informational intent. In contrast, in our study we classified the human implicit intent into three levels of intent depending on the level of information given to participants ahead of the task. We were able to confirm that eye-movement patterns were affected by the categories of intent as well as the levels of specific information.

Despite some positive findings, the limitations of this study need to be considered. Numerous changes can be made to various properties of an object, or multiple objects, in the visual scene. Change detection performance can vary with changes to different properties (Rensink, 2000). In our study, we altered a single property of an object by making it disappear. Thus, future studies would be needed to assess the impact of implicit intent on the visual search process when other (more complex) properties of the visual scene are altered. Finally, we used four indices as the dependent variables in this study. Other indices (e.g., pupil size, areas of interest, sequences of scan path, and travel distance of eye-movements) might also be useful in revealing specific cognitive processes during the visual search performance.