Abstract

The simulated data used in eye-tracking research have largely been generated using normative eye models, with little consideration of how the variations in eye biometry found in the population may influence eye-tracking outcomes. This study investigated the influence that variations in eye model parameters have on the ability of simulated data to predict real-world eye-tracking outcomes. The real-world experiments performed by two pertinent comparative studies were replicated in a simulated environment using a high-complexity stochastic eye model that includes anatomically accurate distributions of eye biometry parameters. The outcomes showed that variations in anterior corneal asphericity significantly influence the simulated eye-tracking outcomes of both interpolation-based and model-based gaze estimation algorithms. Other, more commonly varied parameters, such as the corneal radius of curvature and the foveal offset angle, had little influence on simulated outcomes.

Introduction

The performance of an eye-tracker can be affected by a multitude of factors, of which interpersonal variation in eye biometry is thought to be a major contributor (Holmqvist, 2017; Blignaut, 2016). This makes developing an eye-tracker that performs well across a large portion of the population a difficult endeavour.

Interpersonal variations in the performance of eye-tracking methods have been widely reported. Lai et al. (2014) reported interpersonal variations in gaze estimation errors as large as 0.7°. Villanueva and Cabeza (2008) reported errors larger than 2° between participants for a model-based gaze estimation algorithm using a similar eye-tracking hardware configuration. A series of studies investigating interpolation-based gaze estimation methods also reported significant interpersonal variations in eye-tracking performance (Blignaut and Wium, 2013; Blignaut, 2014; Blignaut, 2016).

Simulated eye-tracking data are generated by replicating the hardware and user of a device in a simulated environment. Ray-tracing operations are then used to simulate the positions of the features used by an eye-tracker, such as the user's pupil and glints, on the image sensor of a camera model. Contemporary simulation environments also include realistic head models, which allow synthetic images of the entire eye region to be simulated (Wood et al., 2016; Kim et al., 2019; Nair et al., 2020).

Using simulations, large and diverse eye-tracking datasets can be generated in a fraction of the time it would take to generate an equivalent amount of data in user studies (Wood et al., 2016). These datasets facilitate the rapid, repeated, and independent evaluation of eye-tracking methods. This is particularly advantageous during the early development of an eye-tracker in which various configurations of hardware and algorithmic components are considered (Villanueva and Cabeza, 2008; Narcizo et al., 2021).

Increasing the interpersonal variation in simulated eye-tracking data has been one of the central ambitions in the development of increasingly complex synthetic image data. This is evidenced by the growing number of realistic eye-region textures and the increasing complexity of the methods used to simulate variations in the structure of the eye region. These developments run from the initial synthetic eye-tracking images of Świrski and Dodgson (2014) to more contemporary works such as the models developed by Wood et al. (2016) and Stengel et al. (2019). However, a source of eye-tracking errors as fundamental as variation in eye biometry has been largely overlooked in the pursuit of greater interpersonal diversity in simulated eye-tracking data.

Investigations that endeavour to include variations in eye biometry in simulated data have a few options. The simplest is the one-at-a-time approach, in which one parameter of an eye model is systematically varied within reported biometric ranges while the other parameters are kept constant. This method allows researchers to investigate the influence of each eye parameter on simulated outcomes separately (Szczęsna and Kasprzak, 2006; Hansen et al., 2010). Another method is to simultaneously generate a combination of eye model parameters from anatomically observed distributions (Huang et al., 2014). These approaches should be used with caution, as they are likely to produce combinations of ocular biometry that are not biometrically plausible (Rozema et al., 2016). The realism of the data is also limited by the complexity of the eye model whose parameters are varied. For example, most studies in the eye-tracking literature vary the parameters of an eye model with a single spherical corneal surface. Such a model cannot introduce realistic variations in corneal asphericity into the simulated data.

Stochastic eye models are a promising alternative for including variations in eye biometry in simulated eye-tracking data while using a high-complexity eye model. A stochastic eye model is developed by measuring several anatomical parameters of the eyes of a large population. The measured biometry is then used to develop a statistical model that can generate an unlimited number of random, biometrically plausible eye models. The parameter distribution of these models is statistically indistinguishable from that of the population from which the model was derived. Despite the availability of stochastic eye models such as those proposed by Rozema et al. (2011) and Rozema et al. (2016), such models have not been considered in the eye-tracking literature.

Three observations motivate an investigation of the influence that variations in eye model parameters have on the predictive power of simulated eye-tracking data. These are the reported interpersonal variation in eye-tracking outcomes, the prevalence of simulated eye-tracking data, and the lack of eye biometry diversity in the simulated data used throughout the eye-tracking literature. Taken together, these findings suggest that eye-tracking methods developed with simulated data may be hampered by the lack of realistic eye biometry distributions in those data. This may have contributed to the interpersonal variations in performance observed in real-world eye-tracking outcomes.

This study investigated the influence that variations in eye model parameters have on the ability of simulated data to predict real-world eye-tracking outcomes. A stochastic eye model was used to generate unique eye models with biometrically plausible distributions of eye model parameters. The real-world experiments performed by Guestrin and Eizenman (2006) and Blignaut (2014) were then simulated using each eye model. These two comparative studies were chosen so that the influence of interpersonal variations in eye model parameters could be investigated for both interpolation-based and model-based gaze estimation algorithms. The main findings of each comparative study were identified, and the simulated outcomes were compared to the real-world experimental outcomes of the comparative studies. A multivariate regression analysis was also performed to investigate the sensitivity of the simulated gaze estimation errors to changes in eye model parameters.

Methods

This section describes the methodology used to simulate the real-world eye-tracking experiments performed by two comparative studies using a stochastic eye model. The section begins with a description of the comparative studies followed by the simulated environment developed to replicate the experiments performed in the comparative studies. The implementation of the stochastic eye model used to generate biometrically viable variations in eye model parameters is then discussed. Finally, the methods used to evaluate the simulated data against the findings of the comparative studies are described together with the methodology of the eye model parameter sensitivity analyses.

Comparative Studies

The experiments conducted by the two comparative studies described in Table 1 were replicated in a simulated environment, as described in the following sections. The table lists the number of participants included in each study, the hardware configuration of the eye-tracker used, and the types of gaze estimation algorithm investigated. The hardware configurations and gaze estimation algorithm categories follow the categories described by Kar and Corcoran (2017). The final column describes the outcomes that the eye-tracking data were used to assess.

Table 1.

Comparative studies.

Guestrin and Eizenman (2006) evaluated the performance of a model-based gaze estimation algorithm for a remote eye-tracker consisting of a single camera and two light sources during head movements. The experiment consisted of five sets of nine fixation targets that four participants were tasked with sequentially directing their gaze towards. Each set of fixations was performed at a different head position with the first fixation procedure performed in the central head position. The fixation procedure was then repeated four times with head movements of 30 mm left and right and then 40 mm forward and backwards.

The model-based gaze estimation algorithm evaluated by Guestrin and Eizenman (2006) involved calibrating the parameters of a three-dimensional eye model. The calibration used the extracted image features and the known positions of the hardware components of the eye-tracker. The orientation of the eye model was then taken as the direction of the user's gaze.

Three parameters were calibrated: the radius of curvature of the cornea (Rac), the distance between the centre of corneal curvature and the anatomical pupil centre (K), and the foveal offset (α). They were calibrated by minimizing the average gaze estimation error across the nine fixation targets in the central head position using a non-linear search algorithm. The search was initialized with the parameter values suggested by Guestrin and Eizenman (2006): Rac = 7.8 mm, K = 4.2 mm, α = (5, 1.5)° and ηac = 1.3375.

The calibrated algorithm was used to estimate the gaze point for each of the 45 simulated fixations. For each participant, the average angular gaze estimation error was then recorded over two sets of targets: the nine fixation targets in the central head position (H1) and the 36 fixation targets in the peripheral head positions (H2).
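The error metric reported for H1 and H2 is an angle subtended at the eye rather than an on-screen distance. The sketch below computes that angle. It assumes a single eye reference point `eye` (for example the corneal centre) expressed in the same frame as the screen points; the text does not specify which point was used. The function names are illustrative.

```python
import math


def angular_error_deg(eye, estimated, target):
    """Angle in degrees between the rays from `eye` to the estimated and target gaze points."""
    u = [e - o for e, o in zip(estimated, eye)]
    v = [t - o for t, o in zip(target, eye)]
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp before acos so rounding cannot push the cosine outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_angle))


def mean_angular_error_deg(eye, estimates, targets):
    """Average angular error over a set of fixations (e.g. the nine H1 targets)."""
    errors = [angular_error_deg(eye, e, t) for e, t in zip(estimates, targets)]
    return sum(errors) / len(errors)


# Hypothetical example: screen 650 mm along +x, estimate displaced by 1 degree in y.
eye = (0.0, 0.0, 0.0)
target = (650.0, 0.0, 0.0)
estimate = (650.0, 650.0 * math.tan(math.radians(1.0)), 0.0)
print(round(angular_error_deg(eye, estimate, target), 6))
```

Averaging the per-fixation angles (rather than averaging on-screen offsets first) matches the description of an average angular error.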

Blignaut (2014) evaluated the influence of a large set of regression functions and calibration configurations on the performance of a binocular interpolation-based gaze estimation algorithm using a remote eye-tracker. Interpolation-based algorithms use extracted image features to fit a mapping function that maps the positions of the image features to the user's estimated gaze position. In this case, the mapping function is a polynomial regression function. The experiment used a remote eye-tracking configuration consisting of one camera and one infrared light source. This configuration recorded the pupil and glint features of 26 participants as they sequentially directed their gaze towards 135 fixation targets.

At each fixation, the pupil-glint vector was calculated as the vector between the pupil and glint centres, normalized by the distance between the pupils of the two eyes. The calculated pupil-glint vectors were used to calibrate twelve different regression functions using each of the six calibration configurations investigated by Blignaut (2014). Calibration involved calculating, with a least squares solver, the regression coefficients that minimized the gaze estimation errors over a set of calibration targets with known positions. The calibration configurations consisted of 5, 9, 14, 18, 23 and 135 (C5, C9, C14, C18, C23 and C135) of the 135 fixation targets. The average gaze estimation error across the 135 fixation targets was then calculated for each of the 72 calibrated regression functions. This error was the angular error between the average estimated gaze point of both eyes and the fixation target. The regression functions that produced the smallest average errors for each calibration configuration were identified and are referred to here as the best functions.
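The calibration step amounts to an ordinary least-squares fit of screen coordinates against pupil-glint vectors. Below is a minimal sketch for one screen coordinate, built on several assumptions:

- The hypothetical four-term polynomial in `terms` stands in for any one of Blignaut's (2014) twelve regression functions, which are not reproduced here.
- The pupil-glint vector is taken as pupil minus glint.
- The vector is normalized by the image-plane distance between the pupils.

For self-containment the normal equations are solved directly. A QR-based solver (MATLAB's backslash operator or `numpy.linalg.lstsq`) would be better conditioned.

```python
import math


def pupil_glint_vector(pupil, glint, pupil_other_eye):
    """Pupil-glint vector (pupil minus glint), normalised by the image distance between pupils."""
    scale = math.dist(pupil, pupil_other_eye)
    return ((pupil[0] - glint[0]) / scale, (pupil[1] - glint[1]) / scale)


def terms(v):
    """Hypothetical regression function: a0 + a1*x + a2*y + a3*x*y."""
    x, y = v
    return [1.0, x, y, x * y]


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_least_squares(vectors, screen_coord):
    """Coefficients minimising the squared error, via the normal equations (A^T A) a = A^T y."""
    rows = [terms(v) for v in vectors]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    aty = [sum(r[i] * y for r, y in zip(rows, screen_coord)) for i in range(k)]
    return solve(ata, aty)


def predict(coeffs, v):
    """Estimated screen coordinate for one normalised pupil-glint vector."""
    return sum(c * t for c, t in zip(coeffs, terms(v)))
```

In practice the same fit would be repeated for the vertical screen coordinate, for each candidate regression function and for each calibration configuration (C5 to C135).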

Stochastic Eye Model

The first version of the aspheric stochastic model of the right eye developed by Rozema et al. (2011), referred to as SyntEyes, was used in this study. The SyntEyes model was derived from the ocular biometry of 127 participants (37 male, 90 female; 123 Caucasian and 4 non-Caucasian). Various ophthalmic imaging instruments were used to capture 39 eye biometry parameters from each participant, which were used to derive the 17 parameters describing the SyntEyes model. The parameters generated by the SyntEyes model are:

- age;
- the anterior corneal keratometry parameters (Kac,M, Kac,J0 and Kac,J45);
- anterior and posterior corneal eccentricity (Eac and Epc);
- the posterior corneal keratometry parameters (Kpc,M, Kpc,J0 and Kpc,J45);
- central corneal thickness (CCT);
- anterior chamber depth (ACD);
- the anterior and posterior lens radii of curvature (Ral and Rpl);
- lens thickness (T);
- axial length (AL);
- lens power (D);
- low-light pupil diameter (Pd).

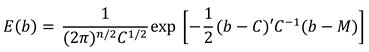

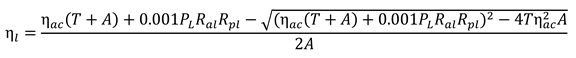

Using Equation 1, a set of random eye models (E), each described by n = 17 normally distributed parameters (b), is generated from a multivariate Gaussian distribution. The distribution is defined by a vector (z) of the mean values of the eye model parameters and a covariance matrix (C) describing the relationships between these parameters. The mean vector (z) and covariance matrix (C) provided by Rozema et al. (2011) were used.
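A minimal sketch of the multivariate Gaussian draw, assuming the common Cholesky approach. Independent standard-normal values u are correlated through the lower-triangular factor L of C, so that z + Lu has mean z and covariance C. The function names are hypothetical. In practice the inputs would be the 17-parameter mean vector and covariance matrix of Rozema et al. (2011), which are not reproduced here.

```python
import math
import random


def cholesky(cov):
    """Lower-triangular L with L @ L.T == cov (cov must be symmetric positive definite)."""
    n = len(cov)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(cov[i][i] - s)
            else:
                low[i][j] = (cov[i][j] - s) / low[j][j]
    return low


def sample_eye_models(mean, cov, count, seed=0):
    """Draw `count` parameter vectors b = mean + L u with u ~ N(0, I), so b ~ N(mean, cov)."""
    rng = random.Random(seed)
    low = cholesky(cov)
    n = len(mean)
    models = []
    for _ in range(count):
        u = [rng.gauss(0.0, 1.0) for _ in range(n)]
        models.append([mean[i] + sum(low[i][k] * u[k] for k in range(i + 1)) for i in range(n)])
    return models
```

For example, `sample_eye_models(z, C, 100)` would return 100 parameter vectors. MATLAB's `mvnrnd(mu, sigma, n)` performs an equivalent draw.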

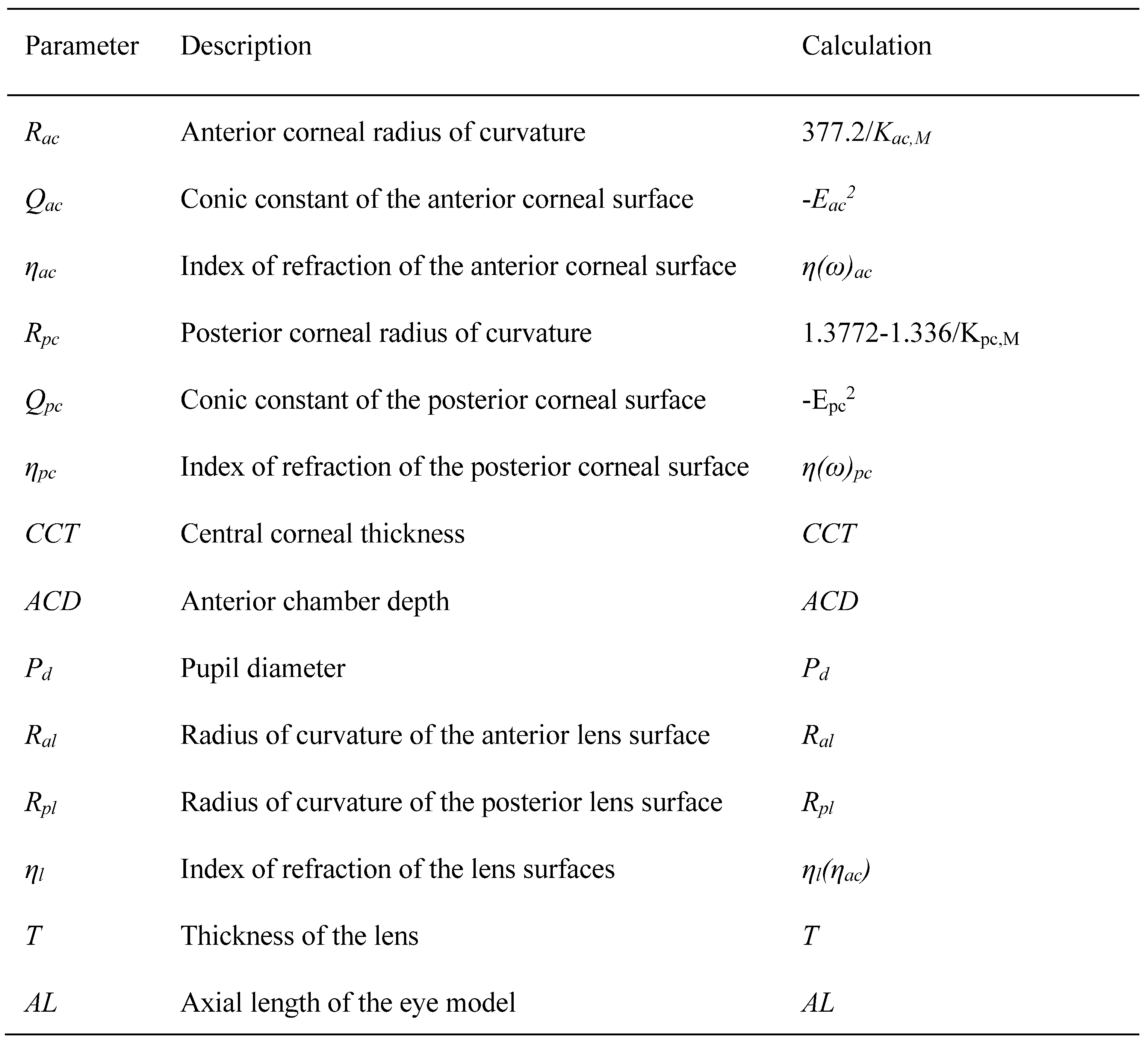

The 17 parameters generated by the SyntEyes model were used to calculate the 14 parameters of a right eye model that includes aspheric corneal surfaces and a circular pupil, as given in Table 2. Note that the corneal shape parameters are not generated directly by the SyntEyes model. They were calculated from the SyntEyes parameters using the equations provided by Rozema et al. (2011). Given the high degree of symmetry between the eyes reported by Durr et al. (2015), the same eye model parameters were used to simulate the left and right eyes of the same user. The eye model was defined relative to the optic axis, with all ocular surfaces and the pupil centred on the optic axis.

Table 2.

The parameters of the eye model generated by the SyntEyes model (Rozema et al., 2011).

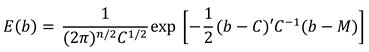

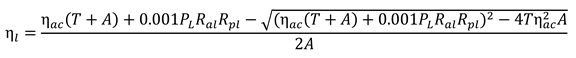

The refractive indices of the lens and cornea were calculated as a function of the incident light wavelength (ω) using Cauchy's equation (Atchison and Smith, 2005), with the chromatic dispersion coefficients used by Aguirre (2019). Rozema et al. (2011) caution that the resulting lens power might not correspond to the lens power parameter (D) generated by SyntEyes. This is because the radii of curvature of the lens surfaces (Ral and Rpl) and the lens thickness (T) are generated randomly. Following the recommendation of Rozema et al. (2011), the refractive index of the lens (ηl) was therefore calculated as a function of the corneal index of refraction. The calculation used a derivation of the thick lens formula, given in Equation 2 with A = T – Ral + Rpl, to ensure that the resulting lens power matches the lens power (D) generated by the model.
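Equation 2 is the closed-form route to the lens index. As a numerical cross-check, the same idea can be sketched with the Gaussian thick-lens power formula and bisection. This is not the paper's Equation 2, and it rests on several assumptions:

- The Cauchy coefficients `a`, `b`, `c` are placeholders, since the dispersion coefficients of Aguirre (2019) are not listed here.
- The lens is assumed to sit between aqueous and vitreous media of given index.
- Radii and thickness are in metres, with the usual sign convention (posterior lens radius negative).
- Bisection stands in for the algebraic rearrangement.

```python
def cauchy_index(wavelength_um, a, b, c=0.0):
    """Cauchy's equation n = a + b / lambda^2 + c / lambda^4 (wavelength in micrometres)."""
    return a + b / wavelength_um ** 2 + c / wavelength_um ** 4


def lens_power(n_lens, r_ant, r_post, thickness, n_aqueous, n_vitreous):
    """Gaussian thick-lens power in dioptres; signed radii and thickness in metres."""
    p1 = (n_lens - n_aqueous) / r_ant
    p2 = (n_vitreous - n_lens) / r_post
    return p1 + p2 - (thickness / n_lens) * p1 * p2


def solve_lens_index(target_power, r_ant, r_post, thickness, n_aqueous, n_vitreous,
                     lo=1.34, hi=1.60, tol=1e-12):
    """Bisection for the lens index in [lo, hi] whose thick-lens power equals target_power."""
    def residual(n):
        return lens_power(n, r_ant, r_post, thickness, n_aqueous, n_vitreous) - target_power

    if residual(lo) * residual(hi) > 0:
        raise ValueError("target power is not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if residual(lo) * residual(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)
```

Bisection only requires the power to change sign across the bracket, which holds for typical biconvex lens geometries. A closed form such as Equation 2 avoids the iteration altogether.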

The SyntEyes parameters describe only the anterior segment of the eye model, but posterior segment parameters are required to perform fixations with the eye model. The posterior segment parameters of the eye model proposed by Aguirre (2019) were therefore used. These include an ellipsoidal retinal surface, the angular offset of the fovea from the optic axis (α), and separate centres of rotation for azimuth (Er,z) and elevation (Er,y) eye rotations. Aguirre (2019) scaled these parameters according to the spherical refraction (SR) of the eye model and the laterality of the simulated eye. Since the SyntEyes model does not generate a spherical refraction value, SR was calculated from the axial length as SR(AL) = (23.58 - AL)/0.299, following the relationship proposed by Atchison (2006).
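The axial-length conversion is a one-line formula. It is written out below as a small helper; the function name is illustrative.

```python
def spherical_refraction(axial_length_mm):
    """Spherical refraction in dioptres from axial length in mm: SR = (23.58 - AL) / 0.299."""
    return (23.58 - axial_length_mm) / 0.299
```

An axial length of 23.58 mm maps to 0 D. Each additional 0.299 mm of axial length shifts the estimate by about -1 D, towards myopia.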

Simulation Procedure

The MATLAB-based gkaModelEye framework (Aguirre, 2019) was used to develop the simulation environment used in this investigation. The framework contains computational models of the various components present in an eye-tracking experiment including an eye, camera, light sources, and screen. The framework uses ray tracing operations to simulate the image features observed by the camera of an eye-tracker such as the glint and apparent pupil for various orientations of the eye model. This framework was preferred over other options such as the et_simul (Bohme et al., 2008) and UnityEyes (Wood et al., 2016) frameworks as it readily facilitates the inclusion of a high-complexity eye model and provides the functionality to configure the simulation environment to replicate the experiments performed by the comparative studies.

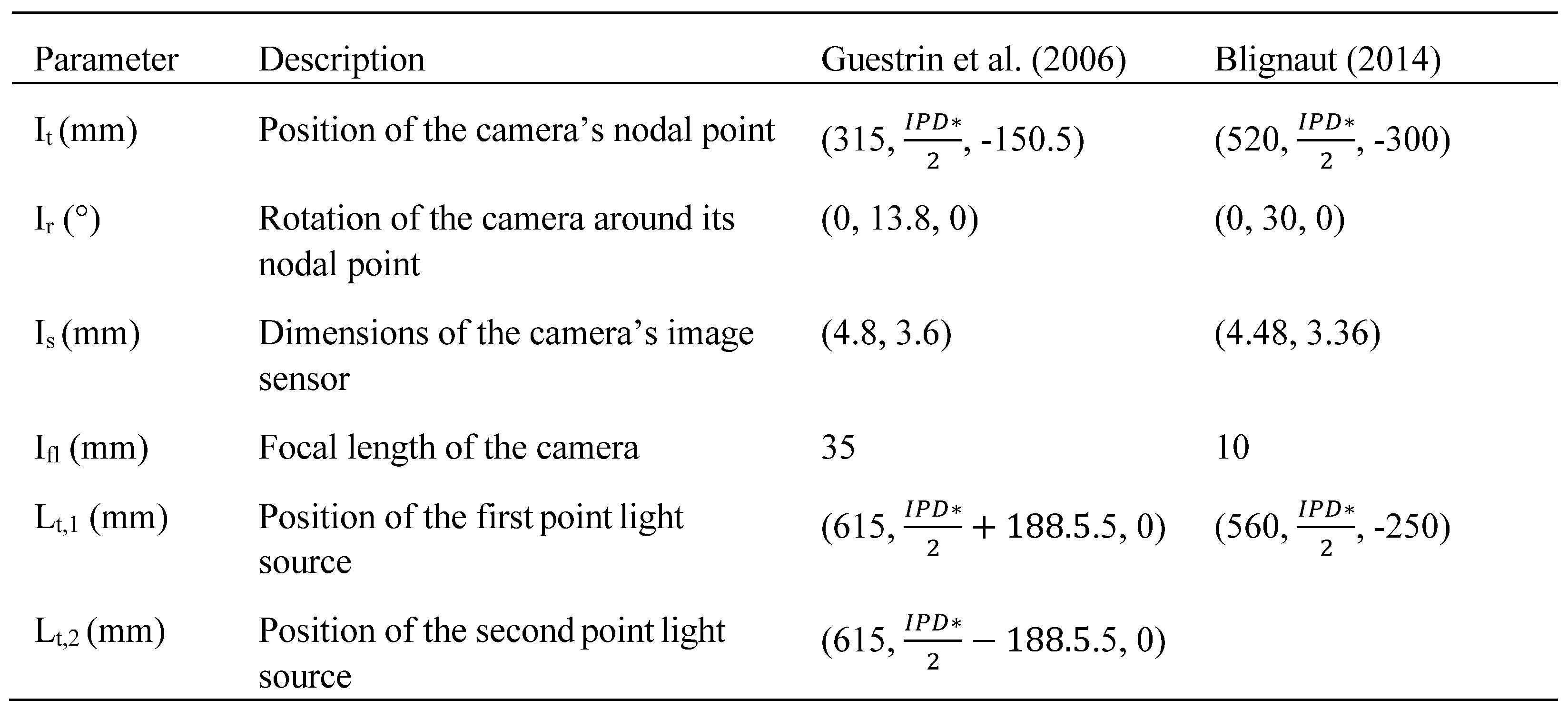

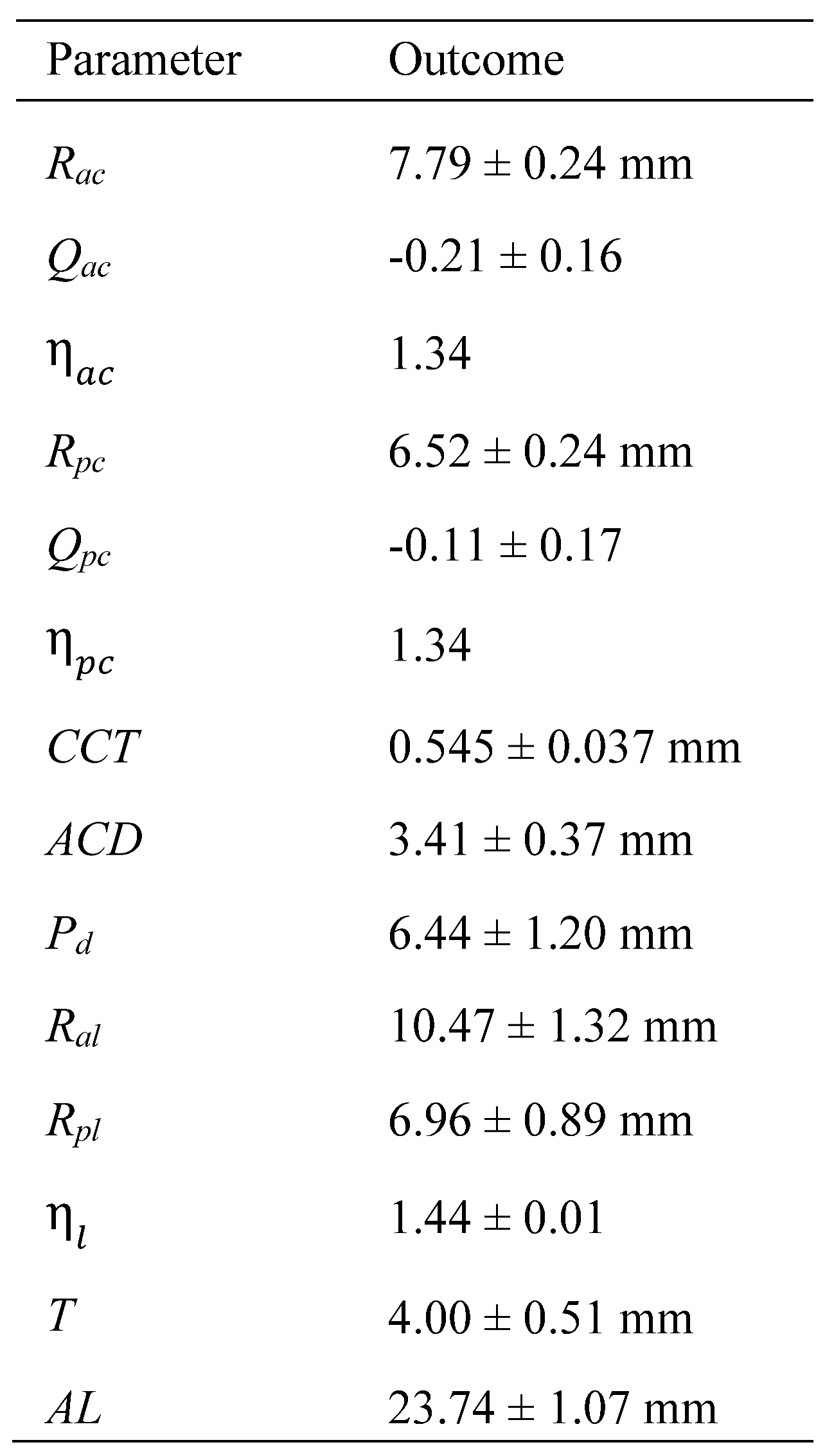

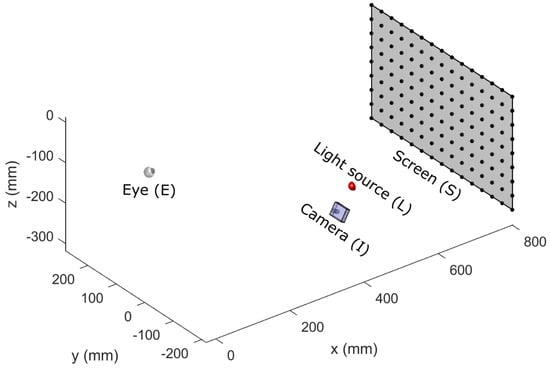

The simulation environment was configured using the nine parameters described in Table 3. The parameter configurations used to replicate the experiments performed by the comparative studies investigated in this study are also given in the table. Figure 1 illustrates the graphical output of the simulation environment configured to replicate the experimental configuration used by Blignaut (2014). The origin of the simulation environment was placed at the intersection of the optic axis with the anterior corneal surface of the unrotated eye. The x-axis was directed along the optic axis of the eye towards the screen. The y-axis was directed nasally for the right eye and temporally for the left eye, and the z-axis was directed superiorly.

Table 3.

The parameters of the simulation environment and the configurations of the environment used to replicate each comparative study.

Figure 1.

An illustration of the simulation environment that was configured to replicate the experiment performed by Blignaut (2014). The black dots on the screen represent the fixation targets that participants were asked to focus their gaze on.

The fixation procedure performed in the eye-tracking experiments was simulated by sequentially fixating the eye model on a series of fixation targets. Ray-tracing operations were then used to simulate the positions of the glint and apparent pupil centres on the image sensor of the camera model. Each fixation was performed by rotating the eye model in accordance with Listing's law around its centres of rotation (Er,z and Er,y), so that its line of sight aligned with the fixation target (Aguirre, 2019). This is a non-trivial operation, because the line of sight is defined through the entrance pupil centre, whose position changes non-linearly as the eye rotates (Nowakowski, 2012). The gkaModelEye framework (Aguirre, 2019) uses a non-linear search algorithm to find the eye orientation that produces a line of sight passing through the fixation target, the entrance pupil centre and the fovea of the eye model.
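A simplified sketch of Listing's law for a single centre of rotation follows. From primary position (optic axis along +x, towards the screen in the frame described above), the eye reaches any target by a single rotation about an axis lying in Listing's plane, which is the y-z plane here. The sketch ignores several features that the gkaModelEye search accounts for:

- the separate azimuth and elevation centres (Er,z, Er,y);
- the foveal offset α;
- the moving entrance pupil.

It therefore aligns the optic axis, not the line of sight, and should be read only as an approximation. The function names are illustrative.

```python
import math


def listing_rotation(target, centre):
    """Axis (unit vector) and angle (degrees) rotating the primary direction +x onto `target`.

    The axis is cross((1, 0, 0), d) = (0, -d_z, d_y), which has no x-component and
    therefore lies in Listing's plane (the y-z plane of this frame).
    """
    d = [t - c for t, c in zip(target, centre)]
    norm = math.sqrt(sum(v * v for v in d))
    dx, dy, dz = (v / norm for v in d)
    s = math.hypot(dy, dz)  # sine of the rotation angle
    angle = math.degrees(math.atan2(s, dx))
    if s == 0.0:
        # Target on the primary axis: the axis is undefined, so return any axis in Listing's plane.
        return (0.0, 0.0, 1.0), angle
    return (0.0, -dz / s, dy / s), angle


def rotate(v, axis, angle_deg):
    """Rodrigues' rotation of vector v about a unit axis by angle_deg degrees."""
    a = math.radians(angle_deg)
    kx, ky, kz = axis
    cross = (ky * v[2] - kz * v[1], kz * v[0] - kx * v[2], kx * v[1] - ky * v[0])
    dot = kx * v[0] + ky * v[1] + kz * v[2]
    return tuple(
        v[i] * math.cos(a) + cross[i] * math.sin(a) + axis[i] * dot * (1 - math.cos(a))
        for i in range(3)
    )
```

Because the rotation axis always lies in Listing's plane, no torsion about the gaze direction is introduced, which is the defining property of Listing's law.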

During each fixation, the position of the apparent pupil boundary was simulated by projecting 30 points, uniformly arranged around the anatomical pupil boundary, through the cornea so that the rays intersect the nodal point of the camera and its image sensor. The apparent pupil centre was then calculated as the centre of an ellipse fitted to the projected boundary points using a least squares solver. The glint centres were simulated by tracing rays from each point light source that reflect off the anterior corneal surface and pass through the nodal point of the camera. Each glint centre was taken as the intersection of such a ray with the image sensor. All ray traces performed in this study were confirmed to intersect the nodal point of the camera within 10%+ mm.
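The text states only that an ellipse was fitted to the 30 projected boundary points by least squares. One simple way to do this, shown here as an assumption rather than the gkaModelEye implementation, is an algebraic conic fit. The centre is then found as the point where the conic's gradient vanishes. Ellipse-specific direct fits are a more robust alternative when the boundary points are noisy. The function names are illustrative.

```python
import math


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ellipse_centre(points):
    """Centre of the conic a u^2 + b uv + c v^2 + d u + e v = 1 fitted by linear least squares."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    # Centre and scale the points: the centroid lies inside the ellipse, so the conic
    # cannot pass through the origin, and unit scale helps conditioning.
    scale = math.sqrt(sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in points) / n)
    rows = []
    for x, y in points:
        u, v = (x - cx) / scale, (y - cy) / scale
        rows.append([u * u, u * v, v * v, u, v])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(5)] for i in range(5)]
    atb = [sum(r[i] for r in rows) for i in range(5)]  # right-hand side is a vector of ones
    a, b, c, d, e = solve(ata, atb)
    det = 4 * a * c - b * b
    if det <= 0:
        raise ValueError("fitted conic is not an ellipse")
    # Gradient is zero at the centre: [2a b; b 2c] [u0 v0]^T = -[d e]^T.
    u0 = (b * e - 2 * c * d) / det
    v0 = (b * d - 2 * a * e) / det
    return cx + scale * u0, cy + scale * v0
```

Fitting an ellipse rather than averaging the boundary points matters because refraction through the cornea can distribute the projected points unevenly, so their centroid need not coincide with the ellipse centre.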

The simulation environment included only one eye model at a time. Binocular eye-tracking data for a single user were generated by performing the fixation procedure twice with the same eye model in different positions. The right eye of a user was simulated by translating the screen, camera, and light source components by half of the interpupillary distance (IPD) along the positive y-axis. Conversely, the left eye was simulated by translating the same components by half of the interpupillary distance along the negative y-axis. A fixed interpupillary distance of 63 mm, the average human interpupillary distance reported by Dodgson (2004), was used in all simulations. The interpupillary distance was kept constant so that variations in eye model parameters were the only influence on gaze estimation outcomes.
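The translation step can be sketched as below. The representation is a hypothetical simplification: each scene component is a named (x, y, z) position in millimetres in the eye-centred frame. The sign convention follows the text: positive y for the right eye and negative y for the left.

```python
IPD_MM = 63.0  # fixed interpupillary distance used in all simulations (Dodgson, 2004)


def place_components_for_eye(components, eye):
    """Translate scene components by +/- IPD/2 along y so one eye model can act as either eye.

    `components` maps a component name (hypothetical labels such as "camera") to an
    (x, y, z) position in millimetres.
    """
    if eye == "right":
        dy = IPD_MM / 2
    elif eye == "left":
        dy = -IPD_MM / 2
    else:
        raise ValueError("eye must be 'right' or 'left'")
    return {name: (x, y + dy, z) for name, (x, y, z) in components.items()}
```

Running the fixation procedure once on each translated scene yields the two monocular datasets that are combined into binocular data.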

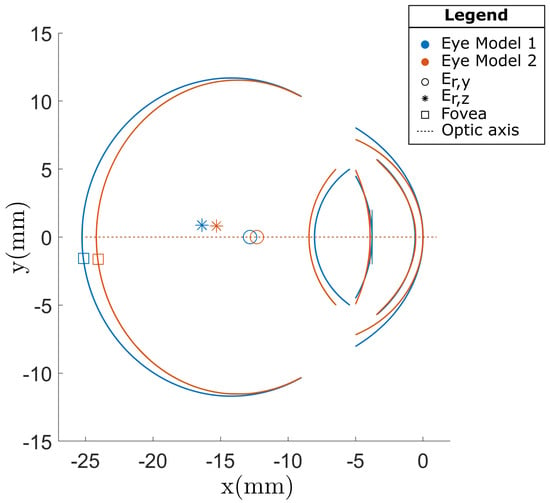

The stochastic eye model was used to generate 100 unique eye models. The fixation procedures performed in the two comparative studies were then simulated using each of the 100 eye models. An example of two superimposed eye models generated with the SyntEyes model is illustrated in Figure 2. The eye model shown in orange has a shorter axial length, a slightly larger anterior chamber depth and a more aspheric cornea than the eye model shown in blue. For the comparative study by Guestrin and Eizenman (2006), the average gaze estimation errors were then calculated at the central (H1) and peripheral (H2) head positions. For the comparative study by Blignaut (2014), they were calculated for the 12 regression functions calibrated using each of the six calibration configurations.

Figure 2.

A comparison of two eye models that were generated using the SyntEyes model. The orange eye model has a smaller axial length, slightly larger anterior chamber depth and a more aspheric cornea than the eye model shown in blue.

Analyses

The distributions of the eye model parameters generated by the SyntEyes model (Rozema et al., 2011) were inspected to verify that the model was correctly implemented in the simulation environment. The simulated pupil and glint centres were then plotted to inspect the influence of eye model parameter variations on the simulated image features.

The simulated gaze estimation errors were compared to the real-world eye-tracking errors reported by Guestrin and Eizenman (2006) and Blignaut (2014). For Guestrin and Eizenman (2006), two-tailed t-tests were performed to compare the mean simulated and real-world gaze estimation errors. A two-tailed F-test was also performed to compare the variances of the simulated and real-world errors. For Blignaut (2014), a one-sample t-test was performed to compare the simulated and real-world gaze estimation errors.
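The test statistics can be sketched as below. The non-integer degrees of freedom reported in the Results (for example t(4.47)) are consistent with Welch's unequal-variance t-test, so that form is assumed here. Likewise, F(99,3) corresponds to `f_ratio(simulated, real_world)` with 100 simulated and 4 real-world values. The function names are illustrative, and only the statistics are computed. P-values would come from the t and F distributions, for example via `scipy.stats.ttest_ind(a, b, equal_var=False)` and `scipy.stats.f`.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def f_ratio(a, b):
    """Variance-ratio F statistic with (len(a) - 1, len(b) - 1) degrees of freedom."""
    return variance(a) / variance(b), (len(a) - 1, len(b) - 1)


def one_sample_t(sample, mu):
    """One-sample t statistic of `sample` against a reference mean `mu` (df = n - 1)."""
    n = len(sample)
    return (mean(sample) - mu) / math.sqrt(variance(sample) / n), n - 1
```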

The simulated data were also investigated to identify any significant relationships between the simulated gaze estimation errors and the 11 eye model parameters given in Table 2, excluding the indices of refraction (η). A multivariate regression analysis was performed using MATLAB (v2019b, MathWorks, USA). The multivariate regression function is given in Equation 3. Its parameter matrix (B) contains the h = 11 parameters of the n = 100 eye models that were used to generate the simulated data. The parameter matrix (B) was converted to z-scores by subtracting the mean of each parameter column from each element in the column and then dividing by the standard deviation of the column. The error matrix (W) consisted of t = 8 columns representing the gaze estimation error categories simulated for the two comparative studies. The error categories were chosen to correspond to the results reported by the comparative studies. Columns l = 1, 2 represent the errors generated at H1 and H2 by simulating the experiment performed by Guestrin and Eizenman (2006). The remaining columns, l = 3 … 8, represent the best functions' errors simulated using each of the six calibration configurations evaluated by Blignaut (2014).
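The column-wise standardization of B can be sketched as follows. The sketch assumes the sample standard deviation (n - 1 denominator), which is also the default of MATLAB's `zscore`. The function name is illustrative.

```python
from statistics import mean, stdev


def zscore_columns(b):
    """Standardise each column of B (rows = eye models, columns = parameters).

    Uses the sample standard deviation (n - 1 denominator).
    """
    columns = list(zip(*b))
    stats = [(mean(col), stdev(col)) for col in columns]
    return [[(value - m) / s for value, (m, s) in zip(row, stats)] for row in b]
```

After standardization every parameter is expressed in standard deviations. This puts regression coefficients for parameters with very different units (for example millimetres and dimensionless asphericity) on a common scale.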

Once the coefficients aq,l are solved, each coefficient describes the sensitivity (m) of the simulated gaze estimation errors in the corresponding error category (Wl) to a one-standard-deviation change in eye model parameter q. The ρ value of each regression coefficient in the resulting multivariate regression model was inspected. This identified the eye model parameters with a significant influence (ρ < .05) on the simulated gaze estimation errors of each error category (l). The coefficients aq,l were then re-estimated using only the significant eye model parameters.
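Solving for the coefficients of one error column Wl amounts to ordinary least squares on the z-scored parameters with an intercept. The sketch below uses illustrative names and solves the normal equations for brevity. It returns the coefficients only. The significance screening described above would additionally require coefficient standard errors and the t distribution, as provided by MATLAB's `fitlm`.

```python
def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_sensitivities(z, w):
    """Ordinary least squares w = a_0 + sum_q a_q * z_q on z-scored parameters.

    Returns [a_0, a_1, ..., a_h]; each a_q is the change in error (e.g. degrees)
    per one-standard-deviation change in parameter q.
    """
    rows = [[1.0] + list(r) for r in z]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atw = [sum(r[i] * wi for r, wi in zip(rows, w)) for i in range(k)]
    return solve(ata, atw)
```

Refitting with only the significant columns of z gives the reduced model described above.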

For each error category (l), a partial regression plot was generated for the constant term against the residuals of the regression model that includes the terms for all the significant eye model parameters. Partial regression plots are commonly used in multivariate regression analyses to show how adding an independent variable affects the residuals of a regression model that includes terms for all the other independent variables (Velleman and Welsch, 1981). A partial regression plot for the constant term of a regression model therefore visualizes the performance of the entire model.

The coefficient of determination (R²) of each regression model was calculated to evaluate the proportion of variance in the simulated gaze estimation errors captured by the significant eye model parameters for each error category (Draper and Smith, 1998). An R² value of one indicates that the significant eye model parameters completely describe the variance in simulated gaze estimation errors, with no influence from the other eye model parameters. Deviations from one indicate the magnitude of contributions from non-significant eye model parameters or of a non-linear relationship between the errors and the eye model parameters.
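For reference, R² can be computed from the observed errors and the regression model's predictions. The sketch assumes the predictions come from a least-squares fit that includes an intercept, for which R² lies between zero and one. The function name is illustrative.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    m = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - m) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot
```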

Results

In this section, the simulated outcomes are evaluated against the experimental findings of the comparative studies, and the relationships between eye model parameters and the simulated eye-tracking outcomes are described.

Eye Model Parameters

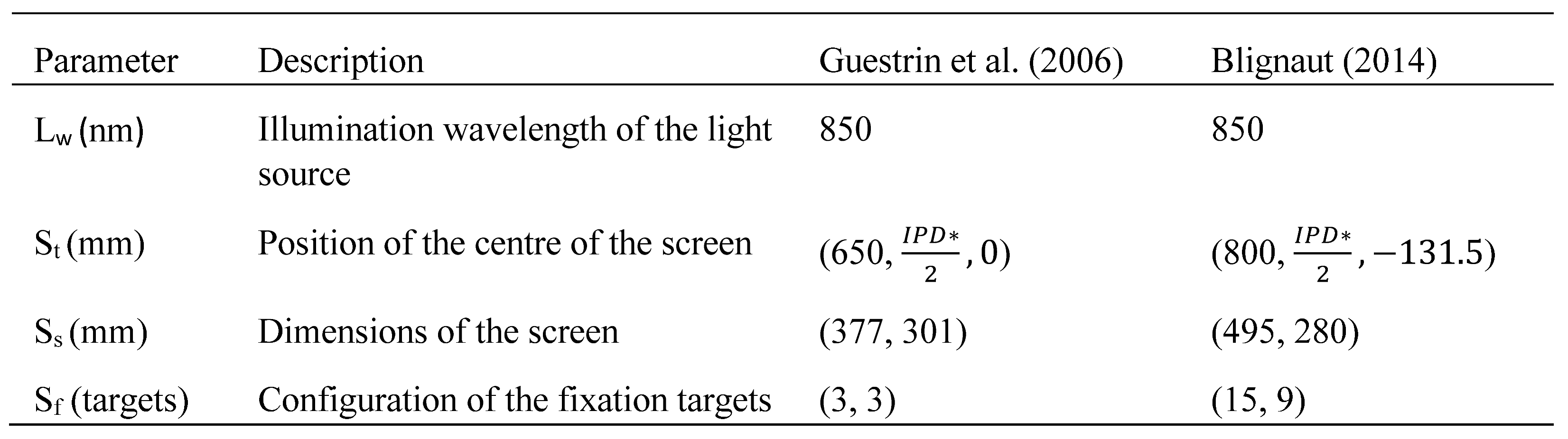

The distributions of the parameters of the 100 eye models generated by the SyntEyes model (Rozema et al., 2011) are given in Table 4. The mean values of the parameter distributions correspond closely to the mean values of the SyntEyes model (Rozema et al., 2011), indicating that the eye model was correctly implemented in the simulation.

Table 4.

The distribution of the eye model parameters of the 100 eye models generated by the SyntEyes model (Rozema et al., 2011).

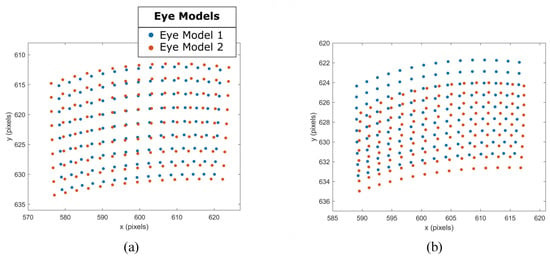

Simulated Image Features

The influence that variations in eye model parameters have on the simulated image features is illustrated in Figure 3. The blue and orange image features were simulated using the correspondingly colored eye models in Figure 2, with each eye model sequentially fixated on the 135 fixation targets in the simulation environment shown in Figure 1.

Figure 3.

The simulated (a) pupil and (b) glint features generated for two eye models.

A clear difference between the simulated pupil and glint centres of the two eye models can be observed. The pupil centre features of Eye Model 2 are more widely spread than those of Eye Model 1. The glint features of Eye Model 2 have a flatter aspect ratio and are translated in the positive y direction relative to those of Eye Model 1.

Guestrin and Eizenman (2006)

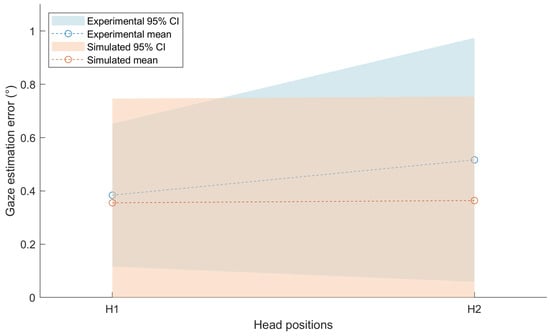

The mean and 95% confidence intervals (CI) of the simulated outcomes generated in this study for the comparative study by Guestrin and Eizenman (2006) are compared to the real-world outcomes at H1 and H2 in Figure 4. The simulated outcomes at both head positions were found to be strongly right-skewed (H1: skewness = 1.86, H2: skewness = 1.84). This indicates that the simulated gaze estimation errors were more strongly concentrated towards small errors than the normal distribution illustrated in Figure 4 suggests. Nevertheless, the simulated errors are reported as a normal distribution to facilitate comparison with the experimental results.
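A minimal sketch of the skewness calculation follows. It assumes the uncorrected moment estimator, which is the default of MATLAB's `skewness`. A positive value indicates a longer right tail, as reported for H1 and H2. The function name is illustrative.

```python
def skewness(values):
    """Sample skewness m3 / m2**1.5 using population (uncorrected) central moments."""
    n = len(values)
    m = sum(values) / n
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    return m3 / m2 ** 1.5
```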

Figure 4.

A comparison of the real-world outcomes of the study performed by Guestrin and Eizenman (2006) and the simulated outcomes achieved in this study.

The simulated mean errors at both head positions (H1: M = 0.36, SD = 20; H2: M = 0.36, SD = 20) were not significantly different from the real-world outcomes (H1: t(4.47) = 0.21, ρ = .84; H2: t(3.47) = 1.79, ρ = .16). The variances in eye-tracking errors between the simulated and real-world outcomes were also not significantly different (H1: F(99,3) = 5.53, ρ = .18; H2: F(99,3) = 1.89, ρ = .67). However, the simulated data was not able to predict the increase in the mean or variance of errors during head movements observed in the real-world data.

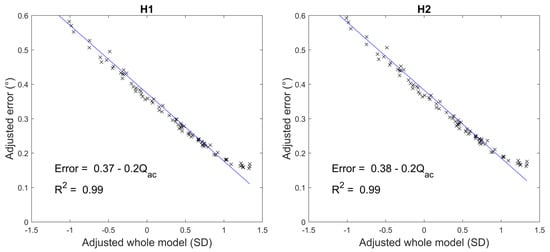

Only the anterior corneal asphericity (Qac) had a statistically significant influence (ρ < .05) on the simulated gaze estimation errors at each head position, as illustrated in Figure 5. A sensitivity of 0.2° error per standard deviation (SD) change in anterior corneal asphericity was found at both head positions. Figure 5 further shows that the variation in errors is well described by variation in anterior corneal asphericity, with R² = .99 at both head positions.

Figure 5.

The influence of the anterior corneal asphericity on the gaze estimation errors generated by the simulation of the real-world experiment performed by Guestrin and Eizenman (2006).

Blignaut (2014)

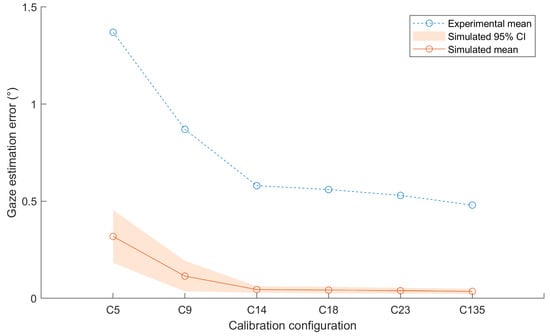

The distributions of the simulated and experimental best-function errors are illustrated in Figure 6. The simulated best-function errors were significantly different from the experimental outcomes for each calibration configuration (t(25) > 5.34, ρ < .05 for all tests).

Figure 6.

A comparison of the real-world outcomes of the study performed by Blignaut (2014) and the simulated outcomes achieved in this study.

As observed in the experimental data, the simulated data predicted a negligible relative decrease in errors when the number of calibration targets was increased beyond 14. When the number of calibration targets was increased from five to nine, the best functions' errors decreased by 0.28° in the real-world data and by 0.20° in the simulated data. All subsequent increases in the number of calibration targets changed the errors by less than 0.1° in both the real-world and simulated data.

The variances of the simulated and real-world outcomes could not be compared, as the variance of the real-world outcomes was not reported by Blignaut (2014). However, Figure 6 shows that the variance in simulated errors decreased with each addition of calibration targets up to C14. This indicates that, as the number of calibration targets increased, the best functions were better able to account for interpersonal variations in image feature distributions. Beyond C14, the best functions almost completely compensated for interpersonal variations in eye model parameters.

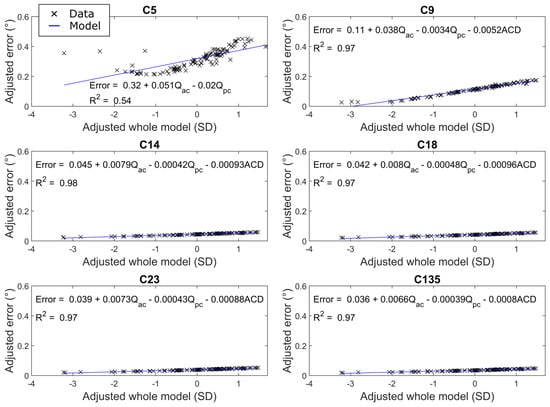

The relationship between eye model parameters and simulated gaze estimation errors given in Figure 7 helps explain how the error variance changed with the number of calibration targets. At each calibration configuration, the anterior corneal asphericity (Qac) had the strongest influence on errors, with smaller but not insignificant contributions from the anterior chamber depth (ACD) and posterior corneal asphericity (Qpc).
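The relative influence of several parameters can be estimated with a multiple linear regression of the errors on all z-scored eye model parameters at once. Each coefficient is then the change in error per SD of one parameter while the others are held fixed. The sketch below is minimal and assumes ordinary least squares solved through the normal equations. The function names and the synthetic Qac, ACD and Qpc values are hypothetical, and the study's exact regression setup is not reproduced here.

```python
import statistics


def standardize(column):
    """z-score a list of values using the sample standard deviation."""
    m, s = statistics.mean(column), statistics.stdev(column)
    return [(v - m) / s for v in column]


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def standardized_regression(predictors, errors):
    """predictors: dict name -> values. Returns (intercept, {name: error per SD})."""
    names = list(predictors)
    cols = [standardize(predictors[k]) for k in names]
    rows = [[1.0] + [col[i] for col in cols] for i in range(len(errors))]
    p = len(names) + 1
    xtx = [[sum(row[i] * row[j] for row in rows) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * y for row, y in zip(rows, errors)) for i in range(p)]
    beta = solve(xtx, xty)  # normal equations: (X^T X) beta = X^T y
    return beta[0], dict(zip(names, beta[1:]))


# Synthetic, hypothetical data: errors depend only on Qac and ACD.
data = {
    "Qac": [-0.40, -0.30, -0.20, -0.10, 0.00, -0.25],
    "ACD": [3.0, 3.4, 2.9, 3.6, 3.1, 3.3],
    "Qpc": [-0.30, -0.20, -0.35, -0.10, -0.25, -0.40],
}
errs = [1.0 + 0.5 * q + 0.1 * a for q, a in zip(data["Qac"], data["ACD"])]
intercept, coefs = standardized_regression(data, errs)
```

For larger problems, a numerically more robust solver such as a QR-based least-squares routine would usually be preferred over forming the normal equations.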

Figure 7.

The influence of eye model parameters on the gaze estimation errors generated by the simulation of the real-world experiment performed by Blignaut (2014).

Discussion

A discussion of the findings of this study is provided in this section. The discussion starts with an overview of the implications of the findings of the study. The limitations of this investigation are then described followed by recommendations for future work.

Implications of Findings

The simulated mean and variance of the errors were statistically similar to the experimental outcomes reported for the model-based gaze estimation algorithm evaluated by Guestrin and Eizenman (2006). This indicates that the stochastic eye model provided a good prediction of how interpersonal variations in the shape of the eye influence the real-world eye-tracking outcomes produced by model-based gaze estimation algorithms. The simulated mean best-function errors were significantly different from the experimental results reported by Blignaut (2014). However, the simulated and real-world interpolation-based gaze estimation errors followed a similar trend as the number of calibration targets increased.

The most influential eye model parameter on the simulated gaze estimation errors in both comparative studies was the anterior corneal asphericity (Qac). The anterior chamber depth (ACD) and posterior corneal asphericity (Qpc) also had a smaller but statistically significant influence on the simulated interpolation-based gaze estimation errors. This indicates that studies aiming to simulate interpersonal variations in the eye-tracking performance of an interpolation- or model-based gaze estimation method should simulate variations in the asphericity of the cornea.
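Corneal asphericity Q is conventionally the conic constant of the corneal surface. For apical radius R, the sag at radial distance r is z(r) = r² / (R + √(R² − (1 + Q) r²)). Q = 0 gives a sphere, and Q < 0 gives a prolate surface that flattens towards the periphery. The sketch below shows that two surfaces with the same apical radius diverge increasingly with r as Q changes. The function name and parameter values are illustrative assumptions, not SyntEyes outputs. This divergence is one plausible reason why Qac can matter for glints and pupil rays that cross the peripheral cornea even when the radius of curvature is held fixed.

```python
import math


def conic_sag(r, radius, q):
    """Sag (mm) at radial distance r (mm) of a conicoid with apical radius `radius` (mm)
    and asphericity (conic constant) `q`."""
    disc = radius ** 2 - (1.0 + q) * r ** 2
    if disc < 0:
        raise ValueError("r lies outside the domain of this conic surface")
    return r ** 2 / (radius + math.sqrt(disc))


# Illustrative values only: same apical radius, spherical vs prolate surface.
R_AC = 7.8  # mm
for r in (1.0, 2.0, 3.0, 4.0, 5.0):
    sphere = conic_sag(r, R_AC, 0.0)
    prolate = conic_sag(r, R_AC, -0.3)
    print(f"r={r:.1f} mm  Q=0: {sphere:.4f}  Q=-0.3: {prolate:.4f}  diff: {sphere - prolate:.4f}")
```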

This finding is alarming considering that variations in anterior corneal asphericity are rarely considered in simulated eye-tracking data (Wood et al., 2016; Kim et al., 2019; Porta et al., 2019). The eye model parameters varied more frequently in the existing literature are the anterior corneal radius of curvature and the foveal offset (Mardanbegi et al., 2018; Dierkes et al., 2018; Petersch and Dierkes, 2022), the first of which was shown in this study to have a negligible influence on simulated eye-tracking outcomes. The foveal offset was not explicitly investigated in this study. However, the foveal offset was scaled relative to the axial length (AL) of the eye, so any significant influence of the foveal offset would have been captured by the AL parameter.

Limitations

The distribution of eye model parameters generated by the SyntEyes model is dependent on the distribution of the eye biometry used to derive the model. The population used to generate the SyntEyes model was slightly skewed towards women and highly skewed towards Caucasians. This may introduce some bias in the simulated eye-tracking outcomes and the findings of this study towards these populations.

The first version of the SyntEyes model is a paraxial model intended to replicate the central visual field of the eye. The maximum viewing angle of the eye model was just under 20° in the simulation of both comparative studies. Studies such as Polans et al. (2015) have shown that paraxial eye models fail to accurately replicate the visual performance of the eye at peripheral viewing angles such as those used in this study. This is because they extrapolate centrally determined eye parameters to the periphery of the ocular surfaces and do not include realistic decentrations and tilts of the ocular surfaces. The risk of extrapolating central eye parameters is illustrated by Eye Model 2 in Figure 2, where the cornea becomes thinner towards its periphery, which is not physiologically accurate (Bergmanson et al., 2019).
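Whether a sampled parameter set produces a cornea that thins towards its periphery can be checked directly from the two conic surfaces. The sketch below is minimal and assumes both corneal surfaces are coaxial conicoids, with no tilt or decentration, as in the paraxial model discussed above. It measures thickness parallel to the optical axis rather than along the surface normal. The function names and parameter values are hypothetical and are not taken from SyntEyes.

```python
import math


def conic_sag(r, radius, q):
    """Sag (mm) of a conicoid with apical radius `radius` (mm) and asphericity `q`."""
    disc = radius ** 2 - (1.0 + q) * r ** 2
    if disc < 0:
        raise ValueError("r lies outside the domain of this conic surface")
    return r ** 2 / (radius + math.sqrt(disc))


def axial_thickness(r, central_thickness, r_ant, q_ant, r_post, q_post):
    """Axial distance (mm) between anterior and posterior corneal surfaces at radius r.
    The posterior vertex sits `central_thickness` behind the anterior vertex."""
    return central_thickness + conic_sag(r, r_post, q_post) - conic_sag(r, r_ant, q_ant)


def thins_towards_periphery(r_max, step=0.1, **surfaces):
    """True if the axial thickness drops below its central value anywhere in (0, r_max]."""
    t0 = axial_thickness(0.0, **surfaces)
    n = int(round(r_max / step))
    return any(axial_thickness(i * step, **surfaces) < t0 for i in range(1, n + 1))


# Hypothetical parameter sets (mm for radii and thickness; Q dimensionless).
steeper_posterior = dict(central_thickness=0.55, r_ant=7.8, q_ant=-0.25, r_post=6.5, q_post=-0.3)
flatter_posterior = dict(central_thickness=0.55, r_ant=7.8, q_ant=-0.25, r_post=8.0, q_post=0.0)
```

A sampled eye for which `thins_towards_periphery` returns True could then be flagged or rejected before ray tracing.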

Rozema et al. (2016) developed the second version of the SyntEyes stochastic eye model. This model was derived from a larger and more diverse population and describes the corneal surfaces with an 8th-order Zernike polynomial. Unfortunately, this patient-specific eye model could not be used in this study because the Zernike coefficients are only provided for the central 6.5 mm of the corneal surfaces. This was not sufficient for simulating eye-tracking outcomes at large viewing angles, as the pupil and glint rays may not intersect the corneal surfaces within this central region.
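Zernike polynomials are defined over a unit disk. A surface described this way over the central 6.5 mm can therefore only be evaluated at a normalized radius ρ = r / 3.25 mm ≤ 1. The sketch below is minimal: it implements the standard Zernike radial polynomial and adds a guard that rejects ray intersection points beyond the fitted zone. The function names are hypothetical. It omits the angular terms and whatever normalization convention the SyntEyes coefficients use.

```python
from math import factorial

FIT_RADIUS_MM = 6.5 / 2  # semi-diameter of the zone over which the coefficients are defined


def zernike_radial(n, m, rho):
    """Standard Zernike radial polynomial R_n^m(rho) for 0 <= rho <= 1."""
    m = abs(m)
    if m > n or (n - m) % 2:
        raise ValueError("n - |m| must be a non-negative even integer")
    if not 0.0 <= rho <= 1.0:
        raise ValueError("rho lies outside the unit disk")
    total = 0.0
    for k in range((n - m) // 2 + 1):
        coef = (-1) ** k * factorial(n - k) / (
            factorial(k) * factorial((n + m) // 2 - k) * factorial((n - m) // 2 - k))
        total += coef * rho ** (n - 2 * k)
    return total


def normalized_radius(r_mm):
    """Map a radial position on the cornea (mm) to rho, rejecting points outside the fit zone."""
    rho = r_mm / FIT_RADIUS_MM
    if rho > 1.0:
        raise ValueError(f"r = {r_mm} mm is outside the central {2 * FIT_RADIUS_MM} mm zone")
    return rho
```

A glint or pupil ray that meets the cornea at, for example, r = 4 mm raises an error here. Extending the surface there would require extrapolating the polynomial, which is exactly the limitation described above.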

Interpersonal variations in the pupil centre position and the translation of the apparent pupil centre during changes in pupil size have been shown to adversely influence the performance of eye-trackers (Choe et al., 2016). Pupil centre shifts were not included in this investigation. It is unclear to what extent shifts in the apparent pupil centre are caused by the corneal optics as the diameter of the anatomical pupil changes, and to what extent they are caused by asymmetrical dilation and constriction of the anatomical pupil.

Future Work

The work presented in this study demonstrated a method to readily include biometrically plausible eye model parameter variations in simulated data using a stochastic eye model. This method has significant potential to increase the range of realistic interpersonal variation contained in synthetic image datasets. Supplementing the training of appearance-based gaze estimation algorithms with a dataset that contains interpersonal variations in eye biometry could significantly improve the outcomes achieved by these algorithms.
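A stochastic eye model generates each synthetic eye by drawing its biometry at random, so that the generated population reflects the variation and correlations of measured eyes. The sketch below illustrates that idea with a multivariate normal distribution and a Cholesky factor. It is not the SyntEyes procedure, whose details should be taken from Rozema et al. (2011). The parameter names, means and covariance are placeholders, not published SyntEyes values, and the function names are hypothetical.

```python
import math
import random


def cholesky(cov):
    """Lower-triangular L with L @ L.T == cov (cov must be symmetric positive definite)."""
    n = len(cov)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                d = cov[i][i] - s
                if d <= 0:
                    raise ValueError("covariance matrix is not positive definite")
                L[i][j] = math.sqrt(d)
            else:
                L[i][j] = (cov[i][j] - s) / L[j][j]
    return L


def sample_eyes(names, mean, cov, n, seed=0):
    """Draw n correlated parameter sets from N(mean, cov) as dicts keyed by parameter name."""
    rng = random.Random(seed)
    L = cholesky(cov)
    eyes = []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in names]  # independent standard normals
        x = [mu + sum(L[i][k] * z[k] for k in range(i + 1)) for i, mu in enumerate(mean)]
        eyes.append(dict(zip(names, x)))
    return eyes


# Placeholder statistics for illustration only (not SyntEyes values).
NAMES = ["R_ac_mm", "Q_ac", "ACD_mm"]
MEAN = [7.8, -0.25, 3.1]
COV = [[0.0625, 0.005, 0.01],
       [0.005, 0.01, 0.0],
       [0.01, 0.0, 0.09]]
```

Each sampled dict could then parameterize one simulated eye or rendered head model. Because a normal distribution has unbounded tails, samples falling outside physiological ranges would normally need to be rejected.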

The realism of simulated eye-tracking outcomes could be further improved by using a higher-complexity stochastic eye model that includes patient-specific corneal surface parameters as well as ocular decentrations and tilts. Once a higher-complexity stochastic eye model becomes available, the multivariate regression analysis performed in this study can be repeated to gain further insight into how interpersonal variations in the anatomy of the eye influence eye-tracking outcomes. Improving the complexity of the eye model could also improve the similarity of the simulated outcomes to the experimental outcomes generated by interpolation-based gaze estimation algorithms.

Ethics and Conflict of Interest

The author(s) declare(s) that the contents of the article are in agreement with the ethics described in http://biblio.unibe.ch/portale/elibrary/BOP/jemr/ethics.html and that there is no conflict of interest regarding the publication of this paper.

Acknowledgments

Research reported in this publication was supported by the South African Medical Research Council under a Self-Initiated Research Grant. The views and opinions expressed are those of the authors and do not necessarily represent the official views of the SA MRC. This work is also based on research supported in part by the National Research Foundation of South Africa (Grant Number: 113337).

References

- Aguirre, G. K. 2019. A model of the entrance pupil of the human eye. Scientific Reports 9, 1: 9360. [Google Scholar] [CrossRef] [PubMed]

- Atchison, D. A., and G. Smith. 2005. Chromatic dispersions of the ocular media of human eyes. Journal of the Optical Society of America A 22, 1: 29–37. [Google Scholar]

- Atchison, D. A. 2006. Optical models for human myopic eyes. Vision Research 46, 14: 2236–2250. [Google Scholar] [CrossRef]

- Bergmanson, J., A. Burns, and M. Walker. 2019. Anatomical explanation for the central-peripheral thickness difference in human corneas. Investigative Ophthalmology & Visual Science 60, 9: 4652–4652. [Google Scholar]

- Blignaut, P., and D. Wium. 2013. The effect of mapping function on the accuracy of a video-based eye tracker. Proceedings of the 2013 conference on eye tracking South Africa; pp. 39–46. [Google Scholar] [CrossRef]

- Blignaut, P. 2014. Mapping the pupil-glint vector to gaze coordinates in a simple video-based eye tracker. Journal of Eye Movement Research 7, 1. [Google Scholar] [CrossRef]

- Blignaut, P. 2016. Idiosyncratic feature-based gaze mapping. Journal of Eye Movement Research 9, 3. [Google Scholar] [CrossRef]

- Böhme, M., M. Dorr, M. Graw, T. Martinetz, and E. Barth. 2008. A software framework for simulating eye trackers. Proceedings of the 2008 symposium on Eye tracking research & applications; pp. 251–258. [Google Scholar] [CrossRef]

- Choe, K. W., R. Blake, and S. H. Lee. 2016. Pupil size dynamics during fixation impact the accuracy and precision of video-based gaze estimation. Vision Research 118: 48–59. [Google Scholar]

- Dierkes, K., M. Kassner, and A. Bulling. 2018. A novel approach to single camera, glint-free 3D eye model fitting including corneal refraction. Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications, June; pp. 1–9. [Google Scholar] [CrossRef]

- Dodgson, N. A. 2004. Variation and extrema of human interpupillary distance. Stereoscopic displays and virtual reality systems XI, May, vol. 5291, pp. 36–46. [Google Scholar] [CrossRef]

- Draper, N. R., and H. Smith. 1998. Applied regression analysis, 326. [Google Scholar]

- Durr, G. M., E. Auvinet, J. Ong, J. Meunier, and I. Brunette. 2015. Corneal shape, volume, and interocular symmetry: parameters to optimize the design of biosynthetic corneal substitutes. Investigative Ophthalmology & Visual Science 56, 8: 4275–4282. [Google Scholar] [CrossRef]

- Guestrin, E. D., and M. Eizenman. 2006. General theory of remote gaze estimation using the pupil center and corneal reflections. IEEE Transactions on Biomedical Engineering 53, 6: 1124–1133. [Google Scholar] [CrossRef]

- Hansen, D. W., J. S. Agustin, and A. Villanueva. 2010. Homography normalization for robust gaze estimation in uncalibrated setups. Proceedings of the 2010 symposium on eye-tracking research & applications; pp. 13–20. [Google Scholar] [CrossRef]

- Holmqvist, K. 2017. Common predictors of accuracy, precision and data loss in 12 eye-trackers. In The 7th Scandinavian Workshop on Eye Tracking. pp. 1–25. [Google Scholar] [CrossRef]

- Huang, J. B., Q. Cai, Z. Liu, N. Ahuja, and Z. Zhang. 2014. Towards accurate and robust cross-ratio based gaze trackers through learning from simulation. Proceedings of the Symposium on Eye Tracking Research and Applications; pp. 75–82. [Google Scholar] [CrossRef]

- Kar, A., and P. Corcoran. 2017. A review and analysis of eye-gaze estimation systems, algorithms and performance evaluation methods in consumer platforms. IEEE Access 5: 16495–16519. Available online: https://ieeexplore.ieee.org/document/8003267.

- Kim, J., M. Stengel, A. Majercik, S. De Mello, D. Dunn, S. Laine, M. McGuire, and D. Luebke. 2019. Nvgaze: An anatomically-informed dataset for low-latency, near-eye gaze estimation. Proceedings of the 2019 CHI conference on human factors in computing systems; pp. 1–12. [Google Scholar] [CrossRef]

- Lai, C. C., S. W. Shih, and Y. P. Hung. 2014. Hybrid method for 3-D gaze tracking using glint and contour features. IEEE Transactions on Circuits and Systems for Video Technology 25, 1: 24–37. [Google Scholar] [CrossRef]

- Mardanbegi, D., and D. W. Hansen. 2012. Parallax error in the monocular head-mounted eye trackers. Proceedings of the 2012 ACM Conference on Ubiquitous Computing, September; pp. 689–694. [Google Scholar] [CrossRef]

- Nair, N., R. Kothari, A. K. Chaudhary, Z. Yang, G. J. Diaz, J. B. Pelz, and R. J. Bailey. 2020. RIT-Eyes: Rendering of near-eye images for eye-tracking applications. ACM Symposium on Applied Perception 2020, September; pp. 1–9. [Google Scholar] [CrossRef]

- Narcizo, F. B., F. E. D. Dos Santos, and D. W. Hansen. 2021. High-accuracy gaze estimation for interpolation-based eye-tracking methods. Vision 5, 3: 41. [Google Scholar] [CrossRef] [PubMed]

- Nowakowski, M., M. Sheehan, D. Neal, and A. V. Goncharov. 2012. Investigation of the isoplanatic patch and wavefront aberration along the pupillary axis compared to the line of sight in the eye. Biomedical Optics Express 3, 2: 240–258. [Google Scholar] [CrossRef]

- Petersch, B., and K. Dierkes. 2022. Gaze-angle dependency of pupil-size measurements in head-mounted eye tracking. Behavior Research Methods 54, 2: 763–779. [Google Scholar] [CrossRef] [PubMed]

- Polans, J., B. Jaeken, R. P. McNabb, P. Artal, and J. A. Izatt. 2015. Wide-field optical model of the human eye with asymmetrically tilted and decentered lens that reproduces measured ocular aberrations. Optica 2, 2: 124–134. [Google Scholar] [CrossRef]

- Porta, S., B. Bossavit, R. Cabeza, A. Larumbe-Bergera, G. Garde, and A. Villanueva. 2019. U2Eyes: A binocular dataset for eye tracking and gaze estimation. Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops; pp. 3660–3664. [Google Scholar]

- Rozema, J. J., D. A. Atchison, and M. J. Tassignon. 2011. Statistical eye model for normal eyes. Investigative Ophthalmology & Visual Science 52, 7: 4525–4533. [Google Scholar] [CrossRef]

- Rozema, J. J., P. Rodriguez, R. Navarro, and M. J. Tassignon. 2016. SyntEyes: a higher-order statistical eye model for healthy eyes. Investigative Ophthalmology & Visual Science 57, 2: 683–691. [Google Scholar] [CrossRef]

- Świrski, L., and N. Dodgson. 2014. Rendering synthetic ground truth images for eye tracker evaluation. Proceedings of the Symposium on Eye Tracking Research and Applications; pp. 219–222. [Google Scholar] [CrossRef]

- Szczęsna, D. H., and H. T. Kasprzak. 2006. The modelling of the influence of a corneal geometry on the pupil image of the human eye. Optik 117, 7: 341–347. [Google Scholar] [CrossRef]

- Velleman, P. F., and R. E. Welsch. 1981. Efficient computing of regression diagnostics. The American Statistician 35, 4: 234–242. Available online: https://doi.org/10.1080/00031305.1981.10479362. [CrossRef]

- Villanueva, A., and R. Cabeza. 2008. A novel gaze estimation system with one calibration point. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics) 38, 4: 1123–1138. [Google Scholar] [CrossRef] [PubMed]

- Wood, E., T. Baltrušaitis, L. P. Morency, P. Robinson, and A. Bulling. 2016. Learning an appearance-based gaze estimator from one million synthesised images. Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications; pp. 131–138. [Google Scholar] [CrossRef]

Copyright © 2023. This article is licensed under a Creative Commons Attribution 4.0 International License.