Abstract

Uncooled infrared imaging systems have significant potential for industrial hazardous gas leak detection. However, the narrowband filters used to match gas spectral absorption peaks reduce the incident energy captured by uncooled infrared cameras. The resulting images contain a mixture of fixed pattern noise and Gaussian noise, which existing uncooled infrared denoising methods struggle to remove, severely hindering the extraction and identification of actual gas leak plumes. This paper presents a UNet-structured dual-encoder denoising network designed specifically for narrowband uncooled infrared images. Based on the distinct characteristics of Gaussian random noise and row–column stripe noise, we developed a basic scale residual attention (BSRA) encoder and an enlarged scale residual attention (ESRA) encoder. The two encoder branches perceive and encode noise over different receptive fields, and their noise features are fused across the two scales. The fused features are then passed to the decoder for reconstruction, yielding high-quality infrared images. Experimental results show that our method effectively removes composite noise and achieves the best results among the compared methods in both objective metrics and subjective evaluations. The method significantly improves the signal-to-noise ratio of narrowband uncooled infrared images and shows substantial application potential in fields such as industrial hazardous gas detection, remote sensing imaging, and medical imaging.

1. Introduction

Infrared imaging technology is widely used in military detection, industrial exploration, civilian temperature measurement, and other applications, and in recent years it has been increasingly applied to industrial gas leak detection [1]. However, correcting the inherent pattern noise of infrared focal plane arrays has always been a critical issue [2], particularly because uncooled infrared detectors have relatively low sensitivity. In gas leak detection, factors such as the use of narrow bandpass filters further increase the severity of the temporal and spatial stripe noise in thermal images, limiting their use in many scenarios. Infrared image denoising, which reduces noise in uncooled infrared images and improves their signal-to-noise ratio (SNR), has therefore become an important research direction.

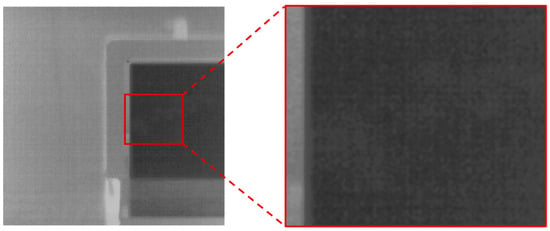

Infrared image denoising is a fundamental task in infrared image processing, aiming to eliminate noise components from infrared images. Uncooled infrared imaging technology has now reached maturity. To correct detector non-uniformity, most non-uniformity noise can be effectively removed by combining prior laboratory blackbody calibration with dynamic correction of bias drift using an internal baffle. However, this approach does not account for the calibration parameter shifts introduced when a narrowband filter is integrated into the system. In the residual noise of narrowband uncooled infrared images after this initial correction, the noise types that mainly reduce the SNR are Gaussian random noise and spatiotemporal line stripe noise [3,4,5,6], both of which can interfere with the detection of weak gas targets (Figure 1). Because stripe noise accounted for a relatively high proportion of the noise in early uncooled infrared cameras, infrared image denoising research has largely concentrated on stripe noise removal, using spatial filters [7], transform-domain filtering [8], and targeted modeling based on noise characteristics [9,10,11], with varying degrees of effectiveness.

Figure 1.

Noise characterization of narrowband infrared images.

Few methods remove temporal and spatial noise from infrared images simultaneously, mainly because modeling both noise types together is difficult. Hu et al. made progress using deep learning [12], showing that mature visible-light denoising networks can also learn and eliminate complex noise in infrared images. Among deep learning-based denoisers, the DnCNN network introduced by K. Zhang et al. [13] outperformed traditional methods such as BM3D [14] and WNNM [15]. A UNet built from residual CNN blocks can learn noise characteristics and remove complex noise [16]; however, the limited receptive field of CNNs constrains their denoising ability. With the rise of Transformers in computer vision, self-attention has been integrated into image denoising, producing numerous image restoration networks that have raised denoising benchmarks [17,18,19]. However, because visible-light denoising methods were not designed for mixed Gaussian and stripe noise, their performance on this mixed noise remains insufficient.

To address these challenges, we propose DER-UNet, an infrared image denoising network with a dual-encoder UNet structure that incorporates residual attention at two scales. Building on the basic residual UNet denoising network, DER-UNet adds an enlarged-scale attention encoder branch to better learn the row–column features of stripe noise. The main structure of DER-UNet comprises a UNet built on a basic scale residual attention (BSRA) encoder–decoder, an enlarged scale residual attention (ESRA) encoder branch, and an encoding feature fusion module. The features extracted by the two encoder branches are combined and passed to the decoder layers through a residual channel attention group (RCAG) fusion structure that refines the effective features. The experimental results show that DER-UNet achieves the best uncooled infrared image denoising results among the compared methods, and ablation experiments confirm the effectiveness of the network structure.

The contributions of the proposed method are summarized as follows:

- (1) To address the difference in characteristic scale between Gaussian noise and stripe noise, we designed a dual-branch encoder UNet structure, based on the conventional residual UNet, that captures the features of both noise types. We further employ a feature fusion structure based on the RCAG module to integrate the two sets of noise features. The resulting network is concise and achieves the best denoising results among the compared methods.

- (2) We propose an enlarged-scale encoder branch designed specifically to learn the characteristics of stripe noise. To reduce the computational burden of this branch, we use depthwise separable convolutions in place of standard convolutions, significantly lowering the computational load while maintaining effective learning capability.

2. Related Work

2.1. Infrared Image Denoising Technology

Infrared image denoising aims to remove various types of noise from infrared images and improve their SNR. Traditional mixed-noise denoising methods mainly model the image characteristics of stripe noise and then apply image processing techniques that extract and remove the stripe component.

Song et al. proposed a method based on the expectation–maximization (EM) framework that splits the joint stripe and noise removal problem into two independent sub-problems [20]. Specifically, the conditional expectation of the true image is computed from the observation and the stripes estimated in the previous iteration, and the stripes are then estimated as the column mean of the residual image, which yields their maximum likelihood estimate (MLE). He et al. introduced spatial priors of stripes into a mixed denoising model and, for the first time, incorporated frequency-domain priors into plug-and-play technology, allowing multi-domain feature constraints to reinforce each other in stripe removal [21]. In another study [22], wavelet decomposition was used to extract the approximation and vertical components of an image containing stripe noise; the approximation component was denoised through parameter estimation, the vertical component was denoised with guided filtering, and the denoised image was obtained by wavelet reconstruction.

With the development of deep learning, neural-network-based denoising of complex infrared images has made significant progress, and several networks with strong feature-learning and image restoration capabilities have been adapted to infrared image denoising. Li et al. adopted a CNN architecture with second-order attention mechanisms and region-level non-local modules to improve image feature extraction and fit the noise residual [3]. Yang et al. proposed an infrared image denoising method based on a multi-level feature attention network (MLFAN) with adversarial learning [4]. Its multi-level feature attention block (MLFAB) extracts features at different levels and connects them through feature fusion to enhance the details of the reconstructed image, while a discriminator provides an adversarial learning loss. Hu et al. proposed an infrared thermal image denoising method based on residual learning and a symmetric multi-scale (SM) encoder–decoder sampling structure (SMEDS) [12]. The U-shaped SMEDS extracts SM information from different layers and focuses on decoding recovery. At present, infrared image denoising networks are primarily CNN-based, and few designs exploit Transformer structures.

2.2. Dual-Encoder UNet

UNet first appeared in medical image segmentation [23] and has since become a fundamental structure in image denoising owing to its strong encoding and decoding capabilities. Dual-encoder designs have been studied in various domains, such as semantic segmentation, saliency detection, image denoising, image enhancement, and image super-resolution [24]. A dual-encoder UNet extends the conventional UNet by adding a second encoder branch on the left (contracting) side, giving the network multiple feature pathways for better exploration of target features.

Fu et al. designed DEAU-UNet [25], a dual-encoder feature attention network for medical image segmentation that uses two encoders to extract macro- and micro-features independently and then fuses them with feature attention. This enables the network to recognize macro-features well and noticeably improves its handling of micro-features. Liang et al. designed N-Net, a medical image segmentation network built on a dual-encoder UNet to increase network depth and strengthen feature extraction [26]. Channel-level global features were obtained by adding Squeeze-and-Excitation (SE) modules to the dual-encoder model, and full-size skip connections promoted the fusion of low-level details with high-level semantic information. Li et al. proposed the Efficient Residual Double-Coding UNet (ERDUnet), another dual-encoder UNet for medical image segmentation [27], which improves feature extraction efficiency by extracting local features and global continuity information simultaneously in its encoder module. Yao et al. proposed DEUNet, a dual-encoder UNet for high dynamic range (HDR) image denoising and reconstruction [28], which learns brightness and texture information through two feature extraction branches. The two branches interact through spatial feature transformation, making full use of multi-scale information at different image levels. The network also includes an additional decoding network that fuses the brightness and texture information, as well as a weighting network that selectively retains the most useful information.

2.3. Multi-Scale Attention

In CNN-based networks, the receptive field depends on the convolution kernel size and the network depth. Because denoising networks typically use small 3 × 3 kernels to balance feature extraction effectiveness against computational load, and are usually limited in depth, they perceive only local regions of the input image. Moreover, perception ability decreases as the receptive field expands. Multi-scale attention mechanisms have been developed to enlarge the receptive field of CNNs, with progress reported in object detection [29], image dehazing [30], and image super-resolution [31].
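To make the dependence on kernel size and depth concrete, the short sketch below computes the theoretical receptive field of a chain of convolutions with the standard recurrence (each layer adds (k − 1) times the cumulative stride). The function name and the layer counts in the usage lines are hypothetical and are not taken from any of the cited networks.

```python
def receptive_field(kernel_sizes, strides=None):
    """Theoretical receptive field (in input pixels) of stacked conv layers.

    Recurrence: rf <- rf + (k - 1) * jump, jump <- jump * stride,
    where `jump` is the cumulative stride seen by the current layer.
    """
    if strides is None:
        strides = [1] * len(kernel_sizes)
    rf, jump = 1, 1
    for k, s in zip(kernel_sizes, strides):
        rf += (k - 1) * jump
        jump *= s
    return rf


# Hypothetical example: eight stride-1 layers with 3 x 3 vs. 7 x 7 kernels.
print(receptive_field([3] * 8))  # 17
print(receptive_field([7] * 8))  # 49
```

Even the 7 × 7 stack covers only a small fraction of a 640-pixel image row, which helps explain why row- or column-long structures are hard to perceive with purely local kernels; strided downsampling grows the field faster (for instance, `receptive_field([3, 3], [2, 1])` gives 7 rather than 5).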

In image denoising, the Multi-Scale Residual Dense Cascade UNet (MCU-Net) connects the UNet encoder and decoder with Multi-Scale Residual Dense Blocks (MRDBs) [32]. Compared with plain skip connections, these MRDB block connections adaptively transform the encoder features before passing them to the decoder. Gou et al. proposed a multi-scale adaptive network (MSANet) for single-image denoising that exploits both intra-scale features and cross-scale complementarity [33]: an adaptive multi-scale block (AMB) enlarges the receptive field and aggregates multi-scale information, while an Adaptive Fusion Block (AFuB) fuses multi-scale features from coarse to fine. Thakur et al. proposed a dual-path blind Gaussian denoising network [34], in which one path uses a Multi-Scale Pixel Attention (MSPA) block and the other a Multi-Scale Feature Extraction (MSFE) block.

3. Dual-Domain Perception Denoising Network

3.1. Narrowband Uncooled Infrared Noise Model

The noise components in uncooled infrared images primarily consist of temporal Gaussian noise and spatiotemporal striping noise. Upon the introduction of a narrowband filter, the image signal is diminished, leading to a further reduction in the image signal-to-noise ratio. Considering these two types of noise as additive noise, the degraded image $Y$ can be expressed as

$$Y = X + N_G + N_S$$

where $X$ is a noise-free clean image, $N_G$ denotes additive white Gaussian noise, and $N_S$ denotes the stripe noise.
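The additive model above (degraded image = clean image + white Gaussian noise + stripe noise) can be used to synthesize training pairs. The sketch below is one minimal way to do so, assuming that stripe noise is a per-column offset plus a per-row offset, each drawn once per image from a zero-mean Gaussian; the function name and all noise levels are hypothetical placeholders, not the settings used in this work.

```python
import numpy as np


def add_mixed_noise(clean, sigma_g=0.02, sigma_col=0.01, sigma_row=0.005, seed=None):
    """Return (noisy, gaussian_noise, stripe_noise) for a 2-D image.

    Assumed model: noisy = clean + gaussian_noise + stripe_noise, where the
    stripe term is a column offset plus a row offset (row-column stripes).
    """
    rng = np.random.default_rng(seed)
    h, w = clean.shape
    gaussian_noise = rng.normal(0.0, sigma_g, size=(h, w))  # independent per pixel
    col_offsets = rng.normal(0.0, sigma_col, size=(1, w))   # one value per column
    row_offsets = rng.normal(0.0, sigma_row, size=(h, 1))   # one value per row
    stripe_noise = col_offsets + row_offsets                # broadcasts to (h, w)
    noisy = clean + gaussian_noise + stripe_noise
    return noisy, gaussian_noise, stripe_noise


# Usage on a flat 640 x 512 test frame (values in [0, 1]).
clean = np.full((512, 640), 0.5)
noisy, n_g, n_s = add_mixed_noise(clean, seed=0)
print(noisy.shape)  # (512, 640)
```

Because the column term is shared by every pixel in a column, the stripe component is perfectly correlated along columns (and likewise along rows), whereas the Gaussian term is independent from pixel to pixel; this difference in spatial scale is what a two-scale encoder design is meant to exploit. Clipping to the valid intensity range, if needed, is left out of the sketch.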

Compared with Gaussian noise alone, the composite noise in uncooled infrared images is more complex, and its two components act at different scales: Gaussian noise is independent from pixel to pixel, whereas stripe noise is correlated along entire rows or columns. To correct this complex noise while effectively preserving weak gas plume signals, we exploit the learning and fitting capabilities of neural networks to remove the composite noise.

3.2. Dual-Branch Encoder UNet Network Structure

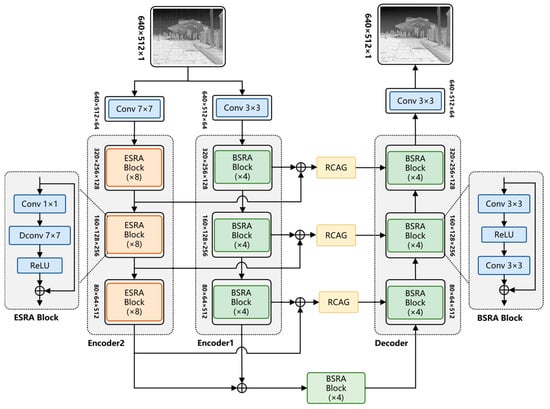

The proposed DER-UNet network is designed based on a dual-encoder UNet architecture that consists of two encoder branches with different scales and one decoder branch, as shown in Figure 2. To address the problem of different feature scales for Gaussian noise and stripe noise, two types of convolutional encoder branches with different scales are designed: the BSRA encoder for learning the features of Gaussian noise and the ESRA encoder for learning the features of stripe noise. The features obtained from the two encoder branches are combined and connected to the decoder layer through the feature fusion connection structure. The feature fusion connection structure can further fuse the features obtained by the encoders and, to some extent, predict and restore the image, thereby enhancing the denoising effect.

Figure 2.

Architecture of DER-UNet.

The detailed structure of the DER-UNet network is as follows:

- (1)

- Three-layer Residual UNet Structure: The basic structure of the network is a three-layer downsampling residual UNet network with a BSRA encoder and decoder designed as symmetrical structures. The BSRA encoder is based on a 3 × 3 residual convolution kernel, as shown in Figure 2, with each layer of the encoder structure stacking four layers of BSRA blocks. The tensors obtained at each layer of the two encoder branches are of the same scale, with downsampling and upsampling designs between the layers. The numbers of feature channels from top to bottom are 64, 128, and 256, respectively. Upon obtaining the features from the two encoders at each layer, they are added together and sent to the corresponding layer of the decoder.

- (2)

- ESRA Encoder: To effectively learn the large-scale features of stripe noise, an Enlarge Scale Attention encoder branch is designed using a 7 × 7 convolution kernel to construct the ESRA blocks. Depthwise separable convolution is used instead of conventional convolution to reduce the computational load of the large-sized convolutional kernel, as shown in Figure 2. The convolution structure of each basic module contains depthwise separable convolution layers and ReLU layers, with the input connected to the output through a residual connection.

- (3)

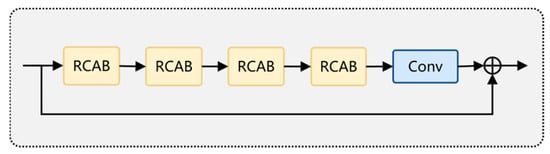

- Feature Fusion Connection Module: Further fusion processing is required because a difference exists in the scale of the features obtained from the two encoder branches. Therefore, on the basis of the skip connection in the conventional UNet network, the residual channel attention group (RCAG) module proposed by the residual channel attention network (RCAN) [35] is added. The RCAG structure is developed by cascading four residual channel attention blocks (RCABs) and further fusing and reconstructing the mixed features from the two encoder branches. The structure of the RCAG module is shown in Figure 3.

Figure 3. Structure of RCAG module.
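For readers unfamiliar with RCAN, the core of each RCAB is a channel attention step: global average pooling squeezes each channel to one value, a reduction layer with ratio r (followed by ReLU) and an expansion layer (followed by a sigmoid) produce per-channel weights, and the feature map is rescaled by those weights. The NumPy sketch below shows only this step, with random hypothetical weights and an assumed r = 16; a full RCAB additionally wraps a conv–ReLU–conv body and a residual connection around it, and the RCAG cascades such blocks.

```python
import numpy as np


def channel_attention(feat, w_down, b_down, w_up, b_up):
    """RCAN-style channel attention applied to a (C, H, W) feature map.

    On a pooled (C,) vector, the two 1x1 convolutions reduce to
    matrix-vector products.
    """
    z = feat.mean(axis=(1, 2))                               # global average pooling -> (C,)
    hidden = np.maximum(w_down @ z + b_down, 0.0)            # channel reduction + ReLU -> (C // r,)
    weights = 1.0 / (1.0 + np.exp(-(w_up @ hidden + b_up)))  # expansion + sigmoid -> (C,)
    return feat * weights[:, None, None]                     # rescale every channel by its weight


rng = np.random.default_rng(0)
C, r = 64, 16  # 64 channels (top UNet level); r = 16 is an assumed reduction ratio
feat = rng.standard_normal((C, 32, 32))
w_down = 0.1 * rng.standard_normal((C // r, C))
b_down = np.zeros(C // r)
w_up = 0.1 * rng.standard_normal((C, C // r))
b_up = np.zeros(C)
out = channel_attention(feat, w_down, b_down, w_up, b_up)
print(out.shape)  # (64, 32, 32)
```

Because each weight lies in (0, 1), the block can only attenuate channels relative to one another, which is how it emphasizes the more informative of the fused encoder features.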


The network’s processing pipeline can be described mathematically as follows. First, a noisy image $Y$ is given as input, and a convolution is applied to increase the dimensionality of the feature space:

$$F_0 = \mathrm{Conv}_{\mathrm{in}}(Y)$$

where $\mathrm{Conv}_{\mathrm{in}}(\cdot)$ is the input convolution and $F_0$ is the resulting shallow feature map.

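Next, the BSRA encoder applies a three-level coding strategy with downsampling. Because each encoder level consists of cascaded blocks, the basic BSRA block and the ESRA block can be written as

$$\mathrm{BSRA}(F) = F + \mathrm{Conv}_{3\times3}\big(\sigma(\mathrm{Conv}_{3\times3}(F))\big)$$

$$\mathrm{ESRA}(F) = F + \mathrm{DSConv}_{7\times7}\big(\sigma(\mathrm{DSConv}_{7\times7}(F))\big)$$

where $\sigma(\cdot)$ denotes the ReLU activation function, $\mathrm{Conv}_{3\times3}(\cdot)$ is a standard 3 × 3 convolution, and $\mathrm{DSConv}_{7\times7}(\cdot)$ represents a depthwise separable convolution with a 7 × 7 kernel.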
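The saving from replacing a standard 7 × 7 convolution with a depthwise separable one can be checked with simple arithmetic. The helper functions below are hypothetical and count weights only (biases omitted); the channel counts are the 64/128/256 widths stated for the UNet levels, but whether every ESRA layer keeps its input width is an assumption.

```python
def standard_conv_weights(c_in, c_out, k):
    """Weights of a standard k x k convolution (no bias)."""
    return c_in * c_out * k * k


def dsconv_weights(c_in, c_out, k):
    """Depthwise k x k conv (one filter per input channel) + 1 x 1 pointwise conv (no bias)."""
    return c_in * k * k + c_in * c_out


for c in (64, 128, 256):
    std7 = standard_conv_weights(c, c, 7)
    ds7 = dsconv_weights(c, c, 7)
    std3 = standard_conv_weights(c, c, 3)
    print(f"C={c}: standard 7x7={std7}, depthwise separable 7x7={ds7}, standard 3x3={std3}")
```

The cost ratio is 1/C_out + 1/k², so at C = 64 a depthwise separable 7 × 7 layer needs 7,232 weights versus 200,704 for a standard 7 × 7 layer, fewer even than a standard 3 × 3 layer (36,864). For a stride-1 layer with same padding, multiply-accumulate counts scale the same way (weights × output pixels).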

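The input feature map at each level of the multi-layer encoder is the output of the previous level. Let $F_i^{B} \in \mathbb{R}^{C \times H \times W}$ denote the input feature map of the $i$-th BSRA encoder level, where $C$, $H$, and $W$ represent the number of channels, height, and width, respectively. The output of each BSRA encoder level can then be expressed as

$$E_i^{B} = \mathcal{B}(F_i^{B}), \qquad F_{i+1}^{B} = \mathrm{Down}(E_i^{B})$$

where $i \in \{1, 2, 3\}$, $\mathcal{B}(\cdot) = \mathrm{BSRA} \circ \mathrm{BSRA} \circ \mathrm{BSRA} \circ \mathrm{BSRA}$ represents the composite module of four BSRA blocks executed in series, $E_i^{B}$ is the resulting level-$i$ encoder feature, and $\mathrm{Down}(\cdot)$ represents a downsampling operation with a stride of 2.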

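Similarly, the ESRA encoder applies its own three-level downsampling. Letting $F_i^{E}$ denote the input feature map of the $i$-th ESRA encoder level, the respective outputs are

$$E_i^{E} = \mathcal{E}(F_i^{E}), \qquad F_{i+1}^{E} = \mathrm{Down}(E_i^{E})$$

where $\mathcal{E}(\cdot)$ denotes the cascaded ESRA blocks of that level and $\mathrm{Down}(\cdot)$ is the stride-2 downsampling operation.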

Following the final downsampling stage, the BSRA module integrates the resulting features.

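The network then performs iterative upsampling. Each upsampling level combines the outputs of the two encoders at that level with the features from the preceding, lower-resolution decoder level. The upsampling module can be expressed as

$$D_i = \mathcal{B}\Big(\mathrm{Up}(D_{i+1}) + \mathrm{RCAG}\big(E_i^{B} + E_i^{E}\big)\Big)$$

where $D_i$ is the decoder feature map at level $i$, $E_i^{B}$ and $E_i^{E}$ are the BSRA and ESRA encoder features at the same level, $\mathrm{RCAG}(\cdot)$ is the feature fusion connection module, $\mathcal{B}(\cdot)$ denotes the cascaded BSRA blocks of the decoder, and $\mathrm{Up}(\cdot)$ represents an upsampling operation using transposed convolution.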

A convolutional operation is then applied to the upsampled feature maps for channel restoration, generating the final predicted image.
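Because the encoder halves the resolution at each level and the decoder restores it with transposed convolutions, the input size must survive the round trip. The sketch below checks this with the standard output-size formulas, assuming 2 × 2 kernels with stride 2 and no padding for both downsampling and upsampling (a common choice in residual UNets such as DRUNet, but an assumption here) and three downsampling stages. All function names are hypothetical.

```python
def conv_out(size, k=2, s=2, p=0):
    """Output length of a strided convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * p - k) // s + 1


def tconv_out(size, k=2, s=2, p=0):
    """Output length of a transposed convolution: (size - 1) * s - 2p + k."""
    return (size - 1) * s - 2 * p + k


def round_trip(size, levels=3):
    """Spatial sizes through `levels` downsamplings, and the size after upsampling back."""
    sizes = [size]
    for _ in range(levels):
        sizes.append(conv_out(sizes[-1]))
    restored = sizes[-1]
    for _ in range(levels):
        restored = tconv_out(restored)
    return sizes, restored


print(round_trip(512))  # ([512, 256, 128, 64], 512)
print(round_trip(640))  # ([640, 320, 160, 80], 640)
print(round_trip(250))  # ([250, 125, 62, 31], 248)
```

The 640 × 512 FLIR frames and the 256 × 256 and 512 × 512 benchmark sizes are all divisible by 2³, so they round-trip exactly; a size such as 250 does not, and such inputs would need padding or cropping before the encoder features can be added to the decoder features.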

3.3. Loss Function and Training Process

The network loss is computed between the ground-truth and estimated images. The loss function combines the L1 loss with a total variation (TV) loss as a regularization term:

$$\mathcal{L} = \mathcal{L}_{1}(X, \hat{X}) + \lambda\, \mathcal{L}_{TV}(\hat{X})$$

where $\mathcal{L}_{1}$ represents the L1 loss; $\lambda$ represents the regularization coefficient for the TV loss $\mathcal{L}_{TV}$, used to adjust the regularization strength; $X$ represents the ground-truth image; and $\hat{X}$ represents the image estimated by the network after denoising. Image denoising with this loss function is then formulated as

$$\hat{\theta} = \arg\min_{\theta}\, \mathcal{L}\big(X, \mathcal{F}(Y; \theta)\big)$$

where $\mathcal{F}(\cdot\,; \theta)$ denotes the mapping model, with trainable parameters $\theta$, that produces the estimate $\hat{X}$ from the noisy input $Y$ and is learned under the loss function $\mathcal{L}$.
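A minimal NumPy version of this objective is sketched below. The text does not specify the TV variant or the value of the coefficient, so the anisotropic, mean-normalized TV and the default `lam` here are assumptions for illustration, and `l1_tv_loss` is a hypothetical name.

```python
import numpy as np


def l1_tv_loss(x_true, x_hat, lam=0.01):
    """L1 fidelity term plus lam times an anisotropic total-variation penalty on x_hat."""
    l1 = np.mean(np.abs(x_hat - x_true))
    tv = (np.mean(np.abs(np.diff(x_hat, axis=0)))    # vertical neighbour differences
          + np.mean(np.abs(np.diff(x_hat, axis=1))))  # horizontal neighbour differences
    return l1 + lam * tv


# Usage: an estimate that still contains alternating column stripes.
x_true = np.zeros((4, 4))
x_hat = np.tile([0.0, 1.0, 0.0, 1.0], (4, 1))
print(l1_tv_loss(x_true, x_hat))  # about 0.51 (L1 = 0.5, TV = 1.0)
```

The TV term penalizes differences between neighbouring pixels, so residual row or column stripes in the estimate raise it directly, while the L1 term keeps the estimate close to the ground truth.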

4. Experiments on Public Datasets

4.1. Experimental Design

Because dedicated datasets for uncooled infrared image denoising are currently lacking, we used the publicly available FLIR advanced driver assistance systems (ADAS) v2 dataset [36]. This thermal infrared and visible light dual-modality dataset for ADAS contains thermal images captured by a FLIR Tau 2 long-wave uncooled infrared camera. The images are 640 × 512 pixels, and the dataset contains 10,472 training images and 1144 validation images covering various scenes, such as lanes, vehicles, street views, pedestrians, and plants, which provides a rich variety of scenes for denoising tasks. To build the denoising dataset, images were selected according to three criteria: few repeated scenes, low noise levels, and weak motion blur. In total, 800 images were chosen from the ADAS v2 training set for training, and 60 images were chosen from the test set for testing.

Because the selected training and test images contained relatively weak stripe noise, BM3D was applied to them to obtain noise-free ground-truth images. Gaussian and stripe noise were then added to these ground-truth images according to the proposed mixed noise model to create a synthetic noisy dataset. The network was trained on two NVIDIA RTX 3090 GPUs with a batch size of 128, a learning rate of 1 × 10⁻⁴, and the Adam optimizer.

4.2. Experimental Results

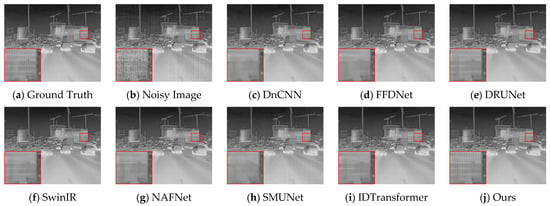

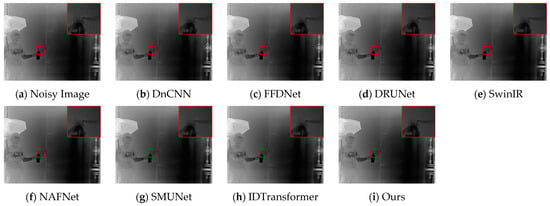

We compared the proposed DER-UNet with several state-of-the-art denoising methods, including powerful image restoration networks (DnCNN [13], DRUNet [16], FFDNet [37], SwinIR [17], and NAFNet [38]) and the recent composite-noise infrared image denoising networks SMUNet [12] and IDTransformer [39]. Table 1 presents the comparison in terms of peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM).
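For reference, PSNR is 10·log10(MAX²/MSE), where MAX is the peak value of the image data range. A minimal sketch follows; the data range used for the reported tables (for example, 8-bit versus normalized images) is not stated here, so `data_range` is an assumption that must be matched when reproducing the numbers. SSIM is more involved; ready-made implementations exist, for example `peak_signal_noise_ratio` and `structural_similarity` in scikit-image's `skimage.metrics` module.

```python
import numpy as np


def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)


# Usage: a uniform error of 0.1 on a [0, 1] image gives MSE = 0.01, i.e. 20 dB.
ref = np.zeros((64, 64))
print(round(psnr(ref, ref + 0.1), 6))  # 20.0
```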

Table 1.

Average PSNR and SSIM results for test set images. The best two values in each metric are denoted in red and blue, respectively.

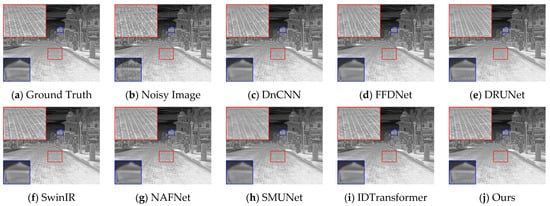

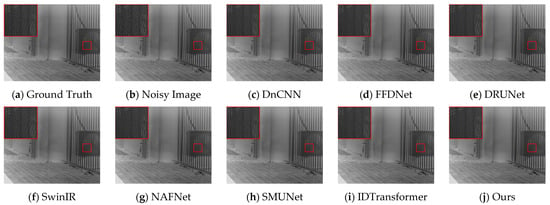

Figure 4 and Figure 5 present the denoising results of typical images from the test set, with the objective evaluation metrics, PSNR and SSIM, summarized in Table 2. The architectural details illustrated in Figure 4 suggest that the comparative methods tend to blur details in scenes rich in information. In contrast, DER-UNet effectively removes noise while preserving the target textures. In the street scene depicted in Figure 5, the DnCNN, SwinIR, and NAFNet networks manage to restore some road texture, while the DRUNet network recovers the roof texture. Notably, the proposed DER-UNet demonstrates superior preservation of both road and roof textures, closely resembling the ground truth image.

Figure 4.

Denoising results for an architectural scene.

Figure 5.

Denoising results for a street scene.

DER-UNet retains scene details and textures more effectively, achieving a visual effect closely resembling the ground truth image and performing best in PSNR and SSIM metrics. Among the comparison methods, the relatively strong DRUNet and SwinIR networks still lose some structural information from the original image. The PSNR and SSIM results support this finding, indicating alignment between visual assessments and objective metrics.

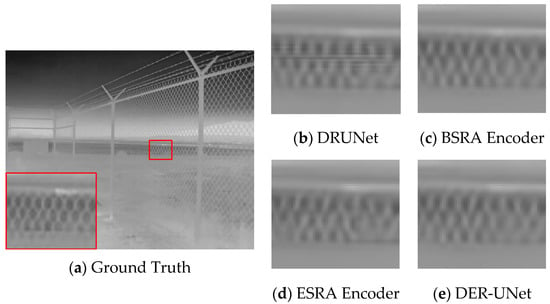

4.3. Ablation Study

An ablation study was conducted on the dual-encoder UNet to evaluate the contribution of the dual-encoder structure to the UNet network. Specifically, comparisons were made between the BSRA encoder single-branch UNet, the ESRA encoder single-branch UNet, and the complete DER-UNet network.

The ablation results in Table 3 show that the dual-encoder UNet outperforms the single-branch encoder structures across the reported metrics, with DER-UNet achieving the best performance. A qualitative analysis of the ablation results based on visual inspection (Figure 6) provides further insight. The network with the ESRA enlarged-scale encoder restores textures well but tends to lose fine-scale details. Conversely, the basic DRUNet shows insufficient texture recovery. The proposed DER-UNet, with its dual-encoder structure, markedly improves the recovery of detailed textures.

Table 3.

Ablation study and results.

Figure 6.

Comparative results of ablation study.

4.4. Network Runtime and Hardware Requirements

We compared DER-UNet with three representative networks, DRUNet, NAFNet, and SMUNet, in terms of runtime, floating-point operations (FLOPs), and memory requirements for images of 256 × 256 and 512 × 512 pixels. As Table 4 shows, DER-UNet has no advantage over DRUNet, NAFNet, and SMUNet in runtime or FLOPs; however, it delivers better denoising results and is better suited to denoising narrowband infrared images.
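Two practical points lie behind such comparisons: the FLOPs of convolutional layers grow in proportion to the number of output pixels, so going from 256 × 256 to 512 × 512 roughly quadruples them, and tools differ on whether they report multiply-accumulates (MACs) or 2 × MACs as FLOPs. The hypothetical helper below uses the 2 × MACs convention for a single stride-1 convolution with same padding; it is an illustration, not the profiler used to produce Table 4.

```python
def conv2d_flops(c_in, c_out, k, h, w, groups=1):
    """FLOPs (2 x MACs) of one stride-1, same-padded k x k convolution; biases ignored."""
    macs = (c_in // groups) * c_out * k * k * h * w
    return 2 * macs


for size in (256, 512):
    std3 = conv2d_flops(64, 64, 3, size, size)
    dw7 = conv2d_flops(64, 64, 7, size, size, groups=64)  # depthwise 7 x 7 part only
    print(f"{size}x{size}: 3x3 conv {std3 / 1e9:.2f} GFLOPs, depthwise 7x7 {dw7 / 1e9:.3f} GFLOPs")
```

When comparing against published figures, it is worth checking which convention a given tool uses, since the two differ by exactly a factor of two.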

Table 4.

Execution time and hardware requirements. The best two values in each metric are denoted in red and blue, respectively.

5. Experiments on Real Noise Dataset

5.1. Real Noise Dataset

In practice, image denoising methods must be validated on real noisy images. Real noise datasets allow a network to learn the true noise characteristics of a camera, which has significant practical value. Real noise datasets are currently created mainly by capturing multiple frames of the same scene and combining them to suppress random noise, yielding near-noise-free images that closely resemble reality. However, because this multi-frame acquisition is required to obtain ground-truth images, no publicly available denoising dataset yet exists specifically for uncooled infrared images.

Unlike real noise in visible-light images, uncooled infrared image noise comprises both Gaussian and stripe components (Figure 7a), so multi-frame acquisition cannot remove all noise components. Gaussian noise and temporal stripe noise can be largely eliminated by multi-frame acquisition, whereas spatial stripe noise is fixed pattern noise that remains embedded in the background signal of every frame. Multi-frame averaging is therefore typically used to remove Gaussian noise and temporal stripe noise when building ground-truth images from real uncooled infrared captures. In addition, most uncooled infrared camera cores perform in-camera calibration that eliminates much of the spatial stripe noise before acquisition (Figure 7b). As a result, the averaged images are largely free of Gaussian and temporal stripe noise and retain only a small amount of residual spatial stripe noise (Figure 7c), so they can serve as near-ground-truth references for a real noise dataset.
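The reasoning above can be illustrated with a small simulation. Averaging N frames shrinks temporally random components (Gaussian noise and temporal stripes) by roughly a factor of √N, while a fixed pattern that is identical in every frame passes through unchanged. All sizes and noise levels below are hypothetical, and in-camera baffle calibration is not modeled.

```python
import numpy as np

rng = np.random.default_rng(0)
h, w, n_frames = 128, 160, 64
scene = np.full((h, w), 0.5)                                 # static scene
fixed_pattern = rng.normal(0.0, 0.01, size=(1, w))           # spatial stripes: same in every frame

frames = []
for _ in range(n_frames):
    temporal_stripes = rng.normal(0.0, 0.01, size=(1, w))    # redrawn each frame
    gaussian = rng.normal(0.0, 0.05, size=(h, w))            # redrawn each frame
    frames.append(scene + fixed_pattern + temporal_stripes + gaussian)

average = np.mean(frames, axis=0)
single_frame_error = np.std(frames[0] - scene)
averaged_error = np.std(average - scene - fixed_pattern)     # what remains besides the fixed pattern
column_residual = (average - scene).mean(axis=0)             # per-column offset after averaging
corr = np.corrcoef(column_residual, fixed_pattern.ravel())[0, 1]
print(f"single-frame noise std: {single_frame_error:.4f}")
print(f"averaged noise std (excluding fixed pattern): {averaged_error:.4f}")
print(f"corr(column residual, fixed pattern): {corr:.3f}")
```

The averaged frame keeps the fixed column pattern almost exactly, which is why near-ground-truth images built this way still carry a small residual of spatial stripe noise.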

Figure 7.

Process of uncooled infrared image noise: (a) uncooled infrared image noise; (b) image noise following internal baffle calibration; (c) image noise after averaging multiple frames.

Images were captured using the iRaytek LA6110 uncooled infrared camera (IRay Technology Co., Ltd., Yantai, China), which features a 40 mm focal length lens and a narrowband filter with a wavelength range of 7–8.75 μm. Under outdoor natural conditions, a total of 220 sets of real noise data sequences were collected. Near-ground truth images were generated through multi-frame stacking and averaging, resulting in the creation of a real noise dataset. Of these, 200 image pairs were designated for network training, while 20 image pairs were set aside for network testing. Additionally, a subset of noisy images containing gas plume targets was collected to evaluate the denoising performance of the network trained on the real noise dataset specifically for gas plume images.

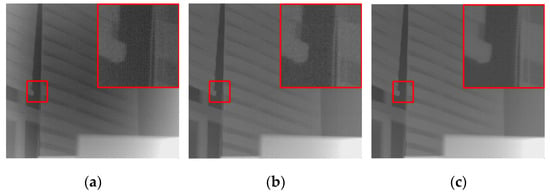

5.2. Narrowband Infrared Image Denoising Results

The proposed DER-UNet was trained and tested on this dataset and compared with DnCNN, FFDNet, DRUNet, SwinIR, NAFNet, SMUNet, and IDTransformer. Table 5 lists the denoising results of all methods, and Figure 8 shows the results for typical test images. In scenes with complex detail textures, DER-UNet retains details better than the other methods and achieves higher metric values.

Table 5.

Average PSNR and SSIM results for test set images. The best two values in each metric are denoted in red and blue, respectively.

Figure 8.

Denoising results for the real scene.

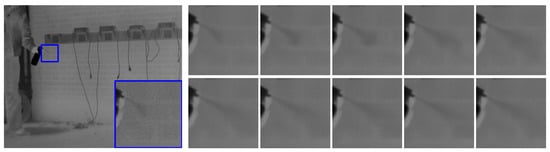

Figure 9 shows the denoising results for gas plume images. Because gas plumes have no fixed shape, a ground-truth image cannot be obtained by multi-frame averaging; this section therefore provides only a subjective evaluation of the denoising results of the various methods. DER-UNet shows superior performance in both noise removal and the retention of gas plume targets.

Figure 9.

Denoising results for gas plume image.

Figure 10 presents the denoising results of the DER-UNet network applied to a sequence of actual gas plume images collected in the field, illustrating the network’s practical effectiveness. The left side of Figure 10 shows the original collected image alongside a magnified view of the noise in a local area of the plume. The right side displays a sequence of magnified local areas of the gas plume after denoising the subsequent 10 frames. The comparison of images before and after denoising, along with the results from multiple frames, highlights the reliability of the DER-UNet network in denoising sequential images.

Figure 10.

Denoising results of the gas plume image sequence.

6. Conclusions

To address the issue of image noise affecting uncooled infrared imaging detection of industrial hazardous gas leaks, we developed a dual-encoder denoising network based on UNet, inspired by the concept of dual-scale feature extraction for Gaussian and stripe noise. This network utilizes two branches: a basic residual attention CNN encoder for Gaussian noise and an expanded scale residual attention encoder for stripe features. This design effectively targets both types of noise components, enhancing the network’s performance and noise removal capability. We conducted training and testing on both a public dataset and a narrowband infrared image dataset. The results show significant performance advantages and improved image restoration effects compared to powerful image restoration networks such as DRUNet and SwinIR. Ablation experiments validate the effectiveness of the dual-encoder structure.

The dual-scale dual-encoder UNet architecture is well-suited for removing mixed noise from both local and non-local sources, effectively enhancing the signal-to-noise ratio of narrowband infrared images. After further optimization for speed, this approach is applicable for infrared imaging detection of industrial gas leak plumes and has broad potential in fields such as ultraviolet imaging and medical imaging.

Author Contributions

Conceptualization, M.W. and W.J.; methodology, M.W.; validation, M.W. and P.Y.; formal analysis, M.W. and S.Q.; writing—original draft preparation, M.W.; writing—review and editing, M.W., P.Y. and S.Q.; visualization, M.W.; supervision, L.L. and X.W.; project administration, W.J.; funding acquisition, W.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Capital of Science and Technology Platform of China, grant number Z171100002817011.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data underlying the results presented in this paper are not publicly available at this time but may be obtained from the authors upon reasonable request.

Acknowledgments

The authors thank Beijing Wisdom Sharing Technology Service Co., Ltd. for device support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Meribout, M. Gas Leak-Detection and Measurement Systems: Prospects and Future Trends. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

2. Zhang, C.; Zhao, W. Scene-Based Nonuniformity Correction Using Local Constant Statistics. J. Opt. Soc. Am. A 2008, 25, 1444–1453.

3. Li, Z.; Luo, S.; Chen, M.; Wu, H.; Wang, T.; Cheng, L. Infrared Thermal Imaging Denoising Method Based on Second-Order Channel Attention Mechanism. Infrared Phys. Technol. 2021, 116, 103789.

4. Yang, P.; Wu, H.; Cheng, L.; Luo, S. Infrared Image Denoising via Adversarial Learning with Multi-Level Feature Attention Network. Infrared Phys. Technol. 2023, 128, 104527.

5. Feng, T.; Jin, W.; Si, J. Spatial-Noise Subdivision Evaluation Model of Uncooled Infrared Detector. Infrared Phys. Technol. 2021, 119, 103954.

6. Feng, T.; Jin, W.; Si, J.; Zhang, H. Optimal Theoretical Study of the Pixel Structure and Spatio-Temporal Random Noise of Uncooled IRFPA. J. Infrared Millim. Waves 2020, 39, 142–148.

7. Hua, W.; Zhao, J.; Cui, G.; Gong, X.; Ge, P.; Zhang, J.; Xu, Z. Stripe Nonuniformity Correction for Infrared Imaging System Based on Single Image Optimization. Infrared Phys. Technol. 2018, 91, 250–262.

8. Münch, B.; Trtik, P.; Marone, F.; Stampanoni, M. Stripe and Ring Artifact Removal with Combined Wavelet—Fourier Filtering. Opt. Express 2009, 17, 8567–8591.

9. Qian, W.; Chen, Q.; Gu, G.; Guan, Z. Correction Method for Stripe Nonuniformity. Appl. Opt. 2010, 49, 1764–1773.

10. Sui, X.; Chen, Q.; Gu, G. Adaptive Grayscale Adjustment-Based Stripe Noise Removal Method of Single Image. Infrared Phys. Technol. 2013, 60, 121–128.

11. Zhang, H.; Qian, W.; Xu, Y.; Zhang, K.; Kong, X.; Wan, M. Structural-Information-Awareness-Based Regularization Model for Infrared Image Stripe Noise Removal. J. Opt. Soc. Am. A 2024, 41, 1723–1737.

12. Hu, X.; Luo, S.; He, C.; Wu, W.; Wu, H. Infrared Thermal Image Denoising with Symmetric Multi-Scale Sampling Network. Infrared Phys. Technol. 2023, 134, 104909.

13. Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

14. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image Denoising by Sparse 3-D Transform-Domain Collaborative Filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

15. Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Weighted Nuclear Norm Minimization with Application to Image Denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2862–2869.

16. Zhang, K.; Li, Y.; Zuo, W.; Zhang, L.; Van Gool, L.; Timofte, R. Plug-and-Play Image Restoration With Deep Denoiser Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 6360–6376.

17. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image Restoration Using Swin Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 1833–1844.

18. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.-H. Restormer: Efficient Transformer for High-Resolution Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

19. Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-Like Pure Transformer for Medical Image Segmentation. In Proceedings of the Computer Vision—ECCV 2022 Workshops, Tel Aviv, Israel, 23–27 October 2022; pp. 205–218.

20. Song, L.; Huang, H. Simultaneous Destriping and Image Denoising Using a Nonparametric Model with the EM Algorithm. IEEE Trans. Image Process. 2023, 32, 1065–1077.

21. He, Y.; Zhang, C.; Zhang, B.; Chen, Z. FSPnP: Plug-and-Play Frequency–Spatial-Domain Hybrid Denoiser for Thermal Infrared Image. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16.

22. Wang, E.; Liu, Z.; Wang, B.; Cao, Z.; Zhang, S. Infrared Image Stripe Noise Removal Using Wavelet Analysis and Parameter Estimation. J. Mod. Opt. 2023, 70, 170–180.

23. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241.

24. Siddique, N.; Paheding, S.; Elkin, C.P.; Devabhaktuni, V. U-Net and Its Variants for Medical Image Segmentation: A Review of Theory and Applications. IEEE Access 2021, 9, 82031–82057.

25. Fu, Z.; Li, J.; Hua, Z. DEAU-Net: Attention Networks Based on Dual Encoder for Medical Image Segmentation. Comput. Biol. Med. 2022, 150, 106197.

26. Liang, B.; Tang, C.; Zhang, W.; Xu, M.; Wu, T. N-Net: An UNet Architecture with Dual Encoder for Medical Image Segmentation. SIViP 2023, 17, 3073–3081.

27. Li, H.; Zhai, D.-H.; Xia, Y. ERDUnet: An Efficient Residual Double-Coding Unet for Medical Image Segmentation. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 2083–2096.

28. Yao, Z.; Bi, J.; Deng, W.; He, W.; Wang, Z.; Kuang, X.; Zhou, M.; Gao, Q.; Tong, T. DEUNet: Dual-Encoder UNet for Simultaneous Denoising and Reconstruction of Single HDR Image. Comput. Graph. 2024, 119, 103882.

29. Liu, Y.; Zhang, X.-Y.; Bian, J.-W.; Zhang, L.; Cheng, M.-M. SAMNet: Stereoscopically Attentive Multi-Scale Network for Lightweight Salient Object Detection. IEEE Trans. Image Process. 2021, 30, 3804–3814.

30. Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.-H. Single Image Dehazing via Multi-Scale Convolutional Neural Networks. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; pp. 154–169.

31. Li, J.; Fang, F.; Mei, K.; Zhang, G. Multi-Scale Residual Network for Image Super-Resolution. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 517–532.

32. Bao, L.; Yang, Z.; Wang, S.; Bai, D.; Lee, J. Real Image Denoising Based on Multi-Scale Residual Dense Block and Cascaded U-Net With Block-Connection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 448–449.

33. Gou, Y.; Hu, P.; Lv, J.; Zhou, J.T.; Peng, X. Multi-Scale Adaptive Network for Single Image Denoising. Adv. Neural Inf. Process. Syst. 2022, 35, 14099–14112.

34. Thakur, R.K.; Maji, S.K. Multi Scale Pixel Attention and Feature Extraction Based Neural Network for Image Denoising. Pattern Recognit. 2023, 141, 109603.

35. Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

36. Teledyne FLIR Free ADAS Thermal Dataset V2. Available online: https://adas-dataset-v2.flirconservator.com/#downloadguide (accessed on 26 June 2024).

37. Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a Fast and Flexible Solution for CNN-Based Image Denoising. IEEE Trans. Image Process. 2018, 27, 4608–4622.

38. Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple Baselines for Image Restoration. In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; pp. 17–33.

39. Shen, Z.; Qin, F.; Ge, R.; Wang, C.; Zhang, K.; Huang, J. IDTransformer: Infrared Image Denoising Method Based on Convolutional Transposed Self-Attention. Alex. Eng. J. 2025, 110, 310–321.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).