Abstract

Unmanned aerial vehicle swarms (UAVSs) can carry out numerous tasks such as detection and mapping when outfitted with machine learning (ML) models. However, due to the flying height and mobility of UAVs, it is very difficult to ensure a continuous and stable connection between ground base stations and UAVs. As a result, distributed machine learning approaches such as federated learning (FL) perform better than centralized approaches in some circumstances when utilized by UAVs. In practice, however, functions that UAVs must perform often, such as emergency obstacle avoidance, are highly sensitive to latency. This work attempts to provide a comprehensive analysis of the energy consumption and latency sensitivity of FL in UAVs and presents a set of solutions based on an efficient asynchronous federated learning mechanism for edge network computing (EAFLM) combined with ant colony optimization (ACO) for cases where UAVs execute such latency-sensitive jobs. Specifically, the UAVs participating in each round of communication are screened, and only the UAVs that meet the conditions participate in the regular round of communication, which compresses the communication times. At the same time, the transmit power and CPU frequency of each UAV are adjusted to obtain the shortest time for an individual iteration round. The method is verified on the MNIST dataset, and numerical results are provided to support its usefulness. It greatly reduces the communication times between UAVs with a relatively low influence on accuracy and optimizes the allocation of UAVs’ communication resources.

1. Introduction

The application of aerial platforms such as unmanned aerial vehicle swarms (UAVSs), also known as swarms of drones, is expanding quickly. A UAV swarm consists of several small, low-cost UAVs. By working in concert, UAVs have demonstrated a powerful capability to achieve significant advantages in missions that would be difficult for a single UAV to accomplish. With their unique advantages, including high mobility and flexibility, UAVs have played an important role in many areas [1], including rescue, signal detection, and terrain mapping [2,3,4,5,6]. The expanding prospects of UAV applications have attracted a significant amount of attention from academia and industry. However, due to the flying height and mobility of UAVs, it is very difficult to ensure a continuous and stable connection between ground base stations and UAVs. Therefore, UAVs are better suited to perform tasks using distributed machine learning approaches than centralized machine learning approaches.

A distributed machine learning method called federated learning (FL) deconstructs data silos and unleashes the potential of AI applications [7]. FL enables the participants to achieve joint modeling by exchanging only encrypted intermediate results of machine learning without disclosing the underlying data and their encrypted form. Such a distributed FL approach is well suited to UAV communication: after each UAV in the cluster has individually trained a model on the data it has collected, it uses an intra-cluster network to share FL parameters with other UAVs. This not only reduces the volume of communication between UAVs but also avoids the disclosure of sensitive data and protects privacy to a certain extent.

A number of recent works have investigated the feasibility of FL-based UAV communication; such aerial access networks are also regarded as very important in the upcoming sixth-generation (6G) wireless systems [8,9,10]. Ref. [11] built a leader–follower UAVs-FL architecture for the first time and discussed how wireless factors such as bandwidth and UAV angle deviation affect FL convergence. They also minimized the convergence cycle of UAVs-FL. The limited resources that a single UAV can carry also limit the performance of UAVs: when UAVs perform complex tasks, the pressure on the self-organized data interaction network increases as the task model scales up, and the communication energy consumption increases. Ref. [12] used a ray tracing method to examine how path gain depends on the distance between two UAVs in an air-to-air communication channel, with one UAV acting as the transmitter and the other as the receiver with a line of sight between them, across various scenarios and antenna types. Ref. [13] improved the task allocation mechanism of UAVs, effectively reducing communication energy consumption while ensuring model performance. Under various constraints on power control, transmission time, accuracy, bandwidth allocation, and computing resources, Ref. [14] minimized the overall energy consumption of each UAV with limited bandwidth. Ref. [15] maximized the transmission rate and improved the probability of successful data transmission based on a deep Q-network (DQN), a convolutional block attention module (CBAM), and the value decomposition network (VDN) algorithm. Ref. [16] built a synchronous federated learning (SFL) structure for multi-UAVs and also performed a comparative analysis of asynchronous federated learning (AFL) and SFL. Ref. [17] reformulated the optimization problem as a Markov decision process (MDP) and designed a deep reinforcement learning (DRL)-based algorithm to solve it. Refs. [18,19] proposed a secure, energy-efficient transmission approach for UAV networks to deal with the crucial challenges of energy saving and security in UAV wireless networks. Refs. [20,21] suggested new distributed learning frameworks for sharing model parameters that use less energy while maintaining good test accuracy.

However, there still exists a problem with these edge-oriented distributed machine learning approaches, which is that the communication demand is often very high, resulting in high communication consumption [22]. A typical parallel SGD model was designed and implemented through research, with a parameter matrix size of 2,400,000, running on a distributed parameter server system with one server node and 10 work nodes, and an Ethernet bandwidth of 1 Gbps between nodes. Under the above model and hardware configuration conditions, 60,000 sample data from the MNIST handwritten digit recognition dataset were used as input, and each sample underwent only one iterative training. The final complete training took 23 h, and it was found that of the time and energy were spent on parameter exchange between the parameter server and the working node [23]. Thus, reducing communication consumption is a valuable entry point for improving such distributed machine learning methods.

In reality, the tasks that UAVs need to deal with, such as dynamic target recognition and emergency obstacle avoidance, are often very sensitive to latency [24]. This latency sensitivity directly affects the completion of the task and should be one of the primary considerations when optimizing the network management of UAVs. Based on the leader–follower UAVs-FL architecture, Ref. [25] proposed a non-orthogonal multiple access (NOMA)-based UAVs-FL framework to jointly optimize the uplink and downlink transmission durations of the model and the UAV power, aiming to minimize the latency of an FL iteration round until a specified accuracy is reached. In addition, while considering the convergence, reliability, and latency-sensitivity requirements of UAVs, the constraints on the energy consumed by learning, communication, and flight during FL convergence should also be considered. However, at present, most research on task allocation for UAV swarms focuses on non-real-time tasks, and complete task allocation solutions that consider both latency and reliability are still lacking [26]. Motivated by these reasons, this paper proposes a relatively complete solution for the situation where UAVs perform such latency-sensitive tasks.

It is worth mentioning that federated learning for edge computing faces a similar problem: the network and node computing loads are too heavy [27]. In large-scale training scenarios, a large amount of communication bandwidth is often required for gradient exchange, which greatly increases the cost of network infrastructure. At the same time, the limited computing resources of the edge nodes lead to higher overall latency, lower throughput, and intermittent poor connections in the model. Ref. [28] proposed a method for distributed machine learning that saves communication resources, named lazily aggregated gradient (LAG). This is a communication-efficient variant of stochastic gradient descent (SGD), which can adaptively skip gradient calculation based on the current gradient and helps reduce the communication and computing burden. Later, to further improve the performance in the stochastic gradient scenario, lazily aggregated stochastic gradients (LASG) was proposed, which further reduces the communication bandwidth requirements while achieving a convergence rate equivalent to that of the original SGD [29]. In addition to skipping redundant communication rounds, other studies skip some nodes in a given round to save communication resources. Ref. [30] proposed an efficient asynchronous federated learning mechanism (EAFLM) for edge network computing, which compresses the redundant communication between the nodes and the parameter server during training according to an adaptive threshold to further reduce the communication consumption.

Based on the UAVs-FL model proposed in Ref. [11], we construct a leader–follower framework. On this basis, we establish an optimization problem and propose a resource scheduling method designed specifically for the latency-sensitive tasks that UAVs perform in practice. Our primary goal is to minimize communication latency in a federated learning round. Overall, the primary contributions of this paper are:

- Introduction of the efficient asynchronous federated learning mechanism (EAFLM), which compresses communication times by up to compared to the original communication times and minimizes the risk of private data leakage.

- Establishment of an optimization problem with the aim of minimizing FL latency. Although this problem is non-convex, we have transformed it into two convex subproblems related to the transmit power and the CPU frequency of UAVs. By introducing the ant colony optimization (ACO) algorithm to plan the power allocation of UAVs, lower global latency can be achieved for latency-sensitive tasks. The FL iteration latency per round can also be reduced to of the similar method.

- In the MNIST dataset, the accuracy of machine learning tasks remained above , which did not decrease compared to the situation without introducing the scheduling strategy in this paper.

In summary, to achieve a shorter global latency, the strategy first allocates a portion of time and energy to local operations and then plans the power allocation.

The remainder of this paper is organized as follows: Section 2 describes the system model and formulates the optimization problem. Section 3 elaborates on the EAFLM-ACO strategy and the implementation of our proposed algorithm. In Section 4, simulations and analyses are presented to demonstrate the efficiency of our proposed method. Section 5 concludes this article.

2. System Model

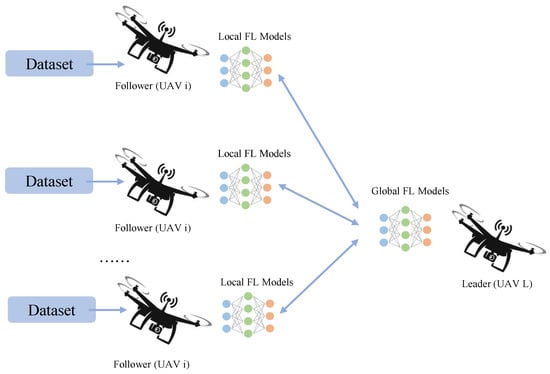

To study UAV network management based on FL, this section establishes the following model: a single group of UAVs consists of a leader UAV and I follower UAVs, with the follower UAVs forming the set . The leader UAV is denoted as UAV L, and each follower UAV is denoted as UAV i. The UAV group maintains a specific formation in the air, flying at a constant speed in the same direction at a certain altitude. The leader UAV and follower UAVs cooperate through FL to perform machine learning tasks such as trajectory planning and target recognition. The overall architecture is shown in Figure 1.

Figure 1. An illustration of the UAVs-FL architecture.

2.1. Federated Learning Model

Use to represent the global model parameters of UAV L, and represents the local model parameters of UAV i. The size of the model parameters is defined as . is the amount of sample data of UAV i. Assuming that each UAV i has a input sample set , and every only corresponds to one output through model , which means the output set is [31]. Take as the local sample set of UAV i, which means . The loss function reflects the predicted loss results of each sample. For every UAV i, the local loss function on its sample set can thus be represented as the average of the loss function of each sample, and the global loss function is the weighted average of all local loss functions, that is:

The purpose of federated learning is to find the model parameters that minimize the global loss function above. To obtain this optimal model, traditional centralized machine learning algorithms require all follower UAVs to upload their datasets to the leader UAV for centralized training. In the federated learning setting described in this paper, the following five steps are instead performed in each round [32].

- Local gradient calculation: each UAV i computes its local gradient at moment t based on its own local dataset and quantizes the gradient as follows:

- Local gradient upload: after quantizing the local gradient, each UAV i establishes a communication link with UAV L to upload its local gradient.

- Global gradient aggregation: UAV L computes a weighted average of the gradients uploaded by each UAV i and obtains the aggregated gradient as follows:

- Global gradient update: UAV L updates the parameters of the aggregated gradient using the method of gradient descent, where represents the global model parameters of iteration round t + 1, represents the learning rate and :

- Global parameter broadcast: UAV L broadcasts the updated global model parameters to all follower UAVs. Each UAV i obtains the latest parameters and updates its local parameters for the next round of iterative learning.

In a federated learning system, these five steps are repeated until the maximum number of rounds is reached.
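The five steps above can be sketched in a few lines of plain Python. This is only a minimal illustration under stated assumptions: the linear model with squared-error loss, the function names (`local_gradient`, `aggregate`, `fl_round`), and the use of local sample counts as aggregation weights are chosen for the example, and the gradient quantization step is omitted.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Sample = Tuple[Sequence[float], float]  # (input features, target output)


def local_gradient(theta: Vector, samples: Sequence[Sample]) -> Vector:
    """Step 1: average gradient of 0.5 * (theta . x - y)^2 over one UAV's samples."""
    grad = [0.0] * len(theta)
    for x, y in samples:
        err = sum(t * xi for t, xi in zip(theta, x)) - y
        for k, xi in enumerate(x):
            grad[k] += err * xi / len(samples)
    return grad


def aggregate(gradients: Sequence[Vector], sizes: Sequence[int]) -> Vector:
    """Step 3: weighted average, weights proportional to local sample counts (assumed)."""
    total = sum(sizes)
    dim = len(gradients[0])
    return [sum(n * g[k] for g, n in zip(gradients, sizes)) / total for k in range(dim)]


def fl_round(theta: Vector, datasets: Sequence[Sequence[Sample]], lr: float) -> Vector:
    """One round: followers compute and upload gradients, the leader aggregates and updates."""
    grads = [local_gradient(theta, d) for d in datasets]   # steps 1 and 2
    sizes = [len(d) for d in datasets]
    global_grad = aggregate(grads, sizes)                  # step 3
    # Step 4: gradient descent update; returning the result models the broadcast (step 5).
    return [t - lr * g for t, g in zip(theta, global_grad)]


if __name__ == "__main__":
    # Two followers whose samples all follow y = 2 * x.
    data = [[((1.0,), 2.0)], [((2.0,), 4.0), ((1.0,), 2.0)]]
    theta = [0.0]
    for _ in range(200):
        theta = fl_round(theta, data, lr=0.1)
    print(theta)
```

Only gradients cross the link between followers and leader in this sketch; the raw samples in `data` stay with the follower that holds them.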

2.2. Communication Model

We assume that every follower UAV i in this FL iteration forms a group and communicates with UAV L using its local training model . In Section 3.1, the selection procedure for determining which follower UAV participates in this iteration will be explained in detail. We assume that UAV L utilizes the index in the group as the decoding order for uploading the local model parameters to UAV L. We use to represent the transmit power of UAV i, i.e., the transmit power for uploading its data to the leader UAV. According to Shannon’s formula, we can represent the uplink data rate between UAV i and UAV L as:

where represents the uplink bandwidth, represents the signal power of UAV i, is the channel power gain from UAV i to UAV L, and is the spectral power density of the background noise.

After receiving the model parameters uploaded by the follower UAVs, UAV L performs model aggregation. Once the aggregation is complete, the updated global model is broadcast to all follower UAVs. Because the broadcast must reach every follower, the downlink data rate is determined by the follower UAV with the weakest channel power gain from the leader UAV and can be expressed as:

where represents the downlink bandwidth, represents the signal power of UAV L, is the downlink channel power gain from the UAV L to UAV i, and is the spectral power density of the background noise.

Once the uplink and downlink data transmission rates of the channel are determined, the transmission latency can be calculated by the ratio of the size of the model parameters to the data transmission rate or .
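As a hedged illustration of the communication model, the sketch below computes a Shannon-type rate and the resulting transmission latency. The function and parameter names are assumptions made for this example, and the interference-free form `B * log2(1 + p * h / (N0 * B))` is assumed from the quantities listed in the text (bandwidth, transmit power, channel power gain, noise power spectral density); it does not model any decoding-order effects.

```python
import math
from typing import Sequence


def shannon_rate(bandwidth_hz: float, tx_power_w: float,
                 channel_gain: float, noise_psd_w_per_hz: float) -> float:
    """Achievable rate in bit/s for one link, assuming no interference."""
    snr = tx_power_w * channel_gain / (noise_psd_w_per_hz * bandwidth_hz)
    return bandwidth_hz * math.log2(1.0 + snr)


def downlink_rate(bandwidth_hz: float, leader_power_w: float,
                  follower_gains: Sequence[float], noise_psd_w_per_hz: float) -> float:
    """Broadcast rate, limited by the follower with the weakest channel power gain."""
    return shannon_rate(bandwidth_hz, leader_power_w,
                        min(follower_gains), noise_psd_w_per_hz)


def transmission_latency(model_size_bits: float, rate_bps: float) -> float:
    """Latency in seconds: size of the model parameters divided by the data rate."""
    return model_size_bits / rate_bps


if __name__ == "__main__":
    # Illustrative numbers only, not the simulation parameters of Table 1.
    r_up = shannon_rate(1e6, 0.5, 1e-7, 1e-14)
    print(r_up, transmission_latency(1e5, r_up))
```

Raising the transmit power shortens the upload, but only logarithmically, which is why transmit power is a meaningful optimization variable once an energy budget is imposed.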

2.3. Latency Analysis

As previously stated, our goal is to reduce end-to-end latency by minimizing the latency of each communication round. In this section, we calculate the main types of latency in a single communication round [32].

2.3.1. Local Time Consumption of Follower UAVs

The total time consumption of follower UAVs can be divided into two parts, local gradient computation and local gradient upload . They can be expressed as:

where represents the size of collected data for UAV i, c represents the workload of CPU cycles per data bit, represents the CPU frequency of UAV i, represents the total data size of UAV i corresponding to the local parameter gradient, and represents the uplink data rate.

2.3.2. Global Time Consumption of Leader UAV

The total time consumption of a leader UAV can also be divided into two parts: global gradient computation and broadcast . They can be expressed as:

where represents the computational complexity, represents the CPU frequency of UAV L, I represents the total number of devices involved in model aggregation, and represents the downlink data rate.

2.3.3. Total Time

UAV L must wait for the follower UAVs to upload their local gradients before it can start gradient aggregation and model broadcast. This implies that the total latency of a round of federated learning is the sum of the longest local time consumption among all follower UAVs and the global time consumption of the leader UAV. Therefore, for a swarm of UAVs, the total latency of a complete federated learning round is:
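The latency terms of this section can be put together in a short sketch. The names below are hypothetical, and the exact form of the leader's aggregation time (computational complexity times the number of participating devices, divided by the leader's CPU frequency) is an assumption inferred from the quantities named in Section 2.3.2.

```python
from typing import Sequence


def follower_latency(data_bits: float, cycles_per_bit: float, cpu_hz: float,
                     gradient_bits: float, uplink_bps: float) -> float:
    """Local time of one follower: gradient computation plus gradient upload."""
    compute = cycles_per_bit * data_bits / cpu_hz
    upload = gradient_bits / uplink_bps
    return compute + upload


def leader_latency(cycles_per_device: float, num_devices: int, cpu_hz: float,
                   model_bits: float, downlink_bps: float) -> float:
    """Global time of the leader: aggregation (assumed form) plus broadcast."""
    aggregation = cycles_per_device * num_devices / cpu_hz
    broadcast = model_bits / downlink_bps
    return aggregation + broadcast


def round_latency(follower_times: Sequence[float], leader_time: float) -> float:
    """The leader waits for the slowest follower, then aggregates and broadcasts."""
    return max(follower_times) + leader_time


if __name__ == "__main__":
    # Illustrative numbers only.
    followers = [follower_latency(1e6, 20, f, 1e5, 1e6) for f in (1e9, 5e8)]
    leader = leader_latency(1e6, 2, 1e9, 1e5, 2e6)
    print(round_latency(followers, leader))
```

Because of the `max`, speeding up any follower other than the slowest one does not shorten the round, which is one reason skipping slow or uninformative followers can pay off.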

2.4. Energy Consumption Model

In this paper, we only consider the computation energy consumption, communication energy consumption, and maneuvering energy consumption related to federated learning and communication between UAVs. The energy consumption of the follower UAVs and the leader UAV can be expressed by the following formulae, respectively:

where and represent the energy consumption efficiency and are both positive constants [25] and represents the average maneuvering power.
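A rough sketch of the three energy terms follows. The formulae are assumptions for illustration: computation energy uses the widely used model `kappa * cycles * f^2`, where `kappa` stands in for the energy consumption efficiency constant, communication energy is transmit power times transmission time, and maneuvering energy is average maneuvering power times the round duration. All names are hypothetical.

```python
def computation_energy(kappa: float, cycles_per_bit: float,
                       data_bits: float, cpu_hz: float) -> float:
    """Energy in joules for processing data_bits at CPU frequency cpu_hz (assumed model)."""
    return kappa * cycles_per_bit * data_bits * cpu_hz ** 2


def communication_energy(tx_power_w: float, tx_time_s: float) -> float:
    """Energy in joules spent transmitting at tx_power_w for tx_time_s."""
    return tx_power_w * tx_time_s


def maneuvering_energy(avg_power_w: float, duration_s: float) -> float:
    """Energy in joules spent flying at the average maneuvering power."""
    return avg_power_w * duration_s


def follower_energy(kappa: float, cycles_per_bit: float, data_bits: float,
                    cpu_hz: float, tx_power_w: float, upload_time_s: float,
                    avg_power_w: float, round_time_s: float) -> float:
    """Total per-round energy of one follower under the assumptions above."""
    return (computation_energy(kappa, cycles_per_bit, data_bits, cpu_hz)
            + communication_energy(tx_power_w, upload_time_s)
            + maneuvering_energy(avg_power_w, round_time_s))


if __name__ == "__main__":
    # Illustrative numbers only.
    print(follower_energy(1e-28, 20, 1e6, 1e9, 0.5, 0.1, 10.0, 0.2))
```

Under this model a higher CPU frequency shortens computation linearly but raises computation energy quadratically, which is the tension the energy constraints of the optimization problem have to resolve.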

2.5. Optimization for Minimizing Latency

We take the transmit power and CPU frequency of each UAV as optimization variables and minimize the time of each round of federated learning. This leads to the following optimization problem, referred to as Problem 1.

where is the total time per round, which is the goal of the optimization problem. By controlling the transmit power of follower UAV , CPU frequency of follower UAV , transmit power of leader UAV , and CPU frequency of leader UAV , the single-round latency is minimized. The constraints include the transmit power and CPU frequency ranges of the UAVs. The energy consumption of follower UAV and leader UAV should also be lower than the maximum energy limit .

3. The Proposed Method

In the previous section, we have established an optimization problem that minimizes the federated learning time per round by considering the transmit power and CPU frequency of the UAV as variables. In this section, we propose a resource optimization configuration scheme that combines EAFLM and ACO. The goal is to achieve the minimum communication latency in each round.

3.1. UAVs Network Management Based on EAFLM

A complete federated learning framework includes a parameter server and several learning nodes, which correspond to UAV L and the follower UAVs i in this model. In each round t, the learning nodes obtain the global model, compute their local gradients, and upload them to the server. The server aggregates the gradients, executes the optimization algorithm to update the model parameters, and then broadcasts the updated model parameters to each learning node. To minimize the need for establishing communication links, we select learning nodes locally and allow some of them to skip certain rounds of communication. Here, we introduce the concept of ‘lazy nodes’ [30]. A lazy node is a node that contributes little to the global gradient in a particular round of global gradient aggregation. In other words, whether these nodes participate in a specific round of aggregation has almost no impact on the final result. Therefore, ignoring these nodes in this round of aggregation saves communication with little effect on model performance. The set of lazy nodes satisfies:

where represents the total gradient uploaded by all followers within round , represents the total gradient uploaded by all lazy nodes within round , is the size of the lazy node set, and I is the total number of follower UAVs.

In this paper, we optimize the global model using the gradient descent algorithm.

where represents the global model parameters of iteration round t. represents the learning rate.

Therefore,

Because the global model tends to converge, the following approximation is used:

According to the mean inequality, we have:

where represents the size of collected data for UAV i.

Let . This implies that represents the proportion of lazy nodes, which do not participate in communication, among all follower UAVs. Therefore, represents the participation rate, which is the proportion of follower UAVs that participate in communication. If Equation (20) is satisfied, Equation (15) is also satisfied.

In summary, in each round t, UAV i locally verifies whether it satisfies Equation (20). If it does, the current round of upload will be skipped.
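The local self-check can be illustrated with the sketch below. It is not Equation (20) itself: the threshold used here, a constant `scale` times the squared change of the global parameters divided by the squared learning rate, is only a plausible stand-in that follows the derivation outlined above (the previous global gradient is recovered from the parameter change, and a node is lazy when its own gradient is small by comparison). The names `should_skip_upload` and `scale` are hypothetical.

```python
from typing import Sequence


def squared_norm(v: Sequence[float]) -> float:
    """Squared Euclidean norm of a vector."""
    return sum(x * x for x in v)


def should_skip_upload(local_grad: Sequence[float],
                       theta_now: Sequence[float],
                       theta_prev: Sequence[float],
                       lr: float, scale: float) -> bool:
    """Return True if the follower looks 'lazy' this round and may skip its upload.

    theta_now - theta_prev equals -lr times the previous global gradient, so
    drift / lr**2 estimates the squared norm of the global gradient.
    `scale` stands in for the factor of Equation (20), which is not reproduced here.
    """
    drift = squared_norm([a - b for a, b in zip(theta_now, theta_prev)])
    threshold = scale * drift / (lr ** 2)
    return squared_norm(local_grad) <= threshold


if __name__ == "__main__":
    theta_prev, theta_now = [0.0, 0.0], [1.0, 1.0]
    for grad in ([0.1, 0.0], [2.0, 0.0]):
        print(grad, should_skip_upload(grad, theta_now, theta_prev, lr=1.0, scale=0.5))
```

The check uses only quantities the follower already holds (its own gradient and the last two broadcast models), so deciding whether to skip costs no extra communication.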

In the extreme case where all nodes in a particular round t satisfy Equation (20), UAV L will not receive the model information uploaded by any follower UAV. In such cases, UAV L selects a follower UAV randomly to participate in the upload after a specified time interval . This ensures that the federated learning task can continue in a relatively efficient situation. The specific time interval can be determined based on different scenarios. In this paper, we set as:

As for the time consumption in these extreme rounds, assuming that the device selected to the device is , the latency for this round can be defined as:

3.2. Latency Minimization Based on ACO

In the previous section, we formulated an optimization problem to minimize the time consumed in each round of federated learning by optimizing the transmit power and CPU frequency of each UAV. Next, we solve this optimization problem with the ant colony optimization (ACO) procedure given in Algorithm 1.

To solve the optimization problem, we decompose it into sub-problems that are solved independently after mathematical derivation and simplification. In Problem 1, the total time is the sum of the local time consumption and the global time consumption, and the time consumed in each step was calculated in the previous section. Because the follower UAVs and the leader UAV are controlled separately, we divide Problem 1 into two sub-problems: one concerns the latency of the follower UAVs, and the other concerns the latency of the leader UAV. Therefore, this optimization problem can be rewritten as Problem 2 and Problem 3 as follows.

| Algorithm 1: ACO for minimizing the latency in one round. |
| --- |
| Input: UAV communication parameters (including I and c) of the sub-problem being solved; |
| Output: best solution of the transmit power and CPU frequency (for the follower UAVs or for the leader UAV) and the minimum latency; |
| Initialize the model parameters: the size of the ant colony N, pheromone value, pheromone evaporation coefficient, pheromone weight, transfer factor weight, and total pheromone release Q; |
| Randomly initialize N ant solutions and their pheromone values; |
| Take the iteration count as k; |
| while k < maximum number of iterations do |
| Obtain the index of the best ant and its value; |
| for each individual in the colony do |
| Calculate the transition probability; |
| end for |
| for each individual in the colony do |
| Update the individual's location using local search and global search; |
| Determine whether the individual can move, based on the restriction condition and the penalty function [33], which is built from a multi-stage assignment function with case-dependent terms; |
| Calculate the pheromone value; |
| Record the minimum latency and the corresponding solution; |
| end for |
| end while |

Problem 2 represents the follower’s latency consumption:

where and represent the energy consumption efficiency and are both positive constants. represents the average maneuvering power. represents the size of collected data for UAV i. c represents the workload of CPU cycles per data bit. represents the uplink bandwidth. is the channel power gain from UAV i to UAV L. is the spectral power density of the background noise.

Problem 3 represents the leader’s latency consumption:

where represents the downlink bandwidth, represents the signal power of UAV L, and is the downlink channel power gain from the UAV L to UAV i.

Therefore, each of these simplified sub-problems can be solved by Algorithm 1.
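To make the structure of Algorithm 1 more concrete, the following is a simplified continuous ant-colony-style search in plain Python. It is a sketch under stated assumptions rather than the authors' implementation: `follower_cost` is an illustrative penalized latency for a single follower (Shannon-type uplink rate, `kappa * cycles * f^2` computation energy, a linear penalty on exceeding the energy budget), and the parameter names (`rho` for evaporation, `p0` for the local/global transfer threshold) and all default values are hypothetical.

```python
import math
import random


def follower_cost(p, f, *, data_bits=1e6, cycles_per_bit=20.0, grad_bits=1e5,
                  bandwidth=1e6, gain=1e-7, noise_psd=1e-14, kappa=1e-28,
                  e_max=0.02, penalty=1e3):
    """Penalized latency (s) of one follower for transmit power p (W) and CPU frequency f (Hz)."""
    rate = bandwidth * math.log2(1.0 + p * gain / (noise_psd * bandwidth))
    t_cmp = cycles_per_bit * data_bits / f
    t_up = grad_bits / rate
    energy = kappa * cycles_per_bit * data_bits * f ** 2 + p * t_up
    return t_cmp + t_up + penalty * max(0.0, energy - e_max)


def aco_minimize(cost, bounds, n_ants=20, n_iter=100, rho=0.8, p0=0.2, seed=0):
    """Minimize a positive cost over box bounds; returns (best_x, best_cost, history)."""
    rng = random.Random(seed)
    ants = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_ants)]
    costs = [cost(*a) for a in ants]
    tau = [1.0 / c for c in costs]                  # pheromone: larger for lower cost
    best_i = min(range(n_ants), key=costs.__getitem__)
    best_x, best_c = list(ants[best_i]), costs[best_i]
    history = [best_c]
    for k in range(1, n_iter + 1):
        step = 1.0 / k                              # local-search radius shrinks over time
        tau_best = max(tau)
        for i in range(n_ants):
            prob = (tau_best - tau[i]) / tau_best   # transition probability
            radius = step if prob < p0 else 1.0     # local search near the best, else global
            cand = [x + (rng.random() - 0.5) * radius * (hi - lo)
                    for x, (lo, hi) in zip(ants[i], bounds)]
            cand = [min(max(x, lo), hi) for x, (lo, hi) in zip(cand, bounds)]
            c = cost(*cand)
            if c < costs[i]:                        # move only if the penalized cost improves
                ants[i], costs[i] = cand, c
            tau[i] = (1.0 - rho) * tau[i] + 1.0 / costs[i]   # evaporate, then deposit
            if costs[i] < best_c:
                best_x, best_c = list(ants[i]), costs[i]
        history.append(best_c)
    return best_x, best_c, history


if __name__ == "__main__":
    bounds = [(0.01, 1.0), (1e8, 2e9)]              # transmit power (W), CPU frequency (Hz)
    x, c, _ = aco_minimize(follower_cost, bounds)
    print(x, c)
```

The penalty term plays the role of the restriction check in Algorithm 1: a candidate that exceeds the energy budget receives a much larger cost, so ants do not move to it. The same routine could be applied to a leader-side cost with its own bounds.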

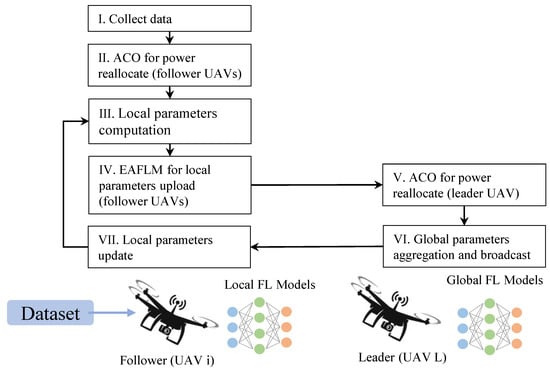

3.3. Overall Architecture

The overall architecture is shown in Figure 2.

Figure 2. Overall architecture of the system.

4. Results and Discussion

In this section, we verify the validity of our EAFLM scheme through numerical results. Specifically, we used the TensorFlow framework to construct a leader–follower UAVs-FL model comprising a leader UAV and nine follower UAVs. The follower UAVs are distributed on a circle centered on the leader UAV. The UAVs maintain the same constant speed and a fixed distance from each other while moving, so their maneuvering power can be treated as approximately the same constant. Likewise, the channel power gain between the leader UAV and each follower UAV during FL can be treated as approximately constant. We test the performance of the proposed method on the MNIST handwritten digit dataset. Among them, of the data are retained as the test set of the global model, and a three-layer MLP (multi-layer perceptron) neural network is used as the classification model for recognizing handwritten digits. The simulation parameters are listed in Table 1 [25]:

Table 1. Simulation parameters.

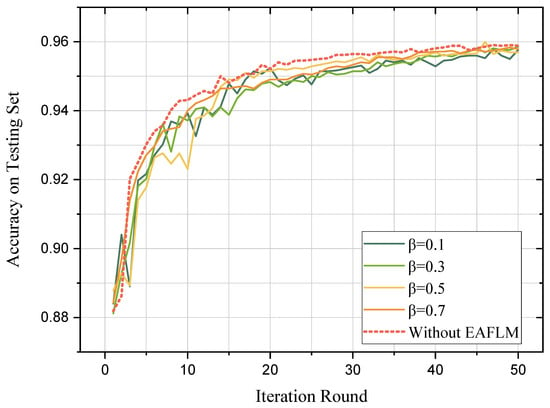

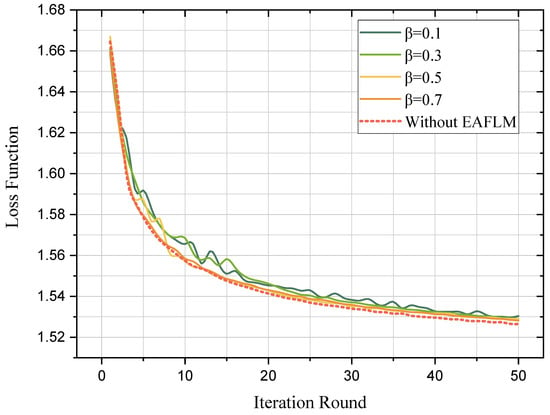

First, the accuracy and loss function of the classification results were evaluated, as shown in Figure 3 and Figure 4, respectively. The experimental results show that the proposed EAFLM-ACO method achieves FL convergence within 50 rounds.

Figure 3. Convergence of accuracy.

Figure 4. Convergence of loss function.

It can be observed that as the participation rate decreases (in theory, fewer follower UAVs participate in each round of federated learning), the accuracy and loss curves fluctuate more before reaching convergence. The EAFLM strategy introduces some instability into the federated learning model because the set of follower UAVs participating in communication is not fixed from round to round. However, after 50 rounds, all five models with different participation rates reached convergence, and their accuracies were similar to each other. Therefore, it can be concluded that the proposed method reduces the scale of communication while preserving the training results.

However, it should be noted that this paper focuses on communication in the federated learning framework; the model structure and optimization algorithm are not studied in depth. Therefore, the causes of, and remedies for, overfitting and other problems observed in the experiments are beyond the scope of this paper. Similarly, experimental indexes such as accuracy serve only to compare the performance of the various methods rather than to evaluate the merits and demerits of the model. Because the comparative experiments adopt the same configuration for all methods, these indexes remain valid for comparison.

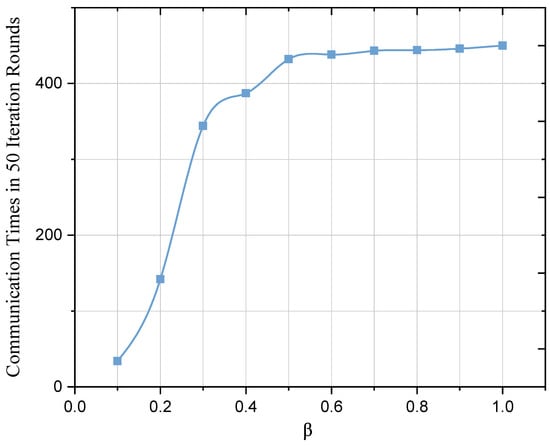

Because of the EAFLM strategy, after performing the gradient calculation, UAV i makes an additional local check to see whether it meets the conditions for skipping the round. If so, UAV i skips this round of communication. The results in Figure 5 show that the communication times change markedly when the participation rate is within the range of 0.1 to 0.3. When the participation rate is greater than 0.3, the slope of the curve decreases gradually. In other words, when the participation rate is below 0.3, the communication times of the UAVs-FL model are significantly compressed compared to the case without communication compression.

Figure 5. Communication times for different values of the participation rate.

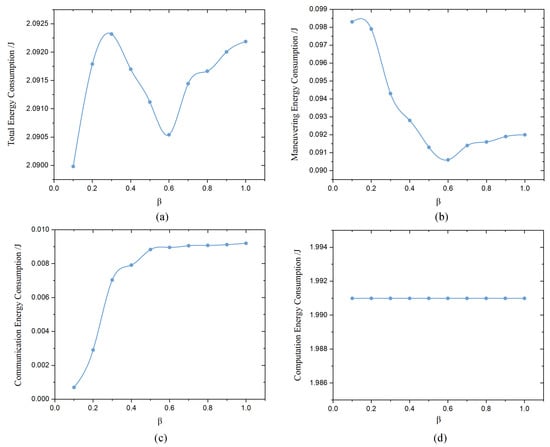

Figure 6 illustrates the average energy consumption of each follower UAV over the 50 iterations of a federated learning task. Specifically, Figure 6a shows the total energy consumption, whereas Figure 6b–d show the maneuvering, communication, and computation energy consumption, respectively. Because our optimization objective is to minimize the latency of federated learning, which directly affects the flight duration of the UAV fleet, the maneuvering energy consumption fluctuates with the participation rate. With our EAFLM strategy, which compresses communication times among UAVs, the communication energy consumption becomes proportional to the average communication times, because the energy consumed per unit time of communication is constrained to a similar level. Consequently, the average communication energy consumption decreases as the communication times are significantly compressed. Regarding computation energy consumption, each UAV must perform the local gradient computation and update steps in every iteration, so the computation energy remains at a consistent level regardless of the participation rate. However, it should be noted that this variation in energy consumption does not by itself show that the method is energy efficient.

Figure 6. Average energy consumption of follower UAVs for different values of the participation rate. (a) Total energy consumption. (b) Maneuvering energy consumption. (c) Communication energy consumption. (d) Computation energy consumption.

We also compared our proposed method with a similar existing study in Table 2. The NOMA (non-orthogonal multiple access) method is an FL framework designed for UAVs. Its optimization goal is also to achieve the minimum delay for each FL round, but it uses the uplink transmission durations, downlink broadcasting duration, and CPU frequency as controllable variables. Under the same environmental parameters, our method achieved a improvement in reducing latency compared to NOMA. This indicates that our optimization problem, which uses UAVs’ CPU frequency and communication power as optimization variables, holds promise for further investigation.

Table 2. Performance comparison.

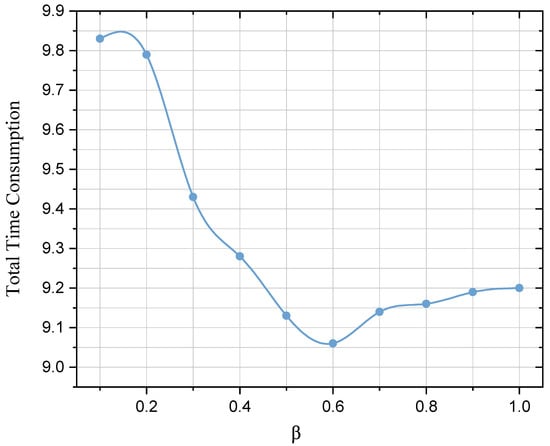

Finally, we analyze the total time required for the FL model to reach convergence, as shown in Figure 7. In the case without communication compression, the total time consumption is much lower than in the case where the communication times are greatly compressed. This is because highly compressed communication is likely to produce rounds in which no follower UAV meets the conditions to participate in the communication. The UAVs then spend the waiting interval idle while the leader UAV checks whether this is such an extreme case, after which the leader UAV randomly selects a follower UAV to upload its parameters; this waiting time is wasted. It is also worth mentioning that the total communication time is significantly reduced when the participation rate is around 0.6. This implies that an appropriate degree of communication compression is of substantial significance for latency control.
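The trade-off described above can be illustrated with a toy model. It is not the simulation behind Figure 7, and every modeling choice is an assumption made for illustration: each follower is taken to participate independently with the same probability, a round with participants lasts as long as its slowest participant plus the leader's time, and a round without participants costs the waiting interval plus the upload of one randomly selected follower. The function name and parameters are hypothetical.

```python
from typing import Sequence


def expected_round_time(latencies: Sequence[float], participation: float,
                        leader_time: float, wait: float) -> float:
    """Expected duration of one round under independent participation (toy model)."""
    ordered = sorted(latencies, reverse=True)       # slowest follower first
    skip = 1.0 - participation
    expected = 0.0
    for j, lat in enumerate(ordered):
        # Follower j is the slowest participant: it uploads and all j slower ones skip.
        expected += lat * participation * skip ** j
    p_none = skip ** len(ordered)                   # nobody meets the upload condition
    fallback = wait + sum(ordered) / len(ordered)   # wait, then one random follower uploads
    return expected + p_none * fallback + leader_time


if __name__ == "__main__":
    lats = [0.10, 0.12, 0.15, 0.20, 0.30]           # illustrative follower latencies (s)
    for r in (0.1, 0.3, 0.6, 1.0):
        print(r, expected_round_time(lats, r, leader_time=0.05, wait=1.0))
```

In this toy model, a low participation probability makes empty rounds and their waiting interval frequent, while full participation always pays for the slowest follower, so the expected round time can be smallest at an intermediate participation level, depending on the chosen numbers.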

Figure 7.

Total time consumption of 50 iteration rounds for different values of the communication compression parameter.
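The extreme case described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the fixed `round_time_s`, and the `wait_penalty_s` charged when the leader has to fall back to a random follower are all hypothetical.

```python
import random


def select_uploaders(passes_check, rng):
    """Pick the followers that upload in one round.

    passes_check maps a follower id to the result of that follower's
    self-inspection. Returns (uploader_ids, fallback_used).
    """
    uploaders = sorted(uid for uid, ok in passes_check.items() if ok)
    if uploaders:
        return uploaders, False
    # Extreme case: no follower qualified, so the leader picks one at random.
    return [rng.choice(sorted(passes_check))], True


def total_time(rounds, round_time_s, wait_penalty_s, rng):
    """Sum round durations, adding a waiting penalty for every fallback round."""
    total = 0.0
    for passes_check in rounds:
        _, fallback_used = select_uploaders(passes_check, rng)
        total += round_time_s + (wait_penalty_s if fallback_used else 0.0)
    return total


if __name__ == "__main__":
    rng = random.Random(0)
    rounds = [
        {1: True, 2: False, 3: True},    # normal round
        {1: False, 2: False, 3: False},  # nobody qualifies: fallback round
    ]
    print(total_time(rounds, round_time_s=1.0, wait_penalty_s=0.5, rng=rng))
```

In this toy run, the second round triggers the fallback, so the total is two round times plus one waiting penalty. The more aggressive the compression, the more often such rounds would occur, which matches the trend the text attributes to Figure 7.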

In summary, this method effectively lowers the latency of an individual round of FL by compressing communication times and reallocating transmit power and CPU frequency.
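To make the trade-off between transmit power and CPU frequency concrete, the sketch below searches a small grid for the pair with the lowest round latency under an energy budget. It is only an illustration: the exhaustive grid search stands in for ACO, the latency and energy formulas are common textbook forms rather than the paper's own model, and every constant is an assumed value.

```python
import itertools
import math

# All constants are illustrative assumptions, not values from the paper.
CYCLES = 2e8            # CPU cycles needed for one local update
BITS = 1e6              # size of the uploaded gradient in bits
BANDWIDTH_HZ = 1e6      # uplink bandwidth
GAIN_OVER_NOISE = 1e3   # channel gain divided by noise power (1/W)
KAPPA = 1e-28           # effective switched-capacitance coefficient


def upload_time(power_w):
    """Upload duration at the Shannon rate for the given transmit power."""
    rate = BANDWIDTH_HZ * math.log2(1.0 + power_w * GAIN_OVER_NOISE)
    return BITS / rate


def latency(freq_hz, power_w):
    """Latency of one round: local computation plus upload."""
    return CYCLES / freq_hz + upload_time(power_w)


def energy(freq_hz, power_w):
    """Energy of one round: computation plus communication."""
    return KAPPA * CYCLES * freq_hz ** 2 + power_w * upload_time(power_w)


def grid_search(freqs, powers, budget_j):
    """Return (latency, freq, power) of the fastest feasible pair, or None."""
    feasible = [
        (latency(f, p), f, p)
        for f, p in itertools.product(freqs, powers)
        if energy(f, p) <= budget_j
    ]
    return min(feasible) if feasible else None


if __name__ == "__main__":
    freqs = [0.5e9, 1e9, 1.5e9, 2e9]
    powers = [0.1, 0.2, 0.5, 1.0]
    print(grid_search(freqs, powers, budget_j=0.1))
```

Without the energy budget, the fastest choice would simply be the highest frequency and power. The budget is what forces a trade-off between the two variables, which is why a search procedure is needed at all.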

5. Conclusions

In this paper, we construct a UAV swarm FL model with a leader–follower architecture. On this basis, we formulate an optimization problem for latency-sensitive tasks in UAVs. We propose the EAFLM-ACO method, whose main goal is to achieve the shortest possible communication latency. The method significantly compresses communication times among UAVs, ensures low latency in FL iterations, and optimizes the allocation of UAV communication resources, while also taking model accuracy into account.

EAFLM-ACO significantly reduces communication times between UAVs while maintaining a relatively low impact on accuracy. After the follower UAVs train the local model, they check whether they meet the conditions for participating in this round of communication according to the self-inspection conditions. If they meet the conditions, the gradient will be uploaded to the leader UAV. This selective gradient exchange approach also mitigates the risk of disclosing private data. At the same time, the allocation of the transmit power and CPU frequency is adjusted locally to achieve the shortest latency.
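The follower-side step described above can be sketched as follows. The self-inspection rule used here is a hypothetical stand-in: a follower uploads only if its gradient has changed enough since its last upload, relative to a `threshold`. The paper's actual condition is defined in its method section and is not reproduced here.

```python
def squared_norm(vec):
    """Squared Euclidean norm of a list of floats."""
    return sum(x * x for x in vec)


def should_upload(grad, last_uploaded, threshold):
    """Hypothetical self-inspection: has the gradient changed enough?

    With threshold = 0 the check always passes, which corresponds to
    no communication compression.
    """
    change = squared_norm([g - h for g, h in zip(grad, last_uploaded)])
    return change >= threshold * squared_norm(grad)


def follower_round(grad, last_uploaded, threshold):
    """Return (payload, new_last_uploaded); payload is None if the upload is skipped."""
    if should_upload(grad, last_uploaded, threshold):
        return list(grad), list(grad)
    return None, last_uploaded


if __name__ == "__main__":
    last = [0.0, 0.0]
    for grad in ([1.0, 0.0], [0.9, 0.1], [0.0, 1.0]):
        payload, last = follower_round(grad, last, threshold=0.5)
        print(grad, "uploaded" if payload is not None else "skipped")
```

A larger `threshold` makes followers skip more rounds, which is the sense in which communication is compressed in this sketch.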

The effectiveness of the method is further verified by experiments. As the degree of communication compression increases, the number of rounds the FL model needs to converge remains nearly the same, and the accuracy and loss of the machine learning task do not differ significantly from those without compression. To minimize latency, the transmit power and CPU frequency are reallocated. The latency of each FL iteration is reduced compared with other similar methods.

As for future work, if the UAVs perform computationally heavier tasks or the amount of local data grows further, the additional gradient check at the end of each round of computation will further increase the computation time, which may affect the energy allocation of the whole UAV swarm. To relieve this local computation pressure, a “check-free” mechanism could be devised to reduce the computation caused by gradient checking. In future work, we will conduct further research on this “check-free” strategy.

Author Contributions

Conceptualization, L.Z. and W.W.; methodology, L.Z. and W.W.; software, W.W.; validation, L.Z., W.W. and W.Z.; formal analysis, W.W.; investigation, W.W. and W.Z.; resources, L.Z.; writing—original draft preparation, W.W.; writing—review and editing, W.Z.; visualization, W.Z.; supervision, L.Z.; project administration, L.Z.; funding acquisition, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| UAVs | Unmanned Aerial Vehicle swarms |
| FL | Federated Learning |
| EAFLM | Efficient Asynchronous Federated Learning Mechanism |
| ACO | Ant Colony Optimization |

References

- Mohsan, S.A.H.; Khan, M.A.; Noor, F.; Ullah, I.; Alsharif, M.H. Towards the Unmanned Aerial Vehicles (UAVs): A Comprehensive Review. Drones 2022, 6, 147.

- Liu, C.; Szirányi, T. Real-time human detection and gesture recognition for on-board UAV rescue. Sensors 2021, 21, 2180.

- Shafique, A.; Mehmood, A.; Elhadef, M. Detecting signal spoofing attack in uavs using machine learning models. IEEE Access 2021, 9, 93803–93815.

- Ficapal, A.; Mutis, I. Framework for the Detection, Diagnosis, and Evaluation of Thermal Bridges Using Infrared Thermography and Unmanned Aerial Vehicles. Buildings 2019, 9, 179.

- Everaerts, J. The use of unmanned aerial vehicles (UAVs) for remote sensing and mapping. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1187–1192.

- Messous, M.A.; Arfaoui, A.; Alioua, A.; Senouci, S.M. A Sequential Game Approach for Computation-Offloading in an UAV Network. In Proceedings of the 2017 IEEE Global Communications Conference, Singapore, 4–8 December 2017.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Aguera y Arcas, B. Communication-Efficient Learning of Deep Networks from Decentralized Data. arXiv 2016, arXiv:1602.05629.

- Alwis, C.D.; Kalla, A.; Pham, Q.V.; Kumar, P.; Dev, K.; Hwang, W.J.; Liyanage, M. Survey on 6G Frontiers: Trends, Applications, Requirements, Technologies and Future Research. IEEE Open J. Commun. Soc. 2021, 2, 836–886.

- You, X.; Wang, C.X.; Huang, J.; Gao, X.; Zhang, Z.; Wang, M.; Huang, Y.; Zhang, C.; Jiang, Y.; Wang, J.; et al. Towards 6G wireless communication networks: Vision, enabling technologies, and new paradigm shifts. Sci. China Inf. Sci. 2021, 64, 110301.

- Dao, N.N.; Pham, Q.V.; Tu, N.H.; Thanh, T.T.; Bao, V.N.Q.; Lakew, D.S.; Cho, S. Survey on Aerial Radio Access Networks: Toward a Comprehensive 6G Access Infrastructure. IEEE Commun. Surv. Tutor. 2021, 23, 1193–1225.

- Zeng, T.; Semiari, O.; Mozaffari, M.; Chen, M.; Saad, W.; Bennis, M. Federated Learning in the Sky: Joint Power Allocation and Scheduling with UAV Swarms. In Proceedings of the 2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020.

- Yılmaz, A.; Toker, C. Air-to-Air Channel Model for UAV Communications. In Proceedings of the 2022 30th Signal Processing and Communications Applications Conference (SIU), Safranbolu, Turkey, 15–18 May 2022; pp. 1–4.

- Pham, Q.V.; Zeng, M.; Ruby, R.; Huynh-The, T.; Hwang, W.J. UAV Communications for Sustainable Federated Learning. IEEE Trans. Veh. Technol. 2021, 70, 3944–3948.

- Fu, X.; Pan, J.; Gao, X.; Li, B.; Chen, J.; Zhang, K. Task Allocation Method for Multi-UAV Teams with Limited Communication Bandwidth. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18–21 November 2018; pp. 1874–1878.

- Li, J.; Li, S.; Xue, C. Resource Optimization for Multi-Unmanned Aerial Vehicle Formation Communication Based on an Improved Deep Q-Network. Sensors 2023, 23, 2667.

- Sharma, I.; Sharma, A.; Gupta, S.K. Asynchronous and Synchronous Federated Learning-based UAVs. In Proceedings of the 2023 Third International Symposium on Instrumentation, Control, Artificial Intelligence, and Robotics (ICA-SYMP), Bangkok, Thailand, 18–20 January 2023; pp. 105–109.

- Do, Q.V.; Pham, Q.V.; Hwang, W.J. Deep Reinforcement Learning for Energy-Efficient Federated Learning in UAV-Enabled Wireless Powered Networks. IEEE Commun. Lett. 2022, 26, 99–103.

- Li, T.; Zhang, J.; Obaidat, M.S.; Lin, C.; Lin, Y.; Shen, Y.; Ma, J. Energy-Efficient and Secure Communication Toward UAV Networks. IEEE Internet Things J. 2022, 9, 10061–10076.

- Mowla, N.I.; Tran, N.H.; Doh, I.; Chae, K. Federated Learning-Based Cognitive Detection of Jamming Attack in Flying Ad-Hoc Network. IEEE Access 2020, 8, 4338–4350.

- Liu, X.; Deng, Y.; Mahmoodi, T. Energy Efficient User Scheduling for Hybrid Split and Federated Learning in Wireless UAV Networks. In Proceedings of the ICC 2022—IEEE International Conference on Communications, Seoul, Republic of Korea, 16–20 May 2022; pp. 1–6.

- Shiri, H.; Park, J.; Bennis, M. Communication-Efficient Massive UAV Online Path Control: Federated Learning Meets Mean-Field Game Theory. IEEE Trans. Commun. 2020, 68, 6840–6857.

- Gupta, L.; Jain, R.; Vaszkun, G. Survey of Important Issues in UAV Communication Networks. IEEE Commun. Surv. Tutor. 2016, 18, 1123–1152.

- Wang, S. Research on Parameter-Exchanging Optimizing Mechanism in Distributed Deep Learning. Master’s Thesis, Huazhong University of Science and Technology, Wuhan, China, 2015.

- Dhuheir, M.; Baccour, E.; Erbad, A.; Al-Obaidi, S.S.; Hamdi, M. Deep Reinforcement Learning for Trajectory Path Planning and Distributed Inference in Resource-Constrained UAV Swarms. IEEE Internet Things J. 2022, 10, 8185–8201.

- Song, Y.; Wang, T.; Wu, Y.; Qian, L.P.; Shi, Z. Non-orthogonal Multiple Access assisted Federated Learning for UAV Swarms: An Approach of Latency Minimization. In Proceedings of the 2021 International Wireless Communications and Mobile Computing (IWCMC), Harbin City, China, 28 June–2 July 2021.

- Liu, J.; Zhang, Q. Offloading Schemes in Mobile Edge Computing for Ultra-Reliable Low Latency Communications. IEEE Access 2018, 6, 12825–12837.

- Lin, Y.; Han, S.; Mao, H.; Wang, Y.; Dally, W.J. Deep Gradient Compression: Reducing the Communication Bandwidth for Distributed Training. arXiv 2017, arXiv:1712.01887.

- Chen, T.; Giannakis, G.B.; Sun, T.; Yin, W. LAG: Lazily Aggregated Gradient for Communication-Efficient Distributed Learning. In Proceedings of the 2018 Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018.

- Chen, T.; Sun, Y.; Yin, W. LASG: Lazily Aggregated Stochastic Gradients for Communication-Efficient Distributed Learning. arXiv 2020, arXiv:2002.11360.

- Lu, X.; Liao, Y.; Liò, P.; Hui, P. An Asynchronous Federated Learning Mechanism for Edge Network Computing. J. Comput. Res. Dev. 2020, 57, 2571.

- Konečný, J.; McMahan, H.B.; Ramage, D.; Richtárik, P. Federated Optimization: Distributed Machine Learning for On-Device Intelligence. arXiv 2016, arXiv:1610.02527.

- Ren, J.; Yu, G.; Ding, G. Accelerating DNN Training in Wireless Federated Edge Learning Systems. IEEE J. Sel. Areas Commun. 2021, 39, 219–232.

- Yang, J.M.; Chen, Y.P.; Horng, J.T.; Kao, C.Y. Applying family competition to evolution strategies for constrained optimization. In Proceedings of the Evolutionary Programming VI: 6th International Conference, EP97, Indianapolis, IN, USA, 13–16 April 1997; Proceedings 6. Springer: Berlin/Heidelberg, Germany, 1997; pp. 201–211.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).