Edge-Guided Camouflaged Object Detection via Multi-Level Feature Integration

Abstract

1. Introduction

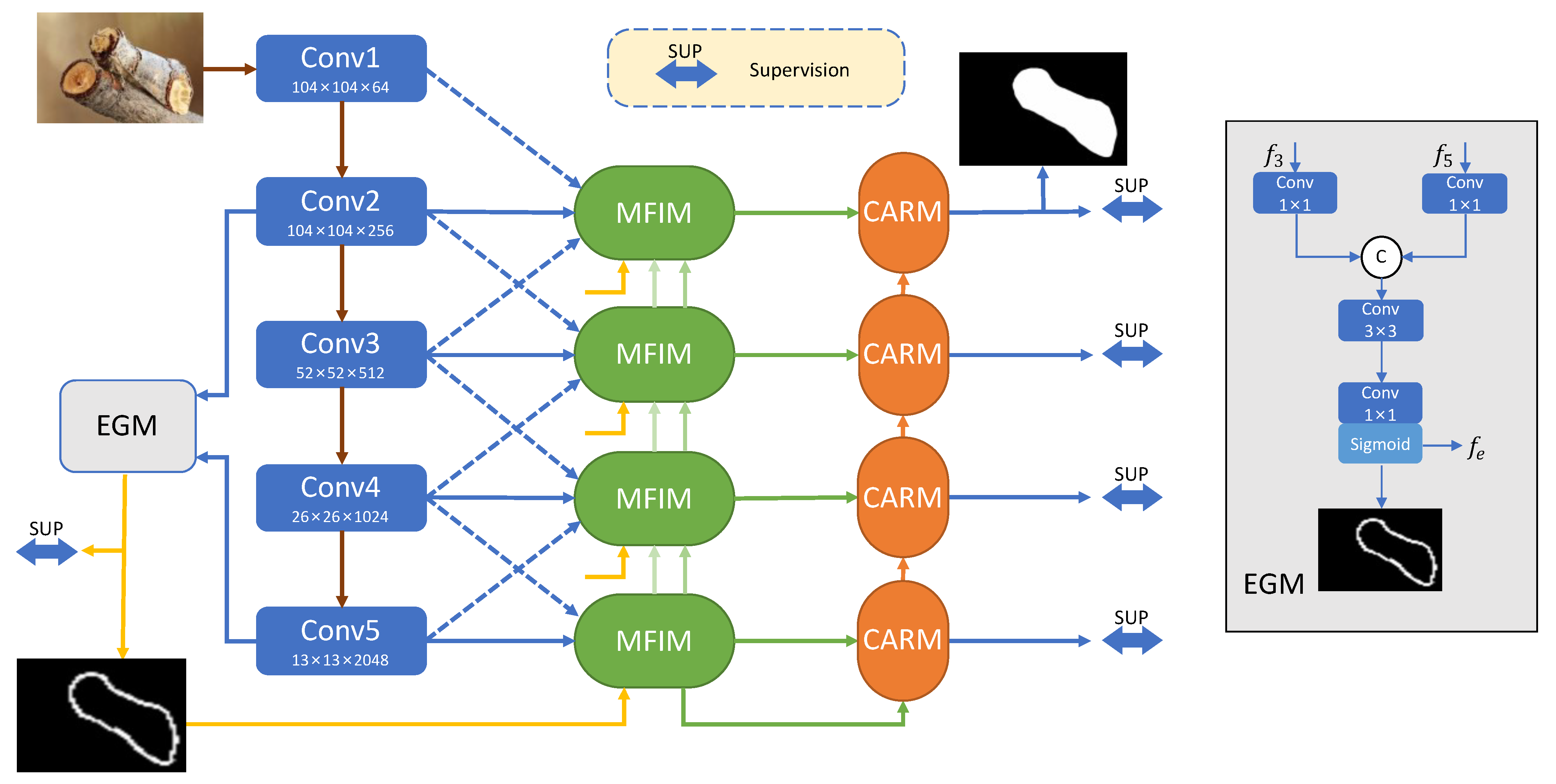

- We propose a novel network, MFNet, to investigate the effectiveness of adjacent-layer feature integration in the camouflaged object detection (COD) task, and we confirm that the interaction of adjacent-layer features is a sound way to fully capture contextual information;

- We propose an edge guidance module that explicitly learns object edge representations and guides the model to discriminate the edges of camouflaged objects effectively;

- We propose a multi-level feature integration module to efficiently extract and integrate global semantic information and local detail information in multi-scale features;

- We propose a context aggregation refinement module to aggregate and refine cross-layer features via the attention mechanism, atrous convolution, and asymmetric convolution.
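The atrous and asymmetric convolutions named in the last contribution can be illustrated independently of the network. The following is a minimal pure-Python sketch, not the CARM implementation: `conv2d` and `effective_size` are hypothetical helper names, and the image and kernel values are arbitrary toy data. It shows that a 3×3 kernel with dilation rate 2 spans a 5×5 window, and that a 1×3 convolution followed by a 3×1 convolution equals a single 3×3 convolution whose kernel is their outer product.

```python
def conv2d(image, kernel, dilation=1):
    """Valid cross-correlation of a 2D list with a 2D kernel at a given dilation rate."""
    kh, kw = len(kernel), len(kernel[0])
    # Extent covered by the kernel once (dilation - 1) gaps separate its taps.
    eh = (kh - 1) * dilation + 1
    ew = (kw - 1) * dilation + 1
    out_h = len(image) - eh + 1
    out_w = len(image[0]) - ew + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    acc += kernel[a][b] * image[i + a * dilation][j + b * dilation]
            row.append(acc)
        out.append(row)
    return out


def effective_size(k, dilation):
    """Side length of the window spanned by a k-tap kernel at this dilation rate."""
    return (k - 1) * dilation + 1


# Toy 7x7 input (arbitrary values, for illustration only).
image = [[float((3 * r + 5 * c) % 7) for c in range(7)] for r in range(7)]

# Asymmetric convolution: a 1x3 kernel followed by a 3x1 kernel.
row_k = [[1.0, 2.0, 1.0]]
col_k = [[1.0], [0.0], [-1.0]]
asym = conv2d(conv2d(image, row_k), col_k)

# Equivalent full 3x3 kernel: outer product of the column and row kernels.
full_k = [[c[0] * r for r in row_k[0]] for c in col_k]
full = conv2d(image, full_k)

# Atrous convolution: the same 3x3 kernel with dilation rate 2.
atrous = conv2d(image, full_k, dilation=2)
```

The asymmetric pair uses 6 weights instead of 9 for the same 3×3 footprint, at the cost of representing only separable kernels. The atrous variant keeps the 9 weights but enlarges the window from 3×3 to 5×5, which is why the 7×7 input yields a 3×3 output here.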

2. Related Work

2.1. Camouflaged Object Detection

2.2. Multi-Level Feature Fusion

2.3. Boundary-Aware Learning

3. Proposed Method

3.1. Edge Generation Module

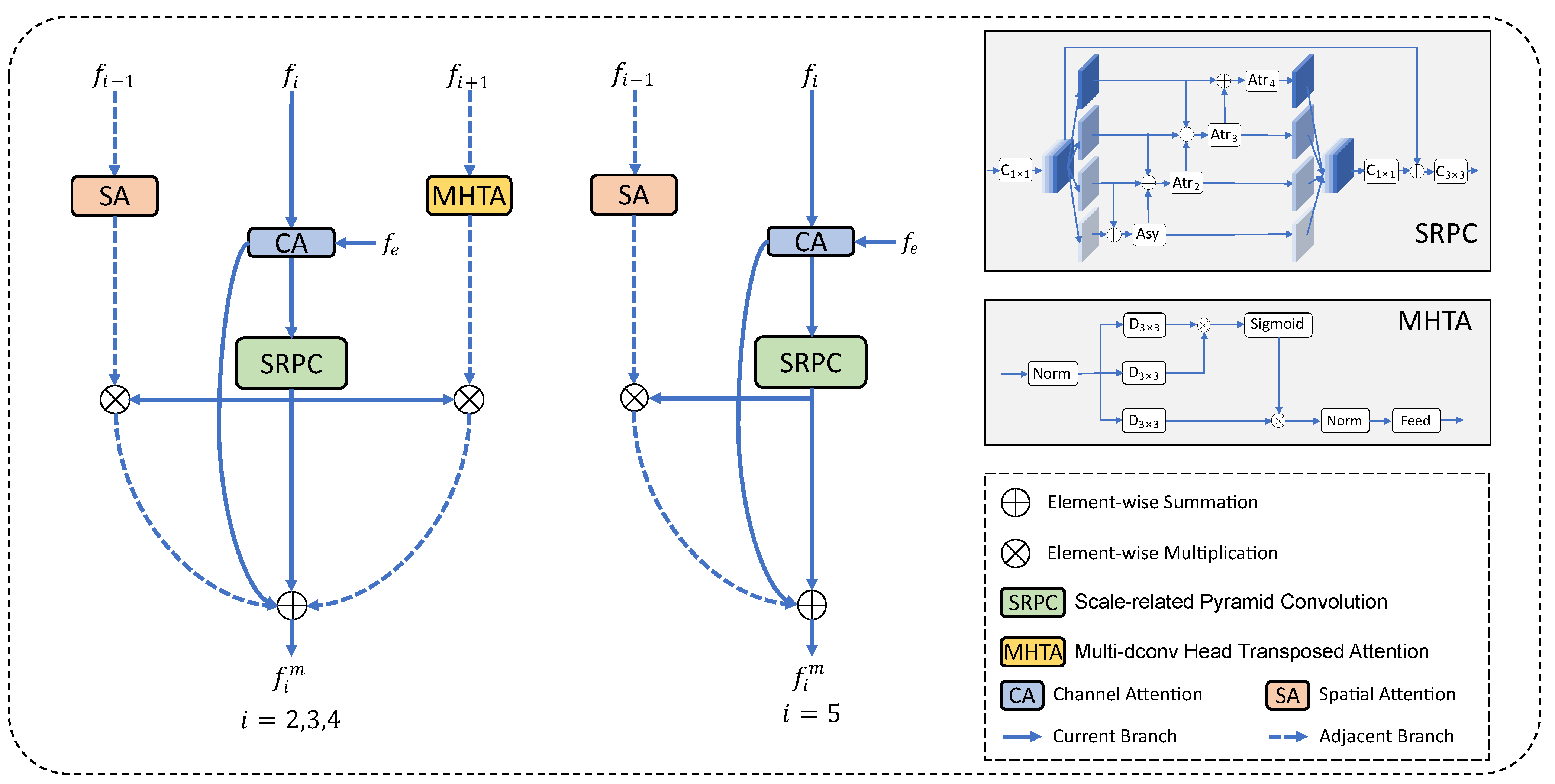

3.2. Multi-Level Feature Integration Module

3.2.1. Current Branch

3.2.2. Adjacent Branch

3.2.3. Branches’ Integration

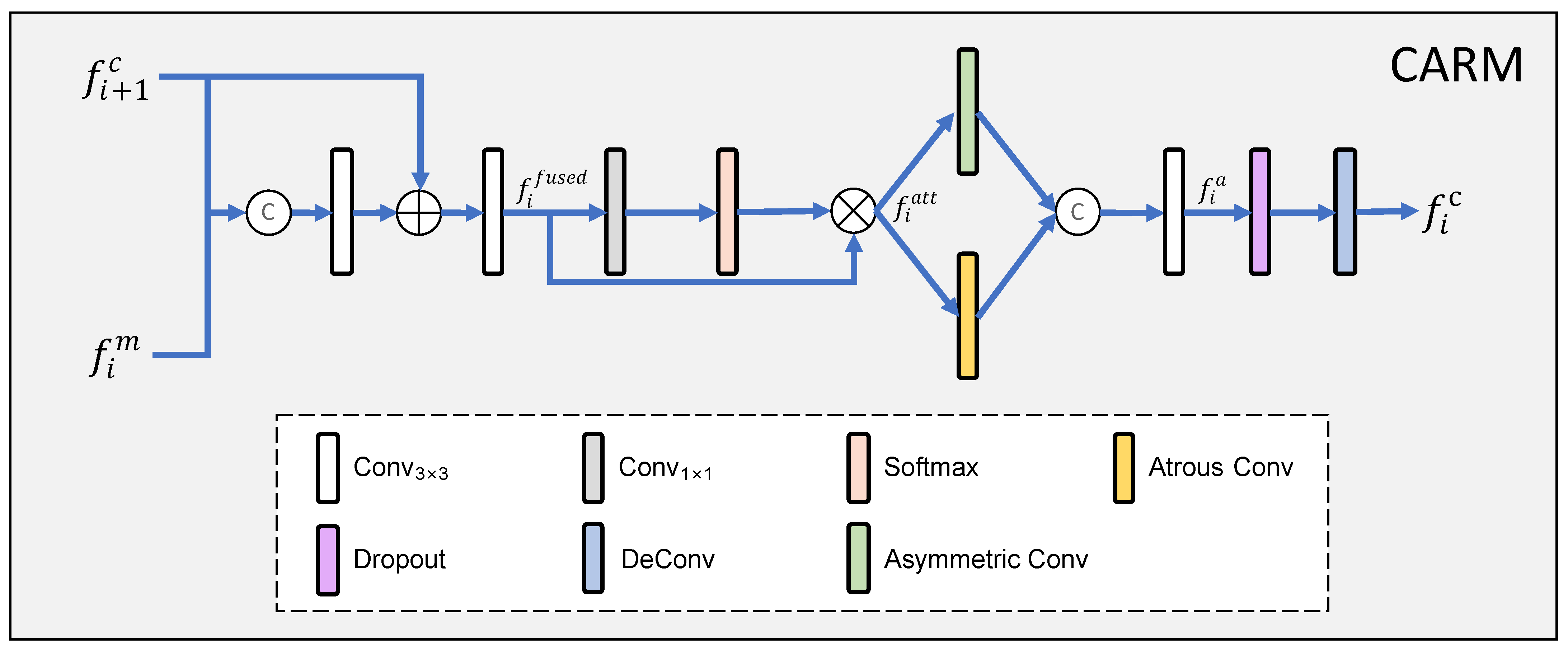

3.3. Context Aggregation Refinement Module

3.4. Loss Function

4. Experiments

4.1. Implementation Details

4.2. Datasets

4.3. Evaluation Metrics

4.3.1. Structure Measure ($S_\alpha$)

4.3.2. E-Measure ($E_\phi$)

4.3.3. Weighted F-Measure ($F_\beta^w$)

4.3.4. Mean Absolute Error ($M$)
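Mean absolute error is commonly defined as the average per-pixel absolute difference between a predicted map and the ground-truth mask, both scaled to [0, 1]; lower is better. A minimal sketch under that assumption follows. The function name `mean_absolute_error` and the 2×2 toy maps are hypothetical and are not taken from the paper's evaluation code.

```python
def mean_absolute_error(pred, gt):
    """Average per-pixel absolute difference between two equally sized 2D maps."""
    if len(pred) != len(gt) or any(len(p) != len(g) for p, g in zip(pred, gt)):
        raise ValueError("prediction and ground truth must have the same shape")
    total = sum(
        abs(p - g)
        for pred_row, gt_row in zip(pred, gt)
        for p, g in zip(pred_row, gt_row)
    )
    count = sum(len(row) for row in pred)
    return total / count


# Toy prediction (values in [0, 1]) and binary ground-truth mask.
pred = [[0.9, 0.2], [0.1, 0.8]]
gt = [[1.0, 0.0], [0.0, 1.0]]
mae = mean_absolute_error(pred, gt)  # approximately 0.15
```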

4.4. Comparison with SOTA Methods

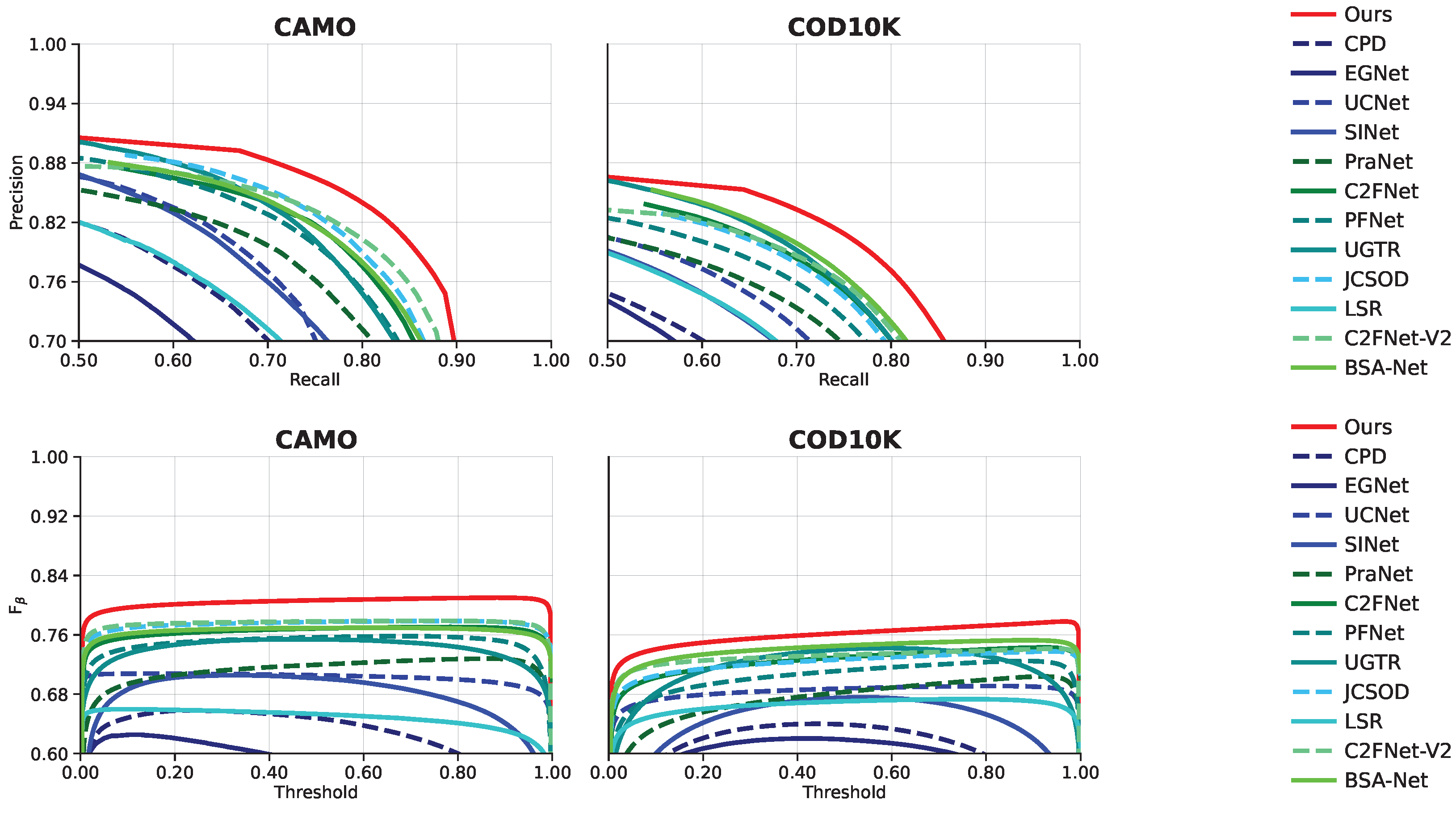

4.4.1. Quantitative Evaluation

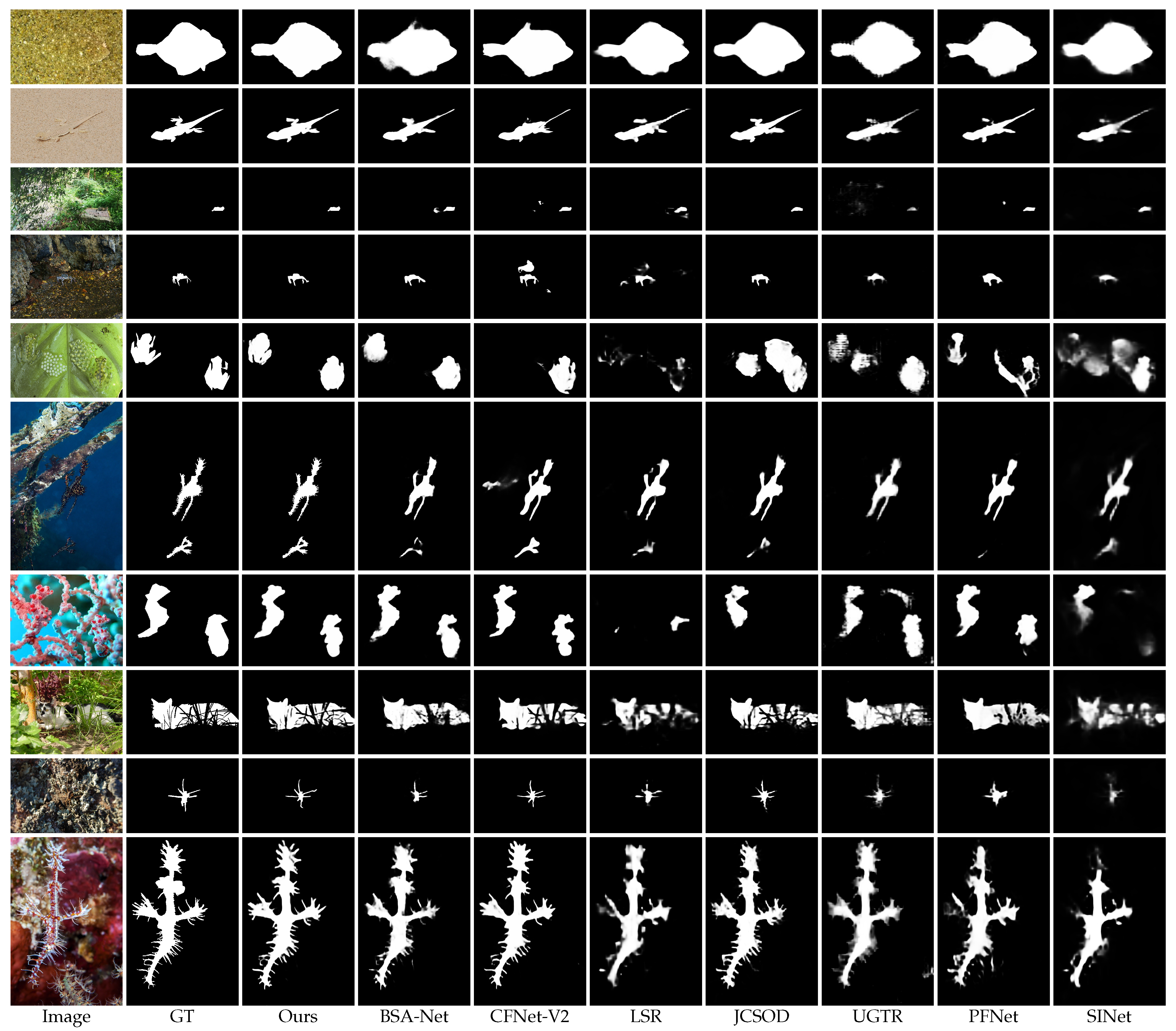

4.4.2. Qualitative Evaluation

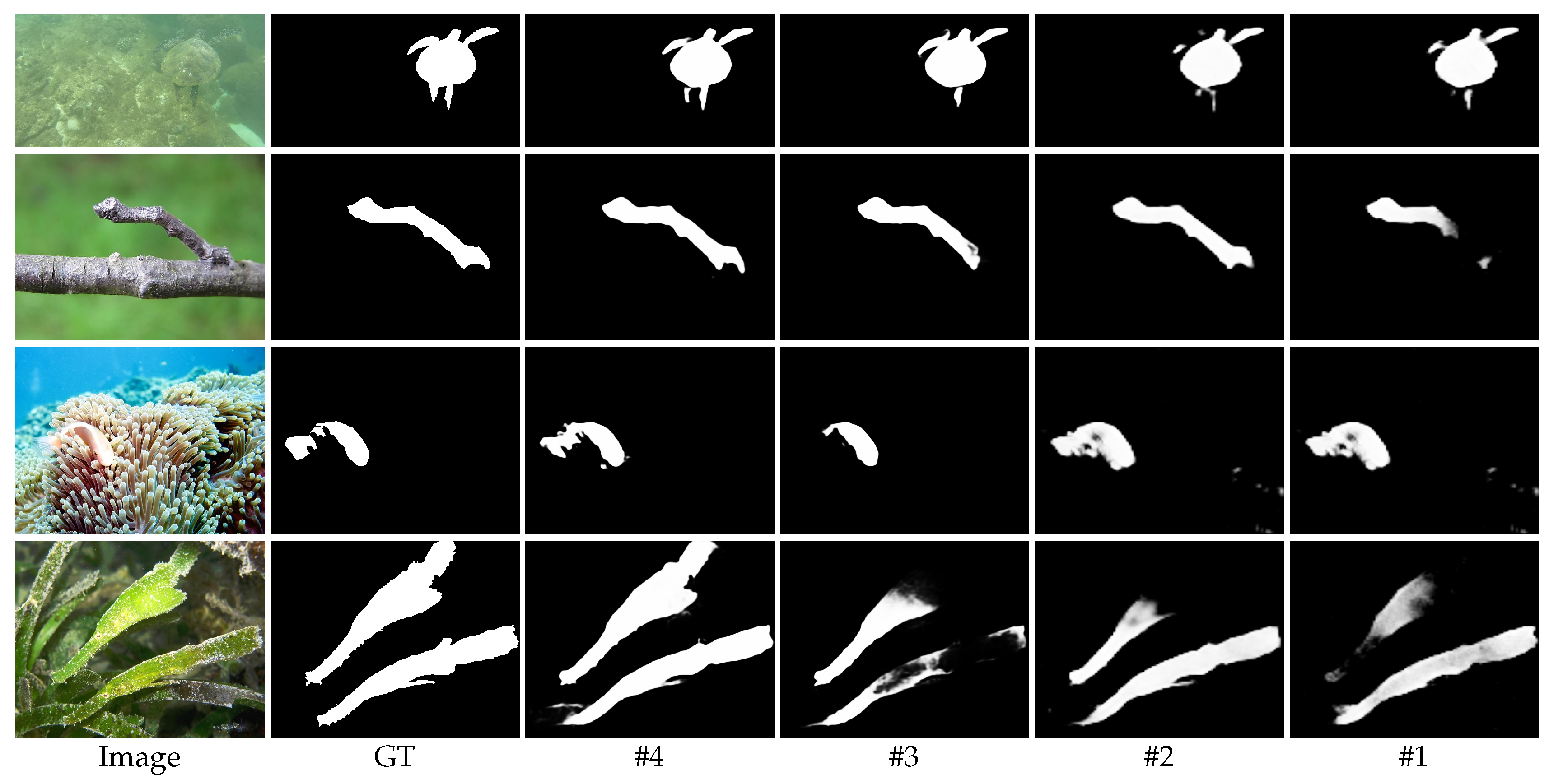

4.5. Ablation Analysis

4.5.1. Effect of Different Modules

4.5.2. Effect of Different Levels of Features as Input in EGM

4.5.3. Effect of Different Branches in MFIM

4.5.4. Effect of Atrous Convolution and Asymmetric Convolution in CARM

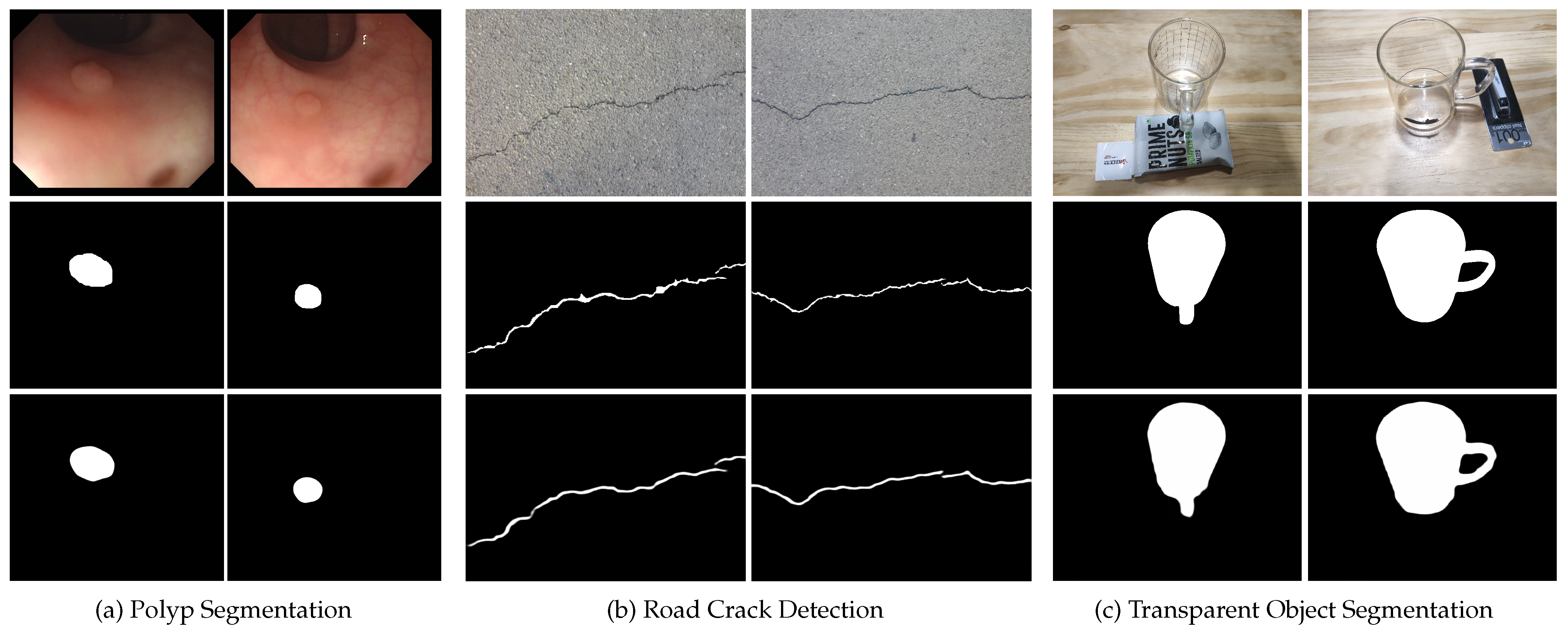

5. Downstream Applications

5.1. Polyp Segmentation

5.2. Defect Detection

5.3. Transparent Object Segmentation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | Year | CAMO $S_\alpha$ ↑ | CAMO $E_\phi$ ↑ | CAMO $F_\beta^w$ ↑ | CAMO $M$ ↓ | CHAMELEON $S_\alpha$ ↑ | CHAMELEON $E_\phi$ ↑ | CHAMELEON $F_\beta^w$ ↑ | CHAMELEON $M$ ↓ | COD10K $S_\alpha$ ↑ | COD10K $E_\phi$ ↑ | COD10K $F_\beta^w$ ↑ | COD10K $M$ ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CPD | 2019 | 0.716 | 0.796 | 0.658 | 0.113 | 0.857 | 0.898 | 0.813 | 0.048 | 0.750 | 0.853 | 0.640 | 0.053 |
| EGNet | 2019 | 0.662 | 0.766 | 0.612 | 0.124 | 0.848 | 0.831 | 0.676 | 0.050 | 0.737 | 0.810 | 0.608 | 0.056 |
| F3Net | 2020 | 0.711 | 0.780 | 0.630 | 0.109 | 0.848 | 0.917 | 0.798 | 0.047 | 0.739 | 0.819 | 0.609 | 0.051 |
| UCNet | 2020 | 0.739 | 0.787 | 0.700 | 0.095 | 0.880 | 0.930 | 0.836 | 0.036 | 0.776 | 0.857 | 0.681 | 0.042 |
| SINet | 2020 | 0.745 | 0.829 | 0.644 | 0.092 | 0.872 | 0.946 | 0.806 | 0.034 | 0.776 | 0.864 | 0.631 | 0.043 |
| PraNet | 2020 | 0.769 | 0.837 | 0.663 | 0.094 | 0.860 | 0.907 | 0.763 | 0.044 | 0.789 | 0.861 | 0.629 | 0.045 |
| C2FNet | 2021 | 0.796 | 0.864 | 0.719 | 0.080 | 0.888 | 0.935 | 0.828 | 0.032 | 0.813 | 0.890 | 0.686 | 0.036 |
| PFNet | 2021 | 0.782 | 0.842 | 0.695 | 0.085 | 0.882 | 0.931 | 0.810 | 0.033 | 0.800 | 0.877 | 0.660 | 0.040 |
| TINet | 2021 | 0.781 | 0.848 | 0.678 | 0.087 | 0.874 | 0.916 | 0.783 | 0.038 | 0.793 | 0.861 | 0.635 | 0.042 |
| UGTR | 2021 | 0.784 | 0.851 | 0.684 | 0.086 | 0.888 | 0.940 | 0.794 | 0.031 | 0.818 | 0.853 | 0.667 | 0.035 |
| R-MGL | 2021 | 0.775 | 0.847 | 0.673 | 0.088 | 0.893 | 0.923 | 0.813 | 0.030 | 0.814 | 0.852 | 0.666 | 0.035 |
| JCSOD | 2021 | 0.800 | 0.873 | 0.728 | 0.073 | 0.894 | 0.943 | 0.848 | 0.030 | 0.809 | 0.884 | 0.684 | 0.035 |
| LSR | 2021 | 0.787 | 0.854 | 0.696 | 0.080 | 0.893 | 0.938 | 0.839 | 0.033 | 0.804 | 0.880 | 0.673 | 0.037 |
| C2FNet-V2 | 2022 | 0.799 | 0.859 | 0.730 | 0.077 | 0.893 | 0.947 | 0.845 | 0.028 | 0.811 | 0.891 | 0.691 | 0.036 |
| SINet-V2 | 2022 | 0.820 | 0.882 | 0.743 | 0.070 | 0.888 | 0.942 | 0.816 | 0.030 | 0.815 | 0.887 | 0.680 | 0.037 |
| BSA-Net | 2022 | 0.796 | 0.851 | 0.717 | 0.079 | 0.895 | 0.946 | 0.841 | 0.027 | 0.818 | 0.891 | 0.699 | 0.034 |
| Ours | - | 0.824 | 0.883 | 0.763 | 0.067 | 0.904 | 0.948 | 0.856 | 0.026 | 0.834 | 0.901 | 0.726 | 0.032 |

| No. | B | MFIM | CARM | EGM | CAMO $S_\alpha$ ↑ | CAMO $E_\phi$ ↑ | CAMO $M$ ↓ | COD10K $S_\alpha$ ↑ | COD10K $E_\phi$ ↑ | COD10K $M$ ↓ |
|---|---|---|---|---|---|---|---|---|---|---|
| #1 | 🗸 | | | | 0.782 | 0.813 | 0.083 | 0.811 | 0.859 | 0.035 |
| #2 | 🗸 | 🗸 | | | 0.803 | 0.844 | 0.078 | 0.821 | 0.868 | 0.034 |
| #3 | 🗸 | 🗸 | 🗸 | | 0.814 | 0.867 | 0.072 | 0.834 | 0.899 | 0.033 |
| #4 | 🗸 | 🗸 | 🗸 | 🗸 | 0.824 | 0.883 | 0.067 | 0.834 | 0.901 | 0.032 |

| No. | Models | CAMO $S_\alpha$ ↑ | CAMO $E_\phi$ ↑ | CAMO $M$ ↓ | COD10K $S_\alpha$ ↑ | COD10K $E_\phi$ ↑ | COD10K $M$ ↓ |
|---|---|---|---|---|---|---|---|
| #5 | | 0.794 | 0.845 | 0.079 | 0.825 | 0.887 | 0.033 |
| #6 | | 0.804 | 0.848 | 0.079 | 0.822 | 0.876 | 0.034 |
| #7 | | 0.817 | 0.876 | 0.071 | 0.834 | 0.898 | 0.032 |
| #4 | | 0.824 | 0.883 | 0.067 | 0.834 | 0.901 | 0.032 |

| No. | Models | CAMO $S_\alpha$ ↑ | CAMO $E_\phi$ ↑ | CAMO $M$ ↓ | COD10K $S_\alpha$ ↑ | COD10K $E_\phi$ ↑ | COD10K $M$ ↓ |
|---|---|---|---|---|---|---|---|
| #8 | Without CB | 0.765 | 0.802 | 0.090 | 0.815 | 0.863 | 0.035 |
| #9 | Without AB | 0.799 | 0.847 | 0.080 | 0.823 | 0.883 | 0.034 |
| #10 | With DC | 0.820 | 0.881 | 0.070 | 0.830 | 0.897 | 0.034 |
| #11 | With NC | 0.825 | 0.880 | 0.068 | 0.832 | 0.897 | 0.034 |
| #4 | Ours | 0.824 | 0.883 | 0.067 | 0.834 | 0.901 | 0.032 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, K.; Qiu, T.; Yu, Y.; Li, S.; Li, X. Edge-Guided Camouflaged Object Detection via Multi-Level Feature Integration. Sensors 2023, 23, 5789. https://doi.org/10.3390/s23135789
