Abstract

Several studies have analyzed human gait data obtained from inertial gyroscope and accelerometer sensors mounted on different parts of the body. In this article, we take gait analysis a step further and provide a methodology for predicting the movements of the legs, which can be applied in prostheses to imitate the missing part of the leg during walking. In particular, we propose a method, called GaIn, to control non-invasive robotic prosthetic legs. Using biologically inspired recurrent neural networks, GaIn can infer the movements of both missing shanks and feet for people with double trans-femoral amputation. Predictions are made from thigh movement for casual walking-related activities such as walking, taking stairs, and running. In our experimental tests, GaIn achieved an average prediction error of 4.55° for shank movements. However, a patient’s intention to stand up or sit down cannot be inferred from thigh movements. In fact, the intention causes thigh movements while the shanks and feet remain roughly still. Instead, the GaIn system can be triggered by thigh muscle activity measured with electromyography (EMG) sensors to make robotic prosthetic legs perform standing up and sitting down actions. The GaIn system has low prediction latency and is fast and computationally inexpensive enough to be deployed on mobile platforms and portable devices.

1. Introduction

The increasing availability of wearable body sensors has led to novel scientific studies on human activity recognition and human gait analysis [1,2,3]. Human activity recognition (HAR) usually focuses on activities related to or performed by legs, such as walking, jogging, turning left or right, jumping, lying down, going up or down the stairs, sitting down, and so on. Human gait analysis (HGA), in contrast, focuses not only on the identification of activities performed by the user, but also on how the activities are performed. This is useful in exoskeleton design, sports, rehabilitation, and health care.

The walking gait cycle of a healthy human consists of two main phases: a swing phase that lasts about 38% and a stance phase that lasts about 62% of the gait cycle [4]. A good gait is associated with minimal mechanical energy consumption [5]. An unusual gait cycle can be evidence of disease; therefore, gait analysis is important in evaluating gait disorders and neurological conditions such as multiple sclerosis, cerebellar ataxia, and brain tumors. Patients with multiple sclerosis show alterations in step size and walking speed [6]. The severity of Parkinson’s disease and stroke is highly correlated with stride length [7]. Wearable sensors can be used to detect and measure gait-related disorders, to monitor patients’ recovery, or to improve athletic performance. For instance, EMG sensors can be used to evaluate muscle contraction force to improve performance [8,9] in running [10] and other sports [11]. Emergency fall events can be detected with tri-axial accelerometers attached to the waist of elderly people [12]. Accelerometers installed on the hips and legs of people with Parkinson’s disease can be used to detect freezing of gait and help prevent falls [13,14,15,16].

Exoskeletons can provide augmented physical power or assistance in gait rehabilitation. In the former case, exoskeletons can help firefighters and rescue workers in dangerous environments, nurses moving heavy patients [17], or soldiers carrying heavy loads [18]. Rehabilitation exoskeletons can provide walking support for elderly people or can be applied in the rehabilitation of stroke or spinal cord injury [19,20]. Cerebral palsy, a neuromuscular disease that affects the symmetry and variability of walking, is the main pathology requiring exoskeletons or prostheses for gait rehabilitation [21].

We introduce a new methodology, termed human gait inference (HGI), for predicting the movements that amputated leg parts (thigh, shank, or foot) would perform during casual walking-related activities such as walking, taking stairs, sitting down, and standing up. Limb loss occurs due to (a) vascular disease (54%), including diabetes and peripheral arterial disease, (b) trauma (45%), and (c) cancer (less than 2%) [22]. Up to 55% of people with a lower extremity amputation due to diabetes will require amputation of the second leg within 2–3 years [23]. In the USA, about 2 million people live with limb loss [22].

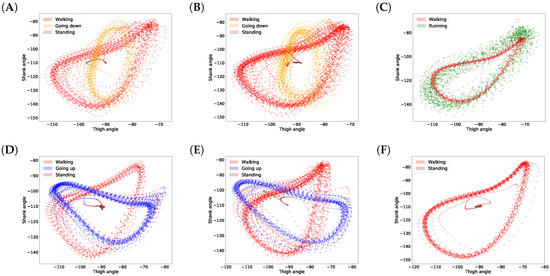

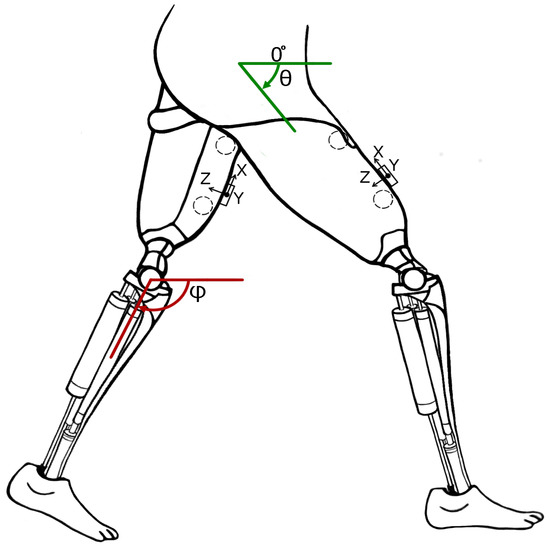

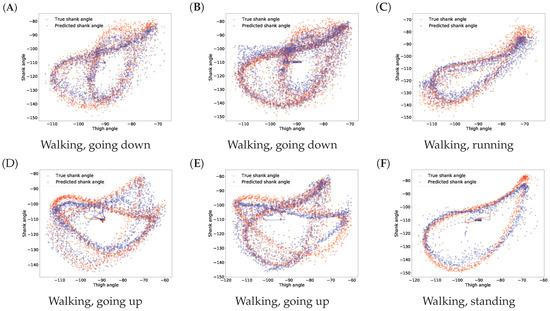

In this article, we propose a gait inference system, called GaIn, for patients with up to double trans-femoral amputation. Our idea is based on the high correlation between the movements of the leg parts of people without functional gait disorders during usual activities. Figure 1 shows the nonlinear correlation between the thigh and shank angles (of the same leg) over several gait cycles recorded during walking-related activities. The angles of the thigh and shank are measured relative to the horizontal. Consequently, it is possible to infer the movements of the lower legs (both shanks and feet) from the movements of both thighs using machine learning methods. The GaIn system could be installed on microchip-controlled robotic leg prostheses, attached to patients non-invasively, to infer the movements of the lower limbs, as illustrated in Figure 2. The GaIn system could therefore help patients with partial or double lower limb amputation to move and walk independently.

Figure 1.

Correlations between shank and thigh movement over several gait cycles in different activities. The angles of the thigh and shank are measured relative to the horizontal (see Figure 2). Plots (A–F) show various examples.

Figure 2.

Concept of robotic prosthetic legs for patients suffering from double trans-femoral amputation. Circles show the location of EMG sensors and boxes show the location of accelerometers and gyroscopes.

The HGI methodology and our GaIn system differ from exoskeletons. GaIn and HGI provide methods to infer the movements of the missing lower leg parts (shanks and feet), which are directly controlled by the remaining parts of the patient’s legs (the thighs). In contrast, rehabilitation exoskeletons often replay a reference gait trajectory prerecorded from healthy users, which might result in a gait unsuitable for the patient [1,19,24]; the patient’s own efforts are not taken into account. Exoskeletons for augmented physical strength, on the other hand, incorporate data obtained from the whole legs of healthy users [1].

The GaIn controller consists of two components: activity recognition and gait inference. The first component recognizes whether the patient is sitting, standing, or moving. In a sitting position, GaIn does not allow any gait inference, so the legs remain motionless. However, when thigh muscle activity is detected, the controller performs a standing up activity. When the patient is standing and starts swinging one leg, GaIn activates the gait inference procedure. Because human movement is produced by neural mechanisms in the motor cortex of the brain or by spinal neural circuits [25], we believe that neurally inspired artificial neural networks could be suitable models for gait inference. Therefore, GaIn uses recurrent neural networks to infer human gait. In addition, we designed GaIn to be fast and computationally inexpensive, with low prediction latency. In our opinion, these features are necessary for deployment on mobile devices, where energy consumption matters [26]. We note that turning during walking involves rotating the torso, hips, and thighs at the hip joints but not the shanks [27]; therefore, our analysis does not examine turning strategies.

To be used efficiently in portable systems, the GaIn system should meet the following requirements:

- Low prediction latency.

- Smooth, continuous activity recognition within a given activity and rapid transitions between different activities.

- Fast and energy-efficient computation.

The first requirement ensures that activity predictions can be made instantly from the most recently observed data. Bidirectional models, such as bidirectional long short-term memory (LSTM) recurrent neural networks (RNNs) [28] or dynamic time warping (DTW) [29] methods, are therefore not appropriate for our aims, for two main reasons. First, these methods require the whole observed sequence before making any predictions, which increases their latency. Second, the prediction they make for a frame depends on subsequent data. Standard hidden Markov models (HMMs) have become the de facto approach for activity recognition [30,31,32,33], and they generally yield good performance. However, they do so at the expense of increased prediction latency, because the Viterbi algorithm uses the whole sequence, or at least a part of it, to estimate a series of activities (i.e., hidden states), and its time complexity is polynomial. Therefore, in our opinion, HMMs are not adequate for on-the-fly prediction, because their latency can be considered rather high.

The second requirement ensures that an activity recognition method provides consistent predictions within the same activity but changes rapidly when the activity changes. Lester et al. [32] have pointed out that single-frame prediction methods such as decision stumps or support vector machines are prone to yielding scattered predictions. However, human activity data are time series by nature, and subsequent data frames are highly correlated. This correlation can be exploited by sequential models such as HMMs and RNNs, or by incorporating the sliding-window technique into single-frame methods (e.g., nearest neighbor). In fact, the authors of [31] have shown that the continuous-emission HMM-based sequential classifier (cHMM) performs systematically better than its single-frame Gaussian mixture model (GMM) counterpart (99.1% vs. 92.2% accuracy). The sequential classifier also outperforms all of its tested single-frame competitors (the best single-frame classifier, the nearest mean (NM) classifier, achieves up to 98.5% accuracy). This highlights the relevance of exploiting the statistical correlations in human dynamics.

Continuous sensing and running CPU-intensive prediction methods rapidly deplete a mobile system’s energy. The third requirement is therefore that the system be energy-efficient enough for mobile and pervasive technologies. Several approaches have been introduced to address this problem. Some methods keep the number of sensors needed for accurate activity prediction low, either by adaptive selection [34] or based on the activity performed [35,36,37]. Other approaches reduce the computational cost by feature selection [38], by feature learning [39], or by using computationally inexpensive prediction models such as C4.5, random forests [40], or decision trees [41].

In this article, our methodology and experimental results are purely computational. Building and testing a prototype of such robotic prosthetic legs for patients with double trans-femoral amputation is the subject of our ongoing research. We expect that patients may feel discomfort at first but will become acclimated after a short adjustment period. We hope the prosthesis will be a useful tool in combating disability discrimination, as called for by several human rights instruments, such as the United Nations Convention on the Rights of Persons with Disabilities [42] and Equality Acts [43,44] in jurisdictions worldwide. These also mandate access to goods, services, education, transportation, and employment. We expect the GaIn tool to be effective in helping patients tackle common obstacles such as stairs and uncut curbs in urban areas.

The rest of the article is organized as follows. The next section gives a detailed description of the GaIn system. Section 3 describes the data collection and the performance evaluation methods used in our study. It also describes the feature extraction steps from the data obtained from the EMG and the motion sensors. In Section 4, we present our experimental results and discuss our findings. Finally, we conclude our study in the last section.

2. GaIn System

2.1. An Overview

The GaIn control system consists of two major parts. First, the controller recognizes the current activity of the user; second, it performs the necessary gait inference.

For activity recognition, the GaIn system relies on a pair of triaxial accelerometer and gyroscope sensors installed symmetrically over the rectus femoris muscle (on the thighs) 5 cm above the knee on the right and left legs, and on a pair of EMG sensors over the vastus lateralis (on both thighs), each connected to the skin by three electrodes. For sensor locations, see Figure 2. The data from the accelerometers and gyroscopes are converted to angles and angular speeds using the method described by Pedley [45]. Depending on the currently recognized activity, the GaIn controller can perform the following actions:

- When the user is sitting, the controller does not allow any gait inference and the legs remain motionless. If an adequate amount of electrical activity from both thigh muscles is recognized by the EMG sensors on both thighs, then the system performs a standing up procedure.

- When the user is standing, then the controller can (i) keep the user in a standing position, (ii) start gait inference if one leg starts swinging, or (iii) perform a sitting down procedure if the electrical activity of both thigh muscles is suddenly high and both thighs have a similar position.

- When the user is walking, running, or taking stairs, the controller performs gait inference using a recurrent neural network or returns to a standing position.

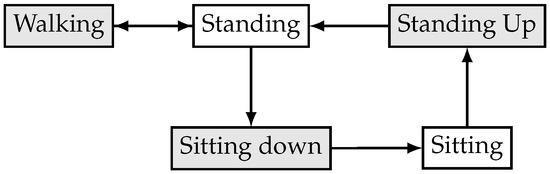

Figure 3 shows the possible transitions between different activities. For instance, if the user is walking, the system cannot perform a sitting down activity without first stopping and standing, and if the user is sitting, GaIn cannot infer walking-related activities without first standing up.

Figure 3.

Activity transition graph of the GaIn controlling system.
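The transition rules of Section 2.1 can be summarized as a small state machine. The following is a minimal Python sketch, not the GaIn controller itself: the state names, the `ALLOWED` table, and `next_state` are hypothetical, and walking, running, and taking stairs are lumped into a single gait-inference state.

```python
# Hypothetical sketch of the activity transition rules illustrated in Figure 3.
SITTING, STANDING, GAIT = "sitting", "standing", "gait"  # GAIT: walking, running, stairs

# Allowed transitions, following the controller rules described in Section 2.1.
ALLOWED = {
    SITTING: {SITTING, STANDING},          # stand up only after an EMG-detected intention
    STANDING: {STANDING, GAIT, SITTING},   # keep standing, start gait inference, or sit down
    GAIT: {GAIT, STANDING},                # continue gait inference or return to standing
}


def next_state(current: str, requested: str) -> str:
    """Return the requested state if the transition is allowed, else keep the current one."""
    return requested if requested in ALLOWED[current] else current


# Illustrative usage: walking cannot start directly from a sitting position.
state = next_state(SITTING, GAIT)      # stays "sitting"
state = next_state(state, STANDING)    # "standing"
state = next_state(state, GAIT)        # "gait"
```

Rejecting a disallowed request simply keeps the current state, which mirrors the rule that the legs remain motionless until a permitted transition is recognized.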

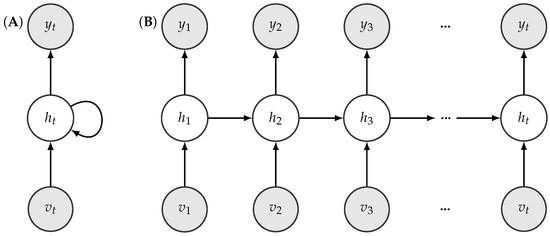

2.2. Activity Recognition Method

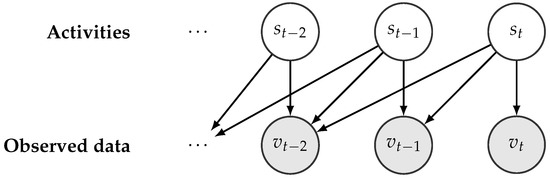

For activity recognition, we used the RapidHARe model [26], a computationally inexpensive method that provides smooth and accurate activity prediction with low prediction latency. It is based on a dynamic Bayesian network [46], illustrated in Figure 4, and the most likely activity $\hat{a}_t$ being performed at time $t$, given the data $x_{t-K+1}, \ldots, x_t$ observed in a context window of length $K$, is formulated as:

$$\hat{a}_t = \operatorname*{arg\,max}_{a} \; P(a \mid x_{t-K+1}, \ldots, x_t),$$

where $P(a \mid x_{t-K+1}, \ldots, x_t)$ denotes the probability of activity $a$ given the observed data. The conditional probabilities $p(x_i \mid a)$ are modeled with Gaussian mixture models (GMMs), whose parameters were trained using the Expectation–Maximization (EM) method [47]. Training the GMMs was straightforward because our training data were segmented. For the full derivation of the model, we refer the reader to our previous work [26].
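To make the windowed decision rule concrete, here is a minimal NumPy sketch. It assumes, for illustration only, that the frames in the context window are conditionally independent given a single activity label, so the score of an activity is its log prior plus the summed GMM log-likelihoods of the $K$ frames. This is a simplification, not the exact RapidHARe model (see [26]). The function names, the diagonal-covariance GMMs, and the toy parameters are hypothetical; in practice the GMM parameters would be fitted with EM (for instance with scikit-learn's `GaussianMixture`).

```python
import numpy as np


def gmm_logpdf(x, weights, means, variances):
    """Log-density of a diagonal-covariance GMM at each row of x (shape (T, D))."""
    x = np.atleast_2d(x)                                                 # (T, D)
    diff = x[:, None, :] - means[None, :, :]                             # (T, M, D)
    log_norm = -0.5 * np.sum(np.log(2 * np.pi * variances), axis=1)      # (M,)
    log_exp = -0.5 * np.sum(diff ** 2 / variances[None, :, :], axis=2)   # (T, M)
    comp = np.log(weights)[None, :] + log_norm[None, :] + log_exp        # (T, M)
    m = comp.max(axis=1, keepdims=True)                                  # log-sum-exp trick
    return (m + np.log(np.exp(comp - m).sum(axis=1, keepdims=True))).ravel()


def classify_window(window, models, log_priors):
    """Activity maximizing log prior + sum of frame log-likelihoods over the window."""
    scores = {a: log_priors[a] + gmm_logpdf(window, *models[a]).sum() for a in models}
    return max(scores, key=scores.get)


# Illustrative usage: two 1-D activities, one Gaussian component each, K = 20.
models = {
    "sitting": (np.array([1.0]), np.array([[0.0]]), np.array([[1.0]])),
    "standing": (np.array([1.0]), np.array([[5.0]]), np.array([[1.0]])),
}
log_priors = {a: np.log(0.5) for a in models}
window = np.full((20, 1), 4.8)  # 20 frames close to the "standing" mean
prediction = classify_window(window, models, log_priors)
```

Because only the last $K$ frames enter the score, each new frame can be classified as soon as it arrives, which is the low-latency property the text requires.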

Figure 4.

Illustration of an unfolded dynamic Bayesian network w.r.t a given activity series.

2.3. Gait Inference Method

The shank movement prediction was modeled with recurrent neural networks (RNNs) [48] with long short-term memory (LSTM) units [49]. Figure 5 shows the typical structure of an RNN. RNNs are powerful, general-purpose tools for modeling statistical relationships in sequential data. While standard RNN cells are prone to the so-called forgetting phenomenon (they struggle to retain information over long time spans), LSTM cells aim to circumvent this shortcoming, as described below.

Figure 5.

Illustration of a folded (A) and an unfolded (B) recurrent neural network structure.

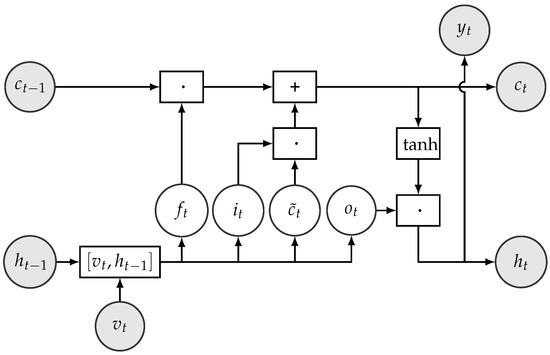

LSTM cells use two types of memory to represent past information in sequential data: a hidden state $h$, which captures short-term dependencies, and a cell state $c$, which captures long-term dependencies. The state $c$ runs through the entire sequence, and an LSTM performs four steps to update it using so-called gates: the input gate, the forget gate, the input modulation gate, and the output gate. The structure of an LSTM cell is shown in Figure 6. One of the main advantages of LSTMs is that each gate is differentiable, so their operations can be learned from data. The gates and the data manipulation steps are defined as follows:

Figure 6.

Structure of an LSTM cell. The operators ·, +, $\oplus$, $\sigma$, and $\tanh$ in boxes represent element-wise multiplication, addition, concatenation, and the sigmoid and tanh operations on vectors, respectively.

- The forget gate $f_t$ calculates which information should be removed from the state $c_{t-1}$ based on the hidden state $h_{t-1}$ and the current input $x_t$. It is defined formally as $f_t = \sigma(W_f [h_{t-1} \oplus x_t] + b_f)$, where $\sigma$ denotes the sigmoid function. Because its entries lie between 0 and 1, the output $f_t$ can be interpreted as an approximate bit vector indicating which components of the state vector $c_{t-1}$ are to be forgotten. For instance, $f_t^{(i)} \approx 1$ indicates that the value of the $i$th component of $c_{t-1}$ will be kept, and $f_t^{(i)} \approx 0$ indicates that it will be forgotten.

- The input gate $i_t$ controls which information from the input should be stored in the state vector at time step $t$. It is formally defined as $i_t = \sigma(W_i [h_{t-1} \oplus x_t] + b_i)$ and can likewise be interpreted as a binary mask vector.

- The input modulation gate calculates a new candidate state vector $\tilde{c}_t = \tanh(W_c [h_{t-1} \oplus x_t] + b_c)$.

- The new state vector is then calculated by $c_t = f_t \cdot c_{t-1} + i_t \cdot \tilde{c}_t$.

- The output gate decides which parts of the cell state go to the output. It is calculated by $o_t = \sigma(W_o [h_{t-1} \oplus x_t] + b_o)$.

- The new hidden state $h_t$ is formed from the new cell state, whose values are first squashed to between −1 and 1 by the $\tanh$ function and then multiplied by the values of the output gate. Formally, $h_t = o_t \cdot \tanh(c_t)$.

- Finally, the emission, or output of the cell, $\hat{y}_t$ (in our case, the predicted shank angles), is calculated using $\hat{y}_t = W_y h_t + b_y$.

In the above, $\sigma$ denotes the sigmoid function, ‘·’ represents the element-wise (Hadamard) product of vectors, $\oplus$ denotes vector concatenation, the $W$s denote weight matrices, and the $b$s denote the corresponding biases, whose values are learned from data.
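The gate equations can be traced step by step in a single forward pass of one LSTM cell. The NumPy sketch below is illustrative only. The parameter dictionary, the small random initialization, and the linear emission layer are assumptions, and the weights are untrained, so the outputs carry no meaning beyond their shapes. The sizes mirror Section 3.3 (four thigh inputs, 50 hidden units, two shank angles).

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step; p holds weight matrices W_* and bias vectors b_*."""
    z = np.concatenate([h_prev, x_t])            # [h_{t-1} ⊕ x_t]
    f = sigmoid(p["W_f"] @ z + p["b_f"])         # forget gate
    i = sigmoid(p["W_i"] @ z + p["b_i"])         # input gate
    c_tilde = np.tanh(p["W_c"] @ z + p["b_c"])   # input modulation (candidate state)
    c = f * c_prev + i * c_tilde                 # new cell state (element-wise products)
    o = sigmoid(p["W_o"] @ z + p["b_o"])         # output gate
    h = o * np.tanh(c)                           # new hidden state, entries in (-1, 1)
    y = p["W_y"] @ h + p["b_y"]                  # assumed linear emission (shank angles)
    return y, h, c


def init_params(n_in, n_hidden, n_out, seed=0):
    """Hypothetical small random initialization, for illustration only."""
    rng = np.random.default_rng(seed)
    p = {}
    for g in ("f", "i", "c", "o"):
        p[f"W_{g}"] = rng.normal(0.0, 0.1, (n_hidden, n_hidden + n_in))
        p[f"b_{g}"] = np.zeros(n_hidden)
    p["W_y"] = rng.normal(0.0, 0.1, (n_out, n_hidden))
    p["b_y"] = np.zeros(n_out)
    return p


# Illustrative usage: 4 inputs, 50 hidden units, 2 outputs, a 15-frame sequence.
p = init_params(n_in=4, n_hidden=50, n_out=2)
h, c = np.zeros(50), np.zeros(50)
for x_t in np.random.default_rng(1).uniform(0.0, 1.0, (15, 4)):
    y, h, c = lstm_step(x_t, h, c, p)
```

Each step uses only the current input and the previous $h$ and $c$, so a prediction is available after every frame without waiting for future data.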

In our work, the observed data form a four-component vector whose components are the angles and angular speeds of the left and right thighs. The angular data were calculated from two triaxial gyroscope and accelerometer sensors located on the right and left thighs using the methods described by Pedley [45]. The RNN was trained to predict the angles of both shanks.

We do not recommend bidirectional RNNs or HMMs for gait inference. These methods require the whole observed sequence before making predictions for intermediate time frames; in other words, they use data from the future to make a prediction in the present. This would increase the prediction latency [26].

The foot angle and position were not predicted, because modern foot prostheses have good mechanical systems for positioning the foot without any additional information [50].

3. Methods and Data Sets

3.1. Data Sets

In our experiments, we used the human gait data from the HuGaDB database [51]. The data are freely available at https://github.com/romanchereshnev/HuGaDB. This dataset consists of a total of five hours of data from 18 participants performing eight different activities. The participants were healthy young adults: four females and 14 males with an average age of 23.67 years (standard deviation [STD]: 3.69), an average height of 179.06 cm (STD: 9.85), and an average weight of 73.44 kg (STD: 16.67). The participants performed combinations of activities at normal speed in a casual way, and no obstacles were placed in their way. For instance, starting in the sitting position, participants were instructed to perform the following activities: sitting, standing up, walking, going up the stairs, walking, and sitting down. The experimenter recorded the data continuously using a laptop and annotated them with the activities performed. This provided long, continuous sequences of segmented data annotated with activities. In total, 1,077,221 samples were collected. Table 1 summarizes the activities recorded and provides other characteristics of the data.

Table 1.

Characteristics of data and activities.

During data collection, MPU9250 inertial sensors and electromyography sensors made in the Laboratory of Applied Cybernetics Systems, Moscow Institute of Physics and Technology (www.mipt.ru) were used. Each EMG sensor has a voltage gain of about 5000 and a band-pass filter with a bandwidth corresponding to the power spectrum of EMG (10–500 Hz). The sample rate of each EMG channel is 1.0 kHz, the analog-to-digital converter (ADC) resolution is 8 bits, and the input voltage range is 0–5 V. Each inertial sensor consists of a three-axis accelerometer and a three-axis gyroscope integrated into a single chip. Data were collected with the accelerometer’s range set to ±2 g with a sensitivity of 16,384 least significant bits (LSB)/g, and the gyroscope’s range set to ±2000 °/s with a sensitivity of 16.4 LSB/(°/s). All sensors were battery-powered, which helped to minimize electrical grid noise.
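The stated sensitivities determine how raw int16 readings map to physical units. A minimal sketch follows, assuming a plain division by the sensitivity with no offset calibration. The constant and function names are hypothetical, and HuGaDB's own preprocessing may differ.

```python
import numpy as np

# Sensitivities for the full-scale ranges stated in the text (MPU-9250).
ACC_LSB_PER_G = 16384.0    # accelerometer at ±2 g full scale
GYRO_LSB_PER_DPS = 16.4    # gyroscope at ±2000 °/s full scale


def raw_to_physical(acc_raw, gyro_raw):
    """Convert raw int16 readings to acceleration in g and angular rate in °/s."""
    acc_g = np.asarray(acc_raw, dtype=np.float64) / ACC_LSB_PER_G
    gyro_dps = np.asarray(gyro_raw, dtype=np.float64) / GYRO_LSB_PER_DPS
    return acc_g, gyro_dps


# Illustrative usage: 16384 LSB corresponds to 1 g; 164 LSB corresponds to 10 °/s.
acc, gyro = raw_to_physical([16384, -8192], [164, -32768])
```

The int16 limit of −32768 maps to about −1998 °/s, just inside the ±2000 °/s range. Readings pinned at that limit are the kind of overflowed gyroscope measurements that had to be discarded.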

Accelerometer and gyroscope signals were stored in int16 format. EMG signals were stored in uint8. In our experiments, all data were scaled to the range .

In total, six inertial sensors (each with a three-axis accelerometer and a three-axis gyroscope) and two EMG sensors were installed symmetrically on the right and left legs with elastic bands. One pair of inertial sensors was installed over the rectus femoris muscle 5 cm above the knee, another pair around the middle of the shin bone at the level where the calf muscle ends, and a third pair on the feet over the metatarsal bones. The two EMG sensors were each connected to the skin by three electrodes over the vastus lateralis muscle. This installation provided 38 signal sources: 18 from the accelerometers (2 legs × 3 sensors × 3 axes), 18 from the gyroscopes (2 legs × 3 sensors × 3 axes), and two from the EMG sensors. For the sensor locations, we refer the reader to [51].

The sensors were connected to each other and to a microcontroller box by wires; the box contained an Arduino electronics platform with a Bluetooth module. The microcontroller collected 56.35 samples per second on average, with a standard deviation of 3.2057, and transmitted them to a laptop over a Bluetooth connection. Data acquisition was carried out mainly inside a building, not on a treadmill. We note that the walking data include turns, but unfortunately the annotation does not indicate them.

We note that some data in HuGaDB contained corrupted signals; typically, several gyroscope measurements had overflowed and were therefore clipped. We discarded these data from our experiments, and Table 1 summarizes the data we actually used.

3.2. Feature Extraction Methods

First, the raw data obtained from the gyroscope and accelerometer sensors were filtered with a moving average over a window of 100 samples to remove the bias drift of the inertial sensors [52].
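A moving average over 100 samples can be computed in linear time with a cumulative sum. The sketch below assumes a trailing (causal) window, so that no future samples are used, which fits the low-latency requirement. The text does not say whether a trailing or centered window was used, nor exactly how the filtered signal is applied for drift removal; the function name is hypothetical.

```python
import numpy as np


def moving_average(signal, window=100):
    """Trailing moving average: out[j] is the mean of samples max(0, j-window+1)..j."""
    x = np.asarray(signal, dtype=np.float64)
    csum = np.cumsum(np.insert(x, 0, 0.0))   # csum[k] = sum of x[0:k]
    end = np.arange(1, len(x) + 1)           # exclusive end index of each window
    start = np.maximum(0, end - window)      # shorter windows at the start of the signal
    return (csum[end] - csum[start]) / (end - start)


# Illustrative usage on a short signal with a window of 2 samples.
smoothed = moving_average([1, 2, 3, 4], window=2)
```

Near the beginning of a recording, the window simply contains fewer samples, so the output is defined from the first frame on.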

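The gait inference method is based on the thigh angle and angular speed data in the sagittal plane. The initial angles (in degrees) of the thigh and shank are calculated from the accelerometer data, using Earth's gravity as a reference [45]. Formally, the start angle of the thigh, $\theta^{\mathrm{th}}_0$, is calculated from two components of the accelerometer located on the thigh. Similarly, the start angle of the shank, $\theta^{\mathrm{sh}}_0$, is calculated from the three components of the accelerometer located on the shank. The angular velocities are obtained from the gyroscope data. Let $\omega^{\mathrm{th}}_t$ and $\omega^{\mathrm{sh}}_t$ be the angular velocities of the thigh and shank at time $t$, respectively. The angles of the thigh and shank at time $t$ can then be calculated as follows [45]:

$$\theta^{\mathrm{th}}_t = \theta^{\mathrm{th}}_{t-1} + \omega^{\mathrm{th}}_t \, \Delta t, \qquad \theta^{\mathrm{sh}}_t = \theta^{\mathrm{sh}}_{t-1} + \omega^{\mathrm{sh}}_t \, \Delta t,$$

where $\Delta t$ denotes the sampling interval.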
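A minimal sketch of this angle estimation follows, under explicit assumptions. The start angle is taken as the arctangent of two accelerometer components, a common tilt-sensing formula. Which physical axes play those roles, and the shank's three-component variant, are not reproduced here (see [45]). Later angles accumulate gyroscope angular velocity over the sampling interval, about 1/56.35 s for the average HuGaDB rate. Both function names are hypothetical.

```python
import numpy as np


def initial_tilt_deg(a_u, a_v):
    """Hypothetical start angle (degrees) from two accelerometer components.

    Uses atan2(a_u, a_v); which physical axes act as a_u and a_v depends on
    how the sensor is mounted and is an assumption in this sketch.
    """
    return float(np.degrees(np.arctan2(a_u, a_v)))


def integrate_angle(theta0_deg, omega_dps, dt):
    """Accumulate theta_t = theta_{t-1} + omega_t * dt, starting from theta0_deg."""
    omega = np.asarray(omega_dps, dtype=np.float64)
    return theta0_deg + np.cumsum(omega * dt)


# Illustrative usage: one second of constant 10 °/s rotation at about 56.35 Hz.
theta0 = initial_tilt_deg(0.5, 0.5)                       # about 45 degrees
angles = integrate_angle(theta0, [10.0] * 56, 1 / 56.35)
```

Pure integration accumulates any residual gyroscope bias over time, which is why handling bias drift in the raw signals matters for this step.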

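For every time frame $t$, the standard deviation (std) of the gradients of the EMG signals was calculated over the previous 5 and 10 measurements and used for sitting down and standing up intention recognition, respectively. Formally, let $e_t$ denote the EMG signal at time $t$. The feature measuring the spread of the EMG signal gradients over the last $w$ time steps is formulated as

$$s^{\mathrm{EMG}}_{t,w} = \operatorname{std}\left(e_{t-w+1} - e_{t-w}, \ldots, e_t - e_{t-1}\right).$$

For sitting down intention recognition, we additionally use a feature that measures the spread of the differences between the accelerometer data of the two thighs over the last 10 data frames. For the x-axis, it is formulated as

$$s^{\mathrm{acc}}_{t} = \operatorname{std}\left(a^{\mathrm{L}}_{x,t-9} - a^{\mathrm{R}}_{x,t-9}, \ldots, a^{\mathrm{L}}_{x,t} - a^{\mathrm{R}}_{x,t}\right),$$

where $a^{\mathrm{L}}_{x,t}$ and $a^{\mathrm{R}}_{x,t}$ are the x-axis accelerometer values of the left and right thighs at time $t$.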
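Both intention features are rolling standard deviations over short trailing windows. The sketch below computes them with NumPy, assuming the population standard deviation (NumPy's default `ddof=0`), first differences as the "gradients", and shorter windows at the start of a recording. Function names and the random EMG trace are hypothetical.

```python
import numpy as np


def emg_gradient_std(emg, w):
    """Std of the first differences e_i - e_{i-1} over the last w steps, for each t."""
    e = np.asarray(emg, dtype=np.float64)
    grad = np.diff(e, prepend=e[0])           # grad[t] = e[t] - e[t-1]; grad[0] = 0
    return np.array([grad[max(0, t - w + 1): t + 1].std() for t in range(len(e))])


def thigh_diff_std(acc_left_x, acc_right_x, w=10):
    """Std of left-minus-right thigh x-acceleration over the last w frames, for each t."""
    d = np.asarray(acc_left_x, dtype=np.float64) - np.asarray(acc_right_x, dtype=np.float64)
    return np.array([d[max(0, t - w + 1): t + 1].std() for t in range(len(d))])


# Illustrative usage on a hypothetical uint8-range EMG trace.
emg = np.random.default_rng(0).integers(0, 256, 200)
sit_down_feature = emg_gradient_std(emg, w=5)     # 5 measurements for sitting down
stand_up_feature = emg_gradient_std(emg, w=10)    # 10 measurements for standing up
```

A sudden burst of muscle activity makes consecutive EMG samples jump around, which raises the spread of their differences even when the mean level changes little.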

3.3. Model Implementation Details

The GaIn system (a) recognizes activities and intentions and (b) infers gait. We used one RapidHARe module to recognize the standing up intention in the sitting position from the EMG sensor data. The intention was modeled with 10 Gaussian components, while sitting was modeled with one Gaussian component. We used a second RapidHARe module to recognize the sitting down intention during standing or walking activities from the EMG sensor data and the differences of the accelerometer data. Here, the intention was modeled with five Gaussian components, while all other activities were modeled with two. We used a third RapidHARe module, with one Gaussian component per activity, to recognize sitting, standing, and walking-related activities. All modules used context windows of length 20.

For gait inference, the RNN consisted of one hidden layer with 50 LSTM units. The learning objective for the RNN was to minimize the squared error between the predicted and the true shank angles. For training, the input sequential data were chunked into segments of length 15. Table 2 summarizes the features used in each module.
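Chunking a recording into length-15 segments yields the three-dimensional arrays (segments × time steps × features) that sequence models consume. The sketch assumes non-overlapping segments and drops an incomplete tail. The text does not state whether segments overlap or how the tail was handled, and the function name is hypothetical.

```python
import numpy as np


def chunk_sequence(x, y, seg_len=15):
    """Split aligned inputs x (T, D_in) and targets y (T, D_out) into seg_len-frame segments."""
    x = np.asarray(x)
    y = np.asarray(y)
    n = (len(x) // seg_len) * seg_len                 # drop the incomplete tail
    xs = x[:n].reshape(-1, seg_len, x.shape[1])       # (segments, seg_len, D_in)
    ys = y[:n].reshape(-1, seg_len, y.shape[1])       # (segments, seg_len, D_out)
    return xs, ys


# Illustrative usage: 4 thigh features in, 2 shank angles out, 100 frames.
xs, ys = chunk_sequence(np.zeros((100, 4)), np.zeros((100, 2)))
```

Inputs of shape (segments, 15, 4) paired with targets of shape (segments, 15, 2) match a 50-unit LSTM layer trained with a squared-error loss, as described above.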

Table 2.

Features used in GaIn. All features were scaled between 0 and 1.

The classification methods were implemented using the Python scikit-learn package (version 0.18.1). The RNN/LSTM was implemented with the Keras library (version 2.1.2) using the TensorFlow framework as backend (version 1.4.0). Training and testing were carried out on a PC equipped with an Intel Core i7-4790 CPU, 8 GB of DDR3 2400 MHz RAM, and an Nvidia GTX Titan X GPU. Training and testing on all data took roughly three hours.

3.4. Evaluation Methods

The performance of our GaIn method was evaluated using a supervised cross-validation approach [53], in which the data from one designated participant were held out for testing and the data from the remaining 17 participants were used for training. This approach therefore gives a reliable estimate of how well the GaIn system would perform for a new patient whose data have not been seen before. We repeated this test for every participant in the dataset and averaged the results.
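The held-out-participant scheme can be written as a generator over participant IDs. This is a minimal pure-Python sketch with a hypothetical function name. scikit-learn's `LeaveOneGroupOut` provides equivalent splits when participant IDs are passed as groups.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per participant."""
    for s in sorted(set(subject_ids)):
        test = [i for i, sid in enumerate(subject_ids) if sid == s]
        train = [i for i, sid in enumerate(subject_ids) if sid != s]
        yield s, train, test


# Illustrative usage: three participants, frames labeled with participant IDs.
folds = list(leave_one_subject_out([1, 1, 2, 3, 3, 3]))
```

Splitting by participant rather than by frame keeps every frame of the test participant out of training, so the estimate reflects performance on an unseen person.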

The error of the gait inference was measured by the absolute value of the difference between the true and the predicted shank angles. The activity recognition was evaluated with the $\mathrm{recall} = \mathrm{TP}/(\mathrm{TP} + \mathrm{FN})$ and $\mathrm{precision} = \mathrm{TP}/(\mathrm{TP} + \mathrm{FP})$ metrics, where TP, FP, and FN denote the numbers of true positive, false positive, and false negative predictions, respectively. In addition, we calculated and reported the $F_1$ score, which combines recall and precision and is defined as $F_1 = \frac{2\,\mathrm{precision}\,\mathrm{recall}}{\mathrm{precision} + \mathrm{recall}}$.
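These metrics can be computed directly from label sequences. The sketch assumes frame-level evaluation of one class at a time; whether the paper counted frames or activity events is not stated here. The function name is hypothetical.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 for one class, counted over aligned label sequences."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Illustrative usage: TP = 2, FP = 1, FN = 0 for the "stand" class.
p, r, f1 = precision_recall_f1(["sit", "sit", "stand", "stand"],
                               ["sit", "stand", "stand", "stand"], positive="stand")
```

Here precision is 2/3, recall is 1, and $F_1 = 2 \cdot (2/3) \cdot 1 / (2/3 + 1) = 0.8$.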

4. Results and Discussion

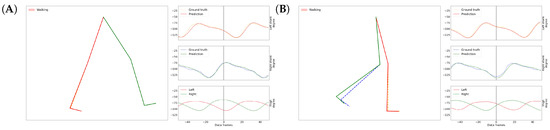

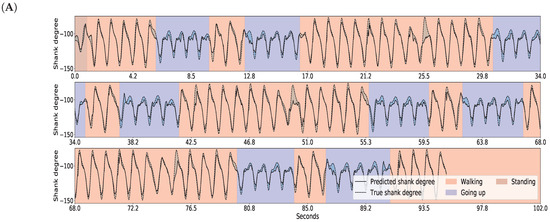

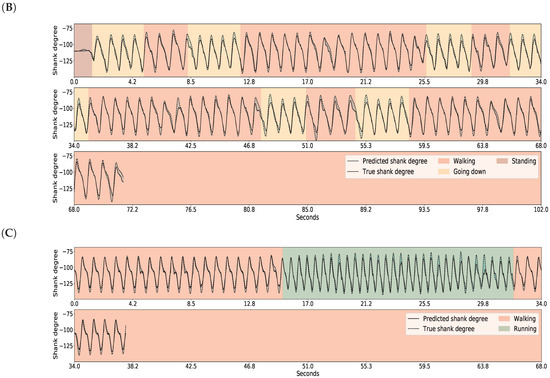

Our overall results on the gait inference can be seen in a video at https://youtu.be/aTeYPGxncnA, and two screenshots are shown in Figure 7. Many videos were generated from data recorded on different participants, but we did not see notable visual differences between them. We chose this example in particular because it contains a variety of activities within a relatively short time.

Figure 7.

Screenshots of GaIn during gait inference (A,B). Around 56 data frames add up to one second. See the full video at: https://youtu.be/aTeYPGxncnA.

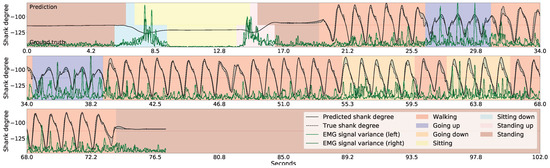

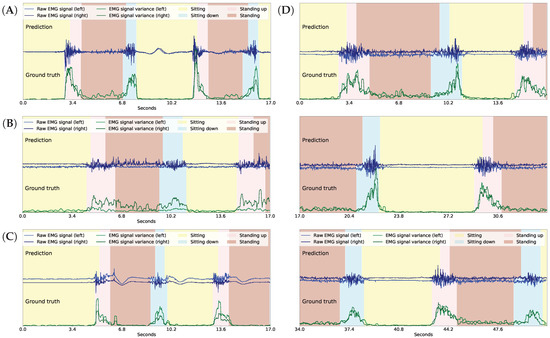

Figure 8 shows the inference for a continuous series of standing up, sitting down, and a few walking-related activities. Note that the standing up and sitting down activities are inferred from the variance of the gradients of the EMG signals obtained from both thigh muscles (green lines); the shank angles (black lines) are irrelevant here. The lower part of the figure indicates the true activities performed by the participant, while the upper part indicates the recognized activities. The length of the sitting down and standing up activities in the figure is also irrelevant, because it would depend on how the robotic prosthetic legs perform these movements once the patient’s intention has been recognized. The shank movement inference during walking-related activities is based on the thigh angles (not shown), and the EMG sensor data are ignored there. To help guide the reader, we have also indicated the current walking type by the background color, but GaIn does not take this information into account.

Figure 8.

Gait inference and activity recognition using GaIn. The first part (from 4.2 s to 20 s) shows sitting-related predictions, while the second part (from 21 s) shows shank movement inference for walking-related activities. In the first part, the sitting down and standing up intentions are recognized from the EMG signal variance (green), and the shank angles (black) are irrelevant. In the second part, the shank movement inference for walking is calculated from the thigh angles (not shown), and the EMG signals are disregarded. The walking type is indicated by the background colors only for the reader; it was not used in our methods.

4.1. Activity Classification Results

First, we discuss how efficiently the activity recognition module of the GaIn system recognizes the patient’s intention (a) to sit down from a standing position and (b) to stand up from a sitting position, using mainly EMG signals. The results, summarized in Table 3, show that standing and sitting positions can be recognized with high accuracy; however, standing up intention is easier to recognize than sitting down intention. Our system achieved 0.99 recall and 0.99 precision for recognizing standing up intention, but only 0.68 recall (with 0.99 precision) for sitting down intention. The reason is that muscle activity in both thighs is very low in a sitting position, so standing up intention is easy to recognize from the sudden increase in muscle activity. In a standing position, however, muscle activity is already present, which makes it more challenging to distinguish a patient’s balancing or walking efforts from a sitting down intention. Moreover, different prediction errors affect the patient differently. If the GaIn system incorrectly recognizes a standing up activity while the user is sitting, the system simply stretches the robotic prosthetic legs, causing no harm to the patient. However, if a sitting down intention is predicted while the user is simply standing, the patient could fall and suffer serious injury. In our opinion, for the sitting down activity, a low false-alarm rate (high precision) is more important than a low missed-alarm rate (high recall). Therefore, we calibrated the decision threshold so that the activity recognition module achieved a precision of 0.99 at the expense of recall, which decreased to 0.68. As a consequence, users may need to produce clearer and longer signals to sit down, but in return GaIn causes fewer injuries from falling.
Figure 9 shows the system in action with different users having different qualities of EMG signals.
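The precision-first calibration for sitting down can be illustrated as a threshold search. The sketch assumes a per-frame score for the sitting down intention (for example, a posterior probability) and binary labels from held-out data. It returns the lowest threshold whose precision reaches the target; because recall can only fall as the threshold rises, this also keeps the most recall among qualifying thresholds. The procedure and names are hypothetical; the text does not describe how the calibration was carried out.

```python
def threshold_for_precision(scores, labels, target=0.99):
    """Lowest threshold with precision >= target, as (threshold, precision, recall).

    labels: 1 for sitting-down-intention frames, 0 otherwise.
    Returns None if no threshold reaches the target precision.
    """
    n_pos = sum(labels)
    for thr in sorted(set(scores)):
        pred = [s >= thr for s in scores]
        tp = sum(p and l == 1 for p, l in zip(pred, labels))
        fp = sum(p and l == 0 for p, l in zip(pred, labels))
        if tp + fp and tp / (tp + fp) >= target:
            recall = tp / n_pos if n_pos else 0.0
            return thr, tp / (tp + fp), recall
    return None


# Illustrative usage with six hypothetical scored frames.
result = threshold_for_precision([0.1, 0.4, 0.35, 0.8, 0.9, 0.7], [0, 0, 1, 1, 1, 0])
```

In this toy example, reaching the precision target costs one of three true intention frames, the same kind of precision-for-recall trade-off described above.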

Table 3.

Classification results for each participant.

Figure 9.

Activity recognition in GaIn with good (A), “waving” (B), weak (C), and “waving” and weak (D) EMG signals from participants ID = , respectively. Note that plot D consists of three continuing panels.

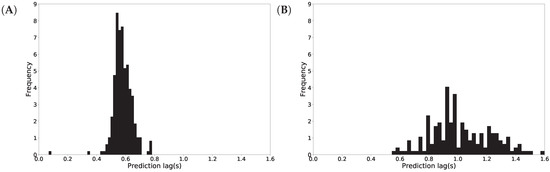

We examined the prediction latency and plotted histograms of the activity recognition lag time in Figure 10. On average, it takes 602 milliseconds to recognize standing up intention (Figure 10A) and 846 milliseconds to recognize sitting down intention (Figure 10B). The narrower distribution in Figure 10A shows that the latency of standing up recognition also has lower variance than that of sitting down recognition. The higher lag time for sitting down recognition is a consequence of the threshold calibration discussed above.
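One way to obtain lag times like those summarized in Figure 10 is sketched below. It assumes that each true intention onset is paired with the detection that follows it and that both are given as sample indices; the function name `recognition_latencies_ms` and the 30 Hz sampling rate in the example are hypothetical illustration values, not the GaIn setup.

```python
from statistics import mean, stdev
from typing import List, Sequence


def recognition_latencies_ms(onsets: Sequence[int], detections: Sequence[int],
                             sample_rate_hz: float) -> List[float]:
    """Lag of each detection after its true onset, converted to milliseconds.

    onsets[k] and detections[k] are sample indices of the k-th true intention
    onset and of the detection paired with it.
    """
    if len(onsets) != len(detections):
        raise ValueError("each true onset needs exactly one paired detection")
    lags = []
    for onset, detection in zip(onsets, detections):
        if detection < onset:
            raise ValueError("a paired detection cannot precede its onset")
        lags.append((detection - onset) * 1000.0 / sample_rate_hz)
    return lags


# Hypothetical event indices; the sampling rate is an illustration value.
lags = recognition_latencies_ms([100, 400, 900], [118, 421, 915], sample_rate_hz=30.0)
print(f"mean {mean(lags):.0f} ms, std {stdev(lags):.0f} ms")
```

The mean corresponds to the averages reported above, and the standard deviation quantifies the width of each histogram.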

Figure 10.

Activity recognition latency in seconds (s) for standing up (A) and sitting down (B).

Finally, we note that the quality of the EMG signals depends greatly on the physical properties of the user’s skin. Some users generated poor EMG signals (see the results for participants 5 and 14 in Table 3), which consistently hampered activity recognition, while others generated good-quality EMG signals (see the results for participants 1 and 6 in Table 3), resulting in almost perfect activity recognition. Therefore, to reduce the dependence on EMG signal quality, we propose calibrating the activity recognition module for each patient individually in future work.

4.2. Gait Inference Results

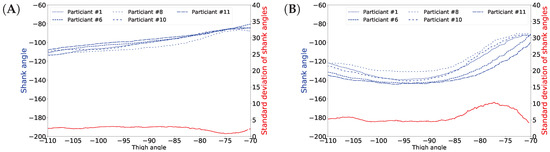

The results for gait inference are shown in Figure 11 for various walking-related activities such as walking, running, and going up and down stairs. The dashed lines show the true shank angles, while the solid lines show the predicted shank angles. Upward line segments correspond to swing phases and downward line segments to stance phases of the gait cycle. The error, that is, the difference between the true and the predicted movements, is indicated by the shaded area. The errors occur mainly at the peaks and troughs, which correspond to the turning points between the swing and stance phases. The background color indicates the activity performed; these activity labels were not used in the training procedure and are shown for illustration only. The prediction errors for the different activities are listed in Table 4. For each activity, the error was calculated over the data of all users. The prediction error for the shank angles, averaged across the different activities, is 4.55 degrees.

Figure 12 shows the coordination and coordination variability of the true (red) and predicted (blue) shank angles with respect to the thigh angles, using the same data as in Figure 1. These scatter plots show that the predicted shank angles agree with the true shank angles. In some cases, however, the predicted shank angles do not span the full range of the true angles; for instance, in plot F, the predicted shank angles do not reach the extremes of the true angles. This corresponds to the errors at the peaks and troughs in Figure 11.
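The per-activity errors of Table 4 and their average can be computed roughly as in the sketch below. It assumes the error metric is the mean absolute difference between true and predicted shank angles (the metric is an assumption here), uses the activity labels only to group the evaluation and never as model input, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Sequence


def per_activity_mae(true_deg: Sequence[float], pred_deg: Sequence[float],
                     activities: Sequence[str]) -> Dict[str, float]:
    """Mean absolute shank-angle error (degrees) for each activity label."""
    abs_sum: Dict[str, float] = defaultdict(float)
    count: Dict[str, int] = defaultdict(int)
    for t, p, a in zip(true_deg, pred_deg, activities):
        abs_sum[a] += abs(t - p)
        count[a] += 1
    return {a: abs_sum[a] / count[a] for a in abs_sum}


def mean_over_activities(errors: Dict[str, float]) -> float:
    """Unweighted average of the per-activity errors."""
    return sum(errors.values()) / len(errors)


# Hypothetical samples pooled over all users.
true_deg = [10.0, 20.0, 30.0, 40.0]
pred_deg = [12.0, 17.0, 30.0, 44.0]
acts = ["walking", "walking", "running", "running"]
errors = per_activity_mae(true_deg, pred_deg, acts)
print(errors, mean_over_activities(errors))
```

Averaging per-activity errors gives each activity equal weight; pooling all samples instead would weight frequent activities such as walking more heavily, so the two numbers can differ.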

Figure 11.

GaIn inference for various walking-related activities. The activity is indicated by the background color for the reader’s benefit, but this information was not used by the method. The shank angle is predicted from the thigh angles (not shown). The solid black line shows the predicted and the dashed line the true angles of the right shank, while the shaded area between them indicates the prediction error. Plots for the left leg are similar. Plots (A–C) show various examples.

Table 4.

Gait inference error.

Figure 12.

Predicted and true shank angles as a function of thigh angle over several gait cycles in different activities. The input data are the same as in Figure 1. The caption below each panel indicates the activities performed in that panel. Plots (A–F) show various examples.

4.3. Variance in Different Phases

Gait differs from person to person [1], and it also varies from cycle to cycle for the same person. Figure 1 shows this natural variance. Because of it, a person’s gait cannot be predicted with 100% accuracy from other people’s gait data. It has also been observed that the variance in the swing phase is larger than in the stance phase [54]. This is expected, since the stance phase is more important for walking stability, while the legs can move more freely in the swing phase [54]. We observed the same in our data and plotted the shank angles in the stance and swing phases of one gait cycle obtained from different users. In Figure 13, panel A shows the shank angles in the stance phase of the gait cycle (blue lines) together with their variance (red line), and panel B shows the same information for the swing phase. The variance is higher in the swing phase, so we expected higher prediction errors in the swing phase than in the stance phase. Indeed, the mean shank angle prediction error across all activities is 4.783° (STD: 1.171°) in the stance phase and 6.182° (STD: 1.680°) in the swing phase. Table 5 shows the detailed prediction errors for the different activities.
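The variance comparison in Figure 13 can be sketched as follows: each gait cycle is resampled to a fixed number of points, the variance across cycles is computed at every point, and the per-point variances are averaged within each phase. The 60% stance fraction is an assumed, textbook-style split point for normal walking rather than the segmentation used in this study, and the function names are hypothetical.

```python
from statistics import mean, variance
from typing import List, Sequence, Tuple


def normalize_cycle(angles: Sequence[float], n_points: int = 100) -> List[float]:
    """Linearly resample one gait cycle onto n_points evenly spaced positions."""
    m = len(angles)
    if m < 2 or n_points < 2:
        raise ValueError("need at least two samples and two output points")
    out = []
    for i in range(n_points):
        x = i * (m - 1) / (n_points - 1)  # fractional index into the input
        lo = int(x)
        hi = min(lo + 1, m - 1)
        frac = x - lo
        out.append(angles[lo] * (1.0 - frac) + angles[hi] * frac)
    return out


def phase_variance(cycles: Sequence[Sequence[float]],
                   stance_fraction: float = 0.6) -> Tuple[float, float]:
    """Mean across-cycle variance in the stance and swing parts of the cycle.

    cycles: at least two equal-length, time-normalized shank-angle cycles.
    stance_fraction: assumed share of the cycle spent in stance.
    """
    n_points = len(cycles[0])
    per_point = [variance([c[j] for c in cycles]) for j in range(n_points)]
    split = round(stance_fraction * n_points)
    return mean(per_point[:split]), mean(per_point[split:])


# Hypothetical cycles of different lengths, resampled before comparison.
raw = [[0.0, 5.0, 10.0, 30.0], [0.0, 5.0, 10.0, 40.0, 35.0]]
cycles = [normalize_cycle(c, n_points=10) for c in raw]
stance_var, swing_var = phase_variance(cycles)
print(stance_var, swing_var)
```

Time normalization is needed because cycles from different people and strides have different lengths; without it, points at the same index would not correspond to the same part of the gait cycle.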

Figure 13.

Shank angles of different participants in stance phase (A) and swing phase (B).

Table 5.

GaIn inference error.

4.4. Inference Errors around Activity Change

We also examined the errors around activity changes, for instance, when a walking user starts running. We measured the gait inference errors within ±15 data samples (equivalent to half a second) of the true activity change. The shank angle prediction error in these windows is 5.44°, which is not substantially larger than the overall average of 4.55°. The detailed results for the different activity transitions are shown in Table 6.
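The windowed evaluation around activity changes might look like the sketch below, which marks every sample within ±`half_width` samples of a label change and averages the absolute shank-angle error over those samples. The default of 15 mirrors the text; the mean absolute error metric, the once-only counting of overlapping windows, and the function names are assumptions.

```python
from typing import List, Sequence


def transition_indices(labels: Sequence[str]) -> List[int]:
    """Indices i where the activity label differs from the one at i - 1."""
    return [i for i in range(1, len(labels)) if labels[i] != labels[i - 1]]


def transition_window_mae(true_deg: Sequence[float], pred_deg: Sequence[float],
                          labels: Sequence[str], half_width: int = 15) -> float:
    """Mean absolute error over samples within +/- half_width of any change."""
    selected = set()
    for t in transition_indices(labels):
        lo = max(0, t - half_width)
        hi = min(len(labels), t + half_width + 1)
        selected.update(range(lo, hi))  # overlapping windows counted once
    if not selected:
        raise ValueError("no activity transitions in this recording")
    return sum(abs(true_deg[i] - pred_deg[i]) for i in selected) / len(selected)


# Hypothetical recording: walking followed by running.
labels = ["walking"] * 5 + ["running"] * 5
true_deg = [0.0] * 10
pred_deg = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
print(transition_indices(labels),
      transition_window_mae(true_deg, pred_deg, labels, half_width=2))
```

As in Figure 11, the labels are used here only to locate transitions for evaluation; the predictions themselves do not depend on them.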

Table 6.

Average shank degree prediction error at activity transitions.

5. Conclusions

In this article, we presented a new method, called GaIn, for human gait inference. GaIn was designed to predict the movements of the lower legs from the movements of both thighs. This could serve as the basis for non-invasive, robotic, lower-limb prostheses for patients with double trans-femoral amputation. Our method is based on the observation that thigh angles strongly correlate with shank angles during casual walking-related activities. We showed that shank angles can be predicted from thigh angles using recurrent neural networks with LSTM memory cells. In our experiments, the system achieved an average prediction error of 4.55 degrees, and the error was lower in the stance phase than in the swing phase. We believe that recurrent neural networks are a suitable mathematical model for simulating the motor cortex of the human neural system, and we think this is the reason why GaIn achieves a low prediction error.
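To make the model family concrete, the sketch below runs an untrained single-layer LSTM over a sequence of thigh angles and reads out shank angles at every time step. It is illustrative only: the layer size, the two-input/two-output layout (left and right thigh angles in, left and right shank angles out), the random initialization, and the class name `TinyLSTMRegressor` are assumptions, not the GaIn configuration. In practice, the weights would be learned from recorded gait data, for example with backpropagation through time in a deep learning framework.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


class TinyLSTMRegressor:
    """Untrained single-layer LSTM mapping thigh angles to shank angles."""

    def __init__(self, n_in: int = 2, n_hidden: int = 16, n_out: int = 2, seed: int = 0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(n_hidden)
        # Stacked weights for the input, forget, candidate and output gates.
        self.W = rng.normal(0.0, scale, (4 * n_hidden, n_in + n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.V = rng.normal(0.0, scale, (n_out, n_hidden))  # linear read-out
        self.n_hidden = n_hidden

    def predict(self, thigh_seq) -> np.ndarray:
        """thigh_seq: (T, n_in) array; returns (T, n_out) predictions."""
        h = np.zeros(self.n_hidden)
        c = np.zeros(self.n_hidden)
        outputs = []
        for x_t in np.asarray(thigh_seq, dtype=float):
            z = self.W @ np.concatenate([x_t, h]) + self.b
            i, f, g, o = np.split(z, 4)
            i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
            g = np.tanh(g)
            c = f * c + i * g      # memory cell keeps information across steps
            h = o * np.tanh(c)     # hidden state at time t
            outputs.append(self.V @ h)
        return np.stack(outputs)


model = TinyLSTMRegressor()
phase = np.linspace(0.0, 4.0 * np.pi, 60)
thigh = np.stack([np.sin(phase), np.sin(phase + np.pi)], axis=1)  # synthetic input
shank = model.predict(thigh)
print(shank.shape)  # (60, 2)
```

Because each output depends only on the current and past inputs, a model of this form can run causally on a streaming sensor signal, which matters for low-latency prosthesis control.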

However, in a real-life application, sitting down and standing up intentions cannot be recognized from thigh movements. To address this, we used EMG sensors placed on the skin over the vastus lateralis muscle of the thighs, so that the patient can signal her/his intentions by increasing thigh muscle activity. Our system achieved 99% precision and recall in recognizing standing up intention, and 99% precision and 68% recall in recognizing sitting down intention. As discussed above, a patient may be injured if the system incorrectly predicts a sitting down intention while the patient is walking or simply standing. For safety reasons, we adjusted the decision rule to keep the false alarm rate low (high precision) at the expense of a higher missed alarm rate (lower recall). As a result, users may need to produce clearer signals to indicate sitting down intention.

The results presented here are based purely on in silico experiments. Building a real prototype of such a robotic prosthetic leg is the subject of our current research. In practice, we expect that patients may feel some discomfort when they start using such a robotic prosthetic leg and will need time to adjust to the device. We will also need to adjust the GaIn model to adapt the prosthesis to diverse people and urban environments. We hope that the prosthesis will be a useful tool in combating disability discrimination.

Author Contributions

A.K.-F. conceived the idea. A.K.-F. and R.C. designed the research methodology. R.C. developed the software and data analysis tools, carried out the experiments and participated in manuscript preparation. A.K.-F. and R.C. wrote the manuscript.

Funding

This research received no external funding.

Acknowledgments

We gratefully acknowledge the support of NVIDIA Corporation (Santa Clara, CA, USA) with the donation of the GTX Titan X GPU used for model parameter training in this research. We would also like to thank Elena Artemenko for the artwork and Timur Bergaliyev and his lab members Sergey Sakhno and Sergey Kravchenko from the Laboratory of Applied Cybernetic Systems at BiTronics Lab (www.bitronicslab.com), Moscow Institute of Physics and Technology, Russia, for their technical support on using sensors.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| EMG | Electromyography |
| HAR | Human activity recognition |
| HGA | Human gait analysis |
| HGI | Human gait inference |
| EM | Expectation–Maximization |
| GMM | Gaussian mixture model |
| RNN | Recurrent neural network |
| LSTM | Long short-term memory |
| HMM | Hidden Markov model |
| STD | Standard deviation |
| ADC | Analog-to-digital converter |
| LSB | Least significant bit |
| CPU | Central processing unit |
| GPU | Graphics processing unit |
| TP | True positive |
| FP | False positive |
| FN | False negative |

References

- Chinmilli, P.; Redkar, S.; Zhang, W.; Sugar, T. A Review on Wearable Inertial Tracking based Human Gait Analysis and Control Strategies of Lower-Limb Exoskeletons. Int. Robot. Autom. J. 2017, 3, 00080.

- Tao, W.; Liu, T.; Zheng, R.; Feng, H. Gait analysis using wearable sensors. Sensors 2012, 12, 2255–2283.

- Benson, L.C.; Clermont, C.A.; Bošnjak, E.; Ferber, R. The use of wearable devices for walking and running gait analysis outside of the lab: A systematic review. Gait Posture 2018, 63, 124–138.

- Kaufman, K.R.; Sutherland, D.H. Kinematics of normal human walking. In Human Walking; Williams and Wilkins: Baltimore, MD, USA, 1994; pp. 23–44.

- Vaughan, C.L. Theories of bipedal walking: An odyssey. J. Biomech. 2003, 36, 513–523.

- Gehlsen, G.; Beekman, K.; Assmann, N.; Winant, D.; Seidle, M.; Carter, A. Gait characteristics in multiple sclerosis: progressive changes and effects of exercise on parameters. Arch. Phys. Med. Rehabil. 1986, 67, 536–539.

- Salarian, A.; Russmann, H.; Vingerhoets, F.J.; Dehollain, C.; Blanc, Y.; Burkhard, P.R.; Aminian, K. Gait assessment in Parkinson’s disease: Toward an ambulatory system for long-term monitoring. IEEE Trans. Biomed. Eng. 2004, 51, 1434–1443.

- White, S.C.; Winter, D.A. Predicting muscle forces in gait from EMG signals and musculotendon kinematics. J. Electromyogr. Kinesiol. 1992, 2, 217–231.

- Wentink, E.; Schut, V.; Prinsen, E.; Rietman, J.; Veltink, P. Detection of the onset of gait initiation using kinematic sensors and EMG in transfemoral amputees. Gait Posture 2014, 39, 391–396.

- Wahab, Y.; Bakar, N.A. Gait analysis measurement for sport application based on ultrasonic system. In Proceedings of the 2011 IEEE 15th International Symposium on Consumer Electronics (ISCE), Singapore, Singapore, 14–17 June 2011; pp. 20–24.

- de Silva, B.; Natarajan, A.; Motani, M.; Chua, K.C. A real-time exercise feedback utility with body sensor networks. In Proceedings of the 5th International Summer School and Symposium on Medical Devices and Biosensors, Hong Kong, China, 1–3 June 2008; pp. 49–52.

- Bourke, A.K.; Van De Ven, P.; Gamble, M.; O’Connor, R.; Murphy, K.; Bogan, E.; McQuade, E.; Finucane, P.; ÓLaighin, G.; Nelson, J. Assessment of waist-worn tri-axial accelerometer based fall-detection algorithms using continuous unsupervised activities. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Buenos Aires, Argentina, 31 August–4 September 2010; pp. 2782–2785.

- Bachlin, M.; Plotnik, M.; Roggen, D.; Maidan, I.; Hausdorff, J.M.; Giladi, N.; Troster, G. Wearable assistant for Parkinson’s disease patients with the freezing of gait symptom. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 436–446.

- Sant’Anna, A.; Salarian, A.; Wickstrom, N. A new measure of movement symmetry in early Parkinson’s disease patients using symbolic processing of inertial sensor data. IEEE Trans. Biomed. Eng. 2011, 58, 2127–2135.

- Sant’Anna, A. A Symbolic Approach to Human Motion Analysis Using Inertial Sensors: Framework and Gait Analysis Study. Ph.D. Thesis, Halmstad University, Halmstad, Sweden, 2012.

- Comber, L.; Galvin, R.; Coote, S. Gait deficits in people with multiple sclerosis: A systematic review and meta-analysis. Gait Posture 2017, 51, 25–35.

- Kawamoto, H.; Taal, S.; Niniss, H.; Hayashi, T.; Kamibayashi, K.; Eguchi, K.; Sankai, Y. Voluntary motion support control of Robot Suit HAL triggered by bioelectrical signal for hemiplegia. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Buenos Aires, Argentina, 31 August–4 September 2010; pp. 462–466.

- Kazerooni, H.; Racine, J.L.; Huang, L.; Steger, R. On the control of the Berkeley lower extremity exoskeleton (BLEEX). In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, ICRA 2005, Barcelona, Spain, 18–22 April 2005; pp. 4353–4360.

- Wang, L.; Wang, S.; van Asseldonk, E.H.; van der Kooij, H. Actively controlled lateral gait assistance in a lower limb exoskeleton. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–7 November 2013; pp. 965–970.

- Strausser, K.A.; Kazerooni, H. The development and testing of a human machine interface for a mobile medical exoskeleton. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Francisco, CA, USA, 25–30 September 2011; pp. 4911–4916.

- Mileti, I.; Taborri, J.; Rossi, S.; Petrarca, M.; Patanè, F.; Cappa, P. Evaluation of the effects on stride-to-stride variability and gait asymmetry in children with Cerebral Palsy wearing the WAKE-up ankle module. In Proceedings of the 2016 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Benevento, Italy, 15–18 May 2016; pp. 1–6.

- Ziegler-Graham, K.; MacKenzie, E.J.; Ephraim, P.L.; Travison, T.G.; Brookmeyer, R. Estimating the prevalence of limb loss in the United States: 2005 to 2050. Arch. Phys. Med. Rehabil. 2008, 89, 422–429.

- Pandian, G. Rehabilitation of the patient with peripheral vascular disease and diabetic foot problems. In Rehabilitation Medicine: Principles and Practice; Lippincott-Raven: Philadelphia, PA, USA, 1998; pp. 1517–1544.

- Talaty, M.; Esquenazi, A.; Briceno, J.E. Differentiating ability in users of the ReWalk™ powered exoskeleton: An analysis of walking kinematics. In Proceedings of the 2013 IEEE International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013; pp. 1–5.

- Nakazawa, K.; Obata, H.; Sasagawa, S. Neural control of human gait and posture. J. Phys. Fit. Sports Med. 2012, 1, 263–269.

- Chereshnev, R.; Kertesz-Farkas, A. RapidHARe: A computationally inexpensive method for real-time human activity recognition from wearable sensors. J. Ambient Intell. Smart Environ. 2018, 10, 377–391.

- Hase, K.; Stein, R. Turning strategies during human walking. J. Neurophysiol. 1999, 81, 2914–2922.

- Lefebvre, G.; Berlemont, S.; Mamalet, F.; Garcia, C. Inertial Gesture Recognition with BLSTM-RNN. In Artificial Neural Networks; Springer: Berlin, Germany, 2015; pp. 393–410.

- Liu, J.; Zhong, L.; Wickramasuriya, J.; Vasudevan, V. uWave: Accelerometer-based personalized gesture recognition and its applications. Pervasive Mob. Comput. 2009, 5, 657–675.

- Olguín, D.O.; Pentland, A.S. Human activity recognition: Accuracy across common locations for wearable sensors. In Proceedings of the 2006 10th IEEE International Symposium on Wearable Computers, Montreux, Switzerland, 11–14 October 2006; pp. 11–14.

- Mannini, A.; Sabatini, A.M. Machine learning methods for classifying human physical activity from on-body accelerometers. Sensors 2010, 10, 1154–1175.

- Lester, J.; Choudhury, T.; Kern, N.; Borriello, G.; Hannaford, B. A hybrid discriminative/generative approach for modeling human activities. In Proceedings of the 19th International Joint Conference on Artificial Intelligence (IJCAI), Edinburgh, Scotland, 30 July–5 August 2005.

- Junker, H.; Amft, O.; Lukowicz, P.; Tröster, G. Gesture spotting with body-worn inertial sensors to detect user activities. Pattern Recognit. 2008, 41, 2010–2024.

- Zappi, P.; Lombriser, C.; Stiefmeier, T.; Farella, E.; Roggen, D.; Benini, L.; Tröster, G. Activity recognition from on-body sensors: accuracy-power trade-off by dynamic sensor selection. In Wireless Sensor Networks; Springer: Berlin, Germany, 2008; pp. 17–33.

- Gordon, D.; Czerny, J.; Miyaki, T.; Beigl, M. Energy-efficient activity recognition using prediction. In Proceedings of the 16th International Symposium on Wearable Computers (ISWC) 2012, Newcastle, UK, 18–22 June 2012; pp. 29–36.

- Yan, Z.; Subbaraju, V.; Chakraborty, D.; Misra, A.; Aberer, K. Energy-efficient continuous activity recognition on mobile phones: An activity-adaptive approach. In Proceedings of the 16th International Symposium on Wearable Computers (ISWC) 2012, Newcastle, UK, 18–22 June 2012; pp. 17–24.

- Krause, A.; Ihmig, M.; Rankin, E.; Leong, D.; Gupta, S.; Siewiorek, D.; Smailagic, A.; Deisher, M.; Sengupta, U. Trading off prediction accuracy and power consumption for context-aware wearable computing. In Proceedings of the Ninth IEEE International Symposium on Wearable Computers, Osaka, Japan, 18–21 October 2005; pp. 20–26.

- Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. Human activity recognition on smartphones using a multiclass hardware-friendly support vector machine. In International Workshop on Ambient Assisted Living; Springer: Basel, Switzerland, 2012; pp. 216–223.

- Plötz, T.; Hammerla, N.Y.; Olivier, P. Feature learning for activity recognition in ubiquitous computing. In Proceedings of the IJCAI Proceedings-International Joint Conference on Artificial Intelligence, Barcelona, Spain, 16–22 July 2011.

- Mazilu, S.; Hardegger, M.; Zhu, Z.; Roggen, D.; Troster, G.; Plotnik, M.; Hausdorff, J.M. Online detection of freezing of gait with smartphones and machine learning techniques. In Proceedings of the 2012 6th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth), San Diego, CA, USA, 21–24 May 2012; pp. 123–130.

- Skotte, J.; Korshøj, M.; Kristiansen, J.; Hanisch, C.; Holtermann, A. Detection of physical activity types using triaxial accelerometers. J. Phys. Act. Health 2014, 11, 76–84.

- Hendricks, A. UN Convention on the Rights of Persons with Disabilities. Eur. J. Health 2007, 14, 273.

- Vote, S.; Vote, H. Americans with Disabilities Act of 1990; EEOC: Washington, DC, USA, 1990.

- Bell, D.; Heitmueller, A. The Disability Discrimination Act in the UK: Helping or hindering employment among the disabled? J. Health Econ. 2009, 28, 465–480.

- Pedley, M. Tilt sensing using a three-axis accelerometer. Freescale Semicond. Appl. Note 2013, 1, 2012–2013.

- Murphy, K.P. Dynamic Bayesian Networks: Representation, Inference and Learning. Ph.D. Thesis, University of California, Berkeley, CA, USA, 2002.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B 1977, 39, 1–38.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Phillips, V.L. Composite Prosthetic Foot and Leg. U.S. Patent 4,547,913, 22 October 1985.

- Chereshnev, R.; Kertész-Farkas, A. HuGaDB: Human Gait Database for Activity Recognition from Wearable Inertial Sensor Networks. In International Conference on Analysis of Images, Social Networks and Texts; Springer: Cham, Switzerland, 2017; pp. 131–141.

- Gulmammadov, F. Analysis, modeling and compensation of bias drift in MEMS inertial sensors. In Proceedings of the IEEE 4th International Conference on Recent Advances in Space Technologies, RAST’09, Istanbul, Turkey, 11–13 June 2009; pp. 591–596.

- Kertész-Farkas, A.; Dhir, S.; Sonego, P.; Pacurar, M.; Netoteia, S.; Nijveen, H.; Kuzniar, A.; Leunissen, J.A.; Kocsor, A.; Pongor, S. Benchmarking protein classification algorithms via supervised cross-validation. J. Biochem. Biophys. Methods 2008, 70, 1215–1223.

- Winter, D.A. The Biomechanics and Motor Control of Human Gait: Normal, Elderly and Pathological; University of Waterloo: Waterloo, ON, Canada, 1991.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).