Abstract

In wireless body area networks (WBANs), the secrecy of personal health information is vulnerable to attacks due to the openness of wireless communication. In this paper, we study the security problem of WBANs in which an attacker or eavesdropper is able to observe data from some of the sensors. We address this problem by establishing the secrecy capacity of a class of finite-state Markov erasure wiretap channels. The legitimate communication within the WBAN is modeled as a discrete memoryless channel (DMC), while the tapping of the eavesdropper is modeled as a finite-state Markov erasure channel (FSMEC). An encoder-decoder pair is devised so that the eavesdropper gains no knowledge of the source message, while the receiver recovers the source message with a small decoding error. It is proved that the secrecy capacity can be achieved by adapting the coding scheme for wiretap channel II with a noisy main channel. This method offers a new approach to the security problem of the Internet of Things (IoT).

1. Introduction

Due to the openness of wireless communication, the personal health information exchanged over the wireless channel in a WBAN is easily intercepted and attacked by hackers. There are usually two ways to enhance the security of wireless communications: one is security guaranteed by information theory, as in Refs. [1,2,3]; the other is security based on computational complexity, as in Refs. [4,5]. In this paper, we study the secure transmission problem in WBANs on the basis of information theory. Here, secure transmission refers to coding the transmitted data so that attackers cannot recover it. The concept of the wiretap channel was introduced by Wyner in Ref. [6]. In his model, the source message was sent to the targeted user via a discrete memoryless channel (DMC). Meanwhile, an eavesdropper was able to tap the transmitted data via a second DMC. It was supposed that the eavesdropper knew the encoding scheme and decoding scheme. The objective was to find a pair of encoder and decoder such that the eavesdropper’s level of confusion about the source message was as high as possible, while the receiver could recover the transmitted data with a small decoding error. Wyner’s model is called the degraded discrete memoryless wiretap channel in Ref. [7], since the main channel output is taken as the input of the wiretap channel.

After Wyner’s pioneering work, wiretap channel models have been studied from various aspects. Csiszár and Körner considered a more general wiretap channel model called the broadcast channel with confidential messages (BCC) in Ref. [8]. There, the wiretap channel was not necessarily a degraded version of the main channel. Moreover, they also considered the case where public data was broadcast through both the main channel and the wiretap channel. Degraded wiretap channels with discrete memoryless side information accessed by the encoder were considered in Refs. [9,10,11]. BCCs with causal side information were studied in Ref. [12]. Communication models with channel states known at the receiver were considered in Refs. [13,14]. Ozarow and Wyner considered another wiretap channel model called wiretap channel of type II [15]. In that model, the source data was encoded into N digital symbols and transmitted to the targeted user through a binary noiseless channel. Meanwhile, the eavesdropper was able to observe an arbitrary subcollection of those symbols of a given size. The secrecy capacity of that model was established in Ref. [15].

In the last few decades, a lot of capacity problems related to the wiretap channel II were studied. A special class of non-DMC wiretap channel was studied in Ref. [16]. The main channel was a DMC instead of noiseless, and the eavesdropper observed digital symbols through a uniform distribution. An extension of wiretap channel II was studied in Ref. [17], where the main channel was a DMC and the eavesdropper was able to observe digital bits through arbitrary strategies.

The finite-state Markov channel model was first introduced by Gilbert [18] and Elliott [19]. They studied a Markov channel model with two states, which is known as the Gilbert–Elliott channel. In their channel model, one state corresponded to a noiseless channel and the other state corresponded to a totally noisy channel. Wang and Moayeri [20] extended the Gilbert–Elliott channel to the case with finitely many states.

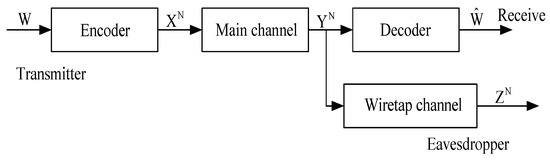

This paper discusses the finite-state Markov erasure wiretap channel (FSME-WTC) (see Figure 1). In this new model, the source data W is encoded into N digital symbols, denoted by $X^N$, and transmitted to the targeted user through a DMC. The eavesdropper is able to observe the transmitted symbols through a finite-state Markov erasure channel (FSMEC). The secrecy capacity of this new communication model is established, based on the coding scheme devised by the authors in Ref. [17].

Figure 1. Communication model of degraded wiretap channels.

The FSME-WTC can readily be applied to model the security problem of a WBAN. Suppose that there are N sensors in the WBAN. Then, we can treat the collection of symbols obtained from the sensors as a digital sequence of length N transmitted over an imaginary channel. The imaginary channel is not a DMC because the symbols from the sensors are correlated. A Markov chain is a natural model for characterizing the correlation of random variables, since it does not add much complexity to the system. The wiretap channel is set as an erasure channel to model the situation where the attacker in the WBAN is able to tap data from only some of the sensors. Thus, our FSME-WTC model is meant to ensure that the attacker is not able to get any information from the WBAN when he/she can observe data from only a limited subset of the sensors.

This model is of practical importance. As 5G technology advances towards commercial application, wireless networks are becoming more and more significant in our daily lives [21,22]. Therefore, the security of wireless communication is critical from the aspects of both theory and engineering. Meanwhile, the finite-state Markov channel is a common model for characterizing the properties of wireless communication. Hence, the results of this paper are relevant to many kinds of wireless networks with high confidentiality requirements, such as WBANs and the IoT.

The remainder of this paper is organized as follows. The formal statement of the FSME-WTC and the capacity results are given in Section 2 (see also Figure 1). The secrecy capacity of this model is established in Theorem 1. Some concrete examples of this communication model are given in Section 3. The converse part of Theorem 1, relying on Fano’s inequality and Proposition 1, is proved in Section 4. The direct part of Theorem 1, based on Theorem 1 in [17], is proved in Section 5. Section 6 gives the proof of Proposition 1, and Section 7 concludes this paper.

2. Notations, Definitions and the Main Results

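Throughout this paper, $\mathbb{N}$ is the set of positive integers, and $[1:N]$ is the set of positive integers no greater than $N$ for any $N \in \mathbb{N}$. For any index set $\mathcal{I} \subseteq [1:N]$ and random vector $X^N = (X_1, X_2, \ldots, X_N)$, denote by $X^N_{\mathcal{I}} = (X_{\mathcal{I},1}, \ldots, X_{\mathcal{I},N})$ the “projection” of $X^N$ onto the index set $\mathcal{I}$ such that $X_{\mathcal{I},i} = X_i$ for all $i \in \mathcal{I}$, and $X_{\mathcal{I},i} = ?$ otherwise.

Let $\mathcal{X}$ be any finite alphabet not containing the “error” letter ?, and let $\mathcal{X}_? = \mathcal{X} \cup \{?\}$. It follows that $X^N_{\mathcal{I}}$ is distributed on $\mathcal{X}_?^N$ for any random vector $X^N$ over $\mathcal{X}^N$.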

Example 1.

Let , and . Then,

Let be an arbitrary random vector distributed on . Then, the random vector is distributed on .
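The projection onto an index set can be sketched in a few lines of Python. This is only an illustration: the function name `project`, the constant `ERASURE`, and the sample vector are assumptions made here and are not taken from the paper. Indices are 1-based to match the text.

```python
from typing import Iterable, List, Sequence

ERASURE = "?"  # the "error" letter; assumed not to belong to the alphabet


def project(x: Sequence, index_set: Iterable[int]) -> List:
    """Keep x_i for every 1-based index i in index_set; replace all other entries by '?'."""
    keep = set(index_set)
    return [x_i if i in keep else ERASURE for i, x_i in enumerate(x, start=1)]


# Hypothetical vector of length 5, projected onto the index set {1, 3, 4}.
x = [0, 1, 1, 0, 1]
print(project(x, {1, 3, 4}))  # [0, '?', 1, 0, '?']
```

The projected vector always has the same length as the original one, so it lives on the alphabet extended by the erasure letter.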

Definition 1. (Encoder)

Let the source message W be uniformly distributed on a certain message set $\mathcal{W}$. The (stochastic) encoder is specified by a matrix of conditional probabilities $f(x^N \mid w)$ with $x^N \in \mathcal{X}^N$ and $w \in \mathcal{W}$. The value of $f(x^N \mid w)$ specifies the probability that message $w$ is encoded into the sequence $x^N$.

Definition 2. (Main channel)

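The main channel is a DMC, whose input alphabet is $\mathcal{X}$ and output alphabet is $\mathcal{Y}$, where $\mathcal{Y}$ does not contain the letter ?. The transition probability matrix of the main channel is denoted by $P_{Y|X}(y \mid x)$ with $x \in \mathcal{X}$ and $y \in \mathcal{Y}$. The input and output of the main channel are denoted by $X^N$ and $Y^N$, respectively. For any $x^N \in \mathcal{X}^N$ and $y^N \in \mathcal{Y}^N$, it follows that

$$\Pr\{Y^N = y^N \mid X^N = x^N\} = P_{Y^N|X^N}(y^N \mid x^N),$$

where

$$P_{Y^N|X^N}(y^N \mid x^N) = \prod_{n=1}^{N} P_{Y|X}(y_n \mid x_n).$$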

Remark 1.

From the property of DMC, it holds that

Definition 3. (Wiretap channel)

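Let $T_n$ be the channel state of the FSMEC at time $n$, where $T_1 \to T_2 \to \cdots \to T_N$ forms a Markov chain. The transition of channel states is homogeneous, i.e., the conditional probability $\Pr\{T_{n+1} = t' \mid T_n = t\}$ is independent of the time index $n$. Moreover, the channel states are stationary, i.e., $T_1, T_2, \ldots, T_N$ share a generic probability distribution $P_T$ on a common finite set $\mathcal{T}$ of channel states. Let $P(t' \mid t)$ be the probability that the state at the next time slot is $t'$ when the current state is $t$. It follows that

$$\Pr\{T_{n+1} = t' \mid T_n = t\} = P(t' \mid t)$$

for $1 \le n \le N-1$. The input of the FSMEC is a digital sequence $Y^N$, which is actually the main channel output. Denote by $Z^N$ the wiretap channel output. For each time slot $n$, the channel is either totally noisy, i.e., $Z_n = ?$, or totally noiseless, i.e., $Z_n = Y_n$, depending on the value of $T_n$. Thus, the channel output $Z_n$ is totally determined by the channel input $Y_n$ and the channel state $T_n$. Let $\mathcal{T}_0 \subseteq \mathcal{T}$ be the set of states under which the channel is noiseless. Then, $\mathcal{T} \setminus \mathcal{T}_0$ contains the states under which the channel is totally noisy. Denote by $P_{Z|Y,T}(z \mid y, t)$ the probability that the channel outputs $z$ when the channel input is $y$ and the channel state is $t$. It follows that

$$\Pr\{Z_n = z \mid Y_n = y, T_n = t\} = P_{Z|Y,T}(z \mid y, t),$$

where

$$P_{Z|Y,T}(z \mid y, t) = \begin{cases} 1, & \text{if } t \in \mathcal{T}_0 \text{ and } z = y, \text{ or } t \notin \mathcal{T}_0 \text{ and } z = ?, \\ 0, & \text{otherwise.} \end{cases}$$

For any $y^N \in \mathcal{Y}^N$, $z^N \in \mathcal{Y}_?^N$ and $t^N \in \mathcal{T}^N$, it is readily obtained that

$$\Pr\{Z^N = z^N \mid Y^N = y^N, T^N = t^N\} = \prod_{n=1}^{N} P_{Z|Y,T}(z_n \mid y_n, t_n).$$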

Remark 2.

Throughout this paper, it is supposed that $T^N$ is independent of $W$, $X^N$ and $Y^N$.
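The wiretap channel described in Definition 3 can be simulated with a short sketch. Everything named below (`sample_states`, `erase`, the transition matrix, the choice of state 0 as the only noiseless state) is a hypothetical illustration rather than the paper's construction. In line with Remark 2, the state sequence is drawn independently of the channel input.

```python
import random

ERASURE = "?"  # erasure letter, assumed not to be a main channel output symbol


def sample_states(P, init, n, rng):
    """Draw T_1..T_n from a homogeneous Markov chain.

    P[t][u] is the probability of moving from state t to state u;
    init is the distribution of T_1 (the stationary one for a stationary chain).
    """
    states = list(range(len(init)))
    t = rng.choices(states, weights=init)[0]
    seq = [t]
    for _ in range(n - 1):
        t = rng.choices(states, weights=P[t])[0]
        seq.append(t)
    return seq


def erase(y, t_seq, noiseless):
    """Wiretap output: Z_n = Y_n in a noiseless state, '?' in a noisy state."""
    return [y_n if t_n in noiseless else ERASURE for y_n, t_n in zip(y, t_seq)]


rng = random.Random(0)
P = [[0.9, 0.1], [0.2, 0.8]]                 # hypothetical transition matrix
init = [2 / 3, 1 / 3]                        # its stationary distribution
y = [rng.randint(0, 1) for _ in range(12)]   # stand-in for the main channel output
t_seq = sample_states(P, init, len(y), rng)  # drawn independently of y
z = erase(y, t_seq, noiseless={0})
print(y, t_seq, z, sep="\n")
```

Because the state sequence is sticky (the diagonal entries of `P` are large), erasures tend to arrive in bursts, which is the feature that distinguishes this channel from a memoryless erasure channel.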

Proposition 1.

forms a Markov chain for every .

Proof. See Section 6. □

Definition 4. (Decoder)

The decoder is specified by a mapping $\phi: \mathcal{Y}^N \to \mathcal{W}$. In particular, the estimate of the source message is $\hat{W} = \phi(Y^N)$, where $Y^N$ is the main channel output. The average decoding error probability is denoted by $P_e = \Pr\{\hat{W} \neq W\}$.

Definition 5. (Achievability)

A positive real number R is said to be achievable if, for any real number $\epsilon > 0$, one can find an integer $N_0$ such that, for any $N > N_0$, there exists a pair of encoder and decoder of length N satisfying that

Definition 6. (Secrecy capacity)

A real number $C_s$ is said to be the secrecy capacity of the communication model if every real number $R$ with $0 < R < C_s$ is achievable and every real number $R > C_s$ is unachievable.

Theorem 1.

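Let $\psi$ be the function on the state set $\mathcal{T}$ defined in Definition 3 such that $\psi(t) = 1$ if $t$ belongs to the set $\mathcal{T}_0$ of noiseless states, and $\psi(t) = 0$ otherwise. If, for some constant $\alpha \in [0,1]$, it follows that

$$\lim_{N \to \infty} \Pr\left\{ \left| \frac{1}{N} \sum_{n=1}^{N} \psi(T_n) - \alpha \right| > \epsilon \right\} = 0 \qquad (2)$$

for any $\epsilon > 0$, the secrecy capacity of the communication model in Figure 1 is $(1-\alpha) C_M$, where $C_M$ is the capacity of the main channel, i.e.,

$$C_M = \max_{P_X} I(X;Y).$$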

Proof.

The proof of Theorem 1 is divided into two parts. The first part, given in Section 4, proves the converse half: no achievable real number R can exceed the secrecy capacity stated in the theorem. The second part, given in Section 5, proves the direct half: every real number R below that value is achievable. □

Theorem 1 gives the secrecy capacity of the wiretap channel model depicted in Figure 1 whenever the Markov chain of channel states satisfies Formula (2). In the rest of this section, we introduce a class of Markov chains satisfying (2) in Theorem 2, and provide the secrecy capacity of the related wiretap channel model in Corollary 1.

A stationary Markov chain is called ergodic if, for each pair of states $t, t' \in \mathcal{T}$, it is possible to go from state $t$ to state $t'$ in a finite expected number of steps. One can prove that, if a Markov chain is ergodic, the stationary probability distribution of the state is unique.

Theorem 2. (Law of Large Numbers for Markov Chains)

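If the Markov chain $\{T_n\}$ is ergodic, let π be the unique stationary distribution of the state. Then, it follows that

$$\frac{1}{N} \sum_{n=1}^{N} \mathbb{1}(T_n = t) \to \pi(t) \quad \text{in probability as } N \to \infty$$

for each channel state $t$, where $\mathbb{1}(T_n = t)$ is 1 or 0, indicating whether $T_n = t$ is true or not.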
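A quick numerical check of this law of large numbers is sketched below. The transition matrix and function names are hypothetical. The stationary distribution is approximated by power iteration, which additionally assumes the chain is aperiodic so that the iteration converges; the empirical state frequencies of one long sample path are then compared against it.

```python
import random


def stationary_distribution(P, iters=1000):
    """Approximate pi with pi = pi P by power iteration (assumes an aperiodic ergodic chain)."""
    k = len(P)
    pi = [1.0 / k] * k
    for _ in range(iters):
        pi = [sum(pi[t] * P[t][u] for t in range(k)) for u in range(k)]
    return pi


def empirical_frequencies(P, init, n, rng):
    """Fraction of the first n time slots that a sample path spends in each state."""
    states = list(range(len(P)))
    counts = [0] * len(P)
    t = rng.choices(states, weights=init)[0]
    for _ in range(n):
        counts[t] += 1
        t = rng.choices(states, weights=P[t])[0]
    return [c / n for c in counts]


P = [[0.9, 0.1], [0.2, 0.8]]  # hypothetical two-state chain
pi = stationary_distribution(P)
freq = empirical_frequencies(P, pi, 200_000, random.Random(1))
print("stationary:", pi)
print("empirical: ", freq)
```

For this matrix the stationary distribution is (2/3, 1/3), and the empirical frequencies of a long path should land close to it.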

With the theorem above, we immediately obtain the following corollary.

Corollary 1.

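If the Markov chain $\{T_n\}$ is ergodic with the unique stationary distribution π over $\mathcal{T}$, then the secrecy capacity of the wiretap channel model depicted in Figure 1 is given by

$$C_s = (1 - \alpha) C_M,$$

where $C_M$ is the capacity of the main channel, and

$$\alpha = \sum_{t \in \mathcal{T}_0} \pi(t),$$

with $\mathcal{T}_0$ the set of noiseless states from Definition 3.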

3. Examples

This section gives two simple examples of the FSMEC defined in Definition 3. Example 2 covers the discrete memoryless erasure channel (DMEC) and Example 3 covers a simple two-state FSMEC.

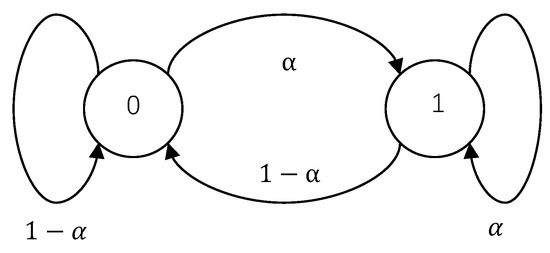

Example 2.

Suppose that the set of channel states with and . Meanwhile, let

and

The state transition diagram of the channel states in this example is depicted in Figure 2. It is obvious that the FSMEC is in fact specialized into a DMEC with the transition probability

From Theorem 2 in Ref. [6], the secrecy capacity of the communication model in Figure 1, with DMEC as the wiretap channel, is

where X and Y are the input and output of the main channel, respectively, and Z is the output of the wiretap channel under the channel state T; follows from the facts that forms a Markov chain (cf. Proposition 1) and T is independent from X and Y; follows from the fact that when , and Z is determined when ; follows from the assumption that T is independent from X and Y; and follows from (3) and (4).

Figure 2. State transition diagram of discrete memoryless erasure channels.

Clearly, Formula (2) holds with . Thus, in this case, the result of Theorem 1 in this paper coincides with that of Theorem 2 in Ref. [6].
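The reduction used in Example 2 can be retraced with a short derivation. This is a sketch under assumed notation, not the paper's own chain of equalities: $S = 1$ when the state $T$ is noiseless and $S = 0$ otherwise, $q = \Pr\{S = 1\}$, and $C_M = \max_{P_X} I(X;Y)$. It relies on the facts stated in the text: $Z = Y$ in a noiseless state, $Z = ?$ in a noisy state, the letter ? is not a main channel output symbol, and $T$ is independent of $X$ and $Y$.

```latex
\begin{align*}
I(X;Z) &= I(X;Z,S)
  && \text{($S$ is a function of $Z$: $S = 0$ iff $Z = ?$)} \\
&= I(X;S) + I(X;Z \mid S) = I(X;Z \mid S)
  && \text{($S$ is independent of $X$)} \\
&= q\, I(X;Z \mid S = 1) + (1-q)\, I(X;Z \mid S = 0) \\
&= q\, I(X;Y \mid S = 1) + 0
  && \text{($Z = Y$ if $S = 1$, $Z = ?$ if $S = 0$)} \\
&= q\, I(X;Y)
  && \text{($S$ is independent of $(X,Y)$)} \\[4pt]
\max_{P_X} \bigl[ I(X;Y) - I(X;Z) \bigr]
  &= (1-q) \max_{P_X} I(X;Y) = (1-q)\, C_M .
\end{align*}
```

Under these assumptions, the degraded wiretap formula $\max_{P_X} [I(X;Y) - I(X;Z)]$ scales the main channel capacity by the fraction of time slots in which the eavesdropper sees nothing.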

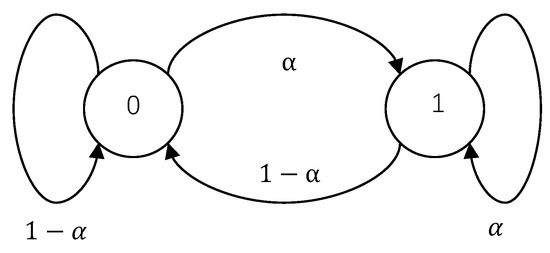

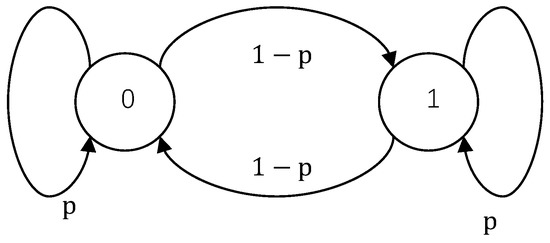

Example 3.

Let

and . We arrive at a simple two-state Markov erasure channel whose transition diagram is depicted in Figure 3. Furthermore, observe that

where the last equality follows because when , and

when . It is obvious that

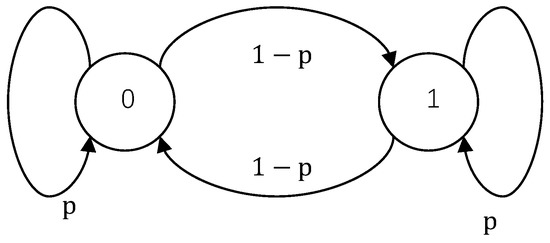

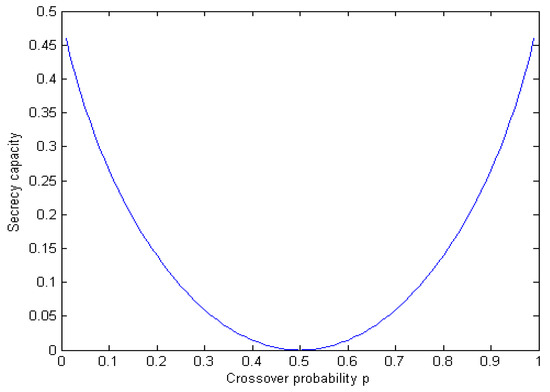

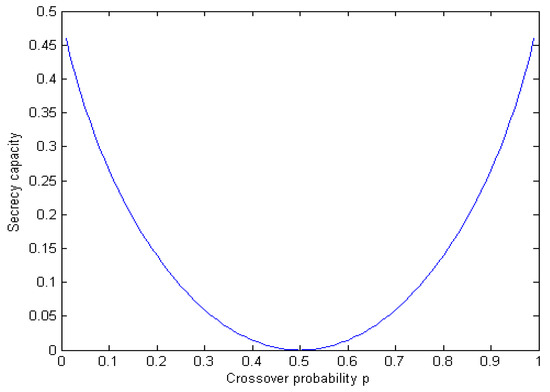

for . Formula (2) is then established immediately from the law of large numbers for Markov chains (Theorem 2). Applying Theorem 1, the secrecy capacity of the communication model in this case is . Figure 4 shows the relationship between the secrecy capacity and the crossover probability p in this example.

Figure 3. State transition diagram of a two-state Markov chain.

Figure 4. Secrecy capacity of the two-state Markov erasure wiretap channel in Example 3.
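A curve of this kind can be tabulated with a few lines of Python. The sketch rests on assumptions that are not confirmed by the surviving text: the main channel is taken to be a binary symmetric channel with crossover probability p, the secrecy capacity is taken to have the form (1 − α)·C_M with α the stationary probability of the noiseless state, and the transition probabilities `a` and `b` are placeholder values, not the parameters of Example 3.

```python
import math


def binary_entropy(p):
    """Binary entropy in bits, with the convention h(0) = h(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p):
    """Capacity of a binary symmetric channel with crossover probability p."""
    return 1.0 - binary_entropy(p)


def noiseless_fraction(a, b):
    """Stationary probability of the noiseless state of a two-state chain,
    where a = Pr{noiseless -> noisy} and b = Pr{noisy -> noiseless}."""
    return b / (a + b)


def secrecy_capacity(alpha, c_main):
    """Assumed form (1 - alpha) * C_M for the degraded erasure wiretap model."""
    return (1.0 - alpha) * c_main


alpha = noiseless_fraction(a=0.1, b=0.2)  # hypothetical transition probabilities
for p in (0.0, 0.05, 0.1, 0.2, 0.5):
    print(f"p={p:.2f}  C_s={secrecy_capacity(alpha, bsc_capacity(p)):.4f}")
```

Under these assumptions the secrecy capacity decreases monotonically in p on [0, 1/2] and vanishes at p = 1/2, where the main channel itself carries no information.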

4. Converse Half of Theorem 1

This section proves that every achievable real number R must satisfy . The proof is based on Fano’s inequality (cf. Formula (76) in Ref. [6]) and Proposition 1.

For any given and , Formula (2) indicates that

or equivalently

when N is sufficiently large, where

Suppose that there exists a code of length N satisfying (1), i.e.,

Then, we have

where as , and the last inequality follows from Fano’s inequality. Since , the formula above indicates that

The value of is upper bounded by

where (a) and (b) follow from the fact that forms a Markov chain, and (c) follows from Proposition 1 and the fact that is independent from and .

For any , denoting , Formula (7) is further deduced by

where (a) follows because forms a Markov chain when given , and (b) follows because and are independent from . For any fixed , denote . On account of the chain rule, we have

and

Moreover, from the property of DMC, Remark 1 yields

Combining Formulas (9)–(12), it follows that

Considering that forms a Markov chain, we have

or equivalently

Substituting the formula above into Formula (13), we have

Noticing that

Formula (14) is further deduced by

Substituting the formula above with Formula (8) gives

where the last inequality follows from (5). Combining (6) and the formula above yields

is finally established by letting and converge to 0. This completes the proof of the converse half.

5. Direct Half of Theorem 1

This section proves that every real number R satisfying is achievable, which is the direct half of Theorem 1. It suffices to prove the achievability of . More precisely, for any given , we need to prove the existence of the encoder–decoder pair such that

The proof is based on the following theorem.

Theorem 3.

(Theorem 1 in Ref. [17]). Let a real number be fixed and given. For any and , denote

Then, for any real numbers and , one can construct a code of length N over the DMC defined in Definition 2 such that

when N is sufficiently large.

Proof.

Let

and

for a small . Suppose that is a code of length N satisfying

Applying the code to the communication model in Figure 1, it is already satisfied that

when and are sufficiently small. To establish , let the value of N be sufficiently large such that

The value of is upper bounded by

where follows because W is independent from ; follows because is independent from ; follows because when , and

when ; and follows from Formula (15). Consequently,

when is sufficiently small. The proof of the direct half is completed. □

6. Proof of Proposition 1

This section proves that forms a Markov chain for every , which is Proposition 1. It suffices to prove that

for any and . Suppose that and are given. Denote

If , both sides of (16) equal 0. Formula (16) is established. If , terms in Formula (16) are deduced as follows. Firstly,

where the last equality follows because and are independent from . Moreover,

Finally,

where the last equality follows because is independent from . Combining Formulas (17)–(19) results in Formula (16) also holding for , and with . The proof is completed.

7. Conclusions

Since the data in a WBAN is closely related to personal health, it is vital to protect this health information from attacks. In this paper, from the perspective of information theory, we studied secure transmission in WBANs and solved the capacity problem of a class of finite-state Markov erasure wiretap channels for the IoT. The coding scheme used in this paper comes from the generalized wiretap channel II with a noisy main channel. The idea may be used to solve the capacity problems of other non-DMC wiretap channels. In a theoretical sense, the security of the proposed coding scheme does not depend on the computational capability of the computers involved, so it can guarantee the security of the transmitted data in a WBAN and thereby protect personal privacy.

Author Contributions

Conceptualization, B.W.; Methodology, B.W.; Software, Y.S., W.G. and G.F.; Data Curation, W.G.; Writing—Original Draft Preparation, B.W.; Writing—Review & Editing, Y.S.; Supervision, J.D.; Funding Acquisition, J.D.

Funding

This research was funded by the National Natural Science Foundation of China Nos. 51804304, 61571338 and U1709218, the Natural Science Basic Research Plan of Shaanxi Province No. 2018JM5052, the Key Research and Development Plan of Shaanxi Province No. 2017ZDCXL-GY-05-01, the National Key Research and Development Program of China Nos. 2016YFE0123000, YS2017YFGH000872, and 2018YFC0808301, the Xi’an Key Laboratory of Mobile Edge Computing and Security No. 201805052-ZD3CG36, the China Postdoctoral Science Foundation No. 2015M5826, the Scientific Research Program Funded by Shaanxi Provincial Education Department No. 2016JK1501, and the Shaanxi Provincial Postdoctoral Science Foundation.

Acknowledgments

The authors would like to thank Ning Cai of ShanghaiTech University for his help with the proofs in this paper. The authors are grateful to the anonymous reviewers for their constructive comments on the paper.

Conflicts of Interest

The authors declare no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

1. Tolossa, Y.J.; Vuppala, S.; Kaddoum, G.; Abreu, G. On the uplink secrecy capacity analysis in D2D-enabled cellular network. IEEE Syst. J. 2017, 12, 2297–2307.

2. Jameel, F.; Wyne, S.; Kaddoum, G.; Duong, T.Q. A comprehensive survey on cooperative relaying and jamming strategies for physical layer security. IEEE Commun. Surv. Tutor. 2018.

3. Kong, L.; Vuppala, S.; Kaddoum, G. Secrecy Analysis of Random MIMO Wireless Networks over α-μ Fading Channels. IEEE Trans. Veh. Technol. 2018.

4. Zhang, P.N.; Ma, J. Channel Characteristic Aware Privacy Protection Mechanism in WBAN. Sensors 2018, 18, 2403.

5. Anwar, M.; Abdullah, A.H.; Butt, R.A.; Ashraf, M.W.; Qureshi, K.N.; Ullah, F. Securing Data Communication in Wireless Body Area Networks Using Digital Signatures. Technol. J. 2018, 23, 50–55.

6. Wyner, A.D. The Wire-Tap Channel. Bell Syst. Technol. J. 1975, 54, 1355–1387.

7. Kramer, G. Topics in Multi-user Information Theory. Found. Trends Commun. Inf. Theory 2007, 4, 265–444.

8. Csiszar, I.; Korner, J. Broadcast channels with confidential messages. IEEE Trans. Inf. Theory 1978, 24, 339–348.

9. Chen, Y.; Han Vinck, A.J. Wiretap channel with side information. IEEE Trans. Inf. Theory 2008, 54, 395–402.

10. Dai, B.; Luo, Y. Some new results on the wiretap channel with side information. Entropy 2012, 14, 1671–1702.

11. Dai, B.; Han Vinck, A.J.; Hong, J.; Luo, Y.; Zhuang, Z. Degraded Broadcast Channel with Noncausal Side Information, Confidential Messages and Noiseless Feedback. In Proceedings of the 2012 IEEE International Symposium on Information Theory, Cambridge, MA, USA, 1–6 July 2012; pp. 438–442.

12. Dai, B.; Luo, Y.; Han Vinck, A.J. Capacity region of broadcast channels with private message and causal side information. In Proceedings of the 3rd International Conference on Image and Signal Processing (CISP 2010), Yantai, China, 16–18 October 2010; pp. 3770–3773.

13. Khisti, A.; Diggavi, S.N.; Wornell, G.W. Secret-key agreement with channel state information at the transmitter. IEEE Trans. Inf. Forensics Secur. 2011, 6, 672–681.

14. Chia, Y.H.; El Gamal, A. Wiretap channel with causal state information. IEEE Trans. Inf. Theory 2012, 58, 2838–2849.

15. Ozarow, L.H.; Wyner, A.D. Wire-tap channel II. AT&T Bell Lab. Technol. J. 1984, 63, 2135–2157.

16. He, D.; Luo, Y. A kind of non-DMC erasure wiretap channel. In Proceedings of the 2012 IEEE 14th International Conference on Communication Technology, Chengdu, China, 9–11 November 2012; pp. 1082–1087.

17. He, D.; Luo, Y.; Cai, N. Strong Secrecy Capacity of the Wiretap Channel II with DMC Main Channel. In Proceedings of the 2016 IEEE International Symposium on Information Theory (ISIT), Barcelona, Spain, 10–15 July 2016.

18. Gilbert, E.N. Capacity of a burst-noise channel. Bell Syst. Technol. J. 1960, 39, 1253–1265.

19. Elliott, E.O. Estimates of error rates for codes on burst-noise channels. Bell Syst. Technol. J. 1963, 42, 1977–1997.

20. Wang, H.S.; Moayeri, N. Finite-state Markov channel-a useful model for radio communication channels. IEEE Trans. Veh. Technol. 1995, 44, 163–171.

21. Lv, N.; Chen, C.; Qiu, T.; Sangaiah, A.K. Deep Learning and Superpixel Feature Extraction based on Sparse Autoencoder for Change Detection in SAR Images. IEEE Trans. Ind. Inf. 2018.

22. Chen, C.; Hu, J.; Qiu, T.; Atiquzzaman, M.; Ren, Z. CVCG: Cooperative V2V-aided Transmission Scheme Based on Coalitional Game for Popular Content Distribution in Vehicular Ad-hoc Networks. IEEE Trans. Mob. Comput. 2018.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).