Abstract

A new variance formula is developed using the generalized inverse of an increasing function. Based on the variance formula, a new entropy formula for any uncertain variable is provided. Most of the entropy formulas in the literature are special cases of the new entropy formula. Using the new entropy formula, the maximum entropy distribution for unimodal entropy of uncertain variables is provided without using the Euler–Lagrange equation.

1. Introduction

The concept of entropy was first introduced by Rudolf Clausius in the mid-19th century as a measure of the disorder or randomness in a system. In 1948, Shannon [1] introduced information entropy, which measures the unpredictability of the realization of a random variable. Subsequently, information entropy developed into an essential tool in information theory (see Gray [2]). Entropy was introduced into dynamical systems by Kolmogorov [3] in 1958 to investigate the conjugacy problem for Bernoulli shifts. In 2009, Liu [4] proposed logarithmic entropy in uncertainty theory to quantify the difficulty of forecasting the outcome of an uncertain variable. In 2012, Malakar et al. [5] gave an interesting application of maximum joint entropy to automated learning machines. Currently, entropy is widely used in various fields, such as probability theory, ergodic theory, dynamical systems, machine learning and data analysis, and uncertainty theory. We refer the reader to Martin and England [6], Walters [7], Einsiedler and Ward [8], and Liu [9] for a detailed introduction to entropy theory in these fields.

The maximum entropy principle was first expounded by Jaynes [10,11], who gave a natural criterion of choice: from the set of all probability distributions compatible with the prescribed mean values of several random variables, choose the one that maximizes Shannon entropy. Since then, maximum entropy methods have become widely applied tools for constructing probability distributions in statistical inference [12], decision-making [13,14], communication theory [15], time-series analysis [16], and reliability theory [17,18]. In 2017, Abbas et al. [19] made an elaborate comparison between the maximum entropy method and the maximum log-probability method from the viewpoint of the Kullback–Leibler divergence. In this paper, we discuss entropy and the maximum entropy principle in uncertainty theory.

Uncertainty theory, founded by Liu [20] in 2007 and refined in 2015 [9], is a branch of axiomatic mathematics that deals with human belief degrees based on the normality, duality, subadditivity, and product axioms. Within the past few years, it has attracted numerous researchers. Peng and Iwamura [21] and Liu and Lio [22] provided a sufficient and necessary characterization of an uncertainty distribution. Based on the inverse uncertainty distribution, Yao [23] introduced a new approach to computing the variance. Subsequently, Sheng and Kar [24] extended Yao’s result to the moments of an uncertain variable.

After entropy was introduced into uncertainty theory, entropy and the maximum entropy principle were studied by many researchers. Inspired by the maximum entropy principles in information theory and statistics, Chen and Dai [25] explored the maximum entropy principle for logarithmic entropy. Using the Lagrange multipliers method, they proved that the normal uncertainty distribution is the maximum entropy distribution under prescribed constraints on the expected value and variance. In addition, using Fubini’s theorem, Dai and Chen [26] built an entropy formula for regular uncertain variables (see Definition 2). Subsequently, there have been many extensions of entropy, the entropy formula, and the corresponding maximum entropy distribution. Yao et al. [27] proposed sine entropy, provided an entropy formula for regular uncertain variables, and obtained the maximum entropy distribution for sine entropy. Tang and Gao [28] proposed triangular entropy and provided an entropy formula for regular uncertain variables, and Ning et al. [29] applied triangular entropy to portfolio selection. Dai [30] suggested quadratic entropy, provided an entropy formula for regular uncertain variables, and found the maximum entropy distribution for quadratic entropy. We point out that all the entropy formulas in Dai and Chen [26], Yao et al. [27], Tang and Gao [28], and Dai [30] are based on Fubini’s theorem. Subsequently, Ma [31] proposed unimodal entropy of uncertain variables, provided an entropy formula for regular uncertain variables, and found the maximum entropy distribution for unimodal entropy of regular uncertain variables. Nevertheless, limited by their methods, all the entropy formulas in Dai and Chen [26], Yao et al. [27], Tang and Gao [28], Dai [30], and Ma [31] are established only for regular uncertain variables.
We know most uncertain variables are not regular. Therefore, it has some significance not only in theory but also in practice to prove the entropy formulas of Dai and Chen [26], Yao et al. [27], Tang and Gao [28], Dai [30], and Ma [31] are still true for any uncertain variable. In this paper, we will provide an entropy formula that is true for any uncertain variable by using the generalized inverse of an increasing function. We emphasize that the entropy formulas of Dai and Chen [26], Yao et al. [27], Tang and Gao [28], Dai [30], and Ma [31] are special cases of our entropy formula, and our method differs completely from those methods used by Dai and Chen [26], Yao et al. [27], Dai [30], and Ma [31].

Furthermore, we point out that all the maximum entropy distributions of Chen and Dai [25], Yao et al. [27], and Dai [30] were obtained based on the Lagrange multipliers method. Using our entropy formula, we will obtain the maximum entropy distribution without utilizing the Lagrange multipliers method. We also emphasize that the maximum entropy distributions of Chen and Dai [25], Yao et al. [27], and Dai [30] are special cases of ours.

The rest of the paper is arranged as follows. In Section 2, we first collect some necessary facts in uncertainty theory and some requisite results on the generalized inverse of an increasing function. Then, we review the entropy, entropy formulas, and the maximum entropy principles in uncertainty theory. In Section 3, we present our entropy formula and our maximum entropy distribution. We also demonstrate some applications of our entropy formula and maximum entropy distribution. In Section 4, we prove our entropy formula and derive the maximum entropy distribution of unimodal entropy. In Section 5, we verify that the maximum entropy distributions of logarithmic entropy in Theorem 2, sine entropy in Theorem 5, and quadratic entropy in Theorem 8 are special cases of our maximum entropy distributions. Finally, a concise discussion and directions for further research are given in Section 6.

2. Preliminaries

In this section, first, we will collect the necessary facts in uncertainty theory. Second, we will recall some requisite results of the generalized inverse of an increasing function. Finally, we review the entropy, entropy formulas, and the maximum entropy principles in uncertainty theory.

2.1. Some Primary Facts in Uncertainty Theory

Let $\Gamma$ be a non-empty set and suppose $\mathcal{L}$ is a $\sigma$-algebra over $\Gamma$. Each element A in $\mathcal{L}$ is called an event. To handle belief degree reasonably, Liu [20] introduced the uncertain measure.

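Definition 1.

A function $\mathcal{M}:\mathcal{L}\to[0,1]$ is called an uncertain measure provided $\mathcal{M}$ satisfies the following three axioms:

1. Normality: $\mathcal{M}\{\Gamma\}=1$;

2. Duality: $\mathcal{M}\{A\}+\mathcal{M}\{A^{c}\}=1$ for each event A;

3. Subadditivity: for each sequence of events $A_1, A_2, \ldots$, one gets

$$\mathcal{M}\Big\{\bigcup_{i=1}^{\infty}A_i\Big\}\le\sum_{i=1}^{\infty}\mathcal{M}\{A_i\}.$$

The triplet $(\Gamma,\mathcal{L},\mathcal{M})$ is called an uncertainty space. A measurable function $X:\Gamma\to\mathbb{R}$ is called an uncertain variable. Let X be an uncertain variable; the function $\Phi(x)=\mathcal{M}\{X\le x\}$ is called the uncertainty distribution of X.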

Definition 2

([32]). An uncertainty distribution $\Phi$ is called regular provided it is strictly increasing and continuous over $\{x: 0<\Phi(x)<1\}$, and

$$\lim_{x\to-\infty}\Phi(x)=0,\qquad \lim_{x\to+\infty}\Phi(x)=1.$$

An uncertain variable is called regular if its uncertainty distribution is regular.

Obviously, a regular uncertainty distribution is invertible. Now, we recall two regular uncertainty distributions that are most used in uncertainty theory. The first is the normal uncertainty distribution [32], which is defined by

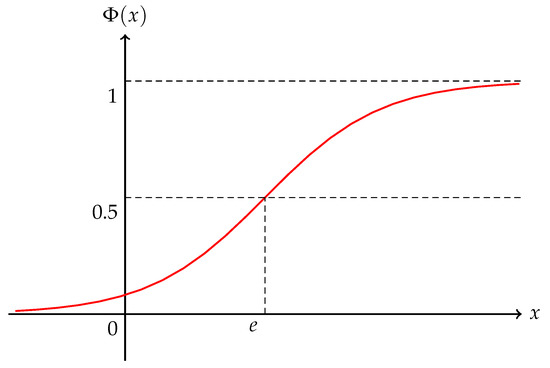

Here, e and are real numbers with . We call an uncertain variable X normal provided its uncertainty distribution is normal and write (Figure 1).

Figure 1.

Normal uncertainty distribution .

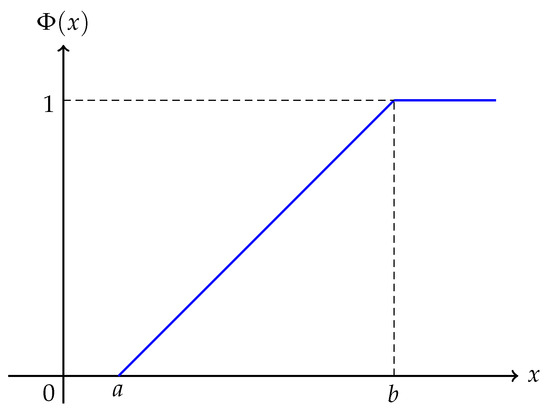

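The second is the linear uncertainty distribution [32], which is defined by

$$\Phi(x)=\begin{cases}0, & x<a,\\[2pt] \dfrac{x-a}{b-a}, & a\le x\le b,\\[2pt] 1, & x>b,\end{cases}$$

where a and b are real numbers with $a<b$ (Figure 2).

Figure 2. Linear uncertainty distribution $\mathcal{L}(a,b)$.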

Liu [20] suggested the expectation to describe the mean of an uncertain variable.

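Definition 3

([20]). Let X be an uncertain variable. The expectation of X is defined by

$$E[X]=\int_{0}^{+\infty}\mathcal{M}\{X\ge x\}\,dx-\int_{-\infty}^{0}\mathcal{M}\{X\le x\}\,dx,$$

provided that at least one of the two integrals above is finite.

Let X be an uncertain variable with finite expectation e; the variance of X is defined by

$$V[X]=E\big[(X-e)^2\big].$$

Given an uncertain variable X with uncertainty distribution $\Phi$ and finite expectation e, the variance of X can be computed by (see Section 2.6 of Chapter 2 in [9])

$$V[X]=\int_{0}^{+\infty}\Big(1-\Phi\big(e+\sqrt{x}\big)+\Phi\big(e-\sqrt{x}\big)\Big)\,dx.$$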

Yao [23] provided a nice formula for calculating the variance by inverse uncertainty distribution.

Theorem 1

(Yao [23]). Assume that X is a regular uncertain variable with uncertainty distribution $\Phi$. If $E[X]$ is finite, then

$$V[X]=\int_{0}^{1}\big(\Phi^{-1}(\alpha)-E[X]\big)^2\,d\alpha.$$

We will prove the variance formula of Yao is true for any uncertain variable in Theorem 9, which is one of our main tools for establishing our entropy formula and maximum entropy principle.
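As a quick numerical illustration of the inverse-distribution variance formula, the following sketch evaluates $\int_0^1(\Phi^{-1}(\alpha)-e)^2\,d\alpha$ for a normal uncertain variable with a midpoint rule and compares it with $\sigma^2$. The function names, the grid size, and the sample parameters are illustrative assumptions, not part of the paper; the closed form of $\Phi^{-1}$ used here is the standard inverse of the normal uncertainty distribution.

```python
import math

def normal_inverse(alpha, e, sigma):
    """Inverse of the normal uncertainty distribution N(e, sigma) for 0 < alpha < 1."""
    return e + math.sqrt(3.0) * sigma / math.pi * math.log(alpha / (1.0 - alpha))

def variance_by_inverse(inv, e, n=200_000):
    """Midpoint-rule approximation of the integral of (inv(alpha) - e)^2 over (0, 1)."""
    h = 1.0 / n
    return h * sum((inv((i + 0.5) * h) - e) ** 2 for i in range(n))

# Hypothetical sample parameters chosen only for illustration.
e, sigma = 1.0, 2.0
v = variance_by_inverse(lambda a: normal_inverse(a, e, sigma), e)
print(v, sigma ** 2)  # the two values should agree up to discretization error
```

The midpoint rule never evaluates the integrand at $\alpha=0$ or $\alpha=1$, where $\Phi^{-1}$ is unbounded, which is why it is used here instead of a rule that includes the endpoints.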

2.2. Necessary Results of the Generalized Inverse of an Increasing Function

To present our results, we need to review the generalized inverse of an increasing function, which is a basic tool in statistics; see Embrechts and Hofert [33], Resnick [34], and Kämpke and Radermacher [35]. Here, we follow the definition of Embrechts and Hofert [33].

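Definition 4

([33]). Let $T:\mathbb{R}\to\mathbb{R}$ be an increasing function. The generalized inverse of $T$ is defined by

$$T^{-}(y)=\inf\{x\in\mathbb{R}: T(x)\ge y\},\quad y\in\mathbb{R},$$

with the convention that $\inf\emptyset=+\infty$, where ∅ is the empty set.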

Specifically, we define with the convention that .

Remark 1.

To stress the difference between the generalized inverse and standard inverse, we denote the generalized inverse of an increasing function by . If is strictly increasing and continuous, is just the standard inverse of .

We also point out that, by the above definition, may be and may be +∞. Here is an example used frequently in uncertainty theory.

Example 1.

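Let $\Phi$ be a normal uncertainty distribution defined by (1). Then its generalized inverse $\Phi^{-}$ is:

$$\Phi^{-}(\alpha)=\begin{cases}-\infty, & \alpha\le 0,\\[2pt] e+\dfrac{\sqrt{3}\,\sigma}{\pi}\ln\dfrac{\alpha}{1-\alpha}, & 0<\alpha<1,\\[2pt] +\infty, & \alpha\ge 1.\end{cases}$$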

We also need to introduce the generalized inverse of a left-continuous increasing function; see Appendix B in Iritani and Kuga [36].

Definition 5

([36]). Let G be a left-continuous and increasing function over . The generalized inverse of G is defined by

for all real numbers x.

A further useful fact is that, for a right-continuous increasing function, the generalized inverse of its generalized inverse is the function itself. To the best of our knowledge, this result was first proved by Iritani and Kuga [36] and was refined by Kämpke and Radermacher [35].
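To make the generalized inverse concrete for a distribution that is not regular, the sketch below computes $\inf\{x: F(x)\ge y\}$ by bisection for an increasing, right-continuous function $F$. The step distribution, the search bracket, and the tolerance are assumptions chosen for illustration; the convention of returning $\pm\infty$ outside the bracket is a simplification of this sketch.

```python
import math

def step_distribution(x):
    """A non-regular uncertainty distribution: 0 below 0, 0.5 on [0, 1), 1 from 1 on."""
    if x < 0.0:
        return 0.0
    if x < 1.0:
        return 0.5
    return 1.0

def generalized_inverse(F, y, lo=-10.0, hi=10.0, tol=1e-9):
    """Approximate inf{x : F(x) >= y} for increasing, right-continuous F on [lo, hi]."""
    if F(hi) < y:
        return math.inf    # no x in the bracket reaches level y
    if F(lo) >= y:
        return -math.inf   # the infimum lies at or below lo; reported as -inf here
    # Invariant: F(lo) < y <= F(hi); shrink the bracket around the infimum.
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if F(mid) >= y:
            hi = mid
        else:
            lo = mid
    return hi

print(generalized_inverse(step_distribution, 0.3))
print(generalized_inverse(step_distribution, 0.7))
```

On the flat piece of the step distribution, every level in $(0, 0.5]$ maps to the left end of the plateau and every level in $(0.5, 1]$ maps to the jump at 1, which is the behaviour a standard inverse cannot express.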

2.3. Entropy Formula and the Principle of Maximum Entropy

To quantify the difficulty in predicting the outcomes of an uncertain variable, Liu [4] proposed logarithmic entropy.

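Definition 6

([4]). Let X be an uncertain variable with uncertainty distribution $\Phi$. The logarithmic entropy of X is defined by

$$H[X]=\int_{-\infty}^{+\infty}S(\Phi(x))\,dx.$$

Here, $S(t)=-t\ln t-(1-t)\ln(1-t)$ for $0<t<1$ and $S(0)=S(1)=0$.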

Using the Lagrange multipliers method, Chen and Dai [25] obtained the maximum entropy distribution for the logarithmic entropy.

Theorem 2

([25]). Suppose X is an uncertain variable with finite expectation e and variance $\sigma^2$. Then,

$$H[X]\le\frac{\pi\sigma}{\sqrt{3}},$$

and the equality holds if and only if X is a normal uncertain variable $\mathcal{N}(e,\sigma)$.

In addition, using Fubini’s theorem, Dai and Chen [26] attained an interesting entropy formula for regular uncertain variables.

Theorem 3

([26]). Suppose X is a regular uncertain variable with uncertainty distribution $\Phi$. If $H[X]$ is finite, we have

$$H[X]=\int_{0}^{1}\Phi^{-1}(\alpha)\ln\frac{\alpha}{1-\alpha}\,d\alpha.$$
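The bound of Theorem 2 and the inverse-distribution entropy formula can be checked numerically. The sketch below evaluates $\int_0^1\Phi^{-1}(\alpha)\ln\frac{\alpha}{1-\alpha}\,d\alpha$ for a normal and a linear uncertain variable with the same expectation and variance, and compares both with $\pi\sigma/\sqrt{3}$. The helper names, grid size, and parameter values are illustrative assumptions.

```python
import math

def log_entropy_by_inverse(inv, n=200_000):
    """Midpoint-rule approximation of the integral of inv(a) * ln(a / (1 - a)) over (0, 1)."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        a = (i + 0.5) * h
        total += inv(a) * math.log(a / (1.0 - a))
    return h * total

# Hypothetical parameters: both variables have expectation e and variance sigma^2.
e, sigma = 0.0, 1.0
half_width = math.sqrt(3.0) * sigma  # linear L(e - sqrt(3)*sigma, e + sqrt(3)*sigma)

def normal_inv(a):
    return e + math.sqrt(3.0) * sigma / math.pi * math.log(a / (1.0 - a))

def linear_inv(a):
    return (e - half_width) + 2.0 * half_width * a

h_normal = log_entropy_by_inverse(normal_inv)
h_linear = log_entropy_by_inverse(linear_inv)
bound = math.pi * sigma / math.sqrt(3.0)
print(h_normal, h_linear, bound)
```

The normal variable should reproduce the bound up to discretization error, while the linear variable, whose logarithmic entropy is half the length of its support, should stay strictly below it.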

Subsequently, Yao et al. [27] proposed sine entropy.

Definition 7

([27]). Let X be an uncertain variable with uncertainty distribution $\Phi$. The sine entropy of X is defined by

$$S[X]=\int_{-\infty}^{+\infty}\sin\big(\pi\Phi(x)\big)\,dx.$$

Here, for .

Yao et al. [27] provided an entropy formula and obtained the maximum entropy distribution of sine entropy.

Theorem 4

([27]). Suppose X is a regular uncertain variable with uncertainty distribution $\Phi$. Then, we have

$$S[X]=-\pi\int_{0}^{1}\Phi^{-1}(\alpha)\cos(\pi\alpha)\,d\alpha.$$

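Theorem 5

([27]). Suppose X is an uncertain variable with uncertainty distribution $\Phi$, expectation e, and variance $\sigma^2$. Then, we have

$$S[X]\le\frac{\pi\sigma}{\sqrt{2}},$$

and equality holds if and only if

$$\Phi(x)=\begin{cases}0, & x<e-\sqrt{2}\,\sigma,\\[2pt] \dfrac{1}{2}+\dfrac{1}{\pi}\arcsin\dfrac{x-e}{\sqrt{2}\,\sigma}, & e-\sqrt{2}\,\sigma\le x\le e+\sqrt{2}\,\sigma,\\[2pt] 1, & x>e+\sqrt{2}\,\sigma.\end{cases}$$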
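The relation between the definition of sine entropy and its inverse-distribution form can be illustrated on a linear uncertain variable, for which both integrals are easy to evaluate. The sketch assumes the sine entropy $\int\sin(\pi\Phi(x))\,dx$ and the inverse form $-\pi\int_0^1\Phi^{-1}(\alpha)\cos(\pi\alpha)\,d\alpha$, which may be written differently in [27]; the function names and the endpoints a and b are illustrative assumptions.

```python
import math

def sine_entropy_direct(phi, lo, hi, n=100_000):
    """Midpoint rule for the integral of sin(pi * phi(x)) over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(math.sin(math.pi * phi(lo + (i + 0.5) * h)) for i in range(n))

def sine_entropy_by_inverse(inv, n=100_000):
    """Midpoint rule for -pi times the integral of inv(a) * cos(pi * a) over (0, 1)."""
    h = 1.0 / n
    return -math.pi * h * sum(
        inv((i + 0.5) * h) * math.cos(math.pi * (i + 0.5) * h) for i in range(n)
    )

# Hypothetical linear uncertain variable L(a, b).
a, b = 1.0, 4.0

def phi(x):
    return min(1.0, max(0.0, (x - a) / (b - a)))

def inv(alpha):
    return a + (b - a) * alpha

# Outside [a, b] the integrand sin(pi * phi(x)) vanishes, so [a, b] suffices.
direct = sine_entropy_direct(phi, a, b)
via_inverse = sine_entropy_by_inverse(inv)
print(direct, via_inverse, 2.0 * (b - a) / math.pi)
```

Both numerical values should agree with the closed form $2(b-a)/\pi$ obtained by integrating $\sin(\pi(x-a)/(b-a))$ over $[a,b]$.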

Tang and Gao [28] suggested triangular entropy and found an entropy formula.

Definition 8

([28]). Let X be an uncertain variable with uncertainty distribution . The triangular entropy of X is defined by

where

Theorem 6

([28]). Suppose X is a regular uncertain variable with uncertainty distribution . Then, we have

Dai [30] put forward quadratic entropy.

Definition 9

([30]). Let X be an uncertain variable with uncertainty distribution $\Phi$. The quadratic entropy of X is defined by

$$Q[X]=\int_{-\infty}^{+\infty}\Phi(x)\big(1-\Phi(x)\big)\,dx.$$

Here, for .

Dai [30] also provided an entropy formula and got the maximum entropy distribution of quadratic entropy.

Theorem 7

([30]). Suppose X is a regular uncertain variable with uncertainty distribution $\Phi$. If the entropy exists, then

$$Q[X]=\int_{0}^{1}\Phi^{-1}(\alpha)(2\alpha-1)\,d\alpha.$$

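Theorem 8

([30]). Suppose X is an uncertain variable with uncertainty distribution $\Phi$, expectation e, and variance $\sigma^2$. Then, we have

$$Q[X]\le\frac{\sigma}{\sqrt{3}},$$

and equality holds if and only if

$$X\sim\mathcal{L}\big(e-\sqrt{3}\,\sigma,\ e+\sqrt{3}\,\sigma\big).$$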
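A short numerical check of the quadratic entropy bound is sketched below. It assumes the inverse-distribution form $Q[X]=\int_0^1\Phi^{-1}(\alpha)(2\alpha-1)\,d\alpha$ and compares a linear and a normal uncertain variable sharing the same expectation and variance against $\sigma/\sqrt{3}$. All names and parameter values are illustrative assumptions.

```python
import math

def quadratic_entropy_by_inverse(inv, n=200_000):
    """Midpoint rule for the integral of inv(a) * (2a - 1) over (0, 1)."""
    h = 1.0 / n
    return h * sum(inv((i + 0.5) * h) * (2.0 * (i + 0.5) * h - 1.0) for i in range(n))

# Hypothetical parameters shared by both variables.
e, sigma = 0.0, 1.5

def linear_inv(a):
    # Inverse distribution of L(e - sqrt(3)*sigma, e + sqrt(3)*sigma), variance sigma^2.
    return e + math.sqrt(3.0) * sigma * (2.0 * a - 1.0)

def normal_inv(a):
    # Inverse distribution of N(e, sigma).
    return e + math.sqrt(3.0) * sigma / math.pi * math.log(a / (1.0 - a))

q_linear = quadratic_entropy_by_inverse(linear_inv)
q_normal = quadratic_entropy_by_inverse(normal_inv)
bound = sigma / math.sqrt(3.0)
print(q_linear, q_normal, bound)
```

The linear variable should attain the bound, while the normal variable, which maximizes logarithmic entropy, falls below it for quadratic entropy.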

Ma [31] suggested unimodal entropy.

Definition 10

([31]). Let X be an uncertain variable with uncertainty distribution . The unimodal entropy of X is defined by

where function U is increasing on and decreasing on , .

Ma [31] also provided an entropy formula and got the maximum entropy distribution of the unimodal entropy for regular uncertain variables. The readers can refer to Liu [9], Yao et al. [27], Tang and Gao [28], Dai [30], Ma [31], and the references therein for details.

Here, we emphasize the entropy formulas of Dai and Chen [26], Yao et al. [27], Tang and Gao [28], Dai [30], and Ma [31] hold only for regular uncertain variables. We will develop an entropy formula that is true for any uncertain variable. To establish the entropy formulas, the method used by Dai and Chen [26], Yao et al. [27], Tang and Gao [28], and Dai [30] is Fubini’s theorem. We stress that Fubini’s theorem does not work in our case because the uncertainty distribution may not be regular here. We need to develop new methods.

Finally, we point out that, to obtain the maximum entropy distribution, the main method used by Chen and Dai [25], Yao et al. [27], and Dai [30] is the Lagrange multipliers method. Based on our entropy formula, we can obtain the maximum entropy distribution without using the Lagrange multipliers method. We also stress that the only tool we need is the Cauchy–Schwarz inequality. In the following section, we will exhibit our new entropy formula and provide the maximum entropy distribution of unimodal entropy.

3. Results

In this section, we will present our results and give some remarks. First, we point out that the variance formula of Yao [23] for regular uncertain variables is valid for any uncertain variable.

Theorem 9.

Let X be an uncertain variable with uncertainty distribution $\Phi$, finite expectation e, and finite variance. Then

$$V[X]=\int_{0}^{1}\big(\Phi^{-}(\alpha)-e\big)^2\,d\alpha,$$

where $\Phi^{-}$ is the generalized inverse of $\Phi$.

We leave the verification of Theorem 9 to the next section. Based on our variance formula and some facts on the generalized inverse, we will develop an entropy formula for any uncertain variable.
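The following sketch illustrates the variance formula on an uncertain variable that is not regular. It takes a step distribution with expectation 0.5, computes the variance once from the distribution via $\int_0^{\infty}(1-\Phi(e+\sqrt{x})+\Phi(e-\sqrt{x}))\,dx$ and once from the generalized inverse via $\int_0^1(\Phi^{-}(\alpha)-e)^2\,d\alpha$, and compares the two. The distribution, the bisection helper, the truncation point `upper`, and the grid sizes are assumptions made for this illustration.

```python
import math

def step_distribution(x):
    """Non-regular distribution: 0 below 0, 0.5 on [0, 1), 1 from 1 on (expectation 0.5)."""
    if x < 0.0:
        return 0.0
    if x < 1.0:
        return 0.5
    return 1.0

def generalized_inverse(F, y, lo=-10.0, hi=10.0, tol=1e-9):
    """Approximate inf{x : F(x) >= y} by bisection, assuming F(lo) < y <= F(hi)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if F(mid) >= y:
            hi = mid
        else:
            lo = mid
    return hi

def variance_by_distribution(F, e, upper, n=100_000):
    """Midpoint rule for the integral of 1 - F(e + sqrt(x)) + F(e - sqrt(x)) over [0, upper]."""
    h = upper / n
    total = 0.0
    for i in range(n):
        r = math.sqrt((i + 0.5) * h)
        total += 1.0 - F(e + r) + F(e - r)
    return h * total

def variance_by_generalized_inverse(F, e, n=2_000):
    """Midpoint rule for the integral of (F^-(alpha) - e)^2 over (0, 1)."""
    h = 1.0 / n
    return h * sum((generalized_inverse(F, (i + 0.5) * h) - e) ** 2 for i in range(n))

e = 0.5
# The integrand of the first formula vanishes for x > 0.25, so upper = 4.0 is ample.
v_dist = variance_by_distribution(step_distribution, e, upper=4.0)
v_inv = variance_by_generalized_inverse(step_distribution, e)
print(v_dist, v_inv)
```

For this distribution the standard inverse does not exist, yet both computations should return a value close to 0.25, which is the behaviour the generalized-inverse variance formula describes.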

Theorem 10.

Let X be an uncertain variable with finite expectation and finite variance. Assume further that unimodal function U is differentiable and satisfies

Then we have

where is the generalized inverse of the uncertainty distribution of X.

Remark 2.

1. The entropy formulas in Dai and Chen [26], Yao et al. [27], Tang and Gao [28], Dai [30], and Ma [31] are valid only for regular uncertain variables. Our entropy formula holds for any uncertain variable.

2. Fubini’s theorem, which is used by Dai and Chen [26], Yao et al. [27], Tang and Gao [28], and Dai [30] to establish the entropy formula there, does not work in our setting, because the uncertainty distribution is not necessarily regular here.

We specifically point out, under the assumption that the uncertainty distribution is regular, let , , ,

respectively. By Theorem 10, we can regain the entropy formulas of logarithm entropy in Theorem 3, sine entropy in Theorem 4, triangular entropy in Theorem 6, and quadratic entropy in Theorem 7. As the verifications are routine, we omit them.

Using our entropy formula in Theorem 10, we obtain the maximum entropy distribution of unimodal entropy.

Theorem 11.

Let X be an uncertain variable with uncertainty distribution , finite expectation e, and finite variance . Assume further that unimodal function U is differentiable and satisfies

Then, the unimodal entropy of X satisfies

and the equality holds if and only if

where c is a real number such that

Remark 3.

We stress that the maximum entropy distribution of the unimodal entropy of Ma [31] is provided only for regular uncertain variables. Here, our maximum entropy distribution of unimodal entropy in Theorem 11 is obtained for any uncertain variable.

As applications of our maximum entropy distribution in Theorem 11, we can deduce the maximum entropy distribution of logarithm entropy in Theorem 2, sine entropy in Theorem 5, and quadratic entropy in Theorem 8 immediately.

Proposition 1

(Chen and Dai [25]). Let X be an uncertain variable with uncertainty distribution , finite expectation e, and variance . Then, the logarithmic entropy of X satisfies

and the equality holds if and only if

i.e., X is a normal uncertain variable .

Proposition 2

(Yao et al. [27]). Let X be an uncertain variable with uncertainty distribution , finite expectation e, and variance . Then, its sine entropy satisfies

and the equality holds if and only if

Proposition 3

(Dai [30]). Let X be an uncertain variable with uncertainty distribution , finite expectation e, and variance . Then, its quadratic entropy satisfies

and the equality holds if and only if

We leave the proofs of Proposition 1, Proposition 2, and Proposition 3 to Section 5. In the following section, we will prove Theorem 9, Theorem 10, and Theorem 11.

4. Proof of Theorem 9, Theorem 10, and Theorem 11

To prove Theorems 9 and 10, we need a result of the substitution rule on Lebesgue–Stieltjes integrals, which is a conclusion of Proposition 2 of Falkner and Teschl [37].

Lemma 2

([37]). Assume function M is increasing over and function N is left-continuous increasing over . Then, for each bounded Borel function f over , one has

where is the generalized inverse of M defined by (4).

Here, we point out that if , this result goes back to the classical case of Lebesgue [38]. Before proving the main results, we also need a simple fact.

Lemma 3.

Suppose function g is increasing on with

and let L be a function increasing on and decreasing on , respectively, for some a in . Then

- (a)

- means

- (b)

- means

Because the verification of Lemma 3 is a routine computation, we omit it.

We also point out that, notwithstanding that uncertainty distribution may not be right-continuous, since is increasing, the assumption that is right-continuous does not change the variance and the entropy of an uncertain variable. Thus, we assume uncertainty distribution is right-continuous in the rest. Now, we will prove Theorem 9.

Proof of Theorem 9.

Let and be the uncertainty distribution of Y. Then we have

We only need to prove

Letting , we have

where the last equality is because for .

By Lemma 2, let , , , noting that according to Lemma 1, we have

By integration by parts, we have

Then, we have

Let

Note that function G is increasing over . It follows that

By the monotone convergence theorem, we have

where is indicator function of the interval .

Thus, we have

We need to prove

If

the result is obvious. If

by Lemma 3, let and , we have

Thus, we finish the proof of Theorem 9. □

Now, using Theorem 9, Lemma 2, and Lemma 3, we will prove Theorem 10, i.e., the entropy formula.

Proof of Theorem 10.

Recall

Note that

and . We have

By the monotone convergence theorem, we have

where

is indicator function of the interval .

Let

Note that is decreasing over . We have

Thus

Let , , ; by Lemma 2 and integration by parts, we have

Then, we have

We only need to show

and

Noting that , by the Cauchy–Schwarz inequality and Theorem 9, we have

By the monotone convergence theorem again, we have

Let and ; by Lemma 3, we have

Then, we have

Thus, we finish the proof of Theorem 10. □

Now, based on our entropy formula, we can derive the maximum entropy distribution of unimodal entropy immediately.

Proof of Theorem 11.

Noting that , by Theorem 10, we have

By the Cauchy–Schwarz inequality and Theorem 9, we have

and “=” holds if and only if there exists some real number c such that

Then, we have

i.e.,

Thus, Theorem 11 is proved. □
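The Cauchy–Schwarz step used in this proof can be sketched as follows. The notation is assumed for illustration only: $\Phi^{-}$ denotes the generalized inverse of the uncertainty distribution, $U[X]$ denotes the unimodal entropy, the entropy formula is taken in the form $U[X]=-\int_0^1\Phi^{-}(\alpha)U'(\alpha)\,d\alpha$, and the unimodal function is assumed to satisfy $U(0)=U(1)=0$ with $\int_0^1 U'(\alpha)^2\,d\alpha<\infty$.

```latex
\begin{align*}
U[X] &= -\int_0^1 \Phi^{-}(\alpha)\,U'(\alpha)\,d\alpha \\
     &= -\int_0^1 \bigl(\Phi^{-}(\alpha)-e\bigr)\,U'(\alpha)\,d\alpha
        && \text{since } \int_0^1 U'(\alpha)\,d\alpha = U(1)-U(0) = 0 \\
     &\le \Bigl(\int_0^1 \bigl(\Phi^{-}(\alpha)-e\bigr)^2 d\alpha\Bigr)^{1/2}
          \Bigl(\int_0^1 U'(\alpha)^2\,d\alpha\Bigr)^{1/2}
        && \text{(Cauchy--Schwarz)} \\
     &= \sigma\,\Bigl(\int_0^1 U'(\alpha)^2\,d\alpha\Bigr)^{1/2}
        && \text{(variance formula of Theorem 9)}.
\end{align*}
```

Under these assumptions, equality requires $\Phi^{-}(\alpha)-e$ to be proportional to $U'(\alpha)$ for almost every $\alpha$. As a consistency check, $\int_0^1 U'(\alpha)^2\,d\alpha$ equals $\pi^2/3$ for $U(t)=-t\ln t-(1-t)\ln(1-t)$, $\pi^2/2$ for $U(t)=\sin(\pi t)$, and $1/3$ for $U(t)=t(1-t)$, which gives the bounds $\pi\sigma/\sqrt{3}$, $\pi\sigma/\sqrt{2}$, and $\sigma/\sqrt{3}$, respectively.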

5. Proof of Proposition 1, Proposition 2, and Proposition 3

In this section, we use our maximum entropy distribution to deduce Proposition 1, Proposition 2, and Proposition 3, i.e., the maximum entropy distributions of logarithm entropy, sine entropy, and quadratic entropy. As the proofs of Proposition 2 and Proposition 3 are similar to that of Proposition 1, we only prove Proposition 1.

Proof of Proposition 1.

Let

Then,

By Theorem 2 of Ma et al. [39], we have

By Theorem 11, we have

and the equality holds if and only if

Since functions

are increasing for , the real number c is positive. By equality (23), we have

Thus

Then

i.e., is a normal uncertainty distribution with expectation e and variance . □

6. Discussion

In this paper, by using the generalized inverse of an increasing function, we developed an entropy formula that is valid for any uncertain variable and encompasses the previous cases in the literature. In addition, based on our entropy formula, we obtained the maximum entropy distribution for unimodal entropy. We also point out that most of the entropy formulas and maximum entropy distributions of uncertain variables in the literature are special cases of ours.

Further research can consider the following two directions:

- (1) Develop the entropy formula and the maximum entropy principle of an uncertain set;

- (2) Establish the entropy formula and research the maximum entropy distributions of uncertain random variables.

Author Contributions

Conceptualization, Y.L. and G.M.; methodology, Y.L.; writing—original draft preparation, Y.L.; writing—review and editing, G.M.; supervision, G.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Project of Natural Science Foundation of Educational Committee of Henan Province (Grant No. 23A110014).

Institutional Review Board Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Shannon, C. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

2. Gray, R.M. Entropy and Information Theory; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2011.

3. Kolmogorov, A. New metric invariant of transitive dynamical systems and endomorphisms of Lebesgue spaces. Dokl. Russ. Acad. Sci. 1958, 119, 861–864.

4. Liu, B. Some research problems in uncertainty theory. J. Uncertain Syst. 2009, 3, 3–10.

5. Malakar, N.K.; Knuth, K.H.; Lary, D.J. Maximum joint entropy and information-based collaboration of automated learning machines. In AIP Conference Proceedings 31st; American Institute of Physics: College Park, MD, USA, 2012; Volume 1443, pp. 230–237.

6. Martin, N.F.; England, J.W. Mathematical Theory of Entropy (No.12); Cambridge University Press: Cambridge, UK, 2011.

7. Walters, P. An Introduction to Ergodic Theory; Springer: Berlin/Heidelberg, Germany, 2000.

8. Einsiedler, M.; Ward, T. Ergodic Theory; Springer: London, UK, 2013.

9. Liu, B. Uncertainty Theory, 4th ed.; Springer: Berlin/Heidelberg, Germany, 2015.

10. Jaynes, E. Information theory and statistical mechanics. Phys. Rev. 1957, 106, 620–630.

11. Jaynes, E. Information theory and statistical mechanics. II. Phys. Rev. 1957, 108, 171–190.

12. Polpo, A.; Stern, J.; Louzada, F.; Izbicki, R.; Takada, H. Bayesian Inference and Maximum Entropy Methods in Science and Engineering; Springer Proceedings in Mathematics & Statistics: Berlin/Heidelberg, Germany, 2018.

13. Buckley, J.J. Entropy principles in decision making under risk. Risk Anal. 1985, 5, 303–313.

14. Hoefer, M.P.; Ahmed, S.B. The Maximum Entropy Principle in Decision Making Under Uncertainty: Special Cases Applicable to Developing Technologies. Am. J. Math. Manag. Sci. 1990, 10, 261–273.

15. Clarke, B. Information optimality and Bayesian modelling. J. Econom. 2007, 138, 405–429.

16. Naidu, P.S. Modern Spectrum Analysis of Time Series: Fast Algorithms and Error Control Techniques; CRC Press: Boca Raton, FL, USA, 1995.

17. Zhang, Y. Principle of maximum entropy for reliability analysis in the design of machine components. Front. Mech. Eng. 2019, 14, 21–32.

18. Zu, T.; Kang, R.; Wen, M.; Zhang, Q. Belief reliability distribution based on maximum entropy principle. IEEE Access 2017, 6, 1577–1582.

19. Abbas, A.E.H.; Cadenbach, A.; Salimi, E. A Kullback–Leibler view of maximum entropy and maximum log-probability methods. Entropy 2017, 19, 232.

20. Liu, B. Uncertainty Theory, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2007.

21. Peng, Z.; Iwamura, K. A sufficient and necessary condition of uncertainty distribution. J. Interdiscip. Math. 2010, 13, 277–285.

22. Liu, Y.; Lio, W. A revision of sufficient and necessary condition of uncertainty distribution. J. Intell. Fuzzy Syst. 2020, 38, 4845–4854.

23. Yao, K. A formula to calculate the variance of uncertain variable. Soft Comput. 2015, 19, 2947–2953.

24. Sheng, Y.; Kar, S. Some results of moments of uncertain variable through inverse uncertainty distribution. Fuzzy Optim. Decis. Mak. 2015, 14, 57–76.

25. Chen, X.; Dai, W. Maximum entropy principle for uncertain variables. Int. J. Fuzzy Syst. 2011, 13, 232–236.

26. Dai, W.; Chen, X. Entropy of function of uncertain variables. Math. Comput. Model. 2012, 55, 754–760.

27. Yao, K.; Gao, J.; Dai, W. Sine entropy for uncertain variable. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2013, 21, 743–753.

28. Tang, W.; Gao, W. Triangular entropy of uncertain variables. International Information Institute (Tokyo). Information 2013, 16, 1279.

29. Ning, Y.; Ke, H.; Fu, Z. Triangular entropy of uncertain variables with application to portfolio selection. Soft Comput. 2015, 19, 2203–2209.

30. Dai, W. Quadratic entropy of uncertain variables. Soft Comput. 2018, 22, 5699–5706.

31. Ma, G. A remark on the maximum entropy principle in uncertainty theory. Soft Comput. 2021, 25, 13911–13920.

32. Liu, B. Uncertainty Theory: A Branch of Mathematics for Modeling Human Uncertainty; Springer: Berlin/Heidelberg, Germany, 2010.

33. Embrechts, P.; Hofert, M. A note on generalized inverses. Math. Methods Oper. Res. 2013, 77, 423–432.

34. Resnick, S.I. Extreme Values, Regular Variation, and Point Processes (Vol. 4); Springer Science Business Media: Berlin/Heidelberg, Germany, 2008.

35. Kämpke, T.; Radermacher, F.J. Income modeling and balancing. In Lecture Notes in Economics and Mathematical Systems; Springer: Berlin/Heidelberg, Germany, 2015.

36. Iritani, J.; Kuga, K. Duality between the Lorenz curves and the income distribution functions. Econ. Stud. Q. (Tokyo 1950) 1983, 34, 9–21.

37. Falkner, N.; Teschl, G. On the substitution rule for Lebesgue–Stieltjes integrals. Expo. Math. 2012, 30, 412–418.

38. Lebesgue, H. Sur l’intégrale de Stieltjes et sur les opérations fonctionnelles linéaires. CR Acad. Sci. Paris 1910, 150, 86–88.

39. Ma, G.; Yang, X.; Yao, X. A relation between moments of Liu process and Bernoulli numbers. Fuzzy Optim. Decis. Mak. 2021, 20, 261–272.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).