Abstract

In the era of the Internet of Things, there are many applications in which numerous devices are deployed to acquire information and send it for analysis, so that informed decisions can be made. In these applications, the power consumption and price of the devices are often an issue. In this work, analog coding schemes are considered so that an ADC is not needed, allowing the size and power consumption of the devices to be reduced. In addition, linear and DFT-based transmission schemes are proposed so that the complexity of the operations involved is lowered, thus reducing the requirements in terms of processing capacity and the price of the hardware. The proposed schemes are proved to be asymptotically optimal among the linear ones for WSS, MA, AR and ARMA sources.

1. Introduction

In the past few years, and especially in the context of the Internet of Things (IoT), numerous applications have emerged in which devices with constrained power and processing capacity acquire and transmit data. It is therefore crucial to find coding strategies that transmit information with an acceptable distortion, place low demands on processing and minimize power consumption. A well-known low-power, low-complexity coding strategy is the so-called analog joint source-channel coding (see, e.g., [1]). In this context, some authors have recently proposed the use of low-power/low-cost all-analog sensors [2,3], which avoid power-hungry analog-to-digital converters (ADCs).

Here, we focus on analog joint source-channel linear coding. Specifically, we study the transmission of realizations of an n-dimensional continuous random vector over an additive white Gaussian noise (AWGN) channel employing a linear encoder and decoder. For this scenario, the minimum average transmission power under a fixed average distortion constraint is achieved by the coding strategy given by Lee and Petersen in [4]. Nevertheless, a linear coding scheme based on the discrete Fourier transform (DFT) was presented in [5,6] that, with lower complexity, is asymptotically optimal among the linear schemes in terms of transmission power for certain sources, namely wide-sense stationary (WSS) and asymptotically WSS (AWSS) autoregressive (AR) sources.

In this paper, we present two new DFT-based alternatives to the optimal linear coding scheme given in [4]. We prove that one of them requires a lower average transmission power than the approach in [5,6]. The other new alternative requires a higher average transmission power, although it is conceptually simpler, and so is its implementation. We prove that the two new schemes, along with the strategy in [5,6], are asymptotically optimal for any AWSS source. Additionally, we show that the convergence speed of the average transmission power of the alternative schemes is O(1/n) for WSS, moving average (MA), AWSS AR and AWSS autoregressive moving average (ARMA) sources. We therefore conclude that good performance can be achieved with small data blocks, which allows these schemes to be used in applications that require low latency.

This paper is organized as follows. In Section 2, we mathematically describe the communication system and review the transmission strategy presented in [4] and the alternative given in [5,6]. In Section 3, the two new coding schemes are presented. In Section 4, we compare the four coding strategies studied in terms of the required transmission power, and we analyze their asymptotic behavior for several types of sources. Sections 5 and 6 present a numerical example and our conclusions, respectively.

2. Preliminaries

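We begin this section by introducing some notation. We denote by $\mathbb{R}$, $\mathbb{C}$, $\mathbb{Z}$ and $\mathbb{N}$ the sets of real numbers, complex numbers, integers and positive integers, respectively. $\lceil x \rceil$ represents the smallest integer greater than or equal to $x \in \mathbb{R}$. $\mathbb{R}^{m \times n}$ is the set of $m \times n$ real matrices. The symbols ⊤ and ∗ denote transpose and conjugate transpose, respectively, the imaginary unit is represented by $\mathrm{i}$, $E$ stands for expectation and $\delta$ is the Kronecker delta (i.e., $\delta_{j,k} = 1$ if $j = k$, and it is zero otherwise). If $z \in \mathbb{C}$, $\operatorname{Re}(z)$ and $\operatorname{Im}(z)$ designate the real part and the imaginary part of z, respectively, $\overline{z}$ is the conjugate of z and $\hat{z}$ is the 2-dimensional real column vector $(\operatorname{Re}(z), \operatorname{Im}(z))^\top$. $I_n$ denotes the $n \times n$ identity matrix and $V_n$ is the $n \times n$ Fourier unitary matrix, i.e.,

$$[V_n]_{j,k} = \frac{1}{\sqrt{n}}\, e^{-\frac{2\pi (j-1)(k-1)}{n}\mathrm{i}}, \qquad j, k \in \{1, \ldots, n\}.$$
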
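$\lambda_1(A) \geq \cdots \geq \lambda_n(A)$ and $\sigma_1(B) \geq \cdots \geq \sigma_n(B)$ are the eigenvalues of an $n \times n$ real symmetric matrix A and the singular values of an $n \times n$ matrix B, respectively, sorted in decreasing order. If $\{x_k\}_{k \in \mathbb{N}}$ is a random process, we denote by $x_{n:1}$ the random n-dimensional column vector $(x_n, x_{n-1}, \ldots, x_1)^\top$. The Frobenius norm and the spectral norm are represented by $\|\cdot\|_F$ and $\|\cdot\|_2$, respectively.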
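To make the Fourier unitary matrix concrete, here is a minimal NumPy sketch. It assumes the entries $e^{-2\pi(j-1)(k-1)\mathrm{i}/n}/\sqrt{n}$ (the sign of the exponent is an assumption of the sketch), and the helper name `fourier_unitary` is ours. It checks that the matrix is unitary and shows how multiplying by it or by its conjugate transpose relates to NumPy's unnormalized FFT routines, which is what lets the DFT-based schemes of Sections 2.2.2 and 3 run in O(n log n) operations.

```python
import numpy as np


def fourier_unitary(n: int) -> np.ndarray:
    """Return the n x n matrix with entries exp(-2*pi*i*(j-1)(k-1)/n) / sqrt(n)."""
    idx = np.arange(n)  # 0-based, so idx[j] plays the role of j - 1
    return np.exp(-2j * np.pi * np.outer(idx, idx) / n) / np.sqrt(n)


n = 8
V = fourier_unitary(n)
x = np.random.default_rng(0).standard_normal(n)

# V_n is unitary: V_n V_n^* = I_n.
assert np.allclose(V @ V.conj().T, np.eye(n))
# With this sign convention, V_n x is NumPy's FFT divided by sqrt(n) ...
assert np.allclose(V @ x, np.fft.fft(x) / np.sqrt(n))
# ... and V_n^* x is NumPy's inverse FFT multiplied by sqrt(n).
assert np.allclose(V.conj().T @ x, np.sqrt(n) * np.fft.ifft(x))
```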

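If $f: \mathbb{R} \to \mathbb{C}$ is a continuous and $2\pi$-periodic function, we denote by $T_n(f)$ the $n \times n$ Toeplitz matrix given by

$$[T_n(f)]_{j,k} = t_{j-k}, \qquad j, k \in \{1, \ldots, n\},$$

where $\{t_k\}_{k \in \mathbb{Z}}$ is the set of Fourier coefficients of f:

$$t_k = \frac{1}{2\pi} \int_0^{2\pi} f(\omega)\, e^{-k\omega\mathrm{i}}\, d\omega, \qquad k \in \mathbb{Z}.$$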

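The Toeplitz matrix $T_n(f)$ can be sketched numerically as follows. This is an illustration, not code from the paper: the helper names are hypothetical, and the Fourier coefficients $t_k$ are approximated with an m-point rectangle rule, which is very accurate for smooth $2\pi$-periodic functions. The usage example takes $f(\omega) = 2 + 2\cos\omega$, whose only nonzero coefficients are $t_0 = 2$ and $t_{\pm 1} = 1$, so $T_4(f)$ is tridiagonal.

```python
import numpy as np


def fourier_coefficients(f, kmax: int, m: int = 4096) -> dict:
    """Approximate t_k = (1/2pi) * int_0^{2pi} f(w) exp(-i k w) dw for |k| <= kmax.

    Uses the m-point rectangle rule, which is highly accurate for smooth
    2*pi-periodic integrands.
    """
    w = 2 * np.pi * np.arange(m) / m
    fw = f(w)
    return {k: np.mean(fw * np.exp(-1j * k * w)) for k in range(-kmax, kmax + 1)}


def toeplitz_from_symbol(f, n: int) -> np.ndarray:
    """Build the n x n Toeplitz matrix T_n(f) with entries [T_n(f)]_{j,k} = t_{j-k}."""
    t = fourier_coefficients(f, n - 1)
    return np.array([[t[j - k] for k in range(n)] for j in range(n)])


# Usage: f(w) = 2 + 2 cos(w) has t_0 = 2, t_1 = t_{-1} = 1 and t_k = 0 otherwise.
T4 = toeplitz_from_symbol(lambda w: 2 + 2 * np.cos(w), 4)
print(np.round(T4.real, 6))
```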
2.1. Problem Statement

We consider a discrete-time analog n-dimensional real vector source with an invertible correlation matrix. We want to transmit realizations of this vector by using a real AWGN channel n times, with noise variance $\sigma^2$. The n iid noise random variables can then be represented by a Gaussian n-dimensional vector whose correlation matrix is $\sigma^2$ times the $n \times n$ identity matrix. We further assume that the source and the channel noise are both zero-mean and that they are uncorrelated, i.e., each entry of the source vector is uncorrelated with each entry of the noise vector.

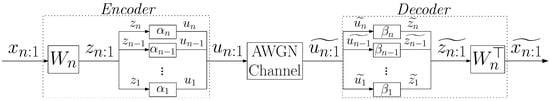

The communication system is depicted in Figure 1, where G and H are $n \times n$ real matrices representing the linear encoder and decoder, respectively. Specifically, a source vector symbol x is encoded using the linear transformation $Gx$ and then transmitted through the AWGN channel. An estimate of the source vector symbol is then obtained from the perturbed vector, i.e., $Gx$ plus the channel noise, using another linear transformation, H.

Figure 1.

Linear coding scheme.

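In [4], Lee and Petersen found the matrices G and H that minimize the average transmission power under a given average distortion constraint D, that is, that solve

$$\min_{G,H} \frac{1}{n} E\left(\|Gx\|_2^2\right) \quad \text{subject to} \quad \frac{1}{n} E\left(\|x - H(Gx + w)\|_2^2\right) \leq D,$$

where x is the source vector and w is the channel noise vector.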

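For fixed G and H, both quantities in this problem have closed forms: since the source and the noise are zero-mean and uncorrelated, the average power is $\frac{1}{n}\operatorname{tr}(GRG^\top)$ and the average distortion is $\frac{1}{n}[\operatorname{tr}((I_n - HG)R(I_n - HG)^\top) + \sigma^2 \operatorname{tr}(HH^\top)]$, where R denotes the source correlation matrix and $\sigma^2$ the noise variance. The sketch below, with hypothetical matrices and parameters, checks these formulas against a Monte Carlo simulation of Figure 1; it draws a Gaussian source only because that makes sampling easy.

```python
import numpy as np


def power_and_distortion(G, H, R, sigma2):
    """Closed-form average power and distortion of x -> Gx -> Gx + w -> H(Gx + w).

    Assumes a zero-mean source with correlation matrix R and zero-mean white
    noise w of variance sigma2 that is uncorrelated with the source.
    """
    n = R.shape[0]
    power = np.trace(G @ R @ G.T) / n
    E = np.eye(n) - H @ G  # source-dependent part of the error x - x_hat
    distortion = (np.trace(E @ R @ E.T) + sigma2 * np.trace(H @ H.T)) / n
    return power, distortion


# Monte Carlo check with hypothetical matrices (G and H are arbitrary, not optimal).
rng = np.random.default_rng(1)
n, sigma2, samples = 4, 0.5, 200_000
A = rng.standard_normal((n, n))
R = A @ A.T + n * np.eye(n)              # an invertible correlation matrix
G = rng.standard_normal((n, n))
H = 0.1 * rng.standard_normal((n, n))

x = np.linalg.cholesky(R) @ rng.standard_normal((n, samples))  # columns ~ N(0, R)
w = np.sqrt(sigma2) * rng.standard_normal((n, samples))
y = G @ x                                 # channel input
x_hat = H @ (y + w)                       # decoder output
mc_power = np.mean(np.sum(y**2, axis=0)) / n
mc_distortion = np.mean(np.sum((x - x_hat) ** 2, axis=0)) / n

power, distortion = power_and_distortion(G, H, R, sigma2)
print(power, mc_power, distortion, mc_distortion)
```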
2.2. Known Linear Coding Schemes

2.2.1. Optimal Linear Coding Scheme

As mentioned above, Lee and Petersen presented the optimal linear coding scheme in [4]. Its encoder converts the n correlated variables of the source vector into n uncorrelated variables and assigns a weight to each of them. Given an eigenvalue decomposition of the correlation matrix of the source whose eigenvector matrix is real and orthogonal, the optimal linear coding scheme is of the type shown in Figure 2, with the orthogonal matrix of Figure 2 determined by this eigenvector matrix. Its average transmission power is

under a given average distortion constraint .

Figure 2.

Linear coding scheme based on an n × n real orthogonal matrix.

Since this coding scheme requires multiplying an n × n matrix by an n-dimensional column vector, its computational complexity is O(n²).
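One way to see what the optimal scheme does is to solve the power-minimization problem in the eigenbasis of the source correlation matrix R. The sketch below is our own Lagrange-multiplier derivation, not code from [4], and it assumes D is small enough that every eigen-channel receives positive power. With eigenvalues $\lambda_k$, channel k gets power $p_k = c\sqrt{\lambda_k} - \sigma^2$ with $c = \sigma^2 \sum_k \sqrt{\lambda_k}/(nD)$, the decoder applies per-channel linear MMSE gains, and the resulting average power is $\sigma^2\left((\frac{1}{n}\sum_k \sqrt{\lambda_k})^2/D - 1\right)$. Encoding is a single n × n matrix-vector product, consistent with the O(n²) cost stated above.

```python
import numpy as np


def optimal_linear_scheme(R, sigma2, D):
    """Minimum-power linear encoder/decoder for average distortion D (sketch).

    Assumes D is small enough that every eigen-channel gets positive power.
    Returns (G, H, average_power).
    """
    n = R.shape[0]
    lam, U = np.linalg.eigh(R)            # R = U diag(lam) U^T with U real orthogonal
    s = np.sqrt(lam)
    c = sigma2 * s.sum() / (n * D)        # Lagrange condition: p_k + sigma2 = c * sqrt(lam_k)
    p = c * s - sigma2                    # power allotted to eigen-channel k
    if np.any(p <= 0):
        raise ValueError("D too large for this closed form: some channel gets no power")
    g = np.sqrt(p / lam)                  # encoder weights
    h = g * lam / (g**2 * lam + sigma2)   # per-channel linear MMSE decoder weights
    G = np.diag(g) @ U.T                  # decorrelate, then weight
    H = U @ np.diag(h)                    # estimate each channel, then rotate back
    power = sigma2 * (s.mean() ** 2 / D - 1)  # equals p.mean()
    return G, H, power


R = np.array([[2.0, 0.8, 0.3], [0.8, 2.0, 0.8], [0.3, 0.8, 2.0]])  # hypothetical
G, H, P = optimal_linear_scheme(R, sigma2=1.0, D=0.2)
print(P)
```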

2.2.2. DFT-Based Alternative

In [5,6], an alternative to the optimal coding scheme was presented. Its encoder assigns weights to the real and imaginary parts of the entries of the DFT of the source vector. This alternative coding scheme is also of the type shown in Figure 2, with the orthogonal matrix obtained by combining the DFT with the sparse matrix defined in ([5] Equation (3)). Its average transmission power is

under a given average distortion constraint , where

with .

The computational complexity of this coding scheme is O(n log n) when the fast Fourier transform (FFT) algorithm is used.
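Because the DFT of a real vector is conjugate-symmetric, n real numbers built from the real and imaginary parts of its entries suffice to describe it. The sketch below shows one such real orthogonal map for even n using NumPy's `rfft`/`irfft`; the √2 scaling keeps the map norm-preserving. It only illustrates the idea: the ordering, signs and scaling of the sparse matrix in ([5] Equation (3)) may differ, and the per-coordinate weights of the actual scheme are omitted. The helper names are hypothetical.

```python
import numpy as np


def dft_to_real_coords(x):
    """Map a real vector of even length n to n real coordinates of its unitary DFT.

    y[0] and y[n/2] are real; for 0 < k < n/2 the pair (Re y[k], Im y[k]) is kept
    and scaled by sqrt(2), since y[n-k] = conj(y[k]) carries the same information.
    """
    n = len(x)
    if n % 2:
        raise ValueError("this sketch handles even n only")
    y = np.fft.rfft(x) / np.sqrt(n)       # y[0], ..., y[n/2]
    mid = np.sqrt(2) * y[1:-1]
    return np.concatenate(([y[0].real], mid.real, mid.imag, [y[-1].real]))


def real_coords_to_x(u):
    """Inverse of dft_to_real_coords."""
    n = len(u)
    m = n // 2
    y = np.empty(m + 1, dtype=complex)
    y[0] = u[0]
    y[1:m] = (u[1:m] + 1j * u[m:n - 1]) / np.sqrt(2)
    y[m] = u[n - 1]
    return np.fft.irfft(y * np.sqrt(n), n=n)


x = np.random.default_rng(2).standard_normal(8)
u = dft_to_real_coords(x)
print(np.linalg.norm(x), np.linalg.norm(u))   # equal: the map is orthogonal
```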

3. New Coding Schemes

In this section, we propose two new transmission schemes. Like the scheme reviewed in Section 2.2.2, both make use of the DFT, and their computational complexity is also O(n log n) if the FFT algorithm is applied.

3.1. Low-Power Alternative

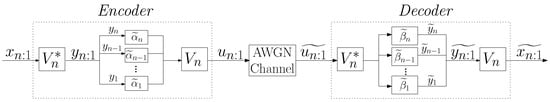

The encoder of this scheme first computes the DFT of the source vector. Afterwards, each 2-dimensional real vector formed by the real and imaginary parts of a DFT entry is encoded using a real orthogonal eigenvector matrix of its correlation matrix. Therefore, the real and imaginary parts of each DFT entry are jointly encoded here, unlike in Section 2.2.2, where they were encoded separately. This coding scheme is shown in Figure 3 for even n (the scheme for odd n is similar), with

being an eigenvalue decomposition of where the eigenvector matrix is real and orthogonal,

and

Figure 3.

Low-power scheme.

In Appendix A, we prove that the average transmission power of this scheme under a given average distortion constraint D is

with

Furthermore, in Section 4, we show that the average transmission power of this new scheme is lower than that required by the scheme in Section 2.2.2.
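The joint encoding of each pair $(\operatorname{Re}(y_k), \operatorname{Im}(y_k))$ relies on the 2 × 2 correlation matrix of that pair, which can be computed from the source correlation matrix R as $A_k R A_k^\top$, where the two rows of $A_k$ are the real and imaginary parts of the k-th row of the unitary DFT matrix. The sketch below (helper names hypothetical, NumPy's FFT sign convention assumed) computes these matrices for the entries with nontrivial imaginary part and checks that each real orthogonal eigenvector matrix returned by `numpy.linalg.eigh` decorrelates its pair. It does not reproduce the weights in (3) and (4).

```python
import numpy as np


def pair_correlations(R):
    """2x2 correlation matrices of (Re y_k, Im y_k) for k = 1, ..., n/2 - 1 (0-based).

    y = F x is the unitary DFT of a zero-mean real source x with correlation
    matrix R, using NumPy's FFT sign convention (an assumption of this sketch).
    """
    n = R.shape[0]
    F = np.fft.fft(np.eye(n)) / np.sqrt(n)   # unitary DFT matrix: y = F @ x
    mats = []
    for k in range(1, n // 2):
        A = np.vstack([F[k].real, F[k].imag])  # real 2 x n map: x -> (Re y_k, Im y_k)
        mats.append(A @ R @ A.T)
    return mats


rng = np.random.default_rng(3)
n = 8
B = rng.standard_normal((n, n))
R = B @ B.T / n + np.eye(n)                  # hypothetical correlation matrix
for C in pair_correlations(R):
    lam, W = np.linalg.eigh(C)               # C = W diag(lam) W^T, W real orthogonal
    # Rotating the pair by W^T makes its two components uncorrelated.
    assert np.allclose(W.T @ C @ W, np.diag(lam))
    print(np.round(C[0, 1], 4))              # Re y_k and Im y_k are correlated in general
```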

3.2. DFT/IDFT Alternative

The encoder of this scheme uses both the DFT and the inverse DFT (IDFT). The coding scheme is shown in Figure 4, where

and

Figure 4.

DFT/IDFT scheme.

In Appendix B, we prove that the average transmission power of this scheme under a given average distortion constraint D is

As can be seen in Figure 4, this coding scheme is conceptually simpler than those in Sections 2.2.2 and 3.1.

4. Analysis of the Transmission Power of the Coding Schemes

We begin this section by comparing the performance of each of the four considered coding strategies in terms of the required transmission power.

Theorem 1.

Proof.

See Appendix C. □

4.1. Asymptotic Behavior

We now show that the three alternatives to the optimal linear coding scheme, presented in Sections 2.2.2, 3.1 and 3.2, are asymptotically optimal whenever the source is AWSS. To that end, we first need to review three definitions. We begin with Gray's concept of asymptotically equivalent sequences of matrices given in [7].

Definition 1

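(Asymptotically equivalent sequences of matrices). Let $A_n$ and $B_n$ be $n \times n$ matrices for all $n \in \mathbb{N}$. The two sequences of matrices $\{A_n\}$ and $\{B_n\}$ are said to be asymptotically equivalent, abbreviated $\{A_n\} \sim \{B_n\}$, if there exists $M \geq 0$ such that

$$\|A_n\|_2,\ \|B_n\|_2 \leq M \qquad \forall n \in \mathbb{N}$$

and

$$\lim_{n \to \infty} \frac{\|A_n - B_n\|_F}{\sqrt{n}} = 0.$$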

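As a numerical illustration of Definition 1, the sketch below compares the symmetric Toeplitz matrix with entries $\rho^{|j-k|}$ with a simple symmetric circulant that wraps the same sequence ($c_k = \rho^{\min(k, n-k)}$). This circulant is our own choice and is not necessarily the circulant matrix used in Appendix D. Both spectral norms stay below $\sum_{k \in \mathbb{Z}} \rho^{|k|} = (1+\rho)/(1-\rho)$, while the normalized Frobenius distance shrinks roughly like $1/\sqrt{n}$, so the two sequences are asymptotically equivalent.

```python
import numpy as np


def toeplitz_sym(t):
    """Symmetric Toeplitz matrix with [T]_{j,k} = t[|j - k|]."""
    n = len(t)
    return np.array([[t[abs(j - k)] for k in range(n)] for j in range(n)])


def circulant_sym(t):
    """Symmetric circulant with first column c_k = t[min(k, n - k)]."""
    n = len(t)
    return np.array([[t[min((j - k) % n, (k - j) % n)] for k in range(n)] for j in range(n)])


rho = 0.6
bound = (1 + rho) / (1 - rho)  # sum over all integers k of rho^|k|
results = []
for n in (16, 64, 256):
    t = rho ** np.arange(n)
    T, C = toeplitz_sym(t), circulant_sym(t)
    gap = np.linalg.norm(T - C, "fro") / np.sqrt(n)
    results.append((n, gap, np.linalg.norm(T, 2), np.linalg.norm(C, 2)))
    print(n, round(gap, 4))
```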
Now we recall the well-known concept of WSS process.

Definition 2

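(WSS process). Let $f: \mathbb{R} \to \mathbb{R}$ be a continuous, $2\pi$-periodic and non-negative function. A random process $\{x_k\}_{k \in \mathbb{N}}$ is said to be WSS, with power spectral density (PSD) f, if it has constant mean, i.e., $E(x_{k_1}) = E(x_{k_2})$ for all $k_1, k_2 \in \mathbb{N}$, and $E(x_{n:1} x_{n:1}^\top) = T_n(f)$ for all $n \in \mathbb{N}$.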

We can now review Gray's concept of an AWSS process given in ([8] p. 225).

Definition 3

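(AWSS process). Let $f: \mathbb{R} \to \mathbb{R}$ be a continuous, $2\pi$-periodic and non-negative function. A random process $\{x_k\}_{k \in \mathbb{N}}$ is said to be AWSS, with (asymptotic) PSD f, if it has constant mean and $\{E(x_{n:1} x_{n:1}^\top)\} \sim \{T_n(f)\}$.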

Theorem 2.

Suppose that is an AWSS process with PSD f as in Definition 3. If then

for all .

Proof.

See Appendix D. □

4.2. Convergence Speed

Here, we prove that, for AWSS ARMA, MA, AWSS AR and WSS sources, the average transmission power of each of the three alternative schemes described in Sections 2.2.2, 3.1 and 3.2 converges to that of the optimal linear scheme with speed O(1/n). We also recall the definitions of ARMA, MA and AR processes.

4.2.1. AWSS ARMA Sources

Definition 4

(ARMA process). A real zero-mean random process is said to be ARMA if

where for all , and is a real zero-mean random process satisfying that for all with . If there exist such that for all and for all , then is called an ARMA process.
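For the processes considered in this section, the PSD is a rational function of $e^{-\omega\mathrm{i}}$. The sketch below assumes the convention $x_k - \sum_{j=1}^{p} \phi_j x_{k-j} = w_k + \sum_{j=1}^{q} \theta_j w_{k-j}$ with white innovations of variance `var_w` (the names `phi`, `theta` and `var_w` are hypothetical, and Definition 4 may write the coefficients with different signs or indices). It evaluates the corresponding PSD and recovers the autocorrelations $t_k$ from it numerically. For an AR(1) process with $\phi_1 = 0.5$ and unit-variance noise the result should match the textbook value $t_k = 0.5^k/0.75$, and for the MA(1) process with $\theta_1 = 1$ it should give $t_0 = 2$, $t_1 = 1$.

```python
import numpy as np


def arma_psd(phi, theta, omega, var_w=1.0):
    """PSD of x_k - sum_j phi_j x_{k-j} = w_k + sum_j theta_j w_{k-j} (j >= 1).

    Assumes a causal, stationary process (roots of the AR polynomial outside the
    unit circle) and white innovations w_k with variance var_w.
    """
    z = np.exp(-1j * np.asarray(omega))
    num = 1 + sum(th * z ** (j + 1) for j, th in enumerate(theta))
    den = 1 - sum(ph * z ** (j + 1) for j, ph in enumerate(phi))
    return var_w * np.abs(num) ** 2 / np.abs(den) ** 2


def autocorrelation_from_psd(f, kmax, m=4096):
    """t_k = (1/2pi) int_0^{2pi} f(w) cos(k w) dw for k = 0..kmax (f real and even)."""
    w = 2 * np.pi * np.arange(m) / m
    fw = f(w)
    return np.array([np.mean(fw * np.cos(k * w)) for k in range(kmax + 1)])


t_ar = autocorrelation_from_psd(lambda w: arma_psd([0.5], [], w), 3)   # AR(1)
t_ma = autocorrelation_from_psd(lambda w: arma_psd([], [1.0], w), 3)   # MA(1)
print(np.round(t_ar, 6), np.round(t_ma, 6))
```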

Theorem 3.

Suppose that is an ARMA() process as in Definition 4. Let and for all . Assume that and for all , and that there exist such that and for all . Then, is AWSS with PSD , and

for all .

Proof.

See Appendix E. □

4.2.2. MA Sources

Definition 5

(MA process). A real zero-mean random process is said to be MA if

where for all , and is a real zero-mean random process satisfying that for all with . If there exists such that for all , then is called an MA process.

Theorem 4.

Suppose that is an MA(q) process as in Definition 5. Let for all . Assume that for all , and that there exists such that for all . Then, is AWSS with PSD , and

for all .

Proof.

It is a direct consequence of Theorem 3. □

4.2.3. AWSS AR Sources

Definition 6

(AR process). A real zero-mean random process is said to be AR if

where for all , and is a real zero-mean random process satisfying that for all with . If there exists such that for all , then is called an AR process.

Theorem 5.

Suppose that is an AR(p) process as in Definition 6. Let for all . Assume that for all , and that there exists such that for all . Then, is AWSS with PSD , and

for all .

Proof.

It is a direct consequence of Theorem 3. □

4.2.4. WSS Sources

Theorem 6.

Suppose that is a WSS process as in Definition 2 with PSD f. Assume that for all and that there exists such that whenever . Then,

for all .

Proof.

See Appendix F. □

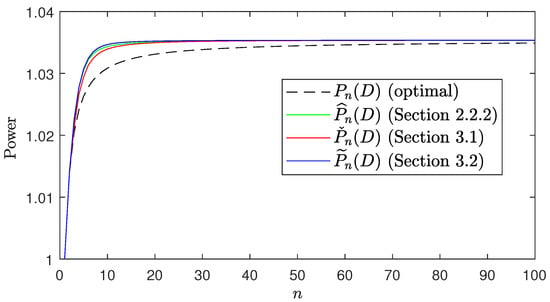

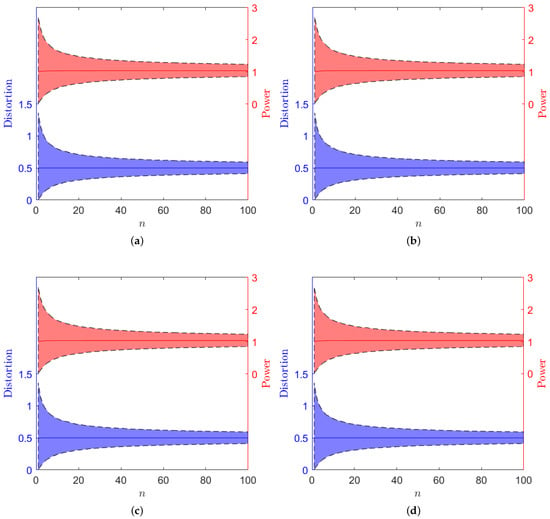

5. Numerical Example

In this section, we give a numerical example. We consider an ARMA() process with , and , channel noise variance and an average distortion constraint . Figure 5 shows the theoretical average transmission power required by each of the considered schemes with . It can be observed that the average transmission powers of the different schemes satisfy the inequalities in (9) and get closer as n increases. Moreover, we have simulated the transmission of 20,000 samples of the considered ARMA() process for . Figure 6 shows the 10th and 90th percentiles of the power and distortion of the samples.

Figure 5.

Theoretical average transmission powers of the considered schemes.

Figure 6.

Interval between the 10th and the 90th percentile for the actual transmission power and the actual distortion (shaded) and theoretical average transmission power and distortion (solid line) in each of the considered schemes. (a) Optimal linear coding scheme (Section 2.2.1). (b) Low-power alternative (Section 3.1). (c) DFT-based alternative (Section 2.2.2). (d) DFT/IDFT alternative (Section 3.2).
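The percentile analysis behind Figure 6 can be sketched as follows. Since the ARMA parameters of the example are not reproduced here, the sketch uses a hypothetical AR(1) source (ρ = 0.5, unit-variance innovations), n = 16, σ² = 1 and D = 0.1, and only the optimal linear scheme, built in the eigenbasis of the source correlation matrix under the assumption that every eigen-channel receives positive power. It draws 20,000 Gaussian source vectors and reports the 10th and 90th percentiles of the per-realization power and distortion together with their means; no numbers from the paper are implied.

```python
import numpy as np

rng = np.random.default_rng(4)
n, rho, sigma2, D, samples = 16, 0.5, 1.0, 0.1, 20_000   # hypothetical parameters

# Correlation matrix of an AR(1) source x_k = rho * x_{k-1} + w_k, Var(w_k) = 1.
idx = np.arange(n)
R = rho ** np.abs(idx[:, None] - idx[None, :]) / (1 - rho**2)

# Minimum-power linear scheme in the eigenbasis of R (all eigen-channels assumed active).
lam, U = np.linalg.eigh(R)
s = np.sqrt(lam)
c = sigma2 * s.sum() / (n * D)
p = c * s - sigma2
assert np.all(p > 0), "D too large for this closed form"
g = np.sqrt(p / lam)
h = g * lam / (g**2 * lam + sigma2)
G, H = np.diag(g) @ U.T, U @ np.diag(h)
theory_power = sigma2 * (s.mean() ** 2 / D - 1)

# Simulate 20,000 source vectors through encoder, AWGN channel and decoder.
x = np.linalg.cholesky(R) @ rng.standard_normal((n, samples))
w = np.sqrt(sigma2) * rng.standard_normal((n, samples))
y = G @ x
x_hat = H @ (y + w)
power = np.sum(y**2, axis=0) / n              # per-realization transmission power
dist = np.sum((x - x_hat) ** 2, axis=0) / n   # per-realization distortion

print("power p10/p90:", np.percentile(power, [10, 90]), "mean:", power.mean(), "theory:", theory_power)
print("distortion p10/p90:", np.percentile(dist, [10, 90]), "mean:", dist.mean(), "target:", D)
```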

6. Conclusions

In this paper, two new low-complexity linear coding schemes for transmitting n-dimensional vectors by using n times an AWGN channel have been presented. These schemes are based on the DFT. The performance of these schemes, along with another DFT-based scheme that had been previously presented, has been analyzed in comparison with the optimal scheme among the linear ones. These three DFT-based schemes allow good performance in terms of distortion using low-cost, low-power hardware.

In particular, it has been proved that, under a maximum average distortion constraint, the considered low-complexity schemes require the same average transmission power as the optimal linear scheme for AWSS sources when the block length, n, tends to infinity. Moreover, it has been proved that for certain types of AWSS sources (namely WSS, MA, AWSS AR and AWSS ARMA), the difference between the transmission power of each of the three alternative schemes and that of the optimal linear scheme decreases as O(1/n). Therefore, their performance will be similar to that of the optimal linear coding scheme even for small values of n. In other words, replacing the optimal linear scheme with any of the schemes studied here will not incur a large transmission power penalty, even for small block sizes, while it will lead to a noticeable reduction in complexity.

Author Contributions

Authors are listed in order of their degree of involvement in the work, with the most active contributors listed first. J.G.-G. conceived the research question. F.M.V.-R. carried out the numerical example. All authors were involved in the research and wrote the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Spanish Ministry of Science and Innovation through the ADELE project (PID2019-104958RB-C44).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| ADC | Analog-to-digital converter |
| AR | Autoregressive |
| ARMA | Autoregressive moving average |
| AWGN | Additive white Gaussian noise |
| AWSS | Asymptotically wide-sense stationary |
| DFT | Discrete Fourier transform |
| FFT | Fast Fourier transform |
| IDFT | Inverse discrete Fourier transform |
| iid | Independent and identically distributed |
| IoT | Internet of Things |
| MA | Moving average |
| WSS | Wide-sense stationary |

Appendix A. Average Transmission Power and Average Distortion of the Low-Power Alternative

We first recall the basic symmetry property of the DFT.

Lemma A1.

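Let $x_k \in \mathbb{C}$ for all $k \in \{1, \ldots, n\}$, with $n \in \mathbb{N}$. If $y = (y_1, \ldots, y_n)^\top$ is the DFT of $x = (x_1, \ldots, x_n)^\top$, i.e., $y = V_n^* x$, the following two assertions are equivalent:

- $x \in \mathbb{R}^{n \times 1}$.

- $y_k = \overline{y_{n-k+2}}$ for all $k \in \{2, \ldots, n\}$ and $y_1 \in \mathbb{R}$.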
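Lemma A1 is easy to check numerically. The sketch below uses NumPy's FFT with 0-based indices, so the symmetry reads y[k] = conj(y[(n - k) mod n]), rather than the unitary matrix of the paper; the property does not depend on the normalization or on the sign of the exponent. The helper name `is_conjugate_symmetric` is ours. The last lines illustrate the converse direction: symmetrizing an arbitrary complex vector makes its inverse DFT real up to rounding.

```python
import numpy as np


def is_conjugate_symmetric(y, tol=1e-10):
    """True if y[k] == conj(y[(n - k) % n]) for every k (0-based indices)."""
    n = len(y)
    return all(abs(y[k] - np.conj(y[(-k) % n])) < tol for k in range(n))


rng = np.random.default_rng(5)
n = 7
x_real = rng.standard_normal(n)
x_complex = x_real + 1j * rng.standard_normal(n)

# Real input => conjugate-symmetric DFT (assertion 1 implies assertion 2).
print(is_conjugate_symmetric(np.fft.fft(x_real)))
print(is_conjugate_symmetric(np.fft.fft(x_complex)))

# Conjugate-symmetric DFT => real input (assertion 2 implies assertion 1).
y = rng.standard_normal(n) + 1j * rng.standard_normal(n)
k = np.arange(n)
y_sym = 0.5 * (y + np.conj(y[(-k) % n]))
print(np.max(np.abs(np.fft.ifft(y_sym).imag)))  # zero up to rounding
```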

Now we show that and in (3) and (4), respectively, are well defined. If , applying Theorems 1 and 2 from [9], for all . Hence and, consequently, . Thus,

and, therefore, is well defined in (3). Furthermore, and, consequently, is well defined in (4).

Next, we show that the average transmission power of the coding scheme shown in Figure 3 is given in (5):

Finally, we show that . From Figure 3, observe that for , where represents the largest integer less than or equal to . Furthermore, as for all and given that each with is a linear combination of , for all . Consequently, since the Frobenius norm is unitarily invariant, we have:

Appendix B. Average Transmission Power and Average Distortion of the DFT/IDFT Alternative

We first show that and in (6) and (7), respectively, are well defined. If , applying Theorem 1 from [9], for all . Hence and, consequently, . Thus,

and, therefore, is well defined in (6). Furthermore, and, consequently, is well defined in (7).

We now show that the output of the encoder, , is real. Observe that is real if and only if fulfills the conditions given in assertion 2 of Lemma A1, i.e., and for . From (6), with are always real numbers. Since is the DFT of the real vector , is real, and so is . Furthermore, given that for , for . Then, from (6), for , and for .
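The realness argument above only needs the weights applied in the DFT domain to be real and to respect the symmetry of Lemma A1. A minimal sketch with a hypothetical encoder, `weighted_dft_idft`, that multiplies the k-th DFT entry by d[k] and then applies the inverse DFT: symmetric real weights give a real output up to rounding, whereas real but non-symmetric weights do not. The weights are illustrative and are not those defined in (6).

```python
import numpy as np


def weighted_dft_idft(x, d):
    """Hypothetical encoder: scale the k-th DFT entry of x by d[k], then apply the IDFT."""
    return np.fft.ifft(d * np.fft.fft(x))


rng = np.random.default_rng(6)
n = 8
x = rng.standard_normal(n)
k = np.arange(n)
d_sym = 1.0 + 0.5 * np.cos(2 * np.pi * k / n)    # real, d[k] == d[(n - k) % n]
d_asym = 1.0 + 0.5 * np.sin(2 * np.pi * k / n)   # real, but not symmetric

out_sym = weighted_dft_idft(x, d_sym)
out_asym = weighted_dft_idft(x, d_asym)
print(np.max(np.abs(out_sym.imag)), np.max(np.abs(out_asym.imag)))
```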

Next, we show that the average transmission power of the coding scheme shown in Figure 4 is given in (8). Since the Frobenius norm is unitarily invariant, we have:

Finally, we show that . As for all then , where is the zero matrix. Consequently, we have:

where denotes the trace of a matrix.

Appendix C. Proof of Theorem 1

We first need to introduce the following result.

Lemma A2.

Let be a symmetric positive semi-definite matrix. Then,

Proof.

□

Next, we prove Theorem 1.

Appendix D. Proof of Theorem 2

Proof.

To prove the remaining equalities, from Theorem 1, we have to show that

To that end, we need to introduce some notation. If is an matrix, is the circulant matrix

Moreover, we denote by the circulant matrix defined as

Due to the optimality of the scheme proposed in [4], and from the proof of assertion 4 of Lemma 1 in [6],

where . Observe that, from Definition 3, is bounded. Hence, to finish the proof we only have to show that

Appendix E. Proof of Theorem 3

Proof.

Since for all , for every . As for all , from Theorem 8 in [12], is AWSS with PSD .

Next, we show that . Observe that (10) can be rewritten as

Then,

and

Applying Theorem 4.3 from [10] yields

Finally, we prove (11). From (A1) we need to show that is bounded. To that end, from Equation (16) in [11] we need to show that and are bounded. This is shown in the following two steps.

Step 1. Using Lemma 4.2 and Theorem 4.3 from [10] we have:

where

From Lemma 2 in [13], is bounded.

Step 2. Using Theorem 4.4 and Lemmas 5.2 and 5.3 from [10], we have:

where

From Lemmas 2 and 3 in [13] and Lemma 5.4 in [10], is bounded. □

References

- Fresnedo, O.; Vazquez-Araujo, F.J.; Castedo, L.; Garcia-Frias, J. Low-Complexity Near-Optimal Decoding for Analog Joint Source Channel Coding Using Space-Filling Curves. IEEE Commun. Lett. 2013, 17, 745–748.

- Sadhu, V.; Zhao, X.; Pompili, D. Energy-Efficient Analog Sensing for Large-Scale and High-Density Persistent Wireless Monitoring. IEEE Internet Things J. 2020, 7, 6778–6786.

- Mouris, B.A.; Stavrou, P.A.; Thobaben, R. Optimizing Low-Complexity Analog Mappings for Low-Power Sensors with Energy Scheduling Capabilities. IEEE Internet Things J. 2022, 1.

- Lee, K.H.; Petersen, D.P. Optimal Linear Coding for Vector Channels. IEEE Trans. Commun. 1976, 24, 1283–1290.

- Insausti, X.; Crespo, P.M.; Gutiérrez-Gutiérrez, J.; Zárraga-Rodríguez, M. Low-Complexity Analog Linear Coding Scheme. IEEE Commun. Lett. 2018, 22, 1754–1757.

- Gutiérrez-Gutiérrez, J.; Villar-Rosety, F.M.; Zárraga-Rodríguez, M.; Insausti, X. A Low-Complexity Analog Linear Coding Scheme for Transmitting Asymptotically WSS AR Sources. IEEE Commun. Lett. 2019, 23, 773–776.

- Gray, R.M. On the Asymptotic Eigenvalue Distribution of Toeplitz Matrices. IEEE Trans. Inf. Theory 1972, 18, 725–730.

- Gray, R.M. Toeplitz and Circulant Matrices: A Review. Found. Trends Commun. Inf. Theory 2006, 2, 155–239.

- Gutiérrez-Gutiérrez, J.; Zárraga-Rodríguez, M.; Villar-Rosety, F.M.; Insausti, X. Rate-Distortion Function Upper Bounds for Gaussian Vectors and Their Applications in Coding AR Sources. Entropy 2018, 20, 399.

- Gutiérrez-Gutiérrez, J.; Crespo, P.M. Block Toeplitz Matrices: Asymptotic Results and Applications. Found. Trends Commun. Inf. Theory 2011, 8, 179–257.

- Zárraga-Rodríguez, M.; Gutiérrez-Gutiérrez, J.; Insausti, X. A Low-Complexity and Asymptotically Optimal Coding Strategy for Gaussian Vector Sources. Entropy 2019, 21, 965.

- Gutiérrez-Gutiérrez, J.; Crespo, P.M. Asymptotically Equivalent Sequences of Matrices and Multivariate ARMA Processes. IEEE Trans. Inf. Theory 2011, 57, 5444–5454.

- Gutiérrez-Gutiérrez, J.; Zárraga-Rodríguez, M.; Insausti, X. On the Asymptotic Optimality of a Low-Complexity Coding Strategy for WSS, MA, and AR Vector Sources. Entropy 2020, 22, 1378.


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).