Abstract

ROC (Receiver Operating Characteristic) analyses are considered under a variety of assumptions concerning the distributions of a measurement X in two populations. These include the binormal model as well as nonparametric models where little is assumed about the form of the distributions. The methodology is based on a characterization of statistical evidence that depends on the specification of prior distributions for the unknown population distributions as well as for the relevant prevalence w of the disease in a given population. In all cases, elicitation algorithms are provided to guide the selection of the priors. Inferences are derived for the AUC (Area Under the Curve), the cutoff c used for classification, and the error characteristics used to assess the quality of the classification.

Keywords:

ROC; AUC; optimal cutoff; statistical evidence; relative belief; binormal; mixture Dirichlet process

1. Introduction

An ROC (Receiver Operating Characteristic) analysis is used in medical science to determine whether or not a real-valued diagnostic variable X for a disease or condition is useful. If the diagnostic indicates that an individual has the condition, then this will typically mean that a more expensive or invasive medical procedure is undertaken, so it is important to assess the accuracy of the diagnostic variable X. These methods have a wider class of applications, but our terminology will focus on the medical context.

An approach to such analyses is presented here that is based on a characterization of statistical evidence and which incorporates all available information as expressed via prior probability distributions. For example, while p-values are often used in such analyses, there are questions concerning the validity of these quantities as characterizations of statistical evidence. As will be discussed, there are many advantages to the framework adopted here.

A common approach to assessing the diagnostic variable X is to estimate its AUC (Area Under the Curve), namely, the probability that an individual sampled from the diseased population will have a higher value of X than an individual independently sampled from the nondiseased population. A good diagnostic should have an AUC near 1, while a value near 1/2 indicates a poor diagnostic test (if the AUC is near 0, then the classification is reversed). It is possible, however, that a diagnostic with an AUC near 1 is not suitable (see Examples 1 and 6). In particular, a cutoff value c needs to be selected so that if $X > c$, then an individual is classified as requiring the more invasive procedure. Inferences about the error characteristics of the combination $(X, c)$, such as the false positive rate, are also required.

This paper is concerned with inferences about the AUC, the cutoff c and the error characteristics of the classification based on a valid measure of evidence. A key aspect of the analysis is the relevant prevalence w. The phrase “relevant prevalence” means that X will be applied to a certain population, such as those patients who exhibit certain symptoms, and w represents the proportion of this subpopulation who are diseased. The value of w may vary by geography, medical unit, time, etc. To make a valid assessment of X in an application, it is necessary that the information available concerning w be incorporated. This information is expressed here via an elicited prior probability distribution for w, which may be degenerate at a single value if w is assumed known, or be quite diffuse when little is known about w. In fact, all unknown population quantities are given elicited priors. There are many contexts where data are available relevant to the value of w and this leads to a full posterior analysis for w as well as for the other quantities of interest. Even when such data are not available, however, it is still possible to take the prior for w into account so the uncertainties concerning w always play a role in the analysis and this is a unique aspect of the approach taken here.

While there are some methods available for the choice of c, these often do not depend on the prevalence w, which is a key factor in determining the true error characteristics of $(X, c)$ in an application; see [1,2,3,4,5]. So it is preferable to take w into account when considering the value of a diagnostic in a particular context. One approach to choosing c is to minimize some error criterion that depends on w to obtain $c_{opt}(w)$. As will be demonstrated in the examples, however, $c_{opt}(w)$ sometimes results in a classification that is useless. In such a situation a suboptimal choice of c is required, but the error characteristics can still be based on what is known about w so that they are directly relevant to the application.

Others have pointed out deficiencies in the AUC statistic and proposed alternatives. For example, it can be argued that taking into account the costs associated with various misclassification errors is necessary and that using the AUC implicitly makes unrealistic assumptions concerning these costs; see [6]. While costs are relevant, they are not incorporated here, as they are often difficult to quantify. Our goal is to express clearly what the evidence says about how good $(X, c)$ is, via an assessment of its error characteristics. With the error characteristics in hand, a user can decide whether or not the costs of misclassifications are such that the diagnostic is usable. This may be a qualitative assessment although, if numerical costs are available, these could be subsequently incorporated. The principle here is that economic or social factors should be considered separately from what the evidence in the data says, as it is a goal of statistics to state the latter clearly.

The framework for the analysis is Bayesian, as proper priors are placed on the unknown distribution $F_{ND}$ (the distribution of X in the nondiseased population), on $F_D$ (the distribution of X in the diseased population) and on the prevalence w. In all the problems considered, elicitation algorithms are presented for how to choose these priors. Moreover, all inferences are based on the relative belief characterization of statistical evidence where, for a given quantity, evidence in favor (against) is obtained when posterior beliefs are greater (less) than prior beliefs; see Section 2.2 for discussion and [7]. So evidence is determined by how the data change beliefs. Section 2 discusses the general framework, defines relevant quantities and outlines how specific relative belief inferences are determined. Section 3 develops the inferences for the quantities of interest in three contexts: (1) X is an ordered discrete variable, with and without constraints on $(F_{ND}, F_D)$; (2) X is a continuous variable and $F_{ND}$, $F_D$ are normal distributions (the binormal model); (3) X is a continuous variable and no constraints are placed on $(F_{ND}, F_D)$.

There is previous work on using Bayesian methods in ROC analyses. For example, a Bayesian analysis for the binormal model when there are covariates present is developed in [8]. An estimate of the ROC using the Bayesian bootstrap is discussed in [9]. A Bayesian semiparametric analysis using a Dirichlet mixture process prior is developed in [10,11]. The sampling regime where the data can be used for inference about the relevant prevalence and where a gold standard classifier is not assumed to exist is presented in [12]. Considerable discussion concerning the case where the diagnostic test is binary, covering the cases where there is and is not a gold standard test, as well as the situation where the goal is to compare diagnostic tests and to make inference about the prevalence distribution can be found in [13] and also see [14]. Application of an ROC analysis to a comparison of linear and nonlinear approaches to a problem in medical physics is in [15]. Further discussion of nonlinear methodology can be found in [16,17].

The contributions of this paper, that have not been covered by previous published work in this area, are as follows:

- (i)

- The primary contribution is to base all the inferences associated with an ROC analysis on a clear and unambiguous characterization of statistical evidence via the principle of evidence and the relative belief ratio. While Bayes factors are also used to measure statistical evidence, there are serious limitations on their usage with continuous parameters as priors are restricted to be of a particular form. The approach via relative belief removes such restrictions on priors and provides a unified treatment of estimation and hypothesis assessment problems. In particular, this leads directly to estimates of all the quantities of interest, together with assessments of the accuracy of the estimates, and a characterization of the evidence, whether in favor of or against a hypothesis, together with a measure of the strength of the evidence. Moreover, no loss functions are required to develop these inferences. The merits of the relative belief approach over others are more fully discussed in Section 2.2.

- (ii)

- A prior on the relevant prevalence is always used to determine inferences even when the posterior distribution of this quantity is not available. As such the prevalence always plays a role in the inferences derived here.

- (iii)

- The error in the estimate of the cut-off is always quantified as well as the errors in the estimates of the characteristics evaluated at the chosen cut-off. It is these characteristics, such as the sensitivity and specificity, that ultimately determine the value of the diagnostic test.

- (iv)

- The hypothesis $H_0$: AUC $> 1/2$ is first assessed and, if evidence is found in favor of it, the prior is then conditioned on this event being true for inferences about the remaining quantities. Note that this is equivalent to conditioning the posterior on the event AUC $> 1/2$ when inferences are determined by the posterior alone, but with relative belief inferences both the conditioned prior and the conditioned posterior are needed to determine the inferences.

- (v)

- Precise conditions are developed for the existence of an optimal cutoff with the binormal model.

- (vi)

- In the discrete context (1), it is shown how to develop a prior and the analysis under the assumption that the probabilities describing the outcomes from the diagnostic variable X are monotone.

The relative belief ratio, as a measure of evidence, is seen to have a connection to relative entropy. For example, it is equivalent, in the sense that the inferences are the same, to use the logarithm of the relative belief ratio as the measure of evidence. The relative entropy is then the posterior expectation of this quantity and so can be considered as a measure of the overall evidence provided by the model, prior and data concerning a quantity of interest.

The methods used for all the computations in the paper are simulation based and represent fairly standard Bayesian computational methods. In each context considered, sufficient detail is provided so that these can be implemented by a user.

2. The Problem

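Consider the formulation of the problem as presented in [18,19] but with somewhat different notation. There is a measurement $X : \Omega \to \mathbb{R}$ defined on a population $\Omega = \Omega_D \cup \Omega_{ND}$ with $\Omega_D \cap \Omega_{ND} = \emptyset$, where $\Omega_D$ is comprised of those with a particular disease and $\Omega_{ND}$ represents those without the disease. So $F_{ND}(c) = P(X(\omega) \le c \mid \omega \in \Omega_{ND})$ is the conditional cdf of X in the nondiseased population, and $F_D(c) = P(X(\omega) \le c \mid \omega \in \Omega_D)$ is the conditional cdf of X in the diseased population. It is assumed that there is a gold standard classifier, typically much more difficult to use than X, such that for any $\omega \in \Omega$ it can be determined definitively whether $\omega \in \Omega_D$ or $\omega \in \Omega_{ND}$. There are two ways in which one can sample from $\Omega$, namely,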

- (i)

- take samples from each of $\Omega_D$ and $\Omega_{ND}$ separately, or

- (ii)

- take a sample from $\Omega$.

The sampling method used affects the inferences that can be drawn. For many studies (i) is the relevant sampling mode, as in case-control studies, while (ii) is relevant in cross-sectional studies.

It is supposed that the greater the value $X(\omega)$ is for individual $\omega$, the more likely it is that $\omega \in \Omega_D$. For the classification, a cutoff value c is required such that, if $X(\omega) > c$, then $\omega$ is classified as being in $\Omega_D$, and otherwise $\omega$ is classified as being in $\Omega_{ND}$. However, X is an imperfect classifier for any c, and it is necessary to assess the performance of $(X, c)$. It seems natural to use a value of c that is optimal in some sense related to the error characteristics of this classification. Table 1 gives the relevant probabilities for classification into $\Omega_D$ and $\Omega_{ND}$, together with some common terminology, in a confusion matrix.

Table 1.

Error probabilities when $X > c$ indicates a positive.

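Another key ingredient is the prevalence $w = P(\omega \in \Omega_D)$ of the disease in $\Omega$. In practical situations, it is necessary to also take w into account in assessing the error in $(X, c)$. Writing $\mathrm{FNR}(c) = F_D(c)$ and $\mathrm{FPR}(c) = 1 - F_{ND}(c)$ for the false negative and false positive rates, the following error characteristics depend on w:

$$\mathrm{Error}(c) = w\,\mathrm{FNR}(c) + (1 - w)\,\mathrm{FPR}(c),$$

$$\mathrm{FDR}(c) = \frac{(1 - w)\,\mathrm{FPR}(c)}{w\,(1 - \mathrm{FNR}(c)) + (1 - w)\,\mathrm{FPR}(c)}, \qquad \mathrm{FNDR}(c) = \frac{w\,\mathrm{FNR}(c)}{w\,\mathrm{FNR}(c) + (1 - w)\,(1 - \mathrm{FPR}(c))}.$$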

Under sampling regime (ii) and cutoff c, Error(c) is the probability of making an error, FDR(c) is the conditional probability of a subject being misclassified as positive given that it has been classified as positive and FNDR(c) is the conditional probability of a subject being misclassified as negative given that it has been classified as negative. In other words, FDR(c) is the proportion of those individuals in the population consisting of those who have been classified by the diagnostic test as having the disease, but in fact do not have it. It is often observed that when w is very small and FNR(c) and FPR(c) are small, then FDR(c) can be big. This is sometimes referred to as the base rate fallacy as, even though the test appears to be a good one, there is a high probability that an individual classified as having the disease will be misclassified. For example, if FNR(c) = FPR then Error, FDR FNDR and when then Error, FDR FNDR In these cases the false nondiscovery rate is quite small while the false discovery rate is large. If the disease is highly contagious, then these probabilities may be considered acceptable but indeed they need to be estimated. Similarly, FNDR may be small when FNR is large and w is very small.
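To make the base rate effect concrete, here is a minimal Python sketch (standard library only). It assumes the rule "positive iff $X > c$", so that $\mathrm{FNR}(c) = F_D(c)$ and $\mathrm{FPR}(c) = 1 - F_{ND}(c)$, and computes Error(c), FDR(c) and FNDR(c) from the conditional-probability descriptions given in the paragraph above. The function name `error_characteristics` and the inputs are hypothetical, chosen only for illustration.

```python
def error_characteristics(F_ND_c, F_D_c, w):
    """Error characteristics of the rule "positive iff X > c".

    F_ND_c and F_D_c are the cdfs F_ND(c) and F_D(c) evaluated at the
    cutoff c, and w is the relevant prevalence.
    """
    fnr = F_D_c                 # P(X <= c | diseased)
    fpr = 1.0 - F_ND_c          # P(X > c | nondiseased)
    error = w * fnr + (1.0 - w) * fpr
    p_pos = w * (1.0 - fnr) + (1.0 - w) * fpr   # P(classified positive)
    p_neg = 1.0 - p_pos                          # P(classified negative)
    # Convention (an assumption): a rate with an empty denominator is set to 0.
    fdr = (1.0 - w) * fpr / p_pos if p_pos > 0 else 0.0
    fndr = w * fnr / p_neg if p_neg > 0 else 0.0
    return {"FNR": fnr, "FPR": fpr, "Error": error, "FDR": fdr, "FNDR": fndr}


# Hypothetical inputs: FNR(c) = FPR(c) = 0.05 and a rare condition, w = 0.01.
result = error_characteristics(F_ND_c=0.95, F_D_c=0.05, w=0.01)
for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

With these made-up inputs the probability of error is 0.05 and FNDR is tiny, yet FDR is about 0.84, so most individuals classified as diseased are in fact not diseased.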

It is naturally desirable to make inferences about an optimal cutoff and its associated error quantities. For a given value of w, the optimal cutoff will be defined here as $c_{opt}(w) = \arg\min_c \mathrm{Error}(c)$, the value which minimizes the probability of making an error. Other criteria for determining a cutoff can be used, and the analysis and computations will be similar, but our thesis is that, when possible, any such criterion should involve the prior distribution of the relevant prevalence w. As demonstrated in Example 6, this can sometimes lead to useless values of $c_{opt}(w)$ even when the AUC is large. While this situation calls into question the value of the diagnostic, a suboptimal choice of c can still be made according to some alternative methodology. For example, Youden's index, which maximizes $1 - 2\,\mathrm{Error}(c) = 1 - \mathrm{FNR}(c) - \mathrm{FPR}(c)$ over c with $w = 1/2$, is sometimes recommended, as is the closest-to-(0,1) criterion, which minimizes $\mathrm{FNR}^2(c) + \mathrm{FPR}^2(c)$; see [2] for discussion. Youden's index and the closest-to-(0,1) criterion do not depend on the prevalence and have geometrical interpretations in terms of the ROC curve but, as we will see, the ROC curve does not exist in full generality, and this is particularly relevant in the discrete case. The methodology developed here provides an estimate of the c to be used, together with an exact assessment of the error in this estimate, as well as estimates of the associated error characteristics of the classification.
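The following sketch contrasts the three cutoff rules just described on a discrete scale $c_1 < \cdots < c_m$. It is a minimal illustration, not the paper's code: the function `cutoff_rules` and the cell probabilities are hypothetical, and only cutoffs at the scale values themselves are considered (classify positive iff $X > c_i$).

```python
from itertools import accumulate


def cutoff_rules(p_ND, p_D, w):
    """Return the 1-based index i of the cutoff c_i chosen by three rules.

    Classification is "positive iff X > c_i" on the ordered scale
    c_1 < ... < c_m, and p_ND, p_D are the cell probabilities of X.
    """
    F_ND = list(accumulate(p_ND))
    F_D = list(accumulate(p_D))
    fnr = F_D                              # FNR(c_i) = F_D(c_i)
    fpr = [1.0 - f for f in F_ND]          # FPR(c_i) = 1 - F_ND(c_i)
    idx = range(len(p_ND))
    error = [w * fnr[i] + (1 - w) * fpr[i] for i in idx]
    c_opt = min(idx, key=lambda i: error[i])                     # depends on w
    youden = max(idx, key=lambda i: 1 - fnr[i] - fpr[i])         # free of w
    closest = min(idx, key=lambda i: fnr[i] ** 2 + fpr[i] ** 2)  # free of w
    return c_opt + 1, youden + 1, closest + 1


# Hypothetical cell probabilities on a 5-point scale.
p_ND = [0.40, 0.30, 0.15, 0.10, 0.05]
p_D = [0.05, 0.10, 0.20, 0.30, 0.35]

print(cutoff_rules(p_ND, p_D, w=0.5))
print(cutoff_rules(p_ND, p_D, w=0.05))
```

For these made-up probabilities all three rules select $c_2$ when $w = 1/2$, but with $w = 0.05$ the error-minimizing cutoff moves to $c_5$, the top of the scale, so no one is ever classified as positive: a useless $c_{opt}(w)$ of the kind mentioned above, while the two prevalence-free rules are unchanged.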

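Letting $\hat c$ denote the estimate of $c_{opt}(w)$, the values $\mathrm{Error}(\hat c)$, $\mathrm{FNR}(\hat c)$, $\mathrm{FPR}(\hat c)$, $\mathrm{FDR}(\hat c)$ and $\mathrm{FNDR}(\hat c)$ are also estimated, and the recorded values are used to assess the value of the diagnostic test. There are also other characteristics that may prove useful in this regard, such as the positive predictive value (PPV)

$$\mathrm{PPV}(c) = \frac{w\,(1 - \mathrm{FNR}(c))}{w\,(1 - \mathrm{FNR}(c)) + (1 - w)\,\mathrm{FPR}(c)},$$

namely, the conditional probability that a subject is positive given that they have tested positive, which plays a role similar to FDR (indeed $\mathrm{PPV}(c) = 1 - \mathrm{FDR}(c)$). See [14] for discussion of the PPV and the similarly defined negative predictive value (NPV). The value of $\mathrm{PPV}(\hat c)$ can be estimated in the same way as the other quantities, as is subsequently discussed.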

2.1. The AUC and ROC

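Consider two situations where $F_{ND}$ and $F_D$ are either both absolutely continuous or both discrete. In the discrete case, suppose that these distributions are concentrated on a set of points $c_1 < c_2 < \cdots < c_m$. When $X_{ND} \sim F_{ND}$ and $X_D \sim F_D$ are selected independently using sampling scheme (i), the probability that a higher score on diagnostic X is received by a diseased individual than by a nondiseased individual is

$$\mathrm{AUC} = P(X_D > X_{ND}) = \int_{-\infty}^{\infty} \big(1 - F_D(c)\big)\, F_{ND}(dc). \qquad (1)$$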

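Under the assumption that $F_D$ is constant on $\{c : F_{ND}(c) = p\}$ for every $p \in [0, 1]$, there is a function ROC (receiver operating characteristic) such that $\mathrm{ROC}(1 - F_{ND}(c)) = 1 - F_D(c)$, so $\mathrm{AUC} = \int \mathrm{ROC}(1 - F_{ND}(c))\, F_{ND}(dc)$. In the discrete case, putting $p_i = 1 - F_{ND}(c_i)$ with $p_0 = 1$, then $\mathrm{AUC} = \sum_{i=1}^m \mathrm{ROC}(p_i)(p_{i-1} - p_i)$. In the absolutely continuous case, $\mathrm{AUC} = \int_0^1 \mathrm{ROC}(p)\, dp$, which is the area under the curve given by the ROC function. The area under the curve interpretation is geometrically evocative but is not necessary for (1) to be meaningful.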
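In the discrete case, (1) reduces to $\mathrm{AUC} = \sum_i (1 - F_D(c_i))\, p_{ND,i}$, where $p_{ND,i}$ is the probability of $c_i$ under $F_{ND}$. The sketch below (hypothetical helper names and probabilities) checks this against a direct sum over pairs of scale values and against the ROC-based sum $\sum_i \mathrm{ROC}(p_i)(p_{i-1} - p_i)$ with $p_i = 1 - F_{ND}(c_i)$, $p_0 = 1$. Ties contribute nothing because the definition uses a strictly higher score.

```python
from itertools import accumulate


def auc_from_cdf(p_ND, p_D):
    """AUC = P(X_D > X_ND) = sum_i (1 - F_D(c_i)) p_ND,i for a discrete X."""
    F_D = list(accumulate(p_D))
    return sum((1.0 - F_D[i]) * p_ND[i] for i in range(len(p_ND)))


def auc_by_pairs(p_ND, p_D):
    """Direct check: sum over pairs with c_i > c_j of p_D,i * p_ND,j."""
    m = len(p_ND)
    return sum(p_D[i] * p_ND[j] for i in range(m) for j in range(i))


def auc_via_roc(p_ND, p_D):
    """sum_i ROC(p_i)(p_{i-1} - p_i), p_i = 1 - F_ND(c_i), p_0 = 1,
    ROC(p_i) = 1 - F_D(c_i)."""
    p = [1.0] + [1.0 - f for f in accumulate(p_ND)]
    roc = [1.0 - f for f in accumulate(p_D)]
    return sum(roc[i] * (p[i] - p[i + 1]) for i in range(len(p_ND)))


p_ND = [0.40, 0.30, 0.15, 0.10, 0.05]   # hypothetical
p_D = [0.05, 0.10, 0.20, 0.30, 0.35]    # hypothetical
print(auc_from_cdf(p_ND, p_D), auc_by_pairs(p_ND, p_D), auc_via_roc(p_ND, p_D))
```

All three agree (about 0.7675 here). If $F_{ND} = F_D$ is uniform on m points, the strict-inequality AUC is $(1 - 1/m)/2$, which is below 1/2 because of ties.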

It is commonly suggested that a good diagnostic variable X will have an AUC close to 1 while a value close to 1/2 suggests a poor diagnostic test. It is surely the case, however, that the utility of X in practice will depend on the cutoff c chosen and the various error characteristics associated with this choice. So while the AUC can be used to screen diagnostics, it is only part of the analysis and inferences about the error characteristics are required to truly assess the performance of a diagnostic. Consider an example.

Example 1.

Suppose that for some where is continuous, strictly increasing with associated density Then using (1), AUC which is approximately 1 when q is large. The optimal c minimizes Error which implies c satisfies when and the optimal c is otherwise . If then AUC and with so FNR FPR Error FDR and FNDR. So X seems like a good diagnostic via the AUC and the error characteristics that depend on the prevalence although within the diseased population the probability is of not detecting the disease. If instead then the AUC is the same but and the optimal classification always classifies an individual as non-diseased which is useless. So the AUC does not indicate enough about the characteristics of the diagnostic to determine if it is useful or not. It is necessary to look at the error characteristics of the classification at the cutoff value that will actually be used, to determine if a diagnostic is suitable and this implies that information about w is necessary in an application.

2.2. Relative Belief Inferences

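Suppose there is a model $\{f_\theta : \theta \in \Theta\}$ for data x together with a prior probability measure $\Pi$ with density $\pi$ on $\Theta$. These ingredients lead, via the principle of conditional probability, to beliefs about the true value of $\theta$, as initially expressed by $\Pi$, being replaced by the posterior probability measure $\Pi(\cdot \mid x)$ with density $\pi(\theta \mid x) = \pi(\theta) f_\theta(x) / m(x)$, where $m(x) = \int_\Theta \pi(\theta) f_\theta(x)\, d\theta$ is the prior predictive density of the data. Note that if interest is instead in a quantity $\psi = \Psi(\theta)$, where $\Psi : \Theta \to \Psi$ and we use the same notation for the function and its range, then the model is replaced by $\{f_\psi : \psi \in \Psi\}$, where $f_\psi$ is obtained by integrating out the nuisance parameters, and the prior is replaced by the marginal prior $\Pi_\Psi$ with density $\pi_\Psi$. This leads to the marginal posterior with density $\pi_\Psi(\psi \mid x) = \pi_\Psi(\psi) f_\psi(x) / m(x)$.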

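For the moment suppose that all the distributions are discrete. The principle of evidence then says that there is evidence in favor of the value $\psi$ if $\pi_\Psi(\psi \mid x) > \pi_\Psi(\psi)$, evidence against the value $\psi$ if $\pi_\Psi(\psi \mid x) < \pi_\Psi(\psi)$, and no evidence either way if $\pi_\Psi(\psi \mid x) = \pi_\Psi(\psi)$. So, for example, there is evidence in favor of $\psi$ if the probability of $\psi$ increases after seeing the data. To order the possible values with respect to the evidence, we use the relative belief ratio

$$RB_\Psi(\psi \mid x) = \frac{\pi_\Psi(\psi \mid x)}{\pi_\Psi(\psi)}.$$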

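Note that $RB_\Psi(\psi \mid x) > (<)\ 1$ indicates that there is evidence in favor of (against) the value $\psi$. If there is evidence in favor of both $\psi_1$ and $\psi_2$, then there is more evidence in favor of $\psi_1$ than $\psi_2$ whenever $RB_\Psi(\psi_1 \mid x) > RB_\Psi(\psi_2 \mid x)$ and, if there is evidence against both $\psi_1$ and $\psi_2$, then there is more evidence against $\psi_1$ than $\psi_2$ whenever $RB_\Psi(\psi_1 \mid x) < RB_\Psi(\psi_2 \mid x)$. For the continuous case, consider a sequence of neighborhoods $N_\epsilon(\psi)$ of $\psi$ with $N_\epsilon(\psi) \downarrow \{\psi\}$ as $\epsilon \to 0$, and then

$$RB_\Psi(\psi \mid x) = \lim_{\epsilon \to 0} \frac{\Pi_\Psi(N_\epsilon(\psi) \mid x)}{\Pi_\Psi(N_\epsilon(\psi))} = \frac{\pi_\Psi(\psi \mid x)}{\pi_\Psi(\psi)} \qquad (2)$$

under very weak conditions, such as $\pi_\Psi$ and $\pi_\Psi(\cdot \mid x)$ being continuous at $\psi$ with $\pi_\Psi(\psi) > 0$.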

All the inferences about quantities considered in the paper are derived from the principle of evidence as expressed via the relative belief ratio. For example, it is immediate that the value $RB_\Psi(\psi_0 \mid x)$ indicates whether there is evidence in favor of or against the hypothesis $H_0 : \Psi(\theta) = \psi_0$. Furthermore, the posterior probability $\Pi_\Psi(RB_\Psi(\psi \mid x) \le RB_\Psi(\psi_0 \mid x) \mid x)$ measures the strength of this evidence: if $RB_\Psi(\psi_0 \mid x) > 1$ and this probability is large, then there is strong evidence in favor of $\psi_0$, as there is small belief that the true value has a larger relative belief ratio, while if $RB_\Psi(\psi_0 \mid x) < 1$ and this probability is small, then there is strong evidence against $\psi_0$, as there is high belief that the true value has a larger relative belief ratio. For estimation it is natural to estimate $\psi$ by the relative belief estimate $\psi(x) = \arg\sup_\psi RB_\Psi(\psi \mid x)$, as this value has the maximum evidence in its favor. Furthermore, the accuracy of this estimate can be assessed by looking at the plausible region $Pl_\Psi(x) = \{\psi : RB_\Psi(\psi \mid x) > 1\}$, consisting of all those values for which there is evidence in favor, together with its size and its posterior content $\Pi_\Psi(Pl_\Psi(x) \mid x)$, which measures how strongly it is believed that the true value lies in this set. Rather than using the plausible region to assess the accuracy of $\psi(x)$, one could quote a relative belief credible region

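$$C_{\Psi,\gamma}(x) = \{\psi : RB_\Psi(\psi \mid x) \ge k_\gamma\},$$

where the constant $k_\gamma$ is the largest value such that $\Pi_\Psi(C_{\Psi,\gamma}(x) \mid x) \ge \gamma$. It is necessary, however, that $\gamma \le \Pi_\Psi(Pl_\Psi(x) \mid x)$, as otherwise $C_{\Psi,\gamma}(x)$ will contain values for which there is evidence against, and this is only known after the data have been seen.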
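All of these inferences can be computed directly when $\psi$ takes finitely many values. The following is a minimal sketch under that assumption; the function `rb_inferences` and the prior/posterior pair are hypothetical. It returns $RB_\Psi(\psi_0 \mid x)$, the strength of the evidence, the relative belief estimate, the plausible region with its posterior content, and a $\gamma$-relative belief credible region, refusing $\gamma$ larger than the posterior content of the plausible region.

```python
def rb_inferences(prior, posterior, psi0, gamma):
    """Relative belief inferences for a parameter taking finitely many values."""
    rb = {psi: posterior[psi] / prior[psi] for psi in prior if prior[psi] > 0}
    # Strength: posterior probability that the true value has RB <= RB(psi0).
    strength = sum(posterior[psi] for psi in rb if rb[psi] <= rb[psi0])
    estimate = max(rb, key=rb.get)                     # maximal evidence in favor
    plausible = {psi for psi in rb if rb[psi] > 1}     # evidence in favor
    pl_content = sum(posterior[psi] for psi in plausible)
    # Credible region {psi : RB >= k_gamma}; only meaningful if gamma <= Pl content.
    if gamma > pl_content:
        raise ValueError("gamma exceeds the posterior content of the plausible region")
    for k in sorted(set(rb.values()), reverse=True):   # try the largest k first
        region = {psi for psi in rb if rb[psi] >= k}
        if sum(posterior[psi] for psi in region) >= gamma:
            break
    return {"RB(psi0)": rb[psi0], "strength": strength, "estimate": estimate,
            "plausible": plausible, "Pl content": pl_content, "credible": region}


# Hypothetical prior and posterior on psi in {0, 1, 2, 3}.
prior = {0: 0.25, 1: 0.25, 2: 0.25, 3: 0.25}
posterior = {0: 0.10, 1: 0.20, 2: 0.40, 3: 0.30}
print(rb_inferences(prior, posterior, psi0=3, gamma=0.6))
```

For $\psi_0 = 3$ there is evidence in favor (RB = 1.2) with strength 0.6: the remaining posterior probability 0.4 sits on the value 2, which has even more evidence in its favor. The plausible region $\{2, 3\}$ has posterior content 0.7, so any $\gamma > 0.7$ is rejected.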

It is established in [7], and in papers referenced there, that these inferences possess a number of good properties, such as consistency, and satisfy various optimality criteria; clearly, they are also based on a direct measure of the evidence. Perhaps most significant is the fact that all the inferences are invariant under reparameterizations. For if $\lambda = \Lambda(\psi)$, where $\Lambda$ is a smooth bijection, then

$$RB_\Lambda(\lambda \mid x) = RB_\Psi(\Lambda^{-1}(\lambda) \mid x)$$

and so, for example, $\lambda(x) = \Lambda(\psi(x))$. This invariance property is not possessed by the most commonly employed inference methods, such as MAP estimation or posterior means, and it holds no matter what the dimension of $\psi$ is. Moreover, it is proved in [20] that relative belief inferences are optimally robust, among all Bayesian inferences for $\psi$, to linear contaminations of the prior on $\psi$.

An analysis, using relative belief, of the data obtained in several physics experiments that were all concerned with examining whether there was evidence in favor of or against the quantum model versus hidden variables is available in [21]. Furthermore, an approach to checking models used for quantum mechanics via relative belief is discussed in [22]. Other applications of relative belief inferences to common problems of statistical practice can be found in [7].

The Bayes factor is an alternative measure of evidence and is commonly used for hypothesis assessment in Bayesian inference. To see why the relative belief ratio has advantages over the Bayes factor for evidence-based inferences, consider first assessing the hypothesis $H_0 : \Psi(\theta) = \psi_0$. When the prior probability of $\psi_0$ satisfies $0 < \Pi_\Psi(\{\psi_0\}) < 1$, the Bayes factor is defined as the ratio of the posterior odds in favor of $\psi_0$ to the prior odds in favor of $\psi_0$, namely,

$$BF_\Psi(\psi_0 \mid x) = \frac{\Pi_\Psi(\{\psi_0\} \mid x)}{1 - \Pi_\Psi(\{\psi_0\} \mid x)} \bigg/ \frac{\Pi_\Psi(\{\psi_0\})}{1 - \Pi_\Psi(\{\psi_0\})}.$$

It is easily shown that the Bayes factor satisfies the principle of evidence, as $BF_\Psi(\psi_0 \mid x) > (<)\ 1$ is evidence in favor of (against) $\psi_0$, so in this context it is a valid measure of evidence.

One might wonder why it is necessary to consider a ratio of odds, as opposed to the simpler ratio of probabilities given by the relative belief ratio, for the purpose of measuring evidence, but in fact there is a more serious issue with the Bayes factor. Suppose, as commonly arises in applications, that $\Pi_\Psi$ is a continuous probability measure, so that $\Pi_\Psi(\{\psi_0\}) = 0$; then the Bayes factor for $\psi_0$ is not defined. The common recommendation in this context is to require the specification of the following ingredients: a prior probability $0 < p < 1$ for $H_0$, a prior distribution $\Pi_{H_0}$ concentrated on $\Psi^{-1}\{\psi_0\}$, which provides the prior predictive density $m_{H_0}$, and a prior distribution $\Pi_{H_0^c}$ concentrated on $\Psi^{-1}\{\psi_0\}^c$, which provides the prior predictive density $m_{H_0^c}$; the full prior is then taken to be the mixture $\Pi_p = p\,\Pi_{H_0} + (1 - p)\,\Pi_{H_0^c}$. With this prior the Bayes factor for $\psi_0$ is defined, as the prior probability of $\psi_0$ now equals p, and an easy calculation shows that $BF_\Psi(\psi_0 \mid x) = m_{H_0}(x) / m_{H_0^c}(x)$. Typically the prior $\Pi_{H_0^c}$ is taken to be the prior $\Pi$ that we might place on $\theta$ when interest is in estimating $\psi$.

Now consider the problem of estimating $\psi$ when the prior is such that $\Pi_\Psi(\{\psi\}) = 0$ for every value of $\psi$, as with a continuous prior. The Bayes factor is then not defined for any value of $\psi$ and, if we wished to use the Bayes factor for estimation purposes, it would be necessary to modify the prior to be a different mixture for each value of $\psi$, so that in effect there would be multiple different priors. This does not correspond to the logic underlying Bayesian inference. When using the relative belief ratio for inference only one prior is required, and the same measure of evidence is used for both hypothesis assessment and estimation.

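Another approach to dealing with the problem that arises with the Bayes factor and continuous priors is to take a limit as in (2) and, when this is done, we obtain

$$BF_\Psi(N_\epsilon(\psi_0) \mid x) = \frac{\Pi_\Psi(N_\epsilon(\psi_0) \mid x)}{1 - \Pi_\Psi(N_\epsilon(\psi_0) \mid x)} \bigg/ \frac{\Pi_\Psi(N_\epsilon(\psi_0))}{1 - \Pi_\Psi(N_\epsilon(\psi_0))} \to RB_\Psi(\psi_0 \mid x)$$

as $\epsilon \to 0$, whenever the prior density of $\psi$ is continuous and positive at $\psi_0$. In other words, the relative belief ratio can also be considered a natural definition of the Bayes factor in continuous contexts.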

3. Inferences for an ROC Analysis

Suppose we have a sample of size $n_{ND}$ from $F_{ND}$, namely $x_{ND} = (x_{ND,1}, \ldots, x_{ND,n_{ND}})$, and a sample of size $n_D$ from $F_D$, namely $x_D = (x_{D,1}, \ldots, x_{D,n_D})$, and the goal is to make inferences about the AUC, the cutoff c and the error characteristics $\mathrm{FNR}(c)$, $\mathrm{FPR}(c)$, $\mathrm{Error}(c)$, $\mathrm{FDR}(c)$ and $\mathrm{FNDR}(c)$. For the AUC it makes sense to first assess the hypothesis $H_0$: AUC $> 1/2$ by stating whether there is evidence for or against $H_0$, together with an assessment of the strength of this evidence. Estimates are required for all of these quantities, together with assessments of their accuracy.

3.1. The Prevalence

Consider first inferences for the relevant prevalence w. If w is known, or at least assumed known, then nothing further needs to be done; otherwise this quantity needs to be estimated when assessing the value of the diagnostic, and so the uncertainty about w needs to be addressed.

If the full data set is based on sampling scheme (ii), then $n_D \sim \text{binomial}(n, w)$, where $n = n_{ND} + n_D$. A natural prior to place on w is a beta$(\alpha_1, \alpha_2)$ distribution. The hyperparameters are chosen based on the elicitation algorithm discussed in [23], where an interval $[l, u]$ is chosen such that it is believed that $w \in [l, u]$ with prior probability $\gamma$. Here $[l, u]$ is chosen so that we are virtually certain that $w \in [l, u]$, so $\gamma$ is taken close to 1. Note that choosing $l = u$ corresponds to w being known, in which case the prior is degenerate at that value. Next pick a point $\xi \in [l, u]$ for the mode of the prior. Then putting $\alpha_1 = 1 + \tau\xi$ and $\alpha_2 = 1 + \tau(1 - \xi)$ leads to the parameterization beta$(\alpha_1, \alpha_2)$ = beta$(1 + \tau\xi, 1 + \tau(1 - \xi))$, where $\xi$ locates the mode and $\tau \ge 0$ controls the spread of the distribution about $\xi$. Here $\tau = 0$ gives the uniform distribution and $\tau = \infty$ gives the distribution degenerate at $\xi$. With $[l, u]$, $\xi$ and $\gamma$ specified, $\tau$ is the smallest value such that the probability content of $[l, u]$ is $\gamma$, and this is found iteratively. For example, if and so w is known reasonably well, then and so the prior is beta and the posterior is beta
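The iterative search for $\tau$ can be sketched as follows. This is our own minimal implementation, not the algorithm code of [23]: it integrates the beta(1 + τξ, 1 + τ(1 − ξ)) density over $[l, u]$ with Simpson's rule and finds the smallest $\tau$ by doubling followed by bisection, which assumes that the content of $[l, u]$ increases with $\tau$ (reasonable when $\xi$ lies well inside $[l, u]$). The values of $l$, $u$, $\xi$ and $\gamma$ are hypothetical.

```python
import math


def beta_content(l, u, a, b, n=2000):
    """P(l <= W <= u) for W ~ beta(a, b), by composite Simpson's rule (n even).

    Endpoints are clipped slightly inside (0, 1) so the logs are finite; the
    neglected mass is negligible for a, b >= 1.
    """
    l, u = max(l, 1e-12), min(u, 1 - 1e-12)
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)

    def pdf(t):
        return math.exp(log_norm + (a - 1) * math.log(t) + (b - 1) * math.log(1 - t))

    h = (u - l) / n
    total = pdf(l) + pdf(u)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * pdf(l + i * h)
    return total * h / 3


def elicit_tau(l, u, xi, gamma, tol=1e-6, tau_max=1e8):
    """Smallest tau >= 0 with beta(1 + tau*xi, 1 + tau*(1 - xi)) content of
    [l, u] equal to gamma; assumes this content increases with tau."""
    def content(tau):
        return beta_content(l, u, 1 + tau * xi, 1 + tau * (1 - xi))

    if content(0.0) >= gamma:
        return 0.0
    lo, hi = 0.0, 1.0
    while content(hi) < gamma:          # bracket the solution by doubling
        lo, hi = hi, 2 * hi
        if hi > tau_max:
            raise ValueError("no tau found; check that xi lies inside [l, u]")
    while hi - lo > tol * hi:           # bisection on the bracket
        mid = (lo + hi) / 2
        if content(mid) < gamma:
            lo = mid
        else:
            hi = mid
    return hi


# Hypothetical elicitation: virtually certain that w lies in [0.1, 0.3],
# mode at the midpoint, gamma = 0.99.
LOWER, UPPER, XI, GAMMA = 0.1, 0.3, 0.2, 0.99
tau = elicit_tau(LOWER, UPPER, XI, GAMMA)
a1, a2 = 1 + tau * XI, 1 + tau * (1 - XI)
print(f"tau = {tau:.2f}, prior beta({a1:.2f}, {a2:.2f})")
```

A library beta cdf (for example from SciPy) could replace the Simpson integration; the standard library version is used here only to keep the sketch self-contained.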

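The estimate of w is then the relative belief estimate

$$\hat w = \arg\sup_w \frac{\pi(w \mid n_D)}{\pi(w)} = \arg\sup_w w^{n_D}(1 - w)^{n - n_D}.$$

In this case the estimate is the MLE, namely, $\hat w = n_D / n$. The accuracy of this estimate is measured by the size of the plausible region $\{w : \pi(w \mid n_D) > \pi(w)\}$. For example, if and then and which has posterior content So the data suggest that the upper bound of $[l, u]$ is too strong, although the posterior belief in this interval is not very high.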

The prior and posterior distributions of w play a role in inferences about all the quantities that depend on the prevalence. When the cutoff is determined by minimizing the probability of a misclassification, $\mathrm{FNR}(c_{opt}(w))$, $\mathrm{FPR}(c_{opt}(w))$, $\mathrm{Error}(c_{opt}(w))$, $\mathrm{FDR}(c_{opt}(w))$ and $\mathrm{FNDR}(c_{opt}(w))$ all depend on the prevalence. Under sampling scheme (i), however, only the prior on w has any influence when considering the effectiveness of $(X, c)$. Inference for these quantities is now discussed in both cases.

3.2. Ordered Discrete Diagnostic

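Suppose X takes values on the finite ordered scale $c_1 < c_2 < \cdots < c_m$ and let $p_{ND,i} = P(X = c_i \mid \Omega_{ND})$ and $p_{D,i} = P(X = c_i \mid \Omega_D)$, so $p_{ND} = (p_{ND,1}, \ldots, p_{ND,m})$ and $p_D = (p_{D,1}, \ldots, p_{D,m})$. These imply that

$$\mathrm{FPR}(c_i) = \sum_{j=i+1}^{m} p_{ND,j}, \qquad \mathrm{FNR}(c_i) = \sum_{j=1}^{i} p_{D,j},$$

with the remaining quantities defined similarly. Ref. [23] can be used to obtain independent elicited Dirichlet priors

$$p_{ND} \sim \text{Dirichlet}(\alpha_{ND}), \qquad p_D \sim \text{Dirichlet}(\alpha_D)$$

on these probabilities by placing either upper or lower bounds on each cell probability that hold with virtual certainty, as discussed for the beta prior on the prevalence. If little information is available, it is reasonable to use uniform, namely Dirichlet$(1, \ldots, 1)$, priors on $p_{ND}$ and $p_D$. This, together with the independent prior on w, leads to prior distributions for the AUC, $c_{opt}(w)$ and all the quantities associated with error assessment, such as $\mathrm{FNR}(c_{opt}(w))$.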

Data lead to counts $f_{ND} = (f_{ND,1}, \ldots, f_{ND,m})$ and $f_D = (f_{D,1}, \ldots, f_{D,m})$, which in turn lead to the independent posteriors

$$p_{ND} \mid f_{ND} \sim \text{Dirichlet}(\alpha_{ND} + f_{ND}), \qquad p_D \mid f_D \sim \text{Dirichlet}(\alpha_D + f_D).$$

Under sampling regime (ii) this, together with the independent posterior on w, leads to posterior distributions for all the quantities of interest. Under sampling regime (i), however, the logical approach, so that the inferences reflect the uncertainty about w, is to use only the prior on w when deriving inferences about any quantities that depend on it, such as $c_{opt}(w)$ and the various error assessments.

Consider inferences for the AUC. The first inference should be to assess the hypothesis $H_0$: AUC $> 1/2$ for, if $H_0$ is false, then X would seem to have no value as a diagnostic (the possibility that the directionality is wrong is ignored here). The relative belief ratio $RB(H_0 \mid x)$ is computed and compared to 1. If it is concluded that $H_0$ is true, then perhaps the next inference of interest is to estimate the AUC via the relative belief estimate. The prior and posterior densities of the AUC are not available in closed form, so estimates are required, and density histograms are employed here for this. The interval [0, 1] is discretized into L subintervals $((i-1)/L, i/L]$, $i = 1, \ldots, L$, and the value of the prior density on the i-th subinterval is estimated by L times the proportion of prior simulated values of the AUC in that subinterval, and similarly for the posterior density. The estimated ratio $RB(\mathrm{AUC} \mid x)$ is then maximized to obtain the relative belief estimate $\widehat{\mathrm{AUC}}(x)$, together with the plausible region and its posterior content.
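The simulation just described can be sketched as follows, assuming Dirichlet priors, conjugate Dirichlet posteriors and the discrete-scale AUC. This is an illustrative implementation with hypothetical counts, not the code behind the paper's examples, and it omits the subsequent conditioning on AUC $> 1/2$ described in the Introduction. The plausible region is reported as the hull of the subintervals with RB > 1, which need not be contiguous in general.

```python
import random
from itertools import accumulate


def rdirichlet(alpha, rng):
    """One Dirichlet(alpha) draw via normalized gamma variates."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    s = sum(g)
    return [x / s for x in g]


def auc(p_ND, p_D):
    """AUC = P(X_D > X_ND) for cell probabilities on c_1 < ... < c_m."""
    F_D = list(accumulate(p_D))
    return sum((1.0 - F_D[i]) * p_ND[i] for i in range(len(p_ND)))


def bin_proportions(values, L):
    """Proportion of values in each of L equal subintervals of [0, 1]; the
    factor L that turns these into density heights cancels in the RB ratio."""
    counts = [0] * L
    for v in values:
        counts[min(int(v * L), L - 1)] += 1
    return [c / len(values) for c in counts]


def rb_auc(f_ND, f_D, alpha_ND, alpha_D, N=10_000, L=24, seed=1):
    """Monte Carlo relative belief inferences for the AUC with Dirichlet priors."""
    rng = random.Random(seed)
    post_ND = [a + f for a, f in zip(alpha_ND, f_ND)]   # conjugate updates
    post_D = [a + f for a, f in zip(alpha_D, f_D)]
    prior = [auc(rdirichlet(alpha_ND, rng), rdirichlet(alpha_D, rng)) for _ in range(N)]
    post = [auc(rdirichlet(post_ND, rng), rdirichlet(post_D, rng)) for _ in range(N)]

    # Evidence concerning H0: AUC > 1/2 (assumes the prior gives H0 positive mass).
    prior_h0 = sum(v > 0.5 for v in prior) / N
    post_h0 = sum(v > 0.5 for v in post) / N

    # Histogram-based RB ratio on the subintervals where the prior has mass.
    prior_bins, post_bins = bin_proportions(prior, L), bin_proportions(post, L)
    rb = {i: post_bins[i] / prior_bins[i] for i in range(L) if prior_bins[i] > 0}
    best = max(rb, key=rb.get)
    plausible = [i for i in rb if rb[i] > 1]
    return {
        "RB(H0)": post_h0 / prior_h0,
        "posterior P(H0)": post_h0,
        "AUC estimate": (best + 0.5) / L,             # midpoint of the best subinterval
        "plausible hull": (min(plausible) / L, (max(plausible) + 1) / L),
        "plausible content": sum(post_bins[i] for i in plausible),
    }


# Hypothetical counts on a 5-point scale, with uniform Dirichlet(1, ..., 1) priors.
f_ND = [30, 25, 20, 15, 10]
f_D = [5, 10, 15, 30, 40]
result = rb_auc(f_ND, f_D, [1.0] * 5, [1.0] * 5)
for key, value in result.items():
    print(key, value)
```

The Monte Carlo sample size N and the number of subintervals L trade accuracy against run time; bins where the prior has very few draws give noisy ratios.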

These quantities are also obtained for $c_{opt}(w)$ in a similar fashion, although $c_{opt}(w)$ has prior and posterior distributions concentrated on $\{c_1, \ldots, c_m\}$, so there is no need to discretize. Estimates of $\mathrm{FNR}(\hat c)$, $\mathrm{FPR}(\hat c)$, $\mathrm{Error}(\hat c)$, $\mathrm{FDR}(\hat c)$ and $\mathrm{FNDR}(\hat c)$ are also obtained, as these indicate the performance of the diagnostic in practice. The relative belief estimates of these quantities are easily obtained in a second simulation where $c = \hat c$ is fixed.
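Because $c_{opt}(w)$ lives on the finite set $\{c_1, \ldots, c_m\}$, its relative belief ratio can be estimated from simulated frequencies without any histogram. The sketch below is illustrative only: uniform Dirichlet priors and the counts are hypothetical, and w is supplied through a sampler, either constant (w known) or drawn from a prior (sampling scheme (i), where the same prior on w is used in both simulations) or from a posterior for the posterior simulation (scheme (ii)). The second simulation at $c = \hat c$ for the error characteristics is not included.

```python
import random
from collections import Counter
from itertools import accumulate


def rdirichlet(alpha, rng):
    """One Dirichlet(alpha) draw via normalized gamma variates."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    s = sum(g)
    return [x / s for x in g]


def c_opt_index(p_ND, p_D, w):
    """1-based i minimizing Error(c_i) = w F_D(c_i) + (1 - w)(1 - F_ND(c_i))."""
    F_ND, F_D = list(accumulate(p_ND)), list(accumulate(p_D))
    errors = [w * fd + (1 - w) * (1 - fn) for fn, fd in zip(F_ND, F_D)]
    return min(range(len(errors)), key=errors.__getitem__) + 1


def c_opt_probs(alpha_ND, alpha_D, draw_w, N, rng):
    """Monte Carlo distribution of c_opt(w) over the indices 1, ..., m."""
    counts = Counter(
        c_opt_index(rdirichlet(alpha_ND, rng), rdirichlet(alpha_D, rng), draw_w(rng))
        for _ in range(N)
    )
    return {i: counts[i] / N for i in range(1, len(alpha_ND) + 1)}


def rb_c_opt(f_ND, f_D, draw_w_prior, draw_w_post=None, N=10_000, seed=2):
    """RB estimate of c_opt(w), plausible set and its posterior content.
    If draw_w_post is omitted, the prior on w is also used for the posterior."""
    draw_w_post = draw_w_post or draw_w_prior
    m, rng = len(f_ND), random.Random(seed)
    prior = c_opt_probs([1.0] * m, [1.0] * m, draw_w_prior, N, rng)
    post = c_opt_probs([1.0 + f for f in f_ND], [1.0 + f for f in f_D], draw_w_post, N, rng)
    rb = {i: post[i] / prior[i] for i in prior if prior[i] > 0}
    estimate = max(rb, key=rb.get)
    plausible = sorted(i for i in rb if rb[i] > 1)
    return estimate, plausible, sum(post[i] for i in plausible)


f_ND = [30, 25, 20, 15, 10]   # hypothetical counts
f_D = [5, 10, 15, 30, 40]
print(rb_c_opt(f_ND, f_D, lambda rng: 0.4))                        # w known
print(rb_c_opt(f_ND, f_D, lambda rng: rng.betavariate(3.0, 9.0)))  # beta prior on w
```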

Consider now an example.

Example 2.

Simulated example.

For and , data were generated as

With these choices for the true values are AUC, and with , FNR FPR Error FDR and FNDR. So X is not an outstanding diagnostic but with these error characteristics it may prove suitable for a given application. Uniform, namely, Dirichlet priors were placed on and reflecting little knowledge about these quantities.

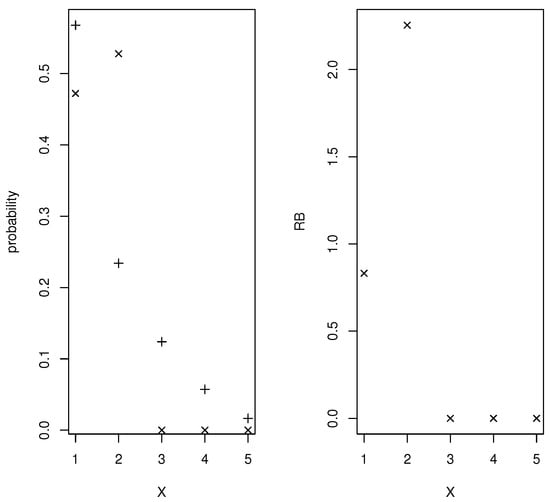

Monte Carlo simulations from the prior and posterior distributions of $p_{ND}$ and $p_D$ were conducted, and the prior and posterior distributions of the quantities of interest were obtained. The hypothesis $H_0$: AUC $> 1/2$ is assessed by its relative belief ratio, which exceeds 1, so there is evidence in favor of $H_0$, and the strength of this evidence is measured by the posterior probability content of $H_0$, which equals 1 to machine accuracy; this is categorical evidence in favor of $H_0$.

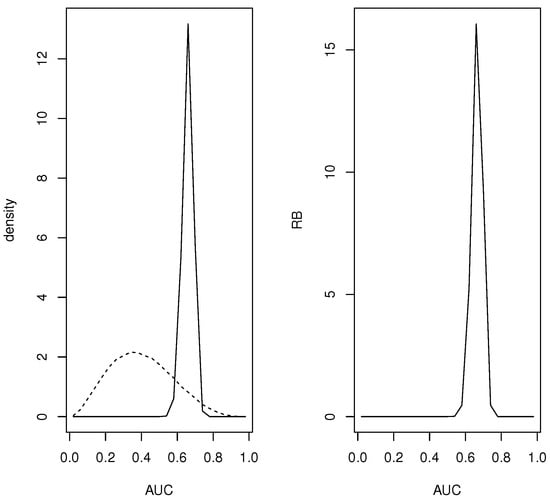

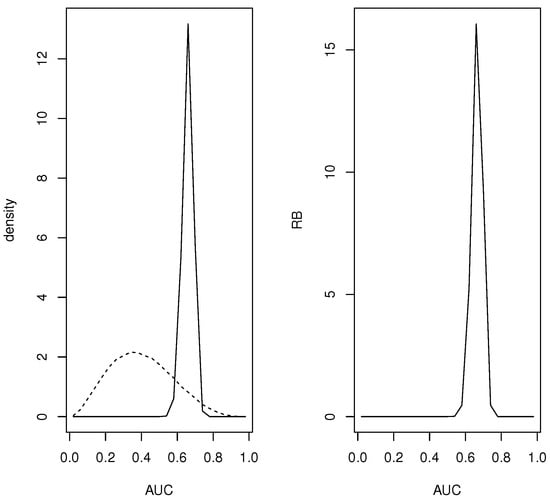

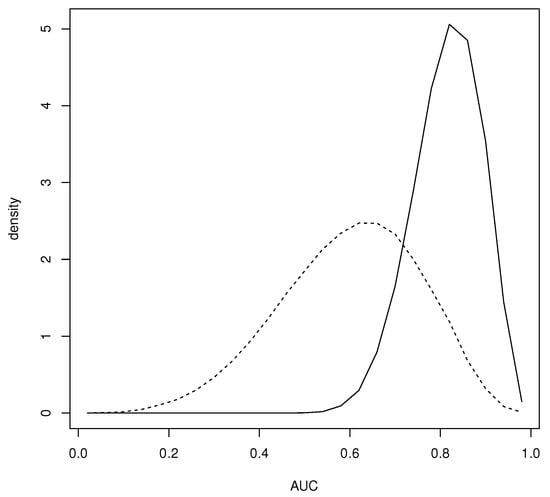

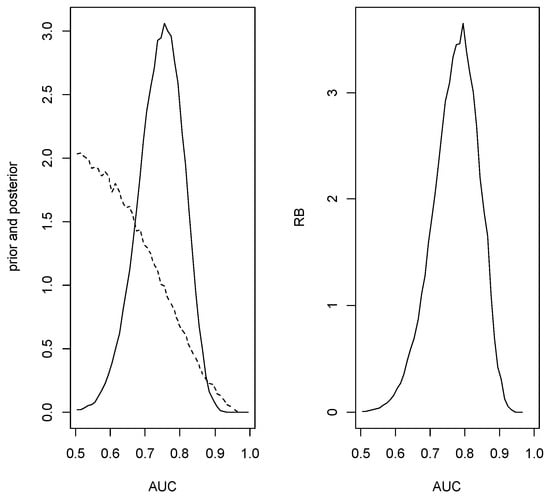

For the continuous quantities, [0, 1] was partitioned into L = 24 equal subintervals and all the mass in each subinterval was assigned to its midpoint. Figure 1 contains plots of the prior and posterior densities and the relative belief ratio of the AUC. The relative belief estimate of the AUC is AUC with having posterior content Certainly a finer partition of [0, 1] than just 24 intervals is possible, but even with this relatively coarse partition the results are quite accurate.

Figure 1.

In Example 2, plots of the prior (- - -), the posterior (—) and the RB ratio of the AUC.

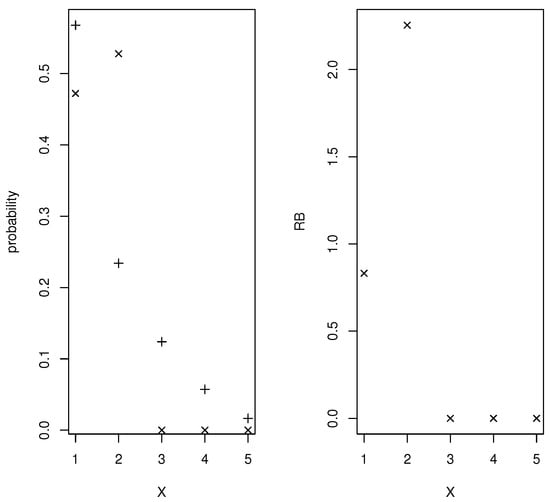

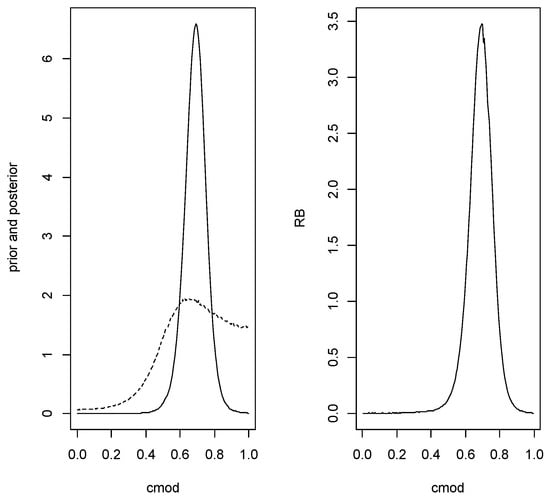

Supposing that the relevant prevalence is known to be Figure 2 contains plots of the prior and posterior densities and relative belief ratio of The relative belief estimate is with with posterior probability content so the correct optimal cut-off has been identified but there is a degree of uncertainty concerning this. The error characteristics that tell us about the utility of X as a diagnostic are given by the relative belief estimates (column (a)) in Table 2. It is interesting to note that the estimate of Error is determined by the prior and posterior distributions of a convex combination of FPR and FNR and the estimate is not the same convex combination of the estimates of FPR and FNR. So, in this case Error seems like a much better assessment of the performance of the diagnostic.

Figure 2.

In Example 2, plots of the prior (+), the posterior (×) and the RB ratio of .

Table 2.

The estimates of the error characteristics of X at in Example 2 where (a) w is assumed known, (b) only the prior for w is available, (c) the posterior for w is also available.

Suppose now that the prevalence is not known but there is a beta prior specified for w and consider the choice discussed in Section 3.1 where and When the data are produced according to sampling regime (i), then there is no posterior for w but this prior can still be used in determining the prior and posterior distributions of and the associated error characteristics. When this simulation was carried out with with posterior probability content and column (b) of Table 2 gives the estimates of the error characteristics. So other than the estimate of the FPR, the results are similar. Finally, assuming that the data arose under sampling scheme (ii), then w has a posterior distribution and using this gives with with posterior probability content and error characteristics as in column (c) of Table 2. These results are the same as if the prevalence is known which is sensible as the posterior concentrates about the true value more than the prior.
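The two sampling regimes differ only in what is used for w when propagating uncertainty to the optimal cutoff. This is a minimal sketch. It assumes uniform Dirichlet priors for the two probability vectors and a beta(a_w, b_w) prior for w. Under regime (ii) it uses the conjugate update beta(a_w + n_D, b_w + n_ND). The hyperparameters and counts are illustrative only.

```python
import numpy as np


def c_opt_draws(counts_nd, counts_d, a_w, b_w, scheme, n_mc=20_000, seed=1):
    """Posterior draws of the optimal cutoff for a discrete X on 1..m.

    scheme "i":  separate samples from ND and D, so w keeps its beta(a_w, b_w) prior.
    scheme "ii": one sample from the whole population, so w | data is
                 beta(a_w + n_D, b_w + n_ND).
    Assumed rule: classify as D when X > c, c = 0..m.
    """
    rng = np.random.default_rng(seed)
    counts_nd = np.asarray(counts_nd, dtype=float)
    counts_d = np.asarray(counts_d, dtype=float)
    p_nd = rng.dirichlet(1.0 + counts_nd, n_mc)   # uniform Dirichlet priors assumed
    p_d = rng.dirichlet(1.0 + counts_d, n_mc)
    if scheme == "i":
        w = rng.beta(a_w, b_w, n_mc)
    elif scheme == "ii":
        w = rng.beta(a_w + counts_d.sum(), b_w + counts_nd.sum(), n_mc)
    else:
        raise ValueError("scheme must be 'i' or 'ii'")
    zeros = np.zeros((n_mc, 1))
    fnr = np.concatenate([zeros, np.cumsum(p_d, axis=1)], axis=1)          # P(X_D <= c)
    fpr = 1.0 - np.concatenate([zeros, np.cumsum(p_nd, axis=1)], axis=1)   # P(X_ND > c)
    error = w[:, None] * fnr + (1.0 - w[:, None]) * fpr
    return np.argmin(error, axis=1), w


counts_nd, counts_d = [40, 30, 15, 10, 5], [5, 5, 10, 10, 10]    # hypothetical
c_i, w_i = c_opt_draws(counts_nd, counts_d, 2.0, 8.0, "i")
c_ii, w_ii = c_opt_draws(counts_nd, counts_d, 2.0, 8.0, "ii")
print(np.bincount(c_i, minlength=6) / c_i.size)    # distribution of c_opt, regime (i)
print(np.bincount(c_ii, minlength=6) / c_ii.size)  # distribution of c_opt, regime (ii)
```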

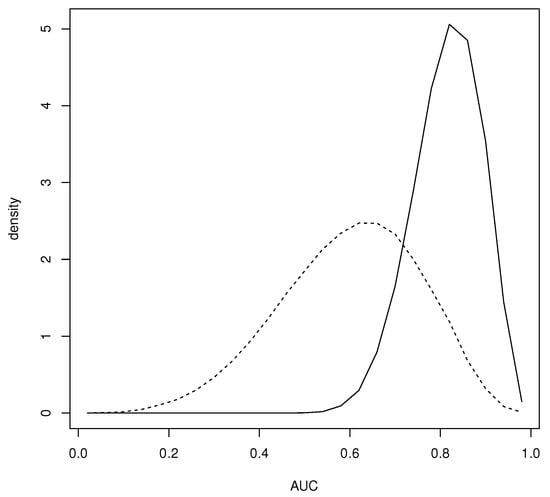

Another somewhat anomalous feature of this example is the fact that uniform priors on and do not lead to a prior on the AUC that is even close to uniform. In fact one could say that this prior has a built-in bias against a diagnostic with AUC and indeed most choices of and will not satisfy this. Another possibility is to require and namely, require monotonicity of the probabilities. A result in [22] implies that satisfies this iff where the standard -dimensional simplex, and with i-th row equal to and satisfies this iff where and where contains all 0’s except for 1’s on the crossdiagonal. If and are independent and uniform on then and are independent and uniform on the sets of probabilities satisfying the corresponding monotonicities and Figure 3 has a plot of the prior of the AUC when this is the case. It is seen that this prior is biased in favor of AUC Figure 3 also has a plot of the prior of the AUC when is uniform on the set of all nondecreasing probabilities and is uniform on This reflects a much more modest belief that X will satisfy AUC and indeed this may be a more appropriate prior than using uniform distributions on Ref. [22] also provides elicitation algorithms for choosing alternative Dirichlet distributions for and
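One way to realize the characterization attributed to [22] is to write a nondecreasing probability vector as a mixture of uniform distributions on the upper sets {j, ..., m}. The matrix A below is our own construction and may differ in form from the one in [22]. The sketch assumes that the diseased probabilities are nondecreasing and the nondiseased ones nonincreasing. It then compares the resulting prior probability of AUC > 1/2 with that under unrestricted uniform priors.

```python
import numpy as np


def uniform_nondecreasing(m, size, rng):
    """Uniform draws from {p in the simplex: p_1 <= ... <= p_m}.

    p = A q with q ~ Dirichlet(1, ..., 1); column j of A is the uniform
    distribution on {j, ..., m}. A is linear and invertible, so it carries the
    uniform distribution on the simplex to the uniform distribution on its image.
    """
    A = np.zeros((m, m))
    for j in range(m):
        A[j:, j] = 1.0 / (m - j)
    q = rng.dirichlet(np.ones(m), size)
    return q @ A.T


def uniform_nonincreasing(m, size, rng):
    # reversing the categories (the cross-diagonal permutation) flips monotonicity
    return uniform_nondecreasing(m, size, rng)[:, ::-1]


def discrete_auc(p_nd, p_d):
    """P(X_D > X_ND), one row per draw, ties not counted."""
    cdf_nd = np.cumsum(p_nd, axis=1)
    below = np.hstack([np.zeros((p_nd.shape[0], 1)), cdf_nd[:, :-1]])
    return np.sum(p_d * below, axis=1)


rng = np.random.default_rng(2)
m, n = 5, 100_000
p_d = uniform_nondecreasing(m, n, rng)
p_nd = uniform_nonincreasing(m, n, rng)
monotone = np.mean(discrete_auc(p_nd, p_d) > 0.5)
unif = np.mean(discrete_auc(rng.dirichlet(np.ones(m), n), rng.dirichlet(np.ones(m), n)) > 0.5)
print(f"prior P(AUC > 1/2): monotone {monotone:.3f}, unrestricted uniform {unif:.3f}")
```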

Figure 3.

Prior density of the AUC when is uniform on the set of nondecreasing probabilities independent of uniform on the set of nonincreasing probabilities (–) as well as when is uniformly distributed on the set of nondecreasing probabilities independent of uniform on (- -).

When AUC is accepted, it makes sense to use the conditional prior, given that this event is true, in the inferences. As such it is necessary to condition the prior on the event In general, it is not clear how to generate from this conditional prior but depending on the size of m and the prior, a brute force approach is to simply generate from the unconditional prior and select those samples for which the condition is satisfied and the same approach works with the posterior.

Here and using uniform priors for and , the prior probability of AUC is while the posterior probability is so the posterior sampling is much more efficient. Choosing priors that are more favorable to AUC will improve the efficiency of the prior sampling. Using the conditional priors led to AUC with with posterior content . This is similar to the results obtained using the unconditional prior but the conditional prior puts more mass on larger values of the AUC hence the wider plausible region with lower posterior content. Moreover, with with posterior probability content approximately (actually ) which reflects virtual certainty that the true optimal value is in
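The brute-force conditioning described above amounts to rejection sampling. The sketch below assumes Dirichlet priors and posteriors as in Example 2, with hypothetical counts. It reports the acceptance rate, which illustrates why sampling the conditioned posterior is cheaper than sampling the conditioned prior when the data support AUC > 1/2.

```python
import numpy as np


def discrete_auc(p_nd, p_d):
    """P(X_D > X_ND), one row per draw, ties not counted."""
    cdf_nd = np.cumsum(p_nd, axis=1)
    below = np.hstack([np.zeros((p_nd.shape[0], 1)), cdf_nd[:, :-1]])
    return np.sum(p_d * below, axis=1)


def conditional_draws(alpha_nd, alpha_d, n_keep, rng, batch=10_000, max_batches=1_000):
    """Rejection sampling from Dirichlet(alpha_nd) x Dirichlet(alpha_d) conditioned on AUC > 1/2.

    With alpha = 1 this samples the conditioned prior; with alpha = 1 + counts it
    samples the conditioned posterior. Returns kept draws and the acceptance rate.
    """
    kept_nd, kept_d, accepted, tried = [], [], 0, 0
    for _ in range(max_batches):
        p_nd = rng.dirichlet(alpha_nd, batch)
        p_d = rng.dirichlet(alpha_d, batch)
        ok = discrete_auc(p_nd, p_d) > 0.5
        kept_nd.append(p_nd[ok])
        kept_d.append(p_d[ok])
        accepted += int(ok.sum())
        tried += batch
        if accepted >= n_keep:
            break
    else:
        raise RuntimeError("acceptance rate too low; consider a prior favouring AUC > 1/2")
    return np.vstack(kept_nd)[:n_keep], np.vstack(kept_d)[:n_keep], accepted / tried


rng = np.random.default_rng(5)
counts_nd = np.array([30, 25, 20, 15, 10])      # hypothetical counts
counts_d = np.array([5, 10, 15, 30, 40])
ones = np.ones(5)
pr_nd, pr_d, acc_prior = conditional_draws(ones, ones, 20_000, rng)
po_nd, po_d, acc_post = conditional_draws(ones + counts_nd, ones + counts_d, 20_000, rng)
print(f"acceptance: prior {acc_prior:.3f}, posterior {acc_post:.3f}")
```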

3.3. Binormal Diagnostic

Suppose now that X is a continuous diagnostic variable and it is assumed that the distributions and are normal distributions. The assumption of normality should be checked by an appropriate test and it will be assumed here that this has been carried out and normality was not rejected. While the normality assumption may seem somewhat unrealistic, many aspects of the analysis can be expressed in closed form and this allows for a deeper understanding of ROC analyses more generally.

With denoting the cdf, then FNRFPR so and

For given and all these values can be computed using except the AUC and for that quadrature or simulation via generating is required.
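The binormal error characteristics can be evaluated with the normal cdf, and the AUC by simulation. This sketch uses only the Python standard library. It assumes the rule "classify as D when X > c" and the usual definitions of FDR and FNDR; the parameter values are arbitrary. For two independent normals the difference X_D − X_ND is itself normal, which gives a convenient check on the simulated AUC.

```python
import math
import random
from statistics import NormalDist


def binormal_errors(c, mu_nd, sd_nd, mu_d, sd_d, w):
    """Error characteristics at cutoff c, classifying as D when X > c (assumed rule)."""
    fnr = NormalDist(mu_d, sd_d).cdf(c)            # P(X_D <= c)
    fpr = 1.0 - NormalDist(mu_nd, sd_nd).cdf(c)    # P(X_ND > c)
    return {
        "FNR": fnr,
        "FPR": fpr,
        "Error": w * fnr + (1 - w) * fpr,
        "FDR": (1 - w) * fpr / (w * (1 - fnr) + (1 - w) * fpr),
        "FNDR": w * fnr / (w * fnr + (1 - w) * (1 - fpr)),
    }


def auc_by_simulation(mu_nd, sd_nd, mu_d, sd_d, n=200_000, seed=0):
    """Monte Carlo estimate of AUC = P(X_D > X_ND) for independent normals."""
    rng = random.Random(seed)
    hits = sum(rng.gauss(mu_d, sd_d) > rng.gauss(mu_nd, sd_nd) for _ in range(n))
    return hits / n


errs = binormal_errors(0.5, 0.0, 1.0, 1.0, 1.0, w=0.5)
print(errs)
auc_mc = auc_by_simulation(0.0, 1.0, 1.0, 1.0)
# cross-check: X_D - X_ND ~ N(mu_d - mu_nd, sd_d^2 + sd_nd^2)
auc_exact = NormalDist().cdf((1.0 - 0.0) / math.sqrt(1.0 + 1.0))
print(auc_mc, auc_exact)
```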

The following results hold for the AUC with the proofs in Appendix A.

Lemma 1.

AUC iff and when the AUC is a strictly increasing function of

From Lemma 1 it is clear that it makes sense to restrict the parameterization so that but we need to test the hypothesis first. Clearly $\text{Error} = w\,\text{FNR} + (1-w)\,\text{FPR} \to 1-w$ as $c \to -\infty$ and $\text{Error} \to w$ as $c \to \infty$, so, if Error does not achieve a minimum at a finite value of $c$, then the optimal cut-off is infinite and the optimal error is $\min(w, 1-w)$. It is possible to give conditions under which a finite cutoff exists and to express the optimal cutoff in closed form when the parameters and the relevant prevalence w are all known.
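The closed forms referred to here come from setting the derivative of Error to zero. The following sketch is our own derivation and not a transcription of (5) to (7). The sign of the derivative of Error equals the sign of a quadratic q(c), which is linear when the variances are equal, and a local minimum is a root at which q increases. The sketch assumes the rule "classify as D when X > c". Callers should still compare the value at the returned cutoff with the limits w and 1 − w.

```python
import math
from statistics import NormalDist


def optimal_cutoff(mu_nd, sd_nd, mu_d, sd_d, w):
    """Finite local minimiser of Error(c) = w*FNR(c) + (1-w)*FPR(c) for a binormal X,
    classifying as D when X > c (assumed). Returns None if no finite local minimum exists."""
    # Error'(c) has the sign of q(c) = a c^2 + b c + d, obtained from
    # log[w f_D(c)] - log[(1-w) f_ND(c)]; minima are where q crosses 0 upwards.
    a = 1.0 / sd_nd**2 - 1.0 / sd_d**2
    b = 2.0 * (mu_d / sd_d**2 - mu_nd / sd_nd**2)
    d = (mu_nd**2 / sd_nd**2 - mu_d**2 / sd_d**2
         - 2.0 * math.log((1 - w) * sd_d / (w * sd_nd)))
    if math.isclose(sd_nd, sd_d):
        # equal variances: q is linear and its root is a minimum iff mu_d > mu_nd
        if mu_d <= mu_nd:
            return None
        return 0.5 * (mu_nd + mu_d) + sd_d**2 * math.log((1 - w) / w) / (mu_d - mu_nd)
    disc = b * b - 4.0 * a * d
    if disc <= 0:
        return None
    for r in ((-b - math.sqrt(disc)) / (2 * a), (-b + math.sqrt(disc)) / (2 * a)):
        if 2 * a * r + b > 0:      # q increasing at r, so Error' changes from - to +
            return r
    return None


def error(c, mu_nd, sd_nd, mu_d, sd_d, w):
    return w * NormalDist(mu_d, sd_d).cdf(c) + (1 - w) * (1 - NormalDist(mu_nd, sd_nd).cdf(c))


c_star = optimal_cutoff(0.0, 1.0, 2.0, 1.5, 0.3)          # illustrative parameters
grid = [i / 1000 for i in range(-10_000, 10_001)]
c_grid = min(grid, key=lambda x: error(x, 0.0, 1.0, 2.0, 1.5, 0.3))
print(c_star, c_grid)
```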

Lemma 2.

(i) When then a finite optimal cut-off minimizing Error exists iff and in that case

(ii) When then a finite optimal cut-off exists iff

and in that case

Corollary 1.

The restrictions and (6) hold iff

Consider now examples with equal and unequal variances.

Example 3.

Binormal with

There may be reasons why the assumption of equal variance is believed to hold but this needs to be assessed and evidence in favor found. If evidence against the assumption is found, then the approach of Example 4 can be used. A possible prior is given by where

which is a conjugate prior. The hyperparameters to be elicited are Consider first eliciting the prior for For this an interval is specified such that it is believed that with virtual certainty (say with probability γ). Then putting implies

which implies where The interval will contain an observation from with virtual certainty and let be lower and upper bounds on the half-length of this interval so with virtual certainty. This implies This leaves specifying the hyperparameters and letting denote the cdf of the gamma distribution, then satisfying

will give the specified γ coverage. Noting that first specify and solve the first equation in (9) for and then solve the second equation in (9) for and continue this iteration until the probability content of is sufficiently close to γ. Using the posterior is then

where

Suppose the following values of the mss were obtained based on samples of from and from

So the true values of the parameters are In this case AUC Supposing that the relevant prevalence is FNR, FPR, Error, FDR, FNDR,

For the prior elicitation, suppose it is known with virtual certainty that both means lie in and so we take and the iterative process leads to For inference about it is necessary to specify a prior distribution for the prevalence This can range from w being completely known to being completely unknown, whence a uniform(0,1), that is beta(1,1), prior would be appropriate. Following the developments of Section 3.1, suppose it is known that with prior probability so in this case and and the prior is beta
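The iterative solution of the two equations in (9) can be sketched as follows. The assumptions are our reading of the text:

- 1/σ² has a gamma(α0, β0) prior with β0 a rate parameter.
- The bounds s1 ≤ σz ≤ s2, where z is the standard normal (1 + γ)/2 quantile, hold with probability γ.
- Each tail receives probability (1 − γ)/2.
- Each step is solved by bisection.

The incomplete gamma function is coded directly so that the block needs only the standard library. The values of s1 and s2 are hypothetical.

```python
import math
from statistics import NormalDist


def reg_lower_gamma(a, x):
    """Regularised lower incomplete gamma P(a, x): series for x < a + 1, else continued fraction."""
    if x <= 0.0:
        return 0.0
    log_pref = -x + a * math.log(x) - math.lgamma(a)
    if x < a + 1.0:
        term = total = 1.0 / a
        n = 0
        while abs(term) > 1e-15 * abs(total):
            n += 1
            term *= x / (a + n)
            total += term
        return total * math.exp(log_pref)
    tiny = 1e-300                               # modified Lentz evaluation of Q(a, x)
    b = x + 1.0 - a
    c, d = 1.0 / tiny, 1.0 / b
    h = d
    for i in range(1, 10_000):
        an = -i * (i - a)
        b += 2.0
        d = an * d + b
        d = tiny if abs(d) < tiny else d
        c = b + an / c
        c = tiny if abs(c) < tiny else c
        d = 1.0 / d
        h *= d * c
        if abs(d * c - 1.0) < 1e-15:
            break
    return 1.0 - math.exp(log_pref) * h


def solve_increasing(f, target, lo, hi, steps=100):
    """Bisection on a log scale for an increasing f with f(lo) < target < f(hi)."""
    for _ in range(steps):
        mid = math.sqrt(lo * hi)
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)


def elicit_precision_prior(s1, s2, gamma=0.99, alpha=1.0, tol=1e-8, max_iter=200):
    """Gamma(alpha, rate beta) prior for 1/sigma^2 giving P(s1/z <= sigma <= s2/z) = gamma."""
    z = NormalDist().inv_cdf((1 + gamma) / 2)
    x_hi, x_lo = (z / s1) ** 2, (z / s2) ** 2          # matching bounds for 1/sigma^2
    for _ in range(max_iter):
        # first equation: G(x_hi; alpha, beta) = (1 + gamma)/2, solved for beta
        beta = solve_increasing(lambda bb: reg_lower_gamma(alpha, bb * x_hi),
                                (1 + gamma) / 2, 1e-10, 1e10)
        # second equation: G(x_lo; alpha, beta) = (1 - gamma)/2, solved for alpha (G decreases in alpha)
        alpha = solve_increasing(lambda aa: -reg_lower_gamma(aa, beta * x_lo),
                                 -(1 - gamma) / 2, 1e-6, 1e6)
        content = reg_lower_gamma(alpha, beta * x_hi) - reg_lower_gamma(alpha, beta * x_lo)
        if abs(content - gamma) < tol:
            break
    return alpha, beta, content


alpha0, beta0, content = elicit_precision_prior(s1=1.0, s2=5.0)    # hypothetical bounds
print(alpha0, beta0, content)
```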

The first inference step is to assess the hypothesis AUC which is equivalent to by computing the prior and posterior probabilities of this event to obtain the relative belief ratio. The prior probability of given is

and averaging this quantity over the prior for we get The posterior probability of this event can be easily obtained via simulating from the joint posterior. When this is done in the specific numerical example, the relative belief ratio of this event is with posterior content so there is strong evidence that AUC is true.
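Simulating from the joint posterior is straightforward with the conjugate prior. This sketch assumes the hierarchy 1/σ² ~ gamma(α0, rate β0), with μ_ND and μ_D independent N(μ0, τ0²σ²) given σ, which is our reading of the prior above. Under it, μ_ND and μ_D are exchangeable a priori, so the prior probability of μ_D > μ_ND is exactly 1/2 and the relative belief ratio cannot exceed 2. The data are simulated and the hyperparameters are illustrative.

```python
import numpy as np


def posterior_draws_equal_var(x_nd, x_d, mu0, tau0, alpha0, beta0, n_mc=100_000, seed=0):
    """Draws of (mu_ND, mu_D, sigma) from the conjugate posterior, assuming
    1/sigma^2 ~ Gamma(alpha0, rate beta0) and mu_ND, mu_D | sigma iid N(mu0, tau0^2 sigma^2)."""
    rng = np.random.default_rng(seed)
    alpha_post, beta_post = alpha0, beta0
    stats = []
    for x in (np.asarray(x_nd, dtype=float), np.asarray(x_d, dtype=float)):
        n, xbar = x.size, x.mean()
        ss = np.sum((x - xbar) ** 2)
        alpha_post += n / 2
        beta_post += ss / 2 + n * (xbar - mu0) ** 2 / (2 * (1 + n * tau0**2))
        # mu | sigma, data ~ N(mean, var_scale * sigma^2)
        stats.append(((n * xbar + mu0 / tau0**2) / (n + 1 / tau0**2), 1 / (n + 1 / tau0**2)))
    sigma = 1 / np.sqrt(rng.gamma(alpha_post, 1 / beta_post, n_mc))
    (m_nd, v_nd), (m_d, v_d) = stats
    mu_nd = rng.normal(m_nd, np.sqrt(v_nd) * sigma)
    mu_d = rng.normal(m_d, np.sqrt(v_d) * sigma)
    return mu_nd, mu_d, sigma


rng = np.random.default_rng(3)
x_nd = rng.normal(0.0, 1.0, 100)     # hypothetical data
x_d = rng.normal(1.0, 1.0, 100)
mu_nd, mu_d, sigma = posterior_draws_equal_var(x_nd, x_d, mu0=0.0, tau0=2.0, alpha0=3.0, beta0=3.0)
prior_prob = 0.5                     # exchangeability of mu_ND and mu_D a priori
post_prob = np.mean(mu_d > mu_nd)
rb = post_prob / prior_prob
print(f"RB = {rb:.3f} (at most 2), posterior P(H) = {post_prob:.4f}")
```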

If evidence is found against then this would indicate a poor diagnostic. If evidence is found in favor, then we can proceed conditionally given that holds and so condition the joint prior and joint posterior on this event being true when making inferences about AUC, etc. So for the prior it is necessary to generate gamma and then generate from the joint conditional prior given and that Denoting the conditional priors given by and we see that this joint conditional prior is proportional to

While generally it is not possible to generate efficiently from this distribution we can use importance sampling to calculate any expectations by generating with serving as the importance sampling weight and where denotes the distribution conditioned to with density

for and 0 otherwise. Generating from this distribution via inversion is easy since the cdf is Note that, if we take the posterior from the unconditioned prior and condition that, we will get the same conditioned posterior as when we use the conditioned prior to obtain the posterior. This implies that in the joint posterior for it is only necessary to adjust the posterior for as was done with the prior and this is also easy to generate from. Note that Lemma 2 (i) implies that it is necessary to use the conditional prior and posterior to guarantee that exists finitely.
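The importance-sampling scheme just described can be sketched as follows. It uses the same assumed hierarchy: 1/σ² ~ gamma(α0, rate β0), with the means N(μ0, τ0²σ²) given σ. Each draw proceeds in three steps:

1. Generate σ and μ_ND from the prior.
2. Generate μ_D from the normal truncated to (μ_ND, ∞), by inversion.
3. Attach the importance weight 1 − Φ((μ_ND − μ0)/(τ0σ)).

Function names are hypothetical.

```python
import math
import random
from statistics import NormalDist


def conditioned_prior_is(mu0, tau0, alpha0, beta0, n, seed=0):
    """Importance-sampling draws (mu_ND, mu_D, sigma, weight) from the prior conditioned on mu_D > mu_ND."""
    rng = random.Random(seed)
    std = NormalDist()
    draws = []
    for _ in range(n):
        sigma = 1.0 / math.sqrt(rng.gammavariate(alpha0, 1.0 / beta0))  # 1/sigma^2 ~ Gamma(alpha0, rate beta0)
        scale = tau0 * sigma
        mu_nd = rng.gauss(mu0, scale)
        p0 = std.cdf((mu_nd - mu0) / scale)          # P(mu_D <= mu_ND | sigma, mu_ND)
        u = p0 + rng.random() * (1.0 - p0)           # uniform on (p0, 1)
        u = min(max(u, 1e-15), 1.0 - 1e-15)          # keep inv_cdf finite
        mu_d = mu0 + scale * std.inv_cdf(u)          # inversion of the truncated cdf
        draws.append((mu_nd, mu_d, sigma, 1.0 - p0)) # last entry: importance weight
    return draws


def weighted_mean(draws, g):
    """Self-normalised importance-sampling estimate of E[g | mu_D > mu_ND]."""
    num = sum(wt * g(mu_nd, mu_d, s) for mu_nd, mu_d, s, wt in draws)
    return num / sum(d[3] for d in draws)


draws = conditioned_prior_is(0.0, 1.0, 3.0, 3.0, 40_000)          # illustrative hyperparameters
est = weighted_mean(draws, lambda mu_nd, mu_d, s: mu_d - mu_nd)
print(f"E[mu_D - mu_ND | H] approx {est:.3f}")
```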

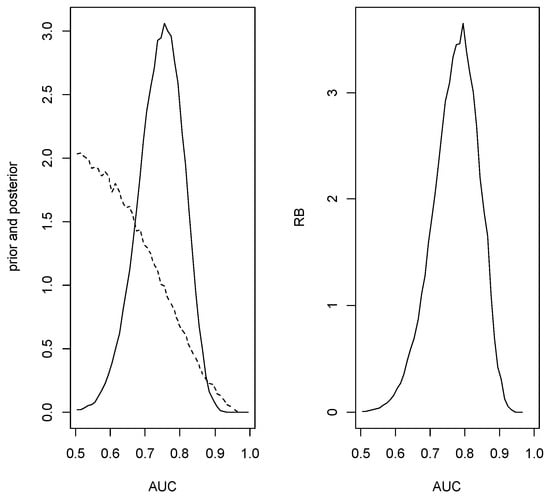

Since was accepted, the conditional sampling was implemented and the estimate of the AUC is with plausible region which has posterior content So the estimate is close to the true value but there is substantial uncertainty. Figure 4 is a plot of the conditioned prior, the conditioned posterior and relative belief ratio for this data.

Figure 4.

The conditioned prior (- -) and posterior (–) densities (left panel) and the relative belief ratio (right panel) of the AUC in Example 3.

With the specified prior for the posterior is beta which leads to estimate for w with plausible interval having posterior probability content Using this prior and posterior for w and the conditioned prior and posterior for we proceed to an inference about and the error characteristics associated with this classification. A computational problem arises when obtaining the prior and posterior distributions of as it is clear from (5) that these distributions can be extremely long-tailed. As such, we transform to (the Cauchy cdf), obtain the estimate where and its plausible region and then, applying the inverse transform, obtain and its plausible region. It is notable that relative belief inferences are invariant under 1-1 smooth transformations, so it does not matter which parameterization is used, but it is much easier computationally to work with a bounded quantity. Furthermore, if a shorter tailed cdf is used rather than a Cauchy, e.g., a cdf, then errors can arise due to extreme negative values being always transformed to 0 and very extreme positive values always transformed to 1. Figure 5 is a plot of the prior density, posterior density and relative belief ratio of For these data with plausible interval having posterior content Large Monte Carlo samples were used to get smooth estimates of the densities and relative belief ratio but these only required a few minutes of computer time on a desktop. The estimated error characteristics at this value of are as follows: FNR, FPR, Error, FDR, FNDR which are close to the true values.
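The transformation to a bounded scale is easy to sketch. The snippet below uses the Python standard library. It shows why the Cauchy cdf is preferred to a normal cdf in double precision. It also shows how an interval on the (0, 1) scale maps back to the cutoff scale through the inverse transform. The interval endpoints are hypothetical.

```python
import math
from statistics import NormalDist


def to_unit(c):
    """Cauchy cdf: maps the real line onto (0, 1) with very slowly decaying tails."""
    return 0.5 + math.atan(c) / math.pi


def from_unit(t):
    """Inverse of to_unit."""
    return math.tan(math.pi * (t - 0.5))


# A normal cdf saturates in double precision, so distinct extreme cutoffs collide:
print(NormalDist().cdf(-40.0), NormalDist().cdf(-400.0))   # both 0.0
print(to_unit(-40.0), to_unit(-400.0))                     # still distinguishable

# The map is increasing, so an interval found on the (0, 1) scale maps back
# to an interval for the cutoff by transforming its endpoints.
t_lo, t_hi = 0.55, 0.70          # hypothetical plausible interval on the unit scale
print(from_unit(t_lo), from_unit(t_hi))
```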

Figure 5.

Plots of the prior (- -), posterior (left panel) and relative belief ratio (right panel) of in Example 3.

Example 4.

Binormal with

In this case the prior is given by where

Although this specifies the same prior for the two populations, this is easily modified to use different priors and, in any case, the posteriors are different. Again it is necessary to check that the AUC but also to check that exists using the full posterior based on this prior and for this we have the hypothesis given by Corollary 1. If evidence in favor of is found, the prior is replaced by the conditional prior given this event for inference about This can be implemented via importance sampling as was done in Example 3 and similarly for the posterior.

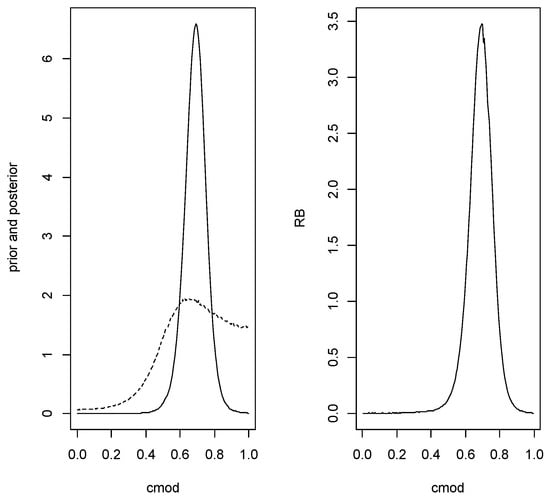

Using the same data and hyperparameters as in Example 3 the relative belief ratio of is with posterior content so there is reasonably strong evidence in favor of Estimating the value of the AUC is then based on conditioning on being true. Using the conditional prior given that is true, the relative belief estimate of the AUC is with plausible interval with posterior content The optimal cutoff is estimated as with plausible interval having posterior content Figure 6 is a plot of the prior density, posterior density and relative belief ratio of The estimates of the error characteristics at are as follows: FNR, FPR, Error, FDR, FNDR

Figure 6.

Plots of the prior (- -), posterior (left panel) and relative belief ratio (right panel) of in Example 4.

It is notable that these inferences are very similar to those in Example 3. It is also noted that the sample sizes are not big and so the only situation where it might be expected that the inferences will be quite different between the two analyses is when the variances are substantially different.

3.4. Nonparametric Bayes Model

Suppose that X is a continuous variable, of course still measured to some finite accuracy, and that the available information is such that no particular finite-dimensional family of distributions is considered feasible. We consider the situation where a normal distribution, perhaps after transforming the data, is a possible base distribution for X, but we want to allow for deviations from this form. Other choices can also be made for the base distribution. The statistical model then assumes that the and are generated as samples from and where these are independent values from a Dirichlet process (DP) with base for some and concentration parameter Actually, since it is difficult to argue for some particular choice of it is supposed that also has a prior The prior on is then specified hierarchically as a mixture Dirichlet process,

To complete the prior it is necessary to specify and the concentration parameters and For the prior is taken to be a normal distribution elicited as discussed in Section 3.3, although other choices are possible. For eliciting the concentration parameters, consider how strongly it is believed that normality holds and for convenience suppose If DP with H a probability measure, then and When F is a random measure from then which, when DP equals

where denotes the beta measure. This upper bound on the probability that the random F differs from H by at least on an event can be made as small as desirable by choosing a large enough. For example, if and it is required that this upper bound be less than then this is satisfied when and if instead , then is necessary. Note that, since this bound holds for every continuous probability measure, it also holds when H is random, as considered here. So a controls how close the true distribution is believed to be to H. Alternative methods for eliciting a can be found in [24,25].
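The bound (11) can also be evaluated numerically when choosing a. As we read the text, (11) is the worst case over events A of P(|F(A) − H(A)| ≥ ε) for F ~ DP(a, H) with H continuous. That worst case reduces to a supremum over p = H(A) of a beta(ap, a(1 − p)) tail probability. The sketch estimates that tail by Monte Carlo on a grid of p and compares it with the cruder Chebyshev bound 1/(4(a + 1)ε²). The values of ε and the target are illustrative.

```python
import numpy as np


def dp_deviation_bound(a, eps, n_mc=20_000, seed=0):
    """Monte Carlo estimate of sup_p P(|F(A) - p| >= eps) where F(A) ~ Beta(a p, a (1 - p)),
    i.e. the worst case over events A when F ~ DP(a, H) with H continuous."""
    rng = np.random.default_rng(seed)
    worst = 0.0
    for p in np.linspace(0.01, 0.99, 99):
        x = rng.beta(a * p, a * (1.0 - p), n_mc)
        worst = max(worst, float(np.mean(np.abs(x - p) >= eps)))
    return worst


def smallest_concentration(eps, target, candidates):
    """First candidate a (in increasing order) whose estimated bound is below target."""
    for a in sorted(candidates):
        if dp_deviation_bound(a, eps) < target:
            return a
    return None


eps = 0.25                                   # hypothetical tolerance
for a in (5, 10, 20, 50):
    # Monte Carlo worst case vs the Chebyshev bound p(1-p)/((a+1) eps^2) <= 1/(4(a+1)eps^2)
    print(a, dp_deviation_bound(a, eps), 1 / (4 * (a + 1) * eps**2))
print(smallest_concentration(eps, 0.05, (1, 2, 5, 10, 20, 50, 100)))
```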

Generating from the prior for given can only be done approximately, and the approach of [26] is adopted. For this, an integer is specified and the measure is generated where independent of since DP as So, to carry out a priori calculations, proceed as follows. Generate

and similarly for and Then is the random cdf at and similarly for , so AUC is a value from the prior distribution of the AUC. This is done repeatedly to get the prior distribution of the AUC as in our previous discussions and we proceed similarly for the other quantities of interest.

Now independent of with and is the empirical cdf (ecdf) based on and similarly for The posteriors of and are obtained via results in [27,28]. The posterior density of given is proportional to

where is the number of unique values in and is the set of unique values with mean and sum of squared deviations . From this it is immediate that

where A similar result holds for the posterior of
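The posterior of the base parameters depends on the data only through the unique values. It is therefore again of normal-gamma form, with n replaced by the number of unique values k. This sketch assumes the base prior is 1/σ² ~ gamma(α0, rate β0) with μ given σ ~ N(μ0, τ0²σ²). The function names are hypothetical.

```python
import numpy as np


def base_posterior(x, mu0, tau0, alpha0, beta0):
    """Normal-gamma posterior hyperparameters for the base parameters, computed from
    the k unique values of x (assumed prior: 1/sigma^2 ~ Gamma(alpha0, rate beta0),
    mu | sigma ~ N(mu0, tau0^2 sigma^2))."""
    u = np.unique(np.asarray(x, dtype=float))
    k = u.size
    ubar = u.mean()
    ss = float(np.sum((u - ubar) ** 2))
    return {
        "k": k,
        "alpha": alpha0 + k / 2,
        "beta": beta0 + ss / 2 + k * (ubar - mu0) ** 2 / (2 * (1 + k * tau0**2)),
        "mean": (k * ubar + mu0 / tau0**2) / (k + 1 / tau0**2),
        "var_scale": 1 / (k + 1 / tau0**2),    # mu | sigma, x ~ N(mean, var_scale * sigma^2)
    }


def draw_base(post, rng):
    """One posterior draw of (mu, sigma)."""
    sigma = 1.0 / np.sqrt(rng.gamma(post["alpha"], 1.0 / post["beta"]))
    mu = rng.normal(post["mean"], np.sqrt(post["var_scale"]) * sigma)
    return mu, sigma


post = base_posterior([1, 1, 2, 3, 3, 3], mu0=0.0, tau0=1.0, alpha0=2.0, beta0=1.0)
print(post)
```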

To approximately generate from the full posterior specify some put and generate

and similarly for and If the data do not comprise a sample from the full population, then the posterior for w is replaced by its prior.
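A posterior draw for one population can be sketched in the same way. Each atom comes from the base with probability a/(a + n) and from the ecdf otherwise, and the gamma shape becomes (a + n)/N. This reflects our reading of the construction above. (μ, σ) are passed in as a draw of the base parameters, and the data and values are hypothetical.

```python
import numpy as np


def posterior_dp_draw(x, a, N, mu, sigma, rng):
    """Approximate draw from DP(a + n, (a*H + n*F_n)/(a + n)), H = N(mu, sigma^2), F_n the ecdf of x.
    Returns atoms and weights."""
    x = np.asarray(x, dtype=float)
    n = x.size
    from_base = rng.random(N) < a / (a + n)          # atom from H with probability a/(a+n)
    z = np.where(from_base, rng.normal(mu, sigma, N), rng.choice(x, N))
    g = rng.gamma((a + n) / N, 1.0, N)
    return z, g / g.sum()


def auc_discrete_measures(z_nd, w_nd, z_d, w_d):
    """P(X_D > X_ND) for independent discrete measures (atoms z, weights w)."""
    order = np.argsort(z_nd)
    z_sorted, cum_w = z_nd[order], np.cumsum(w_nd[order])
    idx = np.searchsorted(z_sorted, z_d, side="left")
    below = np.where(idx > 0, cum_w[idx - 1], 0.0)
    return float(np.sum(w_d * below))


rng = np.random.default_rng(4)
x_nd = rng.normal(0.0, 1.0, 40).round(1)     # hypothetical, rounded data with repeats
x_d = rng.normal(1.0, 1.0, 30).round(1)
# (mu, sigma) are held fixed here for brevity; in the full scheme they are redrawn from their posterior
auc_post = [auc_discrete_measures(*posterior_dp_draw(x_nd, 5.0, 500, 0.0, 1.0, rng),
                                  *posterior_dp_draw(x_d, 5.0, 500, 1.0, 1.0, rng))
            for _ in range(1000)]
print(np.mean(auc_post))
```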

There is an issue that arises when making inference about namely, the distributions for that arise from this approach can be very irregular, particularly the posterior distribution. In part this is due to the discreteness of the posterior distributions of and . This does not affect the prior distribution because the points on which the generated distributions are concentrated vary quite continuously among the realizations and this leads to a relatively smooth prior density for For the posterior, however, the sampling from the ecdf leads to a very irregular, multimodal density for So some smoothing is necessary in this case.
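The smoothing used in Example 5 (averaging 3 consecutive values) can be sketched as a centred moving average over the grid. At the two ends only the available neighbours are averaged, and the result is renormalised. The endpoint rule and the renormalisation are our choices.

```python
import numpy as np


def smooth3(values):
    """Centred 3-point moving average; the two end points average only the neighbours they have."""
    v = np.asarray(values, dtype=float)
    if v.size < 3:
        return v.copy()
    kernel = np.ones(3)
    sums = np.convolve(v, kernel, mode="same")
    counts = np.convolve(np.ones_like(v), kernel, mode="same")
    return sums / counts


def smooth_masses(post_mass):
    """Smooth grid probabilities (e.g. the posterior of the cutoff) and renormalise to sum 1."""
    s = smooth3(post_mass)
    return s / s.sum()


print(smooth3([0.0, 3.0, 0.0, 0.0]))
print(smooth_masses([0.1, 0.5, 0.1, 0.3]))
```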

Consider now applying such an analysis to the dataset of Example 3, where we know the true values of the quantities of interest and then to a dataset concerned with the COVID-19 epidemic.

Example 5.

Binormal data (Examples 3 and 4)

The data used in Example 3 are now analyzed but using the methods of this section. The prior on and w is taken to be the same as that used in Example 4 so the variances are not assumed to be the same. The value is used and requiring (11) to be less than leads to So the true distributions are allowed to differ quite substantially from a normal distribution. Testing the hypothesis AUC led to the relative belief ratio (maximum possible value is 2) and the strength of the evidence is so there is strong evidence that is true. The AUC, based on the prior conditioned on being true, is estimated to be equal to with plausible interval having posterior content For these data with plausible interval having posterior content . The true value of the AUC is and the true value of is so these inferences are certainly reasonable although, as one might expect, when the lengths of the plausible intervals are taken into account, they are not as accurate as those obtained when binormality is assumed, as this is correct for these data. So the DP approach worked here although the posterior density for was quite multimodal and required some smoothing (averaging 3 consecutive values).

Example 6.

COVID-19 data.

A dataset was downloaded from https://github.com/YasinKhc/Covid-19 containing data on 3397 individuals diagnosed with COVID-19 and includes whether or not the patient survived the disease, their gender and their age. There are 1136 complete cases on these variables of which 646 are male, with 52 having died, and 490 are female, with 25 having died. Our interest is in the use of a patient’s age X to predict whether or not they will survive. More detail on this dataset can be found in [29]. The goal is to determine a cutoff age so that extra medical attention can be paid to patients beyond that age. Furthermore, it is desirable to see whether or not gender leads to differences so separate analyses can be carried out by gender. So, for example, in the male group ND refers to those males with COVID-19 that will not die and D refers to the population that will. Looking at histograms of the data, it is quite clear that binormality is not a suitable assumption and no transformation of the age variable seems to be available to make a normality assumption more suitable. Table 3 gives summary statistics for the subgroups. Of some note is that condition (8), when using standard estimates for population quantities such as for Males and for females, is not satisfied which suggests that in a binormal analysis no finite optimal cutoff exists.

Table 3.

Summary statistics for the data in Example 6.

For the prior, it is assumed that and are independent values from the same prior distribution as in (10). For the prior elicitation, as discussed in Example 3, suppose it is known with virtual certainty that both means lie in and so we take and the iterative process leads to which implies a prior on the σ’s with mode at and the interval containing of the prior probability. Here the relevant prevalence refers to the proportion of COVID-19 patients that will die and it is supposed that with virtual certainty which implies beta So the prior probability that someone with COVID-19 will die is assumed to be less than 15% with virtual certainty. Since normality is not an appropriate assumption for the distribution of the choice with the upper bound (11) equal to seems reasonable and so This specifies the prior that is used for the analysis with both genders and it is to be noted that it is not highly informative.

For males the hypothesis AUC is assessed and (maximum value 2) with strength effectively equal to was obtained, so there is extremely strong evidence that this is true. The unconditional estimate of the AUC is with plausible region having posterior content so there is a fair bit of uncertainty concerning the true value. For the conditional analysis, given that AUC the estimate of the AUC is with plausible region having posterior content So the conditional analysis gives a similar estimate for the AUC with a small increase in accuracy. In either case it seems that the AUC is indicating that age should be a reasonable diagnostic. Note that the standard nonparametric estimate of the AUC is so the two approaches agree here. For females the hypothesis AUC is assessed and with strength effectively equal to 1 was obtained, so there is extremely strong evidence that this is true. The unconditional estimate of the AUC is with plausible region having posterior content . For the conditional analysis, given that AUC the estimate of the AUC is with plausible region having posterior content The traditional estimate of the AUC is so the two approaches are again in close agreement.
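For comparison with the relative belief estimates, the standard nonparametric estimate of the AUC is usually the Mann–Whitney statistic. This sketch assumes ties are counted as 1/2; the text does not say which variant was used. The values are hypothetical.

```python
import numpy as np


def auc_mann_whitney(x_nd, x_d):
    """Nonparametric AUC estimate: share of (D, ND) pairs with X_D > X_ND, ties counted 1/2."""
    x_nd = np.asarray(x_nd, dtype=float)
    x_d = np.asarray(x_d, dtype=float)
    diff = x_d[:, None] - x_nd[None, :]          # all n_D x n_ND pairwise differences
    return float(np.mean(diff > 0) + 0.5 * np.mean(diff == 0))


auc_hat = auc_mann_whitney([1, 2, 3], [2, 4])   # toy values
print(auc_hat)
```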

Inferences for are more problematical in both genders. Consider the male data. The data set is very discrete as there are many repeats and the approach samples from the ecdf about 84% of the time for the males that died and 98% of the time for the males that did not die. The result is a plausible region that is not contiguous even with smoothing. Without smoothing the estimate is for males, which is a very dominant peak for the relative belief ratio. The plausible region contains of the posterior probability and, although it is not a contiguous interval, the subinterval is a -credible interval for that is in agreement with the evidence. If we make the data continuous by adding a uniform(0,1) random error to each age in the data set, then and plausible interval with posterior content is obtained. These cutoffs are both greater than the maximum value in the ND data, so there is ample protection against false positives but it is undoubtedly false negatives that are of most concern in this context. If instead the FNDR is used as the error criterion to minimize, then and plausible interval with posterior content is obtained and so in this case there will be too many false positives. So a useful optimal cutoff incorporating the relevant prevalence does not seem to exist with these data.
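The share of posterior atoms drawn from the ecdf is n/(a + n), which is why the repeats in the data dominate. The sketch evaluates this share and applies the uniform(0,1) jitter mentioned above. The value a = 10 is an illustrative choice made here, not one stated in the text. The counts 52 and 594 are the male deaths and survivors implied by the counts given in Example 6.

```python
import numpy as np


def ecdf_share(n, a):
    """Probability that a posterior atom is drawn from the ecdf rather than from the base H."""
    return n / (a + n)


def jitter(ages, rng):
    """Add independent uniform(0, 1) noise to each integer-valued age to break ties."""
    ages = np.asarray(ages, dtype=float)
    return ages + rng.uniform(0.0, 1.0, ages.size)


a = 10.0                                   # illustrative concentration, not from the text
print(round(ecdf_share(52, a), 3), round(ecdf_share(594, a), 3))   # males: died, survived
rng = np.random.default_rng(0)
ages = np.array([70, 70, 70, 81, 81, 65])  # hypothetical repeated ages
print(np.unique(jitter(ages, rng)).size)   # number of distinct values after jittering
```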

If the relevant prevalence is ignored and a weighted error, a convex combination of FNR and FPR with some fixed weight, is used to determine , then more reasonable values are obtained. Table 4 gives the estimates for various values. With (corresponding to using Youden’s index) while if then When is too small or too large then the value of is not useful. While these estimates do not depend on the relevant prevalence, the error characteristics that do depend on this prevalence (as expressed via its prior and posterior distributions) can still be quoted and a decision made as to whether or not to use the diagnostic. Table 5 contains the estimates of the error characteristics at for various values of where these are determined using the prior and posterior on the relevant prevalence Note that these estimates are determined as the values that maximize the corresponding relative belief ratios and take into account the posterior of So, for example, the estimate of the Error is not the convex combination of the estimates of FNR and FPR based on the weight. Another approach is to simply set the cutoff Age at a value and then investigate the error characteristics at that value. For example, with then the estimated values are given by FNR FPR Error FDR and FNDR
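The prevalence-free cutoff can be sketched from the empirical cdfs by minimizing α FNR + (1 − α) FPR over the observed values. With α = 1/2 this is equivalent to maximizing Youden's index J = 1 − FNR − FPR. The weight symbol α, the rule "classify as D when X > c" and the ages are assumptions for illustration. This is a plug-in estimate, not the relative belief estimate used in Table 4.

```python
import numpy as np


def weighted_cutoff(x_nd, x_d, alpha):
    """Empirical cutoff minimising alpha*FNR(c) + (1 - alpha)*FPR(c),
    classifying as D when X > c (assumed rule). Returns (c, FNR, FPR)."""
    x_nd = np.sort(np.asarray(x_nd, dtype=float))
    x_d = np.sort(np.asarray(x_d, dtype=float))
    values = np.unique(np.concatenate([x_nd, x_d]))
    cands = np.concatenate([[values[0] - 1.0], values])                  # include "everyone positive"
    fnr = np.searchsorted(x_d, cands, side="right") / x_d.size           # share of D with X <= c
    fpr = 1.0 - np.searchsorted(x_nd, cands, side="right") / x_nd.size   # share of ND with X > c
    err = alpha * fnr + (1.0 - alpha) * fpr
    i = int(np.argmin(err))
    return cands[i], fnr[i], fpr[i]


def youden_cutoff(x_nd, x_d):
    # alpha = 1/2: minimising (FNR + FPR)/2 is the same as maximising J = 1 - FNR - FPR
    return weighted_cutoff(x_nd, x_d, 0.5)


ages_nd = [40, 52, 61, 70]       # hypothetical survivors
ages_d = [74, 78, 83, 90]        # hypothetical deaths
print(youden_cutoff(ages_nd, ages_d))
print(weighted_cutoff(ages_nd, ages_d, 0.9))
```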

Table 4.

Weighted error (a fixed-weight convex combination of FNR and FPR) determining the optimal cutoff for Males in Example 6.

Table 5.

Error characteristics for Males in Example 6 at various weights.

Similar results are obtained for the cutoff with female data although with different values. Overall, Age by itself does not seem to be a useful classifier, although that is a decision for medical practitioners. Perhaps it is more important to treat those who stand a significant chance of dying more extensively and not worry too much that some treatments are not necessary. The clear message from these data, however, is that a relatively high AUC does not immediately imply that a diagnostic is useful and the relevant prevalence is a key aspect of this determination.

4. Conclusions

ROC analyses represent a significant practical application of statistical methodology. While previous work has considered such analyses within a Bayesian framework, this has typically required the specification of loss functions. Losses are not required in the approach taken here which is entirely based on a natural characterization of statistical evidence via the principle of evidence and the relative belief ratio. As discussed in Section 2.2 this results in a number of good properties for the inferences that are not possessed by inferences derived by other approaches. While the Bayes factor is also a valid measure of evidence, its usage is far more restricted than the relative belief ratio which can be applied with any prior, without the need for any modifications, for both hypothesis assessment and estimation problems. This paper has demonstrated the application of relative belief to ROC analyses under a number of model assumptions. In addition, as documented in points (ii)–(vi) of the Introduction, a number of new results have been developed for ROC analyses more generally.

Author Contributions

Methodology, L.A.-L. and M.E.; Investigation, Q.L.; Writing—original draft, M.E.; Supervision, M.E. All authors have read and agreed to the published version of the manuscript.

Funding

Evans was supported by grant 10671 from the Natural Sciences and Engineering Research Council of Canada.

Data Availability Statement

The data and R code used for the examples in Section 3.2, Section 3.3 and Section 3.4 can be obtained at https://utstat.utoronto.ca/mikevans/software/ROCcodeforexamples.zip (accessed on 15 November 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Lemma 1.

Consider as a function of so

When then is increasing in b for , decreasing in b for equals 0 when and when it is decreasing in b for , increasing in b for Therefore, when then and when then □

Proof of Lemma 2.

Note that will satisfy

which implies

So is a root of the quadratic . A single real root exists when and is given by (5).

If , then there are two real roots when the discriminant

establishing (6). To be a minimum the root c has to satisfy

and by (A1), this holds iff

which is true iff When this is true iff which completes the proof of (i). When this, together with the formula for the roots of a quadratic establishes (7). □

Proof of Corollary 1.

Suppose and (6) hold. Then putting

we have that, for fixed and then is a quadratic in This quadratic has discriminant and so has no real roots whenever and, noting a does not depend on the only restriction on is When the roots of the quadratic are given by and so, since the quadratic is negative between the roots and the two restrictions imply Combining the two cases gives (8).

References

- Metz, C.; Pan, X. “Proper” binormal ROC curves: Theory and maximum-likelihood estimation. J. Math. Psychol. 1999, 43, 1–33.

- Perkins, N.J.; Schisterman, E.F. The inconsistency of “optimal” cutpoints obtained using two criteria based on the Receiver Operating Characteristic Curve. Am. J. Epidemiol. 2006, 163, 670–675.

- López-Ratón, M.; Rodríguez-Álvarez, M.X.; Cadarso-Suárez, C.; Gude-Sampedro, F. OptimalCutpoints: An R package for selecting optimal cutpoints in diagnostic tests. J. Stat. Softw. 2014, 61, 8.

- Unal, I. Defining an optimal cut-point value in ROC analysis: An alternative approach. Comput. Math. Methods Med. 2017, 2017, 3762651.

- Verbakel, J.Y.; Steyerberg, E.W.; Uno, H.; De Cock, B.; Wynants, L.; Collins, G.S.; Van Calster, B. ROC plots showed no added value above the AUC when evaluating the performance of clinical prediction models. J. Clin. Epidemiol. 2020, in press.

- Hand, D. Measuring classifier performance: A coherent alternative to the area under the ROC curve. Mach. Learn. 2009, 77, 103–123.

- Evans, M. Measuring Statistical Evidence Using Relative Belief. In Monographs on Statistics and Applied Probability; CRC Press: Boca Raton, FL, USA; Taylor & Francis: Abingdon, UK, 2015; Volume 144.

- O’Malley, A.J.; Zou, K.H.; Fielding, J.R.; Tempany, C.M.C. Bayesian regression methodology for estimating a receiver operating characteristic curve with two radiologic applications: Prostate biopsy and spiral CT of ureteral stones. Acad. Radiol. 2001, 8, 5407–5420.

- Gu, J.; Ghosal, S.; Roy, A. Bayesian bootstrap estimation of ROC curve. Stat. Med. 2008, 27, 5407–5420.

- Erkanli, A.; Sung, M.; Costello, E.J.; Angold, A. Bayesian semi-parametric ROC analysis. Stat. Med. 2006, 25, 3905–3928.

- De Carvalho, V.; Jara, A.; Hanson, T.E.; de Carvalho, M. Bayesian nonparametric ROC regression modeling. Bayesian Anal. 2013, 8, 623–646.

- Ladouceur, M.; Rahme, E.; Belisle, P.; Scott, A.; Schwartzman, K.; Joseph, L. Modeling continuous diagnostic test data using approximate Dirichlet process distributions. Stat. Med. 2011, 30, 2648–2662.

- Christensen, R.; Johnson, W.; Branscum, A.; Hanson, T.E. Bayesian Ideas and Data Analysis; Chapman and Hall/CRC: Boca Raton, FL, USA, 2011.

- Rosner, G.L.; Laud, P.W.; Johnson, W.O. Bayesian Thinking in Biostatistics; Chapman and Hall/CRC: Boca Raton, FL, USA, 2021.

- Diab, A.; Hassan, M.; Marque, C.; Karlsson, B. Performance analysis of four nonlinearity analysis methods using a model with variable complexity and application to uterine EMG signals. Med. Eng. Phys. 2014, 36, 761–767.

- Gao, X.-Y.; Guo, Y.-J.; Shan, W.-R. Regarding the shallow water in an ocean via a Whitham-Broer-Kaup-like system: Hetero-Bäcklund transformations, bilinear forms and M solitons. Chaos Solitons Fractals 2022, 162, 112486.

- Gao, X.-T.; Tian, B. Water-wave studies on a (2+1)-dimensional generalized variable-coefficient Boiti–Leon–Pempinelli system. Appl. Math. Lett. 2022, 128, 107858.

- Obuchowski, N.; Bullen, J. Receiver operating characteristic (ROC) curves: Review of methods with applications in diagnostic medicine. Phys. Med. Biol. 2018, 63, 07TR01.

- Zhou, X.; Obuchowski, N.; McClish, D. Statistical Methods in Diagnostic Medicine, 2nd ed.; Wiley: Hoboken, NJ, USA, 2011.

- Al-Labadi, L.; Evans, M. Optimal robustness results for some Bayesian procedures and the relationship to prior-data conflict. Bayesian Anal. 2017, 12, 702–728.

- Gu, Y.; Li, W.; Evans, M.; Englert, B.-G. Very strong evidence in favor of quantum mechanics and against local hidden variables from a Bayesian analysis. Phys. Rev. A 2019, 99, 022112.

- Englert, B.-G.; Evans, M.; Jang, G.-H.; Ng, H.-K.; Nott, D.; Seah, Y.-L. Checking the model and the prior for the constrained multinomial. arXiv 2018, arXiv:1804.06906.

- Evans, M.; Guttman, I.; Li, P. Prior elicitation, assessment and inference with a Dirichlet prior. Entropy 2017, 19, 564.

- Swartz, T. Subjective priors for the Dirichlet process. Commun. Stat. Theory Methods 1993, 22, 2999–3011.

- Swartz, T. Nonparametric goodness-of-fit. Commun. Stat. Theory Methods 1999, 28, 2821–2841.

- Ishwaran, H.; Zarepour, M. Exact and approximate sum representations for the Dirichlet process. Can. J. Stat. 2002, 30, 269–283.

- Antoniak, C.E. Mixtures of Dirichlet processes with applications to Bayesian nonparametric problems. Ann. Stat. 1974, 2, 1152–1174.

- Doss, H. Bayesian Nonparametric Estimation for Incomplete Data Via Successive Substitution Sampling. Ann. Stat. 1994, 22, 1763–1786.

- Charvadeh, Y.K.; Yi, G.Y. Data visualization and descriptive analysis for understanding epidemiological characteristics of COVID-19: A case study of a dataset from January 22, 2020 to March 29, 2020. J. Data Sci. 2020, 18, 526–535.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).