Abstract

Bounds are developed on the maximum communications rate between a transmitter and a fusion node aided by a cluster of distributed receivers with limited resources for cooperation, all in the presence of an additive Gaussian interferer. The receivers cannot communicate with one another and can only convey processed versions of their observations to the fusion center through a Local Array Network (LAN) with limited total throughput. The effectiveness of each bound’s approach for mitigating a strong interferer is assessed over a wide range of channels. It is seen that, if resources are shared effectively, even a simple quantize-and-forward strategy can mitigate an interferer 20 dB stronger than the signal in a diverse range of spatially Ricean channels. Monte-Carlo experiments for the bounds reveal that, while achievable rates are stable when varying the receiver’s observed scattered-path to line-of-sight signal power, the receivers must adapt how they share resources in response to this change. The bounds analyzed are proven to be achievable and are seen to be tight with capacity when LAN resources are either ample or limited.

1. Introduction

We are at a remarkable time for radio systems. With the significant reductions in cost and improvements in performance, there has been an explosion in the number of radio systems with a wide range of applications including personal, machine-to-machine, vehicle-to-vehicle, and Internet-of-Things (IoT) communications. In many situations, radios cluster in physically close groups. This has two effects. First, it increases the likelihood of interference between systems and necessitates that systems be designed to maintain good performance in these conditions. Second, it creates an opportunity for groups of independent radios to be used as a distributed array to improve performance. Considering this new setting, we study the problem of receiving a signal in the presence of interference with the help of a distributed array, as illustrated in Figure 1.

Figure 1.

A broadcast node speaks to many helper nodes, which forward limited amounts of information to a base node. Additive interference from neighboring systems is present in the link from broadcaster to helpers.

We develop bounds on the maximum communications rate from a single transmitter to a base node that is provided side information from a distributed receive array in the presence of interference. The nodes that provide side information to the base are called helpers. We assume that helpers do not communicate with one another and only forward information to the base through a previously established, reliable Local Array Network (LAN) link. This link supports a maximum total throughput that all the helpers must share.

This receive system can mitigate a strong interferer in a wide variety of environments. We consider the effects of an overall LAN capability that is parameterized by a total number of bits that helpers can share with the base for each source channel usage. Furthermore, to investigate algorithmic needs as a function of environmental conditions, we consider a spatially Ricean channel and a parametrically defined interference. Finally, we consider both the conditions in which the base node does and does not have its own noisy observation of the source signal. Our bound development in this paper provides significant extensions to and clarifications of the results found in References [1,2], and our preliminary efforts in Reference [3].

1.1. Results

We perform an analysis of the achievable rates of the system in the presence of an interferer. The following contributions are provided:

- Upper and lower bounds on the system’s achievable communication rate in correlated Gaussian noise and regimes where these bounds are tight (Section 3). The strongest lower bound is given in Theorem 7.

- Performance characteristics of these rates in the presence of an interferer (Section 4.1).

- Behavior of the strategies in various scattering environments (Section 4.2). The scattering environment is seen to not affect average performance if LAN resource sharing adapts to the channel.

- A strengthening of an existing achievability proof for the system, where the same rate is achieved using less cooperation between nodes (Remark 7).

1.2. Background

The problem of communicating with the help of an array of distributed receivers has been studied in a variety of contexts, most generally as an instance of communications over a single-input multiple-output (SIMO) channel. A variety of SIMO channels have been extensively analyzed, but most analyses are subtly different from the problem considered here. Our work is done in the context of information theory and bounds achievable communications rates, whereas most existing studies work towards minimizing distortion metrics or bit error rates.

Results presented here are a significant extension and generalization of the work in [3], where there is no treatment of the system’s performance in the presence of an interferer. A tighter inner bound than all those in [3] is included in Theorem 7, and full proofs are provided for all the results.

Achievable communications rates for this network topology were studied in References [1,2], although their bounds do not directly apply to channels with a Gaussian interferer. The studies provide an example demonstrating the sub-optimality of a Gaussian broadcaster when no interference is present, and suggest that non-Gaussian signaling techniques are needed for this sort of network. In contrast, we demonstrate in Section 4 that an achievable rate using Gaussian signaling typically comes quite close to the system’s upper bound in many practical regimes.

The helpers in the system we have described resemble relay nodes, but there is an important distinction. Here, the links from each helper to the base are like those in a graphical network, available for design within distributional and rate constraints. This is not usual in the context of relay networks, including those studied in very general settings such as Reference [4]. Studies such as References [5,6,7] detailed using a collection of receivers as beamforming relays to a destination node in a network with structure similar to the system considered here. In our situation, each helper-to-base link is orthogonal to the others, so beamforming studies are not directly applicable.

Performance of specific coding schemes for this system was studied in References [8,9,10]. In particular, [10] identified a scheme that performs to within 1.5 dB of a system in which the base and receivers communicate without constraints. Results in our study work further towards characterizing achievable rates of the system rather than designing and analyzing the performance of specific modulation schemes.

The topology we consider is similar to many-help-one problems such as the “Chief Executive Officer (CEO) problem” posed by Berger in Reference [11]. In this scenario, some node called the CEO seeks to estimate a source by listening to “agent” nodes which communicate to the CEO at fixed total rates. Many variations of this problem have been studied, for instance in Reference [12] in the limit as the number of agents grows. The focus of the CEO problem and most of its derivatives is to estimate a source to within some distortion (often mean squared error), whereas the focus here is on finding achievable rates for lossless communications.

The noisy Slepian–Wolf problem [13] may be interpreted as communications in the opposite direction of our system: distributed, cooperative helpers have a message for a base, but their cooperation ability is limited and their modulations must be sent over a noisy channel.

2. Problem Setup

Throughout the paper, we use the notation in Table 1. A broadcaster seeks to convey a message to a base at some rate $R$ bits per time period. The message $M$ is uniformly distributed over $\{1, \dots, 2^{TR}\}$ and is sent over some $T$ time periods. Our goal is to determine the greatest rate $R$ at which the base can recover $M$ with low probability of error.

Table 1.

Notation and terminology.

Before communicating, the broadcaster and base agree on a modulation scheme , where each possible message is mapped to a T-length signal through . Each of these signals is denoted , and it is chosen within the broadcaster’s power constraint: . To send message M, the broadcaster transmits , which we denote .

The signal is observed by $N+1$ single-antenna receive nodes through a static flat fading channel and additive white Gaussian noise. Enumerating these receivers 0–N, we identify the base as the “0th” receiver, and call the rest helpers. For $n \in \{0, \dots, N\}$, at each time $t$, the $n$th receiver observes

$$Y_{n,t} = h_n X_t + W_{n,t} \tag{1}$$

where

- the channel $h_n$ is constant over time $t$, and

- the noise $(W_{0,t}, \dots, W_{N,t})$ has covariance $\Sigma$ across receivers, and is independent over $t$.

The receiver observations are written as vectors:

Helper n for employs a (possibly randomly chosen) vector quantizer on its received sequence to produce a coarse -bitrate summary of its observations, . The vector of helper messages is denoted:

and the quantizers satisfy two properties:

- Every node in the system is informed of each quantizer’s behavior, and quantizers produce their compression using only local information. Formally, the probability distribution of factors:

- Quantizations are within the LAN constraint:

The vector of the helper’s quantization rates is denoted:

Each is conveyed precisely to the base over a Local Array Network (LAN). We assume the LAN only supports a limited amount of communication between helpers and the base. For instance, the helpers must all share a small frequency band. We model this by asserting that feasible rate vectors belong to a bounded set with a sum-capacity :

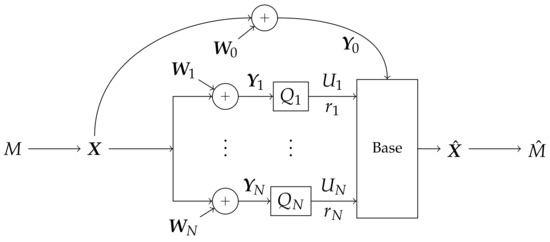

We refer to the condition that as the LAN constraint. The base reconstructs an estimate of using its own full-precision observation and side information from the helpers, then recovers an estimate of message M from . A block diagram of the system is shown in Figure 2.

Figure 2.

The system in consideration. A message M is broadcast as with average power 1. The signal is received by a base and N helper nodes, occluded by correlated AWGN . Helper n for quantizes through to produce an -bit summary . The quantized observations and the base’s full-precision reception are combined to produce an estimate of , then an estimate of M.

Channel and noise covariance are assumed to be static throughout the transmission of each message and are known to all the receive nodes. Both the transmitter and the receivers are assumed to have knowledge of the set of feasible rates, . The environment determines:

- channel fades ,

- noise covariance and

- maximum LAN throughput L.

Available for design are:

- quantizers ,

- message modulation ,

- rates each helper should send to the base and

- fusion and decoding methods at the base to produce .

We say a communication rate R is achievable if for any then for large enough T there is some distribution of quantizers satisfying for each k, encoders and decoders where:

Theorem 1.

Proof.

C is achievable through the usual noisy channel coding argument [14]. For any achievable rate R, applying Fano’s inequality to gives . □

In the sequel, we bound C from Theorem 1 with computable expressions.

3. Bounds on Communications Rates

The system’s capacity C can be upper bounded by considering a stronger channel where all helpers are informed of each other’s observations. Compute:

where the first inequality follows since is a Markov chain (Equation (6)). If the LAN constraint were not imposed and the base had access to the helpers’ receptions in full precision, the receive side would be equivalent to a multi-antenna Gaussian receiver. By noisy channel coding [15], the capacity of this stronger channel is (here, are variables at a single timepoint) which, by a derivation given in [16], simplifies to the familiar upper bound:

Taking the minimum of this bound and Equation (14) yields an upper bound for the original system. This is an instance of a general cut-set upper bound from [17].

Remark 1.

Any achievable rate R must satisfy

Proof.

Justified by the preceding discussion. □
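As a rough numerical illustration of the upper bound in Remark 1, the following sketch evaluates the smaller of the full-precision multi-antenna rate and the base-only rate plus the LAN throughput. It assumes a complex-valued channel with unit transmit power, rates in bits per channel use, and the base stored at index 0 of the channel vector; the function and argument names are hypothetical and do not come from the paper's supplementary code.

```python
import numpy as np

def cut_set_upper_bound(h, sigma, lan_bits):
    """Sketch of min(full-cooperation rate, base-only rate + L).

    h        : complex channel vector, index 0 is the base (assumption)
    sigma    : Hermitian positive-definite noise covariance across receivers
    lan_bits : LAN sum-throughput L in bits per channel use
    """
    h = np.asarray(h, dtype=complex)
    sigma = np.asarray(sigma, dtype=complex)
    # Rate if the base saw every receiver's observation in full precision.
    snr_full = np.real(np.vdot(h, np.linalg.solve(sigma, h)))
    full_precision = np.log2(1.0 + snr_full)
    # Rate from the base's own observation plus at most L bits of help.
    snr_base = abs(h[0]) ** 2 / np.real(sigma[0, 0])
    lan_limited = np.log2(1.0 + snr_base) + lan_bits
    return min(full_precision, lan_limited)
```

With a base that has no reception of its own (`h[0] = 0`), the bound reduces to the smaller of L and the full-precision rate, which matches the regime discussed for small L.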

The rest of the section is dedicated to four communication strategies for the system in order of increasing complexity and diversity utilization. The strongest bound is presented in Theorem 7. It is described using the context of the preceding two bounds.

3.1. Achievable Rate by Decoding and Forwarding

Treating each helper node as a user seeking to receive its own message, the link from the broadcaster to helpers is a scalar Gaussian broadcast channel. The capacity region of this channel was characterized in Reference [18], and in particular its sum-rate was given in Reference [19]. In the scalar case, this sum rate reduces to:

where only the point-to-point channel between transmitter and the best receiver is used.

Remark 2.

The following rate is achievable

The rate is achievable with the following facilities:

- Helpers are informed of which helper is the best (best is derived from ).

- The transmitter and best helper coordinate a codebook of appropriate rate and distribution.

Proof.

By having the broadcaster send a message to the nth receiver, a rate

is achievable from transmitter-to-receiver. If (i.e., the transmitter broadcasts to the base), then Equation (19) is achievable from transmitter-to-base. Otherwise, Equation (19) is achievable from transmitter-to-base if it is less than L by forwarding the receiver’s decoding over the LAN. □
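The argument in this proof can be sketched numerically as follows. The snippet assumes unit transmit power, a complex-valued channel, per-receiver noise variances taken from the diagonal of the noise covariance, and the base at index 0; a helper's decoded message is forwarded at a rate capped by the LAN throughput. All names are illustrative.

```python
import numpy as np

def decode_and_forward_rate(h, noise_var, lan_bits):
    """Best single-receiver rate: the base decodes directly, or one helper
    decodes and forwards its decoding over the LAN (capped at L bits)."""
    h = np.asarray(h, dtype=complex)
    noise_var = np.asarray(noise_var, dtype=float)
    # Point-to-point rate from the transmitter to each receiver.
    p2p = np.log2(1.0 + np.abs(h) ** 2 / noise_var)
    base_rate = p2p[0]
    # A helper can pass on no more than L bits per channel use.
    helper_rates = np.minimum(p2p[1:], lan_bits)
    return max(base_rate, helper_rates.max(initial=0.0))
```

Only the strongest link matters here, which is why this strategy gains little from additional helpers once one good receiver is available.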

Remark 3.

When the base does not have its own reception (i.e., ) and

then the system’s capacity is L.

Proof.

By assumption, the upper bound in Equation (16) equals . This is achievable by Remark 2. □

The strategy to achieve this rate requires a high degree of cooperation from the broadcaster, since the decoding receivers must all share codebooks with the transmitter.

3.2. Achievable Rate through Gaussian Distortion

Rate-distortion theory shows that to encode a Gaussian source with minimum rate such that distortion does not exceed some maximum allowable mean squared error , the rate must be at least

To achieve this rate, the encoding operation must emulate the following test channel (Reference [14]):

where and . Dithered lattice quantization [20] is one method that can realize such a quantizer in the limit of large block length, and it has other convenient analytic properties. Using this method, in the limit of large block length, the base’s estimate of a helper’s observation at any single timepoint can be made to approximate

where is independent Gaussian distortion with variance some function of the helper’s rate . We will now derive this function. Define

and the vector of s corresponding to this choice of as:

The subscript Q here is a decoration to distinguish quantization distortion terms from environment noise,

If the nth helper is to forward information to the base at rate then the amount of distortion in the helper’s encoding under this strategy can be determined by setting Equation (21) equal to and solving for , with equal to the helper observation’s variance, . Scaling the output of the test channel the helper emulates (Equation (22)) by causes it to equal the helper’s observation plus independent Gaussian noise with variance . This means that, in such a system, the distortion from the helpers is equivalent to adding independent additive Gaussian noise with variance:

By Equations (1) and (26), all the noise and distortion on the signal present in the helpers’ messages to the base are summarized by the vector with covariance matrix

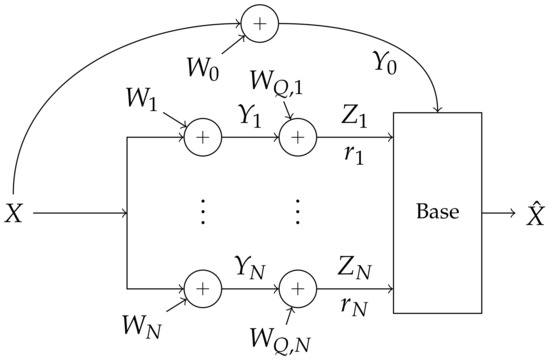

We refer to this system as a Gaussian distortion system. A block diagram of this system is shown in Figure 3. This system is equivalent to a multi-antenna Gaussian receiver and its achievable rate has a closed form:

Figure 3.

Gaussian distortion system: Quantizer distortion is modeled as additive white Gaussian noise, where enough noise is added so that the capacity across quantizers is some given rate vector .

Theorem 2.

For a distributed receive system as described in Section 2 with noise covariance matrix Σ, LAN constraint L and fixed average helper quantization rates , then a rate is achievable with the following facilities:

- The transmitter and base coordinate a codebook of appropriate rate and distribution.

- Each n-th helper is informed of an encoding rate (optimal choice derived from ).

- Each helper coordinates a random dither with the base.

- The base is informed of .

All the details of this derivation are formalized in Appendix B.
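A minimal sketch of how the Gaussian distortion rate might be evaluated for a fixed rate vector is given below. It assumes a complex-valued model with unit transmit power, so that a helper forwarding r bits per channel use behaves like its observation plus independent noise of variance (observation variance) / (2^r − 1), and it assumes the base sits at index 0 and contributes no distortion. The function names are hypothetical.

```python
import numpy as np

def quantization_noise_var(h, sigma, rates):
    """Equivalent additive distortion variance for helpers 1..N."""
    h = np.asarray(h, dtype=complex)
    sigma = np.asarray(sigma, dtype=complex)
    # Variance of each helper's observation: signal power plus noise power.
    obs_var = np.abs(h[1:]) ** 2 + np.real(np.diag(sigma))[1:]
    return obs_var / (2.0 ** np.asarray(rates, dtype=float) - 1.0)

def gaussian_distortion_rate(h, sigma, rates):
    """Rate of the equivalent multi-antenna receiver with added distortion."""
    h = np.asarray(h, dtype=complex)
    sigma = np.asarray(sigma, dtype=complex)
    q_var = quantization_noise_var(h, sigma, rates)
    # The base (index 0) keeps full precision, so it gets no distortion term.
    total_cov = sigma + np.diag(np.concatenate(([0.0], q_var)))
    sinr = np.real(np.vdot(h, np.linalg.solve(total_cov, h)))
    return np.log2(1.0 + sinr)
```

As the helper rates grow, the distortion variances shrink toward zero and the value approaches the full-precision multi-antenna rate.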

Maximizing over gives a lower bound on the system capacity:

Unfortunately, this expression cannot be simplified much in general. Maximization of over can be performed efficiently using quasi-convex optimization algorithms:

Remark 4.

The max of follows the maximum of which is quasi-concave in .

Proof.

The maximum of follows the maximum of by the matrix determinant lemma.

It suffices to show that the restriction of this functional on the intersection of any line with is quasi-concave (see Reference [21]). Further, a twice-differentiable one-dimensional function is quasi-concave if its second derivative is negative wherever its first derivative is zero.

Fix any denote for any t where . Define and . Then,

If then is in the null space of so the term in Equation (31) vanishes. is negative definite in so there, establishing f’s quasi-concavity and thus that of . Since is quasi-concave along all of , then it is also quasi-concave in any convex restriction of that domain. is a simplex, which is convex. □
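One way the maximization over helper rates could be carried out numerically is sketched below, using SciPy's SLSQP solver over the set of nonnegative rates whose sum does not exceed L. The rate function repeats the Gaussian distortion model under the same assumptions as before (complex-valued channel, unit transmit power, base at index 0); the small lower bound on each rate only avoids division by zero, and all names are illustrative rather than taken from the paper's code.

```python
import numpy as np
from scipy.optimize import minimize

def gaussian_distortion_rate(h, sigma, rates):
    h = np.asarray(h, dtype=complex)
    sigma = np.asarray(sigma, dtype=complex)
    obs_var = np.abs(h[1:]) ** 2 + np.real(np.diag(sigma))[1:]
    q_var = obs_var / (2.0 ** np.asarray(rates, dtype=float) - 1.0)
    total_cov = sigma + np.diag(np.concatenate(([0.0], q_var)))
    return np.log2(1.0 + np.real(np.vdot(h, np.linalg.solve(total_cov, h))))

def best_rate_split(h, sigma, lan_bits, floor=1e-6):
    """Search for helper rates (summing to at most L) that maximize the rate."""
    n_helpers = len(h) - 1
    uniform = np.full(n_helpers, lan_bits / n_helpers)
    result = minimize(
        lambda r: -gaussian_distortion_rate(h, sigma, r),
        uniform,
        method="SLSQP",
        bounds=[(floor, lan_bits)] * n_helpers,
        constraints=[{"type": "ineq", "fun": lambda r: lan_bits - np.sum(r)}],
    )
    # Project the optimizer's answer back onto the feasible set after round-off.
    candidate = np.clip(result.x, floor, lan_bits)
    candidate *= min(1.0, lan_bits / candidate.sum())
    # Keep the uniform split if the optimizer did not improve on it.
    best = max((uniform, candidate),
               key=lambda r: gaussian_distortion_rate(h, sigma, r))
    return best, gaussian_distortion_rate(h, sigma, best)
```

Because the objective is quasi-concave on a convex domain, a local solver started from the uniform split is a reasonable choice, although this sketch does not certify global optimality.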

is tight with the upper bound in Equation (16), in limit with LAN throughput L.

Remark 5.

as

Proof.

This strategy has the benefit of not needing much coordination between receive nodes. The transmitter does not have to share codes with helpers because here the helpers do not perform any decoding. Each helper needs only its encoding rate and its own channel state to form its messages properly.

3.3. Achievable Rate through Distributed Compression

Helper n for in a realization of the Gaussian distortion system described in Section 3.2, on average and with long codes, will produce an -bit encoding of its observation that can be decoded to produce the helper observation with additional approximately Gaussian noise. Since all the helpers’ quantizations contain the same signal component (and possibly the same interference), they are correlated and can be compressed before forwarding, which allows less noise to be introduced in quantization.

The Slepian–Wolf theorem [22] shows that if the LAN is such that helpers can encode at rates , then the helpers can losslessly convey the encodings to the base at lower rates , as long as and the encodings satisfy the conditions that for all subsets , then:

Note that is always included in the conditioning because is available at the base node in full precision.
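The subset conditions above can be checked numerically in the Gaussian distortion setting. The sketch below assumes that the entropy required for a subset S of helpers is approximated by the conditional mutual information between the observations in S and their distorted versions, given all other distorted observations and the base's own observation. It further assumes a complex-valued jointly Gaussian model with unit transmit power and the base at index 0. These modeling choices and all names are assumptions made for illustration.

```python
import itertools
import numpy as np

def required_binning_rate(h, sigma, quant_rates, subset):
    """Bits per channel use needed to convey the encodings of helpers in
    `subset` (indices in 1..N) given every other encoding and the base."""
    h = np.asarray(h, dtype=complex)
    sigma = np.asarray(sigma, dtype=complex)
    obs_var = np.abs(h[1:]) ** 2 + np.real(np.diag(sigma))[1:]
    q_var = obs_var / (2.0 ** np.asarray(quant_rates, dtype=float) - 1.0)
    # Covariance of (base observation, distorted helper observations).
    cov = np.outer(h, h.conj()) + sigma + np.diag(np.concatenate(([0.0], q_var)))
    s = sorted(subset)
    rest = [i for i in range(len(h)) if i not in s]
    # Schur complement: covariance of the subset conditioned on the rest.
    c_sr = cov[np.ix_(s, rest)]
    cond = cov[np.ix_(s, s)] - c_sr @ np.linalg.solve(cov[np.ix_(rest, rest)],
                                                      c_sr.conj().T)
    _, logdet = np.linalg.slogdet(cond)
    # Subtract the entropy of the distortion itself, then convert nats to bits.
    return (logdet - np.sum(np.log(q_var[np.array(s) - 1]))) / np.log(2.0)

def binning_rates_feasible(h, sigma, quant_rates, bin_rates, tol=1e-9):
    """True if every nonempty subset of helpers has enough total binning rate."""
    n_helpers = len(h) - 1
    for size in range(1, n_helpers + 1):
        for subset in itertools.combinations(range(1, n_helpers + 1), size):
            need = required_binning_rate(h, sigma, quant_rates, subset)
            if sum(bin_rates[i - 1] for i in subset) < need - tol:
                return False
    return True
```

For a single helper and a base with no reception, the required rate equals the quantization rate; when the base has a correlated observation, the required rate drops below it, which is the saving that distributed compression exploits.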

In the Gaussian distortion setting described right before Equation (23), can be made close to with time expansion. The details of this are given in Lemma 2. Maximizing over the LAN constraint in Equation (9), the following rate is achievable:

where

in Equation (35) is defined as in Equation (25). We can then state the following:

Theorem 3.

For a distributed receive system as described in Section 2 with noise covariance matrix Σ and LAN constraint L, is achievable with the following facilities:

- The transmitter and base coordinate a codebook of appropriate rate and distribution.

- Each n-th helper is informed of a quantization rate and binning rate , (optimal choice derived from ).

- Each helper coordinates a random dither with the base.

- The base is informed of .

The base can unambiguously decompress the helpers’ compressed encodings with low probability of error if and only if is chosen such that Equation (33) is satisfied. However, in analogy to Corollary 1 from Reference [1], the rate in Theorem 6 can be improved by expanding to include some of the where the base cannot perform unambiguous decompression. This helps because, even if the encoding rates in are chosen outside of so that helpers cannot convey to the base unambiguously, some extra correlation with X is retained through this distortion.

Theorem 4.

For a distributed receive system as described in Section 2 with noise covariance matrix Σ and LAN constraint L, the following rate is achievable:

where

The rate requires the following facilities:

- The transmitter and base coordinate a codebook of appropriate rate and distribution.

- Each n-th helper is informed of a quantization rate , binning rate and hull parameter λ (optimal choice derived from ).

- Each helper coordinates a random dither with the base.

- The base is informed of .

All the details of Theorems 6 and 7 are shown in Appendix B.

Remark 6.

The capacity of the system is under the following restrictions:

- Σ is diagonal (no interference).

- The base does not have its own full-precision observation of the broadcast ().

- The broadcaster must transmit a Gaussian signal.

- Construction of helper messages is independent of the transmitter’s codebook .

This is demonstrated in Appendix C. The last three assumptions are necessary:

- Since the base has codebook knowledge, it is possible for the transmitter to send a direct message to the base, which is not accounted for in the compress-and-forward strategy used for .

- The Gaussian broadcast assumption is needed because of a counterexample given in Reference [1].

- Codebook independence is necessary because is strictly less than the upper bound in Equation (16), but, by Remark 3, this upper bound is achieved in some regimes.

Theorem 7 and Remark 7 strengthen Corollary 1 and Theorem 5 from Reference [1] since we show that the same rate can be achieved with less cooperation between transmitter and receivers. Sanderovich et al. [1] used a “nomadicity” assumption which asserts that the mapping from transmitter messages to codewords is not present at the helpers, whereas Theorem 7 shows (the same argument applying to the general discrete case) that indeed the same rate is achievable when helpers have no knowledge at all of the codewords the transmitter is using.

Similar to the Gaussian distortion achievable rate, the distributed compression technique does not require cooperation between the transmitter and helpers. It does, however, require a priori sharing of codes from the helpers to the base so that the helpers can perform distributed compression.

4. Ergodic Bounds

In this section, the bounds are averaged over random channels in various regimes. Each bound tested is a deterministic function of:

- number of helpers N,

- LAN constraint L,

- noise covariance matrix , and

- channel (assumed to be static and precisely estimated a priori for each channel use).

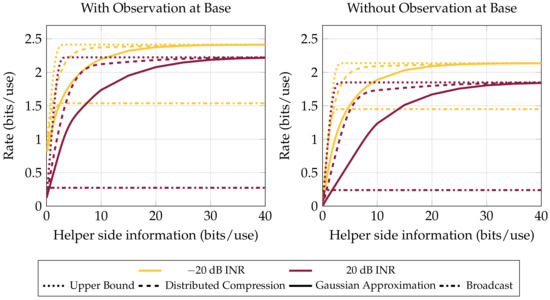

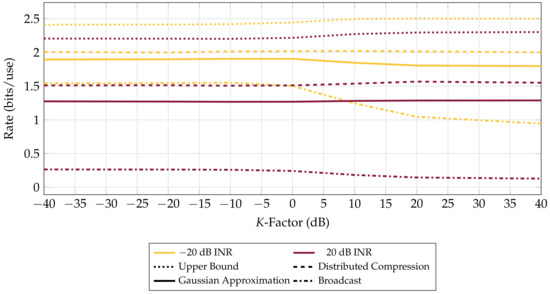

In all graphs, the rate from Equation (16) is called “Upper Bound”, the rate from Equation (28) is “Gaussian Distortion”, the rate from Theorem 7 is “Distributed Compression” and the rate from Remark 2 is “Broadcast”. A discussion of the optimizations performed for the Gaussian Distortion and Distributed Compression bounds is in Appendix A.

4.1. Performance in the Presence of an Interferer

One motivation for increasing receive diversity is to better mitigate the effect of interference, so it is important to study the extent to which the strategies from Section 3 can do so.

We model interference as a zero-mean Gaussian broadcast independent of our system. Our system’s nodes are informed of nothing more than the scales at which the interferer appears in our receivers’ observations. This assumption prohibits using dirty paper coding [23] and other transmit-side interference mitigation strategies. With this model, the covariance matrix of noise terms associated with a particular interferer seen by the receivers is a matrix where In all trials run, a single interferer was assumed to be present at all nodes with power and random phase so that

with where is the uniform distribution.
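A small sketch of how such an interference-plus-noise covariance could be generated for simulation follows. It assumes white receiver noise of unit variance, an interferer received at the same interference-to-noise ratio (INR) at every node, and an independent uniform phase per receiver; the function name and arguments are illustrative.

```python
import numpy as np

def interferer_covariance(n_receivers, inr_db, rng, noise_var=1.0):
    """White noise plus a rank-one interferer with random per-receiver phase."""
    inr = 10.0 ** (inr_db / 10.0)
    # Independent uniform phase at each receiver.
    phases = rng.uniform(0.0, 2.0 * np.pi, size=n_receivers)
    a = np.sqrt(inr * noise_var) * np.exp(1j * phases)
    # The interferer contributes the rank-one term a a^H.
    return noise_var * np.eye(n_receivers) + np.outer(a, a.conj())
```

The resulting matrix has one large eigenvalue along the interferer's spatial signature and unit eigenvalues elsewhere, which is what allows a receive array with enough diverse observations to suppress it.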

Figure 4 shows the difference in achievable rates with and without interference for different receive-side strategies. Even when a strong rank-one interferer is present at all the nodes, achievable communications rates are comparable to the case without an interferer.

Figure 4.

Bound performance versus LAN constraint (that is, the total rate available to the base from all the helpers combined). Four helpers with 0 dB average receiver SNR, averaged over 1000 channels with gains . Interferer present at each receiver with a uniform random phase and specified INR. Single-user decode-and-forward is greatly affected by interference (compare the Broadcast curves from −20 dB to 20 dB INR). Distributed compression only offers significant benefits over Gaussian compress-and-forward in strong interference and when LAN resources are scarce.

Sanderovich et al. [1] provided an example of a system such as the one in the present work where a Gaussian transmitter is significantly sub-optimal. In contrast, we see in Figure 4 that, over an average of many random channels in a range of settings, achievable rates using Gaussian signaling come quite close to an upper bound on the system. Even the relatively simple Gaussian distortion bound is close in performance to the optimum in all regimes but one with strong interference and little cumulative helper-to-base information. In this regime the LAN constraint limits the helpers’ ability to provide the base with the diversity of observations necessary to perform good interference mitigation.

4.2. Path Diversity versus Performance

It is reasonable to expect that the best strategy for a distributed receiver to use will change depending on its environment. If the receivers observe the signal through a single line-of-sight path, all else being equal, the signal and noise at each receiver will have similar statistics. In contrast, in environments with many scatterers, the channel statistics will vary more across spatially distributed receivers.

The level of attenuation at a receiver can be modeled with a Rician distribution [24]. The Rician distribution describes the magnitude of a circularly-symmetric complex Gaussian with nonzero mean and can be parameterized by two nonnegative values: a scale representing the average receive SNR and a shape (called a K-factor in other literature) denoting the ratio of signal power received from direct paths to the power received from scattered paths. By construction, if $K = 0$, then a Rician distribution is equivalent to a Rayleigh distribution with mean . In contrast, if $K \to \infty$, then the distribution approaches a point mass at (Reference [25]).
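For simulation, channel gains with Rician-distributed magnitude could be drawn as in the sketch below. It assumes K is the direct-to-scattered power ratio, that the scale parameter is the average received power (SNR under unit noise variance), and that the line-of-sight component has a uniform random phase at each receiver; the names are hypothetical.

```python
import numpy as np

def rician_gains(n, k_factor, snr, rng):
    """Draw n complex gains whose magnitudes are Rician with the given K-factor."""
    # Line-of-sight part: fixed magnitude, uniform random phase.
    los_phase = rng.uniform(0.0, 2.0 * np.pi, size=n)
    los = np.sqrt(k_factor / (k_factor + 1.0)) * np.exp(1j * los_phase)
    # Scattered part: circularly-symmetric complex Gaussian with unit variance.
    scatter = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2.0)
    scatter *= np.sqrt(1.0 / (k_factor + 1.0))
    # Scale so the average received power equals snr.
    return np.sqrt(snr) * (los + scatter)
```

Setting `k_factor=0` gives Rayleigh-distributed magnitudes, while a very large `k_factor` makes every gain magnitude nearly equal to `sqrt(snr)`.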

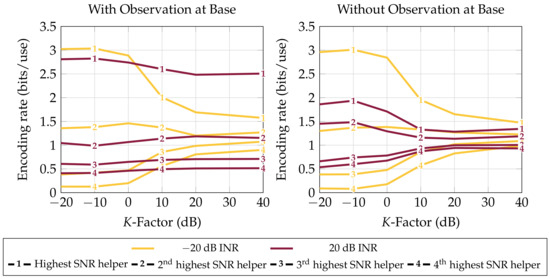

The type of scattering environment does not greatly affect the average rate achievable by any bound other than the Broadcast bound (Figure A1). This is because the Broadcast bound exploits receive diversity only through variation in channel gains across receivers, which is small when the channel is dominated by line-of-sight receptions. Despite the invariance of most bounds to K, the profile of helper-to-base bits does change. Figure 5 shows that, no matter the other parameters, in high scattering, it is most helpful for the base to draw most of its information from the highest-SNR helper while mostly ignoring low-SNR helpers’ observations. The imbalance is less pronounced in interference and when SNR is less varied across receivers (high K), since in these regimes, gaining diverse observations for combining is more beneficial than using the strongest helper’s observation.

Figure 5.

Average rate at which each helper forwards to base in distributed compression (Theorem 7) versus K-factor (direct-to-scattered-path received signal power ratio). Four helpers with 0 dB average receive SNR and a LAN constraint of bits per channel use of side information the helpers share to inform the base of their observations. Averaged over 1000 channels with gains . Interferer present at each receiver with a uniform random phase and specified INR. As the proportion of line-of-sight path power increases, the need for receive diversity increases and the base draws information from more helpers. This does not occur when the base has its own observation, when presumably it is used as an estimate of the interferer to be mitigated from a compression of the highest-SNR helper’s observation.

When the base collects its own full-precision observation, the helper rate profile is unaffected by interference and scattering: most helper information is provided by the highest-SNR helper. In practice, in this situation, the base might use its observation as an estimate of the interferer and subtract it from a compression of the strongest helper’s observation.

5. Conclusions

One upper bound and three lower bounds on communication rate were developed. A simple upper bound (Remark 1) was derived, which is achievable when the LAN constraint is stringent (small L) and achievable in the limit as the LAN constraint is relaxed ($L \to \infty$). An achievable rate (Theorem 5) considering quantizers which add Gaussian distortion was seen to provide large gains in interference over a rate that does not fully use the distributed receive array (Remark 2). The rate (Theorem 7) achieved when a distributed compression stage is added to the previous technique provides gains over Theorem 5 when interference is strong and helper-to-base communication is limited, but may be more difficult to implement.

Leaving SNR fixed, performance is mostly unchanged in high-scattering versus low-scattering environments, although the profile of helper-to-base communication changes: in high-scattering environments, some helper observations are ignored by the base, while in line-of-sight environments, each helper informs the base equally. The presence of a strong interferer dampens this effect, since in this regime the base needs more spatial diversity to mitigate the interferer.

An immediate goal for future work is to devise practical implementations of the presented strategies. This may include refining the models of the LAN and helpers to more precisely reflect system capabilities.

Supplementary Materials

The following are available online at http://www.mdpi.com/1099-4300/20/4/269/s1.

Acknowledgments

We would like to thank Shankar Ragi for aid in producing datasets for this paper. We would also like to thank Bryan Paul for his comments and corrections. Hans Mittelmann was supported by AFOSR under grant FA 9550-15-1-0351. DISTRIBUTION STATEMENT A: Approved for Public Release, Distribution Unlimited. This work was sponsored in part by Defense Advanced Research Projects Agency under Air Force Contract FA8721-05-C-0002. Opinions, interpretations, conclusions, and recommendations are those of the authors and are not necessarily endorsed by the United States Government.

Author Contributions

Daniel W. Bliss, Adam Margetts and Christian Chapman developed the theoretical work in the paper. Christian Chapman and Hans Mittelmann performed the simulations. Christian Chapman wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Optimization Method

Evaluation of the Gaussian distortion and distributed compression bounds in each environment requires optimization of a quasi-convex objective (Remark 4). In the case of distributed compression, the space of acceptable parameters is not convex: consider compressing the encodings for two highly-correlated sources. By the Slepian–Wolf theorem, if the encodings are high-bitrate, then perturbing the encodings’ bitrate does not greatly affect the region of feasible compression rates, since the encodings have high redundancy. On the other hand, perturbing the bitrate of low-bitrate encodings greatly affects the region of feasible compression rates, since uncorrelated distortion from encoding dominates source redundancies. Then, a compression to an average between minimum feasible compression rates for low-bitrate encodings and ones for high-bitrate encodings is infeasible, and the distance from the feasible set becomes large as the difference between high and low bitrates is made large. This is the mechanism that governs hence the domain’s non-convexity.

To overcome this, an iterative interior point method was used to find the maximum: each constraint is replaced with a stricter constraint, for some and a minimization of the objective is performed under the new constraint. The optimal point is passed as the initial guess for the next iteration, where the objective function is minimized with updated constraints with . The method is iterated until the constraint is practically equivalent to or the optimal point has converged. Each individual minimization was performed using sequential least-squares programming (SLSQP) through SciPy [26]. The code for this is included in the Supplementary Materials.
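The tightening loop described above can be sketched as follows. This is only an illustrative sketch and not the code from the Supplementary Materials: the toy objective, the single budget constraint, the tightening margin `eps`, and its halving schedule are all assumptions chosen so that the example is small and its optimum is known in closed form.

```python
import numpy as np
from scipy.optimize import minimize


def neg_rate(x):
    # Toy objective (hypothetical): negative sum rate of two links with gains 4 and 1.
    return -(np.log2(1.0 + 4.0 * x[0]) + np.log2(1.0 + x[1]))


def solve_with_tightening(total=2.0, eps=0.5, shrink=0.5, eps_min=1e-6, tol=1e-9):
    """Minimize neg_rate subject to x0 + x1 <= total by solving a sequence of
    stricter problems x0 + x1 <= total - eps, with eps shrinking each round."""
    x = np.array([0.1, 0.1])  # strictly feasible initial guess
    while True:
        # SLSQP treats an "ineq" constraint as feasible when fun(x) >= 0.
        cons = [{"type": "ineq",
                 "fun": lambda z, e=eps: total - e - z[0] - z[1]}]
        res = minimize(neg_rate, x, method="SLSQP",
                       bounds=[(0.0, None), (0.0, None)], constraints=cons)
        step = np.linalg.norm(res.x - x)
        x = res.x  # warm start for the next, less strict problem
        # Stop when the tightened constraint is practically the original one
        # or when the optimal point has stopped moving.
        if eps <= eps_min or step < tol:
            return x, -neg_rate(x)
        eps *= shrink


if __name__ == "__main__":
    point, rate = solve_with_tightening()
    print(point, rate)
```

Each intermediate solution lies strictly inside the original constraint set, so the next SLSQP call always starts from a feasible point. For this toy problem the unconstrained-margin optimum is x = (1.375, 0.625), which the loop approaches as `eps` shrinks.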

Appendix B. Achievability of Bounds

Several lemmas are needed to prove that the bounds are achievable. The first two demonstrate that lattice encodings become close in joint information content to the equivalent random variables in the Gaussian distortion system from Section 3.2. Lemma 1 shows that the joint differential entropies of the source estimates recovered from the encodings are close to the equivalent differential entropies in the Gaussian distortion system. Lemma 2 shows that the encodings themselves have joint Shannon entropies that are close to mutual informations in the Gaussian distortion system.

Lemma 1.

Take helper observations and independent dithers where, for each n, the dither vector is distributed along the base cell of a regular lattice in whose normalized second moment is . For any , and t large enough,

Proof.

The proof is similar to Theorem 6 from [20]. Notice that, for any additive noise channel, then so that . Then,

where Equation (A4) follows since the terms are independent, Equation (A5) follows by the worst additive noise lemma [27], and the substitution and convergence in Equation (A7) follow by Section III of [20]. In addition:

where Equation (A4) follows by Lemma 1 from [28] and Equations (A10) and (A11) follow since the terms in are independent and by Section III of [20]. □

Lemma 2.

For length-t helper observations , dithered lattice encodings of using dithers where each encodes onto a regular lattice in whose cells have normalized second moment . Then, for any and if t is large enough, any has

In Equations (A13), with distributed the same as helper observations at a single time point, and

Proof.

We prove this by induction. By Theorem 1 in Reference [29], for any

Theorem 6 in Reference [20] has that

Thus, the statement holds for any singleton . Take any disjoint for which the claim holds for . By construction, given , there is a function where with independent of but distributed like [20]. Compute:

The first inequality follows by the data processing inequality. Equation (A19) follows since is independent of the other variables in the term. The last inequality follows by the worst additive noise lemma [27]. For t large enough,

Similar to before, given there are deterministic functions where and with iid to , . Compute:

where, again, the first line follows by the data processing inequality and the second line since the conditional is independent of the rest of the mutual information’s terms. For large enough t, the second inequality follows by Lemma 1. Combining Equations (A20), (A22) and (A27),

Since , the inductive step will only need to be used up to N times. Letting , the initial statement holds. □

A small result is also needed to show that the base having its own full precision observation is approximately equivalent to receiving a high-bitrate helper message. Recall that the base is associated with helper index 0.

Lemma 3.

If , then

then, some value can be chosen sufficiently large so that for any

Proof.

Since all the conditional mutual information terms in the statement are continuous in , it is enough to show the statement for . In this case, with no loss of generality, we can say there is some where:

Fix large enough so that for any ,

There is guaranteed to be such an because the above expression is a continuous function of the covariance matrix of component-wise, and as is made large, the covariance matrices involved in the second term converge component-wise to those of the first since as . By Equations (A31) and (A32), the statement holds for any and it remains to show that it also holds for each

Note that, for any ,

where Equation (A35) follows because conditioning reduces mutual information, and Equation (A36) follows from what was shown at the start. □

Theorem 5.

For a distributed receive system as described in Section 2 with noise covariance matrix Σ, LAN constraint L and fixed average helper quantization rates , a rate is achievable.

Proof.

If some has , the system is equivalent to the case where the nth receiver’s observation is not present, so, without loss of generality, we assert that . Fix some rate and a block length . Form a message M uniform on

- Outline

Form a rate-R codebook with length-T codewords. At receiver , form a length-t regular lattice encoder with lattice chosen coarse enough that its encodings have rate . Combine encodings at base and find the typical codeword. Observe that probability of error is low by recognizing that each t-length encoding from a helper approximately contains the information content of the helper’s observation plus Gaussian distortion.

- Transmitter setup

Generate a codebook mapping where:

with each component drawn iid from . Reveal to the transmitter and base.
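As a minimal sketch of this random-coding step, the snippet below draws a codebook with iid circularly symmetric complex Gaussian components. The function names, the unit average power, and the rounding of the number of messages up to a power of two are assumptions made for illustration; the component distribution actually used in the proof is the one stated above.

```python
import math
import random


def complex_gaussian(rng, power):
    # Circularly symmetric complex Gaussian sample with E|x|^2 = power.
    s = math.sqrt(power / 2.0)
    return complex(rng.gauss(0.0, s), rng.gauss(0.0, s))


def make_codebook(rate_bits, block_len, power=1.0, seed=0):
    """Draw 2^ceil(rate_bits * block_len) codewords of length block_len,
    every component iid complex Gaussian."""
    rng = random.Random(seed)
    num_messages = 2 ** math.ceil(rate_bits * block_len)
    return [[complex_gaussian(rng, power) for _ in range(block_len)]
            for _ in range(num_messages)]


def encode(codebook, message):
    # The transmitter simply broadcasts the codeword indexed by the message.
    return codebook[message]


if __name__ == "__main__":
    book = make_codebook(rate_bits=0.5, block_len=16)
    print(len(book), len(encode(book, 3)))
```

The same seed must be shared by the transmitter and the base so that both hold an identical codebook, which mirrors the "reveal" step in the proof.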

- Helper encoder setup

For each helper , generate a dither vector , where each successive t-segment is uniform in the base region of the Voronoi partition of a regular, white t-dimensional lattice which has the following normalized second moment:

These terms are detailed at the beginning of Reference [20]. Reveal to the base and helper n. Similarly, at the base, generate a dither vector with chosen large enough for Lemma 3 to hold.

- Transmission

To send message have the transmitter broadcast

- Helper encoding and forwarding

Helper n ( observes a sequence of length T, . Now is composed of t consecutive length-t segments. For the ℓ-th length-t segment (), form a quantization by finding the point in associated with the region in in which that segment resides. The properties of such are the subject of References [20,29]. By the extension of Theorem 1 in Appendix A of Reference [29],

Further, by Theorem 6 in Reference [20],

where has the distribution of any individual component of and (in agreement with notation in Section 3.2). Thus, the t encoded messages produced by helper , are within the LAN constraint for large enough block length T. Helper forwards to the base.
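The behaviour of dithered lattice quantization that the proof relies on can be illustrated with a one-dimensional sketch. The example below is an assumption-laden simplification: it uses the scalar lattice `step * Z` on real samples instead of a t-dimensional lattice on complex observations, and the names `dithered_quantize`, `reconstruct`, and `simulate` are hypothetical. It shows that, after the dither is subtracted at the base, the reconstruction error has second moment `step**2 / 12` and is empirically uncorrelated with the source.

```python
import math
import random


def dithered_quantize(x, step, dither):
    # Nearest point of the scalar lattice step*Z to the dithered sample.
    return step * math.floor((x + dither) / step + 0.5)


def reconstruct(q, dither):
    # The base knows the dither and subtracts it from the lattice point.
    return q - dither


def simulate(n=100_000, step=0.8, seed=0):
    """Return (mean squared error, mean of error*source, largest |error|)."""
    rng = random.Random(seed)
    sq_sum = cross_sum = peak = 0.0
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)                      # helper observation
        u = rng.uniform(-step / 2.0, step / 2.0)     # dither, uniform on the base cell
        err = reconstruct(dithered_quantize(x, step, u), u) - x
        sq_sum += err * err
        cross_sum += err * x
        peak = max(peak, abs(err))
    return sq_sum / n, cross_sum / n, peak


if __name__ == "__main__":
    print(simulate())
```

In this scalar setting the error behaves like additive uniform noise independent of the observation; the results cited from References [20,29] describe how the corresponding noise for good high-dimensional lattices approaches Gaussian noise.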

- Decoding

At the base, receive all of the lattice quantizations,

Take to be the collection of

which are jointly -weakly-typical with respect to the joint distribution of conditional on all the dithers . Weak- and joint-typicality are defined in Reference [14].

At the base, find a message estimate where . Declare error events if M is not found to be typical with , and if there is some where .
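For readers less familiar with weak typicality, a minimal single-variable sketch is given below. It tests whether the per-sample negative log-likelihood of a real sequence is within a tolerance of the differential entropy of a zero-mean Gaussian model. The function names, the real-valued scalar model, and the tolerance `eps` are assumptions for illustration; the decoder in the proof applies the analogous test jointly to a codeword and the lattice quantizations.

```python
import math
import random


def gaussian_entropy_nats(var):
    # Differential entropy of a real N(0, var) variable, in nats.
    return 0.5 * math.log(2.0 * math.pi * math.e * var)


def empirical_neg_loglik(seq, var):
    # -(1/n) * log f(x^n) under an iid N(0, var) model.
    n = len(seq)
    return sum(0.5 * math.log(2.0 * math.pi * var) + x * x / (2.0 * var)
               for x in seq) / n


def is_weakly_typical(seq, var, eps):
    return abs(empirical_neg_loglik(seq, var) - gaussian_entropy_nats(var)) <= eps


def draw(n, std, seed):
    rng = random.Random(seed)
    return [rng.gauss(0.0, std) for _ in range(n)]


if __name__ == "__main__":
    print(is_weakly_typical(draw(20000, 1.0, 0), 1.0, 0.05))  # matched model
    print(is_weakly_typical(draw(20000, 2.0, 0), 1.0, 0.05))  # mismatched model
```

A long sequence drawn from the assumed model passes the test with high probability by the law of large numbers, while a sequence with a different variance fails it, which is the property the error analysis exploits.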

- Error analysis

By typicality and the law of large numbers, as . In addition,

is defined as in Equation (25). Equation (A47) follows by the data processing inequality. Equation (A48) follows by combining the entropies into a mutual information, then using the worst additive noise lemma [27]. Thus, if R is chosen less than then as . Mutual information is lower semi-continuous so can be made arbitrarily close to . □

Theorem 6.

For a distributed receive system as described in Section 2 with noise covariance matrix Σ and LAN constraint L, a rate is achievable.

Proof.

Apply Theorem 7 with (proof shown below). □

Theorem 7.

For a distributed receive system as described in Section 2 with noise covariance matrix Σ and LAN constraint L then is achievable.

Proof.

It is enough to show that any component in the maximization from Equation (36) is achievable. Fix a helper rate vector , and a compression rate vector . If some has or , the system is equivalent to the case where the nth helper is not present, so, without loss of generality, we assert that each . Fix some rate and a block length . Form a message M uniform on .

- Outline

Form a rate-R codebook with length-T codewords. At receiver , form a length-t regular lattice encoder with lattice chosen coarse enough that its encodings have rate . Randomly bin the encodings down to rate . At the base find the codeword jointly typical with the binned encodings. Observe that probability of error is low by recognizing that the unbinned t-length encodings from helpers approximately contain the joint-information content of the helper’s observations plus Gaussian distortion.

- Transmitter setup

Generate a codebook mapping where

with each component drawn iid from . Reveal to the transmitter and base.

- Helper encoder setup

For each helper , generate a dither vector , where each successive t-segment is uniform in the base region of the Voronoi partition of a regular, white t-dimensional lattice which has the following normalized second moment:

These terms are detailed at the beginning of Reference [20]. Reveal to the base and helper n. Similarly, at the base, generate a dither vector with chosen large enough for Lemma 3 to hold.

For each receiver form a random mapping which takes on iid values at each :

is the binning scheme used by receiver . Distribute each to helper n and the base.

- Transmission

To send a message have the transmitter broadcast

- Helper encoding

Helper n ( observes a sequence of length T, . Now is composed of t consecutive length-t segments. For the ℓ-th length-t segment, , form a quantization by finding the point in associated with the region in in which that segment resides. The properties of such are the subject of References [20,29].

Applying Lemma 2, then for large enough t, any has

where the right-hand inequality comes from choice of

- Helper joint-compression and forwarding

At receiver , form binned encodings where for each ℓ, . Note that so and so that the LAN constraint is satisfied.

Each receiver forwards to the base.
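The random binning step can be illustrated with a toy, single-letter sketch. Everything in it is an assumption made for illustration: quantization indices are integers in a small alphabet, the base holds side information that differs from the true index by at most `max_gap`, and "closeness to the side information" stands in for the joint-typicality test used in the proof. The helper forwards only the bin label (4 bits here) instead of the full index (6 bits).

```python
import random


def make_binning(alphabet_size, num_bins, rng):
    # Random binning: every quantization index gets an iid uniform bin label.
    return [rng.randrange(num_bins) for _ in range(alphabet_size)]


def decode(bin_label, side_info, binning, max_gap):
    """Return the unique index in the bin consistent with the side information,
    or None when zero or several candidates remain (a decoding error)."""
    candidates = [i for i, b in enumerate(binning)
                  if b == bin_label and abs(i - side_info) <= max_gap]
    return candidates[0] if len(candidates) == 1 else None


def run_trials(trials=5000, alphabet_size=64, num_bins=16, max_gap=1, seed=1):
    """Return (correct decodes, declared failures, wrong decodes)."""
    rng = random.Random(seed)
    binning = make_binning(alphabet_size, num_bins, rng)  # shared with the base
    correct = failed = wrong = 0
    for _ in range(trials):
        x = rng.randrange(alphabet_size)            # helper's quantization index
        y = x + rng.randint(-max_gap, max_gap)      # correlated side information
        guess = decode(binning[x], y, binning, max_gap)
        if guess is None:
            failed += 1
        elif guess == x:
            correct += 1
        else:
            wrong += 1
    return correct, failed, wrong


if __name__ == "__main__":
    print(run_trials())
```

Because the true index always lies in its own bin and is always consistent with the side information, a unique candidate is necessarily the correct one; errors arise only when another consistent index falls into the same bin. In this single-letter toy that happens with noticeable probability, whereas in the proof the probability vanishes with block length when the binning rates satisfy the stated conditions.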

- Decoding

At the base, receive all the binned helper encodings

Take to be the set of

which are jointly -weakly-typical with respect to the joint distribution of , conditional on all the dithers . Weak- and joint-typicality are defined in Reference [14].

For an ensemble of bin indices from all the receivers, , define:

Each represents the set of helper lattice quantizations represented by the ensemble of helper bin indices . At the base, find for which there is some where . Declare the message associated with to be the broadcast.

- Error analysis

We have the following error events:

- : is not typical with anything in .

- : For there is some and where and , but any has . denotes the situation where the base identifies the wrong quantizations for all the receivers in S.

By the law of large numbers and properties of typical sets, as t becomes large, eventually . For each , take to be the collection of jointly-typical sequences in up to the joint distribution on (any ), conditioned on . Now compute:

where is a random variable independent of and distributed identically to . Then, with high probability for large enough t,

where Equation (A58) follows by construction of . Taking the

Equation (A62) follows by using Lemma 2 on and using reasoning identical to Equations (A45)–(A48) on . Recall that R was chosen so that . Then, Equation (A62) will approach as (thereby approaches 0) if all satisfy:

By assumption that and for small enough then Equation (A63) holds for each S. Since all error events approach 0, then a rate of is achievable. For small enough by lower semi-continuity of mutual information can be made arbitrarily close to . □

Appendix C. Proof of Conditional Capacity

Remark 7.

The capacity of the system is under the following restrictions:

- Σ is diagonal (no interference).

- The base does not have its own full-precision observation of the broadcast ().

- The broadcaster must transmit a Gaussian signal.

- Construction of helper messages is independent of the transmitter’s codebook .

Proof.

By Theorem 5 in Reference [1] (variable names altered, constants adapted for complex variables), the capacity of the system under the assumed restrictions is

In Equation (A65), is the collection of random vectors whose components are of the form with independently distributed :

for any . Since both the variance in Equation (A66) as a function of and Equation (26) as a function of are injective on , then for any there is some (in the context of Equation (37); either inside or outside ) which will yield a variable with identical distribution to .

Fixing helper rates for any , we can instead write as:

where follows because, for each n, is a Markov chain. Maximizing over , Equation (A70) is identical to the set of rates shown in Theorem 7. □

Figure A1.

Achievable rates versus K. Four helpers with an observation available at the base and LAN constraint bits shared among helpers. Averaged over 500 channel realizations with uniform independent phase and average receiver SNR fixed at 0 dB. Scattering environment has negligible impact on achievable rates for these bounds, except in the Broadcast bound where receive diversity is not well utilized. Similar results are obtained when the base does not have its own observation.

References

1. Sanderovich, A.; Shamai, S.; Steinberg, Y.; Kramer, G. Communication via Decentralized Processing. IEEE Trans. Inf. Theory 2008, 54, 3008–3023.

2. Sanderovich, A.; Shamai, S.; Steinberg, Y. On Upper Bounds for Decentralized MIMO Receiver. In Proceedings of the 2007 IEEE International Symposium on Information Theory, Nice, France, 24–29 June 2007; pp. 1056–1060.

3. Chapman, C.; Margetts, A.R.; Bliss, D.W. Bounds on the Information Capacity of a Broadcast Channel with Quantizing Receivers. In Proceedings of the IEEE 2015 49th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 8–11 November 2015; pp. 281–285.

4. Kramer, G.; Gastpar, M.; Gupta, P. Cooperative Strategies and Capacity Theorems for Relay Networks. IEEE Trans. Inf. Theory 2005, 51, 3037–3063.

5. Bliss, D.W.; Kraut, S.; Agaskar, A. Transmit and Receive Space-Time-Frequency Adaptive Processing for Cooperative Distributed MIMO Communications. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 5221–5224.

6. Quitin, F.; Irish, A.; Madhow, U. Distributed Receive Beamforming: A Scalable Architecture and Its Proof of Concept. In Proceedings of the IEEE 77th Vehicular Technology Conference (VTC Spring), Dresden, Germany, 2–5 June 2013; pp. 1–5.

7. Vagenas, E.D.; Karadimas, P.; Kotsopoulos, S.A. Ergodic Capacity for the SIMO Nakagami-m Channel. EURASIP J. Wirel. Commun. Netw. 2009, 2009, 802067.

8. Love, D.J.; Choi, J.; Bidigare, P. Receive Spatial Coding for Distributed Diversity. In Proceedings of the 2013 Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 3–6 November 2013; pp. 602–606.

9. Choi, J.; Love, D.J.; Bidigare, P. Coded Distributed Diversity: A Novel Distributed Reception Technique for Wireless Communication Systems. IEEE Trans. Signal Process. 2015, 63, 1310–1321.

10. Brown, D.R.; Ni, M.; Madhow, U.; Bidigare, P. Distributed Reception with Coarsely-Quantized Observation Exchanges. In Proceedings of the 2013 47th Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 20–22 March 2013; pp. 1–6.

11. Berger, T.; Zhang, Z.; Viswanathan, H. The CEO Problem [Multiterminal Source Coding]. IEEE Trans. Inf. Theory 1996, 42, 887–902.

12. Tavildar, S.; Viswanath, P.; Wagner, A.B. The Gaussian Many-Help-One Distributed Source Coding Problem. IEEE Trans. Inf. Theory 2010, 56, 564–581.

13. Yedla, A.; Pfister, H.D.; Narayanan, K.R. Code Design for the Noisy Slepian-Wolf Problem. IEEE Trans. Commun. 2013, 61, 2535–2545.

14. Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley & Sons: Hoboken, NJ, USA, 2012.

15. Shannon, C.E. A Mathematical Theory of Communication. ACM SIGMOBILE Mob. Comput. Commun. Rev. 2001, 5, 3–55.

16. Telatar, I.E. Capacity of Multi-Antenna Gaussian Channels. Eur. Trans. Telecommun. 1999, 10, 585–595.

17. El Gamal, A.; Kim, Y.-H. Network Information Theory; Cambridge University Press: Cambridge, UK, 2011.

18. Weingarten, H.; Steinberg, Y.; Shamai, S. The Capacity Region of the Gaussian Multiple-Input Multiple-Output Broadcast Channel. IEEE Trans. Inf. Theory 2006, 52, 3936–3964.

19. Yu, W.; Cioffi, J.M. Sum Capacity of Gaussian Vector Broadcast Channels. IEEE Trans. Inf. Theory 2004, 50, 1875–1892.

20. Zamir, R.; Feder, M. On Lattice Quantization Noise. IEEE Trans. Inf. Theory 1996, 42, 1152–1159.

21. Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

22. Slepian, D.; Wolf, J.K. Noiseless Coding of Correlated Information Sources. IEEE Trans. Inf. Theory 1973, 19, 471–480.

23. Costa, M. Writing on Dirty Paper (Corresp.). IEEE Trans. Inf. Theory 1983, 29, 439–441.

24. Goldsmith, A. Wireless Communications; Cambridge University Press: Cambridge, UK, 2005.

25. Bliss, D.W.; Govindasamy, S. Adaptive Wireless Communications: MIMO Channels and Networks; Cambridge University Press: Cambridge, UK, 2013.

26. Jones, E.; Oliphant, T.; Peterson, P. SciPy: Open Source Scientific Tools for Python. 2001. Available online: http://www.scipy.org/ (accessed on 10 September 2017).

27. Diggavi, S.N.; Cover, T.M. The Worst Additive Noise Under a Covariance Constraint. IEEE Trans. Inf. Theory 2001, 47, 3072–3081.

28. Ihara, S. On the Capacity of Channels with Additive Non-Gaussian Noise. Inf. Control 1978, 37, 34–39.

29. Zamir, R.; Feder, M. On Universal Quantization by Randomized Uniform/Lattice Quantizers. IEEE Trans. Inf. Theory 1992, 38, 428–436.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).