Computer Vision Based Smart Sensing

A topical collection in Sensors (ISSN 1424-8220). This collection belongs to the section "Sensing and Imaging".

Topical Collection Information

Dear Colleagues,

Sensing of visual information with video cameras has become an almost ubiquitous element of our environment. Internet connectivity and the massive adoption of the Internet of Things (IoT) have contributed to this in a very important way. The number of deployed video cameras able to capture information both inside and outside the visible range (IR, UV, or other bands) has grown very significantly in recent years, with figures ranging from 50 to 150 surveillance cameras per 1000 inhabitants in the largest cities of Asia, Europe, and America. Moreover, these figures count only fixed surveillance cameras (on streets, roads, and buildings). If mobile and wearable devices incorporating cameras are also taken into account, the possibilities for capturing visual information are practically unlimited.

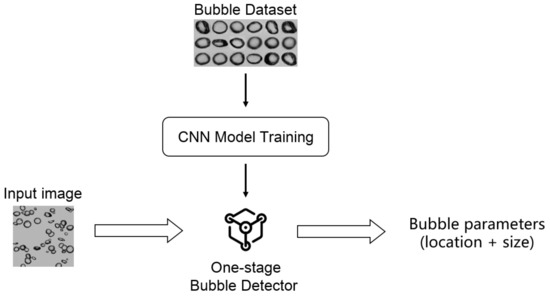

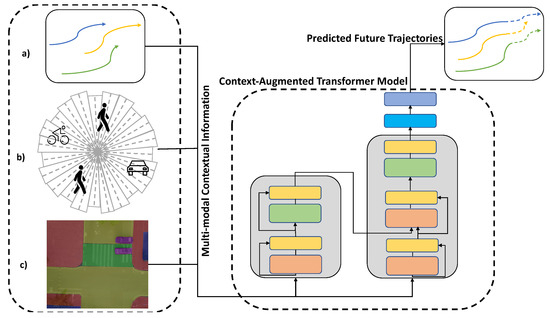

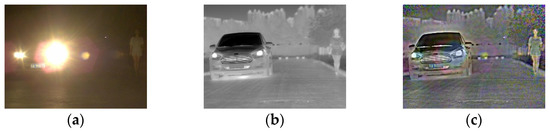

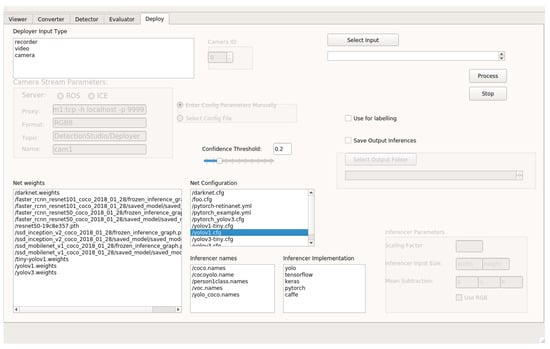

To process such a large volume of information, it is necessary to develop computer vision applications for the different areas in which they are usually deployed: security, health, multimodal transport, accessibility for people with disabilities of any kind, cultural heritage preservation, etc. The capacity for smart sensing with cameras has grown significantly with the application of deep learning strategies to images and video sequences. These applications usually have a high computational cost, which is encouraging the emergence of smart processing strategies: on the edge near the point of capture, distributed in the cloud, etc. To make these applications robust, it is usually necessary to provide them with certain self-calibration capabilities, resilience, or even the ability to fuse visual information with data from other sensors, such as radar or LiDAR.

This Topical Collection aims to address the open research challenges and unsolved problems related to computer-vision-based smart sensing applications in different domains, making use of IoT-connected, camera-acquired information (inside or outside the visible range), either on its own or fused with data from other sensing devices, such as LiDAR or radar, in a monocular or a multicamera configuration.

Dr. Jose Manuel Menéndez García

Collection Editor

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, go to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 250 words) can be sent to the Editorial Office for assessment.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for the submission of manuscripts are available on the Instructions for Authors page. Sensors is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- smart computer vision/IR/UV-based sensing

- edge processing/distributed processing in the IoT

- camera fusion with other devices

- single-camera/multicamera/multidevice resilience

- application of smart vision in different domains