Abstract

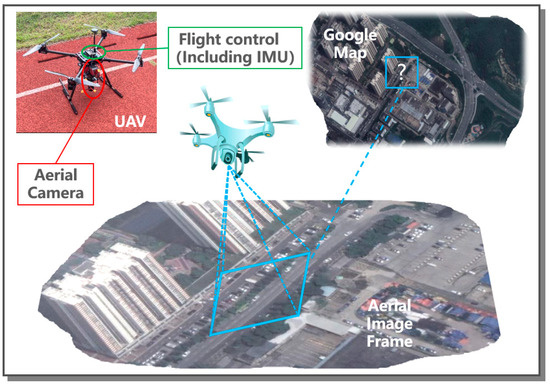

The employment of unmanned aerial vehicles (UAVs) has greatly facilitated human life. Owing to the mass manufacturing of consumer UAVs and the support of related scientific research, UAVs are now used in light shows, jungle search-and-rescue, topographic mapping, disaster monitoring, and sports event broadcasting, among many other fields. Some applications impose stricter requirements on the autonomous positioning capability of UAV clusters, requiring their positioning precision to be within the cognitive range of a human or machine. The Global Navigation Satellite System (GNSS) is currently the only method that can be applied directly and consistently to UAV positioning. Even with dependable GNSS, large-scale drone clusters may fail, causing drones in the cluster to crash. As a passive sensor, the visual sensor offers compact size, low cost, rich information, strong positioning autonomy and reliability, and high positioning accuracy, which makes vision-based autonomous navigation well suited to drone swarms. Applying vision sensors to cooperative multi-UAV tasks can effectively avoid the navigation interruptions or precision deficiencies caused by factors such as field-of-view obstruction or flight height limitations of a single UAV's sensors, and can achieve large-area group positioning and navigation in complex environments. This paper reviews collaborative visual positioning among multiple UAVs, covering UAV autonomous positioning and navigation, distributed collaborative measurement fusion under cluster dynamic topology, group navigation based on active behavior control, and distributed fusion of multi-source dynamic sensing information. The limitations of current research are compared and assessed, and the most pressing issues for future work are identified and discussed.
Through this analysis and discussion, we conclude that the combined use of the aforementioned methods helps enhance the cooperative positioning and navigation capabilities of multiple UAVs under GNSS denial.

1. Introduction

UAVs have low production costs [1], long endurance [2], good concealment [3], high survivability [4], no risk of crew casualties [5], simple takeoff and landing [6], good autonomy [7], versatility [8], and convenience [9]. They are suitable for performing demanding tasks in dangerous environments [5,10] and have enormous potential for military and civilian use. In the military [11], UAVs may be used for early air warning, reconnaissance and surveillance, communication relay, target attack, electronic countermeasures, and intelligence acquisition, among other tasks; in civilian applications [12], UAVs may be used for meteorological observation, terrain survey, urban environment monitoring, artificial rainfall, forest fire warning, smart agriculture [13], and aerial photography. As the number of UAV applications increases, the performance requirements for UAVs, namely their control and positioning performance [14], become more stringent. With existing positioning and navigation techniques, UAVs still struggle to operate in dynamic environments [15].

At present, UAV positioning and navigation rely heavily on location data provided by GNSS [16]; however, GNSS signals are extremely weak and susceptible to interference [17]. In heavily occluded outdoor environments and indoors, GNSS cannot work reliably or deliver steady velocity and position data, which prevents the drone from flying correctly. This has led to the development of new solutions that complement or replace satellite navigation in GNSS-denied settings [17]. The vision sensor is a passive sensor that uses ambient light to capture information about the surrounding environment as images. It offers small size, low cost, rich information, strong positioning autonomy and reliability, and high positioning precision [18]. In autonomous visual positioning and navigation of UAVs, features are usually extracted and matched, or pixel brightness values are used directly, to determine pose changes. Hence, in most environments with sufficient illumination, vision-based positioning can be a useful complement to GNSS navigation.

With the growing number of drone swarm and cluster applications, how to use visual navigation to enhance the autonomous navigation capabilities of clusters has become a crucial question [18,19]. In complex environments, multi-UAV collaboration can effectively prevent navigation interruptions or insufficient precision caused by obstructions of a single drone's sensor view or by flight height restrictions, and can enable cooperative positioning and navigation over large areas [19,20]. Multi-UAV cooperation offers the following benefits over a single drone: (1) it improves visibility and data utilization through the sharing of spatial measurement information; (2) in large areas, it reduces task completion time through parallel execution, thus improving efficiency; (3) it improves positioning by increasing the UAVs' visibility to one another; (4) it increases the probability of successful positioning through coordinated assignment.

This paper is organized as follows. Section 2 provides an overview of absolute visual autonomous positioning and navigation for UAVs, followed by a discussion of matching-based positioning with a prior map, cross-view matching, and the application of visual odometry to absolute positioning. Section 3 briefly presents the difficulties and significance of distributed collaborative measurement fusion in UAV cluster applications with highly dynamic topology. Section 4 then describes how group navigation technology based on active behavior control can provide rich fusion information for UAV cluster research and implementation. Section 5 discusses and examines the main approaches to distributed fusion of multi-source dynamic perception data. Section 6 presents the unresolved challenges in existing research and proposes directions for future work. Finally, Section 7 summarizes the conclusions.

3. Distributed Collaborative Measurement Fusion under Cluster Dynamic Topology

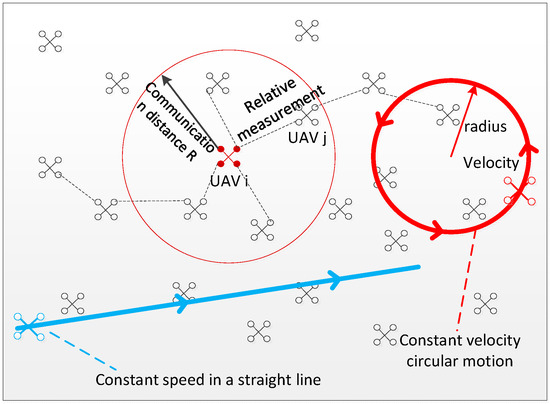

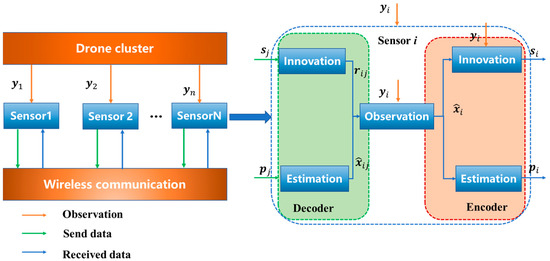

State estimation is a core technology in sensor network applications. To meet the practical requirements of many applications, all nodes, or a subset of nodes, in a distributed sensor network must produce accurate and consistent estimates and predictions of the state of the target of interest. This gives every sensor a unified and clear picture of the situation, which improves the success probability and efficiency of network tasks in a dynamically changing monitoring environment. Consensus-based estimation, in which nodes cooperate to reach a consistent estimate of the target state, is an efficient estimation fusion method for distributed sensor networks. The classic Kalman consensus filter (KCF) [113] was proposed in 2007 to solve the problem of consistent state estimation over a network topology. The KCF uses average consensus to combine the state estimates of neighboring nodes, and the estimates of all nodes share the same consensus rate factor in the summation. In 2014, [114] proposed a square-root cubature Kalman information filter to solve the consensus state estimation problem for nonlinear systems, substantially improving estimation accuracy. In 2015, [115] introduced a distributed steady-state filter whose structure consists of a measurement update term from adjacent nodes and a consensus term on the state estimates, which turns the computation of the filter gain into a convex optimization problem.
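The consensus term that the KCF adds to each node's update can be illustrated on its own. The minimal Python sketch below runs discrete-time average consensus on scalar estimates over an undirected graph. It assumes a step size `epsilon` smaller than 1/(maximum node degree), which guarantees convergence to the network average on a connected graph. The sketch deliberately omits the Kalman prediction and measurement update, so it illustrates the averaging mechanism only and is not the KCF of [113]. The function name and parameters are hypothetical.

```python
def average_consensus(values, neighbors, epsilon, iterations):
    """Discrete-time average consensus: x_i <- x_i + epsilon * sum_{j in N_i} (x_j - x_i).

    values:    initial scalar estimates, one per node
    neighbors: neighbors[i] lists the nodes adjacent to node i (undirected graph assumed)
    epsilon:   consensus step size (assumed < 1 / max degree for convergence)
    """
    x = list(values)
    for _ in range(iterations):
        # Synchronous update: every node uses the estimates from the previous iteration.
        x = [xi + epsilon * sum(x[j] - xi for j in neighbors[i]) for i, xi in enumerate(x)]
    return x
```

Consider a four-node path graph `{0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}` with `epsilon=0.3`. The values `[1, 2, 3, 10]` approach 4.0, their average, because each symmetric update conserves the sum of the estimates.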
In 2016, [116] proposed a recursive information consensus filter for decentralized dynamic state estimation but did not take the topology of the communication network into account. Another study [117] proposed an unscented Kalman filter algorithm based on weighted average consensus and proved a lower bound on the estimation error. In 2017, [118] analyzed nonlinear state estimation with unknown measurement noise statistics and proposed a variational Bayesian consensus cubature filtering method. Another study [119] developed a network channel model suitable for dense deployments and introduced a new class of distributed weighted consensus strategies that enable distributed learning from local observations to achieve network positioning. Reference [120] studied consensus-based distributed estimation of linear time-invariant systems over sensor networks and proposed a novel encoding-decoding scheme (EDS), shown in Figure 4. The scheme consists of two pairs of encoders/decoders, namely innovation encoders/decoders and estimation encoders/decoders, which compress the data on each sensor to suit bandwidth-limited networks. A consensus estimator is then designed on the basis of the EDS. Necessary and sufficient conditions for the convergence of the estimation error dynamics are established, together with bounds on the size of the transmitted data. Three optimization algorithms are presented: one achieves the fastest convergence of the error dynamics, one minimizes the estimator gain, and one trades off convergence speed against estimation error.

Figure 4.

Schematic diagram of dynamic encoding and decoding based on observation.

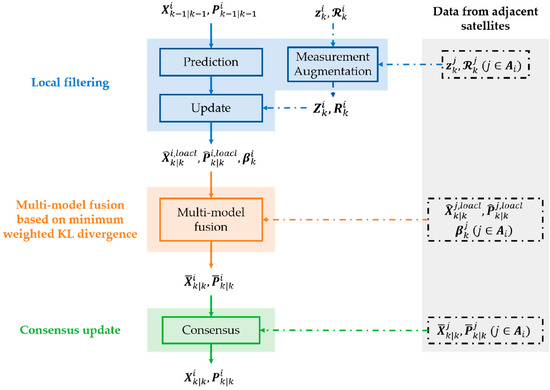

The authors of [121] analyzed the consensus state estimation framework of distributed sensor networks and proposed a four-level functional model. They described the main process of consensus state estimation from the perspectives of information processing, interaction, and fusion, and designed an adaptive weight allocation method based on dynamic topology information. On this basis, they presented an adaptive weighted Kalman consensus filter (AW-KCF) algorithm. The authors of [122] proposed a fast distributed multi-model (FDMM) nonlinear estimation approach for satellites to improve tracking stability and accuracy while reducing the computational burden. This algorithm employs a novel architecture for distributed multi-model fusion, as shown in Figure 5. First, each satellite performs local filtering based on its own model and computes, for each local estimate, a corresponding fusion factor derived from the Wasserstein distance. Then, each satellite fuses the received estimates across models based on the minimum weighted Kullback–Leibler divergence. Finally, each satellite updates its state estimate according to the consensus protocol.
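The two fusion ingredients named for FDMM can be sketched for scalar Gaussian estimates. The closed-form 2-Wasserstein distance between 1-D Gaussians is exact. The rule that turns distances into fusion factors (the inverse of each estimate's summed distance to the others) is a hypothetical choice for illustration, not the rule of [122]. The fusion step uses the weighted Kullback-Leibler average, whose minimizer for Gaussians is the information-weighted convex combination of the inputs. All function names are assumptions.

```python
import math


def wasserstein2_gauss1d(m1, v1, m2, v2):
    """Closed-form 2-Wasserstein distance between N(m1, v1) and N(m2, v2) in 1-D (v = variance)."""
    return math.sqrt((m1 - m2) ** 2 + (math.sqrt(v1) - math.sqrt(v2)) ** 2)


def fusion_factors(estimates):
    """HYPOTHETICAL weighting rule: estimates far (in W2) from all others get small weights."""
    scores = []
    for i, (mi, vi) in enumerate(estimates):
        spread = sum(
            wasserstein2_gauss1d(mi, vi, mj, vj)
            for j, (mj, vj) in enumerate(estimates)
            if j != i
        )
        scores.append(1.0 / (spread + 1e-9))  # small constant avoids division by zero
    total = sum(scores)
    return [s / total for s in scores]


def kl_average(estimates, weights):
    """Weighted KL average of 1-D Gaussians: the minimizer of sum_i w_i KL(p || p_i).

    For Gaussians this is the information-weighted convex combination of the inputs.
    """
    info = sum(w / v for (_, v), w in zip(estimates, weights))
    info_mean = sum(w * m / v for (m, v), w in zip(estimates, weights))
    return info_mean / info, 1.0 / info
```

With estimates `(0, 1)`, `(0.2, 1)`, and `(10, 1)`, the outlying third estimate receives the smallest factor. The factors can then be passed to `kl_average` as weights.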

Figure 5.

FDMM-based distributed fusion framework [122].

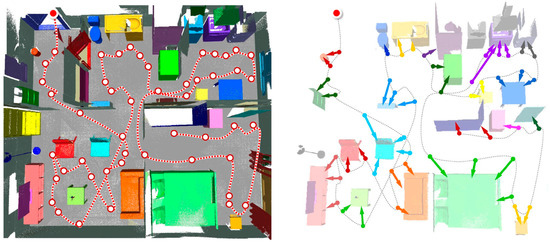

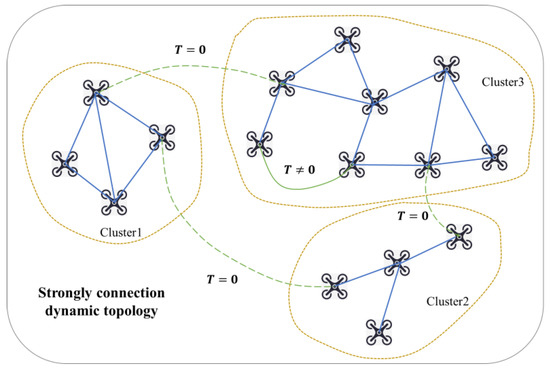

In [123], the estimation problem is decomposed into small local sub-problems that adapt to the time-varying communication topology through the self-organization of the local estimation nodes; the topological structure is shown in Figure 6. Depending on how local sensor nodes communicate with their neighbors, the existing literature proposes four typical distributed fusion strategies: sequential fusion, the consensus protocol, the gossip protocol, and diffusion fusion. In sequential fusion, sensors communicate in sequence, and estimates are fused pairwise, one pair after another. This strategy is simple and straightforward, but it requires the topology to be connected in sequence and each node to be able to observe the target. In consensus protocol fusion, each sensor node communicates iteratively with all of its connected neighbors and fuses states by weighted average consensus. This approach converges globally and applies broadly to general topologies; its drawback is that many rounds of communication are needed to converge to the global optimum. In gossip protocol fusion, each sensor node iteratively communicates with one of its connected neighbors, chosen randomly or deterministically, and state fusion is again based on weighted average consensus. It can also converge globally and applies to general topologies, but it requires a very large (ideally infinite) number of iterations. In diffusion fusion, each sensor node communicates once with all connected neighbors and fuses the local estimates through a weighted convex combination. Diffusion fusion is fully distributed, has a low communication load, and imposes no topology restrictions; however, global convergence is not guaranteed [124]. A recent application of distributed estimation is shown in Figure 7.
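Two of the four strategies can be contrasted in a few lines. The sketch below assumes scalar estimates and an undirected graph. It implements randomized pairwise gossip averaging, in which one random edge is chosen per round and both endpoints are replaced by their mean. It also implements a single diffusion combination step with uniform convex weights over each node's closed neighborhood. The uniform weights, the fixed random seed, and the function names are illustrative assumptions; practical diffusion strategies interleave such combination steps with local adaptation or filtering.

```python
import random


def gossip_average(values, edges, rounds, seed=0):
    """Randomized gossip: each round one random edge (i, j) sets both values to their mean."""
    rng = random.Random(seed)  # fixed seed for reproducibility (assumption)
    x = list(values)
    for _ in range(rounds):
        i, j = rng.choice(edges)
        x[i] = x[j] = 0.5 * (x[i] + x[j])  # pairwise averaging preserves the network sum
    return x


def diffusion_combine(estimates, neighbors):
    """One diffusion combination step: uniform convex weights over each node and its neighbors."""
    fused = []
    for i, xi in enumerate(estimates):
        group = [xi] + [estimates[j] for j in neighbors[i]]
        fused.append(sum(group) / len(group))
    return fused
```

Gossip exchanges one message pair per round and preserves the network sum, so it reaches the average only after many rounds. The diffusion step needs a single exchange with all neighbors but does not, by itself, drive all nodes to a common value.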

Figure 6.

Schematic diagram of dynamic topology.

Figure 7.

Distributed estimation application: cooperative tracking by a heterogeneous autonomous robot cluster [124].

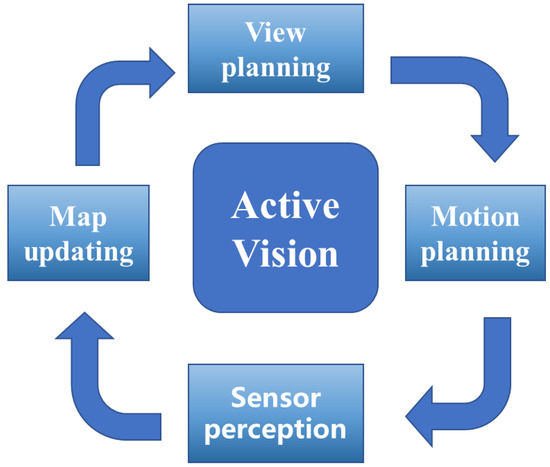

Traditional measurement is passive: an agent simply receives the geometry, color, or texture information of other agents within its field of view or measurement range. Such passive measurement or detection of other agents has so far not supported unified cluster planning and control. Comprehensive application of group intelligent positioning and navigation therefore requires group navigation technology based on active behavior control, which Section 4 introduces.

5. Distributed Fusion of Multi-Source Dynamic Sensing Information

In distributed cooperative positioning and navigation of clustered UAVs, fusing the data collected by multiple UAVs can enhance the positioning accuracy of each individual UAV; research on multi-sensor data fusion is therefore critical. Multi-sensor data fusion combines data from many sensors with model-based predictions to generate more meaningful and accurate state estimates. It is currently used extensively in process control and autonomous navigation. Although centralized fusion can produce optimal solutions in theory, it does not scale with the number of nodes: as the number of nodes grows, processing all sensor measurements at a single terminal may become infeasible because of communication limits and reliability requirements. In the distributed fusion architecture [175], multi-source measurement data are processed independently at each node, and local estimates are computed before being sent to the fusion node. Distributed fusion is therefore robust to system failures and has low communication costs.

Nonetheless, distributed fusion must account for the correlation between local estimates. Because of redundant computation or shared prior information or data sources, local estimates may be correlated; moreover, the observations of distributed sensors often have a clear physical relationship with one another [176]. In a centralized architecture, where the independence assumption holds, the Kalman filter (KF) [177] computes the optimal estimate in the minimum mean square error sense. In distributed structures, where the independence assumption does not hold, filtering that ignores cross-correlation may diverge because the fused mean and covariance no longer match. Estimating the cross-correlation between data sources remains challenging, particularly in a distributed fusion architecture. Even when all cross-correlations are available, fusion may be too expensive for large distributed sensor networks. However, fusing consistently without cross-correlation information yields a more conservative fused mean and covariance.
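The danger of ignoring cross-correlation can be seen with the information-form fusion rule, which is optimal only for independent estimates. In the hypothetical Python sketch below, an estimate returns to its origin through a communication cycle and is fused with itself as if it were new evidence. The mean is unchanged, but the variance halves every time, so the filter becomes overconfident without receiving any new information. The function names are illustrative.

```python
def naive_fuse(x1, p1, x2, p2):
    """Fuse two scalar estimates ASSUMING independence (information-form combination)."""
    p = 1.0 / (1.0 / p1 + 1.0 / p2)
    x = p * (x1 / p1 + x2 / p2)
    return x, p


def echo_loop(x, p, rounds):
    """Hypothetical cycle: the same estimate comes back and is re-fused as if it were new data."""
    for _ in range(rounds):
        x, p = naive_fuse(x, p, x, p)
    return x, p
```

For example, `echo_loop(5.0, 4.0, 3)` keeps the mean at 5.0 while shrinking the variance from 4.0 to 0.5, even though no new measurement was used.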

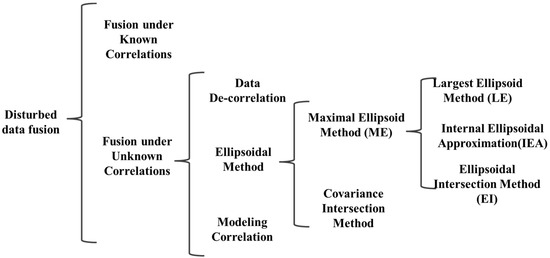

Methods for the fusion problem with unknown correlation in distributed architectures fall into three categories according to how they process the data: (1) Data de-correlation: the input data sources are de-correlated before fusion by measurement reconstruction [178] or by directly eliminating double counting [179,180]; (2) Modeling correlation: fusion solutions are obtained by modeling the unknown correlation information [181,182,183,184]; (3) Ellipsoid method (EM): under the assumption of bounded cross-correlation, a suboptimal but consistent fusion solution is obtained by approximating the intersection of the covariance ellipsoids of several data sources, without requiring cross-correlation information.

A second concern in sensor fusion is that sensors commonly produce unpredictable or incorrectly modeled data. Sensors may provide inconsistent or erroneous data for a variety of reasons, including sensor failure, sensor noise, and gradual degradation caused by component failure [185,186,187]. Fusing anomalous sensor data with reliable data can produce severely erroneous results [188]. Inconsistencies must therefore be detected and removed before fusion in a distributed architecture. Methods for multi-sensor data fusion with inconsistent or erroneous sensor data can be roughly divided into three groups: (1) Model-based methods: sensor data are compared with reference values, which can be obtained from mathematical models, to identify and eliminate inconsistencies [189,190]; (2) Redundancy-based methods: multiple sensors provide estimates of the observed quantities, and inconsistent estimates are identified and eliminated by consistency checking and majority voting [185]; (3) Fusion-based methods: the fused covariance is enlarged to enclose all the local means and covariances, so that the fused estimate remains consistent in the presence of spurious data [191,192].
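The fusion-based idea in item (3), enlarging the fused covariance until it encloses every local estimate, can be illustrated with a scalar version of covariance union. Covariance union is named here for illustration only; [191,192] may use different formulations. Assuming 1-D estimates given as (mean, variance) pairs, the sketch finds the mean u that minimizes U = max_i [P_i + (u - x_i)^2]. The resulting U bounds every local variance plus the squared offset of its mean. Ternary search is valid here because the objective is convex in u. The function name is hypothetical.

```python
def covariance_union_1d(estimates, iters=200):
    """Scalar covariance union: mean u and variance U with U >= P_i + (u - x_i)^2 for every i.

    estimates: list of (mean, variance) pairs (1-D assumption).
    """
    def bound(u):
        # Smallest variance that still encloses every local estimate for a fused mean u.
        return max(p + (u - x) ** 2 for x, p in estimates)

    # The minimizer lies between the smallest and largest local means.
    lo = min(x for x, _ in estimates)
    hi = max(x for x, _ in estimates)
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if bound(m1) <= bound(m2):
            hi = m2
        else:
            lo = m1
    u = 0.5 * (lo + hi)
    return u, bound(u)
```

Consider two conflicting estimates, (0, 1) and (4, 1). The result is u = 2 and U = 5: the fused variance grows to cover the disagreement instead of shrinking as naive fusion would.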

Distributed sensor networks cannot match the estimation quality of centralized systems, but they are more adaptable and fault tolerant. In distributed architectures, local sensor estimates may be correlated because observations from distributed sensors can be affected by the same process noise. Local estimates may also become correlated through double counting. Distributed fusion algorithms should therefore account for cross-correlations to maintain optimality and consistency.

5.1. Fusion under Known Correlations

In distributed estimation, conditional independence of the estimates is a simplifying assumption. However, neglecting cross-correlations in distributed structures can make the local outputs inconsistent, which in turn makes the fusion results inconsistent. When cross-correlations are known, several approaches can incorporate them into state estimation and fusion. One study [193] presented a single unified fusion rule that applies to centralized, distributed, and hybrid fusion architectures with complete prior knowledge. The authors of [194] proposed a fusion approach for discrete multi-rate independent systems based on multi-scale theory, assuming that the sampling ratio between local estimates is a positive integer. Distributed fusion estimation of asynchronous systems with correlated noise is studied in [195,196,197].
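The classic two-track fusion rule of Bar-Shalom and Campo is a concrete example of fusing with a known cross-correlation. For scalar estimates with cross-covariance P12, it gives x = x1 + (P1 - P12)/(P1 + P2 - 2 P12) (x2 - x1) and P = P1 - (P1 - P12)^2/(P1 + P2 - 2 P12). This standard textbook formula is used here for illustration and is not the unified fusion rule of [193]. The function name is hypothetical.

```python
def fuse_known_cross_covariance(x1, p1, x2, p2, p12):
    """Bar-Shalom-Campo fusion of two scalar estimates with known cross-covariance p12."""
    denom = p1 + p2 - 2.0 * p12  # variance of the difference x2 - x1
    if denom <= 1e-12:
        return x1, p1  # the two estimates are (numerically) identical; nothing to fuse
    gain = (p1 - p12) / denom
    x = x1 + gain * (x2 - x1)
    p = p1 - (p1 - p12) ** 2 / denom
    return x, p
```

Take P1 = P2 = 1, x1 = 0, and x2 = 2. The fused variance is 0.5 when P12 = 0 but 0.75 when P12 = 0.5. Accounting for the positive correlation gives a smaller but honest improvement.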

Other researchers have investigated learning-based multi-sensor data fusion methods [198,199,200,201,202,203]. The multi-feature fusion method proposed in [200] is applied to visual recognition in multimedia applications. The authors of [201] presented a neural network-based framework for fusing multi-rate, multi-sensor linear systems. The framework transforms a multi-rate multi-sensor system into a single-rate multi-sensor system at the highest sampling rate and fuses the local estimates efficiently using neural networks. In [204,205], neural network-based multi-sensor data fusion was compared with traditional methods and shown to offer superior fusion performance. Nevertheless, learning-based strategies are limited by the large amount of training data they require.

In a centralized architecture, the KF or information filter (IF) is optimal because the independence assumption holds. In a distributed fusion architecture, optimality can be achieved by computing and incorporating the exact cross-correlations. In addition, the fusion methods above can be used individually or in combination, depending on the fusion structure and practical constraints, to address complex fusion problems.

5.2. Fusion under Unknown Correlation

There are numerous sources of correlation in a distributed architecture that affect state estimation and fusion. When cross-correlation is ignored, the fused outputs become overconfident, and the fusion method may diverge. Because of double counting and the lack of knowledge of internal parameters, it is difficult to estimate cross-correlations accurately in large-scale distributed sensor networks. Properly managing and maintaining cross-correlations is complex and expensive, and the cost grows quadratically with the number of updates. Suboptimal strategies are therefore commonly used to obtain a fusion solution from multiple data sources without knowing the true cross-correlation. Figure 13 shows the classification of distributed fusion methods under unknown correlation.

Figure 13.

Distributed fusion classification with unknown correlation.

5.2.1. Data De-Correlation

Cross-correlation of data is prevalent in distributed architectures. When the same data reach the fusion node through different or cyclic paths, they are double counted. The authors of [179,180] propose eliminating correlation by removing duplicate counts. The idea is to extract the external measurements contained in other sensor nodes' state estimates, store them, and use them to update the local state estimate, so that double-counted data are removed before fusion. This approach presupposes a specific network structure and eliminates only the dependencies caused by repeated computation. The authors of [206] propose a graph-theoretic algorithm that applies to any network topology with variable delays. Nevertheless, this approach is neither scalable nor practical for large sensor networks [207]. In measurement reconstruction [178], the system noise is artificially modified to eliminate the correlation between measurement sequences, and measurements are reconstructed at the fusion nodes from the local sensor estimates. This strategy has been used for tracking in cluttered environments [208], out-of-sequence filtering [209], and non-Gaussian distributions modeled by Gaussian mixtures [210]. To reconstruct the measurements correctly, however, additional information such as the Kalman gain, association weights, and sensor model information must be available [211,212]. Because de-correlation methods rely on empirical knowledge and specialized analysis of a particular real system, their fusion performance is compromised. Moreover, as the number of sensors grows, these methods become highly inefficient and impractical.
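The double-count removal idea is easiest to see in information form, where independent information simply adds. The sketch below works in the style of a channel filter, assuming the information shared by two nodes (here, a common prior) is known exactly. It subtracts that common information once during fusion, and the result matches a centralized filter that processes both measurements. The function names are hypothetical. On a real network, tracking the common information requires the topology assumptions discussed above.

```python
def to_information(mean, var):
    """Convert a 1-D Gaussian (mean, variance) to information form (y, Y) = (mean/var, 1/var)."""
    return mean / var, 1.0 / var


def from_information(y, info):
    """Convert information form back to (mean, variance)."""
    return y / info, 1.0 / info


def add_measurement(state, z, r):
    """Information-filter update with a direct measurement z of variance r."""
    y, info = state
    return y + z / r, info + 1.0 / r


def fuse_removing_common(a, b, common):
    """Fuse two information-form estimates, subtracting the shared (common) information once."""
    return a[0] + b[0] - common[0], a[1] + b[1] - common[1]
```

Suppose nodes A and B start from the same prior N(0, 4) and each add one measurement (2 and 4, variance 1). Subtracting the prior once reproduces the centralized result: mean 2.67, variance 0.44. Naive addition would count the prior twice and report a variance of 0.4.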

5.2.2. Modeling Correlation

Although accurate cross-correlations between local estimates are hard to obtain in distributed architectures, the properties of the joint covariance matrix (for example, positive semidefiniteness) constrain which cross-correlations are feasible. In addition, some applications provide prior knowledge of, and limits on, the degree of correlation, allowing one to infer whether local estimates are strongly or weakly related. The cross-correlation is not completely unknown, since the estimates provided by several sensors are neither completely independent nor completely correlated. Information about the unknown cross-correlation can therefore be used to improve the accuracy of fusion solutions under uncertain correlation.

Reference [182] derives a closed-form solution for scalar fusion and an approximate solution for vector fusion, assuming a uniformly distributed correlation coefficient. In [213], a tight upper bound on the joint covariance matrix is derived from the individual covariances and constrained correlation coefficients. On the basis of bounded correlation, a general bounded covariance inflation (BCINF) approach [214] with upper and lower cross-correlation bounds is proposed. The model proposed in [184] guarantees that the joint covariance matrix is positive semidefinite and is consistent with canonical correlation analysis of multivariate correlation [215]. In [184], a Cholesky decomposition model of the unknown cross-correlation was applied to the bounded covariance (BC) formulation, and a min-max optimization was used to iteratively estimate the fusion solution for the unknown cross-correlation. Conservative fusion solutions are also provided under the assumption that the correlation coefficients are uniformly distributed. Using the correlation model in the BC formulation, [181] analyzed the maximum bound of the unknown correlation in track-to-track fusion. Reference [216] studies the multi-sensor estimation problem under a norm-bounded cross-correlation assumption and minimizes the worst-case fusion mean square error (MSE) over all feasible cross-covariances. To exploit partial prior knowledge of the cross-covariance, [217] proposed a tolerance model for the cross-covariance. Based on this model, semidefinite programming (SDP) was used to develop an optimal fusion strategy that minimizes the worst-case fusion MSE.
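The min-max idea behind bounded-correlation fusion can be illustrated in the scalar case. Assume two unbiased estimates with variances P1 and P2, whose correlation coefficient is known only to lie in [rho_lo, rho_hi]. The sketch chooses the convex weight w of x = (1 - w) x1 + w x2 that minimizes the worst-case fused variance. It is a simplified illustration, not the SDP formulation of [216,217], and the function names are hypothetical. Because the variance is linear in rho, the worst case always occurs at an interval endpoint. Because each endpoint variance is convex in w, ternary search applies.

```python
import math


def fused_variance(w, p1, p2, rho):
    """Variance of (1 - w) * x1 + w * x2 when the correlation coefficient of x1, x2 is rho."""
    return (1 - w) ** 2 * p1 + w ** 2 * p2 + 2 * w * (1 - w) * rho * math.sqrt(p1 * p2)


def minmax_weight(p1, p2, rho_lo, rho_hi, iters=200):
    """Choose w in [0, 1] minimizing the worst-case fused variance over rho in [rho_lo, rho_hi]."""
    def worst(w):
        # Linear in rho, so the maximum is attained at one of the interval endpoints.
        return max(fused_variance(w, p1, p2, rho_lo), fused_variance(w, p1, p2, rho_hi))

    lo, hi = 0.0, 1.0
    for _ in range(iters):
        a = lo + (hi - lo) / 3.0
        b = hi - (hi - lo) / 3.0
        if worst(a) <= worst(b):
            hi = b
        else:
            lo = a
    w = 0.5 * (lo + hi)
    return w, worst(w)
```

Take P1 = P2 = 1. If the correlation is fully unknown (rho in [-1, 1]), the worst-case variance is 1, no better than either input. Knowing that rho lies in [0, 0.5] allows w = 0.5 and a guaranteed variance of 0.75. This shows how prior bounds on the correlation improve accuracy.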

5.2.3. Ellipsoidal Method

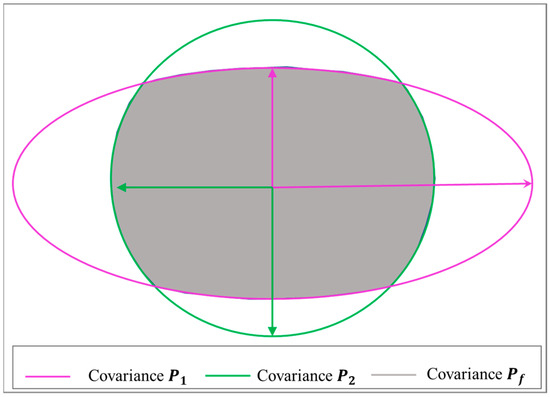

The possible cross-covariances between data sources are bounded [176,218,219], which confines the possible fused covariances to bounded sets. As shown in Figure 14, for each admissible cross-covariance the fused covariance lies within the intersection of the covariance ellipsoids of the data sources. The ellipsoid method (EM) estimates the fusion result by approximating the intersection region of several ellipsoids. Variants of EM include the covariance intersection method (CI) [219], the largest ellipsoid method (LE) [220], the inner ellipsoid approximation method (IEA) [221], and the ellipsoidal intersection method (EI). LE, IEA, and EI all seek the largest ellipsoid within the intersection region of the individual ellipsoids and are therefore collectively called maximum ellipsoid methods (ME).

Figure 14.

An example of fused covariances for data sources with a given correlation coefficient; the gray area represents all possible fused covariances.

CI approaches are used in many applications, including positioning [222,223,224], target tracking [225,226], simultaneous localization and mapping (SLAM) [227], image integration [228], NASA Mars rovers [229], and spacecraft state estimation [226]. To avoid the overly conservative covariance produced by CI, the largest ellipsoid approach [220] finds the largest ellipsoid within the intersection of two ellipsoids. The inner ellipsoid approximation method (IEA) [221,230,231] approximates the intersection region from the inside, complementing the LE method. The ellipsoidal intersection approach [232] solves the fusion problem under unknown correlation by separating the information common to two data sources from the information exclusive to each, and computes the fused mean and covariance from them.
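Covariance intersection itself fits in a few lines. For two estimates (x1, P1) and (x2, P2), CI forms P^-1 = w P1^-1 + (1 - w) P2^-1 and x = P (w P1^-1 x1 + (1 - w) P2^-1 x2) with w in [0, 1]. The result is consistent for any actual cross-correlation. The sketch below assumes 2-D estimates stored as plain nested lists and selects w by a simple grid search that minimizes det(P). Minimizing the trace is an equally common criterion, and the grid search stands in for a proper 1-D optimizer. Helper names are hypothetical.

```python
def inv2(m):
    """Inverse of a 2x2 matrix given as [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def covariance_intersection(x1, p1, x2, p2, steps=1000):
    """CI fusion of two 2-D estimates; omega chosen on a grid to minimize det(P) (assumption)."""
    i1, i2 = inv2(p1), inv2(p2)
    best = None
    for k in range(steps + 1):
        w = k / steps
        # Fused information matrix: convex combination of the input information matrices.
        info = [[w * i1[r][c] + (1 - w) * i2[r][c] for c in range(2)] for r in range(2)]
        p = inv2(info)
        d = det2(p)
        if best is None or d < best[0]:
            best = (d, w, p)
    _, w, p = best
    # Fused mean: x = P (w P1^-1 x1 + (1 - w) P2^-1 x2)
    y = [
        w * (i1[r][0] * x1[0] + i1[r][1] * x1[1])
        + (1 - w) * (i2[r][0] * x2[0] + i2[r][1] * x2[1])
        for r in range(2)
    ]
    x = [p[r][0] * y[0] + p[r][1] * y[1] for r in range(2)]
    return x, p, w
```

Consider two estimates that are each precise along a different axis, with P1 = diag(1, 4) and P2 = diag(4, 1). CI picks w = 0.5 and returns P = diag(1.6, 1.6). This is tighter than either input along its weak axis, yet no tighter than the better input along either axis.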

When the cross-correlation is uncertain, the choice of fusion method depends on the specific fusion problem. The data de-correlation method eliminates correlations before fusion estimation but is restricted to small network topologies. Accurate cross-correlations are preferable for achieving optimality in distributed fusion architectures, so any available prior knowledge of the degree of correlation can be used to improve estimation accuracy. CI methods can combine data with unknown correlations in a consistent manner, but their results tend to be conservative and therefore less precise. The EI approach can produce less conservative solutions.

6. Open Problems and Possible Future Research Directions

Open Problem 1: Model resolution improvement and similarity model establishment based on sparse features and invariant features.

Most existing surface feature modeling approaches focus on one or a few surface features readily observable in visible-light imagery, such as geometry, brightness, and color, and use only a minimal set of features. Research on invariant features is relatively limited and remains fairly elementary [233,234,235]. Deep feature information is not exploited, and the resolution of the surface feature model at different scales is not addressed. Whether fast retrieval and matching against a ground object model built at a single scale can cause mismatches and degrade the geometric accuracy of the final position solution requires further study. By applying feature invariance and invariant feature vector analysis, surface and deep features can be extracted continuously, and a multi-scale, highly available approach to constructing similarity feature models can be developed, with the fundamental goal of improving model resolution.

Open Problem 2: Multi-scale and multi-sensor adjustment and nonlinear optimization.

When UAVs position themselves cooperatively, they must share relative measurement data in real time, so each UAV must process an extremely large information vector. Traditional estimation and optimization methods, such as the Kalman filter, do not make full use of this large volume of information. A whole-network adjustment strategy should therefore be adopted, the resulting nonlinear optimization problem formulated and analyzed, and candidate optimization methods compared and combined [236,237]. The optimization theory for cooperative UAV positioning should be analyzed, the existing observation equations optimized and solved, and a theoretical foundation established for multi-scale, multi-sensor position and attitude fusion estimation.
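The nonlinear optimization at the core of network adjustment can be illustrated with its smallest instance: one UAV estimating its 2-D position from range measurements to neighbors whose positions are assumed known. The Gauss-Newton sketch below assumes noise-free, equally weighted ranges. A whole-network adjustment would instead estimate all UAV states jointly and weight each residual by its measurement covariance. The function name and stopping thresholds are hypothetical.

```python
import math


def gauss_newton_position(anchors, ranges, guess, iters=20):
    """Least-squares 2-D position from ranges to neighbors at known positions (Gauss-Newton)."""
    x, y = guess
    for _ in range(iters):
        # Normal equations (J^T J) delta = -J^T r, with residual r_i = ||p - a_i|| - d_i.
        h11 = h12 = h22 = g1 = g2 = 0.0
        for (ax, ay), d in zip(anchors, ranges):
            dist = math.hypot(x - ax, y - ay)
            if dist < 1e-12:
                continue  # Jacobian is undefined exactly at a neighbor's position
            jx, jy = (x - ax) / dist, (y - ay) / dist
            r = dist - d
            h11 += jx * jx
            h12 += jx * jy
            h22 += jy * jy
            g1 += jx * r
            g2 += jy * r
        det = h11 * h22 - h12 * h12
        if abs(det) < 1e-12:
            break  # degenerate geometry: position not determined by these ranges
        dx = -(h22 * g1 - h12 * g2) / det
        dy = -(h11 * g2 - h12 * g1) / det
        x, y = x + dx, y + dy
        if math.hypot(dx, dy) < 1e-12:
            break
    return x, y
```

Suppose the neighbors are at (0, 0), (10, 0), and (0, 10), and the exact ranges to a UAV at (3, 4) are supplied. Starting from (5, 5), the iteration recovers (3, 4) within a few steps.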

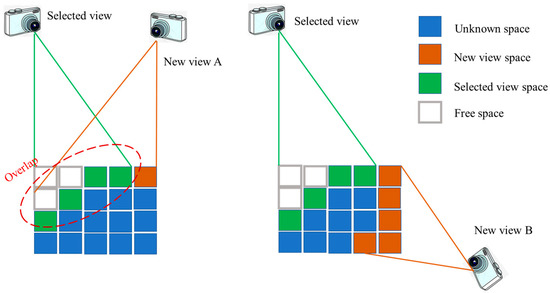

Open Problem 3: Observability of distributed cooperative measurement of UAVs.

A single UAV suffers navigation interruptions or insufficient accuracy due to factors such as sensor field-of-view occlusion or flight height limitations. Distributed cooperative positioning of a UAV cluster, by contrast, can achieve group positioning and navigation over a large area, thereby enhancing the observability of measurements [238,239]. Building on the available measurements, future work should address the fact that the number of UAVs observable in a single UAV's field of view changes over time. It should also establish a reasonable hierarchical cooperative positioning method for the cluster and exploit the inter-aircraft relative measurements broadcast in real time. Together, these steps can improve the global positioning accuracy of UAVs through joint whole-network adjustment and nonlinear optimization.
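Because the number of visible neighbors changes over time, a UAV needs a quick check of whether its current relative measurements still determine its position. One common local test, assumed here for illustration, is whether the information matrix J^T J for the range measurements has a strictly positive smallest eigenvalue. The sketch below assumes 2-D positions, unit-variance ranges, and neighbors that never coincide with the UAV's own position. The function names and the tolerance are hypothetical.

```python
import math


def range_information_matrix(position, neighbors):
    """Entries (h11, h12, h22) of J^T J for unit-variance ranges to each visible neighbor."""
    x, y = position
    h11 = h12 = h22 = 0.0
    for nx, ny in neighbors:
        d = math.hypot(x - nx, y - ny)  # assumed nonzero: neighbor is not at the UAV's position
        ux, uy = (x - nx) / d, (y - ny) / d  # unit line-of-sight vector (one Jacobian row)
        h11 += ux * ux
        h12 += ux * uy
        h22 += uy * uy
    return h11, h12, h22


def min_eigenvalue(h11, h12, h22):
    """Smallest eigenvalue of the symmetric 2x2 matrix [[h11, h12], [h12, h22]]."""
    tr = h11 + h22
    det = h11 * h22 - h12 * h12
    return 0.5 * (tr - math.sqrt(max(tr * tr - 4.0 * det, 0.0)))


def locally_observable(position, neighbors, tol=1e-9):
    """True if the ranges constrain every direction of the 2-D position (local test only)."""
    return min_eigenvalue(*range_information_matrix(position, neighbors)) > tol
```

A single neighbor leaves one direction unobservable, as do two neighbors on the same line through the UAV. Three non-collinear neighbors make the position locally observable.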

6.1. Research on Feature Extraction and Modeling of Key Features in Geographic Information

Existing feature extraction methods [240,241,242] face several challenges: poor generality and usability in large-scale environments and on low-resolution images, a lack of feature information, and complex modeling. First, the viewpoint changes of images captured at different heights, and the impact of these viewpoints on features, should be studied. The feature changes caused by time should also be analyzed, such as seasonal changes in vegetation, shadow angles, the perspective of ground objects, and the presence or absence of vehicles. The rich color and texture information of multi-frame aerial images captured by airborne cameras can then be exploited through spectral, spatial, and contextual cues. Semantic and auxiliary information are used to describe ground objects semantically, together with deep features obtained through deep learning. A feature-based extraction approach is then used to extract ground object features reliably in low-texture environments. After that, focusing on sparse feature descriptors, we examine feature invariance and the degree to which a feature depends on local texture. In a low-texture environment, the features of each module are reconstructed separately from the image data of the retrieved feature points.

Research on feature extraction and modeling of key features in geographic data falls into two parts: feature extraction and key-feature modeling. First, the edge contours of key features are extracted from the original remote sensing image using plane-expansion segmentation, and each contour is given a geometric and attribute description. These descriptions allow the influence of different factors to be considered thoroughly and the necessary factors to be specified as constraints on the contour geometry, separating the features (the figure) from the background. A ground-feature recognition method can then rapidly infer the semantic category of each ground feature from the extracted geometric and attribute descriptions. Then, after the main features have been extracted from the segmented image, the image data are divided into components by exploiting their connectivity with a module-connection segmentation method, and each module is reconstructed using segmentation-based contour extraction and topological data editing, or directly with a simple model function.
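The connectivity-based division into modules can be illustrated with a minimal sketch, assuming the segmentation step has already produced a binary mask. Here 4-connected flood-fill labelling stands in for the module-connection segmentation method, and an axis-aligned bounding box stands in for the "simple model" of each module; both substitutions and all names are hypothetical.

```python
from collections import deque


def label_components(mask):
    """Label 4-connected foreground regions of a binary mask.

    mask: list of rows of 0/1 values (1 = pixel inside a segmented feature).
    Returns (labels, count); labels is 0 for background and 1..count otherwise.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of one component
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not labels[ny][nx]:
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


def component_boxes(labels):
    """Bounding box (min_row, min_col, max_row, max_col) of every labelled component.

    Assumption: a box is used here as the simplest possible per-module model.
    """
    boxes = {}
    for r, row in enumerate(labels):
        for c, k in enumerate(row):
            if k:
                r0, c0, r1, c1 = boxes.get(k, (r, c, r, c))
                boxes[k] = (min(r0, r), min(c0, c), max(r1, r), max(c1, c))
    return boxes
```

In practice each labelled component would feed a richer reconstruction (contour tracing, topological editing), but the labelling step that splits the image into independent modules is the same.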

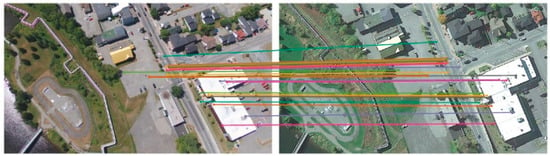

6.2. Research on Fast Matching Method of Ground Objects Based on Mapping Base Map

Existing feature matching methods [243,244,245,246,247,248] suffer from poor real-time performance, strict requirements on feature points (such as a minimum number of points and similar illumination, scale, rotation, and viewpoint), and reliance on traditional feature points without deep features. Fast matching of ground objects against a base map is a similarity-measurement problem. We explore the geometric and semantic features of RGB images, extract deep feature information with deep learning, improve feature efficiency, speed up retrieval and matching by reducing the number of features, and optimize the overall trade-off between the number of features and the time cost. By combining the strengths of FAST corners and SIFT descriptors with the position and attitude support of inertial sensors, ground objects can be matched faster and more accurately, which in turn improves the positioning accuracy of UAVs.
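The FAST detection step can be sketched as follows. This is a minimal, unoptimised version of the FAST segment test on the 16-pixel circle of radius 3, assuming a grayscale image stored as a list of rows; the contiguity length n = 9 and threshold t = 20 are illustrative defaults, not values from the text. Non-maximum suppression and the SIFT orientation and descriptor stage are omitted, and the function names are hypothetical.

```python
# Offsets (dx, dy) of the 16-pixel Bresenham circle of radius 3, in circular order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img, x, y, t=20, n=9):
    """Segment test: (x, y) is a corner if at least n contiguous circle pixels
    are all brighter than I(p) + t or all darker than I(p) - t."""
    center = img[y][x]
    ring = [img[y + dy][x + dx] for dx, dy in CIRCLE]
    for sign in (1, -1):  # +1 tests "brighter", -1 tests "darker"
        run = 0
        for v in ring + ring:  # doubled ring so that runs may wrap around
            run = run + 1 if sign * (v - center) > t else 0
            if run >= n:
                return True
    return False


def detect_fast(img, t=20, n=9):
    """Run the segment test on every pixel at least 3 px from the border."""
    h, w = len(img), len(img[0])
    return [(x, y) for y in range(3, h - 3) for x in range(3, w - 3)
            if is_fast_corner(img, x, y, t, n)]
```

The test only compares intensities on a small ring, which is why FAST is cheap enough to run before the more expensive descriptor computation.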

Fast matching of ground features consists of two components: fast extraction and fast matching of ground features. First, FAST is used to detect feature corners, and SIFT then assigns each feature point a principal orientation and a descriptor. The attitude data output by the onboard sensors are combined with a dot-product similarity measure to guide the search strategy and complete an initial fast matching. Mismatched points are then eliminated with a statistical method based on feature-point distance errors, producing the final set of homologous (corresponding) points. Because of the UAV's motion characteristics, the camera motion between two consecutive images is limited, with a bounded range of attitude and position change, and inertial data are updated far more frequently than images are captured. The change in camera position and attitude between two consecutive frames can therefore be obtained by integrating the gyroscope and accelerometer measurements. Given this predicted attitude change, the feature points of the image to be matched can be re-projected, and a matching search area is delimited around each projected position. Restricting the search to regions predicted from the camera attitude reduces computation and the likelihood of mismatches, improving both the accuracy and the speed of the algorithm. This preliminary matching yields an initial matching point set S, which inevitably contains some mismatched points that must be removed. The RANSAC algorithm is a popular method for eliminating such mismatches.
RANSAC estimates model parameters iteratively from a set of observations that contains outliers. However, it is nondeterministic: the probability of obtaining a correct model increases only with the number of iterations, which makes it computationally expensive. We therefore consider a simpler approach that eliminates mismatched points using the mean distance error between feature points.
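The IMU-aided matching and the mean-distance filter described above can be sketched as follows, under explicit assumptions: pure camera rotation between frames (translation and parallax ignored), a constant gyro rate already expressed in the camera frame, L2-normalised descriptors so that the dot product equals the cosine similarity, and a rejection rule that discards matches whose distance from the predicted position exceeds k times the mean distance (k = 2 is an illustrative choice, not a value from the text). All function names are hypothetical.

```python
import math


def rodrigues(phi):
    """Rotation matrix for a rotation vector phi (unit axis * angle in rad)."""
    theta = math.sqrt(sum(c * c for c in phi))
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (c / theta for c in phi)
    s, c1 = math.sin(theta), 1.0 - math.cos(theta)
    return [
        [1 - c1 * (ky * ky + kz * kz), -s * kz + c1 * kx * ky, s * ky + c1 * kx * kz],
        [s * kz + c1 * kx * ky, 1 - c1 * (kx * kx + kz * kz), -s * kx + c1 * ky * kz],
        [-s * ky + c1 * kx * kz, s * kx + c1 * ky * kz, 1 - c1 * (kx * kx + ky * ky)],
    ]


def predict_pixel(u, v, intr, gyro, dt):
    """Predict where pixel (u, v) reappears after the camera rotates for dt seconds.

    Assumptions: pure rotation, constant rate `gyro` (rad/s) in the camera frame,
    pinhole intrinsics intr = (fx, fy, cx, cy).
    """
    fx, fy, cx, cy = intr
    r = rodrigues([-w * dt for w in gyro])  # a static ray seen from a frame rotating at w
    ray = ((u - cx) / fx, (v - cy) / fy, 1.0)
    x, y, z = (sum(r[i][k] * ray[k] for k in range(3)) for i in range(3))
    return cx + fx * x / z, cy + fy * y / z


def match_in_windows(kps_prev, desc_prev, kps_cur, desc_cur, predict, radius, min_sim=0.8):
    """For each previous keypoint, pick the current keypoint with the largest
    dot-product similarity inside a square window around its predicted position."""
    matches = []
    for i, (u, v) in enumerate(kps_prev):
        pu, pv = predict(u, v)
        best, best_sim = None, min_sim
        for j, (x, y) in enumerate(kps_cur):
            if abs(x - pu) <= radius and abs(y - pv) <= radius:
                sim = sum(a * b for a, b in zip(desc_prev[i], desc_cur[j]))
                if sim > best_sim:
                    best, best_sim = j, sim
        if best is not None:
            matches.append((i, best))
    return matches


def filter_by_mean_distance(matches, kps_prev, kps_cur, predict, k=2.0):
    """Reject matches whose distance to the predicted position exceeds k times the mean."""
    if not matches:
        return []
    errors = []
    for i, j in matches:
        pu, pv = predict(*kps_prev[i])
        errors.append(math.hypot(kps_cur[j][0] - pu, kps_cur[j][1] - pv))
    limit = k * sum(errors) / len(errors)
    return [m for m, e in zip(matches, errors) if e <= limit]
```

Unlike RANSAC, this filter is deterministic and runs in a single pass, but the mean is itself inflated by outliers, so the rule is only reliable when mismatches are a small minority of the initial set S.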

6.3. Research on Pose Fusion Estimation Based on Multi-Sensor

In a UAV cluster, each UAV broadcasts its own measurements and position, receives information from the other UAVs in real time, and uses relative measurements between specific individuals to improve its global positioning. However, because of payload limits, the UAVs' sensors may be heterogeneous, and the number of UAVs within a given UAV's field of view varies over time, so observations and measurements are time-varying; this introduces ambiguity into the data and complicates fusion. Conventional optimization models [249,250] are impractical for such a distributed, time-varying system, whereas a network-wide adjustment that incorporates nonlinear optimization handles it effectively. In addition, building on existing data-fusion theory, we propose feature-level fusion, since fusion at the data level is difficult and preprocessing at the decision level is costly. Accurate positioning from multiple sensors requires fused data. Given the requirements of the application environment and the capability of the onboard processing unit, applying nonlinear-optimization adjustment to estimate UAV position and attitude at the feature level reduces the computational complexity of data fusion and adapts better to complex dynamic environments. The approach is grounded in estimation theory: it builds a state-space model for the various sensor data and then estimates the state, thereby fusing the data.
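The estimation-theoretic view (build a state-space model, then estimate the state) can be illustrated with a deliberately simple sketch: a linear Kalman filter for one UAV's 2D position with a random-walk motion model and independent axes, fusing however many position observations arrive in an epoch, each with its own variance. This is a swapped-in simplification, not the nonlinear network adjustment proposed above, and `kalman_epoch` and its parameters are hypothetical.

```python
def kalman_epoch(x, p, q, measurements):
    """One predict/update cycle for a 2D position with independent axes.

    x: [x, y] estimate; p: [var_x, var_y]
    q: process-noise variance added per epoch (random-walk model, an assumption)
    measurements: list of ((zx, zy), r) from whichever sensors or neighbouring UAVs
                  observed this UAV in the epoch; r is that source's variance.
                  The list may have a different length every epoch.
    """
    p = [pi + q for pi in p]  # predict: position random walk inflates the variance
    x = list(x)
    for z, r in measurements:
        for a in range(2):  # sequential scalar update, axis by axis
            gain = p[a] / (p[a] + r)
            x[a] += gain * (z[a] - x[a])
            p[a] *= 1.0 - gain
    return x, p
```

Because the measurements are assumed independent, processing them one at a time gives the same result as a batch update, which is what lets the number of observing UAVs change freely from epoch to epoch.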

The purpose of multi-sensor data fusion is to estimate the positions and attitudes of multiple UAVs accurately. Multi-sensor fusion estimation comprises two processes: joint multi-sensor calibration and feature-level fusion. Multi-vision-sensor data fusion assumes that multiple sensors describe the same target simultaneously. There are two main methods for joint sensor calibration. The first installs the sensors according to a specified relative transformation; the second computes the relative transformation between two sensors from the constraints between their data. With the first method, the sensors must be recalibrated after any maintenance following a sensor failure, and vibration induced by the UAV's motion is likely to leave a persistent relative error. We therefore propose the second approach for joint calibration in this project.
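The second calibration approach can be illustrated in its simplest form: when two sensors observe the same points, the rigid transformation between them follows in closed form from the point correspondences. The sketch assumes a planar (2D) case with known correspondences and no scale difference; it is the 2D case of the Kabsch/Umeyama solution, and `relative_transform_2d` is a hypothetical name.

```python
import math


def relative_transform_2d(pts_a, pts_b):
    """Least-squares rigid transform with b ~ R(theta) a + t for corresponding 2D points.

    Returns (theta, tx, ty). Assumptions: planar case, known correspondences, no scale.
    """
    n = len(pts_a)
    ax = sum(p[0] for p in pts_a) / n
    ay = sum(p[1] for p in pts_a) / n
    bx = sum(p[0] for p in pts_b) / n
    by = sum(p[1] for p in pts_b) / n
    dot = cross = 0.0
    for (xa, ya), (xb, yb) in zip(pts_a, pts_b):
        xa, ya, xb, yb = xa - ax, ya - ay, xb - bx, yb - by  # centre both point sets
        dot += xa * xb + ya * yb
        cross += xa * yb - ya * xb
    theta = math.atan2(cross, dot)  # angle maximising sum of b_i . (R a_i)
    c, s = math.cos(theta), math.sin(theta)
    return theta, bx - (c * ax - s * ay), by - (s * ax + c * ay)
```

The 3D version replaces the `atan2` step with an SVD of the cross-covariance matrix, but the structure (centre, solve for rotation, recover translation) is the same.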

Using geometric constraints and a known calibration board, a matrix equation for the coordinate-transformation coefficients is formed to compute the transformation between the coordinate systems of multiple cameras. In addition, for a monocular camera that is uncalibrated or has a large calibration error, global optimization can refine the camera's intrinsic and extrinsic parameters simultaneously. By analyzing the structure and characteristics of the sensors carried by multiple UAVs, together with their measurement information and error distributions, we propose a whole-network adjustment method with nonlinear optimization for feature-level estimation of UAV position and attitude.
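For the board-based step, if each camera's pose relative to the same calibration board is known (for example from a PnP solution on the detected board corners, which is assumed here and not shown), the camera-to-camera transformation follows by composing one pose with the inverse of the other. A minimal sketch with 4x4 homogeneous matrices; all function names are hypothetical.

```python
def rigid_inverse(t):
    """Inverse of a 4x4 rigid transform [R | p], i.e. [R^T | -R^T p]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    q = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[i] + [q[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def matmul4(a, b):
    """Product of two 4x4 matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(t, point):
    """Map a 3D point with a 4x4 homogeneous transform."""
    x, y, z = point
    return tuple(t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3))


def camera_to_camera(t_c1_board, t_c2_board):
    """Transform from camera-1 to camera-2 coordinates, given the board->camera pose
    of the same calibration board in each camera: T_c2_c1 = T_c2_board * inv(T_c1_board)."""
    return matmul4(t_c2_board, rigid_inverse(t_c1_board))
```

With several board placements, each yields one estimate of the same camera-to-camera transform, and these can be refined jointly in the global optimization mentioned above.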

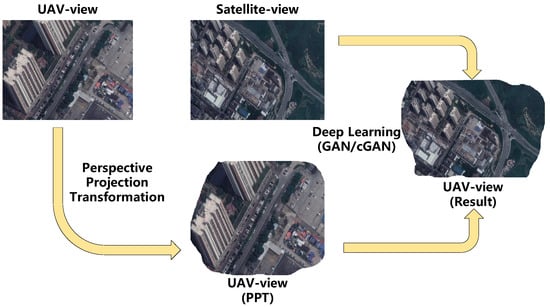

6.4. Research on Absolute Position Estimation Method of Multi-UAV Scale Matching Based on Ground Features

Because heterogeneous UAVs differ in payload and flight speed during multi-UAV cooperative positioning, the number of UAVs observable in a given UAV's field of view at different times is unpredictable, and low-altitude UAVs face obstacle-avoidance challenges [20,251]. When a UAV changes altitude, the perspective and imaging parameters of its camera images change, which makes scale matching and recognition across multiple UAVs difficult. We therefore analyze the structure and characteristics of the sensors carried by the UAVs, the influence of perspective changes and differences on images captured at different altitudes, and the effect of time on illumination intensity; on this basis, the images are denoised to improve the signal-to-noise ratio and enhance feature information. Deep learning can extract deep features that are insensitive to changes in scale; however, its practicality is limited, and it requires global attitude optimization after the initial matching. Image registration is aimed at this UAV-specific localization problem: deep learning is used to learn the features common to images from the measurement platforms, and the UAV's real-time position is then estimated by matching. Attitude parameter optimization is a SLAM problem; during attitude optimization, the poses of the camera or landmarks are corrected as the camera moves, improving positioning accuracy.
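As one concrete example of the denoising step, the sketch below applies a 3x3 median filter, which suppresses impulse noise while preserving edges. The choice of filter is an assumption for illustration only; the text does not specify which denoising method is used, and `median3x3` is a hypothetical name.

```python
def median3x3(img):
    """3x3 median filter for a grayscale image given as a list of rows.

    Assumption: median filtering stands in for the unspecified denoising step.
    Border pixels are copied unchanged to keep the sketch short.
    """
    rows, cols = len(img), len(img[0])
    out = [row[:] for row in img]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            window = sorted(img[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1))
            out[r][c] = window[4]  # 5th of 9 sorted values is the median
    return out
```

A median rather than a mean is used here because an isolated noisy pixel cannot shift the median of its neighbourhood, whereas a straight step edge survives unchanged.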

Scale-matching positioning of multiple UAVs consists mainly of UAV-to-satellite image registration and attitude parameter optimization. First, to match UAV images with satellite images, a CNN learns the features the two have in common (geometry, color, texture, etc.), and the images are then registered. The CNN can learn features that remain effective between calibrated satellite images and UAV images under varying conditions (such as seasonal changes, time of day, different viewing angles, and the presence of moving objects) and align all image pixels directly; this exploits the global texture of the image during registration, which is crucial for low-texture images. Aligning the UAV image with the satellite image yields the UAV's position. Then, using the mutual measurements between multiple UAVs, all pose parameters over the whole image sequence are optimized, with pose-graph optimization used to refine the poses of the camera or landmarks while the camera moves. Photometric bundle adjustment then optimizes pixel intensities directly by minimizing the sum of squared intensity differences between adjacent UAV frames plus the sum of squared differences between the UAV frames and the satellite map. The main advantage of this method is that only a small subset of frames needs to be matched to the satellite map for the UAV to be localized accurately in every frame.
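The photometric objective described above can be illustrated on a toy scale. The sketch assumes grayscale images as lists of rows, UAV frames already resampled to the satellite map's scale and orientation, and integer-pixel offsets, so alignment reduces to an exhaustive translation search; a real photometric bundle adjustment would optimize continuous poses with a gradient-based solver. The function names and the weight `lam` are hypothetical.

```python
def ssd(a, b):
    """Sum of squared intensity differences between two equally sized images."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def crop(img, top, left, h, w):
    return [row[left:left + w] for row in img[top:top + h]]


def overlap_ssd(f1, f2, dy, dx):
    """SSD over the overlap when f2 sits at offset (dy, dx) relative to f1 (same-sized frames)."""
    h, w = len(f1), len(f1[0])
    total = 0
    for r in range(max(0, dy), min(h, h + dy)):
        for c in range(max(0, dx), min(w, w + dx)):
            total += (f1[r][c] - f2[r - dy][c - dx]) ** 2
    return total


def align_to_map(frame, sat_map):
    """Exhaustive integer-translation search minimising frame-to-map SSD.

    Assumption: frame and map share scale and orientation.
    """
    h, w = len(frame), len(frame[0])
    best = None
    for top in range(len(sat_map) - h + 1):
        for left in range(len(sat_map[0]) - w + 1):
            cost = ssd(frame, crop(sat_map, top, left, h, w))
            if best is None or cost < best[0]:
                best = (cost, top, left)
    return best[1], best[2]


def photometric_cost(frames, offsets, sat_map, lam=1.0):
    """Adjacent-frame SSD (over overlaps) plus lam times frame-to-map SSD."""
    h, w = len(frames[0]), len(frames[0][0])
    cost = 0
    for k in range(len(frames) - 1):
        dy = offsets[k + 1][0] - offsets[k][0]
        dx = offsets[k + 1][1] - offsets[k][1]
        cost += overlap_ssd(frames[k], frames[k + 1], dy, dx)
    for f, (top, left) in zip(frames, offsets):
        cost += lam * ssd(f, crop(sat_map, top, left, h, w))
    return cost
```

The adjacent-frame term ties consecutive frames together even when some of them are never compared with the map, which is the mechanism behind matching only a small subset of frames to the satellite image.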

7. Conclusions

In this paper, we review and evaluate recent advances in multi-UAV visual cooperative positioning (including vision-based autonomous intelligent positioning and navigation of UAVs, distributed cooperative measurement and fusion under highly dynamic cluster topologies, group navigation based on active behavior control, and distributed fusion of multi-source dynamic perception information). When GNSS signals are denied, a UAV can perceive its position only through limited communication and its onboard sensors (such as inertial navigation and vision). Although inertial navigation drift is random, its uncertainty can be reduced by network adjustment through the cluster's information-sharing mechanism. However, during long flights without an absolute anchor, the cluster's common positioning datum drifts as a whole. Using earth-observation data, vision-based positioning techniques built on geographic information extract invariant features of ground objects, and the drift of the entire group is eliminated by matching against prefabricated geographic information data and adjusting multi-view observations.

With geographic information, visual odometry for earth observation is substantially extended to cope with the discontinuity and low overlap of the images observed during fast maneuvers, improving the cumulative measurement accuracy and robustness of visual odometry. Using the rich color and texture information of the aerial multi-frame images captured by the camera, the spectral, spatial, contextual, semantic, and auxiliary information in the images, together with the depth information extracted by deep learning, can describe the semantic features of ground objects, enabling effective extraction of ground-object features in low-texture environments. A multi-view geolocalization approach can roughly register a real-time frame with a satellite image by transforming the perspective projection of the UAV image. A satellite image with a realistic appearance and preserved content is synthesized from the corresponding UAV viewpoint, bridging the evident perspective gap between the two domains and enabling geolocalization. In a drone cluster, each drone broadcasts its measurements and position and receives information from the other drones in real time. Relative measurements between individuals enhance each drone's global localization capability and allow it to respond appropriately to complex, challenging dynamic environments.

Author Contributions

Conceptualization, P.T. and X.Y.; methodology, P.T. and P.W.; writing—original draft preparation, P.T.; writing—review and editing, X.Y. and Y.Y.; supervision, X.Y. and W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Radočaj, D.; Šiljeg, A.; Plaščak, I.; Marić, I.; Jurišić, M. A Micro-Scale Approach for Cropland Suitability Assessment of Permanent Crops Using Machine Learning and a Low-Cost UAV. Agronomy 2023, 13, 362.

- Mohsan, S.A.H.; Othman, N.Q.H.; Khan, M.A.; Amjad, H.; Żywiołek, J. A comprehensive review of micro UAV charging techniques. Micromachines 2022, 13, 977.

- Liu, X.; Li, H.; Yang, S. Optimization Method of High-Precision Control Device for Photoelectric Detection of Unmanned Aerial Vehicle Based on POS Data. Sci. Program. 2022, 2022, 2449504.

- Wang, Y.; Chen, H.; Liu, Q.; Huang, J. Key Technologies of the Cooperative Combat of Manned Aerial Vehicle and Unmanned Aerial Vehicle. In Advances in Guidance, Navigation and Control: Proceedings of 2022 International Conference on Guidance, Navigation and Control, Harbin, China, 5–7 August 2022; Springer Nature Singapore: Singapore, 2023; pp. 671–679.

- Lu, Y.; Qin, W.; Zhou, C.; Liu, Z. Automated detection of dangerous work zone for crawler crane guided by UAV images via Swin Transformer. Autom. Constr. 2023, 147, 104744.

- Rehan, M.; Akram, F.; Shahzad, A.; Shams, T.A.; Ali, Q. Vertical take-off and landing hybrid unmanned aerial vehicles: An overview. Aeronaut. J. 2022, 126, 1–41.

- Çoban, S. Autonomous performance maximization of research-based hybrid unmanned aerial vehicle. Aircr. Eng. Aerosp. Technol. 2020, 92, 645–651.

- Alqurashi, F.A.; Alsolami, F.; Abdel-Khalek, S.; Sayed Ali, E.; Saeed, R.A. Machine learning techniques in internet of UAVs for smart cities applications. J. Intell. Fuzzy Syst. 2022, 42, 3203–3226.

- Amarasingam, N.; Salgadoe, S.; Powell, K.; Gonzalez, L.F.; Natarajan, S. A review of UAV platforms, sensors, and applications for monitoring of sugarcane crops. Remote Sens. Appl. Soc. Environ. 2022, 26, 100712.

- Li, B.; Liu, B.; Han, D.; Wang, Z. Autonomous Tracking of ShenZhou Reentry Capsules Based on Heterogeneous UAV Swarms. Drones 2023, 7, 20.

- Akter, R.; Golam, M.; Doan, V.S.; Lee, J.M.; Kim, D.S. IoMT-Net: Blockchain integrated unauthorized UAV localization using lightweight convolution neural network for internet of military things. IEEE Internet Things J. 2022, 10, 6634–6651.

- AL-Dosari, K.; Hunaiti, Z.; Balachandran, W. Systematic Review on Civilian Drones in Safety and Security Applications. Drones 2023, 7, 210.

- Maddikunta, P.K.R.; Hakak, S.; Alazab, M.; Bhattacharya, S.; Gadekallu, T.R.; Khan, W.Z.; Pham, Q.V. Unmanned aerial vehicles in smart agriculture: Applications, requirements, and challenges. IEEE Sens. J. 2021, 21, 17608–17619.

- Sal, F. Simultaneous swept anhedral helicopter blade tip shape and control-system design. Aircr. Eng. Aerosp. Technol. 2023, 95, 101–112.

- Khan, A.; Zhang, J.; Ahmad, S.; Memon, S.; Qureshi, H.A.; Ishfaq, M. Dynamic positioning and energy-efficient path planning for disaster scenarios in 5G-assisted multi-UAV environments. Electronics 2022, 11, 2197.

- Gyagenda, N.; Hatilima, J.V.; Roth, H.; Zhmud, V. A review of GNSS-independent UAV navigation techniques. Robot. Auton. Syst. 2022, 152, 104069.

- Gao, W.; Yue, F.; Xia, Z.; Liu, X.; Zhang, C.; Liu, Z.; Jin, S.; Zhang, Y.; Zhao, Z.; Zhang, T.; et al. Weak Signal Processing Method for Moving Target of GNSS-S Radar Based on Amplitude and Phase Self-Correction. Remote Sens. 2023, 15, 969.

- Gao, W.; Xu, Z.; Han, X.; Pan, C. Recent advances in curved image sensor arrays for bioinspired vision system. Nano Today 2022, 42, 101366.

- Liu, Z.; Cao, Y.; Gao, P.; Hua, X.; Zhang, D.; Jiang, T. Multi-UAV network assisted intelligent edge computing: Challenges and opportunities. China Commun. 2022, 19, 258–278.

- Tang, J.; Duan, H.; Lao, S. Swarm intelligence algorithms for multiple unmanned aerial vehicles collaboration: A comprehensive review. Artif. Intell. Rev. 2022, 1–33.

- Shen, S.; Mulgaonkar, Y.; Michael, N.; Kumar, V. Multi-sensor fusion for robust autonomous flight in indoor and outdoor environments with a rotorcraft MAV. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 4974–4981.

- Mueller, M.W.; Hamer, M.; D’Andrea, R. Fusing ultra-wideband range measurements with accelerometers and rate gyroscopes for quadrocopter state estimation. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 1730–1736.

- Engel, J.; Sturm, J.; Cremers, D. Camera-based navigation of a low-cost quadrocopter. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 2815–2821.

- Nemra, A.; Aouf, N. Robust cooperative UAV visual SLAM. In Proceedings of the 2010 IEEE 9th International Conference on Cybernetic Intelligent Systems, Reading, UK, 1–2 September 2010; pp. 1–6.

- Loianno, G.; Thomas, J.; Kumar, V. Cooperative localization and mapping of MAVs using RGB-D sensors. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 4021–4028.

- Piasco, N.; Marzat, J.; Sanfourche, M. Collaborative localization and formation flying using distributed stereo-vision. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1202–1207.

- Wang, F.; Cui, J.-Q.; Chen, B.-M.; Lee, T.H. A comprehensive UAV indoor navigation system based on vision optical flow and laser FastSLAM. Acta Autom. Sin. 2013, 39, 1889–1899.

- Bryson, M.; Sukkarieh, S. Building a Robust Implementation of Bearing-only Inertial SLAM for a UAV. J. Field Robot. 2007, 24, 113–143.

- Kim, J.; Sukkarieh, S. Real-time implementation of airborne inertial-SLAM. Robot. Auton. Syst. 2007, 55, 62–71.

- Gandhi, D.; Pinto, L.; Gupta, A. Learning to fly by crashing. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 3948–3955.

- Pinto, L.; Gupta, A. Supersizing self-supervision: Learning to grasp from 50k tries and 700 robot hours. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3406–3413.

- Fu, Q.; Quan, Q.; Cai, K.-Y. Robust pose estimation for multirotor UAVs using off-board monocular vision. IEEE Trans. Ind. Electron. 2017, 64, 7942–7951.

- Zhou, H.; Zou, D.; Pei, L.; Ying, R.; Liu, P.; Yu, W. StructSLAM: Visual SLAM with building structure lines. IEEE Trans. Veh. Technol. 2015, 64, 1364–1375.

- Zou, D.; Tan, P. CoSLAM: Collaborative visual SLAM in dynamic environments. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 354–366.

- Wang, K.; Shen, S. MVDepthNet: Real-time multiview depth estimation neural network. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 248–257.

- Leutenegger, S.; Lynen, S.; Bosse, M.; Siegwart, R.; Furgale, P. Keyframe-based visual–inertial odometry using nonlinear optimization. Int. J. Robot. Res. 2015, 34, 314–334.

- Li, M.; Mourikis, A.I. High-precision, consistent EKF-based visual–inertial odometry. Int. J. Robot. Res. 2013, 32, 690–711.

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304.

- Leprince, S.; Barbot, S.; Ayoub, F.; Avouac, J.P. Automatic and precise orthorectification, coregistration, and subpixel correlation of satellite images, application to ground deformation measurements. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1529–1558.

- Google Earth, Google. 2022. Available online: https://www.google.com/earth/ (accessed on 15 July 2022).

- ArcGIS Online, Esri. 2022. Available online: https://www.arcgis.com/index.html (accessed on 10 June 2022).

- Couturier, A.; Akhloufi, M.A. Relative visual localization (RVL) for UAV navigation. In Degraded Environments: Sensing, Processing, and Display 2018; SPIE: Bellingham, WA, USA, 2018; Volume 10642, pp. 213–226.

- Couturier, A.; Akhloufi, M.A. UAV navigation in GPS-denied environment using particle filtered RVL. In Situation Awareness in Degraded Environments 2019; SPIE: Bellingham, WA, USA, 2019; Volume 11019, pp. 188–198.

- Couturier, A.; Akhloufi, M. Conditional probabilistic relative visual localization for unmanned aerial vehicles. In Proceedings of the 2020 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), London, ON, Canada, 30 August–2 September 2020; pp. 1–4.

- Warren, M.; Greeff, M.; Patel, B.; Collier, J.; Schoellig, A.P.; Barfoot, T.D. There’s no place like home: Visual teach and repeat for emergency return of multirotor UAVs during GPS failure. IEEE Robot. Autom. Lett. 2018, 4, 161–168.

- Brunelli, R. Template Matching Techniques in Computer Vision: Theory and Practice; John Wiley & Sons: Hoboken, NJ, USA, 2009.

- Brunelli, R.; Poggio, T. Template matching: Matched spatial filters and beyond. Pattern Recognit. 1997, 30, 751–768.

- Van Dalen, G.J.; Magree, D.P.; Johnson, E.N. Absolute localization using image alignment and particle filtering. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, San Diego, CA, USA, 4–8 January 2016; p. 647.

- Lewis, J.P. Fast template matching. Vis. Interface. 1995, 95, 15–19.

- Thrun, S. Particle Filters in Robotics. UAI 2002, 2, 511–518.

- Bing Maps, Microsoft. 2022. Available online: https://www.bing.com/maps (accessed on 17 December 2022).

- Magree, D.P.; Johnson, E.N. A monocular vision-aided inertial navigation system with improved numerical stability. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Kissimmee, FL, USA, 5–9 January 2015; p. 97.

- Sasiadek, J.; Wang, Q.; Johnson, R.; Sun, L.; Zalewski, J. UAV navigation based on parallel extended Kalman filter. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, Denver, CO, USA, 14–17 August 2000; p. 4165.

- Johnson, E.; Schrage, D. The Georgia Tech unmanned aerial research vehicle: GTMax. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, Austin, TX, USA, 11–14 August 2003; p. 5741.

- Yol, A.; Delabarre, B.; Dame, A.; Dartois, J.É.; Marchand, E. Vision-based absolute localization for unmanned aerial vehicles. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 3429–3434.

- Cover, T.M. Elements of Information Theory; John Wiley & Sons: Hoboken, NJ, USA, 1999.

- Gray, R.M. Entropy and Information Theory; Springer Science & Business Media: Berlin, Germany, 2011.

- Wan, X.; Liu, J.; Yan, H.; Morgan, G.L. Illumination-invariant image matching for autonomous UAV localisation based on optical sensing. ISPRS J. Photogramm. Remote Sens. 2016, 119, 198–213.

- Keller, Y.; Averbuch, A. A projection-based extension to phase correlation image alignment. Signal Process. 2007, 87, 124–133.

- Patel, B. Visual Localization for UAVs in Outdoor GPS-Denied Environments; University of Toronto (Canada): Toronto, ON, Canada, 2019.

- Pascoe, G.; Maddern, W.P.; Newman, P. Robust direct visual localization using normalised information distance. In Proceedings of the British Machine Vision Conference, Oxford, UK, 21–24 November 2015; pp. 70–71.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference 1988, Manchester, UK, 1 January 1988; pp. 147–151.

- Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Computer Vision—ECCV 2006; Lecture Notes in Computer Science; Leonardis, A., Bischof, H., Pinz, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 430–443. [Google Scholar]

- Rosten, E.; Porter, R.; Drummond, T. Faster and better: A machine learning approach to corner detection. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 105–119. [Google Scholar] [CrossRef] [PubMed]

- Tang, G.; Liu, Z.; Xiong, J. Distinctive image features from illumination and scale invariant keypoints. Multimed. Tools Appl. 2019, 78, 23415–23442. [Google Scholar] [CrossRef]

- Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary robust independent elementary features. In Computer Vision—ECCV 2010; Lecture Notes in Computer Science; Daniilidis, K., Maragos, P., Paragios, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 778–792. [Google Scholar]

- Seema, B.S.; Hemanth, K.; Naidu, V.P.S. Geo-registration of aerial images using RANSAC algorithm. In NCTAESD-2014; Vemana Institute of Technology: Bangalore, India, 2014; pp. 1–5. [Google Scholar]

- Saranya, K.C.; Naidu, V.P.S.; Singhal, V.; Tanuja, B.M. Application of vision based techniques for UAV position estimation. In Proceedings of the 2016 International Conference on Research Advances in Integrated Navigation Systems (RAINS), Bangalore, India, 6–7 May 2016; pp. 1–5. [Google Scholar]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In Computer Vision—ECCV 2006; Lecture Notes in Computer Science; Leonardis, A., Bischof, H., Pinz, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417. [Google Scholar]

- Shan, M.; Wang, F.; Lin, F.; Gao, Z.; Tang, Y.Z.; Chen, B.M. Google map aided visual navigation for UAVs in GPS-denied environment. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 114–119. [Google Scholar]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 25 July 2005; Volume 1, pp. 886–893. [Google Scholar]

- Horn, B.K.P.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203. [Google Scholar] [CrossRef]

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550. [Google Scholar]

- Chiu, H.P.; Das, A.; Miller, P.; Samarasekera, S.; Kumar, R. Precise vision-aided aerial navigation. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 688–695. [Google Scholar]

- Mantelli, M.; Pittol, D.; Neuland, R.; Ribacki, A.; Maffei, R.; Jorge, V.; Prestes, E.; Kolberg, M. A novel measurement model based on abBRIEF for global localization of a UAV over satellite images. Robot. Auton. Syst. 2019, 112, 304–319. [Google Scholar] [CrossRef]

- Masselli, A.; Hanten, R.; Zell, A. Localization of unmanned aerial vehicles using terrain classification from aerial images. In Advances in Intelligent Systems and Computing; Intelligent Autonomous Systems 13; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 831–842. [Google Scholar] [CrossRef]

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Cutler, A.; Cutler, D.R.; Stevens, J.R. Random forests. In Ensemble Machine Learning: Methods and Applications; Springer: New York, NY, USA, 2012; pp. 157–175.

- Shan, M.; Charan, A. Google map referenced UAV navigation via simultaneous feature detection and description. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015.

- Tombari, F.; Di Stefano, L. Interest points via maximal self-dissimilarities. In Computer Vision—ACCV 2014; Lecture Notes in Computer Science; Cremers, D., Reid, I., Saito, H., Yang, M.-H., Eds.; Springer International Publishing: Singapore, 2014; pp. 586–600.

- Shechtman, E.; Irani, M. Matching local self-similarities across images and videos. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Chen, X.; Luo, X.; Weng, J.; Luo, W.; Li, H.; Tian, Q. Multi-view gait image generation for cross-view gait recognition. IEEE Trans. Image Process. 2021, 30, 3041–3055.

- Yan, X.; Lou, Z.; Hu, S.; Ye, Y. Multi-task information bottleneck co-clustering for unsupervised cross-view human action categorization. ACM Trans. Knowl. Discov. Data (TKDD) 2020, 14, 1–23.

- Liu, X.; Liu, W.; Zheng, J.; Yan, C.; Mei, T. Beyond the parts: Learning multi-view cross-part correlation for vehicle re-identification. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020.

- Zhao, J.; Han, R.; Gan, Y.; Wan, L.; Feng, W.; Wang, S. Human identification and interaction detection in cross-view multi-person videos with wearable cameras. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020.

- Shao, Z.; Li, Y.; Zhang, H. Learning representations from skeletal self-similarities for cross-view action recognition. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 160–174.

- Xu, C.; Makihara, Y.; Li, X.; Yagi, Y.; Lu, J. Cross-view gait recognition using pairwise spatial transformer networks. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 260–274.

- Cai, S.; Guo, Y.; Khan, S.; Hu, J.; Wen, G. Ground-to-aerial image geo-localization with a hard exemplar reweighting triplet loss. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Shi, Y.; Yu, X.; Liu, L.; Zhang, T.; Li, H. Optimal feature transport for cross-view image geo-localization. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Regmi, K.; Borji, A. Cross-view image synthesis using geometry-guided conditional GANs. Comput. Vis. Image Underst. 2019, 187, 102788.

- Regmi, K.; Shah, M. Bridging the domain gap for ground-to-aerial image matching. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Shi, Y.; Liu, L.; Yu, X.; Li, H. Spatial-aware feature aggregation for image based cross-view geo-localization. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Shi, Y.; Yu, X.; Campbell, D.; Li, H. Where am I looking at? Joint location and orientation estimation by cross-view matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Toker, A.; Zhou, Q.; Maximov, M.; Leal-Taixé, L. Coming down to earth: Satellite-to-street view synthesis for geo-localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Zheng, Z.; Wei, Y.; Yang, Y. University-1652: A multi-view multi-source benchmark for drone-based geo-localization. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020.

- Wang, T.; Zheng, Z.; Yan, C.; Zhang, J.; Sun, Y.; Zheng, B.; Yang, Y. Each part matters: Local patterns facilitate cross-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 867–879.

- Ding, L.; Zhou, J.; Meng, L.; Long, Z. A practical cross-view image matching method between UAV and satellite for UAV-based geo-localization. Remote Sens. 2021, 13, 47.

- Tian, X.; Shao, J.; Ouyang, D.; Shen, H.T. UAV-Satellite View Synthesis for Cross-View Geo-Localization. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 4804–4815.

- Lin, T.Y.; Cui, Y.; Belongie, S.; Hays, J. Learning deep representations for ground-to-aerial geolocalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Tian, Y.; Chen, C.; Shah, M. Cross-view image matching for geo-localization in urban environments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Liu, L.; Li, H. Lending orientation to neural networks for cross-view geo-localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

- Workman, S.; Souvenir, R.; Jacobs, N. Wide-area image geolocalization with aerial reference imagery. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Workman, S.; Jacobs, N. On the location dependence of convolutional neural network features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015.

- Chen, C.; Qin, C.; Qiu, H.; Ouyang, C.; Wang, S.; Chen, L.; Tarroni, G.; Bai, W.; Rueckert, D. Realistic adversarial data augmentation for MR image segmentation. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2020; Springer: Berlin, Germany, 2020.

- Zhang, H.; Xu, T.; Li, H.; Zhang, S.; Wang, X.; Huang, X.; Metaxas, D. StackGAN: Text to photo-realistic image synthesis with stacked generative adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Lu, X.; Li, Z.; Cui, Z.; Oswald, M.R.; Pollefeys, M.; Qin, R. Geometry-aware satellite-to-ground image synthesis for urban areas. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

- Nistér, D.; Naroditsky, O.; Bergen, J. Visual odometry. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2004), Washington, DC, USA, 27 June–2 July 2004; Volume 1.

- Goforth, H.; Lucey, S. GPS-denied UAV localization using pre-existing satellite imagery. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 2974–2980.

- Torr, P.H.S.; Zisserman, A. MLESAC: A new robust estimator with application to estimating image geometry. Comput. Vis. Image Underst. 2000, 78, 138–156.

- Anderson, S.; Barfoot, T.D. Full STEAM ahead: Exactly sparse Gaussian process regression for batch continuous-time trajectory estimation on SE(3). In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 157–164.

- Olfati-Saber, R. Kalman-consensus filter: Optimality, stability, and performance. In Proceedings of the 48th IEEE Conference on Decision and Control (CDC) held jointly with 2009 28th Chinese Control Conference, Shanghai, China, 15–18 December 2009.

- Yu, L.; You, H.; Haipeng, W. Squared-root cubature information consensus filter for non-linear decentralised state estimation in sensor networks. IET Radar Sonar Navig. 2014, 8, 931–938.

- De Souza, C.E.; Kinnaert, M.; Coutinho, D. Consensus-based distributed mean square state estimation. In Proceedings of the 2015 American Control Conference (ACC), Chicago, IL, USA, 1–3 July 2015.

- Tamjidi, A.; Chakravorty, S.; Shell, D. Unifying consensus and covariance intersection for decentralized state estimation. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016.

- Li, W.; Wei, G.; Han, F.; Liu, Y. Weighted average consensus-based unscented Kalman filtering. IEEE Trans. Cybern. 2015, 46, 558–567.

- Shen, K.; Jing, Z.; Dong, P. A consensus nonlinear filter with measurement uncertainty in distributed sensor networks. IEEE Signal Process. Lett. 2017, 24, 1631–1635.

- Soatti, G.; Nicoli, M.; Savazzi, S.; Spagnolini, U. Consensus-based algorithms for distributed network-state estimation and localization. IEEE Trans. Signal Inf. Process. Over Netw. 2016, 3, 430–444.

- Gao, C.; Wang, Z.; Hu, J.; Liu, Y.; He, X. Consensus-Based Distributed State Estimation Over Sensor Networks with Encoding-Decoding Scheme: Accommodating Bandwidth Constraints. IEEE Trans. Netw. Sci. Eng. 2022, 9, 4051–4064.

- Liu, Y.; Liu, J.; Xu, C.; Wang, C.; Qi, L.; Ding, Z. Distributed consensus state estimation algorithm in asymmetric networks. Syst. Eng. Electron. 2018, 40, 1917–1925.

- Zhou, F.; Wang, Y.; Zheng, W.; Li, Z.; Wen, X. Fast Distributed Multiple-Model Nonlinearity Estimation for Tracking the Non-Cooperative Highly Maneuvering Target. Remote Sens. 2022, 14, 4239.

- Cicala, M.; D’Amato, E.; Notaro, I.; Mattei, M. Scalable distributed state estimation in UTM context. Sensors 2020, 20, 2682.

- He, S.; Shin, H.-S.; Xu, S.; Tsourdos, A. Distributed estimation over a low-cost sensor network: A review of state-of-the-art. Inf. Fusion 2020, 54, 21–43.

- Chen, S.; Li, Y.; Kwok, N.M. Active vision in robotic systems: A survey of recent developments. Int. J. Robot. Res. 2011, 30, 1343–1377.

- Scott, W.R.; Roth, G.; Rivest, J.-F. View planning for automated three-dimensional object reconstruction and inspection. ACM Comput. Surv. (CSUR) 2003, 35, 64–96.

- Roy, S.D.; Chaudhury, S.; Banerjee, S. Active recognition through next view planning: A survey. Pattern Recognit. 2004, 37, 429–446.

- Scott, W.R. Model-based view planning. Mach. Vis. Appl. 2009, 20, 47–69.

- Tarabanis, K.; Tsai, R.Y.; Allen, P.K. Automated sensor planning for robotic vision tasks. In Proceedings of the IEEE International Conference on Robotics & Automation, Sacramento, CA, USA, 9–11 April 1991.

- Tarabanis, K.; Allen, P.; Tsai, R. A survey of sensor planning in computer vision. IEEE Trans. Robot. Autom. 1995, 11, 86–104.

- Ye, Y.; Tsotsos, J.K. Sensor planning for 3D object search. Comput. Vis. Image Underst. 1999, 73, 145–168.

- Pito, R. A solution to the next best view problem for automated surface acquisition. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 1016–1030.

- Pito, R. A sensor-based solution to the “next best view” problem. In Proceedings of the 13th International Conference on Pattern Recognition, Vienna, Austria, 25–29 August 1996.

- Banta, J.; Wong, L.; Dumont, C.; Abidi, M. A next-best-view system for autonomous 3-D object reconstruction. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 2000, 30, 589–598.

- Kriegel, S.; Rink, C.; Bodenmüller, T.; Suppa, M. Efficient next-best-scan planning for autonomous 3D surface reconstruction of unknown objects. J. Real-Time Image Process. 2015, 10, 611–631.

- Corsini, M.; Cignoni, P.; Scopigno, R. Efficient and flexible sampling with blue noise properties of triangular meshes. IEEE Trans. Vis. Comput. Graph. 2012, 18, 914–924.

- Khalfaoui, S.; Seulin, R.; Fougerolle, Y.; Fofi, D. An efficient method for fully automatic 3D digitization of unknown objects. Comput. Ind. 2013, 64, 1152–1160.

- Krainin, M.; Curless, B.; Fox, D. Autonomous generation of complete 3D object models using next best view manipulation planning. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011.

- Kriegel, S.; Rink, C.; Bodenmüller, T.; Narr, A.; Suppa, M.; Hirzinger, G. Next-best-scan planning for autonomous 3D modeling. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012.

- Wu, C.; Zeng, R.; Pan, J.; Wang, C.C.L.; Liu, Y.-J. Plant phenotyping by deep-learning-based planner for multi-robots. IEEE Robot. Autom. Lett. 2019, 4, 3113–3120.

- Dong, S.; Xu, K.; Zhou, Q.; Tagliasacchi, A.; Xin, S.; Nießner, M.; Chen, B. Multi-robot collaborative dense scene reconstruction. ACM Trans. Graph. (TOG) 2019, 38, 1–16.

- Liu, L.; Xia, X.; Sun, H.; Shen, Q.; Xu, J.; Chen, B.; Huang, H.; Xu, K. Object-aware guidance for autonomous scene reconstruction. ACM Trans. Graph. (TOG) 2018, 37, 1–12.

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

- Bailey, T.; Durrant-Whyte, H. Simultaneous localization and mapping (SLAM): Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117.

- Blaer, P.S.; Allen, P.K. Data acquisition and view planning for 3-D modeling tasks. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007.