Defining Valid Activity Monitor Data: A Multimethod Analysis of Weight-Loss Intervention Participants’ Barriers to Wear and First 100 Days of Physical Activity

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Intervention

2.3. Activity Monitor and Data Collection

2.4. Definitions of Valid Activity Monitor Wear

2.5. Definitions of Physical Activity Variables

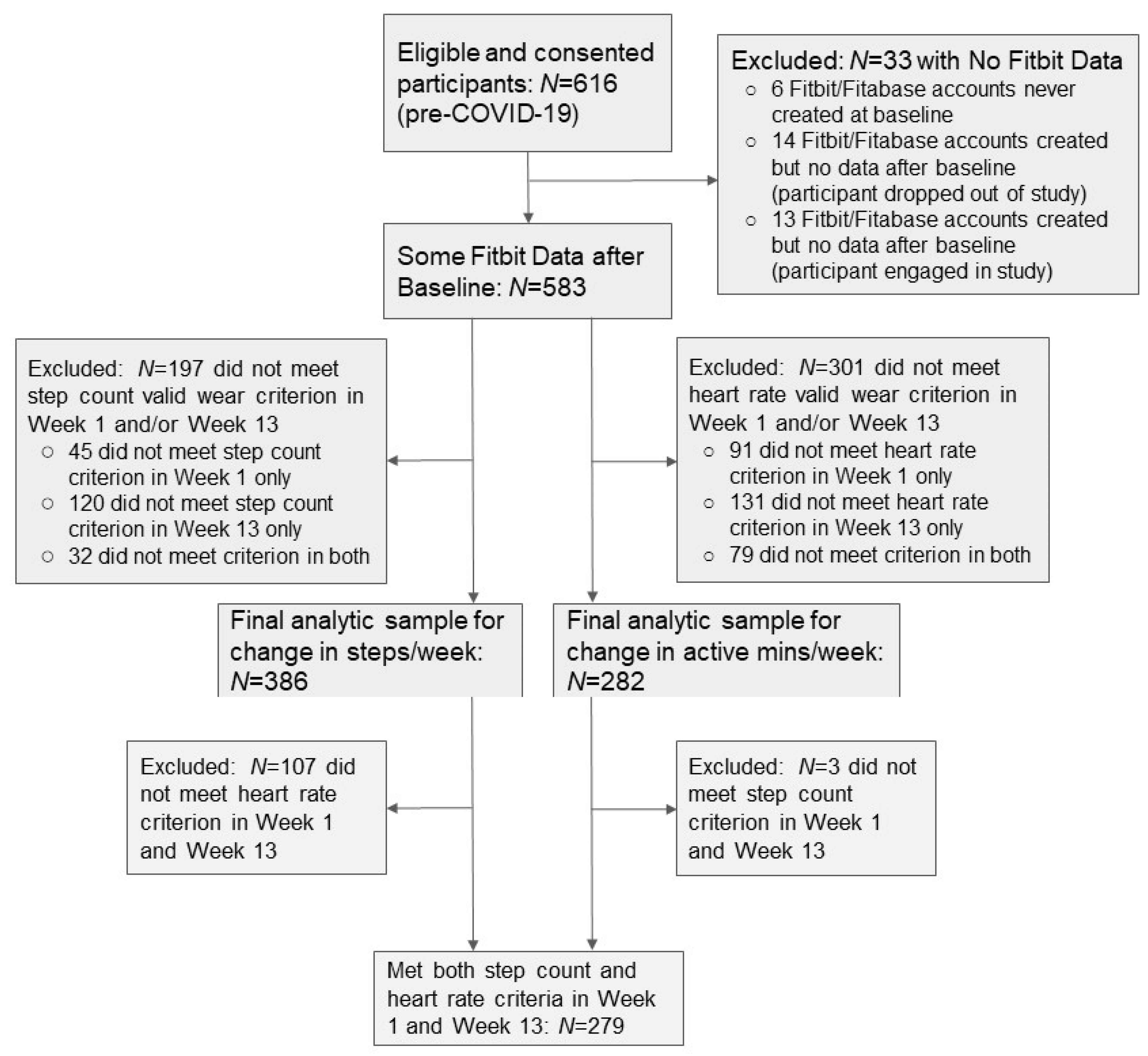

2.6. Analytic Sample

2.7. Quantitative Analyses

2.8. Content Analysis

2.9. Qualitative Interview Methods

3. Results

3.1. Prevalence of Participants with Valid Activity Monitor Data

3.2. Differences between Groups with and without Valid Wear Weeks

3.3. Percentage Difference between PA Outcomes Using Step Count and Heart Rate Methods

3.4. Concordance between PA Outcomes among Participants Meeting Both Criteria

3.5. Barriers to Wear from Research Staff Notes

3.6. Barriers to Wear from Intervention Exit Interviews

3.7. Findings from Post-Study In-Depth Interviews

4. Discussion

4.1. Strengths and Limitations

4.2. Future Research Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| WAM | wearable activity monitor |
| PA | physical activity |
| LPA | light-intensity physical activity |
| MVPA | moderate to vigorous-intensity physical activity |
| SC | step count |
| HR | heart rate |
| BMI | body mass index |


| Variable | All Participants (N = 616) | Invalid SC Wear Weeks (N = 230) | Valid SC Wear Weeks (N = 386) | p-Value (SC) | Invalid HR Wear Weeks (N = 334) | Valid HR Wear Weeks (N = 282) | p-Value (HR) | Invalid HR but Valid SC Wear Weeks (N = 107) | p-Value a |
|---|---|---|---|---|---|---|---|---|---|
| Categorical Variables | N (%) | N (%) | N (%) | | N (%) | N (%) | | N (%) | |
| Gender | | | | | | | | | |
| Female | 499 (81.0) | 190 (82.6) | 309 (80.1) | 0.49 | 279 (83.5) | 220 (78.0) | 0.10 | 92 (86.0) | 0.10 |
| Male | 117 (19.0) | 40 (17.4) | 77 (20.0) | 0.73 | 55 (16.5) | 62 (22.0) | 0.45 | 15 (14.0) | 0.49 |
| Race/Ethnicity | | | | | | | | | |
| Hispanic | 451 (73.2) | 175 (76.1) | 276 (71.5) | 0.28 | 256 (76.7) | 195 (69.2) | 0.07 | 84 (78.5) | 0.11 |
| Non-Hispanic Black | 88 (14.3) | 30 (13.0) | 58 (15.0) | 0.80 | 41 (12.3) | 47 (16.7) | 0.56 | 11 (10.3) | 0.60 |
| Non-Hispanic White | 38 (6.2) | 11 (4.8) | 27 (7.0) | 0.80 | 16 (4.8) | 22 (7.8) | 0.71 | 5 (4.7) | 0.81 |
| Other | 39 (6.3) | 14 (6.1) | 25 (6.5) | 0.96 | 21 (6.3) | 18 (6.4) | 0.99 | 7 (6.5) | 0.99 |
| Immigrant to United States | | | | | | | | | |
| Yes | 394 (64.0) | 148 (64.4) | 246 (63.7) | 0.89 | 217 (65.0) | 177 (62.8) | 0.65 | 71 (66.4) | 0.59 |
| Spanish Spoken at Home | | | | | | | | | |
| Yes | 427 (69.3) | 164 (71.3) | 263 (68.1) | 0.49 | 244 (73.1) | 183 (64.9) | 0.07 | 81 (75.7) | 0.08 |
| Spanish Spoken at Study Visit | | | | | | | | | |
| Yes | 289 (46.9) | 118 (51.3) | 171 (44.3) | 0.24 | 173 (51.8) | 116 (41.1) | 0.07 | 56 (52.3) | 0.16 |
| Employment Status | | | | | | | | | |
| Working full- or part-time | 289 (46.9) | 103 (44.8) | 186 (48.2) | 0.58 | 141 (42.2) | 148 (52.5) | 0.09 | 38 (35.5) | 0.06 |
| Unemployed or looking for work | 133 (21.6) | 46 (20.0) | 87 (22.5) | 0.74 | 69 (20.7) | 64 (22.7) | 0.78 | 25 (23.4) | 0.94 |
| Keeping house or raising children | 134 (21.8) | 51 (22.2) | 83 (21.5) | 0.92 | 83 (24.9) | 51 (18.1) | 0.36 | 33 (30.8) | 0.17 |
| Retired | 60 (9.7) | 30 (13.0) | 30 (7.8) | 0.51 | 41 (12.3) | 19 (6.7) | 0.51 | 11 (10.3) | 0.73 |
| Education | | | | | | | | | |
| 8th grade or less | 129 (20.9) | 63 (27.4) | 66 (17.1) | 0.16 | 84 (25.2) | 45 (16.0) | 0.23 | 22 (20.6) | 0.64 |
| Some high school | 83 (13.5) | 25 (10.9) | 58 (15.0) | 0.62 | 46 (13.8) | 37 (13.1) | 0.93 | 22 (20.6) | 0.45 |
| High school grad or equivalent | 148 (24.0) | 61 (26.5) | 87 (22.5) | 0.58 | 83 (24.9) | 65 (23.1) | 0.80 | 22 (20.6) | 0.81 |
| Some college | 160 (26.0) | 51 (22.2) | 109 (28.2) | 0.42 | 76 (22.8) | 84 (29.8) | 0.32 | 26 (24.3) | 0.24 |
| 4-year college grad or higher | 96 (15.6) | 30 (13.0) | 66 (17.1) | 0.61 | 45 (13.5) | 51 (18.1) | 0.54 | 15 (14.0) | 0.71 |
| Marital Status | | | | | | | | | |
| Married | 234 (38.0) | 85 (37.0) | 149 (38.6) | 0.81 | 127 (38.0) | 107 (37.9) | 0.99 | 42 (39.3) | 0.87 |
| Separated or divorced | 121 (19.6) | 46 (20.0) | 75 (19.4) | 0.94 | 69 (20.7) | 52 (18.4) | 0.75 | 24 (22.4) | 0.68 |
| Widowed | 25 (4.1) | 12 (5.2) | 13 (3.4) | 0.82 | 16 (4.8) | 9 (3.2) | 0.85 | 4 (3.7) | 0.96 |
| Never married | 236 (38.3) | 87 (37.8) | 149 (38.6) | 0.90 | 122 (36.5) | 114 (40.4) | 0.54 | 37 (34.6) | 0.53 |
| Health Insurance | | | | | | | | | |
| Public insurance | 526 (85.4) | 192 (83.5) | 334 (86.5) | 0.35 | 283 (84.7) | 243 (86.2) | 0.63 | 94 (87.9) | 0.67 |
| No insurance | 87 (14.1) | 36 (15.7) | 51 (13.2) | 0.74 | 48 (14.4) | 39 (13.8) | 0.95 | 12 (11.2) | 0.50 |
| Unknown insurance | 3 (0.5) | 2 (0.9) | 1 (0.3) | | 3 (0.9) | 0 (0.0) | | 1 (0.93) | |
| Smartphone Use | | | | | | | | | |
| Yes | 539 (87.5) | 189 (82.2) | 350 (90.7) | 0.004 ** | 273 (81.7) | 266 (94.3) | <0.001 *** | 86 (80.4) | <0.001 *** |
| Health App Use | | | | | | | | | |
| Yes | 109 (17.7) | 48 (20.9) | 61 (15.8) | 0.49 | 58 (17.4) | 51 (18.1) | 0.92 | 11 (10.3) | 0.53 |
| No | 443 (71.9) | 147 (63.9) | 296 (76.7) | 0.005 ** | 225 (67.4) | 218 (77.3) | 0.02 ** | 79 (73.8) | 0.53 |
| Not applicable | 64 (10.4) | 35 (15.2) | 29 (7.5) | 0.34 | 51 (15.3) | 13 (4.6) | 0.31 | 17 (15.9) | 0.33 |
| Activity Monitor Wear | | | | | | | | | |
| Yes | 35 (5.7) | 15 (6.5) | 20 (5.2) | 0.87 | 17 (5.1) | 18 (6.4) | 0.87 | 2 (1.9) | 0.80 |
| No | 84 (13.6) | 33 (14.4) | 51 (13.2) | 0.88 | 50 (15.0) | 34 (12.1) | 0.71 | 19 (17.8) | 0.57 |
| Not applicable | 497 (80.7) | 182 (79.1) | 315 (81.6) | 0.50 | 267 (80.0) | 230 (81.6) | 0.65 | 86 (80.4) | 0.81 |
| Cigarette Smoking (past 30 days) | | | | | | | | | |
| Yes | 58 (9.4) | 23 (10.0) | 35 (9.1) | 0.91 | 35 (10.5) | 23 (8.2) | 0.77 | 12 (11.2) | 0.77 |
| History of Heart Problems | | | | | | | | | |
| Yes | 55 (8.9) | 26 (11.3) | 29 (7.5) | 0.63 | 37 (11.1) | 18 (6.4) | 0.58 | 12 (11.2) | 0.64 |
| History of Lung Problems | | | | | | | | | |
| Yes | 88 (14.3) | 37 (16.1) | 51 (13.2) | 0.70 | 49 (14.7) | 39 (13.8) | 0.90 | 13 (12.2) | 0.88 |
| History of Arthritis | | | | | | | | | |
| Yes | 189 (30.7) | 78 (33.9) | 111 (28.8) | 0.46 | 106 (31.7) | 83 (29.4) | 0.73 | 30 (28.0) | 0.88 |
| History of Bariatric Surgery | | | | | | | | | |
| Yes | 29 (4.7) | 13 (5.7) | 16 (4.2) | 0.85 | 14 (4.2) | 15 (5.3) | 0.89 | 3 (2.8) | 0.85 |
| Food Security Status | | | | | | | | | |
| High or marginal | 365 (59.3) | 123 (53.5) | 242 (62.7) | 0.10 | 190 (56.9) | 175 (62.1) | 0.31 | 68 (63.6) | 0.83 |
| Low | 182 (29.6) | 80 (34.8) | 102 (26.4) | 0.22 | 104 (31.1) | 78 (27.7) | 0.62 | 26 (24.3) | 0.73 |
| Very low | 69 (11.2) | 27 (11.7) | 42 (10.9) | 0.92 | 40 (12.0) | 29 (10.3) | 0.83 | 13 (12.2) | 0.86 |
| Clinic Site | | | | | | | | | |
| Brooklyn, New York | 194 (31.5) | 86 (37.4) | 108 (28.0) | 0.16 | 111 (33.2) | 83 (29.4) | 0.57 | 26 (24.3) | 0.61 |
| Manhattan, New York | 170 (27.6) | 65 (28.3) | 105 (27.2) | 0.88 | 85 (25.5) | 85 (30.1) | 0.50 | 21 (19.6) | 0.34 |
| Los Angeles, California | 252 (40.9) | 79 (34.4) | 173 (44.8) | 0.12 | 138 (41.3) | 114 (40.4) | 0.89 | 60 (56.1) | 0.05 |
| Continuous Variables | M (SD) | M (SD) | M (SD) | | M (SD) | M (SD) | | M (SD) | |
| Age (years) | 48.7 (12.4) | 48.4 (12.6) | 47.3 (12.3) | 0.29 | 48.7 (12.8) | 46.6 (11.8) | 0.04 * | 49.2 (13.1) | 0.06 |
| Body mass index (BMI) | 38.0 (6.6) | 38.8 (7.1) | 37.5 (6.2) | 0.02 * | 38.5 (6.8) | 37.3 (6.3) | 0.02 * | 38.4 (6.9) | 0.14 |
| Total physical activity MET-minutes per week b | 922.0 (2504.0) | 1055.0 (2689.0) | 866.3 (2313.0) | 0.63 | 792.0 (2574.0) | 960.0 (2337.0) | 0.39 | 693.0 (2330.0) | 0.09 |
| Physical activity behavioral intention | 6.2 (1.2) | 6.1 (1.2) | 6.2 (1.2) | 0.32 | 6.1 (1.2) | 6.2 (1.2) | 0.30 | 6.1 (1.3) | 0.47 |
| Intrinsic motivation for self-monitoring | 1.4 (1.5) | 1.5 (1.5) | 1.4 (1.5) | 0.42 | 1.4 (1.5) | 1.5 (1.5) | 0.41 | 1.3 (1.5) | 0.24 |
| Financial well-being score | 55.8 (12.8) | 55.3 (12.3) | 56.0 (13.1) | 0.51 | 55.5 (12.5) | 56.0 (13.2) | 0.63 | 55.6 (13.0) | 0.79 |
| Tract median household income | $34,626.1 (10,254.4) | $34,445.4 (8641.40) | $33,660.7 (8258.10) | 0.26 | $34,100.1 (8477.00) | $33,780.3 (8330.20) | 0.64 | $34,206.4 (8120.80) | 0.65 |

| Physical Activity Variables | Step Count Wear Criterion: N | Step Count Wear Criterion: M (SD) | Step Count Wear Criterion: Range | Heart Rate Wear Criterion: N | Heart Rate Wear Criterion: M (SD) | Heart Rate Wear Criterion: Range |
|---|---|---|---|---|---|---|
| Valid Days of Data in First 100 Days | 583 | 75.7 (28.0) | 0 to 100 | 583 | 62.7 (31.3) | 0 to 100 |
| Steps | | | | | | |
| Mean Daily Steps in First 100 Days | 571 | 8743.4 (3869.3) | 1671.5 to 27,905.2 | 563 | 9000.3 (4006.7) | 670.1 to 23,284.9 |
| Mean Daily Steps in Week 1 | 506 | 9057.6 (4163.8) | 1721.4 to 29,103.0 | 413 | 9525.3 (4378.2) | 683.5 to 29,103.0 |
| Total Steps in Week 1 | 506 | 63,403.5 (29,146.8) | 12,049.8 to 203,721.0 | 413 | 66,677.4 (30,647.5) | 4784.5 to 203,721.0 |
| Mean Daily Steps in Week 13 | 431 | 9040.4 (4271.7) | 1677.0 to 24,030.9 | 373 | 9448.1 (4456.8) | 705.7 to 24,030.9 |
| Total Steps in Week 13 | 431 | 63,283.1 (29,901.7) | 11,739.0 to 168,216.0 | 373 | 66,136.9 (31,197.4) | 4940.0 to 168,216.0 |
| Change in Mean Daily Steps from Week 1 to 13 | 386 | −162.4 (3026.6) | −11,961.7 to 12,856.1 | 282 | −249.4 (2868.7) | −11,961.7 to 12,856.1 |
| Active Minutes | | | | | | |
| Mean Daily Active Minutes in First 100 Days | NA | NA | NA | 563 | 43.6 (40.9) | 0 to 299.3 |
| Mean Daily Active Minutes in Week 1 | NA | NA | NA | 413 | 47.0 (43.8) | 0 to 299.7 |
| Total Active Minutes in Week 1 | NA | NA | NA | 413 | 328.9 (306.9) | 0 to 2098.0 |
| Mean Daily Active Minutes in Week 13 | NA | NA | NA | 373 | 54.8 (55.5) | 0 to 396.0 |
| Total Active Minutes in Week 13 | NA | NA | NA | 373 | 383.4 (388.2) | 0 to 2772.0 |
| Change in Mean Daily Active Minutes from Week 1 to 13 | NA | NA | NA | 282 | 6.4 (44.4) | −160.1 to 271.4 |
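The weekly totals in the table above are very close to seven times the corresponding mean daily values. The table itself does not say how totals were derived, so the 7-day scaling below is an assumption inferred from the numbers, not a documented method. A minimal Python sketch of that check, with values copied from the table and hypothetical helper names (`weekly_from_daily`, `max_relative_gap`):

```python
# Values copied from the table above: (label, mean daily value, reported weekly total).
reported = [
    ("SC steps, week 1", 9057.6, 63403.5),
    ("HR steps, week 1", 9525.3, 66677.4),
    ("SC steps, week 13", 9040.4, 63283.1),
    ("HR steps, week 13", 9448.1, 66136.9),
    ("HR active minutes, week 1", 47.0, 328.9),
    ("HR active minutes, week 13", 54.8, 383.4),
]


def weekly_from_daily(mean_daily, days=7):
    """Scale a mean daily value to a weekly total (assumed 7-day week)."""
    return mean_daily * days


def max_relative_gap(rows):
    """Largest relative difference between the scaled and the reported totals."""
    return max(abs(weekly_from_daily(m) - t) / t for _, m, t in rows)


if __name__ == "__main__":
    for label, mean_daily, total in reported:
        print(f"{label}: 7 x mean = {weekly_from_daily(mean_daily):.1f}, reported = {total:.1f}")
```

The small remaining gaps are what one would expect if the published means were rounded to one decimal place before display.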

| Physical Activity Variables | N | Concordance Coefficient (CCC) | SE CCC | 95% CI |
|---|---|---|---|---|
| Valid Days of Data in First 100 Days | 279 | 0.597 | 0.034 | 0.527–0.659 |
| Mean Daily Steps in Week 1 and Week 13 | 279 | 0.988 | 0.001 | 0.986–0.990 |
| Mean Daily Active Minutes in Week 1 and Week 13 | 279 | 0.990 | 0.001 | 0.988–0.992 |
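For readers unfamiliar with the concordance correlation coefficient (CCC) reported above, the following Python sketch computes Lin's basic CCC for one pair of measurements per participant. This is an illustration only: the values for Week 1 and Week 13 in the table come from repeated measures, which calls for a repeated-measures estimator rather than this simple form, and the function name `lins_ccc` and the sample data are hypothetical.

```python
from statistics import fmean


def lins_ccc(x, y):
    """Lin's concordance correlation coefficient for paired measurements.

    Simple single-measurement form; NOT the repeated-measures estimator
    that a Week 1 / Week 13 analysis would require.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired sequences with at least 2 values")
    n = len(x)
    mx, my = fmean(x), fmean(y)
    # Population (1/n) variances and covariance, as in Lin's definition.
    sxx = sum((a - mx) ** 2 for a in x) / n
    syy = sum((b - my) ** 2 for b in y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    # Penalizes both poor correlation and systematic shift between methods.
    return 2 * sxy / (sxx + syy + (mx - my) ** 2)


if __name__ == "__main__":
    # Hypothetical mean daily steps for four participants under each criterion.
    steps_sc = [8000.0, 9500.0, 12000.0, 6500.0]
    steps_hr = [8100.0, 9400.0, 12100.0, 6400.0]
    print(round(lins_ccc(steps_sc, steps_hr), 4))
```

Unlike Pearson's correlation, the CCC drops below 1 when one method reads systematically higher than the other, which is why it suits comparisons between two wear criteria applied to the same participants.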

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Orstad, S.L.; Gerchow, L.; Patel, N.R.; Reddy, M.; Hernandez, C.; Wilson, D.K.; Jay, M. Defining Valid Activity Monitor Data: A Multimethod Analysis of Weight-Loss Intervention Participants’ Barriers to Wear and First 100 Days of Physical Activity. Informatics 2021, 8, 39. https://doi.org/10.3390/informatics8020039
