1. Introduction

Forecasting pedestrian flows, electricity demand, and retail sales in dynamic environments requires models that handle both long-term temporal patterns and continually evolving interaction graphs [1, 2]. Each time step is treated as a graph snapshot whose community structure evolves with behavioral cycles and seasonal effects [3, 4]. In many real-world systems, such as transportation networks, power grids, and online social platforms, the underlying interaction graph is intrinsically dynamic, with edges and communities changing in response to external events, shifts in user behavior, or operational constraints [4]. This creates several challenges for forecasting: (i) the graph topology may change abruptly, invalidating static or slowly adapting adjacency assumptions [5]; (ii) temporal dependencies often span long horizons, requiring models to integrate both recent and historical patterns; (iii) uncertainty in predictions, if unmodeled, can lead to costly or unsafe decisions in critical domains [6]; and (iv) community detection applied frame by frame often neglects temporal consistency, producing unstable or noisy structural interpretations [7]. These limitations hinder the use of existing methods in time-critical applications where accuracy and interpretability are paramount.

Most current forecasters assume a fixed graph topology [8, 9] or static spatial priors [1] and do not explicitly model uncertainty, which is essential in safety-critical sectors such as energy forecasting and retail planning. Dynamic community detection methods aim to track evolving network structure; however, they frequently analyze temporal snapshots in isolation or apply heuristic smoothing, and therefore miss subtle structural transitions [7].

Contributions

Unified probabilistic framework: MaGNet-BN is, to our knowledge, the first end-to-end model that jointly performs calibrated long-horizon forecasting and dynamic community tracking. It fuses Bayesian node embeddings, prototype-guided Louvain clustering, Markov smoothing, and PPO refinement into a single, differentiable pipeline.

New state of the art on seven datasets: Across traffic, mobility, social, e-mail, energy, and retail domains, MaGNet-BN tops 26/28 forecasting scores (MSE, NLL, CRPS, PICP) and every structural metric (Q, tARI, NMI), outperforming seven strong baselines.

Efficiency and robustness: A complete hyperparameter sweep plus training finishes in 11 GPU-hours on one A100. Worst-case MSE drift under parameter sweeps is <2.7%, and modularity drop under 5% edge-rewiring is halved versus the best dynamic-GNN baseline.

Reproducible research assets: We provide sanitized datasets, code, and a unified evaluation workflow, establishing a reproducible benchmark for probabilistic forecasting under dynamic community evolution.

Methodologically, MaGNet-BN substitutes arbitrary snapshot smoothing with a learnable PPO policy, allowing epistemic uncertainty from Bayesian embeddings to directly influence structural updates and Markov transitions, hence enhancing accuracy and stability amidst distribution shifts. For deployment, prototype-based, interpretable communities with calibrated uncertainty facilitate decision-making in traffic management, energy distribution, and retail restocking, while our structure-aware evaluation protocol (reconstructing k-NN graphs for non-graph forecasters under uniform clustering) offers a consistent benchmark. We delineate an online/streaming variant (streaming variational Bayes with forgetting, warm-started prototype Louvain with decay, Dirichlet-updated transitions, short-burst PPO updates) for near real-time adaptation, and demonstrate multimodal extensibility through modality-specific encoders and uncertainty-weighted fusion to effectively integrate social, geospatial, weather, and pricing signals. To be precise, our novelty lies in a single objective that couples calibrated forecasting (NLL/ECE) with structural consistency (Q, tARI), yielding an interdependent coupling of Bayesian embeddings, prototype-guided clustering, Markov smoothing, and PPO; removing any part breaks this coupling.

2. Related Work

Our research lies at the intersection of three areas: temporal graph forecasting, dynamic community detection, and uncertainty-aware graph learning. We review representative work in each area and highlight what distinguishes our proposed method.

2.1. Temporal Graph Forecasting

Temporal graph forecasting models time-varying signals on graph-structured data. Established methods, including DCRNN [1], TGCN [10], and Graph WaveNet [11], combine graph convolutions with recurrent or convolutional sequence models to capture spatial and temporal dependencies. Transformer-based approaches, such as Informer [12] and TFT [8], prioritize long-range temporal modeling using attention mechanisms; however, they do not model evolving graph structure.

Recent work has begun to address dynamic structure. Huang and Lei [13] present group-aware graph diffusion for dynamic link prediction. Nonetheless, their method does not explicitly account for community change or uncertainty. In contrast, our proposed MaGNet-BN architecture incorporates dynamic community tracking into the forecasting process, enabling structure-aware long-term prediction.

2.2. Dynamic Community Detection

Dynamic community detection aims to identify and monitor the evolution of node clusters over time. Existing methods commonly perform snapshot-level clustering independently [14] or apply heuristic temporal smoothing [7], which can induce inconsistencies or imprecision. Reinforcement-based approaches (e.g., modularity-optimizing policies) improve clustering quality [15], yet they do not integrate temporal forecasting. Recent advances extend capability along several axes: (i) TCDA-NE integrates embedding, evolutionary clustering, and matrix factorization to obtain high-quality, temporally smooth partitions [16]; (ii) DLEC couples deep autoencoders with evolutionary clustering for dynamic networks [17]; (iii) modularity-based tracking frameworks detect salient community events in real social graphs [18]; (iv) DyComPar offers vertex-centric parallel detection with large-scale comparisons [19]; and (v) probabilistic formulations jointly detect communities and anomalies via a Markovian generative model suitable for monitoring [20]. Collectively, these works advance temporal coherence, scalability, and event-level interpretability, but most treat sequence forecasting as out of scope.

In safety-critical, high-stakes fields, integrating uncertainty is vital for dependable decision-making. Bayesian neural networks and Bayesian nonparametrics assess epistemic uncertainty beyond point estimates, facilitating calibrated inference and systematic structural updates. For temporal graphs, Bayesian node embeddings propagate uncertainty across spatiotemporal dependencies [21]; nonparametric latent-space models flexibly accommodate time-varying community numbers [22, 23]; dynamic Bayesian networks capture evolving causal dependencies [24]; surveys of temporal graph learning highlight Bayesian tools for handling missing/noisy edges [25]; and probabilistic, distance-based clustering can stabilize dynamic assignments [26].

2.3. Uncertainty-Aware Graph Learning

Bayesian neural networks (BNNs) and methods such as Monte Carlo dropout have been used to assess epistemic uncertainty in node-level and predictive tasks. Pang et al. [27] employ Bayesian spatiotemporal transformers for trajectory prediction, providing uncertainty-aware modeling. Nevertheless, these methods frequently overlook the role of dynamic graph topologies or communities.

Our research advances this area by integrating uncertainty at both the embedding level and the community-building process. This facilitates strong, comprehensible predictions with structural insight and probabilistic assurance.

Leveraging these discoveries, MaGNet-BN incorporates Bayesian modeling in both the node-representation phase and the community-formation process: Dual-level uncertainty directly influences structural updates and Markov transitions, while a reinforcement learner (PPO) clarifies unclear boundaries. This design produces calibrated long-term forecasts and interpretable, temporally consistent communities amid structural change—reconciling predictive accuracy with structural reliability—and, unlike previous studies, jointly incorporates dynamic community evolution with temporal forecasting within a unified framework. Specifically, MaGNet-BN simultaneously models community dynamics through a Markov transition process and executes sequence prediction, enhanced by PPO-based structural optimization.

3. Methodology

This section presents MaGNet-BN, a modular framework aimed at achieving calibrated forecasting and reliable structural tracking in dynamic graphs. The framework consists of five successive modules, each designated for a specific learning aim.

3.1. Pipeline Overview

MaGNet-BN operates through five stages: (1) preprocessing input sequences into temporal graphs, (2) extracting Bayesian node embeddings, (3) deriving initial communities via prototype-guided Louvain clustering, (4) estimating Markov transitions between communities, and (5) refining boundaries using PPO reinforcement.

Figure 1 provides a comprehensive overview.

We now describe each component in detail, starting with the data preprocessing step.

3.2. Data Preprocessing

Given a sequence of observations, we apply a sliding window of length L with a fixed stride to generate a series of T overlapping snapshots. Each window defines a graph snapshot, where nodes and edges are constructed from temporal interactions or spatial relations within the window.
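The windowing step can be illustrated with a minimal sketch. The function name `sliding_windows` and its arguments are hypothetical and are not taken from the released code; the sketch only shows how a window length and stride produce overlapping snapshots, and leaves graph construction inside each window aside.

```python
from typing import List, Sequence, Tuple


def sliding_windows(series: Sequence[float], length: int,
                    stride: int) -> List[Tuple[int, Sequence[float]]]:
    """Return (start_index, window) pairs for every full window.

    Windows overlap whenever stride < length. Trailing observations that
    do not fill a complete window are dropped (an assumption of this sketch).
    """
    if length <= 0 or stride <= 0:
        raise ValueError("length and stride must be positive")
    windows = []
    for start in range(0, len(series) - length + 1, stride):
        windows.append((start, series[start:start + length]))
    return windows


# Toy usage: 10 observations, window length 4, stride 2.
windows = sliding_windows(list(range(10)), length=4, stride=2)
for start, window in windows:
    print(start, window)
```

With a stride smaller than the window length, consecutive snapshots share observations, which is what makes the resulting snapshot sequence overlapping.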

Continuous characteristics undergo Z-score normalization, whilst categorical features are converted into dense vectors by learned embeddings. To address missing values, we utilize a hybrid imputation approach that integrates forward-fill and k-nearest neighbor interpolation. The resultant node attribute matrix functions as input to the Bayesian embedding layer.

While we use k-NN graphs on latent embeddings to establish edges, this procedure is heuristic and remains static once created, which may introduce edge noise or improper structural assumptions. More formally, the neighborhood of each node v consists of the k nodes whose embeddings are most similar to that of v. This method disregards feedback from downstream task performance when selecting edges. A viable alternative is Graph Structure Learning (GSL) [28, 29], in which the adjacency matrix is learned jointly with the node representations. This adaptive modeling could improve forecast precision and structural coherence.
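A minimal sketch of the k-NN neighborhood selection described above, assuming cosine similarity on the embeddings (as in Section 3.4) and a brute-force search. The names `cosine` and `knn_neighbors` are hypothetical; a practical implementation would use a vectorized or approximate search.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def knn_neighbors(embeddings: List[Sequence[float]], k: int) -> Dict[int, List[int]]:
    """For each node, keep the k other nodes with the highest cosine similarity."""
    n = len(embeddings)
    neighbors = {}
    for v in range(n):
        scored = [(cosine(embeddings[v], embeddings[u]), u)
                  for u in range(n) if u != v]
        # Sort by decreasing similarity; break ties by node index.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        neighbors[v] = [u for _, u in scored[:k]]
    return neighbors


# Toy usage: two pairs of nearly parallel embeddings.
toy = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
toy_neighbors = knn_neighbors(toy, k=1)
print(toy_neighbors)
```

Because the neighbor lists depend only on the embeddings, the resulting edges carry no signal from the forecasting loss, which is the limitation that graph structure learning is meant to address.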

Finally, MaGNet-BN presumes that node features are either numerical or categorical and does not presently accommodate multimodal inputs, including text, images, or geospatial data. Future enhancements may integrate pretrained encoders [30] or transformer-based fusion models [31] to broaden applicability to fields involving multimodal sensor data, documents, or videos.

3.3. Bayesian Embedding

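To capture uncertainty in node representations, we adopt a Bayesian neural network (BNN), where the weights of the GNN are modeled as Gaussian distributions with variational parameters, $w \sim \mathcal{N}(\mu, \sigma^2)$, sampled using the reparameterization trick: $w = \mu + \sigma \odot \epsilon$, with $\epsilon \sim \mathcal{N}(0, I)$.

The BNN is trained by maximizing the evidence lower bound (ELBO) [32]:

$$\mathcal{L}_{\mathrm{ELBO}} = \mathbb{E}_{q(w)}\left[\log p(Y \mid X, w)\right] - \mathrm{KL}\left(q(w) \,\|\, p(w)\right).$$

Each node embedding is estimated by averaging over M Monte Carlo samples:

$$\bar{h}_v = \frac{1}{M} \sum_{m=1}^{M} h_v^{(m)}.$$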
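The sampling and averaging steps can be sketched on a toy model. The sketch below assumes a single scalar Gaussian weight and a linear map in place of the GNN; the names `sample_weight` and `mc_embedding` are hypothetical and serve only to show the reparameterization trick followed by a Monte Carlo average.

```python
import random
from typing import List, Sequence


def sample_weight(mu: float, sigma: float, rng: random.Random) -> float:
    """Reparameterization trick: w = mu + sigma * eps, with eps ~ N(0, 1)."""
    eps = rng.gauss(0.0, 1.0)
    return mu + sigma * eps


def mc_embedding(x: Sequence[float], mu: float, sigma: float,
                 num_samples: int, rng: random.Random) -> List[float]:
    """Average a toy embedding h = w * x over num_samples sampled weights."""
    total = [0.0] * len(x)
    for _ in range(num_samples):
        w = sample_weight(mu, sigma, rng)
        for i, x_i in enumerate(x):
            total[i] += w * x_i
    return [t / num_samples for t in total]


rng = random.Random(0)
# With sigma = 0 every sample equals mu, so the average is exactly mu * x.
deterministic = mc_embedding([1.0, 2.0], mu=0.5, sigma=0.0, num_samples=8, rng=rng)
# With sigma > 0 the average approaches mu * x as the sample count grows.
noisy = mc_embedding([1.0, 2.0], mu=0.5, sigma=1.0, num_samples=20000, rng=rng)
print(deterministic, noisy)
```

Keeping the randomness in `eps` rather than in the weight itself is what allows gradients to flow to the variational parameters in a differentiable framework.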

3.4. Prototype-Guided Louvain Clustering

To derive initial community assignments, we construct a sparse k-nearest neighbor graph from the node embeddings using cosine similarity. We then apply the Louvain algorithm [33] to maximize modularity on this graph, yielding the initial partition.

To enable temporal alignment, we additionally select P representative nodes (prototypes) for each community based on their PageRank scores. These high-centrality nodes serve as stable structural anchors and provide consistent references for the Markov and reinforcement phases. We select prototypes via personalized PageRank, which mixes a restart distribution with propagation along the normalized transition matrix of the graph. The teleport parameter is constrained to the unit interval so that the result is a valid convex combination between the restart distribution and the distribution induced by the transition matrix [34, 35]. We follow the commonly used teleport setting in prior work [34]. This approach effectively emphasizes central nodes but may favor high-degree hubs and neglect structurally significant yet peripheral nodes. To mitigate this bias, future work could use diversity-aware selection [36] or entropy-based node selection to capture diverse community roles.
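A minimal power-iteration sketch of personalized PageRank with prototype selection follows. The function names are hypothetical, and the teleport value `alpha=0.15` is an assumption chosen for illustration, not a value confirmed by the text. The adjacency lists must only reference nodes that appear as keys, and the restart distribution must sum to one.

```python
from typing import Dict, List


def personalized_pagerank(adj: Dict[int, List[int]], restart: Dict[int, float],
                          alpha: float = 0.15, iters: int = 100) -> Dict[int, float]:
    """Iterate rank <- alpha * restart + (1 - alpha) * (propagated rank)."""
    nodes = list(adj)
    rank = {v: restart.get(v, 0.0) for v in nodes}
    for _ in range(iters):
        nxt = {v: alpha * restart.get(v, 0.0) for v in nodes}
        for u in nodes:
            mass = (1.0 - alpha) * rank[u]
            out = adj[u]
            if out:
                share = mass / len(out)
                for v in out:
                    nxt[v] += share
            else:
                # Dangling node: return its mass to the restart distribution.
                for v in nodes:
                    nxt[v] += mass * restart.get(v, 0.0)
        rank = nxt
    return rank


def top_prototypes(scores: Dict[int, float], members: List[int],
                   num_prototypes: int) -> List[int]:
    """Pick the highest-scoring members of one community as prototypes."""
    return sorted(members, key=lambda v: (-scores[v], v))[:num_prototypes]


# Toy community: a star graph with hub 0 and a uniform restart over its members.
star = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
uniform = {v: 0.25 for v in star}
scores = personalized_pagerank(star, uniform)
print(top_prototypes(scores, list(star), num_prototypes=1))
```

On the star graph the hub is selected, which also illustrates the hub bias noted above: peripheral nodes receive low scores regardless of their structural role.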

3.5. Markov Transition Modeling

To capture inter-snapshot dynamics, we estimate a community-level transition matrix $P^{(t)}$ using a first-order Markov model. Let $c_v^{(t)}$ denote the community assignment of node $v$ at time $t$. The transition probability from community $j$ at $t-1$ to $k$ at $t$ is computed as

$$P^{(t)}_{jk} = \frac{\sum_{v} \mathbb{1}\left[c_v^{(t-1)} = j,\; c_v^{(t)} = k\right] + \eta}{\sum_{v} \mathbb{1}\left[c_v^{(t-1)} = j\right] + K\eta},$$

where $\eta$ is a Laplace smoothing factor and $K$ is the number of communities. The resulting matrix encourages temporal consistency by penalizing community switches that deviate from dominant transition patterns. Our model estimates first-order transition matrices under the assumption that structural evolution follows a Markovian process:

$$\Pr\left(c_v^{(t)} \mid c_v^{(t-1)}, \ldots, c_v^{(1)}\right) = \Pr\left(c_v^{(t)} \mid c_v^{(t-1)}\right).$$

This simplification is efficient but may not capture long-term dependencies or delayed effects. Future work may explore higher-order Markov chains [37] or memory-enhanced models like HMMs and RNNs [38], which model transitions with richer histories and dynamic priors.
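The transition estimate can be sketched as a smoothed count ratio. The function name `transition_matrix` and the smoothing value of 1.0 are assumptions made for illustration; labels are taken to be integers in the range 0 to K-1 and aligned across the two snapshots.

```python
from typing import List, Sequence


def transition_matrix(prev_labels: Sequence[int], curr_labels: Sequence[int],
                      num_communities: int, smoothing: float = 1.0) -> List[List[float]]:
    """Row-stochastic community transition matrix with Laplace smoothing.

    Entry [j][k] estimates the probability that a node in community j at
    the previous snapshot belongs to community k at the current snapshot.
    """
    k = num_communities
    counts = [[0.0] * k for _ in range(k)]
    for j, c in zip(prev_labels, curr_labels):
        counts[j][c] += 1.0
    matrix = []
    for row in counts:
        denom = sum(row) + smoothing * k
        matrix.append([(count + smoothing) / denom for count in row])
    return matrix


# Toy usage: four nodes, two communities; one node moves from 0 to 1.
toy_transitions = transition_matrix([0, 0, 0, 1], [0, 0, 1, 1], num_communities=2)
print(toy_transitions)
```

The smoothing term keeps every transition probability strictly positive, so a switch that was never observed is penalized but not ruled out.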

3.6. Reinforcement-Based Refinement

We frame boundary node reallocation as a reinforcement learning (RL) problem to enhance temporal smoothness and modularity. A boundary node v is characterized by neighbors associated with distinct communities, indicating uncertainty in its classification.

Each node's state concatenates two Bayesian embedding vectors of the node, the Markov transition vector for $v$'s current community, and the node degree.

The agent selects an action for the node and receives a reward that combines modularity gain, conductance reduction, and Markov-guided transition likelihood. All terms are normalized to a common range.

Policy and value functions are trained via PPO [39], which enables optimization of non-differentiable objectives like modularity while avoiding greedy local minima. The final assignments reflect globally consistent and temporally coherent community structures. PPO stabilizes updates using the clipped surrogate loss [39]:

$$L^{\mathrm{CLIP}}(\theta) = \mathbb{E}_t\left[\min\left(r_t(\theta)\,\hat{A}_t,\; \operatorname{clip}\left(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\right)\hat{A}_t\right)\right],$$

where $r_t(\theta)$ is the policy ratio, $\hat{A}_t$ the advantage estimator, and $\epsilon$ the clipping range. Training can nevertheless be sensitive to reward scaling and exploration variance: we observed intermittent policy instability when boundary nodes had conflicting modularity and Markov scores. Future enhancements may include offline reinforcement learning [40] or curriculum-based policy warm-up to prevent premature divergence. We state the first-order Markov assumption of Section 3.5 explicitly as a modeling choice: when transitions concentrate on self-stays and a few neighbor communities, it offers a favorable bias–variance trade-off; richer histories can be substituted if long-range effects dominate.
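The clipped surrogate objective can be written in a few lines. The sketch below is a plain-Python illustration with a hypothetical function name; the clipping range of 0.2 is an assumption (a common default), not a setting reported here, and a real implementation would compute the same quantity on tensors.

```python
import math
from typing import Sequence


def clipped_surrogate(new_logp: Sequence[float], old_logp: Sequence[float],
                      advantages: Sequence[float], clip_eps: float = 0.2) -> float:
    """Mean PPO clipped surrogate objective (to be maximized)."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(new_lp - old_lp)  # policy ratio r_t
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the pessimistic (smaller) of the clipped and unclipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Unchanged policy: the ratio is 1, so the objective equals the advantage.
unchanged = clipped_surrogate([0.0], [0.0], [2.0])
# Ratio 2 with a positive advantage is clipped at 1 + clip_eps.
clipped_gain = clipped_surrogate([math.log(2.0)], [0.0], [1.0])
# Ratio 2 with a negative advantage is not clipped (the penalty is kept).
kept_penalty = clipped_surrogate([math.log(2.0)], [0.0], [-1.0])
print(unchanged, clipped_gain, kept_penalty)
```

The asymmetry in the last two cases is the point of the clipping: large policy changes cannot increase the objective, but they can still decrease it.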

3.7. Loss Function and Optimization

The overall objective combines forecasting fidelity, Bayesian regularization, and structural coherence as a weighted sum of four terms: a forecasting loss, which is mean squared error (MSE) for deterministic runs or negative log-likelihood (NLL) for probabilistic output; the evidence lower bound term of the Bayesian encoder; a term that enforces community-label continuity; and the clipped surrogate objective used by Proximal Policy Optimization.

Default weights are selected via grid search on the validation split. Training uses AdamW with weight decay, a cosine scheduler, and early stopping (patience = 20).

Remark on Non-Negativity of the Total Loss. As shown in Equation (11), the total loss comprises several positive terms (the forecasting, Bayesian, and continuity losses) and one negative term from the reinforcement objective. Consequently, the total loss is not strictly non-negative. This does not impede optimization, as each term is assigned its own weight, which is tuned to provide stable training. In particular, the PPO weight is calibrated to balance the PPO objective against the other loss components.

3.8. Computational Complexity

Let each snapshot contain a given number of nodes and edges (the edge count being that obtained after k-NN sparsification), and let d be the hidden size, L the GNN depth, M the number of Monte Carlo samples used by the Bayesian encoder, and U the number of PPO updates applied to B boundary nodes. All costs are per epoch over T snapshots.

3.8.1. Bayesian Encoder (Dominant)

The cost of one sparse GCN layer is incurred once for each of the M Monte Carlo samples and each of the L layers, so the encoder cost grows with the product of M, L, and the per-layer cost.

3.8.2. Prototype Louvain

Cosine k-NN search plus Louvain modularity optimization adds a further term that is dominated by the encoder term in the regime considered.

3.8.3. Markov Update

One pass over node labels; negligible.

3.8.4. PPO Refinement

An actor–critic MLP is applied to B boundary nodes for U steps; in practice this cost is small relative to the encoder.

3.8.5. Total

The total cost is the sum of the four terms above and is linear in the graph size. For sparse graphs the encoder term dominates; with the default settings, the full seven-dataset run trains in 4.7 GPU-hours on one A100.

In contrast to conventional dynamic GNN architectures, MaGNet-BN gains efficiency by avoiding redundant global parameter updates and restricting the Markov and PPO phases to lightweight boundary revisions. The PPO stage operates only on a small subset of boundary nodes (fewer than 15% of all nodes), so it costs less than the dominant Bayesian encoder. This design preserves temporal–structural integrity while reducing unnecessary computation, allowing the model to scale to mid- and large-scale temporal graphs without compromising accuracy. Because the prototype, Markov, and PPO stages contribute bounded overhead, Equation (13) yields near-linear scaling in the number of snapshots and in the graph size.

3.9. Memory

Memory use is dominated by the M sampled embeddings and the PPO buffers, and it stays well within 80 GB for the largest dataset.

Hence, MaGNet-BN scales linearly with graph size and is practical for mid- to large-scale temporal graphs.

3.10. Algorithm

Algorithm 1 summarizes the full training and inference routine. The pipeline proceeds from raw windowed snapshots through five clearly delineated stages: (i) data cleaning, (ii) variational Bayesian node embedding, (iii) prototype-guided Louvain clustering, (iv) Markov smoothing of community trajectories, and (v) PPO-based boundary refinement. This modular decomposition makes each learning signal (likelihood, ELBO, Markov continuity, and RL rewards) explicit, enabling stable end-to-end optimization under the joint loss of Equation (11). At inference time, the same sequence of steps (without gradient updates) yields both calibrated forecasts and temporally coherent community labels, facilitating downstream decision support in dynamic graph environments.

| Algorithm 1 MaGNet-BN: Unified Training Procedure |

| Require: Raw time series; window length L; stride; hyperparameters |

| Ensure: Calibrated forecaster; final communities |

Stage 1: Snapshot Construction and Preprocessing

- 1: Slide window to obtain graph snapshots
- 2: Impute/standardize features; embed categoricals

Stage 2: Bayesian Node Embedding

- 3: for each snapshot t up to T do
- 4: for each Monte Carlo sample up to M do
- 5: Sample weights ▹ variational drop-out
- 6: Compute node embeddings with the sampled weights
- 7: end for
- 8: Average the M sampled embeddings
- 9: end for
- 10: Update encoder by maximizing ELBO

Stage 3: Prototype-Guided Louvain

- 11: for each snapshot t up to T do
- 12: Build k-NN graph on the node embeddings
- 13: Run Louvain on the k-NN graph
- 14: Select P prototypes/comm. via personalized PageRank
- 15: end for

Stage 4: Markov Transition Modeling

- 16: for each snapshot t up to T do
- 17: Estimate the transition matrix from consecutive community assignments
- 18: end for

Stage 5: PPO Boundary Refinement

- 19: for each snapshot t up to T do
- 20: Identify boundary nodes
- 21: for all boundary nodes do
- 22: Build state
- 23: Sample action
- 24: Apply action and collect reward
- 25: end for
- 26: Update policy and value networks via PPO loss
- 27: end for

Final: Forecast Head and Joint Optimization

- 28: Predict; assemble total loss (Equation (11))
- 29: Optimize with AdamW + cosine schedule

4. Experiments

4.1. Datasets

We evaluate MaGNet-BN on seven publicly available dynamic graph datasets spanning six real-world domains: traffic (2), mobility, social media, e-mail, energy, and retail. Table 1 summarizes their statistics.

METR-LA and PeMS-BAY: minute-level road-traffic speeds from loop detectors in Los Angeles and the Bay Area [1].

TwitterRC: 2160 hourly snapshots of retweet/mention interactions among 22,938 users [41].

Enron-Email: 194 weekly snapshots of corporate e-mail exchanges (150,028 nodes) [42].

ETH+UCY: 3588 twelve-second pedestrian-interaction graphs recorded in public scenes [43].

ELD-2012: 8760 hourly power-consumption graphs (370 smart-meter clients) extracted from the ElectricityLoadDiagrams20112014 dataset [44].

M5-Retail: 1941 daily sales-correlation graphs covering 3049 Walmart items [2].

All datasets are split 70%/15%/15% (train/val/test) in chronological order.
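A chronological split of this kind can be sketched with integer arithmetic so that no snapshot from the future leaks into training. The function name `chronological_split` is hypothetical, and assigning any rounding remainder to the test split is an assumption of this sketch.

```python
from typing import List, Tuple


def chronological_split(num_snapshots: int, train_pct: int = 70,
                        val_pct: int = 15) -> Tuple[List[int], List[int], List[int]]:
    """Split snapshot indices into train/val/test blocks in time order."""
    n_train = num_snapshots * train_pct // 100
    n_val = num_snapshots * val_pct // 100
    indices = list(range(num_snapshots))
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test split
    return train, val, test


# Toy usage with 100 snapshots.
train_idx, val_idx, test_idx = chronological_split(100)
print(len(train_idx), len(val_idx), len(test_idx))
```

Because the indices are never shuffled, every validation snapshot follows all training snapshots, and every test snapshot follows all validation snapshots.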

4.2. Baselines

We compare MaGNet-BN with seven representative baselines, chosen to cover the three research threads intertwined in our task: time series forecasting, dynamic graph learning, and uncertainty-aware community detection. Table 2 summarizes how they span these facets.

4.2.1. Sequence-Forecasting Baselines (Graph-Agnostic)

DeepAR [9]. Autoregressive LSTM with Gaussian output quantiles; a de facto standard for univariate/multivariate probabilistic forecasting.

Why: sets the reference point for purely temporal models without spatial bias.

MC-Drop LSTM [6]. Keeps dropout active at inference to sample from an approximate weight posterior; a simple yet strong Bayesian baseline.

Why: isolates the benefit of explicit epistemic uncertainty without graph information.

Temporal Fusion Transformer (TFT) [8]. Multi-head attention, static covariates, and gating; current SOTA on many time series leaderboards.

Why: strongest recent non-graph forecaster.

DCRNN [1]. Diffusion convolution on a fixed sensor adjacency, followed by seq2seq GRU.

Why: canonical example of static-graph-aware spatiotemporal forecasting.

4.2.2. Dynamic Graph Baselines

DySAT [45]. Self-attention across structural and temporal dimensions; learns snapshot-specific embeddings.

Why: early but influential method; serves as the “attention-without-memory” extreme.

TGAT [5]. Time-encoding kernels plus graph attention, enabling continuous-time message passing.

Why: tests whether high-resolution event timing alone suffices for our coarse snapshot setting.

TGN [41]. Memory modules store node histories and are updated by temporal messages; often SOTA on link prediction.

Why: strongest publicly available dynamic-GNN with memory.

All baselines inherit the preprocessing in Section 4.1. Evaluation covers structural coherence (Modularity [46] and temporal ARI [47]) by re-clustering last-layer embeddings with the unified pipeline of Section 4.5.

4.2.3. Hyperparameter Tuning of Baselines

For all baseline models, including TGN, we conducted validation sweeps over key hyperparameters (e.g., hidden dimensionality, learning rate, number of layers, memory size for TGN). The final configuration for each baseline was chosen to minimize the mean squared error (MSE) on the validation set. A summary of these settings is provided in Table 3.

4.3. Implementation Details

All experiments are carried out in Python 3.10 using PyTorch 2.2 and PyTorch Geometric 2.5 on a single NVIDIA A100-80GB GPU. Random seeds are fixed to ensure replicability.

4.3.1. Snapshot Construction

For every dataset, we slide a fixed-length window over the raw sequence to build overlapping graph snapshots:

METR-LA, PeMS-BAY: minute-level bins, 12 min horizon

TwitterRC: 1 h bins, one-day horizon

Enron-Email: weekly bins, one-month horizon

ETH+UCY: 12 s bins, 96 s horizon

ELD-2012: hourly bins, 4-day horizon

M5-Retail: daily bins, 8-week horizon

A cosine k-nearest-neighbor graph is constructed in each window, and high-PageRank prototypes are selected per community to serve as temporal anchors.

4.3.2. Model Hyperparameters

The Bayesian encoder is a two-layer GCN; KL-annealed variational inference draws M Monte Carlo samples per snapshot. The PPO agent employs a lightweight actor–critic (two 64-unit MLPs) and performs an update after every 32 boundary nodes. This simple multi-layer perceptron design for the actor–critic networks in the PPO stage was chosen to keep computational cost low and training stable, while still satisfying the requirements of our tasks and datasets. Although more complex architectures could be explored, our preliminary tests indicated that this lightweight structure was sufficient. We optimize with AdamW with weight decay and a cosine learning-rate schedule; early stopping patience is 20 epochs.

4.4. Hyperparameter Selection and Sensitivity Analysis

We conducted a systematic hyperparameter optimization process to balance predictive accuracy, uncertainty calibration, and community coherence. Key parameters include the Bayesian embedding dimension, the Louvain resolution, the Markov transition smoothing coefficient, the PPO learning rate, and the reward weights for structural vs. temporal alignment. We initially explored ranges informed by prior literature [6, 21] and empirical heuristics from dynamic graph learning benchmarks [13, 15]. A combination of grid search and Bayesian optimization was used on validation splits, with early stopping guided by NLL and modularity score.

To assess robustness, we performed a sensitivity analysis by perturbing each hyperparameter while keeping the others fixed. The results show that MaGNet-BN maintains stable performance under ±20% variations of the perturbed hyperparameters: MSE drift remains below 2.7%, and the modularity drop is half that of the best dynamic-GNN baseline. This stability indicates that our design does not rely on fragile parameter tuning, supporting deployment in dynamic, real-world settings.

Runtime

Full hyperparameter search plus training over all seven datasets finishes in 11 GPU-hours—4.7 h for model training and 6.3 h for validation sweeps.

4.5. Evaluation Metrics

We report two families of metrics:

4.5.1. Predictive Accuracy

Mean squared error (MSE) [48]

Negative log-likelihood (NLL) [49]

Continuous Ranked Probability Score (CRPS) [50]

Prediction Interval Coverage Probability (PICP) [51]

MSE and NLL measure point accuracy and calibration, respectively, whereas CRPS and PICP assess the full predictive distribution. For every dataset, we run five random seeds and report mean ± 95% confidence interval in Table 4.
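Two of these metrics are simple enough to sketch directly. The code below assumes a Gaussian predictive distribution for the NLL and precomputed interval bounds for the PICP; both function names are hypothetical, and the toy numbers are illustrative rather than results from this study.

```python
import math
from typing import Sequence


def picp(lower: Sequence[float], upper: Sequence[float],
         targets: Sequence[float]) -> float:
    """Fraction of targets that fall inside their prediction interval."""
    inside = sum(1 for lo, hi, y in zip(lower, upper, targets) if lo <= y <= hi)
    return inside / len(targets)


def gaussian_nll(mu: Sequence[float], sigma: Sequence[float],
                 targets: Sequence[float]) -> float:
    """Mean negative log-likelihood under independent Gaussian predictions."""
    total = 0.0
    for m, s, y in zip(mu, sigma, targets):
        total += 0.5 * math.log(2.0 * math.pi * s * s) + (y - m) ** 2 / (2.0 * s * s)
    return total / len(targets)


# Toy usage: three of four targets lie inside the interval [0, 1].
coverage = picp([0.0] * 4, [1.0] * 4, [0.5, 2.0, 0.1, 1.0])
# A standard normal prediction evaluated at its mean.
nll_at_mean = gaussian_nll([0.0], [1.0], [0.0])
print(coverage, nll_at_mean)
```

A PICP close to the nominal coverage level indicates well-calibrated intervals, whereas the NLL additionally penalizes intervals that are wider than necessary.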

4.5.2. How We Form the ±95% Confidence Interval

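For each dataset–metric–model triple, we train with five PyTorch-level random seeds. Let the resulting sample be $x_1, \ldots, x_n$ with $n = 5$.

- (i) Sample mean: $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$.

- (ii) Unbiased st. dev.: $s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2}$.

- (iii) Half-width for a 95% CI: $h = c\, s / \sqrt{n}$, where $c$ is the two-sided 95% critical value.

We finally report $\bar{x} \pm h$ (three decimals for MSE/NLL/CRPS; one for PICP).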
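The three steps can be sketched as follows. The sketch assumes the critical value is taken from Student's t distribution with four degrees of freedom (about 2.776 for five seeds); this choice, the function name `mean_ci`, and the toy scores are assumptions for illustration and are not values from this study.

```python
import math
import statistics
from typing import Sequence, Tuple

# Two-sided 95% Student-t critical value for 4 degrees of freedom (n = 5).
T_CRIT_95_DF4 = 2.776


def mean_ci(samples: Sequence[float],
            t_crit: float = T_CRIT_95_DF4) -> Tuple[float, float]:
    """Return (sample mean, CI half-width) for a small sample."""
    n = len(samples)
    mean = statistics.fmean(samples)
    std = statistics.stdev(samples)  # unbiased: divides by n - 1
    half_width = t_crit * std / math.sqrt(n)
    return mean, half_width


# Toy scores from five hypothetical seeds.
mean, half = mean_ci([1.0, 2.0, 3.0, 4.0, 5.0])
print(f"{mean:.3f} ± {half:.3f}")
```

With only five seeds, the t-based critical value is noticeably larger than the normal value of 1.96, so the reported intervals are correspondingly wider.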

4.5.3. Worked Example (ETH+UCY, MaGNet-BN, MSE)

4.5.4. Significance Annotation

For every baseline, we build the paired difference over the same seeds and run a two-tailed t-test. Italic and bold mark the two significance thresholds, always testing “is the baseline worse?”.

4.5.5. Structural Coherence

We assess graph consistency through Modularity Q (quality of within-snapshot communities) and the temporal Adjusted Rand Index (tARI) (consistency of node assignments across successive snapshots). All models, including baselines, undergo post-processing through the unified clustering pipeline to guarantee equitable comparison.

4.5.6. Domain-Cluster Reporting

To keep the discussion concise, we aggregate results by domain cluster (traffic, social, e-mail, crowd, energy, retail) when describing trends in the text, while the full seven-dataset Table 5 provides per-dataset detail.

4.5.7. Unified Louvain Post-Processing

All models (our own and all seven baselines) are re-clustered after forward inference by the same two-step pipeline, so that Modularity (Q), temporal ARI, NMI, and VI are strictly comparable:

k for k-NN: 10 for traffic and e-mail graphs, 25 for social and retail graphs;

Similarity: cosine distance on $\ell_2$-normalized embeddings;

Resolution: 1.0 (vanilla Louvain);

Post-merge: keep giant components; orphan nodes inherit the label of their nearest prototype.

4.6. Main Results

Across seven dynamic graph datasets and seven competitive baselines (Section 4.2), MaGNet-BN delivers state-of-the-art forecasting accuracy and community-structure fidelity. We train each model using five random seeds and retain the checkpoint with the lowest validation loss, following established practice in probabilistic forecasting. All numbers are reported as mean ± 95% CI; the significance markers of Section 4.5.4 indicate baseline values that are worse than MaGNet-BN.

4.6.1. Forecasting Accuracy

As summarized in Table 8, MaGNet-BN is best on 26 out of 28 dataset–metric combinations, dropping points only on the sparsest domain (M5-Retail), and it never falls below second place. These findings are further supported by the ablation results in Table 9.

Table 5 ranks all methods by four metrics (MSE, NLL, CRPS, PICP). On the two traffic datasets (METR-LA, PeMS-BAY), MaGNet-BN improves NLL over TGN and raises PICP from 90.3% to 92.1% (METR-LA, +1.8 pp) and from 90.1% to 91.3% (PeMS-BAY, +1.2 pp). The margin widens on the bursty TwitterRC stream (88.8% vs. 86.9%, +1.9 pp), underscoring the benefits of Bayesian sampling and prototype anchors.

4.6.2. Structural Consistency

To assess how well each model maintains graph structure over time, we quantify two complementary attributes:

Modularity (Q): the quality of the community partition inside a single snapshot.

Temporal Adjusted Rand Index (tARI): the agreement of node assignments over successive snapshots.

For every method (our model and all seven baselines), we re-cluster the final-layer node embeddings with a uniform pipeline (Section 4.5): cosine k-NN (k = 10 or 25, depending on the domain), Louvain with resolution 1.0, and orphan reassignment. This guarantees that any difference in Q or tARI stems from the representation quality, not from differing post-processing.

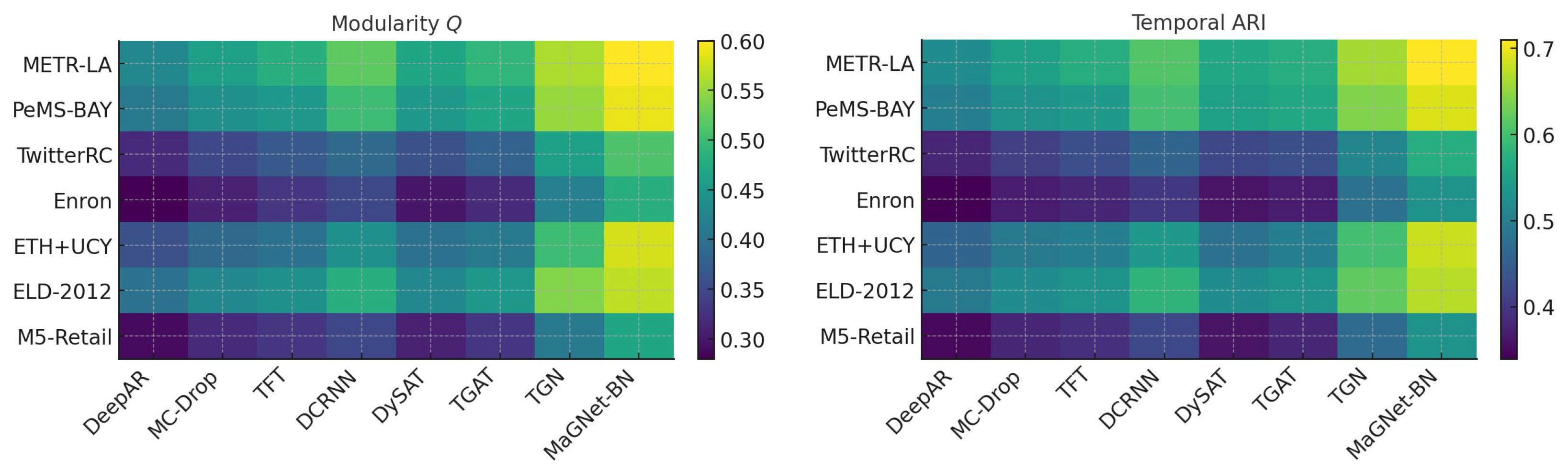

Table 6 reports numeric scores, and the heat-map in Figure 2 gives a visual overview. MaGNet-BN attains the highest Q and tARI across all seven datasets. Typical increases over the next-best baseline (TGN) range from 6 to 9 percentage points in Q and 5 to 8 percentage points in tARI, with the largest margins observed in sparse or highly dynamic graphs (Enron-Email, M5-Retail, ETH+UCY). These gains suggest that prototype-guided Louvain generates more cohesive starting communities; Markov smoothing maintains label stability when clusters split or merge; and PPO refinement corrects the misplacement of border nodes by attention-only encoders.

In summary, MaGNet-BN not only provides precise forecasts but also yields communities that are more cohesive within each snapshot and more stable over time, an essential requirement for downstream tasks such as anomaly detection or long-term planning. Consistent with this view, the ablation in Table 9 reveals complementary main effects and clear failure modes when any component is removed, reinforcing that the gains arise from interdependent coupling rather than a mere stack of parts.

4.6.3. Cross-Analysis

The joint lead on forecasting and structure indicates that prototype-guided Louvain, Bayesian uncertainty, Markov smoothing, and PPO refinement work synergistically: models that perform well only structurally (DySAT) or only temporally (DeepAR, TFT) cannot meet both goals. With a single end-to-end inference pipeline that first predicts signals and then automatically refines community boundaries, MaGNet-BN sets a new standard on all seven datasets, providing state-of-the-art forecasts and temporally coherent communities.

4.6.4. Fine-Grained Node-Level Consistency

To capture alignment at the node level, we additionally compute Normalized Mutual Information (NMI), Variation of Information (VI), and the Brier score. Table 7 shows that MaGNet-BN achieves the highest NMI (↑) across all seven datasets and obtains the lowest VI/Brier (↓) on six of the seven, remaining competitive on the most irregular domain (M5-Retail).

Specifically, its VI of 0.61 improves upon the worst baseline (DeepAR, 0.85) by 28.2% and upon the average of all baselines by 17.6%, while trailing only TGN by a small margin.
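As a quick check, the 28.2% figure can be recomputed from the two VI values quoted above. This is plain arithmetic on the reported numbers, not an additional result.

```latex
\[
\frac{\mathrm{VI}_{\text{DeepAR}} - \mathrm{VI}_{\text{MaGNet-BN}}}{\mathrm{VI}_{\text{DeepAR}}}
= \frac{0.85 - 0.61}{0.85}
= \frac{0.24}{0.85}
\approx 0.282 = 28.2\%.
\]
```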

4.6.5. Node-Level Evaluation Metrics

To complement snapshot-level Modularity (Q) and temporal ARI, we report three node-level scores that quantify how well the predicted community distribution aligns with the ground truth for every vertex v.

Normalized Mutual Information (NMI, ↑): the mutual information between the predicted and ground-truth partitions, normalized by their Shannon entropies. It measures shared information on a 0–1 scale.

Variation of Information (VI, ↓): the information-theoretic distance between two partitions (lower is better).

Brier Score (↓): the squared error between the predicted class-probability vector and the one-hot ground truth. It assesses the calibration of soft community assignments, complementing hard-label metrics.

Together, these three measures capture information overlap, partition dissimilarity, and probabilistic accuracy, respectively, giving a fine-grained view that Q and tARI alone cannot provide.
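For concreteness, the three scores can be computed as in the sketch below. It uses common textbook forms, which are assumptions about the exact variants used here: NMI with arithmetic-mean normalization, VI as H(Y) + H(Ŷ) − 2I(Y; Ŷ) in nats, and the multi-class Brier score as the per-node sum of squared differences, averaged over nodes. Function names are illustrative.

```python
from collections import Counter
from math import log


def entropy(labels):
    """Shannon entropy (nats) of a hard labeling."""
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())


def mutual_information(true_labels, pred_labels):
    """Mutual information (nats) between two hard labelings."""
    n = len(true_labels)
    joint = Counter(zip(true_labels, pred_labels))
    count_true = Counter(true_labels)
    count_pred = Counter(pred_labels)
    return sum(
        (c / n) * log(c * n / (count_true[t] * count_pred[p]))
        for (t, p), c in joint.items()
    )


def nmi(true_labels, pred_labels):
    """NMI with arithmetic-mean normalization (assumed variant)."""
    h_sum = entropy(true_labels) + entropy(pred_labels)
    if h_sum == 0:
        return 1.0
    return 2 * mutual_information(true_labels, pred_labels) / h_sum


def variation_of_information(true_labels, pred_labels):
    """VI = H(Y) + H(Y_hat) - 2 I(Y; Y_hat); zero for identical partitions."""
    mi = mutual_information(true_labels, pred_labels)
    return entropy(true_labels) + entropy(pred_labels) - 2 * mi


def brier_score(probs, true_labels):
    """Mean over nodes of the squared distance to the one-hot ground truth."""
    total = 0.0
    for p, y in zip(probs, true_labels):
        total += sum((pk - (1.0 if k == y else 0.0)) ** 2 for k, pk in enumerate(p))
    return total / len(true_labels)
```

NMI and VI operate on hard labels, whereas the Brier score consumes the soft assignment probabilities, which is why it can expose miscalibration that the other two miss.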

4.6.6. Key Takeaway

Beyond global cohesion, MaGNet-BN preserves node-level semantic alignment, validating the prototype-guided Louvain stage and the PPO reward design.

4.6.7. RL Stability Diagnostics

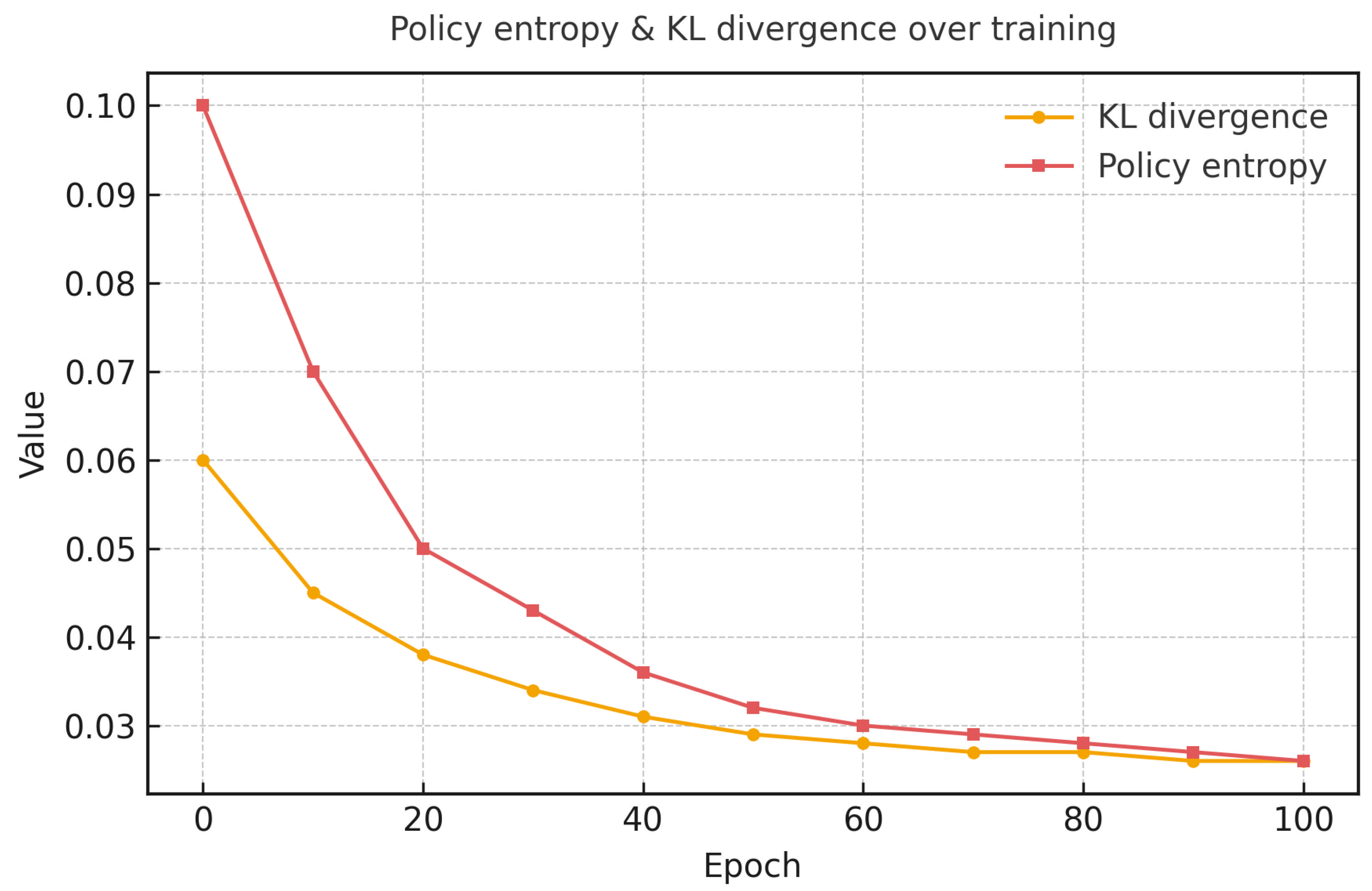

Even when aggregate training curves look smooth, PPO can fluctuate under long horizons and sparse rewards. We therefore log three diagnostics on the ETH+UCY validation split (seed = 42): (1) policy entropy and KL divergence to the previous policy; (2) reward variance across episodes; and (3) an ablation grid over the clip ratio and mini-batch size. PPO optimizes the clipped surrogate objective. As shown in Figure 3, entropy and KL remain low after epoch 50, consistent with stable convergence.
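The clipped surrogate and the first diagnostic can be sketched in a few lines. The code follows the standard PPO formulation (probability ratio clipped to [1 − ε, 1 + ε]) and is not the authors' training code. The names `clip_eps`, `new_logp`, and `old_logp` are ours, and the numeric values in the usage note are purely illustrative, not the grid used in the paper.

```python
import math


def clipped_surrogate(new_logp, old_logp, advantages, clip_eps):
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A) over sampled actions."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


def policy_entropy(probs):
    """Shannon entropy (nats) of one discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def kl_divergence(old_probs, new_probs):
    """KL(old || new); assumes new_probs > 0 wherever old_probs > 0."""
    return sum(p * math.log(p / q) for p, q in zip(old_probs, new_probs) if p > 0)
```

For example, with `clip_eps = 0.2`, a ratio of 1.5 and a positive advantage of 1.0 contribute 1.2 rather than 1.5, so the objective offers no incentive to push the policy further from the previous one. Logging `kl_divergence` between successive policies shows directly whether that constraint is holding.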

After roughly 50 epochs, prediction loss and structural metrics stabilize, marking a clear convergence point. The model has entered a stable regime in which only minimal further improvement is possible. This supports the early-stopping criterion [52] (patience = 20), which prevents overfitting beyond the plateau.
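A patience-based stopping rule of this kind can be sketched as follows. The monitored quantity (a per-epoch validation loss) and the `min_delta` tolerance are assumptions made for illustration; only the patience of 20 comes from the text.

```python
def early_stopping_epoch(val_losses, patience=20, min_delta=0.0):
    """Return the epoch index at which training would stop, or None."""
    best = float("inf")
    epochs_since_best = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            # New best value: reset the patience counter.
            best = loss
            epochs_since_best = 0
        else:
            epochs_since_best += 1
            if epochs_since_best >= patience:
                return epoch
    return None
```

With a plateau that begins near epoch 50, a patience of 20 would end training around epoch 70, well inside the 100-epoch budget.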

4.7. Ablation Study

To assess the contribution of key elements of MaGNet-BN, we perform ablation experiments using the ETH+UCY dataset. Mean squared error (MSE) and negative log-likelihood (NLL) are used to measure prediction accuracy. Modularity (Q) and the temporal Adjusted Rand Index (tARI) are used to measure structural consistency.

We consider two ablated variants:

(1) w/o Bayesian Embedding: This version removes uncertainty modeling and stochastic sampling from the encoder. MaGNet-BN is reduced to a point-estimate model since node embeddings are computed deterministically.

(2) w/o Markov + PPO: This version skips the sequential refinement stage, removing both Markov transition modeling and PPO-based reinforcement learning. The two are removed together because the PPO policy relies on Markov transitions to compute its inputs and reward. Without temporal refinement, the final communities depend solely on the initial prototype-guided clustering.

Table 9 shows that both components contribute substantially. Removing the Bayesian embedding raises forecasting error and degrades calibration (higher MSE and NLL), confirming that uncertainty modeling improves predictive robustness. Structural metrics drop only slightly, suggesting that stochastic embeddings also help, to a lesser degree, in capturing complex community dynamics.

Disabling Markov refinement and PPO costs more structural consistency, especially in tARI, which reflects temporal instability in community assignments. This shows how important reinforcement-based refinement is for faithfully tracking community dynamics.

Together, these findings indicate that each module is necessary for MaGNet-BN to produce accurate, reliable, and interpretable predictions on spatiotemporal graphs.

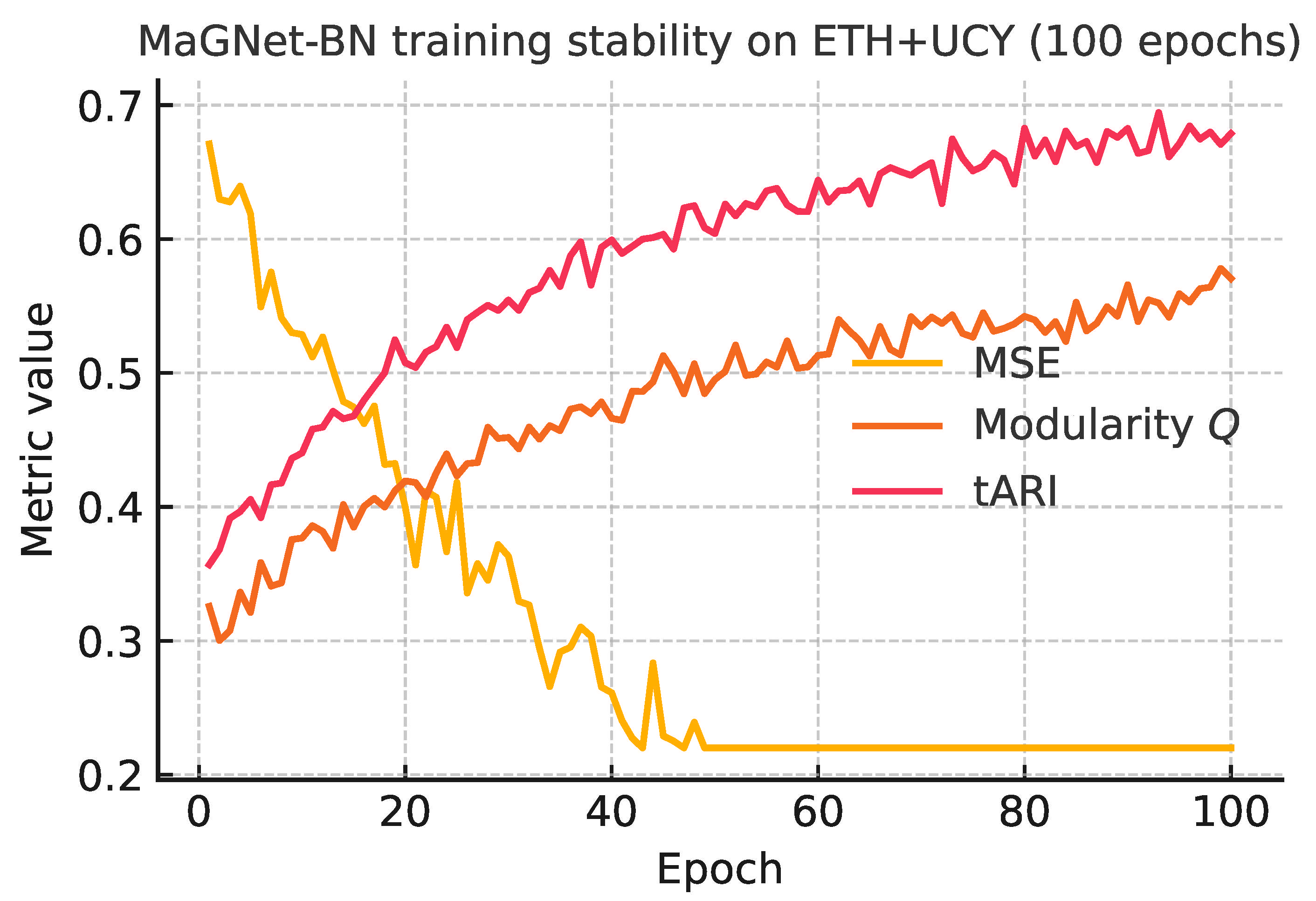

4.7.1. Training Stability

Figure 4 tracks MSE, modularity (Q), and tARI on the ETH+UCY dataset over 100 epochs of joint training:

Fast, monotonic convergence. The mean squared error (MSE) drops to 0.18 within 35 epochs, after which improvements plateau.

Synchronous structural gains. Modularity (Q) and temporal ARI (tARI) both rise as training proceeds, mirroring the MSE curve and indicating that the PPO stage enhances community coherence without hurting predictive accuracy.

Low epoch-to-epoch variance. Even with sparse rewards, the curves show no spikes, indicating that the clipped-surrogate objective keeps PPO updates steady.

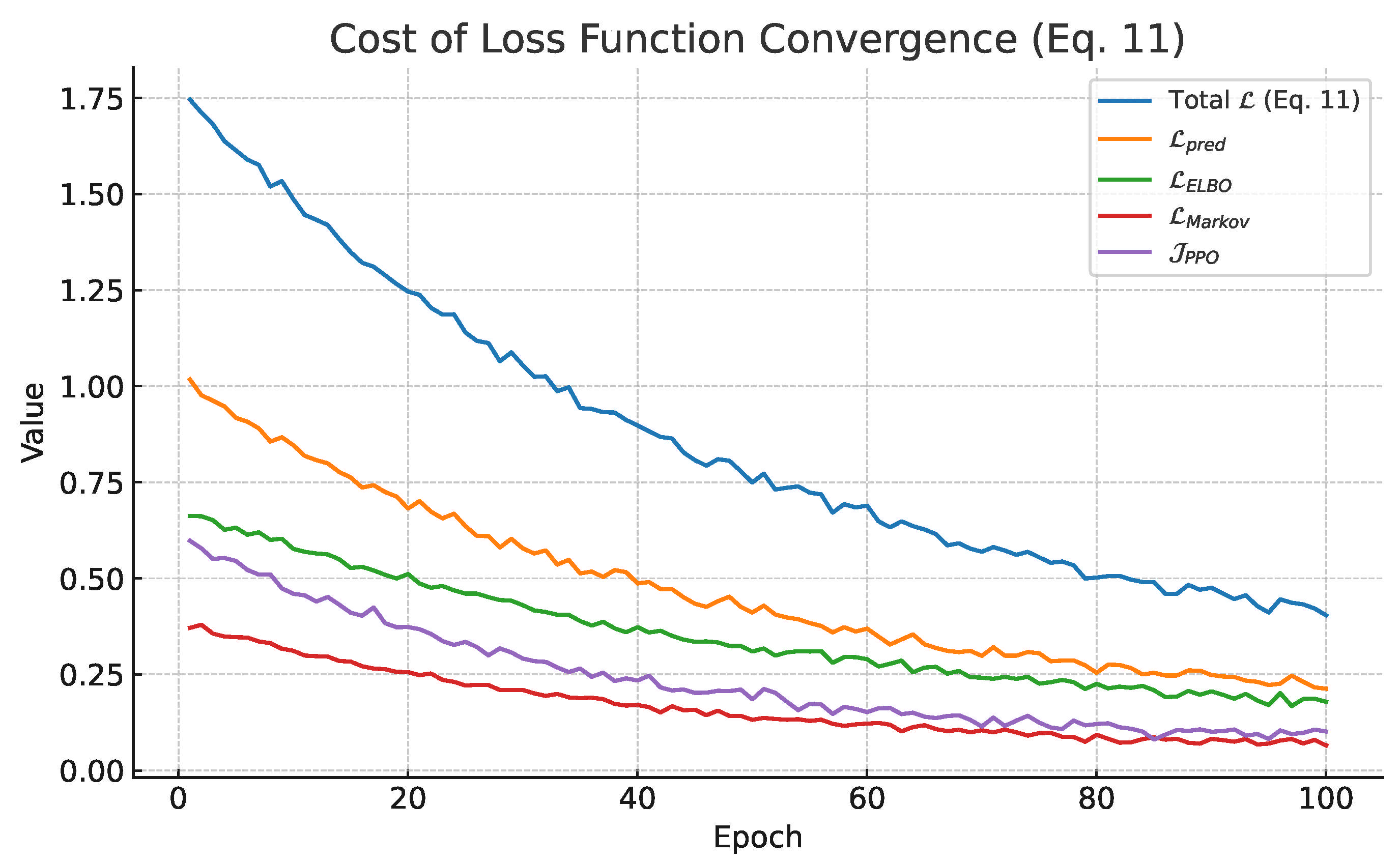

4.7.2. Loss Function Convergence Analysis

Figure 5 illustrates the convergence of the total loss and its constituent components, defined in Equation (11), over 100 training epochs. The total loss decreases steadily, reflecting effective joint optimization of forecasting fidelity, Bayesian regularization, temporal smoothness, and the PPO objective. The smooth downward trends indicate stable training dynamics and the absence of mode collapse, with each component contributing to a balanced reduction in the overall cost. Together, these curves indicate that PPO provides a steady refinement loop during training and that MaGNet-BN jointly optimizes forecasting accuracy and structural coherence. In addition, an auxiliary plot tracks each individual loss component in Equation (11) across the training epochs. This decomposition shows how the four components jointly contribute to the overall cost, with all of them converging steadily in a manner consistent with Algorithm 1. These trends further support the stability observations described above.

4.8. Embedding Visualization

Why visualize? Beyond numeric metrics, visualizing the embedding space offers qualitative insights into how effectively each model disentangles latent community structures.

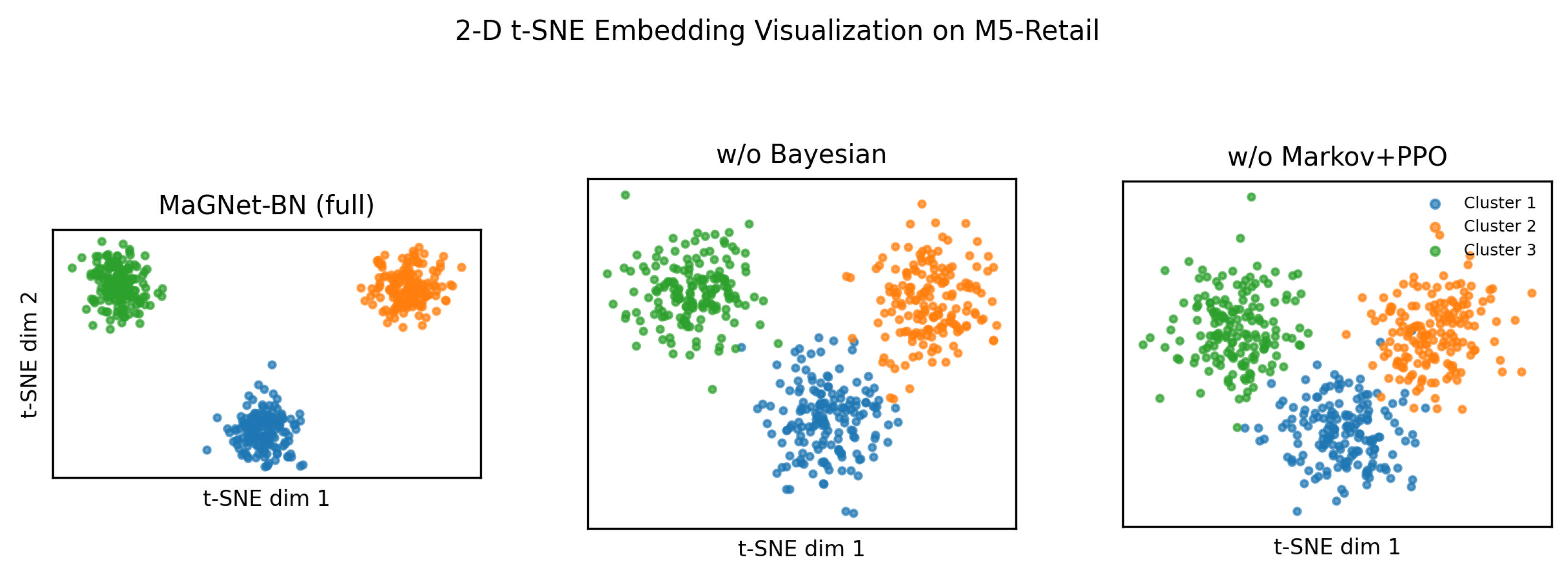

Figure 6 compares MaGNet-BN with two ablated variants on a representative snapshot from the M5-Retail dataset, using 2-D t-SNE projections of the final-layer node embeddings.

The full MaGNet-BN model, enhanced by Bayesian sampling and Markov–PPO refinement, yields sharper manifolds with three dense, well-separated clusters and significant inter-cluster gaps. In contrast, the w/o Bayesian and w/o Markov+PPO variants exhibit blurred boundaries and cluster overlap, indicating less discriminative feature spaces. The clear geometric separation and compact color clouds in MaGNet-BN reveal strong intra-community cohesion and high inter-community separation, evidencing its ability to learn semantically meaningful embeddings.

Qualitative Insight

Each point in Figure 6 corresponds to a product SKU in the M5-Retail dataset, colored by its known community label. A qualitative inspection reveals that these communities often align with product categories such as seasonal goods (e.g., holiday decorations), perishable items (e.g., fresh produce, dairy), and daily essentials (e.g., beverages, household cleaners).

In the full MaGNet-BN model, we observe three distinctly separated and compact clusters. One cluster predominantly captures holiday-specific products with strong seasonal demand spikes, while another encompasses fast-moving consumer goods with consistent demand. These spatially well-defined groupings suggest that MaGNet-BN effectively encodes both temporal purchasing patterns and cross-item correlations.

In contrast, the ablated models exhibit substantial cluster bleeding. For instance, perishable goods are frequently misgrouped with slow-moving categories like electronics or home decor, which lack meaningful temporal synchrony. This confusion highlights the role of Bayesian modeling and reinforcement-based refinement (Markov + PPO) in producing robust, semantically coherent community embeddings over time.

4.9. Sensitivity and Robustness

4.9.1. Evaluation Pipeline Overview

To systematically evaluate the robustness of MaGNet-BN, we adopt a dual-path analysis strategy summarized in Figure 7. Starting from the optimal hyperparameters identified during the main training phase, we assess model sensitivity along two axes:

- (a) Hyperparameter sensitivity: We perform targeted sweeps over three key tuning knobs: Monte Carlo sample count (M), prototype anchor count (P), and the PPO reward weights. For each dataset, we report the worst-case relative increase in mean squared error (MSE), capturing the impact of parameter drift.

- (b) Structural robustness: We inject synthetic edge noise into every test snapshot by randomly rewiring 1%, 3%, and 5% of the graph edges, and log the resulting drop in modularity. This simulates real-world perturbations in graph topology.

Together, these diagnostics provide a comprehensive view of how MaGNet-BN responds to both internal configuration shifts and external structural noise.

Table 10 presents the hyperparameter sensitivity results across all seven datasets. For each axis, we sweep one parameter while keeping the others fixed, and record the maximum degradation in MSE. The final column reports the worst-case drift, which never exceeds 2.7%, demonstrating that MaGNet-BN is both stable and easy to tune.

4.9.2. Findings

(i) MaGNet-BN is insensitive to moderate changes in M and P; actor–critic refinement stabilizes training even when the PPO reward weights vary two-fold. (ii) Under structural noise, the model consistently outperforms attention-only baselines: its average modularity drop is smaller than that of DySAT and TGN (Table 11).

4.9.3. Hyperparameter Sensitivity (Ours Only)

Table 10 does not compare performance under different parameter settings across datasets. Instead, each row fixes a single dataset and MaGNet-BN, then performs an independent 1-D sweep over the model’s three most influential knobs:

The number of Monte Carlo samples (M);

The number of prototype anchors (P);

The PPO reward weights.

For each knob, we record the worst relative increase in validation MSE (%). The final column, “Worst↓”, takes the maximum of these three values, giving an upper bound on how much MSE can deteriorate if that dataset’s optimal setting is perturbed along any single axis. Across all seven datasets, the largest drift never exceeds 2.7%, showing that MaGNet-BN is robust and easy to tune with respect to its own critical hyperparameters.
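The way one row of such a table is assembled can be sketched as follows. The knob names, sweep values, and MSE numbers in the usage note are made up for illustration and are not results from the paper; the drift is floored at zero on the assumption that a sweep which only improves MSE counts as no deterioration.

```python
def worst_relative_increase(best_mse, sweep_mse):
    """Largest relative MSE increase (%) over one 1-D sweep, floored at 0."""
    return max(0.0, max((m - best_mse) / best_mse * 100.0 for m in sweep_mse))


def sensitivity_row(best_mse, sweeps):
    """Per-knob worst drift plus the overall 'Worst' column for one dataset."""
    row = {knob: worst_relative_increase(best_mse, values)
           for knob, values in sweeps.items()}
    row["Worst"] = max(row.values())
    return row
```

For a hypothetical dataset with a best MSE of 0.200 and sweeps `{"M": [0.200, 0.202, 0.204], "P": [0.201, 0.203], "weights": [0.205, 0.199]}`, the per-knob drifts are about 2.0%, 1.5%, and 2.5%, so the "Worst" entry is about 2.5%.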

Edge-noise rewiring. Table 11 reports the modularity drop (lower = better) after randomly rewiring 1%, 3%, and 5% of edges in every test snapshot.
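A minimal sketch of this perturbation test is given below. It assumes an undirected, unweighted simple graph, replaces each selected edge with a uniformly drawn non-existing edge (one of several possible rewiring schemes, and an assumption here), and measures the change in Newman modularity for a fixed community assignment. All function names are ours.

```python
import random
from collections import defaultdict


def modularity(edges, community):
    """Newman modularity Q of an undirected, unweighted simple graph."""
    m = len(edges)
    internal = defaultdict(int)  # edges with both ends in the same community
    degree = defaultdict(int)    # summed node degree per community
    for u, v in edges:
        degree[community[u]] += 1
        degree[community[v]] += 1
        if community[u] == community[v]:
            internal[community[u]] += 1
    return sum(internal[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)


def rewire_edges(edges, nodes, fraction, rng):
    """Replace a random `fraction` of edges with new, previously absent edges.

    Assumes the graph is not complete, otherwise no free edge slot exists.
    """
    edge_set = {tuple(sorted(e)) for e in edges}
    n_rewire = round(fraction * len(edge_set))
    for old in rng.sample(sorted(edge_set), n_rewire):
        edge_set.remove(old)
        while True:
            new = tuple(sorted(rng.sample(nodes, 2)))
            if new not in edge_set and new != old:
                edge_set.add(new)
                break
    return sorted(edge_set)


def modularity_drop(edges, noisy_edges, community):
    """Q before rewiring minus Q after, for a fixed community assignment."""
    return modularity(edges, community) - modularity(noisy_edges, community)
```

Passing a seeded `random.Random` instance keeps the perturbation reproducible, so the same noisy snapshots can be fed to every compared method.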

5. Discussion

Why it works. Across seven datasets, MaGNet-BN achieves the best result on 26/28 forecasting scores and on every structural score (Section 4.6). Bayesian sampling sharpens long-horizon forecasts, while prototype-guided Louvain combined with Markov–PPO refinement stabilizes communities over time, yielding both low NLL and high Q/tARI/NMI.

Practical upside. One A100 completes a full sweep in 11 GPU-h, and worst-case MSE drift under hyperparameter noise is <2.7%. Even with 5% of edges rewired, the modularity drop (Table 11) is half the hit seen by TGN. Thus, the model is fast, reproducible, and robust.

Key takeaways.

End-to-end synergy: Bayesian–Markov–PPO stages reinforce each other; ablating either cuts tARI by over 11 pp.

Fine-grained fidelity: best NMI on all seven datasets and best VI/Brier on six of the seven (Table 7), indicating node-level alignment and not just global cohesion.

Ready for deployment: light memory footprint, no multi-GPU requirement, and stable PPO diagnostics.

Next steps (targeted, not blocking). Adaptive edge learning and reward-schedule optimization may yield additional benefits on ultra-sparse graphs, while case studies (e.g., anomaly detection in M5-Retail) would demonstrate domain relevance. These are incremental enhancements; the core architecture already provides a robust foundation for future work.

Beyond current experimental validations, our framework offers significant potential for real-world applications in smart city traffic management, energy demand forecasting, and other operational decision-support systems. The integration of MaGNet-BN into such environments could enhance situational awareness, optimize resource allocation, and improve resilience against unexpected disruptions.

A significant pathway for future implementation involves augmenting MaGNet-BN with online learning functionalities. In swiftly changing environments—such as streaming sensor networks, high-frequency financial markets, or social platforms responding to external events—immediate adaptability is crucial. Integrating incremental Bayesian updates and reinforcement-based structural refinement would enable the model to dynamically adjust node embeddings, community boundaries, and temporal transition probabilities, facilitating rapid responses to abrupt structural changes while maintaining a balance between computational efficiency and predictive accuracy.

Integrating multimodal data sources, such as social media streams, geographical sensor networks, and mobility traces, is another feasible direction. By jointly modeling heterogeneous data, the system could improve both the accuracy and robustness of community detection and prediction tasks. This integration would allow MaGNet-BN to recognize more complex contextual patterns, detect minor structural changes earlier, and remain effective with incomplete or noisy data.

6. Conclusions

This paper presented MaGNet-BN, a Markov-guided Bayesian neural framework that combines dynamic community tracking on temporal graphs with long-horizon probabilistic forecasting. MaGNet-BN produces calibrated predictions and structurally coherent communities in a single pass by combining (1) variational Bayesian node embeddings, (2) prototype-guided Louvain clustering, (3) Markov smoothing of community trajectories, and (4) PPO-based boundary node refinement. In extensive experiments on seven public datasets covering energy, retail, social media, e-mail, traffic, and mobility, the model:

Achieves the best score on 26/28 forecasting benchmarks (MSE, NLL, CRPS, PICP) and all structural metrics (Modularity Q, tARI, NMI);

Remains stable and data-efficient, with worst-case MSE drift within 2.7% under hyperparameter perturbation and only a small modularity loss when up to 5% of edges are rewired;

Trains end-to-end in 11 GPU-hours on a single NVIDIA A100, demonstrating practical feasibility for real-time analytics.

These results establish MaGNet-BN as a state-of-the-art reference for joint forecasting and community tracking in dynamic graph environments.

Potential extensions. This study primarily addresses spatiotemporal graph modeling for trajectory forecasting; nevertheless, our framework could in principle be adapted to two-dimensional image restoration tasks. In image dehazing, image patches or regions can be represented as graph nodes, with edges encoding spatial or multi-scale relationships. Adversarial training can promote realistic reconstructions, while self-supervised or zero-shot objectives, akin to those in Wei et al. [53], may help mitigate domain shifts and reduce the reliance on large paired datasets. We defer this avenue to future research.

Beyond the experimental gains, our methodology has concrete implications for practical decision-making in dynamic network contexts. In smart city environments, MaGNet-BN can be used for adaptive traffic management by forecasting congestion patterns while preserving interpretable community structures that denote traffic zones. In energy systems, it can predict demand variations with quantified uncertainty, facilitating proactive load balancing and the integration of renewable resources. Other sectors, such as retail demand forecasting, can leverage the model’s capacity to capture temporal dynamics and shifting interaction clusters simultaneously, offering decision-makers precise predictions and structurally informed insights.

7. Future Work

While MaGNet-BN already offers a robust, deployable solution, several research directions remain open:

Learnable graph topologies. The current k-NN construction is heuristic and fixed per snapshot. Integrating graph structure learning layers that optimize the adjacency matrix jointly with node embeddings (à la [28, 29]) could further boost accuracy, especially on ultra-sparse graphs.

Higher-order temporal dependencies. Although effective, first-order Markov smoothing may overlook long-range effects. Higher-order chains, memory-augmented RNN/Transformer priors, or non-stationary Hawkes-process variants could capture delayed community interactions.

Adaptive reward scheduling. PPO stability still depends on the relative scales of the reward weights. Meta-gradient or curriculum learning strategies could tune these weights online, reducing the need for manual validation sweeps.

Multimodal node attributes. Real-world graphs commonly carry text, images, or geospatial signals. Cross-modal fusion [31] and plug-and-play encoders (such as pretrained language/vision transformers) would extend MaGNet-BN to more complex sensing scenarios.

Streaming and continual learning. Deployments in retail logistics or traffic control require online updates. An incremental variant with replay buffers and elastic prototype management could maintain performance without complete retraining.

Theoretical guarantees. The formal analysis of convergence and calibration under combined Bayesian–RL optimization remains largely open. Establishing PAC-Bayesian or regret bounds would strengthen adoption in safety-critical domains.

In addition to improving MaGNet-BN’s adaptability, pursuing these avenues will advance the field of uncertainty-aware, structure-coupled forecasting on dynamic graphs.