1. Introduction

The growing need to process, detect, and automatically analyse large volumes of data has motivated numerous applications of artificial intelligence techniques in astronomy. Machine learning techniques are now needed to model, detect, predict, or classify events in real time at observatories, and to facilitate the analysis of data coming directly from surveys [1,2].

Such studies require artificial data, i.e., data generated by simulations, theoretical models, and statistical experiments, among others. In the context of stellar parameter determination, these are theoretical spectra computed from physical assumptions about the radiative transfer in the stellar atmosphere, known as stellar atmosphere models [3,4,5,6,7]. These synthetic spectra are compared with observed spectra obtained from various databases to infer the physical parameters of the stars. In this work, we use machine learning techniques to develop a method that finds the model that best represents an observed spectrum within a database containing a large number of theoretical spectra.

We used the ISOSCELES grid (see Section 2.2), which was computed to analyse the stellar and wind properties of massive stars (above approximately 8 solar masses, M⊙) and, therefore, to study the important role that stellar winds play in the determination of mass-loss rates [8]. The challenge was to compare these models (also called synthetic spectra) with real (observed) stellar spectra in order to infer the parameters of the object under study: the synthetic spectrum that best matches the observed spectrum is selected to determine the stellar and wind parameters.

During the last decades, the procedure for determining the stellar parameters of massive stars has been refined into a standard with excellent results [9,10,11]. Moreover, substantial improvements in stellar atmosphere models and in the description of physical processes have further improved the estimation of these parameters [12]. In general, the procedure consists of a visual comparison of the observed spectral line profiles with the corresponding synthetic lines from the models. For massive stars, the hydrogen Balmer lines are analysed because of their strength and their direct relation to surface gravity and stellar winds, together with the helium lines. Spectroscopic analysis in the optical range makes it possible to determine the effective temperature (Teff), surface gravity (log g), micro-turbulence velocity (ξ), and the abundances of elements such as helium and silicon. In addition, the ISOSCELES spectral models also provide the line-force parameters α, k, and δ, where α is the ratio between the line force from optically thick lines and the total line force; k is related to the number of lines effectively contributing to the driving of the wind; and δ accounts for changes in the ionisation throughout the wind.

In this context, the challenge is summarised as finding the best spectral model for a real spectrum using the ISOSCELES grid, which has more than 573 thousand samples. In addition, each model has six variations according to the different values of the micro-turbulence velocities (

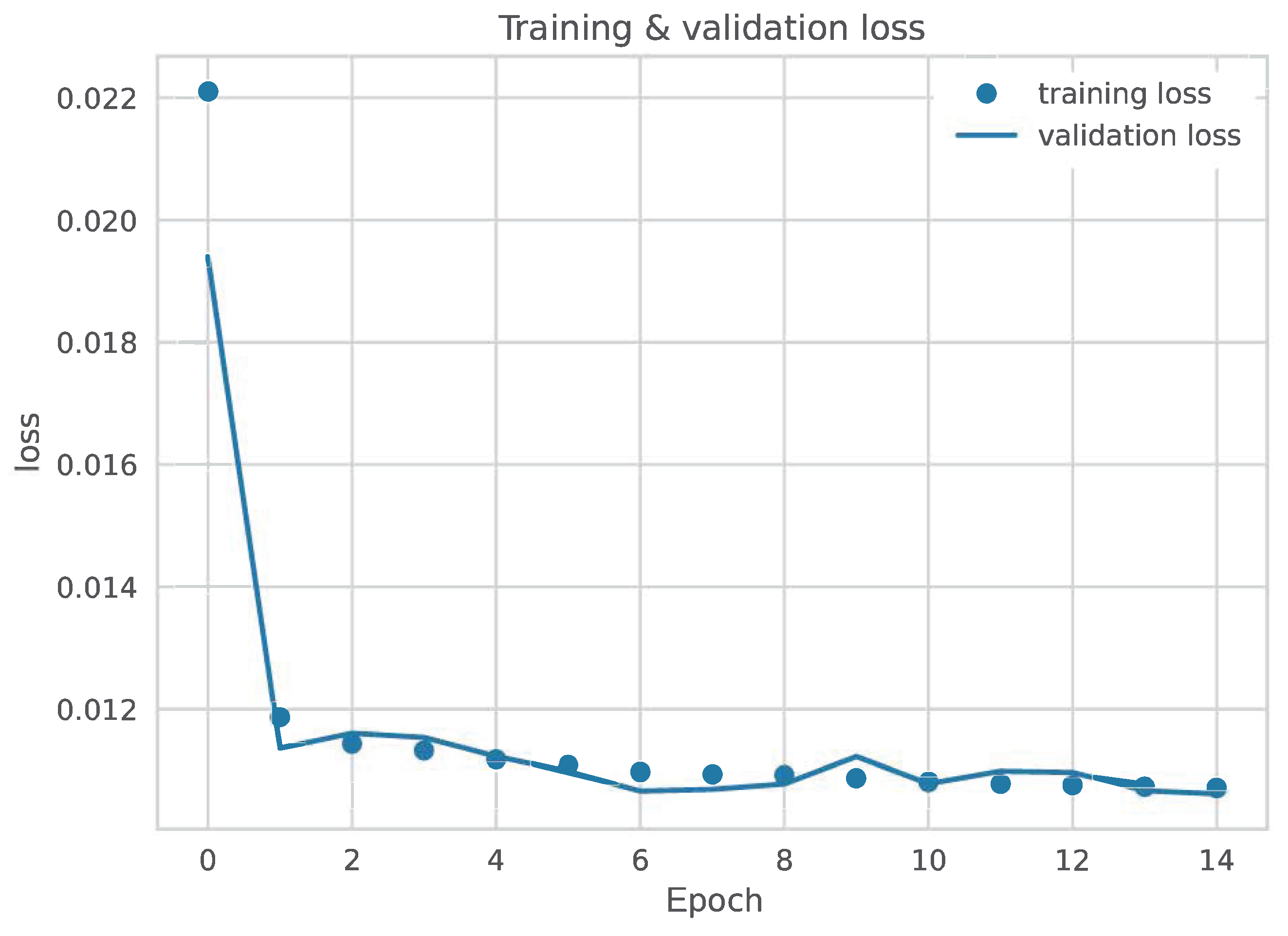

). Due to the complexity of the problem, machine learning techniques were used to limit data processing and search times. Specifically, clustering and deep learning techniques were used for grouping data, since multi-layer neural networks manage to extract the main features of the data automatically and thus reduce the dimensionality of the problem [

13,

14,

15].

Similar problems have been addressed successfully with deep learning techniques [16,17], although for much narrower ranges of stellar parameters (Teff ∈ [4000, 11,500] K; log g ∈ [2, 5] dex) than those considered in this work, and without accounting for the complexity of hydrodynamic wind solutions in the stellar models. Another case is reported in [18], where the authors trained long short-term memory (LSTM) ANNs with the synthetic spectra produced by [19,20], covering the region of OB main-sequence stars in the H-R diagram. Different noise levels were added to the synthetic spectra to make the ANNs less sensitive to the differences found in observed spectra. However, the number of synthetic spectra used to train the ANN was 25,718, considerably lower than the 573,190 spectral models used in our work; consequently, their parameter estimates have coarser granularity and resolution than ours. Another recent method, based on a recurrent artificial neural network, is described in [21]. That system was trained with 5557 synthetic spectra computed with the stellar atmosphere code CMFGEN, covering the following stellar parameter ranges: Teff ∈ [20,000, 58,000] K; log g ∈ [2.4, 4.2] dex; and stellar masses from 9 to 120 solar masses. Autoencoder architectures have also been used to reduce the dimensionality of spectra by projecting them into a new feature space [22]; the learned representation is then transferred to convolutional neural networks that determine the stellar parameters of M dwarf stars.

The main contributions of this paper are the following:

In this article, we used a grid of spectral models comprising more than 500,000 samples. These models belong to the first grid that considers hydrodynamic solutions for the stellar winds together with radiative transfer. As a result, our method produced stellar parameters with greater resolution and a better fit than other methods.

The method we developed allows estimating parameters of massive stars in a relatively short time (between 6 and 13 min per observed spectrum). This represents less than

of the total time required to compare the observed spectrum with each synthetic spectrum in the grid (see the exhaustive search method applied to the same data [23]). The proposed method is therefore much more efficient.

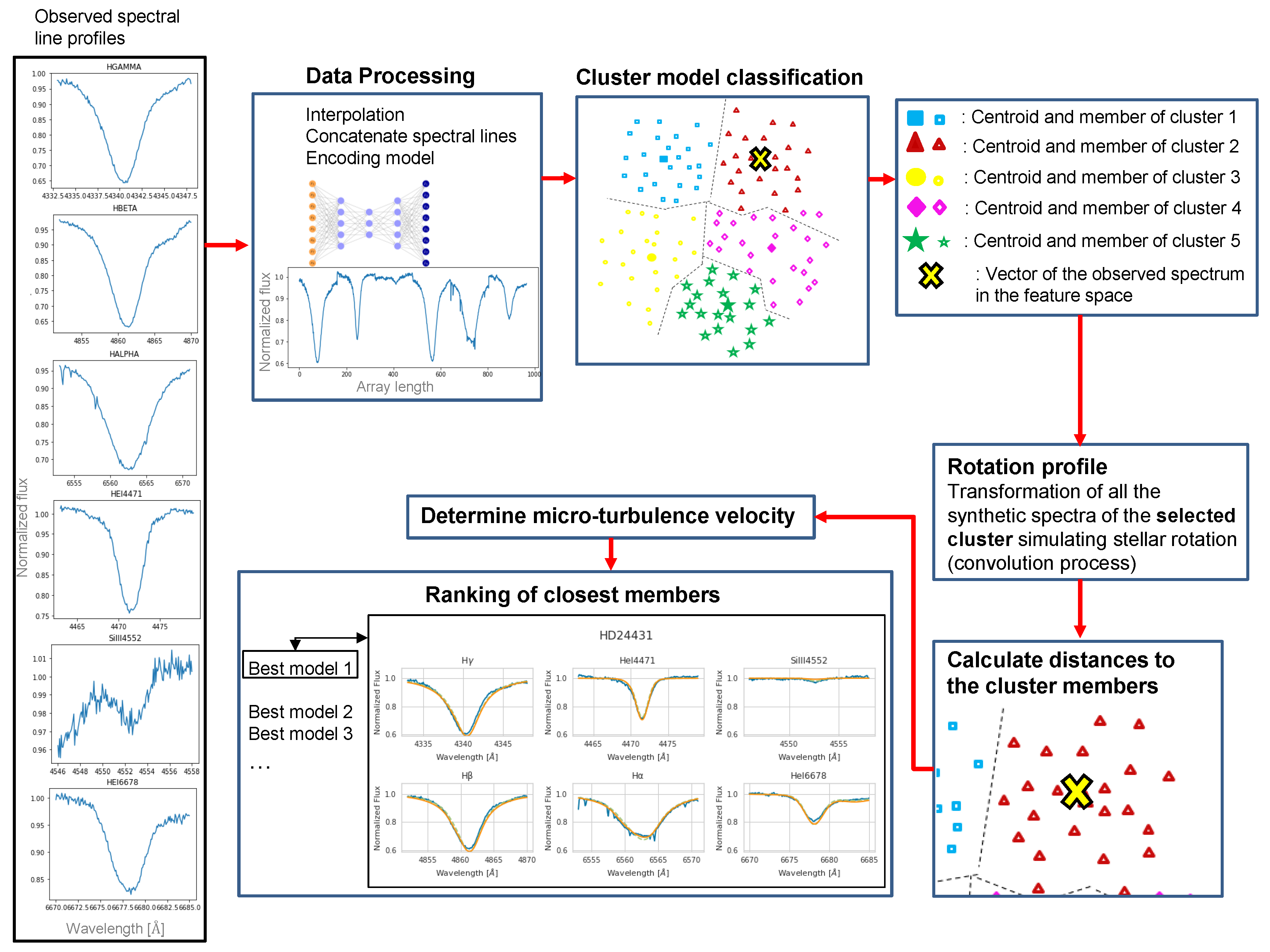

Our method combines deep learning, clustering, and supervised non-parametric learning (KNN) techniques to obtain the synthetic model that best fits an observed stellar spectrum. The observed spectrum is first represented in a latent space by an autoencoder neural network. This compact representation is then assigned to a cluster. Finally, the observed spectrum is compared with the synthetic spectra of the selected cluster using the KNN algorithm (specifically, the “Ball-Tree” algorithm). This architecture is novel for processing spectra and estimating stellar parameters compared with previously proposed methods (e.g., [9,10,23]).
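The three-stage search described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: a linear PCA projection (computed by SVD) stands in for the trained autoencoder, a few Lloyd iterations of k-means stand in for the clustering model, and the final step is an exact brute-force nearest-neighbour search in flux space, which returns the same neighbour a Ball-Tree query would. The toy grid of random vectors and all function names are hypothetical.

```python
import numpy as np


def fit_linear_encoder(X, n_components):
    """PCA via SVD: a linear stand-in (assumption) for the autoencoder's encoder."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    components = vt[:n_components]
    return lambda Y: (np.atleast_2d(Y) - mean) @ components.T


def assign(Z, centroids):
    """Index of the nearest centroid for every latent vector in Z."""
    d = np.linalg.norm(Z[:, None, :] - centroids[None, :, :], axis=2)
    return d.argmin(axis=1)


def kmeans(Z, k, n_iter=50, seed=0):
    """Plain Lloyd k-means on the latent vectors (hypothetical clustering step)."""
    rng = np.random.default_rng(seed)
    centroids = Z[rng.choice(len(Z), size=k, replace=False)].copy()
    for _ in range(n_iter):
        labels = assign(Z, centroids)
        for j in range(k):
            if np.any(labels == j):  # keep the old centroid if a cluster empties
                centroids[j] = Z[labels == j].mean(axis=0)
    return centroids, assign(Z, centroids)


def best_model(observed, X, encode, centroids, labels):
    """Encode the observation, pick its cluster, then find the nearest member."""
    z = encode(observed)
    cluster = int(assign(z, centroids)[0])
    members = np.flatnonzero(labels == cluster)
    distances = np.linalg.norm(X[members] - observed, axis=1)
    return int(members[distances.argmin()]), cluster


# Toy "grid": 200 synthetic models, each a vector of 30 flux samples.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 30))
encode = fit_linear_encoder(X, n_components=5)
centroids, labels = kmeans(encode(X), k=4)
idx, cluster = best_model(X[17], X, encode, centroids, labels)
```

Because the final comparison is restricted to a single cluster, a model lying just across a cluster border is never examined; this is the behaviour discussed for HD 30614 in Section 4.4.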

The remainder of the paper is organised as follows: Section 2 presents the context of the ISOSCELES grid and previous work on search algorithms; Section 3 describes the methodology and the algorithm developed; Section 4 presents and discusses the experimental results; and Section 5 presents the main conclusions and future work.

2. Background

2.1. Massive Stars and Their Winds

Massive stars (M ≳ 8 M⊙) continuously lose large amounts of material through their stellar winds, enriching the interstellar medium chemically and dynamically and therefore affecting the evolution of the host galaxy [24,25,26,27]. The huge mass-loss rate of these stars (e.g., a solar-type star typically loses ∼

M⊙/year, while for massive stars, the mass-loss rate is about ∼

to

M⊙/year) modifies the evolutionary stages of the star and hence the type of remnant it leaves behind [28,29]. In addition, stellar winds enable quantitative spectroscopic studies of the most luminous stellar objects in distant galaxies and thus provide important quantitative information about their host galaxies. Observing the winds of isolated OBA supergiants in spiral and irregular galaxies provides an independent tool for determining extragalactic distances through the wind momentum–luminosity (WML) relationship [30,31,32]. To understand these physical phenomena and their impact on the surrounding medium, spectroscopic (and photometric) observations must be analysed at different wavelengths, for different spectral and luminosity classes, and in environments of different metallicity, so as to cover all evolutionary stages of these stars. Currently, the theory that describes these winds (m-CAK theory) is based on the pioneering work of [33] and the improvements made by [34,35]. In the standard m-CAK theory, the line-force parameters (α, k, and δ) provide scaling laws for the mass-loss rate and the terminal velocity of the wind.
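For orientation, the approximate scaling relations commonly quoted for the standard (fast) m-CAK solution are reproduced below. They are textbook approximations added here as a sketch, not results of this work, and the ISOSCELES grid itself solves the wind hydrodynamics numerically rather than using them. Here L is the stellar luminosity, M the stellar mass, Γe the Eddington factor for electron scattering, and v_esc the photospheric escape velocity corrected for Γe.

```latex
\dot{M} \propto k^{1/\alpha'}\, L^{1/\alpha'} \left[ M \left( 1 - \Gamma_e \right) \right]^{1 - 1/\alpha'},
\qquad \alpha' = \alpha - \delta,
\qquad
v_\infty \approx 2.25\, \frac{\alpha}{1 - \alpha}\, v_{\rm esc}
```

In this approximation, k and α′ (and hence δ) set the mass-loss rate, while α alone sets the ratio of terminal to escape velocity.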

In addition, observational data have increased dramatically in recent years, ushering in the era of big astronomical data. Thanks to multi-object spectrographs and multi-wavelength techniques, several observational projects, such as Gaia-ESO, APOGEE (SDSS), POLLUX, IACOB, the VLT-FLAMES Tarantula Survey, and LAMOST, are collecting and storing huge quantities of stellar spectra. Moreover, spectral lines are analysed by comparison with stellar atmosphere models, which have been improved over the years. It has therefore become necessary to develop efficient tools to compare the models with observations, since this task can no longer be carried out manually.

2.2. ISOSCELES Grid

ISOSCELES (GrId of Stellar AtmOSphere and HydrodynamiC ModELs for MassivE Stars) is the first grid of synthetic data for massive stars that combines m-CAK hydrodynamics (instead of the commonly used β-velocity law) with NLTE radiative transfer [36].

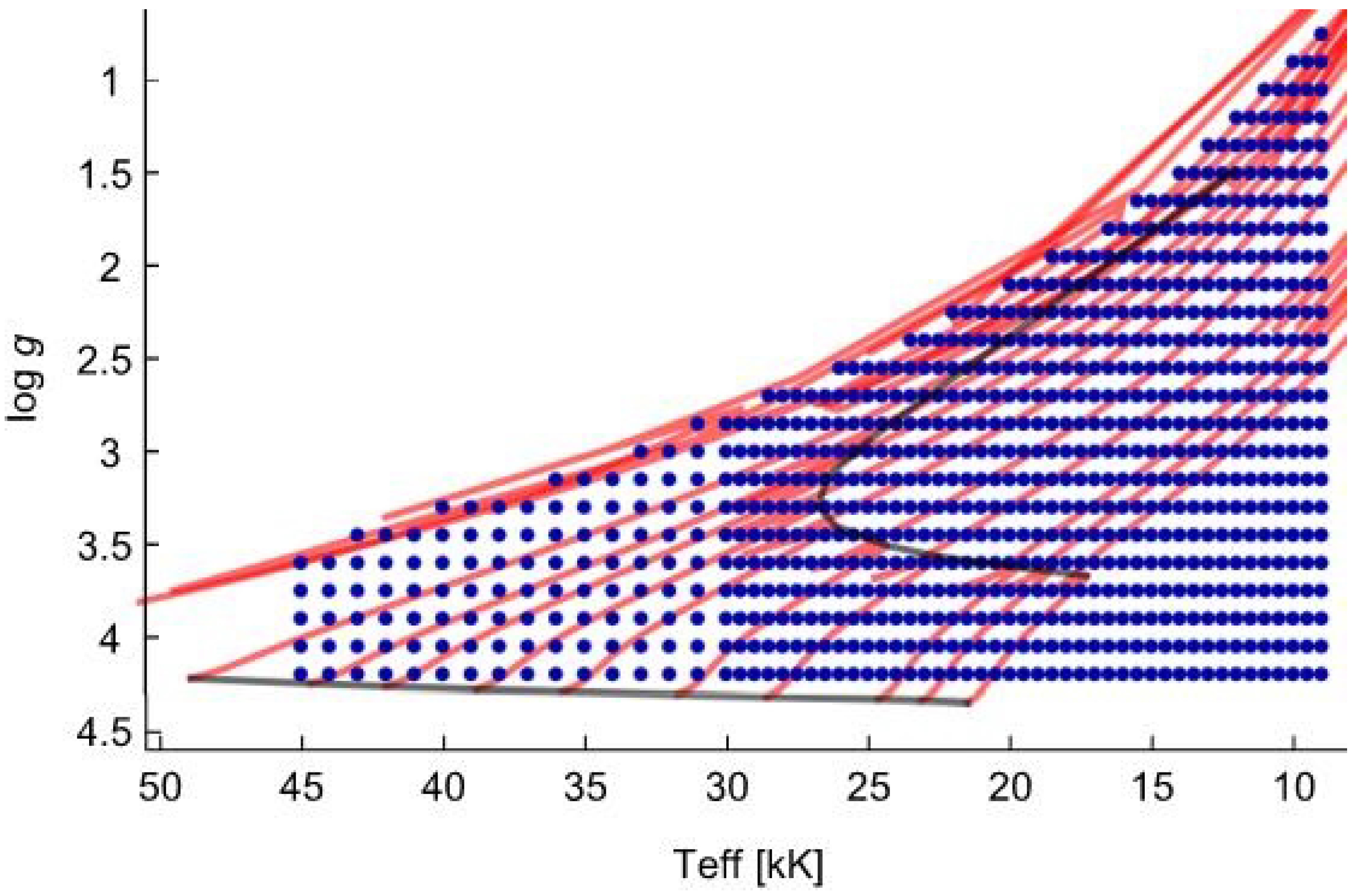

ISOSCELES covers the complete parameter space of O-, B-, and A-type stars. To produce a grid of synthetic line profiles with the code FASTWIND, we first computed a grid of hydrodynamic wind solutions with the stationary code HYDWIND. The surface gravities comprise the range of down to about of the Eddington limit in steps of . We consider 58 effective temperature grid points, ranging from 10,000 [K] to 45,000 [K], in steps of below and in steps of 1000 [K] above it.

The grid points were therefore selected to cover the region of the diagram shown in Figure 1 where O-, B-, and A-type stars are located, from the main sequence to the supergiant phase.

Although we focused on the study of B- and A-type stars, we implemented a wider grid for further analysis and to avoid placing observed stars too close to the grid’s borders. Each HYDWIND model is described by six main parameters: Teff (effective temperature), log g (logarithm of the surface gravity), R* (stellar radius), α, k, and δ. All these models adopt a boundary condition on the optical depth τ at the stellar surface. For each given pair (Teff, log g), the radius was calculated by means of the flux-weighted gravity–luminosity relationship (FGLR) [38,39]. Note that this relationship was established observationally for supergiant stars, but we have also used it for models that represent stars of other luminosity classes. This choice does not affect the analysis, because the radius is derived in the final step of the analysis. The range of line-force parameters is given in Table 1, where the values of δ are those needed to obtain both fast and δ-slow solutions. Note that not all combinations of these parameters converge to a physical stationary hydrodynamic solution.
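The radius assignment can be illustrated with a short sketch. It assumes the usual FGLR form, in which the bolometric magnitude is linear in the flux-weighted gravity g_F = g/(Teff/10^4 K)^4, followed by the Stefan–Boltzmann law. The calibration coefficients A_FGLR and B_FGLR are illustrative assumptions, not values taken from [38,39], and the function name is hypothetical.

```python
import math

# Illustrative FGLR calibration, M_bol = A * (log g_F - 1.5) + B.
# A_FGLR and B_FGLR are assumptions for this sketch; use the calibration of [38,39].
A_FGLR = 3.41
B_FGLR = -8.02
MBOL_SUN = 4.74    # solar bolometric magnitude
TEFF_SUN = 5772.0  # solar effective temperature [K]


def fglr_radius(teff, logg, a=A_FGLR, b=B_FGLR):
    """Stellar radius in solar units from (Teff [K], log g [cgs]) via an FGLR."""
    log_gf = logg - 4.0 * math.log10(teff / 1.0e4)  # flux-weighted gravity
    mbol = a * (log_gf - 1.5) + b
    log_l = -0.4 * (mbol - MBOL_SUN)                 # log10(L / Lsun)
    # Stefan-Boltzmann: L/Lsun = (R/Rsun)^2 * (Teff/Teff_sun)^4
    return math.sqrt(10.0 ** log_l) / (teff / TEFF_SUN) ** 2


r = fglr_radius(20000.0, 2.5)
```

With these assumed coefficients, Teff = 20,000 K and log g = 2.5 give a radius of about 41 R⊙; at fixed Teff, a higher log g yields a fainter, smaller star.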

We used H, He, and Si atomic lines to derive the photospheric properties of the star and the characteristics of its wind. In our case, each FASTWIND model is described by seven main parameters:

,

log g,

,

,

,

, and

(note that the first five parameters were calculated in the hydrodynamic part of this methodology). For calculating the NLTE model atmospheres, we used micro-turbulent velocities of 8, 10, and 15 [km/s] for temperature ranges

,

and

, respectively, whereas for all synthetic line profiles (from all models), micro-turbulent velocities (ξ) of 1, 5, 10, 15, 20, and 25 [km/s] were used. For silicon abundances (

), we adopted five different values:

,

,

(solar),

and

. The abundance of helium was fixed to the solar value

. The actual stellar radius should be determined from the visual magnitude, the distance of the star, and its reddening. The grid was converted from ASCII to binary (FITS) format to reduce its size. In total, we obtained 573,433 synthetic spectra occupying 289 [GB] in FITS format. Part of the ISOSCELES grid is currently publicly available at

https://www.ifa.uv.cl/grid (accessed on 10 August 2024).

Given the huge amount of observational data and the half million models in ISOSCELES, new computational methods are needed to find the model that best reproduces each observed spectrum, and thereby to determine the stellar and wind parameters, the evolutionary state of the stars, their chemical composition, and many other characteristics.

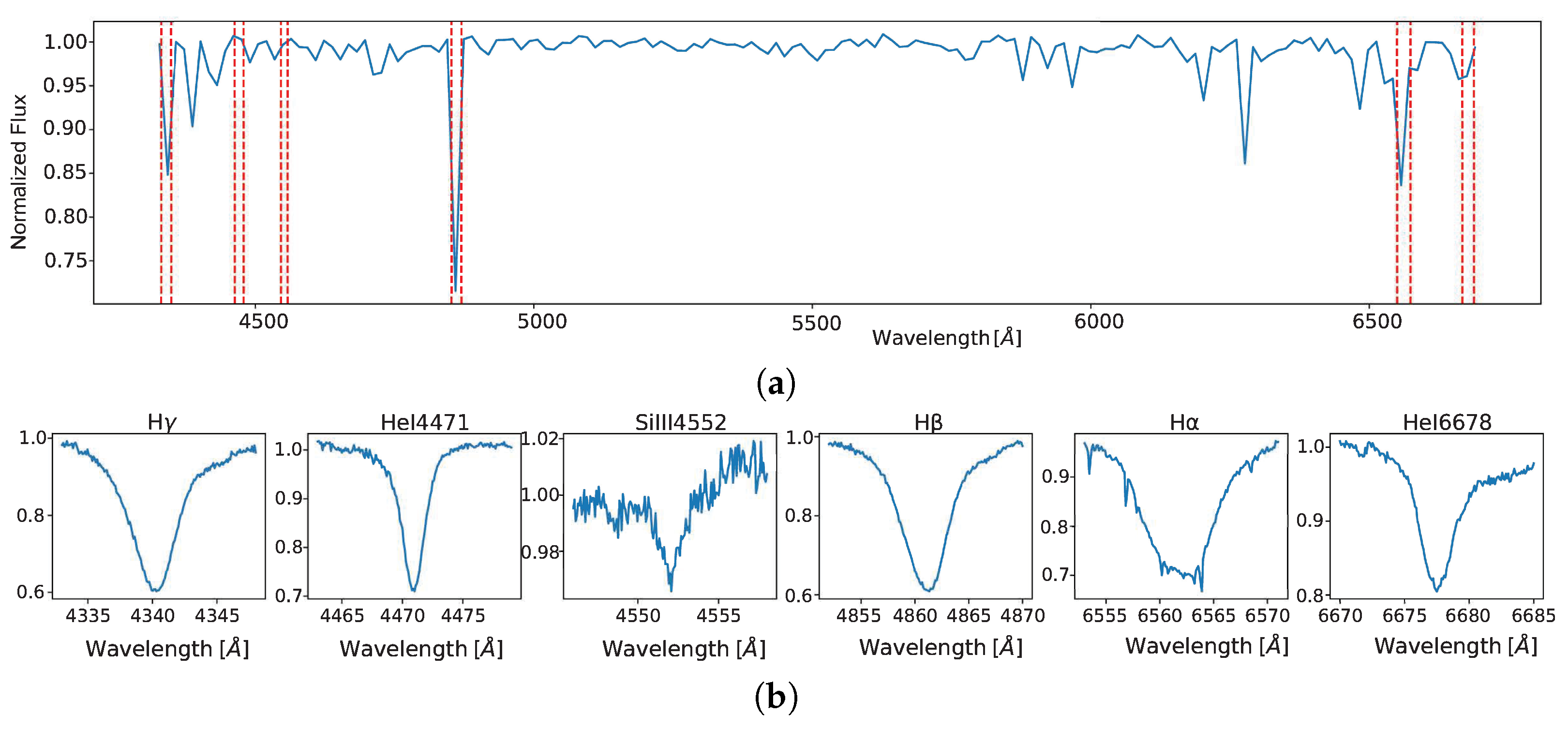

2.3. Related Work

A master’s thesis presented in 2022, “Analysis of the momentum–luminosity relationship in massive stars”, developed an exhaustive search algorithm for the spectral models of ISOSCELES [23]. Before execution, the algorithm requires the profiles of the following six spectral lines of the star to be analysed: H

, He I 4471, Si III 4552, H

, H

, and He I 6678. In addition, the rotational parameters of the star, v sin i and

are required; the synthetic spectra are convolved with them to simulate line broadening due to stellar rotation. Optionally, it is also possible to enter expected values of

,

log g, and the type of solution (

or

-

), and to set an offset

of each line. The offset is needed because the lines form in different parts of the photosphere and therefore usually show small wavelength shifts. The optional data help narrow the regions of the grid to be searched, so they depend on the user’s knowledge of the stellar object under study; without this optional information, the algorithm searches all the models in the grid.
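The rotational convolution mentioned above can be sketched with the classical rotational broadening profile of a limb-darkened sphere (Gray's formulation). This is an assumed implementation, not the code of [23]: it broadens the line depth (1 − F) of a continuum-normalised profile sampled on a uniform wavelength grid, approximates the velocity scale at the window centre, and uses a linear limb-darkening coefficient eps = 0.6 as a hypothetical default.

```python
import numpy as np

C_KMS = 299792.458  # speed of light [km/s]


def rotation_kernel(dv, vsini, eps=0.6):
    """Rotational broadening profile on velocity offsets dv [km/s], normalised to unit sum."""
    x = dv / vsini
    g = np.zeros_like(x)
    inside = np.abs(x) < 1.0
    s = 1.0 - x[inside] ** 2
    g[inside] = 2.0 * (1.0 - eps) * np.sqrt(s) + 0.5 * np.pi * eps * s
    return g / g.sum()


def rotate_profile(wave, flux, vsini, eps=0.6):
    """Convolve a normalised line profile (uniform wavelength step) with rotation."""
    if vsini <= 0.0:
        return flux.copy()
    step = wave[1] - wave[0]
    dv_step = C_KMS * step / wave.mean()  # velocity width of one pixel at the window centre
    n = int(np.ceil(vsini / dv_step))
    kernel = rotation_kernel(np.arange(-n, n + 1) * dv_step, vsini, eps)
    if kernel.size > flux.size:
        raise ValueError("line window is narrower than the rotation kernel")
    depth = np.convolve(1.0 - flux, kernel, mode="same")  # continuum (zero depth) is preserved
    return 1.0 - depth


# Toy He I 4471-like Gaussian line broadened with v sin i = 100 km/s.
wave = np.linspace(4460.0, 4480.0, 2001)
flux = 1.0 - 0.5 * np.exp(-((wave - 4471.0) / 0.3) ** 2)
broad = rotate_profile(wave, flux, vsini=100.0)
```

Convolving the depth rather than the flux keeps the continuum at 1 at the window edges, and the unit-sum kernel conserves the equivalent width while making the line shallower and wider.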

This code was tested on a multiprocessing server, where, in each search, the loading, interpolation, and convolution of the spectral models are performed in parallel across processors. Synthetic lines are compared with the observed spectral lines using a goodness-of-fit statistic normalised by the number of lines (six). The algorithm stores in a search list all spectral models for which this statistic is below 1.0, sorts the list in ascending order, and selects the model with the lowest value as the best spectral model for the analysed star. The processing time of the algorithm therefore depends on the expected parameters optionally entered before execution: search times ranged from 5 to 10 min when all optional parameters were provided, and reached 240 min when none were provided.
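A minimal sketch of this exhaustive strategy follows. The exact statistic of [23] is not reproduced here: the sketch assumes a hypothetical chi-square-like statistic with a uniform flux uncertainty sigma, averaged over the lines (six in [23]), keeps every model whose statistic is below the 1.0 threshold, and sorts the survivors in ascending order. The dictionary layout of the grid is also an assumption.

```python
import numpy as np


def fit_statistic(obs_lines, syn_lines, sigma=0.01):
    """Hypothetical statistic: mean squared normalised residual per line, averaged over lines."""
    per_line = [np.mean(((o - s) / sigma) ** 2) for o, s in zip(obs_lines, syn_lines)]
    return float(np.sum(per_line) / len(per_line))  # divided by the number of lines (6 in [23])


def exhaustive_search(obs_lines, grid, threshold=1.0, sigma=0.01):
    """Compare against every model in `grid`; keep those below `threshold`, best first."""
    hits = []
    for name, syn_lines in grid.items():
        value = fit_statistic(obs_lines, syn_lines, sigma)
        if value < threshold:
            hits.append((value, name))
    hits.sort()
    return hits


# Toy example: six flat "lines" of 50 pixels per model.
obs = [np.full(50, 0.95) for _ in range(6)]
grid = {
    "a": [np.full(50, 0.90)] * 6,
    "b": [np.full(50, 0.95)] * 6,
    "c": [np.full(50, 0.955)] * 6,
}
hits = exhaustive_search(obs, grid)
```

Every model in the grid is visited, so the cost grows linearly with the grid size; this is what the optional expected parameters of [23] mitigate by shrinking `grid` before the loop.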

Because that work was a first approach to developing an algorithm for searching ISOSCELES spectral models, its results are used here for comparison, with the same observed spectra and line profiles taken from the same database.

4. Experimental Results

4.1. Data Acquisition

The observed spectra selected to analyse and test the developed algorithm correspond mainly to the dataset used in the master’s thesis [23]. That work compared the observed spectra with the ISOSCELES spectral model grid using an exhaustive search algorithm. Our objective is to compare the search times and efficiency of the algorithm developed in this work with that approach, and to validate our results against it. For this reason, the same six spectral lines and wavelength-shift values were used in the analysis.

The observed spectra used in this work come from two databases. The first set was obtained with the REOSC spectrograph installed on the 2.15 m Jorge Sahade telescope at the “El Leoncito” Astronomical Complex (CASLEO), located in San Juan, Argentina. The second set comes from the IACOB database, part of a project dedicated to the study of massive stars, which has made large amounts of spectra, photometry, and other empirical data freely available at:

http://research.iac.es/proyecto/iacob/pages/en/introduction.php (accessed on 10 August 2024). These spectra were collected at the “Roque de los Muchachos” Observatory (ORM) in La Palma, Spain.

4.2. Input Data Specification

When the spectral data of the star are entered into the search algorithm, the wavelength range of each line profile must be specified, and it must be less than or equal to the range of the synthetic spectra with which the classification models were trained (see Table 2). In practice, all the observed line profiles were given narrower wavelength ranges than these, which avoided including continuum regions affected by noise.

The wavelength shift of each spectral line must also be entered, since the lines form in different parts of the photosphere. This allows the observed spectral lines to be compared and visualised correctly against the respective models selected by the search algorithm.
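The two input requirements of this subsection can be sketched as below: the observed window is validated against the training range of the line, the wavelength shift is applied, and the profile is interpolated onto the model wavelength grid. The sign convention (shift subtracted from the observed wavelengths), the function name, and the example ranges are assumptions for illustration; the actual ranges are those of Table 2.

```python
import numpy as np


def prepare_line(obs_wave, obs_flux, train_range, shift, model_wave):
    """Check an observed line window and resample it onto the model wavelength grid.

    Assumes increasing wavelengths and that `shift` (same units) is subtracted
    from the observed wavelengths to align them with the models.
    """
    lo, hi = train_range
    wave = np.asarray(obs_wave, dtype=float) - shift
    if wave.min() < lo or wave.max() > hi:
        raise ValueError("observed window exceeds the training range of this line")
    # Only the model points covered by the observed window are compared.
    mask = (model_wave >= wave.min()) & (model_wave <= wave.max())
    return model_wave[mask], np.interp(model_wave[mask], wave, obs_flux)


# Hypothetical example for a line trained on 4460-4480 Å.
model_wave = np.linspace(4460.0, 4480.0, 401)
obs_wave = np.linspace(4465.0, 4477.0, 121)
obs_flux = 1.0 - 0.001 * (obs_wave - 4465.0)
w, f = prepare_line(obs_wave, obs_flux, (4460.0, 4480.0), 0.2, model_wave)
```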

4.3. Technical Processing Data

The algorithm is written in Python 3.10. It runs on a 64-processor server with 126 [GB] of RAM plus 1 [GB] of swap memory. This amount of memory is necessary because the feature vectors of all spectral models must be loaded into the run-time environment, together with the clustering models, the encodings, and the list of names of the synthetic spectra, which together occupy approximately 35 [GB]. To simplify this process, all the data to be loaded are stored as serialised objects using the “Pickle” library. The total data loading time is approximately 16 min.
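The serialisation step can be sketched with the standard-library pickle module. The object names (names, features) and the single-dictionary file layout are hypothetical; in the described system the stored objects would be the feature vectors, clustering models, encodings, and model names.

```python
import os
import pickle
import tempfile


def save_state(path, **objects):
    """Serialise all run-time objects into one pickle file."""
    with open(path, "wb") as fh:
        pickle.dump(objects, fh, protocol=pickle.HIGHEST_PROTOCOL)


def load_state(path):
    """Load the objects back; only unpickle trusted files, since pickle can execute code."""
    with open(path, "rb") as fh:
        return pickle.load(fh)


# Round trip with hypothetical object names.
path = os.path.join(tempfile.mkdtemp(), "search_state.pkl")
save_state(path, names=["model_0001", "model_0002"], features=[[0.98, 0.95], [0.97, 0.90]])
state = load_state(path)
```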

Running the algorithm requires between 60 and 80 [GB] of additional memory, depending on the number of members of the selected cluster. The loading and convolution of the closest models, with all their values, are distributed over 30 processors running in parallel, which speeds up the process but requires a large amount of available memory.
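The parallel step can be sketched with multiprocessing.Pool from the standard library. The worker below is hypothetical: it receives an already-loaded model flux (the real system also reads each model from disk), broadens it with a precomputed kernel, and returns its Euclidean distance to the observation. Pool.map pickles each task and sends it to a worker process, so every process holds its own copy of the data it is given, which is one reason memory use grows with the number of processes. process_cluster should be called from under an `if __name__ == "__main__":` guard so that it also works with the spawn start method.

```python
from multiprocessing import Pool

import numpy as np


def process_model(task):
    """Hypothetical worker: broaden one model and return (name, distance)."""
    name, model_flux, observed_flux, kernel = task
    broadened = 1.0 - np.convolve(1.0 - model_flux, kernel, mode="same")
    return name, float(np.linalg.norm(broadened - observed_flux))


def process_cluster(models, observed_flux, kernel, n_workers=30):
    """Distribute the cluster members over n_workers processes; sort by distance."""
    tasks = [(name, flux, observed_flux, kernel) for name, flux in models.items()]
    with Pool(processes=n_workers) as pool:
        results = pool.map(process_model, tasks, chunksize=8)
    return sorted(results, key=lambda item: item[1])
```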

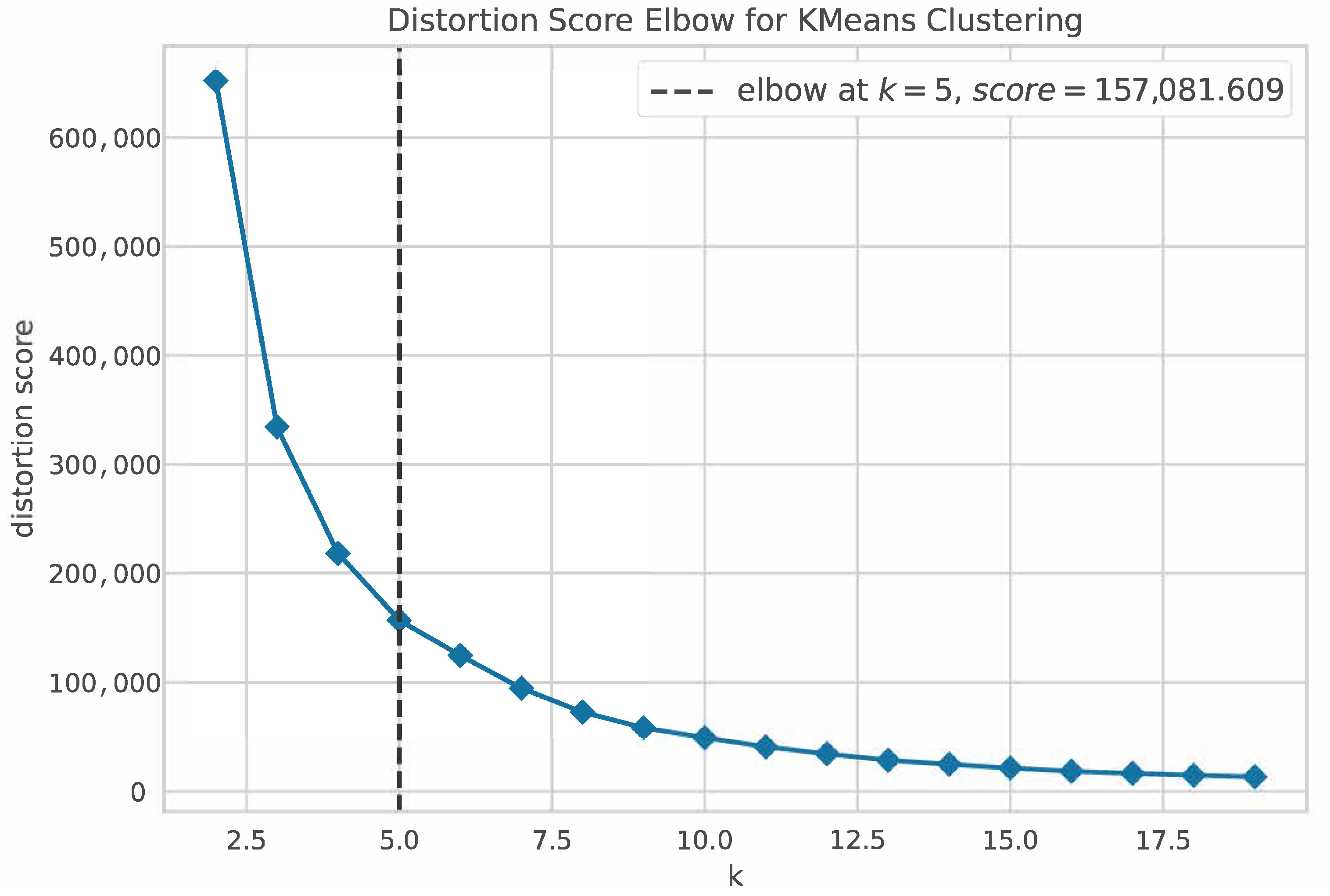

4.4. Algorithm Execution and Results

The observed stellar spectra were processed one by one with the algorithm detailed in Section 3.1.3. The execution times, which depend on the number of members of the assigned cluster, are listed in Table 6.

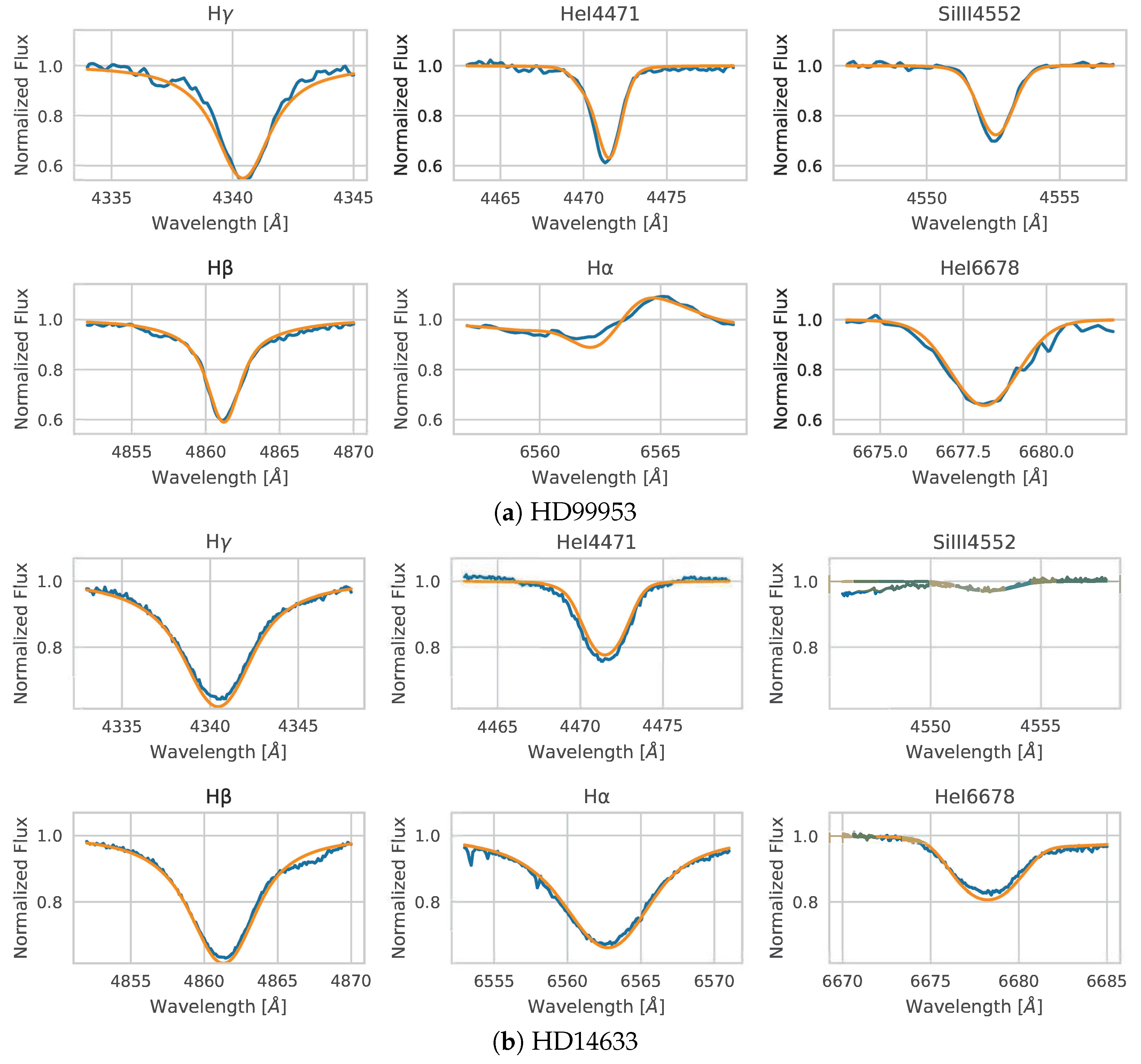

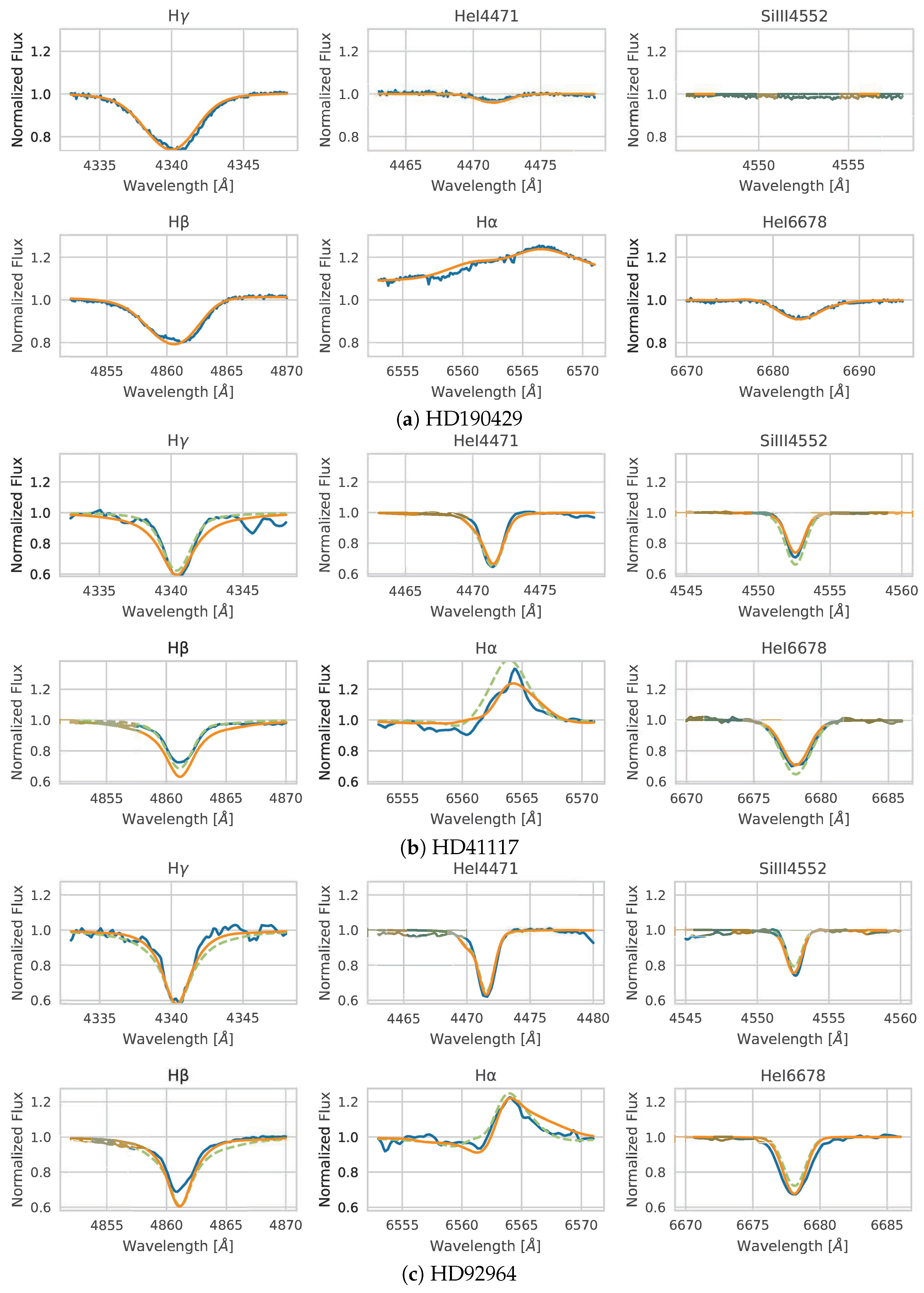

Figure 6 and Figure 7 show examples of comparative plots of the observed spectra entered into the algorithm (blue curves) and the corresponding spectral models obtained (orange curves). For comparison, the best model found by the exhaustive search algorithm of [23] is also shown (dashed green curve), except where both methods obtained the same result.

Table 7 summarises the best stellar parameters obtained for each observed spectrum, where Obj is the stellar object; Solution type is δ-slow or Fast; ED is the Euclidean distance between the observed spectrum and the model found by our algorithm; and ED* is the Euclidean distance to the spectral model found in [23]. Red highlights identify the unexpected results.
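Assuming ED is computed between feature vectors formed by concatenating the six continuum-normalised line profiles sampled on the models' wavelength grid (an assumption made for this sketch), it can be computed as follows. Splitting the squared distance by line shows which profiles dominate the mismatch, which is useful when inspecting the unexpected cases; both function names are hypothetical.

```python
import numpy as np


def euclidean_distance(obs_lines, model_lines):
    """ED between feature vectors formed by concatenating the line profiles."""
    return float(np.linalg.norm(np.concatenate(obs_lines) - np.concatenate(model_lines)))


def per_line_contribution(obs_lines, model_lines):
    """Squared-residual contribution of each line; the contributions sum to ED**2."""
    return [float(np.sum((o - m) ** 2)) for o, m in zip(obs_lines, model_lines)]


obs = [np.array([1.0, 0.9]), np.array([0.8])]
model = [np.array([1.0, 0.8]), np.array([0.8])]
ed = euclidean_distance(obs, model)
parts = per_line_contribution(obs, model)
```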

Other spectral lines available in the models were also used to evaluate the six spectra with unexpected parameters. For the star HD 30614, the spectral models obtained in [23] and in this work were compared. Figure 8 shows additional spectral lines that are not among those listed in Table 2: the Si IV 4116 and He I 4922 lines of the model selected by the algorithm, of the expected model, and of the observed spectrum are compared. Overall, the spectral model obtained in [23] fits these observed lines better.

These additional spectral lines were selected because of their close relationship with the effective temperature and

in massive stars. Note also that the Euclidean distance of the model selected by the algorithm is greater than that of the model found in [23] (see Table 7). This happens because the two models were assigned to different clusters: although both lie at similar Euclidean distances from the observed spectrum, only the cluster assigned to the observed spectrum was searched. We therefore infer that these models lie near the borders of their respective clusters. Finally, we conclude that more than six spectral lines are needed, both to evaluate the Euclidean distances between the spectral models and to generate better clusters.

4.5. Discussion

As mentioned above, several spectra analysed in this research were also studied in [23], since that work used the same grid (ISOSCELES) to obtain the stellar parameters. However, three spectra from that work were excluded because the data for one of their spectral lines (He I 6678) were of insufficient quality. The machine learning models were trained to receive and analyse exactly the six spectral lines detailed in Section 3.1.1, not fewer. Thus, the main requirement for processing an observed spectrum with the algorithm is the availability of data for the six spectral lines listed in Table 2.

The developed algorithm processed a total of 40 observed spectra, and the spectral models found for each spectrum fit the observed line profiles well. The Euclidean distances ranged over [

,

] (see Table 7 and Figures 6 and 7). However, six of the spectral models found by the algorithm had unexpected stellar parameters, namely those for HD 30614, HD 204172, HD 206165, HD 31327, HD 35299, and HD 37209. In general, for these cases, the best spectral models selected by the algorithm show large differences in effective temperature and

compared to other studies. For example, for the star HD 30614, an effective temperature of approximately 28,500 ± 1000 [K] and

± 0.10 [dex] is expected based on the results of [23,49]. However, the spectral model selected by the algorithm has a difference of

and

[dex], which is inconsistent with previous work. Another case is the star HD 206165, for which our method found a spectral model with a difference of

and

[dex] with respect to [52]. Another example is the star HD 36862, for which the best spectral model selected by the developed algorithm had a difference of

and

[dex] [62]. Apart from the six cases mentioned, all the spectral models selected by the developed algorithm presented stellar parameters close to those expected. In general, these 34 spectral models show average differences of

,

,

,

,

,

,

6 [km/s].

An important issue related to the classification step of the algorithm must be discussed. The clustering model was trained with spectral models that do not include stellar rotation effects, whereas the observed spectrum that is encoded and classified is naturally affected by stellar rotation. Nevertheless, the final results show that the observed spectra were, in general, assigned to appropriate clusters. We attribute this to the automatic feature extraction performed by the deep learning algorithm: the autoencoder represents complex spectral models in a compact form, and this representation appears to be largely insensitive to stellar rotation effects.

5. Conclusions

In summary, the search algorithm was tested with 40 real stellar spectra and yielded accurate stellar parameters for 34 of them. However, the spectral models with unexpected stellar parameters were nonetheless those closest to the observed spectra. It is therefore necessary to include more spectral lines in the feature vectors of the models, either from those available in each synthetic spectrum or, possibly, from other wavelength ranges (e.g., the UV).

In addition, for each observed spectrum, the algorithm returns a ranking of the clusters according to the Euclidean distance from the spectrum to their centroids. This allows the user to rerun the algorithm on the second-closest cluster if deemed necessary. Finally, visual inspection comparing the spectral lines with their respective models remains fundamental for evaluating the results.
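The cluster ranking can be sketched in a few lines. The encoding z and the centroids are assumed to live in the same latent space, and the example coordinates are made up; rerunning the search on order[1] corresponds to the second-closest-cluster option described above.

```python
import numpy as np


def rank_clusters(z, centroids):
    """Order cluster indices by Euclidean distance from encoding z to each centroid."""
    d = np.linalg.norm(np.asarray(centroids) - np.ravel(z), axis=1)
    order = np.argsort(d, kind="stable")
    return order, d[order]


centroids = np.array([[0.0, 0.0], [5.0, 0.0], [1.0, 1.0]])
order, dist = rank_clusters([0.9, 0.8], centroids)
second_cluster = int(order[1])  # the cluster to try if the first result looks wrong
```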

5.1. Contributions

The main contributions focus on shortening search times without giving up the comparison of an observed spectrum with all the models of its “species”, and the whole process is automatic. The spectral models were grouped according to the shapes of their most important spectral lines. The search times obtained by the algorithm are less than

of the total execution time of an exhaustive search process, such as that in [23]. Moreover, comparing the observed spectrum with all cluster members does not require a one-versus-all calculation, since the “Ball Tree” algorithm reduces the execution time by computing distances to the centroids of its nodes and only to nearby spectral models. It is also important to emphasise that the search algorithm does not require expert knowledge or input to run, nor a search through all the spectral models in the grid, since the search region is defined by the classification of the observed spectrum. In addition, the user can edit the search parameters as needed: the cluster can be selected according to the ranking of centroid proximity to the observed spectrum, and both the radius used to select the nearest neighbours and the number of spectral models returned by the closeness ranking, in its δ-slow and fast solution versions, are editable.
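The editable selection can be sketched as a radius query followed by a closeness ranking kept separately for each solution type. For clarity, this sketch uses an exact brute-force search; sklearn.neighbors.BallTree.query_radius returns the same set of neighbours while pruning distant nodes. The labels "fast" and "delta-slow", the parameter names, and the example data are hypothetical.

```python
import numpy as np


def select_models(observed, features, solution_type, radius, top_n=5):
    """Nearest models within `radius`, ranked by distance, kept separately per solution type."""
    d = np.linalg.norm(features - observed, axis=1)
    within = np.flatnonzero(d <= radius)
    ranked = within[np.argsort(d[within], kind="stable")]
    return {
        kind: [int(i) for i in ranked if solution_type[i] == kind][:top_n]
        for kind in ("fast", "delta-slow")
    }


features = np.array([[0.0], [0.1], [0.2], [0.5], [3.0]])
kinds = ["fast", "delta-slow", "fast", "delta-slow", "fast"]
picked = select_models(np.array([0.0]), features, kinds, radius=1.0, top_n=5)
```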

5.2. Future Work

At the end of this research, after analysing the results, we identified several opportunities to improve the search algorithm. Although the algorithm reduced the search times of the spectral analysis, they can be reduced further. Recently, a solution addressing the cost of broadening the line profiles of the spectral models has been published [63]. That approach avoids broadening the models and instead applies a transformation that “removes” the effects of stellar rotation from the observed line profiles through deconvolution. The observed (deconvolved) spectrum can then be compared directly with the spectral models of the grid, which were computed without rotational broadening. This would significantly decrease processing times, provided that the deconvolution takes less time than convolving the spectral models.

As mentioned in the previous section, the search algorithm should be modified to discard spectral models with parameter values far from the expected ones, provided there is sufficient scientific evidence regarding the stellar parameters of the object studied. Clustering models trained with different numbers of lines are also needed, depending on the data available to the user. We are currently working on spectral models that cover line profiles in ultraviolet spectral ranges; training new models with these data would make the results more robust. Finally, we propose that future work include an automatic wavelength-shift adjustment in the algorithm, which could also compensate for the small errors that usually occur in the flux normalisation of the observed object, without requiring manual adjustment by the user.
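The proposed filtering could take a form like the sketch below, which keeps only the models within given tolerances of prior estimates before the nearest-neighbour search. The tolerances, expected values, and function name are hypothetical placeholders; in practice, the tolerances would come from the uncertainties reported in the literature (e.g., ±1000 K on the expected Teff of HD 30614).

```python
import numpy as np


def prior_mask(teff, logg, expected_teff, expected_logg, teff_tol=2000.0, logg_tol=0.3):
    """True for models whose Teff and log g lie within the given tolerances of the priors."""
    teff = np.asarray(teff, dtype=float)
    logg = np.asarray(logg, dtype=float)
    return (np.abs(teff - expected_teff) <= teff_tol) & (np.abs(logg - expected_logg) <= logg_tol)


grid_teff = np.array([20000.0, 28000.0, 29500.0])
grid_logg = np.array([3.0, 3.3, 2.5])
keep = prior_mask(grid_teff, grid_logg, expected_teff=28500.0, expected_logg=3.2)
```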