AccessiLearnAI: An Accessibility-First, AI-Powered E-Learning Platform for Inclusive Education

Abstract

1. Introduction

1.1. Context and Motivation

1.2. Proposed Solution and Contributions

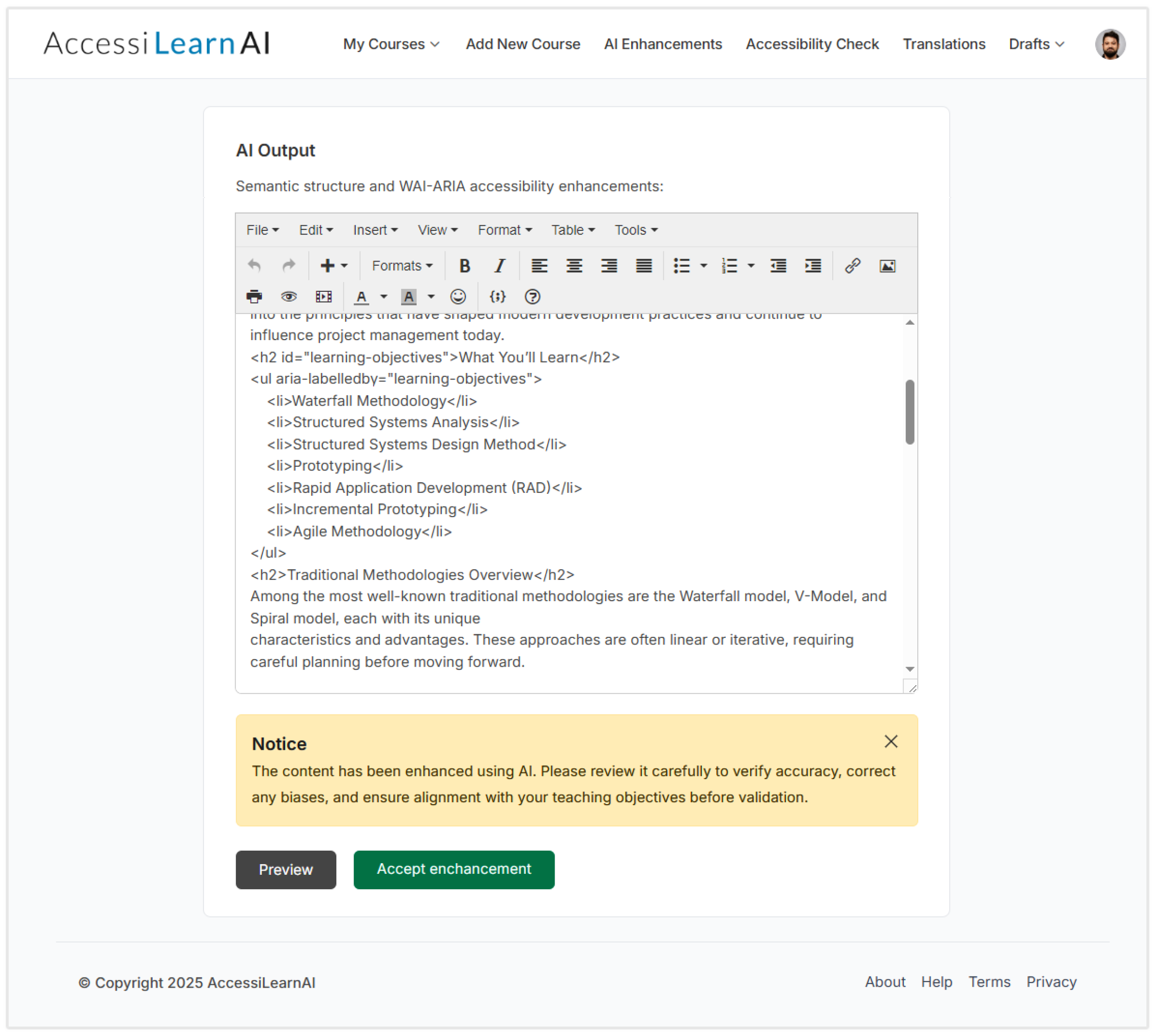

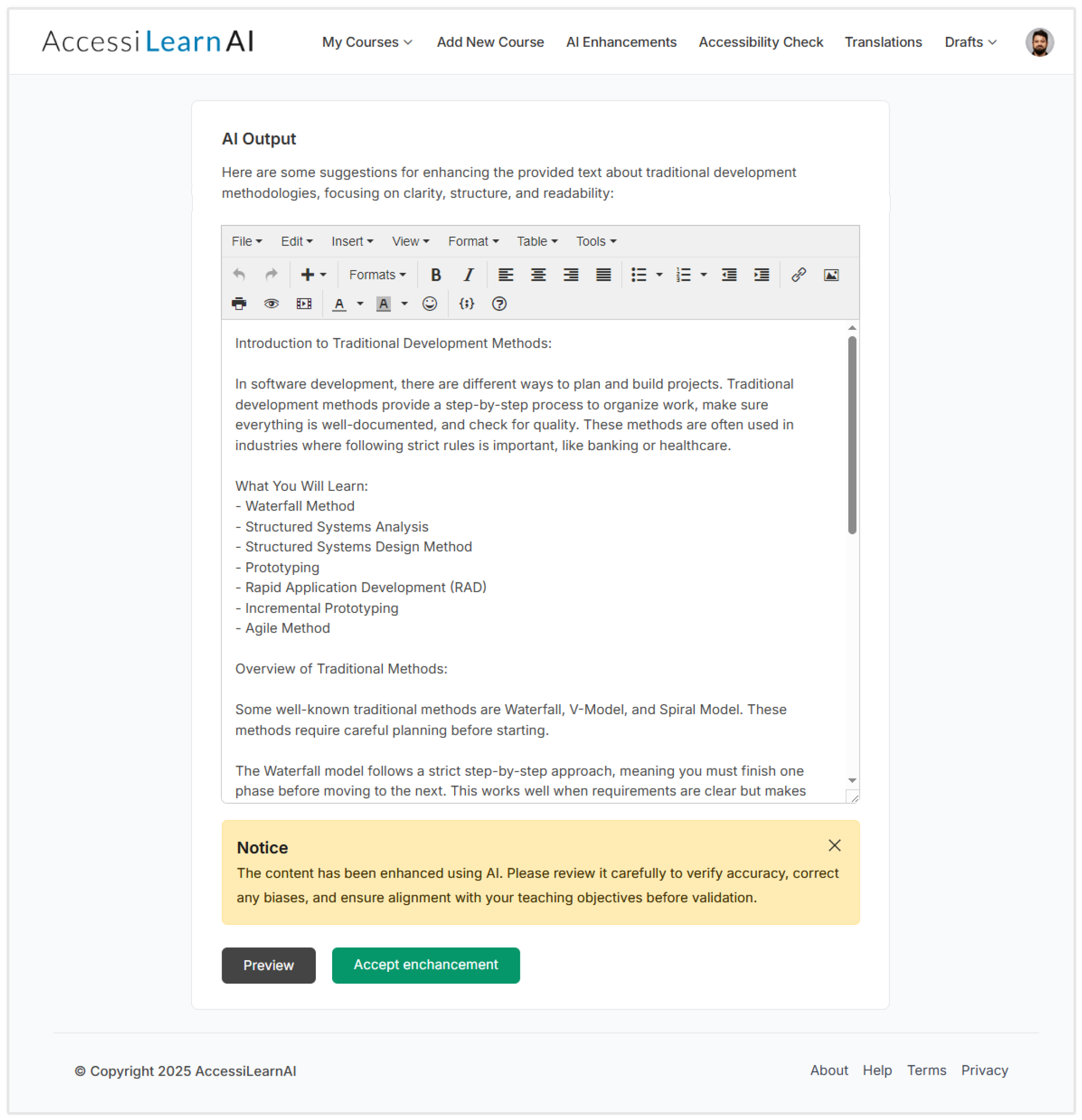

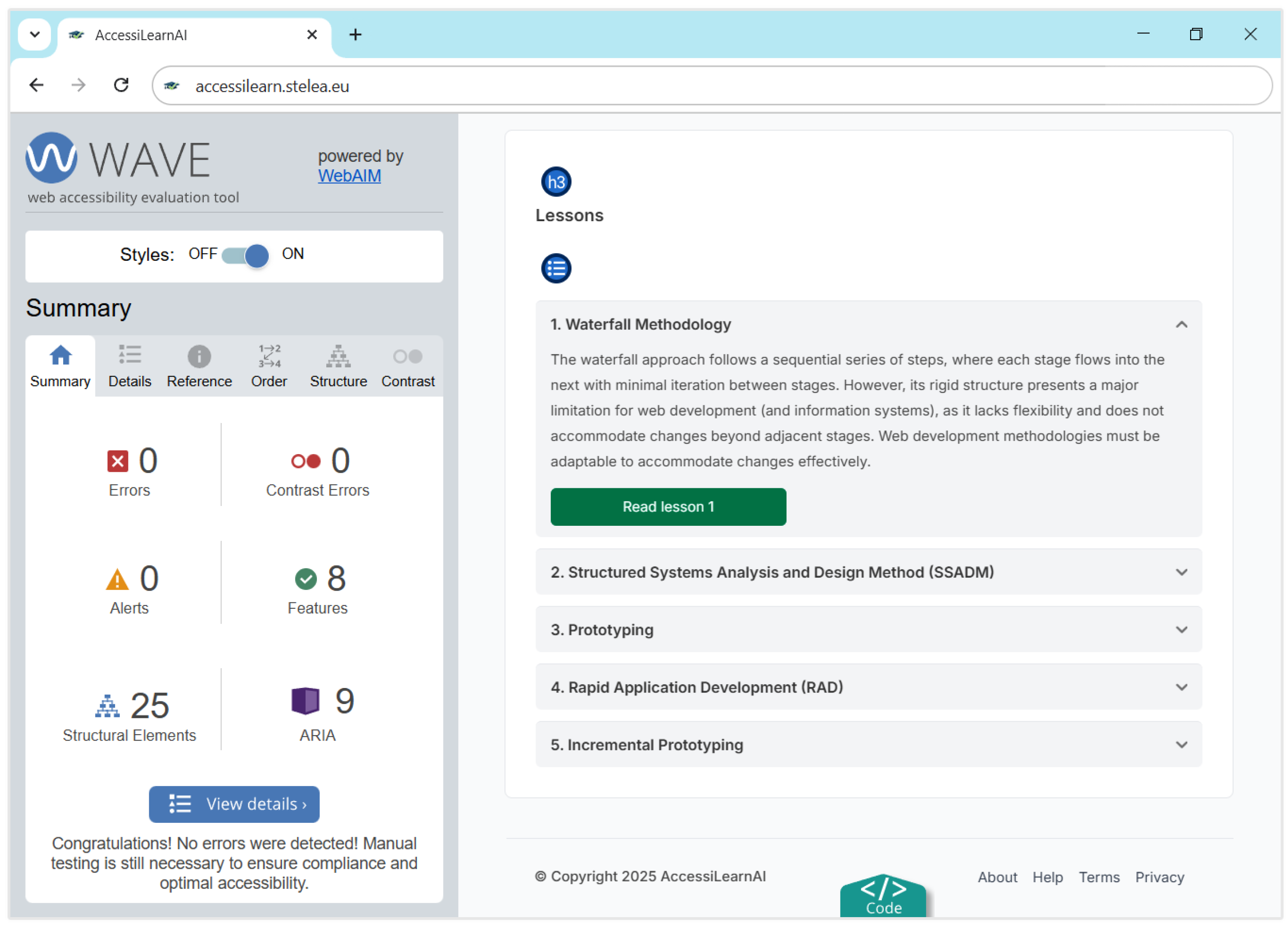

- Accessibility-Driven Content Structuring: We propose an AI-powered content organization system that automatically incorporates HTML5 semantic elements and ARIA attributes (World Wide Web Consortium [W3C], 2025b) to improve accessibility. Unlike many current e-learning platforms, which add accessibility support as an afterthought, our system structures content for screen readers and other assistive technologies from the outset. A “human-in-the-loop” validation step lets teachers review and refine the AI-generated accessibility improvements, which helps ensure high-quality implementation and usability.

- AI-Based Personalization and Adaptive Learning: Our framework uses AI-based techniques to generate multi-level summaries, automatic alternative text for images, real-time text-to-speech (TTS) (Reddy et al., 2023), and dynamic translation. Together, these features enable personalized learning experiences that adapt to students’ cognitive preferences and accessibility needs. Unlike previous studies, which focus on isolated applications of these technologies, we integrate them into a unified system designed to improve student engagement and comprehension.

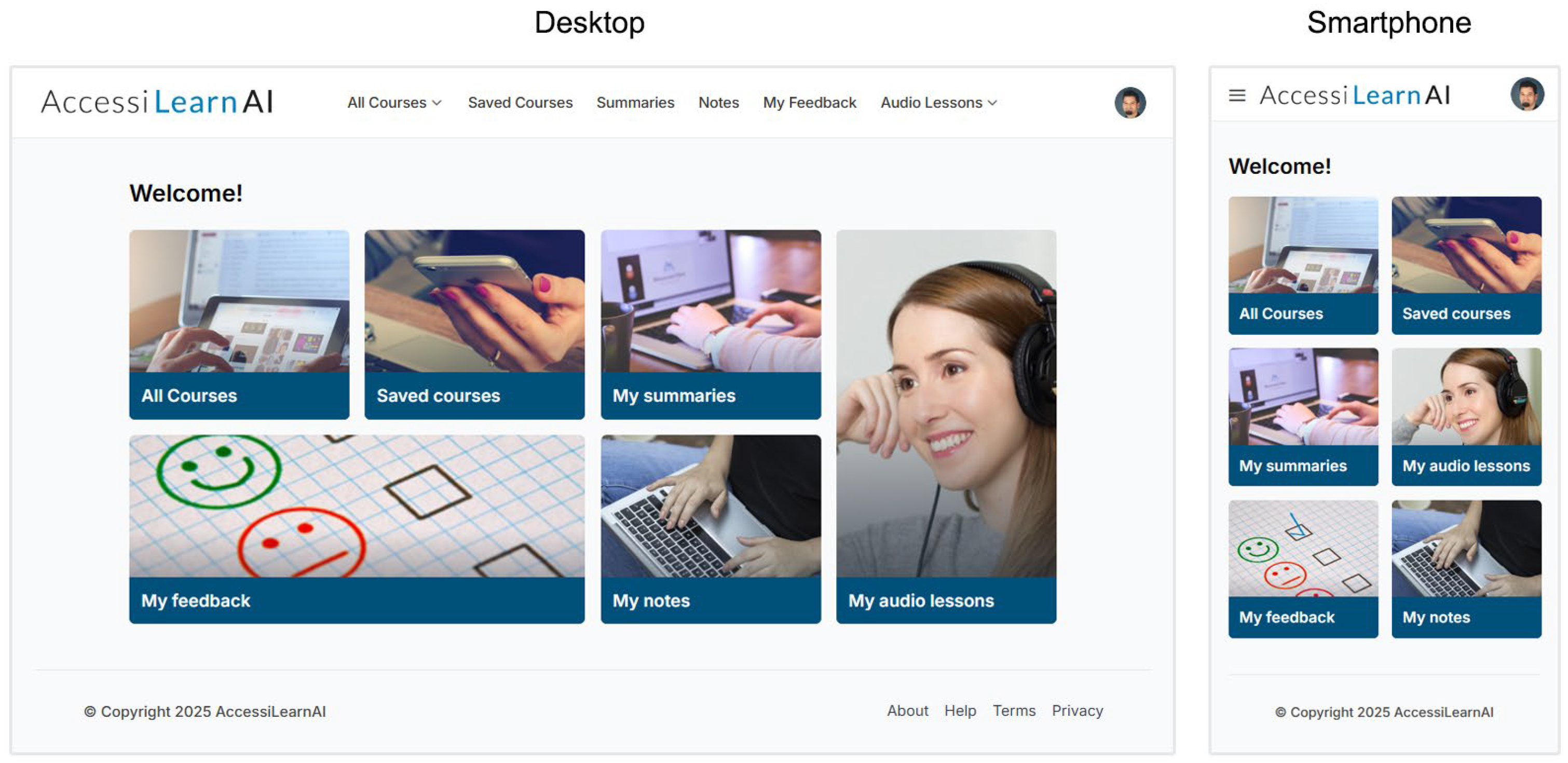

- Semantic Enhancement and Offline Accessibility: We adopted Progressive Web Application (PWA) technology to provide offline access to educational content while preserving interactivity. Our platform ensures that accessibility features, such as screen reader support and AI-enhanced content structuring, remain functional in offline mode. This contribution is particularly relevant for students in low-connectivity environments, as it enables uninterrupted learning.

- Ethical Data Handling and Privacy Compliance: Our platform incorporates strong privacy measures aligned with GDPR (European Parliament & Council, 2016) and other data protection regulations. We address concerns about student data security, the transparency of AI decisions, and bias through an ethical implementation of AI. The system provides explainable AI (XAI) (Dwivedi et al., 2023) feedback mechanisms that allow students and teachers to understand and control how AI-based adaptations are used.
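The first contribution, accessibility-driven content structuring, can be illustrated with a minimal sketch. It assumes the lesson has already been split (by AI or by the teacher) into titled sections; the function names `render_lesson` and `slugify` and the (heading, text) input format are hypothetical, not taken from the platform. The sketch only shows the kind of markup the structuring step targets: landmark elements, a single `h1`, sections labelled by their headings through `aria-labelledby`, and escaped text.

```python
import html
import re


def slugify(text: str) -> str:
    """Turn a heading into a lowercase, hyphenated fragment usable in an id."""
    slug = re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")
    return slug or "untitled"


def render_lesson(title: str, sections: list[tuple[str, str]]) -> str:
    """Render a lesson as semantic HTML5 with ARIA labelling.

    `sections` holds (heading, paragraph_text) pairs; this layout is a
    hypothetical input format chosen for the sketch.
    """
    lesson_id = "lesson-" + slugify(title)
    parts = [
        "<main>",
        # The article is announced to screen readers by its visible title.
        f'<article aria-labelledby="{lesson_id}">',
        f'<h1 id="{lesson_id}">{html.escape(title)}</h1>',
    ]
    for index, (heading, text) in enumerate(sections, start=1):
        # Numbering keeps ids unique even when two headings are identical.
        section_id = f"sec-{index}-{slugify(heading)}"
        parts += [
            f'<section aria-labelledby="{section_id}">',
            f'<h2 id="{section_id}">{html.escape(heading)}</h2>',
            f"<p>{html.escape(text)}</p>",  # escape AI text before insertion
            "</section>",
        ]
    parts += ["</article>", "</main>"]
    return "\n".join(parts)
```

In the platform itself this markup would come from Blade templates; the point of the sketch is the structure a screen reader navigates (landmarks, a consistent heading hierarchy, and labelled regions).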

1.3. Research Questions

- RQ1 (Architecture & Feasibility). Can an accessibility-first, AI-augmented e-learning platform integrate semantic HTML5/ARIA scaffolding, AI-generated alternative text, multi-level summarization/translation, text-to-speech, and offline PWA capability into a cohesive, usable system that meets the demands of higher education?

- RQ2 (Accessibility & Usability in Practice). To what extent does such an integrated approach improve practical accessibility and user experience for diverse learners—including blind/visually impaired users—relative to mainstream LMS baselines, as reflected in screen-reader compatibility, keyboard-only navigation, and perceived ease-of-use?

- RQ3 (Pedagogical Utility of AI Outputs). How useful and reliable are AI-generated summaries and image descriptions when mediated by a human-in-the-loop workflow for teachers?

1.4. Paper Organization

2. Related Work and Research Gap

2.1. Personalized E-Learning and AI-Driven Accessibility

2.2. AI Tools for Summaries, Translation, and TTS

2.3. PWA-Based E-Learning

2.4. Identified Gaps

- Limited Personalization. Many current e-learning platforms still offer static, uniform content without taking into account learners’ environment, prior knowledge, or learning pace (Murtaza et al., 2022; Gligorea et al., 2023). As a result, beginners may be overwhelmed by the material, while advanced learners may become disengaged. Such a one-size-fits-all approach often fails to sustain learner engagement and can contribute to high dropout rates.

- Deficient Accessibility. Acosta-Vargas et al. (2024b) identify major accessibility deficiencies in generative AI tools, citing poor screen reader support, inadequate keyboard navigation, and low contrast as basic obstacles to the inclusion of users with disabilities. They also point to the lack of transparency in AI systems and of inclusive training data, and call for proactive development strategies that ensure compliance with ethical and regulatory standards for digital inclusion. Despite W3C guidelines and legal mandates such as Section 508 and EN 301 549, e-learning platforms still show many gaps in inclusive design. Common deficiencies include missing alternative text for images, inadequate ARIA roles, limited text resizing, and insufficient keyboard navigation (Acosta-Vargas et al., 2024a). Students with visual, auditory, or motor impairments need more than superficial compliance, so these gaps often reduce their participation and increase their dropout rates. The absence or limited implementation of additional features, such as sign language overlays or real-time TTS for arbitrary text, further limits inclusive education.

- Language Barriers. The globalization of online education has increased the demand for multilingual courses, but many online learning platforms still offer limited, sometimes only partial, translation capabilities (Jónsdóttir et al., 2023). Students from non-English-speaking backgrounds often face major obstacles in understanding domain-specific terminology or culturally embedded examples. Although machine translation keeps improving, it rarely adapts to the nuances of the local language in specialized domains, such as medical or engineering jargon, which reduces the clarity of the content (for instance, machine translation could render “electromagnetic field” as “electromagnetic plain”).

- Unfulfilled AI Potential. Although research in the field of artificial intelligence in education (AIED) has produced advanced techniques and methods (Chen et al., 2020), such as automatic question generation, real-time text summarization, or adaptive reading level adjustments, commercial LMSs typically do not integrate them at scale. As a result, many students cannot benefit from AI-based dynamic personalization, multilingual content summarization, or advanced assistance tools.

3. Architecture and Implementation

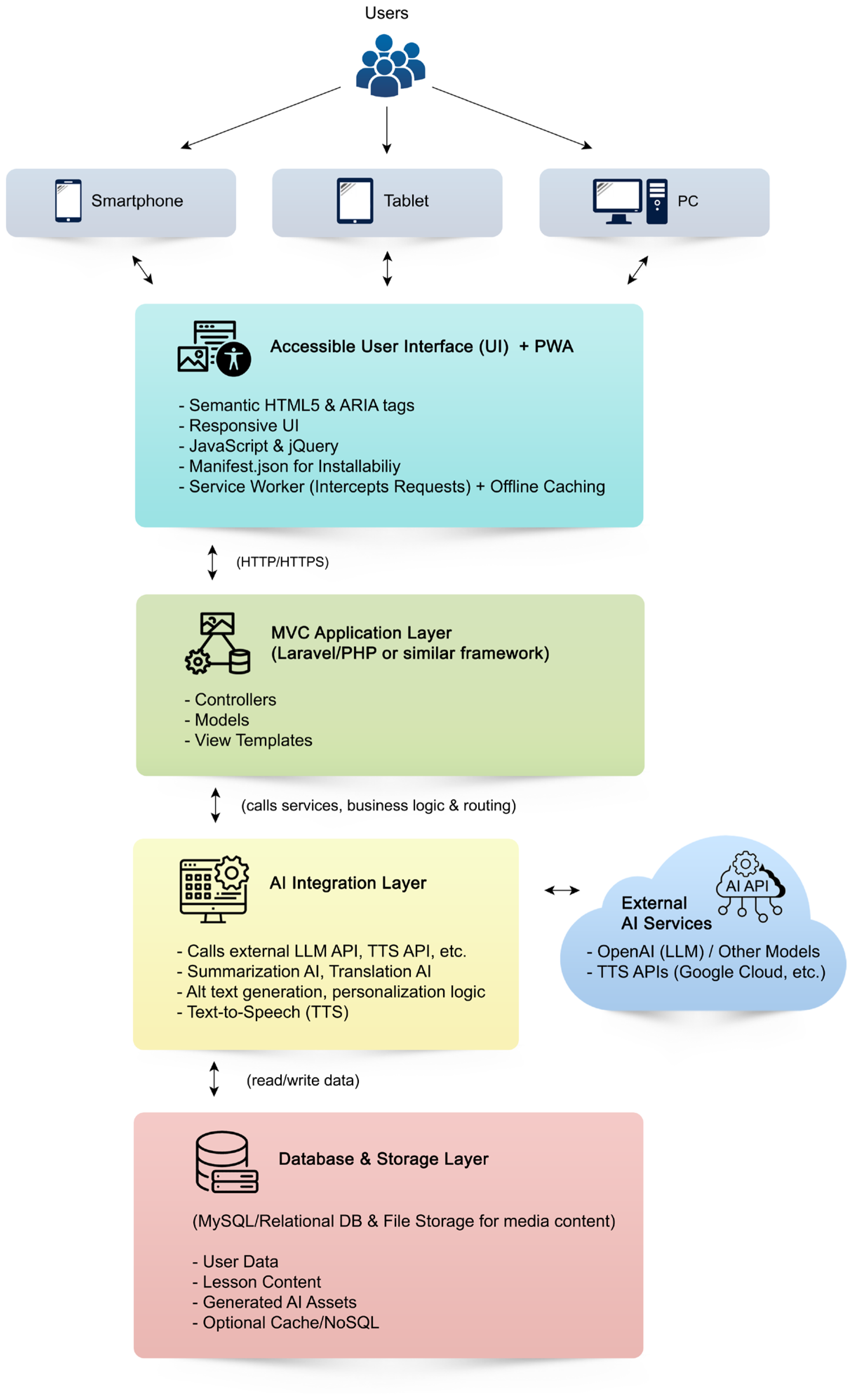

3.1. System Overview

3.2. Core Architectural Components

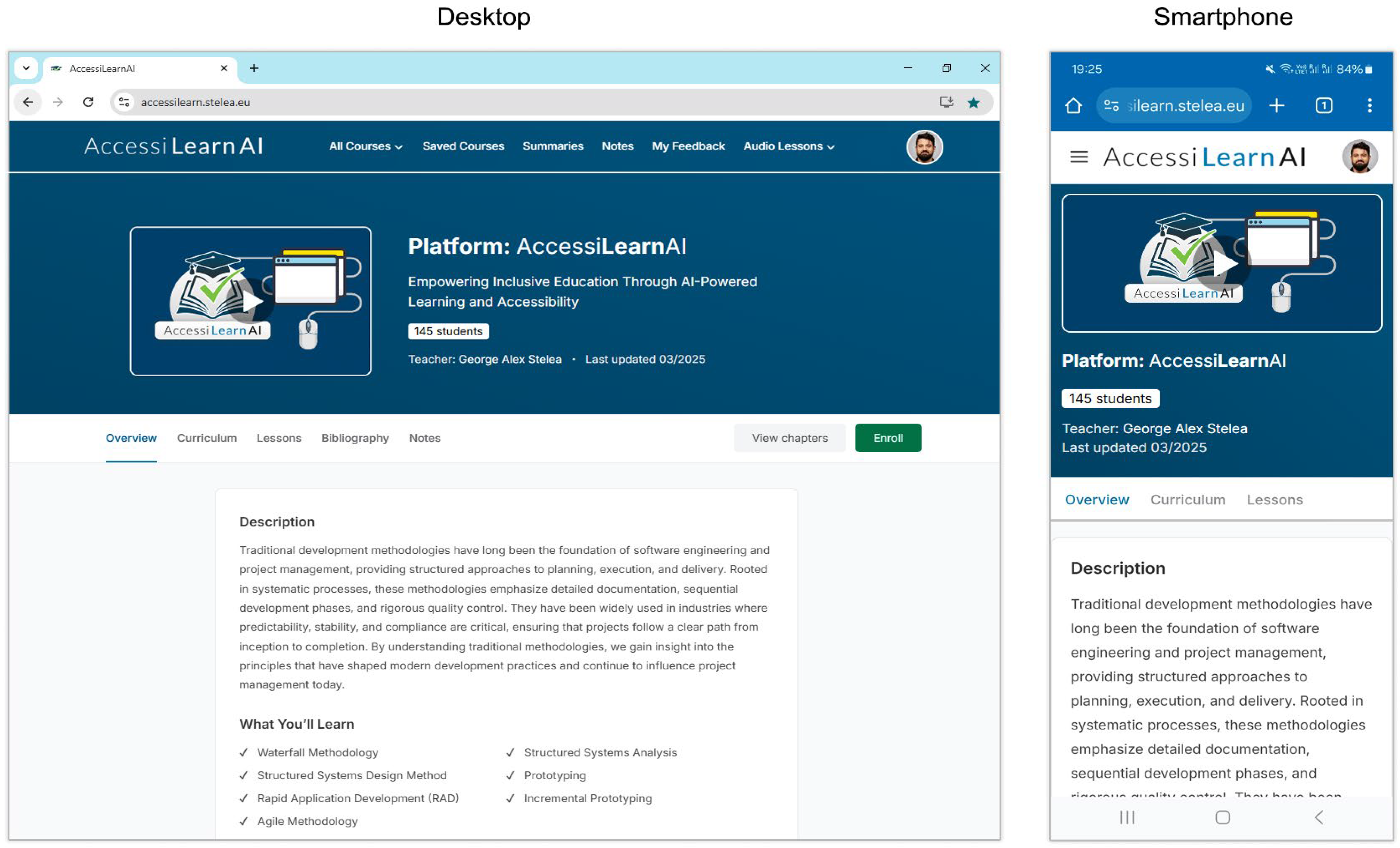

3.2.1. Front-End (User Interface & PWA Layer)

3.2.2. Back-End Application Logic (MVC Layer)

- Controllers: Act as intermediaries that manage requests (e.g., file upload, summary generation) by invoking services and enforcing role-based access policies. The MVC framework also provides built-in security features such as session management and input validation, and it protects against SQL injection (Nasereddin et al., 2021) and cross-site scripting (XSS) (Weamie, 2022) by default through prepared statements and output escaping, respectively.

- Services/Managers: In our architecture, more complex processes, particularly those involving AI or external APIs, are handled by service classes. For example, an “AIContentService” class encapsulates all tasks related to AI-generated content: it receives raw lesson text, processes it, and calls AI APIs to produce summaries, simplified versions, or accessibility suggestions. This separation keeps controllers thin and easy to test, since services can be mocked during unit tests.

- View Templates: Dynamic HTML pages are built with Laravel’s Blade templates, which combine static structure with AI-generated content. Because semantic tags and ARIA annotations are defined in the templates, they are applied consistently in the final rendering. Reusable template parts (e.g., headers, footers) help maintain consistent accessibility features across all pages.
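The controller/service split described above can be sketched as follows. The platform itself is built on Laravel (PHP); this Python version only illustrates the pattern, and the method names, the `TextGenerator` protocol, the role names, and `FakeGenerator` are hypothetical. The service receives its AI client through the constructor, so a unit test can substitute a fake client without network calls, and the controller function checks the user's role before delegating.

```python
from dataclasses import dataclass, field
from typing import Protocol


class TextGenerator(Protocol):
    """Anything that turns a prompt into text (e.g., a wrapper around an LLM API)."""

    def generate(self, prompt: str) -> str: ...


class AIContentService:
    """Encapsulates AI-related tasks so controllers stay thin."""

    def __init__(self, client: TextGenerator) -> None:
        self.client = client  # injected, so tests can pass a fake

    def summarize(self, lesson_text: str, level: str) -> str:
        cleaned = " ".join(lesson_text.split())  # minimal preprocessing: collapse whitespace
        prompt = f"Write a {level} summary of the following lesson:\n{cleaned}"
        return self.client.generate(prompt)


class PermissionDenied(Exception):
    pass


def summary_controller(user_role: str, lesson_text: str, level: str,
                       service: AIContentService) -> dict:
    """Controller: enforce role-based access, then delegate to the service."""
    if user_role not in {"student", "teacher"}:
        raise PermissionDenied(f"role {user_role!r} may not request summaries")
    return {"summary": service.summarize(lesson_text, level), "ai_generated": True}


@dataclass
class FakeGenerator:
    """Test double that records prompts instead of calling a real API."""
    prompts: list = field(default_factory=list)

    def generate(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return "FAKE SUMMARY"
```

Because the fake records every prompt, a test can check both the controller's output and exactly what would have been sent to the AI API, without cost or network access.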

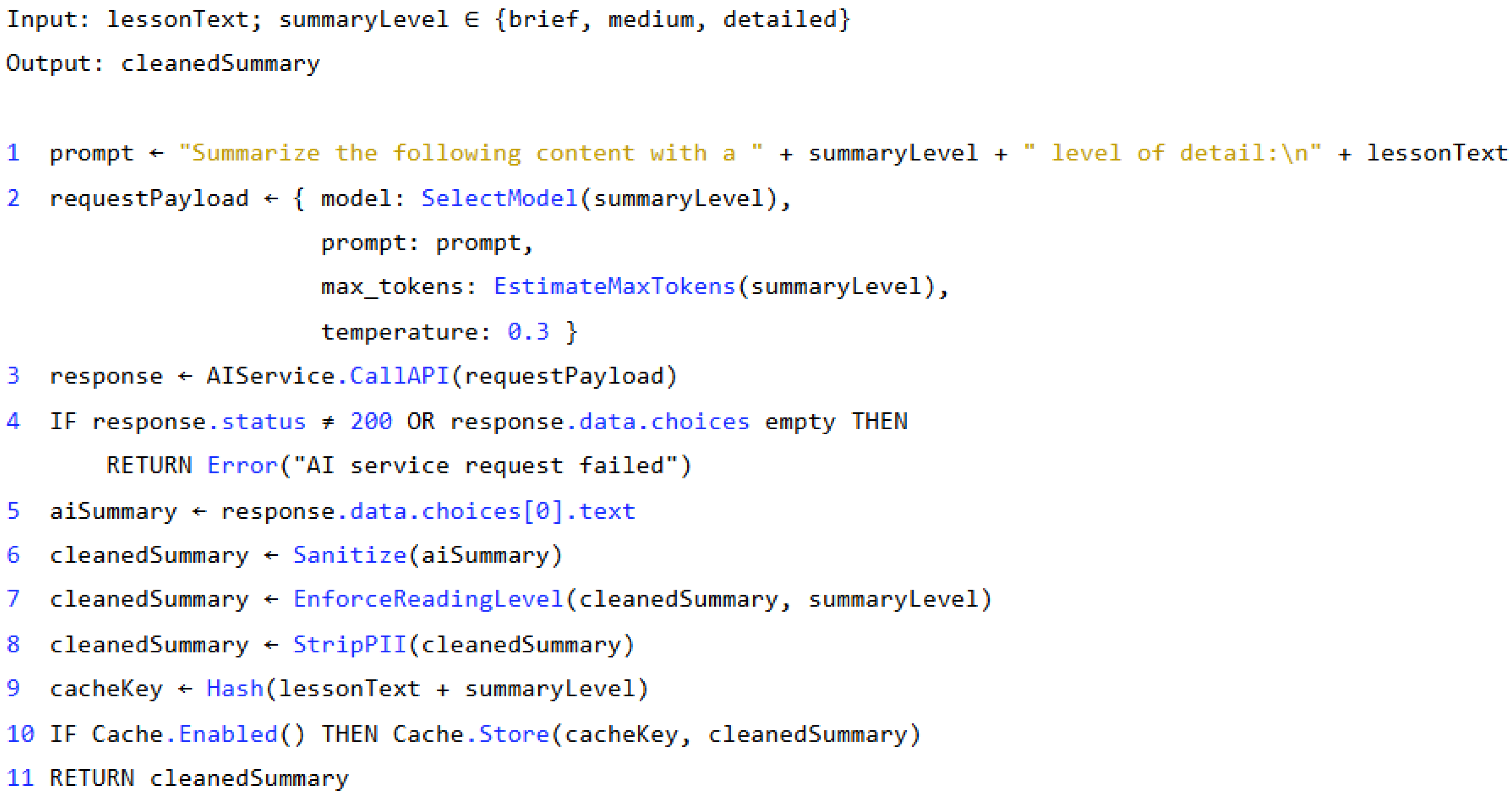

3.2.3. AI Integration Layer

3.2.4. Database and Storage Layer

3.2.5. External Services and APIs

- Authentication and Identity (optional): Although the platform maintains its own user database, it can be extended to integrate an external Single Sign-On (SSO) provider (such as Google OAuth (Google Developers, 2025d) or a university login system), allowing users to authenticate with existing accounts. Whether to enable SSO depends on the deployment needs and technical requirements. For our prototype, we used local authentication, but the architecture allows an external OAuth service to be configured.

- Notification Services: Because the platform’s workflow requires sending notifications (for example, emailing a teacher when AI processing of their content is complete, or sending reminders to students), specialized external email APIs (such as SendGrid (2025)) or push notification services are recommended. The PWA’s push notifications are delivered through Firebase Cloud Messaging, which notifies the user’s device when new content is available or when AI-generated results are ready.

- Analytics and Monitoring: To track and monitor application performance, external analytics services and components should be integrated. These could include Google Analytics (Google Developers, 2025b) for user behavior analysis (with appropriate privacy safeguards) or tools such as New Relic (New Relic, 2025) for server performance monitoring. Analytics and monitoring tools are not part of the core learning capabilities but serve as auxiliary features that help maintain the platform.
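For the optional SSO path, the first step of the OAuth 2.0 authorization-code flow is to redirect the user to the provider with a random `state` value, which is checked when the user returns in order to prevent cross-site request forgery. The sketch below builds such a URL for Google's documented authorization endpoint. The client ID, redirect URI, scopes, and function names are placeholders, the token exchange that follows is omitted, and since the prototype used local authentication, this is an illustration under those assumptions rather than the platform's code.

```python
import hmac
import secrets
from urllib.parse import urlencode

GOOGLE_AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"


def build_authorization_url(client_id: str, redirect_uri: str) -> tuple[str, str]:
    """Return (url, state); the caller stores `state` in the user's session."""
    state = secrets.token_urlsafe(32)  # unguessable anti-CSRF token
    params = {
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "response_type": "code",  # authorization-code flow
        "scope": "openid email profile",
        "state": state,
    }
    return f"{GOOGLE_AUTH_ENDPOINT}?{urlencode(params)}", state


def state_is_valid(expected: str, returned: str) -> bool:
    """Compare the stored and returned state values in constant time."""
    return hmac.compare_digest(expected, returned)
```

On the callback, the server would reject the request unless `state_is_valid` returns `True`, and only then exchange the authorization code for tokens.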

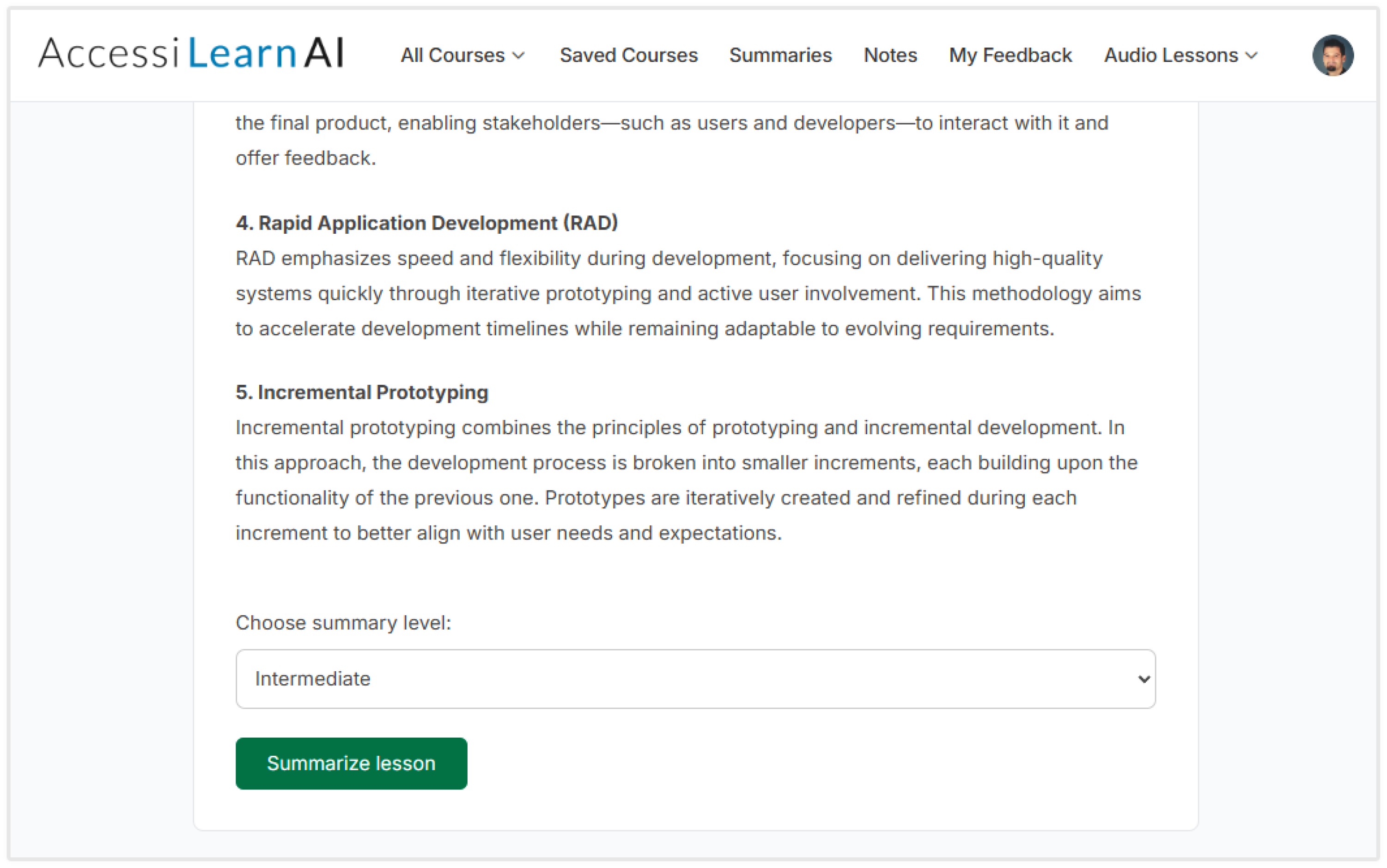

3.3. AI and Personalization Features

- Adaptive Content Summarization: The platform provides on-demand text summarization at three levels (short, intermediate, or detailed) using an integrated LLM API. Students or teachers can request summaries tailored to their needs, whether for quick review or in-depth exam preparation. Summaries are generated in real time, can be adjusted or regenerated with a different focus, and help adapt content to both beginner and advanced students. This dynamic approach addresses the lack of context-aware summarization in traditional e-learning. All summaries are clearly marked as AI-generated, and students are encouraged to verify them against the full content.
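One possible way to implement the three summary levels is to map each level to a target length in the prompt and to attach the AI-generated flag to every result. The word budgets, prompt wording, and function names below are assumptions made for illustration, and `llm` stands for any callable that sends a prompt to the integrated LLM API.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical word budgets for each summary level.
LEVEL_WORDS = {"short": 60, "intermediate": 150, "detailed": 300}


@dataclass(frozen=True)
class Summary:
    level: str
    text: str
    ai_generated: bool = True  # surfaced in the UI as an "AI-generated" label


def build_summary_prompt(lesson_text: str, level: str,
                         focus: Optional[str] = None) -> str:
    """Build the prompt for one summary level, optionally with a focus."""
    if level not in LEVEL_WORDS:
        raise ValueError(f"unknown summary level: {level!r}")
    prompt = (f"Summarize the lesson below in at most {LEVEL_WORDS[level]} words, "
              "using only information from the lesson.")
    if focus:
        prompt += f" Focus on: {focus}."  # supports regenerating with a different focus
    return prompt + "\n\n" + lesson_text


def summarize(lesson_text: str, level: str, llm: Callable[[str], str],
              focus: Optional[str] = None) -> Summary:
    """Call the LLM and wrap its answer so the UI can label it."""
    text = llm(build_summary_prompt(lesson_text, level, focus)).strip()
    return Summary(level=level, text=text)
```

Keeping the prompt construction separate from the API call makes it easy to test the level handling offline and to swap LLM providers.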

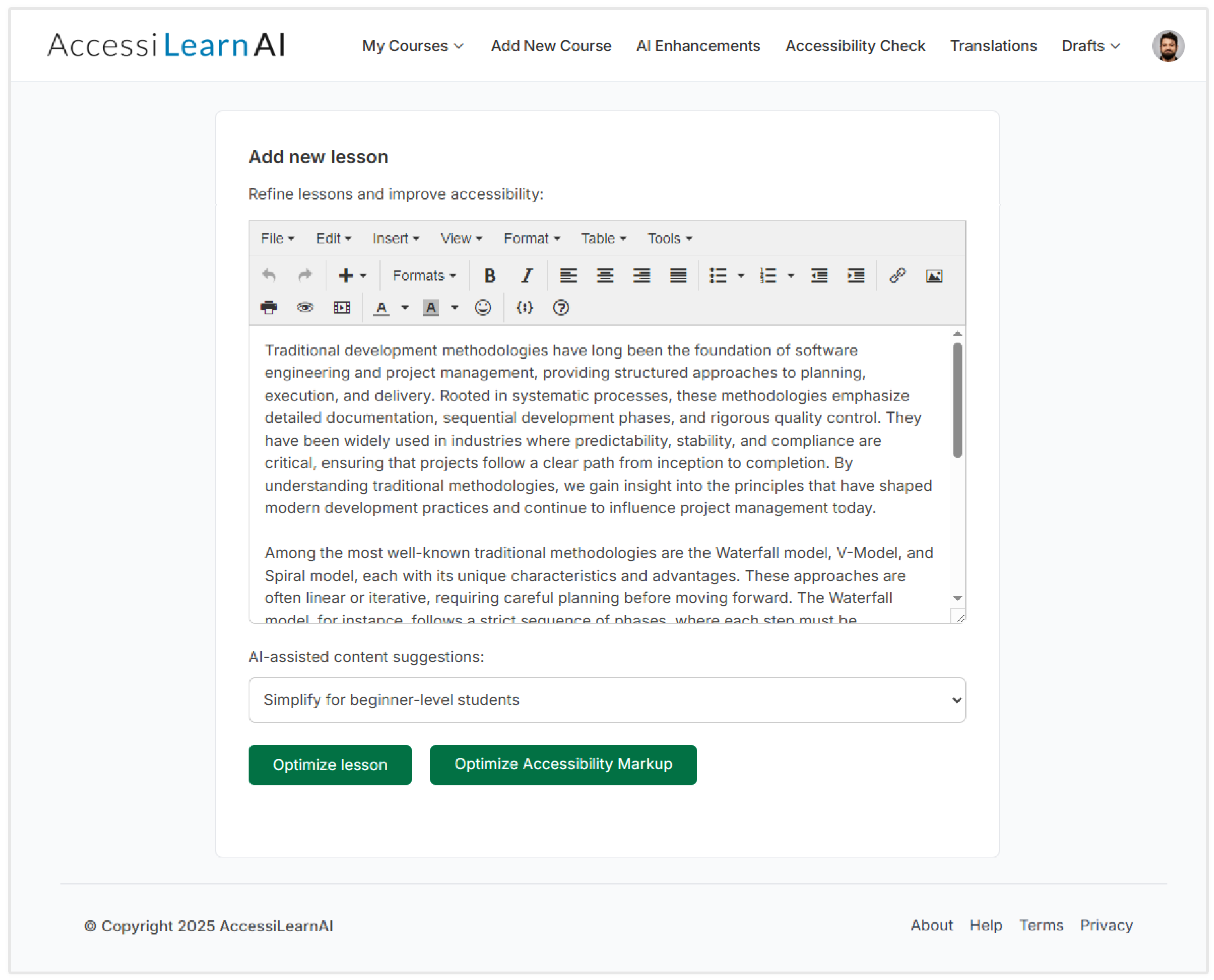

- Reading Level Adjustment and Content Rewriting: Teachers can use AI to adapt content to different reading levels and learning needs by simplifying complex texts, adding details and examples, or rephrasing content for clarity. While students mainly use summarization, teachers can apply this adaptation feature when preparing lessons. The AI suggests simpler versions of difficult sections, which teachers then refine (human oversight). This helps address diverse student needs and overcomes the limitations of one-size-fits-all content.
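To help a teacher check whether an AI-simplified version is actually easier to read, a readability score could be compared before and after rewriting. The sketch below uses the standard Flesch Reading Ease formula, 206.835 − 1.015 × (words/sentences) − 84.6 × (syllables/words), where higher scores indicate easier text. The syllable counter is a rough vowel-group heuristic for English, and using this metric in the platform is an assumption of the sketch rather than a described feature; the score would support, not replace, the teacher's judgment.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate English syllables by counting vowel groups (heuristic)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent 'e' usually does not form its own syllable ("make").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease score; higher means easier to read."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))
```

For example, a teacher could compare the score of an original paragraph with that of its AI-simplified version; because the syllable count is approximate, only clear differences should be taken as meaningful.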

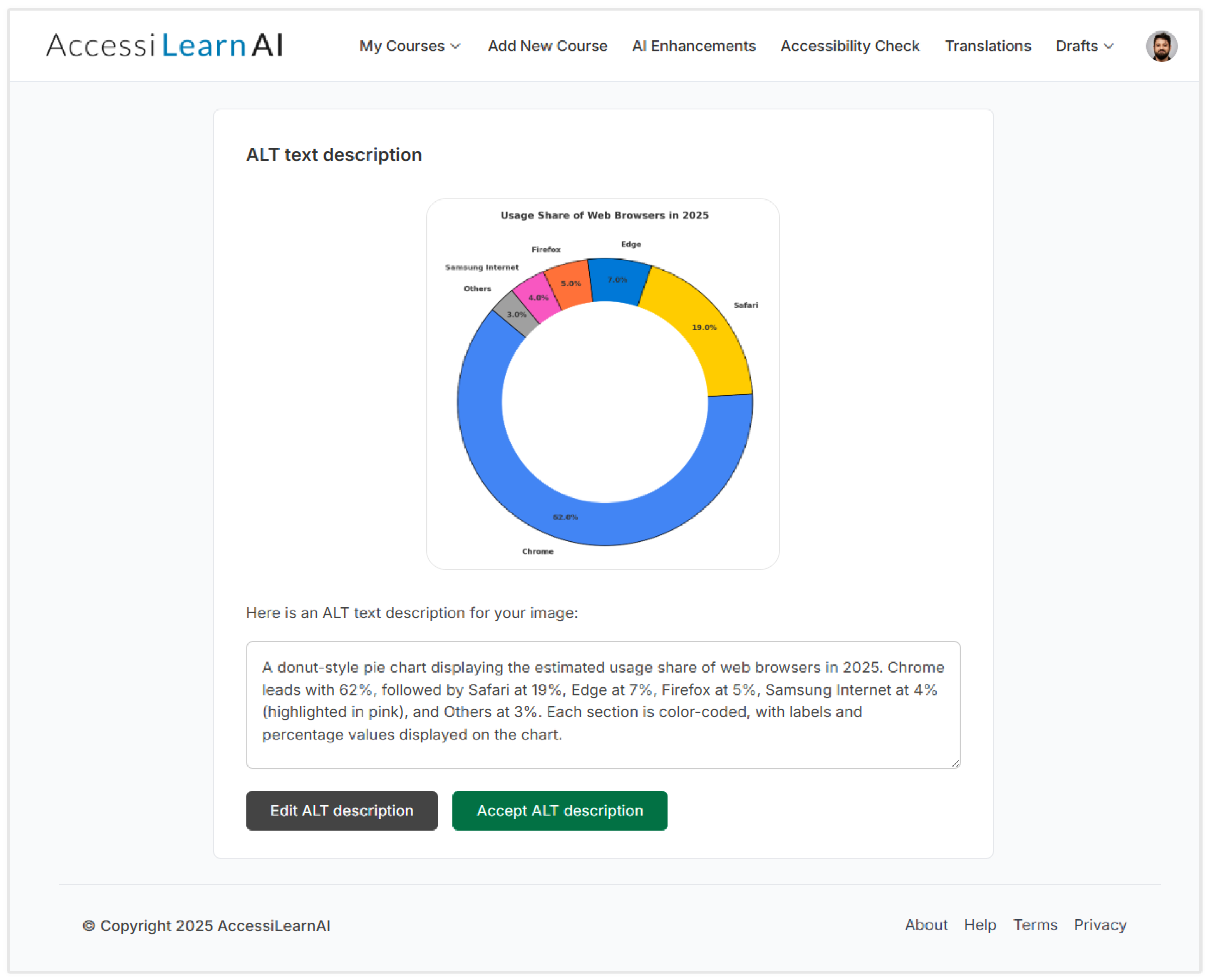

- Automated Alternative Text for Images: An important feature of the platform is AI-generated alt text. When a teacher adds an image, the system generates a description using the LLM. For example, for an image of a cell in a biology lesson, the AI might produce alt text such as “Diagram of a cell showing labeled organelles including the nucleus, mitochondria, and cell membrane”. This suggestion is then shown to the teacher for approval or editing. This practice addresses a common accessibility barrier in education, where many resources lack descriptive alt text because writing it manually takes extra effort. Our approach, inspired by works such as Tiwary and Mahapatra (2022), who explored AI-generated image descriptions, integrates alt-text creation into the content workflow. Students with visual impairments or those using screen readers benefit from having these descriptions available for every image.
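The teacher review step for AI-generated alt text can be modeled as a small state machine: a suggestion stays pending until a teacher approves or edits it, and only reviewed text is rendered into the `alt` attribute. The class, status, and method names are hypothetical, and refusing to render unreviewed images is an assumption made for the sketch. Purely decorative images get an empty `alt=""`, which by common HTML accessibility practice tells screen readers to skip them.

```python
import html
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"        # AI suggestion awaiting teacher review
    APPROVED = "approved"      # teacher accepted or edited the text
    DECORATIVE = "decorative"  # image conveys no content; empty alt


@dataclass
class ImageAltText:
    src: str
    ai_suggestion: str
    status: Status = Status.PENDING
    final_text: Optional[str] = None

    def approve(self, edited_text: Optional[str] = None) -> None:
        """Teacher accepts the AI text, optionally replacing it with an edit."""
        text = (edited_text if edited_text is not None else self.ai_suggestion).strip()
        if not text:
            raise ValueError("approved alt text must not be empty")
        self.final_text = text
        self.status = Status.APPROVED

    def mark_decorative(self) -> None:
        self.final_text = ""
        self.status = Status.DECORATIVE

    def to_html(self) -> str:
        """Render the image tag; unreviewed suggestions are never published."""
        if self.status is Status.PENDING:
            raise RuntimeError("alt text has not been reviewed by a teacher yet")
        alt = html.escape(self.final_text or "")
        return f'<img src="{html.escape(self.src)}" alt="{alt}">'
```

Keeping the AI suggestion and the approved text in separate fields also preserves a record of what the teacher changed, which could feed the feedback loop described later in this section.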

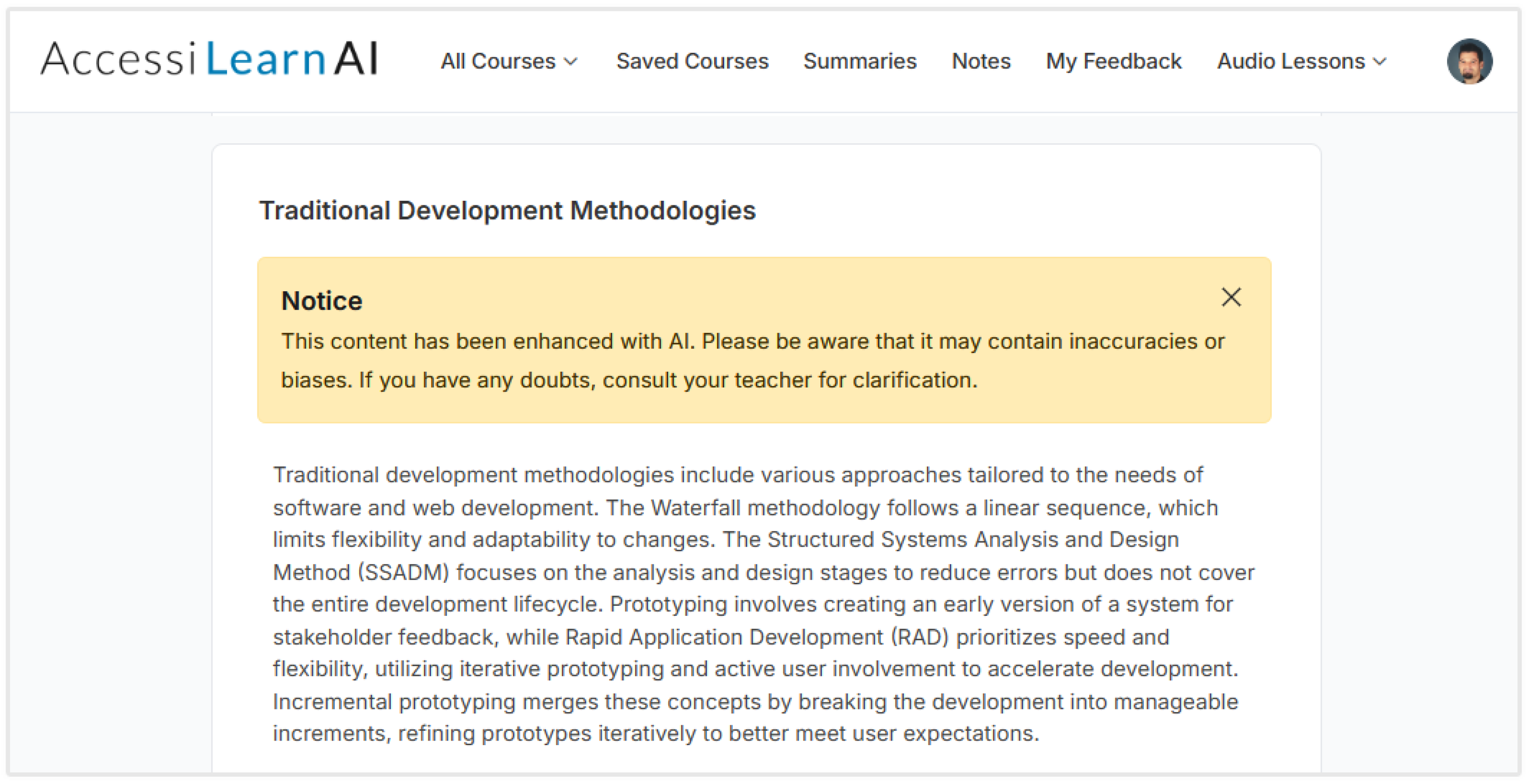

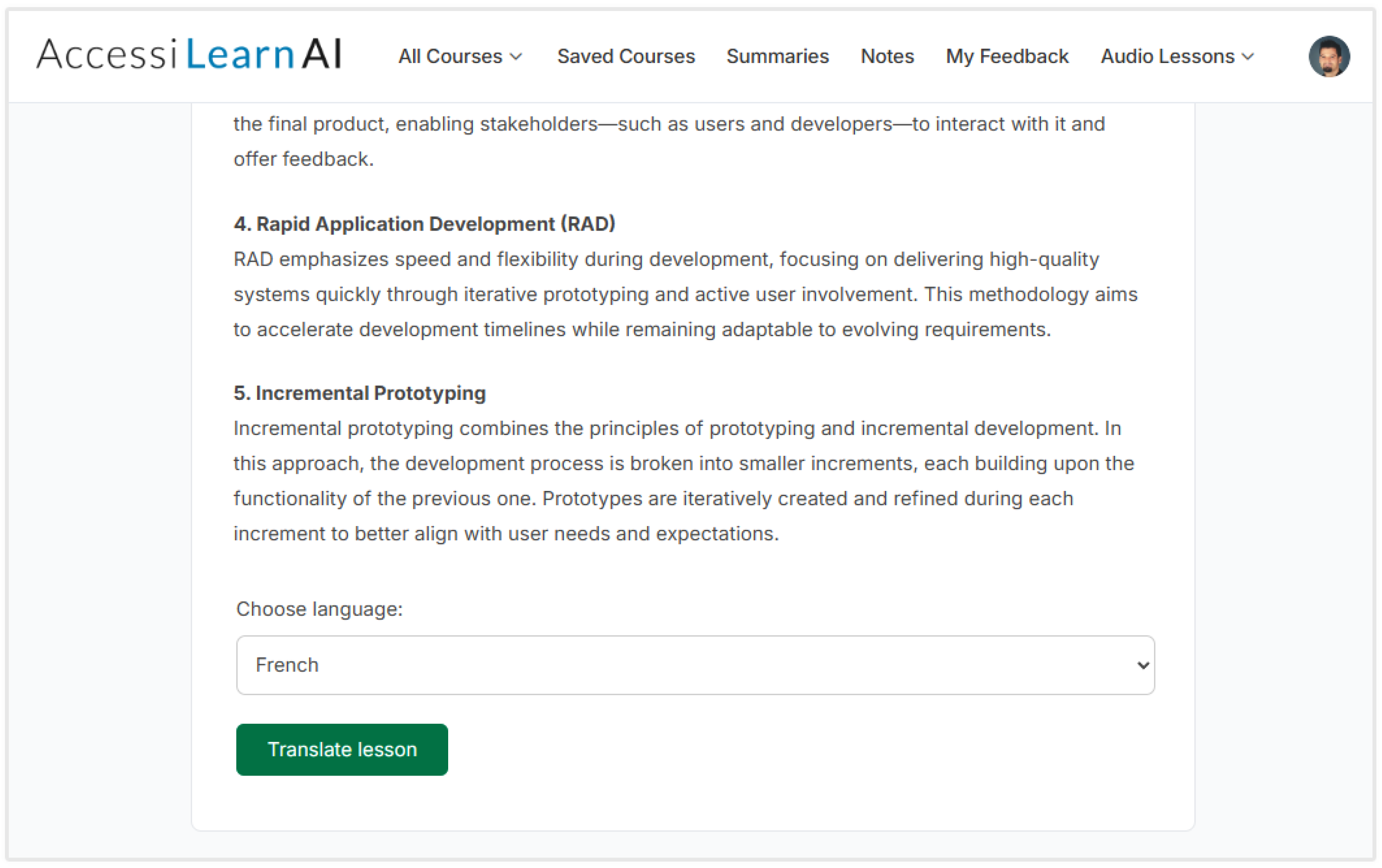

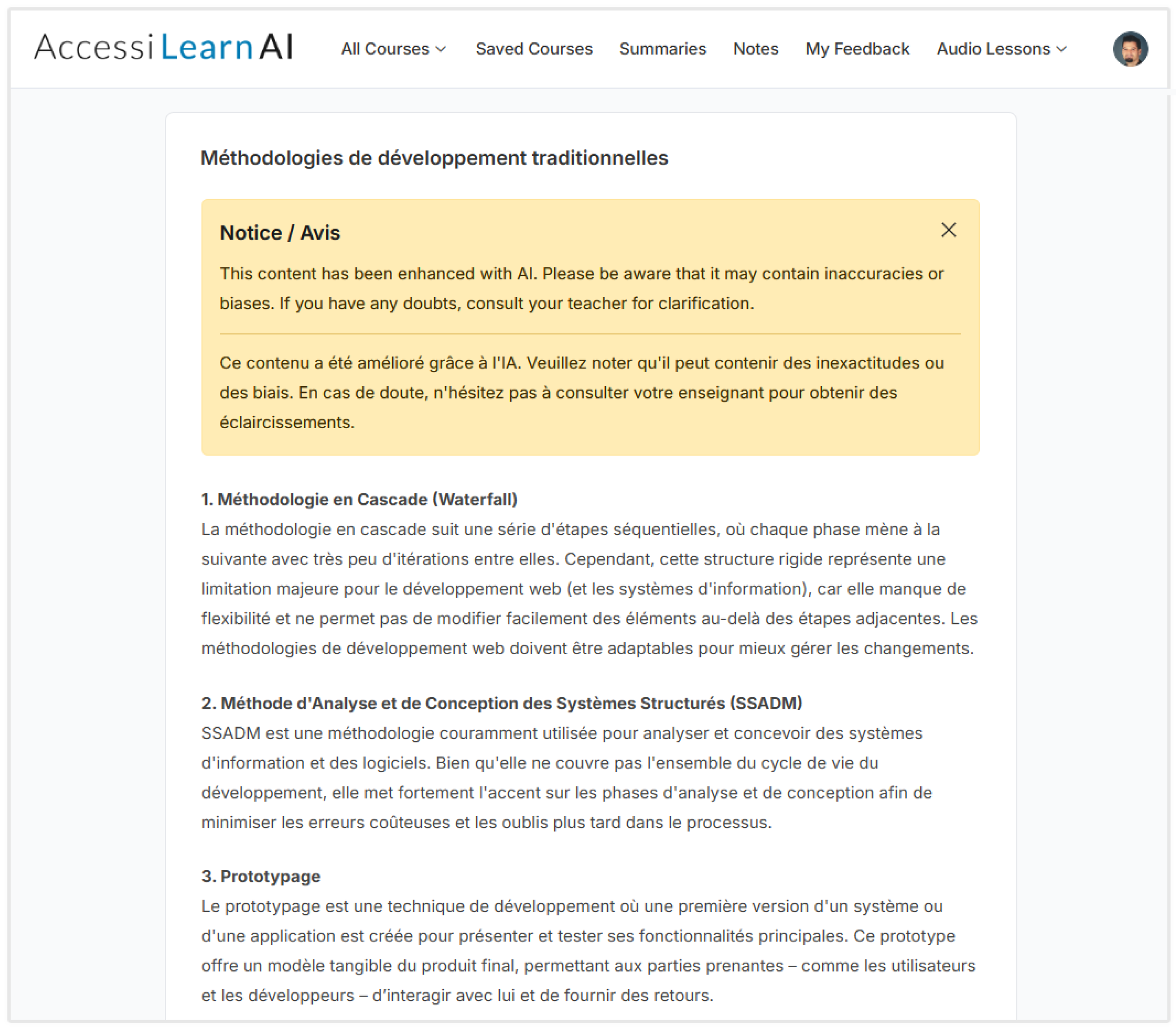

- Real-Time Language Translation: The AccessiLearnAI platform aims to make lessons easier to understand for students in multilingual settings. A student can select any of the available languages (for the prototype, major languages such as English, Spanish, and French) and access complete lessons in that language. Lessons are translated using an LLM or a neural machine translation service, with attention to context and technical terms. For example, an English computer science lesson can be rendered in Spanish while keeping accurate terminology. Students can learn in their native language, while teachers can review or refine translations for accuracy. Translations are generated in real time, cached for better performance, and can be improved through human review. This feature supports inclusive design, broadens course access, and helps address gaps in domain-specific content and less-resourced languages.
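One common way to keep technical terms accurate during machine translation is to shield glossary terms before translation and substitute teacher-approved target-language equivalents afterwards. The sketch below does this with numbered placeholders and caches results by target language and a hash of the text and glossary. The glossary format, placeholder syntax, and function names are hypothetical, `translate` stands for any MT or LLM call, and real translation engines do not always preserve placeholders, so outputs would still go through the teacher review described above.

```python
import hashlib
import re
from typing import Callable, Dict, Tuple

# (text, target_language) -> translated text; stands in for an MT or LLM call.
Translator = Callable[[str, str], str]

_translation_cache: Dict[Tuple[str, str], str] = {}


def translate_with_glossary(text: str, target: str, glossary: Dict[str, str],
                            translate: Translator) -> str:
    """Shield glossary terms with placeholders, translate, then restore them."""
    restore = {}
    # Longer terms first, so "electromagnetic field" is matched before "field".
    for i, term in enumerate(sorted(glossary, key=len, reverse=True)):
        token = f"__TERM{i}__"
        pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
        text, count = pattern.subn(token, text)
        if count:
            restore[token] = glossary[term]
    translated = translate(text, target)
    for token, approved in restore.items():
        translated = translated.replace(token, approved)
    return translated


def cached_translation(text: str, target: str, glossary: Dict[str, str],
                       translate: Translator) -> str:
    """Reuse earlier results for the same text, language, and glossary."""
    fingerprint = hashlib.sha256(
        (text + "\x00" + repr(sorted(glossary.items()))).encode("utf-8")
    ).hexdigest()
    key = (target, fingerprint)
    if key not in _translation_cache:
        _translation_cache[key] = translate_with_glossary(text, target, glossary, translate)
    return _translation_cache[key]
```

Including the glossary in the cache key means that when a teacher corrects a term, affected lessons are retranslated rather than served from a stale cache entry.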

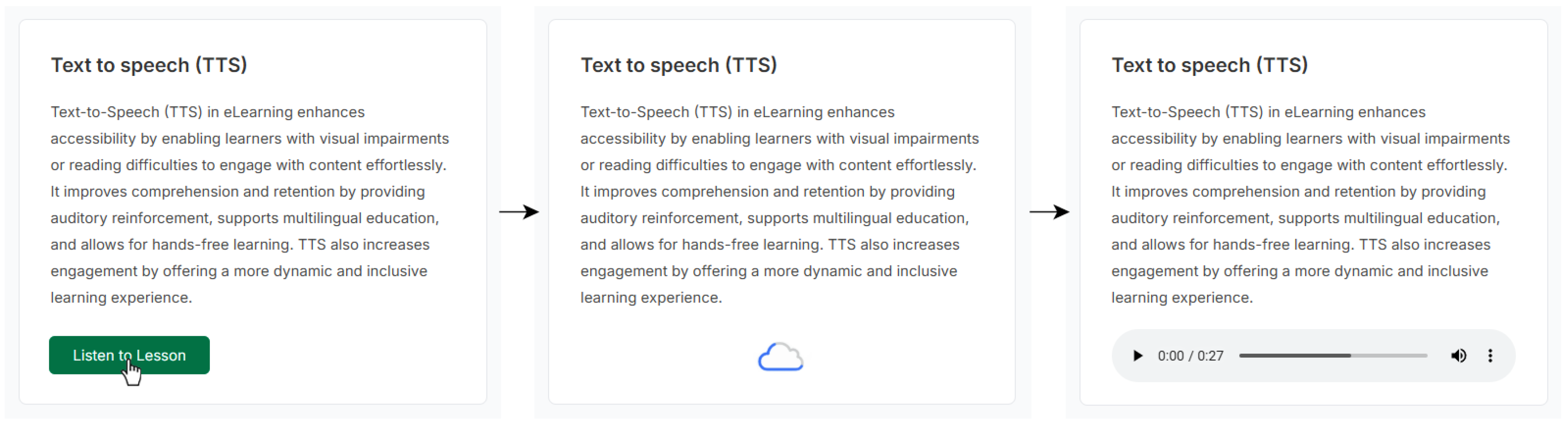

- Text-to-Speech (Audio Learning Mode): The platform’s Text-to-Speech feature lets students listen to any content, whether a sentence, a summary, or a full lesson, in a natural-sounding voice. This benefits visually impaired learners, students with reading difficulties, and those who prefer audio learning, for example during commutes. Playback speed is adjustable and, where possible, students can also select a voice to suit their preferences. Research suggests that TTS functionality can improve engagement for mobile learners and those who favor auditory processing (Jafarian & Kramer, 2025). When a student clicks ‘Listen to lesson’, the audio is streamed or generated and then played. Thanks to caching in the PWA, once an audio file has been created, it can be replayed offline. This audio mode improves accessibility, supports students who struggle with on-screen reading, and reinforces learning by allowing simultaneous reading and listening.

- User Preference Personalization: The platform can also provide a more personalized experience by allowing users to specify their preferences. For example, if a student always opts for “detailed” summaries, the interface can default to “detailed” mode for that student. If another student finds a particular font size or color contrast better suited to their visual needs, this setting can be stored and applied at login (this is an accessibility UI feature rather than an AI feature, but it complements the AI features). In addition, the platform’s adaptive features learn from feedback: if a student rates AI-generated content (a summary or a translation) as unhelpful, the system stores this feedback and can respond by regenerating the content or by recommending a different level of detail. As data accumulate over time, the AI can use them to adapt better or to select the most suitable strategy for different users (e.g., very literal summaries may suit some users, while others may prefer more abstract ones).
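Returning to the Text-to-Speech feature, cloud TTS services typically cap the amount of text accepted per request, so a full lesson would usually be split into chunks at sentence boundaries before synthesis. Each synthesized chunk can then be cached under a key derived from its text and voice settings, so replays (including offline replays from the PWA cache) do not trigger new requests. The 3,000-character limit, the key format, and the function names below are assumptions for the sketch; the actual limit depends on the chosen TTS provider.

```python
import hashlib
import re
from typing import List

MAX_CHARS = 3000  # assumed per-request limit; check the chosen TTS provider


def chunk_for_tts(text: str, max_chars: int = MAX_CHARS) -> List[str]:
    """Split text into chunks of at most max_chars, preferring sentence ends."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        # Hard-split any single sentence that exceeds the limit on its own.
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)  # current is non-empty here
            current = sentence
    if current:
        chunks.append(current)
    return chunks


def audio_cache_key(chunk: str, voice: str, speed: float) -> str:
    """Stable key so identical text and settings reuse the cached audio file."""
    payload = f"{voice}|{speed:.2f}|{chunk}".encode("utf-8")
    return hashlib.sha256(payload).hexdigest()
```

Splitting at sentence ends keeps the synthesized speech natural at chunk boundaries, and including voice and speed in the key ensures that changing a preference produces new audio instead of replaying the old file.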

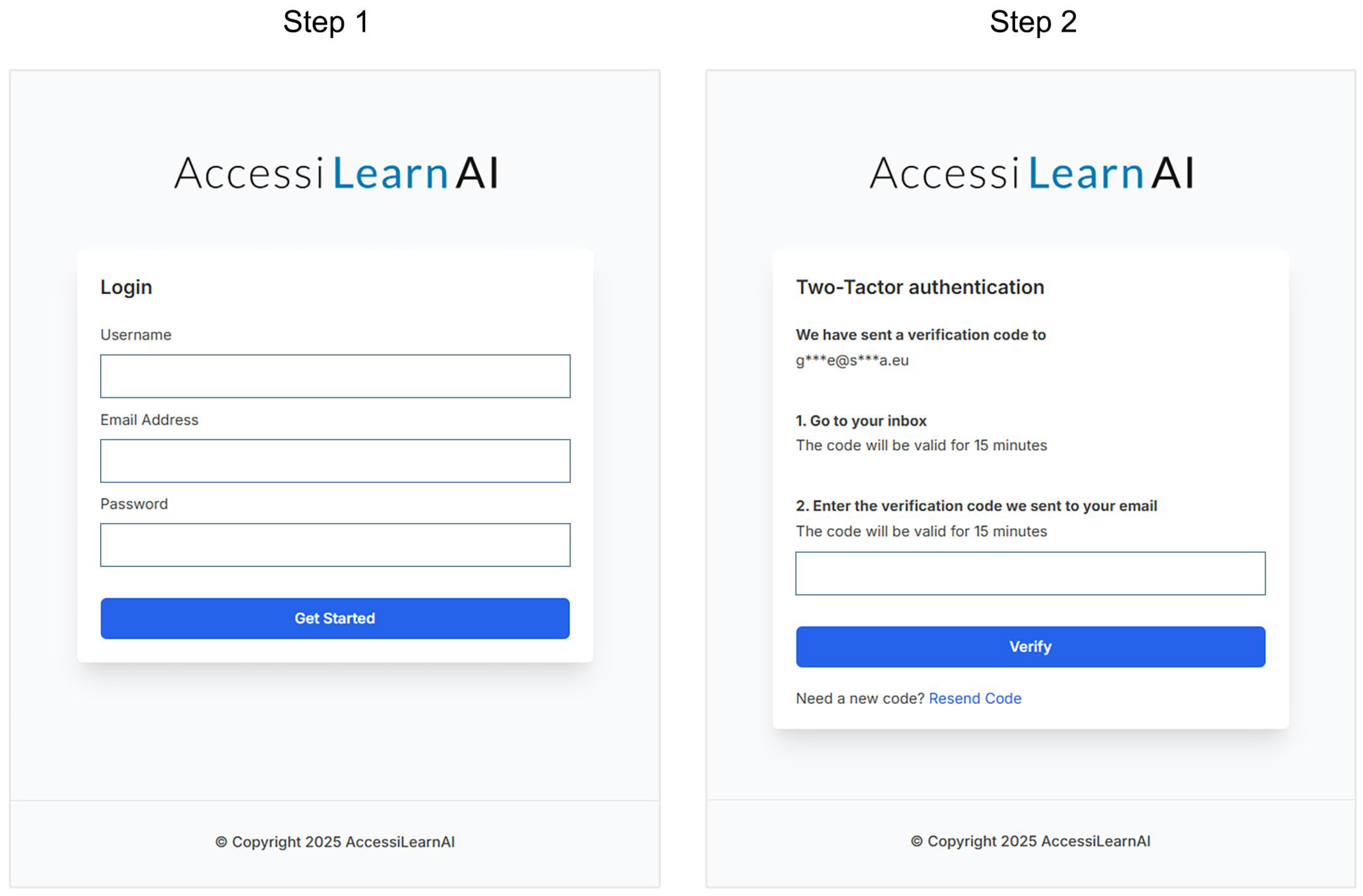

3.4. Security and Privacy Considerations

3.5. Scalability and Performance Optimization

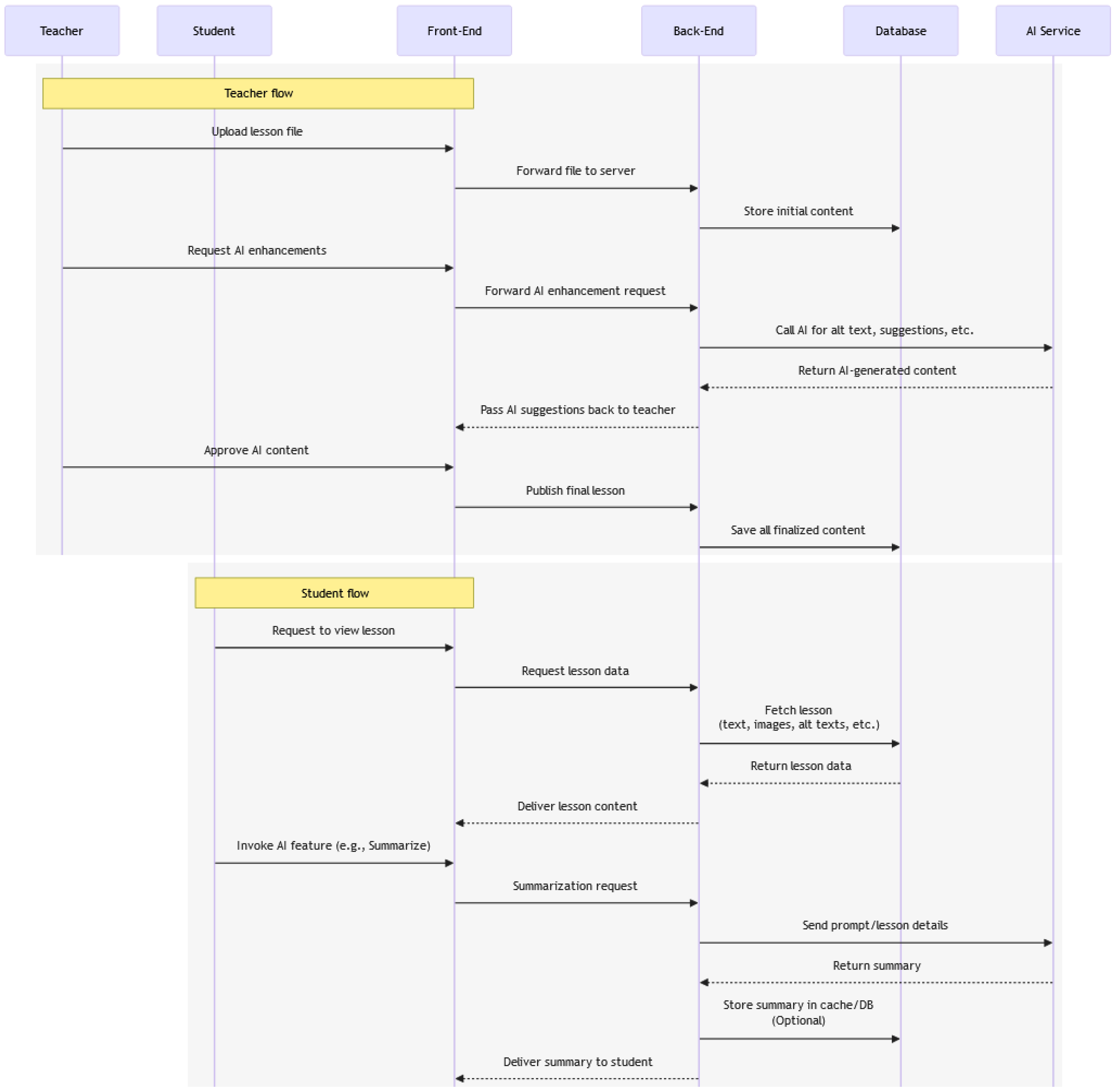

4. Workflow for Different User Roles and Interaction Model

4.1. Teacher Workflow

4.2. Student Workflow

4.3. Data Flow and Interaction Model

5. Comparative Analysis with Established E-Learning Platforms

6. Discussion

7. Limitations

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- AccessibleEU. (2025). A new era of inclusion begins: The European Accessibility Act enters into force. Available online: https://accessible-eu-centre.ec.europa.eu/content-corner/news/new-era-inclusion-begins-eaa-enters-force-2025-06-27_en (accessed on 21 July 2025).

- Acosta-Vargas, P., Acosta-Vargas, G., Salvador-Acosta, B., & Jadán-Guerrero, J. (2024a, June 24–26). Addressing web accessibility challenges with generative artificial intelligence tools for inclusive education. 10th International Conference on eDemocracy & eGovernment (ICEDEG) (pp. 1–7), Lucerne, Switzerland.

- Acosta-Vargas, P., Salvador-Acosta, B., Novillo-Villegas, S., Sarantis, D., & Salvador-Ullauri, L. (2024b). Generative artificial intelligence and web accessibility: Towards an inclusive and sustainable future. Emerging Science Journal, 8(4), 1602–1621.

- Adams, C. (2025). Salt. In S. Jajodia, P. Samarati, & M. Yung (Eds.), Encyclopedia of cryptography, security and privacy (pp. 2157–2158). Springer.

- Airaj, M. (2024). Ethical artificial intelligence for teaching-learning in higher education. Education and Information Technologies, 29, 17145–17167.

- Al-Fraihat, D., Alshahrani, A. M., Alzaidi, M., Shaikh, A. A., Al-Obeidallah, M., & Al-Okaily, M. (2025). Exploring students’ perceptions of the design and use of the Moodle learning management system. Computers in Human Behavior Reports, 18, 100685.

- Ally. (2025). Ally accessibility tool. Available online: https://ally.ac/ (accessed on 12 April 2025).

- Amazon Web Services. (2025a). Amazon CloudFront. Available online: https://aws.amazon.com/cloudfront/ (accessed on 9 April 2025).

- Amazon Web Services. (2025b). Amazon Polly. Available online: https://aws.amazon.com/polly/ (accessed on 11 December 2024).

- Arredondo-Trapero, F. G., Guerra-Leal, E. M., Kim, J., & Vázquez-Parra, J. C. (2024). Competitiveness, quality education and universities: The shift to the post-pandemic world. Journal of Applied Research in Higher Education, 16(5), 2140–2154.

- Bootstrap. (2025). Bootstrap framework. Available online: https://getbootstrap.com/ (accessed on 18 April 2025).

- Bray, A., Devitt, A., Banks, J., Sanchez Fuentes, S., Sandoval, M., Riviou, K., Byrne, D., Flood, M., Reale, J., & Terrenzio, S. (2024). What next for universal design for learning? A systematic literature review of technology in UDL implementations at second level. British Journal of Educational Technology, 55, 113–138.

- CAST. (2024). Universal design for learning guidelines. Available online: https://udlguidelines.cast.org/ (accessed on 12 November 2024).

- Chen, L., Chen, P., & Lin, Z. (2020). Artificial intelligence in education: A review. IEEE Access, 8, 75264–75278.

- Corrêa, N. K., Galvão, C., Santos, J. W., Del Pino, C., Pinto, E. P., Barbosa, C., Massmann, D., Mambrini, R., Galvão, L., Terem, E., & de Oliveira, N. (2023). Worldwide AI ethics: A review of 200 guidelines and recommendations for AI governance. Patterns, 4(10), 100857.

- Crompton, H., & Burke, D. (2023). Artificial intelligence in higher education: The state of the field. International Journal of Educational Technology in Higher Education, 20, 22.

- Dilmegani, C. (2025). LLM latency benchmark by use cases in 2025. Research AImultiple.com. Available online: https://research.aimultiple.com/llm-latency-benchmark/ (accessed on 10 July 2025).

- DreamBox. (2024). Online math & reading programs for students—DreamBox by discovery education. Available online: https://www.dreambox.com/ (accessed on 23 July 2024).

- Dwivedi, R., Dave, D., Naik, H., Singhal, S., Omer, R., Patel, P., Qian, B., Wen, Z., Shah, T., Morgan, G., & Ranjan, R. (2023). Explainable AI (XAI): Core ideas, techniques, and solutions. ACM Computing Surveys, 55(9), 194.

- Egger, R., & Gokce, E. (2022). Natural language processing (NLP): An introduction. In R. Egger (Ed.), Applied data science in tourism (pp. 307–334). Springer.

- Ehsan, A., Abuhaliqa, M. A. M. E., Catal, C., & Mishra, D. (2022). RESTful API testing methodologies: Rationale, challenges, and solution directions. Applied Sciences, 12, 4369.

- European Commission. (2025). Persons with disabilities—Strategy and policy. Available online: https://commission.europa.eu/strategy-and-policy/policies/justice-and-fundamental-rights/disability/persons-disabilities_en (accessed on 21 July 2025).

- European Parliament & Council. (2016). Regulation (EU) 2016/679 (general data protection regulation). Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj/eng (accessed on 4 November 2024).

- European Union. (2019). Directive (EU) 2019/882 of the European Parliament and of the Council of 17 April 2019 on the accessibility requirements for products and services (European Accessibility Act). Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32019L0882 (accessed on 21 July 2025).

- Fleur, D. S., Bredeweg, B., & van den Bos, W. (2021). Metacognition: Ideas and insights from neuro- and educational sciences. npj Science of Learning, 6, 13.

- Gligorea, I., Cioca, M., Oancea, R., Gorski, A.-T., Gorski, H., & Tudorache, P. (2023). Adaptive learning using artificial intelligence in e-learning: A literature review. Education Sciences, 13, 1216.

- Google. (2025). ChromeVox screen reader extension. Available online: https://chromewebstore.google.com/detail/screen-reader/kgejglhpjiefppelpmljglcjbhoiplfn (accessed on 15 January 2025).

- Google Cloud. (2025). Text-to-speech documentation. Available online: https://cloud.google.com/text-to-speech (accessed on 12 April 2025).

- Google Developers. (2025a). Explore progressive web apps—Web.dev. Available online: https://web.dev/explore/progressive-web-apps (accessed on 11 January 2025).

- Google Developers. (2025b). Google Analytics developer documentation. Available online: https://developers.google.com/analytics (accessed on 18 April 2025).

- Google Developers. (2025c). Learn PWA: Service workers. Available online: https://web.dev/learn/pwa/service-workers (accessed on 16 April 2025).

- Google Developers. (2025d). OAuth 2.0 for web server applications. Available online: https://developers.google.com/identity/protocols/oauth2 (accessed on 19 March 2025).

- Hillaire, G., Iniesto, F., & Rienties, B. (2019). Humanising text-to-speech through emotional expression in online courses. Journal of Interactive Media in Education, 2019(1), 12.

- Huang, C., Zhang, Z., Mao, B., & Yao, X. (2023). An overview of artificial intelligence ethics. IEEE Transactions on Artificial Intelligence, 4(4), 799–819.

- Huber, S., Demetz, L., & Felderer, M. (2021). PWA vs the others: A comparative study on the UI energy-efficiency of progressive web apps. In Web engineering (ICWE 2021) (Lecture Notes in Computer Science 12706). Springer.

- Ingavelez-Guerra, P., Oton-Tortosa, S., Hilera-González, J., & Sánchez-Gordón, M. (2023). The use of accessibility metadata in e-learning environments: A systematic literature review. Universal Access in the Information Society, 22, 445–461.

- Jafarian, N. R., & Kramer, A.-W. (2025). AI-assisted audio-learning improves academic achievement through motivation and reading engagement. Computers and Education: Artificial Intelligence, 8, 100357.

- Jónsdóttir, A. A., Kang, Z., Sun, T., Mandal, S., & Kim, J.-E. (2023). The effects of language barriers and time constraints on online learning performance: An eye-tracking study. Human Factors, 65(5), 779–791.

- jQuery. (2025). The write less, do more, JavaScript library. Available online: https://jquery.com/ (accessed on 16 April 2025).

- Kasirzadeh, A., & Clifford, D. (2021, May 19–21). Fairness and data protection impact assessments. 2021 AAAI/ACM Conference on AI, Ethics, and Society (AIES’21) (pp. 146–153), Virtual Event, USA.

- Kazimzade, G., Patzer, Y., & Pinkwart, N. (2019). Artificial intelligence in education meets inclusive educational technology—The technical state-of-the-art and possible directions. In J. Knox, Y. Wang, & M. Gallagher (Eds.), Artificial intelligence and inclusive education (pp. 61–73). Springer.

- Khamzina, K., Stanczak, A., Brasselet, C., Desombre, C., Legrain, C., Rossi, S., & Guirimand, N. (2024). Designing effective pre-service teacher training in inclusive education: A narrative review of the effects of duration and content delivery mode on teachers’ attitudes toward inclusive education. Educational Psychology Review, 36, 13.

- Kirss, L., Säälik, Ü., Leijen, Ä., & Pedaste, M. (2021). School effectiveness in multilingual education: A review of success factors. Education Sciences, 11, 193.

- Klimova, B., Pikhart, M., Benites, A. D., Lehr, C., & Sanchez-Stockhammer, C. (2023). Neural machine translation in foreign language teaching and learning: A systematic review. Education and Information Technologies, 28, 663–682.

- Lakshmi, G. J., Surekha, T. L., Harathi, R., & Bhargavi, B. (2025). Developing an online/offline educational application to the students in rural areas. In Data science & exploration in artificial intelligence (CODE-AI 2024). CRC Press.

- Laravel. (2024). Laravel PHP framework. Available online: https://laravel.com/ (accessed on 18 December 2024).

- Laravel. (2025a). Concurrency—Laravel 12.x—The PHP framework for web artisans. Available online: https://laravel.com/docs/12.x/concurrency (accessed on 15 July 2025).

- Laravel. (2025b). Laravel hashing documentation. Available online: https://laravel.com/docs/12.x/hashing (accessed on 17 November 2024).

- Laravel. (2025c). Laravel horizon—Laravel 12.x—The PHP framework for web artisans. Available online: https://laravel.com/docs/12.x/horizon (accessed on 15 July 2025).

- Laravel. (2025d). Laravel octane—Laravel 12.x—The PHP framework for web artisans. Available online: https://laravel.com/docs/12.x/octane (accessed on 15 July 2025).

- Liew, T. W., Tan, S. M., Pang, W. M., Khan, M. T. I., & Kew, S. N. (2023). I am Alexa, your virtual tutor!: The effects of Amazon Alexa’s text-to-speech voice enthusiasm in a multimedia learning environment. Education and Information Technologies, 28, 1455–1489.

- Liu, J., Li, S., Ren, C., Lyu, Y., Xu, T., Wang, Z., & Chen, W. (2023). AI enhancements for linguistic e-learning systems. Applied Sciences, 13, 10758.

- MariaDB Foundation. (2025). MariaDB database. Available online: https://mariadb.org/ (accessed on 24 March 2025).

- Masapanta-Carrión, S., & Velázquez-Iturbide, J. A. (2018, February 21–24). A systematic review of the use of Bloom’s taxonomy in computer science education. 49th ACM Technical Symposium on Computer Science Education (SIGCSE’18), Baltimore, MD, USA.

- MDN Web Docs. (2025a). AJAX—MDN glossary. Available online: https://developer.mozilla.org/en-US/docs/Glossary/AJAX (accessed on 14 April 2025).

- MDN Web Docs. (2025b). Document Object Model (DOM)—MDN. Available online: https://developer.mozilla.org/en-US/docs/Web/API/Document_Object_Model (accessed on 18 April 2025).

- Metcalf, J., Moss, E., Watkins, E. A., Singh, R., & Elish, M. C. (2021, March 3–10). Algorithmic impact assessments and accountability: The co-construction of impacts. 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT’21) (pp. 735–746), Virtual Event, Canada.

- Momen, A., Ebrahimi, M., & Hassan, A. M. (2023). Importance and implications of theory of Bloom’s taxonomy in different fields of education. In M. A. Al-Sharafi, M. Al-Emran, M. N. Al-Kabi, & K. Shaalan (Eds.), Proceedings of the 2nd international conference on emerging technologies and intelligent systems (ICETIS 2022) (Lecture Notes in Networks and Systems). Springer.

- Moodle. (2025a). Accessibility plugins for Moodle. Available online: https://moodle.org/plugins/?q=accessibility (accessed on 15 April 2025).

- Moodle. (2025b). Accessibility with Moodle. Available online: https://moodle.com/accessibility/ (accessed on 15 July 2025).

- Moodle. (2025c). GDPR—MoodleDocs. Available online: https://docs.moodle.org/500/en/GDPR/ (accessed on 15 July 2025).

- Moodle. (2025d). Moodle app | Moodle downloads. Available online: https://download.moodle.org/mobile/ (accessed on 14 July 2025).

- Moodle. (2025e). Moodle is a learning platform or learning management system (LMS). Available online: https://moodle.org/ (accessed on 12 July 2025).

- Moodle. (2025f). Moodle plugins directory. Available online: https://moodle.org/plugins/ (accessed on 16 July 2025).

- Mosqueira-Rey, E., Hernández-Pereira, E., Alonso-Ríos, D., Bobes-Bascarán, J., & Fernández-Leal, Á. (2023). Human-in-the-loop machine learning: A state of the art. Artificial Intelligence Review, 56, 3005–3054.

- Mukherjee, D., & Hasan, K. K. (2022). Learning continuity in the realm of education 4.0: Higher education sector in the post-pandemic of COVID-19. In Future of work and business in COVID-19 era (Springer Proceedings in Business and Economics). Springer.

- Murtaza, M., Ahmed, Y., Shamsi, J. A., Sherwani, F., & Usman, M. (2022). AI-based personalized e-learning systems: Issues, challenges, and solutions. IEEE Access, 10, 81323–81342.

- Nasereddin, M., ALKhamaiseh, A., Qasaimeh, M., & Al-Qassas, R. (2021). A systematic review of detection and prevention techniques of SQL injection attacks. Information Security Journal: A Global Perspective, 32(4), 252–265.

- New Relic. (2025). New Relic application performance monitoring. Available online: https://newrelic.com/ (accessed on 8 March 2025).

- Niarman, A., Iswandi, & Candri, A. K. (2023). Comparative analysis of PHP frameworks for development of academic information system using load and stress testing. International Journal Software Engineering and Computer Science (IJSECS), 3, 424–436.

- Nugraha, D., Anjara, F., & Faizah, S. (2022). Comparison of web-based and PWA in online learning. In 5th FIRST T1-T2 2021 International Conference (FIRST-T1-T2 2021) (pp. 201–205). Atlantis Press.

- OpenAI. (2025). OpenAI platform documentation—Overview. Available online: https://platform.openai.com/docs/overview (accessed on 14 April 2025).

- Oracle Corporation. (2025). MySQL database. Available online: https://www.mysql.com/ (accessed on 16 April 2025).

- Recite Me. (2025). European Accessibility Act: What it means and what the fines are. Available online: https://reciteme.com/news/european-accessibility-act-fines/ (accessed on 21 July 2025).

- Reddy, V. M., Vaishnavi, T., & Kumar, K. P. (2023, July 19–21). Speech-to-text and text-to-speech recognition using deep learning. 2nd International Conference on Edge Computing and Applications (ICECAA) (pp. 657–666), Namakkal, India.

- Redis. (2025). Redis in-memory data store. Available online: https://redis.io/ (accessed on 11 April 2025).

- Sagi, S. (2025). Optimizing LLM inference: Metrics that matter for real time applications. Journal of Artificial Intelligence & Cloud Computing, 2025(4), 2–4.

- Sanchez-Gordon, S., Aguilar-Mayanquer, C., & Calle-Jimenez, T. (2021). Model for profiling users with disabilities on e-learning platforms. IEEE Access, 9, 74258–74274.

- Sasmoko, Indrianti, Y., Manalu, S. R., & Danaristo, J. (2024). Analyzing database optimization strategies in Laravel for an enhanced learning management. Procedia Computer Science, 245, 799–804. [Google Scholar] [CrossRef]

- SendGrid. (2025). SendGrid email delivery service. Available online: https://sendgrid.com/en-us (accessed on 15 April 2025).

- Shafique, R., Aljedaani, W., Rustam, F., Lee, E., Mehmood, A., & Choi, G. S. (2023). Role of artificial intelligence in online education: A systematic mapping study. IEEE Access, 11, 52570–52584. [Google Scholar] [CrossRef]

- Smart Sparrow. (2025a). Higher education|Smart sparrow. Available online: https://www.smartsparrow.com/solutions/highered/ (accessed on 14 July 2025).

- Smart Sparrow. (2025b). How to make your lessons accessible. Available online: https://www.smartsparrow.com/2018/11/09/how-to-make-your-lessons-accessible/ (accessed on 14 July 2025).

- Smart Sparrow. (2025c). Smart sparrow adaptive eLearning platform. Available online: https://www.smartsparrow.com/ (accessed on 14 February 2025).

- Smart Sparrow. (2025d). What is adaptive learning? Available online: https://www.smartsparrow.com/what-is-adaptive-learning/ (accessed on 14 July 2025).

- Sri Ram, M. S., Joy, E., & J, L. S. (2024, April 26–27). WebSight: An AI-based approach to enhance web accessibility for the visually impaired. International Conference on Science Technology Engineering and Management (ICSTEM) (pp. 1–7), Coimbatore, India. [Google Scholar] [CrossRef]

- Stelea, G. A., Sangeorzan, L., & Enache-David, N. (2025a). Accessible IoT dashboard design with AI-enhanced descriptions for visually impaired users. Future Internet, 17, 274. [Google Scholar] [CrossRef]

- Stelea, G. A., Sangeorzan, L., & Enache-David, N. (2025b). When cybersecurity meets accessibility: A holistic development architecture for inclusive cyber-secure web applications and websites. Future Internet, 17, 67. [Google Scholar] [CrossRef]

- Tailwind CSS. (2025). Rapidly build modern websites without ever leaving your HTML. Available online: https://tailwindcss.com/ (accessed on 10 April 2025).

- Tate, T., & Warschauer, M. (2022). Equity in online learning. Educational Psychologist, 57(3), 192–206.

- Timbi-Sisalima, C., Sánchez-Gordón, M., Hilera-González, J. R., & Otón-Tortosa, S. (2022). Quality assurance in e-learning: A proposal from accessibility to sustainability. Sustainability, 14, 3052.

- Tirfe, D., & Anand, V. K. (2022). A survey on trends of two-factor authentication. In H. K. D. Sarma, V. E. Balas, B. Bhuyan, & N. Dutta (Eds.), Contemporary issues in communication, cloud and big data analytics (Lecture Notes in Networks and Systems 281). Springer.

- Tiwary, T., & Mahapatra, R. P. (2022, November 11–12). Web accessibility challenges for disabled and generation of alt text for images in websites using artificial intelligence. 3rd International Conference on Issues and Challenges in Intelligent Computing Techniques (ICICT) (pp. 1–5), Ghaziabad, India.

- U.S. General Services Administration. (2025). Section 508 standards. Available online: https://www.section508.gov/ (accessed on 6 December 2024).

- Weamie, S. (2022). Cross-site scripting attacks and defensive techniques: A comprehensive survey. International Journal of Communications, Network and System Sciences, 15, 126–148.

- WebAIM. (2025). WAVE web accessibility evaluation tool. Available online: https://wave.webaim.org/ (accessed on 20 February 2025).

- World Wide Web Consortium (W3C). (2018). Web Content Accessibility Guidelines (WCAG) 2.1. Available online: https://www.w3.org/TR/WCAG21/ (accessed on 5 November 2024).

- World Wide Web Consortium (W3C). (2025a). ARIA in HTML. Available online: https://www.w3.org/TR/html-aria/ (accessed on 15 March 2025).

- World Wide Web Consortium (W3C). (2025b). WAI-ARIA overview. Available online: https://www.w3.org/WAI/standards-guidelines/aria/ (accessed on 26 March 2025).

- World Wide Web Consortium (W3C). (2025c). WCAG 2 Overview | Web Accessibility Initiative (WAI). Available online: https://www.w3.org/WAI/standards-guidelines/wcag/ (accessed on 21 April 2025).

- Wray, E., Sharma, U., & Subban, P. (2022). Factors influencing teacher self-efficacy for inclusive education: A systematic literature review. Teaching and Teacher Education, 117, 103800.

- Yamani, A., Bajbaa, K., & Aljunaid, R. (2022, December 4–6). Web application security threats and mitigation strategies when using cloud computing as backend. 14th International Conference on Computational Intelligence and Communication Networks (CICN 2022) (pp. 811–818), Al-Khobar, Saudi Arabia.

- Yao, Y., Duan, J., Xu, K., Cai, Y., Sun, Z., & Zhang, Y. (2024). A survey on large language model (LLM) security and privacy: The good, the bad, and the ugly. High-Confidence Computing, 4(2), 100211.

- Yenduri, G., Ramalingam, M., Selvi, G. C., Supriya, Y., Srivastava, G., & Maddikunta, P. K. R. (2024). GPT (generative pre-trained transformer)—A comprehensive review on enabling technologies, potential applications, emerging challenges, and future directions. IEEE Access, 12, 54608–54649.

| Feature | AccessiLearnAI | Moodle | Smart Sparrow |
|---|---|---|---|
| Accessibility-Driven Content Structuring | Designed accessibility-first: automatically structures content with semantic HTML5 elements and ARIA roles from the outset, so screen readers can navigate course materials easily. AI tools add alt text and proper headings by default, with a human-in-the-loop step for quality control, so accessibility is built into content creation rather than added as an afterthought. | Emphasizes compliance: Moodle’s interface and authoring tools are built to meet WCAG 2.1 standards (Moodle, 2025a). Core activities are accessible and perceivable, but detailed content structuring is left to instructors. Accessibility plugins can check for issues such as missing alt text, but the platform does not automatically optimize semantic structure or add ARIA labels; authors must implement those best practices themselves. | Supports accessible design through guidance: the platform allows lessons to be created to meet WCAG 2.0 and Section 508 standards (Smart Sparrow, 2025b). It provides checklists and guidelines but does not auto-generate accessibility markup. Achieving structured, assistive-technology-friendly content depends on the course creator applying the provided guidelines during development (Smart Sparrow, 2025c). |
| AI-Based Personalization & Adaptive Learning | Unified AI adaptivity: integrates multiple AI-driven features (multi-level text summarization, automatic image alt text, real-time TTS, and on-demand translation) to tailor content to each learner. The system dynamically adjusts material complexity and presentation based on learners’ needs and feedback, providing a highly personalized experience within one platform, guided by AI while remaining under teacher oversight. | Rule-based personalization, emerging AI: Moodle allows some personalization through conditional activities and learning analytics, but it mostly presents the same content to all learners unless teachers manually branch lessons. It lacks built-in AI adaptation (e.g., no automatic adjustment of reading level or difficulty based on performance); adaptivity in Moodle comes from plugins or instructor-defined rules (Moodle, 2025a). | Adaptive by design: adaptive learning is Smart Sparrow’s core focus. It employs algorithms and instructor-set rules to continuously adjust content sequencing and difficulty based on student performance. The platform can provide just-in-time feedback, alternate pathways, or more challenging tasks depending on how a learner responds to questions (Smart Sparrow, 2025d). |
| Semantic Enhancement & Offline Accessibility | PWA-enabled offline learning: AccessiLearnAI leverages Progressive Web App technology so that content (including AI-generated summaries, translations, and audio) is available offline. Without an internet connection, cached lessons retain their semantic structure and accessibility features, so learners can still use screen readers and navigate content. Updates (e.g., new alt text or teacher notes) sync automatically when the connection is restored, providing continuous learning for low-bandwidth users. | Mobile app for offline use: Moodle itself is a web LMS (not inherently a PWA), but it offers an official mobile app that supports offline access, so students can use course content offline. This helps ensure that learners with limited connectivity are not left behind, although some interactive or external content may still require an internet connection. The web interface requires an active connection; offline use is primarily via the app rather than the browser (Moodle, 2025c). | Primarily online: Smart Sparrow does not provide a dedicated offline mode; it is intended for use with an active internet connection to deliver interactive, adaptive content. Lessons are accessed via the web, and the platform’s real-time analytics and feedback loop presume connectivity, so students and instructors need to be online to fully use the adaptive learning experiences (Smart Sparrow, 2025c). |
| Ethical Data Handling & Privacy Compliance | Privacy-by-design: the platform incorporates strong data protection aligned with the GDPR and other privacy regulations. Student data is kept secure and minimal: personal information that is not needed is either not stored or is encrypted, leveraging Laravel’s security features. AccessiLearnAI also emphasizes transparency and ethics in AI: it uses explainable AI feedback and human review of AI outputs to guard against bias, and it clearly informs users about AI-generated content. This ethical framework makes adaptations not only effective but also accountable and privacy-respecting. | Institution-controlled privacy: Moodle lets institutions self-host and control their data. The Moodle project has built-in privacy features (e.g., a GDPR compliance toolkit) to assist in handling user consent and data requests (Moodle, 2025d). Moodle’s approach to AI is human-centered and transparent (guided by its AI ethics principles), but most data handling practices (retention, consent, etc.) are configured by the administering institution, reflecting Moodle’s role as a platform provider (Moodle, 2025e). | Standard compliance, less emphasis on AI ethics: as a commercial e-learning service, Smart Sparrow enables institutions to integrate it with their LMS and follow standard data protection protocols, although it does not publicly highlight specialized privacy features. Unlike AccessiLearnAI’s explicit human-in-the-loop AI checks, Smart Sparrow does not provide insight into its algorithmic decisions, operating as a “black box” adaptive engine from the user’s perspective (Smart Sparrow, 2025d). |
| Inclusive Education Support | Universal design for learning: AccessiLearnAI was created to maximize inclusion by combining accessibility and personalization. It supports multimodal content delivery (text, audio, and visuals with captions/alt text) and on-the-fly adjustments (such as simplifying complex text or providing translations) to accommodate different needs. By addressing language barriers and disability accommodations in one system, it promotes equitable access. | Widely used but no automatic personalization: instructors can upload materials in multiple formats and enable features to support students with different needs. However, Moodle does not automatically personalize content for individual learners; the level of inclusivity depends on how educators use its features. Support comes through community-contributed plugins and good course design rather than inherent adaptivity (Moodle, 2025f). | Instructor-enabled adaptivity, not automatic: Smart Sparrow’s adaptivity can make learning more inclusive by addressing individual learner needs. The platform can thus support inclusive education by empowering instructors to create differentiated experiences, although it does not inherently provide features such as automatic text simplification or multilingual translation (the instructor must anticipate and build those variants). |
| Summarization & Content Transformation | Built-in AI content transformation: AccessiLearnAI can instantly transform content into more accessible forms. Users can request an on-demand summary of any lesson or section, with the AI generating concise or detailed versions as needed. Similarly, the platform can translate content segments into other languages and produce text-to-speech audio, broadening access for non-native readers and those who prefer listening. Images without descriptions receive AI-generated alt text, and reading level can also be adjusted, all within the platform. Teachers and students can toggle between original and AI-enhanced content without leaving the learning environment. | Manual and plugin-based transformation: Moodle does not natively offer AI summarization or automatic content simplification. Any summaries or alternative formats must be created by the teacher or added via external tools. For instance, an instructor might provide a summary of a reading, upload caption files for videos, or use a third-party plugin for text-to-speech, but Moodle itself treats these as additional content rather than something it generates. While Moodle supports a variety of resources (PDFs, e-books, etc.), it relies on human effort or plugins, rather than automatic processes, to transform content into different forms (Moodle, 2025b). | No automatic summarization features: Smart Sparrow focuses on adaptivity and interactivity and does not include tools to algorithmically summarize or translate content. The platform expects course content to be crafted in advance; any needed simplifications or alternative explanations are built into the lesson by the designer. It does not, for example, generate a summary of a text or translate a lesson into another language on the fly; instead, it concentrates on guiding learners through authored content variants and giving feedback rather than rewriting or reformatting learning materials automatically (Smart Sparrow, 2025d). |
| User Experience & Ease of Use | Intuitive and assistive UI: the interface is designed to be clean and straightforward, with an emphasis on accessibility and simplicity. Key AI functions are exposed via one-click buttons (“Summarize”, “Translate”, “Listen”) directly in the lesson UI, so users at all levels of technical proficiency can access them easily. Because the platform is a responsive PWA, the experience is consistent and fast on both desktop and mobile, including for users of assistive technology or low-end devices. By unifying features in a single system, it avoids the patchwork feel of plugins; navigation and controls are cohesive. | Feature-rich, improved over time: Moodle is known for its comprehensive features (Moodle, 2025b) and is considered reasonably user-friendly for most educators and learners, but new users can find it overwhelming at first, given its vast array of options and settings. Some users note that the interface can feel complex without customization (although many themes exist to improve it). In practice, with minimal training, most users find it easy to navigate courses, and the extensive community documentation helps mitigate usability issues (Al-Fraihat et al., 2025). | Empowering but requires design effort: Smart Sparrow provides a robust, graphical authoring environment intended to be relatively easy for instructors to use without programming. It offers editable templates and a library of content components that facilitate the creation of interactive, visually rich lessons (Smart Sparrow, 2025d). Smart Sparrow aims for a balance in which instructors are empowered to shape the student experience: the platform provides the means, but ease of use ultimately depends on the instructor’s skill and effort. |
| Scalability & Technical Framework | Scalable modern architecture: AccessiLearnAI is built on the Laravel PHP framework (MVC pattern) with a microservices-oriented backend. This modular design means that different services (AI processing, content management, etc.) can scale independently and be updated without disrupting the rest of the system. The platform supports multi-tenancy (multiple institutions or courses on the same system) and uses caching and job queues to handle high loads efficiently. | Proven at large scale: Moodle uses a monolithic PHP architecture with a modular plugin system. Although not microservices-based, it can run in clustered environments and is highly configurable for performance. The trade-off is that adopting very new technology (such as certain AI features) can be slower because of legacy support, but numerous APIs and plugin points exist to extend Moodle’s functionality within its stable core framework (Moodle, 2025d). | Cloud service with enterprise adoption: Smart Sparrow’s platform is delivered via cloud infrastructure; exact technical details are proprietary, but it is built to integrate with other systems for wide deployment (Smart Sparrow, 2025b). However, Smart Sparrow often complemented an existing LMS rather than replacing it entirely, so it typically scaled within specific courses or modules rather than as a single system running an entire university’s operations. |
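The accessibility-first structuring described in the first row of the table can be illustrated with a minimal Python sketch. It assumes a simplified, hypothetical lesson model (`Block`, with `heading`, `paragraph`, and `image` kinds) and a caller-supplied `generate_alt_text` function standing in for an AI captioning service; neither is taken from AccessiLearnAI's code. Each heading opens a `<section>` labelled via `aria-labelledby`, and any image lacking author-written alt text receives a generated description and is added to a review list, mirroring the human-in-the-loop step in which teachers confirm or correct AI output.

```python
from dataclasses import dataclass, field
from html import escape
from typing import Callable, Optional


@dataclass
class Block:
    """One piece of lesson content (hypothetical model, not the paper's schema)."""
    kind: str                  # "heading", "paragraph" or "image"
    text: str = ""             # heading/paragraph text, or image caption
    src: str = ""              # image URL
    alt: Optional[str] = None  # author-supplied alt text, if any


@dataclass
class StructuredLesson:
    html: str
    # Image sources whose alt text was machine-generated and awaits teacher review.
    needs_review: list = field(default_factory=list)


def structure_lesson(title: str, blocks: list,
                     generate_alt_text: Callable[[str], str]) -> StructuredLesson:
    """Wrap lesson blocks in semantic HTML5 with ARIA labelling.

    Each heading opens a <section> labelled by that heading's id, so screen
    readers can list and jump between sections. Images without alt text get a
    description from generate_alt_text (an assumed AI hook) and are flagged.
    """
    parts = [f'<article aria-labelledby="lesson-title">'
             f'<h1 id="lesson-title">{escape(title)}</h1>']
    needs_review = []
    section_open = False
    n = 0
    for block in blocks:
        if block.kind == "heading":
            if section_open:
                parts.append("</section>")  # close the previous section first
            n += 1
            parts.append(f'<section aria-labelledby="sec-{n}">'
                         f'<h2 id="sec-{n}">{escape(block.text)}</h2>')
            section_open = True
        elif block.kind == "paragraph":
            parts.append(f"<p>{escape(block.text)}</p>")
        elif block.kind == "image":
            alt = block.alt
            if not alt:
                alt = generate_alt_text(block.src)  # AI draft, pending human check
                needs_review.append(block.src)
            caption = f"<figcaption>{escape(block.text)}</figcaption>" if block.text else ""
            parts.append(f'<figure><img src="{escape(block.src)}" '
                         f'alt="{escape(alt)}">{caption}</figure>')
        else:
            raise ValueError(f"unknown block kind: {block.kind!r}")
    if section_open:
        parts.append("</section>")
    parts.append("</article>")
    return StructuredLesson("".join(parts), needs_review)
```

For example, `structure_lesson("Photosynthesis", [Block("heading", "Overview"), Block("image", src="leaf.png")], generate_alt_text=lambda src: "Leaf diagram")` returns a `StructuredLesson` whose `needs_review` list is `["leaf.png"]`, signalling that the generated description should be checked by a teacher before publication. Escaping all text with `html.escape` keeps author content from breaking the generated markup.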
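The offline behaviour in the third row of the table (updates such as new alt text or teacher notes syncing once the connection returns) is commonly implemented with an outbox pattern. The sketch below is an assumption for illustration, written in Python for readability; in a real PWA this logic would run in the browser (for instance in a service worker, optionally triggered by the Background Sync API where supported). The `OfflineOutbox` class and its `send` callback are hypothetical names. Newer edits to the same field replace older ones, and flushing stops at the first failed send so the remaining updates stay queued in their original order.

```python
from collections import OrderedDict
from typing import Callable, Tuple


class OfflineOutbox:
    """Queue of updates recorded while the client is offline (hypothetical sketch).

    Updates are keyed by (resource_id, field); a newer edit to the same key
    replaces the older one, so only the latest value is sent on reconnect.
    """

    def __init__(self) -> None:
        self._pending: "OrderedDict[Tuple[str, str], str]" = OrderedDict()

    def record(self, resource_id: str, field: str, value: str) -> None:
        key = (resource_id, field)
        self._pending.pop(key, None)  # drop the stale edit so order reflects the latest write
        self._pending[key] = value

    def __len__(self) -> int:
        return len(self._pending)

    def flush(self, send: Callable[[str, str, str], bool]) -> int:
        """Send pending updates oldest-first; stop at the first failure.

        `send` returns True when the server accepted the update. Returns the
        number of updates delivered; undelivered ones remain queued.
        """
        sent = 0
        while self._pending:
            (resource_id, field), value = next(iter(self._pending.items()))
            if not send(resource_id, field, value):
                break  # still offline or server error: retry on the next reconnect
            self._pending.popitem(last=False)  # remove the delivered (oldest) entry
            sent += 1
        return sent
```

Stopping at the first failure, rather than skipping ahead, keeps later edits from overtaking earlier ones on the server; the trade-off is that a single persistently failing update blocks the queue until it succeeds or is removed.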

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Stelea, G.A.; Robu, D.; Sandu, F. AccessiLearnAI: An Accessibility-First, AI-Powered E-Learning Platform for Inclusive Education. Educ. Sci. 2025, 15, 1125. https://doi.org/10.3390/educsci15091125

Stelea GA, Robu D, Sandu F. AccessiLearnAI: An Accessibility-First, AI-Powered E-Learning Platform for Inclusive Education. Education Sciences. 2025; 15(9):1125. https://doi.org/10.3390/educsci15091125

Chicago/Turabian Style: Stelea, George Alex, Dan Robu, and Florin Sandu. 2025. "AccessiLearnAI: An Accessibility-First, AI-Powered E-Learning Platform for Inclusive Education" Education Sciences 15, no. 9: 1125. https://doi.org/10.3390/educsci15091125

APA Style: Stelea, G. A., Robu, D., & Sandu, F. (2025). AccessiLearnAI: An Accessibility-First, AI-Powered E-Learning Platform for Inclusive Education. Education Sciences, 15(9), 1125. https://doi.org/10.3390/educsci15091125