Abstract

Technology has always represented the key to human progress. It is believed that the use of supportive technological mediators can facilitate teaching/learning processes and enable everyone to learn how to manage technology critically, without being its slave or a passive user, while contributing to collective well-being. Educational robotics is a new frontier for learning that can offer numerous benefits to students. The use of robots can make it possible to create inclusive educational settings in which all students, regardless of their abilities or disabilities, can participate meaningfully. This article analyzes the evidence obtained from a systematic literature review on educational robotics in general and on social robotics for emotion recognition. Finally, as a practical implementation of an educational robotic intervention on emotion recognition, it presents the “Emorobot Project”, part of the EU-funded “Ecosystem of Innovation—Technopole of Rome” Project under NextGenerationEU. The project aims to foster the development of social skills in children with autism spectrum disorders through the creation of an open-source social robot that can recognize emotions. It is intended to provide teachers with a supportive tool that allows them to design individual activities and later extend them to classmates. An educational robot can act as a social mediator and a playmate during the learning phase, helping students develop social skills, build peer connections, reduce social isolation (one of the main difficulties associated with this disorder) and strengthen motivation and the acquisition of interpersonal skills through interaction and imitation. This can help ensure that all students have access to quality education and that no one is left behind.

1. Introduction

Technology has always represented the key to human progress. It comprises the body of knowledge and practices involved in applying skills and expertise to solve problems, develop new tools or improve processes, and it is closely related to the creation and use of tools, devices and systems for practical purposes. The term technology comes from the Greek τεχνολογία, composed of “Téchnē” meaning “art” or “skill” and “Logía” meaning “study” or “discourse”, literally indicating the “study of art or skill.” It has always entailed research and theoretical reworking of the rules, knowledge and products of human ingenuity for practical problem-solving purposes, and artifacts are “amplifiers […] of action (hammers, levers, pickaxes, wheels) […], of the senses […] from smoke and greeting signals, to diagrams and figures that stop action or microscopes that enlarge it […], of thought, that is, of ways of thinking that employ language and explanation formation, and later use languages such as mathematics and logic” [1]. From this perspective, the concept of technology, originally used to describe manual arts and techniques, has, with the passage of time and the evolution of humankind, taken on an increasingly broad meaning, ending up describing, through a dynamic and flexible process, any development or discovery that enables problem-solving, improves living conditions or meets human needs. In education, as Calvani [2] argues, “if we can show that technologies contribute to improving some aspect of the school context and life, without any counterproductive effects on learning, it would make poor sense to oppose their use”.

Over the past few decades in particular, various countries have launched numerous initiatives to reform their education systems in order to prepare future generations for the challenges they will face in different contexts and throughout their lives. Examples include the 2030 Agenda for Sustainable Development [3], adopted by the United Nations General Assembly and signed by the governments of its 193 member states, whose 17 goals include inclusive and quality education, and the National Plan for Digital Education (PNSD) [4], a strategic document drafted within the regulatory framework of Law No. 107 of 2015 (known as “The Good School”), whose long-term goals are the innovation of the Italian school system and, more generally, the promotion of opportunities linked to the country’s digitization process. In this context, robots have taken on an increasingly important role in industrial production and public services, helping to improve people’s quality of life in many ways, such as providing assistance, enabling new products and services, improving efficiency and automating repetitive or dangerous tasks.

It is believed that the use of supportive technological mediators can also facilitate teaching/learning processes and enable everyone to learn how to critically manage technology without being its slave or passive user while contributing to the collective well-being. Educational robotics is a new frontier for learning that can offer numerous benefits to students. It is an educational approach that is set to grow and become more widespread in the next few years.

2. Research Method

In the research conducted, we adopted the systematic literature review as a method to examine the current state of knowledge in our field of interest. We chose this approach to ensure transparency and rigor in the review process, thus enabling the study to be replicable. The steps followed include (1) formulation of the search questions, (2) identification of keywords and development of the search string, (3) establishment of inclusion and exclusion criteria, (4) search engine selection, (5) data extraction, (6) final selection of articles, and (7) summary of key findings.

Our approach follows the book “Systematic Reviews in the Social Sciences: A Practical Guide” by Mark Petticrew and Helen Roberts [5]. As the authors explain in detail, a systematic literature review aims to identify areas of uncertainty and to provide a scientific synthesis of evidence in order to test hypotheses or answer specific questions in any subject area; it is particularly effective for building an overview from the analysis of primary studies, which can guide future research and promote the development of new methodological approaches. We reported the selection process using PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses), entering in the corresponding identification fields the number of records found, the number judged relevant after screening and, finally, the total number of studies selected for analysis.

Starting from a general study on the use and effectiveness of robotics in the educational field, our research subsequently focused on robots that promote and encourage social skills in children with autism spectrum disorders (ASDs), with particular attention to emotion recognition.

The initial research questions we asked ourselves were as follows: What are the advantages and/or disadvantages of using robotics in education? In which fields does it have the most influence? How can the use of robotics promote skill development? How can robots be used to promote inclusive learning and educational accessibility? What are the effects of interactions with educational robots on children with autism spectrum disorder? How can educational robotics foster the development of social skills in children with autism? What types of robots are most effective in engaging children with autism in educational activities? How can the use of educational robotics foster communication and social interaction in children with autism spectrum disorder?

Based on these questions, we grouped and formulated the following search strings for querying the databases: “educational robots” and “advantages and disadvantages”, “social robots” and “ASD”, and “ASD” and “emotion recognition.” We used three bibliographic search engines, Google Scholar, Scopus and Web of Science, which allow searching by both title and topic. Inclusion and exclusion criteria were defined by date (2000 to present), language (English, Italian) and type of publication (journal articles or conference proceedings).
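To make the query and filtering step concrete, the following Python sketch composes the three search strings and applies the date, language and publication-type criteria to a single record. It is an illustration under assumptions, not the procedure actually run: the Boolean AND syntax, the `Record` fields and the publication-type labels are hypothetical, and each real search engine has its own query syntax and export format.

```python
from dataclasses import dataclass

# The three search-string pairs from the text. Joining them with AND is an
# assumption; Google Scholar, Scopus and Web of Science each use their own syntax.
SEARCH_PAIRS = [
    ("educational robots", "advantages and disadvantages"),
    ("social robots", "ASD"),
    ("ASD", "emotion recognition"),
]


def build_query(terms):
    """Quote each phrase and join the phrases with a Boolean AND."""
    return " AND ".join(f'"{t}"' for t in terms)


@dataclass
class Record:
    title: str
    year: int
    language: str
    pub_type: str  # hypothetical labels such as "journal", "conference", "book"


ALLOWED_LANGUAGES = {"English", "Italian"}
ALLOWED_TYPES = {"journal", "conference"}


def meets_criteria(rec, min_year=2000):
    """Apply the date (2000 onward), language and publication-type criteria."""
    return (
        rec.year >= min_year
        and rec.language in ALLOWED_LANGUAGES
        and rec.pub_type in ALLOWED_TYPES
    )


queries = [build_query(pair) for pair in SEARCH_PAIRS]
```

For example, `queries[0]` is `"educational robots" AND "advantages and disadvantages"`, and a 1998 journal article would be rejected by `meets_criteria` on the date criterion alone.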

The article selection process was carried out manually in two separate steps: selection by title and selection by abstract. Each article was evaluated for its relevance to the inclusion criteria on a three-point scale (0, not relevant; 1, partially relevant; 2, certainly relevant), and the ratings were combined into a total score used for selection (below 4, excluded; from 4 to 7, further screening; 8, included). The search yielded a total of 529 articles (213 from Google Scholar, 151 from Scopus and 165 from Web of Science), which were reduced to 331 after the removal of duplicates. A summary of the results obtained is presented in the next three sections of this contribution.
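The selection arithmetic can be sketched as follows. The text does not state how the 0–2 ratings were combined; because the maximum score is 8, this sketch assumes that four ratings are summed (for example, two reviewers each rating title and abstract). The title-based duplicate check is likewise an assumed rule. Only the per-engine counts and the post-deduplication total come from the text.

```python
def total_score(ratings):
    """Sum four 0-2 relevance ratings (assumed: 2 reviewers x title/abstract)."""
    if len(ratings) != 4 or any(r not in (0, 1, 2) for r in ratings):
        raise ValueError("expected four ratings, each 0, 1 or 2")
    return sum(ratings)


def decision(score):
    """Map a total score (0-8) to the thresholds given in the text."""
    if score < 4:
        return "excluded"
    if score <= 7:
        return "further screening"
    return "included"  # only a perfect score of 8 reaches this branch


def deduplicate(titles):
    """Keep the first record for each normalized title (assumed matching rule)."""
    seen, unique = set(), []
    for title in titles:
        key = " ".join(title.lower().split())  # case- and whitespace-insensitive
        if key not in seen:
            seen.add(key)
            unique.append(title)
    return unique


# Counts reported in the text
per_engine = {"Google Scholar": 213, "Scopus": 151, "Web of Science": 165}
identified = sum(per_engine.values())            # 529 records identified
after_dedup = 331                                # records left after deduplication
duplicates_removed = identified - after_dedup    # 198 duplicates
```

The derived figure of 198 duplicates is simply 529 minus 331 and is the number that would appear in the "duplicates removed" box of a PRISMA flow diagram.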

3. Learning with Educational Robotics

In education, robotics is an emerging field that combines engineering, science, mathematics and technology to provide a practical and engaging approach to learning. It gives students the opportunity to apply skills learned in the classroom in a hands-on setting, thus promoting a deeper understanding of concepts. Robots can be used in different disciplines and at different school levels and grades, depending on the age and needs of the students. They can help students learn complex computer science concepts, mathematics, one or more foreign languages, and social and interpersonal skills in a more active and engaging manner.

Educational robotics offers many benefits. First, it makes learning fun and immersive: students are naturally attracted to technology and robots, so using robotics in the classroom can increase motivation and interest in learning. Second, it promotes active learning. Students are not simply onlookers but are actively involved in the learning process. They can design, build and program their robots to perform certain tasks; they have to solve problems to build or program a robot; they have to think critically to understand how the robot should function; and they have to collaborate in groups and communicate to share ideas. All of this requires the development of soft skills such as critical thinking, creativity, collaboration and problem-solving, which are fundamental to educational, personal and professional success.

Some evidence shows that the use of robotics in education has a positive impact on students’ behavior and development. Nevertheless, the idea that introducing robots into the classroom automatically improves the learning of new skills and abilities has been the subject of considerable research in the international literature, which highlights a number of important considerations. First, the mere use of robots in an educational setting does not in itself guarantee positive learning outcomes; indeed, several studies [6,7] have shown that educational robotics activities may even worsen some learning indicators if appropriate pedagogical strategies are not adopted. Moreover, the positive effects of educational robotics occur only under specific conditions: educational success depends on factors such as the design of the activities, the quality of the robots used and the teacher’s guidance during the learning process. In particular, promoting metacognitive skills, that is, the ability to reflect on one’s own learning and become more aware of one’s own study strategies, requires teachers to adopt specific pedagogical approaches. These may include planning activities that require students to self-assess, monitor their own progress and adapt their learning strategies to their own needs.

An analysis of the scientific literature on the topic (for a review, see Wang et al. [8]) shows that the application of educational robots is consistent with several contemporary learning theories, such as active learning principles, social constructivism and Papert’s constructionism. As Castro et al. [9] point out, studies on the use of educational robotics in schools commonly examine the impact of educational robotics activities on STEM (Science, Technology, Engineering and Mathematics) areas, with a particular focus on robot design and building [10,11,12,13,14], while other research has explored its use as an assistive device in cases of motor or social difficulties and as a tool for inclusion [15,16,17,18,19].

Educational robots differ significantly from their industrial or service counterparts. They are specifically designed to be handled in complete safety, even by very young children, making learning fun and instructive. They are characterized by some important features:

- Advanced sensorization: Educational robots are equipped with a variety of sensors, including proximity, touch, sound, light, and acceleration sensors. These sensors enable robots to sense their environment and react appropriately to external stimuli, opening up new possibilities for interactive learning.

- Variations in shape: Educational robots can take a variety of forms, from wheeled vehicles to more complex shapes, such as insects, animals, or humanoid forms. This variety allows students to explore and understand design and engineering concepts in a hands-on manner.

- Programmability: These robots can be programmed by students through procedural or event-based programming languages. This process allows students to define the behavior of the robot in response to sensory input. Visual programming languages such as Scratch or Blockly simplify the creation of syntactically correct programs, allowing students to focus on logic and computational thinking.

- Accessibility for all ages: Some educational robots are also suitable for elementary school students or younger. These tools offer a gradual on-ramp to learning programming and engineering, adapting to different age groups and skills.

- Push-button programmable robots: Some robots, such as Bee-Bot and Blue-Bot, can be programmed by simply pressing buttons on their chassis. These systems provide a hands-on experience for younger children, introducing them to the concept of sequences of commands.

- Modular robotics: Systems such as littleBits and Cubelets allow students to build robots in a modular manner by assembling various components and linking them together. This approach promotes creativity and learning through exploration.

- Use of Arduino and Raspberry Pi boards: Some teaching activities involve building robots using Arduino or Raspberry Pi development boards. These projects may be more technical, but they offer a wide range of possibilities for advanced learning.
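Push-button sequencing of the kind described above can be illustrated with a small simulator. This Python sketch assumes a simplified Bee-Bot-like command set (F moves forward one grid cell, B moves backward one cell, L and R turn 90 degrees in place); the function name and command letters are hypothetical and do not reproduce any vendor's firmware.

```python
# Headings in clockwise order: north, east, south, west (as grid offsets)
HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def run_program(commands, start=(0, 0), heading=0):
    """Execute a button sequence; return the final cell and the cells visited.

    F = forward one cell, B = backward one cell,
    L / R = turn 90 degrees left / right without moving.
    """
    x, y = start
    path = [(x, y)]
    for cmd in commands:
        dx, dy = HEADINGS[heading]
        if cmd == "F":
            x, y = x + dx, y + dy
        elif cmd == "B":
            x, y = x - dx, y - dy
        elif cmd == "L":
            heading = (heading - 1) % 4
        elif cmd == "R":
            heading = (heading + 1) % 4
        else:
            raise ValueError(f"unknown button: {cmd!r}")
        if cmd in ("F", "B"):
            path.append((x, y))  # record only moves, not turns
    return (x, y), path
```

For instance, the sequence "FFRF" takes the robot two cells north, turns it to face east and moves it one more cell, ending at (1, 2). Stepping through such a trace by hand is exactly the kind of sequence reasoning these robots are meant to elicit in young children.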

In summary, the use of robots in the classroom can be a precious opportunity to improve student learning, but it is not a panacea. A holistic approach including an appropriate activity design, the informed use of robots, and active involvement by teachers is needed to maximize the benefits.

Furthermore, it is important to emphasize that the final goal should be the enhancement of each student’s learning experience. This perspective recognizes the importance of valuing each individual, that is, allowing the full development of their specific abilities and potential. To achieve this goal, it is essential to adopt processes of individualization and personalization of instruction. The personalization of education means adapting learning to the specific needs of individuals, taking into consideration their specificities, learning styles, interests and abilities. Personalization recognizes that each student is unique and requires a customized approach.

Robot use can offer the possibility of establishing inclusive educational settings in which all students, regardless of ability or disability, can participate meaningfully. Inclusion is not only about physical access but also about access to meaningful learning opportunities and full involvement in the school community. This promotes equality and diversity within the educational environment and can be a valuable guideline to direct teachers’ good teaching practices toward a more equal, inclusive, and person-centered approach.

4. Social Robots and Emotion Recognition

Emotions are an essential part of each individual’s life and a key part of our personality. Recognizing, accepting and fully living our emotions, and learning to engage in dialogue with ourselves to manage and change our negative thoughts, can make us stronger, more resilient and more aware of the value of others. Emotion education usually takes place in the family, but in close cooperation with the school. Indeed, school plays a key role in the emotion education of children and teenagers, as it is a social environment that promotes the development of social and emotional skills.

For the past few decades, social robots have been entering educational settings, opening up new and promising prospects for change in education [20]. These robots, equipped with artificial intelligence and the ability to interact with humans, are becoming increasingly common tools in schools and educational institutions around the world. Social robots are machines that combine artificial intelligence, sensors and communication skills to interact naturally with humans. They are designed to recognize emotions, respond to non-verbal cues and establish relationships with people. This capacity for social interaction distinguishes them from traditional industrial or automated robots.

The history of social robots began in the 1990s with the creation of robots such as “Kismet” and “Cog” at the Massachusetts Institute of Technology (MIT). Since then, the technology has evolved continuously, leading to increasingly sophisticated robots such as SoftBank Robotics’ “Pepper” [21] and Aldebaran Robotics’ “NAO” [22]. These robots are deployed in a variety of fields, including education; they can identify faces and the main human emotions and are designed to interact with humans as naturally as possible through dialogue and touch screens.

How do they work in practice?

These robots use a combination of sensors, artificial intelligence and software to interpret people’s emotional cues. More specifically, they rely on sensors such as the following:

- Cameras: Robots can be equipped with cameras that capture people’s facial expressions. These cameras can detect facial muscle movements and changes in facial appearance.

- Microphones: These enable the robot to detect tone of voice, speech rate and intonation, which provide clues to a person’s emotional state.

- Touch sensors: Some robots are equipped with tactile sensors that can detect physical contact, such as a caress or a handshake, and use it to understand the related emotions.

- Motion sensors: Gyroscopes and accelerometers can detect body movements and postures, which can reflect emotional states.

After detecting these emotional cues, the robot processes the data, classifies them and responds appropriately:

- The data collected from the sensors are processed by an artificial intelligence system, which may be based on artificial neural networks or machine learning algorithms. This software analyzes the data to recognize patterns of facial expressions, voice tones, gestures and movements related to specific emotions, such as happiness, sadness, anger, surprise, fear, etc.

- After analyzing the data, the robot classifies emotions into specific categories; e.g., it might determine whether a person is happy, sad, angry or neutral. Some emotion recognition systems use reference patterns of facial expressions, such as the “Facial Action Coding System” (FACS), which identifies combinations of muscle movements associated with specific emotions.

- Once the emotion is recognized, the robot can react appropriately, e.g., it can adapt its behavior, communication, or response to the emotional needs of the interlocutor. This is because social robots are designed to interact empathically and adaptively, seeking to establish positive relationships with humans.
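The classification and response steps can be sketched with a rule-based matcher over FACS action units (AUs). This is a deliberately simple stand-in for the neural-network or machine-learning classifiers mentioned above, not a description of any particular robot: it assumes that an upstream face tracker already returns the set of active AUs, uses simplified AU prototypes of the kind often cited for the basic emotions (intensity and laterality codes dropped), and maps each category to a hypothetical robot reaction. The 0.5 similarity threshold is also an assumption.

```python
# Simplified AU prototypes (intensity codes dropped); illustrative only.
PROTOTYPES = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
    "disgust": {9, 15, 16},
}

# Hypothetical robot reactions for each recognized category.
RESPONSES = {
    "happiness": "smile back and propose the next game",
    "sadness": "slow down and ask a comforting question",
    "surprise": "pause and explain what just happened",
    "fear": "lower voice volume and reduce movement",
    "anger": "stop the activity and suggest a calming break",
    "disgust": "switch to a different activity",
    "neutral": "continue the current activity",
}


def jaccard(a, b):
    """Overlap between two AU sets: |a & b| / |a | b|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(action_units, threshold=0.5):
    """Return the best-matching emotion, or 'neutral' if no prototype is close."""
    observed = set(action_units)
    best, best_score = "neutral", 0.0
    for emotion, prototype in PROTOTYPES.items():
        score = jaccard(observed, prototype)
        if score > best_score:
            best, best_score = emotion, score
    return best if best_score >= threshold else "neutral"


def respond(action_units):
    """Classify the observed AUs and pick the matching robot reaction."""
    emotion = classify(action_units)
    return emotion, RESPONSES[emotion]
```

For example, `respond({6, 12})` returns `("happiness", "smile back and propose the next game")`. A learned classifier would replace `classify` while keeping the same detect, classify and respond structure.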

The potential related to the use of this technology in the educational and health fields is easy to understand [23]. Examples are Milo [24], developed by RoboKind to help children with autism spectrum disorders learn more about emotional expression and empathy, or Robin [25], developed by Expper Tech to provide emotional support to children during medical therapy. These robots can play a significant role in fostering social relationships in children with autism spectrum disorder (ASD) by helping them to develop social and communication skills.

Several studies on the use of socially assistive robotics (SAR) in therapeutic and educational contexts have highlighted the effectiveness of robots in improving cognitive, emotional and motor development in children with autism spectrum disorder [26,27]. SAR draws on various fields, such as psychology, engineering, IT, education, robotics and rehabilitation. The implementation of social robotics in healthcare and educational applications represents a shift in the use of robotics in general and in human–robot interaction (HRI) in particular. SAR is considered a subfield of HRI, rehabilitation robotics, social robotics and service robotics, and it is an established research area in which robots are used in therapeutic and educational contexts to support people through social interaction [28].

One of the most valuable contributions of socially assistive robotics (SAR) has been to support therapies for children with autism spectrum disorders (ASDs). Numerous studies have shown that robots can act as social mediators that support children with autism and encourage social behaviors such as maintaining eye gaze, imitation and attention [29,30,31]. This happens because interacting with the robot prompts children with autism to perform behaviors that they rarely produce spontaneously.

Autism spectrum disorder (ASD) is a neurodevelopmental disorder characterized by impairments in two main areas: (1) social communication, relating to verbal and non-verbal language and social interaction; and (2) imagination, which refers to a repertoire of restricted and repetitive behaviors [32]. Children with this disorder may present heterogeneous symptoms involving communication skills (for example, talking incessantly about a topic of interest or lacking verbal skills) and social interaction (i.e., difficulty relating to adults and peers), the latter being the most visible difficulty. Indeed, children with autism often tend to avoid eye contact with others and have difficulty following social rules; their difficulties concern understanding people’s emotional states and how these states influence the actions and feelings of others.

For this reason, various interventions have been developed to improve the cognitive skills of children with autism through the use of assistive technologies in therapy sessions [33]. Indeed, many studies have designed and developed robots to support people with ASD in dealing with daily challenges and social difficulties, including social learning, imitation and communication [34]. The use of robots in therapy sessions for children with ASD offers many advantages, as children with autism often prefer interacting with a robot, given the difficulties they encounter in communicating with people [35,36].

Numerous studies have implemented robot-assisted autism therapy (RAAT) solutions to help children with autism develop skills relevant to their social context. For example, research has shown that interactions with robots can enhance a child’s sense of self, promote sharing, improve communication and increase overall comfort in engaging with others [37]. Anamaria and colleagues [38] emphasized the positive impact of the social robot Probo on children’s ability to identify emotions based on different situations. Furthermore, Di Nuovo and colleagues [39] studied new deep learning neural network architectures for automatically estimating whether a child was focusing visual attention on the robot during a therapy session.

Other research has focused on building verbal communication in children with autism. Lee and colleagues [40] examined how robot characteristics influenced the development of social communication skills in children with low-functioning ASD and how these children responded to the robot’s verbal communication characteristics. The results indicated that children with autism engaged more intensely with the robots than with the human experimenters. Some aspects of the robot, such as its face and movable limbs, often captured children’s attention and improved their facial expression skills.

Regarding the learning and interaction skills of children with autism, a study by Bharataraj and colleagues [41] used a robot whose design was inspired by a parrot. The study showed that children were attracted to the robot and enjoyed interacting with it. Similarly, Boccanfuso and colleagues [42] developed a prototype of an inexpensive toy-like robot called Charlie, with the aim of promoting joint attention and imitation. This initiative led to notable improvements in children’s spontaneous expressions, social interactions and joint attention skills.

Although there is considerable evidence of the effectiveness of socially assistive robotics (SAR) in ASD therapy [43,44,45], there is currently no consensus on how to manage interactions or on which robot morphology is most effective. Most of the robots used are standard robots (such as toy robots or social robots) that have not been specifically designed for therapeutic interventions with people with ASD [46]. For this reason, Ramirez-Duque and colleagues [47] developed a participatory design method to outline guidelines for designing social robotic devices intended for robot-assisted therapy for children with ASD.

The design and development of a programmable robot aim to address the main challenges that children with autism face in social interaction and in understanding the behavior and emotions of others. Such a robot could provide concrete support and help children practice recognizing their own and others’ emotions, because it can be personalized and adapted to each child, allowing activities to be carried out gradually and avoiding sensory overload.

It is important to emphasize that social robots should be used as part of a comprehensive intervention approach alongside individualized therapies and pathways. Supervision by educators, specialized therapists and parents is essential to ensure that the use of robots is appropriate and beneficial. Used wisely in school environments, robots can provide substantial benefits for children with ASD and for the entire class group. For example, they can:

- serve as communication supports, helping children learn and practice verbal and non-verbal communication skills such as the use of facial expressions, gestures and eye contact;

- support structured, consistently repeated training exercises that teach social skills such as greeting, conversation and respecting personal space, helping children understand social dynamics;

- help develop empathic skills by teaching children to recognize and respond to the emotions of others, presenting stories and scenarios in which they have to interpret and reproduce the facial expressions and emotions of others;

- reduce the social anxiety related to human interaction by creating a comfortable, non-judgmental environment in which children can become familiar with anxiety-provoking situations in a gradual and controlled manner;

- provide immediate and neutral feedback during social interactions, indicating when behavior is appropriate or inappropriate, helping children understand the consequences of their actions, and offering positive visual, auditory or tactile reinforcement to encourage desired behavior;

- act as mediators that help children recognize and manage their own emotions;

- be programmed to provide individualized instructional support, adapting the difficulty level and timing of lessons to individual needs, so as to develop learning skills and strengthen confidence, self-esteem and self-efficacy in a way that also fosters recognition of the child’s abilities and acceptance by the peer group; and

- collect data that allow teachers and therapists to adjust intervention programs and monitor progress.
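Individualized pacing and data collection can be illustrated with a simple staircase rule. In this hypothetical Python sketch, two consecutive correct answers raise the difficulty level, a single error lowers it, and every trial is logged so that a teacher or therapist can review accuracy afterwards. The class name, level range and update rule are assumptions chosen for illustration, not a description of any specific robot.

```python
from dataclasses import dataclass, field


@dataclass
class AdaptiveSession:
    """Hypothetical difficulty controller with a per-trial log."""
    level: int = 1
    min_level: int = 1
    max_level: int = 5
    streak: int = 0
    log: list = field(default_factory=list)  # (level, correct) for each trial

    def record(self, correct: bool) -> int:
        """Log one trial, update the difficulty level and return it."""
        self.log.append((self.level, correct))
        if correct:
            self.streak += 1
            if self.streak == 2:  # two successes in a row: make it harder
                self.level = min(self.level + 1, self.max_level)
                self.streak = 0
        else:  # any error: make it easier and reset the streak
            self.streak = 0
            self.level = max(self.level - 1, self.min_level)
        return self.level

    def accuracy(self) -> float:
        """Share of correct trials, for progress monitoring."""
        if not self.log:
            return 0.0
        return sum(correct for _, correct in self.log) / len(self.log)
```

With the outcomes correct, correct, correct, wrong, correct, correct, the level rises to 2, drops back to 1 after the error and returns to 2, while the log records an accuracy of 5/6. The asymmetric rule eases off quickly after a mistake, which fits the aim of avoiding frustration and overload.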

Despite advances in the field of educational robotics, there are still many challenges to be faced.

Some challenges are related to cost and accessibility: robots can be expensive, which can limit access to this technology in many schools and educational institutions. Others concern privacy and data security. Student data must be protected, and the information collected must not be misused or used unlawfully; schools, the responsible regulatory agencies and designers need to establish explicit guidelines and ensure that the data collected are properly protected and used in compliance with the established rules. Further challenges concern social and ethical impacts, since some people may perceive the use of robots as invasive or dangerous. A major ethical question is whether social robots could replace teachers or should be used as supportive tools. In practice, complementarity makes the best use of the capabilities of both. Teachers need proper training to use robots effectively and to judge the appropriate balance between robot use and human interaction. Still other challenges are technical, concerning design, the tools used and the accuracy with which human emotions are identified. According to a study by Rudovic et al. [48], existing robots are limited in their ability to automatically sense and respond to human affect, which is necessary to establish and maintain engaging interactions. This inference problem is made even harder by the fact that many individuals with autism express their affective–cognitive states in atypical and highly individual ways. For this reason, it is considered essential to use the latest advances in deep learning to build a personalized machine learning framework that exploits contextual information (demographic data and behavioral assessment scores) and individual characteristics.
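One very simple way to illustrate personalization is to judge each child's signal against that child's own baseline rather than against a single population-wide threshold. The Python sketch below does this with a z-score computed from a calibration session for one hypothetical engagement feature. It is far simpler than the deep-learning framework described by Rudovic et al. [48] and is not their method; the feature, the calibration values and the threshold are all assumptions.

```python
from statistics import mean, pstdev


def fit_baseline(calibration):
    """Child-specific mean and spread of a feature from a calibration session."""
    mu, sigma = mean(calibration), pstdev(calibration)
    return mu, (sigma if sigma > 0 else 1.0)  # guard against zero spread


def personalized_score(value, baseline):
    """How far a new observation lies from this child's own baseline."""
    mu, sigma = baseline
    return (value - mu) / sigma


def is_engaged(value, baseline, threshold=1.0):
    """Hypothetical decision rule: well above the child's usual level."""
    return personalized_score(value, baseline) >= threshold
```

With the same raw value of 0.5, a child whose calibration values were 0.2, 0.3 and 0.4 is scored as engaged, whereas a child whose calibration values were 0.6, 0.7 and 0.8 is not. This is the intuition behind personalizing models for children whose ways of expressing affect differ markedly.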

5. Automatic Emotion Recognition and Autism Spectrum Disorders

The recognition of emotions in autism spectrum disorder (ASD) is a critical research area aimed at understanding the challenges individuals with ASD face in deciphering and interpreting the emotional expressions of others. Simon Baron-Cohen and Patricia Howlin are two influential researchers whose contributions have been pivotal in the development of this field.

Baron-Cohen’s empathizing–systemizing (E-S) theory has significantly influenced the understanding of emotional dynamics in individuals with ASD. According to this theory, empathy comprises an affective component and a cognitive component, theory of mind. Affective empathy involves the ability to share the emotions of others, while theory of mind is the ability to understand and predict the mental states of others.

Baron-Cohen argues that individuals with ASD show deficits in theory of mind, which may underlie difficulties in the automatic recognition of emotional expressions. This theoretical perspective has provided the conceptual foundation for exploring the challenges that children with ASD may encounter in this domain.

One of Baron-Cohen’s key works on this topic is “Mindblindness: An Essay on Autism and Theory of Mind” [49], in which he develops his account of theory of mind at length and applies it to autism.

Patricia Howlin’s work has focused on the assessment and intervention in autism spectrum disorders. Her research has underscored the importance of addressing difficulties related to social skills, including emotion recognition, in individuals with ASD.

Tony Charman has advocated early and targeted interventions to improve social and emotional skills in children with ASD. His practical, intervention-oriented perspective has guided further research into the development and effectiveness of specific strategies to enhance automatic emotion recognition in individuals with ASD.

Charman’s work, particularly the volume co-edited with Stone, “Social and Communication Development in Autism Spectrum Disorders: Early Identification, Diagnosis, and Intervention” [50], has significantly influenced clinical practice and the design of targeted interventions to address social challenges in individuals with ASD.

Research on the automatic recognition of emotions in autism spectrum disorder has advanced significantly over the years. Numerous studies have examined the neurobiological bases of these difficulties, using advanced neuroimaging techniques to identify the neural correlates of emotion recognition deficits.

In an experiment conducted by Adolphs et al. [51], the involvement of the superior temporal cortex in facial expression perception was examined, revealing anomalies in individuals with ASD. These findings have contributed to outlining the neural bases of altered emotional recognition in individuals with ASD.

To explore automatic emotion recognition in autism spectrum disorder (ASD), researchers have frequently presented participants with faces displaying various emotional expressions. One noteworthy study by Pelphrey et al. [52] examined how individuals with ASD visually scan and respond to facial expressions.

The study investigated how individuals with ASD process and respond to faces conveying emotions. The researchers combined eye-tracking recordings of visual scanpaths with behavioral assessments of emotion identification, examining the relationship between how faces are looked at and how their expressions are judged.

The findings shed light on specific challenges faced by individuals with ASD in facial expression perception. The scanpaths revealed atypical patterns of visual attention to faces, and the behavioral responses were analyzed for deviations from neurotypical performance.

This study contributed to a nuanced understanding of the difficulties individuals with ASD encounter in recognizing and responding to emotional expressions in faces. Combining measures of visual attention with behavioral analyses offered a more comprehensive perspective on emotional processing in ASD, enriching the broader literature on the topic.

These findings also inform ongoing efforts to refine interventions and support mechanisms tailored to the identified challenges in emotion recognition. More broadly, such studies contribute to the scientific discourse on social cognition in ASD and to the development of targeted therapeutic strategies.

Recent advancements in technology have ushered in a new era for the automatic recognition of emotional expressions in individuals with autism spectrum disorder (ASD). Notably, the integration of artificial intelligence (AI) has emerged as a promising avenue for enhancing the accuracy and effectiveness of emotion recognition systems.

One seminal perspective in this domain is presented by Picard. According to her approach, the utilization of advanced AI systems holds substantial promise in bolstering social interactions for individuals with ASD. The implementation of AI technologies in this context involves the development of sophisticated algorithms capable of analyzing facial expressions, vocal intonations and other non-verbal cues associated with emotions.

Picard’s foundational work “Affective Computing” [53] underscores the potential of AI to contribute significantly to the social well-being of individuals with ASD. The author advocates the creation of AI-driven tools that help individuals with ASD navigate social interactions more effectively. Such tools could serve as personalized aids, offering real-time feedback and guidance based on the analysis of emotional cues in social situations, provided that they are tailored to the specific socio-emotional processing characteristics of individuals with ASD. A central question for such systems is how effectively they provide real-time feedback during social interactions and whether this feedback enhances emotional understanding and responsiveness.

Furthermore, research by Salgado et al. [54] explores the use of deep learning models to interpret subtle emotional expressions, an aspect that poses particular challenges for individuals with autism spectrum disorder (ASD). The study seeks to establish how accurately these models decode complex emotional states, thereby providing insight into the feasibility of using advanced artificial intelligence (AI) techniques to address the nuances of emotion recognition in ASD.

The investigation is rooted in the recognition that individuals with ASD often encounter difficulties in perceiving and interpreting subtle cues in facial expressions and other non-verbal communication, hindering their ability to navigate social interactions effectively. By focusing on deep learning, a subset of machine learning techniques inspired by the structure and function of the human brain, the researchers aim to develop models capable of discerning subtle emotional nuances that may go unnoticed by conventional approaches.

The study involves the construction and training of deep learning models using extensive datasets of facial expressions representing a diverse range of emotions. These models are designed to learn intricate patterns and associations within the data, enabling them to make nuanced predictions about the emotional states conveyed in facial expressions. The emphasis on deep learning reflects an acknowledgment of the complex and multi-dimensional nature of emotional expression, particularly in the context of ASD.

Through testing and validation, the models’ accuracy in decoding emotions is evaluated for both basic and complex emotional states. Model performance is assessed against several criteria, including sensitivity to subtle variations, generalization across different individuals and robustness to confounding factors.
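Generalization across individuals is commonly assessed with leave-one-subject-out cross-validation, and sensitivity with per-class recall. The sketch below shows that protocol in plain Python as an illustration under assumptions: it is not the evaluation code of Salgado et al. [54], and `train_fn` and `predict_fn` are placeholders for whatever model is being tested. A trivial majority-class baseline is included as a reference model.

```python
from collections import Counter, defaultdict


def per_class_recall(y_true, y_pred):
    """Sensitivity for each emotion: correct predictions / true occurrences."""
    hits, totals = defaultdict(int), defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        totals[truth] += 1
        hits[truth] += int(truth == pred)
    return {label: hits[label] / totals[label] for label in totals}


def leave_one_subject_out(samples, train_fn, predict_fn):
    """Hold out each subject in turn, train on the others, test on the held-out one.

    `samples` is a list of (subject_id, features, label) tuples. Because no
    recordings of the test subject are seen during training, the scores
    estimate how well the model generalizes to a new individual.
    """
    results = {}
    for held_out in sorted({subject for subject, _, _ in samples}):
        train = [(x, y) for s, x, y in samples if s != held_out]
        test = [(x, y) for s, x, y in samples if s == held_out]
        model = train_fn([x for x, _ in train], [y for _, y in train])
        preds = [predict_fn(model, x) for x, _ in test]
        results[held_out] = per_class_recall([y for _, y in test], preds)
    return results


def majority_baseline(features, labels):
    """Trivial reference model: always predict the most frequent training label."""
    return Counter(labels).most_common(1)[0][0]
```

Reporting recall per emotion and per held-out subject, rather than a single pooled accuracy, makes it visible when a model works for some children or some emotions but not others.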

The outcomes of this study are expected to shed light on the potential of integrating deep learning models into practical applications that support individuals with ASD in real-world social scenarios. If these techniques prove effective at capturing subtle emotional expression, they could inform the development of tools and interventions that improve the social communication skills and emotional well-being of individuals on the autism spectrum.

These recent contributions highlight the ongoing evolution of AI applications for emotional expression recognition in ASD and reflect the dynamic nature of research in this field.

More broadly, research on the automatic recognition of emotions in autism spectrum disorder, initiated by the seminal contributions of Baron-Cohen and Howlin [55], has progressed significantly. The integration of theoretical perspectives, neurobiological investigations and technological applications has enriched our understanding of how individuals with ASD navigate challenges in recognizing and interpreting emotional expressions.

As knowledge of the cognitive and neural bases of emotional deficits in individuals with ASD continues to grow, the next step is to translate this knowledge into practical and personalized interventions. An interdisciplinary approach encompassing psychology, neuroscience and computer science is essential to enhance the quality of life of individuals with ASD. Developing effective strategies of this kind is key to improving the social and emotional skills that are crucial for a fulfilling life with autism.

6. Emorobot Project

The present work therefore aims to develop an accessible and customizable robot prototype that can be adapted to the different needs of children with ASD.

The Emorobot project aims to develop an open-source robot dedicated to fostering social inclusion among children with autism. The foundational concept is a participatory design (PD) process [56], in which participants can express their opinions and actively take part in decision making. Participatory design helps ensure that the resulting product reflects the needs and expectations of the users involved. When implementing co-design with children with ASD, it is imperative to consider the diverse abilities of the participants and to tailor the robot to their capacities rather than focusing solely on their disabilities.

There is no consensus in the literature on the ideal morphology for a robot that enhances therapy effectiveness for autistic children. To address this gap, Ramírez-Duque and colleagues [47] conducted focus groups with parents of children with autism and specialists to identify characteristics that are valuable when designing a robot for therapy sessions. Participants suggested that a robot equipped with peaceful sounds and familiar songs could help children reduce anxiety and avoid meltdowns. Parents also reported that the presence of the robot during therapy can boost motivation and encourage communication. From this perspective, the robotic system aims to engage the child through several communication channels, including sight, hearing and touch, while also promoting the child’s body awareness and exploration of the surrounding space. Finally, participants envisioned the robot as an intermediary and facilitator, serving as a natural extension of the family intervention approach.

Regarding its physical appearance, the robot should preferably have the following characteristics: (1) an appearance resembling an animal or a fantasy character; (2) a friendly demeanor; (3) active upper limbs and passive lower limbs (with optional active lower limbs); (4) body proportions of two to three head-heights, with a height between 40 and 50 cm; (5) well-defined facial features, including a mouth, two eyes, eyebrows, a nose and two ears; (6) soft fabric or different textures, such as silicone, plush and polymers; (7) primary colors and no prints or images; (8) a gentle voice with the ability to reproduce familiar sounds; (9) sensors and actuators for gradual, fluid and predictable movements; (10) visual and tactile sensors, with the possibility of using a touch screen to provide diversified stimuli; and (11) a mobile head and upper limbs, enabling social behaviors such as facial expressions, speaking, rewarding, grasping objects and hugging [34]. It is also recommended that the robotic platform come with an accessory kit to customize interactions, including musical instruments, clothes and educational tools.

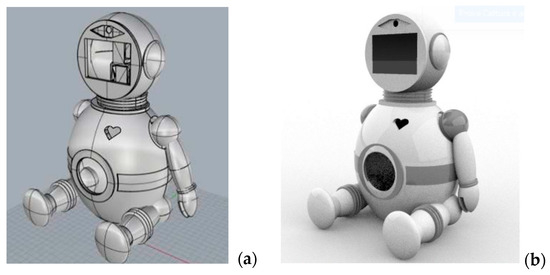

Emorobot was designed on the basis of the scientific literature to target specific skills in children with autism, including social skills [57,58], promoting eye contact [59] and joint attention [60,61,62], increasing self-initiated interactions [63] and encouraging basic verbal and non-verbal communication [63]. The robot was modeled in Rhinoceros (V 7) and produced with an Original Prusa i3 MK3S 3D printer (Prusa Research, Prague, Czech Republic), which reduces production costs and allows educators and students to download the 3D model and build the robot in the classroom. The physical characteristics of Emorobot match those recommended in the literature, featuring a friendly appearance and active upper limbs with passive lower limbs [64]. Specifically, the robot is equipped with a LattePanda board connected to a display (which shows different emotions) and a camera. It also includes an Arduino Uno R3 board (ATmega328 microcontroller; Interaction Design Institute Ivrea, Ivrea, Italy) that controls the servomotors in the neck and arms to enhance expressiveness. In addition, Emorobot runs EmoTracker, software developed by researchers at the University of Cassino (Cassino, Italy) whose artificial intelligence algorithm recognizes emotions on people’s faces from the webcam video stream. These functionalities enable the robot to help children with autism learn to understand the emotional states of others and correctly interpret body signals and facial expressions (see Figure 1).

Figure 1.

(a) Emorobot design through CAD software; (b) rendering of the robot prototype.
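Requirement (9) calls for gradual, fluid and predictable movements, and the Arduino Uno drives the neck and arm servomotors. One common way to obtain such motion is to send a servo a sequence of intermediate angles that follow an ease-in/ease-out profile instead of a single jump to the target. The Python sketch below only computes such a trajectory under stated assumptions: the 0 to 180 degree range is the typical range of hobby servos, not a documented Emorobot limit, and how the angles would be transmitted to the Arduino (for example over a serial link) is not described in the text and is left out.

```python
import math

# Typical hobby-servo range in degrees (an assumption; real limits depend on
# the servo model and the mechanical design of the neck and arms).
SERVO_MIN, SERVO_MAX = 0, 180


def clamp(angle):
    """Keep an angle inside the servo's allowed range."""
    return max(SERVO_MIN, min(SERVO_MAX, angle))


def eased_trajectory(start, target, steps):
    """Return `steps` integer angles moving from `start` to `target`.

    A cosine ease-in/ease-out profile makes the joint start and stop slowly
    instead of jumping, which keeps the motion gradual and predictable.
    The first returned angle is the first step after `start`; the last is `target`.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    start, target = clamp(start), clamp(target)
    path = []
    for i in range(1, steps + 1):
        t = i / steps                        # normalized time in (0, 1]
        s = (1 - math.cos(math.pi * t)) / 2  # eased progress from 0 to 1
        path.append(round(start + (target - start) * s))
    return path
```

For example, `eased_trajectory(0, 90, 4)` yields `[13, 45, 77, 90]`: small increments near the start and end of the movement and larger ones in the middle, which is what makes the motion look smooth rather than abrupt.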

Regarding the software component, the robot is intended to incorporate the “EmoTracker” software (V 1.3.0), a program still under development at the University of Cassino and Southern Lazio. Using an artificial intelligence algorithm, the software is designed to recognize the user’s level of attention and various emotional states [65]. It also uses the webcam video stream to estimate the user’s age and gender. The overall objective is to make Emorobot interactive and customizable, so that it can serve as a tool to reduce social isolation, one of the predominant challenges faced by individuals with autism spectrum disorder (ASD).

EmoTracker’s ability to decode emotional cues and assess the user’s attention level is intended to enhance Emorobot’s responsiveness and adaptability to individual needs. By taking the user’s emotional state into account, the robot can dynamically adjust its interactions, fostering a tailored and supportive environment. The estimation of the user’s age and gender can further help personalize the robot’s responses and activities, creating a more engaging, user-centered experience.
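How emotion and attention estimates could drive the robot’s behavior can be sketched as follows. The interface is hypothetical: EmoTracker’s actual output format is not described here, so the sketch assumes one emotion label and one attention score in [0, 1] per video frame. Frame-level labels are smoothed with a sliding-window majority vote so that the robot does not react to single misclassified frames; the action names, the set of distress labels and the thresholds are illustrative placeholders, not part of Emorobot.

```python
from collections import Counter, deque
from dataclasses import dataclass


@dataclass
class Observation:
    """One frame of (hypothetical) recognizer output."""
    emotion: str      # e.g. "happy", "sad", "fear", "anger", "neutral"
    attention: float  # attention score, assumed to lie in [0, 1]


class InteractionPolicy:
    """Choose the robot's next high-level behavior from recent observations."""

    DISTRESS = {"sad", "fear", "anger"}  # illustrative set of labels

    def __init__(self, window=5, low_attention=0.4):
        # Fixed-length buffers: only the last `window` frames are considered.
        self.emotions = deque(maxlen=window)
        self.attention = deque(maxlen=window)
        self.low_attention = low_attention

    def update(self, obs):
        self.emotions.append(obs.emotion)
        self.attention.append(obs.attention)
        # Majority vote over the window smooths out single misclassified frames.
        stable_emotion = Counter(self.emotions).most_common(1)[0][0]
        mean_attention = sum(self.attention) / len(self.attention)
        if mean_attention < self.low_attention:
            return "re-engage"  # e.g. say the child's name, play a familiar song
        if stable_emotion in self.DISTRESS:
            return "calm"       # e.g. slow movements, soft voice
        return "continue"       # keep the current activity
```

Giving low attention precedence over distress is one possible design choice: the robot first tries to regain the child’s attention before adapting the content of the activity. A teacher or therapist would tune the window length and threshold for each child.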

The broader vision for Emorobot is to serve as more than a technological tool: it aims to help reduce the social isolation commonly experienced by individuals with ASD. Through its interactive features and customization options, Emorobot is designed to motivate users and facilitate the acquisition of essential relational skills. By drawing on the capabilities of the EmoTracker software, the robot seeks to create meaningful connections with users, supporting their emotional well-being and overall quality of life. As development progresses, Emorobot may illustrate how thoughtfully integrated technology can positively affect the lives of individuals with ASD, becoming a valuable companion on their path toward greater social engagement and personal development.

Within the educational setting, Emorobot emerges as a multifaceted tool strategically designed to not only stimulate children’s curiosity and motivation but also to facilitate experiential learning through play. Play, recognized as a fundamental aspect of childhood, serves as a dynamic environment wherein children can explore their surroundings, acquire new knowledge and foster crucial social relationships [64]. Despite the inherent benefits of play, children with autism often encounter barriers that hinder their full engagement in such experiences. These obstacles may include sensory and cognitive challenges, as well as a lack of play materials tailored to their specific needs.

To address these challenges, Emorobot acts as an instrumental playmate, embodying the dual roles of peer and toy. In this way, it can help build connections between the child, the teacher and other students during play activities. This interaction not only supports the child in overcoming sensory and cognitive hurdles but also serves as a bridge to greater social engagement, helping the child navigate and understand the complex landscape of human relationships [66].

Through purposeful play scenarios, Emorobot contributes to a rich and inclusive learning environment. It provides a medium through which children with autism can actively participate in activities that promote sensory exploration, cognitive development and social integration. The robot’s adaptability and interactive features make it a versatile companion that can tailor its engagement to the needs and preferences of each child. As an instrumental playmate, Emorobot not only addresses the challenges children with autism face in play but can also foster a sense of inclusion, understanding and camaraderie within the school community. In this sense, Emorobot goes beyond its technical functions to become an educational tool that supports holistic development and meaningful social connections for children with autism at school.

7. Conclusions

The scientific literature extensively documents the potential benefits of incorporating robots into therapeutic interventions to increase the engagement of children with autism spectrum disorder (ASD) during activities, with some studies reporting greater engagement than in traditional sessions led by human therapists [59,60,61]. These findings underscore the role of social robots as supportive tools in promoting the development of social, cognitive and motor skills in children with autism throughout their daily routines. While social robots designed explicitly for interactions with children with ASD already exist, our objective is to create Emorobot, a low-cost, open-source robot tailored for educational settings.

Emorobot, currently at the prototype stage, is a customizable and programmable tool that anyone interested can build and adapt to the specific needs of children with disabilities. Beyond educational environments, Emorobot could be particularly valuable in psychoeducational interventions, serving as a supportive presence for the child throughout therapeutic sessions. At the same time, it facilitates the educator’s or therapist’s observation of the child’s interactions with the robot and responses to stimuli during therapy, offering valuable insights into the child’s progress.

In a broader context, Emorobot assumes the role of a therapeutic and educational tool dedicated to teaching social skills and desired behaviors to children with ASD, thereby contributing to the overall improvement of the therapeutic process. The robot operates as an intermediary during therapeutic sessions, aiding children with ASD in navigating challenges associated with communication within their environment and interactions with others. Despite the growing body of research on robot-based autism therapy, this field remains relatively nascent, necessitating further investigation to comprehensively grasp the effectiveness and efficiency of employing robots in autism therapy. Continued exploration and scrutiny of this innovative approach are imperative to refine and optimize its applications in promoting the well-being and development of children with ASD.

Author Contributions

The entire contribution is the result of shared reflection and the joint work of the authors: F.S., L.C., M.D.T. and P.A.D.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Project “Ecosistema dell’innovazione—Rome Technopole”, financed by the EU under the NextGenerationEU plan through MUR Decree no. 1051 of 23.06.2022, CUP H33C22000420001.

Institutional Review Board Statement

The study was approved by the Institutional Review Board IRB_SUSS of the University of Cassino and Southern Lazio (protocol code 0004860, 29/02/2024).

Informed Consent Statement

Written informed consent will be obtained from the parents/legal guardians of the study participants.

Data Availability Statement

Data will be made available by the corresponding author on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Menichetti, L. Robotics, augmented reality, virtual worlds, to support cognitive development, learning outcomes, social interaction, and inclusion Robotica, realtà aumentata, mondi virtuali, per supportare lo sviluppo cognitivo, gli apprendimenti, l’interazione sociale, l’inclusione. Form@ Re-Open J. Per La Form. Rete 2019, 19, 1–11. [Google Scholar]

- Calvani, A. Qual’è il senso delle tecnologie nella scuola? Una “road map” per decisori ed educatori. Ital. J. Educ. Technol. 2013, 21, 52–57. [Google Scholar]

- The 2030 Agenda for Sustainable Development. Available online: https://sdgs.un.org/2030agenda (accessed on 20 October 2023).

- National Plan for Digital Education. Available online: https://scuoladigitale.istruzione.it/pnsd/ (accessed on 20 October 2023).

- Petticrew, M.; Roberts, H. Systematic Reviews in the Social Sciences: A Practical Guide; John Wiley & Sons: Hoboken, NJ, USA, 2008. [Google Scholar]

- Lindh, J.; Holgersson, T. Does lego training stimulate pupils’ ability to solve logical problems? Comput. Educ. 2007, 49, 1097–1111. [Google Scholar] [CrossRef]

- Atmatzidou, S.; Demetriadis, S.; Nika, P. How does the degree of guidance support students’ metacognitive and problem solving skills in educational robotics? J. Sci. Educ. Technol. 2018, 27, 70–85. [Google Scholar] [CrossRef]

- Wang, K.; Sang, G.Y.; Huang, L.Z.; Li, S.H.; Guo, J.W. The Effectiveness of Educational Robots in Improving Learning Outcomes: A Meta-Analysis. Sustainability 2023, 15, 4637. [Google Scholar] [CrossRef]

- Castro, E.; di Lieto, M.C.; Pecini, C.; Inguaggiato, E.; Cecchi, F.; Dario, P.; Sgandurra, G. Robotica Educativa e potenziamento dei processi cognitivi esecutivi: Dallo sviluppo tipico ai bisogni educativi speciali. Form@ re 2019, 19, 60–77. [Google Scholar]

- Barker, B.S.; Ansorge, J. Robotics as means to increase achievement scores in an informal learning environment. J. Res. Technol. Educ. 2007, 39, 229–243. [Google Scholar] [CrossRef]

- Conrad, J.; Polly, D.; Binns, I.; Algozzine, B. Student perceptions of a summer robotics camp experience. Clear. House A J. Educ. Strateg. Issues Ideas 2018, 91, 131–139. [Google Scholar] [CrossRef]

- Hussain, S.; Jörgen Lindh, J.; Shukur, G. The effect of LEGO training on pupils’ school performance in mathematics, problem solving ability and attitude: Swedish data. J. Educ. Technol. Soc. 2006, 9, 182–194. [Google Scholar]

- Nugent, G.; Barker, B.; Grandgenett, N. The effect of 4-H robotics and geospatial technologies on science, technology, engineering, and mathematics learning and attitudes. In EdMedia+ Innovate Learning; Association for the Advancement of Computing in Education (AACE): Asheville, NC, USA, 2008. [Google Scholar]

- Nugent, G.; Barker, B.; Grandgenett, N.; Adamchuk, V.I. Impact of robotics and geospatial technology interventions on youth STEM learning and attitudes. J. Res. Technol. Educ. 2010, 42, 391–408. [Google Scholar] [CrossRef]

- Daniela, L.; Lytras, M.D. Educational robotics for inclusive education. Technol. Knowl. Learn. 2019, 24, 219–225. [Google Scholar] [CrossRef]

- Daniela, L.; Strods, R. Robot as agent in reducing risks of early school leaving. In Innovations, Technologies and Research in Education; Daniela, L., Ed.; Cambridge Scholars Publishing: Newcastle upon Tyne, UK, 2018; pp. 140–158. [Google Scholar]

- Krebs, H.I.; Fasoli, S.E.; Dipietro, L.; Fragala-Pinkham, M.; Hughes, R.; Stein, J.; Hogan, N. Motor learning characterizes habilitation of children with hemiplegic cerebral palsy. Neurorehabilit. Neural Repair 2012, 26, 855–860. [Google Scholar] [CrossRef]

- Srinivasan, S.M.; Eigsti, I.M.; Gifford, T.; Bhat, A.N. The effects of embodied rhythm and robotic interventions on the spontaneous and responsive verbal communication skills of children with Autism Spectrum Disorder (ASD): A further outcome of a pilot randomized controlled trial. Res. Autism Spectr. Disord. 2016, 27, 73–87. [Google Scholar] [CrossRef] [PubMed]

- Vanderborght, B.; Simut, R.; Saldien, J.; Pop, C.; Rusu, A.S.; Pintea, S.; David, D.O. Using the social robot probo as a social story telling agent for children with ASD. Interact. Stud. 2012, 13, 348–372. [Google Scholar] [CrossRef]

- Aresti-Bartolome, N.; Garcia-Zapirain, B. Technologies as support tools for persons with autistic spectrum disorder: A systematic review. Int. J. Environ. Res. Public Health 2014, 11, 7767–7802. [Google Scholar] [CrossRef]

- Nao. Available online: https://www.aldebaran.com/it/nao (accessed on 25 October 2023).

- Pepper. Available online: https://www.aldebaran.com/it/pepper (accessed on 25 October 2023).

- Benitti, F.B.V. Exploring the educational potential of robotics in schools: A systematic review. Comput. Educ. 2012, 58, 978–988. [Google Scholar] [CrossRef]

- Milo. Assistive Technology & Curriculum for Autistic Students. Available online: https://www.robokind.com/ (accessed on 26 October 2023).

- Robin. Available online: https://expper.tech/ (accessed on 26 October 2023).

- Robins, B.; Dautenhahn, K. Tactile Interactions with a Humanoid Robot: Novel Play Scenario Implementations with Children with Autism. Int. J. Soc. Robot 2014, 6, 397–415. [Google Scholar] [CrossRef]

- Ricci, C.; Magaudda, C.; Carradori, G.; Bellifemine, D.; Romeo, A. Il Manuale ABAVB-Applied Behavior Analysis and Verbal Behavior: Fondamenti, Tecniche e Programmi di Intervento; Edizioni Centro Studi Erickson: Trento, Italy, 2014. [Google Scholar]

- Clabaugh, C.; Mataric, M. Escaping Oz: Autonomy in Socially Assistive Robotics. Annu. Rev. Control. Robot. Auton. Syst. 2019, 2, 33–61. [Google Scholar] [CrossRef]

- Robins, B.; Dickerson, P.; Stribling, P.; Dautenhahn, K. RobotMediated Joint Attention in Children with Autism: A Case Study in Robot-Human Interaction. Interact. Stud. 2004, 5, 161–198. [Google Scholar] [CrossRef]

- Scassellati, B. How Social Robots Will Help Us to Diagnose, Treat, and Understand Autism. In Proceedings of the Robotics Research: Results of the 12th International Symposium ISRR, San Francisco, CA, USA, 12–15 October 2005. [Google Scholar]

- Colton, M.; Ricks, D.; Goodrich, M.; Dariush, B.; Fujimura, K.; Fukiki, M. Toward therapist-in-the-loop assistive robotics for children with autism and specific language impairment. Autism 2009, 24, 25. [Google Scholar]

- Cottini, L. Che Cos’è L’autismo Infantile; Carocci: Rome, Italy, 2013. [Google Scholar]

- DiPietro, J.; Kelemen, A.; Liang, Y.L.; Sik-Lanyi, C. Computer- and Robot-Assisted Therapies to Aid Social and Intellectual Functioning of Children with Autism Spectrum Disorder. Medicina 2019, 55, 440. [Google Scholar] [CrossRef] [PubMed]

- Tennyson, M.F.; Kuester, D.A.; Casteel, J.; Nikolopoulos, C. Accessible robots for improving social skills of individuals with autism. J. Artif. Intell. Soft Comput. Res. 2016, 6, 267–277. [Google Scholar] [CrossRef]

- Van den Berk-Smeekens, I.; Van Dongen-Boomsma, M.; De Korte, M.W.P.; Den Boer, J.C.; Oosterling, I.J.; Peters-Scheffer, N.C.; Buitelaar, J.K.; Barakova, E.I.; Lourens, T.; Staal, W.G.; et al. Adherence and acceptability of a robot-assisted Pivotal Response Treatment protocol for children with autism spectrum disorder. Sci. Rep. 2020, 10, 8110. [Google Scholar] [CrossRef] [PubMed]

- Marino, F.; Chila, P.; Sfrazzetto, S.T.; Carrozza, C.; Crimi, I.; Failla, C.; Busa, M.; Bernava, G.; Tartarisco, G.; Vagni, D.; et al. Outcomes of a Robot-Assisted Social-Emotional Understanding Intervention for Young Children with Autism Spectrum Disorders. J. Autism Dev. Disord. 2020, 50, 1973–1987. [Google Scholar] [CrossRef]

- Barakova, E.I.; Bajracharya, P.; Willemsen, M.; Lourens, T.; Huskens, B. Long-term LEGO therapy with humanoid robot for children with ASD. Expert. Syst. 2015, 32, 698–709. [Google Scholar] [CrossRef]

- Anamaria, P.C.; Ramona, S.; Sebastian, P.; Jelle, S.; Alina, R.; Daniel, D.; Johan, V.; Dirk, L.; Bram, V. Can the social robot probo help children with autism to identify situation-based emotions? A series of single case experiments. Int. J. Hum. Robot. 2013, 10, 24. [Google Scholar]

- Di Nuovo, A.; Conti, D.; Trubia, G.; Buono, S.; Di Nuovo, S. Deep Learning Systems for Estimating Visual Attention in Robot-Assisted Therapy of Children with Autism and Intellectual Disability. Robotics 2018, 7, 25. [Google Scholar] [CrossRef]

- Lee, J.; Takehashi, H.; Nagai, C.; Obinata, G.; Stefanov, D. Which Robot Features Can Stimulate Better Responses from Children with Autism in Robot-Assisted Therapy? Int. J. Adv. Robot. Syst. 2012, 9, 6. [Google Scholar] [CrossRef]

- Bharatharaj, J.; Huang, L.L.; Elara, M.R.; Al-Jumaily, A.; Krageloh, C. Robot-Assisted Therapy for Learning and Social Interaction of Children with Autism Spectrum Disorder. Robotics 2017, 6, 4. [Google Scholar] [CrossRef]

- Boccanfuso, L.; Scarborough, S.; Abramson, R.K.; Hall, A.V.; Wright, H.H.; O’Kane, J.M. A low-cost socially assistive robot and robot-assisted intervention for children with autism spectrum disorder: Field trials and lessons learned. Auton. Robot. 2017, 41, 637–655. [Google Scholar] [CrossRef]

- Costescu, C.A.; Vanderborght, B.; David, D.O. Reversal learning task in children with autism spectrum disorder: A robot-based approach. J. Autism Dev. Disord. 2014, 45, 3715–3725. [Google Scholar] [CrossRef] [PubMed]

- Feil-Seifer, D.; Matari’c, M.J. Toward socially assistive robotics for augmenting interventions for children with autism spectrum disorders. Springer Tracts Adv. Robot. 2009, 54, 201–210. [Google Scholar] [CrossRef]

- Scassellati, B.; Admoni, H.; Matari´c, M.J. Robots for use in autism research. Ann. Rev. Biomed. Eng. 2012, 14, 275–294. [Google Scholar] [CrossRef]

- Vallès-Peris, N.; Angulo, C.; Domènech, M. Children’s imaginaries of human–robot interaction in healthcare. Int. J. Environ. Res. Public Health 2018, 15, 970.

- Ramirez-Duque, A.A.; Aycardi, L.F.; Villa, A.; Munera, M.; Bastos, T.; Belpaeme, T.; Frizera-Neto, A.; Cifuentes, C.A. Collaborative and Inclusive Process with the Autism Community: A Case Study in Colombia About Social Robot Design. Int. J. Soc. Robot. 2021, 13, 153–167.

- Rudovic, O.; Lee, J.; Dai, M.; Schuller, B.; Picard, R.W. Personalized machine learning for robot perception of affect and engagement in autism therapy. Sci. Robot. 2018, 3, eaao6760.

- Baron-Cohen, S. Mindblindness: An Essay on Autism and Theory of Mind; MIT Press: Cambridge, MA, USA, 1997.

- Charman, T.; Stone, W. (Eds.) Social and Communication Development in Autism Spectrum Disorders: Early Identification, Diagnosis, and Intervention; Guilford Press: New York, NY, USA, 2008.

- Adolphs, R.; Sears, L.; Piven, J. Abnormal processing of social information from faces in autism. In Autism; Routledge: London, UK, 2013; pp. 126–134.

- Pelphrey, K.A.; Sasson, N.J.; Reznick, J.S.; Paul, G.; Goldman, B.D.; Piven, J. Visual scanning of faces in autism. J. Autism Dev. Disord. 2002, 32, 249–261.

- Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 2000.

- Salgado, P.; Banos, O.; Villalonga, C. Facial expression interpretation in ASD using deep learning. In International Work-Conference on Artificial Neural Networks; Springer International Publishing: Cham, Switzerland, 2021.

- Howlin, P.; Baron-Cohen, S.; Hadwin, J.A. Teaching Children with Autism to Mind-Read: A Practical Guide for Teachers and Parents; John Wiley & Sons: Hoboken, NJ, USA, 1999.

- Guha, M.; Druin, A.; Fails, J. Cooperative inquiry revisited: Reflections of the past and guidelines for the future of intergenerational co-design. Int. J. Child-Comput. Interact. 2014, 1, 14–23.

- Raptopoulou, A.; Komnidis, A.; Bamidis, P.D.; Astaras, A. Human–robot interaction for social skill development in children with ASD: A literature review. Healthc. Technol. Lett. 2021, 8, 90–96.

- Cabibihan, J.J.; Javed, H.; Ang, M.; Aljunied, S.M. Why robots? A survey on the roles and benefits of social robots in the therapy of children with autism. Int. J. Soc. Robot. 2013, 5, 593–618.

- Yun, S.S.; Choi, J.; Park, S.K.; Bong, G.Y.; Yoo, H. Social skills training for children with autism spectrum disorder using a robotic behavioral intervention system. Autism Res. 2017, 10, 1306–1323.

- Kumazaki, H.; Yoshikawa, Y.; Yoshimura, Y.; Ikeda, T.; Hasegawa, C.; Saito, D.N.; Tomiyama, S.; An, K.M.; Shimaya, J.; Ishiguro, H.; et al. The impact of robotic intervention on joint attention in children with autism spectrum disorders. Mol. Autism 2018, 9, 46.

- Scassellati, B.; Boccanfuso, L.; Huang, C.M.; Mademtzi, M.; Qin, M.; Salomons, N.; Ventola, P.; Shic, F. Improving social skills in children with ASD using a long-term, in-home social robot. Sci. Robot. 2018, 3, eaat7544.

- David, D.O.; Costescu, C.A.; Matu, S.; Szentagotai, A.; Dobrean, A. Developing joint attention for children with autism in robot-enhanced therapy. Int. J. Soc. Robot. 2018, 10, 595–605.

- Kim, E.S.; Berkovits, L.D.; Bernier, E.P.; Leyzberg, D.; Shic, F.; Paul, R.; Scassellati, B. Social robots as embedded reinforcers of social behavior in children with autism. J. Autism Dev. Disord. 2013, 43, 1038–1049.

- Chiusaroli, D.; Di Tore, P.A. EmoTracker: Emotion Recognition between Distance Learning and Special Educational Needs. Ital. J. Health Educ. Sport. Incl. Didact. 2020, 4, 42–52.

- Piaget, J. La Rappresentazione del Mondo nel Fanciullo; Boringhieri: Torino, Italy, 1926.

- Costa, S.; Lehmann, H.; Dautenhahn, K.; Robins, B.; Soares, F. Using a humanoid robot to elicit body awareness and appropriate physical interaction in children with autism. Int. J. Soc. Robot. 2014, 7, 265–278.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).