1. Introduction

Experimental research on code-switching in bilingual speakers has established some of the factors that affect the likelihood and type of a code-switch (see Van Hell et al. (2015) for a review). The research indicates that the immediate spoken context affects the likelihood of a code-switch. It has been established that the grammatical structure of a prior utterance can influence use of its counterpart in the other language. Under the conditions examined then, and in line with theoretical proposals (Hartsuiker et al. 2004), there is structural or grammatical priming across languages. A further factor that influences the likelihood of a code-switch is the presence of bilingual homophones. Such an effect is consistent with the trigger hypothesis (Clyne 2003) and its extension (Broersma and De Bot 2006). From the point of view of understanding the cognitive mechanism that mediates code-switching, experimental data indicate that prior utterances can influence the activation of lexico-syntactic representations, making such representations more available for selection. Such representations provide a source of stimulus-driven or bottom-up control. Two matters remain open from experimental data. First, do such results generalise to real world contexts? Corpus data are highly pertinent in establishing the ecological validity of experimental data. Second, code-switching may be sensitive to priming but bottom-up processes of control cannot be sufficient because bilingual speakers may code-switch in order to convey their communicative intentions (e.g., Myers-Scotton and Jake 2017). There is a need for code-switching to reflect the speaker’s communicative intention that, if necessary, overrides bottom-up influences. I refer to intentional control as top-down control. I consider each question.

Fricke and Kootstra’s (2016) rich analysis of the Bangor-Miami corpus data (Deuchar et al. 2014) provides good evidence for priming effects. They analysed successive clauses. I note three results from their analysis. Code-switching can be primed by lexical items in a prior utterance that unambiguously belong to the language of the code-switch in a current utterance. Structurally, the presence of a finite verb in one language increased the likelihood that that language would form the matrix language for a code-switch in the current utterance independent of lexical factors. Corpus data then corroborate inferences from experimental research of the importance of bottom-up control (see also Broersma and De Bot 2006), but code-switched utterances accounted for only 5.8% of utterances in the analysis of the Bangor-Miami corpus by Fricke and Kootstra (2016). The bulk of utterances were in a single language. Priming is relevant, but it seems preferable to say that top-down processes of control allow such priming in overt speech in line with the speaker’s communicative intention.

The evidence that code-switches can be primed is consistent with the idea that representations in the language network vary in their degree of activation. It is then a simple step to suggest that, when a speaker is using just one of their languages, representations in their other language are suppressed (e.g., Muysken 2000). However, are variations in levels of activation a sufficient mechanism to account for the pattern of language use in the corpus data? Experimental evidence indicates that even when only one language is in play, lexical representations and grammatical constructions are active in the other language and lexical representations reach to the level of phonological form (e.g., Blumenfeld and Marian 2013; Christoffels et al. 2007; Costa et al. 2000; Hoshino and Thierry 2011; see Kroll et al. 2015 for a review). Given the corpus data cited above, top-down processes of control must allow speakers to produce words and constructions in just one language in a code-switching context despite parallel activation of words and constructions in the other language (for further discussion of bottom-up and top-down processes of language control, see Kleinman and Gollan 2016; Morales et al. 2013). What kind of mechanism might be envisaged? Core to the proposed mechanism is the idea that control processes external to the language network govern entry of items and constructions into a speech plan (Green 1986, 1998). Such an idea is consistent with neuropsychological data where, to take one example, following stroke, a bilingual patient may display intact clausal processing in each of their languages, but switch languages unintentionally when talking to a monolingual speaker (Fabbro et al. 2000). Based on this idea, Green and Wei (2014) proposed a control process model (CPM) of code-switching in which control processes establish the conditions for entry to a speech plan by setting the state of a language “gate”. Given that utterances are planned in advance of their production, the CPM also includes a means (a competitive queuing network) to convert the parallel activation of the items in the plan to a serial order required for production. The aim of the present paper is to extend the CPM significantly and yet retain its basic architecture. In the next section, I review the background to the extension, specifically concerning the control processes, before identifying and presenting the extensions in the following section.

4. The Extended Control Process Model

The extended CPM retains the architecture of the original model but includes speech input as an explicit component and articulates the construction of a speech plan.

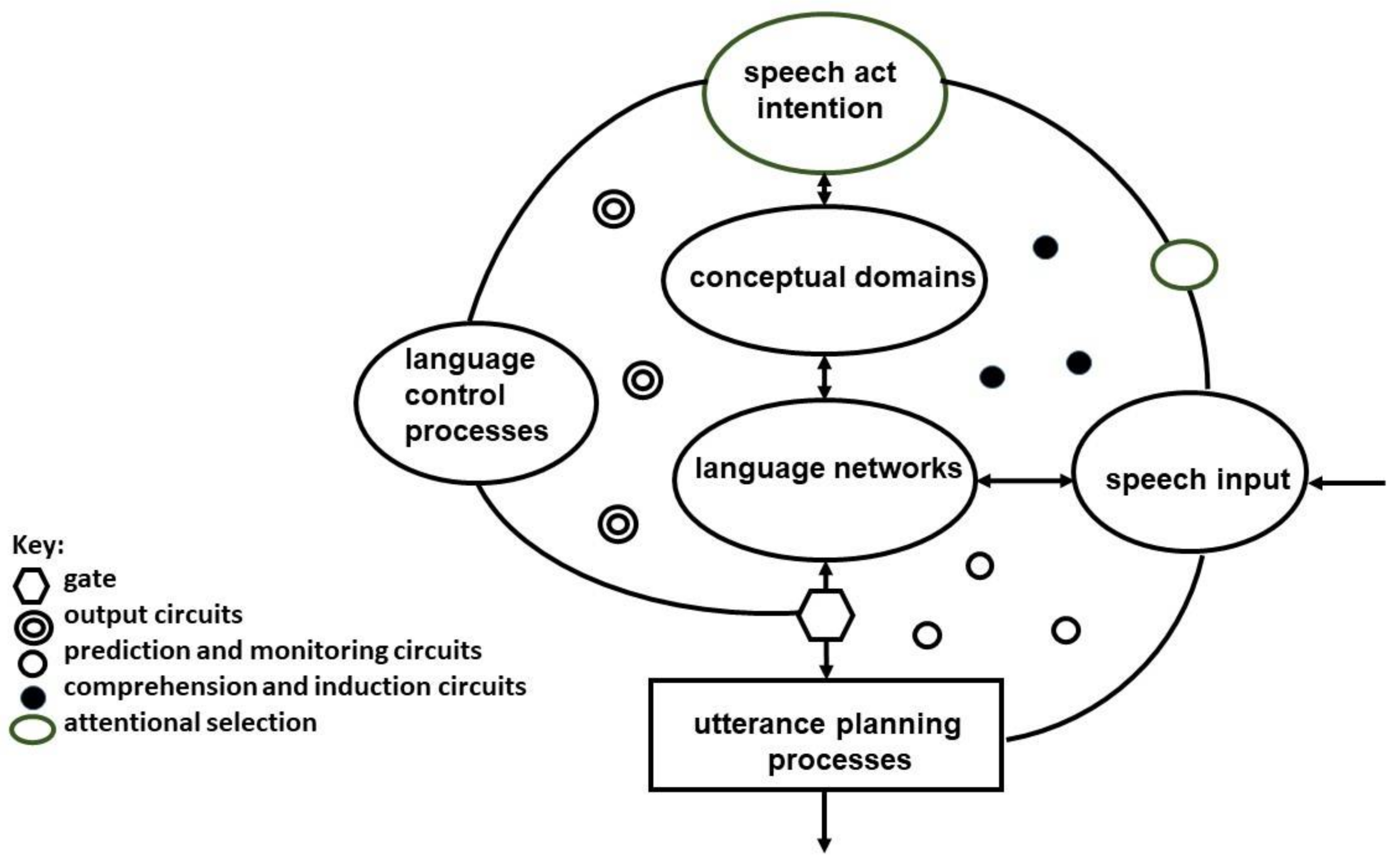

Figure 1 and Figure 2 illustrate the extended CPM. Figure 1 provides a schematic of the process of mapping a speech act into the utterance planning process.

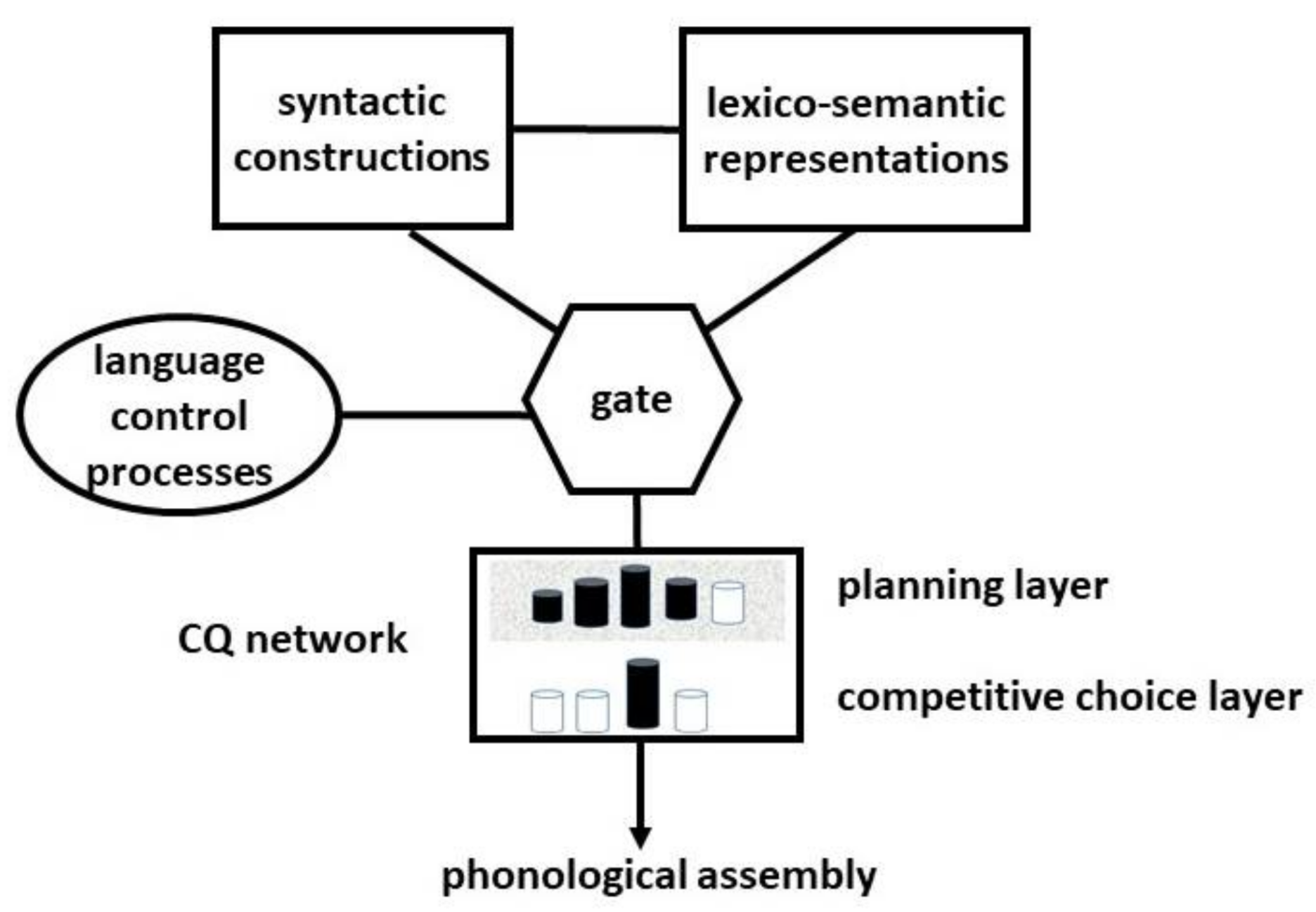

Figure 2 illustrates the role of the gate in controlling entry of syntactic form and lexical content into the competitive queuing network that yields the serial order required for speech production.

In Figure 1, the lower right quadrant of the figure indicates that speech production processes can tacitly support speech perception (Halle and Stevens 1962; Skipper et al. 2017). The upper right quadrant refers to processes that can determine the meaning of what is said and induce significance. A speech act binds thoughts (conceptual content) onto the language networks and leads to the construction of an utterance plan (left half of the figure). In line with conventional proposals, and as described in Green and Wei (2014), nodes in the language network refer to words (lexical concepts), collocations and constructions.

I reprise the description of the network in Green and Wei (2014) so as to flesh out, for the present paper, the nature of the language networks envisaged. Connecting links between the nodes pass activation as a function of link strength. Activation of a given set of items can then reflect not only activation from the unfolding conceptual representation but also properties of the network itself and momentary changes arising from the speech of another or oneself. Each lexical concept or construction is identified in terms of the language to which it belongs, that is, it is tagged for language membership (e.g., Albert and Obler 1978; Green 1986; Poulisse and Bongaerts 1994) by a link to a language node (e.g., Dijkstra and Van Heuven 2002). Such linkage allows functionally distinct but interconnected networks (see Kroll et al. (2010) for further discussion) and is consistent with evidence of intermingled neuronal populations mediating language representation (Consonni et al. 2013; Green 2003; Paradis 2004; see Green and Kroll forthcoming, for wider discussion). We followed Hartsuiker et al. (2004) in supposing that common syntactic constructions that underlie congruent lexicalisation are represented by combinatorial nodes (see also Kootstra et al. 2010). For example, English and Dutch would share common combinatorial nodes for the verb *give* for a prepositional object construction (as in *Jack gave the ball to Jill*) and for the double object construction (as in *Jack gave Jill the ball*). Language pairs from the same language family (e.g., English-Dutch) will share more of such combinatorial nodes.

Given experimental evidence that conceptual activation induces activation of the syntactic and lexical inventories of both languages, there is then competition for entry into the utterance plan. The gate selects items and constructions on the basis of language control signals. Such signals reflect the communicative intentions of the speaker and I treat such signals as necessary and sufficient to control the operation of the gate. Here, I assume a single gate that can have different operating properties depending on the language control signals. In line with Green and Wei (2014) and Green and Abutalebi (2013), I suppose that these signals are generated by language task schemas that can be configured in different ways to implement the speaker’s intention to use their languages in specific ways (Green 1986, 1998). With repeated use, a given configuration becomes a habit of control that can then be triggered by contextual cues (Green and Abutalebi 2013). Schemas can be configured competitively. In this configuration, activation of one schema suppresses activation of the other and signals the selection of one language but not the other. Alternatively, schemas can be coordinated cooperatively to signal coupled or open control. I describe how the gate operates, given the language control signals, after reviewing the speech planning process. However, the net result is that selection reflects items and constructions that are the most appropriate at that moment in time from the speaker’s perspective on the basis of pragmatic, semantic, syntactic and collocational considerations and readiness for use (cf. Ward 1992).

Given that we cannot utter all that we have in mind at once, speech demands the imposition of serial order over the set of activated items in the plan (Lashley 1951). Competitive queuing (CQ) networks comprising a planning layer and competitive choice layer provide a neuroanatomically plausible way to achieve this goal (Bohland et al. 2009; Grossberg 1978; Houghton 1990). The gradient of item activations in the planning layer indicates production order (see Figure 2). In the choice layer, the item with the current highest level of activation suppresses all others, allowing that item to be released from the planning layer. Once released, its activation is suppressed in the planning layer, allowing the next most active item to be selected via the choice layer and so the cycle iterates. A plan precedes its execution but this does not mean that it must be fully specified before a person begins to speak. Rather, planning and execution can be interleaved (see MacDonald 2013) enabling the time between conversational turns to be quite short (Levinson 2016).
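The selection cycle of a CQ network can be made concrete in a short sketch. The Python below is illustrative only and does not reproduce any of the implemented models cited above: the function name `competitive_queuing`, the example words and their activation values are all assumptions, and suppression of a released item is simplified to removing it from the planning layer.

```python
from typing import Dict, List


def competitive_queuing(plan: Dict[str, float]) -> List[str]:
    """Turn a parallel activation gradient into a serial production order."""
    # Planning layer: all items are active at once (copied so the caller's
    # plan is left untouched).
    planning_layer = dict(plan)
    produced: List[str] = []
    while planning_layer:
        # Choice layer: the currently most active item wins the competition.
        winner = max(planning_layer, key=planning_layer.get)
        produced.append(winner)
        # Once released, the winner is suppressed in the planning layer
        # (simplified here to deletion), so the next most active item can win.
        del planning_layer[winner]
    return produced


# Hypothetical activation gradient over the items of one utterance plan.
plan = {"Jack": 0.9, "gave": 0.7, "Jill": 0.5, "the": 0.3, "ball": 0.1}
order = competitive_queuing(plan)
print(order)
```

With this hypothetical gradient the items are released in order of decreasing activation, so the parallel plan yields the serial order Jack, gave, Jill, the, ball. Interleaved planning and execution could be approximated by adding items to the planning layer between cycles, which this sketch does not attempt.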

Granting this way to control serial order, and that other CQ networks are required in the mapping to overt speech, we need to envisage a neuroanatomically plausible way to construct an utterance plan in the first place and one which enables code-switching. Whatever the mechanism, it must be one that captures a critical aspect of language use: namely the human facility to create diverse utterances using a finite means. A basic requirement is the separation of form (syntactic constructions) from content (lexico-semantic representations).

In the case of a sentence, a constituent can be represented as a variable permitting any word to be assigned or bound to it that meets its specification, as a finite verb, say, or as the agent in a sentence. We do not have a deep understanding of how the human brain achieves such variable binding but one way, consistent with known neuroanatomy, has been proposed (Kriete et al. 2013), and I adopt it.

Figure 2 isolates components from Figure 1 and illustrates a partitioning of the language networks. Each syntactic construction is a frame comprising a set of slots or roles (e.g., agent-action-patient). In accordance with Kriete et al. (2013), I suppose that each slot can point to, that is bind, specific lexical content. This means, for example, that a given lexical item can serve as an agent in one sentence but a patient in another. In this way, the same structure can give rise to diverse sentences.
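The separation of form from content can be illustrated with a small data structure. This is a sketch under stated assumptions rather than the network model of Kriete et al. (2013): the class `Frame`, its methods, the helper `transitive` and the example words are hypothetical names chosen for illustration. A frame holds an ordered set of roles, and each role points to whatever lexical item is bound to it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Frame:
    """A syntactic construction: an ordered set of roles (slots)."""
    roles: Tuple[str, ...]
    # Each filled slot points to lexical content; unfilled slots are absent.
    bindings: Dict[str, str] = field(default_factory=dict)

    def bind(self, role: str, item: str) -> None:
        """Bind a lexical item to a role that this frame actually has."""
        if role not in self.roles:
            raise KeyError(f"frame has no role {role!r}")
        self.bindings[role] = item

    def unfilled(self) -> List[str]:
        """Roles that still await lexical content."""
        return [r for r in self.roles if r not in self.bindings]

    def realise(self) -> List[Optional[str]]:
        """Read out the bound content in role order (None if unfilled)."""
        return [self.bindings.get(r) for r in self.roles]


def transitive() -> Frame:
    # Hypothetical agent-action-patient frame.
    return Frame(roles=("agent", "action", "patient"))


first = transitive()
for role, item in [("agent", "Jack"), ("action", "helped"), ("patient", "Jill")]:
    first.bind(role, item)

second = transitive()
for role, item in [("agent", "Jill"), ("action", "helped"), ("patient", "Jack")]:
    second.bind(role, item)

print(first.realise(), second.realise())
```

The same frame yields two different sentences, and the same item ("Jack") serves as agent in one and patient in the other, because roles hold pointers to content rather than the content itself.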

In Figure 2, I treat the gate as the plan constructor. Control signals from the gate select from activated constructions, update their lexico-semantic content, and signal release to the CQ network. Allowing selected structures in turn to affect the control signals from the gate provides a way to build nested structures. Known multiple reciprocal connections between frontal cortex and subcortical structures provide a plausible neural substrate (see also Stocco et al. 2014). Following Kriete et al. (2013), I identify the gate with the basal ganglia (a subcortical structure) and the syntactic constructions and content with regions (“stripes”) in the prefrontal cortex. We note that neuroimaging data further suggest that one particular region of the basal ganglia, the caudate, is important in selecting a syntactic structure (Argyropoulos et al. 2013). Caudate activation, for example, increases when experimental participants have to generate a sentence (i.e., produce and execute an utterance plan) rather than merely repeat a presented sentence. Damage to the caudate, following stroke, impairs a person’s ability to avoid inappropriate code-switching. However, their code-switched utterances are nonetheless well-formed, as in *I cannot communicare con you* (patient A.H., Abutalebi et al. (2000)).

Kriete et al. (2013) implemented a network model of their proposal and so established proof-in-principle of its viability. The goal here is more circumscribed: it is to establish a conceptual extension of their model for the case of bilingualism and code-switching specifically. In this extension the operation of the gate depends on the nature of the language control signals (Figure 2). In an interactional context where only one language is in use, the gate blocks entry into the speech plan of activated constructions and items from the non-target language. Crucially, on this proposal, activated but non-target language items are inhibited before they compete for binding.

Code-switching, on the other hand, requires that both languages are in play. Under coupled control, in the case of alternation, the gate opens to select a phrase or clause from the non-matrix language as part of the conversational turn. For insertions, the gate temporarily opens to bind an item from the non-matrix language to a role in the current clause of the matrix language. A further operation is presumably needed if the item has to be adapted to the local context as in the adaptation of the French verb *choisir* (‘to choose’) to *choisieren* with a German particle *–ieren* (Edwards and Gardner-Chloros 2007, p. 82; see also Gardner-Chloros 2009). We consider insertion as the best example of coupled control because it is intraclausal whereas alternation, at least interclausal alternation, is consistent with competitive control. For present purposes, the key point is that because speech is planned before it is executed, any processing correlate of an intended insertion should be detectable *before* the actual insertion occurs in the speech stream. In other words, we should look not only at what is going on at the time of overt switching but also at the covert processes before that time.

Could there be a processing cost? One potential cost is associated with switching away from, and then back to, the matrix language. Such switching acts at a global level rather than at the item level (Green 1998) to inhibit any item from the current language. A second potential cost is increased competition in the binding of items from the non-matrix language to unfilled roles in the matrix language frame once the opportunity to do so is briefly available. This possibility arises, in contrast to single language use, because items from both languages are temporarily available for binding. So despite the insertion being maximally appropriate pragmatically (e.g., Myers-Scotton and Jake 2017), there could be a binding cost. Such a cost arises at the item level (e.g., Green 1998). How might these two types of cost be detected?

We know from research on language switching that activation in the caudate increases during an actual language switch (e.g., Abutalebi and Green 2007; Abutalebi et al. 2013; Crinion et al. 2006). Activation reflects control at the level of the language rather than at the level of the item (see Declerck and Philipp (2015) for discussion of the loci for language switching). Assume for present purposes that caudate activation also tracks covert language switching. It follows that any switching cost associated with coupled control *prior* to the actual code-switch should be detectable, all else being equal, as increased caudate activation relative to baseline utterances where there is no code-switching. How might binding competition be detected? Binding competition has yet to be explored but it seems reasonable to suppose that it may increase activation in frontal regions of the brain known to be linked to the suppression of lexical competitors (e.g., de Zubicaray et al. 2006; Shao et al. 2014). If so, any effects of binding competition associated with coupled control *prior* to the actual code-switch should be detectable, all else being equal, as increased frontal activation relative to baseline utterances where there is no code-switching. No current neuroimaging data bear on these predictions. We do know though that speech rate adjusts *prior* to the actual production of an insertional code-switch in a main clause. Bangor-Miami corpus data indicate that, prior to an insertional code-switch, speech rate decreases relative to that in matched unilingual control utterances by the same speaker in the same conversation (Fricke et al. 2016). So, for example, in a conversation predominantly in Spanish (Fricke et al. 2016, p. 115), the inserted word involves a switch from Spanish to English as in the example:

(6a) donde siempre tenemos el delay

‘Where we always have the delay.’

and the speech rate comparison is with its unilingual control

(6b) yo las vi en casa

‘I saw them at home.’

However, as yet, we cannot be certain whether such slowing really reflects language switching and/or increased binding competition, as predicted, or decreased activation of the matrix language in advance of the insertion, as proposed by Fricke et al. (2016).

Coupled control requires selection of items and constructions by language. Open control, by contrast, does not. The gate selects constructions and items opportunistically from each language network. Under this regime, the local context of the utterance effectively establishes requests for completion (including affixes and suffixes) that can be met by either language network. Under open control, language switching costs should be minimised (Green and Abutalebi 2013; Green and Wei 2014). It is possible though that the process of intertwining the morphosyntax of two languages increases demand on neural regions involved in the temporal control of morphosyntax (Green and Abutalebi 2013). If so, neuropsychological data implicate a circuit connecting the cerebellum and the frontal cortex (Mariën et al. 2001). If this is the case, this circuit will become more active during dense code-switching.
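The three gate regimes described in this section (competitive, coupled and open control) can be contrasted in a minimal sketch. Everything named here is an assumption made for illustration: the `Candidate` class, the functions `admitted_language` and `gate`, the language codes, and the activation values are hypothetical, and the Spanish word "retraso" is supplied only as a plausible competitor for the inserted "delay". Coupled control is simplified to admitting only the non-matrix language while control is ceded, which is one possible reading of selection by language.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    form: str
    language: str
    activation: float


def admitted_language(mode: str, matrix: str, other: str,
                      ceded: bool = False) -> Optional[str]:
    """Map a language control signal onto the language the gate admits.

    Returns None when selection ignores language membership.
    """
    if mode == "competitive":
        return matrix                       # single language use
    if mode == "coupled":
        return other if ceded else matrix   # control temporarily ceded
    if mode == "open":
        return None                         # opportunistic selection
    raise ValueError(f"unknown control mode: {mode!r}")


def gate(candidates: List[Candidate],
         admitted: Optional[str]) -> Optional[Candidate]:
    """Block inadmissible candidates, then pick the most active survivor."""
    eligible = [c for c in candidates
                if admitted is None or c.language == admitted]
    return max(eligible, key=lambda c: c.activation, default=None)


# Hypothetical candidates competing for one noun slot in a Spanish clause.
candidates = [Candidate("retraso", "es", 0.6), Candidate("delay", "en", 0.8)]

single = gate(candidates, admitted_language("competitive", "es", "en"))
inserted = gate(candidates, admitted_language("coupled", "es", "en", ceded=True))
opportunistic = gate(candidates, admitted_language("open", "es", "en"))
print(single.form, inserted.form, opportunistic.form)
```

Under competitive control the more active English item never competes for the slot, whereas under open control the outcome depends only on relative activation. The sketch leaves out the costs discussed in the text (switching cost and binding competition), which would need additional assumptions about timing.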

But what about binding costs that were not considered in the previous papers? These are potentially increased. This possibility arises because the gate is free to bind items to all currently active syntactic roles regardless of language membership—an option precluded in selective language use. However, binding costs are reduced as long as the competition for open slots is minimal. This minimal condition arises when there is a marked disparity in the accessibility of competitors and may obtain during dense code-switching though empirical data are lacking. Overall, open control during dense code-switching predicts that caudate activation will be insensitive to the amount of language switching. On the other hand, response in frontal regions will track the degree of binding competition.

From a control perspective, we have emphasised the association of open control with dense code-switching rather than restricting it to code-switches under the structural distinction of congruent lexicalisation. Such an association generalises the account of Green and Wei (2014) but leads one to wonder about the role of other structural designations in determining the control process. Muysken (2000, p. 228, 2nd paragraph), for example, indicates that alternational switches can also be quite copious. Here, the awkwardness argument also surely applies (see earlier) because under coupled control there would need to be repeated cycles of ceding and taking back of control by one language with respect to another. The simplest control prediction is that open control will obtain at some switching frequency (to be determined empirically) beyond a single insertion or alternation.

Of course, the notion of open control may be incorrect. If so, one possibility is that switching between languages will engage the network typically recruited during language switching (e.g., anterior cingulate cortex/pre-supplementary motor area, caudate (Luk et al. 2012)). This alternative possibility makes clear qualitative predictions: relative to those utterances where speakers stick to a single language, speech rate will be greatly slowed and caudate activation, along with other regions in the switching network, dramatically amplified both prior to, and during, dense code-switching.

5. Language Control States

Conversations can involve predominantly unilingual turns in one language or another or can involve code-switching. According to the present proposal, code-switching requires a shift from a competitive to a cooperative language control state. Within a code-switching context, code-switched utterances may be quite few in number and so the shift to a cooperative control state might be quite transient. I consider two general and interrelated aspects of these control states. The first aspect associates changes in control states with a fundamental trade-off in human foraging and decision-making between exploiting a given resource and exploring an alternative. The second aspect associates changes in control state with changes in attentional breadth.

Viewed as a form of sampling or search, single language production in a bilingual speaker exploits the resources of a single language network and restricts utterances to constructions and items from a single language. The control state involves a narrow state of attention. By contrast, code-switching explores the resources of both language networks. The control state, especially in the case of dense code-switching, involves a broad attentional state.

If dense code-switching is more exploratory, it should increase activation in neural regions such as the frontopolar cortex, which mediates exploration over exploitation in decision-making (e.g., Laureiro-Martínez et al. 2013). Remarkably, pupil diameter also provides an index of the trade-off (Aston-Jones and Cohen 2005). Research implicates noradrenergic signals from the locus coeruleus (LC) in the exploitation/exploration trade-off. Pupil diameter is sensitive to two classes of these signals, tonic (i.e., baseline) and phasic (i.e., event-related), that are inversely related to one another. Tonic pupil diameter increases with shifts to exploration in decision-making tasks (Jepma and Nieuwenhuis 2011) and with shifts to exploring the inner world as in “mind-wandering” during reading (Franklin et al. 2013). All else being equal, if dense code-switching is more exploratory than single language use then we can predict that tonic pupil diameter should increase during periods of dense code-switching. If, however, contrary to hypothesis, dense code-switching actually involves competitive control then the repeated demands to switch between languages will increase cognitive effort. In this case, the prediction is that phasic pupil diameter will increase because phasic pupil diameter tracks cognitive effort (Kahneman 1973; Kahneman and Beatty 1966).

The second aspect associates differences in language control with differences in breadth of attention. Attention can be focused more or less broadly (e.g., Eriksen and Yeh 1985; Wachtel 1967). I consider possible neural correlates in the following section but note here that language use can affect behavioural responses to interference consistent with the notion that there are attentional correlates of language control states. Competitive control requires a narrowing of attention that is arguably enhanced when participants must use one rather than another language in a dual language context. An ingenious experiment by Wu and Thierry (2013) found that during a dual language context, bilinguals were indeed more effective at resisting non-verbal interference as tested in a non-verbal flanker task (see also Hommel et al. 2011 for evidence of benefits on a convergent thinking task). Cooperative control, by contrast, is predicted to increase the breadth of attention. It follows that during dense code-switching, in particular, participants should be more susceptible to interference. Such interference might be verbal or non-verbal (e.g., a distracting sound or visual stimulus). With respect to verbal interference, I conjectured above that dense code-switching may increase a specific kind of verbal interference, binding competition. If so, participants who routinely engage in dense code-switching may become adept at resolving such interference. In this case, an increased susceptibility to immediate interference during dense code-switching may be compensated by a greater facility in disengaging from it. Such facility might be general. If so, in a non-verbal, visual flanker task, speakers who routinely engage in dense code-switching may show reduced effects of the congruency of a prior trial on the performance of a current trial (e.g., see Grundy et al. 2017 for experiments examining such “sequential congruency effects” in bilinguals and monolinguals).

7. Discussion

Varying the level of activation of different language networks is insufficient to account for the variety of utterance plans in bilingual speakers where speakers can use a single language, switch between two languages within a conversation or code-switch within a clause of the same utterance. Instead, the proposal here is that language control signals external to the language network help construct utterance plans. I briefly review the proposal before offering comment.

In a conceptual extension of an implemented neural network model of utterance production (Kriete et al. 2013), language control signals operate on a subcortical gate that acts as a constructor of utterance plans. The gate interacts with frontal regions to select a syntactic structure and binds roles in that structure to specific lexical content. Plans are constructed in the planning layer of a competitive queuing (CQ) network. The competitive choice layer of this network allows serial order to emerge from the parallel activation of items in the plan.

A key claim of the proposal is that single language use requires competitive language control whereas the different types of code-switching require cooperative language control. Language control signals in the single language case inhibit the selection of syntactic forms and lexical items from the non-target language. Competitive language control exploits the resources of a single language network and requires a narrow focus of attention. By contrast, cooperative language control explores the resources of both language networks and broadens the focus of attention. Single insertions, and perhaps alternations, are mediated by coupled control, in which control by the matrix language is temporarily ceded to the other language. More copious or dense code-switching, associated with congruent lexicalisation but not restricted to it, is mediated by open control that suspends selection based on language membership. Pertinent neural and behavioural predictions were proposed.

How plausible is it that intra-clausal code-switching, and especially dense code-switching, induces a language control state distinct from that involved in single language use? Parsimony favours a single control state but predicts a computational and energetic cost: dense code-switching will increase demands on regions such as the caudate involved in language switching. However, such a cost does not necessarily weigh the case in favour of open control: the cooperative control state may increase binding competition over and above that generated under a single control state.

A further objection on the grounds of parsimony might be raised. The idea of distinct language control states carries as a corollary that the mind/brain can be in distinct, and co-occurring, attentional states (Green and Wei 2016). For instance, a speaker can be focussed on achieving the goal of a speech act (a narrow attentional state) but use a linguistic means (e.g., dense code-switching) that requires a broad attentional state. There is a hierarchical relationship between these attentional states with the language control state nested under the narrowly focussed, sustained attentional state associated with achieving the communicative goal. Subjective experience does not automatically rule out the co-occurrence of distinct attentional states. We can talk attentively and walk, as it were, on auto-pilot. Typically too, our focus of attention is the goal of what we want to say, rather than the linguistic means we recruit to do so.

Empirical testing of co-occurring and dissociable attentional states requires distinct neural markers for sustained attention and dense code-switching. There are reasonable grounds for identifying a network of frontal regions of the brain as instrumental in sustaining attention to a goal (e.g., Rosenberg et al. 2016). We lack an agreed, empirically determined marker for dense code-switching but I have proposed two possible indices: changes in pupil diameter and changes in the metastability of participating language networks. The upshot is that we need empirical data to test for differences in language control states. How might such data be collected?

Neuroimaging research indicates that the patterns of neural activation in the listener synchronise with those of the speaker (Stephens et al. 2010). If so, attentional states induced in different forms of language use will entrain the same states in listeners, at least when participants are drawn from the same speech community. Eliciting relevant and extended speech samples seems tractable. Scripted dialogue tasks, such as the map description task, offer a promising approach (Beatty-Martínez and Dussias 2017). Along with corpus data (Fricke and Kootstra 2016), such tasks allow identification of the cues to an upcoming code-switch and the opportunity to examine neural adaptation in the listener. So, for example, in the context of prior stretches of dense code-switching in the discourse, a cue to a code-switch (Fricke et al. 2016; Beatty-Martínez and Dussias 2017) should increase tonic pupil diameter and increase metastability in the networks mediating language control.

On the other hand, scripted dialogue, and responses to it, may need inventive designs to capture other aspects of code-switching noted in corpus data. Myslín and Levy (2015) argued that end of clause, single noun, insertional code-switches (from Czech to English) in their corpus data met a discourse-functional purpose. Such switches signalled to the listener the need to heed discourse meaning. Similarly, Backus (2001) identified the importance of culturally specific connotations in determining insertional code-switches. In addition to network activity putatively mediating distinct language control states, such results point to the subtlety and complexity of neural response as listeners track and respond to the speech acts of their interlocutor and prepare their own utterances.

In line with the present proposal, future research may identify distinct language control states that affect on-line production and comprehension. If so, different profiles of bilingual language use (e.g., single language use versus extensive code-switching) may, over the longer term, affect resilience to brain atrophy with age (Alladi et al. 2013; Bialystok et al. 2007; Gold 2015). If there are dissociable, and co-occurring, attentional states induced by the type of language use, then, if we are to gain a fuller picture, we should also consider the content of what is being said, as well as how it is said. Conversations are about topics as illustrated by the mapping from conceptual domains to language networks depicted in Figure 1. Different topics, such as describing the cloudscape from a plane window or reminiscing about a shared holiday, pose different attentional demands that vary in their breadth and their reference to external or internal worlds (Green forthcoming). May not the attentional states induced co-occur with those elicited during utterance production in bilingual speakers?