Automatic Method for Distinguishing Hardware and Software Faults Based on Software Execution Data and Hardware Performance Counters

Abstract

1. Introduction

2. Background and Related Research

2.1. Faults Caused by the Integration of Hardware and Software

2.1.1. Transient or Intermittent Faults in the CPU

2.1.2. Interface or Timing Problems in Software

2.2. Related Research

2.2.1. Detection of Transient or Intermittent Faults

2.2.2. Detection of Hang or Timing Faults in the Software

2.2.3. Distinguishing Hardware and Software Faults

3. Method Distinguishing Between Hardware and Software Faults

- Assumption 1: The target system has already been confirmed to have defects during integration testing. The goal of our approach is not to detect faults but to locate the cause of a fault (or faults) in a system where one has already been detected.

- Assumption 2: Apart from the CPU, the hardware and the OS contain no defects.

- Assumption 3: Applications do not contain excessive input/output (I/O) waits or excessive memory accesses.

- Assumption 4: The faults to be identified cannot be analyzed with software fault detection tools or hardware debuggers such as TRACE32 or probes. Furthermore, the location of the defect changes from run to run, or the defect is not reproducible.

3.1. Data Logging

3.1.1. Kernel Module for System Call Hooking

3.1.2. Software and Hardware Data for Distinguishing Faults

- The data obtained from software include system call results, parameters, values loaded from memory, and the call stack. The values loaded from memory, together with the values of the CPU performance counters, are used to determine whether and where data errors occurred.

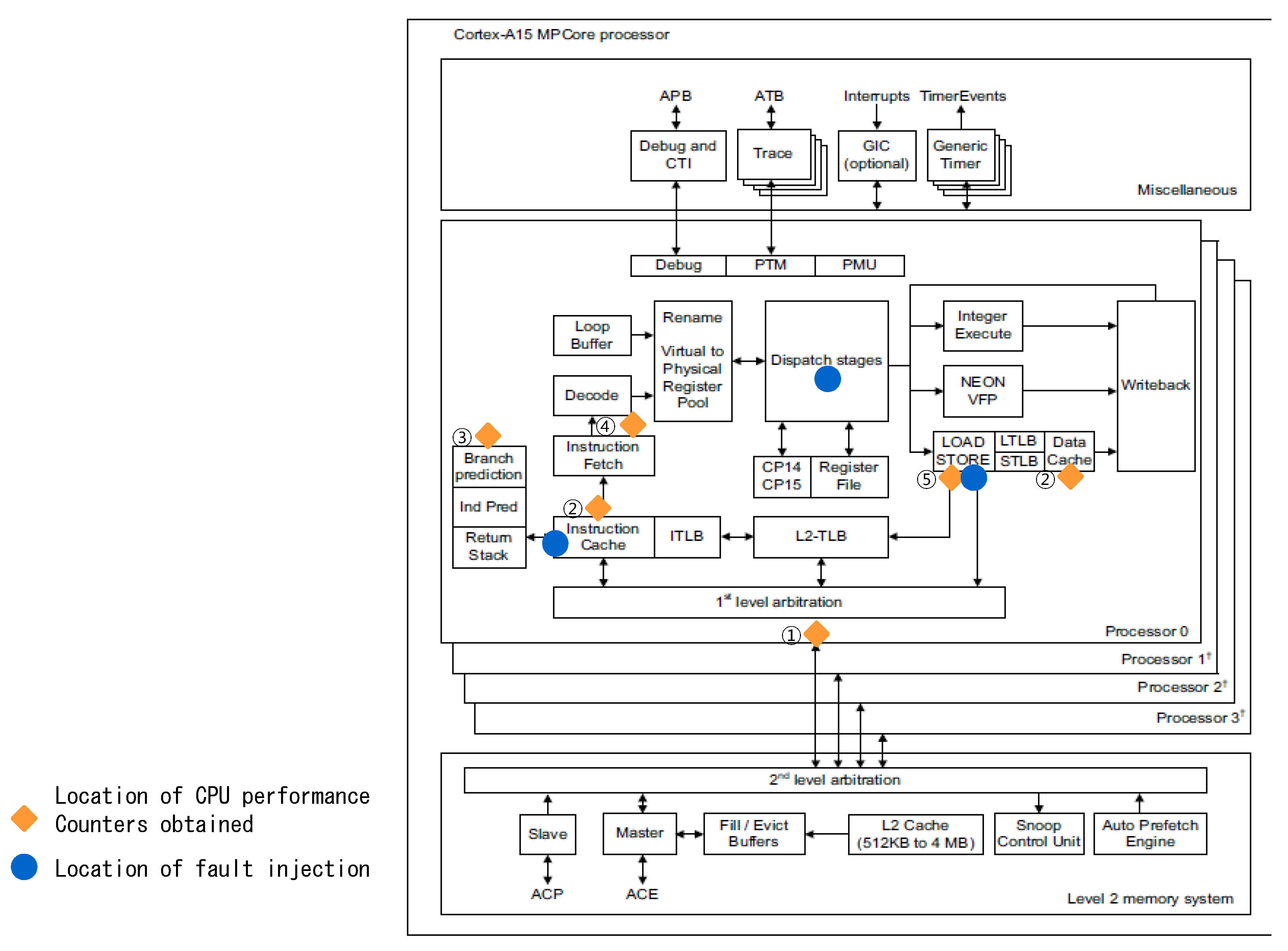

- The data obtained from the CPU hardware include the CPU ID and performance counters. The CPU ID indicates which CPU processed the instructions when a system call was executed. The set of performance counters that can be collected depends on the type of CPU, and many different counters are typically available. Because this study distinguishes transient or intermittent CPU faults that appear to be caused by incorrect software operation, only the performance counters relevant to this situation are collected.

- For the Cortex-A15 CPU, performance counters such as the CPU cycle counter, L1/L2 cache access/refill/miss counters, BPU access/refill/miss counter, stall counter, and error counter were obtained.
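
As an illustration of the two kinds of data listed above, the following minimal sketch shows one way a per-system-call log record could be laid out. The class name `SyscallLogRecord`, its field names, the counter names, and the helper `counter_delta` are assumptions made for this example and are not taken from the Shark agent.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SyscallLogRecord:
    """One log entry captured around a hooked system call (hypothetical layout)."""

    # Software-side data
    syscall_name: str
    params: tuple
    result: int
    loaded_values: List[int]
    call_stack: List[str]
    # Hardware-side data
    cpu_id: int
    counters: Dict[str, int] = field(default_factory=dict)


def counter_delta(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, int]:
    """Return how much each performance counter advanced during the call."""
    return {name: after[name] - before[name] for name in before}


# Made-up counter snapshots taken before and after one system call.
before = {"cpu_cycles": 1_000, "l1d_refill": 12, "stall": 40}
after = {"cpu_cycles": 1_850, "l1d_refill": 15, "stall": 95}

record = SyscallLogRecord(
    syscall_name="sys_read",
    params=(3, 4096),
    result=4096,
    loaded_values=[0x1F, 0x20],
    call_stack=["main", "load_input", "read"],
    cpu_id=5,
    counters=counter_delta(before, after),
)
print(record.counters)
```

Storing counter differences rather than raw values is a design choice of this sketch; it keeps each record tied to the single system call it describes.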

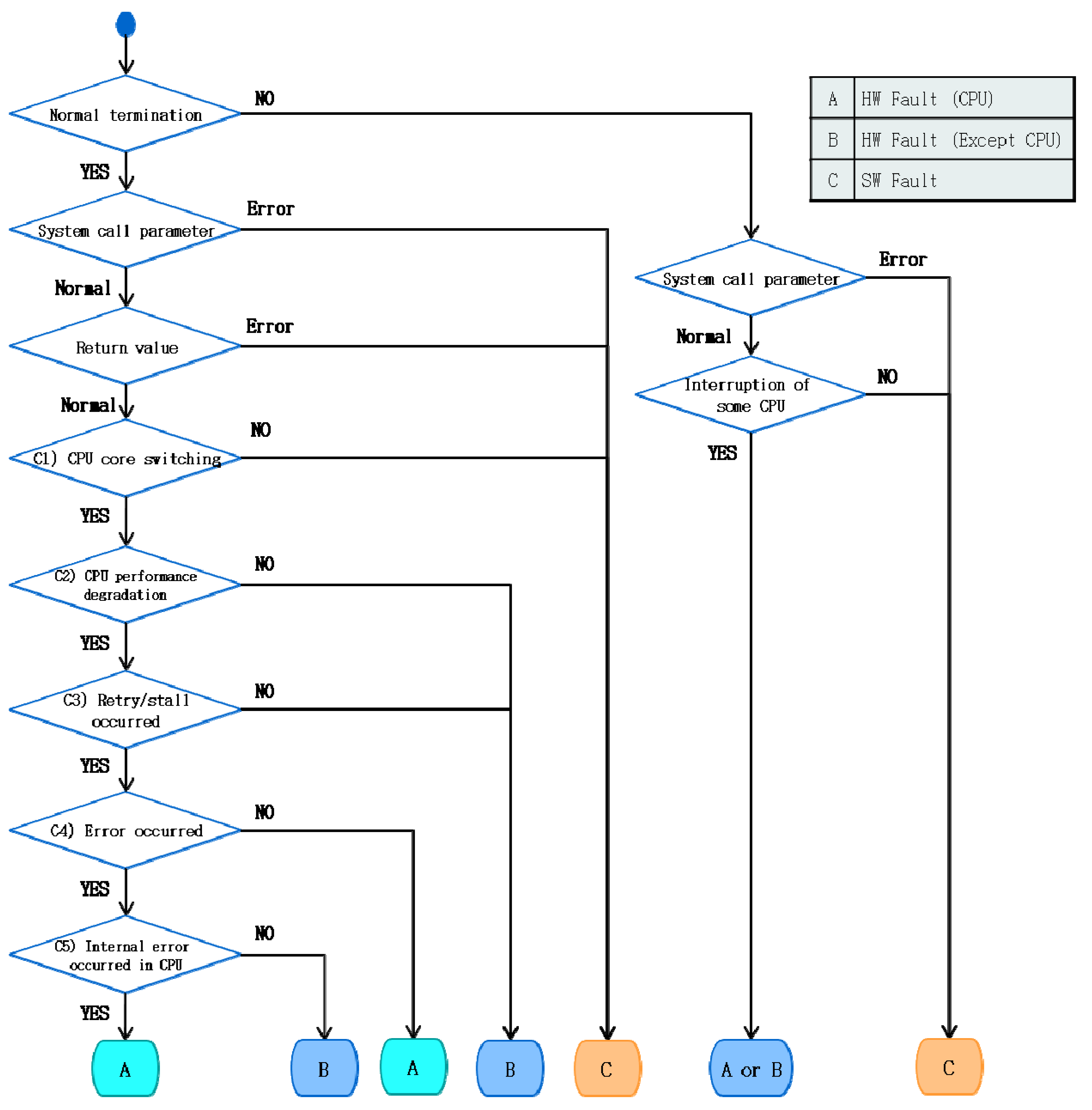

3.2. Fault Classification and Diagnosis of Factors Leading to CPU Faults

3.2.1. Normal Termination of Applications

3.2.2. Abnormal Termination of Applications

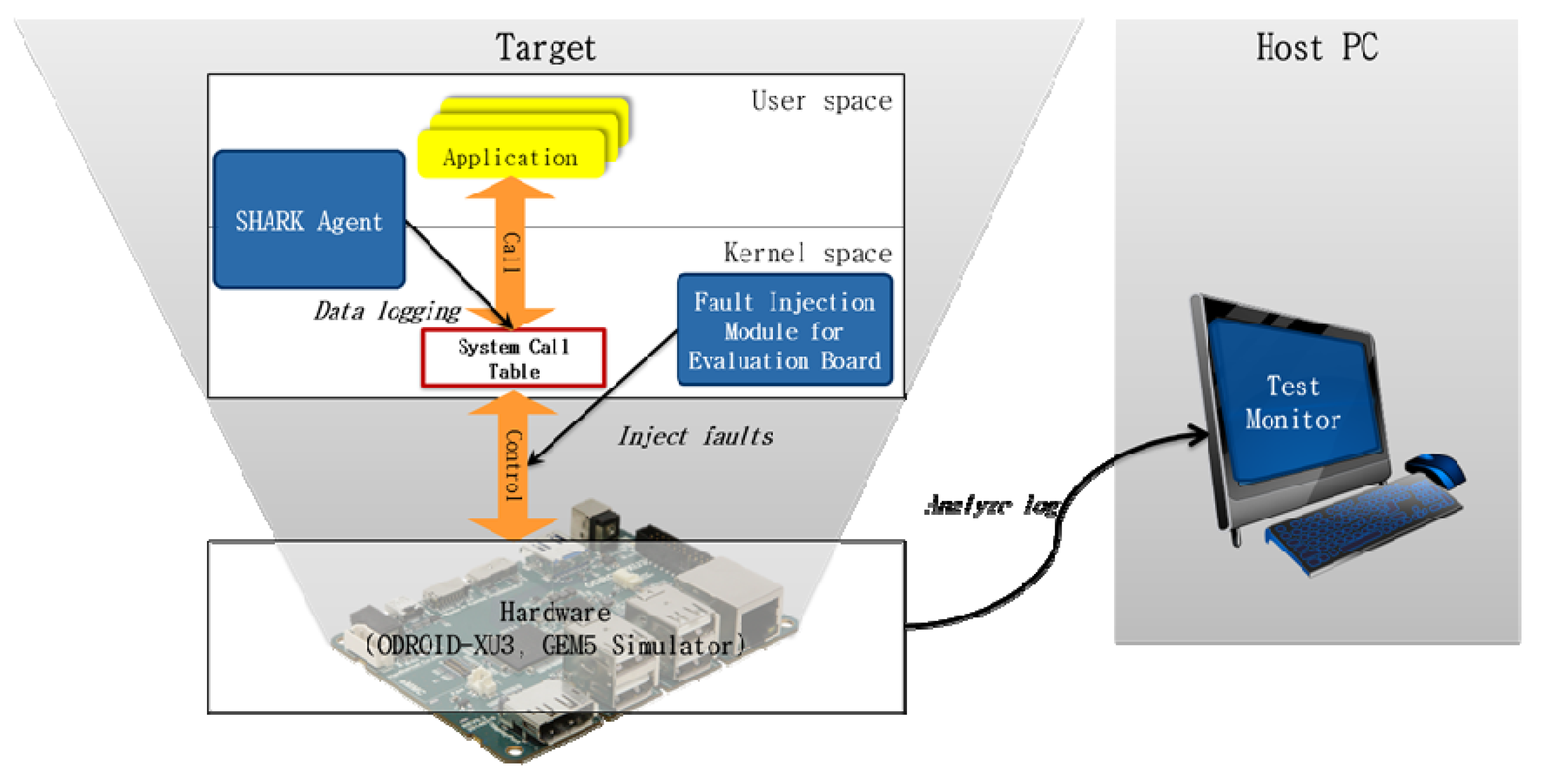

3.3. Automation

- Initializer: This module initializes the other components of the Shark agent (SharkDriver and Logger) and hooks the system call table in the kernel so that the SharkDriver system call is executed instead of the original system call.

- SharkDriver: This kernel library module executes the hooked system call, which is modified to collect the data needed to distinguish faults.

- Logger: This module logs the data obtained through SharkDriver.

- Step 1. When the Shark agent is applied to the target system for testing, the Initializer starts the SharkDriver and Logger modules in the kernel.

- Step 2. SharkDriver examines the system call table and replaces the system calls that access hardware with hooked system calls.

- Step 3. When the application calls a system call targeted for hooking, the corresponding hooked system call is executed and collects the hardware and software data needed to distinguish faults.

- Step 4. The Logger stores the collected data in a log file.

- Step 5. The Shark test monitor identifies the fault by analyzing the log file and displays the fault type and occurrence time together with the CPU ID and process ID.
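
Steps 2 to 4 can be pictured with a small user-space simulation. This is only a sketch: the real agent works on the kernel system call table, whereas here `syscall_table` is an ordinary dictionary, `log` is an in-memory list standing in for the log file, and the names `make_hook` and `install_hooks` are hypothetical. The sketch also assumes that the hooked call still runs the original call after collecting data.

```python
import time
from typing import Callable, Dict, List

# Stand-in for the kernel's system call table (hypothetical, user-space only).
syscall_table: Dict[str, Callable[..., int]] = {
    "sys_read": lambda fd, count: count,
    "sys_write": lambda fd, count: count,
}

log: List[dict] = []  # stand-in for the Logger's log file


def make_hook(name: str, original: Callable[..., int]) -> Callable[..., int]:
    """Wrap a system call so that data are recorded around the original call."""

    def hooked(*args: int) -> int:
        started = time.monotonic()
        result = original(*args)  # still run the original call
        log.append({  # Step 4: the Logger stores the collected data
            "syscall": name,
            "params": args,
            "result": result,
            "elapsed_s": time.monotonic() - started,
        })
        return result

    return hooked


def install_hooks(targets: List[str]) -> None:
    """Step 2: replace the selected table entries with hooked versions."""
    for name in targets:
        syscall_table[name] = make_hook(name, syscall_table[name])


install_hooks(["sys_read"])          # only sys_read is hooked here
syscall_table["sys_read"](3, 128)    # Step 3: the application calls it
syscall_table["sys_write"](1, 64)    # not hooked, so nothing is logged
print([entry["syscall"] for entry in log])
```

Because the application always goes through the table, swapping the table entry is enough to intercept the call without changing application code.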

4. Empirical Study

- RQ1. How accurately can the proposed method detect faults?

- RQ2. How accurately can the proposed method distinguish hardware faults from software faults?

- RQ3. How much overhead does the proposed method generate?

4.1. Experimental Environment

4.2. Experimental Design

4.2.1. Design of the Injected Faults

4.2.2. Hardware and Software Faults and Fault Injection Methods

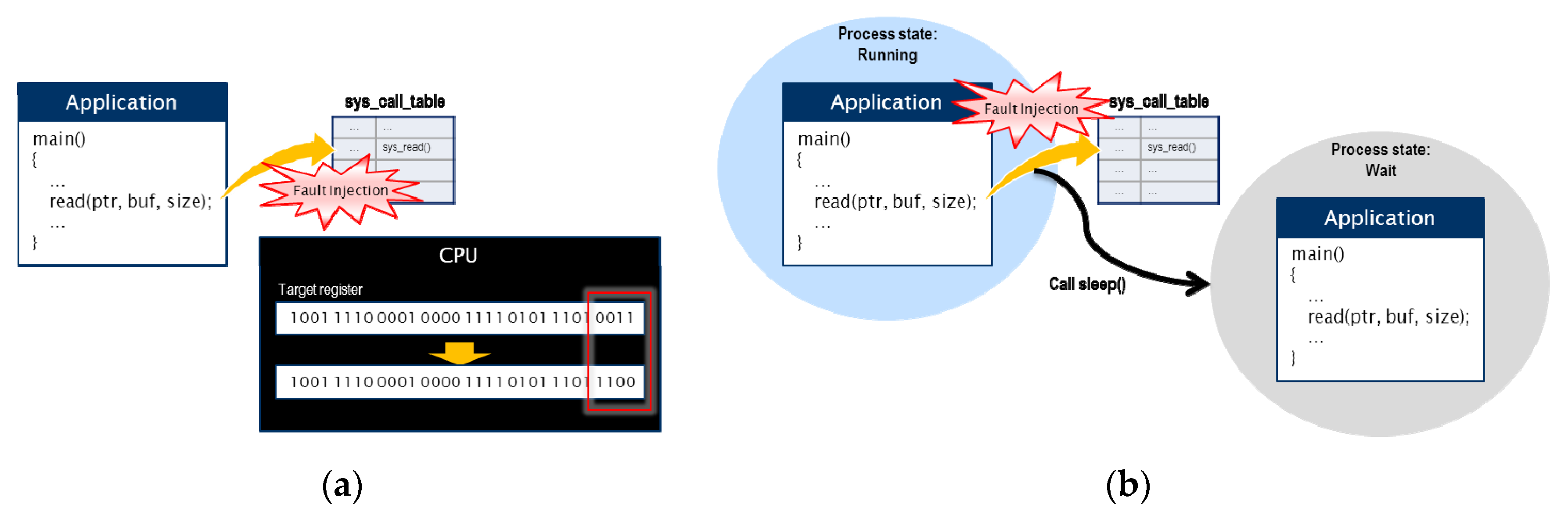

- Hardware CPU faults were injected into the instruction fetch, instruction dispatch, and load/store units by flipping bits in the register values corresponding to these CPU components, as shown in Figure 7a. On the evaluation board, the fault injection module injected a CPU intermittent fault immediately before a system call occurred. In the simulator, the fault was injected at a random time by directly modifying the simulator code.

- Software faults were I/O timing faults, which prevent an I/O request from receiving an appropriate response. Each fault was injected by switching a process or thread from the running state to a wait state before an I/O-related system call was executed, thereby generating a time-out event in the CPU (see Figure 7b). Because an I/O timing fault leads to hanging, it was injected only once, before a system call occurred.
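
The two injection mechanisms described above can be sketched as follows. This is an illustrative model only: it operates on plain Python integers rather than real CPU registers, and the function names `flip_bit`, `inject_cpu_fault`, and `inject_io_timing_fault`, as well as the 32-bit register width, are assumptions made for this example.

```python
import random
import time


def flip_bit(value: int, bit: int, width: int = 32) -> int:
    """Return value with one bit inverted, kept within a width-bit register."""
    if not 0 <= bit < width:
        raise ValueError("bit index outside register width")
    mask = (1 << width) - 1
    return (value ^ (1 << bit)) & mask


def inject_cpu_fault(register_value: int, rng: random.Random, width: int = 32) -> int:
    """Flip one randomly chosen bit, as a stand-in for a CPU intermittent fault."""
    return flip_bit(register_value, rng.randrange(width), width)


def inject_io_timing_fault(delay_s: float) -> None:
    """Block the current thread before an I/O call, mimicking a sleep()-based fault."""
    time.sleep(delay_s)


original = 0b1010
faulty = flip_bit(original, 0)
print(bin(original), bin(faulty))  # prints "0b1010 0b1011"
```

Flipping the same bit twice restores the original value, which makes a bit-flip fault easy to undo in a test harness.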

4.2.3. Variables for Determining Experimental Results

4.2.4. Experimental Measurements

4.2.5. Number of Injected Faults

- On the evaluation board, a hardware CPU fault was injected when the system calls sys_read(), sys_write(), and sys_ioctl() were first called in the applications. Although the applications executed these system calls several times, hardware intermittent faults occur only at sporadic intervals. Faults were therefore injected at the first occurrence of each system call rather than at every system call executed in this experiment.

- In the simulator, a hardware CPU fault was injected by directly modifying the code related to instruction processing, regardless of the system call; thus, the fault needed to be injected only once, at a random time.

- Software faults were injected in the same way on the evaluation board and in the simulator. The first injected fault caused the system to hang; consequently, system calls that occurred after the first fault were not executed. Accordingly, software faults were injected when the system calls were first executed, as was done for the hardware faults.
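
The "inject only at the first call" policy described above can be sketched with a small helper. The class `FirstCallInjector` and its method `before_syscall` are hypothetical names for this example, and `sys_open` is included only as an example of a system call that is not targeted.

```python
from typing import Callable, Set

# System calls targeted for injection in the experiment.
TARGET_SYSCALLS = ("sys_read", "sys_write", "sys_ioctl")


class FirstCallInjector:
    """Fire an injection callback only on the first call of each target syscall."""

    def __init__(self, inject: Callable[[str], None]) -> None:
        self._inject = inject
        self._seen: Set[str] = set()

    def before_syscall(self, name: str) -> bool:
        """Return True if a fault was injected before this call."""
        if name not in TARGET_SYSCALLS or name in self._seen:
            return False
        self._seen.add(name)
        self._inject(name)
        return True


injected = []
injector = FirstCallInjector(injected.append)
for call in ["sys_read", "sys_read", "sys_open", "sys_write", "sys_read"]:
    injector.before_syscall(call)
print(injected)  # prints "['sys_read', 'sys_write']"
```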

4.3. Experimental Results

4.3.1. RQ1: How Accurately Can the Proposed Method Detect Faults?

4.3.2. RQ2: How Accurately Does the Proposed Method Identify Whether the Fault Is a Hardware or Software Fault?

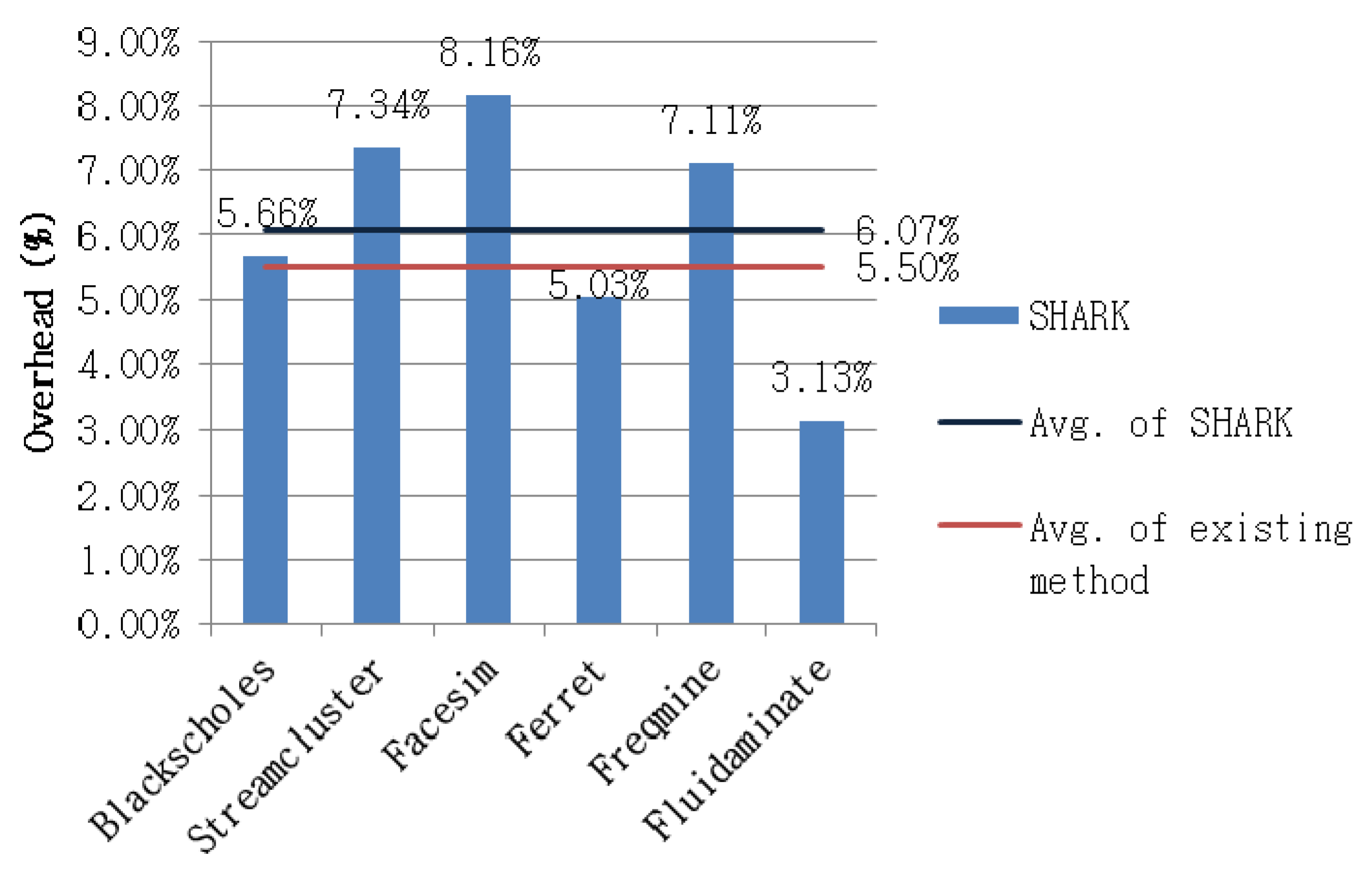

4.3.3. RQ3: How Much Overhead is Generated by the Proposed Method?

4.3.4. Experimental Limitations

5. Conclusions and Further Research

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Fault | Cause of Fault | |
|---|---|---|
| Permanent fault | Damage | External impacts and heat |
| Permanent fault | Fatigue | Extended use |
| Permanent fault | Improper manufacturing | Incorrect hardware logic |
| Transient fault | Temporary environmental condition (e.g., cosmic rays, electromagnetic interference) | |
| Intermittent fault | Unstable hardware | |
| Intermittent fault | Marginal hardware | |

| Fault Type | Cause of Fault |
|---|---|
| Logic Problem | Forgotten case or steps, duplicate logic, extreme conditions neglected, unnecessary truncation, misinterpretation, missing condition test, checking the wrong variable, or iterating the loop incorrectly. |
| Computational Problem | Equation insufficient or incorrect, missing computation, incorrect equation operand, incorrect equation operator, parentheses used incorrectly, precision loss, rounding or truncation fault, mixed modes, or sign conversion fault. |
| Data Problem | Sensor data incorrect or missing, operator data incorrect or missing, embedded data in tables incorrect or missing, external data incorrect or missing, output data incorrect or missing, or input data incorrect or missing. |
| Interface/Timing Problem | Interrupts handled incorrectly, incorrect input/output timing, timing fault causes data loss, subroutine/module mismatch, wrong subroutine called, incorrectly located subroutine called, or inconsistent subroutine arguments. |
| Documentation Problem | Ambiguous statement or incomplete, incorrect, missing, conflicting, redundant, confusing, illogical, non-verifiable, or unachievable items. |
| Data Handling Problem | Data initialized incorrectly, data accessed or stored incorrectly, flag or index set incorrectly, data packed/unpacked incorrectly, incorrectly referenced wrong data variable, data referenced out of bounds, scaling or units of data incorrect, data dimensioned incorrectly, variable type incorrect, incorrectly subscripted variable, or incorrect scope of data. |
| Document Quality Problem | Applicable criteria not met, not traceable, not current, inconsistencies, incomplete, or no identification. |

| Environment | System | CPU Architecture | No. of CPU Cores |
|---|---|---|---|
| Evaluation Board | ODROID-XU3 | ARMv7-A architecture | 8 |
| Simulation | GEM5 Simulator | ARMv8-A architecture | 4 |

| Program | Description | LOC | Size (KB) |
|---|---|---|---|
| Blackscholes | Computational finance application (Pseudo application) | 1751 | 712 |
| Streamcluster | Machine learning application (Kernel process) | 1825 | 1371 |
| Facesim | Computer animation application (Pseudo application) | 37,265 | 19,702 |
| Ferret | Similarity search engine (Pseudo application) | 10,984 | 2728 |
| Freqmine | Data mining application (Pseudo application) | 2027 | 1469 |
| Fluidanimate | Computer animation application (Pseudo application) | 2831 | 1363 |

| Fault (Major Category) | Fault (Minor Category) | Cause of Fault | Time Fault Was Generated | Hanging Occurrence (O: yes, X: no) |
|---|---|---|---|---|
| Interface/Timing Problem | Timing | Input/output timing incorrect | Runtime | O |
| Interface/Timing Problem | Timing | Timing fault causes data loss | Runtime | O |
| Interface/Timing Problem | Interface | Interrupts handled incorrectly | Runtime | X |
| Interface/Timing Problem | Interface | Subroutine/module mismatch | Compile time | X |
| Interface/Timing Problem | Interface | Wrong subroutine called | Runtime | X |
| Interface/Timing Problem | Interface | Incorrectly located subroutine called | Runtime | X |
| Interface/Timing Problem | Interface | Inconsistent subroutine arguments | Compile time | X |

| Fault Type | Target | System | Injection Period | Fault Injection Method |
|---|---|---|---|---|
| Hardware fault (CPU fault) | Instruction Fetch | ODROID-XU3 | Just before executing a system call | The value loaded from the L1 instruction cache register is bit-flipped, and the register is overwritten with the flipped value |
| Hardware fault (CPU fault) | Instruction Fetch | GEM5 | Random | Code modification related to instruction fetch |
| Hardware fault (CPU fault) | Instruction Dispatch | ODROID-XU3 | Just before executing a system call | The value loaded from the instruction dispatch register is bit-flipped, and the register is overwritten with the flipped value |
| Hardware fault (CPU fault) | Instruction Dispatch | GEM5 | Random | Code modification related to instruction dispatch |
| Hardware fault (CPU fault) | Load/store Unit | ODROID-XU3 | Just before executing a system call | The value loaded from the L1 data cache register is bit-flipped, and the register is overwritten with the flipped value |
| Hardware fault (CPU fault) | Load/store Unit | GEM5 | Random | Code modification related to load/store unit |
| Software fault | I/O Timing | All | Just before executing a system call | The sleep() function is called to block the currently executing thread |

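| Application | Fault | Fault Target | System Call | No. of Injected Faults | No. of Detected Faults | No. of Classified Faults |
|---|---|---|---|---|---|---|
| Blackscholes | HW | Instruction cache | Write | 50 | 38 | 28 |
| Blackscholes | HW | Instruction cache | Ioctl | 50 | 49 | 49 |
| Blackscholes | HW | Instruction cache | Read | 50 | 42 | 42 |
| Blackscholes | HW | Dispatch stages | Write | 50 | 50 | 50 |
| Blackscholes | HW | Dispatch stages | Ioctl | 50 | 50 | 50 |
| Blackscholes | HW | Dispatch stages | Read | 50 | 49 | 49 |
| Blackscholes | HW | Load/store queue | Write | 50 | 50 | 50 |
| Blackscholes | HW | Load/store queue | Ioctl | 50 | 50 | 50 |
| Blackscholes | HW | Load/store queue | Read | 50 | 50 | 50 |
| Blackscholes | SW | I/O timing | Write | 10 | 10 | 10 |
| Blackscholes | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Blackscholes | SW | I/O timing | Read | 10 | 10 | 10 |
| Streamcluster | HW | Instruction cache | Write | 50 | 50 | 50 |
| Streamcluster | HW | Instruction cache | Ioctl | - | - | - |
| Streamcluster | HW | Instruction cache | Read | 50 | 47 | 47 |
| Streamcluster | HW | Dispatch stages | Write | 50 | 50 | 50 |
| Streamcluster | HW | Dispatch stages | Ioctl | - | - | - |
| Streamcluster | HW | Dispatch stages | Read | 50 | 50 | 50 |
| Streamcluster | HW | Load/store queue | Write | 50 | 50 | 50 |
| Streamcluster | HW | Load/store queue | Ioctl | - | - | - |
| Streamcluster | HW | Load/store queue | Read | 50 | 50 | 50 |
| Streamcluster | SW | I/O timing | Write | 10 | 10 | 10 |
| Streamcluster | SW | I/O timing | Ioctl | - | - | - |
| Streamcluster | SW | I/O timing | Read | 10 | 10 | 10 |
| Facesim | HW | Instruction cache | Write | 50 | 50 | 50 |
| Facesim | HW | Instruction cache | Ioctl | 50 | 50 | 50 |
| Facesim | HW | Instruction cache | Read | 50 | 50 | 50 |
| Facesim | HW | Dispatch stages | Write | 50 | 50 | 50 |
| Facesim | HW | Dispatch stages | Ioctl | 50 | 50 | 50 |
| Facesim | HW | Dispatch stages | Read | 50 | 50 | 50 |
| Facesim | HW | Load/store queue | Write | 50 | 50 | 50 |
| Facesim | HW | Load/store queue | Ioctl | 50 | 50 | 50 |
| Facesim | HW | Load/store queue | Read | 50 | 50 | 50 |
| Facesim | SW | I/O timing | Write | 10 | 10 | 10 |
| Facesim | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Facesim | SW | I/O timing | Read | 10 | 10 | 10 |
| Ferret | HW | Instruction cache | Write | 50 | 50 | 50 |
| Ferret | HW | Instruction cache | Ioctl | 50 | 50 | 42 |
| Ferret | HW | Instruction cache | Read | 50 | 50 | 46 |
| Ferret | HW | Dispatch stages | Write | 50 | 50 | 50 |
| Ferret | HW | Dispatch stages | Ioctl | 50 | 50 | 50 |
| Ferret | HW | Dispatch stages | Read | 50 | 50 | 46 |
| Ferret | HW | Load/store queue | Write | 50 | 50 | 50 |
| Ferret | HW | Load/store queue | Ioctl | 50 | 50 | 50 |
| Ferret | HW | Load/store queue | Read | 50 | 50 | 50 |
| Ferret | SW | I/O timing | Write | 10 | 10 | 10 |
| Ferret | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Ferret | SW | I/O timing | Read | 10 | 10 | 10 |
| Freqmine | HW | Instruction cache | Write | 50 | 50 | 50 |
| Freqmine | HW | Instruction cache | Ioctl | 50 | 50 | 50 |
| Freqmine | HW | Instruction cache | Read | 50 | 50 | 50 |
| Freqmine | HW | Dispatch stages | Write | 50 | 50 | 50 |
| Freqmine | HW | Dispatch stages | Ioctl | 50 | 50 | 50 |
| Freqmine | HW | Dispatch stages | Read | 50 | 50 | 49 |
| Freqmine | HW | Load/store queue | Write | 50 | 50 | 50 |
| Freqmine | HW | Load/store queue | Ioctl | 50 | 50 | 50 |
| Freqmine | HW | Load/store queue | Read | 50 | 50 | 50 |
| Freqmine | SW | I/O timing | Write | 10 | 10 | 10 |
| Freqmine | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Freqmine | SW | I/O timing | Read | 10 | 10 | 10 |
| Fluidanimate | HW | Instruction cache | Write | 50 | 49 | 36 |
| Fluidanimate | HW | Instruction cache | Ioctl | 50 | 50 | 33 |
| Fluidanimate | HW | Instruction cache | Read | 50 | 50 | 32 |
| Fluidanimate | HW | Dispatch stages | Write | 50 | 50 | 32 |
| Fluidanimate | HW | Dispatch stages | Ioctl | 50 | 50 | 32 |
| Fluidanimate | HW | Dispatch stages | Read | 50 | 50 | 31 |
| Fluidanimate | HW | Load/store queue | Write | 50 | 50 | 50 |
| Fluidanimate | HW | Load/store queue | Ioctl | 50 | 50 | 50 |
| Fluidanimate | HW | Load/store queue | Read | 50 | 50 | 50 |
| Fluidanimate | SW | I/O timing | Write | 10 | 10 | 10 |
| Fluidanimate | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Fluidanimate | SW | I/O timing | Read | 10 | 10 | 10 |
| Sum | Hardware fault | | | 2550 | 2524 | 2394 |
| Sum | Software fault | | | 170 | 170 | 170 |
| Sum | Total | | | 2720 | 2694 | 2564 |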

| Application | Fault | Fault Target | System Call | No. of Injected Faults | No. of Detected Faults | No. of Classified Faults |
|---|---|---|---|---|---|---|
| Blackscholes | HW | Instruction cache | - | 50 | 44 | 43 |
| Blackscholes | HW | Dispatch stages | - | 50 | 50 | 50 |
| Blackscholes | HW | Load/store queue | - | 50 | 50 | 50 |
| Blackscholes | SW | I/O timing | Write | 10 | 10 | 10 |
| Blackscholes | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Blackscholes | SW | I/O timing | Read | 10 | 10 | 10 |
| Streamcluster | HW | Instruction cache | - | 50 | 50 | 50 |
| Streamcluster | HW | Dispatch stages | - | 50 | 50 | 50 |
| Streamcluster | HW | Load/store queue | - | 50 | 50 | 50 |
| Streamcluster | SW | I/O timing | Write | 10 | 10 | 10 |
| Streamcluster | SW | I/O timing | Ioctl | - | - | - |
| Streamcluster | SW | I/O timing | Read | 10 | 10 | 10 |
| Facesim | HW | Instruction cache | - | 50 | 50 | 50 |
| Facesim | HW | Dispatch stages | - | 50 | 50 | 50 |
| Facesim | HW | Load/store queue | - | 50 | 50 | 50 |
| Facesim | SW | I/O timing | Write | 10 | 10 | 10 |
| Facesim | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Facesim | SW | I/O timing | Read | 10 | 10 | 10 |
| Ferret | HW | Instruction cache | - | 50 | 50 | 50 |
| Ferret | HW | Dispatch stages | - | 50 | 50 | 50 |
| Ferret | HW | Load/store queue | - | 50 | 50 | 50 |
| Ferret | SW | I/O timing | Write | 10 | 10 | 10 |
| Ferret | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Ferret | SW | I/O timing | Read | 10 | 10 | 10 |
| Freqmine | HW | Instruction cache | - | 50 | 50 | 50 |
| Freqmine | HW | Dispatch stages | - | 50 | 50 | 50 |
| Freqmine | HW | Load/store queue | - | 50 | 50 | 50 |
| Freqmine | SW | I/O timing | Write | 10 | 10 | 10 |
| Freqmine | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Freqmine | SW | I/O timing | Read | 10 | 10 | 10 |
| Fluidanimate | HW | Instruction cache | - | 50 | 50 | 41 |
| Fluidanimate | HW | Dispatch stages | - | 50 | 50 | 32 |
| Fluidanimate | HW | Load/store queue | - | 50 | 50 | 49 |
| Fluidanimate | SW | I/O timing | Write | 10 | 10 | 10 |
| Fluidanimate | SW | I/O timing | Ioctl | 10 | 10 | 10 |
| Fluidanimate | SW | I/O timing | Read | 10 | 10 | 10 |
| Sum | Hardware fault | | | 900 | 894 | 865 |
| Sum | Software fault | | | 170 | 170 | 170 |
| Sum | Total | | | 1070 | 1064 | 1035 |
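
The "Sum" rows of the two results tables can be turned into percentages with a few lines of code. The sketch below rests on two assumptions that the tables themselves do not state: that the first results table reports the evaluation board and the second the simulator (inferred from the per-system-call hardware rows), and that the classification rate is taken relative to the detected faults rather than the injected faults. The function name `rate` is hypothetical.

```python
def rate(part: int, whole: int) -> float:
    """Return part / whole as a percentage rounded to two decimals."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100.0 * part / whole, 2)


# (injected, detected, classified) totals copied from the "Sum" rows.
totals = {
    "evaluation board": {"hardware": (2550, 2524, 2394), "software": (170, 170, 170)},
    "simulator": {"hardware": (900, 894, 865), "software": (170, 170, 170)},
}

for env, faults in totals.items():
    for kind, (injected, detected, classified) in faults.items():
        # Columns: environment, fault kind, detection %, classification %
        print(env, kind, rate(detected, injected), rate(classified, detected))
```

If the classification rate is instead defined relative to the injected faults, only the second argument of the last `rate` call changes.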


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Park, J.; Choi, B. Automatic Method for Distinguishing Hardware and Software Faults Based on Software Execution Data and Hardware Performance Counters. Electronics 2020, 9, 1815. https://doi.org/10.3390/electronics9111815
