Sw/Hw Partitioning and Scheduling on Region-Based Dynamic Partial Reconfigurable System-on-Chip

Abstract

1. Introduction

- The formal definition of the partitioning and scheduling problem on a DPR SoC that integrates a CPU and a 2D DPR FPGA.

- An accurate MILP formulation for the partitioning and scheduling problem.

- A multi-step hybrid method that integrates the priority-based graph partitioning method.

- Extensive experiments with a set of real-life applications.

2. Related Work

3. Problem Description

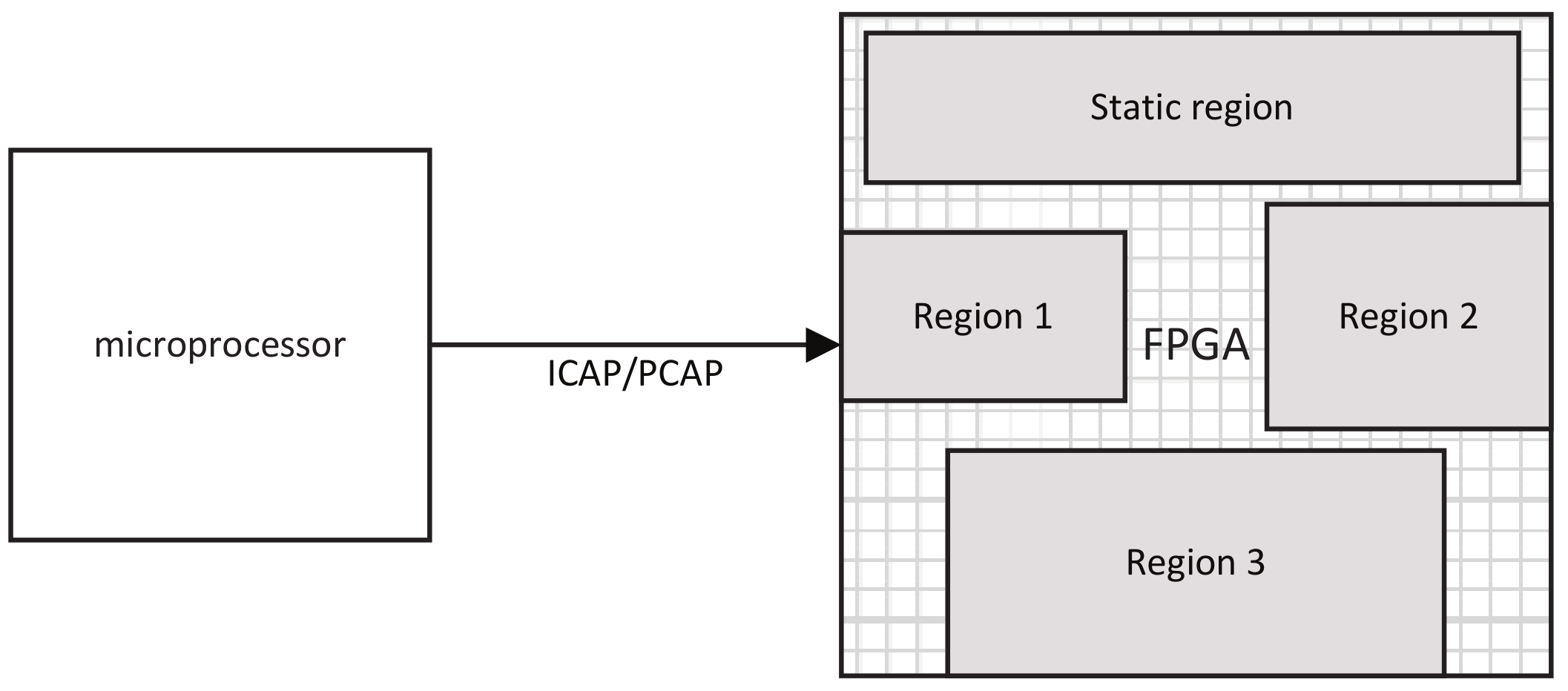

3.1. Platform Model

3.2. Application Model

3.3. The Sw/Hw Partitioning and Scheduling Problem

- The resource type and number on each reconfiguration region.

- The mapping of each task (the task may be mapped to the CPU or a reconfiguration region).

- The start execution time of each task.

- The start reconfiguration time of the task that is mapped to the FPGA.

- The execution order and reconfiguration order of all tasks.
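
The five outputs listed above can be collected in one small data structure. The following is a minimal sketch, not the representation used in the paper: the class names (`Region`, `TaskDecision`, `Solution`), the `CPU` label, and the use of integer time units are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

CPU = "cpu"  # hypothetical label for the software processor


@dataclass
class Region:
    """One reconfiguration region and the resources assigned to it."""
    name: str
    resources: Dict[str, int]  # resource type -> count, e.g. {"CLB": 3}


@dataclass
class TaskDecision:
    """Decisions taken for a single task."""
    mapping: str                        # CPU or the name of a region
    exec_start: int                     # start execution time
    reconf_start: Optional[int] = None  # set only for FPGA-mapped tasks


@dataclass
class Solution:
    regions: List[Region]
    tasks: Dict[str, TaskDecision] = field(default_factory=dict)

    def execution_order(self) -> List[str]:
        """Tasks sorted by their start execution time."""
        return sorted(self.tasks, key=lambda t: self.tasks[t].exec_start)

    def reconfiguration_order(self) -> List[str]:
        """FPGA-mapped tasks sorted by their start reconfiguration time."""
        hw = [t for t, d in self.tasks.items() if d.reconf_start is not None]
        return sorted(hw, key=lambda t: self.tasks[t].reconf_start)
```

In this sketch the two orders are derived from the start times rather than stored separately, so they cannot become inconsistent with the schedule.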

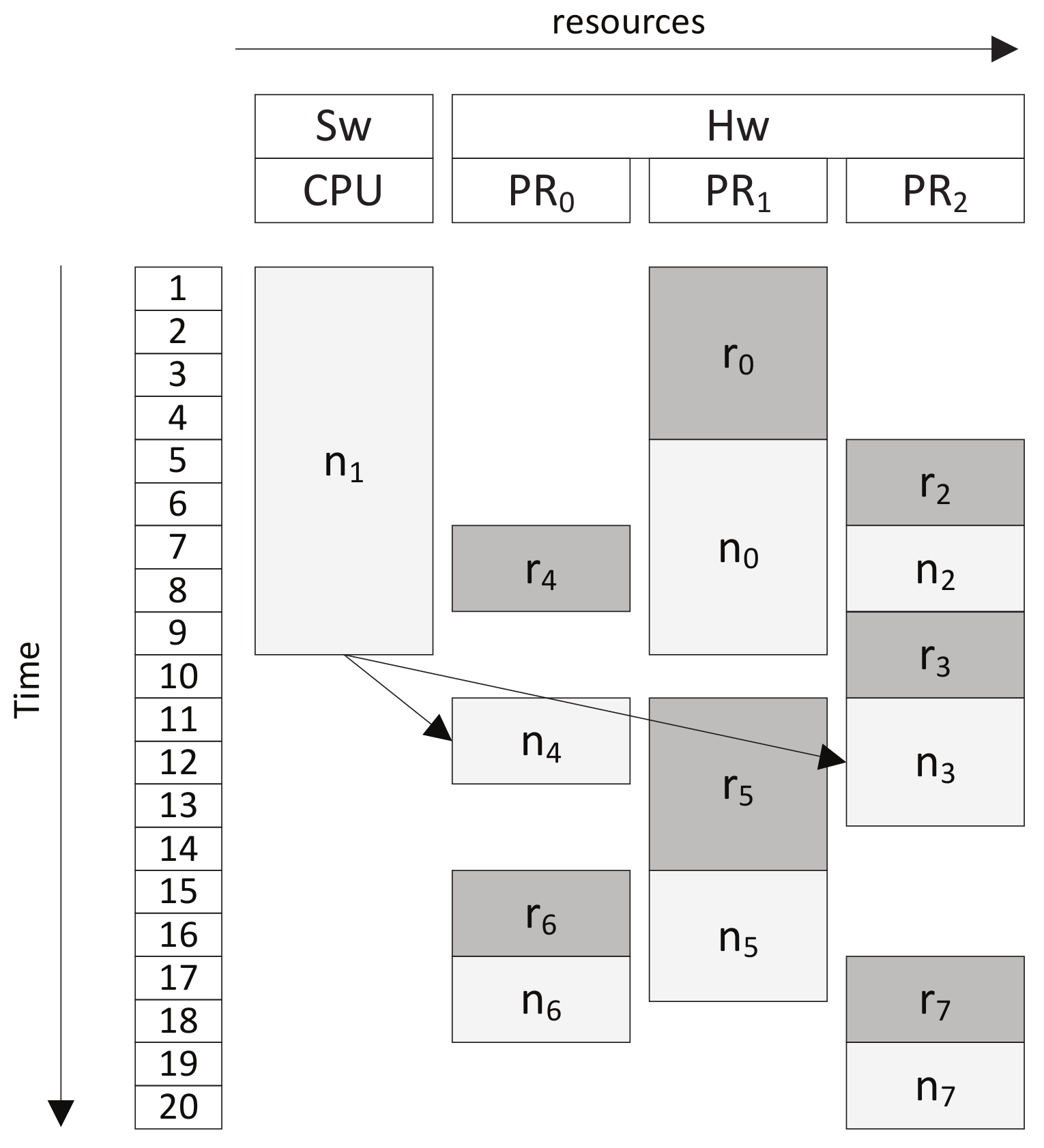

- The FPGA provides eight resource units, whereas the resource requirements of all tasks in the DAG sum to 17. The DPR ability of the hardware therefore enables the application to run on a resource-constrained platform. With the FPGA partitioned into three dynamic partial reconfiguration regions, the application shares the FPGA resources in both time and space.

- Reconfigurations of different tasks are carried out in series. As shown in the figure, the reconfiguration port is busy during the time interval , and no pairs of reconfigurations overlap in time.

- Reconfiguration and execution of two tasks can happen in parallel (reconfiguration pre-fetching), e.g., on and on overlap during . When task is executing on , the reconfiguration of task is carried out on , thus hiding its reconfiguration delay.

- In each region, reconfiguration and execution happen in turn, e.g., in region , the reconfiguration and execution sequence is .

- The Sw/Hw partitioning and scheduling technique speeds up the application considerably. Executing the application on the CPU alone takes 84 time units, whereas the Sw/Hw partitioned schedule has a length of only 20 time units, a speedup of 4.2×.
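
The structural properties in the list above (serial reconfigurations, reconfiguration pre-fetching, and alternation of reconfiguration and execution inside a region) can be checked mechanically on a candidate schedule. The sketch below is only an illustration under stated assumptions: intervals are half-open `(start, end)` pairs in integer time units, the function names are hypothetical, and the two-region schedule at the bottom is invented for the example and is not the schedule shown in the figure.

```python
from itertools import combinations
from typing import List, Tuple

Interval = Tuple[int, int]  # half-open interval [start, end)


def overlaps(a: Interval, b: Interval) -> bool:
    """True if the two half-open intervals share at least one time unit."""
    return a[0] < b[1] and b[0] < a[1]


def port_is_serial(reconfs: List[Interval]) -> bool:
    """The single reconfiguration port allows no two reconfigurations to overlap."""
    return not any(overlaps(a, b) for a, b in combinations(reconfs, 2))


def region_alternates(slots: List[Tuple[str, Interval]]) -> bool:
    """Inside one region, slots must follow R, E, R, E, ... without overlap.

    Each slot is ("R", interval) for a reconfiguration or ("E", interval)
    for an execution.
    """
    ordered = sorted(slots, key=lambda s: s[1][0])
    kinds = [kind for kind, _ in ordered]
    if kinds != ["R", "E"] * (len(ordered) // 2):
        return False
    return all(prev[1][1] <= nxt[1][0] for prev, nxt in zip(ordered, ordered[1:]))


# Hypothetical two-region schedule (not taken from the paper).
r1 = [("R", (0, 2)), ("E", (2, 5))]
r2 = [("R", (2, 4)), ("E", (5, 7))]
all_reconfs = [iv for region in (r1, r2) for kind, iv in region if kind == "R"]

serial = port_is_serial(all_reconfs)
alternating = region_alternates(r1) and region_alternates(r2)
# Pre-fetching: the reconfiguration on r2 runs while the task on r1 executes.
prefetch = overlaps(r2[0][1], r1[1][1])
```

Checking every pair of reconfigurations is quadratic in their number, which is acceptable for task graphs of the size considered here; a sort-based sweep would be the natural replacement for larger inputs.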

4. The Proposed MILP Formulation

4.1. Variables

4.2. Constraints

4.3. Model Complexity

4.4. Comment

5. Multi-Step Hybrid Algorithm

5.1. Algorithm Structure

Algorithm 1 MSHA (G, P)

5.2. Priority-Based Graph Partitioning

Algorithm 2 PBGP (G, m)

6. Experiments and Results

6.1. Target Platform Configuration

6.2. Benchmark

6.3. Experimental Results

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Task Name | Sw Time (Cycle) | Hw Time (Cycle) | CLB Num |
|---|---|---|---|
| | 23 | 5 | 3 |
| | 9 | 2 | 3 |
| | 11 | 2 | 1 |
| | 14 | 3 | 1 |
| | 10 | 2 | 2 |
| | 7 | 3 | 4 |
| | 6 | 2 | 2 |
| | 4 | 2 | 1 |
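
As a quick consistency check, the figures quoted for the example in Section 3.3 can be recomputed from the three numeric values in each row of the table above. This is a small sketch: the FPGA capacity of eight and the schedule length of 20 are taken from the text of Section 3.3, and the variable names are chosen here for illustration only.

```python
# (Sw time, Hw time, CLB count) for each task, copied row by row from the table.
rows = [
    (23, 5, 3),
    (9, 2, 3),
    (11, 2, 1),
    (14, 3, 1),
    (10, 2, 2),
    (7, 3, 4),
    (6, 2, 2),
    (4, 2, 1),
]

total_sw_time = sum(sw for sw, _, _ in rows)  # all tasks run back to back on the CPU
total_clb = sum(clb for _, _, clb in rows)    # aggregate resource requirement

fpga_clb = 8          # FPGA capacity stated in Section 3.3
schedule_length = 20  # Sw/Hw schedule length stated in Section 3.3

needs_dpr = total_clb > fpga_clb           # tasks cannot all be resident at once
speedup = total_sw_time / schedule_length  # CPU-only time over Sw/Hw schedule length

print(total_sw_time, total_clb, needs_dpr, speedup)
```

The sums come out to 84 time units and 17 CLBs, matching the values quoted in the text, and the resulting speedup is 4.2.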

| Task Name | sbl | stl | sub-DAG |
|---|---|---|---|
| | 13 | 0 | |
| | 10 | 0 | |
| | 8 | 0 | |
| | 8 | 5 | |
| | 6 | 2 | |
| | 5 | 8 | |
| | 4 | 8 | |
| | 2 | 11 | |
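
The surviving text does not define sbl and stl. Assuming they denote the static bottom level and static top level used in standard list scheduling (an assumption, not something this text confirms), they can be computed as in the sketch below. The function name, the four-task DAG, and its weights are hypothetical, and communication costs are ignored.

```python
from functools import lru_cache
from typing import Dict, List, Tuple


def static_levels(
    weight: Dict[str, int], succ: Dict[str, List[str]]
) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Return (sbl, stl) for every task of a DAG.

    sbl(t): length of the longest path from t to an exit task, including t.
    stl(t): length of the longest path from an entry task to t, excluding t.
    """
    pred: Dict[str, List[str]] = {t: [] for t in weight}
    for u, vs in succ.items():
        for v in vs:
            pred[v].append(u)

    @lru_cache(maxsize=None)
    def sbl(t: str) -> int:
        return weight[t] + max((sbl(s) for s in succ.get(t, [])), default=0)

    @lru_cache(maxsize=None)
    def stl(t: str) -> int:
        return max((stl(p) + weight[p] for p in pred[t]), default=0)

    return {t: sbl(t) for t in weight}, {t: stl(t) for t in weight}


# Hypothetical diamond-shaped DAG: a -> b, a -> c, b -> d, c -> d.
weight = {"a": 2, "b": 3, "c": 1, "d": 2}
succ = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": []}
sbl, stl = static_levels(weight, succ)
```

Under these definitions, sbl plus stl equals the critical-path length for every task on the critical path (here 7 for `a`, `b`, and `d`). Several rows of the table above show the same pattern, with the two values summing to 13.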

| App Name | Task Num | Edge Num |
|---|---|---|
| Ferret | 20 | 19 |
| FFT | 16 | 20 |
| Gauss elimination | 14 | 19 |
| Gauss–Jordan | 15 | 20 |
| JPEG encoder | 8 | 9 |
| Laplace equation | 16 | 24 |
| LU decomposition | 14 | 19 |
| Parallel Gauss elimination | 12 | 17 |
| Parallel MVA | 16 | 24 |
| Parallel tiled QR factorization | 14 | 21 |
| Quadratic equation solver | 15 | 15 |
| Cyber shake | 20 | 32 |
| Epigenomics | 20 | 22 |
| LIGO | 22 | 25 |
| Montage | 20 | 30 |
| SIPHT | 31 | 34 |
| Molecular dynamics | 41 | 71 |
| Channel equalizer | 14 | 21 |
| Modem | 50 | 86 |
| MP3 decoder block parallelism | 27 | 46 |
| TDSCDMA | 16 | 20 |
| WLAN 802.11a receiver | 24 | 28 |

| App Name | MILP SL | MILP Time | Hybrid SL | Hybrid Time | cMILP SL | cMILP Time |
|---|---|---|---|---|---|---|
| Ferret | 63 | timeout | 64 | 4.180 | 70 | 3810.600 |
| FFT | 42 | timeout | 47 | 4.046 | 52 | 41.320 |
| Gauss elimination | 51 | 2191.970 | 53 | 0.634 | 72 | 2.100 |
| Gauss–Jordan | 61 | timeout | 76 | 3.774 | 75 | 102.150 |
| JPEG encoder | 40 | 0.190 | 40 | 0.245 | 40 | 0.030 |
| Laplace equation | 88 | timeout | 90 | 1.643 | 103 | 6.860 |
| LU decomposition | 66 | 6292.630 | 73 | 3.104 | 83 | 3.030 |
| Parallel Gauss elimination | 45 | 18.960 | 47 | 0.976 | 61 | 0.730 |
| Parallel MVA | 60 | timeout | 64 | 1.585 | 78 | 3.810 |
| Parallel tiled QR factorization | 53 | 33.290 | 58 | 0.570 | 65 | 1.410 |
| Quadratic equation solver | 44 | timeout | 48 | 4.233 | 54 | 12.510 |
| Cyber shake | 82 | timeout | 75 | 31.997 | 78 | timeout |
| Epigenomics | 88 | timeout | 78 | 4.196 | 84 | timeout |
| LIGO | 92 | timeout | 93 | 9.477 | 90 | timeout |
| Montage | 96 | timeout | 96 | 6.536 | 110 | 1455.890 |
| SIPHT | 276 | timeout | 106 | 1821.770 | 154 | timeout |
| Molecular dynamics | 469 | timeout | 164 | 87.734 | 260 | timeout |
| Channel equalizer | 63 | timeout | 63 | 2.264 | 72 | 0.470 |
| Modem | - | timeout | 220 | 300.149 | 612 | timeout |
| MP3 decoder block parallelism | 140 | timeout | 143 | 136.323 | 136 | timeout |
| TDSCDMA | 74 | timeout | 78 | 1.282 | 101 | 3.190 |
| WLAN 802.11a receiver | 149 | timeout | 138 | 2.643 | 160 | timeout |

| App Name | MILP SL | MILP Time | Hybrid SL | Hybrid Time | cMILP SL | cMILP Time |
|---|---|---|---|---|---|---|
| Ferret | 62 | timeout | 62 | 61.079 | 70 | 4812.580 |
| FFT | 43 | timeout | 44 | 194.704 | 52 | 42.540 |
| Gauss elimination | 51 | 36.650 | 51 | 2.153 | 73 | 0.880 |
| Gauss–Jordan | 61 | timeout | 68 | 286.532 | 75 | 104.960 |
| JPEG encoder | 43 | 0.260 | 43 | 0.287 | 45 | 0.060 |
| Laplace equation | 88 | timeout | 88 | 4.743 | 103 | 7.020 |
| LU decomposition | 66 | 2622.600 | 70 | 16.004 | 83 | 3.020 |
| Parallel Gauss elimination | 45 | 19.250 | 51 | 6.116 | 61 | 0.730 |
| Parallel MVA | 60 | timeout | 63 | 102.353 | 78 | 8.750 |
| Parallel tiled QR factorization | 53 | 75.110 | 53 | 4.841 | 65 | 3.220 |
| Quadratic equation solver | 44 | timeout | 45 | 162.174 | 54 | 30.830 |
| Cyber shake | 94 | timeout | 73 | 190.580 | 86 | timeout |
| Epigenomics | 88 | timeout | 82 | 132.572 | 84 | timeout |
| LIGO | 92 | timeout | 89 | 104.929 | 89 | timeout |
| Montage | 100 | timeout | 95 | 29.636 | 110 | 1348.030 |
| SIPHT | 247 | timeout | 106 | 1817.340 | 154 | timeout |
| Molecular dynamics | 469 | timeout | 165 | 2408.910 | 260 | timeout |
| Channel equalizer | 63 | 5359.830 | 64 | 7.680 | 72 | 0.230 |
| Modem | - | timeout | 233 | 1752.740 | 602 | timeout |
| MP3 decoder block parallelism | 140 | timeout | 131 | 636.780 | 139 | timeout |
| TDSCDMA | 74 | timeout | 78 | 9.796 | 101 | 3.270 |
| WLAN 802.11a receiver | 149 | timeout | 144 | 27.217 | 160 | timeout |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tang, Q.; Guo, B.; Wang, Z. Sw/Hw Partitioning and Scheduling on Region-Based Dynamic Partial Reconfigurable System-on-Chip. Electronics 2020, 9, 1362. https://doi.org/10.3390/electronics9091362
