Compact Convolutional Neural Network Accelerator for IoT Endpoint SoC

Abstract

1. Introduction

2. Function Module Design

2.1. Notation

- K: width of convolution kernels

- N: width of input feature maps

- Ln: maximum number of connections between convolution kernels and input feature map

- psum: the partial sum of convolution

- Src A: read channel for the source matrix

- Src B: read channel for the convolution kernel matrix

- Src C: read channel for the accumulated value

- Result: store channel for the calculation results
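
The notation above can be tied together with a small software model. The sketch below is not the authors' hardware design; it assumes a square N×N input on Src A, a square K×K kernel on Src B, stride 1 and no padding, and it treats Src C as an optional matrix of previously accumulated values that seeds each psum. The function name `conv2d_accumulate` is hypothetical.

```python
def conv2d_accumulate(src_a, src_b, src_c=None):
    """Reference model: Result = conv(Src A, Src B) + Src C.

    Assumptions (not from the paper): square inputs, stride 1, no padding.
    """
    n = len(src_a)       # N: width of the input feature map
    k = len(src_b)       # K: width of the convolution kernel
    out = n - k + 1      # output width for a stride-1, unpadded convolution
    result = [[0] * out for _ in range(out)]
    for r in range(out):
        for c in range(out):
            # psum starts from the accumulated value on Src C, if present
            psum = src_c[r][c] if src_c is not None else 0
            for i in range(k):
                for j in range(k):
                    psum += src_a[r + i][c + j] * src_b[i][j]
            result[r][c] = psum
    return result


feature_map = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]   # N = 3
kernel = [[1, 0], [0, 1]]                         # K = 2
plain = conv2d_accumulate(feature_map, kernel)
seeded = conv2d_accumulate(feature_map, kernel, [[1, 1], [1, 1]])
```

Feeding one kernel's output back in as `src_c` for the next call is one way to model accumulation across several input feature maps.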

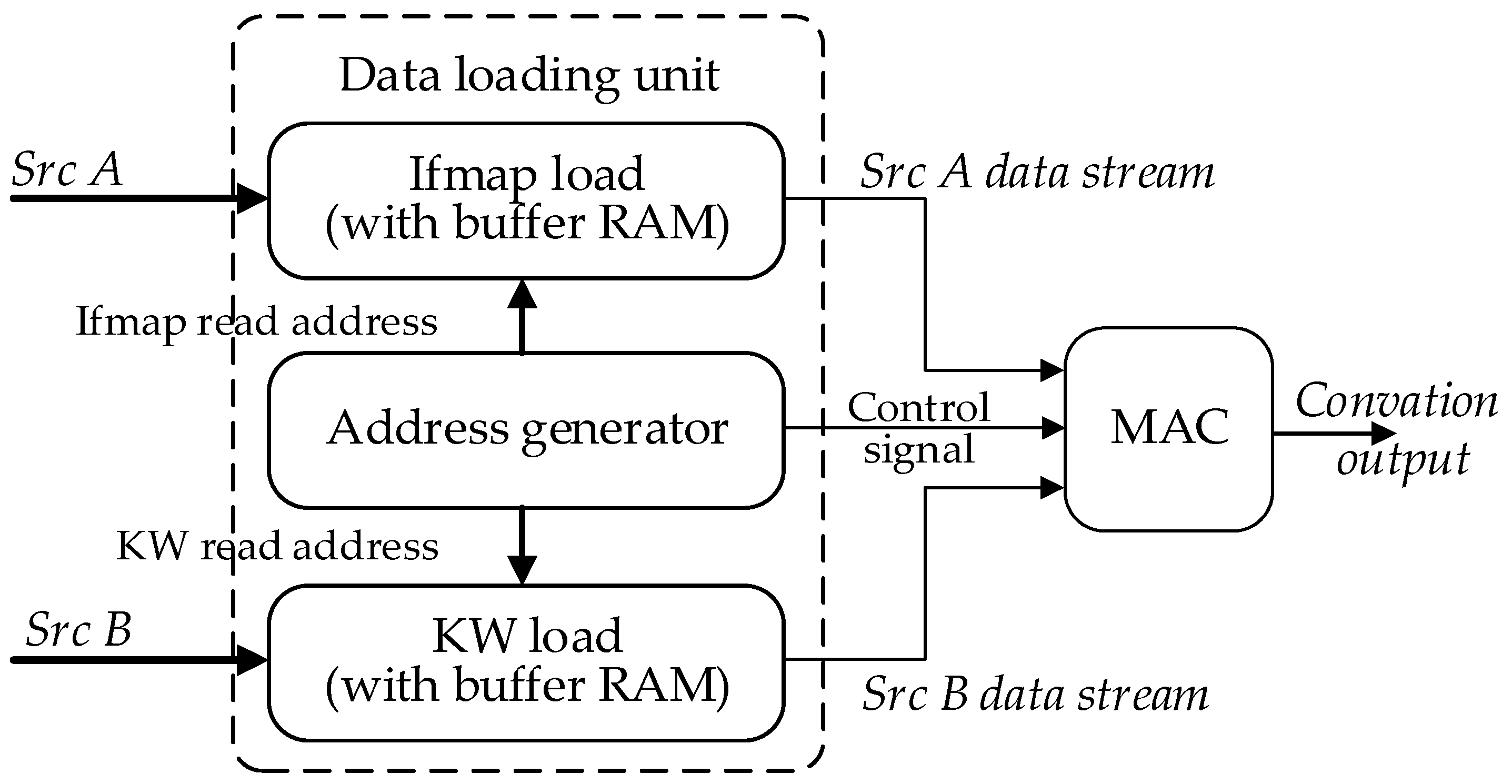

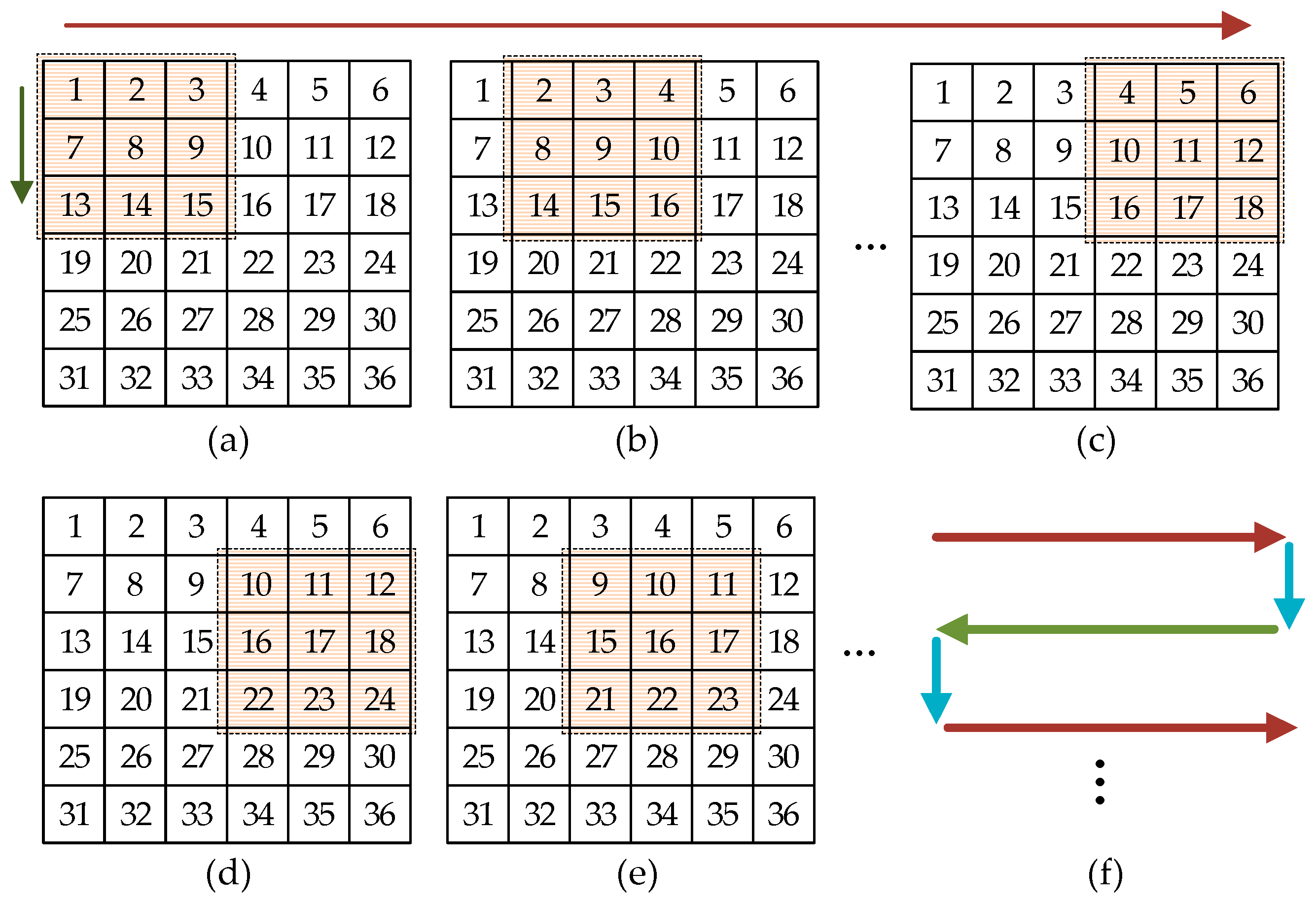

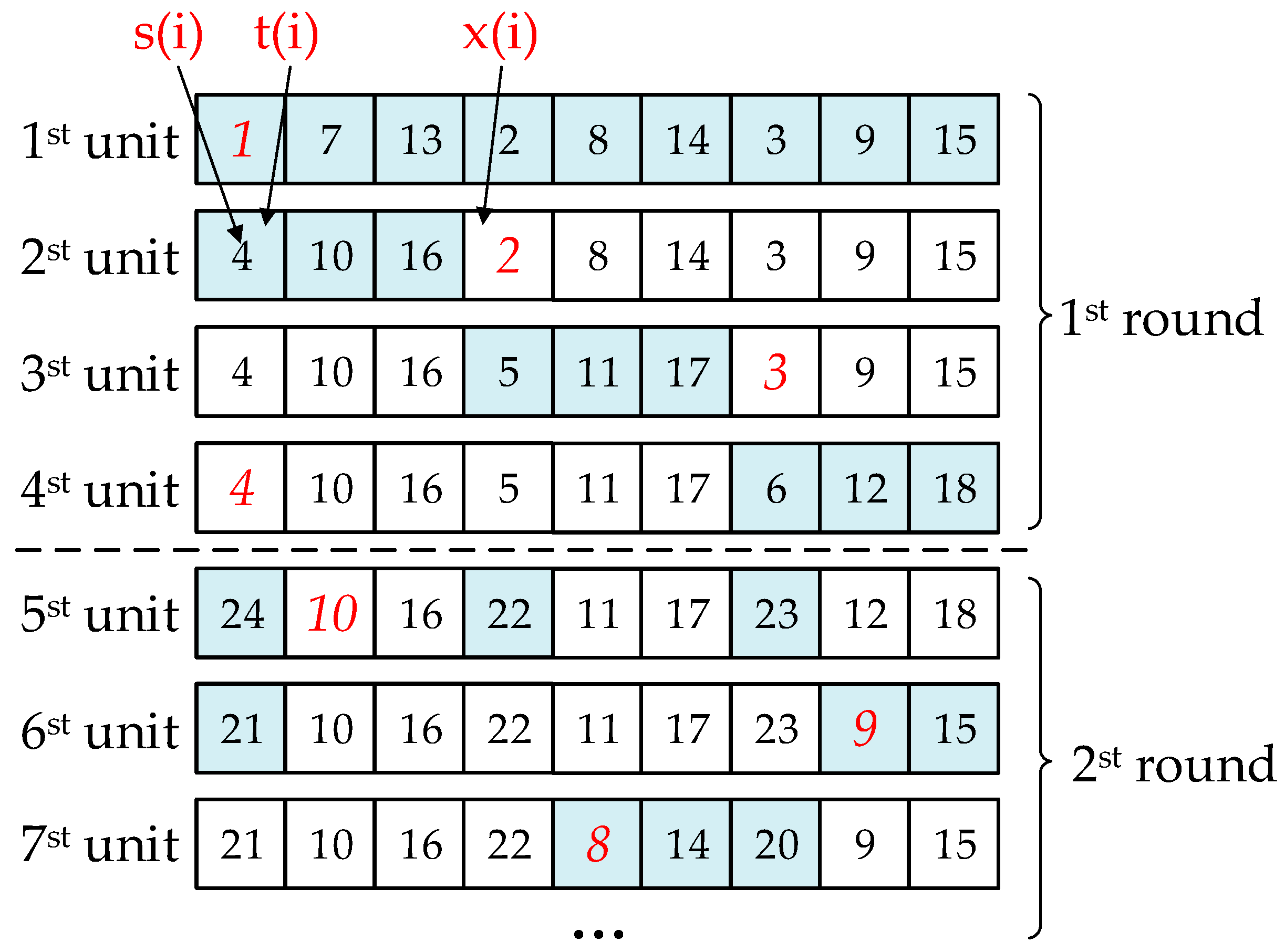

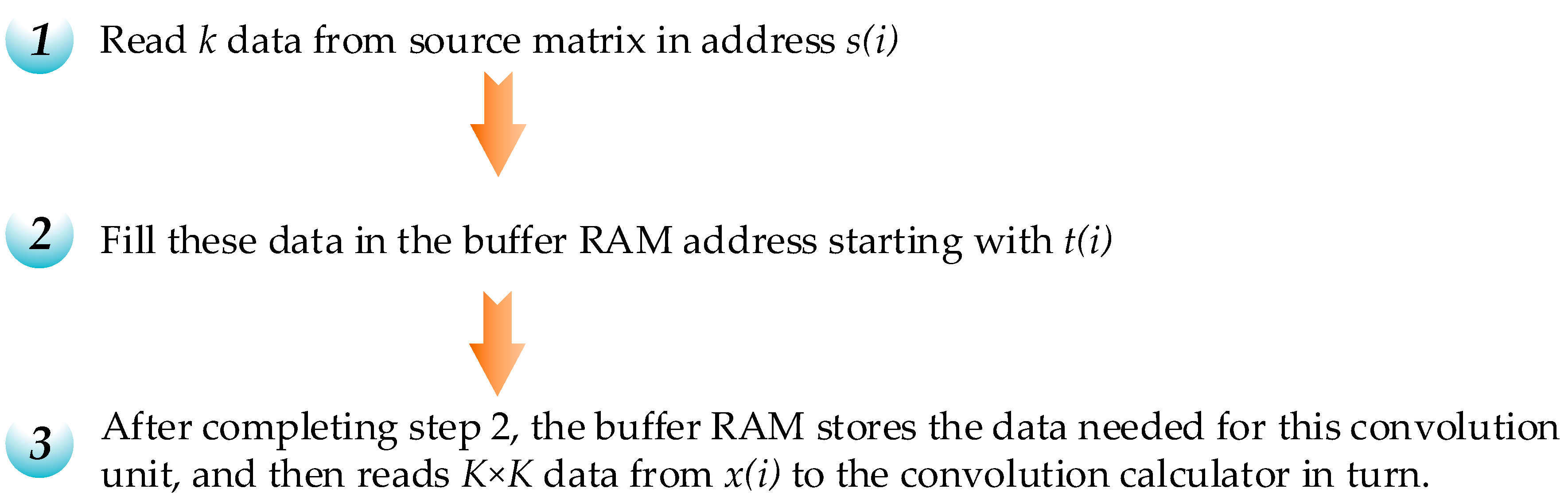

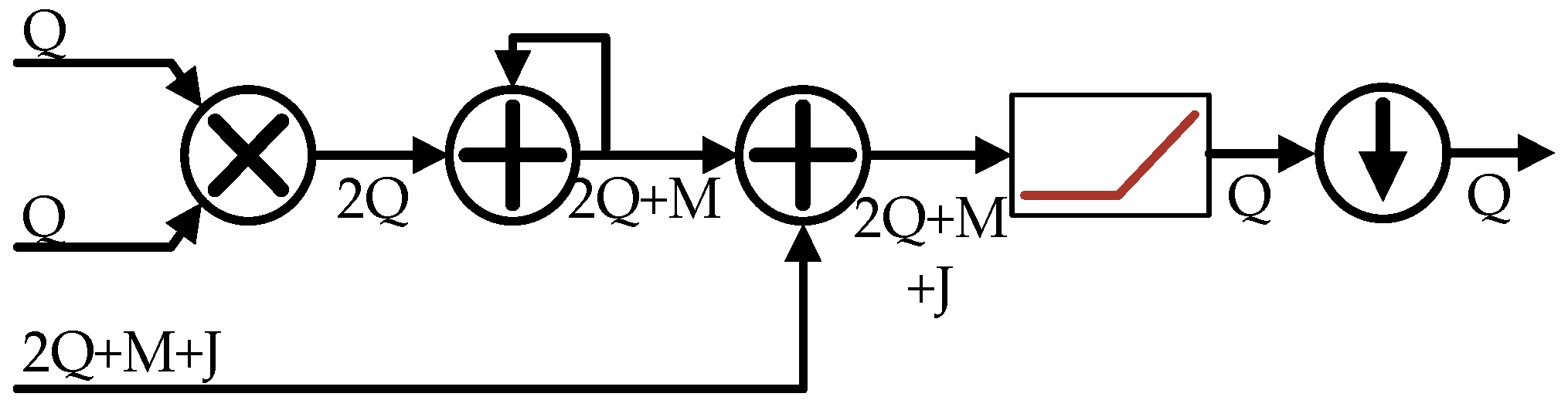

2.2. Convolution Unit

2.3. Multifunctional Accumulation Unit

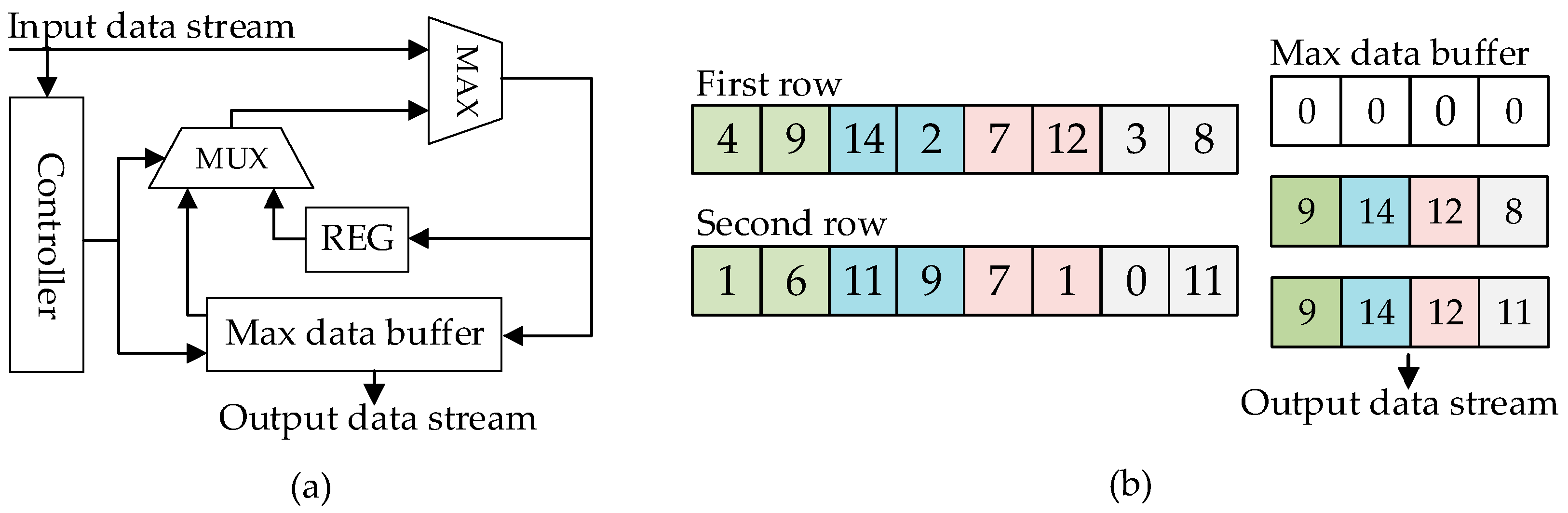

2.4. Serial Max-Pooling Unit

3. CNN Accelerator Structure

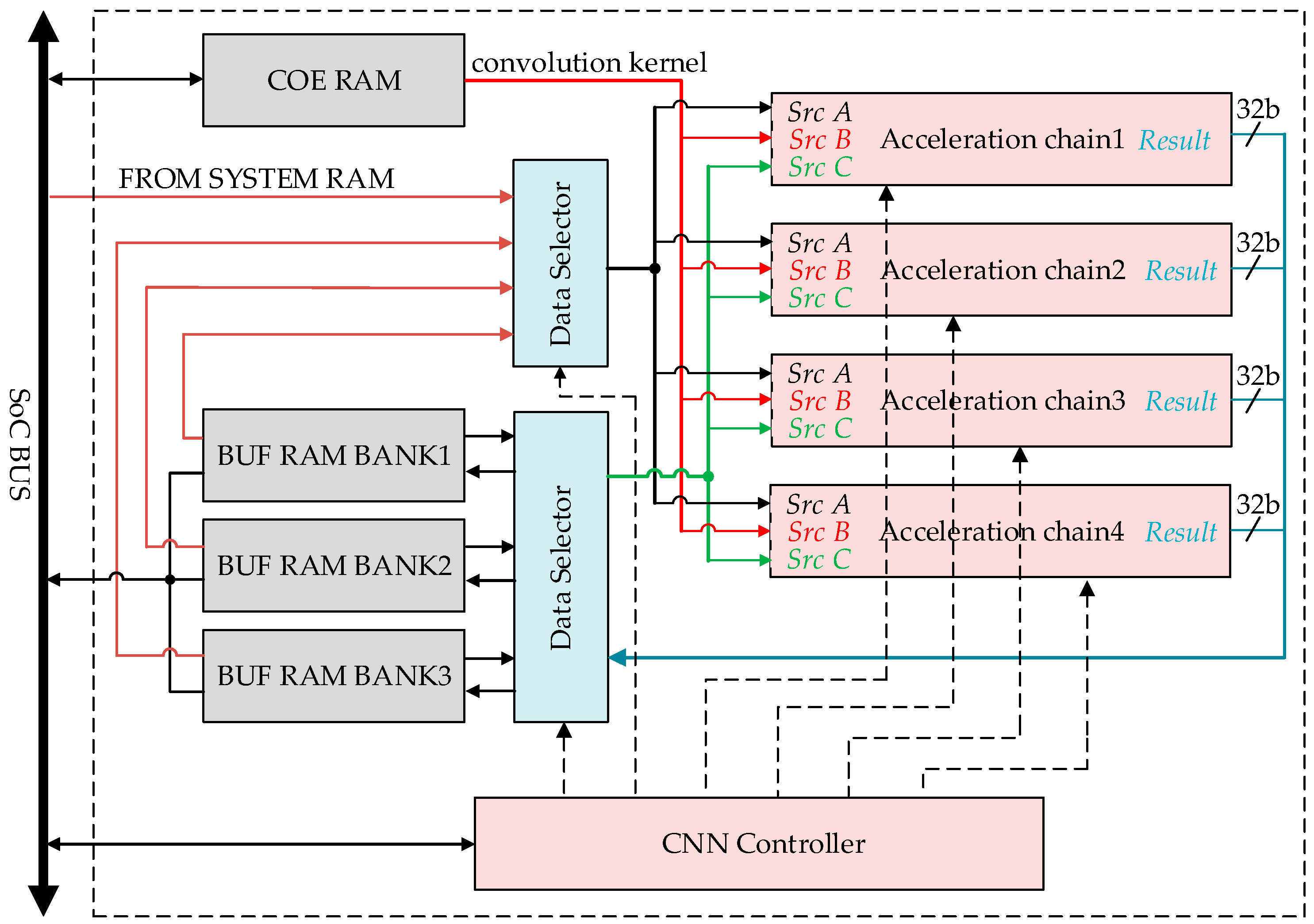

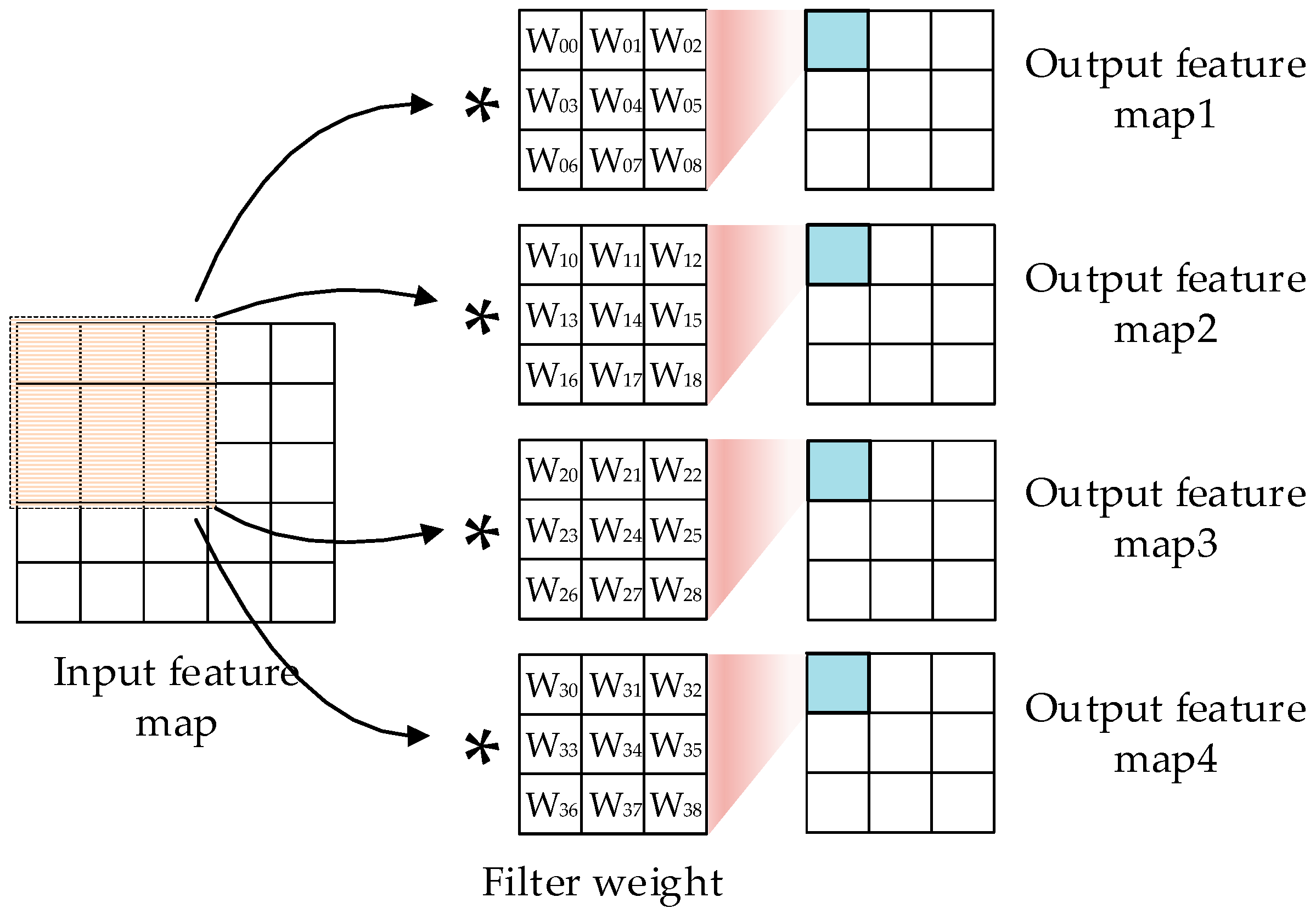

3.1. Acceleration Chain Design

3.2. Accelerator Structure Design

4. Verification Platform Construction

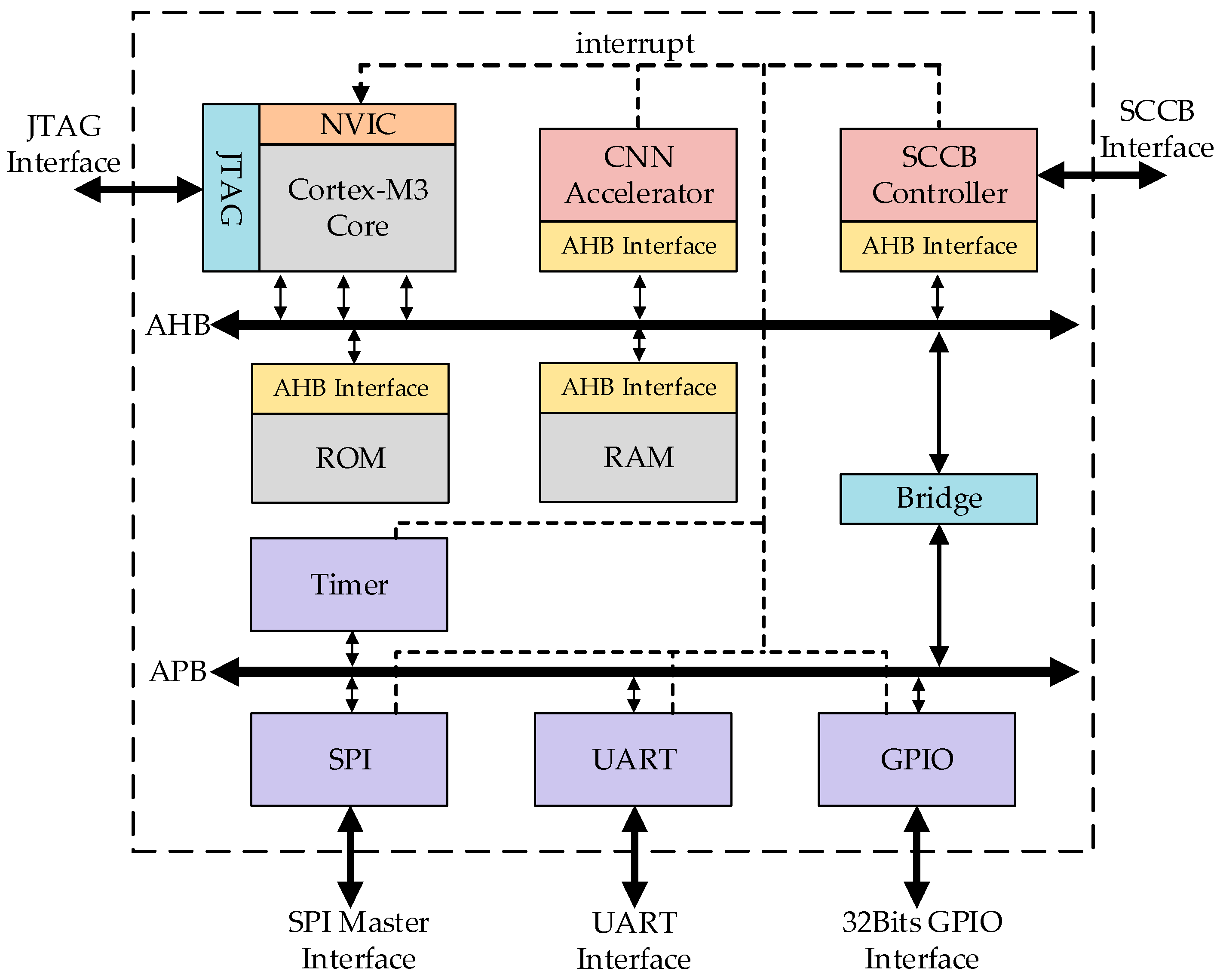

4.1. Design of the Verification Platform Based on Cortex-M3

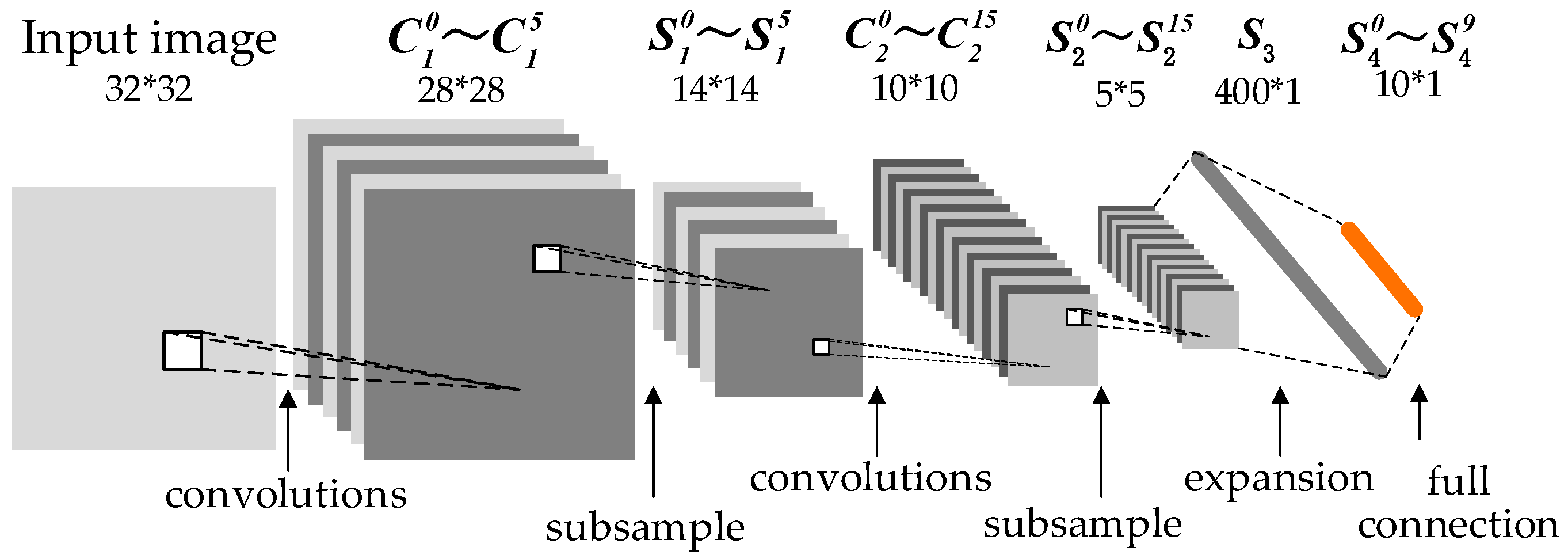

4.2. Implementation of the Lenet-5 Network in Verification SoC

5. Evaluation and Results

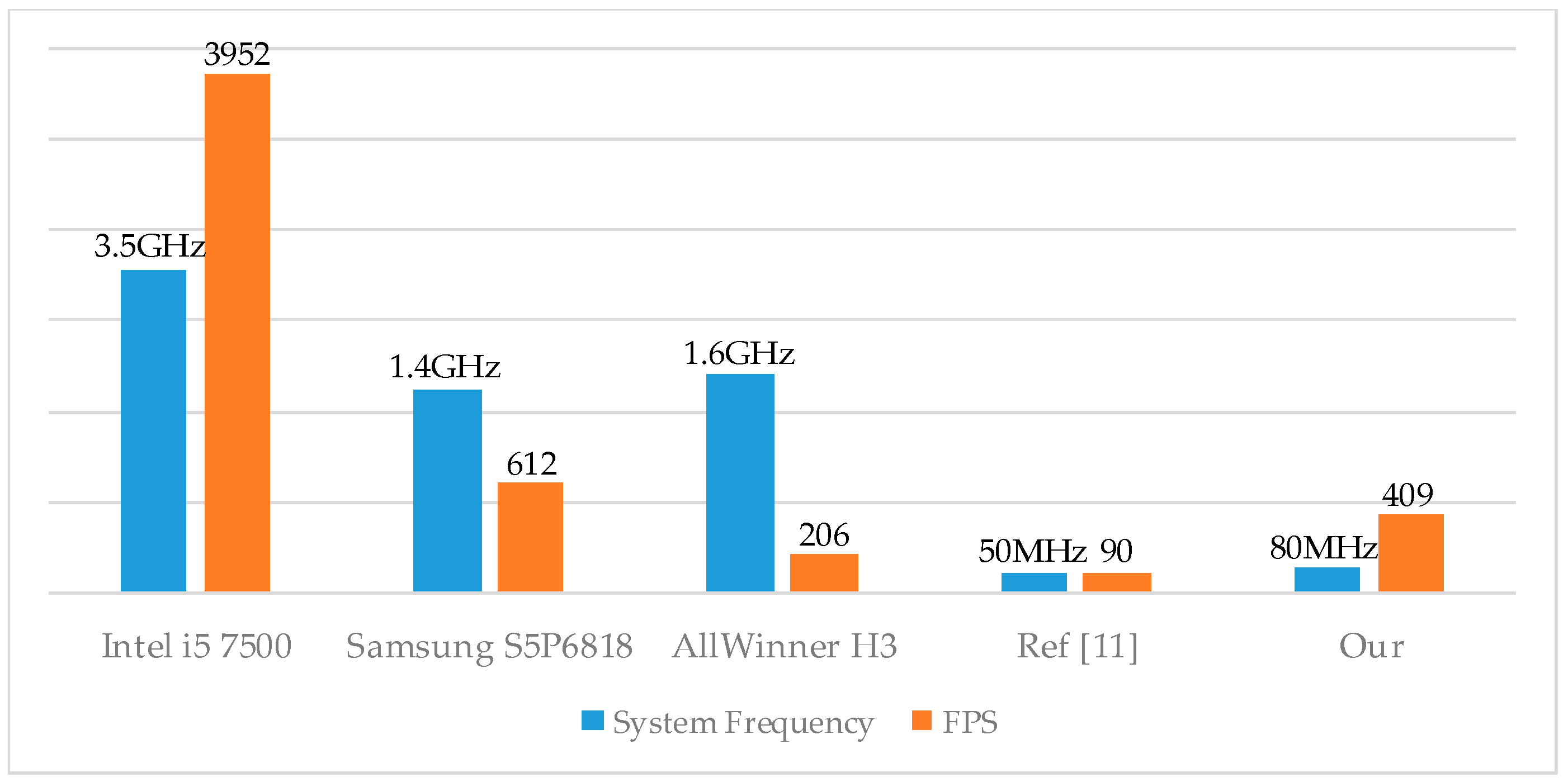

5.1. Performance Analysis of the Accelerator

5.2. Analysis of Resource Consumption

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Network | Float | Int8 | Int10 |
|---|---|---|---|
| Lenet-5 | 95.18% | 95.11% | 95.15% |
| GoogleNet | 89.10% | 87.53% | 88.83% |
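
To illustrate why a 10-bit representation can track the floating-point values more closely than an 8-bit one, here is a minimal sketch of generic symmetric linear quantization. It is an assumption for illustration only: the table does not state which quantization scheme produced its figures, and the sample `weights` are invented.

```python
def quantize(values, bits):
    """Symmetric linear quantization to signed `bits`-bit integer codes."""
    qmax = 2 ** (bits - 1) - 1                    # 127 for 8 bits, 511 for 10 bits
    peak = max(abs(v) for v in values) or 1.0     # avoid a zero scale
    scale = peak / qmax
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def max_abs_error(values, bits):
    """Largest reconstruction error after quantizing and dequantizing."""
    codes, scale = quantize(values, bits)
    return max(abs(v - q * scale) for v, q in zip(values, codes))


weights = [0.8131, -0.4172, 0.0593, -0.9999, 0.3317]   # made-up sample values
err8 = max_abs_error(weights, 8)
err10 = max_abs_error(weights, 10)
```

For these sample values the 10-bit error is bounded by half of a step that is about four times finer than the 8-bit step.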

| Parameter | Description |
|---|---|
| Precision | 10-bit fixed-point |
| Feature map size | (1–256) × (1–256) |
| Kernel size | (1–11) × (1–11) |
| Pool type | max pool |
| Pool size | 1–8 |
| Parallelism | 1–4 |
| Functions (arbitrary combination) | convolution (1-D or 2-D), data add (matrix or bias), pooling, ReLU activation |
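
The parameter ranges in the table can be captured as a small configuration check. This is a sketch under stated assumptions: the class `AcceleratorConfig` and its field names are hypothetical and do not correspond to any register map or driver API described in the paper; only the numeric limits come from the table.

```python
from dataclasses import dataclass

# Limits copied from the parameter table; the field names are hypothetical.
LIMITS = {
    "feature_map_width": (1, 256),
    "feature_map_height": (1, 256),
    "kernel_width": (1, 11),
    "kernel_height": (1, 11),
    "pool_size": (1, 8),
    "parallelism": (1, 4),
}


@dataclass(frozen=True)
class AcceleratorConfig:
    feature_map_width: int
    feature_map_height: int
    kernel_width: int
    kernel_height: int
    pool_size: int = 1
    parallelism: int = 1

    def __post_init__(self):
        # Reject any value outside the range the table lists as supported.
        for name, (low, high) in LIMITS.items():
            value = getattr(self, name)
            if not low <= value <= high:
                raise ValueError(
                    f"{name}={value} is outside the supported range {low}..{high}"
                )


# Illustrative values only, not a layer configuration taken from the paper.
example = AcceleratorConfig(28, 28, 5, 5, pool_size=2, parallelism=4)
```

Constructing a configuration with, say, a 12-wide kernel raises `ValueError`, which mirrors the 11 × 11 upper bound in the table.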

| Resource | Cortex-M3 Kernel | AHB Bus | APB Bus | Peripherals | Accelerator |
|---|---|---|---|---|---|
| LUT | 15162 | 260 | 119 | 1238 | 4901 |
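
The LUT counts above can be turned into relative shares with a few lines of arithmetic. The sketch assumes that the five listed blocks make up the whole verification SoC, which the table itself does not state.

```python
# LUT counts copied from the resource table.
LUTS = {
    "Cortex-M3 Kernel": 15162,
    "AHB Bus": 260,
    "APB Bus": 119,
    "Peripherals": 1238,
    "Accelerator": 4901,
}

total_luts = sum(LUTS.values())

# Fraction of the summed LUTs used by each block (assumes the list is complete).
share = {name: count / total_luts for name, count in LUTS.items()}

# Accelerator size relative to the processor core alone.
accelerator_vs_core = LUTS["Accelerator"] / LUTS["Cortex-M3 Kernel"]
```

Under that assumption the accelerator accounts for roughly 23% of the summed LUTs and is about a third of the size of the Cortex-M3 core.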

| Layer | Calculation Methods | Description |
|---|---|---|
| C1 & S1 | Convolution and subsampling | |
| C2 & S2 | … | Partial connection and subsampling |
| S3 | Expansion | |
| S4 | Full connection | |

| SoC | Architecture | Core Number | Frequency | Latency/Image | Frames per Second |
|---|---|---|---|---|---|
| Intel i5 7500 | Kaby Lake | 4 | 3.5 GHz | 0.253 ms | 3952 |
| Samsung S5P6818 | Cortex-A53 | 8 | 1.4 GHz | 1.633 ms | 612 |
| AllWinner H3 | Cortex-A7 | 4 | 1.6 GHz | 4.852 ms | 206 |
| Proposed | Cortex-M3 | 1 | 80 MHz | 2.44 ms | 409 |
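
The throughput column follows from the latency column. The sketch below assumes that images are processed strictly one after another and that the table rounds the frame rate down to a whole number; both are assumptions, although they reproduce all four listed values. The cycle count for the proposed SoC is derived here and is not a figure from the paper.

```python
import math

# Latency per image in milliseconds, copied from the comparison table.
LATENCY_MS = {
    "Intel i5 7500": 0.253,
    "Samsung S5P6818": 1.633,
    "AllWinner H3": 4.852,
    "Proposed": 2.44,
}


def frames_per_second(latency_ms):
    """Whole frames per second when images are processed back to back."""
    return math.floor(1000.0 / latency_ms)


fps = {name: frames_per_second(ms) for name, ms in LATENCY_MS.items()}

# Clock cycles spent per image by the proposed SoC at its 80 MHz clock.
proposed_cycles = round(LATENCY_MS["Proposed"] * 1e-3 * 80e6)
```

At 80 MHz, a latency of 2.44 ms corresponds to about 195,200 clock cycles per image.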

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ge, F.; Wu, N.; Xiao, H.; Zhang, Y.; Zhou, F. Compact Convolutional Neural Network Accelerator for IoT Endpoint SoC. Electronics 2019, 8, 497. https://doi.org/10.3390/electronics8050497
