AI-Driven Adaptive Segmentation of Timed Up and Go Test Phases Using a Smartphone

Abstract

1. Introduction

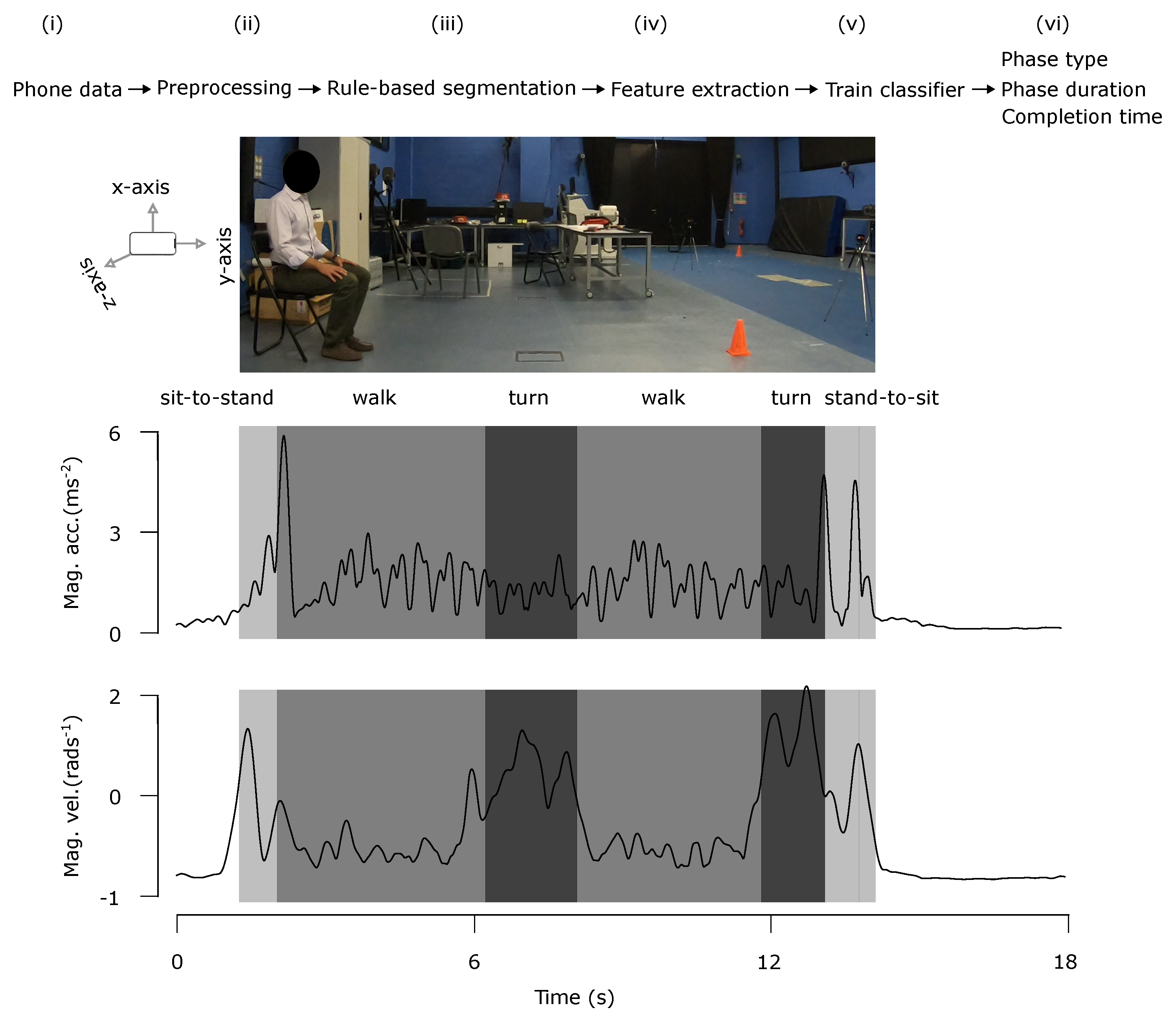

- Adaptive preprocessing: Per-trial thresholds derived from the median and median absolute deviation (MAD) adjust sensitivity to each individual's movement amplitude, and a no-gaps policy prevents fragmentation during slow or irregular walking. This adaptability supports reliable segmentation across diverse gait patterns, which is critical for clinical use.

- Sensor fusion: Accelerometer and gyroscope signals are combined to capture both linear and rotational dynamics, improving detection of walking and turning phases. This enables accurate phase-level analysis, helping clinicians identify whether difficulties arise during straight walking or during turning.

- Adaptive turn detection: A peak-based path and an angle-area path work together to identify both sharp and gradual rotations, addressing a key limitation in prior single-device approaches. Accurate turn detection is clinically important because turning deficits are strongly associated with fall risk.
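
The adaptive preprocessing idea in the list above can be sketched as follows. This is a minimal illustration, not the authors' implementation. The function names, the multiplier `k = 3.0`, the 1.4826 factor (which scales the MAD to be comparable to a standard deviation for Gaussian data), the `max_gap` value, and the reading of the no-gaps policy as "fill short inactive runs that lie between active samples" are all assumptions.

```python
from statistics import median

def adaptive_threshold(samples, k=3.0):
    """Per-trial threshold: median + k * scaled MAD (k = 3.0 is an assumed value)."""
    med = median(samples)
    mad = median([abs(x - med) for x in samples])
    return med + k * 1.4826 * mad

def fill_short_gaps(active, max_gap):
    """Mark inactive runs of at most max_gap samples as active when they lie
    between two active samples (one possible reading of a no-gaps policy)."""
    filled = list(active)
    on = [i for i, a in enumerate(filled) if a]
    for left, right in zip(on, on[1:]):
        if 0 < right - left - 1 <= max_gap:
            for i in range(left + 1, right):
                filled[i] = True
    return filled

# Synthetic activity magnitude: quiet baseline, two bursts split by a one-sample dip.
trial = [0.10, 0.12, 0.08, 0.11, 0.09, 0.10, 2.0, 2.1,
         0.10, 2.0, 2.2, 0.09, 0.11, 0.10, 0.12, 0.08]
threshold = adaptive_threshold(trial)
raw_mask = [x > threshold for x in trial]
mask = fill_short_gaps(raw_mask, max_gap=2)
```

Because the threshold is computed from the trial itself, a slow walker with small signal amplitude gets a proportionally lower threshold than a fast walker, and the gap filling keeps a brief dip from splitting one movement into two segments.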

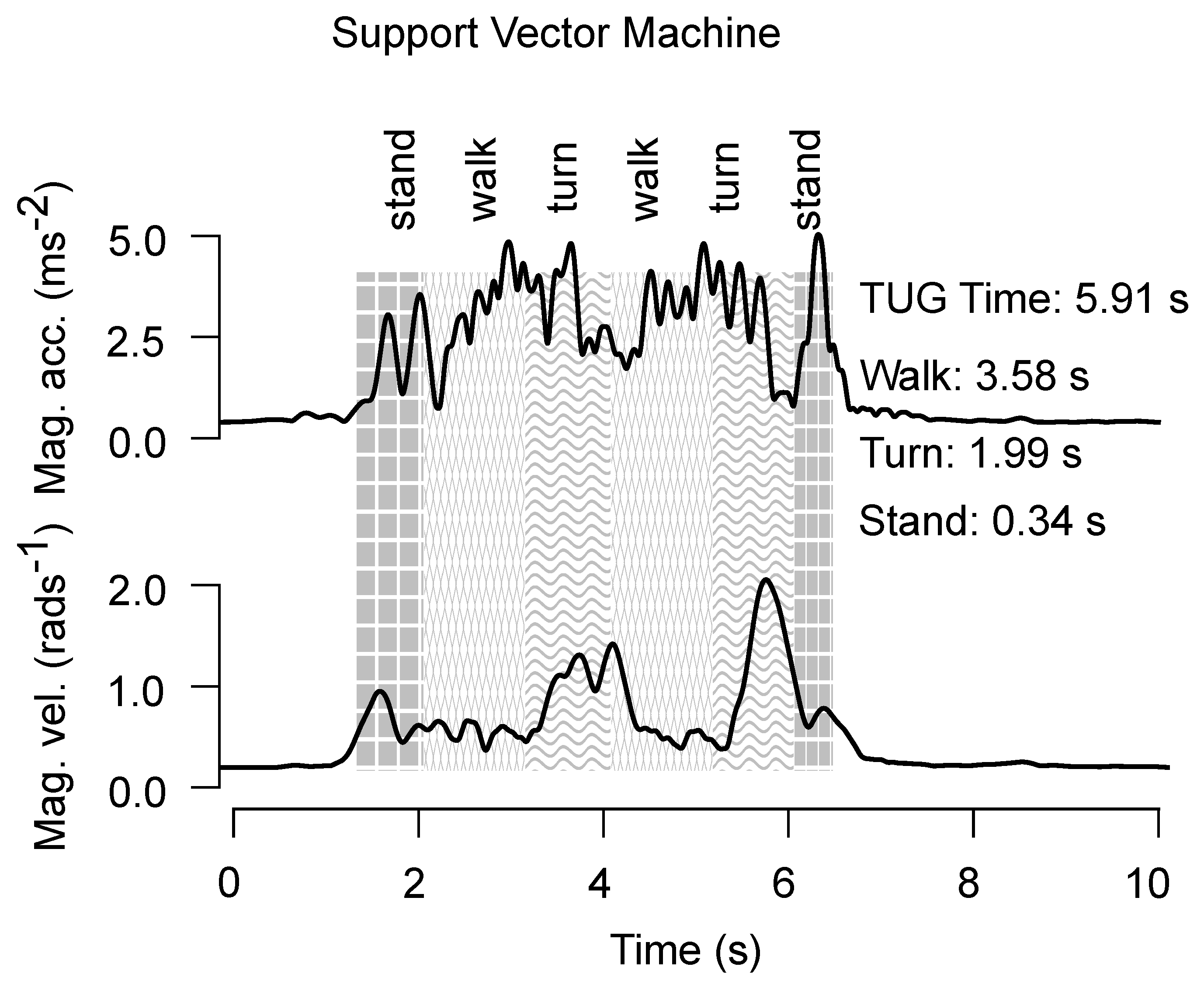

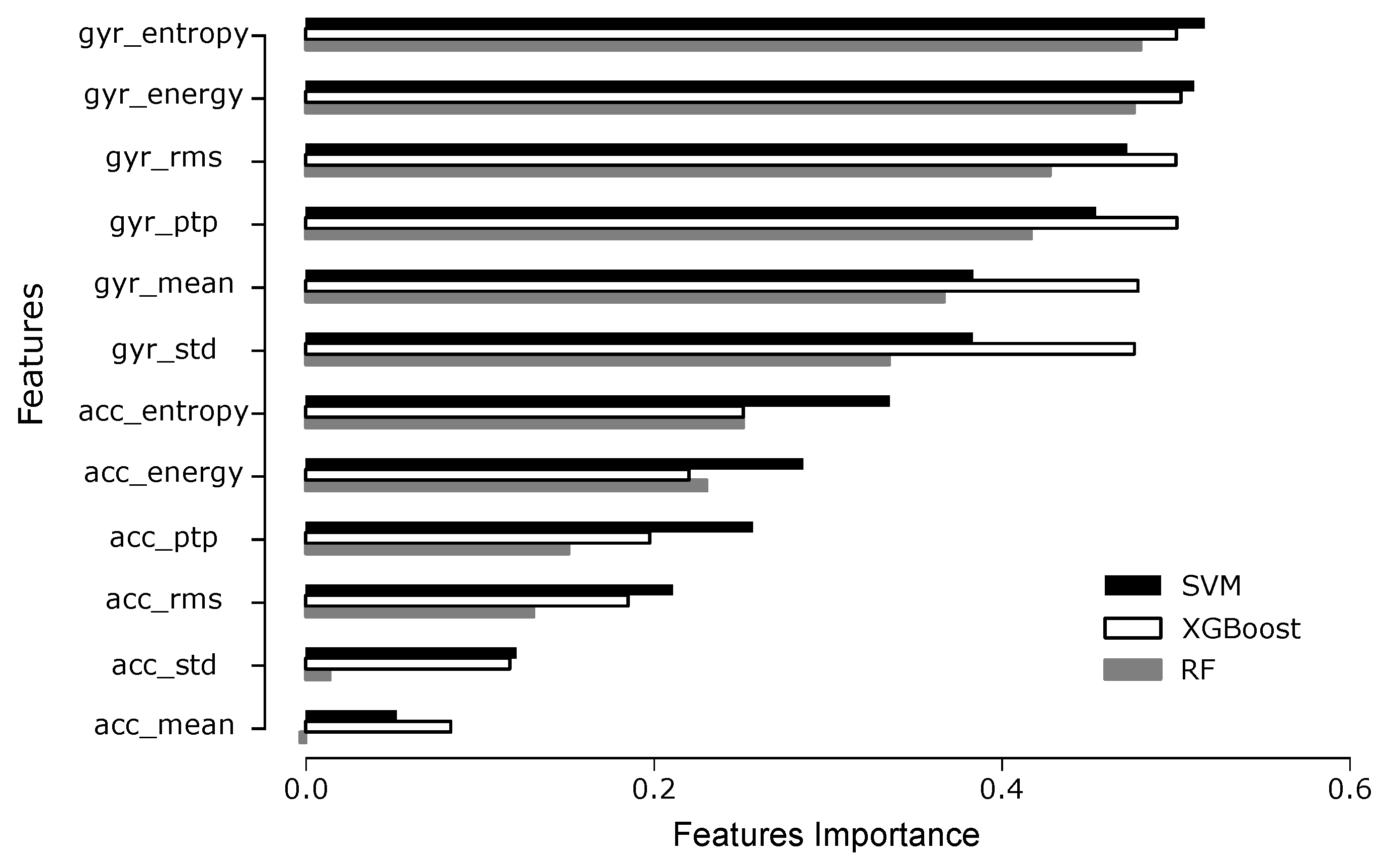

- Statistical features and classical models: Features summarizing magnitude, variability, and energy are extracted from short windows and classified using Random Forest, Support Vector Machine (SVM), and XGBoost, which keeps the pipeline interpretable and computationally efficient. This design supports practical deployment on consumer devices without sacrificing accuracy.
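
As a rough illustration of window-level statistical features, the sketch below computes one magnitude feature (mean), one variability feature (standard deviation), and two energy-related features (mean squared value and RMS) per sliding window. The exact feature set, window length, and step size used in the study are not given here, so the names `window_features` and `sliding_windows` and the values `size=4`, `step=2` are assumptions chosen only for the example.

```python
import math
from statistics import fmean, pstdev

def window_features(window):
    """Magnitude, variability, and energy summaries for one window of samples."""
    energy = fmean([x * x for x in window])  # mean squared value
    return {
        "mean": fmean(window),
        "std": pstdev(window),
        "rms": math.sqrt(energy),
        "energy": energy,
    }

def sliding_windows(samples, size, step):
    """Yield consecutive windows of `size` samples, advancing by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]

# Synthetic signal; size and step are assumed values for illustration.
signal = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
rows = [window_features(w) for w in sliding_windows(signal, size=4, step=2)]
```

Each row is a fixed-length feature vector, so the resulting table can be passed to any of the classical classifiers named above.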

2. Related Work

3. Methodology

3.1. Participants

3.2. Data Collection

3.3. Data Processing Pipeline

3.3.1. Processing

3.3.2. Rule-Based Segmentation

- Peak-based detection: Identifies high-intensity rotational activity using gyroscope RMS values. Early in the test, thresholds are lower to avoid missing the typically curvilinear, lower-peak first turn, while later thresholds are higher to avoid false positives during return walking.

- Angle-area detection: Integrates rotational activity over a 1 s window to detect low-amplitude, curvilinear turns that peak-based methods might miss. This ensures sensitivity to gradual rotations often seen in frail or Parkinsonian gait.
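
The two detection paths above can be sketched together as follows. This is a simplified illustration under stated assumptions, not the published algorithm: the function names, the 0.2 s RMS window, the split between "early" and "late" at half the trial, and all threshold values are hypothetical. Only the 1 s integration window comes from the description above.

```python
import math

def moving_rms(x, win):
    """Trailing RMS over the last `win` samples (shorter at the start)."""
    out = []
    for i in range(len(x)):
        seg = x[max(0, i - win + 1):i + 1]
        out.append(math.sqrt(sum(v * v for v in seg) / len(seg)))
    return out

def moving_area(x, win, dt):
    """Trailing rectangle-rule integral of |x| over the last `win` samples."""
    return [sum(abs(v) for v in x[max(0, i - win + 1):i + 1]) * dt
            for i in range(len(x))]

def detect_turn(gyro, fs, early_thr, late_thr, area_thr, split=0.5):
    """Flag a sample as turning if either the peak path or the angle-area path fires."""
    rms = moving_rms(gyro, win=max(1, int(0.2 * fs)))   # assumed 0.2 s RMS window
    area = moving_area(gyro, win=int(fs), dt=1.0 / fs)  # 1 s integration window
    cut = int(split * len(gyro))                        # assumed early/late boundary
    mask = []
    for i in range(len(gyro)):
        peak_thr = early_thr if i < cut else late_thr   # lower threshold early on
        mask.append(rms[i] > peak_thr or area[i] > area_thr)
    return mask

# Synthetic yaw rate (rad/s): a gentle 3 s turn, then a sharp 0.5 s turn.
fs = 10  # Hz, assumed for the example
gyro = [0.0] * 20 + [0.4] * 30 + [0.0] * 20 + [2.0] * 5 + [0.0] * 25
mask = detect_turn(gyro, fs, early_thr=1.0, late_thr=1.5, area_thr=0.3)
```

In this example the gentle turn never exceeds the peak threshold and is caught only by the angle-area path, while the sharp turn triggers the peak path almost immediately. Trailing windows introduce a short detection delay, which a real pipeline would need to compensate for when placing phase boundaries.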

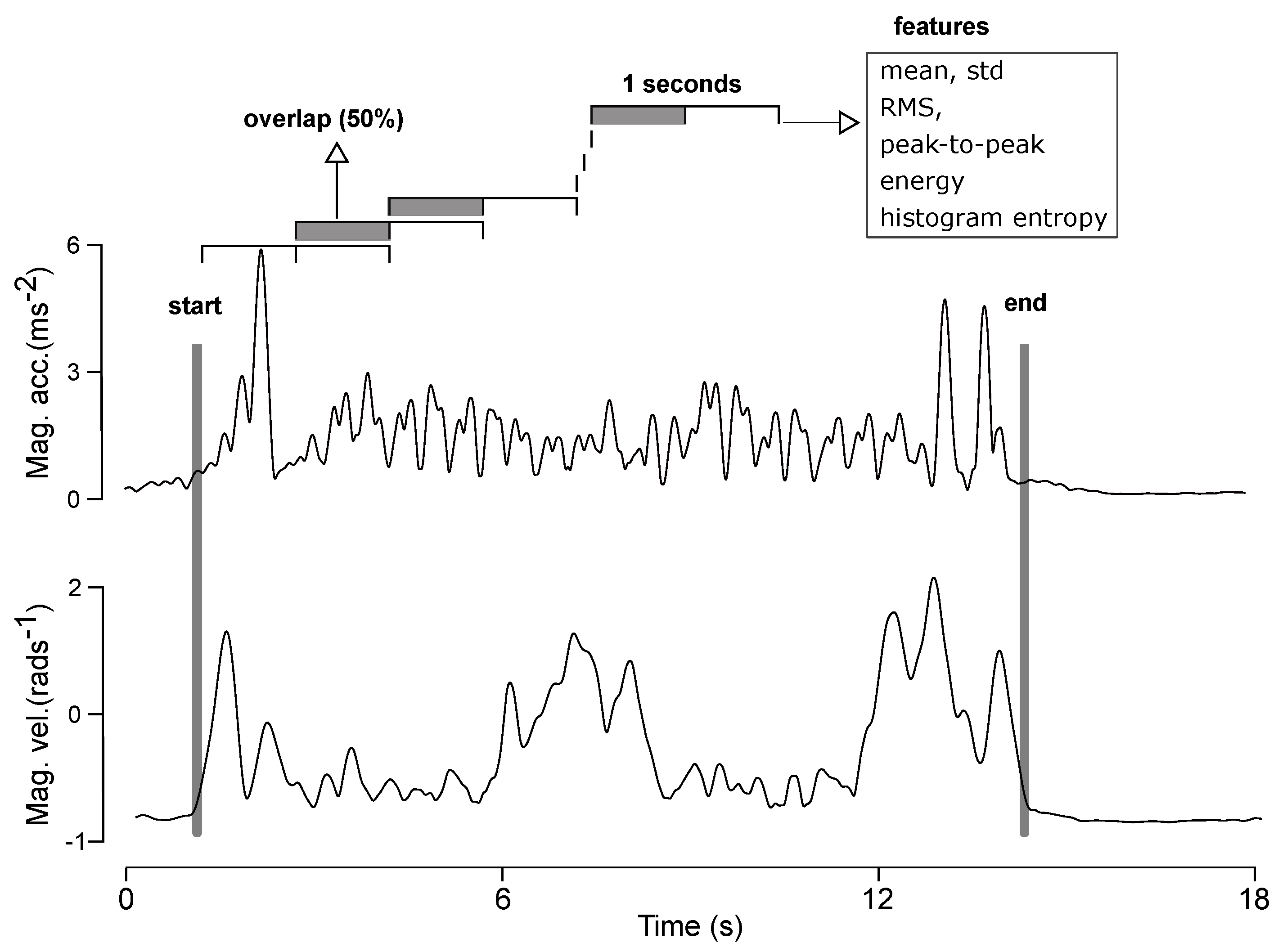

3.3.3. Feature Extraction

3.3.4. Training Classifiers

3.3.5. Duration Estimation

4. Results

4.1. Start and End Detection

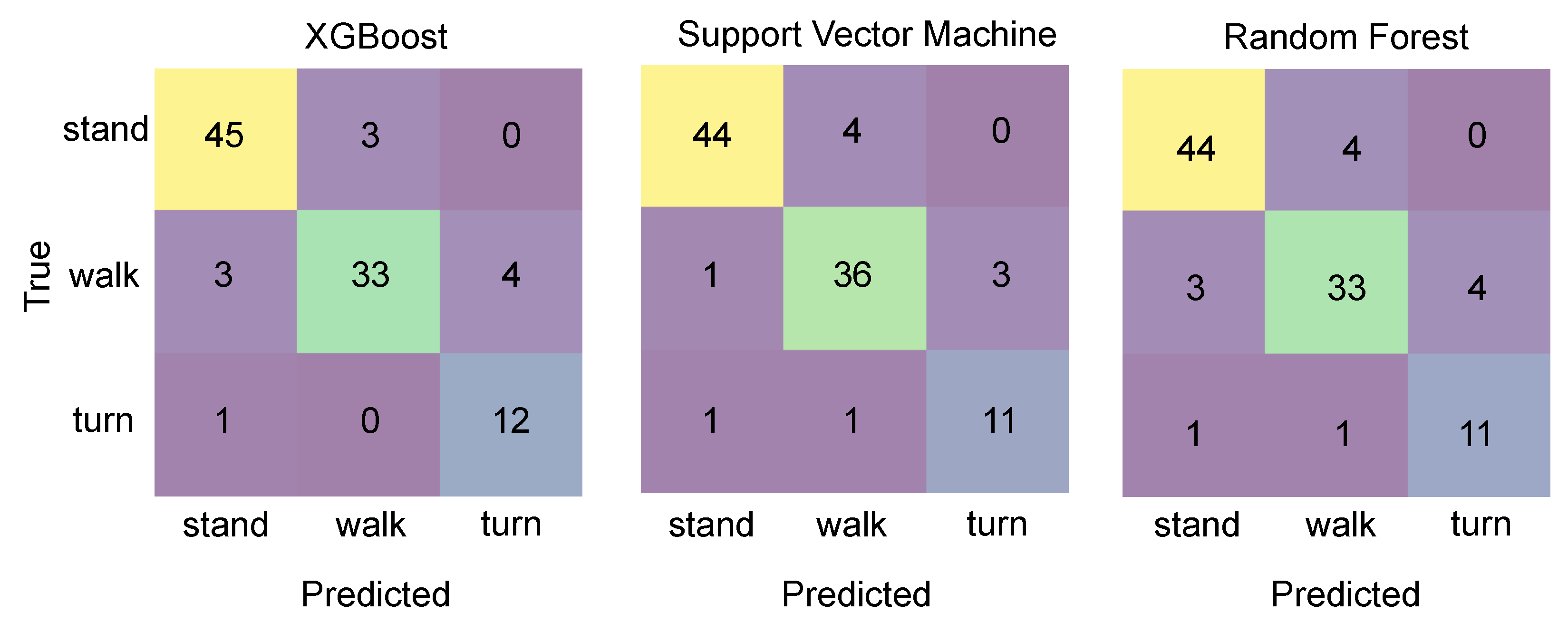

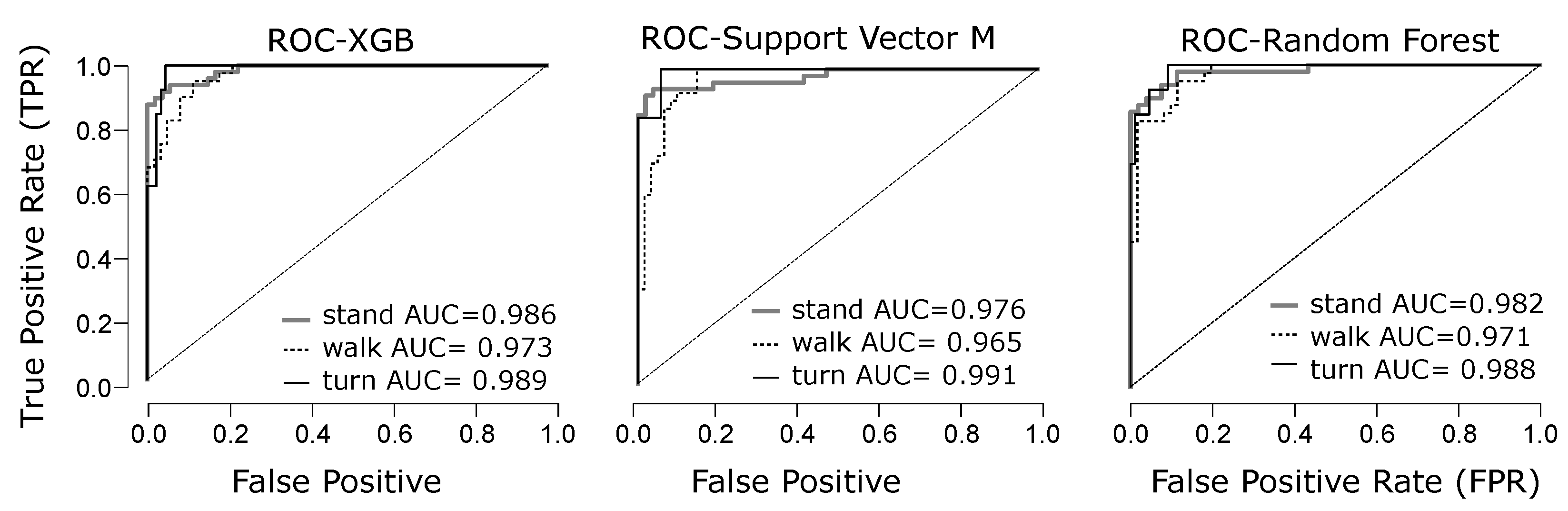

4.2. Phase Classification

4.3. Phase Estimation

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yin, L.; Chen, P.; Xu, J.; Gong, Y.; Zhuang, Y.; Chen, Y.; Wang, L. Validity and reliability of inertial measurement units for measuring gait kinematics in older adults across varying fall risk levels and walking speeds. BMC Geriatr. 2025, 25, 336.

- Sun, Y.; Song, Z.; Mo, L.; Li, B.; Liang, F.; Yin, M.; Wang, D. IMU-Based quantitative assessment of stroke from gait. Sci. Rep. 2025, 15, 9541.

- Felius, R.A.; Geerars, M.; Bruijn, S.M.; van Dieën, J.H.; Wouda, N.C.; Punt, M. Reliability of IMU-based gait assessment in clinical stroke rehabilitation. Sensors 2022, 22, 908.

- Barry, E.; Galvin, R.; Keogh, C.; Horgan, F.; Fahey, T. Is the Timed Up and Go test a useful predictor of risk of falls in community dwelling older adults: A systematic review and meta-analysis. BMC Geriatr. 2014, 14, 14.

- Steffen, T.M.; Hacker, T.A.; Mollinger, L. Age- and gender-related test performance in community-dwelling elderly people: Six-Minute Walk Test, Berg Balance Scale, Timed Up & Go Test, and gait speeds. Phys. Ther. Rehabil. J. 2002, 82, 128–137.

- Podsiadlo, D.; Richardson, S. The timed up and go: A test of basic functional mobility for frail elderly persons. J. Am. Geriatr. Soc. 1991, 39, 142–148.

- Mathias, S.; Nayak, U.S.L.; Isaacs, B. Balance in elderly patients: The Get-up and Go test. Arch. Phys. Med. Rehabil. 1986, 67, 387–389.

- McCreath Frangakis, A.L.; Lemaire, E.D.; Baddour, N. Subtask segmentation methods of the Timed Up and Go test and L test using inertial measurement units—A scoping review. Information 2023, 14, 127.

- Ponciano, V.; Pires, I.M.; Ribeiro, F.R.; Marques, G.; Garcia, N.M.; Pombo, N.; Spinsante, S.; Zdravevski, E. Is the Timed Up and Go test feasible in mobile devices? A systematic review. Electronics 2020, 9, 528.

- Gois, C.O.; de Andrade Guimarães, A.L.; Gois Júnior, M.B.; Carvalho, V.O. The use of reference values for the Timed Up and Go test applied in multiple scenarios. J. Aging Phys. Act. 2024, 32, 679–682.

- Manor, B.; Yu, W.; Zhu, H.; Harrison, R.; Lo, O.Y.; Lipsitz, L.; Travison, T.; Pascual-Leone, A.; Zhou, J. Smartphone app-based assessment of gait during normal and dual task walking: Demonstration of validity and reliability. JMIR mHealth uHealth 2018, 6, e36.

- Hayek, R.; Werner, P.; Amir, A. Smartphone-based sit-to-stand analysis for mobility assessment in older adults. Innov. Aging 2024, 8, igae079.

- Powell, K.; Amer, A.; Glavcheva-Laleva, Z.; Williams, J.; Farrell, C.O.; Harwood, F.; Bishop, P.; Holt, C. MoveLab®: Validation and development of novel cross-platform gait and mobility assessments using gold standard motion capture and clinical standard assessment. Sensors 2025, 25, 5706.

- Ortega-Bastidas, P.; Aqueveque, P.; Gómez, B.; Saavedra, F.; Cano-de-la-Cuerda, R. Use of a single wireless IMU for the segmentation and automatic analysis of activities performed in the 3-m Timed Up & Go test. Sensors 2019, 19, 1647.

- Matey-Sanz, M.; González-Pérez, A.; Casteleyn, S.; Granell, C. Implementing and Evaluating the Timed Up and Go Test Automation Using Smartphones and Smartwatches. IEEE J. Biomed. Health Inform. 2024, 28, 6594–6605.

- Böttinger, M.J.; Mellone, S.; Klenk, J.; Jansen, C.P.; Stefanakis, M.; Litz, E.; Bredenbrock, A.; Fischer, J.P.; Bauer, J.M.; Becker, C.; et al. A smartphone-based Timed Up and Go test self-assessment for older adults: Validity and reliability study. JMIR Aging 2025, 8, e67322.

- Sher, A.; Langford, D.; Villagra, F.; Akanyeti, O. Automatic scoring of chair sit-to-stand test using a smartphone. In Proceedings of the UK Workshop on Computational Intelligence, Sheffield, UK, 7–9 September 2022; Springer: Cham, Switzerland, 2022; pp. 170–180.

- Sher, A.; Bunker, M.T.; Akanyeti, O. Towards personalized environment-aware outdoor gait analysis using a smartphone. Expert Syst. 2023, 40, e13130.

- Sher, A.; Akanyeti, O. Minimum data sampling requirements for accurate detection of terrain-induced gait alterations change with mobile sensor position. Pervasive Mob. Comput. 2024, 105, 101994.

- Sher, A.; Langford, D.; Dogger, E.; Monaghan, D.; Lunn, L.I.; Schroeder, M.; Hamidinekoo, A.; Arkesteijn, M.; Shen, Q.; Zwiggelaar, R.; et al. Automatic gait analysis during steady and unsteady walking using a smartphone. TechRxiv 2021.

- Salarian, A.; Horak, F.B.; Zampieri, C.; Carlson-Kuhta, P.; Nutt, J.G.; Aminian, K. iTUG, a sensitive and reliable measure of mobility. IEEE Trans. Neural Syst. Rehabil. Eng. 2010, 18, 303–310.

- Zampieri, C.; Salarian, A.; Carlson-Kuhta, P.; Nutt, J.G.; Horak, F.B. Assessing mobility at home in people with early Parkinson’s disease using an instrumented Timed Up and Go test. Park. Relat. Disord. 2011, 17, 277–280.

- Arteaga-Bracho, E.; Cosne, G.; Kanzler, C.; Karatsidis, A.; Mazzà, C.; Penalver-Andres, J.; Zhu, C.; Shen, C.; Erb, M.K.; Freigang, M.; et al. Smartphone-based assessment of mobility and manual dexterity in adult people with spinal muscular atrophy. J. Neuromuscul. Dis. 2024, 11, 1049–1065.

- Abou, L.; Wong, E.; Peters, J.; Dossou, M.S.; Sosnoff, J.J.; Rice, L.A. Smartphone applications to assess gait and postural control in people with multiple sclerosis: A systematic review. Mult. Scler. Relat. Disord. 2021, 51, 102943.

- Kear, B.M.; Guck, T.P.; McGaha, A.L. Timed Up and Go test: Normative reference values for ages 20 to 59 years and relationships with physical and mental health risk factors. J. Prim. Care Community Health 2017, 8, 9–13.

- Mayhew, A.J.; So, H.Y.; Ma, J.; Beauchamp, M.K.; Griffith, L.E.; Kuspinar, A.; Lang, J.J.; Raina, P. Normative values for grip strength, gait speed, Timed Up and Go, single leg balance, and chair rise derived from the Canadian Longitudinal Study on Ageing. Age Ageing 2023, 52, afad054.

- Ali, F.; Hogen, C.A.; Miller, E.J.; Kaufman, K.R. Validation of pelvis and trunk range of motion as assessed using inertial measurement units. Bioengineering 2024, 11, 659.

- Hsu, W.C.; Sugiarto, T.; Lin, Y.J.; Yang, F.C.; Lin, Z.Y.; Sun, C.T.; Hsu, C.L.; Chou, K.N. Multiple-wearable-sensor-based gait classification and analysis in patients with neurological disorders. Sensors 2018, 18, 3397.

- Silsupadol, P.; Teja, K.; Lugade, V. Reliability and validity of a smartphone-based assessment of gait parameters across walking speed and smartphone locations: Body, bag, belt, hand, and pocket. Gait Posture 2017, 58, 516–522.

- Mellone, S.; Tacconi, C.; Chiari, L. Validity of a smartphone-based instrumented Timed Up and Go. Gait Posture 2012, 36, 163–165.

- Ishikawa, M.; Yamada, S.; Yamamoto, K.; Aoyagi, Y. Gait analysis in a component timed-up-and-go test using a smartphone application. J. Neurol. Sci. 2019, 398, 45–49.

- Clavijo-Buendía, S.; Molina-Rueda, F.; Martín-Casas, P.; Ortega-Bastidas, P.; Monge-Pereira, E.; Laguarta-Val, S.; Morales-Cabezas, M.; Cano-de-la-Cuerda, R. Construct validity and test-retest reliability of a free mobile application for spatio-temporal gait analysis in Parkinson’s disease patients. Gait Posture 2020, 79, 86–91.

- Abualait, T.S.; Alnajdi, G.K. Effects of using assistive devices on the components of the modified instrumented timed up and go test in healthy subjects. Heliyon 2021, 7, e06940.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 785–794.

- McNemar, Q. Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 1947, 12, 153–157.

- Bowker, A.H. A test for symmetry in contingency tables. J. Am. Stat. Assoc. 1948, 43, 572–574.

- Haertner, L.; Elshehabi, M.; Zaunbrecher, L.; Pham, M.H.; Maetzler, C.; Van Uem, J.M.; Hobert, M.A.; Hucker, S.; Nussbaum, S.; Berg, D.; et al. Effect of fear of falling on turning performance in Parkinson’s disease in the lab and at home. Front. Aging Neurosci. 2018, 10, 78.

- He, J.; Wu, L.; Du, W.; Zhang, F.; Lin, S.; Ling, Y.; Ren, K.; Chen, Z.; Chen, H.; Su, W. Instrumented timed up and go test and machine learning-based levodopa response evaluation: A pilot study. J. Neuroeng. Rehabil. 2024, 21, 163.

| Study | Device and Placement | What They Achieved | Limitations |
|---|---|---|---|
| Salarian et al. [21] | Multiple IMUs on limbs and trunk | Accurate phase detection and gait metrics | Complex setup, not practical for home use |
| Ortega-Bastidas et al. [14] | Single IMU on lower back | Segmentation for walking phases | Weak turn detection; no adaptive thresholds |
| Matey-Sanz et al. [15] | Smartphone + smartwatch | Automated TUG with better usability | Requires multiple devices |
| Ishikawa et al. [31] | Smartphone on abdomen | Six-phase segmentation; ICC ≈ 0.94 | Limited adaptability to variable gait |
| Mellone et al. [30] | Smartphone on lower back + reference device | Valid total time and sit-to-stand detection | Minimal phase-level detail |

| Metric | MAE (s) | 95% CI (s) |
|---|---|---|
| Total TUG | 0.42 | 0.36–0.48 |
| Sit-to-Stand | 0.30 | 0.18–0.42 |
| Walk | 0.28 | 0.15–0.41 |
| Turn | 0.34 | 0.15–0.53 |
| Stand-to-Sit | 0.31 | 0.18–0.44 |
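
For readers who want to reproduce this kind of summary on their own data, the sketch below computes a mean absolute error (MAE) with a symmetric 95% confidence interval. How the intervals in the table were obtained is not stated here, so the normal approximation (`z = 1.96` times the standard error of the absolute errors) is an assumption, as is the function name `mae_with_ci`. The durations in the example are made up and are not study data.

```python
import math
from statistics import fmean, stdev

def mae_with_ci(estimated, reference, z=1.96):
    """MAE and a normal-approximation confidence interval (assumed method)."""
    errors = [abs(e - r) for e, r in zip(estimated, reference)]
    mae = fmean(errors)
    half_width = z * stdev(errors) / math.sqrt(len(errors))
    return mae, (mae - half_width, mae + half_width)

# Made-up phase durations in seconds, for illustration only.
estimated = [10.2, 9.5, 12.4, 11.0, 8.9]
reference = [10.0, 10.0, 12.0, 11.5, 9.0]
mae, (ci_low, ci_high) = mae_with_ci(estimated, reference)
```

With small samples a t-based or bootstrap interval would usually be preferable to the normal approximation used here.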

| Model | Accuracy | Macro-F1 | Weighted-F1 |
|---|---|---|---|
| Random Forest | 0.871 ± 0.035 | 0.850 ± 0.030 | 0.849 ± 0.028 |
| SVM (RBF) | 0.901 ± 0.019 | 0.882 ± 0.018 | 0.854 ± 0.017 |
| XGBoost | 0.891 ± 0.021 | 0.875 ± 0.019 | 0.848 ± 0.018 |
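
The three columns in the table above weight the phases differently. Accuracy counts every window equally, Macro-F1 averages the per-class F1 scores so that short phases count as much as long ones, and Weighted-F1 weights each class by its number of true samples. The sketch below shows the standard definitions on made-up labels. The function name `f1_summary` and the label strings are hypothetical, and the example data are not from the study.

```python
from collections import Counter

def f1_summary(y_true, y_pred):
    """Return (accuracy, macro-F1, weighted-F1) for two equal-length label lists."""
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)  # number of true samples per class
    f1 = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        f1[c] = 2 * tp / denom if denom else 0.0
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    macro = sum(f1.values()) / len(labels)
    weighted = sum(f1[c] * support[c] for c in labels) / len(y_true)
    return accuracy, macro, weighted

# Made-up window labels: walking dominates, as it typically would in a TUG trial.
y_true = ["walk"] * 6 + ["turn"] * 2 + ["sit_to_stand"] * 2
y_pred = ["walk"] * 5 + ["turn"] + ["turn"] * 2 + ["sit_to_stand", "walk"]
accuracy, macro_f1, weighted_f1 = f1_summary(y_true, y_pred)
```

In this toy example the weakest class is also the smallest, so Macro-F1 comes out below Weighted-F1, which illustrates why both are worth reporting when phase durations are imbalanced.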

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rashid, M.; Sher, A.; Povina, F.V.; Akanyeti, O. AI-Driven Adaptive Segmentation of Timed Up and Go Test Phases Using a Smartphone. Electronics 2025, 14, 4650. https://doi.org/10.3390/electronics14234650
