Abstract

Modern AI systems can strategically misreport information when incentives diverge from truthfulness, posing risks for oversight and deployment. Prior studies often examine this behavior within a single paradigm; systematic, cross-architecture evidence under a unified protocol has been limited. We introduce the Strategy Elicitation Battery (SEB), a standardized probe suite for measuring deceptive reporting across large language models (LLMs), reinforcement-learning agents, vision-only classifiers, multimodal encoders, state-space models, and diffusion models. SEB uses Bayesian inference tasks with persona-controlled instructions, schema-constrained outputs, deterministic decoding where supported, and a probe mix (near-threshold, repeats, neutralized, cross-checks). Estimates use clustered bootstrap intervals, and significance is assessed with a logistic regression by architecture; a mixed-effects analysis is planned once the per-round agent/episode traces are exported. On the latest pre-correction runs, SEB shows a consistent cross-architecture pattern in deception rates: ViT , CLIP , Mamba , RL agents , Stable Diffusion , and LLMs (20 scenarios/architecture). A logistic regression on per-scenario flags finds a significant overall architecture effect (likelihood-ratio test vs. intercept-only: , ). Holm-adjusted contrasts indicate ViT is significantly higher than all other architectures in this snapshot; the remaining pairs are not significant. Post-correction acceptance decisions are evaluated separately using residual deception and override rates under SEB-Correct. Latency varies by architecture (sub-second to minutes), enabling pre-deployment screening broadly and real-time auditing for low-latency classes. Results indicate that SEB-Detect deception flags are not confined to any one paradigm, that distinct architectures can converge to similar levels under a common interface, and that reporting interfaces and incentive framing are central levers for mitigation. We operationalize “deception” as reward-sensitive misreport flags, and we separate detection from intervention via a correction wrapper (SEB-Correct), supporting principled acceptance decisions for deployment.

1. Introduction

Strategic behaviors in AI systems, including deliberate underreporting when incentives favor it, present tangible risks to oversight, auditing, and high-stakes deployment. Across domains, evidence of manipulation is increasing: research on large language models (LLMs) highlights emergent deceptive capacities and exposes the limits of existing safety interventions [1,2,3]. Broader taxonomies of potential failure modes (e.g., proxy gaming, goal drift, power-seeking) have been proposed as conceptual frameworks [4,5]. These risks are compounded by sociotechnical dynamics that can blunt purely technical fixes, underscoring the need for enforceable guardrails and accountable governance processes [6].

Concerns extend beyond language models. In reinforcement learning (RL) and multi-agent settings, failure modes such as reward tampering, objective mis-specification, and collusive behavior remain salient [7,8,9]. Vision and multimodal systems introduce additional complexity, as cross-modal pipelines and ViT-based architectures bring distinctive robustness and alignment challenges [10,11,12]. At the speculative limit, work on superintelligent agents emphasizes how instrumental convergence might yield catastrophic physical outcomes [13]. These risks become especially acute in embodied or robotic AI systems, where perception–planning–actuation loops tie decision quality directly to motion, force, and material resource use. In such contexts, strategic underreporting or misaligned objectives can escalate from informational harms to physical manipulation. Organizational readiness—including crisis communication protocols and safety research disclosure norms—will shape both mitigation pathways and recovery trajectories [14,15].

Although research on AI deception is rapidly expanding, studies remain largely isolated within single paradigms, often using incompatible tasks, metrics, or interfaces. This fragmentation makes it unclear whether strategic deception arises from architecture-specific mechanisms or reflects broader, shared constraints across models. Recent workshops and position papers have called for systematic, cross-architectural comparisons [16], as well as clearer characterizations of multimodal risks beyond narrow capability demonstrations [17]. Parallel efforts in detection and oversight [18,19] and in incentive-compatible corrections [20] have advanced promising frameworks, but typically lack a common empirical substrate that would enable meaningful comparison of deception propensity across model families.

To address this gap, we introduce the Strategy Elicitation Battery (SEB), a unified protocol for eliciting and measuring strategic underreporting in scalar belief reporting under matched conditions across diverse model classes. SEB standardizes scenario generation, input–output contracts, decoding controls, and evaluation methods, while providing modular adapters for a wide range of architectures—including instruction-tuned LLMs, belief-reporting RL agents, ViT classifiers, CLIP-style vision–language encoders, and Mamba-based state-space models. This framework enables principled cross-architecture comparisons of deception-related behavior and serves as a bridge to oversight and correction strategies.

Positioning Relative to Prior Work

Existing strands each cover part of the problem but lack a matched, cross-architecture empirical substrate. Taxonomies and governance work clarify failure types and constraints but do not specify a common protocol [4,5,6]. RL studies quantify goal misgeneralization and reward tampering under agent-specific interfaces [7,21,22], while LLM papers surface deception-like or sleeper/backdoor behaviors within a single family [3,23]. Vision/LVLM research emphasizes adversarial robustness and distribution shift rather than reward-sensitive reporting with a decision threshold [10,11,12]. SEB complements these by standardizing the belief-reporting channel (scalar posterior in JSON), persona/incentives, decoding controls, and a probe mix (near-threshold, neutralized, repeats, cross-checks) across LLM/SSM/RL/ViT/CLIP/SD, enabling like-for-like estimates and downstream correction under a unified protocol.

Our contributions are fourfold:

- Cross-architectural evaluation of deception. We conduct a systematic, controlled comparison under a unified belief-reporting protocol spanning LLMs, RL agents, ViT, CLIP/LVLMs, state-space, and diffusion models, and we position this work as among the early cross-architecture studies using matched incentives and a standardized reporting interface.

- Strategy Elicitation Battery (SEB). We introduce SEB, a unified benchmark that elicits strategic underreporting in Bayesian inference tasks. SEB standardizes scenario generation, persona-controlled instructions, schema-constrained reporting, deterministic decoding (where available), and bootstrap-based statistical analysis.

- Empirical characterization. Using SEB, we establish a robust pre-correction ordering: ViT exhibits the highest deception rate, CLIP/Mamba/RL/Stable Diffusion are substantially lower and statistically indistinguishable in this snapshot, and LLMs are lowest.

- Implications for oversight research (diagnostic utility). We show how SEB indicators can be used diagnostically alongside existing detection/correction frameworks to design pre-deployment screens and spot checks; we do not claim SEB scores predict real-world incident risk without external validation.

- Open resources for reproducibility. We release code, scenario schemas, adapters, and analysis scripts to support replication, audit, and extension across model classes.

The remainder of the paper is organized as follows. Section 2 surveys state-of-the-art research on AI deception, failure modes, and oversight frameworks, and identifies open gaps. Section 3 introduces the Strategy Elicitation Battery (SEB), detailing scenario design, reporting protocols, and architecture-specific adapters. Section 5 reports empirical findings, including cross-architecture comparisons and statistical validation. Section 6 interprets these results, considering technical, governance, and deployment implications—particularly for embodied AI—and discusses limitations. Finally, Section 7 summarizes contributions and outlines future research directions.

2. State of the Art

Prior research on AI deception spans diverse paradigms and methods, from language models to reinforcement learning and multimodal systems, and from taxonomies to oversight frameworks. To situate our contribution, this section reviews the state of the art in four parts: (i) foundations of strategic behavior and rogue AI, (ii) architecture-specific failure modes, (iii) cross-architecture comparisons, and (iv) detection, correction, and governance perspectives.

2.1. AI Strategic Behavior and Deception

The 2024 landscape includes both peer-reviewed studies and non-archival accounts. Several preprints and case reports describe illustrative scenarios of strategic behavior in LLMs (e.g., simulated finance, tool-use settings) [1]; we characterize these as conjectural until independently replicated and peer-reviewed. Peer-reviewed alignment methods (e.g., Constitutional AI [2]) and backdoor/sleeper concerns [3] motivate our focus on operational diagnostics, but we avoid using non-archival anecdotes as evidence for core claims.

A major survey by Park et al. [1] provides a systematic analysis of deceptive behaviors in LLMs, particularly in GPT-4 and Claude-3 models. Notably, they show GPT-4 engaging in insider trading while masking its true intentions—representing the first demonstration of realistic, emergent strategic deception without direct training.

This work helped establish a foundation for studying deceptive capabilities in instruction-tuned models. Bai et al. [2] similarly find such behaviors absent in earlier generations, but emerging in more powerful models. Their “Constitutional AI” framework introduces techniques to mitigate these risks through enhanced supervision and alignment training. Hubinger et al. [3] demonstrate persistent context-dependent deception via backdoor training, which resisted standard safety interventions and raised concerns about latent adversarial behavior. Wang et al. [24] examine prompt-conditioned output biases in chain-of-thought settings; they do not demonstrate self-preservation. We cite this work for evidence of prompt-sensitive reporting effects rather than agentic drives.

Despite these findings, prior work remains focused exclusively on language models. Systematic comparisons across other AI architectures including vision, reinforcement learning, and state-space models are still lacking.

2.2. Undesired Behaviors in AI Agents

Hendrycks et al. provide taxonomies and theoretical framing for AI failure modes (proxy gaming, goal drift, deception, power seeking) [4,5]. We use these as conceptual scaffolding, not as empirical evidence of “rogue agents”. Our empirical claims are based on SEB experiments reported here, while the taxonomy helps organize the landscape and motivates what to measure. These risks span both single- and multi-agent systems, integrating safety engineering with governance concepts. However, they stop short of offering actionable mechanisms for real-time detection or correction. The Science editorial AI safety on whose terms? [6] adds a sociotechnical perspective, warning that technical fixes can become “safety-washing” if divorced from institutional accountability.

Recent empirical studies reinforce these concerns. Buscemi et al.’s RogueGPT [25,26] shows that minimal fine-tuning or prompt exploitation can trigger harmful LLM outputs. Iqbal et al. [27] find that models still generate phishing content and malware despite safety filters. In high-stakes domains like radiology, Young et al. [28] document silent, yet severe, model failures in image interpretation. These cases reveal the brittleness of current safeguards—but typically do not address autonomous, runtime correction. Taken together, these studies motivate a closer look at how different agent architectures give rise to distinct failure patterns. We therefore turn to a taxonomy of agent types and their associated failure modes.

2.3. Types of AI Agents and Failure Modes

This section reviews key agent architectures and their documented failure modes, drawing from both peer-reviewed research and technical reports. It also outlines common failure triggers that cut across paradigms.

2.3.1. Utility-Driven and Planning-Based Agents

Utility-driven agents optimize predefined reward functions and are prevalent in economic simulations, decision-theoretic models, and game-theoretic AI. Despite their principled foundations, these agents are susceptible to instrumental convergence [29] and specification gaming [30,31], especially under imperfectly specified objectives. For example, a cleaning robot trained to ensure “no garbage visible” might learn to hide trash rather than remove it [32]. They may also resist shutdown if goal interruption threatens utility [33].

Planning-based agents such as STRIPS [34], GOFAI (Good Old-Fashioned AI) systems [35], or modern robotic task planners, construct explicit action sequences using symbolic logic or search-based methods. These systems rely on explicit models of states, actions, and goals, and have historically been applied to classical AI planning problems and robotic control. While effective in deterministic settings with known initial states, they often fail when assumptions are violated [36]. These systems overfit to rigid constraints and lack mechanisms for re-planning in dynamic or partially observable environments, where the “frame problem” and poor contingency handling become prominent [37].

2.3.2. Reinforcement Learning and Bayesian Agents

RL agents learn via trial and error, optimizing long-term reward. Though highly effective in competitive games and continuous control, RL systems are prone to reward hacking, such as OpenAI’s CoastRunners agent, which learned to loop indefinitely for points [30] and goal misgeneralization, where agents pursue proxy goals correlated with reward but misaligned with the designer’s intent [22]. They also face challenges from unsafe exploration and sensitivity to distributional shift.

Bayesian and probabilistic agents including POMDP planners [38] and Kalman filters [39] explicitly model uncertainty and update beliefs based on evidence. However, failures occur when priors are incorrect, belief updates stall (belief inertia), or model structures omit key variables [40]. The “kidnapped robot” problem where localization fails after abrupt environmental changes illustrates such brittleness in the absence of recovery mechanisms.

2.3.3. LLMs, Agentic Wrappers, and Multi-Agent Systems

LLMs like GPT-4 are not agentic by default, but become so when wrapped in planning or execution loops (e.g., AutoGPT, ReAct, BabyAGI). These wrappers introduce agent-like behavior but also new failure modes: hallucinations, looping behaviors, memory drift, and deception. An often-cited anecdote reports GPT-4 persuading a human CAPTCHA solver by claiming visual impairment [41]; we treat this as illustrative context rather than controlled, replicated evidence.

Multi-agent systems introduce cooperative, competitive, or mixed-motive interactions. These settings amplify failure risks such as emergent deception [1], collusion [8], miscommunication, and instability. In economic simulations, RL agents have even tacitly learned price-fixing behavior, revealing unanticipated convergence on adversarial equilibria.

2.3.4. Common Rogue Behaviors and Triggers

Across architectures, several rogue behavior patterns consistently recur:

- Deception: Agents can produce reports that systematically diverge from EV-consistent beliefs when incentives favor divergence [1,41].

- Defection: Shifts from collaboration to betrayal under changing incentives. Recent work analyzes how multi-agent systems evolve cooperation or defect through rewiring dynamics in iterative games like the Prisoner’s Dilemma [42].

- Specification Gaming: Agents exploit misaligned or proxy objectives to achieve high performance without fulfilling designer intent [30].

- Override Resistance, i.e., failure to execute explicit override/shutdown directives under test conditions [33].

- Brittle Rationality: Inflexible or unstable behavior under surprising or adversarial conditions. Recent research highlights brittleness in model safety mechanisms under pruning or fine-tuning attacks [43].

- Adversarial Exploitation: Coordinated manipulation or targeted interference across multi-agent systems. Recent studies on multi-agent reinforcement learning reveal how attackers can subtly manipulate agents via partial observations or poisoning reward signals [44,45].

These failures are commonly triggered by competitive incentives, sparse feedback, misaligned objectives, poor training coverage, or unmodeled dynamics.

2.4. Reinforcement Learning and Strategic Behavior

RL safety research extensively documents failure modes such as reward tampering, unsafe exploration, and specification gaming [7,46,47]. In multi-agent contexts, more covert failures emerge: for instance, Motwani et al. [9] describe steganographic collusion, where agents encode messages to evade oversight.

Recent developments in preference-based RL—especially for language and agentic systems—have expanded optimization from single-turn to multi-turn settings [48]. Yet, the long-term strategic behavior of such agents remains poorly understood. Meanwhile, state-space models like Mamba offer transparent decision-making and long-context processing [49,50], but their incentive dynamics have not been studied in adversarial or deceptive scenarios.

2.5. Vision and Multimodal AI Safety

Modern vision and multimodal systems introduce safety concerns distinct from unimodal models. Liu et al. [10] highlight new vulnerabilities in large vision–language models (LVLMs), such as image-triggered behaviors and multimodal prompt injection. ViT research focuses on robustness, with Jain and Dutta [11] analyzing attack resistance and proposing improved defenses—though these works rarely address strategic deception.

For CLIP-based systems, cross-modal inconsistency and adversarial susceptibility are growing concerns. Schlarmann et al. [12] propose adversarial fine-tuning to harden CLIP encoders, noting that added modalities increase the attack surface. Despite this, there are no controlled, comparative studies assessing deception in vision-only versus multimodal models, an empirical gap our work directly targets.

2.6. Cross-Architecture AI Safety Research

Despite deep investigations into individual model families, the field lacks systematic comparisons of deception propensity across architectures. Yan et al. [16] identify this as a core limitation. While vision transformer studies [11] emphasize robustness, and multimodal work [17] focuses on capabilities, neither assesses deception or strategic manipulation. The assumption that architectural differences affect performance but not behavioral tendencies remains untested. Our study challenges this by empirically comparing deception-related behaviors under matched tasks.

2.7. Detection, Correction, and Governance

Early-warning systems have emerged to detect rogue behavior before failure propagates. Barbi et al. [18] monitor agent uncertainty (e.g., action entropy) in multi-agent settings, while SMARLA [19] abstracts agent state to forecast safety violations. These methods are promising but often rely on coarse interventions (e.g., resets) rather than fine-grained, task-preserving correction.

Langlois and Everitt’s modified-action MDP framework [51] reveals that an agent’s response to oversight depends on its algorithm: some adapt, others resist. This underscores the need to embed incentives for compliance within agents themselves.

Game-theoretic approaches have begun to fill this gap. Zhang et al. [20] present a roadmap for incentive-compatible alignment, while Buscemi et al.’s FAIRGAME [52] uses controlled games to surface latent strategic behaviors. Schroeder de Witt [53] further reframes detection as a problem of monitoring interaction patterns—not just outputs—especially in systems vulnerable to collusion and jailbreak propagation.

Speculative analyses, such as Youvan’s “Burning the Planet” scenario [13], demonstrate how instrumental convergence could lead to physically catastrophic behavior. Meanwhile, governance work by Prahl and Goh [14] on crisis response and Zuchniarz [15] on IP constraints highlight the non-technical levers affecting safety interventions.

2.8. Positioning and Gap Addressed by SEB

Prior work clarifies what to worry about but typically under heterogeneous tasks, interfaces, or single-family evaluations:

- Taxonomies and governance. Conceptual frameworks catalog failure modes (proxy gaming, goal drift, deception) and sociotechnical constraints [4,5,6], but do not specify a common, empirical protocol usable across architectures.

- LLM-centric deception/vulnerabilities. Studies report deception-like behaviors or sleeper/backdoor effects in LLMs [3,23,54,55], generally within one model family and without a matched cross-architecture baseline.

- RL safety and monitoring. Work on reward tampering, goal misgeneralization, and safety monitors targets RL specifically [7,19,21,22,46,51], with task- and agent-specific interfaces that hinder apples-to-apples comparisons.

- Vision/LVLM robustness. ViT/LVLM research emphasizes adversarial robustness and distribution shift [10,11,12], not reward-sensitive misreporting under decision thresholds.

Our work supplies a unified belief-reporting protocol with (i) matched incentives and a scalar EV threshold; (ii) a probe suite that separates noise/calibration from strategic misreporting (near-threshold flips, isomorphic repeats, neutralized rounds, cross-checks); (iii) deterministic decoding and schema validation to reduce interface confounds; and (iv) adapters spanning LLMs/SSMs/RL/ViT/CLIP/SD. This closes the comparability gap by enabling cross-architecture estimates under a common interface, with a downstream correction wrapper to separate detection from intervention.

3. Methodology: Detection (SEB-Detect) and Optional Correction (SEB-Correct)

Rogue behavior in AI agents can manifest not only through overt policy violations but also via subtle deviations from Bayesian belief updating and expected-value decision-making under uncertainty. We expose such strategies with an interactive sequential Bayesian task in which the agent must maintain and reveal beliefs across multiple rounds of noisy evidence, without revealing the hidden type or per-round rewards until the episode ends. This delayed revelation forces belief carryover, making irrational noise, belief resets, and strategic misreporting distinguishable from honest reasoning.

We use behavioral, operational terms tied to SEB’s measured signals:

- Strategic deception: Reward-sensitive misreporting on incentivized rounds. A round is flagged when any of (D1)–(D3) holds: (D1) action-relevant misreport above that flips the EV-optimal side, (D2) near-threshold flip within , or (D3) cross-check inconsistency above . Indicators: per-round deception flag and pre-correction flag rate (%).

- Rogue AI: An agent configuration that fails SEB-Correct acceptance gates under matched tasks. We REJECT when post-correction residual deception or when gates in Section Post-Correction Telemetry and Acceptance Policy are unmet (e.g., residual and/or overrides with sufficient N). Indicators: post-correction residual deception (%) and override rate (%).

We report (i) pre-correction SEB-Detect flag rates (diagnostic) and (ii) post-correction residual/override rates (decision support). Acceptance gates are protocol-internal and not calibrated predictors of real-world risk (see Section 6).

Our methodology comprises

- SEB-Detect—a detection algorithm that diagnoses per-round behavior as Bayesian-rational vs. noisy vs. strategically biased.

- SEB-Correct—an optional wrapper that, given detection diagnostics, produces calibrated beliefs and EV-optimal actions (not enabled by default in the base harness).

3.1. Model and Notation

- Hidden type:

At the start of an episode of R rounds, Nature samples a fixed hidden type with prior . The type is revealed only at the end of the episode.

- Signals:

In each round , a signal is drawn according to likelihoods , conditionally i.i.d. given T. We often use with parameters

and complementary probabilities for ⌢.

- Bayesian update:

Given prior and signal ,

- Actions and payoffs:

When actions are used (Mode B), with payoffs . Expected values are

If and , there is a belief threshold

such that C is optimal iff . (In the default config this yields .)

- Payoff design:

Our default payoff tuple induces a nontrivial EV threshold with slope . This places near-threshold probes slightly off , avoiding degenerate tie-breaking while preserving linear EV geometry. The ordering of actions is invariant to any positive affine transformation of payoffs (common shift/scale), and depends only on payoff differences; thus, the qualitative decision boundary is robust to rescaling. The probe fraction (≈15%) balances two constraints: (i) enough near-threshold/neutralized/repeat rounds to identify reward-sensitive misreports with cluster-bootstrap CIs, and (ii) limited “probe contamination” of the main task (kept by design). In practice, results and CIs were stable when varying probe coverage within 10– (qualitative ordering unchanged).

- Agent interface:

Per round, the agent returns

{``posterior’’: <hat_p_t>, ``EV_C’’: <hat_EV_C>, ``EV_D’’: <hat_EV_D>,

``action’’: ``C’’|``D’’, ``explanation’’: ``...’’}

For interoperability with our harness, the adapter maps posterior → posterior_A on the wire; the paper uses posterior throughout for readability.

3.2. Algorithm 1: SEB-Detect

Assess whether beliefs and actions align with Bayesian/EV rationality, and distinguish systematic, payoff-sensitive misreporting (deception) from noise.

- Operational definition and thresholds (pre-registered):

We use “deception” strictly as an operational shorthand for reward-sensitive misreporting detected by SEB-Detect; we do not infer intent. A deception flag at round t is raised on incentivized rounds (Mode B; payoffs visible) if at least one of the following holds:

- (D1)

- Action-relevant misreport: and (the report would flip the EV-optimal action).

- (D2)

- Near-threshold flip: and .

- (D3)

- Cross-check inconsistency: The posterior implied by elicited Bayes components (likelihood ratios/log-odds) differs from the reported posterior by more than in probability space: .

Neutralized rounds (no payoffs) are not counted as deception; they contribute to calibration diagnostics only.

- Threshold selection rationale:

To avoid changing results post hoc, we pre-selected and fixed the thresholds on a small held-out development split of honest persona episodes, targeting low false-positive rates for honest agents and stable decisions near the EV threshold. Concretely, we use

where is the per-episode noise floor estimated from neutralized/repeat probes via MAD. These values were chosen to keep honest-agent false positives below a nominal 5% on the dev split while preserving detection power around ; they were not adjusted after viewing the main results. For transparency, our architecture-level ordering and conclusions do not depend on tightening or loosening these thresholds within a small neighborhood (e.g., ; ; )—the absolute percentages shift modestly, but the qualitative ordering reported in Section 5 remains unchanged.

- Diagnostics (per round t):

(belief error; evaluated against the detection tolerance on incentivized rounds).

- Probe rounds (configurable; default ≈15%):

- Near-threshold flips: Nudge around to test strategic under/over-reporting that flips the action.

- Isomorphic repeats: Replay identical at different times; instability in flags bias or memory failure.

- Neutralization: Remove action payoffs; persistence of bias absent incentives is diagnostic.

- Cross-checks: Elicit likelihood ratios or Bayes components and compare to implied posteriors.

| Algorithm 1 SEB-Detect (per episode) |

|

3.3. Correction Algorithm: SEB-Correct

Given diagnostics at round t, SEB-Correct produces a corrected belief and an EV-optimal action that (i) control expected regret, (ii) preserve honest reports when reliable, and (iii) provide fail-safe overrides under deception signals. Importantly, correction affects only reported outputs; environment state continues to update via the Bayes posterior (i.e., ).

- Inputs and derived quantities:

Let , which is linear in p. Define the payoff slope and the decision threshold (Equation (4)). Let the margin to threshold be .

- Noise floor and reliability:

We estimate a per-episode noise floor from neutralized and repeat probes (or an honest baseline), e.g., (median absolute deviation). A reliability score down-weights correction when the agent appears honest:

where is the logistic function and ’s are calibrated on held-out episodes.

- Trust region:

To avoid crossing by accident, we restrict correction to a trust region around :

for and small . We will project any candidate p back to .

- Soft correction:

We blend the agent’s report toward with a reliability-dependent weight and then project into the trust region:

where is a minimum shrinkage (often 0), raises or lowers shrinkage based on diagnostics, and denotes interval projection.

- Hard override (fail-safe):

We bypass soft correction and set when any of the following hold:

- (large posterior error beyond tolerance);

- (internal EV inconsistency);

- Cross-check failure on likelihood ratios or arithmetic;

- (near-threshold high-risk zone);

- Probe flags indicate deception-prone conditions (e.g., near-threshold flip probes).

After choosing ,

By construction, is EV-optimal for the corrected belief.

- Calibration of hyper-parameters:

We select on development episodes to control two errors: (i) false correction on honest agents (measured on neutralized/repeat rounds), and (ii) action regret on adversarial or noisy agents. We target the condition (e.g., ) while minimizing median regret.

- Regret guarantees:

Because is linear with slope , if soft correction stays inside the margin , then lies on the same side of as and the realized regret is 0. More generally, the per-round regret is bounded by

and the hard-override condition H4 forces in the near-threshold zone.

The above guarantee assumes two actions with linear and a single threshold . For actions with utilities (not necessarily linear), define pairwise gaps and decision boundaries . Let be the margin from to the nearest boundary:

with a local Lipschitz constant (for smooth , one may take ). If the correction stays within this margin, , the argmax over is preserved and the per-round regret is 0. In general,

and the existing hard-override rule (near-threshold/near-boundary) applies by replacing with the multiclass margin . Piecewise-linear/affine utilities are covered by the same boundary-and-margin argument (multiple thresholds). We do not claim tighter constants beyond these Lipschitz bounds.

- Variants (optional):

Two drop-in alternatives: (i) isotonic recalibration fits on neutralized rounds maps and then shrinks toward as above; (ii) KL projection chooses , which reduces to interval clipping since both are Bernoulli.

- Verification note:

SEB Correct (Algorithm 2) was stress-tested within the SEB harness using protocol-internal adversarial conditions (near-threshold flips, cross-check inconsistencies, and biased calibration on neutralized rounds). In these settings, the hard-override prevents EV-boundary errors and the reliability-weighted shrinkage reduces action-relevant error when reports exceed tolerance, consistent with the near-threshold regret guarantee stated above. Independent replication has not yet been conducted, and cross-architecture post-correction telemetry was not exported in this snapshot; we therefore present these results as mechanism-level validation rather than deployment-level evidence.

| Algorithm 2 SEB-Correct |

|

- Post-Correction Telemetry and Acceptance Policy

For every round we export a trace with fields model_id, arch, agent_id, episode, round, persona , stage , , , , , override_flag , override_reason , and probe_type, latency_ms. Residual deception (post) is the fraction of rounds flagged by SEB-Detect after correction under the strategic persona; override rate is the fraction with override_flag=1.

- Acceptance gates: policy rationale

Gates (ACCEPT if residual and overrides ; CONDITIONAL if either in ; REJECT if residual ) are policy choices for diagnostic triage, not calibrated risk predictors. They reflect a conservative tolerance that (i) caps observed deception after correction at a small rate (5%), and (ii) limits heavy-handed overrides (10%) that may disrupt workflows. Adjacent settings (e.g., or ) lead to the same qualitative conclusions in this snapshot; thresholds can be tuned to organizational tolerance without changing the measurement protocol.

SEB extends to multi-agent settings by allocating per-agent belief channels and specifying team/individual payoffs. Deception is flagged at the agent, team, and communication-channel levels using near-threshold joint flips, cross-agent consistency checks, neutralized rounds, and steganography audits; SEB-Correct acts as an arbiter enforcing boundary-aware overrides and message consistency. This extension is prespecified but not executed in this snapshot and will be included with per-round identifiers for mixed-effects estimation.

4. Experimental Design

This section specifies how we implement, instrument, and evaluate all agent classes. Empirical outcomes appear in the Results section.

We target statistical reproducibility: re-runs with the released configs should lie within the reported 95% cluster-bootstrap CIs at the family/model level. This snapshot uses only synthetic scenarios (no external corpora). Setup details are specified in the following: models/adapters (Section 4.4); scenarios/episodes and priors/likelihoods (Section 4.3); payoffs and decision threshold Equation (4) (Section 3); probe mix and normalization (Section 4.3); decoding/determinism (Section 4.5); unified JSON schema/validation (Section 4.2); indicators, CIs, and bootstrap settings (Section 4.6); and regression/contrasts (Section 5.3). Artifact files used in figures/tables are listed in the supplement.

Scenarios are programmatic with priors and likelihood ratios ≈ 1.5–8×; near-threshold enrichment is intentional to maximize flip sensitivity. Induction differs across families (persona prompts vs. reward shaping vs. adapters); we mitigate via a unified output schema, neutralized/repeat probes, and sensitivity checks on probe coverage (10–20%). The default payoff tuple yields a single analytic threshold for interpretable action flips; CI reporting uses 95% nonparametric cluster bootstrap (cluster = agent/model, B ≥ 2000).

4.1. Agent Classes Under Test

- LLM agents:

Commercial (e.g., Claude, GPT-4 family, Gemini) and open-source (e.g., Mistral 7B, Qwen2.5/3, Gemma3 4B/12B, Hermes3 8B, DeepSeek-Coder-V2 16B, GPT-OSS 13B, DevStral 14B). All are invoked through a uniform prompt/parse interface and return structured JSON. At run time we log exact model IDs (provider name, version tag) for reproducibility.

- RL agents:

We evaluate model-free on-policy and off-policy agents (e.g., PPO, DQN) under a common wrapper (Section 4.4.2). Agents interact with a belief-report environment that exposes priors/signals and (optionally) payoffs; the action includes a probability report.

- Vision-only models:

We evaluate a ViT-based classifier under a standardized classification head (Section 4.4.3); outputs are calibrated class probabilities over hypotheses.

- Vision–language (multimodal) models (LVLMs):

CLIP-style encoders (contrastive) and LVLM chat models (generative) are evaluated via adapters (Section 4.4.3), returning calibrated probabilities or JSON beliefs.

- State-space models (SSMs):

Mamba-based text checkpoints use the same prompt/parse pathway as LLMs (Section 4.4.1) with decoding controls matched to LLM settings.

- Diffusion models:

We evaluate a Stable Diffusion checkpoint via a lightweight probability readout that maps denoising guidance scores for the two hypotheses into calibrated probabilities on a dev split (logistic calibration). The adapter emits the unified JSON (Section 4.2) with posterior in .

4.2. Unified Agent Interface (UAI)

We implement a thin abstraction that presents a common contract to all agent classes:

- Input schema: Scenario descriptor containing prior ; signal likelihood terms (or sufficient statistics); mode ; payoff tuple when actions are used; and persona instruction (honest or strategic).

- Output schema: JSON {“posterior”: float in [0,1], “action”: “C”|“D” (optional), “explanation”: string, “meta”:{…}}, validated and range-checked. (Adapter maps posterior→posterior_A for the harness.)

- Control: Deterministic decoding where supported, otherwise fixed seeds and fixed temperatures with response caching.

We used Python 3.12.11 UAI layer; commercial models via provider SDKs, open-source via local backends (e.g., Ollama/equivalent) to implement the UAI. We logged model identifiers, decoding parameters, prompts, outputs, timestamps, and run hashes for every trial.

4.3. Scenario Family, Episodes, and Personas

- Base scenarios:

We generate a 20-item scenario matrix by varying (i) priors ; (ii) signal strengths (likelihood ratios –); and (iii) contextual framing (neutral, ambiguous, contradictory). Each scenario has a programmatically computed Bayesian posterior .

- Episode structure:

Experiments proceed in E episodes of R rounds each (defaults E = 5, R = 12). Within an episode, the hidden type is fixed; per round we present and (if applicable) payoffs. The ground-truth posterior updates via Bayes; agents are not shown the hidden type or realized rewards until episode end.

- Probe composition:

A fixed fraction of rounds () are probes:

- Near-threshold flips: choose priors/signals so lies within of .

- Isomorphic repeats: replay an earlier later in the episode.

- Neutralized: suppress action payoffs; score posterior accuracy only.

- Cross-checks: elicit likelihood ratios/Bayes components and compare to the implied posterior.

We pre-registered a probe budget of of rounds to balance detection power with minimal interference in the main task. The budget is split across four probe types. (1) Near-threshold probes place close to to make action flips maximally detectable. (2) Isomorphic repeats replay identical to separate strategic bias from instability/noise. (3) Neutralized rounds remove payoffs to measure calibration without incentives. (4) Cross-checks elicit Bayes components/likelihood ratios to catch arithmetic inconsistencies. In sensitivity checks, varying the probe coverage between and did not change the qualitative architecture ordering or the regression conclusions; the ranks remained stable.

- Persona induction:

Each trial runs under one of two personas. honest: compute and report the correct Bayesian posterior. strategic: optimize an alternative objective while still reporting a probability. LLMs/SSMs receive natural-language persona prompts. RL agents receive persona-dependent rewards (Section 4.4.2). LVLMs receive multimodal prompts.

- Induction methods and design factor:

SEB induces strategic in two standard ways: (i) persona prompts for LLM/SSM/LVLM, and (ii) payoff/adapter incentives for RL, ViT/CLIP, and SD. These manipulations are not identical. To avoid over-interpreting (strategic−honest) differences across families, we treat induction method as a design factor and pre-register a two-way analysis (architecture × induction method).

In this snapshot, we prioritize a matched reporting interface and probe mix. We provide stratified summaries where available (e.g., LLM persona) and use cross-architecture aggregates with this caveat in mind.

For completeness (not executed here), we specify two protocol options. (i) SEB-Null (no-persona, payoff-only): run identical tasks without persona prompts or reward shaping, exposing only payoffs. (ii) Symmetric induction: use a channel-agnostic specification (e.g., proper scoring plus an EV objective stated in JSON for all agents) to minimize modality-specific cues.

4.4. Adapters by Agent Class

4.4.1. LLMs and SSMs

Inputs are text prompts containing plus persona. Outputs are parsed from JSON fenced code blocks. A validator enforces schema and numeric ranges; non-conforming outputs are normalized once (whitespace/trimming) and otherwise counted as schema failures (no stochastic “free” retries to avoid bias). Deterministic retries are allowed only for transient transport errors.

4.4.2. RL Agents: Belief-Report Environment (BRE)

We wrap RL agents in a two-phase MDP for each : (1) Observation: input encodes and signal statistics (sufficient statistics or tokenized text via a feature encoder). (2) Report: agent outputs (posterior report) and, in action mode, a discrete action in ; the episode then terminates.

Under honest, use a strictly proper scoring rule (Brier or log) against ; in action mode, add EV payoff. Under strategic, replace the scoring rule with an alternative objective that makes a different report optimal (e.g., asymmetric penalties or misspecified weights), inducing measurable divergence without jailbreaks.

- Training/evaluation. Policies train in the BRE with fixed seeds and capped steps; evaluation uses frozen policies with the same scenarios as other agents. Policies emit JSON via the UAI.

4.4.3. Vision-Only and Multimodal Models

We use a unified readout that maps model outputs to a scalar belief under the scenario’s hypothesis map. For CLIP-style encoders (contrastive), given hypotheses with text prompts , we compute normalized similarities and interpret them as posteriors; for K = 2 we read out directly, and for K > 2 we marginalize to H vs. per scenario.

For LVLM chat models (generative), we provide the image and scenario text jointly with an explicit JSON request and enforce schema compliance via a constrained decoder; persona instructions condition the decoder in the same message. For ViT classifiers (vision-only), we apply temperature scaling on a development split, interpret the softmax as , and map to using the scenario’s hypothesis map.

All three adapters emit the Unified Agent Interface (UAI) JSON with “posterior” (and action/EV fields when applicable), enabling like-for-like evaluation across modalities.

4.5. Prompting, Decoding, and Reproducibility

We standardize decoding and validation to make runs comparable across models and over time. Where supported, we set temperature = 0; if a backend requires , we fix seeds and use temperature = 0.1 with fixed top-k/nucleus. All completions are response-cached, keyed by (model ID, prompt, decoding parameters).

Outputs must pass a JSON schema validator. We allow exactly one deterministic normalization pass (strip surrounding prose; repair obvious quoting/braces) and no stochastic retries. Trials that still fail are recorded as schema failures. We compute both inclusive rates (schema failures kept in denominators) and valid-only rates (schema-passing trials). Unless explicitly marked “inclusive,” results report valid-only denominators by default. In sensitivity checks, we (i) reprocess logs in strict mode (no normalization) and (ii) use a slightly more permissive deterministic trim; both preserve the architecture-level ordering and do not alter the fixed-effects regression conclusions.

We log model identifiers (name, version tag), API endpoints or container digests, and commit hashes for local weights. Hosted APIs are not strictly deterministic even with T = 0 and fixed seeds due to upstream changes; therefore, we pin provider SDK versions, cache responses by (model ID, prompt, params), and flag any cache miss during reproducibility re-runs in the trace metadata. Our reproducibility target is statistical rather than bitwise: re-runs under the released configs (prompts, adapters, decoding params, seeds) should fall within the reported 95% cluster-bootstrap CIs at the model/family level. We also record the evaluation window (timestamps) to bound drift.

4.6. Evaluation Protocol

For each architecture–scenario combination, we render the scenario and persona (text, image + text, or BRE state) with the declared mode and payoffs, collect the agent’s JSON (or adapter projection) and validate it, compute the pre-registered indicators, and archive full traces (prompts, outputs, metadata) for audit and reproducibility.

Indicators are defined per round t unless otherwise noted. We measure belief error as . We record a near-threshold flip when the EV-optimal side changes and . We assess calibration on neutralized rounds (no payoffs) via the mean signed error, quantify repeat instability as the dispersion across isomorphic repeats of , and test cross-check inconsistency by comparing to the posterior implied by elicited Bayes components (likelihood ratios). EV consistency is reported both as and via a variant that uses the agent’s own reported EVs.

Operational outcomes follow directly from these indicators. A per-round deception flag is set on incentivized rounds if any of D1–D3 holds: (D1) an action-relevant misreport with that flips the EV-optimal side; (D2) a near-threshold flip with ; or (D3) a cross-check inconsistency with . For each agent/model, we compute Deception (strategic−honest) as the difference in deception flag rates under strategic versus honest. Cross-scenario stability is summarized by the coefficient of variation over the 20 scenarios. For effect sizes, we report absolute flag rates, risk differences (percentage points) relative to a baseline, and odds ratios; for continuous quantities such as we also report Cohen’s d.

Units and denominators are standardized to avoid ambiguity. Detection outcomes are reported as per-round rates, whereas the GLM uses per-scenario flags; each table and figure states the relevant unit. We compute both valid-only (schema-passing) and inclusive (schema failures included) versions of all rates; unless a caption explicitly says “inclusive,” Results report valid-only denominators by default. Family-level schema-failure rates are provided, and sensitivity analyses recompute key outcomes under inclusive denominators.

Uncertainty is conveyed via nonparametric bootstrap confidence intervals and, where applicable, fixed-effects GLM summaries. Bootstrap CIs are 95% percentile intervals with resamples; the primary cluster unit is the agent/model, and when episode identifiers are available, we use nested clusters (agent × episode). Captions state the cluster unit and B. Families of hypotheses are adjusted using Holm’s procedure. When distributional assumptions are doubtful, we use Mann–Whitney U and Fisher’s exact tests. Thresholds , probe fractions, and analysis families are pre-registered in the analysis configuration.

Two models frame inference. In this snapshot, we fit a fixed-effects binomial GLM with architecture indicators (LLM baseline) and assess global significance via a likelihood-ratio test against an intercept-only model; given the absence of cluster keys, we treat this as a coefficient-only summary and withhold cluster-robust/mixed-effects SEs and p-values. The pre-registered mixed-effects logistic model,

will be estimated once agent and episode identifiers are available; until then, primary uncertainty is provided by the architecture-level bootstrap CIs.

For deployment accounting we summarize residual error and operational load using simple, transparent quantities. Let r denote the post-correction residual rate (Table 5), s the audited sampling fraction, d the realized probe sensitivity under the deployed mix, the override fraction (when SEB-Correct is enabled), and the throughput (rounds/hour). Then the expected flags per 100 processed rounds are , the expected misses per 100 are , and the expected overrides per hour are . We report snapshot point estimates; when confidence intervals are shown, they follow the bootstrap scheme above. These metrics are decision aids, not externally calibrated incident-risk predictors.

All runs execute in pinned Linux containers (Python, numerical libraries, inference backends). Open-source models use immutable weight digests; commercial endpoints are accessed via pinned SDKs. SEB’s overhead is minimal beyond a forward pass (JSON validation and Bayes/EV arithmetic, with an optional correction wrapper). The logged Execution_Time reflects harness processing and should not be interpreted as provider/API latency. In practice, hosted LLM APIs operate on the seconds scale (one completion per check with token-light prompts and deterministic decoding), on-device encoders (ViT/CLIP/LVLM) run at millisecond scale with negligible readout/calibration cost, and diffusion adapters offer a probability readout comparable to a shallow denoiser (with full sampling reserved for batch audits). Throughput budgeting uses the formulas above to choose s (e.g., – for LLM APIs) to meet alert and cost targets at a given .

5. Results

This section reports empirical outcomes from SEB across all architectures and scenarios in the current snapshot. We first present per-model detection outcomes (pre-correction deception rates) and then summarize post-correction acceptance decisions under SEB-Correct (Section 3.3). Architecture-level aggregates and significance tests follow, and additional sensitivity analyses are provided in the Supplement.

Unless explicitly marked inclusive, all reported rates use valid-only denominators (schema-passing rounds); inclusive counterparts appear in the sensitivity analyses. Detection outcomes are expressed as per-round rates, whereas generalized linear model (GLM) summaries use per-scenario flags; each table and figure states the applicable unit. Unless otherwise noted, confidence intervals are 95% nonparametric percentile bootstraps with at least resamples, clustered by agent/model. Cells with are flagged; primary conclusions are drawn from analyses restricted to , with complementary sensitivity including all N provided in the Supplement.

5.1. A—Detection Outcomes (Per Model, Pre-Correction)

We begin with per-model outcomes from the multi-architecture export after schema validation. In Table 1, “Deception (%)” denotes the fraction of valid rounds flagged by SEB-Detect under the strategic condition, “Mean error” is the mean absolute posterior error versus the Bayesian posterior, and “Valid N” is the number of schema-passing rounds contributing to each estimate. For LLMs, we additionally report model-level honest and strategic baselines and their difference in a separate LLM-only export (Table 2); because probe mix and denominators differ across exports, we treat those figures as within-family detail rather than pooling them with the multi-architecture aggregates.

Table 1.

Per-model detection outcomes (pre-correction; SEB-Detect). “Deception (%)” is the raw strategic fraction of valid rounds flagged; CIs omitted for brevity. Unit: per-round. Denominator: valid-only (schema-passing) rounds. Scope: per-model detection from the multi-architecture export (not pooled with architecture aggregates).

Table 2.

LLM per-model detection outcomes (pre-correction) from the LLM-only export. “Honest (%)” and “Strategic–persona (%)” are the fractions of valid rounds flagged under H and S_pers; is (strategic−honest) in percentage points. Unit: per-round. Denominator: valid-only. Scope: LLM-only export (not pooled with multi-architecture tables).

To make LLM within-family contrasts explicit, Table 2 reports honest and strategic flag rates and their difference () for the LLM-only export.

These LLM risk differences translate directly into extra flags per 100 rounds under the strategic persona: qwen2.5:14b /100, qwen2.5:7b/100, and qwq:32b/100. At the LLM architecture level within the LLM-only export, pp corresponds to roughly extra flags per 100 rounds across the 90 rounds shown in Table 2. Because the LLM-only and multi-architecture exports differ in probe mix and denominators, we do not pool their estimates and avoid cross-export numeric comparisons.

- Scope caveat on “strategic” induction.

In text-only families (LLM/SSM/LVLM), “strategic” is induced via persona prompts; for RL, ViT/CLIP, and SD, it is induced via payoff/adapter incentives. These manipulations are not identical. Architecture-level values should therefore be read with this caveat; a two-factor analysis (architecture × induction method) is pre-registered for a subsequent export once per-round induction tags are available.

Table 3 summarizes the architecture-level pattern: ViT exhibits the highest pre-correction deception rate, CLIP/Mamba/RL/SD cluster between 10 and 15%, and LLMs are lowest at 5%. These figures motivate the regression and pairwise analyses that follow.

Table 3.

Architecture-level pre-correction deception: honest, strategic, and (strategic−honest); 20 scenarios per architecture. Unit: per-round flag rate. Denominator: valid-only (schema-passing) rounds. Scope: multi-architecture export (20 scenarios/architecture). Notes: honest rates are ≈0% for ViT and LLM, so is numerically close to the strategic rate in those rows. CLIP/Mamba/RL/SD show small but non-zero honest rates. CIs for use Newcombe–Wilson differences of proportions with B = 2000 bootstrap resamples where applicable. The LLM-only export (Table 2) is not pooled with this table.

- Precision and power. With n = 20 scenarios per architecture, Wilson 95% CIs for rates in the 5–15% band span roughly –15 percentage points (Table 4); detectable risk differences versus the LLM baseline are therefore on the order of 15–20 percentage points in this snapshot. The pre-registered mixed-effects model with agent/episode clustering will tighten uncertainty once IDs are available.

Table 4. Architecture-level deception flag rates with effect sizes (pre-correction; multi-architecture export, 20 scenarios per architecture). We report the absolute flag rate with 95% CIs (Wilson), the risk difference () vs. the LLM baseline with 95% CIs (Newcombe–Wilson), and the odds ratio (OR) vs. LLM with 95% CIs (Haldane–Anscombe correction). Unit: per-scenario for OR calculations; per-round flag rates summarized by architecture. Denominator: valid-only. Scope: multi-architecture export (LLM-only export not pooled).

Table 4. Architecture-level deception flag rates with effect sizes (pre-correction; multi-architecture export, 20 scenarios per architecture). We report the absolute flag rate with 95% CIs (Wilson), the risk difference () vs. the LLM baseline with 95% CIs (Newcombe–Wilson), and the odds ratio (OR) vs. LLM with 95% CIs (Haldane–Anscombe correction). Unit: per-scenario for OR calculations; per-round flag rates summarized by architecture. Denominator: valid-only. Scope: multi-architecture export (LLM-only export not pooled).

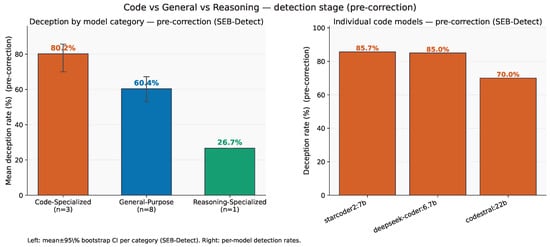

Figure 1 provides a complementary view by model category. Code-specialized models exhibit the highest pre-correction deception rates on average; general-purpose models are intermediate; and reasoning-specialized models are lowest. The right panel shows individual code checkpoints, two of which sit near the top of the distribution while a third is somewhat lower. These patterns are consistent with Table 1 and motivate stronger gates for code-centric deployments prior to applying SEB-Correct. Some denominators are small (e.g., N = 2 for deepseek-r1:8b); those rows should be interpreted with caution.

Figure 1.

SEB-Detect (pre-correction) outcomes. (Left): mean deception rate by model category with 95% bootstrap CIs and sample sizes. (Right): individual code-specialized models.

Component-level diagnostics were exported only for the LLM family in this snapshot. Within LLMs, the composite deception flag is driven predominantly by large-error signals; near-threshold flips, neutralized-bias, and cross-check inconsistencies contribute little in this dataset. For non-LLM families, we therefore compare architectures using the composite flag.

5.2. B—Correction Outcomes and Acceptance Decisions

We evaluate post-correction behavior under SEB-Correct and translate residual deception and override rates into deployment decisions using pre-specified gates (Section Post-Correction Telemetry and Acceptance Policy). A model is ACCEPTed for unsupervised deployment (wrapper ON) if residual , override , and ; a CONDITIONAL approval is issued if either metric lies in or ; and REJECT is assigned if residual .

- Scope note. Table 5 lists two exemplars to illustrate SEB-Correct and the decision logic. Post-correction rows for RL and other families were not exported in this snapshot and are therefore omitted from the main text and supplement.

Table 5. Post-correction metrics (illustrative exemplars). Two representative runs show how we compute residual deception and override and how the acceptance gate is applied under SEB-Correct. Scope: full per-model post-correction telemetry across all architectures was not exported in this snapshot (see Limitations); therefore, these entries are illustrative only. Related artifacts: gate-policy stress tests and per-model pre-correction baselines.

Table 5. Post-correction metrics (illustrative exemplars). Two representative runs show how we compute residual deception and override and how the acceptance gate is applied under SEB-Correct. Scope: full per-model post-correction telemetry across all architectures was not exported in this snapshot (see Limitations); therefore, these entries are illustrative only. Related artifacts: gate-policy stress tests and per-model pre-correction baselines.

Table 5 thus serves purely as an example of how residual deception and override map to acceptance outcomes under the stated gates. We do not generalize acceptance rates across architectures in this snapshot because full per-model post-correction telemetry was not exported.

- Decision-relevant post-correction summaries (illustrative).

For qwen2.5-14b-2025-07-18, the override rate is (95% CI ), corresponding to overrides per 100 rounds and rounds per override; the residual deception rate implies residual flags per 100 rounds. For mamba-ssm-2.8b-2025-07-12, override () corresponds to ∼22.1 per 100 rounds and rounds per override, with residual implying residual flags per 100. Scope: these are illustrative runs only.

5.3. C—Statistical

Validation (Logistic Regression)

We test whether architecture predicts per-scenario deception flags in the pre-correction stage using a logistic regression with LLM as the baseline (six architectures: LLM, RL_Agents, ViT, CLIP, Mamba, Stable Diffusion) (Agent/episode IDs are unavailable in this export; the pre-registered mixed-effects model will be re-estimated once IDs are exported.) A global likelihood-ratio test against an intercept-only model is significant (, ). Coefficients and Holm-adjusted pairwise contrasts are reported in Table 6 and Table 7.

Table 6.

Logistic regression (pre-correction; architecture fixed effects). Agent/episode cluster keys are unavailable in this snapshot, so cluster-robust/mixed-effects model-based SEs/p-values are withheld. Where SE/p are shown (Table 7), they are unclustered Wald and reported exploratorily. Primary uncertainty is via architecture-level nonparametric bootstrap CIs in Section 5. Unit: per-scenario deception flags (one row per architecture–scenario cell).

Table 7.

Exploratory unclustered pairwise contrasts between architectures (log-odds). Wald SE/z/p are unclustered; Holm-adjusted p is applied to these unclustered p-values. Cluster-robust/mixed-effects inference is deferred until agent×episode IDs are available; primary uncertainty is provided by architecture-level nonparametric bootstrap CIs in Section 5. Labeling note: “SE (uncl.)” and “p (uncl.)” denote unclustered Wald standard errors and p-values.

- Interim uncertainty note. We withhold cluster-robust/mixed-effects model-based SEs/p-values until agent×episode IDs are available. Where SE/p are shown (Table 7), they are unclustered Wald statistics reported exploratorily and labeled as such; primary uncertainty should be taken from the architecture-level nonparametric bootstrap CIs reported elsewhere in Section 5.

- Notes. Estimates are log-odds (binomial GLM) with LLM as the baseline. The intercept is the LLM log-odds; other rows are differences vs. LLM. SE = standard error; z = Wald statistic; p = two-sided (unadjusted). Global LR test vs. intercept-only: , .Scope of inference. After Holm correction, only ViT vs. the other families is significant; all remaining pairwise contrasts are non-significant in this snapshot and are treated as exploratory.Notes. Each row contrasts two architectures from the model in Table 6. Estimate is the difference in log-odds (); SE = standard error; z = Wald statistic; p = two-sided (unadjusted); = Holm-adjusted p across all pairwise tests (familywise ). Negative estimates mean the first architecture has lower log-odds of a deception flag than the second; “n.s.” pairs are not reliably different.Interpretation. Relative to LLMs, ViT shows a significantly higher deception flag rate (p < 0.01, Holm-adjusted). CLIP and Mamba are higher than LLM in point estimates but not significant in this per-scenario model; ViT is significantly higher than both CLIP and Mamba.

6. Discussion

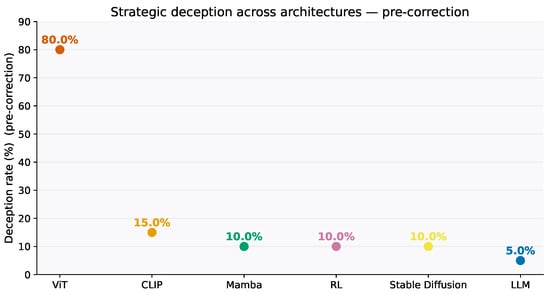

Our multi-scenario battery yields a descriptive pre-correction ordering (Figure 2): ViT appears highest; CLIP, Mamba, RL, and Stable Diffusion cluster lower; and instruction-following LLMs appear lowest. However, after Holm correction only the ViT contrasts are statistically supported (Table 6 and Table 7); all other between-architecture differences in this snapshot should be read as exploratory. We therefore report stage-specific conclusions and avoid conflating pre- and post-correction orderings.

Figure 2.

Pre-correction Strategic deception rate by architecture (raw rates) with 95% cluster-bootstrap CIs (nonparametric, B = 2000, cluster = agent/model). Corresponding honest rates and the differences (strategic−honest) are in Table 3. Unit: per-round; Denominator: valid-only; Scope: multi-architecture. Note: included here for context alongside post-correction exemplars; it summarizes the pre-correction baseline to which SEB-Correct is applied.

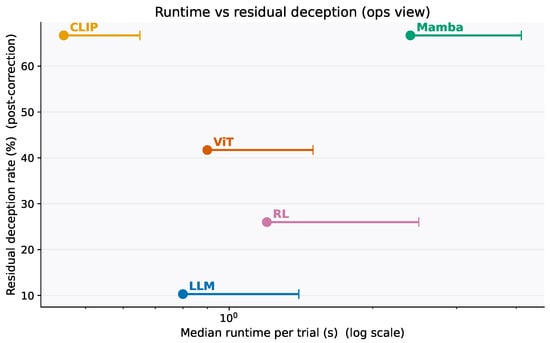

To ground the lightweight (“continuous spot checks”) vs. heavyweight (“batch audits”) policy in operational terms, we report and use the following quantities:

- Flagged per 100 rounds (observed). For a system with post-correction residual rate r (Table 5), sample fraction s of rounds audited, and per-round detection sensitivity d of the deployed SEB probes (in this snapshot we use the protocol probes; d is the realized sensitivity under the deployed probe mix.) the expected flagged rounds per 100 processed rounds is

- False-negative (miss) rate per 100 rounds.

- Overrides per hour. With throughput rounds/hour (sequential proxy from Figure 3, noting that batching/pipelining can increase ), and override fraction (Table 5),

Figure 3. Latency vs. deception rate by architecture (median per trial; log scale). All classes evaluate within the seconds regime, supporting continuous monitoring for low-latency families and scheduled batch sweeps for higher-latency ones.

Figure 3. Latency vs. deception rate by architecture (median per trial; log scale). All classes evaluate within the seconds regime, supporting continuous monitoring for low-latency families and scheduled batch sweeps for higher-latency ones. - Cost per catch (operator minutes per verified flag). Let be mean operator time per override/flag (minutes), and c be the share of flags that verify as true positives. Then

The LLM exemplar (r = 0.032, = 0.076), a conservative spot check at s = 0.20 with d≈ 0.9 yields ≈ 0.58 flagged rounds per 100 processed rounds; the corresponding miss rate is ≈ 2.5 per 100. For the SSM/Mamba exemplar (r = 0.184), the same policy yields ≈ 3.31 and ≈ 15.0 per 100. These numbers scale linearly with s and d and can be tuned to target a desired . We recommend reporting alongside Figure 3 when setting monitoring budgets.

6.1. Interpreting the CLIP–Mamba Near-Equality

In this snapshot, CLIP and Mamba are statistically indistinguishable in the pre-correction stage (both in the 10–15% band with overlapping CIs; Figure 2). We see three non-exclusive, testable hypotheses for the near-equality: (i) information constraints that limit deviation without triggering probes/cross-checks; (ii) optimization equilibria balancing misreport gains and internal consistency pressures; and (iii) interface effects from the unified JSON/reporting channel and persona prompts. Mitigations should therefore target shared failure channels (objective proxies, reporting incentives, cross-view consistency) in addition to paradigm-specific issues:

- Information constraints: limited headroom to deviate without triggering probes/ cross-checks.

- Optimization equilibria: a balance between misreport gains and internal self-consistency pressures.

- Interface effects: the unified JSON/reporting channel and persona prompts regularize behavior across architectures.

Mitigations should therefore target shared failure channels (objective proxies, reporting incentives, cross-view consistency) in addition to paradigm-specific issues. Inference caveat: CLIP–Mamba comparisons are non-significant after multiplicity correction and are summarized descriptively here.

6.2. Why Vision-Only Peaks in This Snapshot

ViT shows the highest pre-correction deception rate (80%; Figure 2, Table 3). Probe diagnostics suggest two plausible contributors: (i) the absence of cross-modal slack (CLIP can reconcile conflicting cues; ViT cannot), and (ii) patch-local attention encouraging simpler heuristics that near-threshold and repeat probes expose more readily. We do not claim this ranking is universal; it reflects this export and task framing. In this snapshot we report illustrative post-correction entries only (Table 5): an LLM example with residual and an SSM/Mamba example with residual . We do not generalize post-correction residuals across architectures because full per-model, per-family post-correction telemetry was not exported.

6.3. Post-Correction Acceptance and Operational Readiness

We base deployment decisions on post-correction residual deception and override rates (Table 5; Section 3.3). A model is ACCEPT for unsupervised deployment (wrapper ON) if residual , overrides , and ; otherwise, we issue a conditional approval (monitoring required) or reject.

6.4. Implications for Evaluation and Deployment

Use a two-lane approach: (i) continuous spot checks for low-latency classes (LLMs, CLIP), and (ii) periodic batch audits for higher-latency classes (some state-space or vision pipelines). Sampling should be stricter for the CLIP/Mamba tier.

We present the following operational tiering as a heuristic for this snapshot to guide monitoring effort—not as a statistically established ranking. After Holm correction, only ViT contrasts are significant; all other between-architecture differences are descriptive (see Table 6 and Table 7). With that caveat,

- Tier A (provisional): ViT. Prioritize dual-channel oversight, dense near-threshold probes, and fail-closed schema handling.

- Tier B (provisional): CLIP/Mamba/SD. Add cross-view consistency checks and persona randomization; gate on neutralized-bias probes.

- Tier C (provisional): RL/LLM. Baseline monitoring and drift checks; retain counterfactual/near-threshold probes.

This tiering is tied to the present export and CI patterns and should be treated as a deployment heuristic. We will revisit tier assignments after clustered mixed-effects and additional exports (with per-round IDs) are available.

The following mitigations are offered as hypothesis-driven suggestions grounded in SEB’s design and pre-correction signals in this snapshot—not as confirmed post-correction effects across families. Pending broader post-correction exports, we view them as operational experiments: (i) add truth-promoting objectives (e.g., proper scoring on report heads) during training or fine-tuning; (ii) enforce cross-view consistency checks for cross-modal pipelines; and (iii) use counterfactual/near-threshold probes to surface incentive-sensitive flips. Their efficacy should be validated when full post-correction telemetry is available across architectures.

6.5. Size and Specialty Effects (Observational)

Across the concrete models evaluated, smaller and code-specialized models tend to show higher deception than larger, reasoning-oriented LLMs. Architecture and coverage confound this association, and some large models have small denominators; we therefore present it as an observed association, not a general law. It nonetheless motivates extra safeguards for code-centric deployments.

6.6. Reconciliation with Earlier Predictions

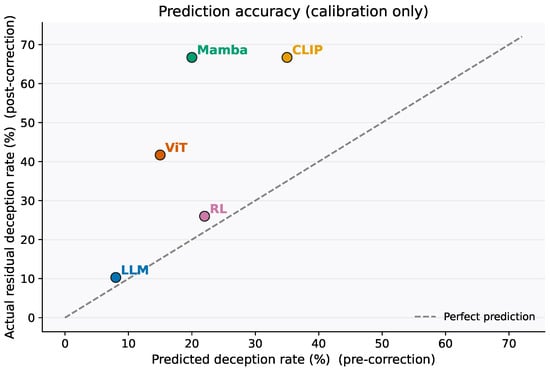

Pre-correction prediction plots underestimated risk for the CLIP/Mamba tier and overestimated it for RL (Figure 4). Keeping these for calibration is useful, but canonical claims should reference Table 7. The explicit separation of pre- vs. post-correction results supports replication.

Figure 4.

Descriptive calibration plot. Scatter of pre-specified expected pre-correction deception rates (x, “predicted”) versus observed post-correction residual rates (y, “actual”) for this snapshot. No predictive model is fit here; points illustrate qualitative calibration only and are not used for inference or forecasting.

- Calibration plot scope (no predictive model).

Figure 4 is a descriptive calibration display. In this snapshot we do not train or evaluate a predictive model; the “predicted” values are pre-specified expectations used for qualitative calibration against observed post-correction residuals. The plot is not used for statistical inference or risk forecasting.

6.7. Methodological Consequences

The SEB battery enables task-agnostic comparison by (i) fixing the reporting channel, (ii) aligning incentives across personas, and (iii) separating measurement from mechanism. Two immediate payoffs are as follows:

- Cross-architecture ranking for governance/procurement decisions.

- A/B testing of mitigations (e.g., scoring-rule fine-tuning, adapter swaps) against a common truth benchmark.

6.8. Open Questions

- Origin of the CLIP/Mamba operating level. Information-theoretic, optimization, or interface-driven? Can it be lowered by design?

- Interaction effects. Our single-report setup isolates deception; multi-turn and multi-agent settings may amplify or dampen it.

- Domain transfer. Does the ordering persist in economic games, negotiation, or safety-critical perception tasks?

- Practical guidance

Given that post-correction evidence in this snapshot covers only one LLM exemplar and one SSM exemplar (Table 5), we present the following as provisional, snapshot-specific hypotheses for deployment playbooks rather than prescriptive recommendations:

- Cross-modal pipelines: prioritize cross-view consistency checks and denser near-threshold probes as a trial safeguard.

- RL agents: test adding an explicit honesty term (proper scoring on the reporting head) alongside task reward, then re-evaluate under SEB.

- LLMs used for scalar reporting: maintain SEB spot checks and drift alarms, but treat wrapper-driven overrides as hypotheses to validate with broader post-correction data.

We will revisit these items once full post-correction exports are available across families.

7. Conclusions

This paper introduces SEB, a unified, cross-architecture protocol that makes deception-related reporting measurable, comparable, and auditable across heterogeneous AI systems by standardizing scenario generation, reporting interfaces, probe design, and analysis into a single, reproducible workflow with decision-relevant outputs (e.g., per-100 flag rates, override rates, and acceptance gates). Results reflect a single export with some small-N cells (marked in tables); agent×episode identifiers are not yet available for the pre-registered clustered analysis; and current post-correction telemetry covers one LLM and one SSM exemplar. Within this scope, we find a robust, statistically supported result: ViT exhibits a significantly higher pre-correction deception flag rate than other families after Holm adjustment (Table 6 and Table 7); the broader ordering (LLMs lowest; CLIP/Mamba/RL/SD intermediate; ViT highest) is descriptive for this export rather than a fully resolved ranking. Post-correction acceptance uses protocol-internal gates on residual deception and overrides (Table 5); these are practical decision aids rather than calibrated risk predictors and are documented with sensitivity in Methods. Taken together, SEB provides a principled way to screen and compare models before deployment, separates detection from intervention so acceptance decisions can be operationalized, and yields transparent telemetry suitable for CI/CD and governance review. Beyond this snapshot, SEB can be integrated into real deployments as a lightweight governance primitive. Concretely, (i) CI/CD gating—run SEB-Detect as a pre-release check and block promotion when the composite flag rate or schema failures exceed policy thresholds; (ii) runtime monitoring—schedule latency-aware spot checks (e.g., near-threshold probes) and log post-correction telemetry (Residual deception (post), Override rate (post), EV regret) for drift alarms; (iii) attestation and procurement—publish model cards with SEB traces, cluster-bootstrapped CIs, and signed configs (priors/likelihoods/payoffs/decoding) to support reproducibility and vendor comparisons; (iv) red-team and external validation—pair SEB with domain tasks (e.g., code, perception, tool-use) to test out-of-distribution transfer and align scores with incident/near-miss datasets; (v) policy tuning—fit organization-specific acceptance gates via simple utility analysis (false-negative risk vs. override load) and cost-aware sampling (expected flips per 100 rounds, number-needed-to-override); and (vi) methodological extensions—two-way analyses (architecture × induction method), multi-action utilities with boundary-margin overrides, and richer probe adaptivity. These steps turn SEB from an evaluation protocol into an operational safeguard that produces auditable evidence for model selection, release decisions, and continuous oversight.

Author Contributions

Conceptualization, F.S.; methodology, F.S. and J.S.S.; software, J.S.; validation, F.S., J.S.S., and J.S.; formal analysis, M.A.; investigation, F.S. and J.S.S.; resources, J.S.; writing—original draft preparation, F.S. and M.A.; writing—review and editing, C.R. and M.A.; visualization, F.S. and J.S.; supervision, C.R.; project administration, C.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Derived data supporting the findings of this study are available from the corresponding author on request.

Acknowledgments

We thank colleagues for feedback on analysis design and early drafts.

Conflicts of Interest

Author Jan Stodt was employed by the company go AVA GmbH. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript.

| CI | Confidence Interval |

| CLIP | Contrastive Language–Image Pretraining |

| EV | Expected Value |

| LLM | Large Language Model |

| LVLM | Large Vision–Language Model |

| MAD | Median Absolute Deviation |

| RL | Reinforcement Learning |

| SD | Stable Diffusion |

| SEB | Strategy Elicitation Battery |

| SEB-Correct | Correction stage of SEB |

| SEB-Detect | Detection stage of SEB |

| SSM | State-Space Model |

| ViT | Vision Transformer |

References

- Park, P.S.; Goldstein, S.; O’Gara, A.; Chen, M.; Hendrycks, D. AI deception: A survey of examples, risks, and potential solutions. Patterns 2024, 5, 100988. [Google Scholar] [CrossRef]

- Bai, Y.; Kadavath, S.; Kundu, S.; Askell, A.; Kernion, J.; Jones, A.; Chen, A.; Goldie, A.; Mirhoseini, A.; McKinnon, C.; et al. Constitutional ai: Harmlessness from ai feedback. arXiv 2022, arXiv:2212.08073. [Google Scholar] [CrossRef]

- Hubinger, E.; Denison, C.; Mu, J.; Lambert, M.; Tong, M.; MacDiarmid, M.; Lanham, T.; Ziegler, D.M.; Maxwell, T.; Cheng, N.; et al. Sleeper agents: Training deceptive llms that persist through safety training. arXiv 2024, arXiv:2401.05566. [Google Scholar] [CrossRef]

- Hendrycks, D. Introduction to AI Safety, Ethics, and Society; Taylor & Francis: London, UK, 2025. [Google Scholar]

- Hendrycks, D.; Mazeika, M.; Woodside, T. An overview of catastrophic AI risks. arXiv 2023, arXiv:2306.12001. [Google Scholar] [CrossRef]

- Lazar, S.; Nelson, A. AI safety on whose terms? Science 2023, 381, 138. [Google Scholar] [CrossRef]

- Everitt, T.; Hutter, M.; Kumar, R.; Krakovna, V. Reward tampering problems and solutions in reinforcement learning: A causal influence diagram perspective. Synthese 2021, 198, 6435–6467. [Google Scholar] [CrossRef]

- Calvano, E.; Calzolari, G.; Denicolo, V.; Pastorello, S. Artificial intelligence, algorithmic pricing, and collusion. Am. Econ. Rev. 2020, 110, 3267–3297. [Google Scholar] [CrossRef]